Neural Network Direct Control with Online Learning for Shape Memory Alloy Manipulators

Abstract

1. Introduction

2. Materials and Methods

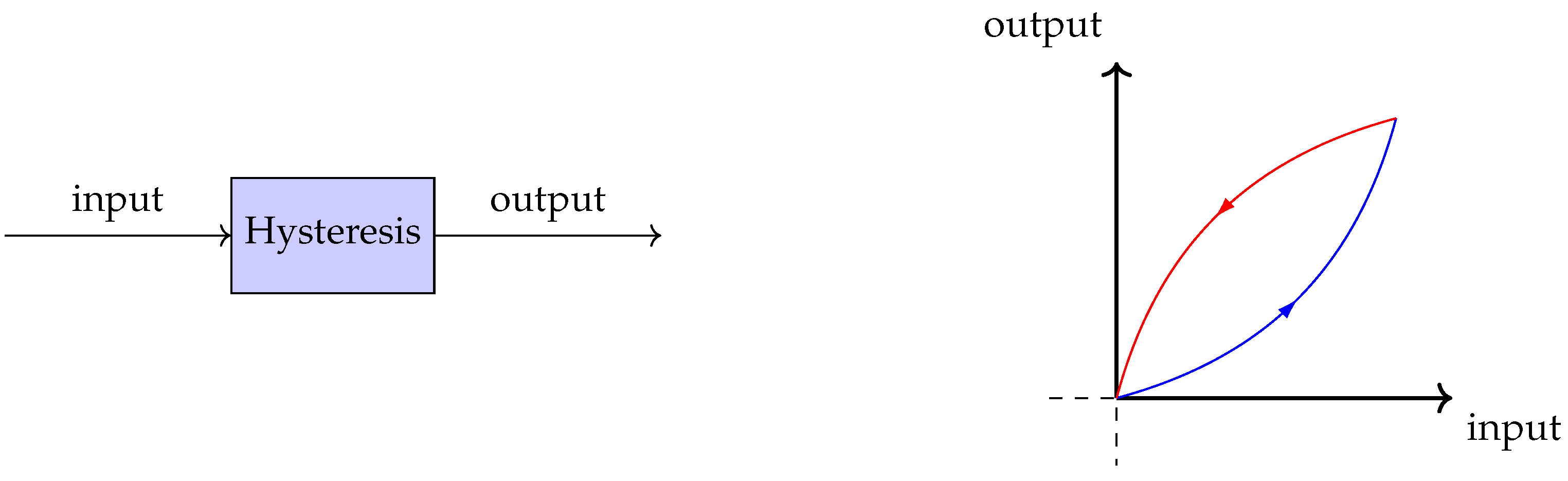

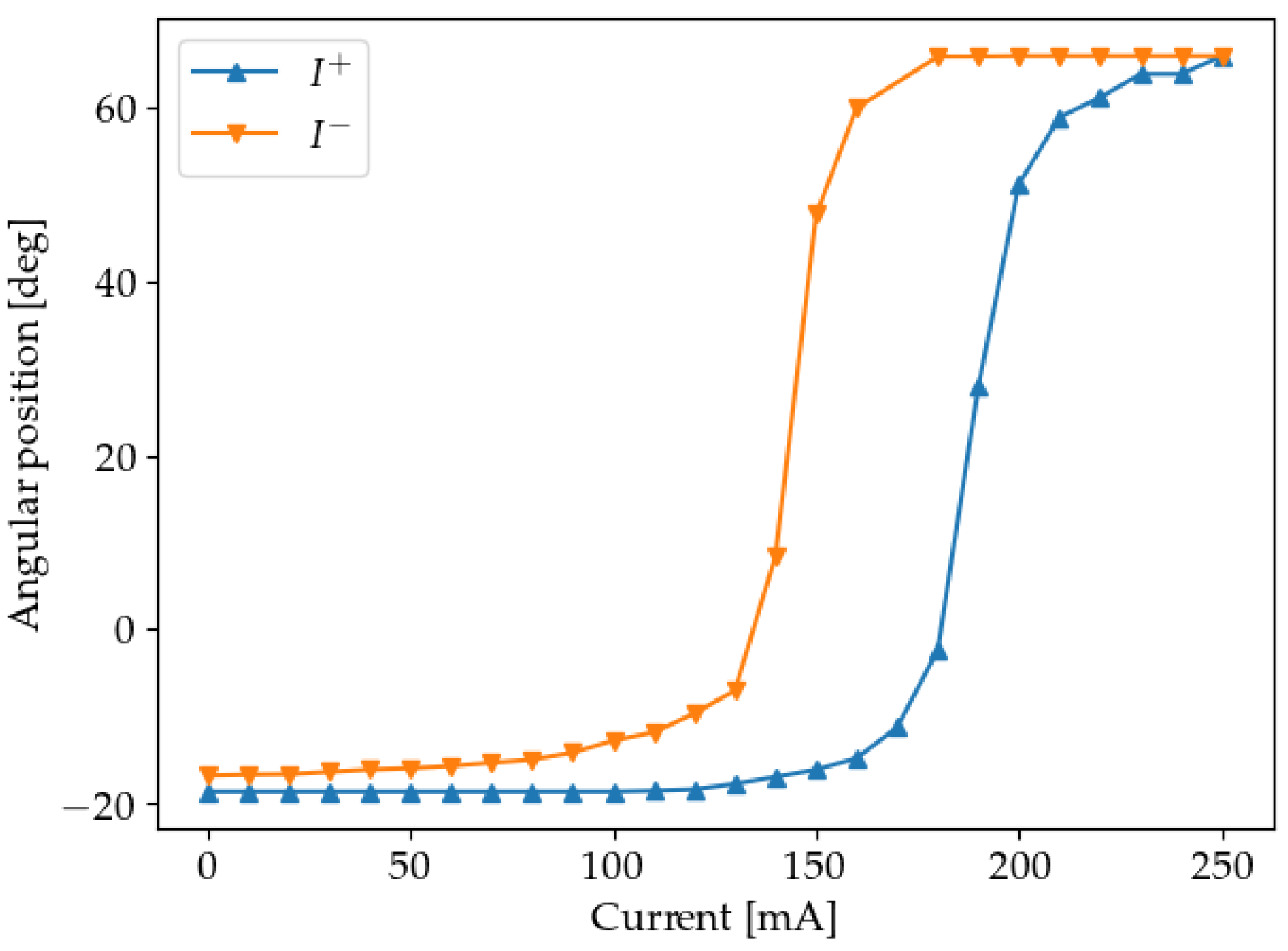

2.1. Hysteresis

The Classic Preisach Model

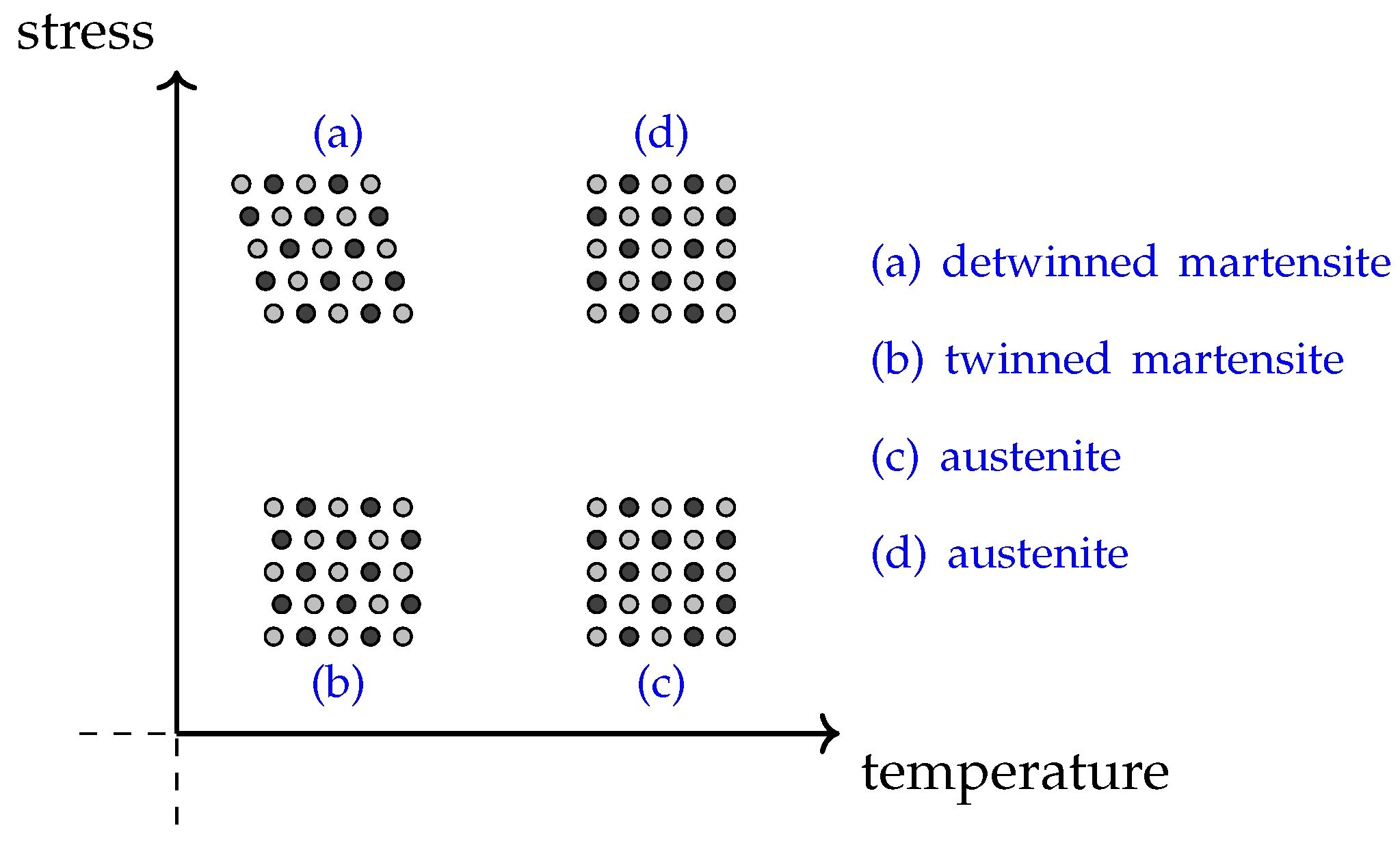

2.2. Shape Memory Alloys

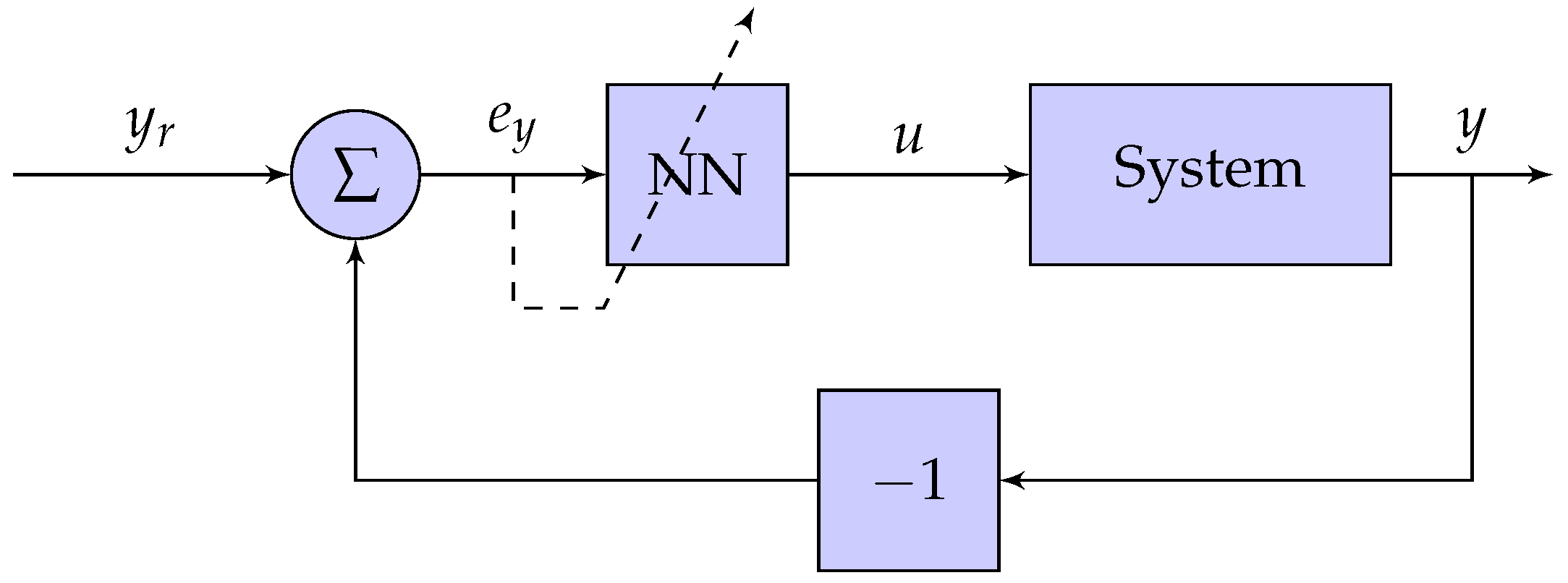

2.3. Neural Networks

2.3.1. Feedforward Neural Networks with Sliding Window

- the weight of the connection between input and hidden layer perceptron

- the weight of the connection between hidden layer perceptron and output perceptron.
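The two sets of weights listed above can be illustrated with a minimal sketch of a single-hidden-layer feedforward network fed by a sliding window of past samples. This is not the authors' implementation: the window length, hidden-layer size, tanh activation, linear output, omission of bias terms, and all names (`SlidingWindowMLP`, `w_ih`, `w_ho`) are assumptions made purely for illustration.

```python
import math
import random
from collections import deque


class SlidingWindowMLP:
    """One-hidden-layer feedforward network over a sliding window of inputs.

    Hypothetical illustration: the sizes, the tanh activation, and the linear
    output are assumptions, not values taken from the article. Bias terms are
    omitted for brevity.
    """

    def __init__(self, window_size, hidden_size, seed=0):
        rng = random.Random(seed)
        # w_ih[j][i]: weight of the connection between input i
        # and hidden layer perceptron j.
        self.w_ih = [[rng.uniform(-0.5, 0.5) for _ in range(window_size)]
                     for _ in range(hidden_size)]
        # w_ho[j]: weight of the connection between hidden layer
        # perceptron j and the output perceptron.
        self.w_ho = [rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
        # The window keeps the most recent samples, zero-padded at start.
        self.window = deque([0.0] * window_size, maxlen=window_size)

    def forward(self, inputs):
        """Compute the network output for one window of inputs."""
        hidden = [math.tanh(sum(w * x for w, x in zip(row, inputs)))
                  for row in self.w_ih]
        return sum(w * h for w, h in zip(self.w_ho, hidden))

    def step(self, sample):
        """Push a new sample into the window and return the output."""
        self.window.append(sample)
        return self.forward(list(self.window))


if __name__ == "__main__":
    net = SlidingWindowMLP(window_size=4, hidden_size=3)
    for sample in [0.1, 0.2, 0.3]:
        print(net.step(sample))
```

Each call to `step` discards the oldest sample and appends the newest, so the network always sees a fixed-length history. That history is one common way to let a memoryless feedforward network respond to path-dependent behaviour such as hysteresis.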

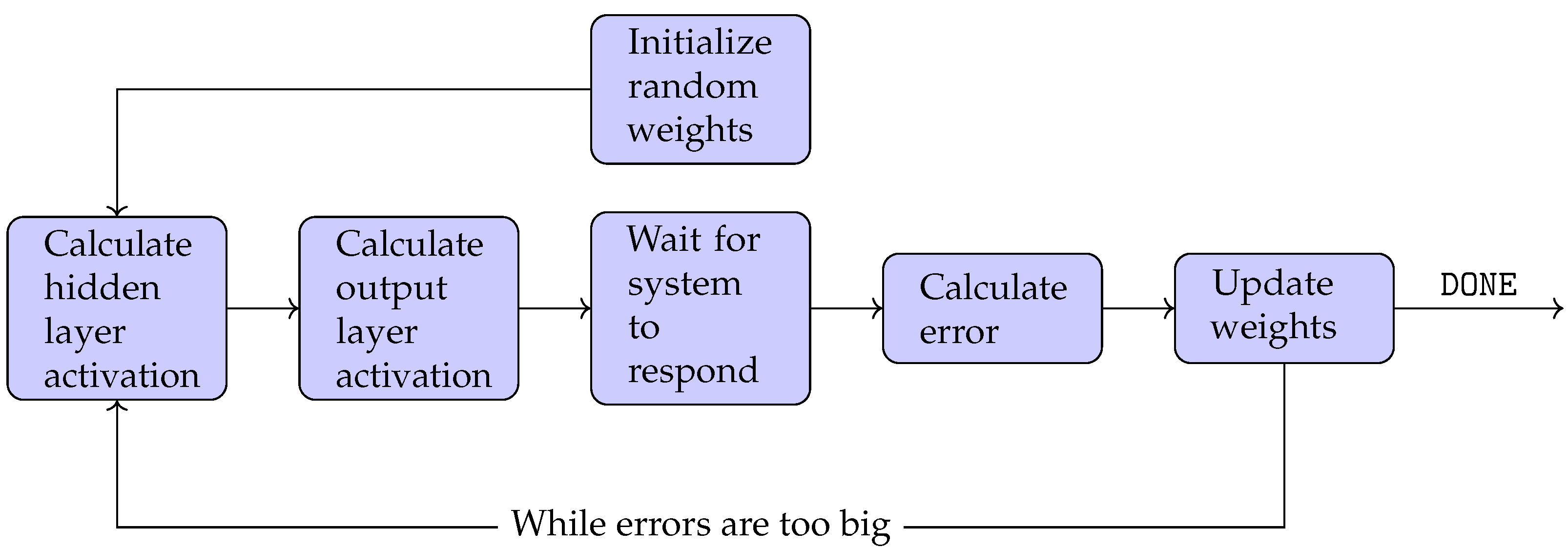

2.3.2. Back-Propagation

2.3.3. Calculation of the Gradient

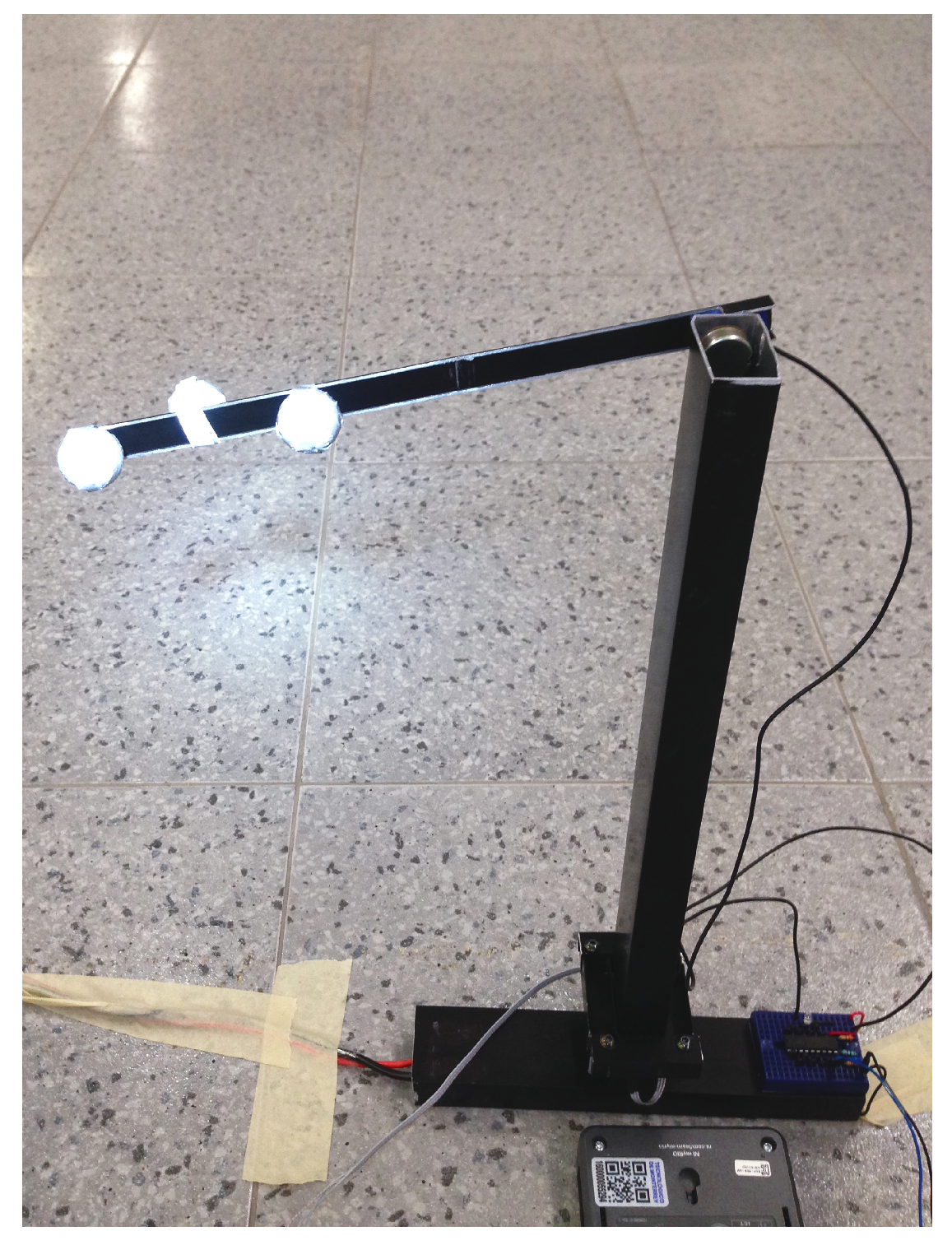

3. Experimental Setup

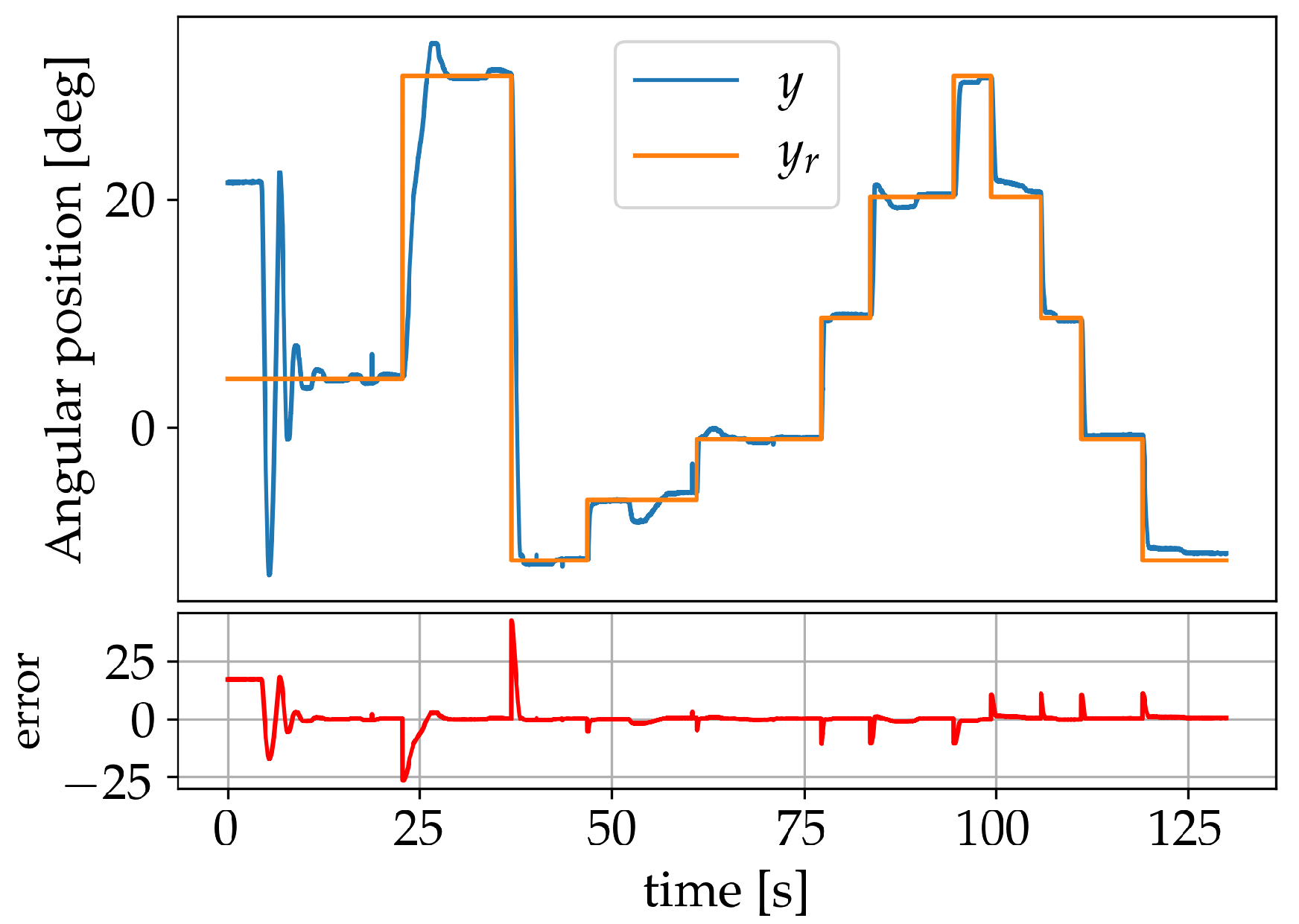

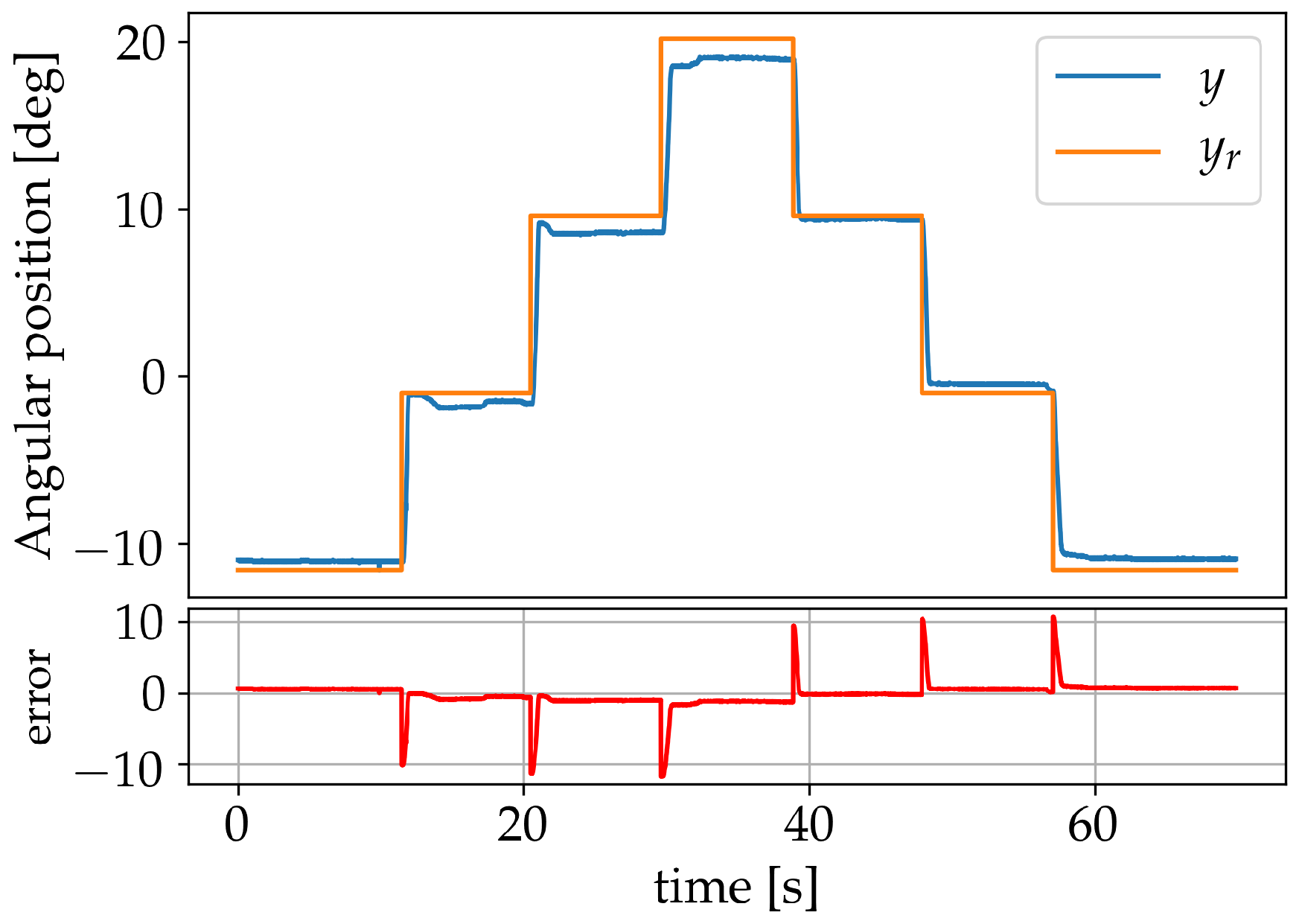

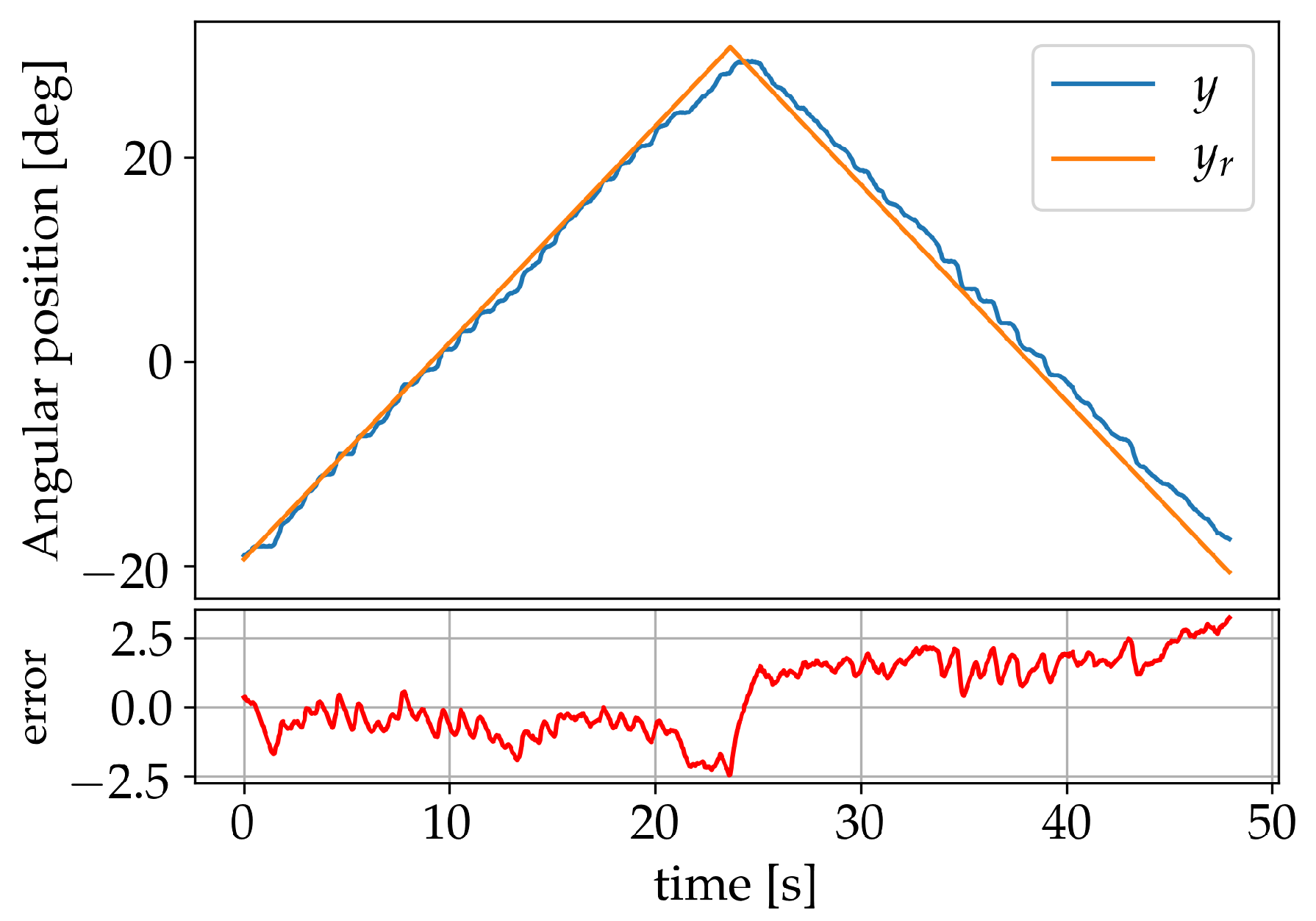

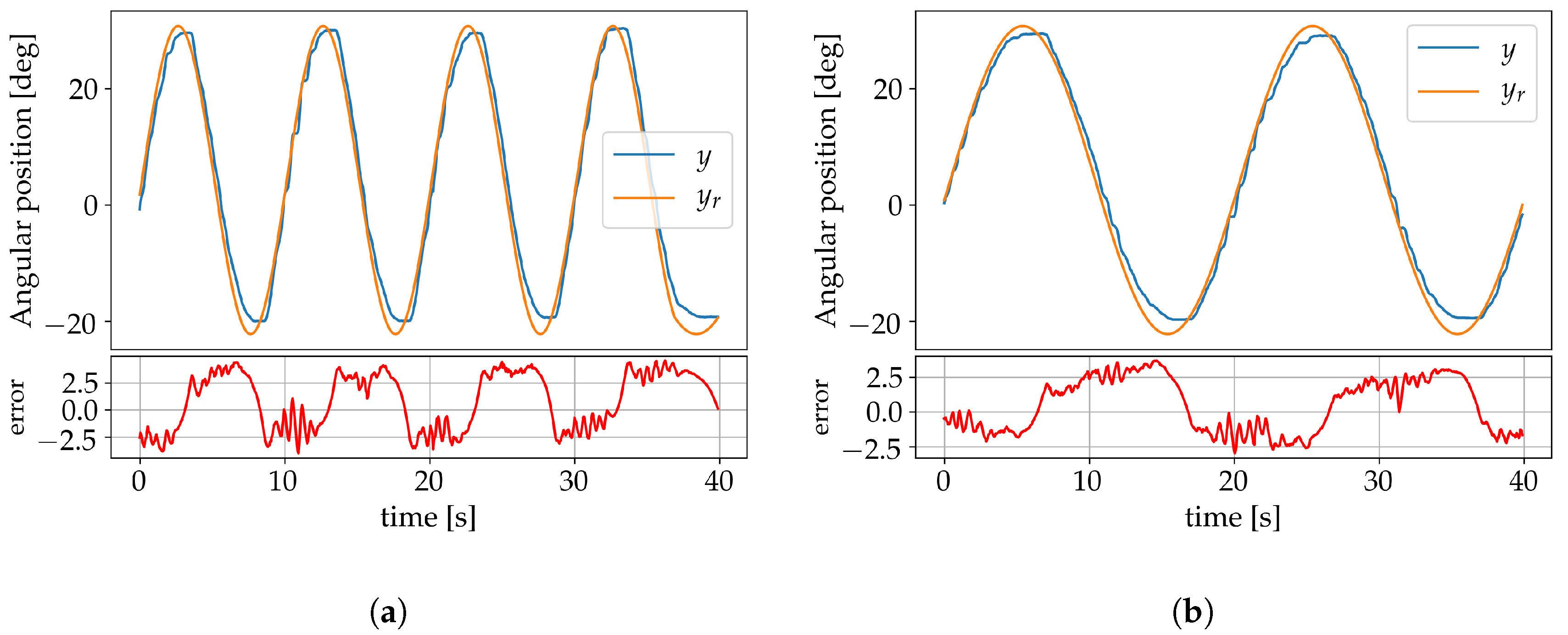

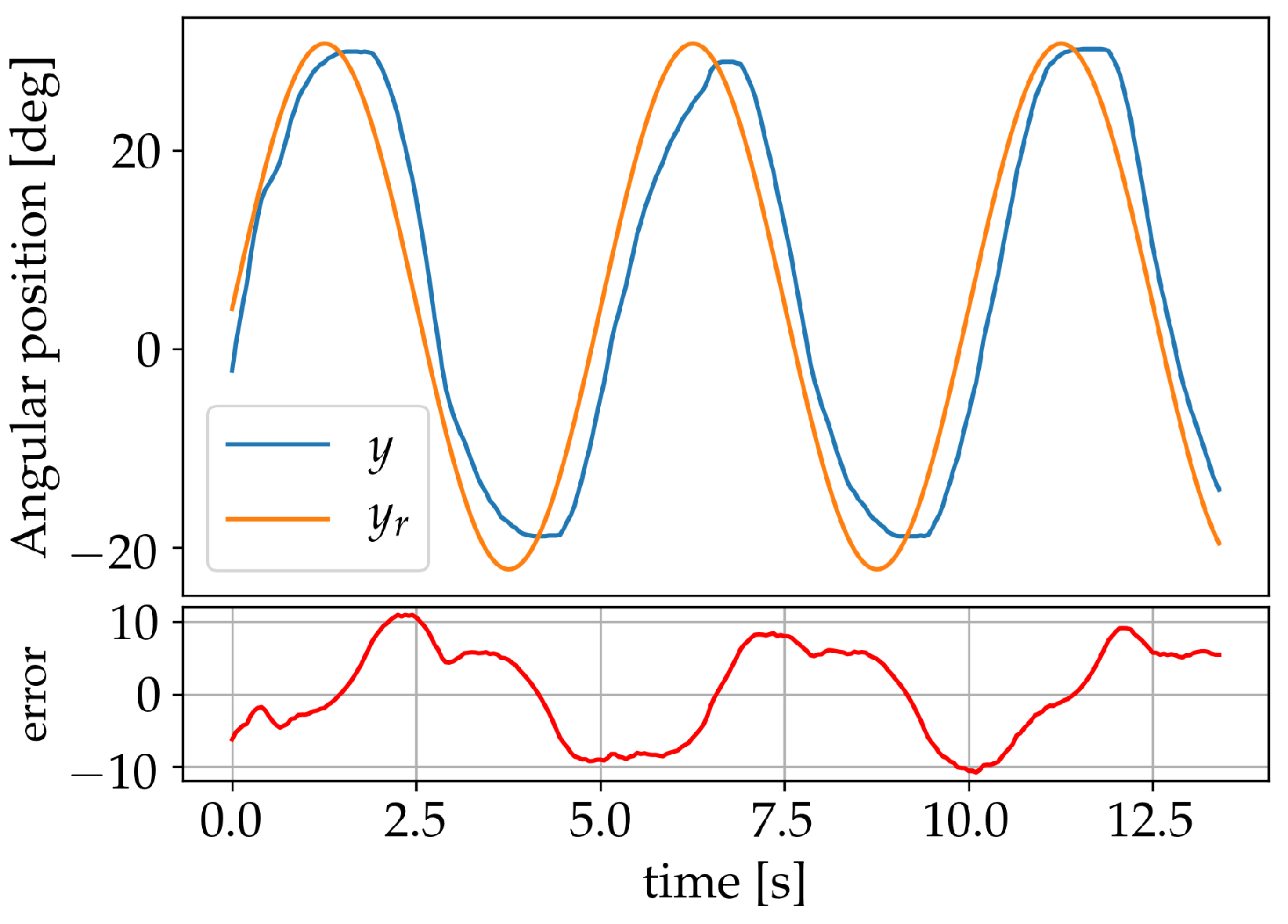

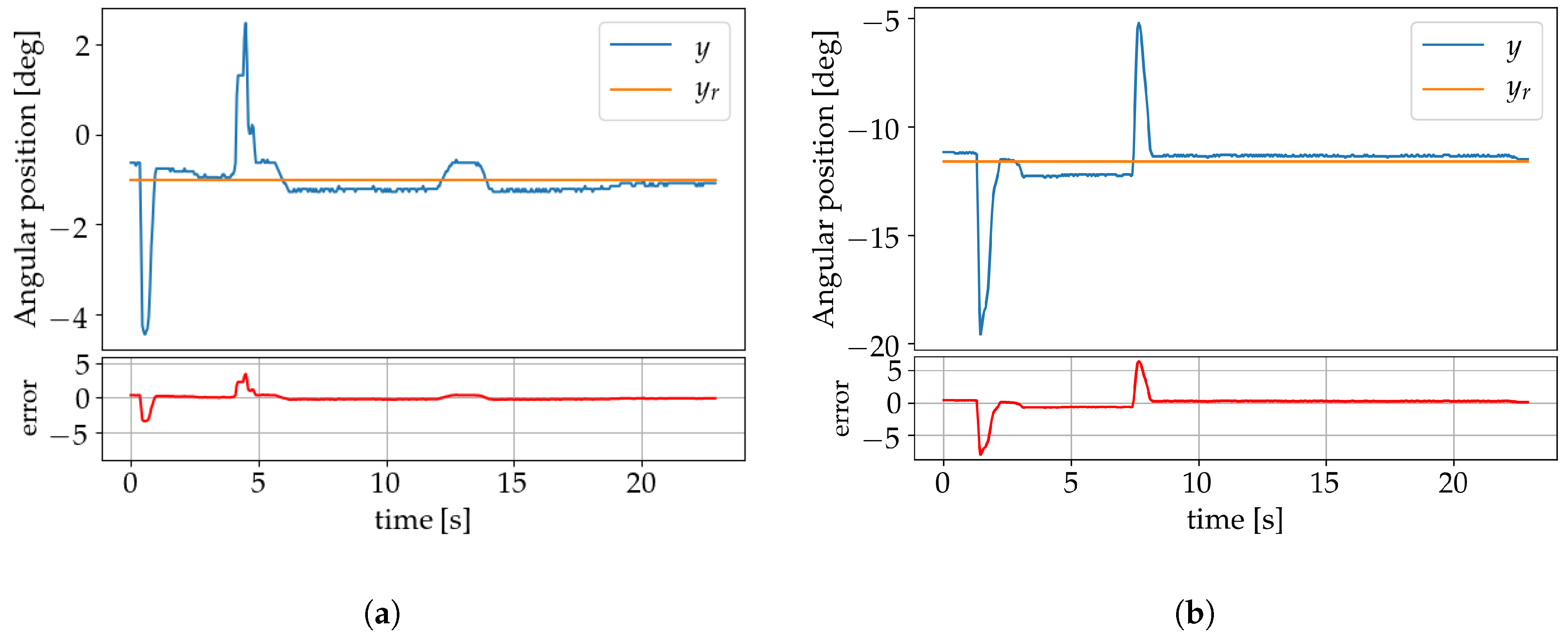

4. Results

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| (A)NN | (artificial) neural network |
| 1-DOF | one degree of freedom |
| MEMS | micro-electromechanical systems |
| MLP | multilayer perceptron |
| PID | proportional-integral-derivative |
| PWM | pulse-width modulation |
| SMA | shape memory alloy |

Appendix A. Motion Capture System

Appendix B. 1-DOF Manipulator

Appendix C. Back-Propagation



| Model No. | Diameter | Length | Operational Current | Transition Temperature | Pull Force | Resistance |
|---|---|---|---|---|---|---|
| STD-005-90 | 0.13 mm | 305 mm | 200 mA | 90 °C | 0.22 kg | 0.75 Ω/cm |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gómez-Espinosa, A.; Castro Sundin, R.; Loidi Eguren, I.; Cuan-Urquizo, E.; Treviño-Quintanilla, C.D. Neural Network Direct Control with Online Learning for Shape Memory Alloy Manipulators. Sensors 2019, 19, 2576. https://doi.org/10.3390/s19112576
