Audio-Based System for Automatic Measurement of Jump Height in Sports Science

Abstract

1. Introduction

2. Materials and Methods

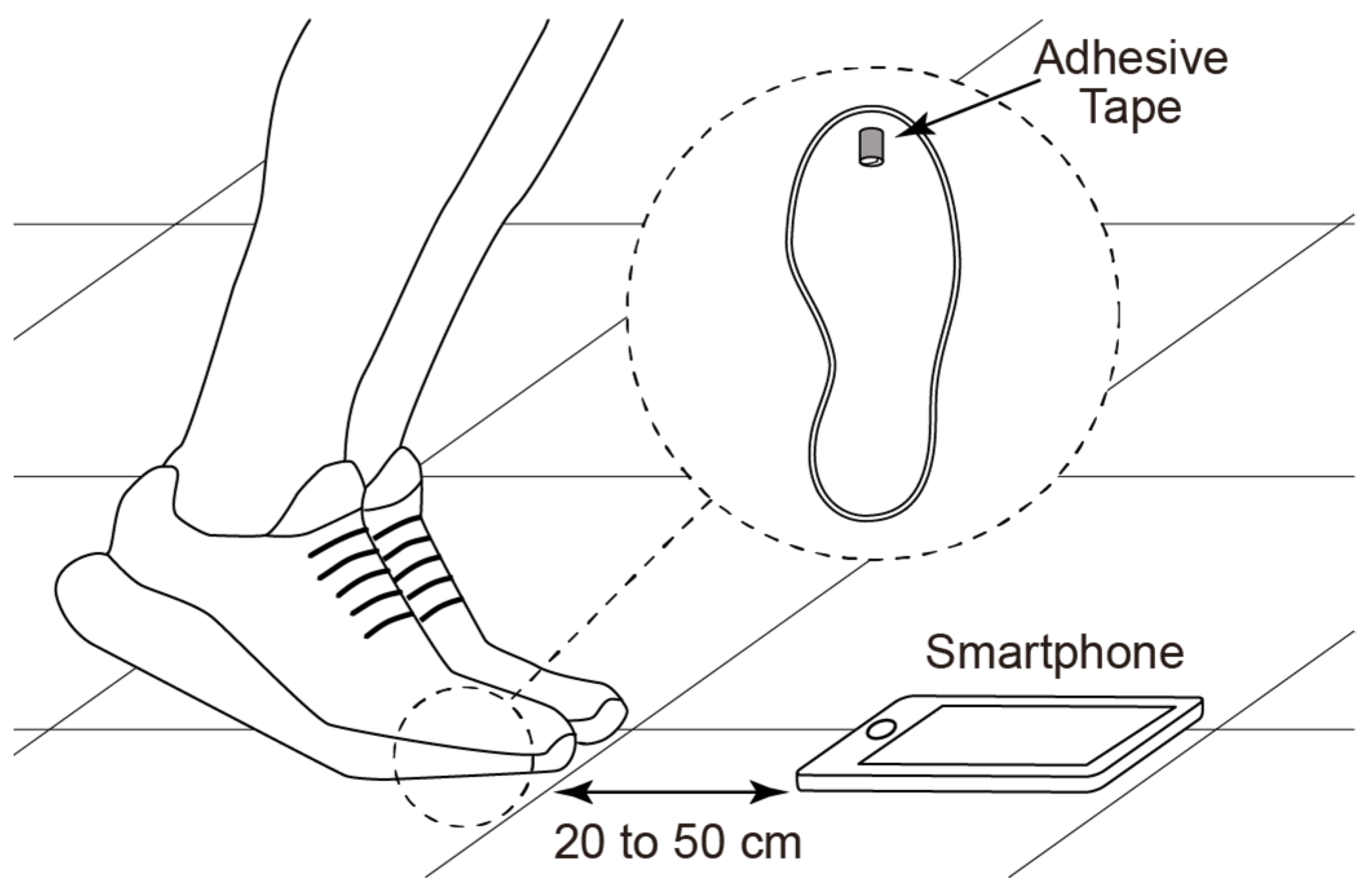

2.1. Experimental Procedure

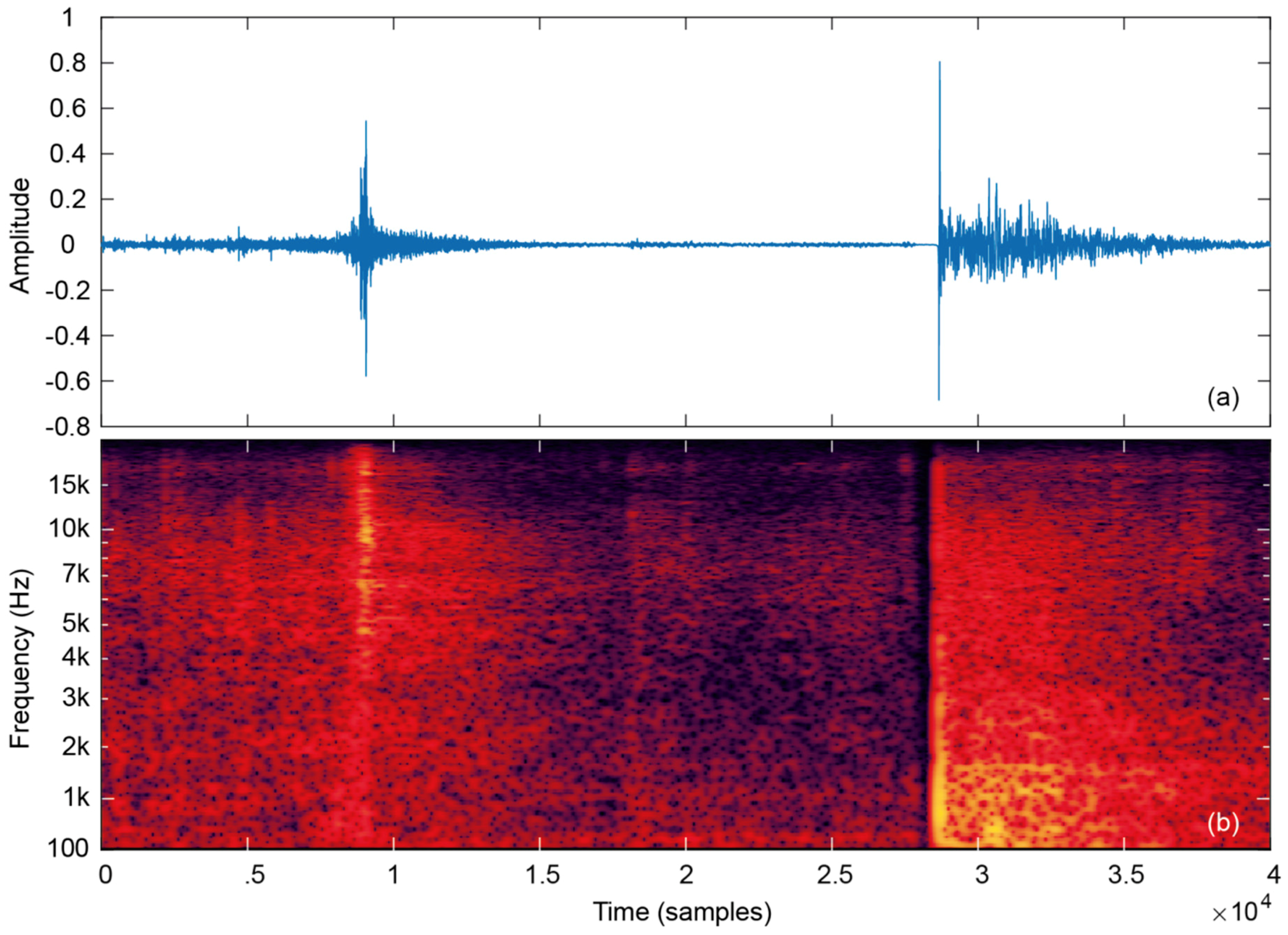

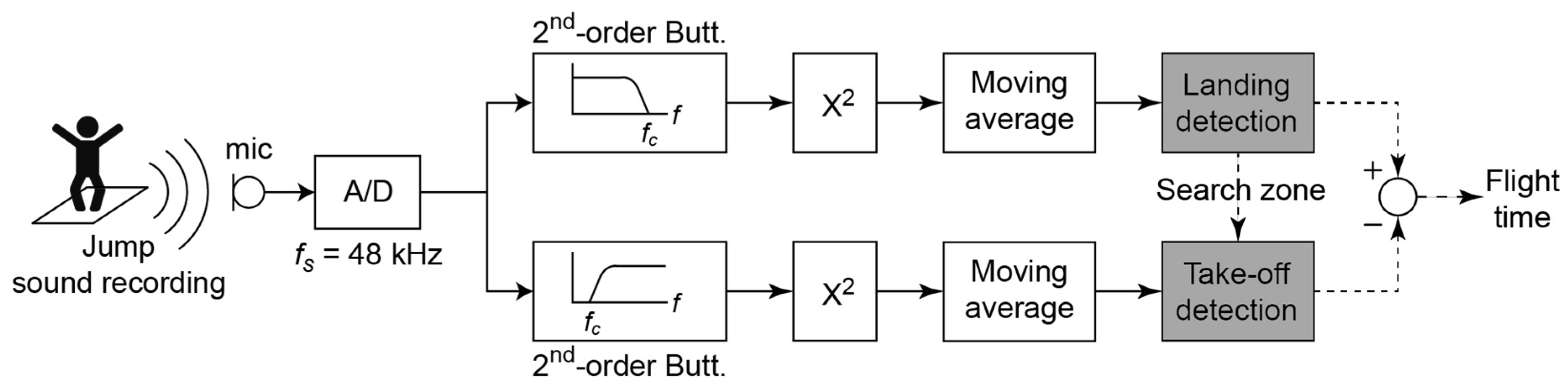

2.2. Audio Signal Processing

- Immune to background noise in typical usage scenarios

- Unaffected by the reverberation time of typical usage scenarios

- Of moderate complexity, so that it can run in real time on smartphones

- Fully unsupervised

2.3. Noise and Signal Levels of Recording Scenarios

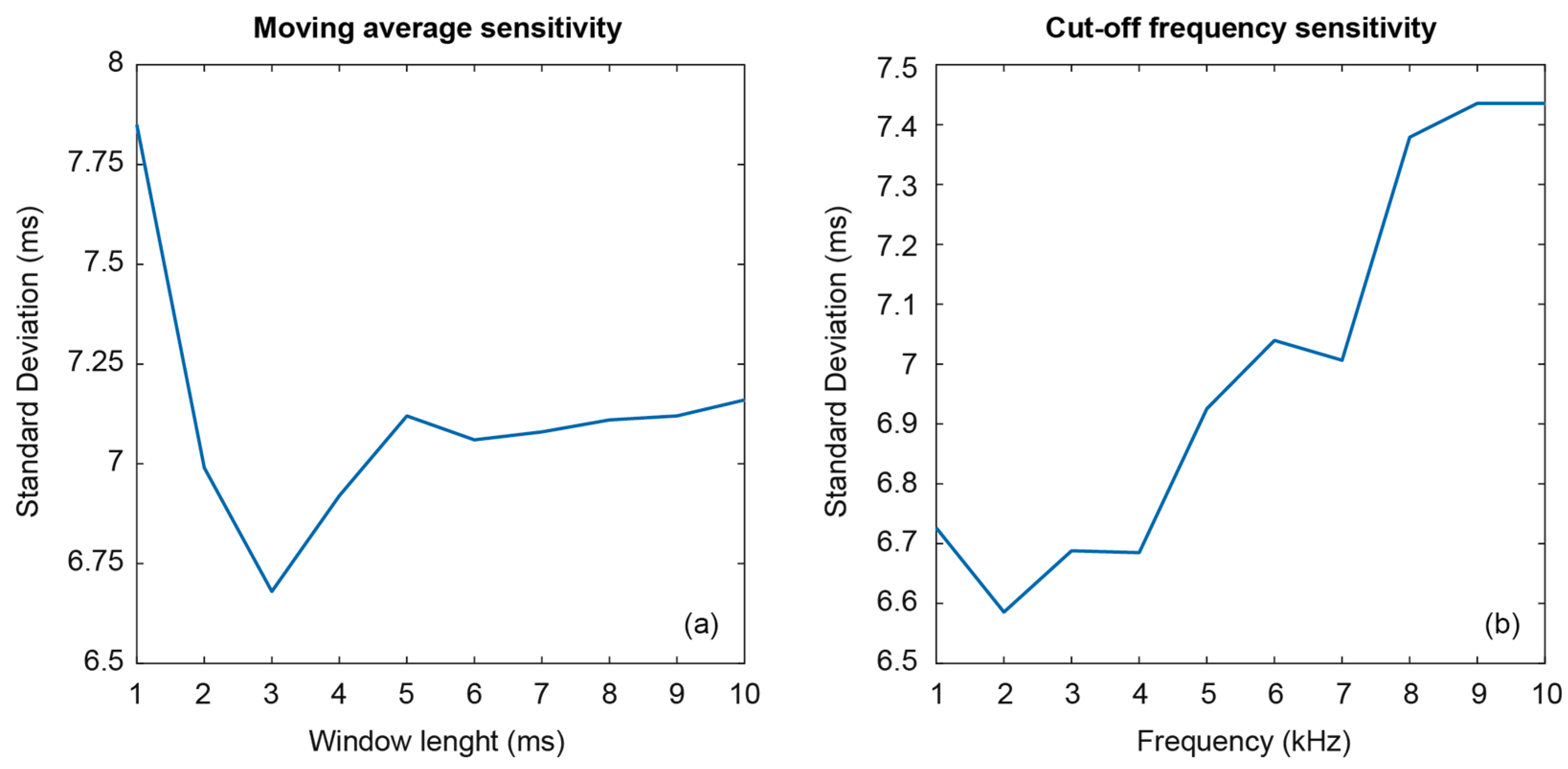

2.4. Optimization of the Algorithm Parameters

2.5. Final Algorithm for Flight Time Extraction

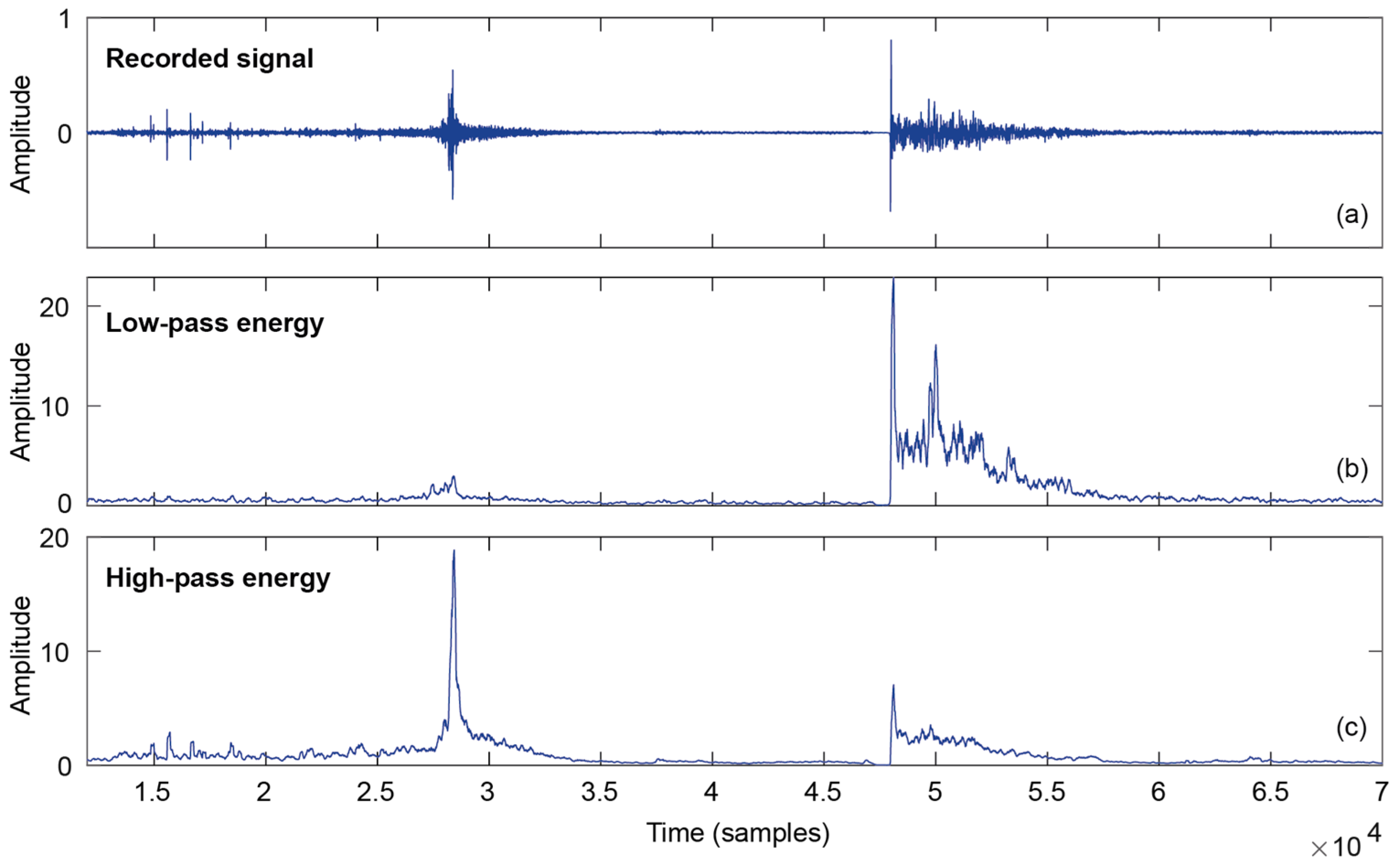

- The incoming signal is split into two paths: one is filtered with a second-order Butterworth low-pass filter and the other with a second-order Butterworth high-pass filter, both with a cut-off frequency of 3 kHz.

- The signal energy in each path is computed and smoothed with a 3 ms moving average, equivalent to 144 samples at a sampling rate of fs = 48 kHz.

- The landing event is detected in the low-frequency path with a fixed threshold. A fine-tuning step then locates the onset of the impulsive landing sound precisely by searching for a large step in the time-domain signal.

- To find the take-off event, a search zone preceding the landing event is defined. This zone covers the range of realistic flight times, from the shortest to the longest, and has been fixed at 0.2 to 0.8 s before landing.

- The take-off event is taken as the instant of maximum averaged energy in the high-frequency path within the search zone.

- Finally, the flight time is computed as the time difference between the landing and take-off events.
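
The steps above can be sketched in Python as follows. This is a minimal, standard-library-only illustration under stated assumptions, not the authors' implementation, and all function names are hypothetical. The biquad coefficients use the common bilinear-transform (RBJ cookbook) form with Q = 1/√2, which yields a second-order Butterworth response; the moving average is centered so the smoothed energy is not delayed; the landing threshold is a caller-supplied parameter because its value is not given here; and the fine-tuning rule (first sample-to-sample step of at least half the largest step near the coarse detection) is a hypothetical stand-in for the "large step" search. Jump height is derived with the usual flight-time equation h = g·t²/8, which assumes the same body posture at take-off and landing.

```python
import math


def butter2(fc, fs, kind):
    """Second-order Butterworth biquad (bilinear transform, RBJ form with Q = 1/sqrt(2))."""
    w0 = 2.0 * math.pi * fc / fs
    c, alpha = math.cos(w0), math.sin(w0) / math.sqrt(2.0)  # alpha = sin(w0) / (2Q)
    if kind == "low":
        b = [(1 - c) / 2, 1 - c, (1 - c) / 2]
    else:  # "high"
        b = [(1 + c) / 2, -(1 + c), (1 + c) / 2]
    a0 = 1 + alpha
    return [v / a0 for v in b], [1.0, -2 * c / a0, (1 - alpha) / a0]


def biquad(x, b, a):
    """Direct-form I filtering of the sample list x (a[0] is normalized to 1)."""
    y, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1, y2, y1 = x1, xn, y1, yn
        y.append(yn)
    return y


def smoothed_energy(x, n):
    """Centered n-sample moving average of x**2 (assumed centered to avoid delay)."""
    prefix = [0.0]
    for v in x:
        prefix.append(prefix[-1] + v * v)
    half, out = n // 2, []
    for i in range(len(x)):
        lo, hi = max(0, i - half), min(len(x), i - half + n)
        out.append((prefix[hi] - prefix[lo]) / (hi - lo))
    return out


def flight_time(x, fs, landing_threshold, fc=3000.0, win_s=0.003, zone_s=(0.2, 0.8)):
    """Return (take_off_idx, landing_idx, flight_time_s), or None if no jump is found."""
    n = round(win_s * fs)  # 3 ms -> 144 samples at 48 kHz
    lp = smoothed_energy(biquad(x, *butter2(fc, fs, "low")), n)
    hp = smoothed_energy(biquad(x, *butter2(fc, fs, "high")), n)

    # Landing: first sample whose low-pass energy exceeds the fixed threshold
    # (threshold value assumed: it must be supplied by the caller).
    coarse = next((i for i, e in enumerate(lp) if e > landing_threshold), None)
    if coarse is None:
        return None
    # Fine-tuning (hypothetical rule): first large sample-to-sample step near the coarse hit.
    lo, hi = max(1, coarse - n), min(len(x), coarse + n)
    steps = [abs(x[i] - x[i - 1]) for i in range(lo, hi)]
    big = 0.5 * max(steps)
    landing = lo + next(k for k, s in enumerate(steps) if s >= big)

    # Take-off: maximum high-pass energy between 0.8 s and 0.2 s before landing.
    start = max(0, landing - round(zone_s[1] * fs))
    stop = landing - round(zone_s[0] * fs)
    if stop <= start:
        return None
    take_off = max(range(start, stop), key=hp.__getitem__)
    return take_off, landing, (landing - take_off) / fs


def jump_height(t_flight, g=9.81):
    """Flight-time method: h = g * t^2 / 8, in metres for t in seconds."""
    return g * t_flight ** 2 / 8.0
```

For a recording `x` (a list of samples at 48 kHz), `flight_time(x, 48000, landing_threshold=...)` returns the take-off and landing sample indices and the flight time in seconds, which `jump_height` converts to metres. A production version would more likely use vectorized filtering, but the pure-Python loops keep each step of the described pipeline visible.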

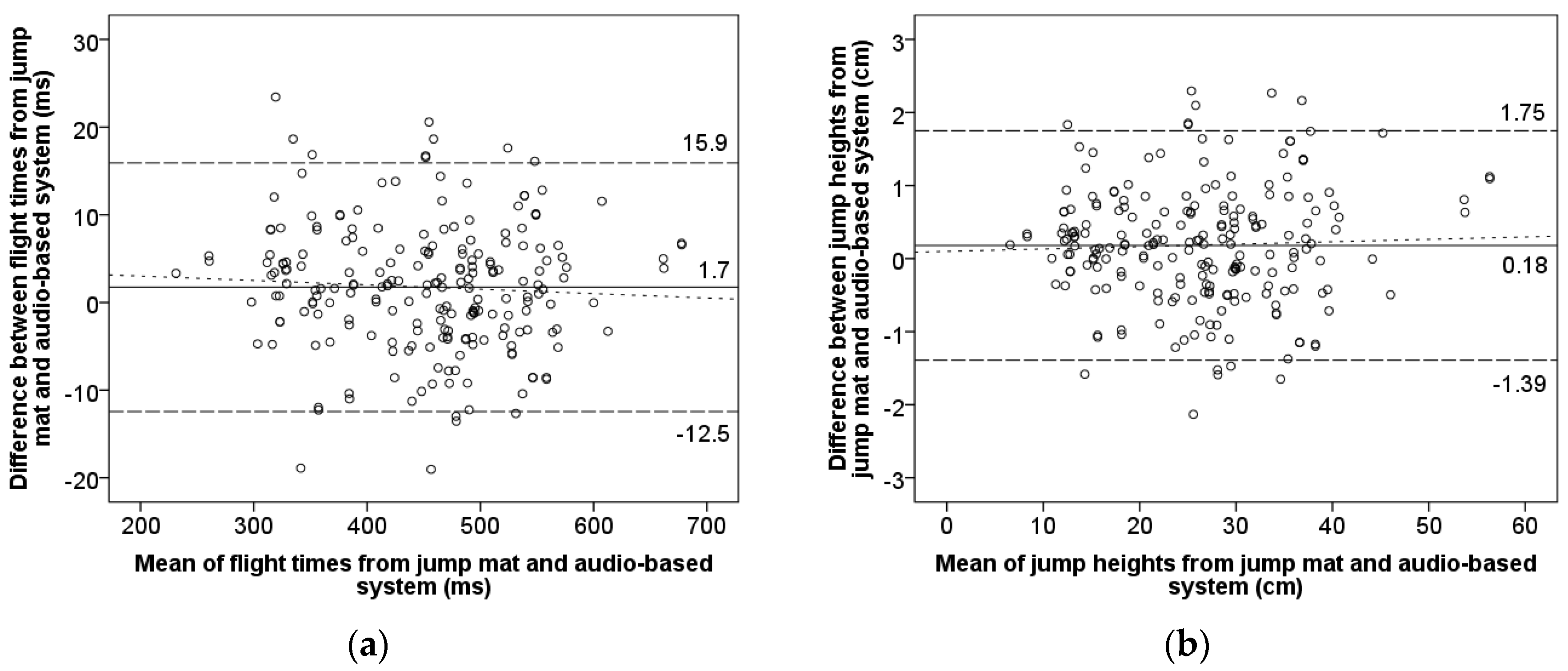

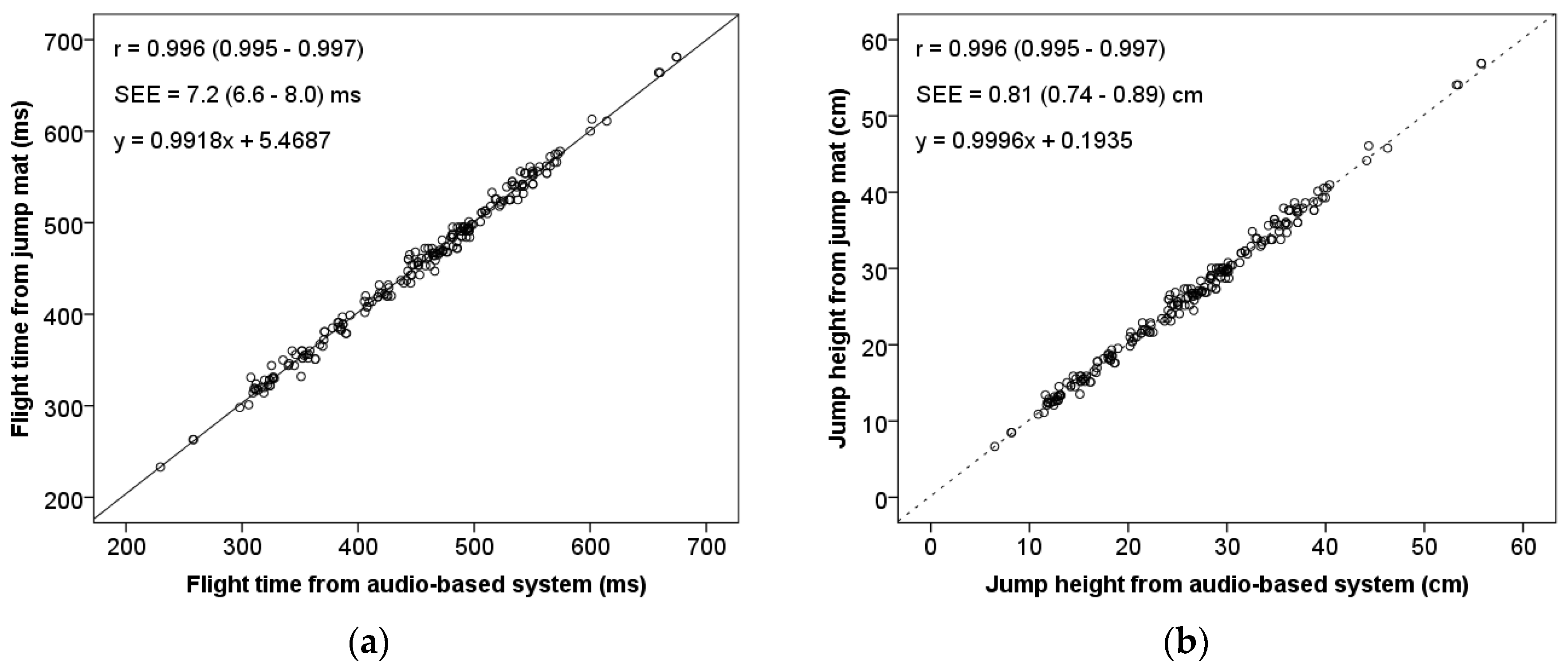

2.6. Instrument Validation

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Scenario | Number of Jumps | Reverberation Time, T60 (s) | Description |
|---|---|---|---|
| Gymnasium | 80 | 1.3 | Large-volume room with many people and plenty of sports equipment. Frequent impulsive noises of moderate intensity and constant background noise. |
| Workout room | 75 | 0.7 | Medium-sized room with a moderate number of people. Background music is usually playing, with occasional high-intensity impulsive noises. |
| Noisy corridor | 35 | 0.6 | Elongated room with few people. High background noise from the hum of computers in an adjacent room. |
| Small fitness room | 35 | 0.35 | Small room with small groups of people. Low-level impulsive noises and moderate background noise, mainly from ambient music. |

| Scenario | Background Noise, LP Path (dB) | Landing Signal Level (dB) | SNR Landing (dB) | Background Noise, HP Path (dB) | Take-off Signal Level (dB) | SNR Take-off (dB) |
|---|---|---|---|---|---|---|
| Gymnasium | −29.3 | −11.2 | 18.1 | −37.4 | −13.0 | 24.4 |
| Workout room | −28.0 | −10.5 | 17.5 | −36.0 | −13.3 | 22.7 |
| Noisy corridor | −26.8 | −8.3 | 18.5 | −38.4 | −15.2 | 23.2 |
| Small fitness room | −37.4 | −9.1 | 28.3 | −35.7 | −12.8 | 22.9 |
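
The SNR columns can be checked arithmetically: because the signal and noise levels of each path are expressed on the same dB scale, the SNR is simply their difference, e.g. −11.2 − (−29.3) = 18.1 dB for landings in the gymnasium. The short Python check below reproduces every SNR entry from the level columns; the dictionary layout and the `snr_db` helper are illustrative assumptions, while the numbers are copied from the table.

```python
# Levels in dB copied from the table: (noise LP, landing level, noise HP, take-off level).
LEVELS = {
    "Gymnasium": (-29.3, -11.2, -37.4, -13.0),
    "Workout room": (-28.0, -10.5, -36.0, -13.3),
    "Noisy corridor": (-26.8, -8.3, -38.4, -15.2),
    "Small fitness room": (-37.4, -9.1, -35.7, -12.8),
}


def snr_db(signal_db, noise_db):
    """Both levels share one dB reference, so the power ratio becomes a difference."""
    return signal_db - noise_db


for room, (n_lp, s_land, n_hp, s_to) in LEVELS.items():
    print(f"{room}: landing {snr_db(s_land, n_lp):.1f} dB, take-off {snr_db(s_to, n_hp):.1f} dB")
```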

| Jump Mat vs. Audio-Based System | Flight Time | Jump Height |
|---|---|---|
| ICC (2,1)# (95% CI) | 0.996 (0.995–0.997) | 0.996 (0.995–0.997) |
| ICC (2,1)§ (95% CI) | 0.996 (0.995–0.997) | 0.996 (0.995–0.997) |
| Cronbach’s α | 0.998 | 0.998 |
| Mean difference (95% CI) | 1.7 * (0.8–2.7) ms | 0.18 * (0.08–0.29) cm |
| SWC (95% CI) | 17.1 (15.6–18.9) ms | 1.9 (1.7–2.1) cm |
| SEE (95% CI) | 7.2 (6.62–8.01) ms | 0.81 (0.74–0.89) cm |
| Standardized SEE (95% CI) | 0.09 (0.07–0.10) | 0.08 (0.07–0.10) |
| SEE Effect Size | Trivial | Trivial |
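
Some of the agreement statistics above can be illustrated with a short Python sketch. It assumes conventions commonly used in sports-science validity analyses, which this table does not spell out: the mean difference is taken as practical minus criterion (audio minus jump mat); SEE is the standard error of the estimate of an ordinary least-squares line predicting the criterion from the practical measure; the standardized SEE divides SEE by the criterion's between-subject SD; SWC (smallest worthwhile change) is 0.2 × that SD; and standardized SEE magnitudes use thresholds of 0.1, 0.3, 0.6, 1.0 and 2.0. The function names are hypothetical, and ICC and Cronbach's α are omitted for brevity.

```python
import math
import statistics


def validity_stats(criterion, practical):
    """Sketch of validity statistics for paired measurements (e.g. jump mat vs. audio)."""
    n = len(criterion)
    # Assumed sign convention: practical minus criterion.
    mean_diff = statistics.mean([p - c for c, p in zip(criterion, practical)])

    # Least-squares line predicting the criterion from the practical measure.
    mx, my = statistics.mean(practical), statistics.mean(criterion)
    sxx = sum((x - mx) ** 2 for x in practical)
    sxy = sum((x - mx) * (y - my) for x, y in zip(practical, criterion))
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(practical, criterion))
    see = math.sqrt(ss_res / (n - 2))  # standard error of the estimate

    sd = statistics.stdev(criterion)  # between-subject SD of the criterion
    return {
        "mean_diff": mean_diff,
        "see": see,
        "std_see": see / sd,  # standardized SEE
        "swc": 0.2 * sd,  # assumed convention: smallest worthwhile change = 0.2 x SD
    }


def see_magnitude(std_see):
    """Assumed magnitude thresholds for a standardized SEE."""
    labels = ["Trivial", "Small", "Moderate", "Large", "Very large"]
    for limit, label in zip([0.1, 0.3, 0.6, 1.0, 2.0], labels):
        if std_see < limit:
            return label
    return "Extremely large"
```

Under these assumptions, standardized SEE values such as 0.09 and 0.08 fall below the 0.1 threshold and are labelled trivial, in line with the last row of the table.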

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pueo, B.; Lopez, J.J.; Jimenez-Olmedo, J.M. Audio-Based System for Automatic Measurement of Jump Height in Sports Science. Sensors 2019, 19, 2543. https://doi.org/10.3390/s19112543
