Many scholars have analyzed the synthesis problem of evidence theory and have proposed a series of effective solutions. One view holds that the synthesis rule itself produces results that do not agree with reality; holders of this view attempt to improve the evidence combination rule by modifying how the conflict information is handled. Another view holds that the inaccuracy of the synthesis results derives from the evidence sources rather than from the synthesis rule of traditional evidence theory [36,37,38]. A more recent view holds that the error comes from an incomplete identification framework [39]. In this paper, evidence theory is improved by modifying both the combination rule and the evidence bodies [40].

4.1. Weight Distribution of Conflicting Evidence

At present, conflicting evidence is mainly weighted by methods based on either the distance between two pieces of evidence [41] or the similarity coefficient [42]. These commonly used methods usually consider only the mutual support between pieces of evidence and ignore the role of each piece of evidence itself in decision making [43,44]. When a piece of evidence is highly uncertain, its impact on decision making is low even if the conflict it causes is low. Therefore, provided that the evidence is consistent with the decision, the less uncertain the evidence is, the more useful it is for making the decision, and the higher its weight should be when the conflict weights are allocated.

Let Θ be an identification frame. The basic probability assignment of any event in the identification frame, given in Equation (9), is converted into a fuzzy membership as follows:

Then, the distance between any two pieces of evidence is defined as:

where ∧ represents intersection (taking the smaller value) and ∨ represents union (taking the larger value). To prevent the denominator of the fraction from being 0, the focal elements of the framework must be identified in advance. The evidence distance represents the gap between two bodies of evidence and is another way of expressing evidence conflict. A distance of 0 means that there is no conflict between the two pieces of evidence: they are completely consistent, and their degree of consistency is 1. A distance of 1 means that the two pieces of evidence are in complete conflict and share no similarity, so their degree of consistency is 0.
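The distance described above can be sketched in Python. Because the conversion of Equation (10) into fuzzy memberships is not reproduced in this text, the sketch assumes that the BPA masses are used directly as membership grades and that the distance takes the form "one minus the sum of element-wise minima over the sum of element-wise maxima". Both are assumptions, and `fuzzy_distance` is a hypothetical helper name.

```python
def fuzzy_distance(mu_i: dict, mu_j: dict) -> float:
    """Distance between two pieces of evidence given as membership grades.

    Assumed form (hypothetical, not copied from the paper):
        d = 1 - sum(min(mu_i, mu_j)) / sum(max(mu_i, mu_j))
    where min plays the role of intersection and max the role of union.
    """
    keys = set(mu_i) | set(mu_j)  # focal elements appearing in either body
    inter = sum(min(mu_i.get(k, 0.0), mu_j.get(k, 0.0)) for k in keys)
    union = sum(max(mu_i.get(k, 0.0), mu_j.get(k, 0.0)) for k in keys)
    if union == 0.0:
        # Zero denominator: no focal element carries any membership.
        raise ValueError("distance undefined: both membership vectors are zero")
    return 1.0 - inter / union


# Membership grades are taken directly from the BPA masses (an assumption).
print(fuzzy_distance({"A": 0.6, "B": 0.4}, {"A": 0.6, "B": 0.4}))  # -> 0.0 (identical)
print(fuzzy_distance({"A": 1.0}, {"B": 1.0}))                      # -> 1.0 (complete conflict)
```

The two printed cases reproduce the limiting behavior described in the text: identical evidence has distance 0, and evidence with disjoint support has distance 1.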

Thus, the similarity coefficient of the two pieces of evidence is s_ij = 1 − d_ij, where d_ij denotes the distance between the ith and jth pieces of evidence.

Assuming that the system obtains m pieces of evidence, the similarity coefficient of every pair can be calculated with the above formula, which gives the similarity matrix:

The degree of consistency between two pieces of evidence reflects their mutual support [45,46]; therefore, the support degree of each piece of evidence can be obtained by summing the corresponding row or column of the similarity matrix [47]:

Clearly, the more a piece of evidence is supported by the other evidence, the higher its credibility, and vice versa. Inter-evidence support is therefore often used to represent the credibility of a single piece of evidence. Normalizing the support degree in Equation (14) gives the credibility of each piece of evidence, that is, its support degree divided by the sum of the support degrees of all the evidence.
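The chain from similarity coefficients to credibility can be sketched as follows. This is a minimal illustration under stated assumptions: the distance uses the same hypothetical min/max form as above, the similarity is taken as one minus the distance, and the support of a piece of evidence excludes its self-similarity on the diagonal. The helper names are hypothetical.

```python
def fuzzy_distance(mu_i, mu_j):
    """Assumed min/max distance: 1 - sum(min) / sum(max)."""
    keys = set(mu_i) | set(mu_j)
    inter = sum(min(mu_i.get(k, 0.0), mu_j.get(k, 0.0)) for k in keys)
    union = sum(max(mu_i.get(k, 0.0), mu_j.get(k, 0.0)) for k in keys)
    return 1.0 - inter / union


def similarity_matrix(bodies):
    """S[i][j] = 1 - d_ij (assumed similarity), with 1 on the diagonal."""
    n = len(bodies)
    return [[1.0 if i == j else 1.0 - fuzzy_distance(bodies[i], bodies[j])
             for j in range(n)] for i in range(n)]


def support_and_credibility(bodies):
    """Return (support degrees, normalized credibilities)."""
    S = similarity_matrix(bodies)
    # Support: sum of row i without the self-similarity S[i][i] (assumption).
    support = [sum(row) - row[i] for i, row in enumerate(S)]
    total = sum(support)
    if total == 0.0:
        # Every pair is in complete conflict: fall back to equal credibility.
        return support, [1.0 / len(bodies)] * len(bodies)
    credibility = [s / total for s in support]  # normalization step
    return support, credibility


# Illustrative data: the third body contradicts the other two.
bodies = [{"A": 0.9, "B": 0.1}, {"A": 0.8, "B": 0.2}, {"B": 1.0}]
support, crd = support_and_credibility(bodies)
print([round(c, 3) for c in crd])
```

With these inputs the third body, which is poorly supported by the others, receives the smallest credibility, and the credibilities sum to 1.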

In recent decades, various uncertainty measures have been proposed, e.g., Yager's dissonance measure, Shannon entropy, and other measures derived from the Boltzmann–Gibbs (BG) entropy of thermodynamics and statistical mechanics, which have been used as indicators of the uncertainty associated with a probability density function (PDF). In this paper, Deng entropy is adopted to measure the uncertainty of a Basic Probability Assignment (BPA). Deng entropy can be seen as a generalized Shannon entropy [48]: when the BPA degenerates into a probability distribution, Deng entropy degenerates into Shannon entropy. Benefiting from the above research, the uncertainty measure of probability has a widely accepted solution [49]. Assume that the recognition framework contains n pieces of evidence with corresponding basic probability assignments; the Deng entropy of the ith piece of evidence, whose mass function is denoted m, is defined as follows:

$$E_d(m) = -\sum_{A \subseteq \Theta} m(A)\,\log_2 \frac{m(A)}{2^{|A|}-1},$$

where m is a mass function defined on the frame of discernment Θ, A is a focal element of m, and |A| is the cardinality of A. As the definition shows, Deng entropy is formally similar to the classical Shannon entropy; however, the belief assigned to each focal element A is divided by the term $2^{|A|}-1$, which represents the number of potential states in A (the empty set excluded). A simple transformation shows that Deng entropy is actually a composite measure:

$$E_d(m) = \sum_{A \subseteq \Theta} m(A)\,\log_2\left(2^{|A|}-1\right) - \sum_{A \subseteq \Theta} m(A)\,\log_2 m(A),$$

where the first term, $\sum_{A} m(A)\log_2(2^{|A|}-1)$, is interpreted as a measure of the total non-specificity of the mass function m, and the second term, $-\sum_{A} m(A)\log_2 m(A)$, measures the discord of the mass function among its focal elements.
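Deng entropy and its split into non-specificity and discord can be checked numerically. The sketch below encodes focal elements as Python `frozenset`s and skips zero masses (the usual 0 · log 0 = 0 convention); the function names are illustrative, not from the paper.

```python
import math


def deng_entropy(bpa: dict) -> float:
    """Deng entropy E_d(m) = -sum m(A) * log2(m(A) / (2**|A| - 1)).

    bpa maps non-empty frozensets (focal elements) to masses.
    """
    total = 0.0
    for focal, mass in bpa.items():
        if not focal:
            raise ValueError("the empty set cannot be a focal element")
        if mass > 0.0:  # convention: 0 * log 0 = 0
            total -= mass * math.log2(mass / (2 ** len(focal) - 1))
    return total


def deng_decomposition(bpa: dict) -> tuple:
    """Return (non-specificity, discord); their sum equals deng_entropy(bpa)."""
    nonspec = sum(m * math.log2(2 ** len(a) - 1) for a, m in bpa.items() if m > 0.0)
    discord = -sum(m * math.log2(m) for m in bpa.values() if m > 0.0)
    return nonspec, discord


# Singletons only: Deng entropy reduces to Shannon entropy.
print(deng_entropy({frozenset("A"): 0.5, frozenset("B"): 0.5}))  # -> 1.0
# All mass on {A, B}: pure non-specificity, log2(3) bits (approximately 1.585).
print(deng_entropy({frozenset("AB"): 1.0}))
```

The first case illustrates the degeneration to Shannon entropy mentioned above; the second shows that a BPA with no discord can still carry uncertainty through non-specificity.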

Deng entropy measures the uncertainty of information: the higher the Deng entropy, the higher the degree of uncertainty. In a decision-making system, the more uncertain a piece of information is (high Deng entropy), the lower its influence on decision making should be; conversely, the more certain it is (low Deng entropy), the higher its influence should be. Evidence consistency reflects how well a piece of evidence agrees with the overall decision-making process, and hence its degree of conflict with the other evidence: the higher the consistency of a piece of evidence, the lower its conflict with the other evidence, and the more likely it is to yield correct decisions. In the decision system, the average basic probability assignment of each subset (decision attribute) of the identification framework reflects the correct decision trend of the evidence set. If the basic probability assignment [50] that a piece of evidence gives to the subsets of the identification framework is far from the average basic probability assignment, the consistency of that evidence is low; if the distance is small, its consistency is high.

Consider n pieces of evidence on the identification framework Θ, with corresponding basic probability assignments m_1, …, m_n. The evidence consistency is defined as [51]:

where the averaged term denotes the average basic probability assignment of each subset of the identification framework, taken over the n pieces of evidence.
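The idea of measuring consistency as closeness to the average BPA can be illustrated with a short sketch. The consistency formula of [51] is not reproduced in this text, so the score below is a hypothetical stand-in: one minus the Euclidean distance to the average BPA, divided by √2 (the largest Euclidean distance between two mass vectors that each sum to 1), which keeps the score in [0, 1].

```python
import math


def average_bpa(bodies):
    """Average mass of each focal element over all n pieces of evidence."""
    keys = set().union(*bodies)
    n = len(bodies)
    return {k: sum(b.get(k, 0.0) for b in bodies) / n for k in keys}


def consistency(bodies):
    """Hypothetical consistency score: 1 - ||m_i - m_avg||_2 / sqrt(2).

    A body equal to the average scores 1; a body far from it scores near 0.
    """
    avg = average_bpa(bodies)
    scores = []
    for b in bodies:
        d = math.sqrt(sum((b.get(k, 0.0) - avg[k]) ** 2 for k in avg))
        scores.append(1.0 - d / math.sqrt(2.0))
    return scores


# Illustrative data: the third body disagrees with the majority trend.
print(consistency([{"A": 0.9, "B": 0.1}, {"A": 0.8, "B": 0.2}, {"B": 1.0}]))
```

Any other bounded distance could replace the Euclidean one here; the property that matters for the argument above is only that a larger distance from the average yields a lower consistency.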

Evidence validity describes the influence of a piece of evidence on making correct decisions and can be measured comprehensively by its consistency and its certainty. When both the certainty and the consistency of a piece of evidence are high, the evidence is of great help in making correct decisions and its validity is high. When its certainty is high but its consistency is low, the evidence would still strongly influence the decision, so its validity should be low, to prevent such evidence from adversely affecting correct decision making. The evidence validity can be defined as:

Since evidence validity reflects the influence of the evidence itself on correct decision making, whereas credibility is based on the similarity between one piece of evidence and the other evidence, this paper redefines the weight distribution of conflicting evidence by combining evidence validity and evidence credibility as follows:

This weight distribution method increases the weight of conflicting evidence that helps make correct decisions and reduces the weight of conflicting evidence that may lead to wrong decisions, thereby achieving better convergence.

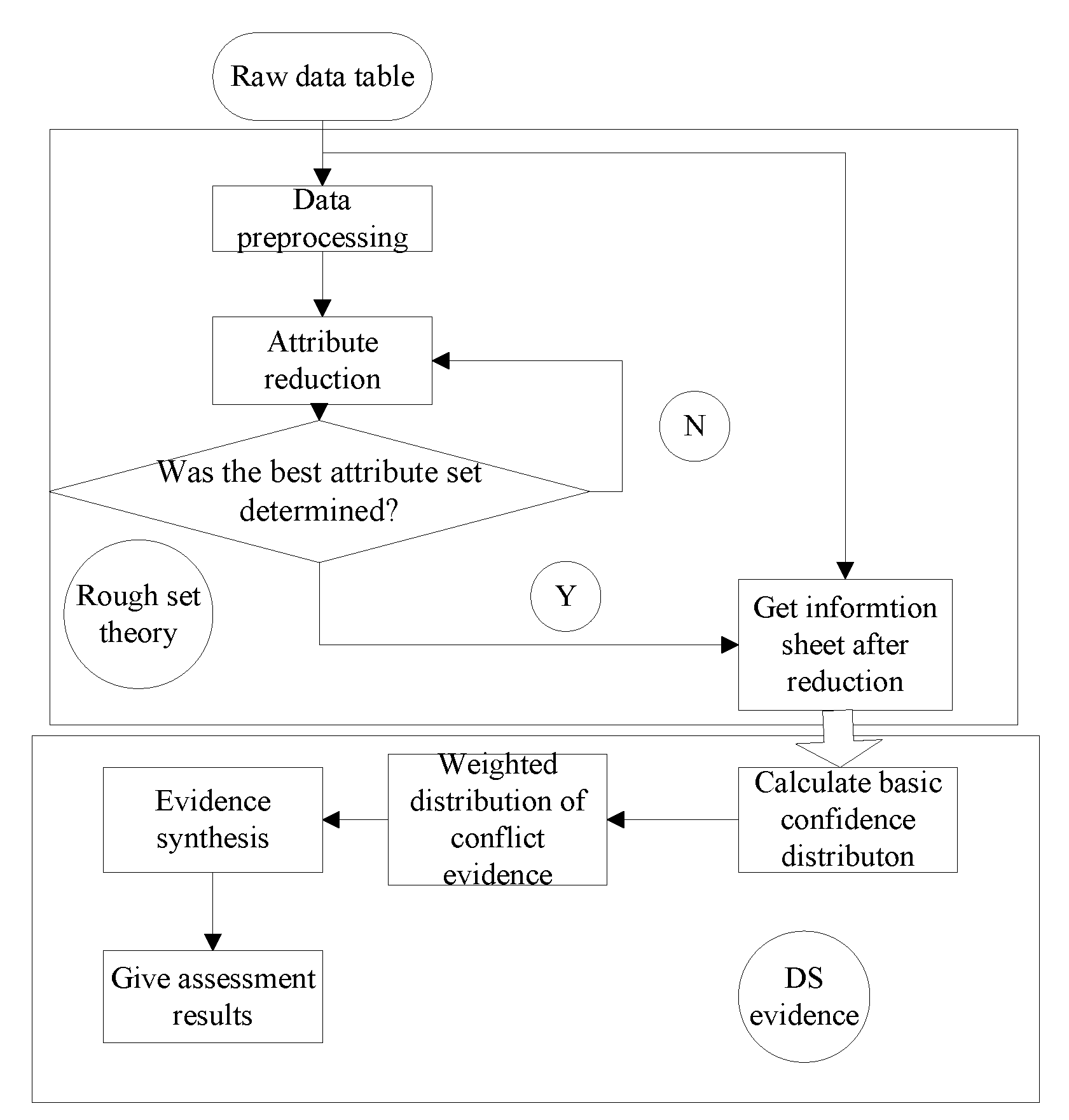

4.2. Steps to Improve the Method

According to the new conflict evidence weights, an improved conflict evidence synthesis method can be obtained. The specific steps of this method are as follows:

Step 1: For the n pieces of evidence within the recognition framework, calculate the similarity coefficient between every two pieces of evidence and obtain the similarity matrix.

Step 2: Calculate the support degree of the ith piece of evidence from the similarity matrix, and normalize the support degrees to obtain the credibility of each piece of evidence.

Step 3: Calculate the Deng entropy and the consistency of each piece of evidence, and obtain the evidence validity according to Equation (18).

Step 4: Taking into account the influence of evidence credibility and evidence validity on the conflicting evidence, calculate the weight of each piece of evidence according to Equation (19).

Step 5: Using this new weight distribution for the conflicting evidence, redefine the conflict distribution function f(A) to obtain a new combination rule:

where

.
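Steps 4 and 5 can be sketched in Python, with two loudly labeled assumptions because Equations (19) and the exact f(A) are not reproduced in this text. First, the hypothetical `combine_weights` makes each weight proportional to the product of credibility and validity. Second, the combination is assumed to take the form m(A) = p(A) + f(A), where p(A) is the unnormalized conjunctive combination of all bodies and f(A) = K · Σ w_i m_i(A) redistributes the conflict K in proportion to the weighted-average BPA. This is an illustration of the general "redistribute K through f(A)" idea, not the paper's exact rule.

```python
from functools import reduce
from itertools import product


def combine_weights(credibility, validity):
    """Hypothetical stand-in for Equation (19): weight proportional to credibility * validity."""
    raw = [c * v for c, v in zip(credibility, validity)]
    total = sum(raw)
    if total == 0.0:
        raise ValueError("all weights are zero")
    return [r / total for r in raw]


def weighted_conflict_rule(bodies, weights):
    """Assumed form m(A) = p(A) + f(A), with f(A) = K * sum_i w_i * m_i(A).

    p(A): conjunctive (unnormalized) combination of all bodies;
    K: total mass that lands on the empty set (the conflict).
    """
    p, K = {}, 0.0
    # Pick one focal element from each body and intersect them.
    for combo in product(*(b.items() for b in bodies)):
        focal = reduce(lambda x, y: x & y, (f for f, _ in combo))
        mass = 1.0
        for _, m in combo:
            mass *= m
        if focal:
            p[focal] = p.get(focal, 0.0) + mass
        else:
            K += mass
    # Weighted-average BPA used to redistribute the conflict.
    q = {}
    for w, b in zip(weights, bodies):
        for f, m in b.items():
            q[f] = q.get(f, 0.0) + w * m
    result = dict(p)
    for f, qa in q.items():
        result[f] = result.get(f, 0.0) + K * qa
    return result, K


A, B = frozenset("A"), frozenset("B")
w = combine_weights([0.5, 0.5], [1.0, 1.0])
res, K = weighted_conflict_rule([{A: 0.5, B: 0.5}, {A: 1.0}], w)
print(K, res[A], res[B])  # -> 0.5 0.875 0.125
```

Because the conjunctive masses plus K sum to 1 and the weights sum to 1, the redistributed result is again a valid BPA, which is the property any choice of f(A) in Step 5 must preserve.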

4.3. The Performance of Our Proposed Synthesis Method Compared to Other Methods

To verify the effectiveness of the new conflict evidence synthesis rule designed in this paper, a comparative analysis was conducted using, as an example, the basic probability assignments of the highly conflicting evidence given in Table 3. Because this evidence is in (near-)total conflict, as measured by the conflict factor K, the classical DS synthesis method cannot be used. We compare our proposed approach with several other methods, briefly introduced below.
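Why the classical DS rule breaks down can be seen directly from its normalization by 1 − K. The sketch below computes K and Dempster's rule for two BPAs; it uses Zadeh's classic two-doctor example as illustrative data (not the data of Table 3), with M, C, and T standing for three diagnoses.

```python
from itertools import product


def dempster(m1, m2):
    """Dempster's rule for two BPAs keyed by frozenset focal elements.

    Returns (combined BPA, conflict factor K). Raises when K = 1.
    """
    joint, K = {}, 0.0
    for (b, mb), (c, mc) in product(m1.items(), m2.items()):
        inter = b & c
        if inter:
            joint[inter] = joint.get(inter, 0.0) + mb * mc
        else:
            K += mb * mc  # mass assigned to contradictory pairs
    if 1.0 - K <= 0.0:
        raise ZeroDivisionError("K = 1: total conflict, Dempster's rule is undefined")
    return {a: v / (1.0 - K) for a, v in joint.items()}, K


# Zadeh's classic example (illustrative data, not Table 3).
m1 = {frozenset("M"): 0.99, frozenset("T"): 0.01}
m2 = {frozenset("C"): 0.99, frozenset("T"): 0.01}
combined, K = dempster(m1, m2)
print(round(K, 4), round(combined[frozenset("T")], 4))  # -> 0.9999 1.0
```

With K close to 1, normalization hands all the belief to T, which both sources considered very unlikely; with K exactly 1 the rule is undefined.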

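Yager’s formula [52] removes the normalization factor and assigns the conflict information generated by the evidence to the identification framework Θ, that is, to the unknown term. For two pieces of evidence m_1 and m_2, the rule of composition is

$$m(\emptyset) = 0,\qquad m(A) = \sum_{B \cap C = A} m_1(B)\,m_2(C)\quad (A \neq \emptyset,\ A \neq \Theta),\qquad m(\Theta) = \sum_{B \cap C = \Theta} m_1(B)\,m_2(C) + K,$$

where $K = \sum_{B \cap C = \emptyset} m_1(B)\,m_2(C)$ is the conflict factor. This method suffers from the “one-vote veto” problem, and the result is not ideal when multiple bodies of evidence are combined.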
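Yager's rule can be sketched in the same style. The two-source form, the `frozenset` encoding of focal elements, and the reuse of Zadeh's illustrative numbers (again, not the data of Table 3) are choices made for this example only.

```python
from itertools import product


def yager(m1, m2, frame):
    """Yager's rule for two BPAs: the conflict K is moved to the whole frame."""
    theta = frozenset(frame)
    out, K = {}, 0.0
    for (b, mb), (c, mc) in product(m1.items(), m2.items()):
        inter = b & c
        if inter:
            out[inter] = out.get(inter, 0.0) + mb * mc
        else:
            K += mb * mc
    out[theta] = out.get(theta, 0.0) + K  # no normalization: conflict goes to the unknown
    return out, K


m1 = {frozenset("M"): 0.99, frozenset("T"): 0.01}
m2 = {frozenset("C"): 0.99, frozenset("T"): 0.01}
fused, K = yager(m1, m2, "MCT")
print(round(fused[frozenset("T")], 4), round(fused[frozenset("MCT")], 4))  # -> 0.0001 0.9999
```

Almost all the mass ends up on Θ, the unknown term, so the fused result no longer supports any decision. This is the behavior the comparison in Table 4 attributes to Yager's method.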

By defining the concept of evidence credibility, Sun Quan et al. [53] introduced the evidence conflict parameter K, an average support degree, and a credibility parameter, and used the credibility to allocate part of the conflict information to the unknown term. Suppose that the evidence corresponding to the identification frame is as follows [54]:

With

and

.

The smaller the conflict parameter K, the smaller the conflict between pieces of evidence, and the fusion result is then mainly determined by the conjunctive combination term. When the evidence conflict is large or complete, K approaches 1, and the result is determined mainly by the credibility and the average support degree. Furthermore, the distribution of the conflict information depends on the average support degree of the evidence. Consequently, the probability assigned to the unknown term dominates the fusion result, which runs against our judgment and analysis, so this method cannot deal effectively with conflict problems.

In Li Bicheng’s opinion [55], all of the conflicting evidence can be used, and the conflict is distributed according to an average of the evidence. However, the averaging idea of this method is not consistent with reality.

In the literature [56], the conflict caused by the evidence is allocated to the conflicting focal elements in proportion to their BPAs. This rule does not satisfy commutativity: when the order of synthesis changes, the synthesis result changes, which limits its application.

To compare the effectiveness of the above synthesis methods with that of our method and to illustrate the rationality of their fusion results, we take the basic probability assignments of four pieces of highly conflicting evidence, shown in Table 3, as an example for comparative analysis. The results are shown in Table 4.

In Table 4, m(A), m(B), and m(C) represent the basic probability assignments of A, B, and C, respectively, after the four pieces of evidence are combined; m(A) is thus the support for target A, and the remaining entry is the basic probability assigned to the unknown.

As can be seen from the results in Table 4, both Yager’s method and the method of Reference [54] assign the conflict probability to the unknown term, so their fusion results cannot support a correct decision. The methods of References [55] and [56] modify the synthesis rule and assign the conflict to the basic probabilities of the conflicting focal elements, which improves the rationality of the synthesis results and helps one make a correct decision. By introducing evidence validity into the evidence weights, the method in this paper improves on these methods: it assigns low weights to conflicting evidence with poor consistency and increases the weights of conflicting evidence with high certainty and high consistency. Thus, our approach converges better, is more accurate, and can be used to make correct decisions.