1. Introduction

Before vehicle road tests or quality inspection, pollutant emission tests must be carried out. Over the past decade, these tests have been conducted by people who drive vehicles and follow the world light-duty test cycle (WLTC) target curve reported in Reference [

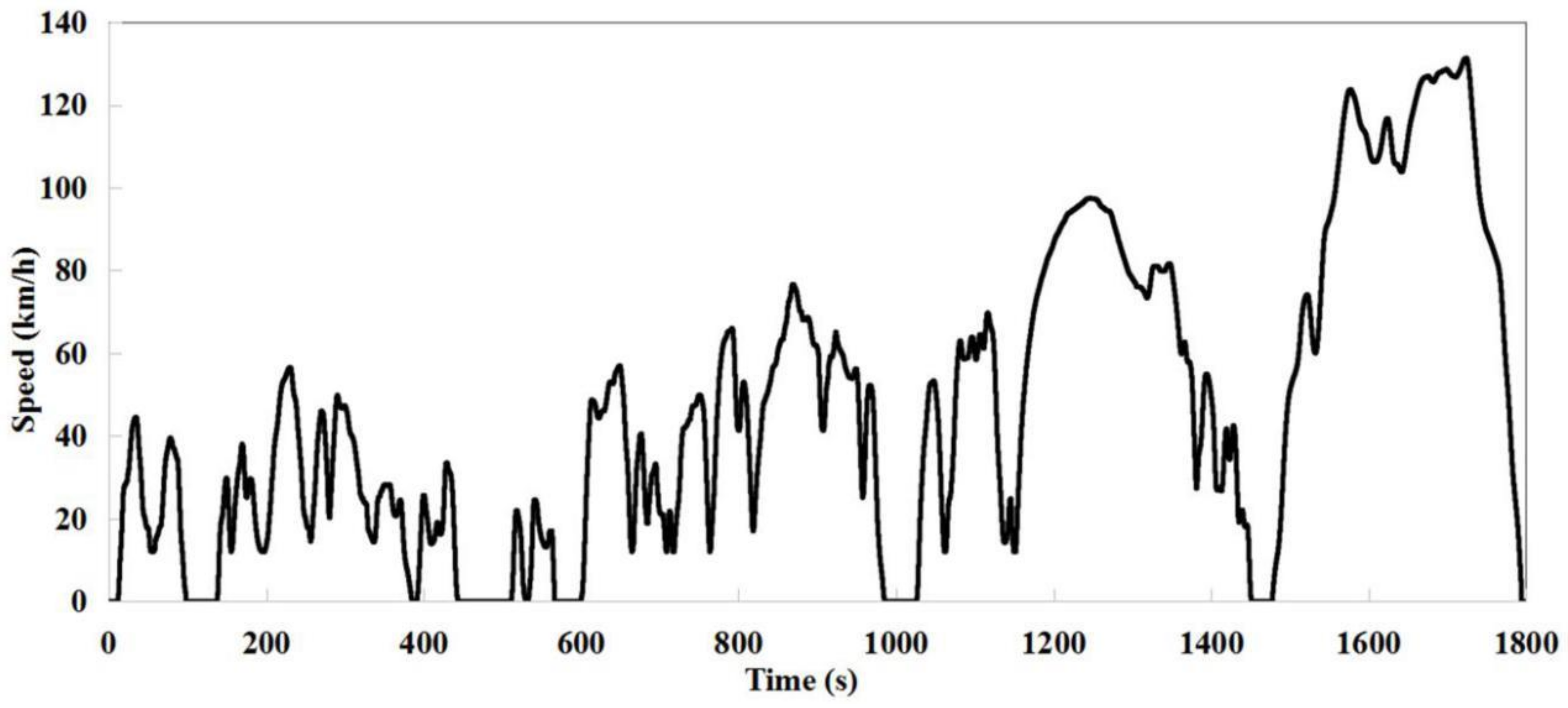

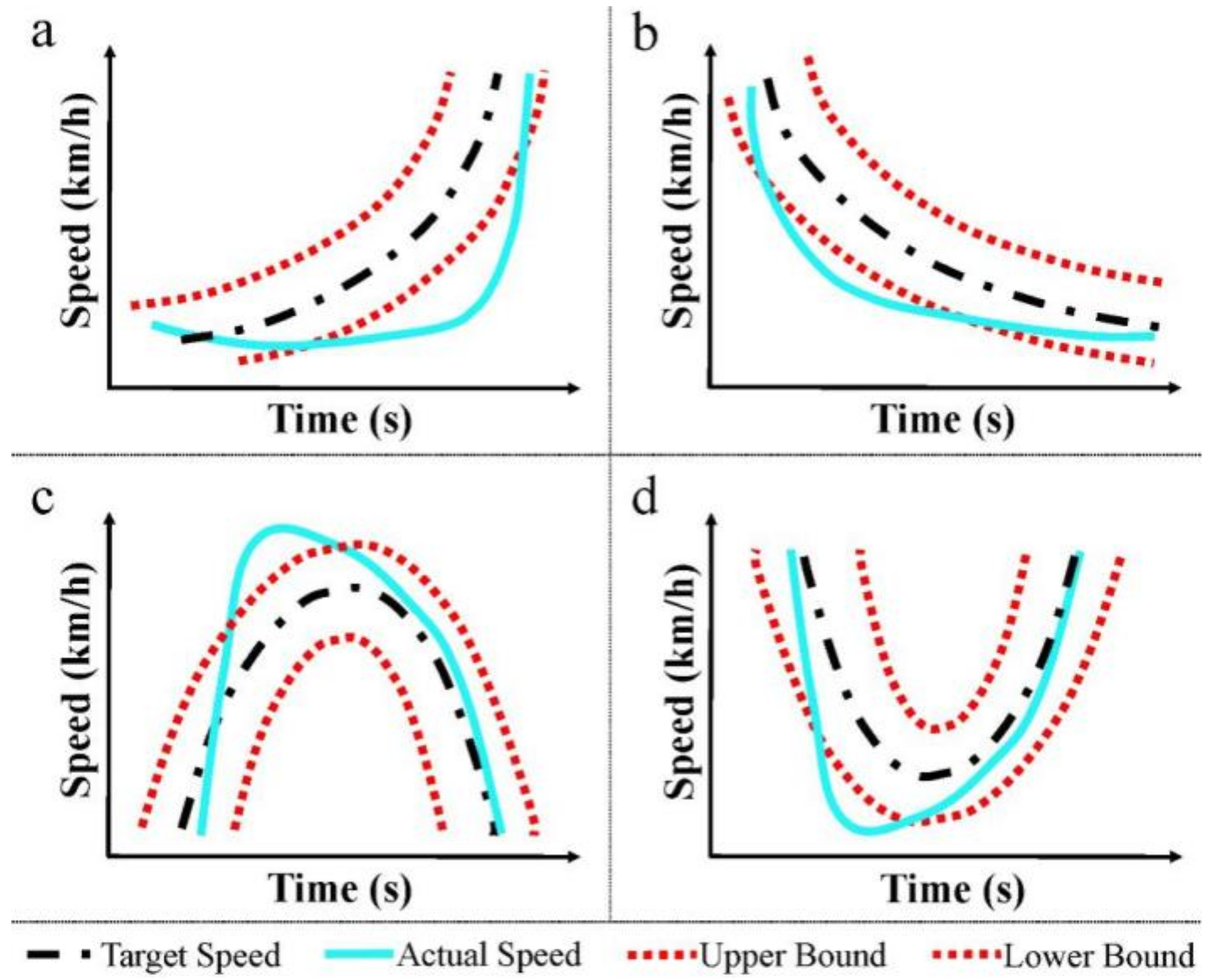

1]. As shown in

Figure 1, it is a time-speed curve that lasts 1800 s. In fact, when a vehicle is driven, the actual curve often deviates from the target curve. The noise in the test environment is too loud to be tolerated by operators, which may cause human error. In addition, research shows that although the deviation caused by robot control is less than that caused by human control, the number of deviations still needs to be reduced to almost zero. Generally, when the deviation between the actual curve and the target curve is greater than 2 km/h, a fault will occur. Different control parameters of pedal robot are needed for different types of faults. Therefore, how to identify fault types is still the most challenging part of robot control optimization.

Traditionally, fault diagnosis is performed by skilled engineers in the time domain [2], which is difficult and time-consuming, especially for large and/or high-dimensional samples. As the data size increases, the analysis becomes quite complex, so it is necessary to reduce the data size by feature extraction [3]. So far, many feature extraction methods have been reported for the dynamic characteristics of fault signals [4]. One of the traditional feature extraction techniques is the Fourier transform [5], but a Fourier coefficient represents a component that lasts for all time. Therefore, Fourier analysis is less suitable for nonstationary signals [6]. Other algorithms, such as local mean decomposition, correlation coefficient, and kurtosis, can also extract features. In general, these features are used directly as the input of the classifier. These methods are fast to compute, but they do not present adequate information about fault signals. The Autoencoder, a deep learning algorithm, is used to extract more abstract features from the raw input. A major feature component is obtained via the t-distributed stochastic neighbor embedding (t-SNE) algorithm or the principal component analysis (PCA) method. These extracted feature components, or representations, are fused into a new matrix, which may contain more local major feature information. Thus, this work proposes a fusion methodology of multi-feature learning models via Autoencoder, t-SNE, and PCA.

PCA generates a set of orthogonal bases that capture the directions of the largest variance in the training data [7]. Dunia et al. [8] used PCA for sensor fault identification via reconstruction. The disadvantage of PCA is that it can only extract linear features, while t-SNE can handle nonlinear problems. t-SNE, as proposed in Reference [9], is a very effective nonlinear dimension reduction technique that has been widely used recently. It is most commonly used to generate a two-dimensional embedding of high-dimensional data in order to simplify cluster recognition [10]. However, PCA and t-SNE need to decompose the problem into different parts and merge the results in the final stage. In addition, most features in machine learning need to be identified by experts, which is very laborious. Deep learning avoids these problems and can obtain representations with sensitive and valuable features by learning automatically from the original feature set [11]. In addition, because of the deep model [12], deep learning has stronger adaptability and stability. Therefore, an Autoencoder-based learning algorithm is used to extract abstract features from the raw input. The Autoencoder model is also a special kind of neural network and is able to learn a richer representation of the input data, as well as represent the target output [13]. The aim of this model is to reconstruct the input from the learnt representation [14]. Although the Autoencoder can learn features automatically, it also has some disadvantages. For example, when the training set is small, or the training samples do not follow the same distribution as the test samples, the accuracy of feature extraction is reduced. Clearly, PCA, t-SNE, and Autoencoder each have their own advantages and shortcomings in feature learning. In order to make full use of their advantages, this work proposes a novel additive combination of the feature representations obtained from PCA, t-SNE, and Autoencoder, respectively. The combined features contain more dimensional information about the fault signals.

After feature extraction, the features are combined into a high-dimensional feature set. In order to speed up the calculation and visualize the dimension reduction results, it is necessary to reduce the dimension of the feature set to three. Treelet Transform is a data dimension reduction technique [15]. It generates sparse components that are able to reveal the inherent structure of the data [16]. After dimension reduction, a pattern classification method is needed. The Gaussian process classifier, which is based on Bayesian theory, is one such machine learning method [17]. It can effectively classify nonlinear samples [18]. The Gaussian process is used to learn the extracted features and present the fault detection results.

In this paper, a new fault diagnosis method based on a multi-feature learning model is proposed. This method can identify the fault type and help optimize the control of the pedal robot. The proposed model is compared with some typical feature extraction algorithms in terms of accuracy. The structure of this paper is as follows: Section 2 gives the proposed methodology, Section 3 gives the experiment setup, Section 4 presents the results and discussion, and the conclusion is given in Section 5.

2. Methodology

The proposed fault diagnosis method for the pedal robot mainly includes three steps: feature extraction, dimension reduction, and fault classification. First, fault samples are extracted from the curve samples. Second, three-dimensional features are extracted from the fault samples by PCA, t-SNE, and Autoencoder, respectively. Next, since PCA, t-SNE, and Autoencoder cannot extract feature information adequately when they are used alone, and the types of features they extract are different, the features are combined into a nine-dimensional feature set to reduce information loss. Then, the feature set is reduced to three dimensions by Treelet Transform, which accelerates the calculation and allows the fault samples to be visualized in three-dimensional space. Finally, the samples are classified by the Gaussian process classifier, and the fault diagnosis result and its accuracy are obtained.

2.1. Data Preprocessing

A fault sample contains two curves, namely, the target curve and the actual curve. The segment spanning ten seconds before and ten seconds after the midpoint of the deviation was extracted as a fault sample. Since the curve was sampled at 0.1 s intervals, a fault sample had 200 points. In order to extract more features, data integration was necessary. The 200 points of the actual curve were combined with the 200 points of the corresponding target curve to form a 400-dimensional feature. In addition, this study also collected the time-displacement curves of the pedal robot's two end-effectors. Therefore, the 400-dimensional feature and the 200 points of each end-effector displacement at the corresponding time were combined to form an 800-dimensional feature, which could be used as a fault sample.
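The windowing and concatenation described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names, the synthetic curves, the concatenation order, and the rule of skipping deviations too close to the ends of the cycle are all assumptions made for the example. Only the 2 km/h threshold, the 0.1 s sampling interval, and the 200-point and 800-dimensional sizes come from the text.

```python
import numpy as np

DT = 0.1            # sampling interval in seconds (from the text)
HALF_WINDOW = 100   # 10 s on each side of the midpoint -> 200 points
THRESHOLD = 2.0     # km/h deviation that counts as a fault (from the text)

def fault_windows(target, actual):
    """Return one (start, stop) index pair per contiguous deviation run."""
    over = np.abs(actual - target) > THRESHOLD
    # Pad with False so every run has a rising and a falling edge.
    edges = np.diff(np.concatenate(([False], over, [False])).astype(int))
    starts = np.flatnonzero(edges == 1)
    stops = np.flatnonzero(edges == -1)      # exclusive end of each run
    windows = []
    for a, b in zip(starts, stops):
        mid = (a + b - 1) // 2               # midpoint of the deviation
        lo, hi = mid - HALF_WINDOW, mid + HALF_WINDOW
        # Assumption: runs too close to the ends of the cycle are skipped.
        if lo >= 0 and hi <= len(target):
            windows.append((int(lo), int(hi)))
    return windows

def build_sample(target, actual, disp_a, disp_b, lo, hi):
    """Concatenate four 200-point segments into one 800-dimensional vector."""
    return np.concatenate([actual[lo:hi], target[lo:hi],
                           disp_a[lo:hi], disp_b[lo:hi]])

# Synthetic 1800 s cycle sampled every 0.1 s (18000 points), illustration only.
t = np.arange(18000) * DT
target = 50 + 30 * np.sin(2 * np.pi * t / 600)
actual = target.copy()
actual[5000:5040] += 3.0                     # inject one 4 s deviation of 3 km/h
disp_a = np.zeros_like(t)                    # placeholder end-effector curves
disp_b = np.zeros_like(t)

windows = fault_windows(target, actual)
samples = np.array([build_sample(target, actual, disp_a, disp_b, lo, hi)
                    for lo, hi in windows])
print(windows, samples.shape)
```

Stacking one such vector per detected deviation gives the sample array used by the feature extraction stage.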

2.2. Feature Extraction

This work explores the feasibility of using PCA, t-SNE, and Autoencoder to each extract a three-dimensional feature from the fault samples. These three three-dimensional features are then combined into a nine-dimensional feature matrix.
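As a small illustration of the fusion step, the sketch below concatenates three three-dimensional feature blocks column-wise into one nine-dimensional matrix. The random arrays are stand-ins for the PCA, t-SNE, and Autoencoder outputs, and the column order is an assumption.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples = 5                                # tiny stand-in for the 11,430 samples

# Placeholder feature blocks, one row per fault sample.
f_pca = rng.normal(size=(n_samples, 3))
f_tsne = rng.normal(size=(n_samples, 3))
f_ae = rng.normal(size=(n_samples, 3))

# Column-wise concatenation: each sample gets 3 + 3 + 3 = 9 features.
fused = np.hstack([f_pca, f_tsne, f_ae])
print(fused.shape)
```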

2.2.1. Feature Extraction by PCA

The process of feature extraction by PCA consists of the following five stages:

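Stage 1: Each sample has $p = 800$ dimensions, and $n = 11{,}430$ samples constitute the sample array

$$X = (x_{ij})_{n \times p}, \quad i = 1, \dots, n, \; j = 1, \dots, p;$$

then, the sample elements are normalized as follows:

$$z_{ij} = \frac{x_{ij} - \bar{x}_j}{s_j},$$

where

$$\bar{x}_j = \frac{1}{n} \sum_{i=1}^{n} x_{ij}, \qquad s_j^2 = \frac{1}{n-1} \sum_{i=1}^{n} (x_{ij} - \bar{x}_j)^2,$$

thus the normalized matrix $Z$ is found.

Stage 2: Calculate the correlation coefficient matrix of the normalized matrix $Z$:

$$R = \frac{Z^{T} Z}{n - 1}.$$

Stage 3: Solve the characteristic equation $|R - \lambda I_p| = 0$ of matrix $R$ to obtain the 800 eigenvalues $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_p$, which are used to determine the principal components. The value of $m$ is determined by the following cumulative contribution criterion [19]:

$$\frac{\sum_{j=1}^{m} \lambda_j}{\sum_{j=1}^{p} \lambda_j} \ge \eta,$$

where $\eta$ is the required cumulative contribution rate. For each $\lambda_j$, $j = 1, \dots, m$, solve the equation set $R\,b = \lambda_j b$ to obtain the unit eigenvector $b_j$.

Stage 4: Convert the normalized indicator variables into principal components:

$$U_j = Z\,b_j, \quad j = 1, \dots, m,$$

where $U_1$ is the first principal component, $U_2$ is the second principal component, and $U_m$ is the $m$-th principal component.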

Stage 5: Comprehensive evaluation of m principal components.

In this paper, the cumulative contribution of the first three eigenvalues was 99.37%. This indicates that the first three principal components already contain enough information about the original samples. Thus, they were extracted as features of the high-dimensional fault samples.
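The stages above can be sketched with NumPy as follows. This is a minimal sketch under stated assumptions: the function name `pca_correlation` and the synthetic data are illustrative, and every column is assumed to have non-zero variance so that the normalization is defined.

```python
import numpy as np

def pca_correlation(X, m):
    """Correlation-matrix PCA: return m principal components and their
    cumulative eigenvalue contribution."""
    n = X.shape[0]
    # Stage 1: z-score normalization (assumes no constant columns).
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    # Stage 2: correlation coefficient matrix of the normalized data.
    R = Z.T @ Z / (n - 1)
    # Stage 3: eigen-decomposition, eigenvalues sorted in descending order.
    eigvals, eigvecs = np.linalg.eigh(R)
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    contribution = eigvals[:m].sum() / eigvals.sum()
    # Stage 4: project onto the first m unit eigenvectors.
    return Z @ eigvecs[:, :m], contribution

# Synthetic data with three dominant directions (illustration only).
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 3))
mixing = rng.normal(size=(3, 20))
X = latent @ mixing + 0.01 * rng.normal(size=(200, 20))

features, contribution = pca_correlation(X, m=3)
print(features.shape, round(contribution, 4))
```

In practice `m` would be chosen as the smallest value whose cumulative contribution reaches the required rate; here it is fixed at three to mirror the paper.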

2.2.2. Feature Extraction by t-SNE

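The $d$-dimensional input dataset is denoted by $X = \{x_1, x_2, \dots, x_N\} \subset \mathbb{R}^d$. Before using the t-SNE algorithm, PCA is used to initialize the data. t-SNE computes an $s$-dimensional embedding of the points in $X$, denoted by $Y = \{y_1, y_2, \dots, y_N\} \subset \mathbb{R}^s$, where $s < d$. The joint probability $p_{ij}$ measuring the similarity between $x_i$ and $x_j$ is computed as:

$$p_{j|i} = \frac{\exp\left(-\lVert x_i - x_j \rVert^2 / 2\sigma_i^2\right)}{\sum_{k \ne i} \exp\left(-\lVert x_i - x_k \rVert^2 / 2\sigma_i^2\right)}, \qquad p_{ij} = \frac{p_{i|j} + p_{j|i}}{2N}.$$

The bandwidth of the Gaussian kernel, $\sigma_i$, is often chosen such that the perplexity of $P_i$ matches a user-defined value, where $P_i$ is the conditional distribution across all data points given $x_i$. The similarity between $y_i$ and $y_j$ in the low-dimensional embedding is defined as:

$$q_{ij} = \frac{\left(1 + \lVert y_i - y_j \rVert^2\right)^{-1}}{\sum_{k \ne l} \left(1 + \lVert y_k - y_l \rVert^2\right)^{-1}}.$$

t-SNE finds the points $\{y_1, \dots, y_N\}$ which minimize the Kullback-Leibler divergence between the joint distribution $P$ of points in the input space and the joint distribution $Q$ of points in the embedding space:

$$C(Y) = KL(P \,\|\, Q) = \sum_{i \ne j} p_{ij} \log \frac{p_{ij}}{q_{ij}}.$$

The points $y_i$ are initialized randomly, and the cost function is minimized using gradient descent. In this paper, $d = 800$ and $s = 3$. Thus, a three-dimensional feature was extracted from the high-dimensional feature set.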
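To make the quantities in this subsection concrete, the sketch below computes the joint probabilities in the input space (with a per-point bandwidth found by bisection on the perplexity), the Student-t similarities in the embedding, and the Kullback-Leibler cost. It does not run the gradient descent itself. The function names, the perplexity value, the bisection bounds, and the random data are assumptions for illustration only.

```python
import numpy as np

def conditional_p(dist2_row, i, sigma):
    """Conditional probabilities p_{j|i} for one point, given its bandwidth."""
    d = dist2_row.copy()
    d[i] = np.inf                                    # exclude j == i
    p = np.exp(-(d - d.min()) / (2.0 * sigma ** 2))  # shifted for stability
    return p / p.sum()

def joint_p(X, perplexity=5.0, n_iter=50):
    """Symmetrized joint probabilities p_ij in the input space."""
    n = X.shape[0]
    dist2 = np.square(X[:, None, :] - X[None, :, :]).sum(axis=2)
    P_cond = np.zeros((n, n))
    for i in range(n):
        lo, hi = 1e-3, 1e3                           # assumed search bounds
        for _ in range(n_iter):
            sigma = np.sqrt(lo * hi)                 # geometric bisection
            p = conditional_p(dist2[i], i, sigma)
            entropy = -np.sum(p[p > 0] * np.log2(p[p > 0]))
            if 2.0 ** entropy > perplexity:
                hi = sigma                           # too wide: shrink
            else:
                lo = sigma                           # too narrow: widen
        P_cond[i] = p
    return (P_cond + P_cond.T) / (2.0 * n)

def joint_q(Y):
    """Student-t similarities q_ij in the embedding space."""
    dist2 = np.square(Y[:, None, :] - Y[None, :, :]).sum(axis=2)
    w = 1.0 / (1.0 + dist2)
    np.fill_diagonal(w, 0.0)
    return w / w.sum()

def kl_divergence(P, Q):
    """Cost C = sum_{i != j} p_ij * log(p_ij / q_ij)."""
    mask = P > 0
    return float(np.sum(P[mask] * np.log(P[mask] / Q[mask])))

rng = np.random.default_rng(0)
X = rng.normal(size=(30, 10))   # small stand-in for the 800-dimensional samples
Y = rng.normal(size=(30, 3))    # random initial 3-dimensional embedding
P, Q = joint_p(X), joint_q(Y)
cost = kl_divergence(P, Q)
print(cost)
```

A full implementation would then move the points in `Y` along the negative gradient of this cost.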

2.2.3. Feature Extraction by Autoencoder

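An Autoencoder learns a feed-forward hidden representation $h(x)$ of the input $x$, from which a reconstruction $\hat{x}$ is obtained that is as close as possible to $x$:

$$h(x) = g(W x + b), \qquad \hat{x} = \mathrm{sigm}\left(W' h(x) + c\right),$$

where $W$ and $W'$ are matrices, $b$ and $c$ are vectors, $g$ is a nonlinear activation function and $\mathrm{sigm}(a) = 1 / (1 + e^{-a})$. The loss function used in this paper is cross-entropy loss:

$$l(x, \hat{x}) = -\sum_{k} \left[ x_k \log \hat{x}_k + (1 - x_k) \log (1 - \hat{x}_k) \right].$$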

Training the Autoencoder corresponds to optimizing the parameters to reduce the average loss on the training examples. In this paper, the hidden layer has three neurons, which represent the features extracted from the input.
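A minimal NumPy sketch of such an Autoencoder is given below: one hidden layer with three neurons, a sigmoid output, cross-entropy loss, and plain batch gradient descent. The sigmoid hidden activation, learning rate, epoch count, initialization, and synthetic data are assumptions for illustration; inputs are assumed to be scaled to the interval [0, 1] so that the cross-entropy loss is meaningful.

```python
import numpy as np

def sigm(a):
    return 1.0 / (1.0 + np.exp(-a))

def train_autoencoder(X, n_hidden=3, lr=0.5, epochs=500, seed=0):
    """Train a one-hidden-layer autoencoder by batch gradient descent."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W = rng.normal(scale=0.1, size=(d, n_hidden))    # encoder weights
    b = np.zeros(n_hidden)
    W2 = rng.normal(scale=0.1, size=(n_hidden, d))   # decoder weights
    c = np.zeros(d)
    eps = 1e-12                                      # guards against log(0)
    losses = []
    for _ in range(epochs):
        H = sigm(X @ W + b)                          # hidden representation
        X_hat = sigm(H @ W2 + c)                     # reconstruction
        loss = -np.mean(np.sum(X * np.log(X_hat + eps)
                               + (1 - X) * np.log(1 - X_hat + eps), axis=1))
        losses.append(loss)
        # For a sigmoid output with cross-entropy loss, the gradient with
        # respect to the output pre-activation is (X_hat - X).
        d_out = (X_hat - X) / n
        d_hid = (d_out @ W2.T) * H * (1 - H)
        W2 -= lr * (H.T @ d_out)
        c -= lr * d_out.sum(axis=0)
        W -= lr * (X.T @ d_hid)
        b -= lr * d_hid.sum(axis=0)
    return (W, b, W2, c), losses

def encode(X, params):
    """Return the three hidden activations used as extracted features."""
    W, b, _, _ = params
    return sigm(X @ W + b)

# Synthetic inputs in (0, 1) with three underlying factors (illustration only).
rng = np.random.default_rng(1)
latent = rng.normal(size=(100, 3))
X = sigm(latent @ rng.normal(scale=2.0, size=(3, 20)))

params, losses = train_autoencoder(X)
features = encode(X, params)
print(features.shape, losses[0] > losses[-1])
```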

2.3. Dimension Reduction by Treelet Transform

After using PCA, t-SNE, and Autoencoder to extract three-dimensional features, respectively, the features are combined into a nine-dimensional feature set. Thus, a feature set matrix is extracted from the fault samples matrix. Then, this feature set is reduced to three-dimensional feature set by Treelet Transform.

Stage 1: Normalize the features extracted by PCA, t-SNE, and Autoencoder, respectively.

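Stage 2: Define the clustering levels $\ell = 0, 1, \dots, L$ with $L \le p - 1$, where $p$ is the dimension 9. At level $\ell = 0$, each signal $x$ is represented by the original variables $x^{(0)} = [s_{0,1}, \dots, s_{0,p}]^{T}$, where $s_{0,k} = x_k$. Initialize the similarity matrix $\hat{M}^{(0)}$ and the covariance matrix $\hat{\Sigma}^{(0)}$ by the following equations:

$$\hat{\Sigma}_{ij} = E\left[(s_i - E s_i)(s_j - E s_j)\right], \qquad \hat{M}_{ij} = |\rho_{ij}| + \lambda\,|\hat{\Sigma}_{ij}|,$$

with

$$\rho_{ij} = \frac{\hat{\Sigma}_{ij}}{\sqrt{\hat{\Sigma}_{ii}\,\hat{\Sigma}_{jj}}},$$

where $\rho_{ij}$ is the correlation coefficient used in the similarity matrix $\hat{M}$, and $\lambda \ge 0$. Initialize the basis matrix $B_0$ as an identity matrix whose size is $p \times p$. Initialize the set of sum variables $S = \{1, 2, \dots, p\}$. For the $L$-level Treelet Transform, let $\ell = 1, \dots, L$ and repeat the following stages:

Stage 3: At each level of the tree, the most similar variables are obtained according to the similarity matrix $\hat{M}^{(\ell-1)}$. Denote the two variables which have the maximum correlation coefficient as $\alpha$ and $\beta$:

$$(\alpha, \beta) = \arg\max_{i, j \in S,\; i < j} \hat{M}^{(\ell-1)}_{ij}.$$

Stage 4: Find the Jacobi rotation matrix $J(\alpha, \beta, \theta_\ell)$, which equals the $p \times p$ identity matrix except for the four entries

$$J_{\alpha\alpha} = c, \quad J_{\alpha\beta} = -s, \quad J_{\beta\alpha} = s, \quad J_{\beta\beta} = c,$$

where $c = \cos\theta_\ell$ and $s = \sin\theta_\ell$, and the rotation angle $\theta_\ell$ can be obtained by:

$$\tan(2\theta_\ell) = \frac{2\,\hat{\Sigma}^{(\ell-1)}_{\alpha\beta}}{\hat{\Sigma}^{(\ell-1)}_{\alpha\alpha} - \hat{\Sigma}^{(\ell-1)}_{\beta\beta}}, \qquad |\theta_\ell| \le \frac{\pi}{4},$$

so that $\hat{\Sigma}^{(\ell)} = J^{T} \hat{\Sigma}^{(\ell-1)} J$ satisfies $\hat{\Sigma}^{(\ell)}_{\alpha\beta} = \hat{\Sigma}^{(\ell)}_{\beta\alpha} = 0$. This transformation corresponds to a change of basis $B_\ell = B_{\ell-1} J$, and new coordinates $x^{(\ell)} = J^{T} x^{(\ell-1)}$. Update the similarity matrix $\hat{M}^{(\ell)}$ accordingly.

Stage 5: Multi-resolution analysis. If $\hat{\Sigma}^{(\ell)}_{\alpha\alpha} \ge \hat{\Sigma}^{(\ell)}_{\beta\beta}$, define the difference and sum variables as $d_\ell = x^{(\ell)}_{\beta}$ and $s_\ell = x^{(\ell)}_{\alpha}$, and remove the difference variable $\beta$ from $S$. Define the scaling and detail functions $\phi_\ell$ and $\psi_\ell$ as columns $\alpha$ and $\beta$ of the basis matrix $B_\ell$.

Stage 6: Repeat Stage 3 to Stage 5 until the highest level $\ell = L$ is reached. Then, the final orthogonal basis matrix $B$ can be obtained as follows:

$$B = [\phi_L, \psi_1, \dots, \psi_L],$$

where $\phi_L$ is the scale of the highest level, and $\psi_\ell$ is the detail vector of the $\ell$-th level. Then, the orthonormal treelet decomposition at level $\ell$ can be obtained:

$$x = \sum_{i=1}^{p-\ell} s_{\ell,i}\,\phi_{\ell,i} + \sum_{i=1}^{\ell} d_i\,\psi_i.$$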

Stage 7: Normalize the obtained eigenvectors after Treelet Transform.

In this paper, the input was the nine-dimensional feature set matrix, and the Treelet Transform was carried out up to the maximum level $L$. The output was three-dimensional normalized eigenvectors. The score of the remaining three dimensions was 94.61%, which indicated that most of the information in the feature set matrix had been retained. Therefore, the remaining three dimensions were input into the classifier as a representative of the high-dimensional feature set matrix.
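The treelet iteration can be sketched in NumPy as below. This is a sketch under stated assumptions, not the authors' implementation: it uses $\lambda = 0$ in the similarity measure, runs to level $L = p - 1 = 8$, and keeps the three treelet coordinates with the largest variance, reporting their share of the total variance as the "score". The paper does not spell out these choices, and the function names and synthetic data are illustrative.

```python
import numpy as np

def treelet(X, L, lam=0.0):
    """Run L treelet levels on the columns of X; return basis and covariance."""
    p = X.shape[1]
    cov = np.cov(X, rowvar=False)
    B = np.eye(p)
    sum_vars = list(range(p))
    for _ in range(L):
        # Similarity: |correlation| + lam * |covariance|.
        sd = np.sqrt(np.diag(cov))
        M = np.abs(cov / np.outer(sd, sd)) + lam * np.abs(cov)
        # Most similar pair among the current sum variables.
        best, alpha, beta = -1.0, None, None
        for pos, i in enumerate(sum_vars):
            for j in sum_vars[pos + 1:]:
                if M[i, j] > best:
                    best, alpha, beta = M[i, j], i, j
        # Jacobi angle with |theta| <= pi/4 that zeroes cov[alpha, beta].
        diff = cov[alpha, alpha] - cov[beta, beta]
        if diff == 0:
            theta = np.pi / 4 * np.sign(cov[alpha, beta])
        else:
            theta = 0.5 * np.arctan(2 * cov[alpha, beta] / diff)
        c, s = np.cos(theta), np.sin(theta)
        J = np.eye(p)
        J[alpha, alpha], J[alpha, beta] = c, -s
        J[beta, alpha], J[beta, beta] = s, c
        cov = J.T @ cov @ J                 # covariance in the new coordinates
        B = B @ J                           # change of basis
        # The higher-variance coordinate stays a sum variable.
        if cov[alpha, alpha] < cov[beta, beta]:
            alpha, beta = beta, alpha
        sum_vars.remove(beta)
    return B, cov

def treelet_reduce(X, L, k=3):
    """Project onto the k highest-variance treelet coordinates (assumption)."""
    B, cov = treelet(X, L)
    var = np.diag(cov)
    keep = np.argsort(var)[::-1][:k]
    score = var[keep].sum() / var.sum()
    return (X - X.mean(axis=0)) @ B[:, keep], score, B

# Nine correlated features in three groups (illustration only), normalized.
rng = np.random.default_rng(0)
base = rng.normal(size=(300, 3))
X = np.repeat(base, 3, axis=1) + 0.3 * rng.normal(size=(300, 9))
X = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)

reduced, score, B = treelet_reduce(X, L=8)
print(reduced.shape, round(score, 4))
```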

2.4. Fault Classification by Gaussian Process Classifier

After feature extraction and dimension reduction, a matrix of fault samples is obtained. Then, the Gaussian process classifier is trained on these fault samples and assigns them to four classes.

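Assume the dataset is $D = \{(x_i, y_i)\}_{i=1}^{n}$, where $x_i$ is the input vector of dimension $d$ and $y_i$ is the class label, +1 or −1. The input $n \times d$ matrix is denoted as $X$. Predictions for new inputs $x_*$ are made from this training data using the GP model. GP binary classification is done by first calculating the distribution over the latent function $f_*$ corresponding to the test case,

$$p(f_* \mid X, y, x_*) = \int p(f_* \mid X, x_*, f)\, p(f \mid X, y)\, df,$$

where $p(f \mid X, y) = p(y \mid f)\, p(f \mid X) / p(y \mid X)$ is the latent variable posterior. The probabilistic prediction is then made by

$$\bar{\pi}_* = p(y_* = +1 \mid X, y, x_*) = \int \sigma(f_*)\, p(f_* \mid X, y, x_*)\, df_*.$$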

In this work, $n = 11{,}430$ and $d = 3$. Since the outputs are divided into four classes, multi-class classification is carried out as follows. The largest sample set forms the first class, and the other three sample sets together form the second class. This completes one binary classification and gives the probability that each sample belongs to the largest sample set. Next, this step is repeated for each of the other three sample sets. Finally, every sample has one probability from each binary classification, and it is assigned to the class with the maximum probability. The accuracy is computed by comparing the predicted labels with the expert experience-based labels.
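The final decision rule can be illustrated in a few lines of plain Python. The probabilities below are made-up placeholder values standing in for the outputs of the four binary Gaussian process classifiers, and the expert labels are hypothetical; only the maximum-probability rule and the accuracy definition follow the text.

```python
FAULT_TYPES = ["acceleration", "deceleration", "convex", "concave"]

def one_vs_rest_predict(probabilities):
    """probabilities[i][k] is the probability from the k-th binary classifier
    that sample i belongs to class k; pick the class with the largest value."""
    return [max(range(len(row)), key=row.__getitem__) for row in probabilities]

def accuracy(predicted, expert):
    """Fraction of samples whose predicted label matches the expert label."""
    return sum(p == e for p, e in zip(predicted, expert)) / len(expert)

# Placeholder probabilities for four samples (not results from the paper).
probs = [
    [0.91, 0.05, 0.10, 0.02],
    [0.20, 0.75, 0.15, 0.30],
    [0.10, 0.12, 0.40, 0.65],
    [0.30, 0.08, 0.55, 0.45],
]
expert_labels = [0, 1, 3, 3]      # hypothetical expert labels

predicted = one_vs_rest_predict(probs)
acc = accuracy(predicted, expert_labels)
print([FAULT_TYPES[k] for k in predicted], acc)
```

Note that the four probabilities of a sample need not sum to one, because each comes from a separate binary classifier.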

3. Experiment

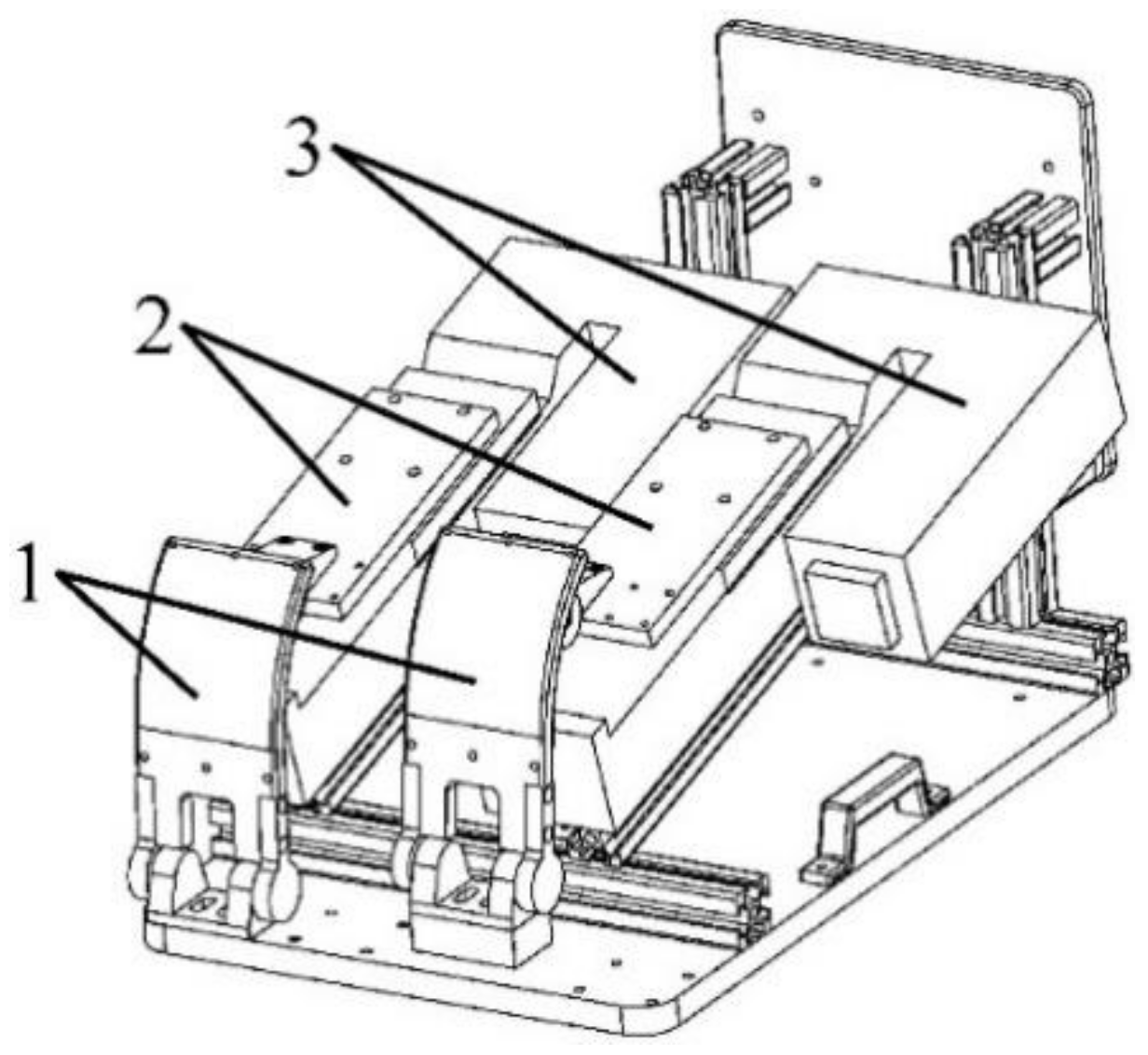

In order to automate pollutant emission tests without changing or disassembling the structure of vehicle products, a pedal robot is designed to replace the human driver. As shown in Figure 2, the main components of the pedal robot are servo motors, sliders, and end-effectors. The servo motor pushes the end-effector by controlling the slider. In order to improve the stability of control, the end-effector is designed to imitate the shape of a human foot. As shown in Figure 3, the robot system consists of three parts: control box, manipulator, and end-effector.

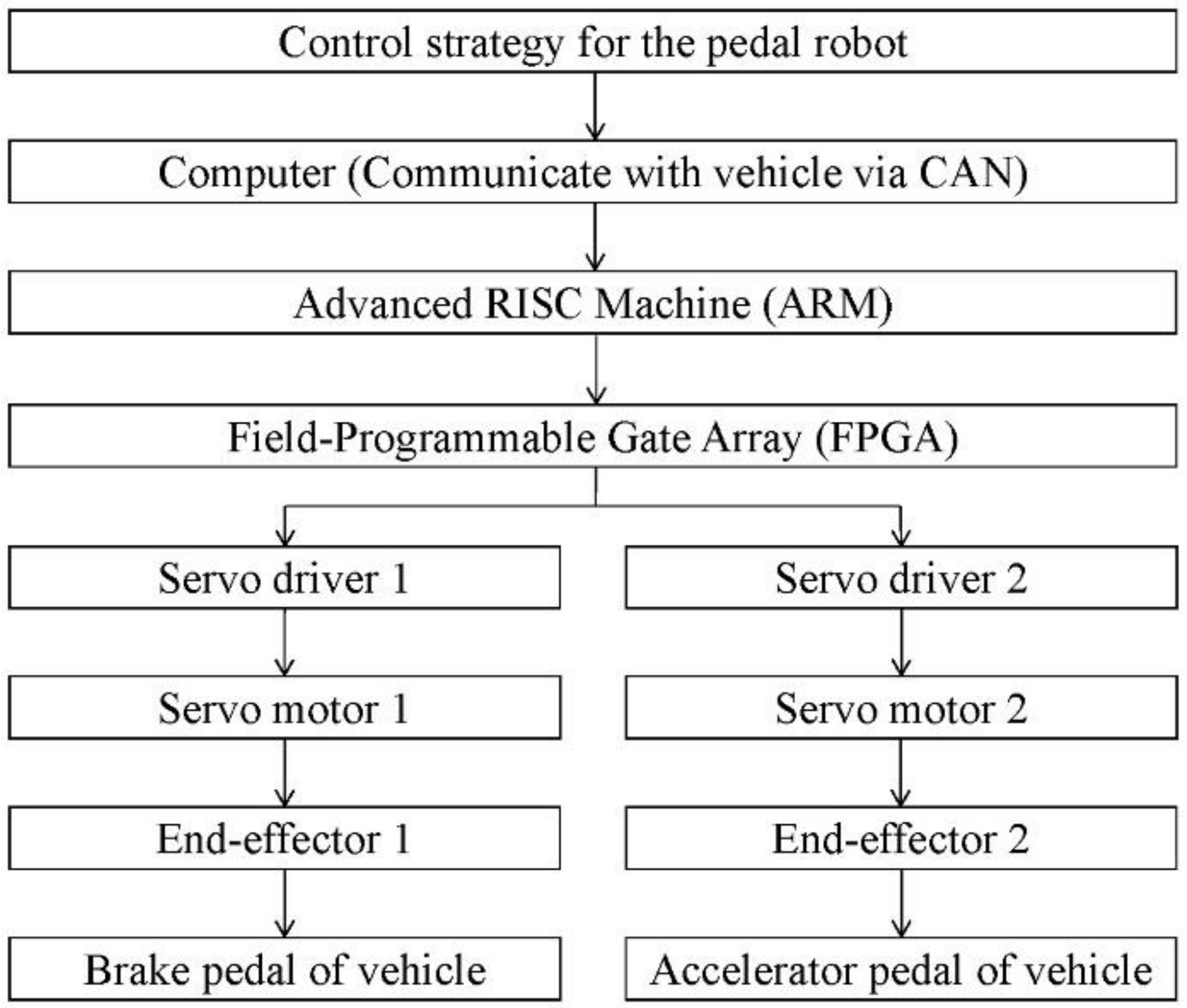

As shown in Figure 4, the operation process is as follows. First, the control strategy of the robot is transmitted from the computer to the Advanced RISC Machine (ARM) processor. Next, the ARM sends control signals to the Field Programmable Gate Array (FPGA). Then, the servo motor is controlled by the servo driver. Finally, the servo motor pushes the end-effector to drive the brake pedal or accelerator pedal of the vehicle. During emission testing, the pedal robot controls the vehicle so that its time-speed curve follows the WLTC curve. The actual speed of the vehicle is fed back to the computer every 0.1 s over the Controller Area Network (CAN). Therefore, the faults can be analyzed according to the feedback information.

In this work, 50 different vehicles are used. After each vehicle test, the robot control parameters are adjusted according to the type and number of faults, and the test is repeated. On average, each vehicle undergoes about seven optimization rounds, after which the number of faults in one test is reduced to almost zero. The fault behavior differs from vehicle to vehicle. Testing 50 vehicles several times each therefore broadens the applicability of the method and improves the accuracy of fault prediction.

4. Results and Discussion

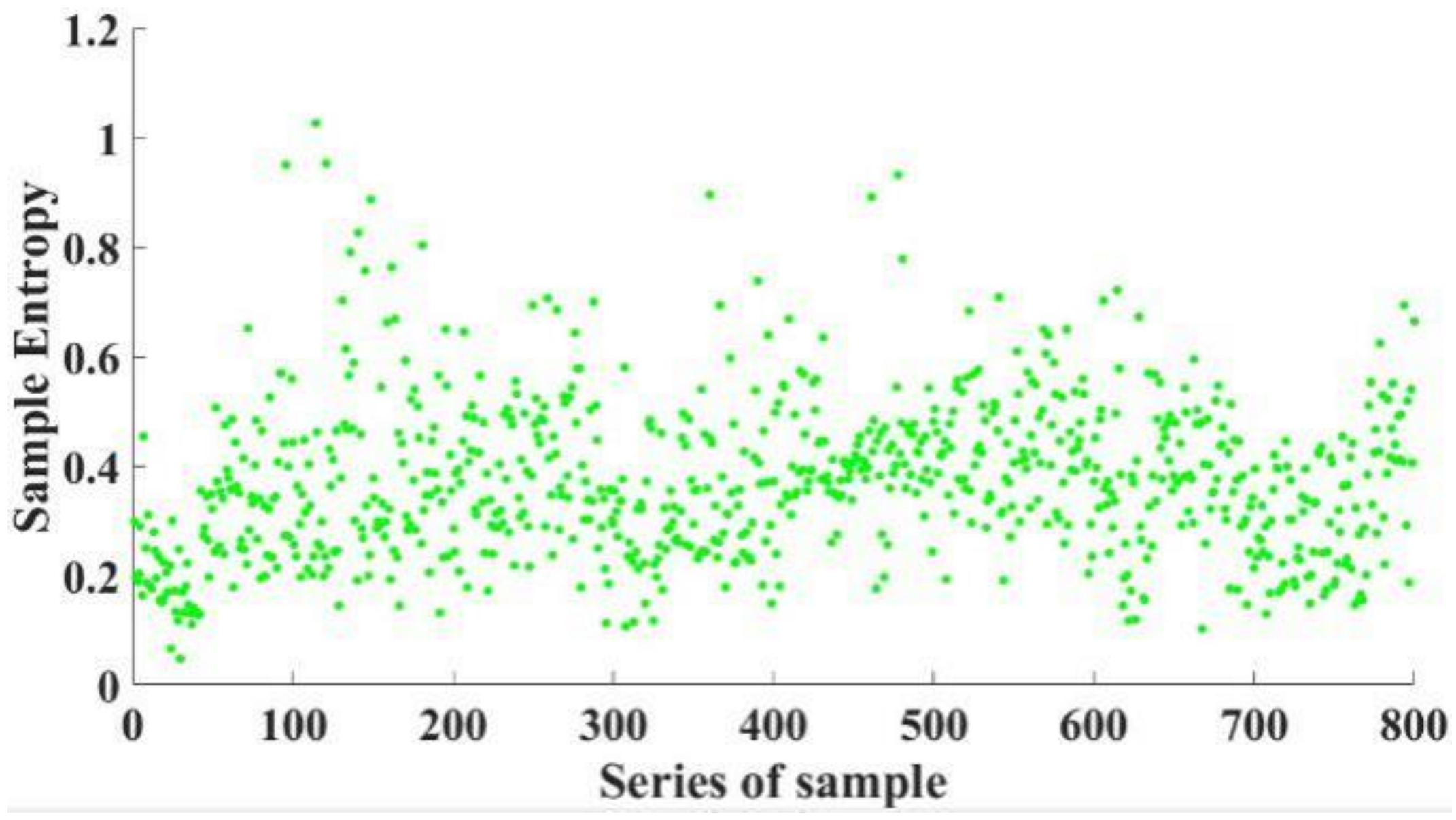

In this work, 346 pollutant emission tests are carried out, and 11,430 fault samples are obtained. Figure 5 shows the sample entropy of 800 samples. Based on expert experience, the fault samples are classified into four types, and each fault sample has a type label. The expert experience-based labels are the judgments of human operators. The operators are experienced and can correctly identify the type of fault by comparing the actual curve with the target curve. Figure 6 shows the schematic diagram of the four types of faults. According to the time-speed curve, faults can be classified into four types: acceleration fault, deceleration fault, convex fault, and concave fault.

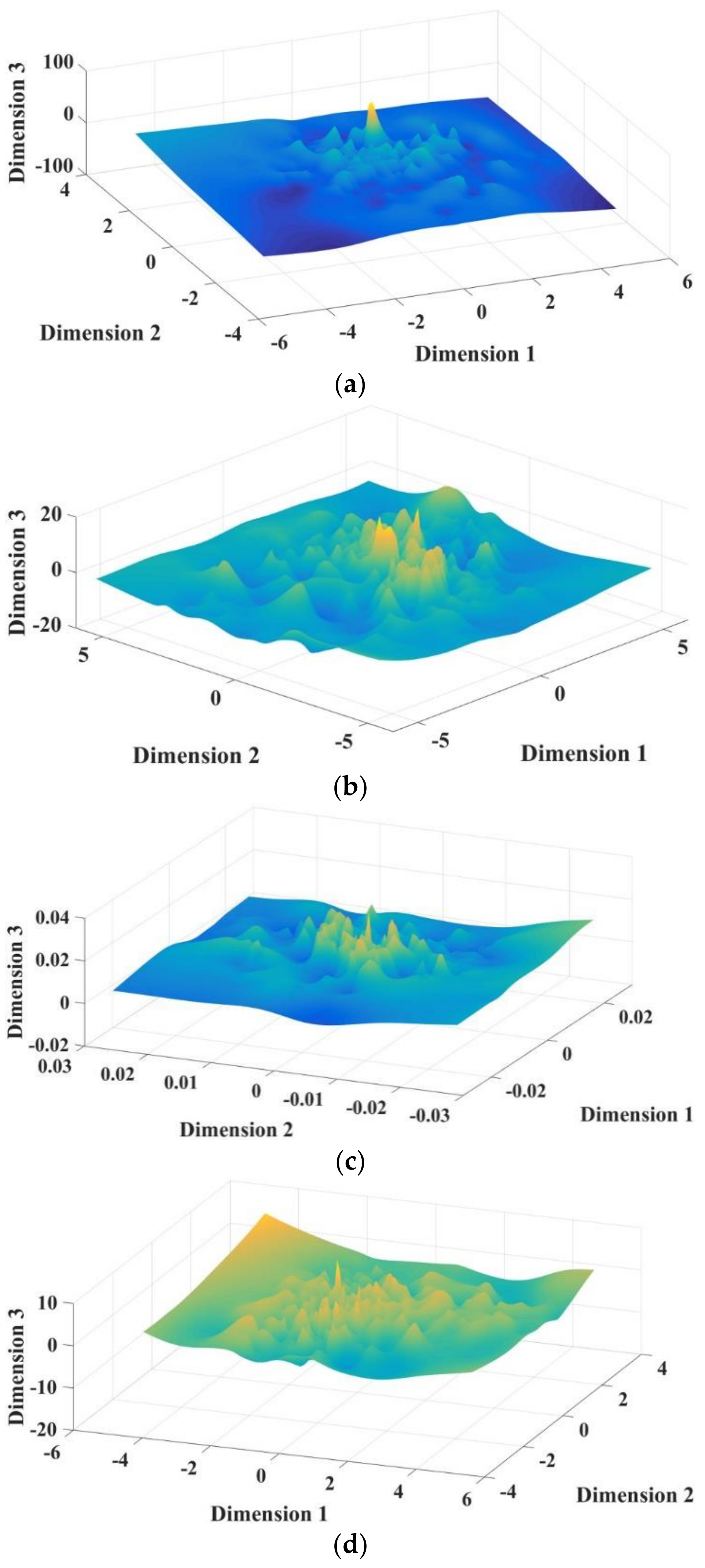

Gaussian process classification is quite time-consuming. If the dimension of the extracted feature is high, the total processing time is long, but if the dimension is too low, information is lost. After many tests, it is found that the first three dimensions already contain enough information. Using three dimensions not only reduces the dimension of the data and accelerates the calculation, but also retains the feature information and the classification accuracy. Furthermore, three dimensions are convenient for visualization. In the feature extraction step, three-dimensional features are extracted from the fault samples by PCA, t-SNE, and Autoencoder, respectively. After dimension reduction by Treelet Transform, the fused feature set is reduced to a three-dimensional feature set matrix.

Figure 7 shows the 3D surface graphs of the three-dimensional features extracted by PCA, t-SNE, Autoencoder, and Treelet Transform, respectively. The x, y, and z coordinates are the values of the three extracted feature vectors.

The fault samples are classified into four types by the Gaussian process classifier. Ten-fold cross-validation is used in this paper because of its good performance [20]. The average accuracy and standard deviation of the different methods are compared in Table 1. Method 4 is the method proposed in this paper, which has the minimum standard deviation and almost the maximum accuracy.
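The ten-fold procedure behind the reported mean accuracy and standard deviation can be sketched as follows. The fold assignment, the synthetic labels, and the `majority_baseline` evaluator are hypothetical stand-ins for the real classifier, and the sample standard deviation is an assumption, since the paper does not state which estimator was used.

```python
import random
import statistics
from collections import Counter

def k_fold_indices(n_samples, k=10, seed=0):
    """Shuffle the sample indices and split them into k disjoint folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def cross_validate(n_samples, evaluate, k=10):
    """Hold out each fold once; return mean and standard deviation of scores."""
    folds = k_fold_indices(n_samples, k)
    scores = []
    for i, test_idx in enumerate(folds):
        train_idx = [j for m, fold in enumerate(folds) if m != i for j in fold]
        scores.append(evaluate(train_idx, test_idx))
    return statistics.mean(scores), statistics.stdev(scores)

# Synthetic four-class labels for 11,430 samples (illustration only).
labels = [i % 4 for i in range(11430)]

def majority_baseline(train_idx, test_idx):
    """Stand-in evaluator: predict the most common training label."""
    majority = Counter(labels[j] for j in train_idx).most_common(1)[0][0]
    return sum(labels[j] == majority for j in test_idx) / len(test_idx)

mean_acc, std_acc = cross_validate(len(labels), majority_baseline)
folds = k_fold_indices(len(labels))
print(len(folds), len(folds[0]), round(mean_acc, 4), round(std_acc, 4))
```

With 11,430 samples, each of the ten folds holds 1143 samples, so every model is trained on 10,287 samples and tested on the remaining 1143.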

As shown in Table 1, when PCA, t-SNE, and Autoencoder are each used alone to extract the input features of the Gaussian process classifier, the accuracy of Autoencoder is higher than that of t-SNE and PCA. This shows that the deep learning method is more effective than the other two feature extraction methods. Since PCA, t-SNE, and Autoencoder cannot extract feature information adequately when they are used alone, and the feature types they extract are different, these features are combined into a nine-dimensional feature set as the input of the Gaussian process classifier. The accuracy of this method (Method 5) is 98.41%, which is much higher than that of PCA, t-SNE, or Autoencoder used alone. This shows that multi-feature fusion effectively reduces information loss.

In addition, as shown in Table 1, the processing time of Method 4 is lower than that of Method 5, because the higher the dimension, the longer the time required for Gaussian process classification. The accuracy of Method 4 is only 0.24 percentage points lower than that of Method 5, while the processing time per sample in Method 4 is 6.73% less than that of Method 5. These results indicate that Treelet Transform can not only reduce the dimension of the data and accelerate the calculation, but also retain the feature information and the classification accuracy. Therefore, the hybrid multi-model and Treelet Transform methodology is more effective than the other methods.

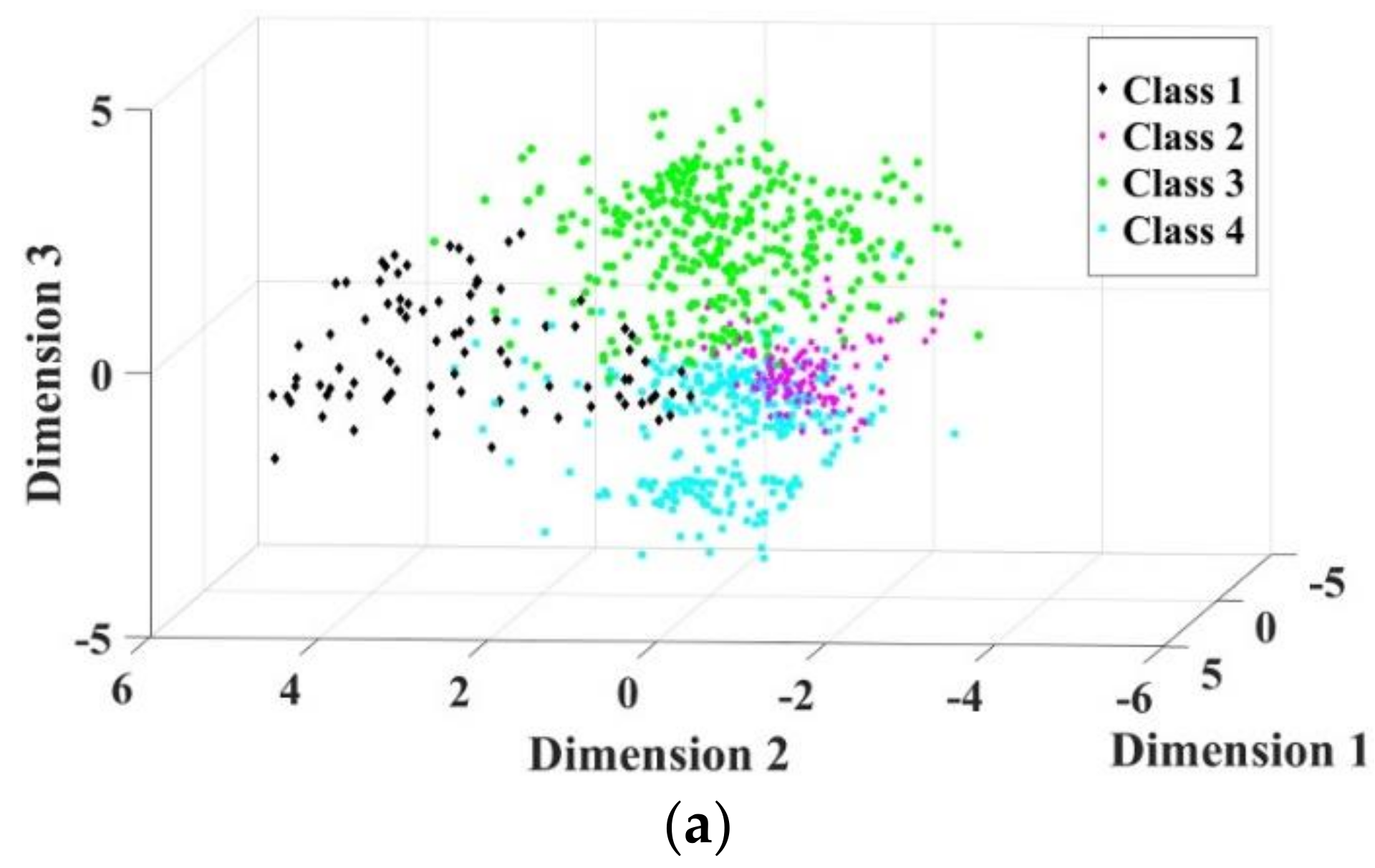

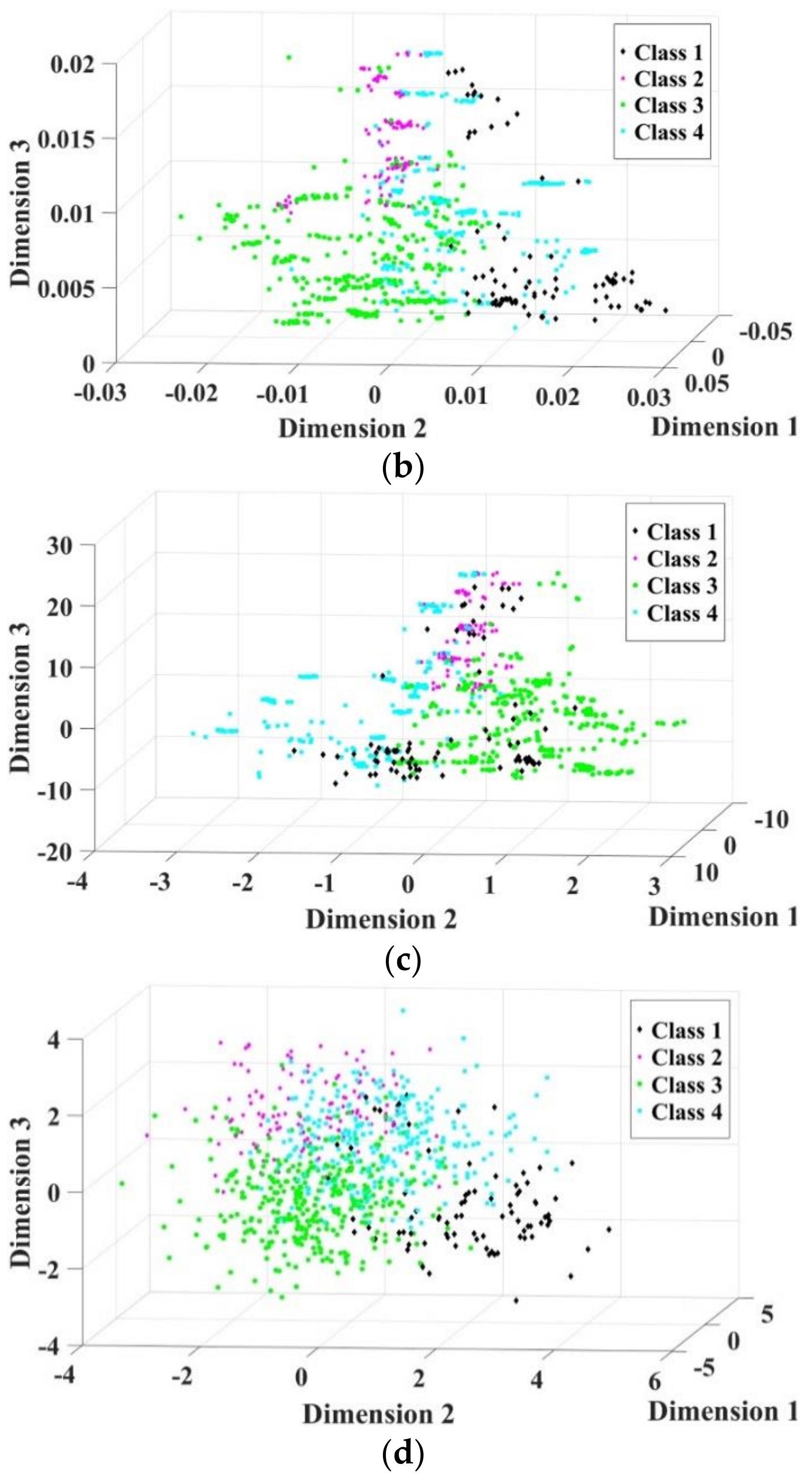

Furthermore, since the feature set is reduced to three dimensions, the fault samples can be visualized in three-dimensional space. Only a random subset of the samples is plotted to keep the figure clear. As shown in Figure 8, the same orientation of the three-dimensional axes is used in all the panels. The dots of Method 4 show clearer boundaries between classes than those of Method 1, Method 2, and Method 3. This also indicates that the method proposed in this paper performs well.

5. Conclusions

In order to automate pollutant emission tests, a pedal robot is designed to replace the human driver. The actual time-speed curve of the vehicle should follow the WLTC target curve. However, the actual curve sometimes exceeds the upper or lower limit around the target curve, which causes a fault. In this paper, a new fault diagnosis method is proposed and applied to the fault diagnosis of the pedal robot. A total of 346 pollutant emission tests were carried out, and 11,430 fault samples were obtained for fault diagnosis. To find more effective feature information in the fault samples, a deep learning algorithm, Autoencoder, is used for feature extraction. The results show that it performs better than the other feature extraction algorithms. Since PCA, t-SNE, and Autoencoder cannot extract feature information adequately when they are used alone, and the feature types they extract are different, the three types of feature components extracted by PCA, t-SNE, and Autoencoder are fused to form a nine-dimensional feature set. Then, the feature set is reduced to three-dimensional space via Treelet Transform. Finally, the fault samples are classified into four types by the Gaussian process classifier. The experimental results show that the proposed method has the minimum standard deviation, 0.0078, and almost the maximum accuracy, 98.17%. Compared with the methods that use only one algorithm to extract features, the proposed method has much higher classification accuracy. This shows that multi-feature fusion effectively reduces information loss.

Moreover, Treelet Transform is used to reduce the dimension of the feature set, so that the fault samples can be visualized in three-dimensional space. The accuracy of the proposed method is only 0.24 percentage points lower than that without Treelet Transform, while the processing time per sample is 6.73% less. These results indicate that Treelet Transform can not only reduce the dimension of the data and accelerate the calculation, but also retain the feature information and the classification accuracy, and that the hybrid multi-model and Treelet Transform method is effective. Therefore, the proposed method can efficiently identify the type of fault, which can help optimize the control of the pedal robot in pollutant emission tests.