DAQUA-MASS: An ISO 8000-61 Based Data Quality Management Methodology for Sensor Data

Abstract

1. Introduction

2. Data Quality Challenges in SCP Environments

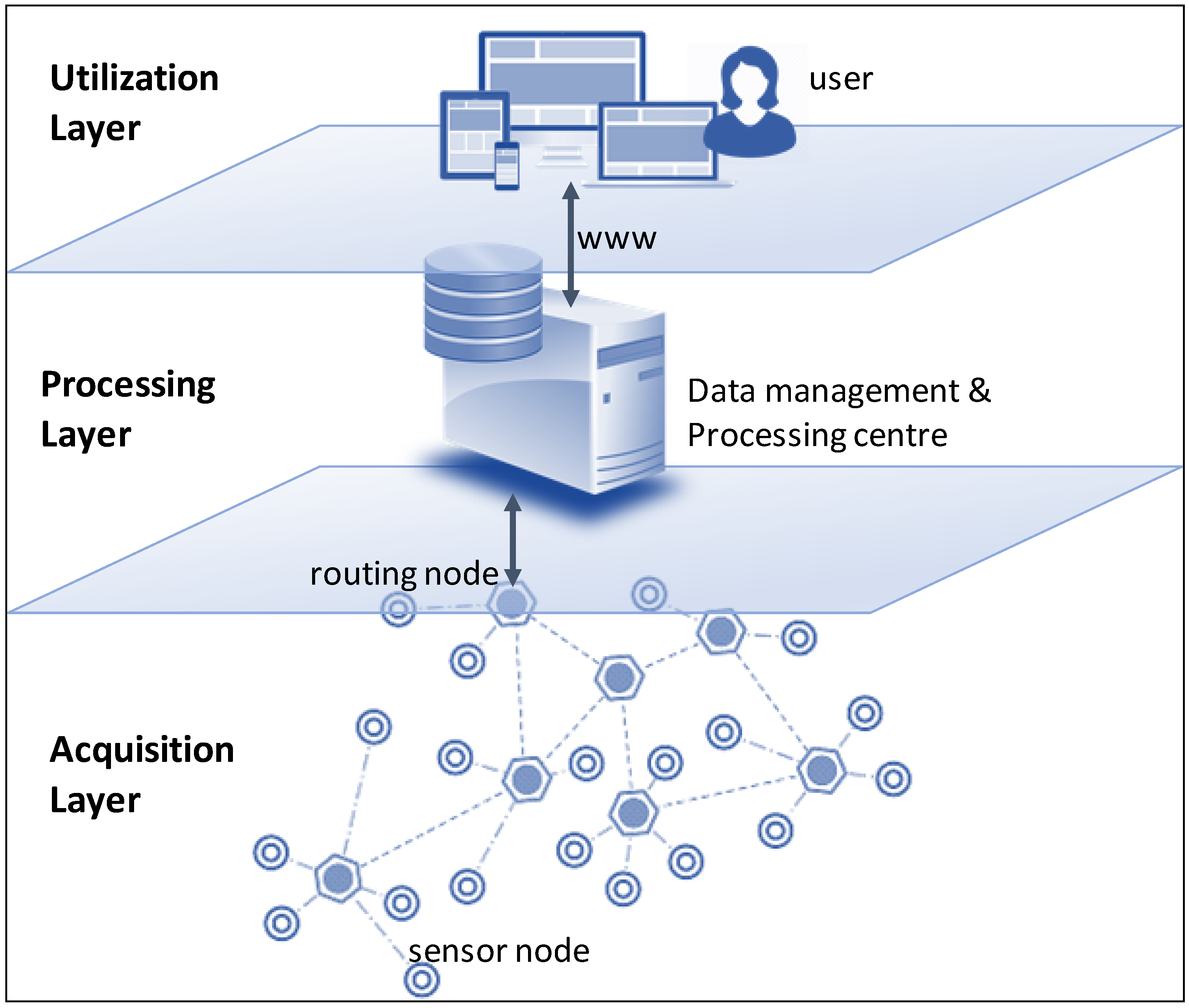

- Acquisition layer refers to the sensor data collection system where sensors, raw (or sensed) and pre-processed data are managed. This is the main focus of this paper.

- Processing layer involves data resulting from the data processing and management centre, where energy, storage and analysis capabilities are more significant.

- Utilization layer concerns delivered data (or post-processed data) exploited, for example, over a GIS or combined with other services or applications.

- Sensor data: data that is generated by sensors and digitalized in a computer-readable format (for example, the camera sensor readings).

- Device data: comprises sensor data; observed metadata (metadata that characterizes the sensor data, e.g., timestamp of sensor data); and device metadata (metadata that characterizes the device, e.g., device model, sensor model, manufacturer, etc.). Device data can be, for example, the data coming from the camera (device).

- General data: data related to or coming from devices that has been modified or computed to derive different data, plus business data (i.e., data for business use such as operation, maintenance, service, customers, etc.).

- IoT data: general data plus device data.
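
The nesting of these four data categories can be sketched as a small set of Python data classes. This is only an illustrative sketch: the class and field names (`SensorData`, `observed_metadata`, `derived`, and so on) are assumptions made here and are not taken from any standard.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class SensorData:
    """A digitized sensor reading, e.g. one camera sensor value."""
    value: Any


@dataclass
class DeviceData:
    """Sensor data plus observed metadata and device metadata."""
    sensor_data: SensorData
    observed_metadata: Dict[str, Any]  # e.g. {"timestamp": ...}
    device_metadata: Dict[str, Any]    # e.g. {"device_model": ..., "manufacturer": ...}


@dataclass
class GeneralData:
    """Data modified or computed from device readings, plus business data."""
    derived: Dict[str, Any]
    business: Dict[str, Any]


@dataclass
class IoTData:
    """General data plus device data."""
    general: GeneralData
    device: List[DeviceData] = field(default_factory=list)
```

Keeping the two kinds of metadata in separate fields mirrors the distinction drawn in the list: one describes the reading, the other describes the device that produced it.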

- DQ characteristics to assess data quality in use within a specific context. This aspect considers selected criteria to estimate the quality of raw sensor data at the acquisition and processing layers. The DQ characteristics considered make it possible to estimate the quality of data sources, their context of acquisition and their transmission to data management and processing. These DQ characteristics are accuracy and completeness according to ISO/IEC 25012 [25] and reliability and communication reliability as proposed in [9]. This aspect is also related to the utilization layer and includes availability according to ISO/IEC 25012 [25] plus timeliness and adequacy as defined in [9].

- DQ Characteristics aimed at managing internal data quality. The main goal of managing internal data quality is to avoid inconsistent data and maintain the temporality of sensor data at the processing layer. These characteristics are consistency and currency according to ISO/IEC 25012 [25] and volatility as proposed in [9].

3. Related Work

3.1. Sensor Data Quality

3.2. Data Quality Methodologies Comparison

4. DAQUA-Model: A Data Quality Model

- Accuracy. It is the degree to which data has attributes that correctly represent the true value of the intended attribute of a concept or event in a specific context of use. In SCP environments, a low degree of accuracy could be derived from devices that provide values that differ from the value in the real world. For example, accuracy is low when a humidity sensor reads a value of 30% and the real value is 50%. Low levels of accuracy could be directly related to sensor errors such as constant or offset errors, outlier errors and noise errors. Accuracy could also be indirectly affected by continuous varying or drifting errors and trimming errors (see Table 4).

- Completeness. It is the degree to which subject data associated with an entity has values for all expected attributes and related entity instances in a specific context of use. In SCP environments, a low degree of completeness could be derived from devices that read and send no values. For example, a low degree of completeness can occur when the records of sensor data have missing values. Low levels of completeness could be related directly to sensor errors such as crash or jammed errors and indirectly to trimming and noise errors (see Table 4).

- Consistency. It represents the degree to which data has attributes that are free from contradiction and are coherent with other data in a specific context of use. It can be either or both among data regarding one entity and across similar data for comparable entities. In SCP environments, a low degree of consistency could occur when two sensors produce contradictory data. For example, for proximity sensors that provide the relative distance to the sensor position, a consistency problem for a single sensor could be a negative distance value, while a consistency problem between two sensors in the same position could be two different values. Thus, low levels of consistency could be related directly to continuous varying/drifting errors and indirectly to constant or offset errors, trimming errors and noise errors (see Table 4).

- Credibility. It is defined as the degree to which data has attributes that are regarded as true and believable by users in a specific context of use. In SCP environments, a low degree of credibility could be derived from a single sensor placed where its data cannot be validated by another entity or sensor. For example, a credibility issue could arise when the data from a sensor does not match the data from another sensor placed nearby. Low levels of credibility could be related directly to sensor errors such as outlier errors and indirectly to constant or offset errors, continuous varying/drifting errors and noise errors (see Table 4).

- Currentness. It is the degree to which data has attributes that are of the right age in a specific context of use. In SCP environments, a low degree of currentness could be derived from a sensor that reports past values as the current value (see Table 4). For example, an irrigation sensor produces a value indicating that the field must be irrigated; the field is then irrigated, but the data is not updated. The data still indicates that irrigation is needed although it has already been done, so this is data without sufficient currentness.

- Accessibility. It is the degree to which data can be accessed in a specific context of use, particularly by people who need supporting technology or special configuration because of some disability. In SCP environments, a low degree of accessibility could arise when the users who need the data cannot access it at the moment they need it. For example, data produced by a specific sensor may be unreachable due to network issues.

- Compliance. It refers to the degree to which data has attributes that adhere to standards, conventions or regulations in force and similar rules relating to data quality in a specific context of use. In SCP environments, a low degree of compliance could be derived from sensor data that does not use the standard formats established in the organization. For example, if the organization establishes that values from distance sensors must be expressed in meters, and some sensors produce values in meters and others in miles, these data have low compliance levels.

- Confidentiality. It is the degree to which data has attributes that ensure that it is only accessible and interpretable by authorized users in a specific context of use. In SCP environments, a low degree of confidentiality could be derived from inefficient security management of sensor data. For example, a confidentiality leak might occur when data produced by a sensor placed in a nuclear power plant can be freely accessed from external networks even though this data was marked as sensitive and, therefore, confidential in order to prevent possible terrorist acts.

- Efficiency. It is the degree to which data has attributes that can be processed and provide the expected levels of performance by using the appropriate amounts and types of resources in a specific context of use. In SCP environments, a low degree of efficiency could be derived from the storage of duplicated data, which takes more time and resources to send or manipulate. For example, a sensor that sends both a code and a description of the place where it is located every time it sends or stores a record has low efficiency, because the code alone is enough to identify the place.

- Precision. It is the degree to which data has attributes that are exact or that provide discrimination in a specific context of use. In SCP environments, a low degree of precision could be derived from devices that provide inexact values. For example, precision is low when sensor data stores weight with no decimals although a minimum of three decimals is required. Low levels of precision could be related directly to trimming errors and indirectly to noise errors (see Table 4).

- Traceability. The degree to which data has attributes that provide an audit trail of access to the data and of any changes made to the data in a specific context of use. In SCP environments, a low degree of traceability could be derived from sensor data with no metadata. For example, data logs that record who has accessed the sensor data and the operations performed on it provide traceability. Low levels of traceability could be related indirectly to crash or jammed errors as well as to temporal delay errors (see Table 4).

- Understandability. The degree to which data has attributes that enable it to be read and interpreted by users, and are expressed in appropriate languages, symbols and units in a specific context of use. In SCP environments, a low degree of understandability could be derived from sensor data represented with codes instead of acronyms. For example, records of temperature data from a car have an attribute that identifies where the sensor is placed in the car. This attribute can be stored as a code like “xkq1”, but if it is stored as “GasolineTank” it is expected to have a higher level of understandability.

- Availability. The degree to which data has attributes that enable it to be retrieved by authorized users and/or applications in a specific context of use. In SCP environments, a low degree of availability could be derived from insufficient resources in the system in which sensor data is stored. For example, sensor replication can be used to keep sensor data available even if one sensor has an issue. Low levels of availability could be related indirectly to temporal delay errors and crash or jammed errors (see Table 4).

- Portability. The degree to which data has attributes that enable it to be installed, replaced or moved from one system to another preserving the existing quality in a specific context of use. In SCP environments, a low degree of portability could be derived from sensor data that does not follow a specific data model (see Table 4) or whose format presents some problems. For example, when sensor data is shared with a particular system, or with another organization or department, data loss can occur. If this happens because of, for example, a data model mismatch or a problem with the data format, the cause is directly related to the portability of the data.

- Recoverability. The degree to which data has attributes that enable it to maintain and preserve a specified level of operations and quality, even in the event of failure, in a specific context of use. In SCP environments, a low degree of recoverability could be derived from devices that do not have a fault-tolerance or backup mechanism. For example, when a device fails, the data stored in that device should be recoverable. Low levels of recoverability could be related indirectly to temporal delay errors and crash or jammed errors (see Table 4).
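
Some of the characteristics above lend themselves to simple numeric checks. The following sketch shows one possible way to quantify completeness, accuracy and currentness. The function names, the record layout (a list of dictionaries) and the formulas are assumptions chosen for illustration; they are not metrics prescribed by ISO/IEC 25012.

```python
from datetime import datetime, timedelta


def completeness(records, expected_attributes):
    """Share of expected attribute values that are present (0.0 to 1.0)."""
    expected = len(records) * len(expected_attributes)
    if expected == 0:
        return 1.0  # nothing was expected, so nothing is missing
    present = sum(
        1
        for record in records
        for attr in expected_attributes
        if record.get(attr) is not None
    )
    return present / expected


def absolute_error(reading, reference):
    """Distance between a sensor reading and a trusted reference value."""
    return abs(reading - reference)


def is_current(observed_at, now, max_age):
    """True if the reading is no older than max_age."""
    return now - observed_at <= max_age


records = [
    {"humidity": 30.0, "timestamp": datetime(2018, 9, 13, 12, 0)},
    {"humidity": None, "timestamp": datetime(2018, 9, 13, 12, 5)},
]
print(completeness(records, ["humidity", "timestamp"]))  # 3 of 4 values present
print(absolute_error(30.0, 50.0))  # the humidity example from the text
```

The accuracy check assumes that a reference value is available, which in practice usually means a calibrated instrument or a redundant sensor.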

5. DAQUA-MASS: A Data Quality Management Methodology for Data Sensors

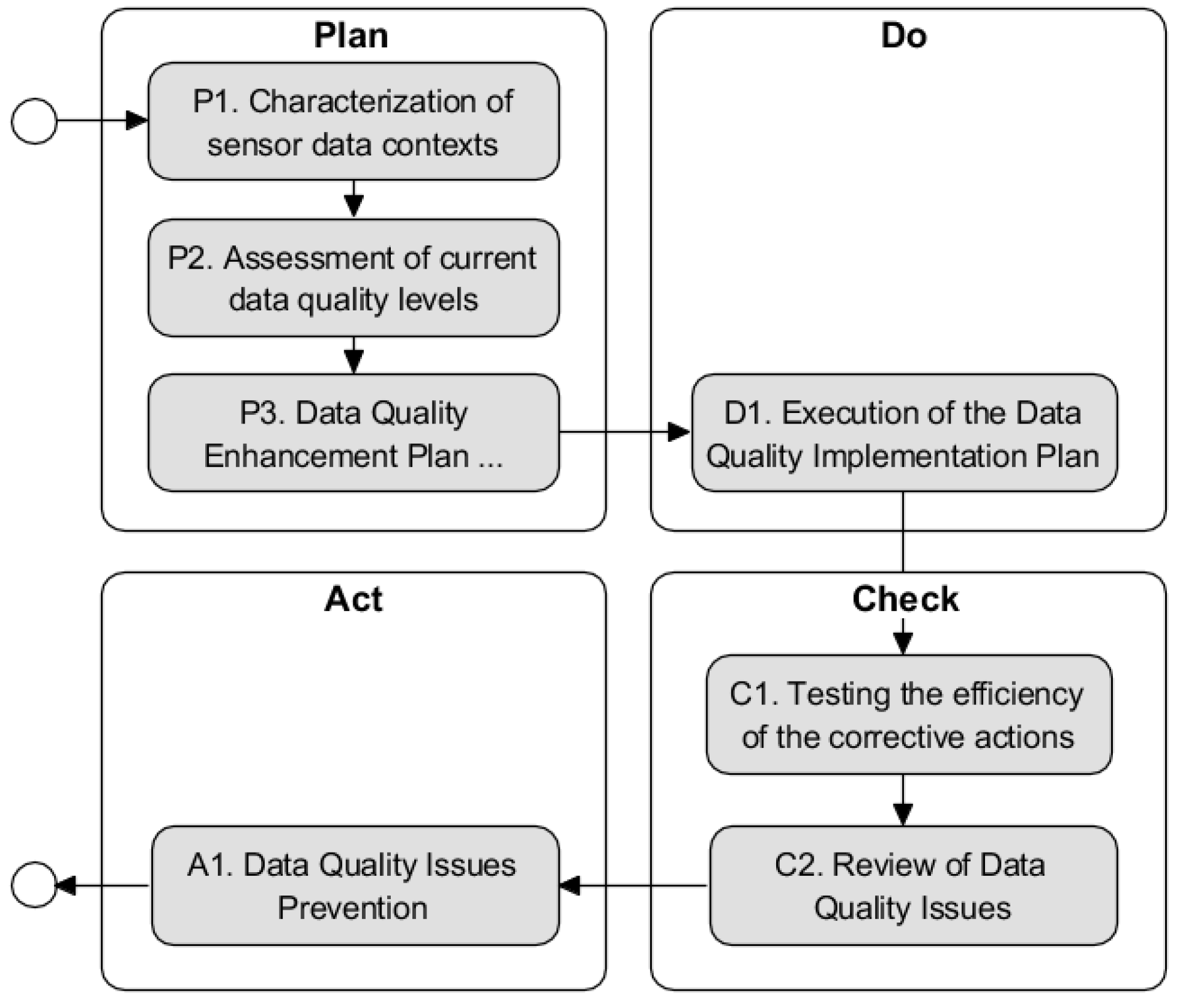

- The plan phase establishes the strategy and the data quality improvement implementation plan as necessary to deliver results in accordance with data requirements;

- In the do phase the data quality improvement implementation plan is executed;

- During the check phase, data quality and process performance are monitored against the strategy and data requirements, and the results are reported to validate the efficiency of the corrective actions; and finally

- The act phase takes actions to continually improve process performance.

- Chief Information Officer (CIO). The most senior executive in an enterprise, responsible for the traditional information technology and computer systems that support enterprise goals.

- Chief Data Officer (CDO). A corporate officer responsible for enterprise-wide data governance and utilization of data as an asset, via data processing, analysis, data mining, information trading and other means. The CIO and CDO are at the executive level.

- Data Governance Manager (DGM). He or she oversees the development of the enterprise data governance program and is responsible for architecting certain solutions and frameworks. This role is at the strategic level.

- Data Quality Steward for SCP domain (SCP DQ Steward). A DQ steward at the tactical level for the area of SCP environments, who considers the implications of these environments for DQ.

- Data Quality Steward (DQ Steward). He or she is responsible for utilizing an organization’s data quality governance processes to ensure fitness of data elements, both the content and metadata. Data stewards have a specialist role that incorporates processes, policies, guidelines and responsibilities for administering an organization’s entire data in compliance with DQ policies and procedures. This role is at the operational level.

- SCP Technical Architect (SCP Arch). An SCP architect is an SCP (and in general IoT) expert who makes high-level design choices and dictates technical standards, including SCP technology standards, tools, and platforms.

5.1. The Plan Phase

5.1.1. P1. Characterization of the Current State of the Sensor Data Contexts

- P1-1. Sensor and sensor data lifecycle management specification. The lifecycles of sensors [2] and sensor data [39] have to be defined so that they can be managed. For sensors, the lifecycle starts when the device is obtained and ends when the sensor must be replaced or removed because it is no longer useful. For sensor data, the lifecycle starts when sensors produce the data and ends when the data is eliminated. The end of the sensor data lifecycle should not be confused with the moment at which data goes from being operational to historical. Operational sensor data is used on a day-to-day basis; when data is no longer useful on a day-to-day basis because of its age, it is separated, stored in another database and becomes historical data. It is important to highlight that the sensor and sensor data lifecycles are used to better contextualize the environment of the data.

- P1-2. Management of quality policies, standards and procedures for data quality management. Specify fundamental intentions and rules for data quality management. Ensure the data quality policies, standards and procedures are appropriate for the data quality strategy, comply with data requirements and establish the foundation for continual improvement of the effectiveness and efficiency of data quality management in all the key SCP operations. The purpose of data quality policy/standards/procedures management is to capture rules that apply to performing data quality control, data quality assurance, data quality improvement, data-related support and resource provision consistently. The policies, standards and procedures should be defined before the complete plan is defined and implemented, so that the implementation plan can be based on them.

- P1-3. Provision of sensor data and work specifications. Developing specifications that describe the characteristics of data and the work instructions for smart connected products enables data processing and data quality monitoring and control. To support the description of the sensor data and work specifications, some metadata must be provided. Metadata can help with one of the biggest problems of SCP: interoperability. Interoperability refers to the ability of one or more smart connected products to communicate and exchange data [15].

- P1-4. Identification, prioritization and validation of sensor data requirements. Collect the needs and expectations related to sensor data from devices and stakeholders, and translate them into data requirements through identification, prioritization and validation. The purpose of requirements management is to establish the basis for creating or refining a data quality strategy for SCP environments aligned with the needs and expectations of stakeholders. It is important to have well-defined and well-implemented sensor data requirements to avoid problems from the start and to facilitate the collection and integration of sensor data.
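
The distinction drawn in P1-1 between operational data, historical data and the end of the sensor data lifecycle can be sketched as a simple age-based rule. The function name, the stage labels and the two age limits are hypothetical; each organization would set its own limits in its lifecycle specification.

```python
from datetime import datetime, timedelta


def lifecycle_stage(produced_at, now, operational_for, retain_for):
    """Classify a sensor reading by its age.

    operational_for: how long data stays useful on a day-to-day basis.
    retain_for: total time the data is kept before it is eliminated.
    """
    age = now - produced_at
    if age <= operational_for:
        return "operational"
    if age <= retain_for:
        return "historical"  # moved to a separate store, lifecycle not over yet
    return "to_eliminate"    # end of the sensor data lifecycle


now = datetime(2018, 9, 13)
print(lifecycle_stage(datetime(2018, 9, 10), now,
                      timedelta(days=7), timedelta(days=365)))
```

Keeping "historical" and "to_eliminate" as separate stages reflects the point made in P1-1 that moving data to a historical store does not end its lifecycle.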

5.1.2. P2. Assessment of the Current State of the Levels of Data Quality

- P2-1. Identification of the data quality characteristics representing quality requirements and determination and development of metrics and measurement methods. Develop or select the measurement indicators, corresponding metrics and measurement methods used to measure the quality levels of data produced by devices during all the SCP operations.

- P2-2. Measurement and analysis of data quality levels. Measure the data quality levels by implementing the measurement plans and determining the measurement results. This means applying the measurement algorithms defined and developed in the previous step (P2-1). Such algorithms strongly depend on the IT infrastructure landscape of each organization. Therefore, organizations often develop their own algorithms or use commercial solutions that are compatible with their own infrastructure. Merino et al. [7] present algorithms and metrics for each data quality characteristic. After data quality levels have been measured, they can be quantitatively analysed to extract insights about the SCP environment being managed. As a result, a list of nonconformities can be elaborated in the next step, with which to make informed decisions.
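
As a rough illustration of how measured levels might be turned into a list of nonconformities, the sketch below compares each measured level with a required minimum. The function name, the 0.0 to 1.0 scale and the example thresholds are assumptions; they are not taken from the algorithms in [7].

```python
def find_nonconformities(measured, required):
    """Return (characteristic, measured_level, required_level) for every
    characteristic whose level is missing or below the required minimum."""
    issues = []
    for characteristic, minimum in required.items():
        level = measured.get(characteristic)
        if level is None or level < minimum:
            issues.append((characteristic, level, minimum))
    return issues


measured = {"completeness": 0.92, "accuracy": 0.99}
required = {"completeness": 0.95, "accuracy": 0.98, "currentness": 0.90}

for characteristic, level, minimum in find_nonconformities(measured, required):
    print(characteristic, level, minimum)
```

A characteristic that was required but never measured is reported with a level of `None`, so a gap in the measurement plan is not mistaken for conformity.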

5.1.3. P3. Data Quality Enhancement Plan Definition

- P3-1. Analysis of root causes of data nonconformities. Analyse the root causes of each data quality issue and assess the effect of the issue on business processes. The purpose of root cause analysis and solution development for unsolved data quality nonconformities is to establish, in accordance with the data quality strategy and with the priorities identified by Data Quality Assurance, the basis on which to perform data cleansing and/or process improvement for data nonconformity prevention.

- P3-2. Data quality risk assessment. Identify risks throughout the data life cycle, analyse the impact if each risk were to occur and determine risk priorities to establish the basis for monitoring and control of processes and data. The purpose of data quality monitoring and control is, by following applicable work instructions, to identify and respond when Data Processing fails to deliver data that meet the requirements in the corresponding data specification. This makes it possible to control what is happening to the data or even in the SCP environment. For example, by controlling sensor data quality, it is possible to identify certain issues on devices. Data is often the most important asset to protect, even though the direct cost of losing it may seem small compared with that of research data or intellectual property. If a sensor stops working for some reason, it can be replaced, but if data is lost, it is very difficult or even impossible to recover. A data loss can also be the root cause of other problems. In conclusion, data quality risks should be identified in order to avoid them. As Karkouch et al. highlight in [6], the main factors affecting DQ in SCP or IoT environments are: deployment scale, resources constraints, network, sensors (as physical devices), environment, vandalism, fail-dirty, privacy preservation, security vulnerability and data stream processing.

- P3-3. Development of improvement solutions to eliminate the root causes. Propose solutions to eliminate the root causes and prevent recurrence of nonconformities. Evaluate the feasibility of the proposed improvements through cost benefit analysis.

- P3-4. Definition of improvement targets. The purpose of this activity is to analyse possible improvement areas according to the business processes, risk catalogue and the data quality strategy, and then to select those that are more aligned with the data quality strategy and/or are able to lead to greater data quality enhancement regarding previously detected risks. Specific areas or sub-nets of devices in the organization’s SCP environments could also serve as a criterion to determine specific improvement targets.

- P3-5. Establishment of the data quality enhancement plan. Define the scope and target of data quality and prepare detailed implementation plans, defining and allocating the resources needed.
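
The risk prioritization described in P3-2 can be sketched with a simple scoring scheme. Scoring a risk as impact multiplied by likelihood is a common convention assumed here for illustration; the methodology itself does not prescribe a formula, and the 1 to 5 scales, the class name `Risk` and the example scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    impact: int      # 1 (minor) to 5 (severe), hypothetical scale
    likelihood: int  # 1 (rare) to 5 (frequent), hypothetical scale

    @property
    def priority(self) -> int:
        # Assumed scoring rule: higher product means higher priority.
        return self.impact * self.likelihood


def prioritize(risks):
    """Order risks from highest to lowest priority."""
    return sorted(risks, key=lambda risk: risk.priority, reverse=True)


# Risk names follow some of the factors listed in [6]; scores are made up.
catalogue = [
    Risk("vandalism", impact=4, likelihood=1),
    Risk("network", impact=3, likelihood=4),
    Risk("fail-dirty", impact=5, likelihood=2),
]
for risk in prioritize(catalogue):
    print(risk.name, risk.priority)
```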

5.2. The Do Phase

D1. Execution of the Data Quality Improvement Plan

- D1-1. Establish flags to convey information about the sensor data. Flags or qualifiers convey information about individual data values, typically using codes that are stored in a separate field to correspond with each value. Flags can be highly specific to individual studies and data sets or standardized across all data.

- D1-2. Definition of the optimal node placement plan. Node placement is a very challenging problem that has been proven to be NP-hard (non-deterministic polynomial-time hard) for most formulations of sensor deployment [40,41]. To tackle such complexity, several heuristics have been proposed to find sub-optimal solutions [42,43]. However, the context of these optimization strategies is mainly static, in the sense that the quality of candidate positions is assessed with a structural quality metric such as distance or network connectivity and/or on the basis of a fixed topology. Also, application-level interest can vary over time and the available network resources may change as new nodes join the network, or as existing nodes run out of energy [44]. Node placement also involves node or sensor replication. Replicating sensor data is important for high availability and disaster recovery, and replication of this data on cloud storage needs to be implemented efficiently. Archiving is one way to recover lost or damaged data in primary storage space, but replicas of data repositories that are updated concurrently with the primary repositories can be used for sensitive systems with strong data availability requirements. Replication can be demanding in terms of storage and may degrade performance if a concurrent update strategy is enforced.

- D1-3. Redesign the software or hardware that includes sensors to eliminate root causes. This is an alternative to the optimal re-placement of sensors. For example, redesigning and reimplementing a fragment of an SCP’s firmware could improve fault tolerance.

- D1-4. Data Cleansing. The purpose of Data Cleansing is to ensure, in response to the results of root cause analysis and solution development, that the organization can access data sets that contain no nonconformities capable of causing unacceptable disruption to the effectiveness and efficiency of decision making using those data. The nonconformities are corrected by implementing the developed solutions, and a record of the corrections is made.

- D1-5. Force an appropriate level of human inspection. If performed by trained and experienced technicians, visual inspection is used to monitor the state of industrial equipment and to identify necessary repairs. There are also technologies used to assist with fault recognition or even to automate inspections. Shop floor workers are made responsible for basic maintenance including cleaning machines, visual inspection and initial detection of machine degradation.

- D1-6. Implement an automated alert system to warn about potential sensor data quality issues. Relying only on human inspection can be a complex task for maintenance staff. It is necessary to implement a system in which key indicators constantly show whether the status of the sensors is correct. If a sensor is not working properly, the root cause can be a hardware, network or software failure, and it affects data quality. Furthermore, an automated data quality procedure might identify anomalous spikes in the data and flag them. Although human supervision, intervention and inspection are almost always necessary, as stated in [45,46], the inclusion of automated quality procedures is often an improvement, because it ensures consistency and reduces human bias. Automated data quality procedures are also more efficient at handling the vast quantities of data generated by streaming sensor networks, and they reduce the amount of human inspection required.

- D1-7. Schedule sensor maintenance to minimize data quality issues. Sensors require routine maintenance and scheduled calibration that, in some cases, can be done only by the manufacturer. Ideally, maintenance and repairs are scheduled to minimize data loss (e.g., snow-depth sensors repaired during the summer) or staggered in such a way that data from a nearby sensor can be used to fill gaps. In cases in which unscheduled maintenance is required, stocking replacement parts on site ensures that any part of the network can be replaced immediately.
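
The flags of D1-1 and the automated spike detection mentioned in D1-6 can be combined in a short sketch. The flag codes, the window size and the deviation threshold below are hypothetical choices, and comparing each value against the median of recent values is just one simple way to detect spikes, not a technique prescribed by the methodology.

```python
from statistics import median

# Hypothetical flag codes, stored separately from the values they describe.
FLAG_OK = "OK"
FLAG_MISSING = "M"
FLAG_SPIKE = "S"


def flag_readings(values, window=5, max_deviation=10.0):
    """Return one flag per reading, in the same order as the readings."""
    flags = []
    for i, value in enumerate(values):
        if value is None:
            flags.append(FLAG_MISSING)
            continue
        # Median of the preceding non-missing values inside the window.
        recent = [v for v in values[max(0, i - window):i] if v is not None]
        if recent and abs(value - median(recent)) > max_deviation:
            flags.append(FLAG_SPIKE)
        else:
            flags.append(FLAG_OK)
    return flags


def indices_to_alert(flags):
    """Positions of readings that should be brought to the staff's attention."""
    return [i for i, flag in enumerate(flags) if flag != FLAG_OK]


temperatures = [20.0, 20.5, None, 21.0, 95.0, 20.8]
flags = flag_readings(temperatures)
print(flags)
print(indices_to_alert(flags))
```

The median is used instead of the mean so that a single earlier spike inside the window does not distort the baseline for the readings that follow it.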

5.3. The Check Phase

5.3.1. C1. Testing the Efficiency of the Corrective Actions

- C1-1. Monitoring and control of the enhanced data. According to the identified risk priorities, monitor and measure conformity of data to the applicable specification. Monitoring and measuring take place either at intervals or continuously and in accordance with applicable work instructions. These work instructions can include performing range checks on numerical data, domain checks on categorical data, and slope and persistence checks on continuous data streams. If data nonconformities are found, then correct the data when viable and distribute to stakeholders a record of the viability and degree of success for each corrective action.

- C1-2. Definition of an intersite comparison plan. Create policies for comparing data with data from related sensors. If no replicate sensors exist, intersite comparisons are useful, whereby data from one location are compared with data from nearby identical sensors.
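
The checks named in C1-1, and the comparison with nearby sensors described in C1-2, can be sketched as small predicate functions. The function names, thresholds and tolerance values are assumptions for illustration; real limits would come from the applicable data specification.

```python
def range_check(value, low, high):
    """Numerical data: the value must lie within [low, high]."""
    return low <= value <= high


def domain_check(value, allowed):
    """Categorical data: the value must be one of the allowed categories."""
    return value in allowed


def slope_check(series, max_step):
    """Continuous stream: no consecutive pair may change by more than max_step."""
    return all(abs(b - a) <= max_step for a, b in zip(series, series[1:]))


def persistence_check(series, max_repeats):
    """Continuous stream: a value repeated more than max_repeats times in a
    row suggests a stuck sensor."""
    run = 1
    for a, b in zip(series, series[1:]):
        run = run + 1 if a == b else 1
        if run > max_repeats:
            return False
    return True


def nearby_sensor_check(series_a, series_b, tolerance):
    """Readings from two nearby identical sensors should agree within tolerance."""
    return all(abs(a - b) <= tolerance for a, b in zip(series_a, series_b))


print(range_check(-3.0, 0.0, 100.0))             # negative distance fails
print(slope_check([20.0, 21.0, 35.0], 5.0))      # jump of 14 fails
print(persistence_check([1.0, 1.0, 1.0, 1.0], 3))  # four repeats fail
```

Each function returns `True` when the data conforms, so a failed check can be recorded directly as a nonconformity for the stakeholders' report.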

5.3.2. C2. Review of Data Quality Issues

- C2-1. Issue analysis. Review non-solved nonconformities arising from Data Processing to identify those that are possibly connected to the reported issue that has triggered the need for Data Quality Assurance. This review creates a set of related nonconformities. This set is the basis for further investigation through the measurement of data quality levels in SCP environments. Respond to the reporting of unresolved data nonconformities from within Data Quality Control, indications of the recurrence of types of nonconformity or other issues raised against the results of Data Quality Planning or Data Quality Control.

5.4. The Act Phase

A1. Data Quality Issues Prevention

- A1-1. Provide ready access to replacement parts. Schedule routine calibration of instruments and sensors based on manufacturer specifications. Maintaining additional calibrated sensors of the same make/model can allow immediate replacement of sensors removed for calibration to avoid data loss. Otherwise, sensor calibrations can be scheduled at non-critical times or staggered such that a nearby sensor can be used as a proxy to fill gaps.

- A1-2. Update the strategy for node replacement. Controlled replacement is often pursued for only a selected subset of the employed nodes with the goal of structuring the network topology in a way that achieves the desired application requirements. In addition to coverage, the nodes’ positions affect numerous network performance metrics such as energy consumption, delay and throughput. For example, large distances between nodes weaken the communication links, lower the throughput and increase energy consumption. Additionally, the strategy can anticipate some common repairs and maintain an inventory of replacement parts. This means that sensors could be replaced before failure where sensor lifetimes are known or can be estimated.
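
Replacing sensors before failure, where lifetimes are known or can be estimated, can be sketched as a date comparison. The function name, the lifetime expressed in days and the 30-day safety margin are hypothetical; actual values would come from manufacturer specifications or field experience.

```python
from datetime import date, timedelta


def replacement_due(installed_on, expected_lifetime_days, today,
                    safety_margin_days=30):
    """True if the sensor should be replaced now, ahead of its expected
    end of life, so that it is swapped before it fails."""
    end_of_life = installed_on + timedelta(days=expected_lifetime_days)
    return today >= end_of_life - timedelta(days=safety_margin_days)


installed = date(2018, 1, 1)
print(replacement_due(installed, 365, today=date(2018, 6, 1)))
print(replacement_due(installed, 365, today=date(2018, 12, 15)))
```

The safety margin leaves time to take a part from the inventory and schedule the swap at a non-critical time.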

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Ashton, K. That ‘internet of things’ thing. RFID J. 2009, 22, 97–114.

- Weber, R.H. Internet of things–governance quo vadis? Comput. Law Secur. Rev. 2013, 29, 341–347.

- Hassanein, H.S.; Oteafy, S.M. Big Sensed Data Challenges in the Internet of Things. In Proceedings of the 13th International Conference on Distributed Computing in Sensor Systems (DCOSS), Ottawa, ON, Canada, 5–7 June 2017.

- Atzori, L.; Iera, A.; Morabito, G. The internet of things: A survey. Comput. Netw. 2010, 54, 2787–2805.

- Zhang, W.; Zhang, Z.; Chao, H.C. Cooperative fog computing for dealing with big data in the internet of vehicles: Architecture and hierarchical resource management. IEEE Commun. Mag. 2017, 55, 60–67.

- Karkouch, A.; Mousannif, H.; Al Moatassime, H.; Noel, T. Data quality in internet of things: A state-of-the-art survey. J. Netw. Comput. Appl. 2016, 73, 57–81.

- Merino, J.; Caballero, I.; Rivas, B.; Serrano, M.; Piattini, M. A data quality in use model for big data. Future Gener. Comput. Syst. 2016, 63, 123–130.

- Jesus, G.; Casimiro, A.; Oliveira, A. A Survey on Data Quality for Dependable Monitoring in Wireless Sensor Networks. Sensors 2017, 17, 2010.

- Rodríguez, C.C.G.; Servigne, S. Managing Sensor Data Uncertainty: A Data Quality Approach. Int. J. Agric. Environ. Inf. Syst. 2014, 4, 35–54.

- Klein, A.; Hackenbroich, G.; Lehner, W. How to Screen a Data Stream-Quality-Driven Load Shedding in Sensor Data Streams. 2009. Available online: http://mitiq.mit.edu/ICIQ/Documents/IQ%20Conference%202009/Papers/3-A.pdf (accessed on 13 September 2018).

- Mühlhäuser, M. Smart products: An introduction. In Proceedings of the European Conference on Ambient Intelligence, Darmstadt, Germany, 7–10 November 2007; Springer: Berlin, Germany, 2007; pp. 158–164.

- Laney, D.B. Infonomics: How to Monetize, Manage, and Measure Information as an Asset for Competitive Advantage; Routledge: London, UK, 2017.

- ISO/IEC. ISO/IEC 25000:2014. Systems and Software Engineering—Systems and Software Quality Requirements and Evaluation (SQuaRE)—Guide to SQuaRE. 2014. Available online: https://www.iso.org/standard/64764.html (accessed on 13 September 2018).

- Qin, Z.; Han, Q.; Mehrotra, S.; Venkatasubramanian, N. Quality-aware sensor data management. In The Art of Wireless Sensor Networks; Springer: Berlin/Heidelberg, Germany, 2014; pp. 429–464.

- Campbell, J.L.; Rustad, L.E.; Porter, J.H.; Taylor, J.R.; Dereszynski, E.W.; Shanley, J.B.; Gries, C.; Henshaw, D.L.; Martin, M.E.; Sheldon, W.M. Quantity is nothing without quality: Automated QA/QC for streaming environmental sensor data. Bioscience 2013, 63, 574–585.

- Klein, A.; Lehner, W. Representing data quality in sensor data streaming environments. J. Data Inf. Qual. 2009, 1, 10.

- ISO. ISO 8000-61: Data Quality—Part 61: Data Quality Management: Process Reference Model. 2016. Available online: https://www.iso.org/standard/63086.html (accessed on 13 September 2018).

- Cook, D.; Das, S.K. Smart Environments: Technology, Protocols and Applications; John Wiley Sons: Hoboken, NJ, USA, 2004. [Google Scholar]

- Porter, M.E.; Heppelmann, J.E. How smart, connected products are transforming competition. Harvard Bus. Rev. 2014, 92, 64–88. [Google Scholar]

- Ostrower, D. Smart Connected Products: Killing Industries, Boosting Innovation. 2014. Available online: https://www.wired.com/insights/2014/11/smart-connected-products (accessed on 13 September 2018).

- Wuenderlich, N.V.; Heinonen, K.; Ostrom, A.L.; Patricio, L.; Sousa, R.; Voss, C.; Lemmink, J.G. “Futurizing” smart service: implications for service researchers and managers. J. Serv. Mark. 2015, 29, 442–447.

- Allmendinger, G.; Lombreglia, R. Four strategies for the age of smart services. Harvard Bus. Rev. 2005, 83, 131.

- Tilak, S.; Abu-Ghazaleh, N.B.; Heinzelman, W. A taxonomy of wireless micro-sensor network models. ACM SIGMOBILE Mob. Comput. Commun. Rev. 2002, 6, 28–36.

- Barnaghi, P.; Bermudez-Edo, M.; Tönjes, R. Challenges for quality of data in smart cities. J. Data Inf. Qual. 2015, 6, 6.

- ISO/IEC. ISO/IEC 25012: Software Engineering-Software Product Quality Requirements and Evaluation (SQuaRE)—Data Quality Model. 2008. Available online: https://www.iso.org/standard/35736.html (accessed on 13 September 2018).

- Badawy, R.; Raykov, Y.P.; Evers, L.J.; Bloem, B.R.; Faber, M.J.; Zhan, A.; Claes, K.; Little, M.A. Automated Quality Control for Sensor Based Symptom Measurement Performed Outside the Lab. Sensors 2018, 18, 1215.

- Al-Ruithe, M.; Mthunzi, S.; Benkhelifa, E. Data governance for security in IoT & cloud converged environments. In Proceedings of the 2016 IEEE/ACS 13th International Conference of Computer Systems and Applications (AICCSA), Agadir, Morocco, 29 November–2 December 2016.

- Lee, Y.W.; Strong, D.M.; Kahn, B.K.; Wang, R.Y. AIMQ: A methodology for information quality assessment. Inf. Manag. 2002, 40, 133–146.

- McGilvray, D. Executing Data Quality Projects: Ten Steps to Quality Data and Trusted Information (TM); Elsevier: Burlington, VT, USA, 2008.

- ISO. ISO/TS 8000-150:2011 Data Quality—Part 150: Master Data: Quality Management Framework. 2011. Available online: https://www.iso.org/standard/54579.html (accessed on 13 September 2018).

- ISO. ISO 9001:2015 Quality Management Systems—Requirements, in ISO 9000 Family—Quality Management. 2015. Available online: https://www.iso.org/standard/62085.html (accessed on 13 September 2018).

- Batini, C.; Cappiello, C.; Francalanci, C.; Maurino, A. Methodologies for data quality assessment and improvement. ACM Comput. Surv. 2009, 41, 16.

- Woodall, P.; Oberhofer, M.; Borek, A. A classification of data quality assessment and improvement methods. Int. J. Inf. Qual. 2014, 3, 298–321.

- Strong, D.M.; Lee, Y.W.; Wang, R.Y. Data quality in context. Commun. ACM 1997, 40, 103–110.

- Wang, R.Y. A product perspective on total data quality management. Commun. ACM 1998, 41, 58–65.

- Srivannaboon, S. Achieving competitive advantage through the use of project management under the plan-do-check-act concept. J. Gen. Manag. 2009, 34, 1–20.

- Chen, P.Y.; Cheng, S.M.; Chen, K.C. Information fusion to defend intentional attack in internet of things. IEEE Internet Things J. 2014, 1, 337–348.

- Blasch, E.; Steinberg, A.; Das, S.; Llinas, J.; Chong, C.; Kessler, O.; Waltz, E.; White, F. Revisiting the JDL model for information Exploitation. In Proceedings of the 16th International Conference on Information Fusion (FUSION), Istanbul, Turkey, 9–12 July 2013.

- Pastorello, G.Z., Jr. Managing the Lifecycle of Sensor Data: From Production to Consumption. 2008. Available online: https://lis-unicamp.github.io/wp-content/uploads/2014/09/tese_GZPastorelloJr.pdf (accessed on 13 September 2018).

- Cerpa, A.; Estrin, D. ASCENT: Adaptive self-configuring sensor networks topologies. IEEE Trans. Mob. Comput. 2004, 3, 272–285.

- Cheng, X.; Du, D.Z.; Wang, L.; Xu, B. Relay sensor placement in wireless sensor networks. Wirel. Netw. 2008, 14, 347–355.

- Dhillon, S.S.; Chakrabarty, K. Sensor placement for effective coverage and surveillance in distributed sensor networks. In Proceedings of the 2003 IEEE Wireless Communications and Networking, New Orleans, LA, USA, 16–20 March 2003.

- Pan, J.; Cai, L.; Hou, Y.T.; Shi, Y.; Shen, S.X. Optimal Base-Station Locations in Two-Tiered Wireless Sensor Networks. IEEE Trans. Mob. Comput. 2005, 4, 458–473.

- Wu, J.; Yang, S. SMART: A scan-based movement-assisted sensor deployment method in wireless sensor networks. IEEE INFOCOM 2005, 4, 2313.

- Peppler, R.A.; Long, C.; Sisterson, D.; Turner, D.; Bahrmann, C.; Christensen, S.W.; Doty, K.; Eagan, R.; Halter, T.; Iveyh, M. An overview of ARM Program Climate Research Facility data quality assurance. Open Atmos. Sci. J. 2008, 2, 192–216.

- Fiebrich, C.A.; Grimsley, D.L.; McPherson, R.A.; Kesler, K.A.; Essenberg, G.R. The value of routine site visits in managing and maintaining quality data from the Oklahoma Mesonet. J. Atmos. Ocean. Technol. 2006, 23, 406–416.

| SCP Factor | Side Effect in Data Quality | Acquisition | Processing | Utilization |
|---|---|---|---|---|
| Deployment scale | SCPs are expected to be deployed on a global scale. This leads to a huge heterogeneity in data sources (not only computers but also everyday objects). Also, the huge number of devices increases the chance of errors occurring. | X | X | |
| Resource constraints | Limited computational and storage capabilities, due in turn to battery-power constraints among others, do not allow complex operations. | X | X | |
| Network | Intermittent loss of connection in the IoT is recurrent. Things are only capable of transmitting small-sized messages due to their scarce resources. | X | X | |
| Sensors | Embedded sensors may lack precision or suffer from loss of calibration or even low accuracy. Faulty sensors may also result in inconsistencies in data sensing. | X | | |
| Environment | SCP devices will not be deployed only in tolerant and less aggressive environments. To monitor some phenomena, sensors may be deployed in environments with extreme conditions. Data errors emerge when the sensor is affected by its surrounding environment [23]. | X | X | |
| Vandalism | Things are generally defenceless against outside physical threats (both from humans and animals). | X | X | |
| Fail-dirty | A sensor node fails, but it keeps reporting erroneous readings. It is a common problem for SCP networks and an important source of outlier readings. | X | X | |
| Privacy | Privacy-preserving processing may be applied, so DQ could be intentionally reduced. | X | | |
| Security vulnerability | Sensor devices are vulnerable to attack, e.g., it is possible for a malicious entity to alter data in an SCP device. | X | X | |
| Data stream processing | Data gathered by smart things are sent in the form of streams to the back-end pervasive applications which make use of them. Some stream processing operators could affect the quality of the underlying data [10]. Other important factors are data granularity and variety [24]. Granularity concerns interpolation and spatio-temporal density, while variety refers to interoperability and dynamic semantics. | X | X |

| Error | Description | Example |
|---|---|---|
| Temporal delay error | The observations are continuously produced with a constant temporal deviation. |  |
| Constant or offset error | The observations continuously deviate from the expected value by a constant offset. |  |
| Continuous varying or drifting error | The deviation between the observations and the expected value is continuously changing according to some continuous time-dependent function (linear or non-linear). |  |
| Crash or jammed error | The sensor stops providing any readings on its interface or gets jammed and stuck in some incorrect value. |  |
| Trimming error | Data are correct for values within some interval but are modified for values outside the interval. Beyond the interval, the data can be trimmed or may vary proportionally. |  |
| Outliers error | The observations occasionally deviate from the expected value, at random points in the time domain. |  |
| Noise error | The observations deviate from the expected value stochastically in the value domain and permanently in the temporal domain. |  |
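
As an illustration of the error types listed in the table above, the following minimal Python sketch injects each error into a list of clean readings. The function names, parameters, and sample values are assumptions made for this example and are not part of the methodology; the drift is assumed to be linear and the trimming is assumed to clip values to the interval bounds, which are only two of the behaviours the table allows.

```python
import random


def delay_error(values, lag):
    """Temporal delay: each reading is reported `lag` samples late (assumed padding: first value)."""
    return [values[0]] * lag + values[:len(values) - lag]


def offset_error(values, offset):
    """Constant or offset error: every reading deviates by the same amount."""
    return [v + offset for v in values]


def drift_error(values, rate):
    """Drifting error: the deviation grows linearly with the sample index (assumed linear)."""
    return [v + rate * i for i, v in enumerate(values)]


def stuck_error(values, start):
    """Jammed error: from index `start` on, the sensor repeats its previous reading."""
    if start <= 0 or start >= len(values):
        return list(values)
    return values[:start] + [values[start - 1]] * (len(values) - start)


def trimming_error(values, low, high):
    """Trimming error: readings outside [low, high] are clipped to the bounds."""
    return [min(max(v, low), high) for v in values]


def outlier_error(values, probability, magnitude, rng):
    """Outliers: occasional readings deviate by `magnitude` at random points in time."""
    return [v + magnitude if rng.random() < probability else v for v in values]


def noise_error(values, sigma, rng):
    """Noise: every reading deviates by a zero-mean Gaussian term."""
    return [v + rng.gauss(0.0, sigma) for v in values]


if __name__ == "__main__":
    clean = [20.0, 21.0, 22.0, 23.0, 24.0]  # hypothetical temperature readings
    rng = random.Random(0)                   # seeded for repeatable runs
    print("offset :", offset_error(clean, 1.5))
    print("drift  :", drift_error(clean, 0.5))
    print("stuck  :", stuck_error(clean, 2))
    print("trimmed:", trimming_error(clean, 21.0, 23.0))
    print("delayed:", delay_error(clean, 1))
    print("noisy  :", noise_error(clean, 0.2, rng))
```

Keeping each error as a separate pure function makes it possible to compose several of them on the same series, which can be useful when testing DQ checks against known defects.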

| Sensor Fault | DQ Problem | Root Cause | Solution |
|---|---|---|---|
| Omission faults | Absence of values | Missing sensor | Network reliability, retransmission |
| Crash faults (fading/intermittent) | Inaccuracy/absence of values | Environment interference | Redundancy/estimating with past values |
| Delay faults | Inaccuracy | Time domain | Timeline solutions |
| Message corruption | Integrity | Communication | Integrity validation |
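
Two of the solutions named in the table above, estimating with past values and integrity validation, can be sketched in a few lines of Python. This is only one possible reading of those entries: the table does not prescribe a technique, so carrying the last valid reading forward and appending a CRC32 checksum are assumptions chosen for brevity, and all function names are hypothetical.

```python
import zlib


def fill_with_last_value(readings):
    """Replace omitted readings (None) with the most recent valid reading."""
    filled, last = [], None
    for reading in readings:
        if reading is None:
            filled.append(last)  # stays None until a first valid reading exists
        else:
            filled.append(reading)
            last = reading
    return filled


def pack(payload: bytes) -> bytes:
    """Append a 4-byte big-endian CRC32 checksum to the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def unpack(message: bytes):
    """Return the payload if its checksum matches, otherwise None."""
    if len(message) < 4:
        return None
    payload, checksum = message[:-4], message[-4:]
    if zlib.crc32(payload).to_bytes(4, "big") != checksum:
        return None  # message corruption detected
    return payload


if __name__ == "__main__":
    print(fill_with_last_value([1.0, None, None, 2.0, None]))
    message = pack(b"t=21.5")
    corrupted = bytes([message[0] ^ 0x01]) + message[1:]  # flip one bit
    print(unpack(message), unpack(corrupted))
```

A checksum only detects corruption; recovering the value would still rely on retransmission or on an estimate such as the one produced by `fill_with_last_value`.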

| DQ Characteristics | Inherent | System Dependent | Temporal Delay Error | Constant or Offset Error | Continuous Varying/Drifting Error | Crash or Jammed Error | Trimming Error | Outliers Error | Noise Error |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy | x | P | S | S | P | P | |||
| Completeness | x | P | S | S | |||||
| Consistency | x | S | P | S | S | ||||
| Credibility | x | S | S | P | S | ||||
| Currentness | x | P | S | ||||||
| Accessibility | x | x | |||||||
| Compliance | x | x | |||||||
| Confidentiality | x | x | |||||||
| Efficiency | x | x | |||||||
| Precision | x | x | P | S | |||||
| Traceability | x | x | S | S | |||||
| Understandability | x | x | |||||||
| Availability | x | S | S | ||||||
| Portability | x | ||||||||
| Recoverability | x | S | S |

| Step | Act. | Input | Output | CIO | CDO | DGM | SCP DQ Steward | DQ Steward | SCP Arch |
|---|---|---|---|---|---|---|---|---|---|
| P1 | P1-1 | | | I | A | C | R | | |
| | P1-2 | | | I | A | R | R | C | |
| | P1-3 | | | I | C | A R | C | | |
| | P1-4 | | | I | A | R | R | R | C |
| P2 | P2-1 | | | I | A | C | R | | |
| | P2-2 | | | A | Q | Q | R | | |
| P3 | P3-1 | | | I | A | R | R | R Q | |
| | P3-2 | | | I | I | A | R | R | C |
| | P3-3 | | | I | A | R | R | R C | |
| | P3-4 | | | | | | | | |
| | P3-5 | | | I | Q | A | R | R | C |

| Step | Act. | Input | Output | CIO | CDO | DGM | SCP DQ Steward | DQ Steward | SCP Arch |
|---|---|---|---|---|---|---|---|---|---|
| D1 | D1-1 | | | A | Q | R | | | |
| | D1-2 | | | I | A | Q | R | | |
| | | | | I | A | Q | R | | |
| | D1-3 | | | I | A Q | R | C | | |
| | D1-4 | | | I | A | Q | R | C | |
| | D1-5 | | | I | C Q | A R | | | |
| | D1-6 | | | I | A | Q | R | | |

| Step | Act. | Input | Output | CIO | CDO | DGM | SCP DQ Steward | DQ Steward | SCP Arch |
|---|---|---|---|---|---|---|---|---|---|
| C1 | C1-1 | | | I | A | R | R | R | |
| | C1-2 | | | I | A | Q | R | | |
| C2 | C2-1 | | | I | I | A | R | Q | |

| Step | Act. | Input | Output | CIO | CDO | DGM | SCP DQ Steward | DQ Steward | SCP Arch |
|---|---|---|---|---|---|---|---|---|---|
| A1 | A1-1 | | | I | A | C | R | | |
| | A1-2 | | | I | A | C | R | | |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Perez-Castillo, R.; Carretero, A.G.; Caballero, I.; Rodriguez, M.; Piattini, M.; Mate, A.; Kim, S.; Lee, D. DAQUA-MASS: An ISO 8000-61 Based Data Quality Management Methodology for Sensor Data. Sensors 2018, 18, 3105. https://doi.org/10.3390/s18093105