Computer-Aided Approach for Rapid Post-Event Visual Evaluation of a Building Façade

Abstract

1. Introduction

2. System Overview

2.1. Image Collection

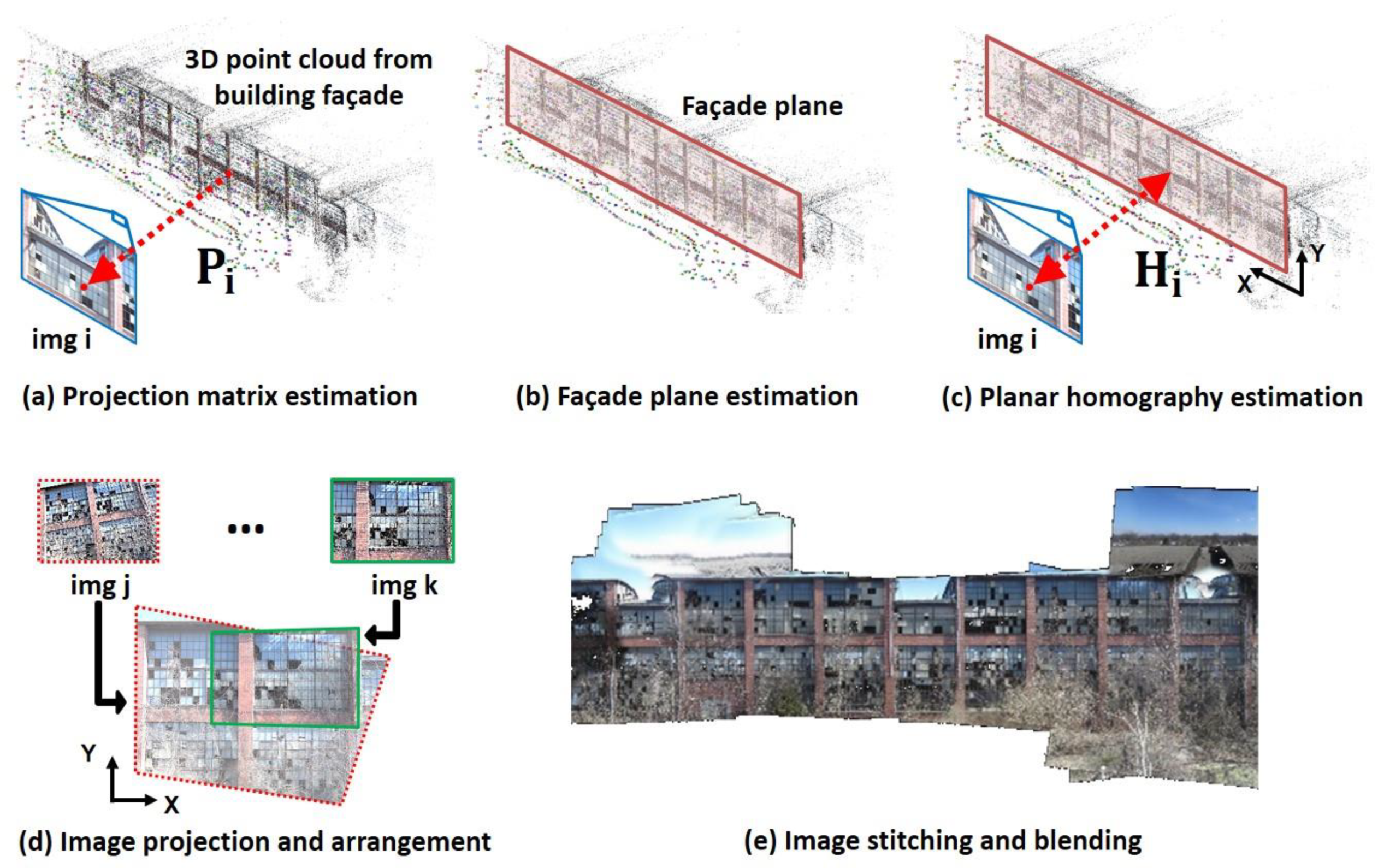

2.2. Orthophoto Generation

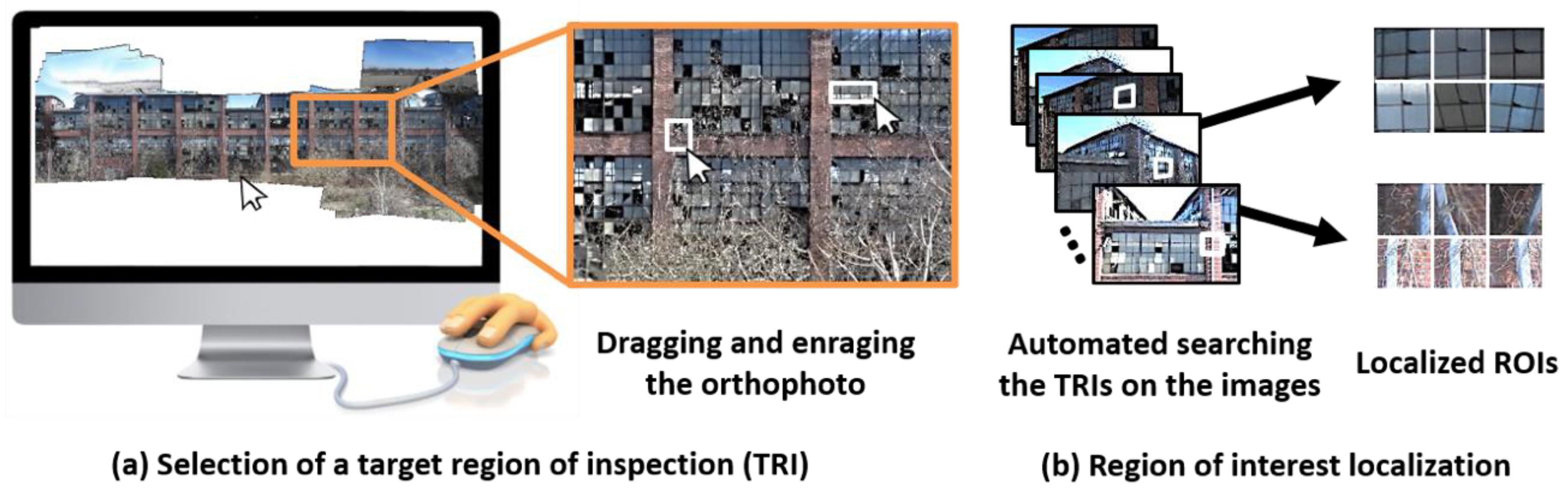

2.3. Region-of-Interest Localization

3. Experimental Validation

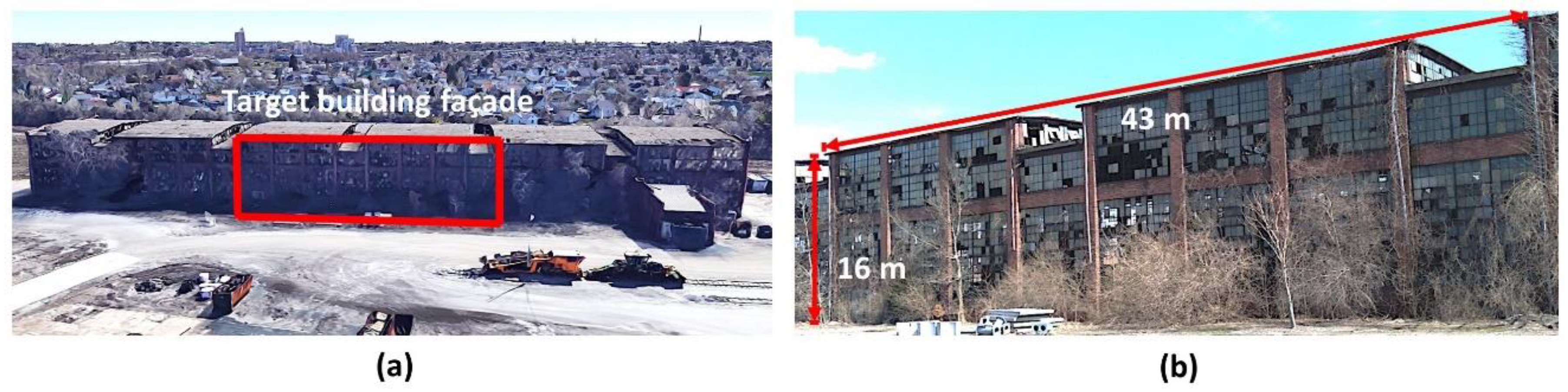

3.1. Description of the Test Building

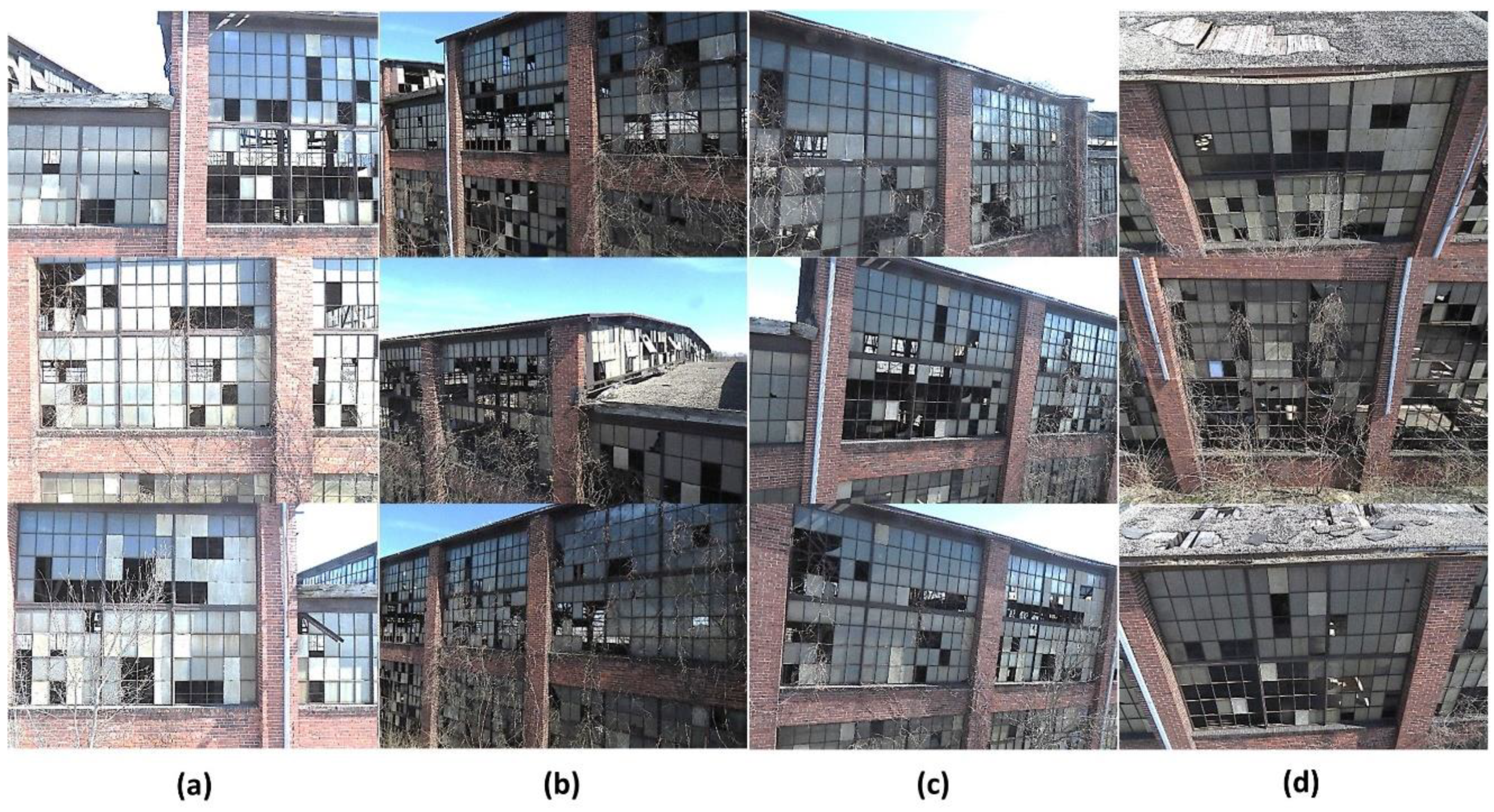

3.2. Collection of the Images from the Test Building

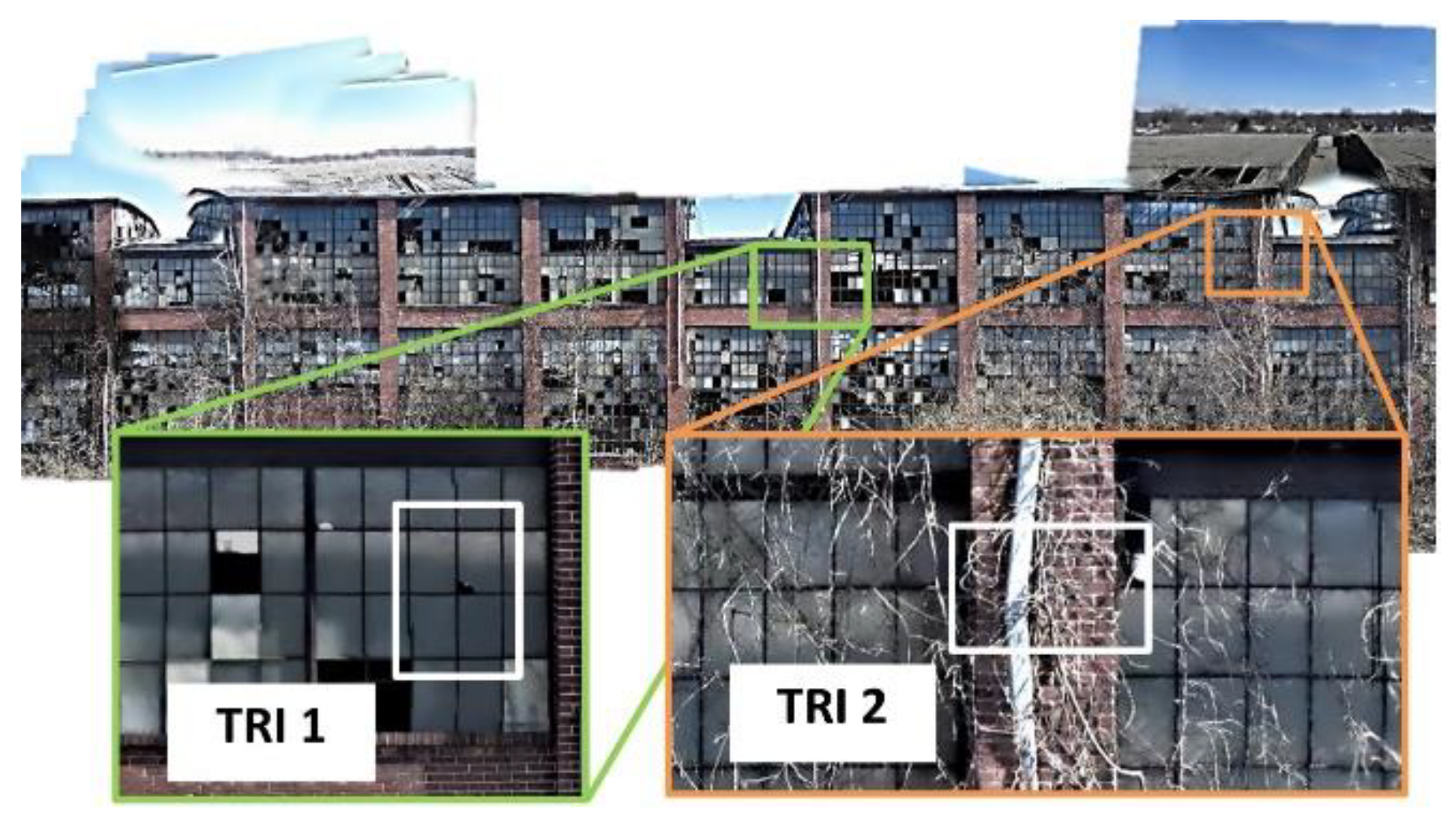

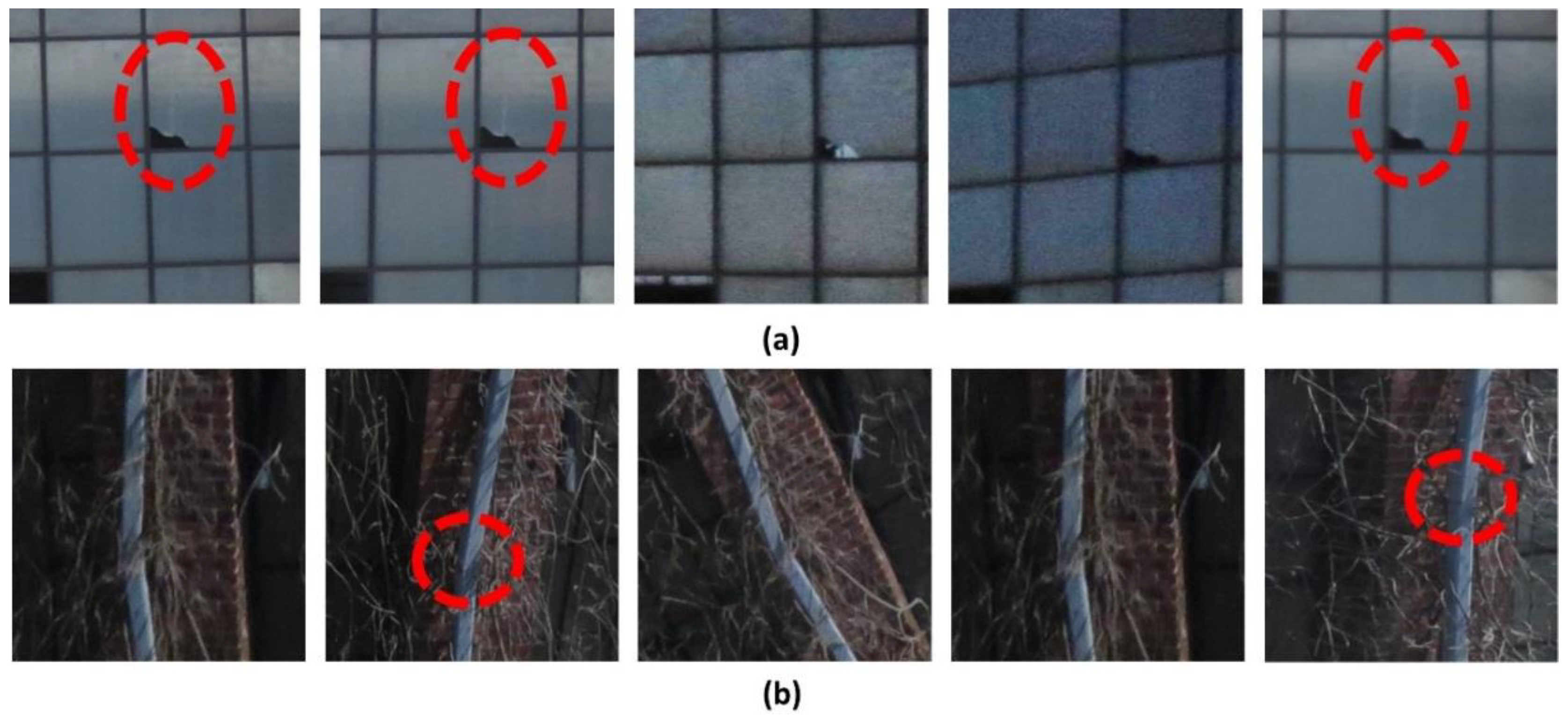

3.3. Results of Orthophoto Generation and Region-of-Interest Localization

4. Conclusions

- Large geometric variations in the TBF (e.g., extrusions or intrusions) that do not lie within the same plane will induce large distortions in the orthophoto. In such cases, it is recommended that more images be captured parallel to the TBF and that a smaller angle threshold be used for the orthophoto construction, which reduces distortions due to relief displacement arising from different elevations relative to the plane [37]. If the TBF does not lie within a single plane, engineers can generate multiple orthophotos and conduct the visual inspection on each of them. If the building façade is reasonably flat, a single orthophoto is sufficient.

- As seen in Figure 8b, unwanted foreground objects (e.g., branches, trees, street lights) may obstruct the view of the TRIs in the ROIs. In such a case, the only way to recover the obstructed view is to collect images from additional viewpoints. A similar issue occurs when the geometry of the structure is complex. Alternatively, an image classification technique may be applied to filter out the unnecessary ROIs so that only the useful ROIs are utilized [21,23,38,39,40].

- In some cases, incorrect feature matches will introduce significant errors or even failures in the SfM process. Mis-associated features should be handled adaptively to enhance the accuracy of the SfM outcomes. To address this issue, the authors have developed an adaptive resection-intersection bundle adjustment approach that refines the 3D points and camera poses separately [41].
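As a rough illustration of the first limitation, the sketch below applies the standard photogrammetric relief-displacement relation, d = r·h/H, to a façade and then filters images by viewing angle. Here r is taken as the radial distance of a point from the image nadir point (in pixels), h as the depth offset of that point from the façade plane, and H as the camera-to-plane distance. The function names, the definition of the viewing angle (between the camera optical axis and the direction perpendicular to the façade), and all numeric values are assumptions made for illustration; they are not the authors' implementation.

```python
import math


def relief_displacement(radial_distance, offset, camera_distance):
    """Relief displacement d = r * h / H (standard photogrammetric relation).

    radial_distance: r, distance of the image point from the nadir point (pixels)
    offset:          h, depth of the feature relative to the facade plane (m)
    camera_distance: H, distance from the camera to the facade plane (m)
    """
    if camera_distance <= 0:
        raise ValueError("camera_distance must be positive")
    return radial_distance * offset / camera_distance


def viewing_angle_deg(camera_axis, facade_axis):
    """Angle in degrees between the camera optical axis and the facade axis.

    Both arguments are 3D direction vectors. facade_axis is assumed to be
    perpendicular to the facade plane and to point from the camera side
    toward the facade, so a head-on image gives an angle of 0 degrees.
    """
    norm_c = math.sqrt(sum(c * c for c in camera_axis))
    norm_f = math.sqrt(sum(f * f for f in facade_axis))
    if norm_c == 0 or norm_f == 0:
        raise ValueError("direction vectors must be non-zero")
    dot = sum(c * f for c, f in zip(camera_axis, facade_axis))
    # Clamp to [-1, 1] to guard against floating-point round-off.
    cos_angle = max(-1.0, min(1.0, dot / (norm_c * norm_f)))
    return math.degrees(math.acos(cos_angle))


def select_images(camera_axes, facade_axis, max_angle_deg):
    """Return indices of images whose viewing angle is within the threshold."""
    return [
        i
        for i, axis in enumerate(camera_axes)
        if viewing_angle_deg(axis, facade_axis) <= max_angle_deg
    ]


if __name__ == "__main__":
    # Hypothetical case: a 0.5 m extrusion seen from 20 m, 1000 px off-nadir.
    print(relief_displacement(1000.0, 0.5, 20.0))  # 25.0 pixels

    # Hypothetical camera axes: head-on, 45 degrees oblique, slightly oblique.
    axes = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (0.1, 0.0, 1.0)]
    print(select_images(axes, (0.0, 0.0, 1.0), max_angle_deg=15.0))
```

Under these assumptions, the displacement grows linearly with both the off-nadir distance and the depth offset, which is consistent with the recommendation to use images captured parallel to the TBF and a smaller angle threshold when the façade has pronounced extrusions or intrusions.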

Author Contributions

Funding

Acknowledgements

Conflicts of Interest

References

1. Baird, A.; Palermo, A.; Pampanin, S. Façade damage assessment of concrete buildings in the 2011 Christchurch earthquake. Struct. Concr. 2012, 13, 3–13.

2. Tomaževič, M. Earthquake-Resistant Design of Masonry Buildings; Series on Innovation in Structures and Construction; Imperial College Press: London, UK, 1999; ISBN 978-1-62870-085-5.

3. Jahanshahi, M.R.; Kelley, J.S.; Masri, S.F.; Sukhatme, G.S. A survey and evaluation of promising approaches for automatic image-based defect detection of bridge structures. Struct. Infrastruct. Eng. 2009, 5, 455–486.

4. Jahanshahi, M.R.; Masri, S.F.; Sukhatme, G.S. Multi-image stitching and scene reconstruction for evaluating defect evolution in structures. Struct. Health Monit. 2011, 10, 643–657.

5. Abdel-Qader, I.; Abudayyeh, O.; Kelly, M.E. Analysis of edge-detection techniques for crack identification in bridges. J. Comput. Civ. Eng. 2003, 17, 255–263.

6. O’Byrne, M.; Schoefs, F.; Pakrashi, V.; Ghosh, B. Regionally enhanced multi-phase segmentation technique for damaged surfaces. Comput. Aided Civ. Infrastruct. Eng. 2014, 29, 644–658.

7. Choi, K.; Kim, S. Morphological analysis and classification of types of surface corrosion damage by digital image processing. Corros. Sci. 2005, 47, 1–15.

8. Pakrashi, V.; Schoefs, F.; Memet, J.B.; O’Connor, A. ROC dependent event isolation method for image processing based assessment of corroded harbour structures. Struct. Infrastruct. Eng. 2010, 6, 365–378.

9. Medeiros, F.N.S.; Ramalho, G.L.B.; Bento, M.P.; Medeiros, L.C.L. On the evaluation of texture and color features for nondestructive corrosion detection. EURASIP J. Adv. Signal Process. 2010, 7, 817473.

10. Pidaparti, R.M.; Aghazadeh, B.S.; Whitfield, A.; Raob, A.; Mercier, G.P. Classification of corrosion defects in NiAl bronze through image analysis. Corros. Sci. 2010, 52, 3661–3666.

11. Ghanta, S.; Karp, T.; Lee, S. Wavelet domain detection of rust in steel bridge images. In Proceedings of the 2011 IEEE International Conference on Acoustics, Speech and Signal Processing, Prague, Czech Republic, 22–27 May 2011.

12. Moller, P.S. CALTRANS Bridge Inspection Aerial Robot Final Report; No. CA 08–0182; Office of Transportation Management: Washington, DC, USA, 2008.

13. Miller, J. Robotic Systems for Inspection and Surveillance of Civil Structures. Ph.D. Thesis, The University of Vermont, Burlington, VT, USA, 2004.

14. Kasireddy, V.; Akinci, B. Towards the integration of inspection data with bridge information models to support visual condition assessment. In Proceedings of the 2015 International Workshop on Computing in Civil Engineering, Austin, TX, USA, 21–23 June 2015.

15. Kasireddy, V.; Zou, Z.; Akinci, B.; Rosenberry, J. Evaluation and Comparison of Different Virtual Reality Environments towards Supporting Tasks Done on a Virtual Construction Site. Constr. Res. Congr. 2016, 2371–2381.

16. Yoon, H.; Shin, J.; Spencer, B.F., Jr. Structural Displacement Measurement using an Unmanned Aerial System. Comput. Aided Civ. Infrastruct. Eng. 2018, 33, 183–192.

17. Kong, X.; Li, J. Vision-Based Fatigue Crack Detection of Steel Structures Using Video Feature Tracking. Comput. Aided Civ. Infrastruct. Eng. 2018.

18. Kim, I.H.; Jeon, H.; Baek, S.C.; Hong, W.H.; Jung, H.J. Application of Crack Identification Techniques for an Aging Concrete Bridge Inspection Using an Unmanned Aerial Vehicle. Sensors 2018, 18, 1881.

19. Choi, J.; Yeum, C.M.; Dyke, S.J.; Jahanshahi, M.R.; Pena, F.; Park, G.W. Machine-Aided Rapid Visual Evaluation of Building Façade. In Proceedings of the EWSHM 2018, Manchester, UK, 10–13 July 2018.

20. Parkinson, B.W. “GPS error analysis”, Global Positioning Systems. Theory Appl. 1996, II, 469–483.

21. Yeum, C.M.; Dyke, S.J. Vision-Based Automated Crack Detection for Bridge Inspection. Comput. Aided Civ. Infrastruct. Eng. 2015, 30, 759–770.

22. Yeum, C.M.; Choi, J.; Dyke, S.J. Autonomous image localization for visual inspection of civil infrastructure. Smart Mater. Struct. 2017, 26, 12.

23. Yeum, C.M.; Choi, J.; Dyke, S.J. Automated region-of-interest localization and classification for vision-based visual assessment of civil infrastructure. Struct. Health Monit. 2018.

24. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: Cambridge, UK, 2004; ISBN 0521540518.

25. Snavely, N.; Seitz, S.; Szeliski, R. Modeling the World from Internet Photo Collections. Int. J. Comput. Vis. 2008, 80, 189–210.

26. Yeum, C.M.; Lund, A.; Dyke, S.J.; Ramirez, J.A. Automated Recovery of Documents from Earthquake Reconnaissance Images. J. Comput. Civ. Eng. 2018, accepted.

27. Kraus, K. Photogrammetry: Geometry from Images and Laser Scans; Walter de Gruyter: Berlin, Germany, 2011.

28. James, M.R.; Robson, S. Mitigating systematic error in topographic models derived from UAV and ground-based image networks. Earth Surf. Process. Landf. 2014, 39, 1413–1420.

29. Allène, C.; Pons, J.P.; Keriven, R. Seamless image-based texture atlases using multi-band blending. In Proceedings of the 2008 19th International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008.

30. Lafayette Shops–Home of Monon. Available online: http://www.monon.monon.org/bygone/lafayette2.html (accessed on 30 October 2017).

31. Google Maps. Available online: https://www.google.com/maps/@40.4465762,-86.8775183,1043m/data=!3m1!1e3 (accessed on 4 November 2017).

32. 3DR SOLO User Manual. Available online: https://3dr.com/blog/solo-specs-just-the-facts-14480cb55722/ (accessed on 3 March 2017).

33. Canon EOS Digital SLR Cameras and PowerShot Digital Cameras|Canon Online Store. Available online: https://shop.usa.canon.com/shop/en/catalog/cameras (accessed on 27 March 2017).

34. VisualSFM–A Visual Structure from Motion System. Available online: http://ccwu.me/vsfm/ (accessed on 31 May 2016).

35. Wu, C. Critical Configurations for Radial Distortion Self-Calibration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 25–32.

36. MATLAB. Version 9.3.0.713539 (R2016b). The MathWorks Inc.: Natick, MA, USA, 2017.

37. Kuzmin, Y.P.; Korytnik, S.A.; Long, O. Polygon-based true orthophoto generation. Remote Sens. Spat. Inf. Sci. 2004, 35, 529–531.

38. Jahanshahi, M.R.; Masri, S.; Padgett, C.; Sukhatme, G. An innovative methodology for detection and quantification of cracks through incorporation of depth perception. Mach. Vis. Appl. 2013, 24, 227–241.

39. Jahanshahi, M.R.; Masri, S.F. Parametric Performance Evaluation of Wavelet-Based Corrosion Detection Algorithms for Condition Assessment of Civil Infrastructure Systems. J. Comput. Civ. Eng. 2013, 27, 345–358.

40. Atha, D.J.; Jahanshahi, M.R. Evaluation of deep learning approaches based on convolutional neural networks for corrosion detection. Struct. Health Monit. 2017.

41. Jahanshahi, M.R.; Chen, F.C.; Ansar, A.; Padgett, C.W.; Clouse, D.; Bayard, D.S. Accurate and Robust Scene Reconstruction in the Presence of Misassociated Features for Aerial Sensing. J. Comput. Civ. Eng. 2017, 31.

42. Yeum, C.M.; Mohan, A.; Dyke, S.J.; Jahanshahi, M.R.; Choi, J.; Zhao, Z.; Lenjani, A.; Ramirez, J.A. Image-Based Collection and Measurements for Construction Pay Items; JTRP Technical Reports; Purdue e-Pubs: West Lafayette, IN, USA, 2017.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Choi, J.; Yeum, C.M.; Dyke, S.J.; Jahanshahi, M.R. Computer-Aided Approach for Rapid Post-Event Visual Evaluation of a Building Façade. Sensors 2018, 18, 3017. https://doi.org/10.3390/s18093017
