1. Introduction

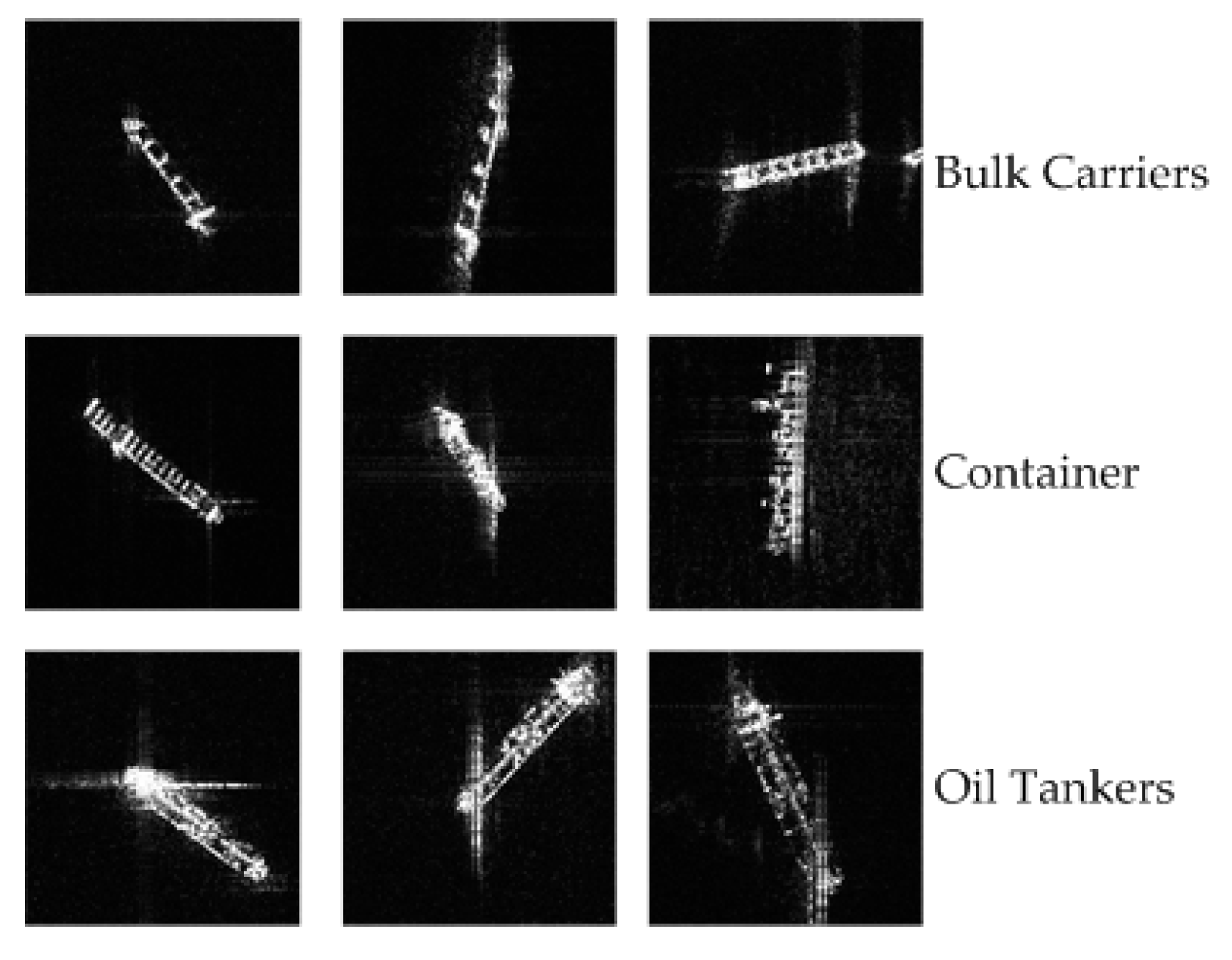

Due to their all-weather, all-day, and high-resolution advantages, synthetic aperture radar (SAR) images have recently been used for ship classification in marine surveillance. Several satellites have provided high-resolution SAR images since 2007, such as ASI’s COSMO-SkyMed, DLR’s TerraSAR-X, Japan’s ALOS-2, and China’s Gaofen-3. These images offer a spatial resolution finer than 3 m and contain rich information about the targets, such as the geometry of ships, which makes it possible to discriminate between different types of ships [1,2,3,4].

The methods used for ship classification with SAR images mainly focus on feature selection and optimized classifier techniques [1,2,3,4,5,6,7,8,9,10,11,12,13]. Currently, commonly used features are (1) geometric features, such as ship length, ratio of length to width, distribution of scattering centers, covariance coefficient, contour features [11], and ship scale; and (2) scattering features, such as 2D comb features [7], local radar cross section (RCS) density [1], permanent symmetric scatterers [12], and polarimetric characteristics [13]. For classifiers, models from machine learning can be adapted, such as support vector machines [14] and artificial neural networks [15]. In addition, many researchers have proposed classifiers that aim for high classification accuracy given the particularity of ships in SAR images, such as the analytical hierarchy process [2] and a hierarchical scheme [3]. Since these methods are highly dependent on features and classifiers, researchers have exploited several strategies to ease feature selection and classifier optimization, such as hierarchical feature selection [6], multiple kernels to combine various features [9], and joint feature and classifier selection [8].

Since Hinton [16] integrated the single-layer restricted Boltzmann machine into a deep neural network, deep learning, which automatically learns discriminative features, has achieved enormous success in object classification, object detection, and semantic segmentation of ordinary RGB images [17]. Deep-learning models are also gradually being applied to SAR images [18,19,20]. Zhu et al. [18] reviewed the potential of deep learning when applied to remote sensing and gave a list of deep-learning materials. Zhang et al. [19] surveyed perspectives for the application of deep learning to remote sensing, especially in hyperspectral communities. Nogueira et al. [20] presented three methods for using six popular ConvNets on two optical datasets and one multispectral dataset and demonstrated that the method combining fine-tuned features with an SVM classifier achieved the best results. Bentes et al. [21] explored convolutional neural networks for ship classification with TerraSAR-X images. The benefit of deep learning is that, unlike feature selection and optimized classifiers, it automatically learns the representation of SAR images and provides an end-to-end scheme for a given application without human interference [22], which saves the time otherwise spent on feature extraction, feature selection, and classifier optimization. Based on these advantages, convolutional neural networks (CNNs) are adopted in this paper.

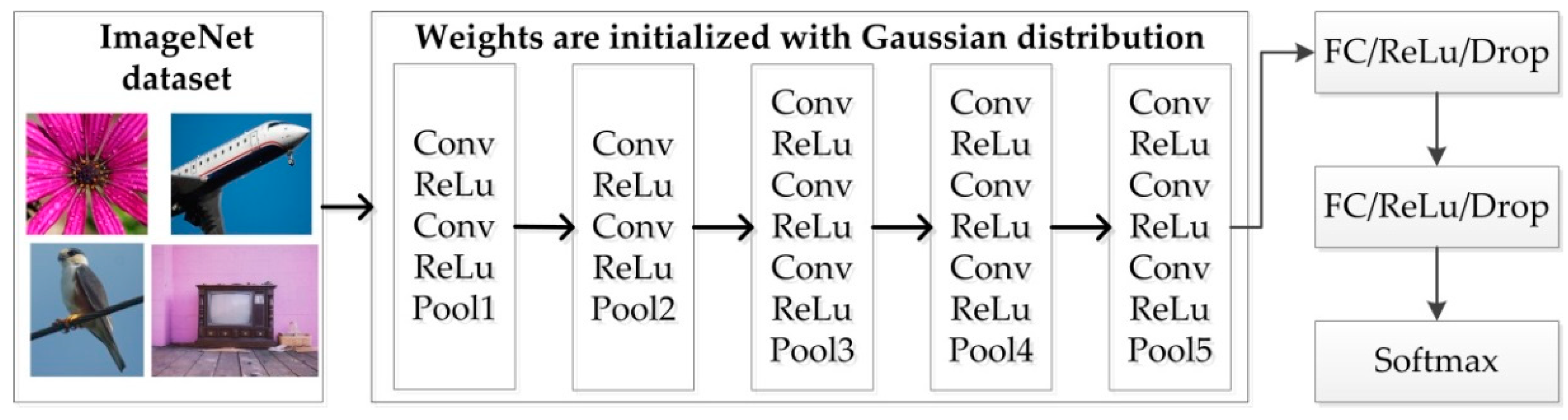

One of the major bottlenecks when applying deep learning is that it needs a considerably large training dataset [

22]. Since this volume of labeled data is time consuming and almost impossible to obtain, there are two methods to address small datasets during training: fine tuning and transfer learning [

22]. Transfer learning and fine tuning need the model to be pretrained on a large portion of the dataset. Currently, the widely used datasets from natural images, such as ImageNet [

23] or Coco [

24]. However, by considering the difference between SAR images and natural images (e.g., imaging mechanisms and target information [

25,

26]), features extracted from natural images via the pretrained model are not suitable for SAR images. Thus, it is imperative to use the SAR images to modify the weights of the pretrained model. Transfer learning treats the learned model as a feature extractor, as it changes the last few layers and only trains the parameters in the modified layers [

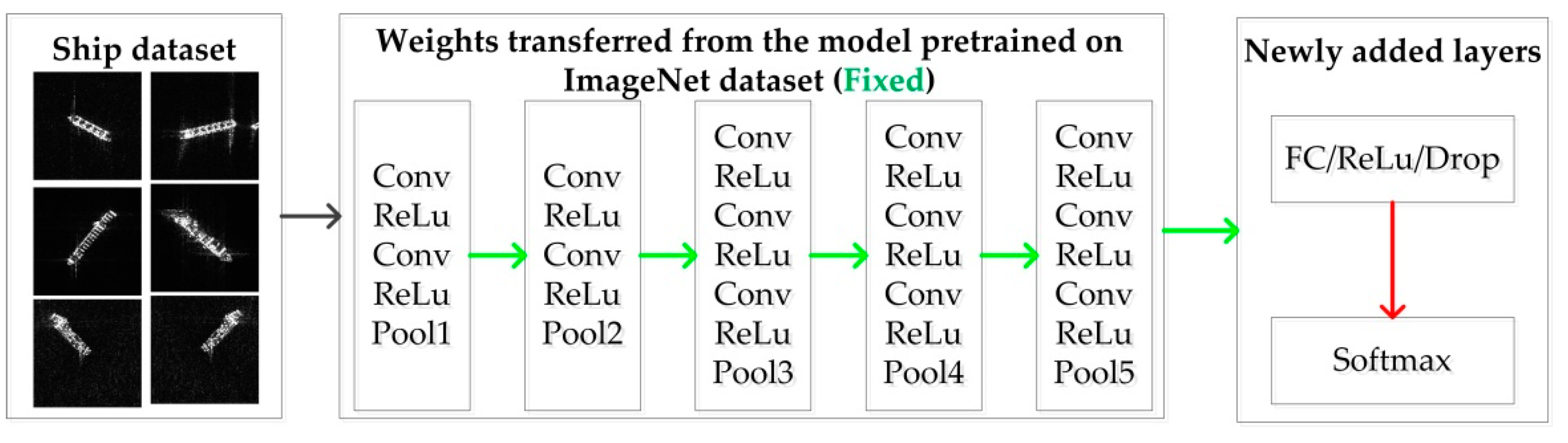

27]. Unlike transfer learning, fine tuning takes the weights of trained models for initialization, modifies the top layers, and then trains the model with the target dataset. Therefore, based on the above analysis, fine tuning is adapted to train the ship classification model.
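The difference between the two training methods can be illustrated with a minimal sketch. It is not the VGG16 pipeline of this paper: the one-parameter "backbone" and "head", the toy target data, and the values `PRETRAINED_BASE`, `lr`, and `epochs` are all hypothetical and chosen only to make the contrast visible. Under transfer learning the pretrained backbone weight is frozen and only the new head is trained; under fine tuning the same pretrained weight is used for initialization and is then updated together with the head.

```python
import math

def train(base_w, head_w, data, train_base, lr=0.05, epochs=500):
    """SGD on squared error for the toy model y_hat = head_w * tanh(base_w * x)."""
    for _ in range(epochs):
        for x, y in data:
            h = math.tanh(base_w * x)            # "backbone" feature
            err = head_w * h - y                 # prediction error
            grad_head = err * h
            grad_base = err * head_w * (1.0 - h * h) * x
            head_w -= lr * grad_head             # the new head is always trained
            if train_base:                       # fine tuning also updates the backbone
                base_w -= lr * grad_base
    return base_w, head_w

def mse(base_w, head_w, data):
    """Mean squared error of the toy model on the given data."""
    return sum((head_w * math.tanh(base_w * x) - y) ** 2 for x, y in data) / len(data)

# Hypothetical pretrained backbone weight and a small target dataset whose
# ideal backbone weight (1.5) differs from the pretrained one (0.5).
PRETRAINED_BASE = 0.5
target = [(i / 4.0, 2.0 * math.tanh(1.5 * i / 4.0)) for i in range(-8, 9)]

# Transfer learning: frozen backbone, fresh head.
tl_base, tl_head = train(PRETRAINED_BASE, 0.0, target, train_base=False)
# Fine tuning: backbone initialized from the pretrained weight, then updated.
ft_base, ft_head = train(PRETRAINED_BASE, 0.0, target, train_base=True)

print("transfer learning: base =", tl_base, "mse =", mse(tl_base, tl_head, target))
print("fine tuning:       base =", ft_base, "mse =", mse(ft_base, ft_head, target))
```

In this toy setting the frozen backbone cannot adapt to the target data, whereas fine tuning moves the backbone weight away from its pretrained value, which mirrors the argument for modifying pretrained weights with SAR images.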

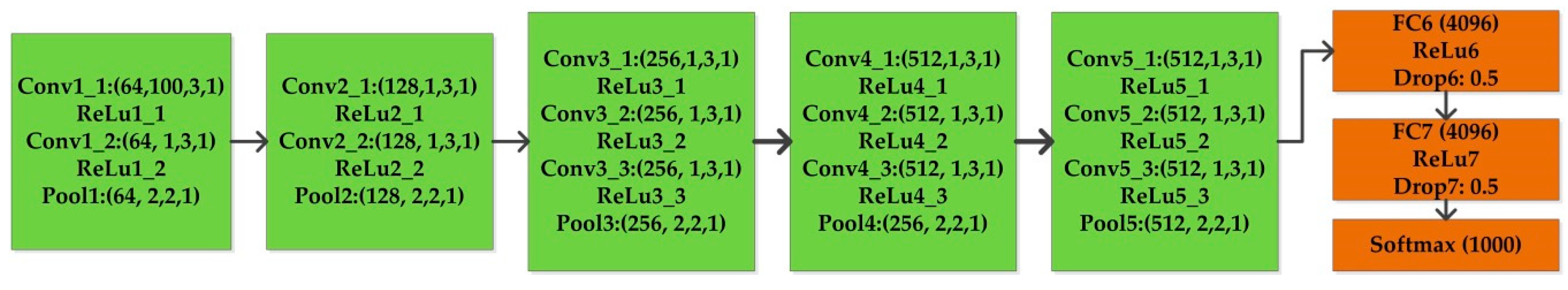

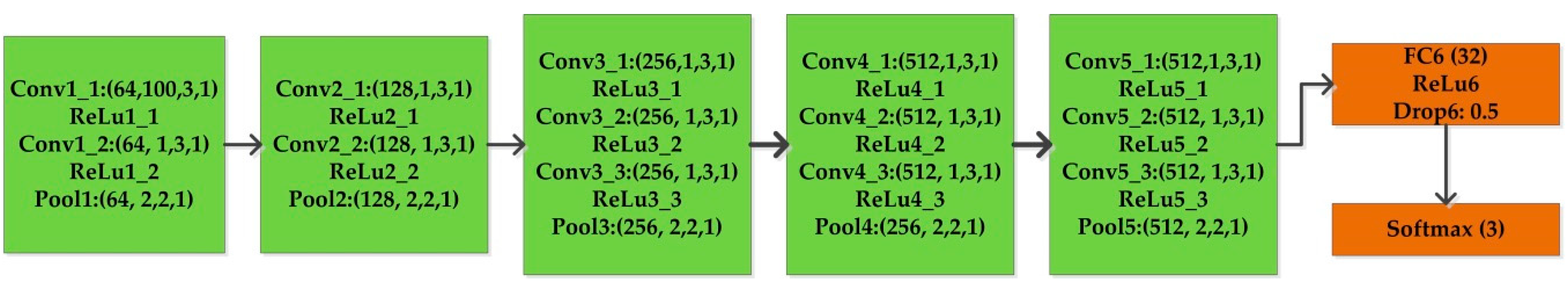

In addition, as deep-learning models become deeper, the features from the pretrained model become more abstract [28]. Since SAR images are different from natural images (e.g., range compression [26]), the higher-level features of pretrained models may not be suitable for SAR images. To mitigate this, a relatively shallow convolutional network (i.e., VGG [29]) is selected as our original model. In particular, VGG16, one variant of VGG, was a top entry in the ILSVRC-2014 competition (first in localization and second in classification) and has 23 layers. Compared with other deep-learning models, such as InceptionV3 (159 layers), VGG19 (29 layers), and Xception (126 layers), VGG16 has a relatively shallow depth. It is therefore used to construct the ship classification model in this paper. For comparison, deeper models, such as VGG19 [29], are also evaluated.

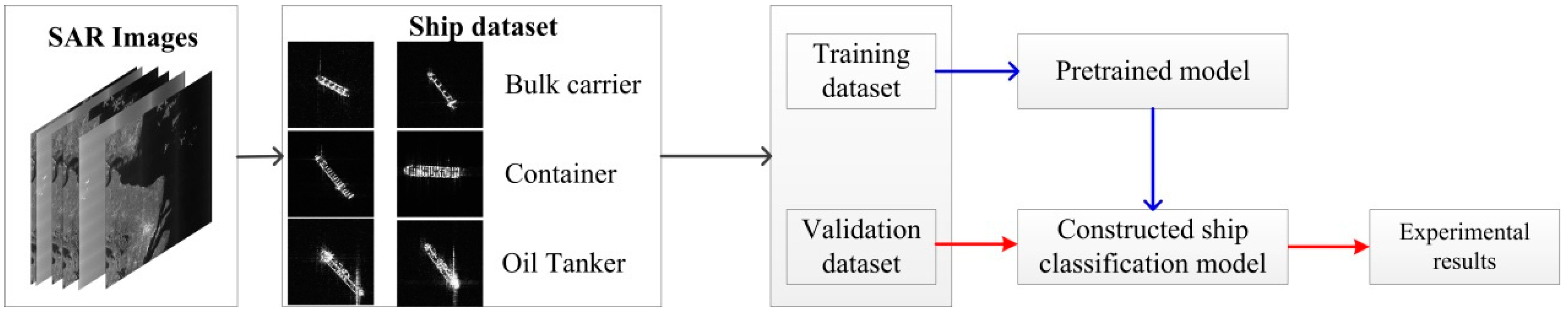

Based on the above analysis, and to better exploit the capability of deep learning to automatically learn features of structurally complex objects, VGG16 is used to construct a ship classification model in this paper. In addition, four models (VGG16, VGG19, Xception [30], and InceptionV3 [31]) and two training methods (transfer learning and fine tuning) are evaluated individually. This paper focuses on the application of convolutional neural networks (CNNs) to ship classification in high-resolution SAR images using a small dataset. Compared to feature-based methods, CNNs automatically learn the discriminative features without human interference. To address the limited dataset when training the model, fine tuning is proposed to train the model with consideration of SAR characteristics.

This paper is organized as follows. Section 2 introduces the materials, the background of VGG16, and our proposed ship classification model, and also presents the workflow of our experiments, especially our training scheme with small datasets. Section 3 presents our experimental results and analysis. Section 4 and Section 5 are the discussion and conclusions of this paper, respectively.

4. Discussion

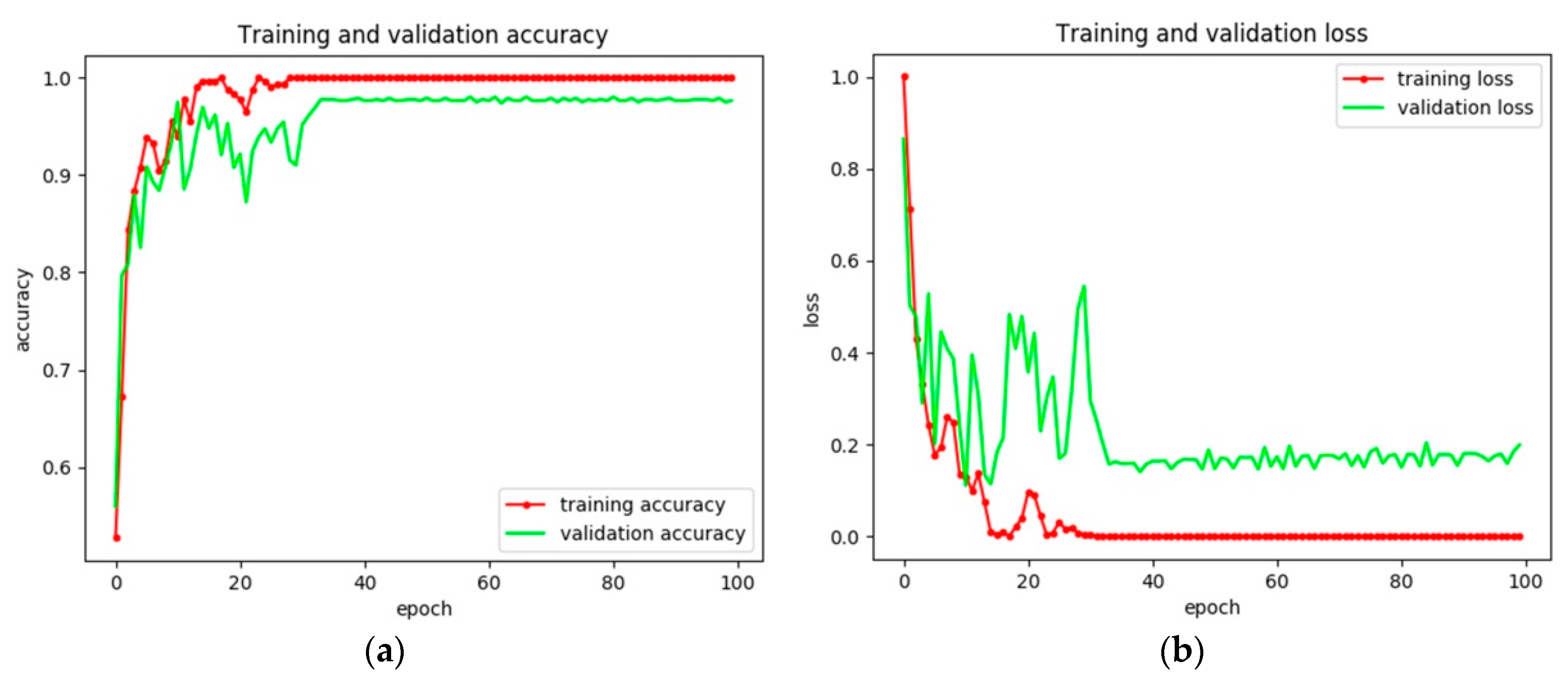

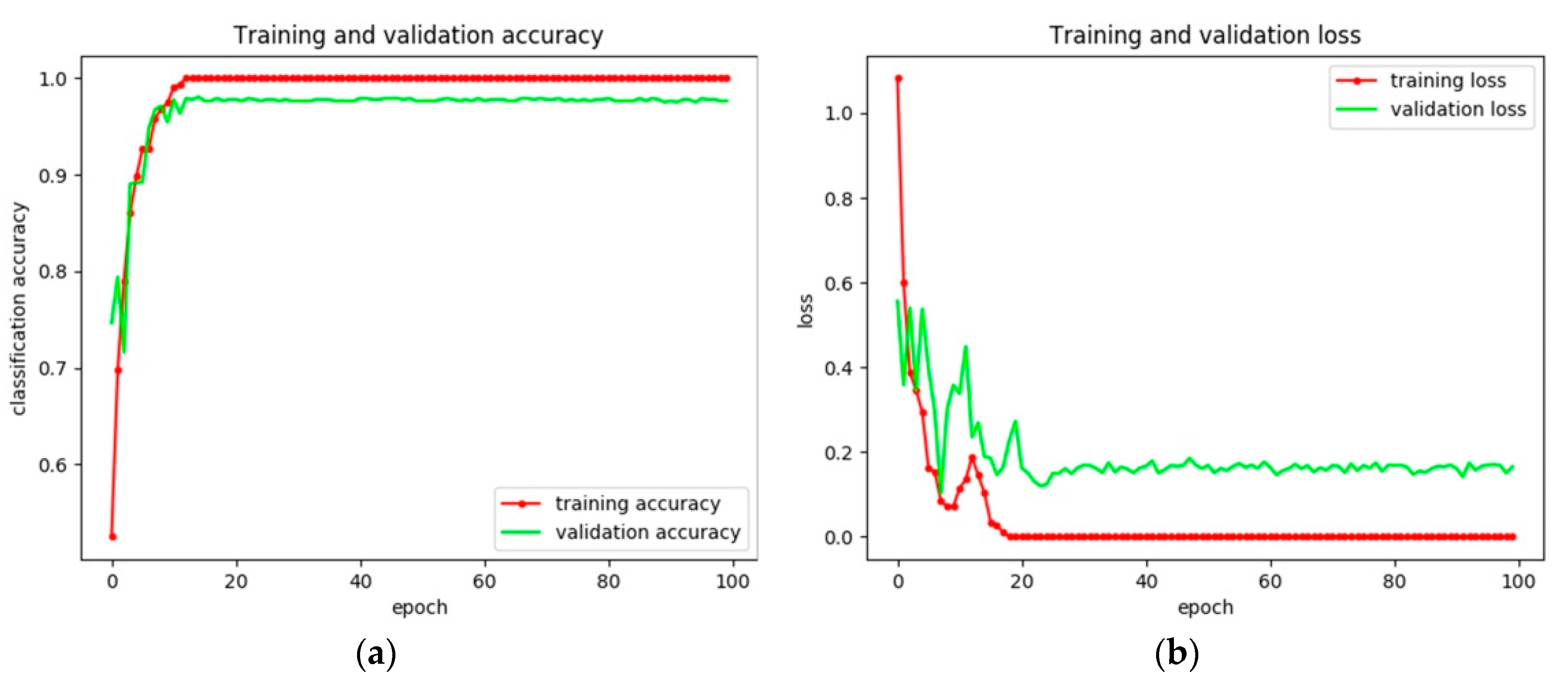

Since the constructed ship dataset is small, cross validation is important to evaluate the performance of the classifier. In this paper, 5-fold cross validation is used.
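As an illustration of the splitting step, the following minimal sketch partitions sample indices into five folds so that every sample is used for validation exactly once. The function name `k_fold_indices`, the sample count, and the seed are hypothetical; the sketch also does not stratify by ship class, which the actual experiments may or may not have done.

```python
import random

def k_fold_indices(n_samples, k=5, seed=0):
    """Split sample indices into k (train, validation) pairs."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Fold i takes every k-th shuffled index, so fold sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for i in range(k):
        val = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, val))
    return splits

# Hypothetical dataset size, used only to show the resulting fold sizes.
splits = k_fold_indices(n_samples=23, k=5)
for fold_id, (train_idx, val_idx) in enumerate(splits):
    print(f"fold {fold_id}: {len(train_idx)} training, {len(val_idx)} validation samples")
```

The model is then trained on each training split and scored on the corresponding validation split, giving one accuracy value per fold.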

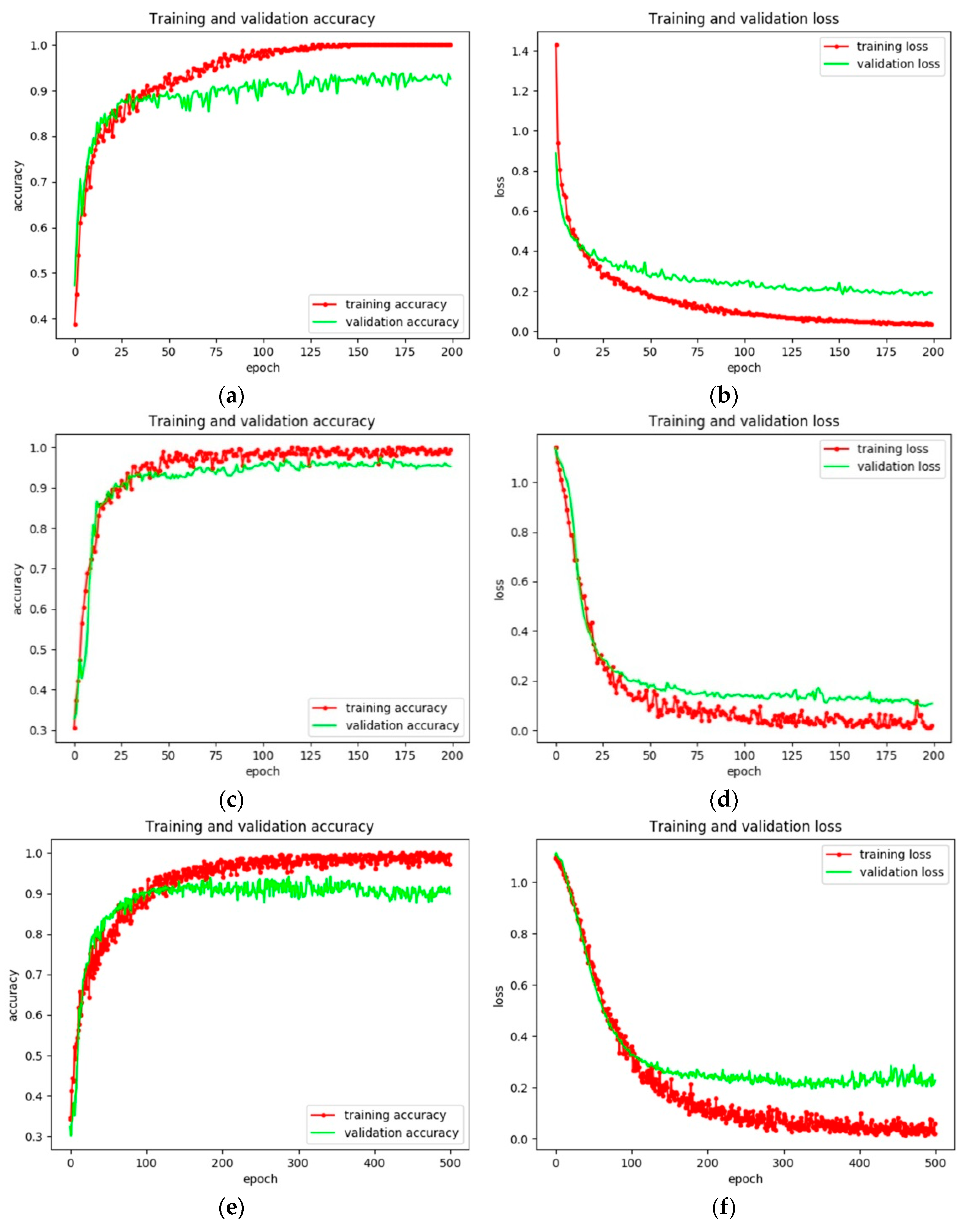

Table 11 lists the cross validation accuracy for transfer learning and fine tuning of VGG16 and for fine tuning of VGG19. Compared with transfer learning, fine tuning achieves a higher average classification accuracy and a lower standard deviation. This is because fine tuning also modifies the transferred weights, which may make the learned features more suitable for SAR images.
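The two summary statistics compared here can be computed from the per-fold accuracies as in the short sketch below. The accuracy values are placeholders for illustration only and are not the values of Table 11; the use of the sample standard deviation (rather than the population one) is also an assumption.

```python
from statistics import mean, stdev

def summarize_folds(accuracies):
    """Return (mean, sample standard deviation) of per-fold accuracies."""
    return mean(accuracies), stdev(accuracies)

# Placeholder per-fold accuracies, NOT results from this paper.
placeholder_runs = {
    "method_a": [0.80, 0.90, 0.70, 0.85, 0.75],
    "method_b": [0.88, 0.90, 0.86, 0.89, 0.87],
}
for name, accs in placeholder_runs.items():
    avg, sd = summarize_folds(accs)
    print(f"{name}: mean = {avg:.3f}, std = {sd:.3f}")
```

A higher mean together with a lower standard deviation indicates that a training method is both more accurate and more stable across folds.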

Through the above experiments and analysis, it can be seen that each of the four models has its own advantages. The model based on VGG16 achieves the best F1 score and the best observations of oil tankers. The model based on VGG19 has the best observations of bulk carriers. The model based on InceptionV3 has the best precision for oil tankers and the best observations of containers. The model based on Xception has the best precision for containers and the best observations of oil tankers. It will be our future goal to adapt ensemble learning [41] to take advantage of these models and achieve the best performance. However, according to McNemar’s test, the four models (VGG16, VGG19, Xception, and InceptionV3), with the exception of Xception, show no significant difference in ship classification. This may be characteristic of this dataset, and more ship classification datasets are needed to verify this in the future. In addition, due to the differences between SAR images and optical images, large SAR image datasets will be constructed to better exploit the methodology of deep learning. Future research will be conducted on models pretrained with SAR images, which may show the benefits of transfer learning, and on combining inherent SAR characteristics (e.g., statistical distributions) with convolutional neural networks to enhance ship classification results. Although fine tuning achieves promising results, it also leads to overfitting of the present model. Enlarging the dataset is likely to alleviate this problem.
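For readers unfamiliar with McNemar’s test, the following is a minimal sketch of how two classifiers can be compared on the same test samples. It assumes the common chi-square form with continuity correction; the exact variant used in the experiments is not stated here, and the function name and toy labels are hypothetical.

```python
import math

def mcnemar_test(y_true, pred_a, pred_b):
    """McNemar's test with continuity correction on paired predictions.

    Returns (b, c, statistic, p_value), where b counts samples that only
    model A classified correctly and c counts samples that only model B
    classified correctly.
    """
    b = sum(1 for t, a, m in zip(y_true, pred_a, pred_b) if a == t and m != t)
    c = sum(1 for t, a, m in zip(y_true, pred_a, pred_b) if a != t and m == t)
    if b + c == 0:
        return b, c, 0.0, 1.0  # the models never disagree on correctness
    statistic = (abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return b, c, statistic, p_value

# Toy labels for illustration only, not data from this paper.
y_true = [1] * 12
pred_a = [1] * 10 + [0] * 2
pred_b = [1, 1] + [0] * 8 + [1, 1]
b, c, stat, p = mcnemar_test(y_true, pred_a, pred_b)
print(f"b = {b}, c = {c}, statistic = {stat:.3f}, p = {p:.3f}")
```

Only the samples on which the two models disagree enter the statistic, so two models with different accuracies can still show no significant difference when the number of discordant samples is small, as may happen with a small dataset.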