Sensorless PV Power Forecasting in Grid-Connected Buildings through Deep Learning

Abstract

1. Introduction

2. Related Work

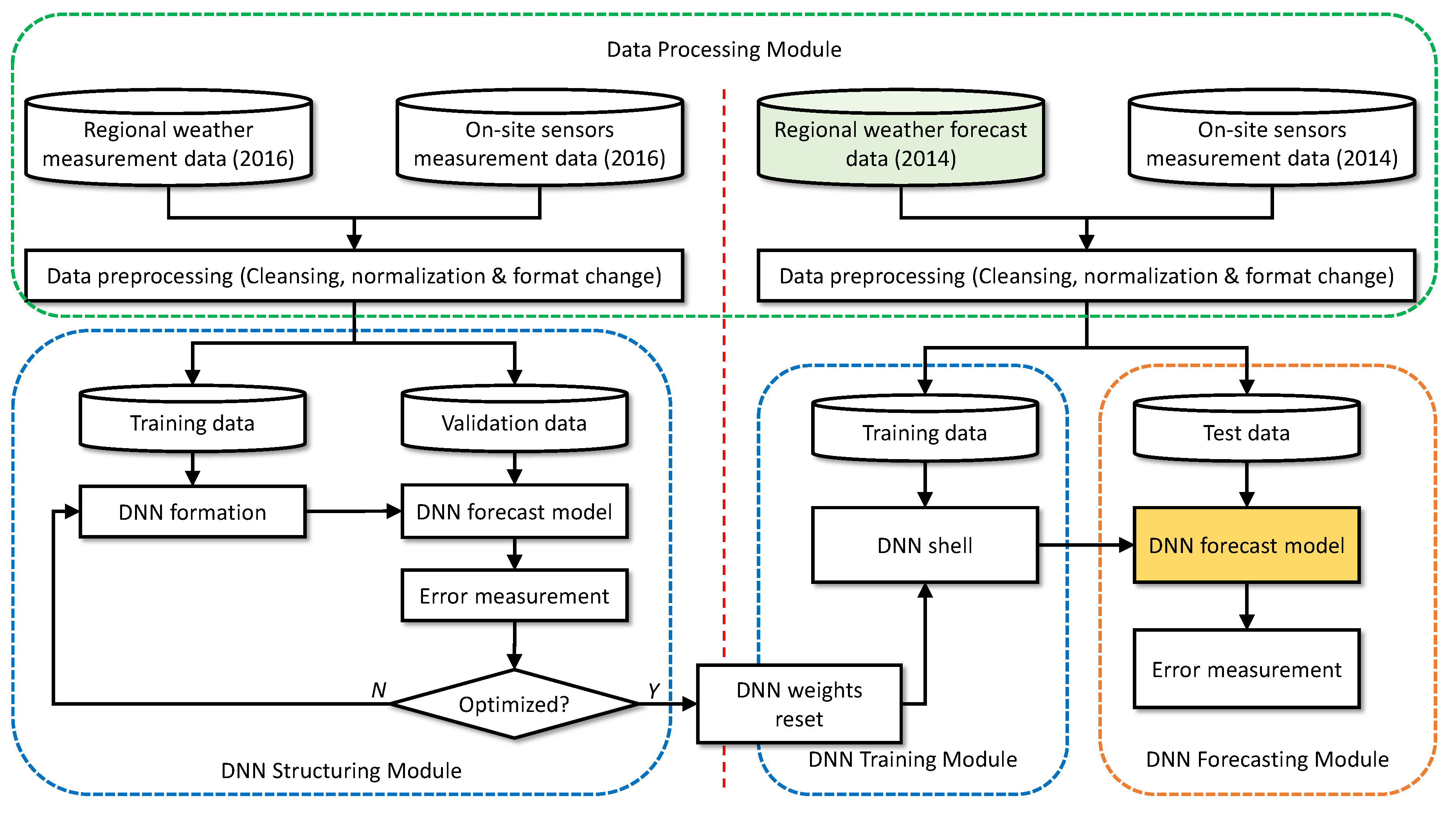

3. Materials and Methods

3.1. Historical Data

The 2014 data set consists of:

- Weather forecast data posted every three hours by the Korea Meteorological Administration (KMA), with a forecast horizon of up to 67 h, collected during 7:00 a.m.–6:00 p.m. only

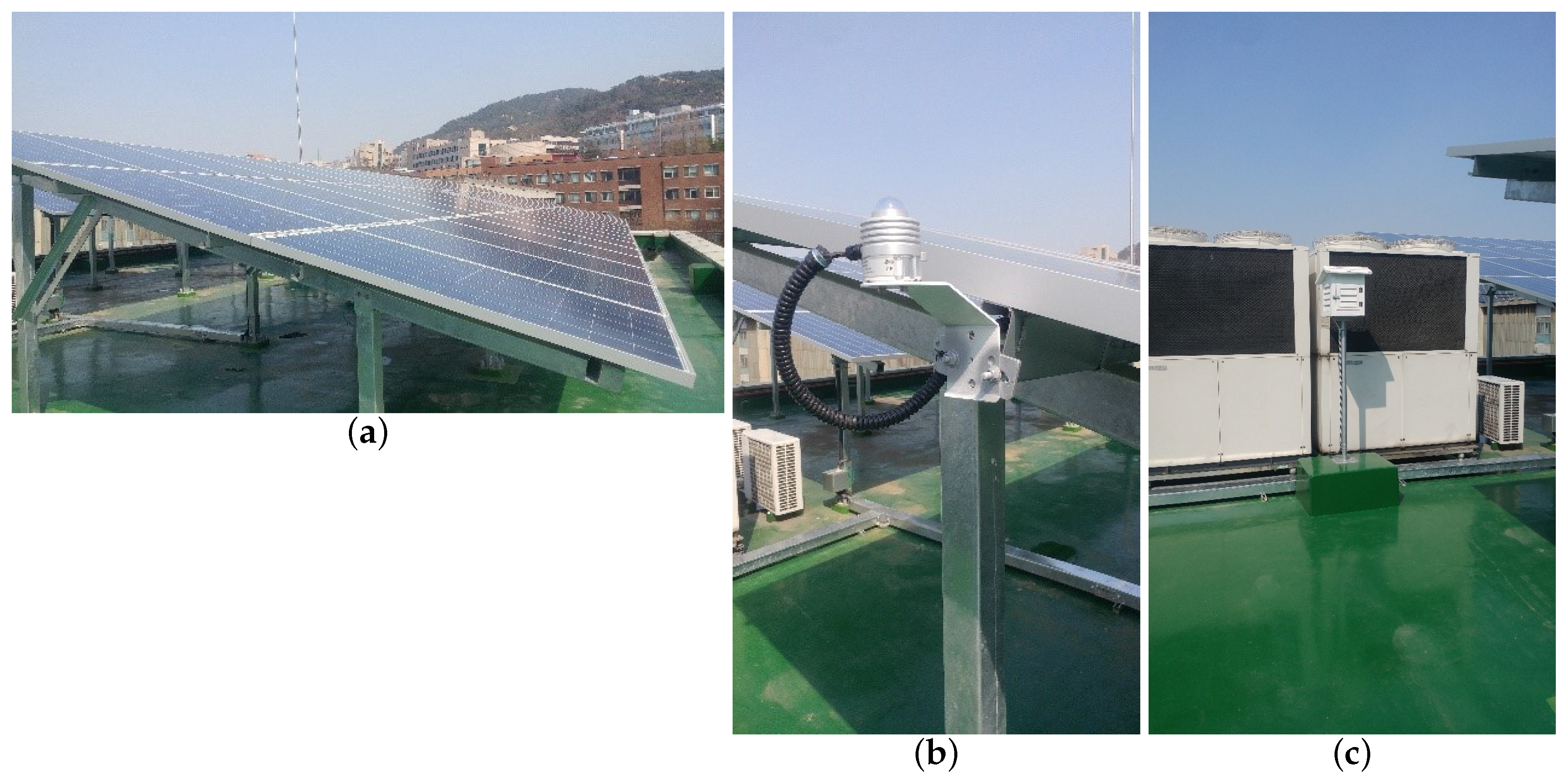

- On-site temperature, humidity, and solar radiation sensor measurement data from the installation (see Figure 1) during the same period

- PV power output during the same period

The 2016 data set consists of:

- Hourly weather measurement data from the KMA

- On-site temperature, humidity, and solar radiation sensor measurement data from the installation during the same period

- PV power output during the same period
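The forecast data are restricted to daytime hours (7:00 a.m.–6:00 p.m.) and to lead times of at most 67 h. A minimal sketch of that filtering follows. The record type and its field names are hypothetical, hour 18 is treated as inside the window, and keeping the most recently issued forecast when several cover the same hour is an assumed policy that the text does not specify.

```python
from datetime import datetime, timedelta
from typing import NamedTuple


# Hypothetical record layout; field names are illustrative, not the KMA schema.
class ForecastRecord(NamedTuple):
    issued_at: datetime   # when the forecast was posted
    target: datetime      # the hour the forecast refers to
    temperature_c: float  # one example forecast variable


MAX_HORIZON = timedelta(hours=67)  # longest lead time stated in the text
DAY_START, DAY_END = 7, 18         # 7:00 a.m. to 6:00 p.m., both ends inclusive (assumed)


def is_usable(rec: ForecastRecord) -> bool:
    """Keep daytime targets whose lead time lies within the 67 h horizon."""
    lead = rec.target - rec.issued_at
    in_window = DAY_START <= rec.target.hour <= DAY_END
    return in_window and timedelta(0) <= lead <= MAX_HORIZON


def latest_forecasts(records):
    """For each target hour, keep the most recently issued usable forecast (assumed policy)."""
    best = {}
    for rec in records:
        if not is_usable(rec):
            continue
        current = best.get(rec.target)
        if current is None or rec.issued_at > current.issued_at:
            best[rec.target] = rec
    return best
```

A fresher forecast usually has a smaller error, which is why this sketch prefers the latest issue; other selection rules (for example, a fixed lead time) would be equally easy to express.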

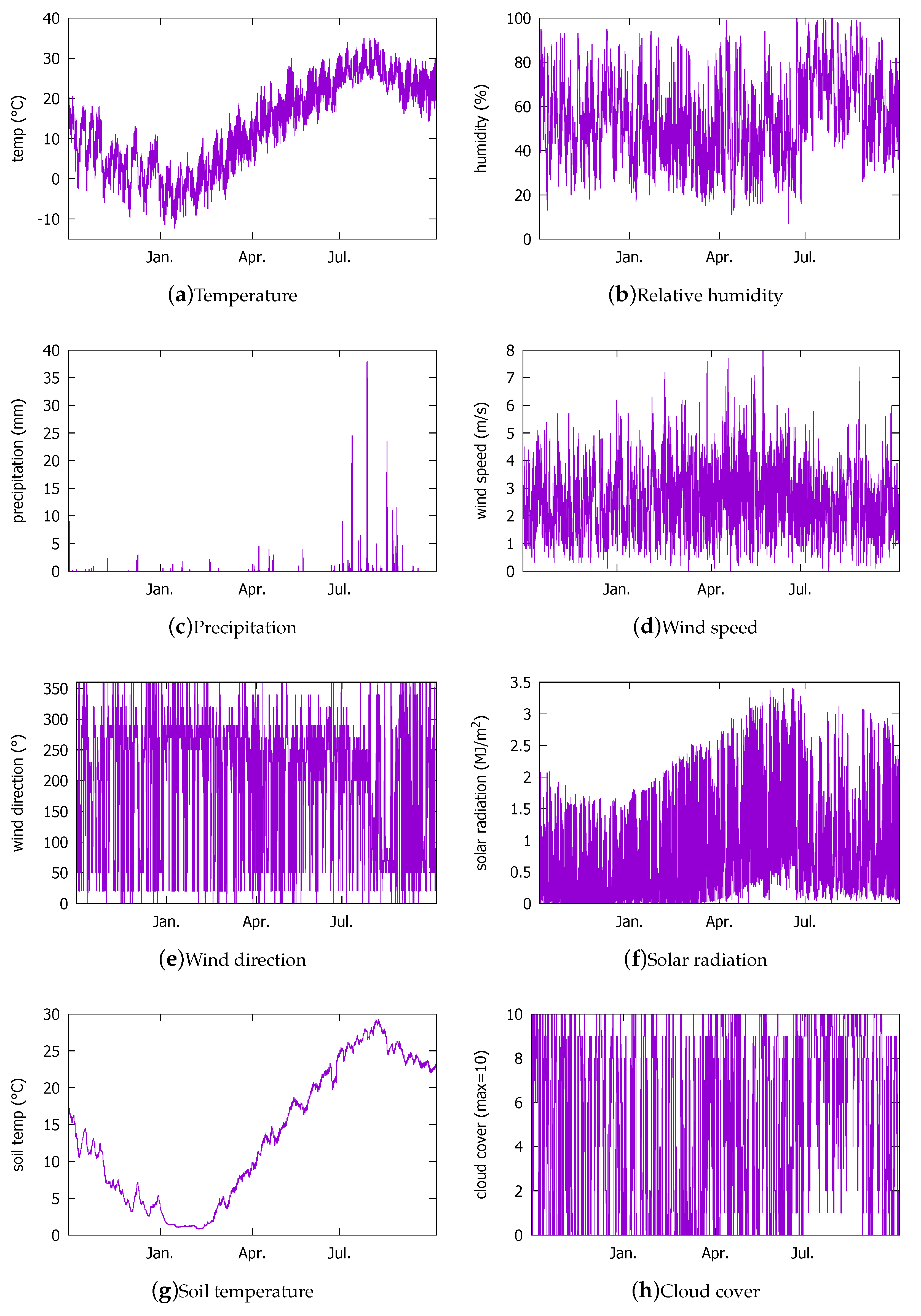

3.1.1. Weather Measurement Data from the KMA (2016)

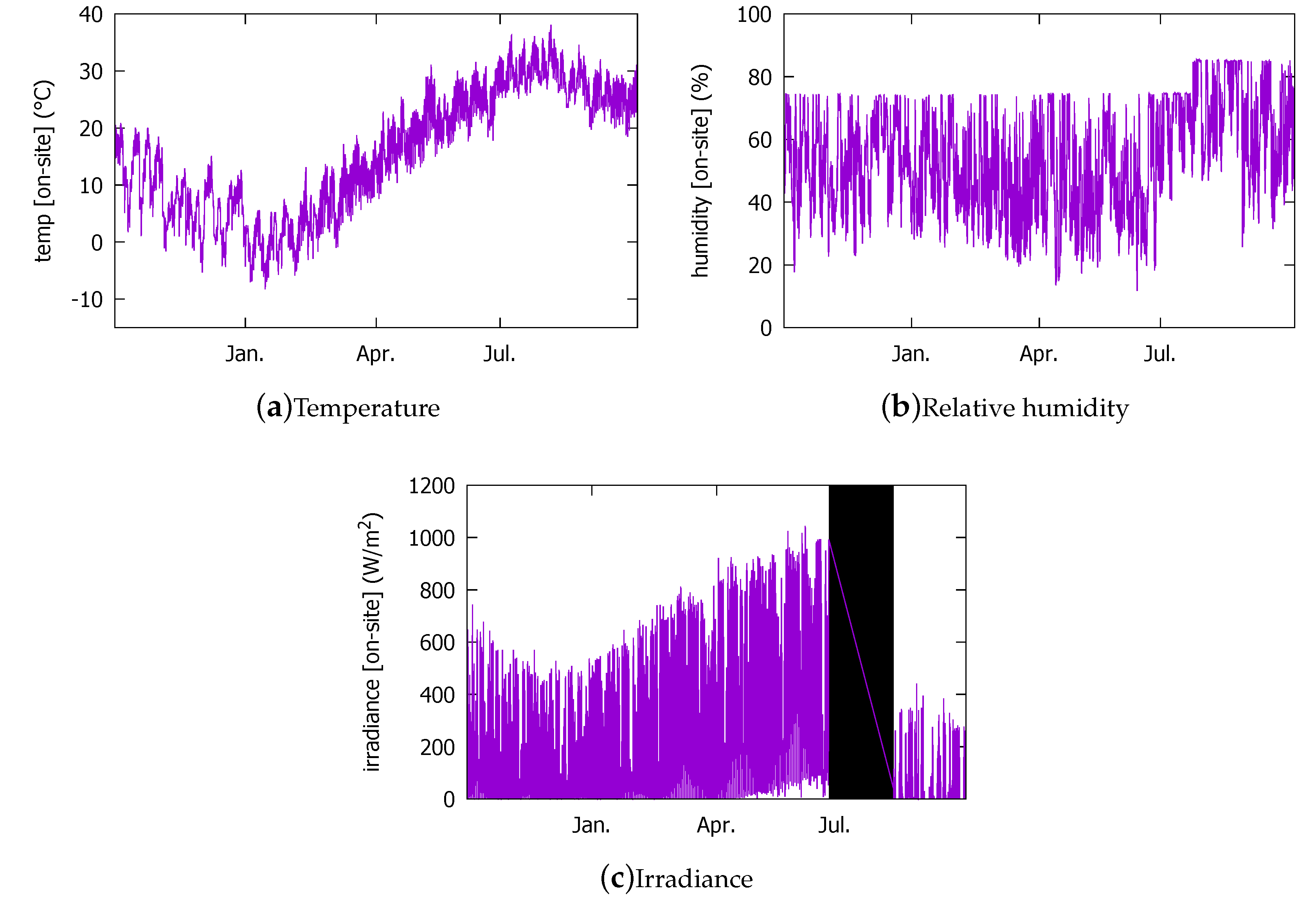

3.1.2. Measured Data from the On-Site Sensors (2016)

3.1.3. Weather Forecast Data from the KMA (2014)

3.1.4. Actual PV Power Output (2014)

3.2. Comparison of the Existing and the Proposed Approaches

3.2.1. Conventional Approach to PV Power Output Prediction

- Expected angle of incidence of the Sun, considering the latitude, longitude, and panel tilt angle,

- Forecasted amount of precipitation,

- Forecasted cloudiness index,

- $A_{surf}$: net area of the solar panel surface (m²),

- $f_{activ}$: fraction of the surface area with active solar cells,

- $\eta_{cell}$: module conversion efficiency,

- $\eta_{invert}$: direct current (DC) to alternating current (AC) conversion efficiency.
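These definitions match the "Simple" photovoltaic model of the EnergyPlus Engineering Reference, in which the AC output is P = A_surf · f_activ · G_T · η_cell · η_invert, with G_T the total solar radiation incident on the array (W/m²). Assuming the conventional system applies that model, it can be sketched as below. How G_T is estimated from the forecast inputs listed earlier (angle of incidence, precipitation, cloudiness) is not specified here, so the function simply takes it as an argument.

```python
def simple_pv_power(a_surf_m2, f_activ, g_t_w_m2, eta_cell, eta_invert):
    """AC power (W) from an EnergyPlus-style simple PV model.

    a_surf_m2  : net area of the panel surface (m^2)
    f_activ    : fraction of the surface area with active solar cells (0-1)
    g_t_w_m2   : total solar radiation incident on the array (W/m^2)
    eta_cell   : module conversion efficiency (0-1)
    eta_invert : DC-to-AC conversion efficiency (0-1)
    """
    # Reject fractions outside [0, 1]; they would indicate a units mistake.
    for name, frac in (("f_activ", f_activ), ("eta_cell", eta_cell), ("eta_invert", eta_invert)):
        if not 0.0 <= frac <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {frac}")
    if a_surf_m2 < 0 or g_t_w_m2 < 0:
        raise ValueError("area and irradiance must be non-negative")
    # The model is a plain product: every factor scales output linearly.
    return a_surf_m2 * f_activ * g_t_w_m2 * eta_cell * eta_invert
```

With hypothetical values `simple_pv_power(20.0, 0.9, 800.0, 0.06, 0.95)` the result is about 820.8 W. Because every factor is multiplicative, any error in the estimated G_T passes straight through to the power forecast.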

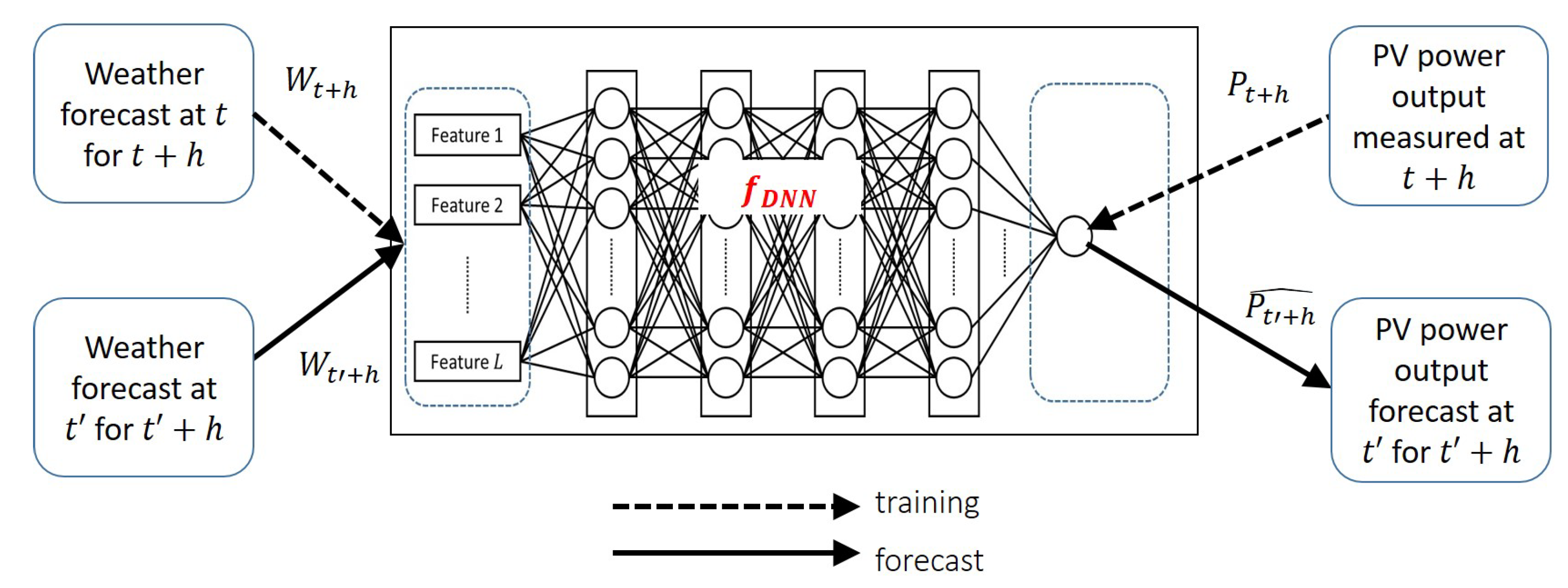

3.2.2. Proposed Deep Learning Approach

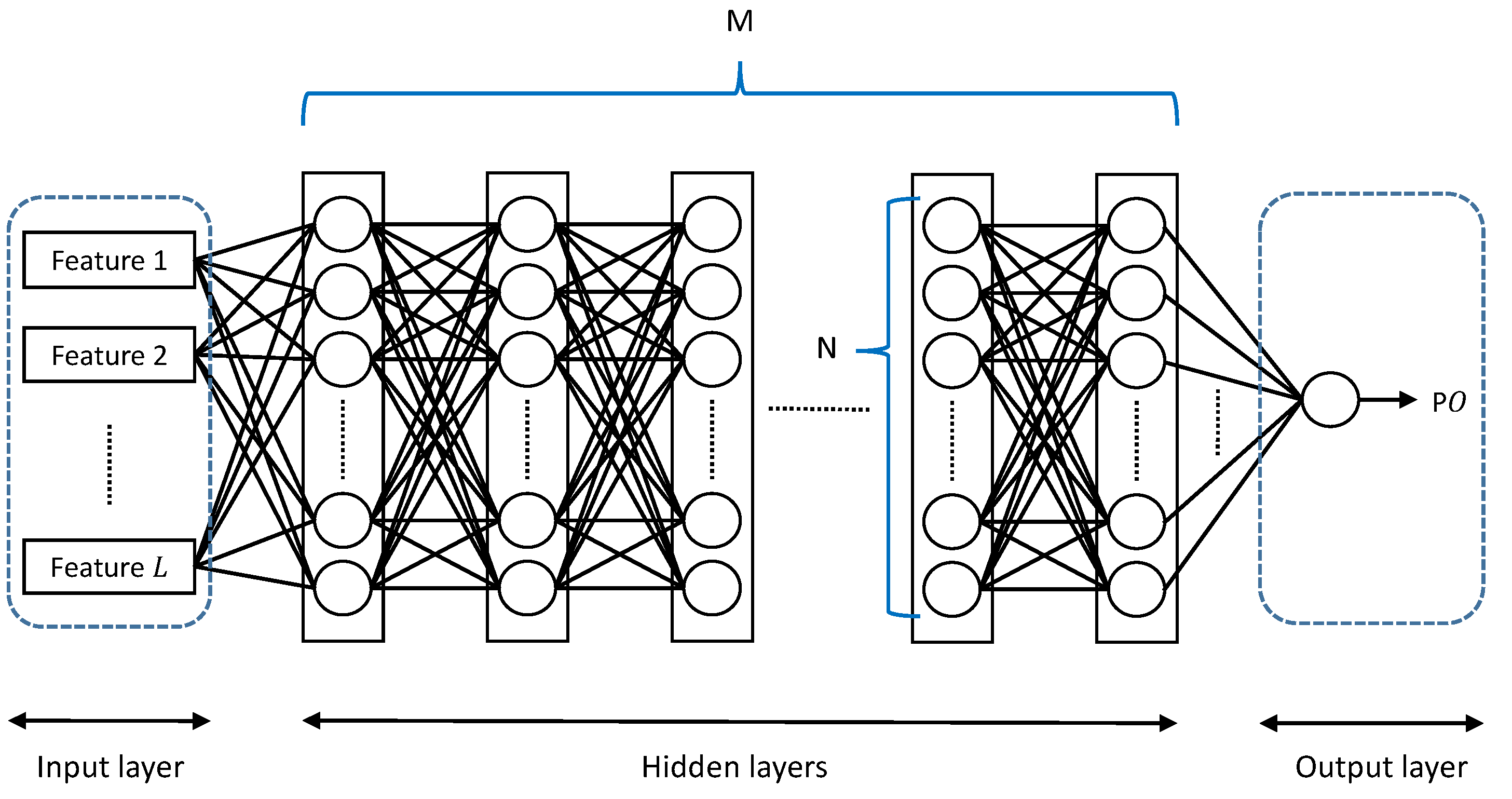

3.3. Architecting the DNN

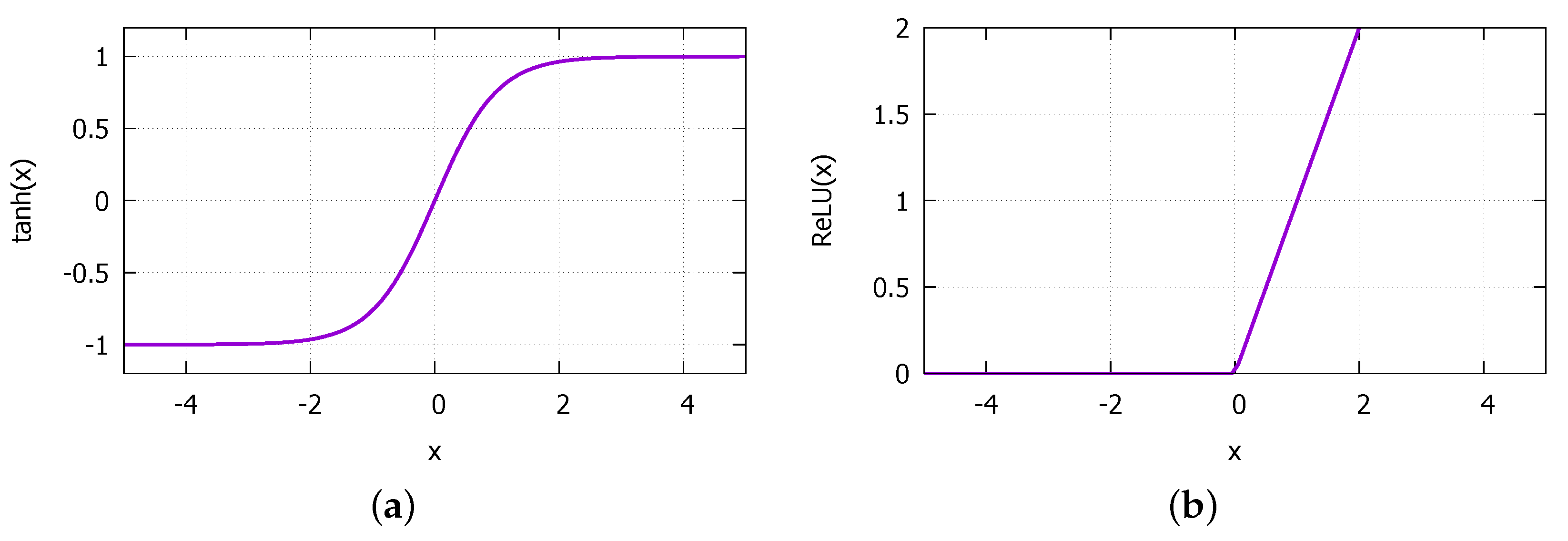

3.3.1. Selecting the Deep Learning Model

3.3.2. Searching for Appropriate Hyperparameters and Input Parameters

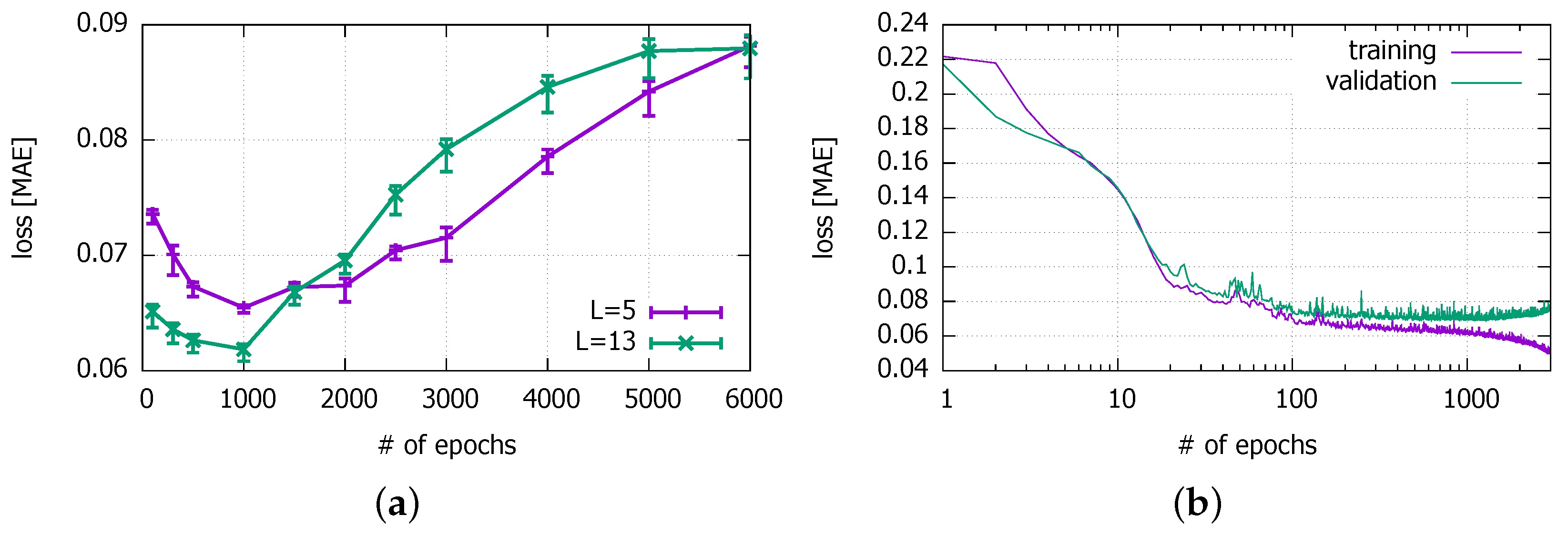

3.3.3. Training Time E

3.3.4. Number of Hidden Layers M

3.3.5. Input Features L

- Exclude the on-site temperature and humidity sensor values, in addition to the already excluded irradiance, because the 2014 weather forecast data contain no matching values.

- Keep the cloud cover. Although the 2014 data set has no identical item, the cloud cover can be approximated by its cloudiness index.

- Exclude the precipitation because it depends strongly on the relative humidity (KMA); for instance, the relative humidity is always 100% when it rains. Keep the relative humidity instead.

- Exclude the soil temperature because it is the least relevant feature.

- Keep the month and the hour because the season and the time of day directly affect the solar radiation. However, exclude the date, which is less likely to correlate with the solar radiation or other weather conditions.

- Month,

- Hour,

- Cloud cover,

- Temperature,

- Relative humidity.
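The five selected inputs could be assembled into a model input vector as sketched below. This assumes min-max scaling to [0, 1], which the text does not state. The temperature bounds are hypothetical. For the 2014 data, the cloud cover would first have to be derived from the cloudiness index by a mapping not given here.

```python
# Assumed (min, max) ranges for min-max scaling; the temperature bounds are hypothetical.
FEATURE_RANGES = {
    "month": (1, 12),
    "hour": (7, 18),               # daytime window used in the data sets
    "cloud_cover": (0, 10),        # KMA cloud-cover scale (0-10)
    "temperature_c": (-20, 40),    # hypothetical bounds
    "relative_humidity": (0, 100), # percent
}
FEATURE_ORDER = list(FEATURE_RANGES)  # dicts keep insertion order in Python 3.7+


def to_input_vector(record: dict) -> list:
    """Scale the five selected features to [0, 1], clipping out-of-range values."""
    vector = []
    for name in FEATURE_ORDER:
        lo, hi = FEATURE_RANGES[name]
        x = (record[name] - lo) / (hi - lo)
        vector.append(min(max(x, 0.0), 1.0))
    return vector
```

Any extra fields in `record` (date, precipitation, wind) are ignored, which mirrors the exclusions listed above.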

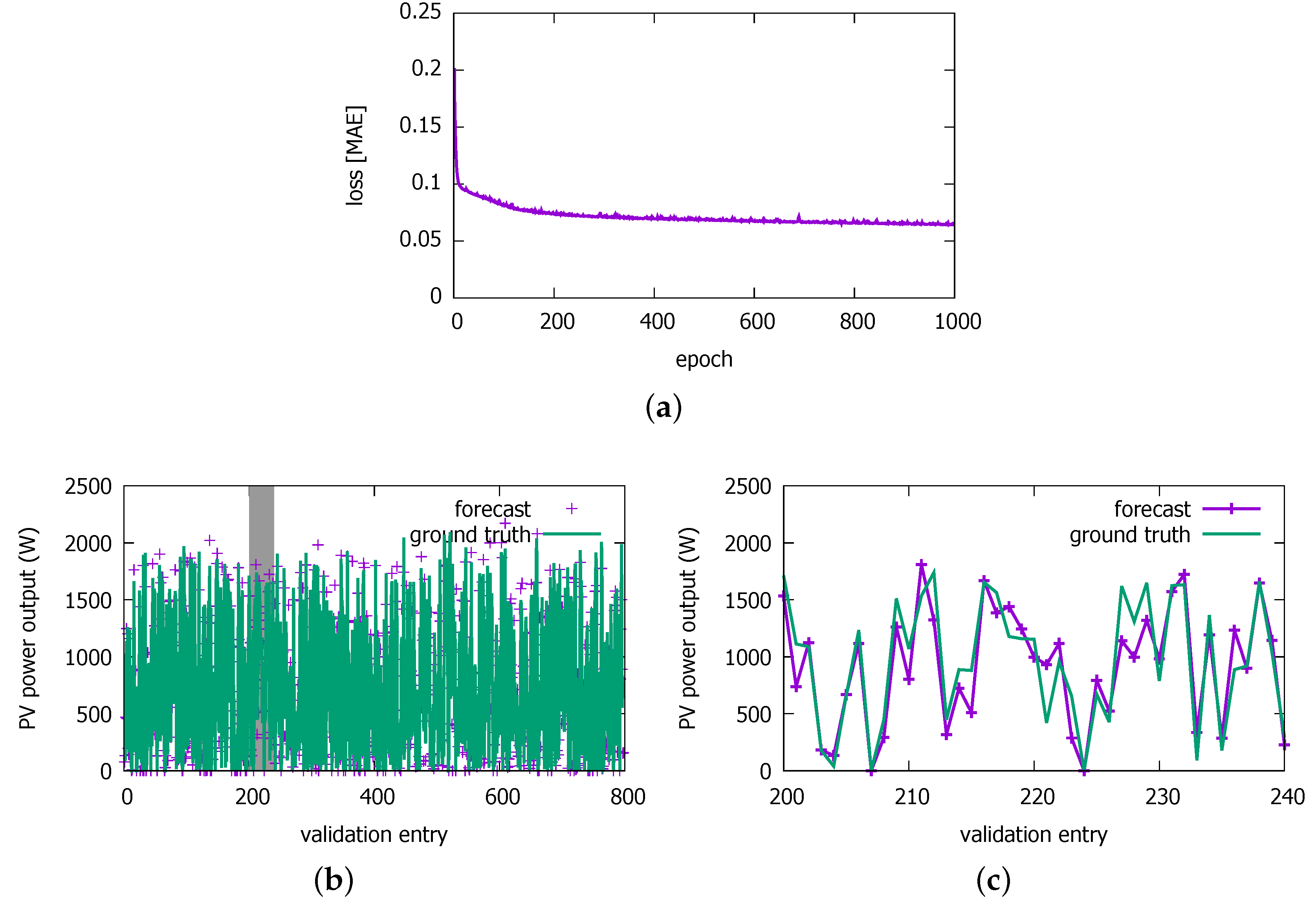

4. Results

4.1. Changes in Input and Output

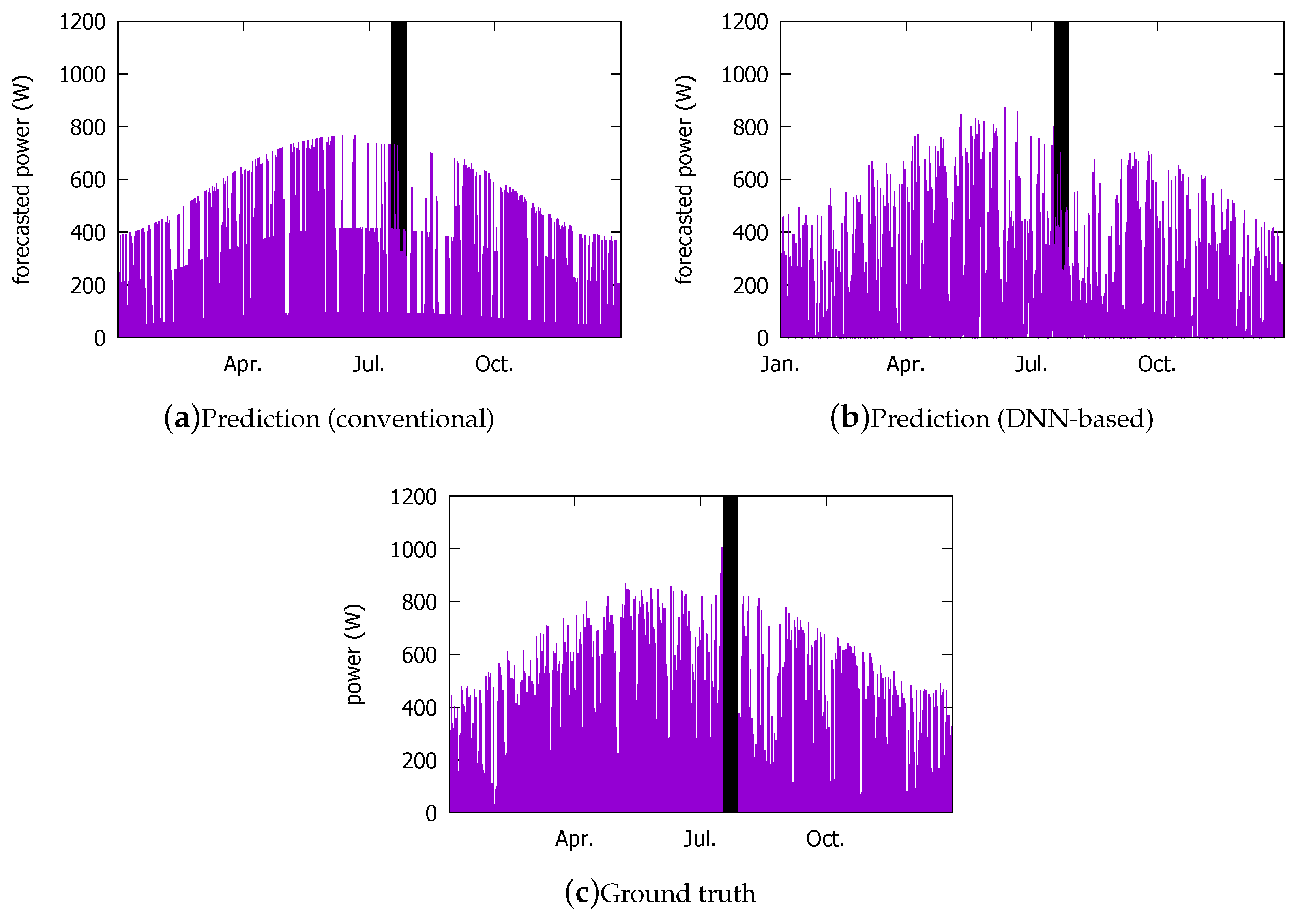

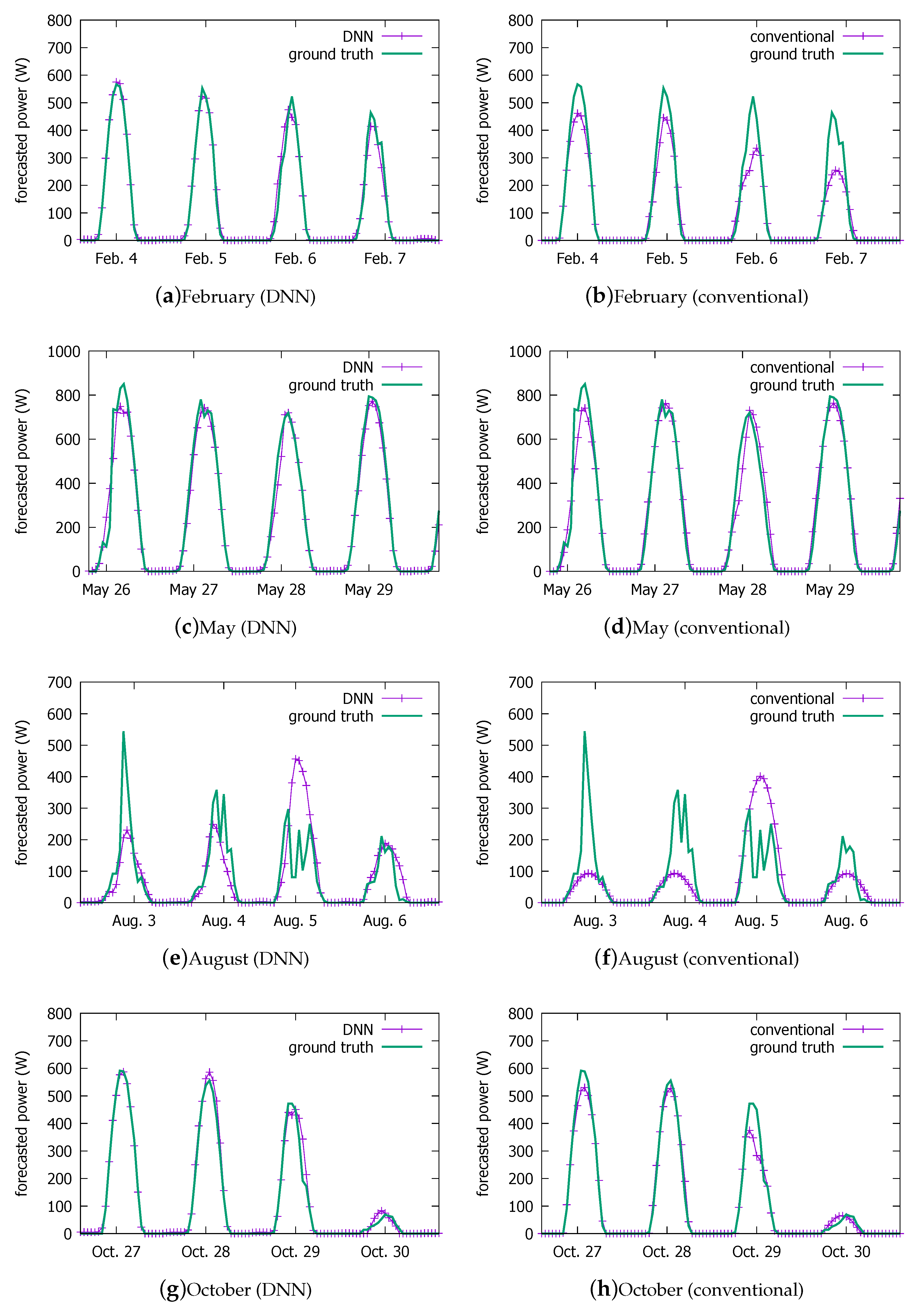

4.2. Visual Comparison

4.3. Numeric Comparison

4.3.1. Performance Measures
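The comparisons below report RMSE, MAE, AbsDev, bias, and Pearson correlation. A minimal sketch of four of these measures follows. It assumes bias is the mean of forecast minus actual, a sign convention not stated here. AbsDev is left out because its definition does not appear in this text.

```python
import math


def forecast_metrics(actual, forecast):
    """RMSE, MAE, mean bias (forecast - actual), and Pearson correlation."""
    if len(actual) != len(forecast) or len(actual) < 2:
        raise ValueError("need two equal-length series with at least two points")
    n = len(actual)
    errors = [f - a for a, f in zip(actual, forecast)]
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mae = sum(abs(e) for e in errors) / n
    bias = sum(errors) / n  # positive means over-forecasting (assumed convention)
    mean_a = sum(actual) / n
    mean_f = sum(forecast) / n
    cov = sum((a - mean_a) * (f - mean_f) for a, f in zip(actual, forecast))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_f = sum((f - mean_f) ** 2 for f in forecast)
    # Correlation is undefined when either series is constant.
    corr = cov / math.sqrt(var_a * var_f) if var_a > 0 and var_f > 0 else float("nan")
    return {"RMSE": rmse, "MAE": mae, "Bias": bias, "Correlation": corr}
```

RMSE weights large misses more heavily than MAE, so a model can win on one and not the other. Reporting both, as the tables do, shows whether an improvement comes from fewer large errors or a uniform shift.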

4.3.2. Comparison with the Conventional System

4.3.3. Seasonal Performance of the DNN-Based Forecast Model

4.3.4. Performance of the DNN-Based Forecast Model under Different Types of Weather

4.3.5. Comparison with an ANN-Based Model

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- Gaspar, R. How Solar PV is Winning over CSP. Renewable Energy World. Available online: https://www.renewableenergyworld.com/ugc/articles/2013/03/how-solar-pv-is-winning-over-csp.html (accessed on 1 August 2018).

- Sharma, N.; Sharma, P.; Irwin, D.E.; Shenoy, P.J. Predicting solar generation from weather forecasts using machine learning. In Proceedings of the 2nd IEEE International Conference on Smart Grid Communications (SmartGridComm), Brussels, Belgium, 17–20 October 2011.

- Chen, C.; Duan, S.; Cai, T.; Liu, B. Online 24-h solar power forecasting based on weather type classification using artificial neural network. Sol. Energy 2011, 85, 2856–2870.

- Inman, R.H.; Pedro, H.T.C.; Coimbra, C.F.M. Solar forecasting methods for renewable energy integration. Prog. Energy Combust. Sci. 2013, 39, 535–576.

- Diagne, M.; David, M.; Lauret, P.; Boland, J.; Schmutz, N. Review of solar irradiance forecasting methods and a proposition for small-scale insular grids. Renew. Sustain. Energy Rev. 2013, 27, 65–76.

- Monteiro, C.; Fernandez-Jimenez, L.; Ramirez-Rosado, I.; Muñoz-Jimenez, A.; Lara-Santillan, P. Short-term forecasting models for photovoltaic plants: analytical versus soft-computing techniques. Math. Probl. Eng. 2013, 2013.

- Dolara, A.; Grimaccia, F.; Leva, S.; Mussetta, M.; Ogliari, E. A physical hybrid artificial neural network for short term forecasting of PV plant power output. Energies 2015, 8, 1138–1153.

- Soman, S.; Zareipour, H.; Malik, O.; Mandal, P. A review of wind power and wind speed forecasting methods with different time horizons. In Proceedings of the IEEE North American Power Symposium (NAPS), Arlington, TX, USA, 26–28 September 2010.

- Oudjana, S.; Hellal, A.; Mahamed, I. Short term photovoltaic power generation forecasting using neural network. In Proceedings of the 11th International Conference on Environment and Electrical Engineering (EEEIC), Venice, Italy, 18–25 May 2012.

- Brown, R.G. Exponential Smoothing for Predicting Demand; Arthur D. Little: Cambridge, MA, USA, 1956.

- Huang, R.; Huang, T.; Gadh, R.; Li, N. Solar generation prediction using the ARMA model in a laboratory-level micro-grid. In Proceedings of the 3rd IEEE International Conference on Smart Grid Communications (SmartGridComm), Tainan, Taiwan, 5–8 November 2012.

- Rajagopalan, S.; Santoso, S. Wind power forecasting and error analysis using the autoregressive moving average modeling. In Proceedings of the IEEE Power & Energy Society General Meeting, Calgary, AB, Canada, 26–30 July 2009.

- Boland, J. Time Series Modelling of Solar Radiation. In Modeling Solar Radiation at the Earth’s Surface; Badescu, V., Ed.; Springer: Heidelberg, Germany, 2008; pp. 283–312. ISBN 978-3-540-77454-9.

- Box, G.; Jenkins, G.; Reinsel, G.; Ljung, G. Time Series Analysis: Forecasting and Control, 5th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2015; ISBN 978-1-118-67502-1.

- Wan Ahmad, W.; Ahmad, S.; Ishak, A.; Hashim, I.; Ismail, E.; Nazar, R. ARIMA model and exponential smoothing method: A comparison. In Proceedings of the 20th National Symposium on Mathematical Sciences: Research in Mathematical Sciences: A Catalyst for Creativity and Innovation, Putrajaya, Malaysia, 18–20 December 2012.

- Reikard, G. Predicting solar radiation at high resolutions: A comparison of time series forecasts. Sol. Energy 2009, 83, 342–349.

- Bacher, P.; Madsen, H.; Nielsen, H.A. Online short-term solar power forecasting. Sol. Energy 2009, 83, 1772–1783.

- Mori, H.; Takahashi, M. Development of GRBFN with global structure for PV generation output forecasting. In Proceedings of the IEEE Power and Energy Society General Meeting, San Diego, CA, USA, 22–26 July 2012.

- Piorno, J.R.; Bergonzini, C.; Atienza, D.; Rosing, T.S. Prediction and management in energy harvested wireless sensor nodes. In Proceedings of the 1st International Conference on Wireless Communication, Vehicular Technology, Information Theory and Aerospace & Electronic Systems Technology (VITAE), Aalborg, Denmark, 17–20 May 2009.

- Bergonzini, C.; Brunelli, D.; Benini, L. Comparison of energy intake prediction algorithms for systems powered by photovoltaic harvesters. Microelectron. J. 2010, 41, 766–777.

- Shi, J.; Lee, W.-J.; Liu, Y.; Yang, Y.; Wang, P. Forecasting power output of photovoltaic systems based on weather classification and support vector machines. IEEE Trans. Ind. Appl. 2012, 48, 1064–1069.

- Yang, X.; Ren, J.; Yue, H. Photovoltaic power forecasting with a rough set combination method. In Proceedings of the UKACC 11th International Conference on Control, Belfast, UK, 31 August–2 September 2016.

- Zhang, F.; Deb, C.; Lee, S.; Yang, J.; Shah, K. Time series forecasting for building energy consumption using weighted Support Vector Regression with differential evolution optimization technique. Energy Build. 2016, 126, 94–103.

- Cococcioni, M.; D’Andrea, E.; Lazzerini, B. 24-hour-ahead forecasting of energy production in solar PV systems. In Proceedings of the 11th International Conference on Intelligent Systems Design and Applications (ISDA), Cordoba, Spain, 22–24 November 2011.

- Mandal, P.; Madhira, S.; Meng, J.; Pineda, R. Forecasting power output of solar photovoltaic system using wavelet transform and artificial intelligence techniques. Procedia Comput. Sci. 2012, 12, 332–337.

- Mellit, A. Recurrent neural network-based forecasting of the daily electricity generation of a Photovoltaic power system. In Proceedings of the 4th International Conference on Ecological Vehicle and Renewable Energy, Monte Carlo, Monaco, 26–29 March 2009.

- Chu, Y.; Urquhart, B.; Gohari, S.M.I.; Pedro, H.T.C.; Kleissl, J.; Coimbra, C.F.M. Short-term reforecasting of power output from a 48 MWe solar PV plant. Sol. Energy 2015, 112, 68–77.

- Zhu, H.; Li, X.; Sun, Q.; Nie, L.; Yao, J.; Zhao, G. A Power Prediction Method for Photovoltaic Power Plant Based on Wavelet Decomposition and Artificial Neural Networks. Energies 2015, 9, 11.

- Zhu, H.; Lian, W.; Lu, L.; Dai, S.; Hu, Y. An Improved Forecasting Method for Photovoltaic Power Based on Adaptive BP Neural Network with a Scrolling Time Window. Energies 2017, 10, 1542.

- Yousif, J.; Kazem, H.A.; Boland, J. Predictive Models for Photovoltaic Electricity Production in Hot Weather Conditions. Energies 2017, 10, 971.

- Chupong, C.; Plangklang, B. Forecasting power output of PV grid connected system in Thailand without using solar radiation measurement. Energy Procedia 2011, 9, 230–237.

- Leva, S.; Dolara, A.; Grimaccia, F.; Mussetta, M.; Ogliari, E. Analysis and validation of 24 hours ahead neural network forecasting of photovoltaic output power. Math. Comput. Simul. 2017, 131, 88–100.

- Ramsami, P.; Oree, V. A hybrid method for forecasting the energy output of photovoltaic systems. Energy Convers. Manag. 2015, 95, 406–413.

- Yona, A.; Senjyu, T.; Funabashi, T.; Kim, C.-H. Determination Method of Insolation Prediction With Fuzzy and Applying Neural Network for Long-Term Ahead PV Power Output Correction. IEEE Trans. Sustain. Energy 2013, 4, 527–533.

- Gensler, A.; Henze, J.; Sick, B.; Raabe, N. Deep Learning for solar power forecasting—An approach using AutoEncoder and LSTM Neural Networks. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016.

- Grimaccia, F.; Leva, S.; Mussetta, M.; Ogliari, E. ANN Sizing Procedure for the Day-Ahead Output Power Forecast of a PV Plant. Appl. Sci. 2017, 7, 622.

- Ogliari, E.; Niccolai, A.; Leva, S.; Zich, R.E. Computational Intelligence Techniques Applied to the Day Ahead PV Output Power Forecast: PHANN, SNO and Mixed. Energies 2018, 11, 1487.

- Korea Meteorological Administration. Meteorological Data Open Portal (in Korean). Available online: https://data.kma.go.kr/cmmn/main.do (accessed on 28 May 2018).

- Lorenz, E.; Hurka, J.; Heinemann, D.; Beyer, H.G. Irradiance Forecasting for the Power Prediction of Grid-Connected Photovoltaic Systems. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2009, 2, 2–10.

- Yona, A.; Senjyu, T.; Funabashi, T. Application of recurrent neural network to short-term-ahead generating power forecasting for photovoltaic system. In Proceedings of the IEEE Power Engineering Society General Meeting, Tampa, FL, USA, 24–28 June 2007.

- Mellit, A.; Benghanem, M.; Hadj Arab, A.; Guessoum, A. A simplified model for generating sequences of global solar radiation data for isolated sites: Using artificial neural network and a library of Markov transition matrices approach. Sol. Energy 2005, 79, 469–482.

- Rasmussen, C.E. Gaussian Processes in Machine Learning. In Lecture Notes in Computer Science, vol 3176: Advanced Lectures on Machine Learning; Bousquet, O., von Luxburg, U., Rätsch, G., Eds.; Springer: Berlin, Germany, 2004; pp. 63–71. ISBN 978-3-540-28650-9.

- Lawrence Berkeley National Laboratory. EnergyPlus Documentation Engineering Reference. Available online: https://energyplus.net/sites/default/files/pdfs_v8.3.0/EngineeringReference.pdf (accessed on 28 May 2018).

- Skoplaki, E.; Palyvos, J.A. On the temperature dependence of photovoltaic module electrical performance: A review of efficiency/power correlations. Sol. Energy 2009, 83, 614–624.

- Liu, J.; Fang, W.; Zhang, X.; Yang, C. An Improved Photovoltaic Power Forecasting Model With the Assistance of Aerosol Index Data. IEEE Trans. Sustain. Energy 2015, 6, 434–442.

- Achleitner, S.; Kamthe, A.; Liu, T.; Cerpa, A.E. SIPS: Solar Irradiance Prediction System. In Proceedings of the 13th International Symposium on Information Processing in Sensor Networks, Berlin, Germany, 15–17 April 2014.

- Malvoni, M.; De Giorgi, M.G.; Congedo, P.M. Forecasting of PV Power Generation using weather input data-preprocessing techniques. Energy Procedia 2017, 126, 651–658.

- De Giorgi, M.G.; Congedo, P.M.; Malvoni, M. Photovoltaic power forecasting using statistical methods: impact of weather data. IET Sci. Meas. Technol. 2014, 8, 90–97.

- Dumitru, C.-D.; Gligor, A.; Enachescu, C. Solar Photovoltaic Energy Production Forecast Using Neural Networks. Procedia Technol. 2016, 22, 808–815.

- Yona, A.; Senjyu, T.; Saber, A.; Funabashi, T.; Sekine, H.; Kim, C.-H. Application of neural network to one-day-ahead 24h generating power forecasting for photovoltaic system. In Proceedings of the IEEE International Conference on Intelligent Systems Applications to Power Systems, Niigata, Japan, 5–8 November 2007.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference for Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Abdel-Nasser, M.; Mahmoud, K. Accurate photovoltaic power forecasting models using deep LSTM-RNN. Neural Comput. Appl. 2017, 1–14.

- Tetko, I.V.; Livingstone, D.J.; Luik, A.I. Neural network studies. 1. Comparison of overfitting and overtraining. J. Chem. Inf. Comput. Sci. 1995, 35, 826–833.

- Priddy, K.L.; Keller, P.E. Artificial Neural Networks—An Introduction. Tutorial Texts in Optical Engineering, vol. TT68; SPIE Press: Bellingham, WA, USA, 2005; ISBN 978-0819459879.

- Beyer, G.G.; Martinez, J.P.; Suri, M.; Torres, J.L.; Lorenz, E.; Muller, S.; Hoyer-Klick, C.; Ineichen, P. Benchmarking of radiation products. Mesor Report D.1.1.3. 2009. Available online: http://www.mesor.org/docs/MESoR_Benchmarking_of_radiation_products.pdf (accessed on 1 June 2018).

- Sfetsos, A.; Coonick, A.H. Univariate and multivariate forecasting of hourly solar radiation with artificial intelligence techniques. Sol. Energy 2000, 68, 169–178.

- Marquez, R.; Coimbra, C.F.M. Forecasting of global and direct solar irradiance using stochastic learning methods, ground experiments and the NWS database. Sol. Energy 2011, 85, 746–756.

- Pelland, S.; Remund, J.; Kleissl, J.; Oozeki, T.; De Brabandere, K. Photovoltaic and solar forecasting: State of the art. IEA PVPS Task 2013, 14, 1–36.

- Korea Meteorological Administration. Climate of Korea. Available online: https://web.kma.go.kr/eng/biz/climate_01.jsp (accessed on 22 June 2018).

| 2016 (Hourly Measurements) | Source | 2014 (Forecasts Every 3 h, up to 67 h Horizon) | Source |
|---|---|---|---|
| Month | | Month | |
| Date | | Date | |
| Hour | | Hour | |
| Temperature (°C) | KMA | Temperature (°C) | KMA |
| - | | Daily high temp. (°C) | KMA |
| - | | Daily low temp. (°C) | KMA |
| Precipitation (mm) | KMA | Amount of rain (mm; next 12 h) | KMA |
| - | | Amount of snow (mm; next 12 h) | KMA |
| - | | Prob. of precipitation (%) | KMA |
| Wind speed (m/s) | KMA | Wind speed (m/s) | KMA |
| Wind direction (0–36; in 10°) | KMA | Wind direction (0–36; in 10°) | KMA |
| Relative humidity (%) | KMA | Relative humidity (%) | KMA |
| Solar radiation (MJ/m²) | KMA | - | |
| Cloud cover (0–10) | KMA | - | |
| Soil temp. (°C; 30 cm below surface) | KMA | - | |
| - | | Cloudiness index | KMA |
| - | | Precipitation index | KMA |
| - | | Weather index | KMA |
| Temperature (°C) | On-site sensor | - | |
| Relative humidity (%) | On-site sensor | - | |
| Irradiance (W/m²) | On-site sensor | - | |

| Parameter | Value |
|---|---|
| Longitude | 126.8852906° E |
| Latitude | 37.4702759° N |
| Altitude | 68 m |
| Azimuth | 180° |
| Tilt | 15° |
| Mounting disposition | Flat roof |
| Field type | Fixed tilted plane |
| Installed capacity | 1.224 kWp (2014)/2.448 kWp (2016) |
| Technology | Amorphous silicon |
| PV module | Solar Laminate PVL-136 |
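The conventional approach uses the expected angle of incidence of the Sun on the panel, which depends on the site latitude, the panel tilt, and the panel azimuth listed above. A minimal sketch with the textbook Duffie–Beckman relation and Cooper's declination formula follows. Two simplifications are assumptions: the azimuth of 180° is read as measured from north (due south), and the function takes local solar time directly, so the longitude and equation-of-time correction from clock time is skipped.

```python
import math

LATITUDE_DEG = 37.4702759  # site latitude from the table
TILT_DEG = 15.0            # panel tilt from the table
# The table's 180 deg is read as north-referenced (due south); the
# Duffie-Beckman convention measures from south, so due south is 0 here.
SURFACE_AZIMUTH_DEG = 0.0


def declination_deg(day_of_year: int) -> float:
    """Solar declination by Cooper's approximation (degrees)."""
    return 23.45 * math.sin(math.radians(360.0 * (284 + day_of_year) / 365.0))


def incidence_angle_deg(day_of_year: int, solar_time_h: float,
                        lat=LATITUDE_DEG, tilt=TILT_DEG,
                        gamma=SURFACE_AZIMUTH_DEG) -> float:
    """Angle between the Sun's rays and the panel normal (degrees)."""
    d = math.radians(declination_deg(day_of_year))
    phi = math.radians(lat)
    b = math.radians(tilt)
    g = math.radians(gamma)
    w = math.radians(15.0 * (solar_time_h - 12.0))  # hour angle, negative before noon
    cos_theta = (math.sin(d) * math.sin(phi) * math.cos(b)
                 - math.sin(d) * math.cos(phi) * math.sin(b) * math.cos(g)
                 + math.cos(d) * math.cos(phi) * math.cos(b) * math.cos(w)
                 + math.cos(d) * math.sin(phi) * math.sin(b) * math.cos(g) * math.cos(w)
                 + math.cos(d) * math.sin(b) * math.sin(g) * math.sin(w))
    # Clamp against rounding before taking the arccosine.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))
```

For a south-facing panel at solar noon the expression reduces to |φ − β − δ|. With the 15° tilt at this latitude, that puts the incidence angle near 1° at the June solstice and near 46° at the December solstice.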

| M (Hidden Layers) | E (Training Time) | MAE |
|---|---|---|
| 1 | 1000 | 0.067 |
| 2 | 1000 | 0.066 |
| 3 | 500 | 0.066 |
| | 1000 | 0.064 |
| | 1500 | 0.064 |
| | 2000 | 0.068 |
| 4 | 500 | 0.065 |
| | 1000 | 0.062 |
| | 1500 | 0.068 |
| | 2000 | 0.070 |
| 5 | 1000 | 0.067 |
| 6 | 1000 | 0.067 |
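The table records a partial grid over the number of hidden layers M and the training time E. Selecting the configuration with the lowest MAE from the transcribed values can be sketched as follows. The tie-breaking rule (fewer layers first, then shorter training) is an assumption added so the choice is deterministic.

```python
# (M, E) -> MAE, transcribed from the hyperparameter table.
GRID_MAE = {
    (1, 1000): 0.067,
    (2, 1000): 0.066,
    (3, 500): 0.066, (3, 1000): 0.064, (3, 1500): 0.064, (3, 2000): 0.068,
    (4, 500): 0.065, (4, 1000): 0.062, (4, 1500): 0.068, (4, 2000): 0.070,
    (5, 1000): 0.067,
    (6, 1000): 0.067,
}


def best_configuration(grid: dict) -> tuple:
    """Return the (M, E) pair with the lowest MAE; ties go to fewer layers, then shorter E."""
    return min(grid, key=lambda cfg: (grid[cfg], cfg))


best = best_configuration(GRID_MAE)
print(best, GRID_MAE[best])  # (4, 1000) 0.062
```

The MAE rises again for E = 1500 and 2000 at M = 3 and M = 4, the usual sign of overtraining. Picking the minimum, rather than the longest training, therefore matters here.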

Pearson cross-correlation coefficient by weather condition:

| Weather Condition | Cloud Cover | Temperature | Humidity | Wind Speed | Wind Direction |
|---|---|---|---|---|---|
| Clear | 0.000 | 0.175 | −0.062 | −0.044 | −0.122 |
| Cloudy | −0.104 | 0.219 | −0.178 | 0.058 | −0.086 |
| Overcast | −0.015 | 0.259 | −0.104 | 0.002 | 0.000 |
| Rainy | −0.222 | 0.226 | −0.189 | −0.095 | −0.027 |
| Total | −0.352 | 0.129 | −0.287 | 0.032 | 0.035 |
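The table reports Pearson coefficients per weather condition plus a pooled "Total" row. A minimal standard-library sketch of that computation follows. It assumes rows of (condition, feature value, PV output). Returning 0.0 for a constant series is a convention chosen here, not necessarily the one behind the table.

```python
import math
from collections import defaultdict


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either series is constant (assumed convention)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def correlation_by_condition(rows):
    """rows: iterable of (condition, feature_value, pv_output); also adds a pooled 'Total'."""
    groups = defaultdict(lambda: ([], []))
    for condition, x, y in rows:
        for key in (condition, "Total"):
            groups[key][0].append(x)
            groups[key][1].append(y)
    return {key: pearson(xs, ys) for key, (xs, ys) in groups.items()}
```

On toy rows such as `[("Clear", 0, 0.9), ("Clear", 0, 0.8), ("Rainy", 10, 0.1), ("Rainy", 10, 0.2)]`, each group's coefficient is 0.0 while the pooled one is about −0.99. Pooling across conditions that differ in both feature and output can produce a much stronger correlation than any single condition shows, which is consistent with the Total cloud-cover value exceeding every per-condition value in magnitude.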

| L | Excluded Features from Table 1 | MAE |
|---|---|---|
| 13 | Irradiance | 0.062 |
| 9 | (13) + other on-site sensor features, date | 0.069 |
| 8 | (9) + soil temperature | 0.071 |
| 7 | (8) + precipitation | 0.067 |
| 6 | (7) + wind speed | 0.067 |
| 6 | (7) + wind direction | 0.070 |
| 5 | (7) + wind speed/direction | 0.067 |

| Month | Date | Forecasted Weather Changes during Day Hours (7:00 a.m.–6:00 p.m.) |
|---|---|---|
| February | 4 | Clear |
| | 5 | Partly Cloudy |
| | 6 | Mostly Cloudy → Partly Cloudy |
| | 7 | Partly Cloudy → Mostly Cloudy → Overcast |
| May | 26 | Mostly Cloudy → Partly Cloudy → Clear |
| | 27 | Clear |
| | 28 | Mostly Cloudy → Partly Cloudy |
| | 29 | Clear → Partly Cloudy |
| August | 3 | Rain |
| | 4 | Overcast → Rain |
| | 5 | Mostly Cloudy |
| | 6 | Overcast → Rain → Overcast |
| October | 27 | Clear |
| | 28 | Clear |
| | 29 | Partly Cloudy |
| | 30 | Partly Cloudy → Mostly Cloudy |

| Measure | DNN Model (Training) | DNN Model (Test) | Current System (Training) | Current System (Test) |
|---|---|---|---|---|
| RMSE | 0.061 | 0.064 | 0.071 | 0.066 |
| MAE | 0.027 | 0.029 | 0.033 | 0.030 |
| AbsDev | 0.239 | 0.266 | 0.291 | 0.277 |
| Bias | 0.007 | 0.008 | −0.004 | −0.002 |
| Correlation | 0.936 | 0.929 | 0.910 | 0.921 |
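The size of the DNN's advantage over the current system on the test period can be expressed as a relative error reduction. The short calculation below uses only the test-column values from the table.

```python
# Test-period values from the comparison table (lower is better for all three).
DNN_TEST = {"RMSE": 0.064, "MAE": 0.029, "AbsDev": 0.266}
CURRENT_TEST = {"RMSE": 0.066, "MAE": 0.030, "AbsDev": 0.277}


def relative_reduction(new: float, baseline: float) -> float:
    """Fractional error reduction of `new` relative to `baseline` (positive = better)."""
    return (baseline - new) / baseline


for name in DNN_TEST:
    pct = 100 * relative_reduction(DNN_TEST[name], CURRENT_TEST[name])
    print(f"{name}: {pct:.1f}% lower")
# RMSE: 3.0% lower
# MAE: 3.3% lower
# AbsDev: 4.0% lower
```

Because the table values are rounded to three decimals, the MAE gap is a single unit in the last digit. These percentages are therefore only approximate.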

| Measure | Spring (March–May) | Summer (June–August) | Autumn (September–November) | Winter (December–February) |
|---|---|---|---|---|
| RMSE | 0.057 | 0.100 | 0.045 | 0.032 |
| MAE | 0.028 | 0.052 | 0.020 | 0.015 |
| AbsDev | 0.195 | 0.477 | 0.190 | 0.208 |
| Bias | 0.009 | 0.010 | 0.008 | 0.004 |
| Correlation | 0.962 | 0.819 | 0.965 | 0.966 |
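The seasonal breakdown groups months as Spring (March–May), Summer (June–August), Autumn (September–November), and Winter (December–February). A minimal sketch of that grouping, applied to a per-season MAE, follows. The record layout of (month, actual, forecast) tuples is an assumption.

```python
from collections import defaultdict

# Month groupings as given in the table header.
SEASONS = {
    "Spring": (3, 4, 5),
    "Summer": (6, 7, 8),
    "Autumn": (9, 10, 11),
    "Winter": (12, 1, 2),
}


def season_of(month: int) -> str:
    """Map a calendar month (1-12) to its season."""
    for season, months in SEASONS.items():
        if month in months:
            return season
    raise ValueError(f"invalid month: {month}")


def seasonal_mae(records):
    """records: iterable of (month, actual, forecast); returns MAE per season present."""
    sums, counts = defaultdict(float), defaultdict(int)
    for month, actual, forecast in records:
        season = season_of(month)
        sums[season] += abs(forecast - actual)
        counts[season] += 1
    return {season: sums[season] / counts[season] for season in counts}
```

The same pattern extends to the other measures by swapping the per-record error term, for example squaring it and taking a root for RMSE.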

| Measure | Clear (1) | Cloudy (2 to 3) | Overcast (4) | Rain/Snow (5 to 7) |
|---|---|---|---|---|
| RMSE | 0.026 | 0.051 | 0.059 | 0.051 |
| MAE | 0.012 | 0.018 | 0.027 | 0.023 |
| AbsDev | 0.107 | 0.227 | 0.600 | 0.484 |
| Bias | 0.006 | 0.004 | 0.012 | −0.005 |
| Correlation | 0.991 | 0.935 | 0.778 | 0.833 |
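The column headers group numeric weather codes into four categories: 1 for clear, 2 to 3 for cloudy, 4 for overcast, and 5 to 7 for rain or snow. The sketch below maps codes to these categories and partitions aligned hourly series so that each category can be scored separately. Treating these codes as the KMA weather index is an assumption, and the helper names are hypothetical.

```python
# Code-to-category grouping taken from the table header.
WEATHER_GROUPS = {1: "Clear", 2: "Cloudy", 3: "Cloudy", 4: "Overcast",
                  5: "Rain/Snow", 6: "Rain/Snow", 7: "Rain/Snow"}


def weather_group(code: int) -> str:
    """Return the category for a weather code, rejecting unknown codes."""
    try:
        return WEATHER_GROUPS[code]
    except KeyError:
        raise ValueError(f"unknown weather code: {code}") from None


def split_by_weather(codes, actual, forecast):
    """Partition aligned hourly series into {category: (actual_list, forecast_list)}."""
    if not (len(codes) == len(actual) == len(forecast)):
        raise ValueError("series must be aligned and of equal length")
    groups = {}
    for code, a, f in zip(codes, actual, forecast):
        acts, fcs = groups.setdefault(weather_group(code), ([], []))
        acts.append(a)
        fcs.append(f)
    return groups
```

Each resulting pair can then be passed to whatever error-measure routine is in use, giving one column of the table per category.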

| Measure | DNN Model | ANN Model (N = 10) | ANN Model (N = 120) |
|---|---|---|---|
| RMSE | 0.064 | 0.068 | 0.068 |
| MAE | 0.029 | 0.034 | 0.034 |
| AbsDev | 0.266 | 0.310 | 0.320 |
| Bias | 0.008 | 0.004 | 0.005 |
| Correlation | 0.929 | 0.916 | 0.915 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Son, J.; Park, Y.; Lee, J.; Kim, H. Sensorless PV Power Forecasting in Grid-Connected Buildings through Deep Learning. Sensors 2018, 18, 2529. https://doi.org/10.3390/s18082529