Application of Crack Identification Techniques for an Aging Concrete Bridge Inspection Using an Unmanned Aerial Vehicle

Abstract

1. Introduction

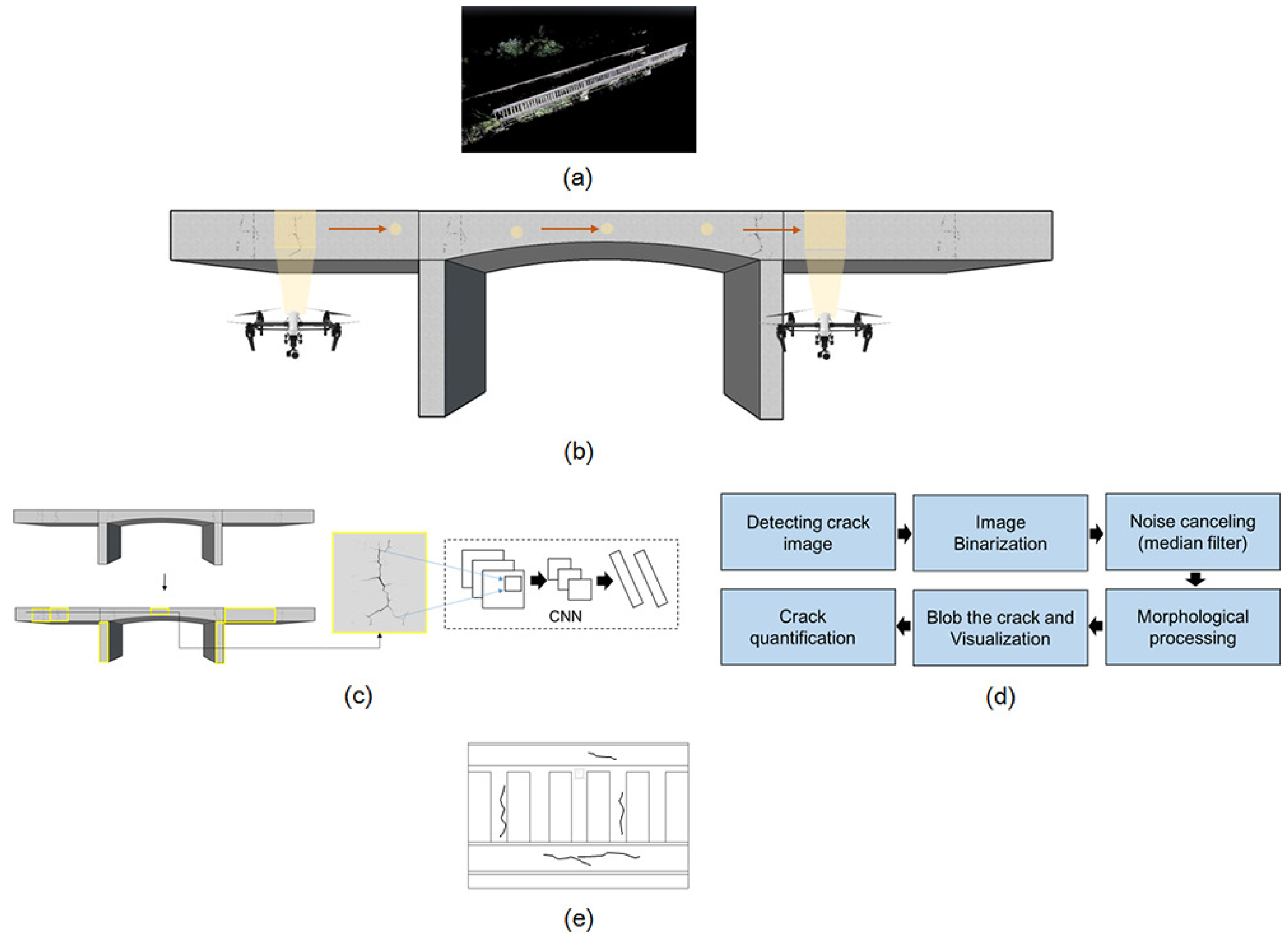

2. Proposed Methodology

2.1. Overview

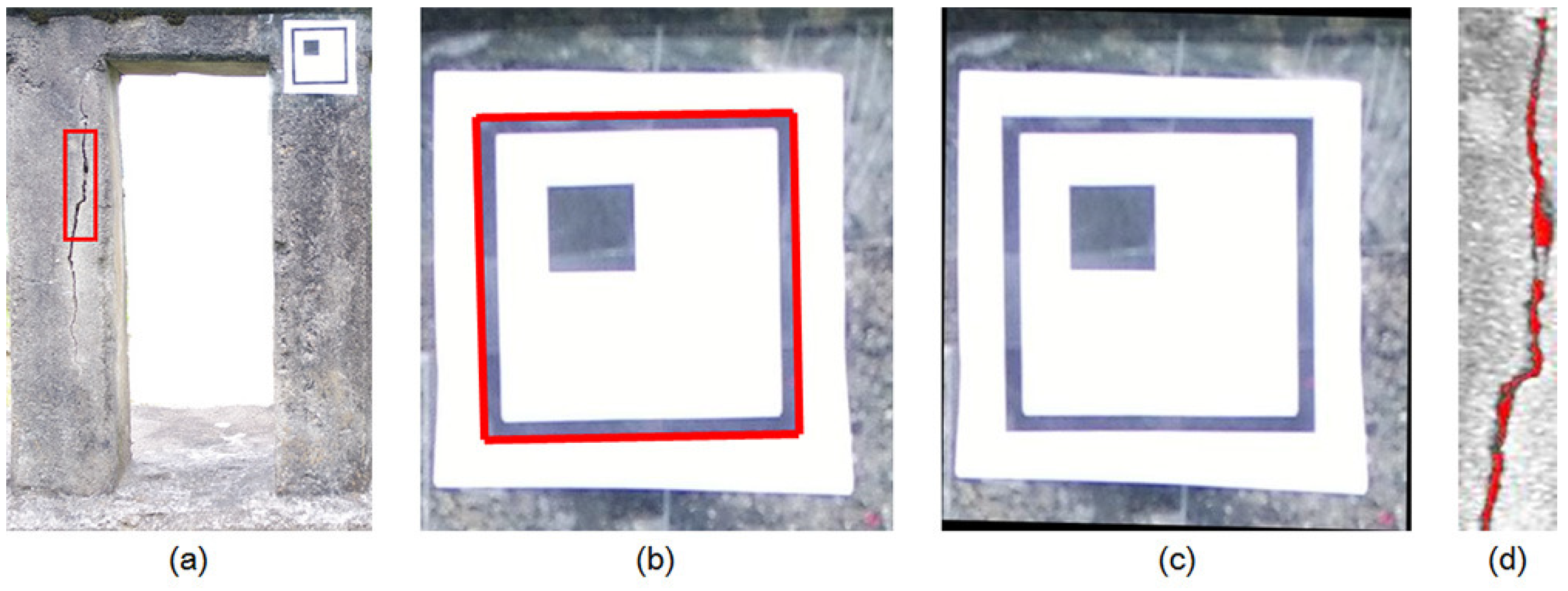

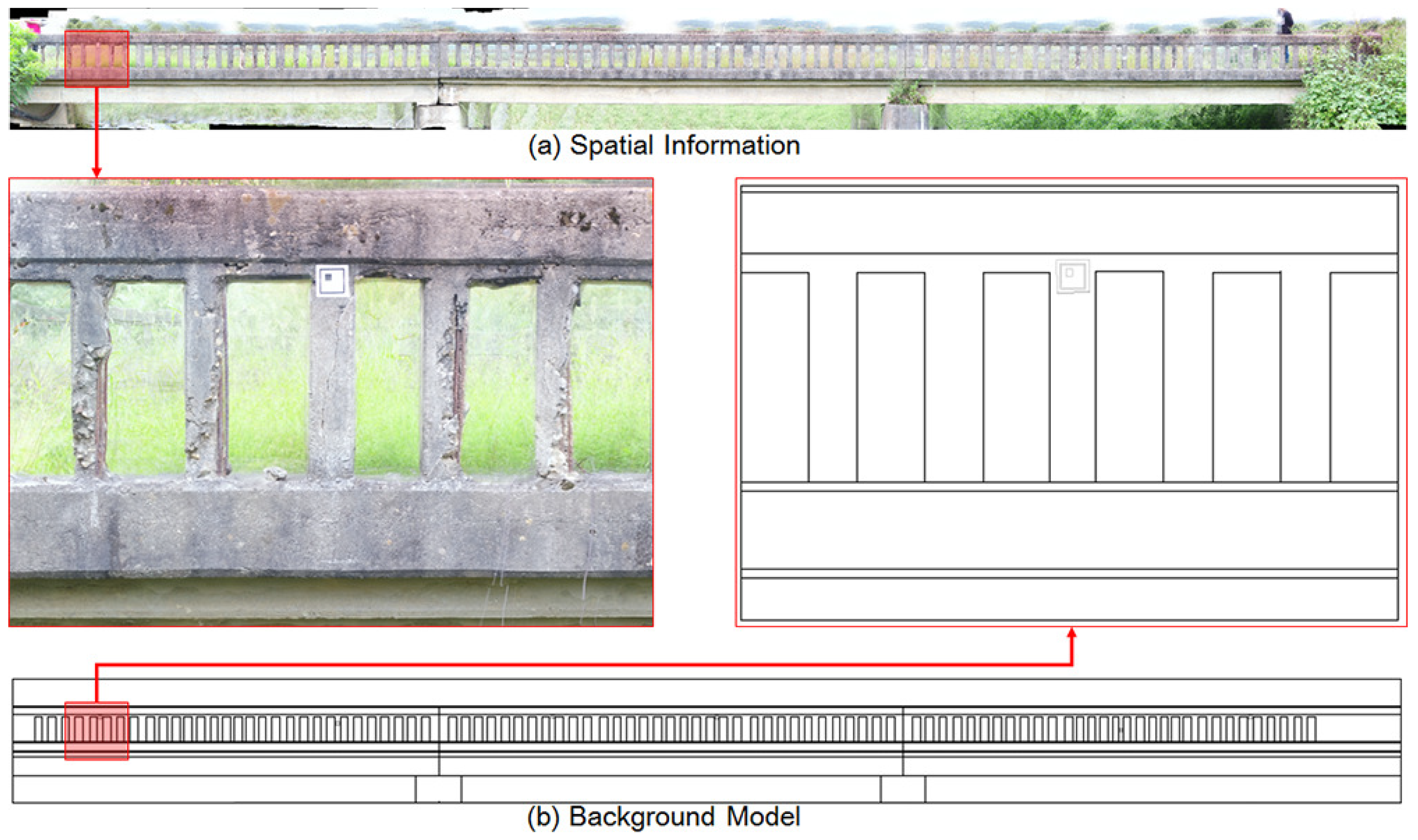

2.2. Background Model Generation for Building the Spatial Information

2.3. Image Acquisition with a High Resolution Camera on the UAV

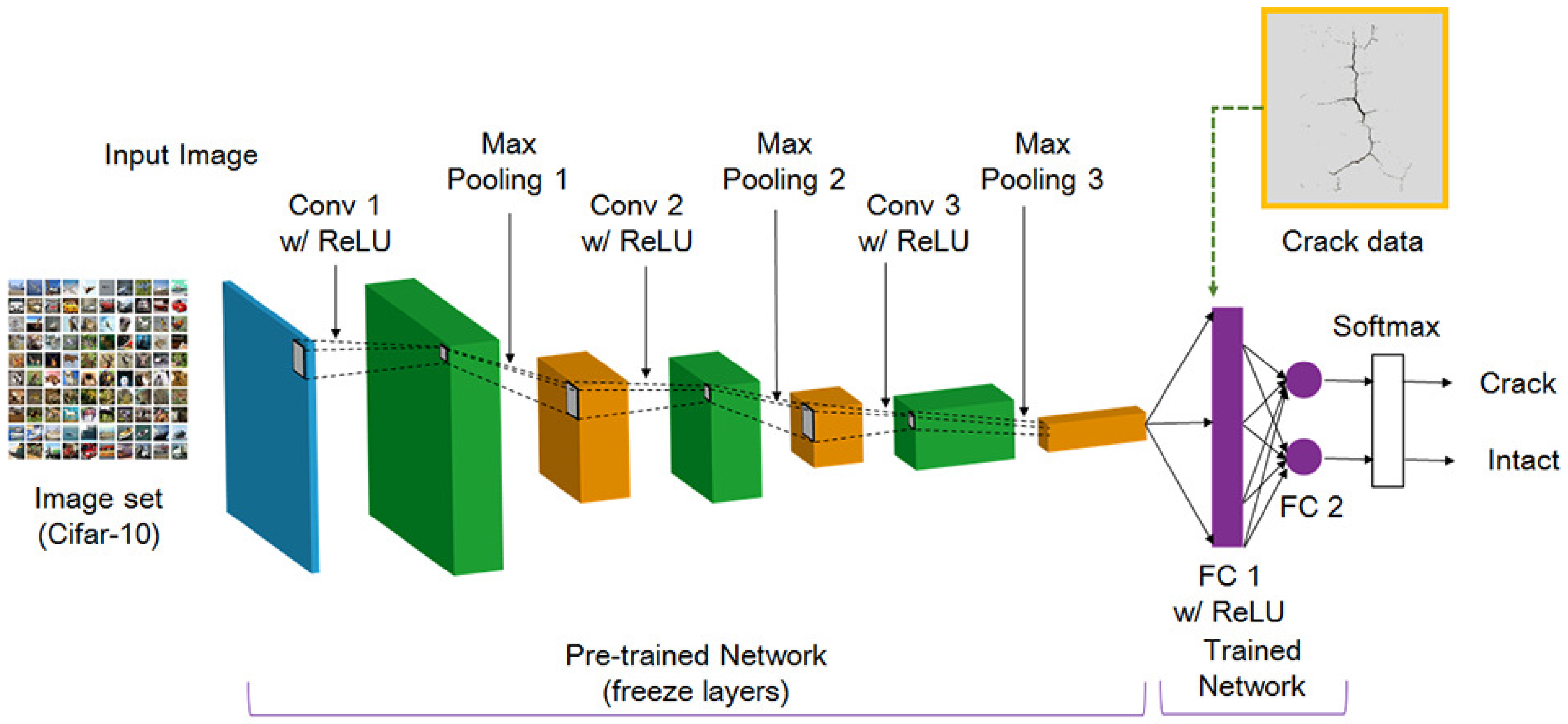

2.4. Crack Detection Using Deep Learning

2.5. Image Processing for Crack Quantification

3. Experimental Validation

3.1. Generating the Background Model

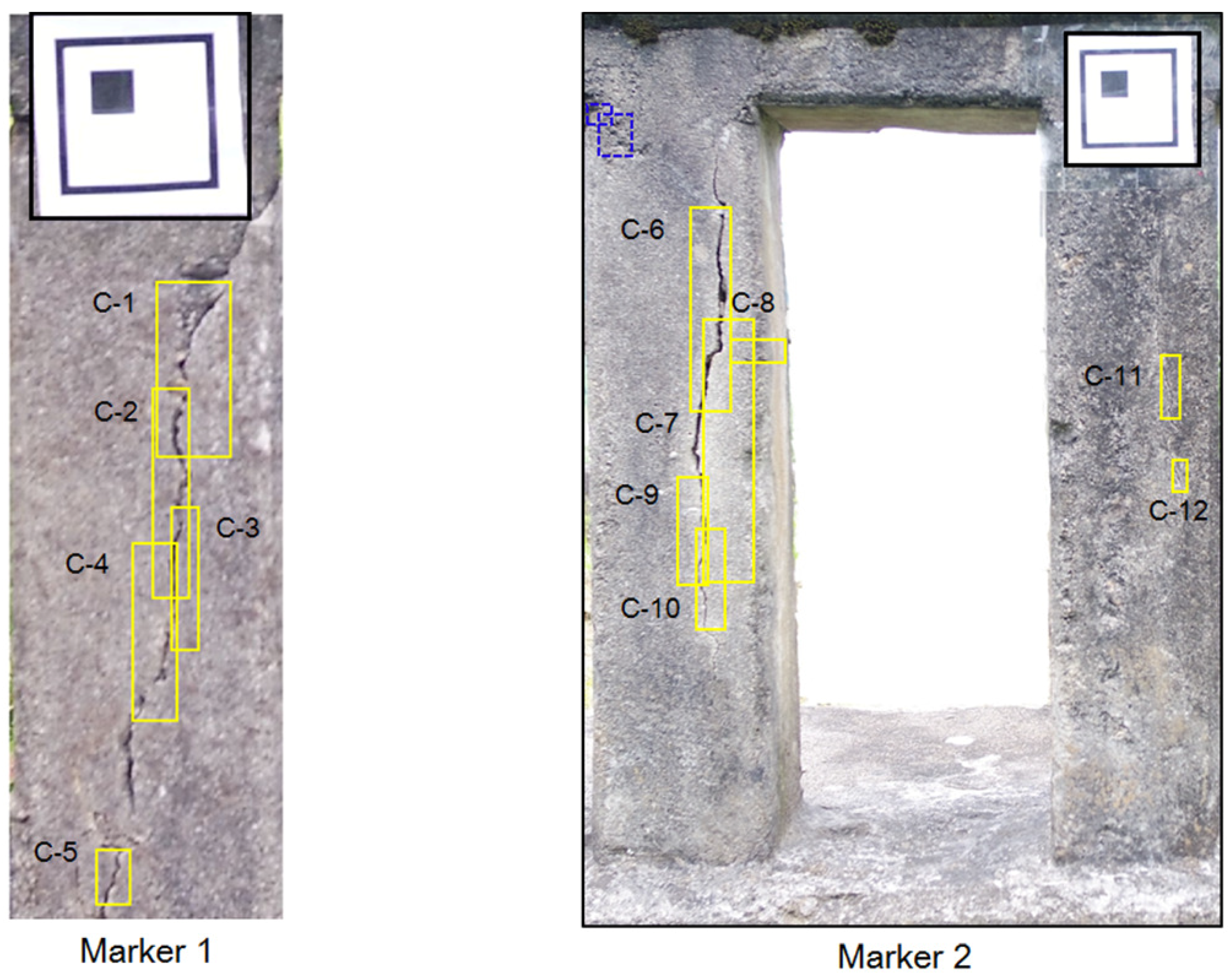

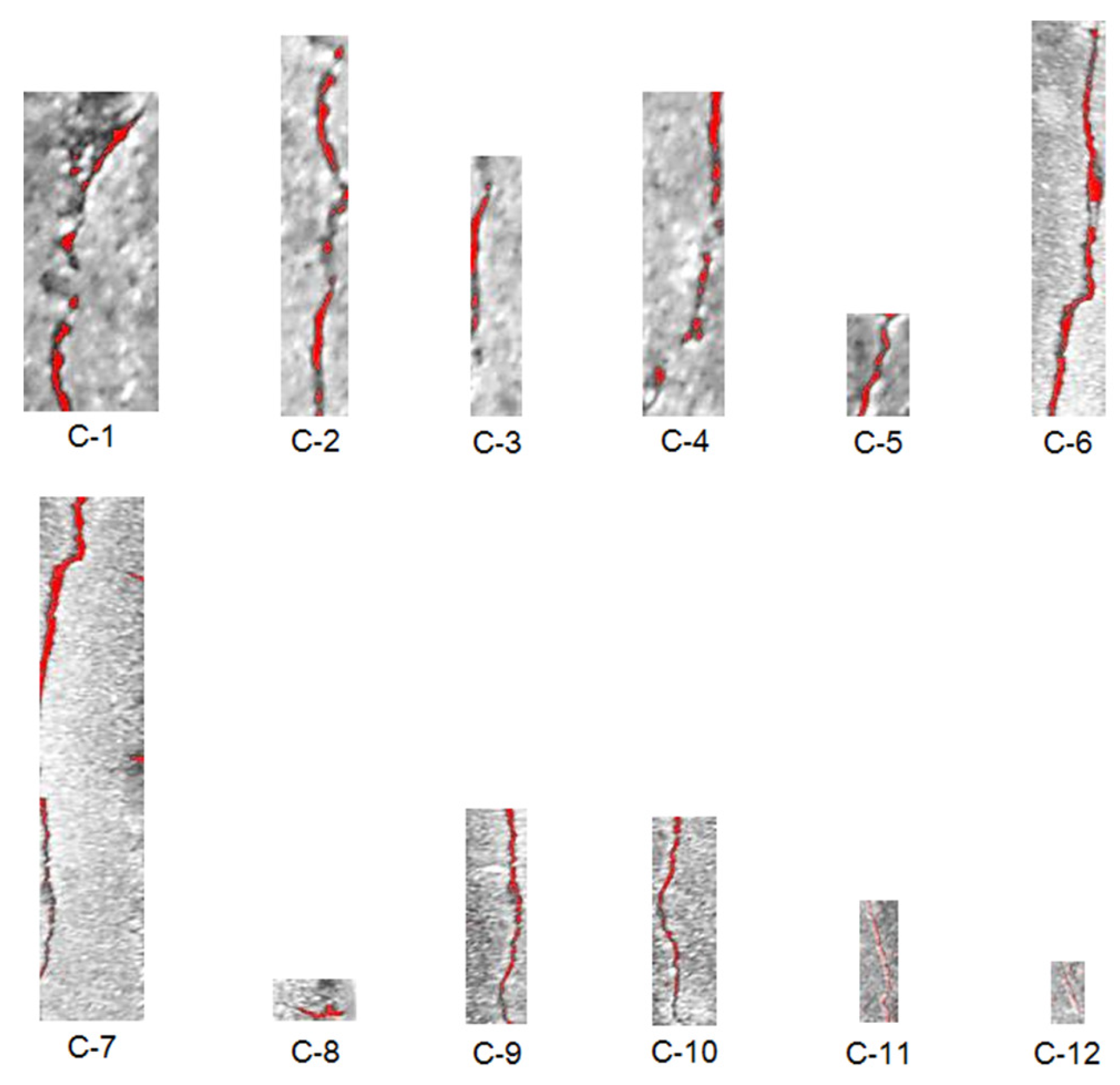

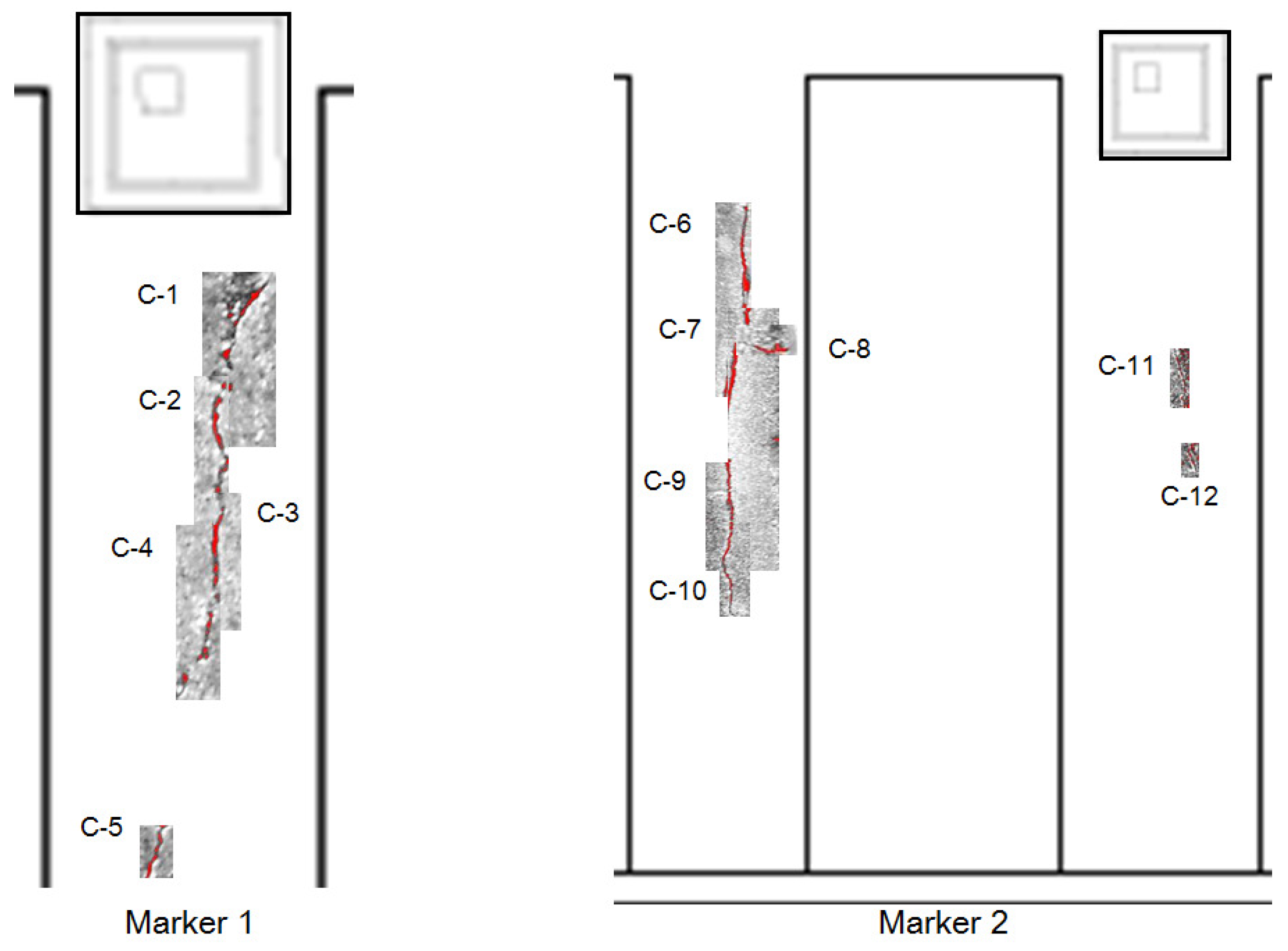

3.2. Crack Detection

3.3. Crack Quantification

3.4. Bridge Inspection Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Layer | Operator | Dimension (Height × Width × Depth) | Kernel (Height × Width) | Stride | Padding |
|---|---|---|---|---|---|
| Input | Conv 1 w/ReLU | 32 × 32 × 3 | 5 × 5 | 1 | 2 |
| Layer 1 | Pool 1 | 32 × 32 × 32 | 3 × 3 | 2 | 0 |
| Layer 2 | Conv 2 w/ReLU | 16 × 16 × 32 | 5 × 5 | 1 | 2 |
| Layer 3 | Pool 2 | 16 × 16 × 32 | 3 × 3 | 2 | 0 |
| Layer 4 | Conv 3 w/ReLU | 8 × 8 × 32 | 5 × 5 | 1 | 2 |
| Layer 5 | Pool 3 | 8 × 8 × 64 | 3 × 3 | 2 | 0 |
| Layer 6 | - | 4 × 4 × 64 | - | - | - |
| Layer 7 | FC 1 | 1 × 1 × 64 | - | - | - |
| Layer 8 | FC 2 | 1 × 1 × 2 | - | - | - |
| Layer 9 | Softmax | 1 × 1 × 2 | - | - | - |
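As a consistency check on the network table, the sketch that follows traces the feature-map size through the three convolution/pooling stages with the usual output-size rule, floor((n + 2p − k)/s) + 1. One assumption is stated explicitly: the 32 → 16 → 8 → 4 progression in the table is reproduced only if the pooling layers round up (ceiling), as Caffe does by default. With floor rounding, a 3 × 3, stride-2 pool on a 32 × 32 map gives 15 × 15. The helper names (`conv_out`, `pool_out`, `trace_shapes`) are hypothetical and are not taken from the authors' implementation.

```python
import math


def conv_out(n: int, k: int, s: int, p: int) -> int:
    """Convolution output size: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1


def pool_out(n: int, k: int, s: int, p: int) -> int:
    """Pooling output size with ceiling rounding (assumed, Caffe-style)."""
    return math.ceil((n + 2 * p - k) / s) + 1


# (operator, kind, kernel, stride, padding, output channels), read from the table.
STAGES = [
    ("Conv 1", "conv", 5, 1, 2, 32),
    ("Pool 1", "pool", 3, 2, 0, None),
    ("Conv 2", "conv", 5, 1, 2, 32),
    ("Pool 2", "pool", 3, 2, 0, None),
    ("Conv 3", "conv", 5, 1, 2, 64),
    ("Pool 3", "pool", 3, 2, 0, None),
]


def trace_shapes(h: int = 32, w: int = 32, d: int = 3):
    """Return (operator, (H, W, D)) after each stage, starting from the input patch."""
    shapes = [("Input", (h, w, d))]
    for name, kind, k, s, p, out_ch in STAGES:
        if kind == "conv":
            h, w, d = conv_out(h, k, s, p), conv_out(w, k, s, p), out_ch
        else:  # pooling changes height and width but keeps the depth
            h, w = pool_out(h, k, s, p), pool_out(w, k, s, p)
        shapes.append((name, (h, w, d)))
    return shapes


if __name__ == "__main__":
    for name, (h, w, d) in trace_shapes():
        print(f"{name:7s} -> {h} x {w} x {d}")
    h, w, d = trace_shapes()[-1][1]
    # Flattened vector fed to FC 1 (64 units), then FC 2 (2 classes) and softmax.
    print("Flattened size into FC 1:", h * w * d)
```

Running it lists 32 × 32 × 32 after Conv 1, 16 × 16 × 32 after Pool 1, 8 × 8 × 64 after Conv 3 and 4 × 4 × 64 after Pool 3, which matches the Dimension column one row further down, and reports a flattened size of 1024 entering FC 1.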

| Crack | Crack Thickness (mm) | Crack Length (mm) |
|---|---|---|
| C-1 | 1.92 | 48.68 |
| C-2 | 1.10 | 60.09 |
| C-3 | 1.10 | 27.94 |
| C-4 | 1.37 | 48.59 |
| C-5 | 1.37 | 17.08 |
| C-6 | 1.92 | 63.56 |
| C-7 | 2.47 | 78.43 |
| C-8 | 1.59 | 6.60 |
| C-9 | 1.10 | 35.01 |
| C-10 | 0.53 | 30.79 |
| C-11 | 0.55 | 19.96 |
| C-12 | 0.55 | 8.32 |
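The quantification results can also be summarized programmatically. The sketch that follows only transcribes the crack table and reports the widest crack, the longest crack and the total measured length; it does not reproduce the image-processing pipeline used to obtain the measurements, and the `summarize` helper is hypothetical.

```python
# Crack measurements transcribed from the quantification table:
# (crack ID, thickness in mm, length in mm).
CRACKS = [
    ("C-1", 1.92, 48.68),
    ("C-2", 1.10, 60.09),
    ("C-3", 1.10, 27.94),
    ("C-4", 1.37, 48.59),
    ("C-5", 1.37, 17.08),
    ("C-6", 1.92, 63.56),
    ("C-7", 2.47, 78.43),
    ("C-8", 1.59, 6.60),
    ("C-9", 1.10, 35.01),
    ("C-10", 0.53, 30.79),
    ("C-11", 0.55, 19.96),
    ("C-12", 0.55, 8.32),
]


def summarize(cracks):
    """Return simple descriptive statistics for a list of (id, thickness, length) rows."""
    widest = max(cracks, key=lambda c: c[1])   # largest thickness
    longest = max(cracks, key=lambda c: c[2])  # largest length
    total_length = sum(c[2] for c in cracks)
    return {
        "count": len(cracks),
        "widest": widest[0],
        "max_thickness_mm": widest[1],
        "longest": longest[0],
        "max_length_mm": longest[2],
        "total_length_mm": round(total_length, 2),
    }


if __name__ == "__main__":
    for key, value in summarize(CRACKS).items():
        print(f"{key}: {value}")
```

For the tabulated values it reports 12 cracks, with C-7 being both the widest (2.47 mm) and the longest (78.43 mm), and a total measured length of 445.05 mm.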

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kim, I.-H.; Jeon, H.; Baek, S.-C.; Hong, W.-H.; Jung, H.-J. Application of Crack Identification Techniques for an Aging Concrete Bridge Inspection Using an Unmanned Aerial Vehicle. Sensors 2018, 18, 1881. https://doi.org/10.3390/s18061881
