H-SLAM: Rao-Blackwellized Particle Filter SLAM Using Hilbert Maps

Abstract

1. Introduction

1.1. Underwater SLAM State of the Art

1.2. Contribution

- Bring the map representation known as Hilbert Maps (HMs) to the underwater environment.

- Implement a new SLAM framework, H-SLAM, which:
    - (a) uses sonar measurements with the HM representation;
    - (b) is based on a particle filter (PF);
    - (c) is capable of running online on an AUV.

- Simulated experiments and results of the proposed method:
    - (a) an experiment with a known map, performing localization only (terrain-based navigation, TBN);
    - (b) a full SLAM experiment.

- Real experiments and results of the proposed method:
    - (a) datasets obtained by an AUV.

1.3. Paper Organization

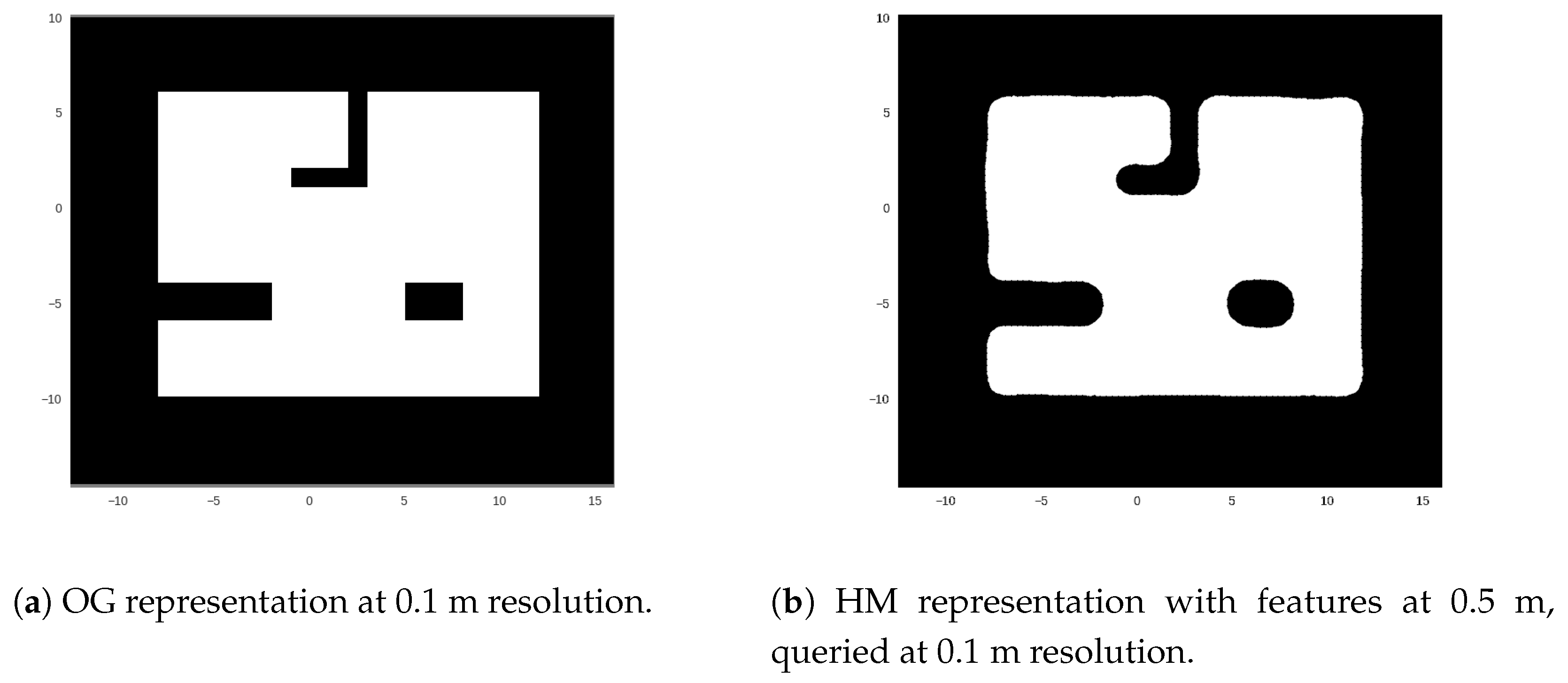

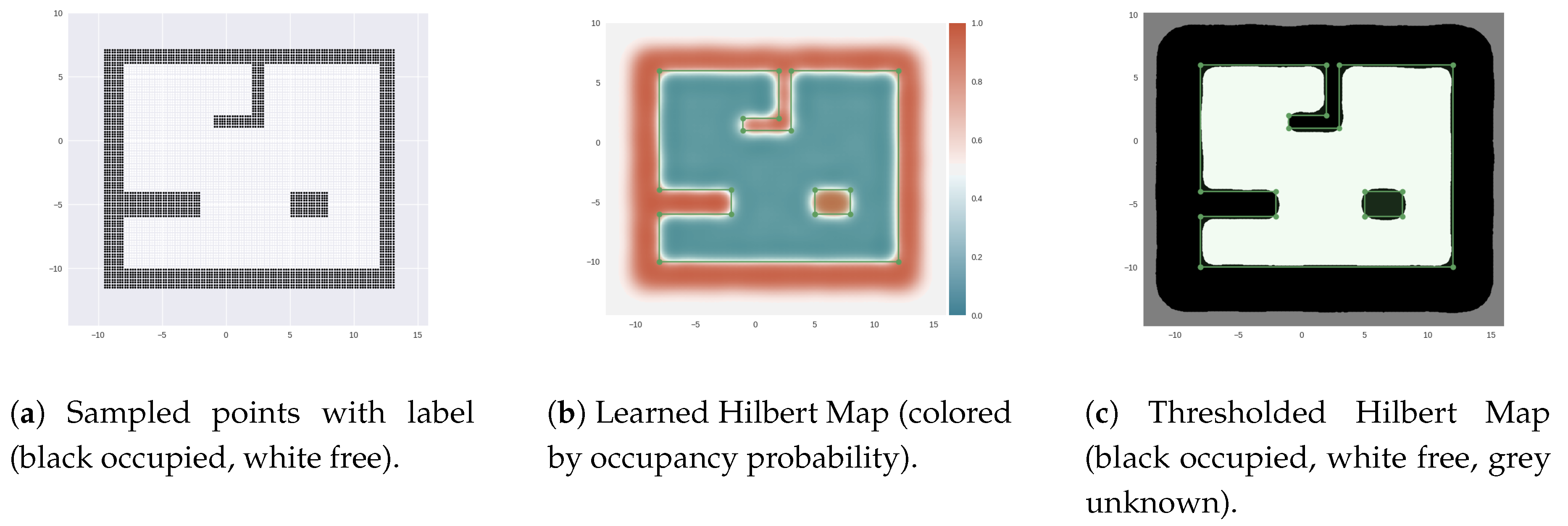

2. Hilbert Maps

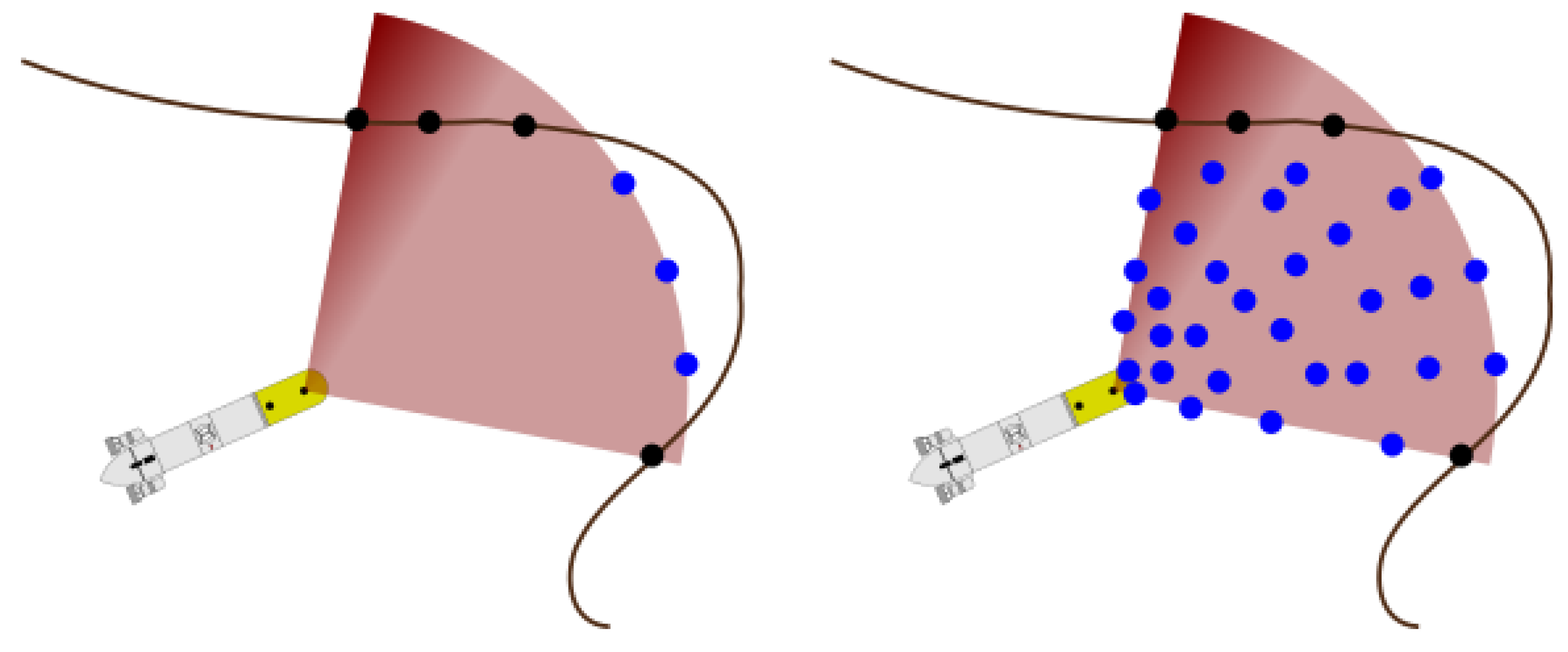

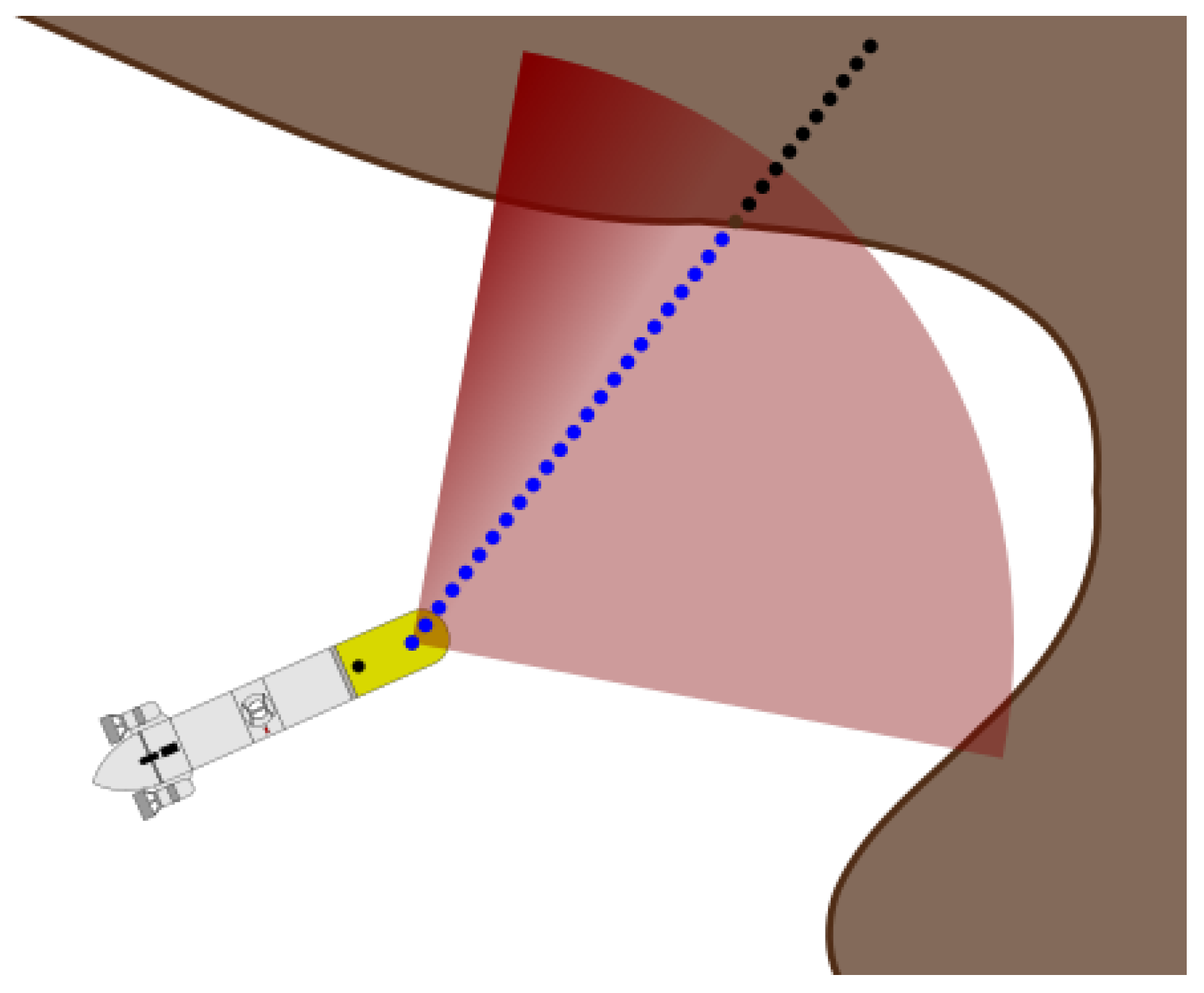

Hilbert Map Learning and Raycasting
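Hilbert Maps (Ramos and Ott) model occupancy as a logistic regression classifier over a feature mapping of the input space, trained online with stochastic gradient descent (SGD). The following is a minimal 2D sketch of that idea under our own assumptions, not the H-SLAM implementation: the features are RBF kernels centred on a regular grid and truncated beyond a radius, with `resolution` and `radius` loosely mirroring the "Feature resolution" and "Radius neighbourhood" entries of the parameter table. The class name, kernel, length-scale, learning rate and beam-labelling scheme are all hypothetical.

```python
import numpy as np


class HilbertMap2D:
    """Toy continuous occupancy map: logistic regression on sparse RBF features.

    Hypothetical design: RBF kernels centred on a regular grid (spacing
    `resolution`), truncated to zero beyond `radius` so each point is sparse.
    """

    def __init__(self, xmin, xmax, ymin, ymax, resolution=0.5, radius=1.5,
                 lengthscale=0.5, lr=0.1, reg=1e-4):
        xs = np.arange(xmin, xmax + resolution, resolution)
        ys = np.arange(ymin, ymax + resolution, resolution)
        gx, gy = np.meshgrid(xs, ys)
        self.centres = np.column_stack([gx.ravel(), gy.ravel()])  # feature locations
        self.w = np.zeros(len(self.centres))                      # regression weights
        self.radius, self.lengthscale = radius, lengthscale
        self.lr, self.reg = lr, reg

    def features(self, p):
        """Feature vector of a 2D point: RBF values, zero outside `radius`."""
        d2 = np.sum((self.centres - np.asarray(p, dtype=float)) ** 2, axis=1)
        phi = np.exp(-d2 / (2.0 * self.lengthscale ** 2))
        phi[d2 > self.radius ** 2] = 0.0
        return phi

    def occupancy(self, p):
        """Probability that point p is occupied (logistic link)."""
        return 1.0 / (1.0 + np.exp(-self.w @ self.features(p)))

    def sgd_step(self, p, y):
        """One SGD step on the L2-regularised logistic loss; y = 1 occupied, 0 free."""
        phi = self.features(p)
        prob = 1.0 / (1.0 + np.exp(-self.w @ phi))
        self.w -= self.lr * ((prob - y) * phi + self.reg * self.w)

    def learn_beam(self, origin, hit, step=0.5):
        """Label the beam return as occupied and points along the beam as free."""
        origin, hit = np.asarray(origin, dtype=float), np.asarray(hit, dtype=float)
        dist = np.linalg.norm(hit - origin)
        direction = (hit - origin) / dist
        for t in np.arange(0.0, dist - step, step):  # free samples stop short of the return
            self.sgd_step(origin + t * direction, 0.0)
        self.sgd_step(hit, 1.0)
```

For example, repeatedly learning a beam from the origin to a return at (4, 0) drives `occupancy((4, 0))` above 0.5 while `occupancy((1, 0))` falls below it. Because the map is a continuous function, it can be queried at any point, which is what makes raycasting against it possible.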

3. Rao-Blackwellized Particle Filter with Hilbert Maps
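For reference, the Rao-Blackwellized factorization used by FastSLAM-style filters splits the SLAM posterior into a trajectory term, sampled by the particles, and a map term that each particle computes conditioned on its own trajectory. In standard notation (our symbols, not necessarily the paper's: $x_{1:t}$ trajectory, $m$ map, $z_{1:t}$ measurements, $u_{1:t}$ controls, $k$ particle index), and assuming the motion model is used as the proposal distribution, the posterior and the recursive weight update are:

$$
p(x_{1:t}, m \mid z_{1:t}, u_{1:t}) = p(m \mid x_{1:t}, z_{1:t})\; p(x_{1:t} \mid z_{1:t}, u_{1:t})
$$

$$
w_t^{[k]} \propto w_{t-1}^{[k]}\; p\!\left(z_t \mid x_t^{[k]}, m_{t-1}^{[k]}\right)
$$

With a Hilbert map as the map representation, $m^{[k]}$ would be the HM learned by particle $k$ from its own trajectory.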

3.1. State Propagation
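A common propagation step moves every particle by the vehicle's dead-reckoning increment plus sampled noise. The sketch below assumes a planar pose [x, y, yaw] and reads the "Linear covariance" and "Angular covariance" entries of the parameter table as the standard deviations of that noise; the state layout, that interpretation and the helper name `propagate` are our assumptions.

```python
import numpy as np


def propagate(particles, delta, sigma_lin=0.25, sigma_ang_deg=2.0, rng=None):
    """Apply a body-frame motion increment to every particle, with Gaussian noise.

    particles: (N, 3) array of poses [x, y, yaw]; delta: (dx, dy, dyaw), body frame.
    sigma_lin / sigma_ang_deg: assumed noise standard deviations (m, degrees).
    """
    rng = np.random.default_rng() if rng is None else rng
    n = len(particles)
    # Each particle receives its own noisy copy of the increment.
    dx = delta[0] + rng.normal(0.0, sigma_lin, n)
    dy = delta[1] + rng.normal(0.0, sigma_lin, n)
    dyaw = delta[2] + rng.normal(0.0, np.deg2rad(sigma_ang_deg), n)
    yaw = particles[:, 2]
    out = particles.copy()
    # Rotate the body-frame increment into the world frame.
    out[:, 0] += np.cos(yaw) * dx - np.sin(yaw) * dy
    out[:, 1] += np.sin(yaw) * dx + np.cos(yaw) * dy
    out[:, 2] = np.arctan2(np.sin(yaw + dyaw), np.cos(yaw + dyaw))  # wrap to [-pi, pi]
    return out
```

With the noise set to zero this reduces to plain dead reckoning; with noise, the particle cloud spreads to cover the odometry uncertainty that the measurement update then prunes.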

3.2. State Update
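One way to score a particle against a continuous occupancy map is to raycast each sonar beam through the particle's map to obtain an expected range, then evaluate the measured range under a Gaussian. This sketch assumes a 2D map exposed as any callable `occupancy(point) -> probability` (for instance a learned Hilbert map), a 0.5 occupancy threshold, fixed-step ray marching (a simplification), and reads "Range covariance" from the parameter table as the range standard deviation; `raycast` and `beam_likelihood` are hypothetical names.

```python
import numpy as np


def raycast(occupancy, origin, angle, max_range=20.0, step=0.01, threshold=0.5):
    """Distance at which a ray first reaches `threshold` occupancy, else max_range."""
    direction = np.array([np.cos(angle), np.sin(angle)])
    n_steps = int(np.ceil(max_range / step))
    for i in range(1, n_steps + 1):
        r = i * step
        if occupancy(origin + r * direction) >= threshold:
            return r
    return max_range


def beam_likelihood(occupancy, pose, beam_angle, measured_range,
                    sigma_range=0.05, max_range=20.0):
    """Gaussian likelihood of one measured range for a particle pose [x, y, yaw]."""
    origin = np.asarray(pose[:2], dtype=float)
    expected = raycast(occupancy, origin, pose[2] + beam_angle, max_range)
    return float(np.exp(-0.5 * ((measured_range - expected) / sigma_range) ** 2))
```

For a multi-beam scan, the per-beam likelihoods would typically be multiplied (or their logarithms summed) under an independence assumption between beams.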

3.3. Weighting, Learning and Resampling
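After the update, importance weights are normalized and, when they degenerate, the particle set is resampled; in an RBPF each particle's map must be copied together with its pose. The sketch below keeps weights in log space, triggers resampling when the effective sample size (ESS) drops below N/2, and uses systematic (low-variance) resampling. The threshold, the resampling scheme and all function names are our assumptions rather than choices confirmed for H-SLAM, and the learning step (updating each surviving particle's map with the new measurements) is not shown.

```python
import copy

import numpy as np


def normalise(log_weights):
    """Log-weights to normalized weights (subtract the max for numerical stability)."""
    w = np.exp(log_weights - np.max(log_weights))
    return w / w.sum()


def effective_sample_size(weights):
    return 1.0 / np.sum(weights ** 2)


def systematic_resample(weights, rng=None):
    """Particle indices drawn by systematic (low-variance) resampling."""
    rng = np.random.default_rng() if rng is None else rng
    n = len(weights)
    positions = (rng.random() + np.arange(n)) / n  # one random offset, n evenly spaced draws
    cumulative = np.cumsum(weights)
    cumulative[-1] = 1.0  # guard against round-off
    return np.searchsorted(cumulative, positions, side="right")


def maybe_resample(particles, maps, log_weights, rng=None, ratio=0.5):
    """Resample poses and per-particle maps together when ESS < ratio * N."""
    w = normalise(log_weights)
    n = len(w)
    if effective_sample_size(w) >= ratio * n:
        return particles, maps, np.log(w)
    idx = systematic_resample(w, rng)
    new_maps = [copy.deepcopy(maps[i]) for i in idx]  # each survivor owns its map
    return particles[idx], new_maps, np.full(n, -np.log(n))
```

Deep-copying maps is the simplest correct choice but also the most expensive one; shared, copy-on-write map structures are a common optimization when maps are large.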

4. Datasets

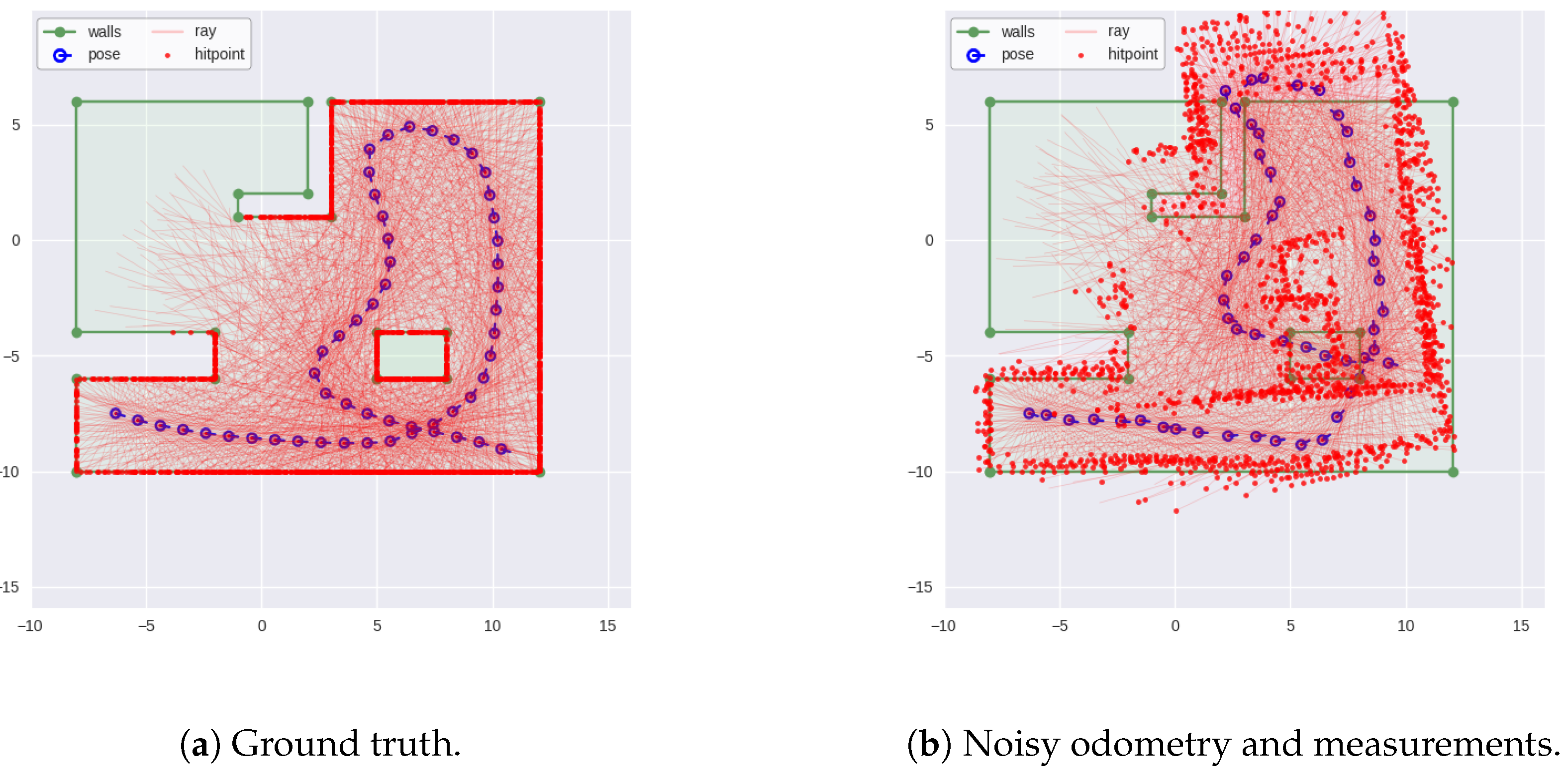

4.1. Simulated Dataset

4.2. Real-World Datasets

5. Results

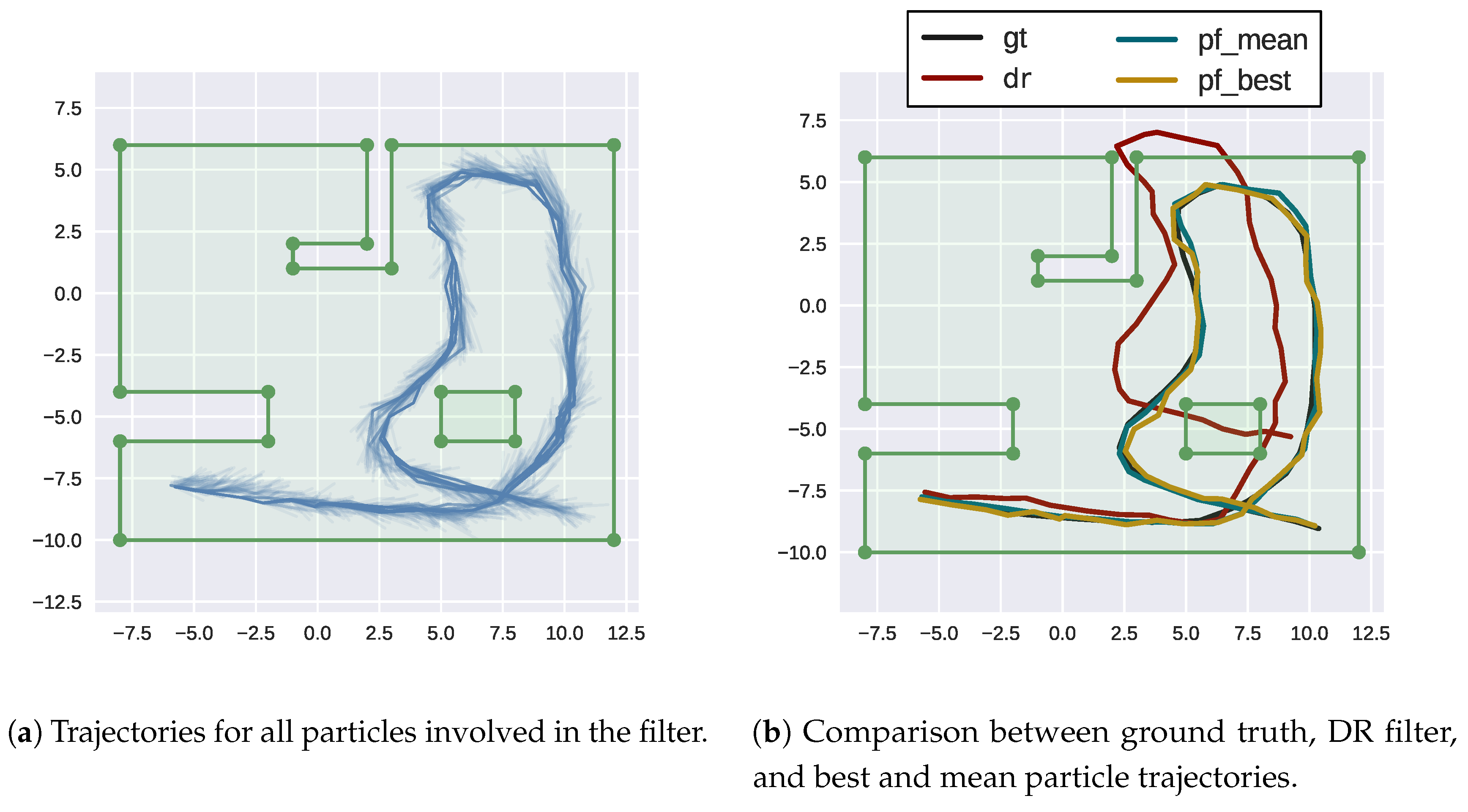

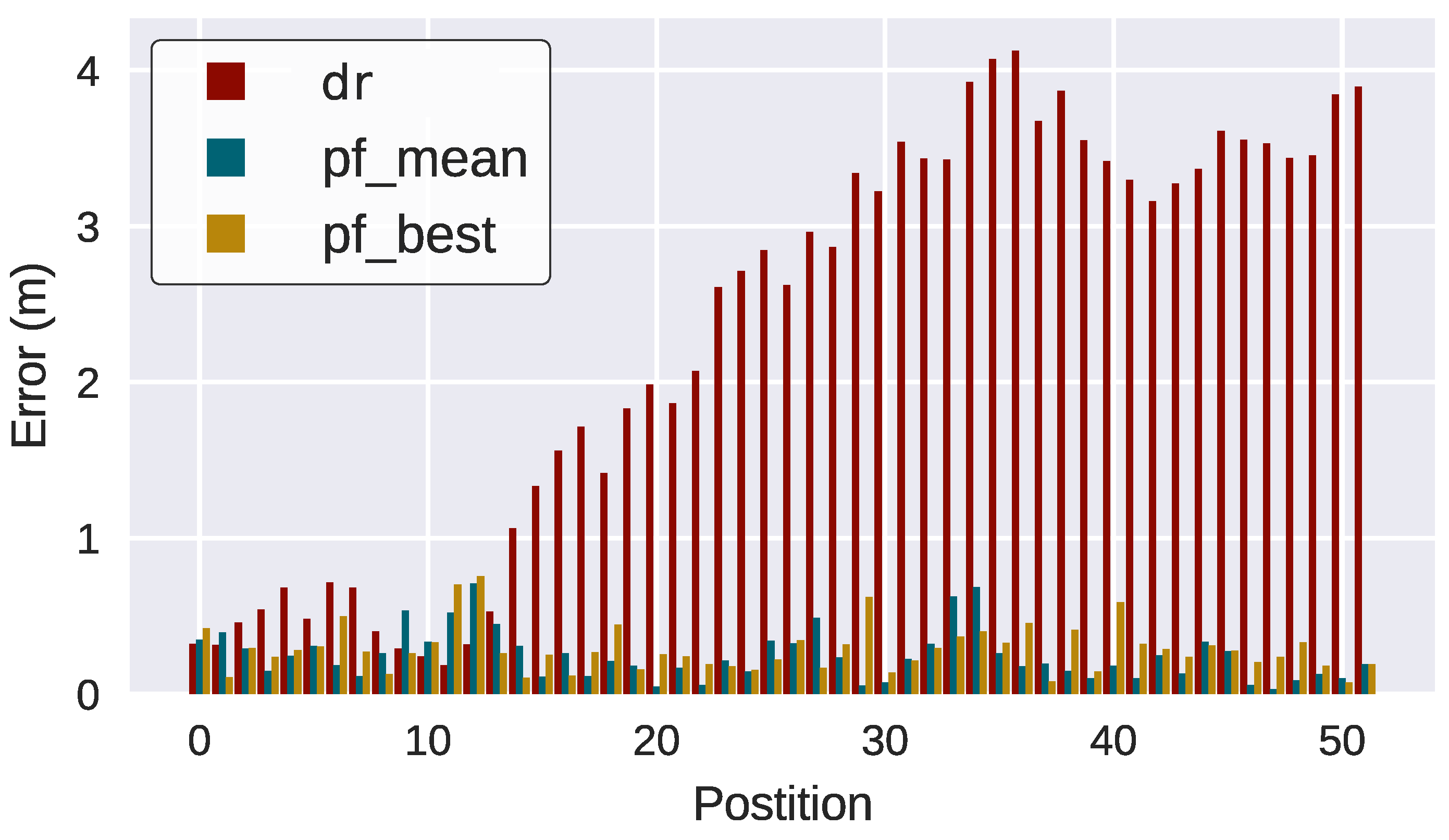

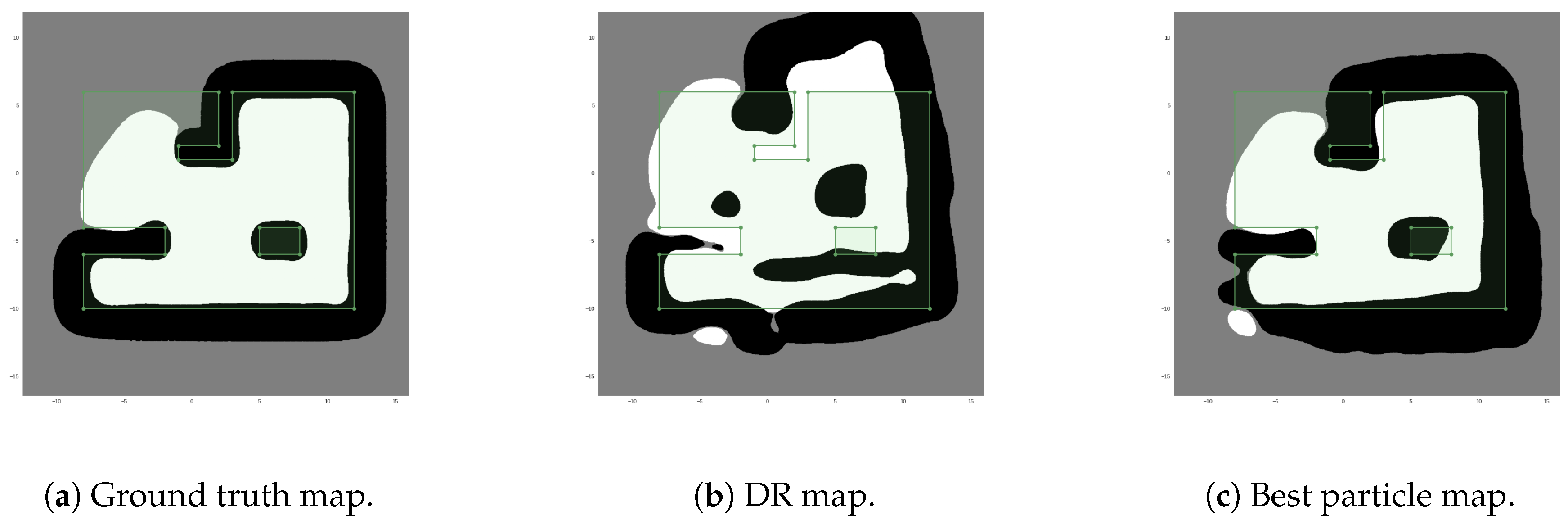

5.1. Simulated Dataset

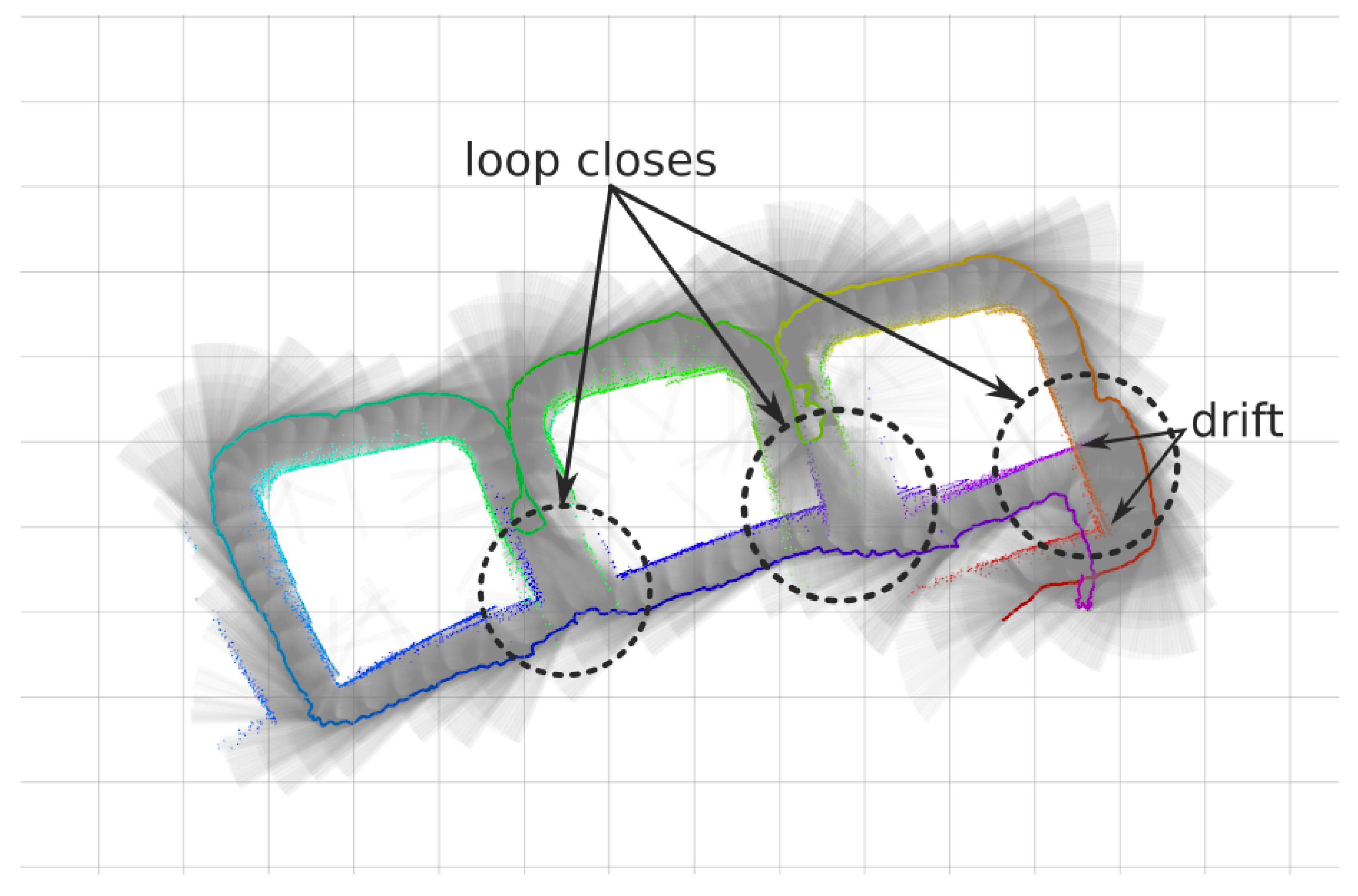

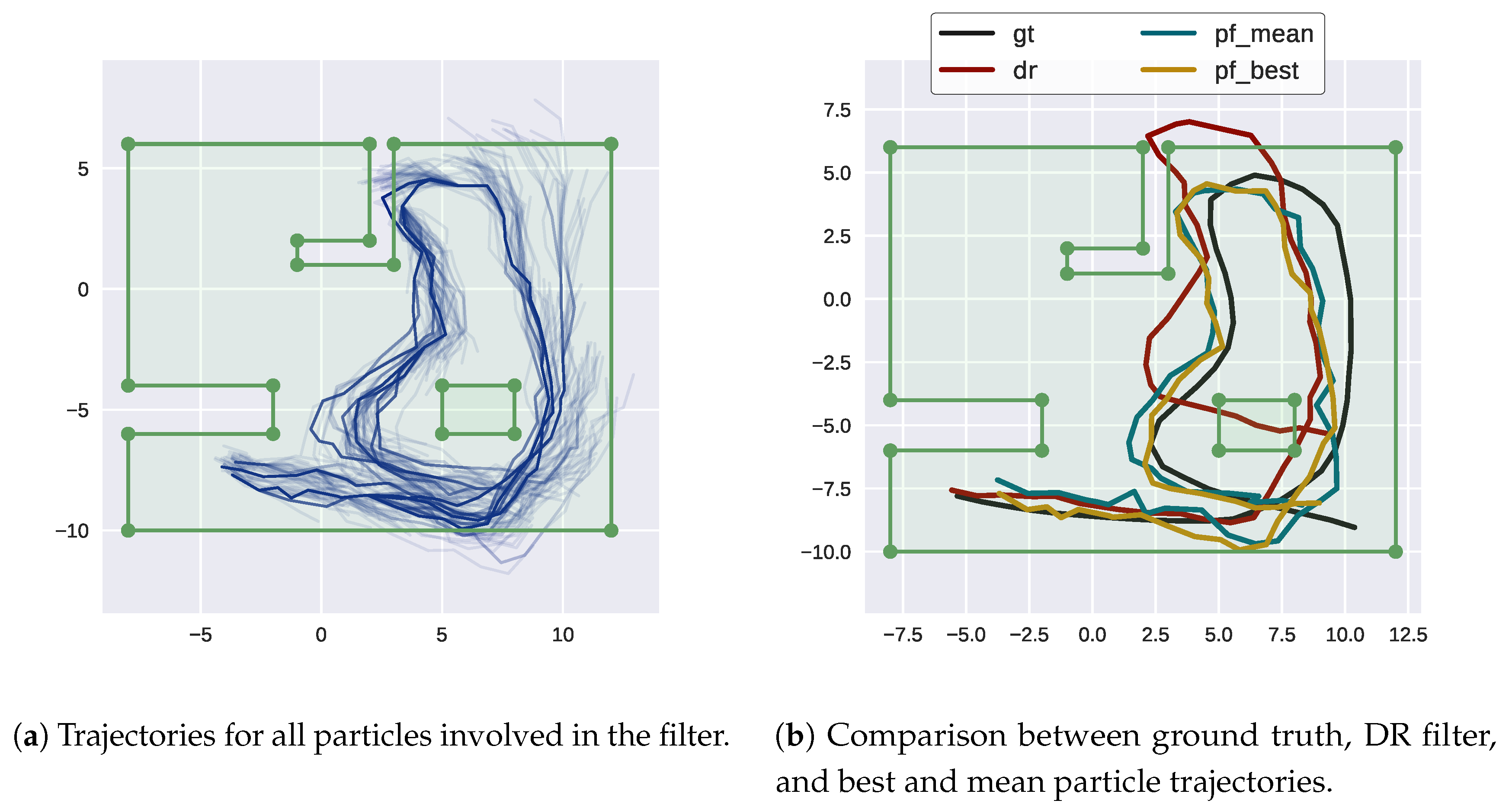

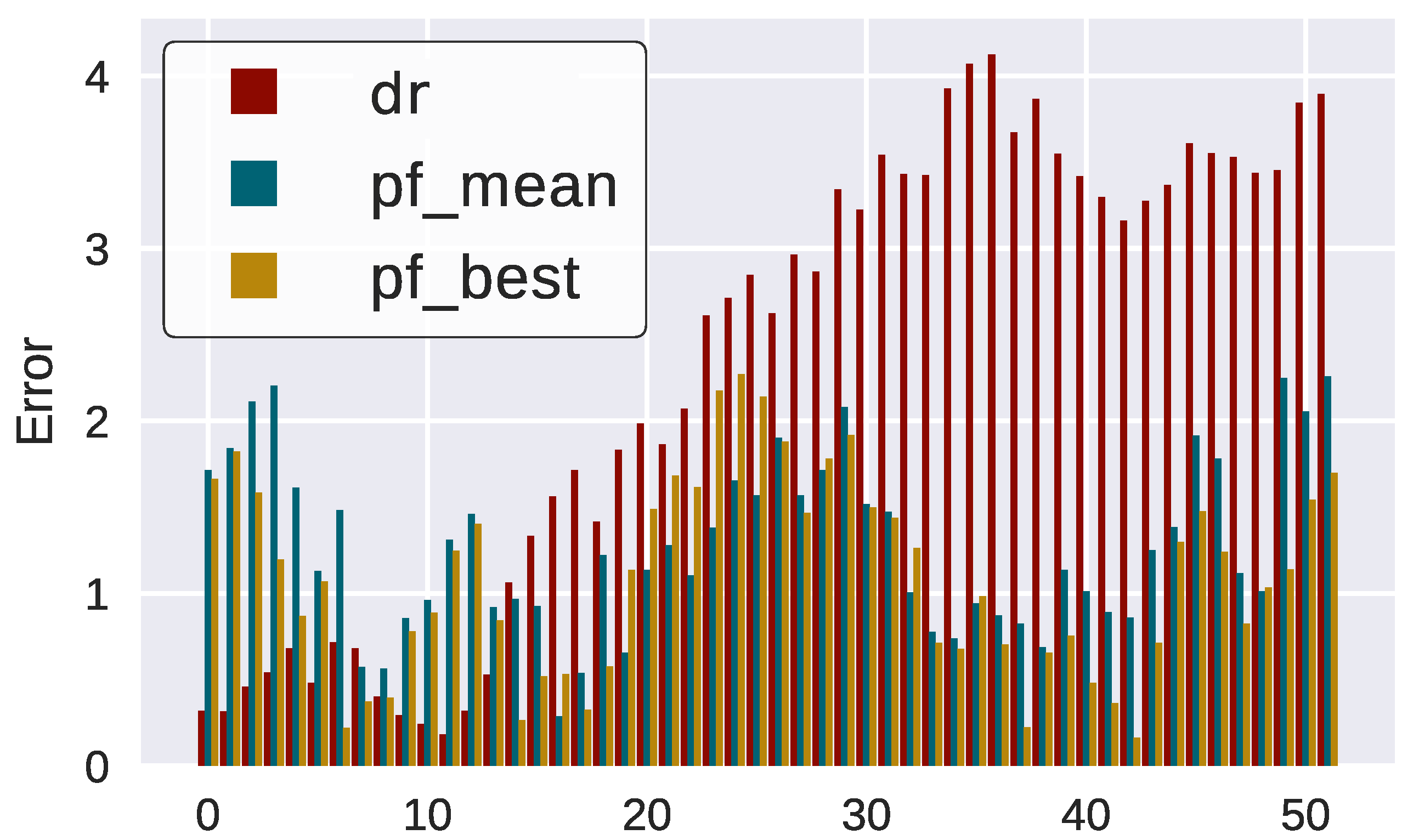

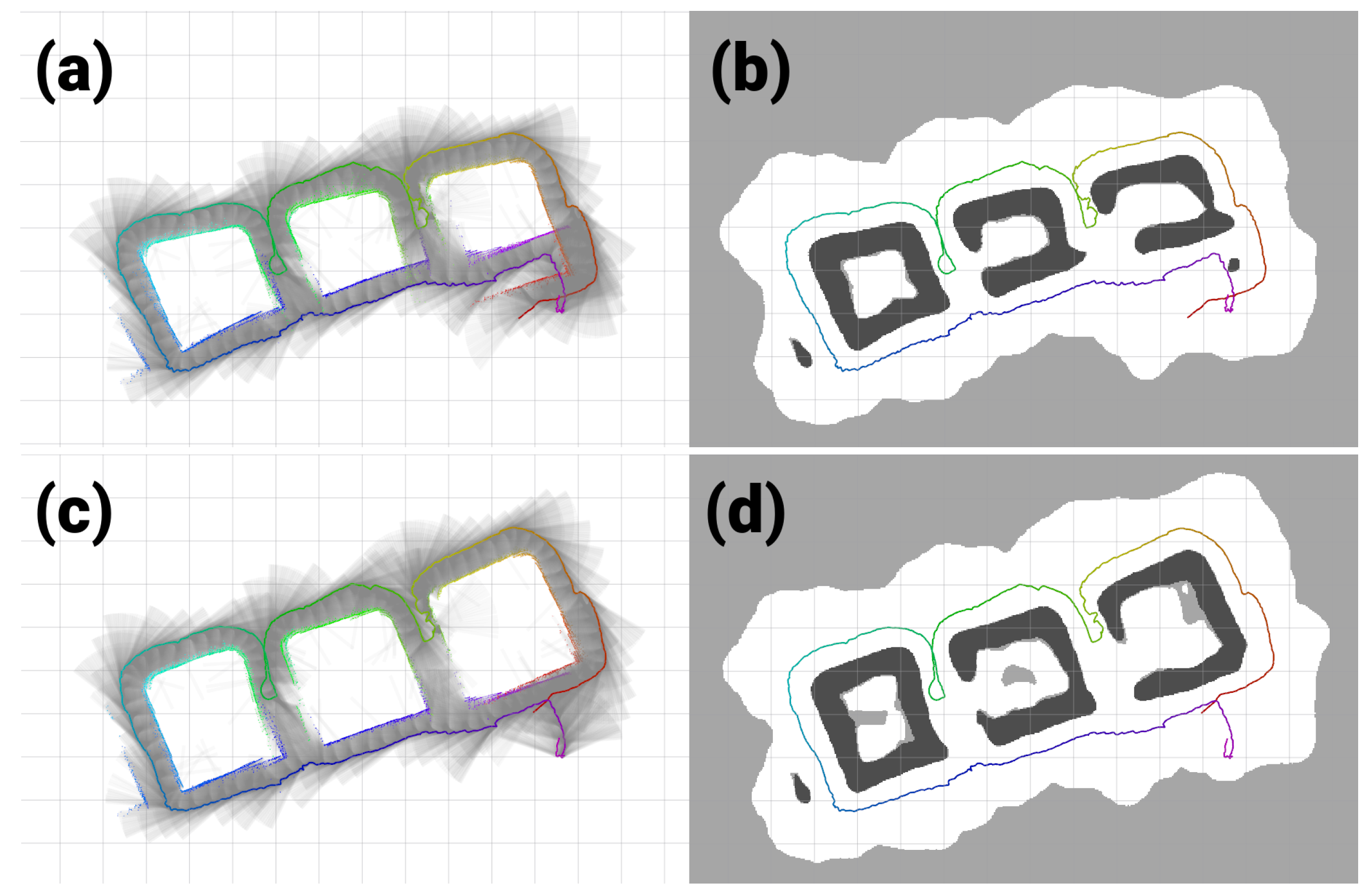

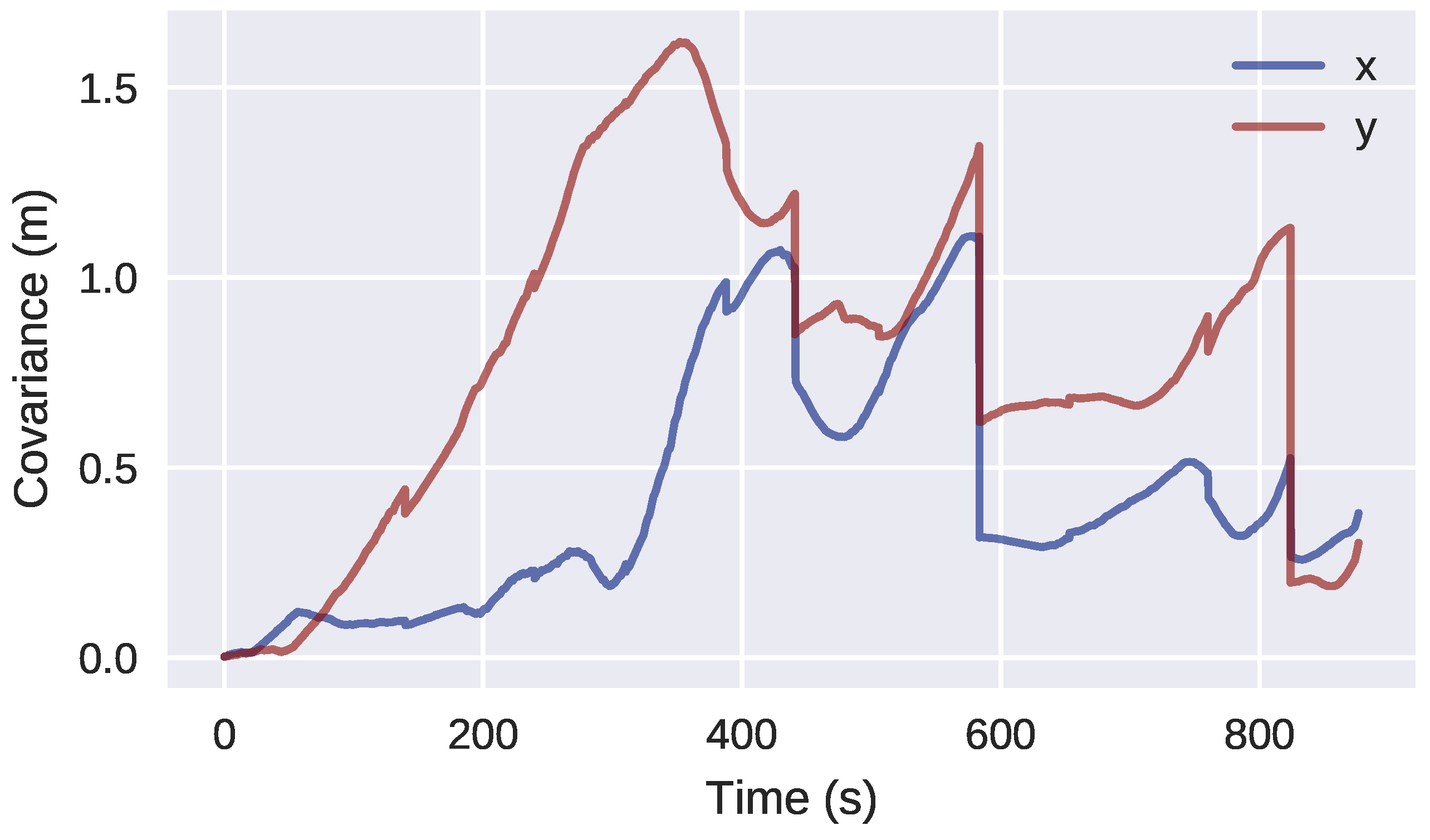

5.2. Breakwater Dataset

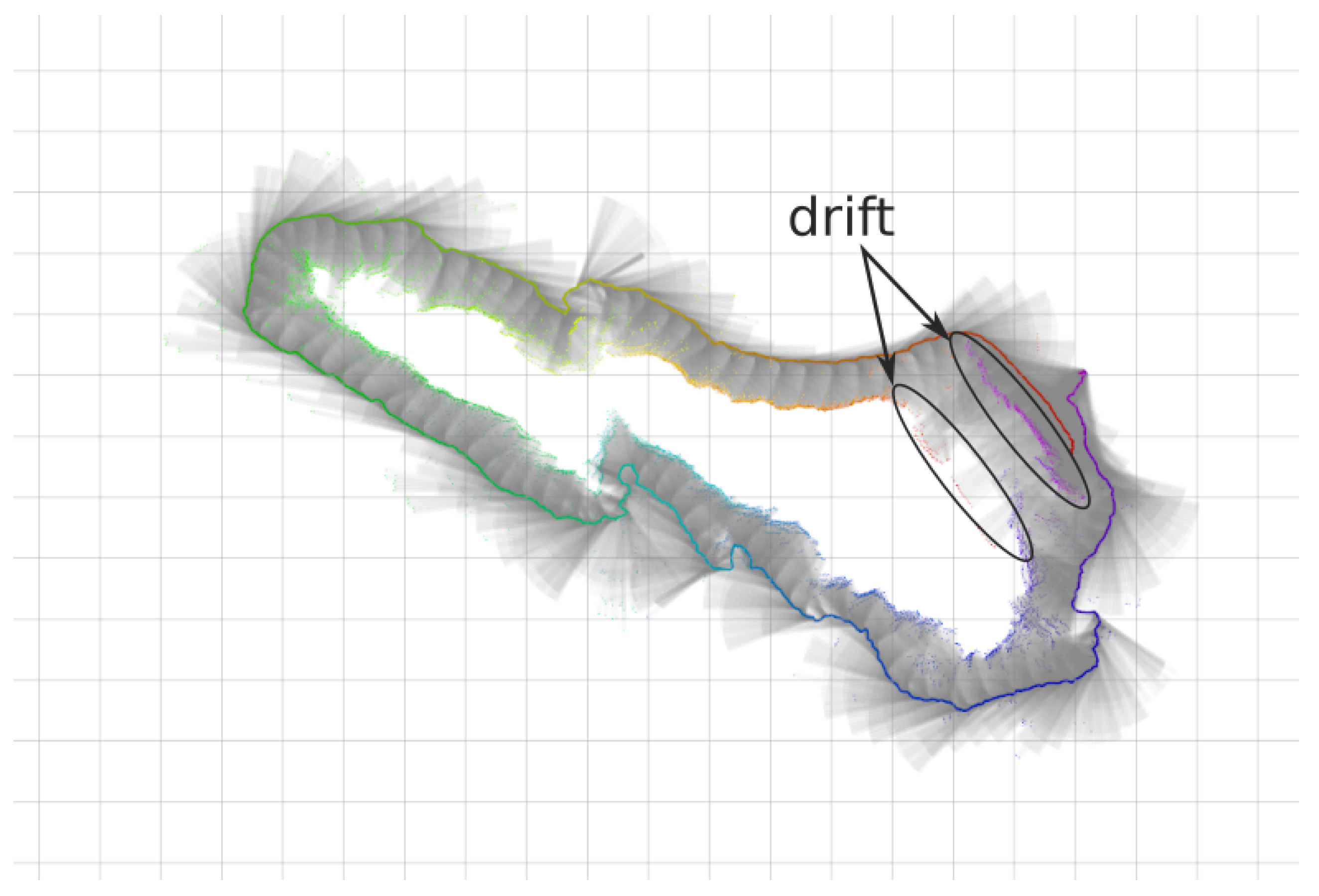

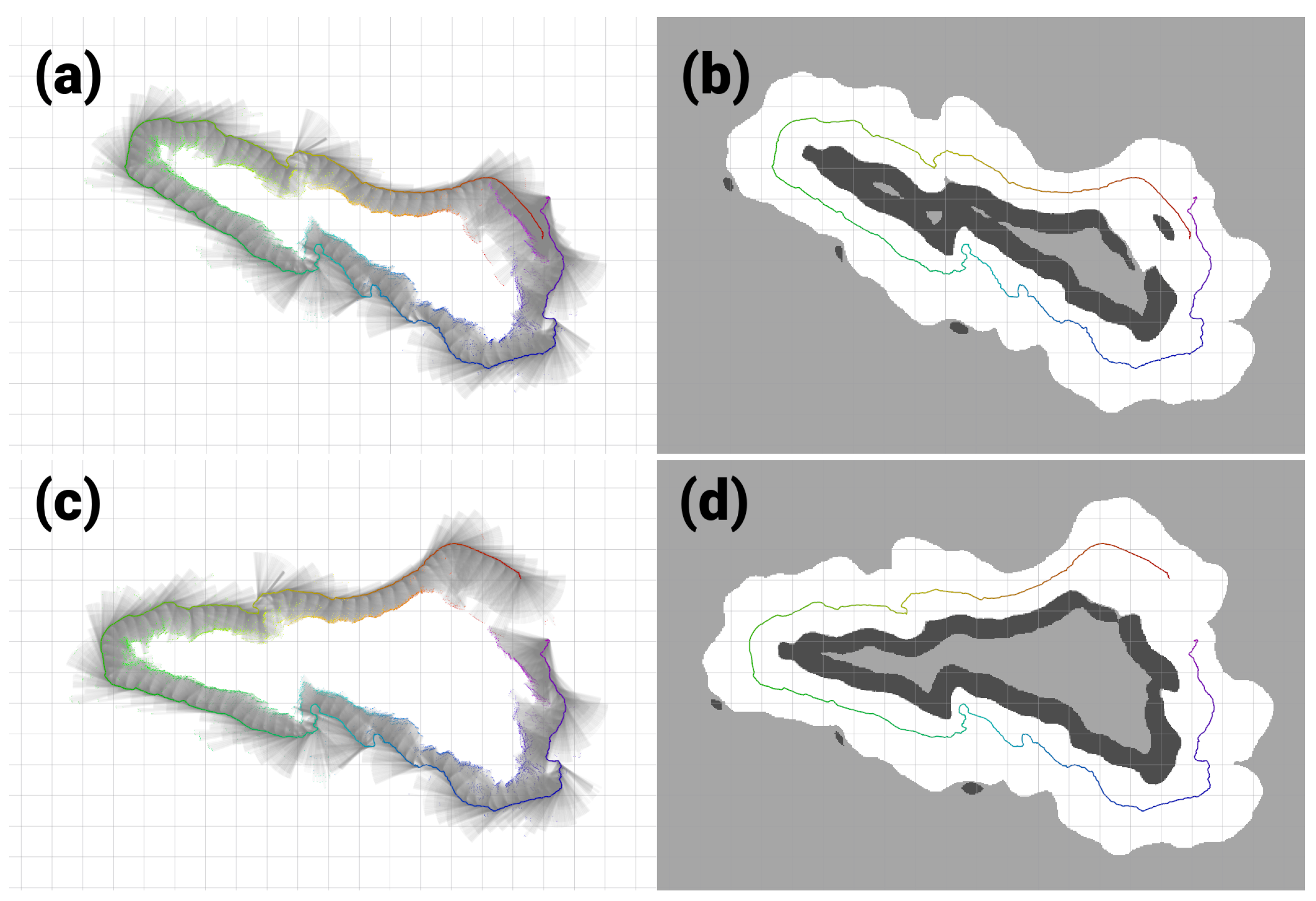

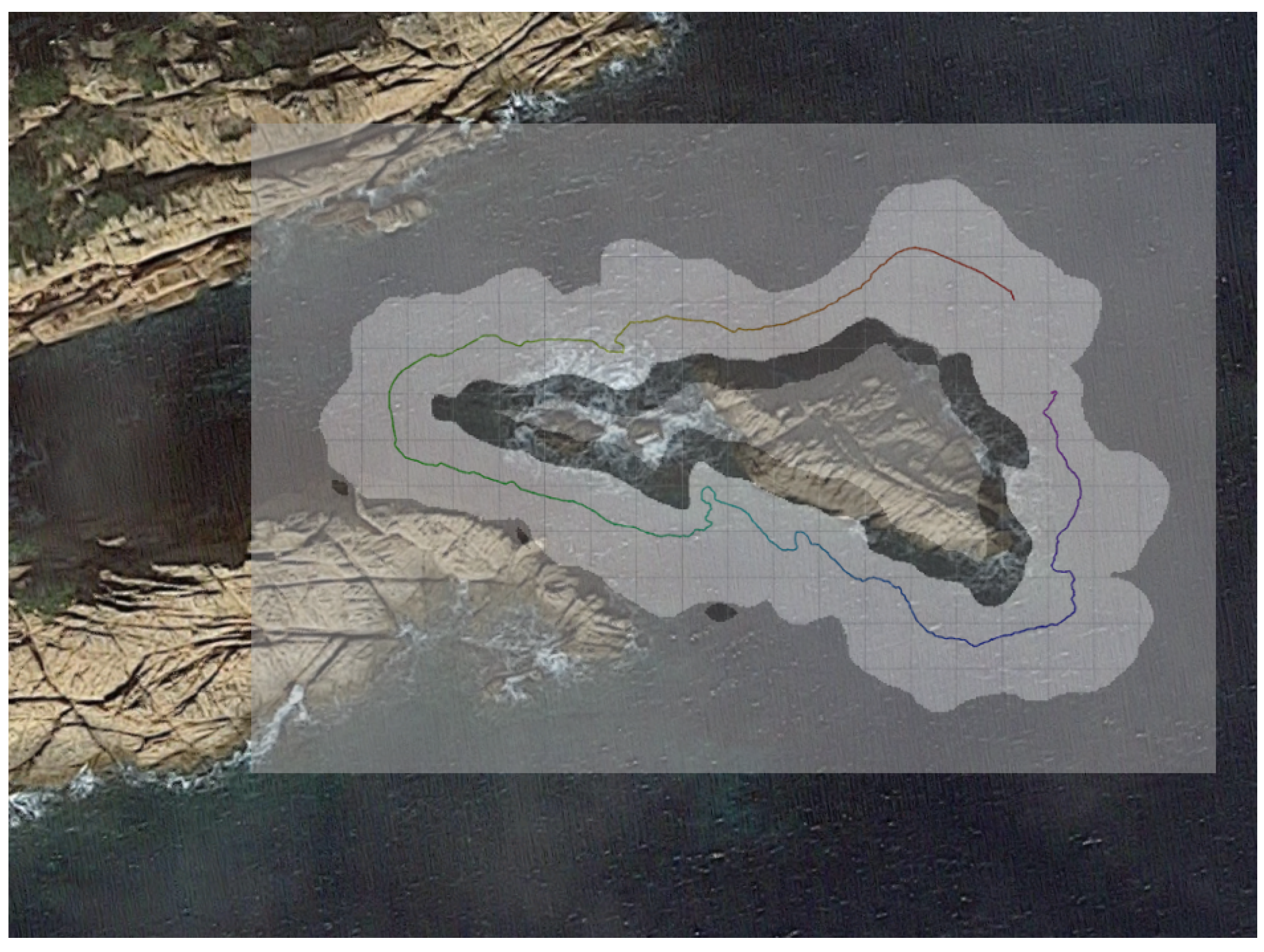

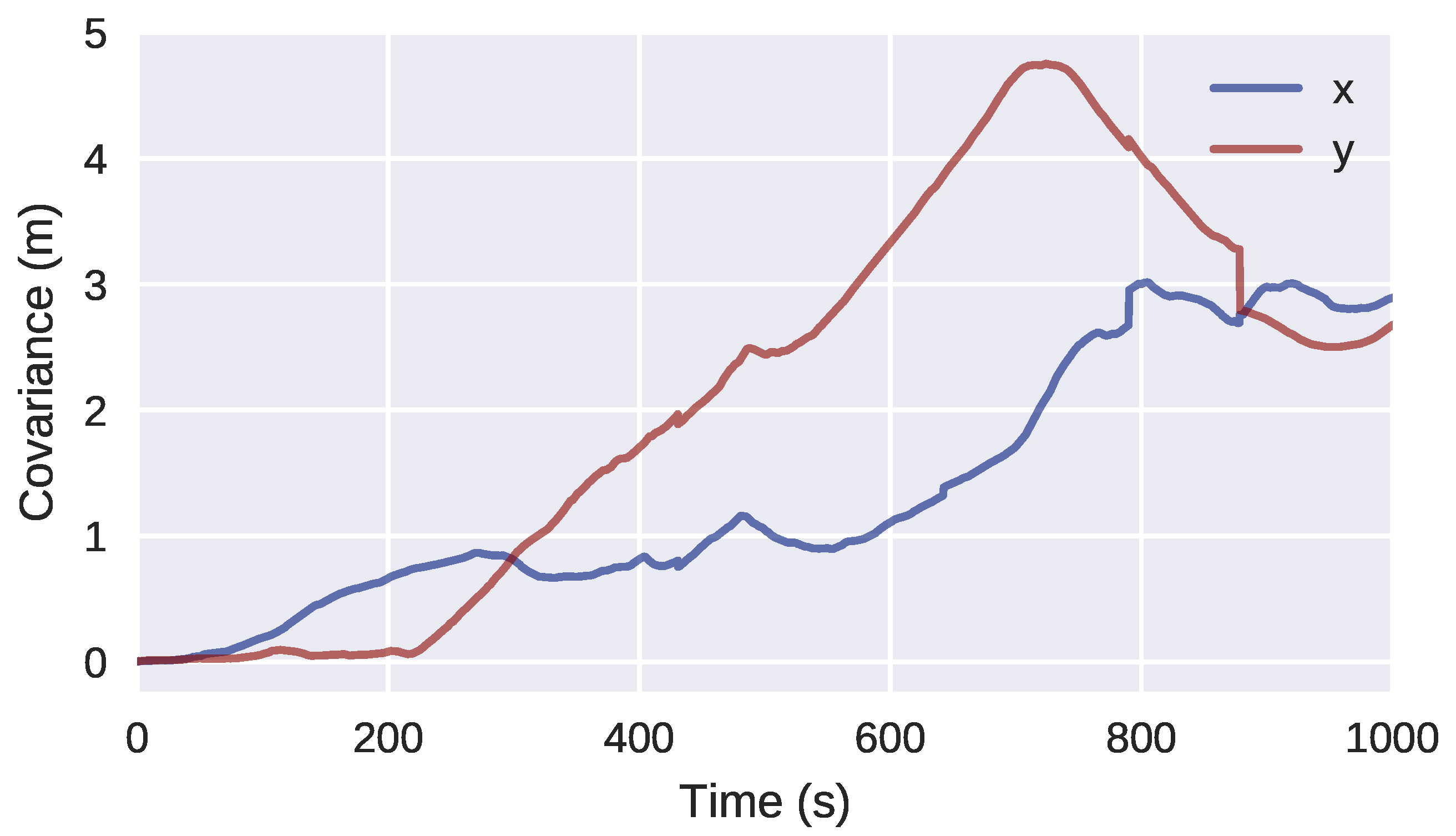

5.3. Rocks Dataset

5.4. Performance

6. Conclusions

7. Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

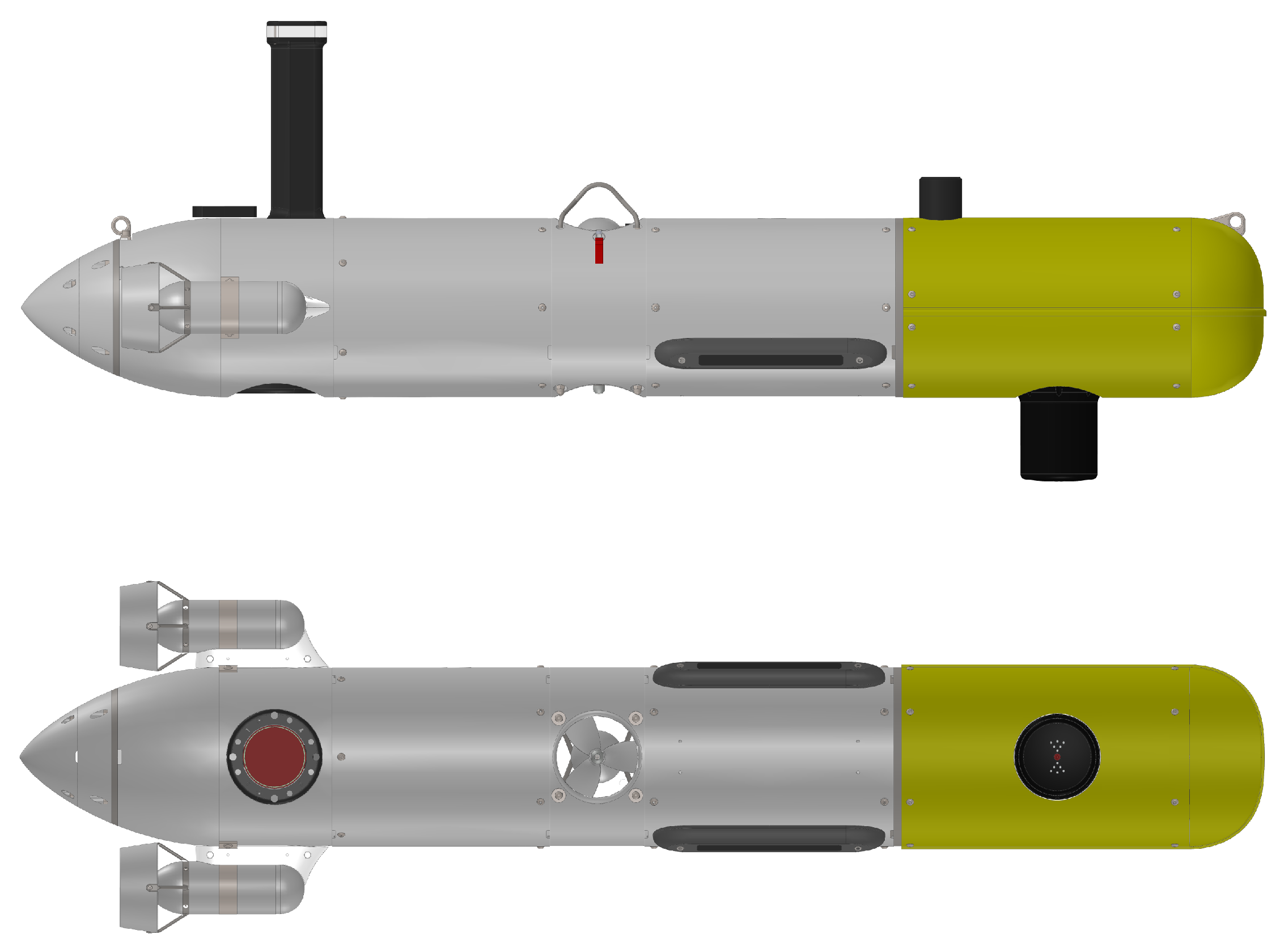

| Parameter | Simulated | Breakwater | Rocks |
|---|---|---|---|
| Feature resolution (m) | 0.5 | 1.0 | 1.0 |
| Neighbourhood radius (m) | 1.5 | 2.0 | 2.0 |
| Linear covariance (m) | 0.25 | - | - |
| Angular covariance (°) | 2 | - | - |
| Range covariance (m) | 0.05 | 0.4 | 0.4 |
| Number of particles | 40 | 40 | 40 |

| | Breakwater | Rocks |
|---|---|---|
| Time to obtain dataset | 14 min 36 s | 16 min 54 s |
| Time to run H-SLAM | 2 min 26 s | 3 min 42 s |
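The timing table can be read as a real-time factor: the dataset duration divided by the H-SLAM processing time. This is plain arithmetic on the reported figures and assumes the H-SLAM time is the total processing time for each whole dataset; the helper names are ours.

```python
def to_seconds(minutes, seconds):
    return 60 * minutes + seconds


# (dataset duration, H-SLAM processing time) in seconds, from the table
timings = {
    "Breakwater": (to_seconds(14, 36), to_seconds(2, 26)),
    "Rocks": (to_seconds(16, 54), to_seconds(3, 42)),
}

for name, (dataset_s, slam_s) in timings.items():
    print(f"{name}: {dataset_s} s of data processed in {slam_s} s "
          f"({dataset_s / slam_s:.1f}x faster than real time)")
```

This gives 876 s / 146 s = 6.0 for Breakwater and 1014 s / 222 s ≈ 4.6 for Rocks, i.e. both datasets were processed several times faster than they were recorded.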

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Vallicrosa, G.; Ridao, P. H-SLAM: Rao-Blackwellized Particle Filter SLAM Using Hilbert Maps. Sensors 2018, 18, 1386. https://doi.org/10.3390/s18051386
