A Single RF Emitter-Based Indoor Navigation Method for Autonomous Service Robots

Abstract

1. Introduction

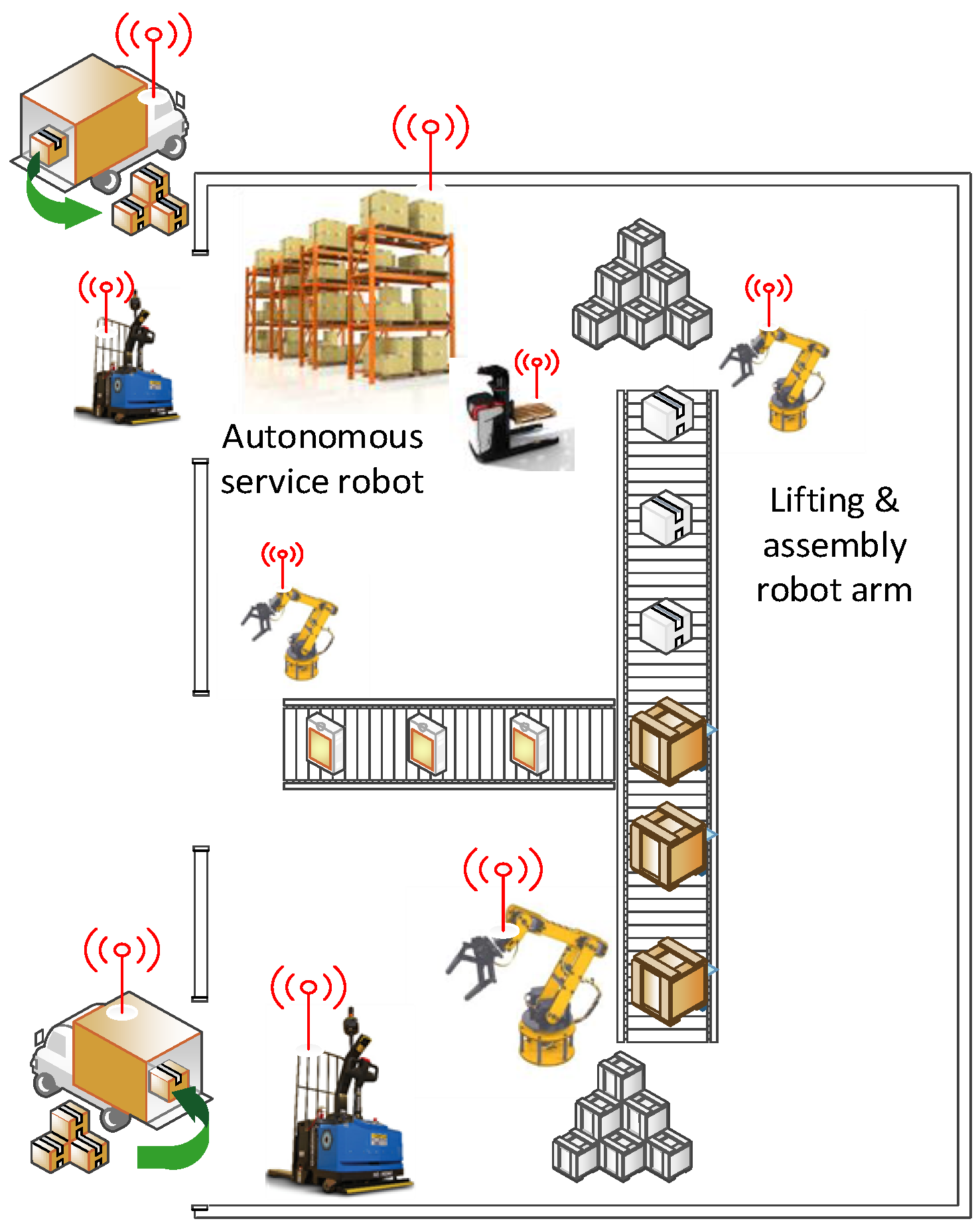

2. Motivating Scenario: Autonomous Factory Service Robot

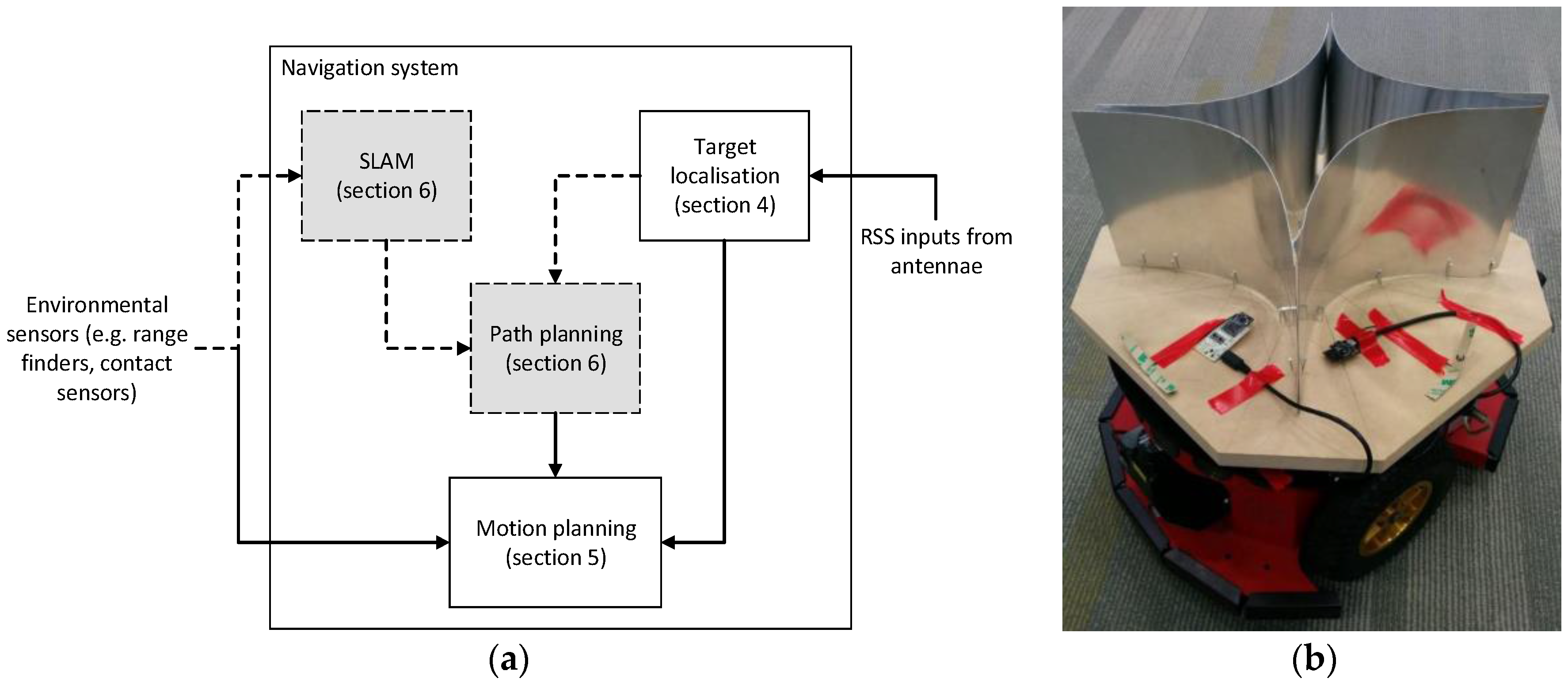

3. Autonomous Robot System Design

4. RF-Based Target Localisation

4.1. Related Works

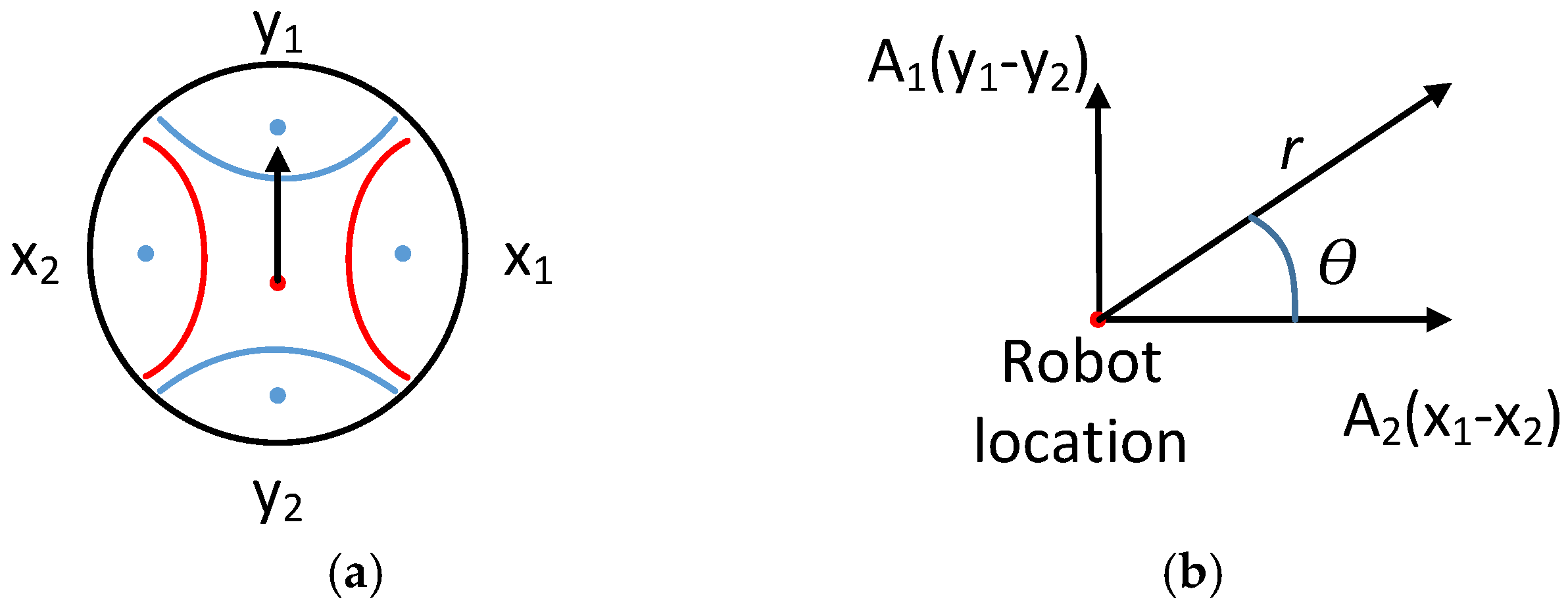

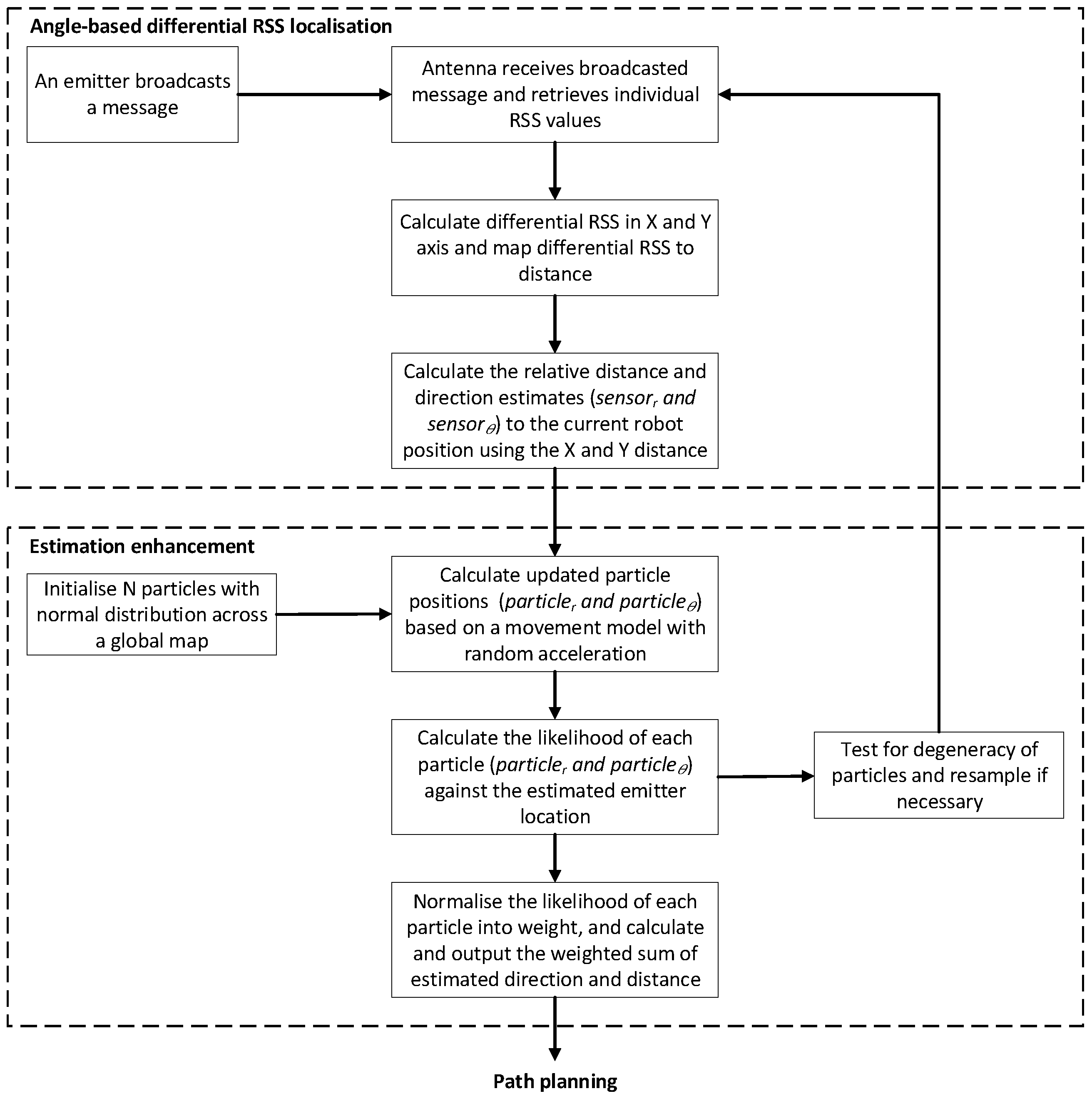

4.2. Design Concept and Methodology

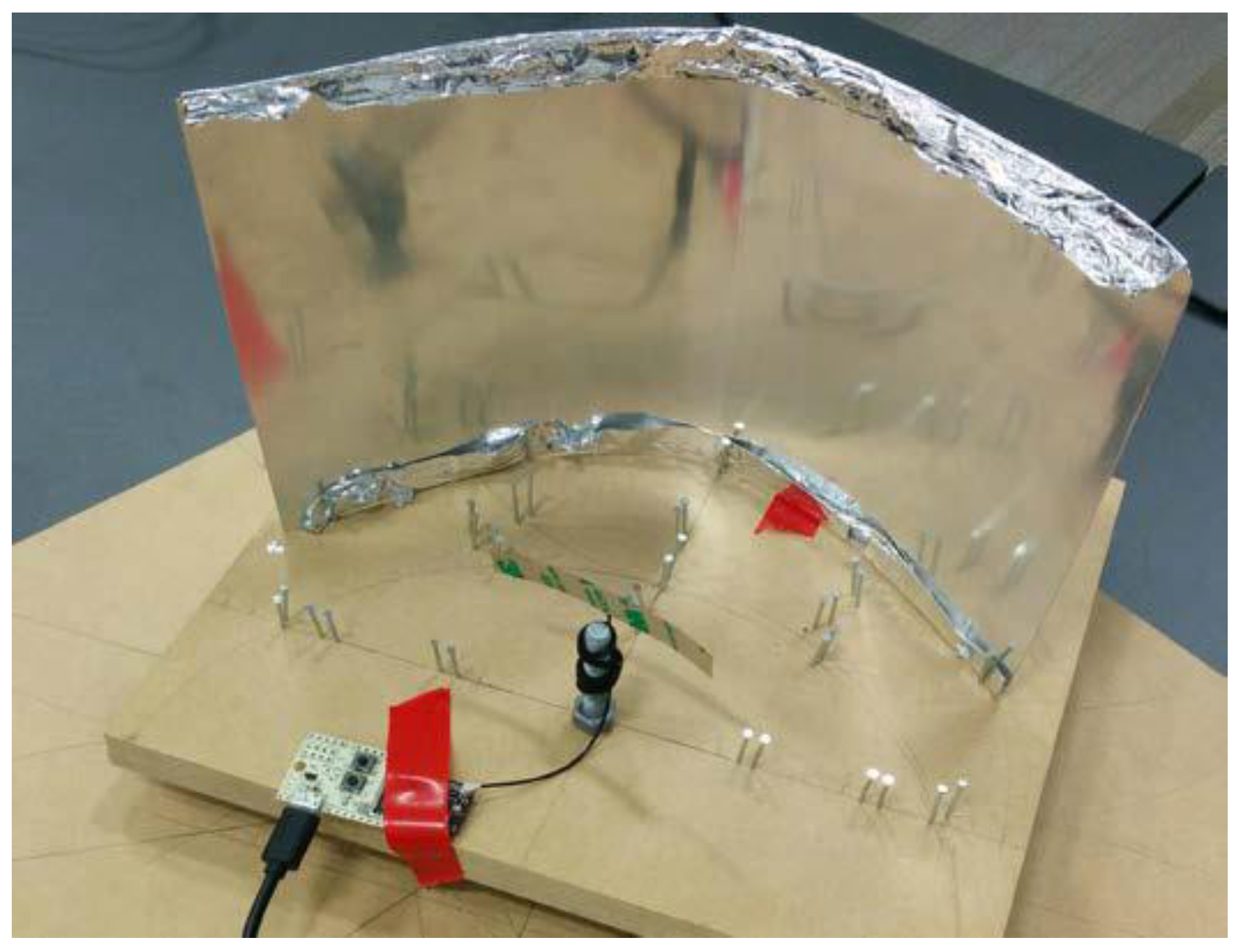

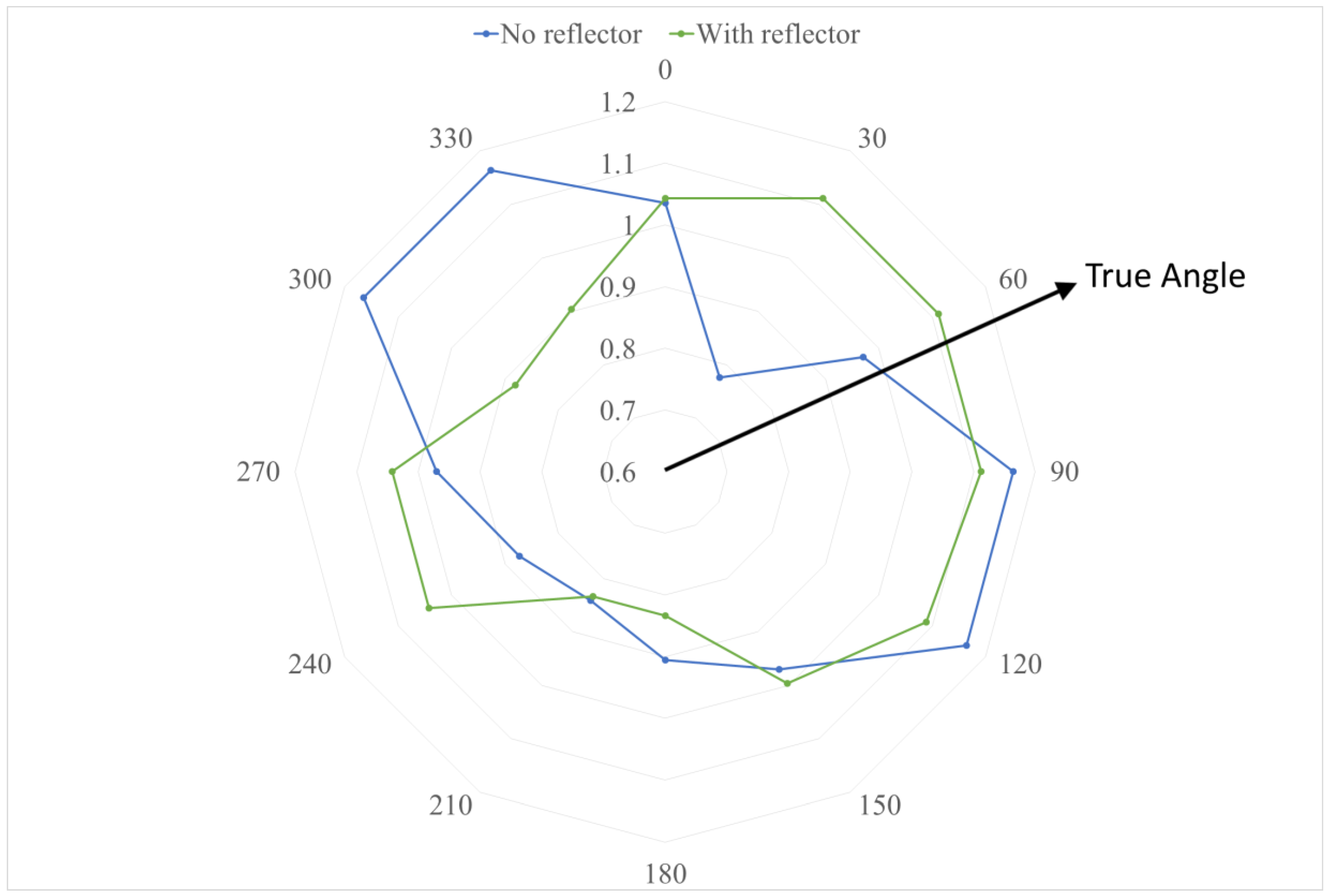

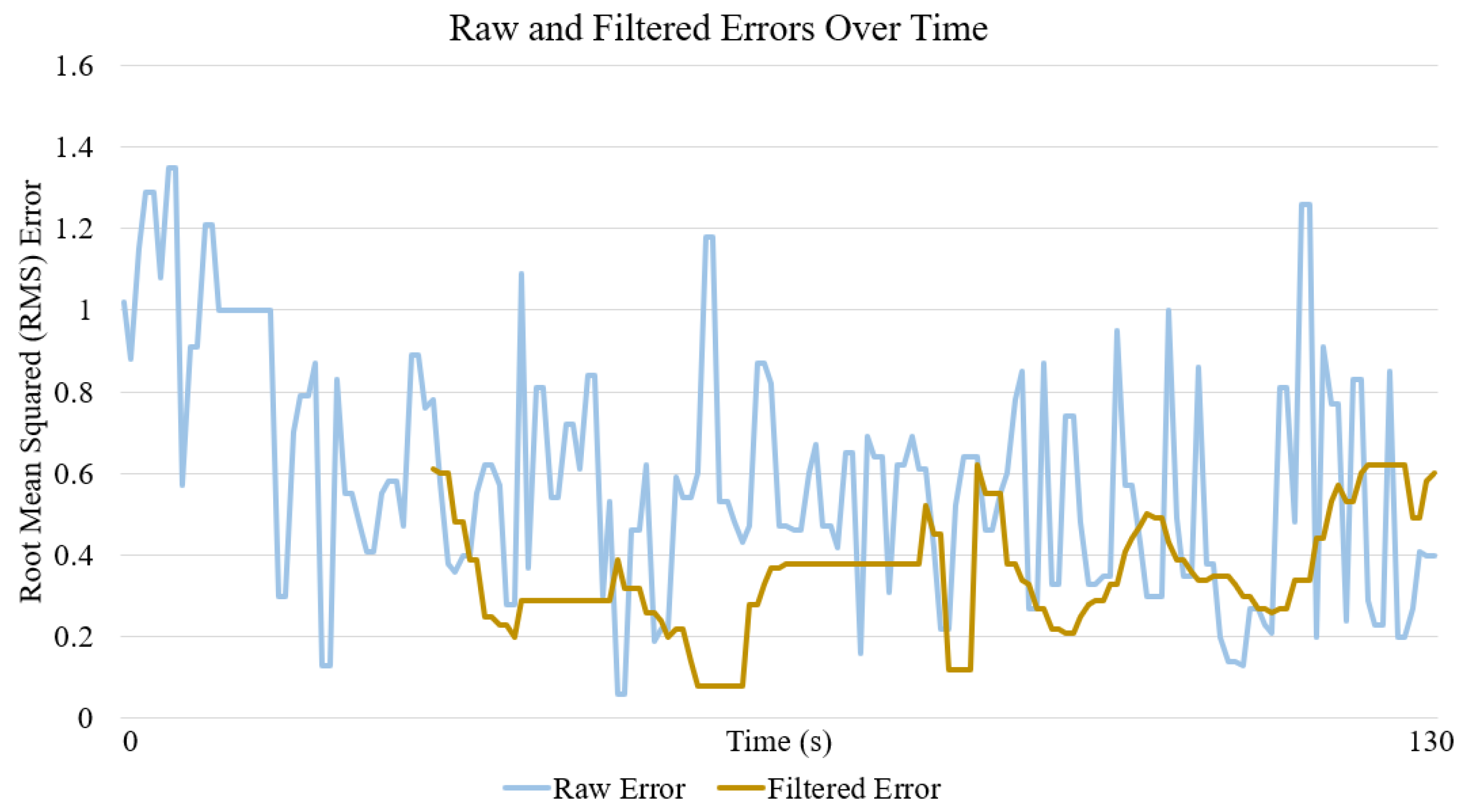

4.3. Angle-Based Differential RSS Localisation Validation

4.4. Enhancing Angle and Distance Estimation

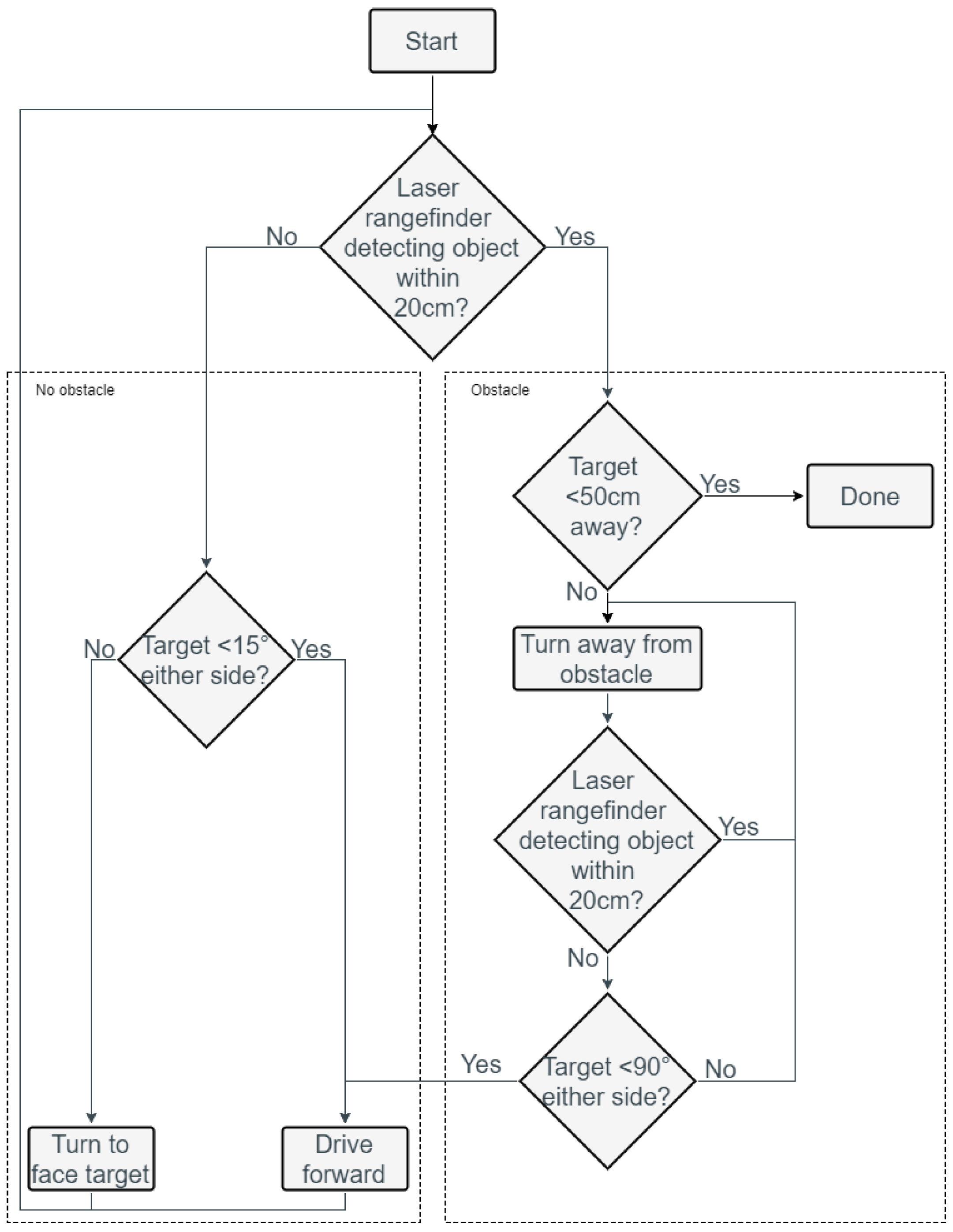

5. Motion Planning

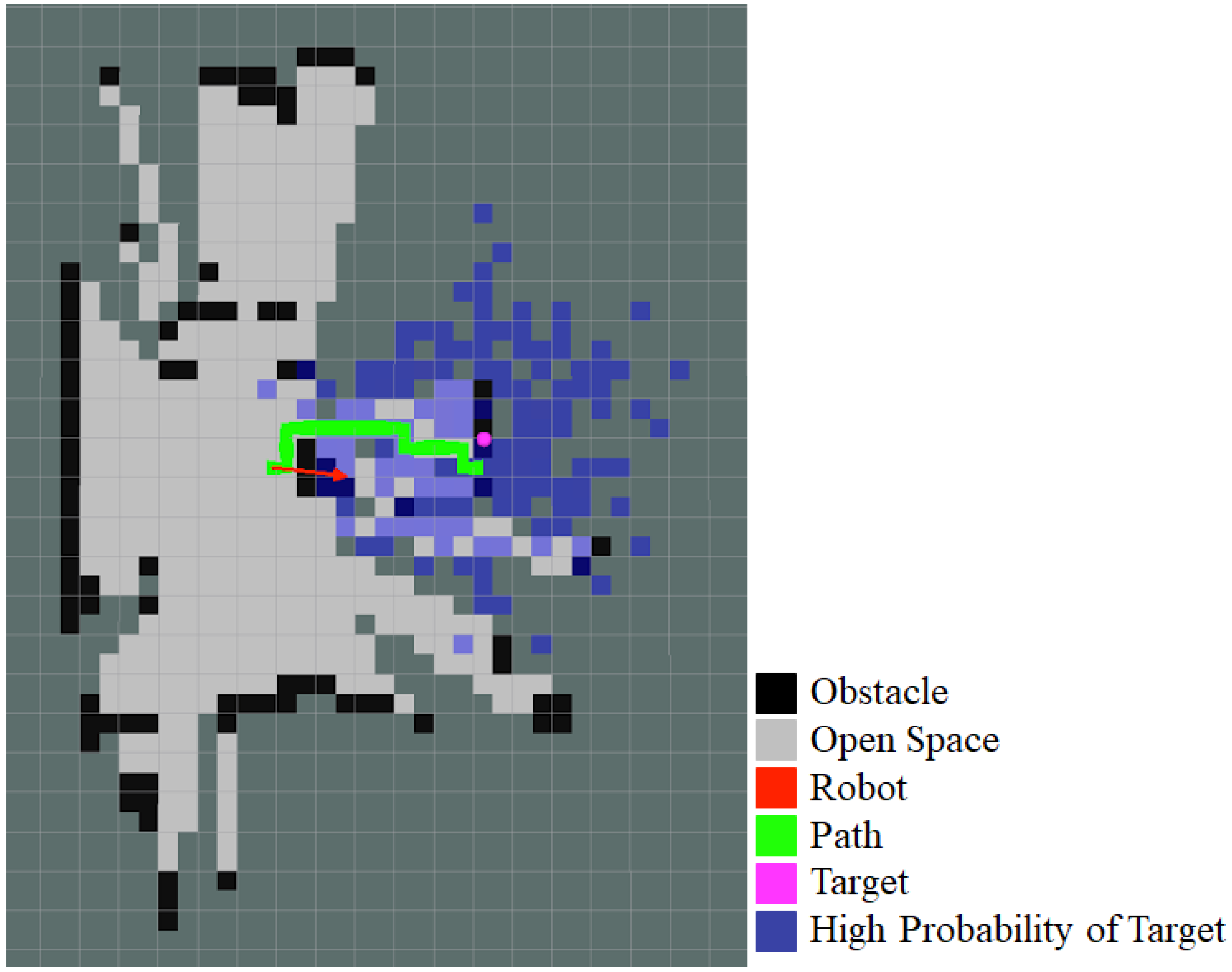

6. Mapping and Path Planning

6.1. Simultaneous Localisation and Mapping (SLAM)

6.2. Path Planning

7. Navigation Testing

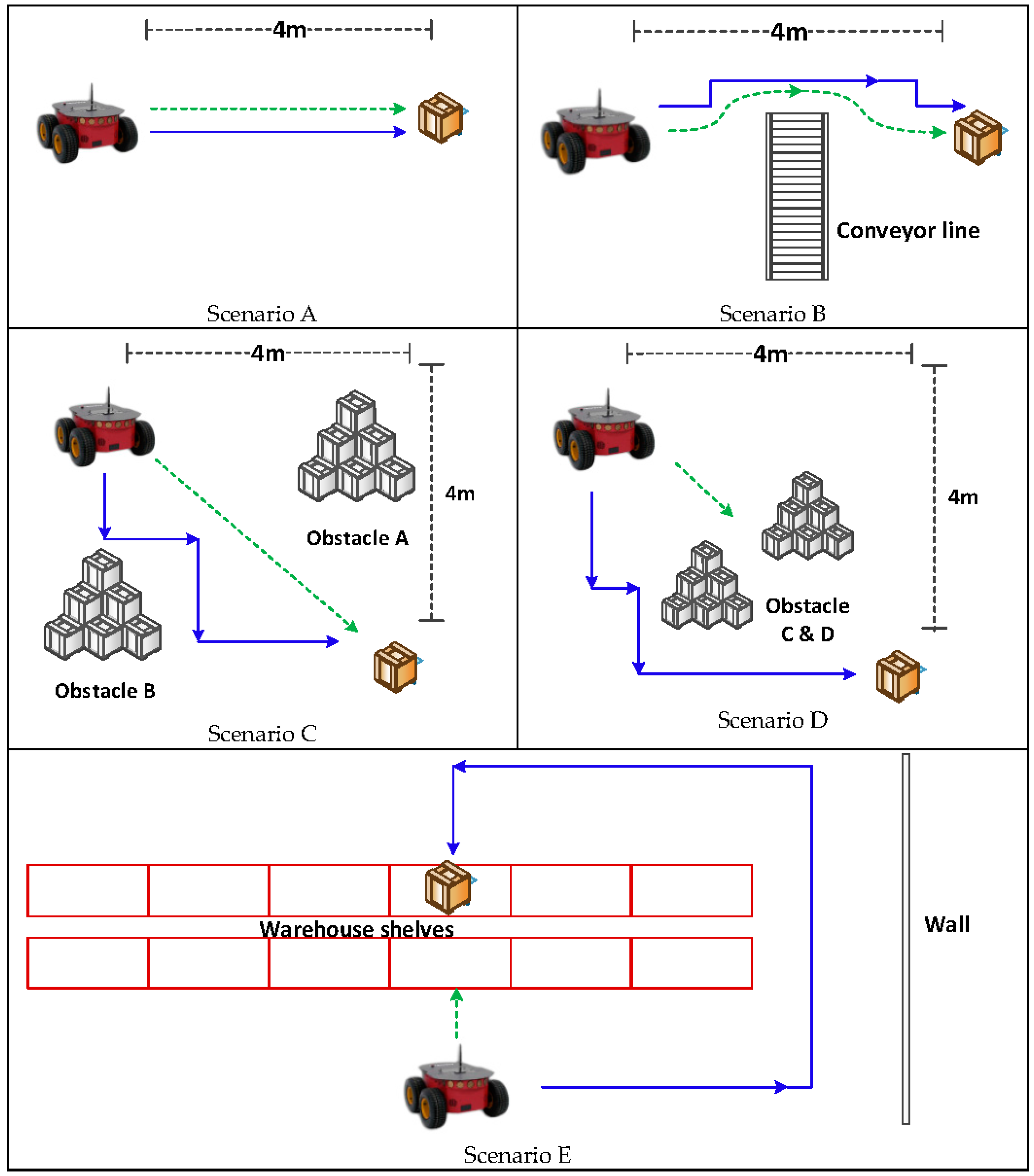

7.1. Testing Scenarios

7.2. Results and Discussions

8. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest


| Scenario | Mean Time Taken, Preliminary System (s) | Mean Time Taken, Full System (s) |
|---|---|---|
| (A) 4 m straight line | 89 | 90 |
| (B) Blocked 4 m straight line | 126 | 230 |
| (C) Partially blocked 4 m right angle | 252 | 225 |
| (D) Blocked 4 m right angle | 391 | 222 |
| (E) U-turn | Could not complete | 455 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sherwin, T.; Easte, M.; Chen, A.T.-Y.; Wang, K.I.-K.; Dai, W. A Single RF Emitter-Based Indoor Navigation Method for Autonomous Service Robots. Sensors 2018, 18, 585. https://doi.org/10.3390/s18020585
