Head pose estimation is a key step in understanding human behavior and can have different interpretations depending on the context. From the computer vision point of view, head pose estimation is the task of inferring the direction of head from digital images or range data compared to the imaging sensor coordinate system. In the literature, the head is assumed to be a rigid object with three degrees of freedom, i.e., the head pose estimation is expressed in terms of yaw, roll and pitch. Generally, the previous works on head pose estimation can be divided into two categories: (i) the methods based on 2D images; and (ii) depth data [

1]. The pose estimators based on 2D images generally require some pre-processing steps to translate the pixel-based representation of the head into some direction cues. Several challenges such as camera distortion, projective geometry, lighting or changes in facial expression exist in 2D image-based head pose estimators. A comprehensive study of pose estimation is given in [

1] and the reader can refer to this reference for more details on the literature.

Unlike the 2D pose estimators, the systems based on 3D range data or their combination with 2D images have demonstrated very good performance in the literature [

2,

3,

4,

5,

6,

7]. While most of the work on 3D pose estimation in the literature is based on non-consumer level sensors [

8,

9,

10], recent advances in production of consumer level RGB-D sensors such as the Microsoft Kinect or the Asus Xtion has facilitated the design and implementation of real-time facial performance capture systems such as consumer-level 3D pose estimators, 3D face tracking systems, 3D facial expression capture systems and 3D eye gaze estimators. In this paper, we focus on the recent 3D pose estimators and tracking systems and their application in appearance-based eye gaze estimation systems using consumer level RGB-D sensors.

1.1. Related Work on 3D Pose Estimation Using RGB-D Sensors

The 3D head pose estimation systems can be divided into three categories: (i) statistical approaches; (ii) model-based posed estimation methods; and (iii) facial feature-based pose estimation techniques [

11]. Each of these approaches comes with their specific limits and advantages. Statistical methods may need a large database for training a regressor. However, they can estimate the subject head pose on air, i.e., the system can estimate the head pose for each frame even in a shuffled video sequence. In contrast, model-based approaches generally need an offline step for subject-specific head model reconstruction with significant subject cooperation. Next, a point cloud registration technique such as rigid/non-rigid ICP should be used to register the model with depth data. In other words, unlike the supervised learning based approaches, they are generally based on tracking. Thus, re-initialization becomes a challenge. Facial feature-based pose estimation techniques try to track facial features or patches, which, in turn, can help in calculation of pose using techniques such as PnP [

10] or encoding the face 3D shape using view-invariant descriptors and infer head pose through matching [

12].

To the best of our knowledge, one of the most important works on pose estimation using consumer level

RGB-D sensors is the work of Fanelli et al. [

2,

3]. As the authors provided a ground truth data and a database for comparison, their work has become the gold standard for comparison in the literature. Their work falls in the category of statistical approaches. In their work, the authors proposed a pose estimation system based on Random Forests. For the evaluation of their system, they acquired a database of 20 subjects, which is called the Biwi head pose database. Next, they divided the database into a training and test sets. Afterwards, a commercial face tracker is used for annotation of the training set, i.e., a subject-specific head model is constructed using the commercial system to match each person’s identity and track the head in training depth frames. The commercial tracker measures a subject’s 3D head locations and orientations, which, in turn, are used to train their regression based system. Finally, some patches of fixed size from the region of the image containing the head as positives samples, and from outside the head region as negatives were randomly selected for training the system. A major limitation of this system is that it requires an offline training phase with subject cooperation. Moreover, the performance of the system in the testing phase is subject to the output of the commercial head tracker in the training phase. In [

3], the authors continued their previous work [

2] by creating a dataset of synthetic depth images of heads, and extracting the positive patches from the synthetic data, while using the original depth data to to extract negative patches. A drawback of this system is the limited number of synthetic models and negative patches for performing a regression task, without learning subject’s own head [

3]. Ref. [

13] proposed a system based on cascaded tree classifiers with higher accuracies than Fanelli et al. [

8] proposes a 3D face tracker based on particle filters. The main idea in their system is the combination of depth and 2D image data in the observation model of the particle filter.

1.2. Related Work on 3D Gaze Estimation Using RGB-D Sensors

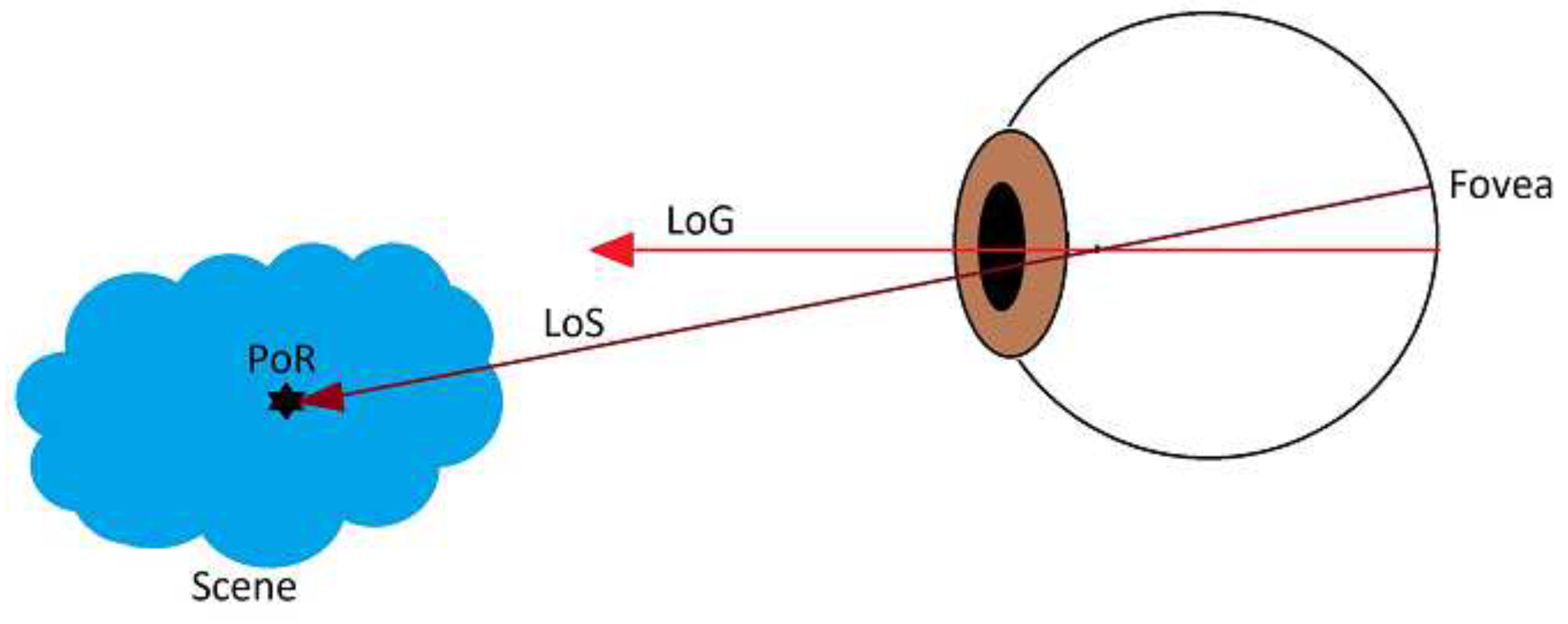

Based on the context, the term

Gaze Estimation can be interpreted as one of the following closely related concepts: (i) 3D Line of Sight (LoS); (ii) 3D Line of Gaze (LoG); and (iii) Point of Regard (PoR). Within the eyeball coordinate system, the LoG is simply the optical axis, while the LoS is the ray pointing out from fovea and eyeball rotation center. The PoR is a 3D point in the scene to which the LoS points.

Figure 1 demonstrates a simplified schematic of human eye with LoS, GoS and PoR. In the literature non-intrusive gaze estimation approaches generally fall into one of the following categories: (i) feature-based approaches; and (ii) appearance-based approaches [

14].

Feature-based approaches extract some eye specific features such as eye corner, eye contour, limbus, iris, pupil, etc. These features may be aggregated with the reflection of external light setup on the eye (called glints or Purkinje images) to infer the gaze. These methods are generally divided into two categories: (i) model-based (geometric); and (ii) interpolation-based.

Model based methods rely on the geometry of the eye. These methods directly calculate the point of regard by calculating the gaze direction (LoS) first. Next, the intersection of the gaze direction and the nearest object of the scene (e.g., a monitor in many applications) generates the point of regard. Most of the model-based approaches require some prior knowledge such as the camera calibration or the global geometric model of the external lighting setup [

15,

16,

17,

18,

19,

20].

Unlike the model-based methods, the interpolation-based approaches do not perform an explicit calculation about the LoS. Instead, they rely on a training session based on interpolation (i.e., a regression problem in a supervised learning context). In these methods, the feature vector between pupil center and corneal glint is mapped to the corresponding gaze coordinates on a frontal screen. The interpolation problem is formalized using a parametric mapping function such as a polynomial transformation function. The function is used later to estimate the PoR on the screen during the testing session. A calibration board maybe used during the training session [

21,

22,

23,

24,

25,

26,

27]. The main challenge with the interpolation-based approaches is that they can not handle the head pose movements [

14]. Notice that feature-based approaches in general need high resolution images to precisely extract the eye specific features as well as the glints. Moreover, they may require external lighting setups which are not ubiquitous. This motivates the researchers to train appearance-based gaze estimators, which rely on low quality eye images (a holistic-based approach instead of feature-based). However, appearance-based approaches generally have less accuracy.

As opposed to feature-based approaches, appearance-based methods do not rely on eye-specific features. Instead, they learn a one-to-one mapping from the eye appearance (i.e., the entire eye image) to the gaze vector. Appearance-based methods do not require camera calibration or any prior knowledge on the geometry data. The reason is that the mapping is made directly on the eye image, which makes these methods suitable for gaze estimation from low resolution images, but with less accuracy. In this context, they share some similarities with interpolation-based approaches. Similar to the the interpolation-based methods, appearance-based methods do not handle the head pose.

Baluja and Pomerleau [

28] first used the interpolation concept from image content to the screen coordinate. Their method is based on training a neural network. However, their method requires more than 2000 training samples. To reduce such a large number of training examples, Tan et al. [

29] proposed using a linear interpolation and reconstructed a test sample from the local appearance manifold within the training data. By exploiting this topological information of eye appearance, the authors reduced the training samples to 252. Later, Lu et al. [

30] proposed a similar approach to exploit topological information encoded in the two-dimensional space of gaze space. To further reduce the number of the training data, Williams et al. [

31] proposed a semi-supervised sparse Gaussian process regression method S3GP. Note that most of these methods assumed a fixed head pose. Alternatively, some other researchers used head mounted setups, but these methods are no longer non-intrusive [

32,

33].

With the main intention of designing a gaze estimator robust to head pose, Funes and Odobez [

7,

34] proposed the first model-based pose estimator by building a subject-specific model-based face tracker using Iterative Closest Point (ICP) and 3D Morphable Models. Their system is not only able to estimate the pose, but is also able to track the face and stabilize it. A major limitation of their method is the offline step for subject specific 3D head model reconstruction. For this purpose, they manually placed landmarks (eye corners, eyebrows, mouth corners) on RGB image of the subject, and consequently added an extra term to the cost function in their ICP formulation. In other words, their ICP formulation is supported by a manual term. Moreover, the user has to cooperate with the system and turn their head from left to right. Recently, the authors proposed a more recent version of their system in the work of [

11] without the need for manual intervention.

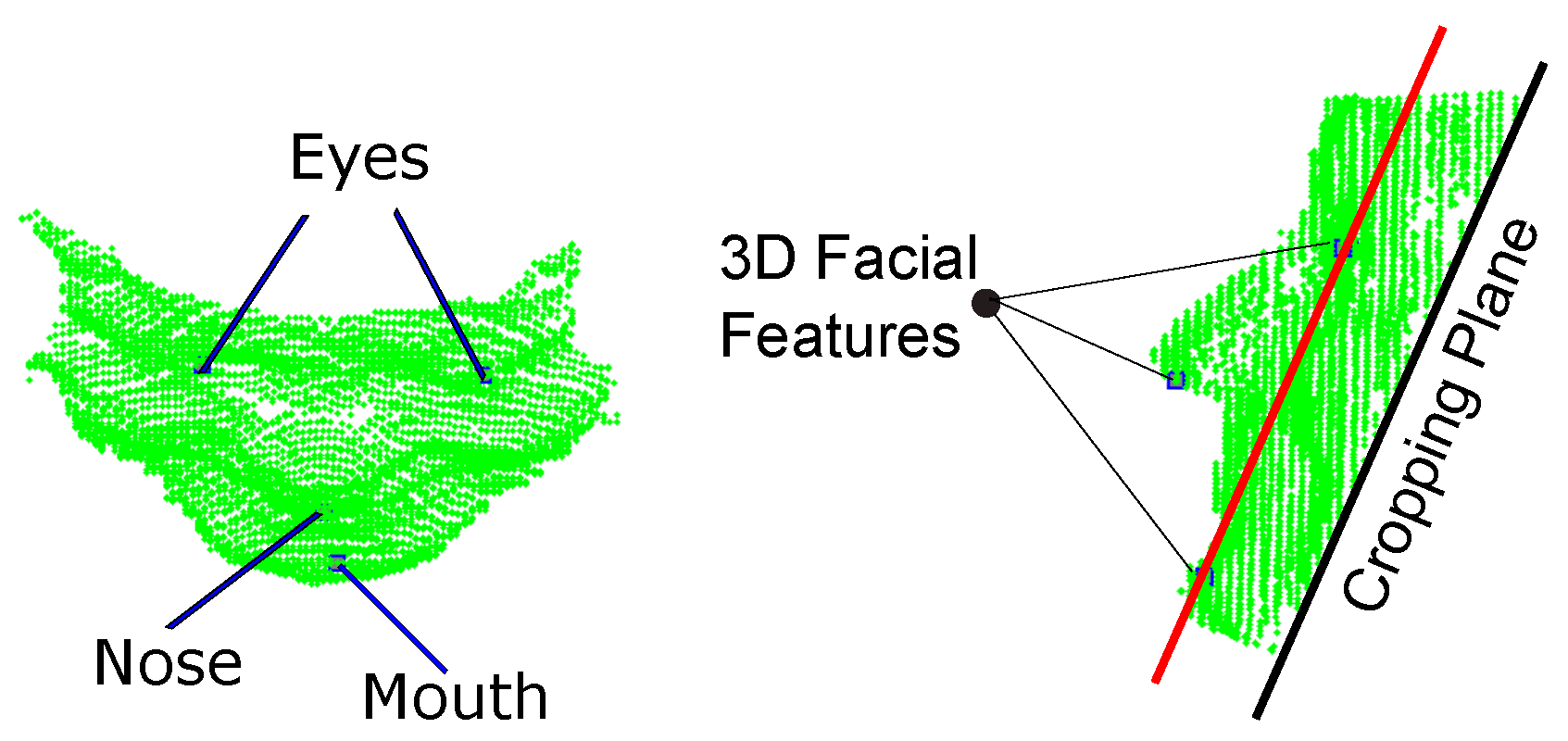

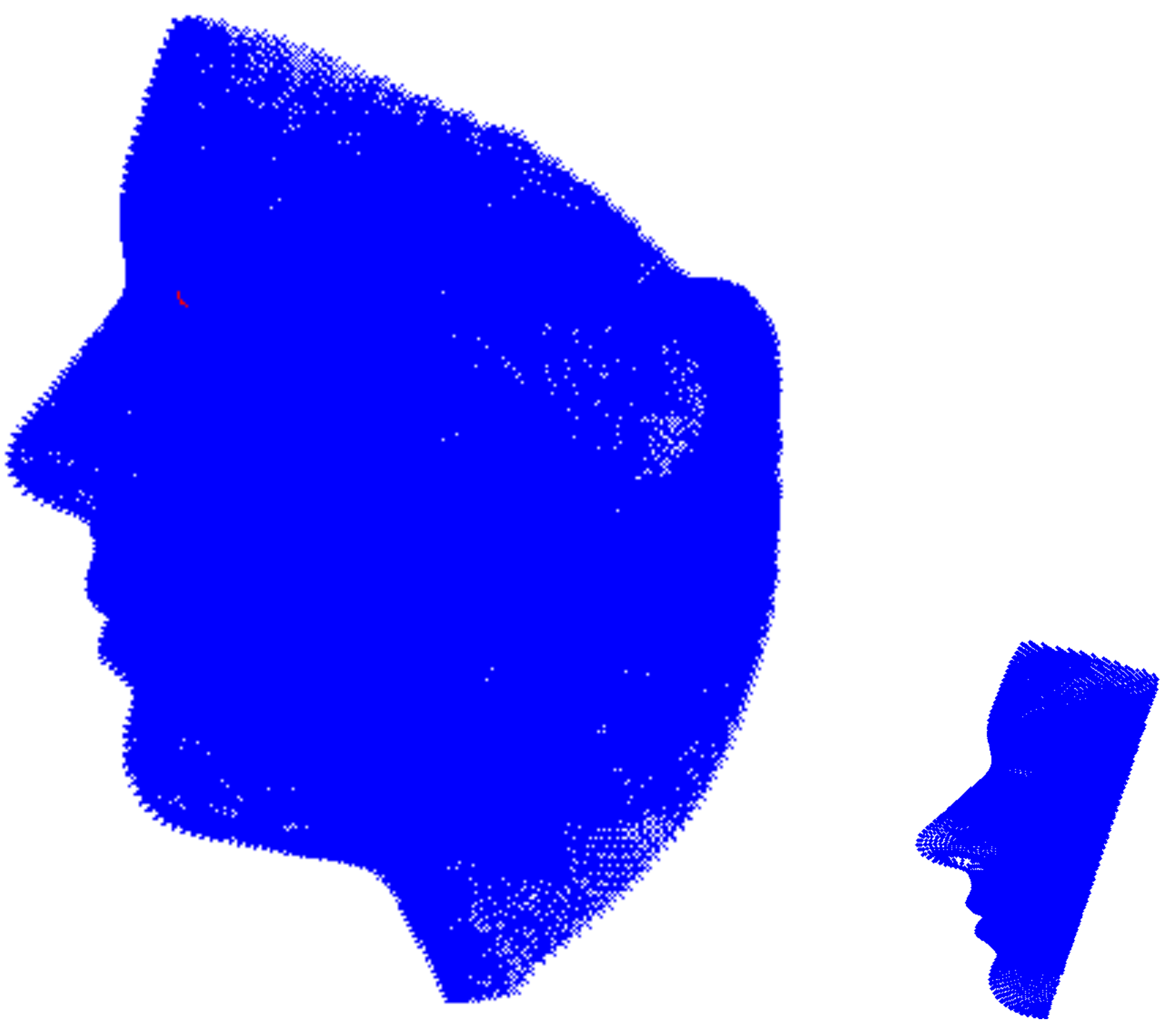

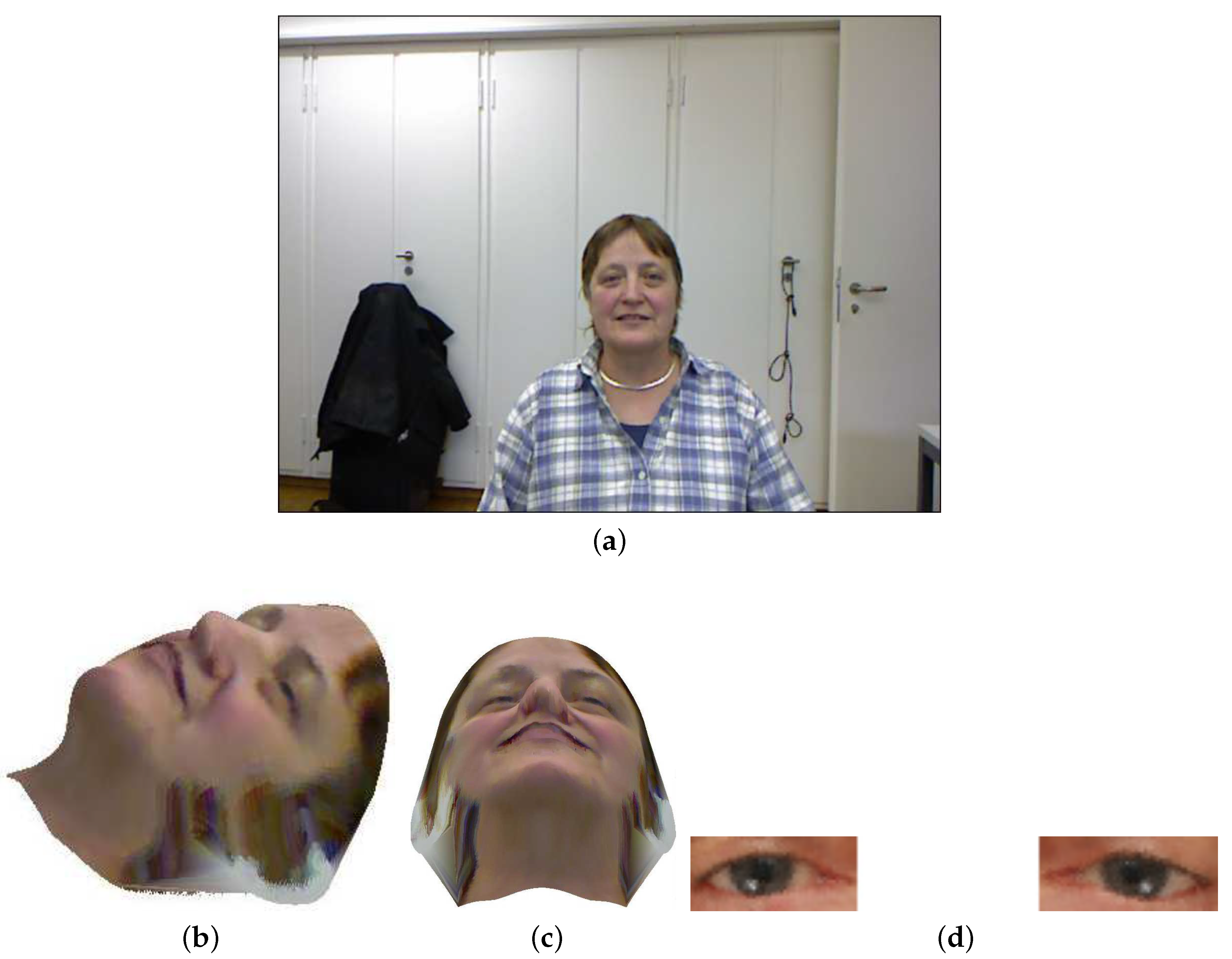

1.3. Contribution of the Proposed Approach

Unlike [

2,

3,

7,

34], our proposed system does not require any commercial system to learn a subject’s head or any offline step. A key contribution of our approach is to propose a method to automatically learn a subject’s 3D face rather than the entire 3D head. Consequently, we no longer need subject’s cooperation (i.e., turning their head from left to right), which is important in previous works for model-based pose estimation systems. In addition, unlike [

7], our system does not require any manual intervention for model reconstruction. Instead, we rely on Haar features and boosting for facial feature detection, which, in turn, can be used for face model construction. Note that we use only one RGB frame for model reconstruction. The tracking step is based on depth frames only. After learning a subject’s face, the pose estimation task is performed by a fully automatic, user non-cooperative and generic ICP formulation without any manual term. Our ICP formulation is robustified with Tukey functions in tracking mode. Thanks to the Tukey functions, our method successfully tracks a subject face in challenging scenarios. The outline of the paper is as follows: The method details are explained in

Section 2. Afterwards, the experimental results are discussed in

Section 3. Finally, the conclusions are drawn in

Section 4.

, very good;

, very good;  , good;

, good;  , weak.

, weak.

, very good;

, very good;  , good;

, good;  , weak.

, weak.

, very good;

, very good;  , good;

, good;  , weak.

, weak.

, very good;

, very good;  , good;

, good;  , weak.

, weak.

, very good;

, very good;  , good;

, good;  , weak.

, weak.

, very good;

, very good;  , good;

, good;  , weak.

, weak.

, good;

, good;  , weak.

, weak.

, good;

, good;  , weak.

, weak.

, very good;

, very good;  , good;

, good;  , weak.

, weak.

, very good;

, very good;  , good;

, good;  , weak.

, weak.