1. Introduction

A star sensor is an electronic measurement system that measures the three-axis attitude of its carrier satellite, using stars as the measurement objects and a photodetector as its core component. Owing to their strong autonomy, good concealment, high reliability, and high precision, star sensors have become important instruments for measuring spacecraft attitude in the aerospace field. They are widely used on Earth observation satellites and space exploration spacecraft. In recent years, with the rapid development of aerospace science and technology, dynamic high-precision attitude measurement has become even more important for space target surveillance and for geosynchronous observation of spacecraft constellations [1,2,3,4].

Dynamic high-precision attitude measurement has become indispensable for aircraft [5,6]. Because this technique requires images that provide extremely accurate star point coordinates, research on correcting star sensor imaging errors is ongoing [7]. A star sensor introduces complex imaging errors during dynamic imaging, which makes the accuracy of attitude measurement difficult to guarantee [8,9]. These imaging errors arise from the relative motion between the starry sky and the camera [10]; star point positioning errors include both static [8,11] and dynamic systematic errors [12]. All of these errors make the star point positions inaccurate and thus affect the measured attitude of the satellite platform.

Methods for correcting these errors continue to be developed. At the theoretical level, Jia et al. [13] used a frequency-domain method to explore the factors that influence star point extraction errors from the perspective of the imaging model. However, that study did not reveal the inherent mechanism of error generation. Yao et al. [14] established a distortion model based on point coordinate measurement, which corrects the non-uniformity error of each pixel. Based on an analysis of a star point positioning error model, Li et al. [15] pointed out that suppressing pixel non-uniformity noise can greatly improve the accuracy of star point positioning. Schmidt et al. [16] considered the in-orbit usage and real-time requirements of the star sensor, treated all noise as pixel non-uniformity noise, predicted the pixel-level noise, and corrected the star point positioning error using the background difference method. Liao et al. [17] considered combining a star sensor with an inertial platform to counteract the negative effects of an excessive angular velocity of the carrier satellite and to eliminate cumulative errors in star point positioning. At the practical engineering level, Samaan et al. [18] considered selecting more sensitive chips to avoid star blur, while Yang et al. [19] used least squares support vector regression to train a model on image data and compensate the star point coordinates. Most of these methods analyze star sensor imaging errors from the perspective of imaging principles or hardware and compensate for them accordingly. Kalman filter [20] or smoother techniques can improve attitude measurements, but they are tightly coupled to the hardware design model and act directly on the attitude.

The above studies make important contributions to the theoretical development and application of measurement technology. However, each of them considers one type of error while neglecting the others. In addition, they lack error analysis for star maps obtained from a moving satellite platform. A mathematical model that accounts for multiple imaging errors is therefore needed. In this paper, we develop a generic model, derived from the analysis of a large amount of star map data, for use in real-time attitude determination. Specifically, we approach error correction from the data rather than from the sensor hardware. We describe our aims and demonstrate the effectiveness of our method. The method is easier to implement than a stricter physical model because it does not require knowledge of how a specific star sensor works; the only input needed is the star point coordinates before correction. Once built, the model can be used to correct star point coordinates from the same star sensor.

In our method, the star maps of the star sensor are superimposed, and the relative motion trajectory of each star reflects, to some extent, the motion of the satellite. Points are selected randomly and uniformly on every trajectory, and the motion trajectory is then fitted bidirectionally. If the errors obtained by fitting in the two directions are not consistent, an additional quadratic fit is required. This second fit links the image position to the coefficients of the first fit, where the image position is represented by one set of the selected point coordinates, namely the set that best matches the results of the first fit. These parameters are then used to constrain and screen the corrected coordinates, improving their accuracy.

This study is based on image data collected by the star sensor. Taking the motion of the satellite platform into account, we use an elliptic equation to fit the relative motion trajectory of the star image and correct the geometric error of the image star points, using multiple parameters to screen the ambiguous solutions at the intersection of the elliptic equations. As long as a set of data from the same star sensor is available to build the initial model, the star point coordinates can be compensated quickly, and the model can be continuously updated to improve its accuracy. Our results show that, after dynamic imaging error analysis and parameter estimation, the intersection of the curves of multiple estimated parameters can effectively be used to compensate the image star point observations. The method requires little computation time, which should benefit the subsequent rigorous calibration and attitude calculation of star sensors.

2. Methods

2.1. Technical Outline of the Ellipsoid Model Method

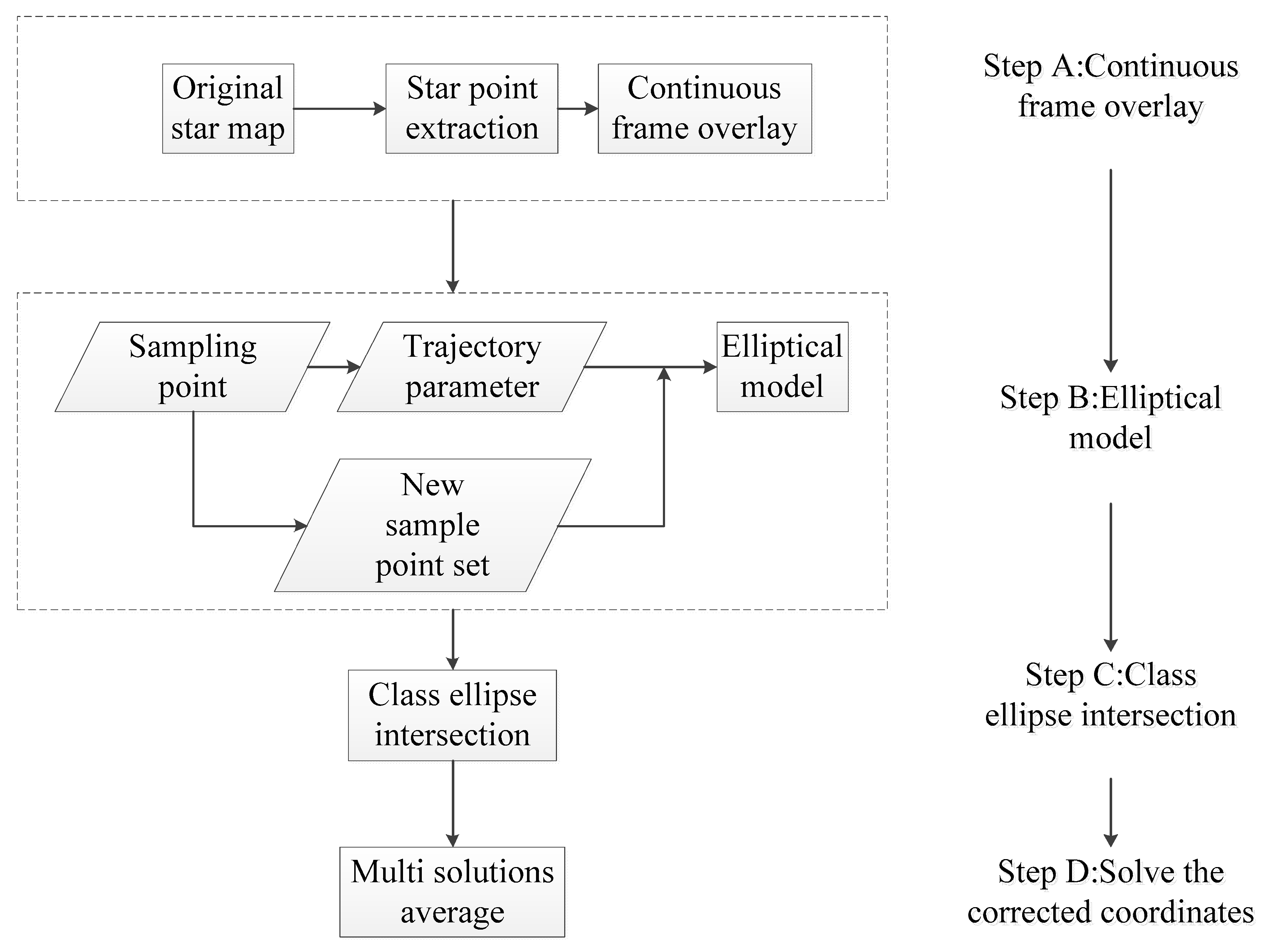

As mentioned above, we wanted to solve the imaging error problem of the star sensor based on star maps. We considered the star maps of the star sensor to be superimposed, and the relative motion trajectory of the star indicates the motion of the satellite to some extent. Then, we conducted bidirectional fitting of the motion trajectory. Based on this fitting, an elliptic model was established, the parameters were estimated and the coordinates were compensated to correct the star point error.

Figure 1 shows the flow of the ellipsoid model method.

2.2. Ellipsoid Model of Image Star Points

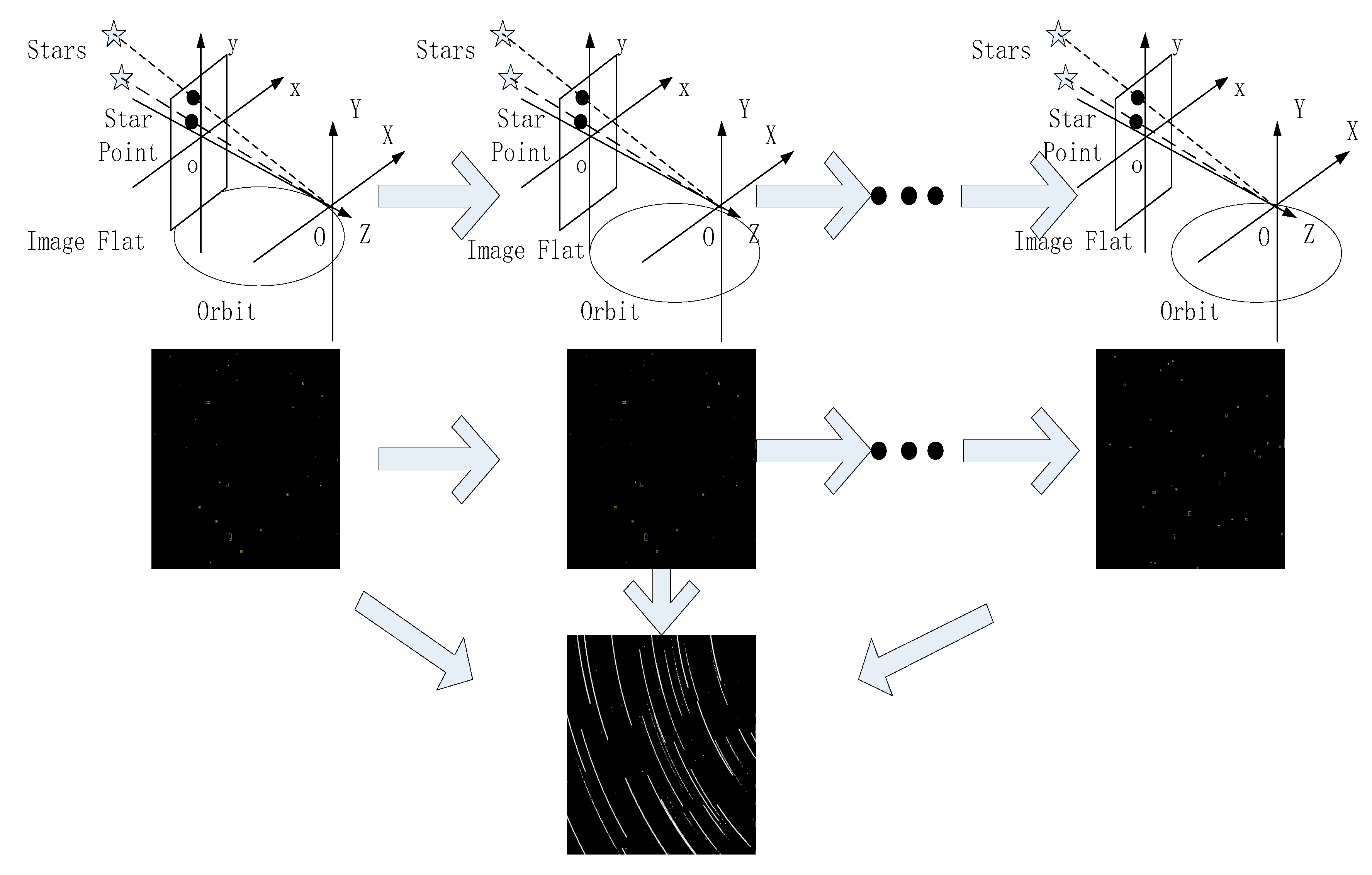

In the celestial system, stars are very far from satellite platforms orbiting the Earth; they can therefore be regarded as relatively static control points for determining the attitude of a satellite platform. The star sensor imaging process is shown in Figure 2. During a known period, a satellite moves in an elliptical orbit. Note that a spacecraft experiencing non-gravitational acceleration (e.g., thrust) in any direction will not follow an elliptical orbit; fortunately, observation satellites are usually not accelerating when observations occur. We assume that when an observation satellite is in a stable attitude, it slews at a constant angular rate. As the satellite moves along its elliptical orbit, the camera center of the star sensor changes accordingly. Because the star is stationary, its imaging trajectory during this time reflects, to some extent, the elliptical orbit of the satellite platform.

During star sensor imaging, the semi-major and semi-minor axes of the elliptical orbit are negligible relative to the distance of the stars. Moreover, because the x and y image coordinates are perpendicular to each other and measured relatively independently, a single imaging trajectory can be fitted in two possible directions (the x and y directions); these ambiguous solutions are handled in the subsequent parameter estimation. If the errors obtained by fitting in the two directions are consistent, that is, the error of the fit in the x direction is approximately equal to that in the y direction, the error can be compensated directly. If they are not consistent, an additional quadratic fit is required.

From the perspective of the image, the imaging trajectory of a single star point can be represented by an elliptical arc equation. Because the satellite platform traverses only a small arc of its elliptical orbit during this period, a parabolic equation is used to approximate the small elliptical arc. The trajectory is analyzed bidirectionally, from x and from y, so an image point trajectory equation can be established (Equation (1)):

$$
\begin{cases}
y = a_1 x^2 + b_1 x + c_1 \\
x = a_2 y^2 + b_2 y + c_2
\end{cases}
\tag{1}
$$

In Equation (1), $(x, y)$ are points selected randomly and uniformly on the relative trajectory of the stars after the superposition of multi-frame star maps, and $a_1, b_1, c_1, a_2, b_2, c_2$ are the six fitting coefficients.

Each imaging trajectory in the figure can be fitted with an ellipse. Although the matching ellipse differs from one imaging trajectory to another, all of these trajectories are obtained from the same star maps and are formed by the same satellite platform, so they should follow the same regularity. In other words, they all express the motion trajectory of the satellite platform, up to some scaling relationship. Therefore, the elliptic coefficient parameters should satisfy a common model that depends on the image position. The image position can be represented by one set of the point coordinates in Equation (1). The selection principle is that the selected set of star coordinates should best express the fitting coefficients; that is, the selected point coordinates best match the results of the first fitting. A quadratic model is used (Equation (2)).

In Equation (2), the image coordinates are those that best match the results of the first fitting, and each of the six fitting coefficients of the equations in Equation (1) is modeled as a quadratic function of these coordinates with its own fitting coefficients. Equation (2) is called the ellipsoid model.
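Because the explicit form of Equation (2) is not reproduced here, the following is only a sketch of how such a model could be fitted. It assumes that each first-fit coefficient is a general bivariate quadratic of the representative image position (X, Y), with terms 1, X, Y, X², XY, Y², and it solves for all coefficient models at once by linear least squares. The term set and all function names are hypothetical; the paper's Equation (2) may use fewer terms.

```python
import numpy as np

def quad_terms(X, Y):
    """Terms of a general bivariate quadratic: 1, X, Y, X^2, XY, Y^2 (assumed form)."""
    X = np.atleast_1d(np.asarray(X, dtype=float))
    Y = np.atleast_1d(np.asarray(Y, dtype=float))
    return np.column_stack([np.ones_like(X), X, Y, X**2, X * Y, Y**2])

def fit_ellipsoid_model(X, Y, P):
    """Least-squares fit of each first-fit coefficient as a quadratic in (X, Y).

    X, Y: representative image coordinates, one pair per trajectory.
    P: array (n_trajectories, n_coefficients), e.g. the six values a1..c2.
    Returns K with shape (6, n_coefficients); column j models coefficient j.
    """
    A = quad_terms(X, Y)
    K, *_ = np.linalg.lstsq(A, np.asarray(P, dtype=float), rcond=None)
    return K

def predict_coefficients(K, x, y):
    """Evaluate every modeled coefficient at image position(s) (x, y)."""
    return quad_terms(x, y) @ K
```

With as many trajectories as model terms (six points in the simulation experiment and six terms here), the least-squares fit interpolates the data exactly, so a reduced term set or more trajectories would be needed to smooth out fitting noise. With raw pixel coordinates near 1024, centering and scaling X and Y before fitting improves the conditioning of the design matrix.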

2.3. Parameter Estimation and Coordinate Compensation

In the above process, the star map data acquired by the star sensor are expressed by a set of parameterized elliptic equations, and the actual imaging error is smoothed and corrected in the fitting of the superimposed image traces. Using the models of the six related parameters above, the image observations can be corrected and compensated before the calibration and attitude calculation of the star sensor.

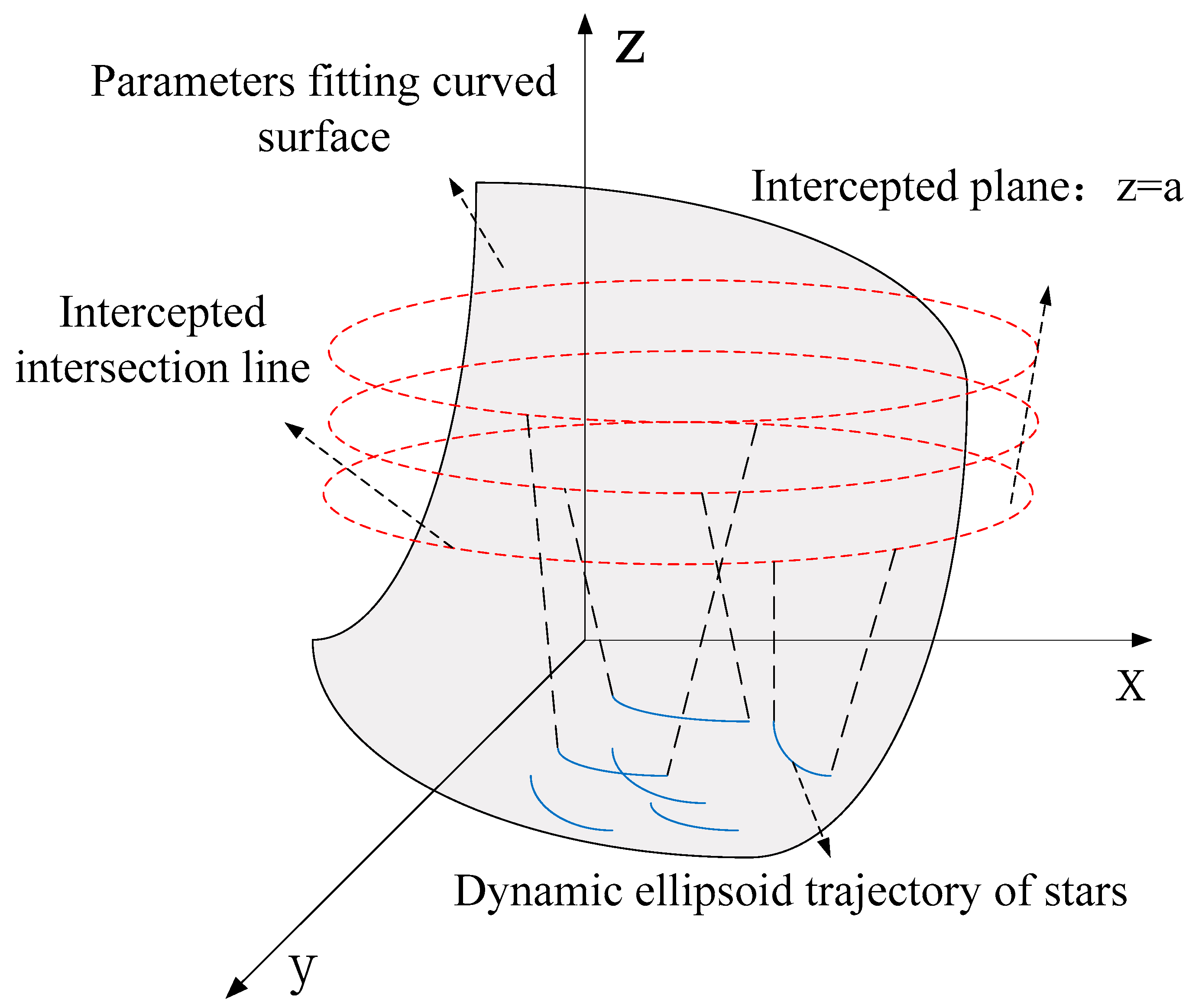

According to the principle of the ellipsoid model, any trajectory in the image plane corresponds to a curve on the surface (Figure 3). Specifically, for a given parameter value, the plane at that height parallel to the xoy plane intersects the surface in the corresponding conic, and solutions can be found by intersecting the conics of each group of parameters. In theory, the intersection point gives the corrected coordinates.
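The paper does not state how the conics are intersected numerically. One possible approach, sketched below, is to treat two parameter surfaces as quadratics in (x, y), set each equal to its observed value, and solve the resulting 2 × 2 nonlinear system with Newton's method started from a grid of seeds. Because two conics can meet in up to four real points, the routine keeps every distinct converged solution, which is exactly the set of ambiguous solutions that must be filtered afterwards. The surface form k0 + k1·x + k2·y + k3·x² + k4·xy + k5·y² and all names are assumptions for illustration.

```python
import numpy as np

def surface(k, x, y):
    """Hypothetical quadratic surface k0 + k1 x + k2 y + k3 x^2 + k4 x y + k5 y^2."""
    return k[0] + k[1]*x + k[2]*y + k[3]*x*x + k[4]*x*y + k[5]*y*y

def surface_grad(k, x, y):
    """Partial derivatives of the surface with respect to x and y."""
    return np.array([k[1] + 2*k[3]*x + k[4]*y,
                     k[2] + k[4]*x + 2*k[5]*y])

def intersect_level_sets(k1, v1, k2, v2, seeds, iters=50, tol=1e-10):
    """Find points where surface k1 equals v1 and surface k2 equals v2.

    Newton's method is started from every seed; converged, distinct
    solutions are returned (two conics have up to four real intersections).
    """
    roots = []
    for seed in seeds:
        p = np.array(seed, dtype=float)
        for _ in range(iters):
            F = np.array([surface(k1, *p) - v1, surface(k2, *p) - v2])
            J = np.vstack([surface_grad(k1, *p), surface_grad(k2, *p)])
            try:
                step = np.linalg.solve(J, F)
            except np.linalg.LinAlgError:
                break  # singular Jacobian: abandon this seed
            p = p - step
            if np.linalg.norm(step) < tol:
                break
        residual = np.hypot(surface(k1, *p) - v1, surface(k2, *p) - v2)
        if residual < 1e-6 and not any(np.linalg.norm(p - r) < 1e-6 for r in roots):
            roots.append(p)
    return roots
```

For example, intersecting the circle x² + y² = 25 with the hyperbola x² − y² = 7 from seeds spread over all four quadrants recovers the four ambiguous solutions (±4, ±3). In practice the seeds could be placed around the original star point, since only nearby solutions survive the filtering step.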

But actually, in

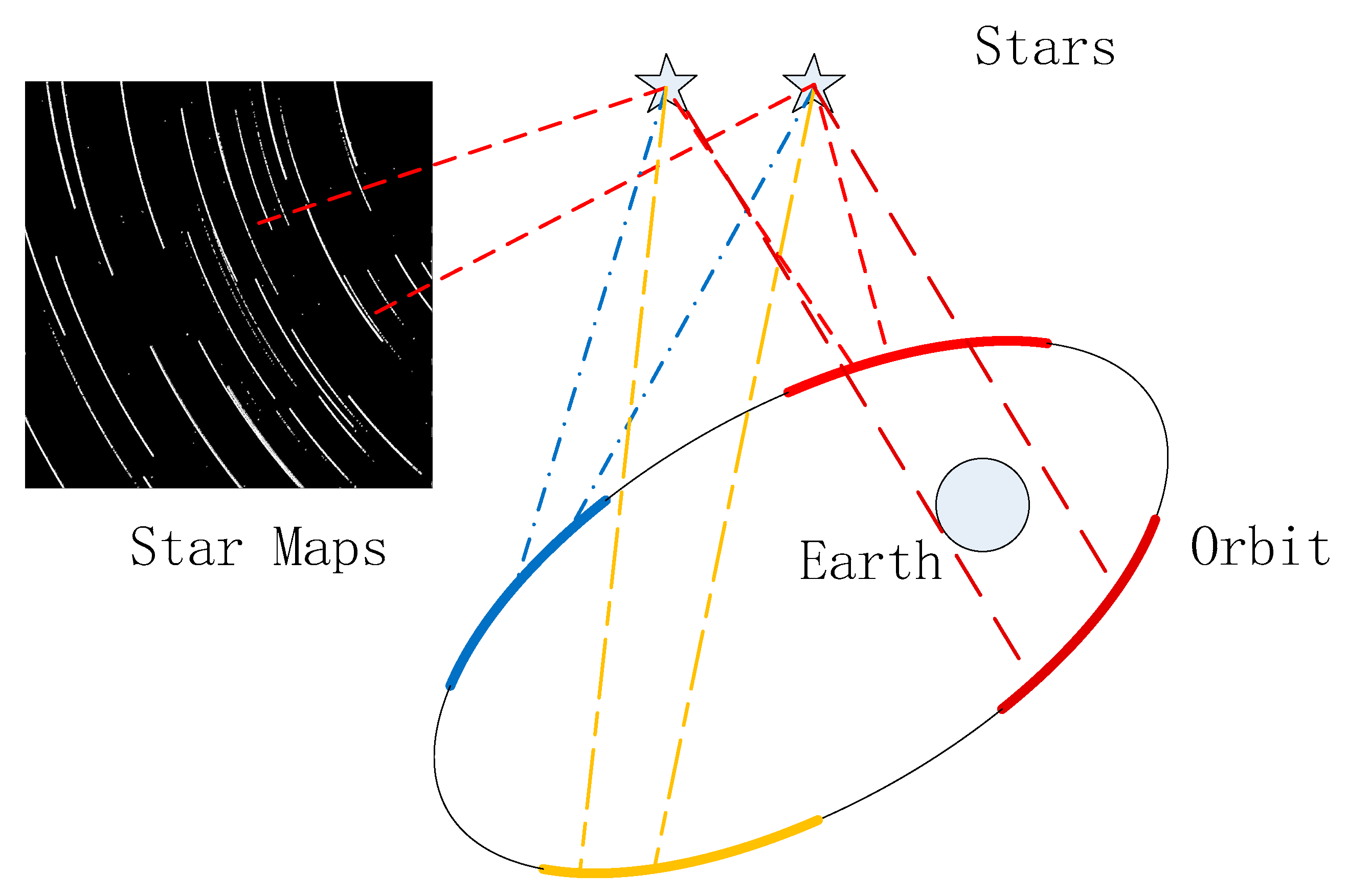

Figure 4 the upper left corner provides a superimposed imaging trajectory of multi-frame data. The black figure in the upper left corner results from superimposing multi-frame consequent star maps. We can get many relative motion trajectories, as shown by the white curve. Because the star is stationary, the trajectory corresponds to the regular orbit of the spacecraft. The ellipse in this figure illustrates the orbit and the motion of the satellite platform; the circle at one focus represents the earth. According to the analysis, the traces after multi-frame data superposition are the embodiment of the orbit equation of the satellite platform. Although different stars have different imaging positions, these traces over a specific period should conform to an arc of the elliptical orbit, which is the motion trajectory represented by the arcs (shown in four colors). The real meaning of the four arcs is the orbit of the spacecraft, which is a schematic diagram, meaning the traces in a specific period should confirm to the ellipsoid. and the motion trajectory we got may correspond random one of them.

This explains why the results have ambiguous solutions. It is therefore theoretically reasonable and effective to use an elliptic equation to fit the imaging error along the image path. However, because the fitted curves are conics, their intersection yields ambiguous solutions, which must be filtered.

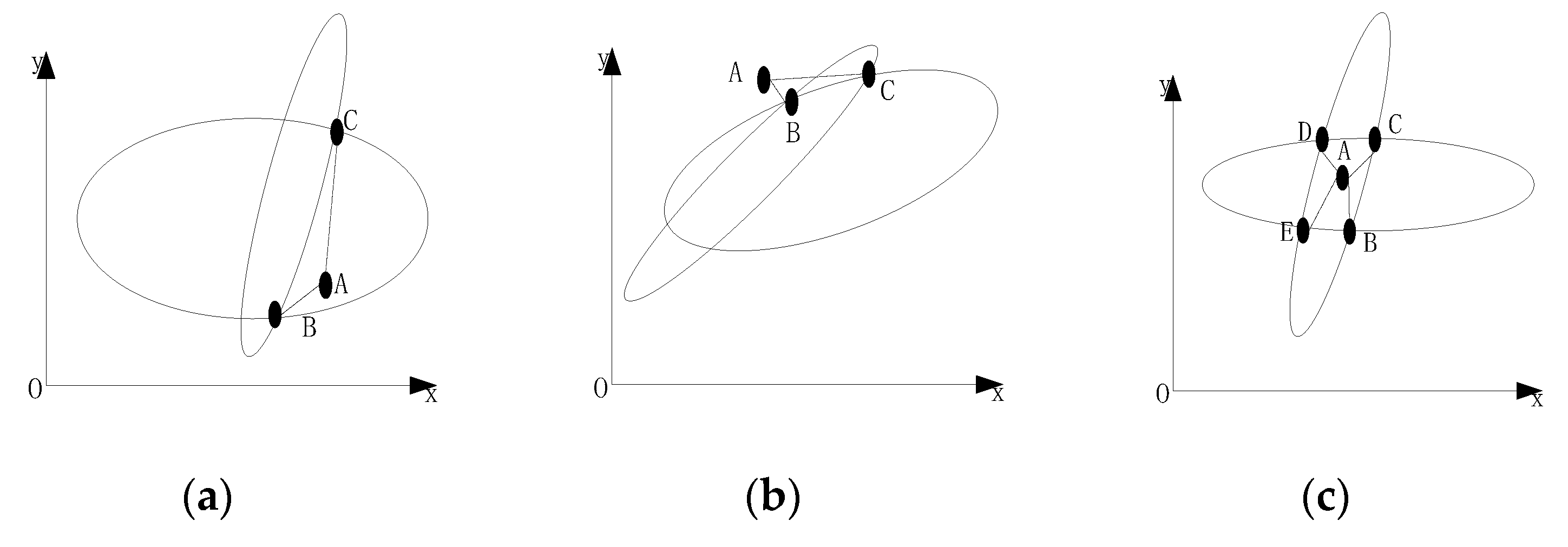

We now describe the principle of filtering the ambiguous intersection solutions. As shown in Figure 5a,b, point A is the original star point; one coordinate of point C is closer to point A, but the other coordinate is farther away. Therefore, point B, which is closer overall, should be selected as the candidate point of the modified solution. As shown in Figure 5c, four ambiguous solutions (points B, C, D, and E) are possible. It is therefore necessary to consider both coordinates together and select the point closest to point A as the candidate point of the modified solution.

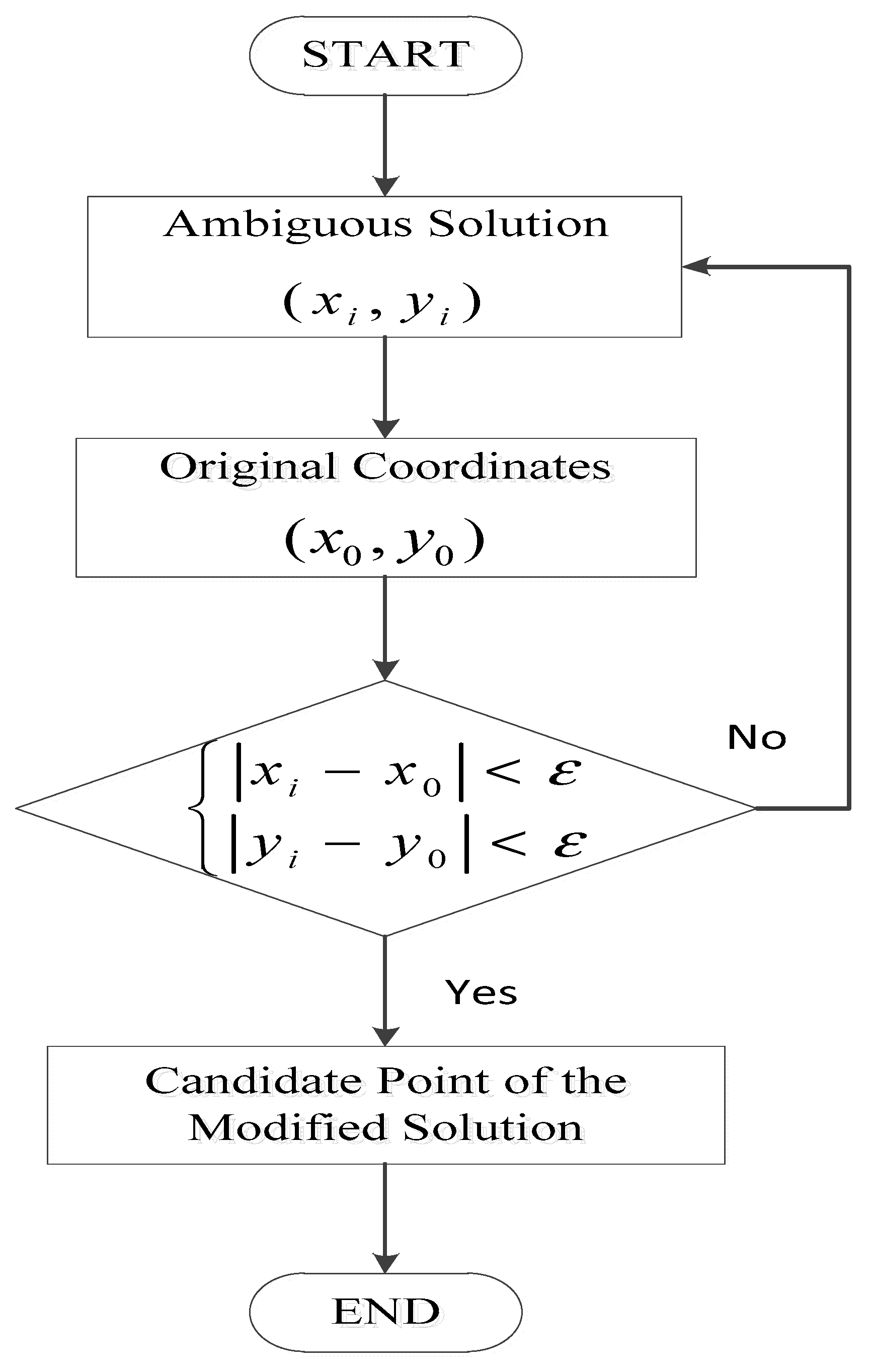

Figure 6 shows the steps for filtering the ambiguous solutions. Here, we set a threshold value to filter the ambiguous solutions; a solution that meets this threshold is called a candidate point of the modified solution. The threshold was set to 2 pixels in the simulation experiment and to 15 pixels in the real data experiment.
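The filtering rule, combined with the averaging of candidate points described in Section 3.2.1, can be sketched as below. It assumes that "considering both coordinates together" means using the Euclidean distance to the original star point A and that every solution within the threshold (2 px for simulated data, 15 px for real data) is kept and averaged. The function names and the `None` return when no candidate survives are hypothetical.

```python
import numpy as np

def filter_candidates(original, solutions, threshold):
    """Keep intersection solutions within `threshold` pixels of the original point.

    Distance is Euclidean, so both coordinates are considered together.
    """
    a = np.asarray(original, dtype=float)
    sols = np.asarray(solutions, dtype=float).reshape(-1, 2)
    d = np.linalg.norm(sols - a, axis=1)
    return sols[d <= threshold]

def corrected_coordinate(original, solutions, threshold):
    """Average the surviving candidates; return None if none survive."""
    cand = filter_candidates(original, solutions, threshold)
    if cand.size == 0:
        return None  # hypothetical fallback: leave the point uncorrected
    return cand.mean(axis=0)
```

For example, with A = (100, 100), a 2-pixel threshold keeps (100.5, 100.5) and (101, 99) but rejects (130, 100) and (100, 140), so the corrected coordinate is their mean, (100.75, 99.75).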

3. Experiment and Results Analysis

3.1. Experiment Data

Two sets of experiments were designed in this paper: one with simulated data and one with real data.

The simulation experiment was designed as follows. First, we simulated a set of original star maps using MATLAB 2014 and added Gaussian white noise. We then carried out a rough extraction of the star points. Next, the ellipsoid model was applied to the dataset to correct the star point coordinates. Here, we assumed that the simulated star point coordinates before the noise was added were the true coordinates, and the corrected coordinates were compared with them.

The simulations were run on a computer with a 1.70 GHz CPU and 4.00 GB of memory running Windows 8.1. We input an initial set of satellite attitudes and then simulated the star maps using the principles of geometric imaging. In this process, we assumed a camera focal length of f = 43.3 mm, a pixel size of 0.015 mm, a signal-to-noise ratio (SNR) of 5 dB, a limiting stellar magnitude of 6, and an image size of 1024 × 1024 pixels.
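The camera parameters above determine the field of view and the mapping from a star direction to pixel coordinates. The sketch below assumes an ideal pinhole camera with no lens distortion, the boresight along +z of the sensor frame, and the principal point at the image center. These are assumptions for illustration; the paper does not give its simulator's exact projection model, and the rotation of catalog star vectors into the sensor frame by the input attitude is omitted.

```python
import math

FOCAL_MM = 43.3   # focal length from the simulation setup
PIXEL_MM = 0.015  # pixel size from the simulation setup
N_PIX = 1024      # image width and height in pixels

def field_of_view_deg(focal_mm=FOCAL_MM, pixel_mm=PIXEL_MM, n_pix=N_PIX):
    """Full field of view across one image axis for an ideal pinhole camera."""
    half_width_mm = n_pix * pixel_mm / 2.0
    return 2.0 * math.degrees(math.atan(half_width_mm / focal_mm))

def project(v, focal_mm=FOCAL_MM, pixel_mm=PIXEL_MM, n_pix=N_PIX):
    """Project a star direction (x, y, z) in the sensor frame to pixel (u, v).

    Assumes the boresight is +z and the principal point is the image center;
    returns None for directions behind the sensor.
    """
    x, y, z = v
    if z <= 0:
        return None
    c = (n_pix - 1) / 2.0
    return (c + focal_mm * x / z / pixel_mm, c + focal_mm * y / z / pixel_mm)

if __name__ == "__main__":
    print(f"FOV: {field_of_view_deg():.2f} deg")  # FOV: 20.12 deg
    print(project((0.0, 0.0, 1.0)))               # boresight maps to the image center
```

Under these assumptions the 1024 × 1024 detector spans 15.36 mm, giving a square field of view of about 20.1° per axis, so one pixel subtends roughly 71 arcseconds near the center of the image.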

In the second set of experiments, the ellipsoid model was applied to real data to correct the star point errors, and the satellite attitude was then determined. The satellite attitude was represented by quaternions and compared with the quaternions provided by the original gyroscope data.

All of the data used in this experiment are real data obtained by the star sensor aboard the Chinese satellite ZY-3-02. Two sets of data were used: the group 0702 star maps were obtained on 2 July 2016, and the group 0712 star maps on 12 July 2016. Each star map was 1024 × 1024 pixels. The group 0702 was used as raw data for model fitting, and the group 0712 was used to test the model in the comparison test. First, the 0702 data were used to test the conformity of the model to its own data and thereby verify its correctness. Then the 0712 data were used to test the applicability and effectiveness of the model.

To validate the correction in the simulation experiment, the MATLAB star point coordinates before noise was added can be assumed to be exact, so the accuracy of the correction can be verified directly. For the real data, the true coordinates are unknown, so an external precision evaluation could not be performed; instead, the mean square error of the difference between the coordinates before and after correction was used to evaluate the consistency of the data.

3.2. Experiment Results and Analysis

3.2.1. Experiment Results of Simulation

As described above, the present study began by performing two-way fitting in the x and y directions on the frame superposition results of the simulation data. We selected 100 points on every trajectory and fitted them. The resulting fitting equations are shown in Equations (3) and (4).

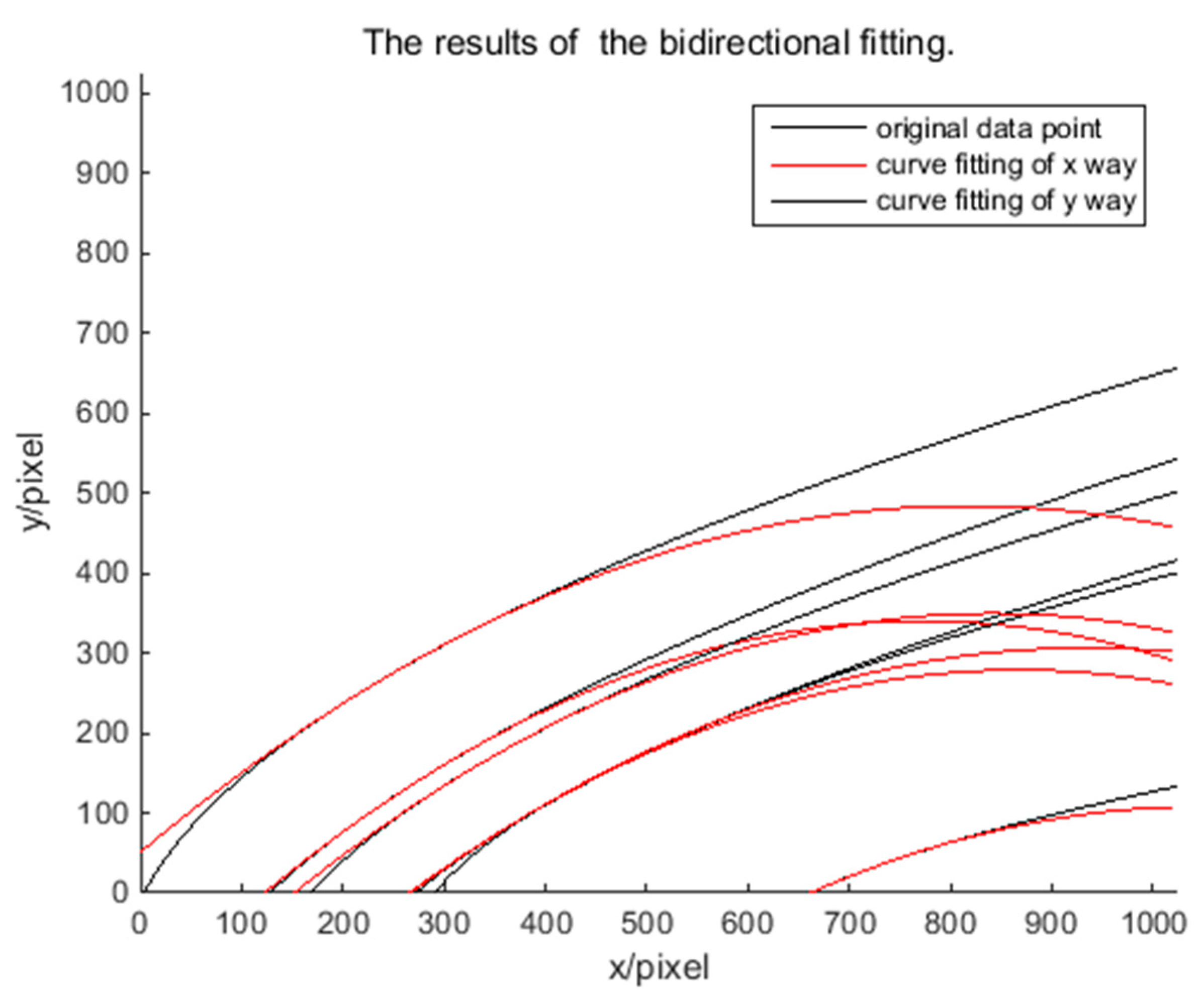

The results of bidirectional fitting are shown using MATLAB software.

Figure 7 shows that the fitting results from the

x and

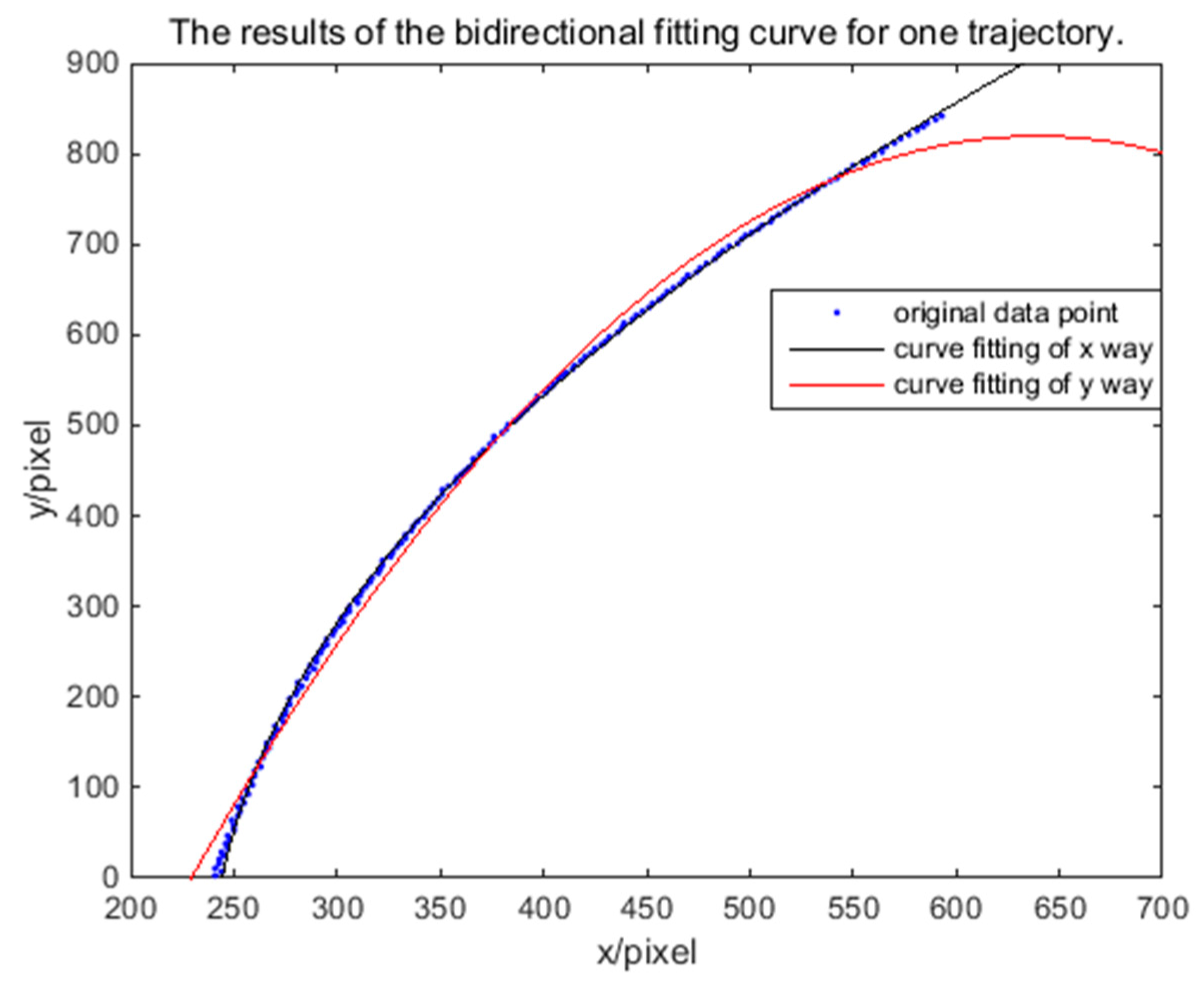

y directions are not very consistent in general. Therefore, starting from a single trajectory,

Figure 8 shows the results of the bidirectional fitting curve for the one trajectory. A difference exists in the two-way error; this difference will affect the determination of the error and the correction of the coordinates. After calculation, the average error in the

x direction of fitting is 1.86 pixels; that in the y direction of fitting is 0.483 pixels. So, if we correct error from one direction,

x or

y, the result is not accurate. Therefore, we propose a method that involves using an ellipsoid model and an intersection to correct the error. Specifically, based on the two-way fitting curve, the coefficients are quadratically fitted. In theory, the required coordinate correction solution should satisfy the quadratic fitting equation of each coefficient; however, when considering the actual situation and the existence of the fitting error, the results of the intersection of each quadratic fitting curve were regarded as the required coordinate correction solution. Because the result of each intersection may be a modified solution, each result was recorded as a candidate point of the modified solution; and the average value was used as the final correction solution.

We then selected the most suitable point coordinates for every trajectory, giving six point coordinates with which to fit the coefficients; the results are as follows:

Equations (5) and (6) provide the results of quadratic fitting.

Table 1 compares the coordinates before and after the correction of the simulation data, and the amount of time needed to correct each coordinate.

Table 2 compares the errors before and after the correction of the simulation data. From these tables, the mean square error of the errors is 0.4709 before correction and 0.0479 after correction, an accuracy improvement of 89.8%. In addition, the average time required to correct one star point coordinate is 0.259 s. Therefore, the model corrects the star point errors effectively and quickly, and, based on these experimental results, its computation time can fully meet the needs of typical missions.
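The 89.8% figure is the relative reduction of the error metric. The short check below reproduces it from the two reported values. The `mean_square_error` helper shows the usual definition (mean of the squared per-point errors); it is an assumption about how the table values were aggregated, since the per-point formula is not given.

```python
def mean_square_error(errors):
    """Mean of the squared per-point errors (assumed aggregation)."""
    return sum(e * e for e in errors) / len(errors)

def relative_improvement(before, after):
    """Percentage reduction of an error metric after correction."""
    return 100.0 * (before - after) / before

if __name__ == "__main__":
    print(f"{relative_improvement(0.4709, 0.0479):.1f}%")  # 89.8%
```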

3.2.2. Experiment Results of Real Data

As described above, this study began by performing two-way fitting in the x and y directions on the frame superposition results. We selected 120 points on every trajectory and fitted them. The obtained fitting equations are shown in Equations (7) and (8).

The detailed equations are given in Appendix A, Equations (A1)–(A26) and (A27)–(A52).

We then selected the most suitable point coordinates for every trajectory, giving 26 point coordinates with which to fit the coefficients; the results are as follows:

Equations (9) and (10) provide the results of quadratic fitting. The detailed equations are given in Appendix A, Equations (A53) and (A54).

Table 3 and Table 4 compare the coordinates before and after the correction of the 0702 and 0712 groups, respectively, using the ellipsoid model.

Table 3 and Table 4 represent two different experimental datasets. Their columns give, in order: the horizontal and vertical pixel coordinates of each point before the correction; the horizontal and vertical pixel coordinates after the correction; the differences in the horizontal and vertical coordinates before and after the correction; and the time required to correct each coordinate.

Table 3 and Table 4 show that most of the differences for the 0702 group are at the pixel level, whereas those for the 0712 group reach more than a dozen pixels. The average time to correct one star point coordinate is 0.272 s for the 0702 group and 0.284 s for the 0712 group. Thus, the ellipsoid model corrects the 0702 group significantly better than the 0712 group.

Table 5 gives the mean square error of the difference for the two groups of experiments. It shows that the 0712 group data are more challenging for the model, yet the correction still improves them. We conclude that the model applies effectively not only to its own data but also to other data, which shows that it has good correction ability, applicability, and effectiveness.

In addition, we used the coordinates before and after the correction to calculate the attitude. The attitude obtained directly from the gyroscope was used as the reference attitude, and quaternions were used to compare the accuracy before and after the correction.

Table 6 gives a comparison of the quaternion calculated before and after the correction with the quaternion provided by the gyroscope.

Table 7 shows the mean of the errors of the four components before and after the correction, and the mean of the combined error. From the table, we can see that the error obtained after correction is reduced by 52.3%, demonstrating the validity of the method.
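A comparison like the one in Table 7 can be computed as follows. This sketch assumes that component errors are absolute differences from the gyroscope quaternion after sign alignment (q and −q describe the same attitude) and that the "combined" error is the Euclidean norm of the four component differences. Both definitions are assumptions, since the paper does not spell out its formula.

```python
import numpy as np

def quaternion_errors(q_est, q_ref):
    """Per-component mean absolute error and mean combined error of quaternion series.

    q_est, q_ref: arrays of shape (n, 4). Each estimate is first sign-aligned
    with its reference because q and -q represent the same attitude.
    The combined error is taken as the Euclidean norm of the component
    differences (an assumption).
    """
    q_est = np.asarray(q_est, dtype=float)
    q_ref = np.asarray(q_ref, dtype=float)
    sign = np.where(np.sum(q_est * q_ref, axis=1) < 0, -1.0, 1.0)
    diff = q_est * sign[:, None] - q_ref
    component_mae = np.mean(np.abs(diff), axis=0)
    combined = float(np.mean(np.linalg.norm(diff, axis=1)))
    return component_mae, combined
```

Running this once with the attitudes computed from the uncorrected coordinates and once with those from the corrected coordinates, both against the gyroscope quaternions, gives the before and after values. The percentage reduction follows as 100 × (before − after) / before.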

5. Conclusions

Based on simulation data and real imaging data from the star sensor on ZY-3-02, this paper proposes a model for determining the star point centroids of an entire star map based on the motion trajectories and the correlation between stars in the same star field. In the simulation experiment, the mean square error of the errors was 0.4709 before correction and 0.0479 after correction, an accuracy improvement of 89.8%, and the average time required to correct one star point coordinate was 0.259 s. Therefore, the model corrects the star point errors effectively, and its computation time can fully meet the needs of typical missions. In the real data experiments, the error after correction was reduced by 52.3%, demonstrating the validity of the method for star point coordinate correction. Moreover, the results for the 0702 and 0712 groups show that the model applies not only to its own data but also to other data, indicating strong correction ability, applicability, and effectiveness.

Because the amount of data currently available is small, the correction effect is limited. Nevertheless, we have proposed a new method for star point centroid correction, demonstrated its applicability and effectiveness, and provided strongly correlated star point centroids and image control point coordinates for subsequent star map recognition. This work should help with star map recognition and with determining the initial attitude of the satellite.