Real-Time UAV Autonomous Localization Based on Smartphone Sensors

Abstract

1. Introduction

1.1. Motivation

- The MEMS sensors built into smartphones are inexpensive, but their accuracy is relatively low. Their measurements contain substantial noise, which can seriously degrade computations that depend on precise data, such as UAV localization. Moreover, the research field has not yet established common standards or testing methods for these MEMS sensors [7]. The first question is therefore to determine which of the phone's sensors can be used for UAV localization.

- The limited storage and computing resources of a mobile phone pose a second challenge. Years of research and practice have shown that visual information is highly effective for UAV localization; typical examples are visual odometry (VO) [8,9,10] and visual simultaneous localization and mapping (VSLAM) [5,11,12], both of which have achieved remarkable results. However, these algorithms need ample storage and computing resources to store environment maps, detect loop closures, and perform local/global optimization, which strains the capabilities of a mobile phone.
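Because no common testing standard exists for these MEMS sensors, one widely used way to quantify the noise described above is the Allan deviation of a static recording. The sketch below is not the authors' procedure: it assumes a single gyroscope axis logged at the 50 Hz rate listed in the sampling-frequency table while the phone lies still, and it substitutes synthetic white noise plus a constant bias for real data. The function name `allan_deviation` and all numeric values are hypothetical.

```python
import numpy as np


def allan_deviation(rate, fs, taus):
    """Overlapping Allan deviation of a rate signal (e.g. one gyroscope axis).

    rate : samples recorded while the phone is static
    fs   : sampling frequency [Hz]
    taus : candidate averaging times [s]
    Returns the averaging times actually used and the Allan deviation at each.
    """
    rate = np.asarray(rate, dtype=float)
    dt = 1.0 / fs
    # Integrate the rate once; cluster averages then become angle differences.
    theta = np.concatenate(([0.0], np.cumsum(rate) * dt))
    n = len(theta)
    used, adev = [], []
    for tau in taus:
        m = int(round(tau * fs))  # samples per cluster
        if m < 1 or 2 * m >= n:
            continue  # averaging time too short or too long for this record
        # Second difference theta[k+2m] - 2*theta[k+m] + theta[k] over all k
        d = theta[2 * m:] - 2.0 * theta[m:n - m] + theta[:n - 2 * m]
        avar = np.sum(d ** 2) / (2.0 * (m * dt) ** 2 * (n - 2 * m))
        used.append(m * dt)
        adev.append(np.sqrt(avar))
    return np.array(used), np.array(adev)


# Usage with synthetic data: 10 min static log at 50 Hz (hypothetical values)
rng = np.random.default_rng(0)
fs = 50.0
sigma = 0.01   # white-noise std of one sample [rad/s], assumed
bias = 0.002   # constant bias [rad/s], assumed
gyro_z = bias + sigma * rng.standard_normal(int(600 * fs))
t, ad = allan_deviation(gyro_z, fs, np.logspace(-1, 2, 20))
print(f"estimated bias: {gyro_z.mean():.4f} rad/s")
```

For pure white noise the curve falls as 1/sqrt(tau); on a log-log plot that slope identifies angle random walk, and the flat minimum of a real sensor's curve indicates bias instability. A constant bias leaves the Allan deviation unchanged, which is why it is reported separately as the sample mean.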

1.2. Contributions

- First, accuracy calibration experiments were designed to evaluate the feasibility and precision of the phone sensors (accelerometer, gyroscope, pressure sensor, and orientation sensor).

- An efficient, real-time autonomous localization algorithm based on off-the-shelf smartphone sensors was designed, and indoor and outdoor flight experiments were carried out to verify its accuracy.
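Evaluating the pressure sensor's precision first requires converting its readings to height. The following is a minimal sketch that assumes the standard-atmosphere (barometric) formula, the same one behind Android's `SensorManager.getAltitude(p0, p)`, with the take-off pressure as reference. It also assumes simple bias, standard deviation, and RMSE metrics against a reference height. This section does not give the paper's actual reference system or metrics. The function names and all readings are hypothetical.

```python
import numpy as np


def pressure_to_altitude(p_hpa, p0_hpa):
    """Height [m] relative to the level where the pressure equals p0_hpa.

    Standard-atmosphere (barometric) formula; with p0_hpa set to the
    take-off pressure this gives the height above the take-off point.
    """
    p = np.asarray(p_hpa, dtype=float)
    return 44330.0 * (1.0 - (p / p0_hpa) ** (1.0 / 5.255))


def accuracy_metrics(measured, reference):
    """Bias (mean error), error standard deviation and RMSE against a reference."""
    err = np.asarray(measured, dtype=float) - np.asarray(reference, dtype=float)
    return {
        "bias": float(err.mean()),
        "std": float(err.std(ddof=1)),
        "rmse": float(np.sqrt(np.mean(err ** 2))),
    }


# Hypothetical readings: take-off pressure and four samples during a climb
p_takeoff = 1008.6
p_flight = np.array([1008.6, 1008.0, 1007.4, 1006.8])
h_phone = pressure_to_altitude(p_flight, p_takeoff)
h_reference = np.array([0.0, 5.0, 10.0, 15.0])  # e.g. from an external reference system
print(accuracy_metrics(h_phone, h_reference))
```

Using the take-off pressure rather than a sea-level value cancels most of the weather-dependent offset, so only relative height is measured. Slow pressure drift during a long flight still appears as a bias.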

2. Related Work

3. Materials and Methods

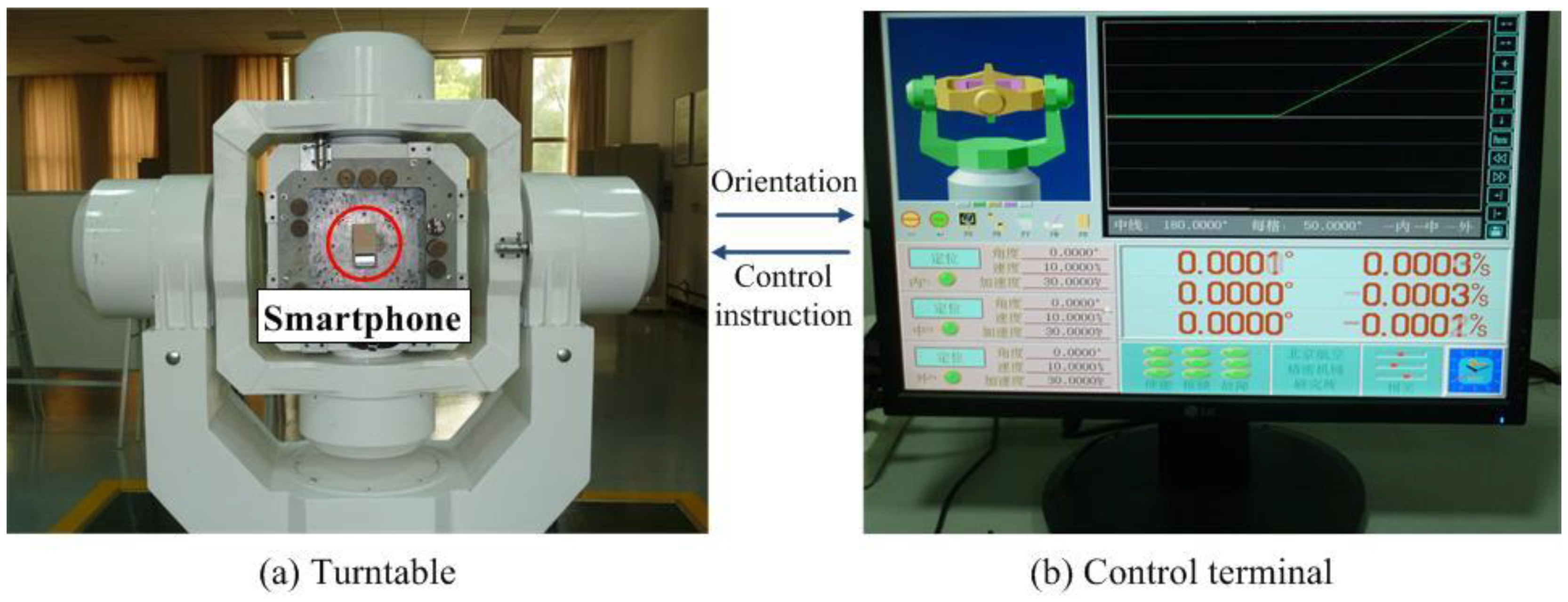

3.1. Mobile Phone Sensor Performance Evaluation

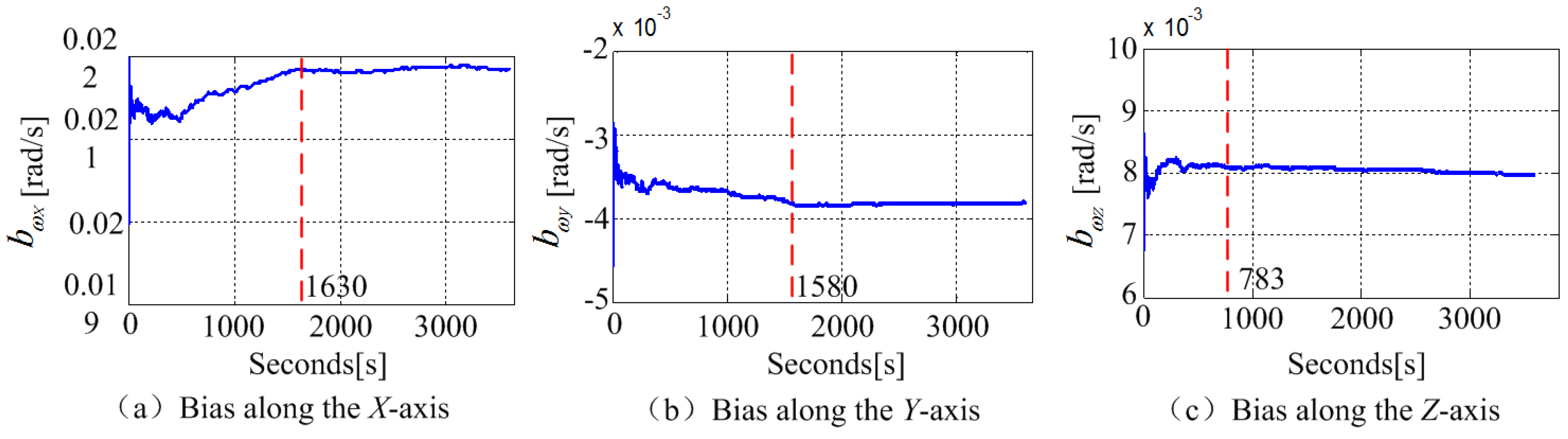

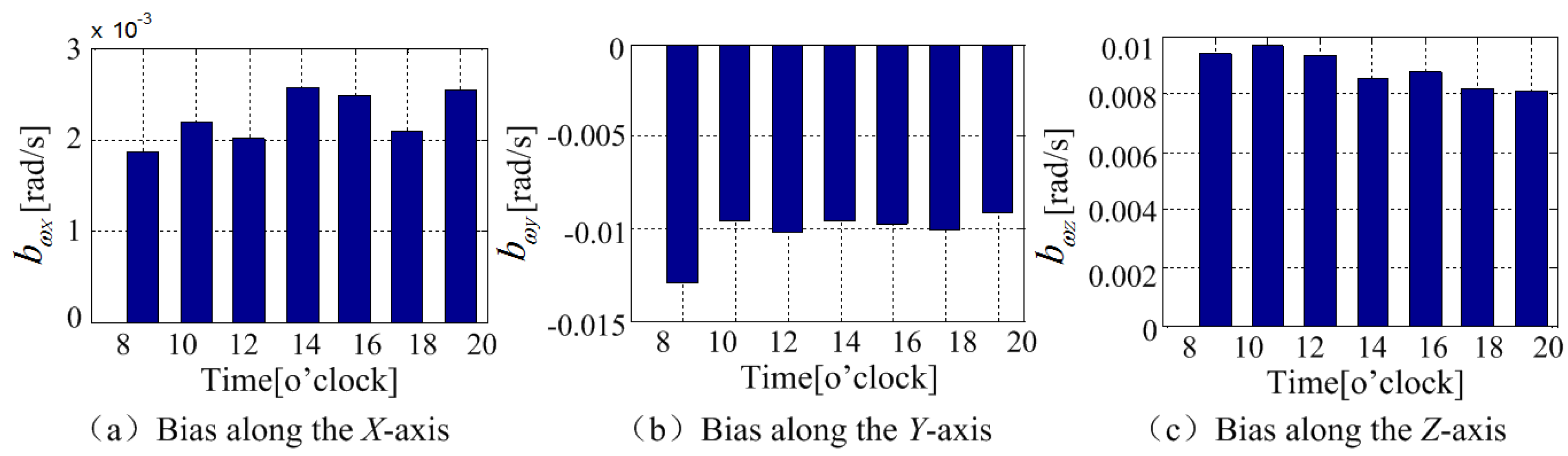

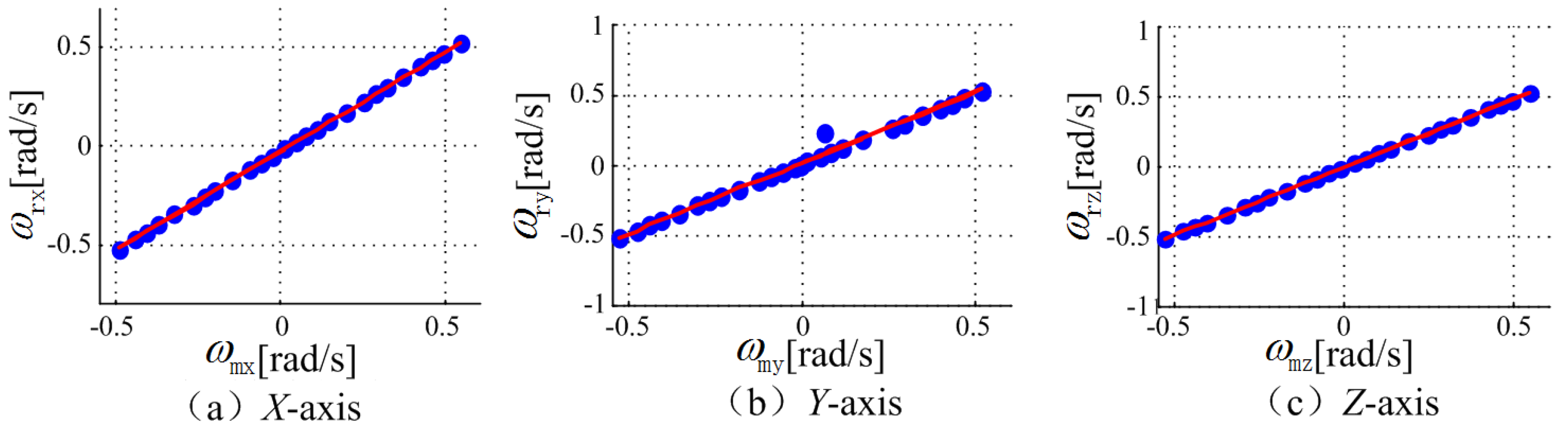

3.1.1. Gyroscope

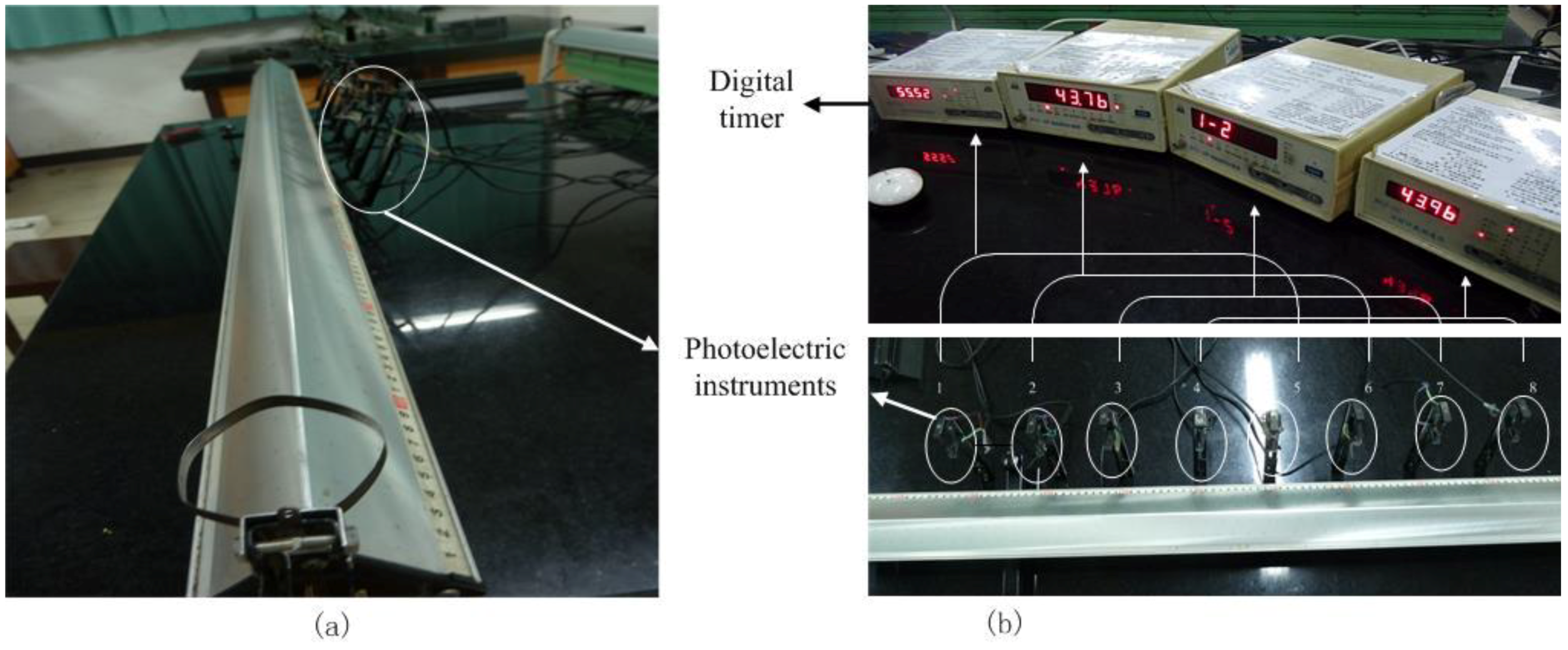

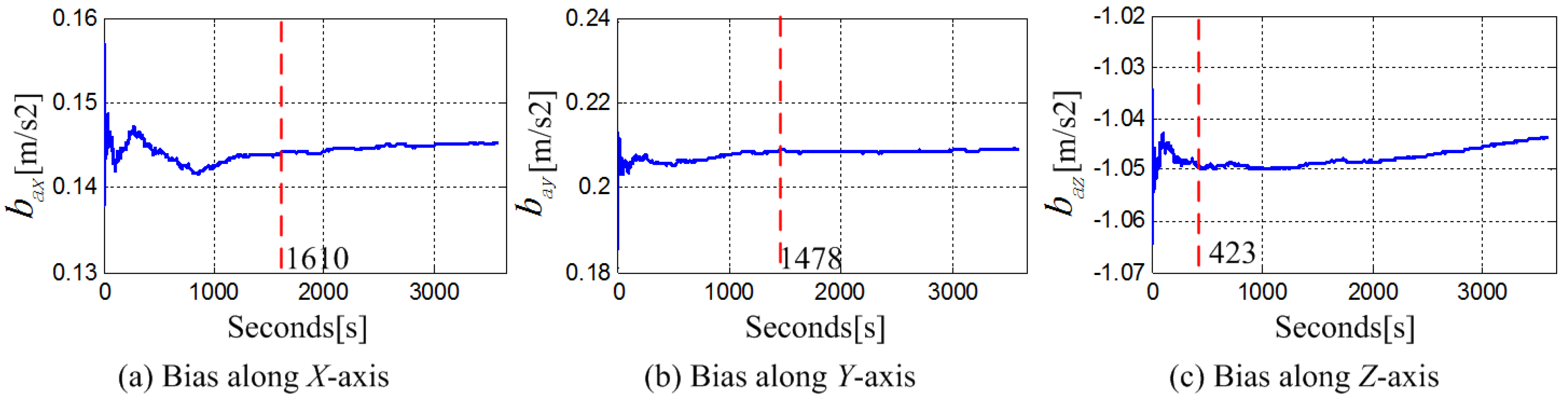

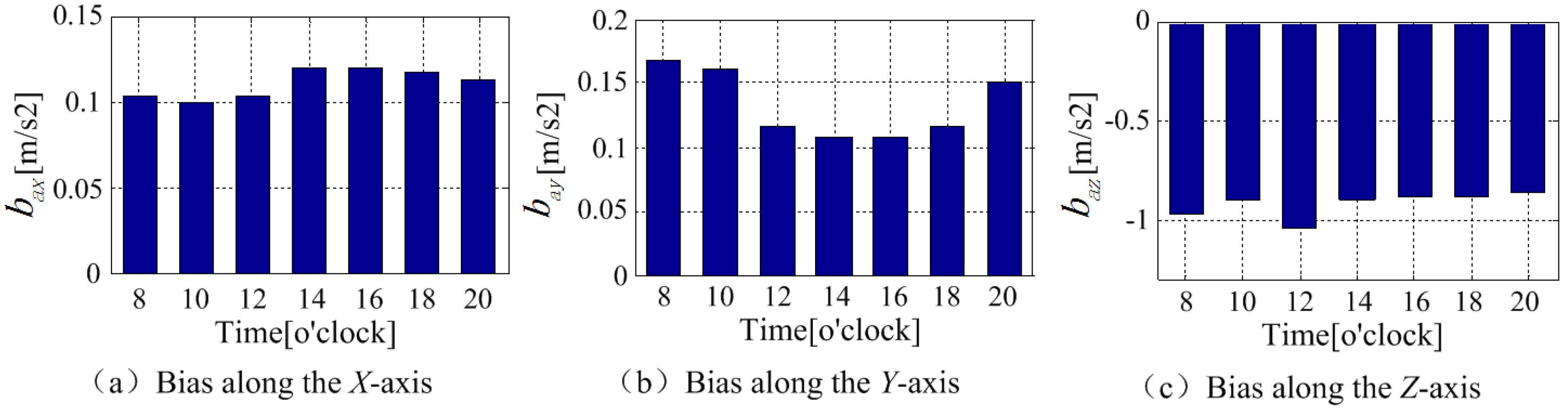

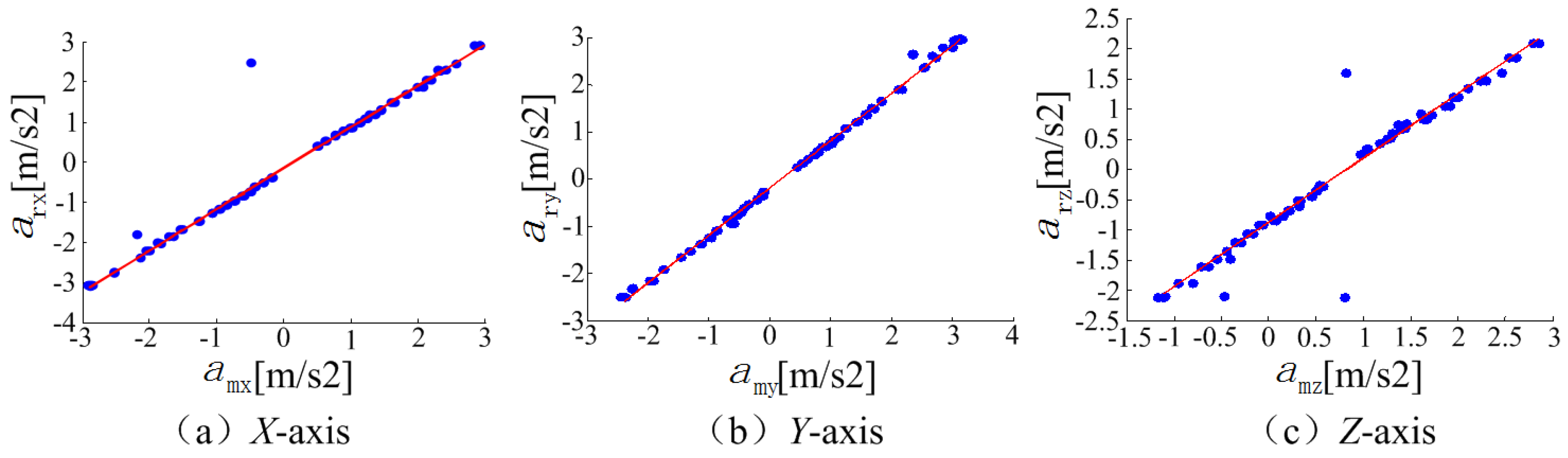

3.1.2. Accelerometer

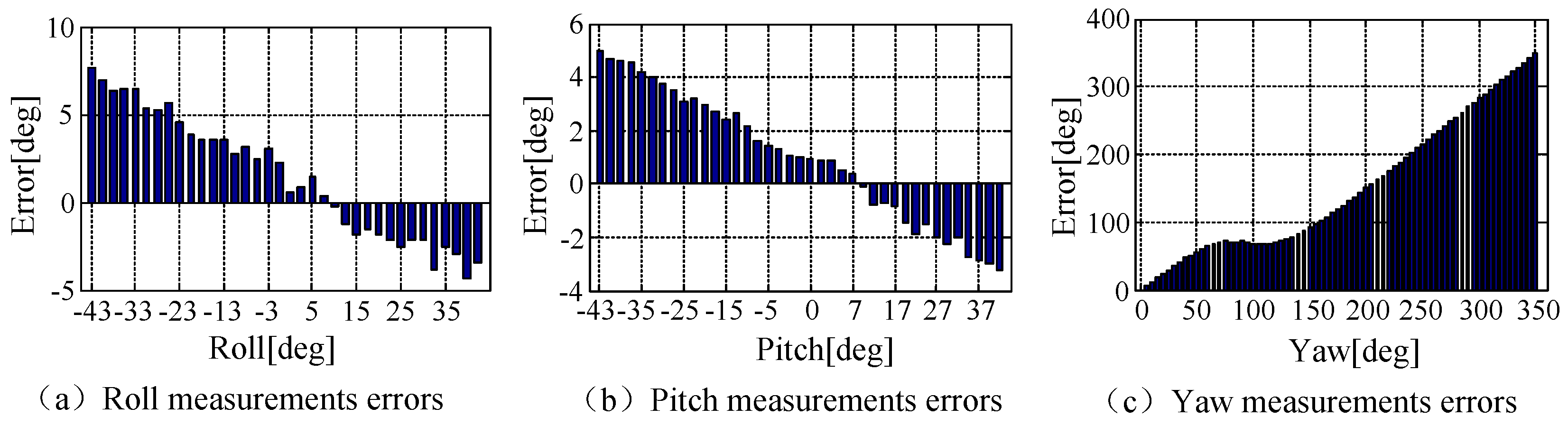

3.1.3. Orientation Sensor

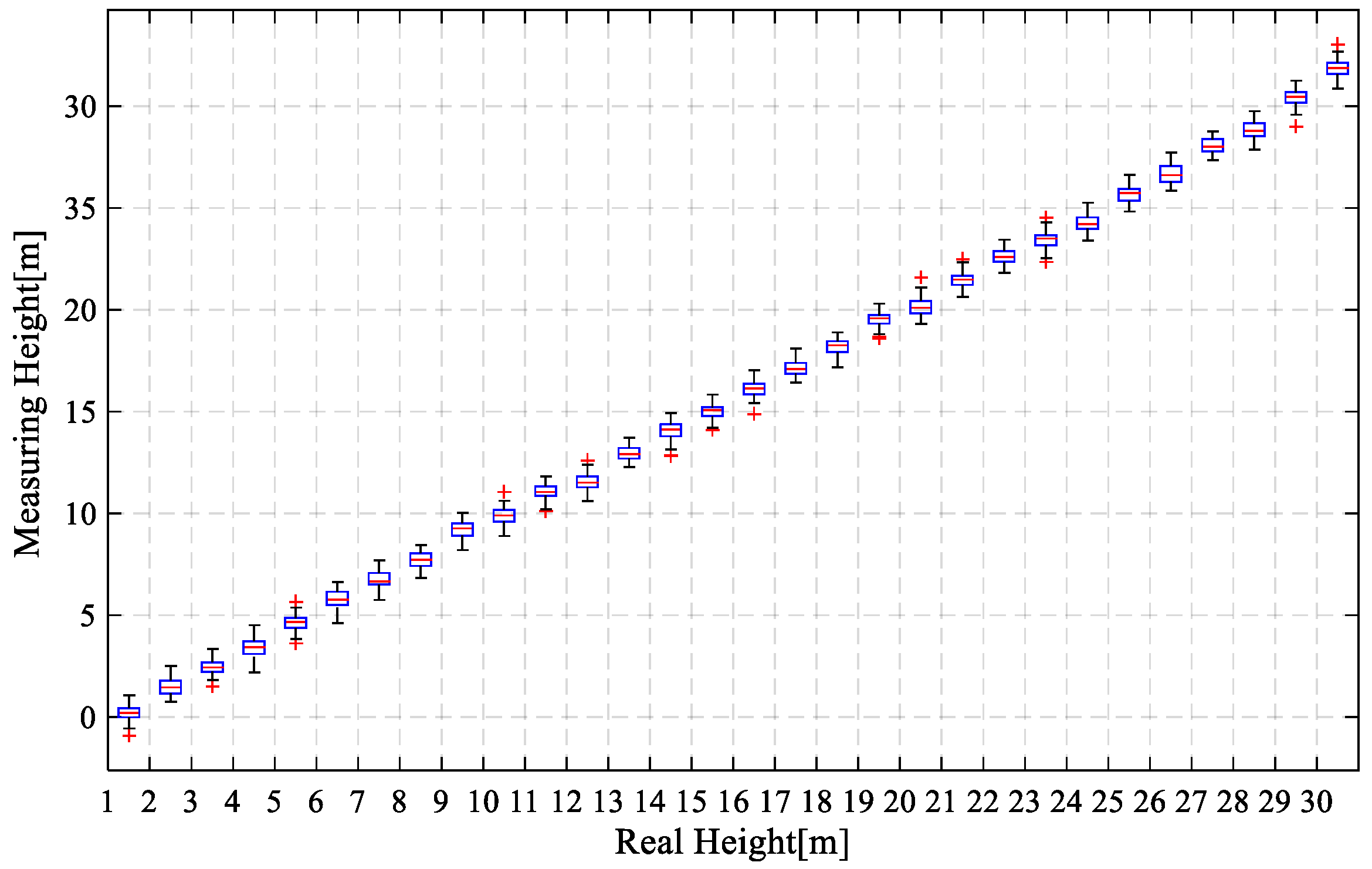

3.1.4. Pressure Sensor

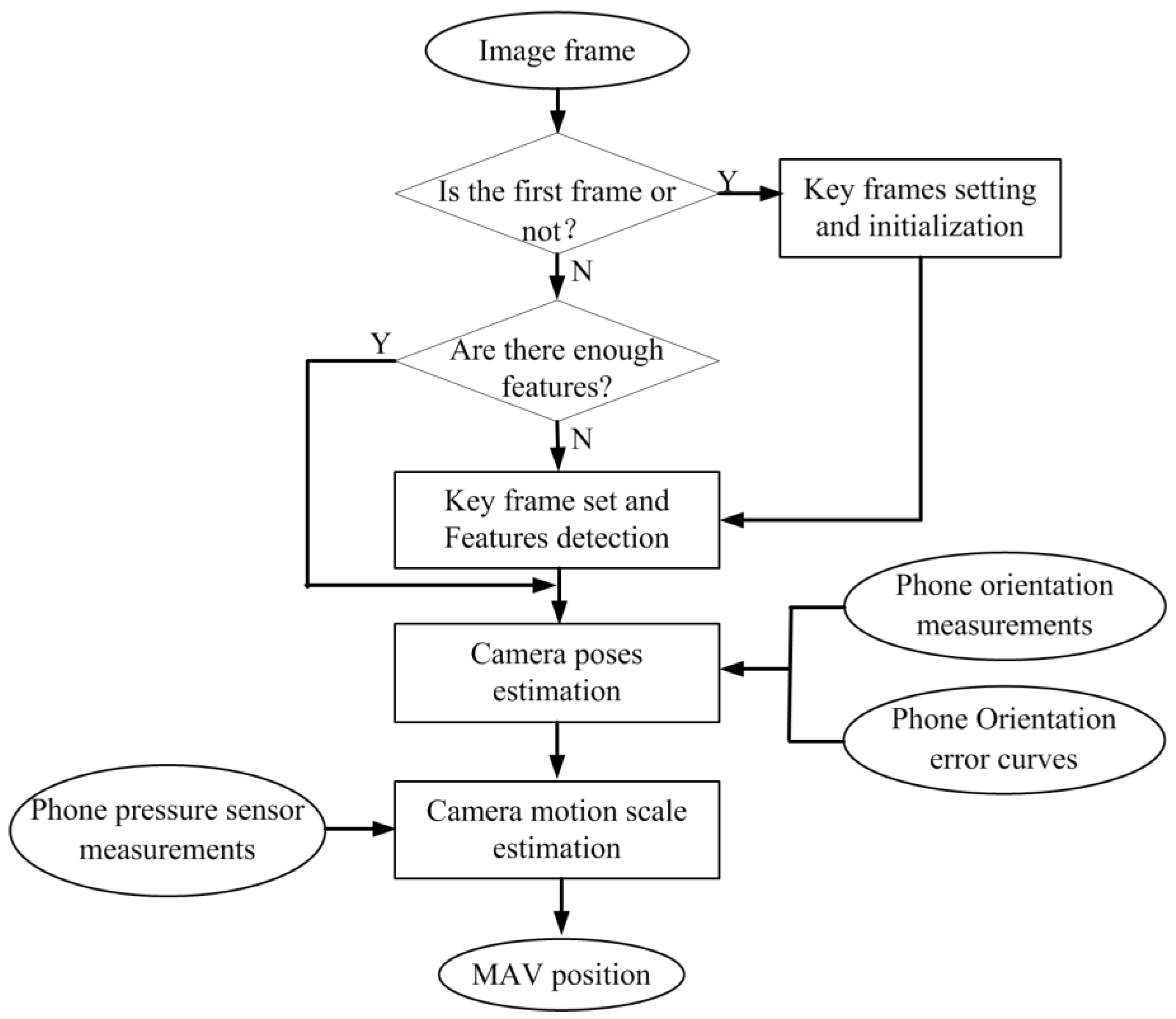

3.2. Multi-Phone-Sensor Fusion-Based Visual Odometry (MFVO)

4. Experiments and Results

4.1. Phone Sensors Accuracy Assessment

4.1.1. Gyroscope

4.1.2. Accelerometer

4.1.3. Orientation Sensor

4.1.4. Pressure Sensor

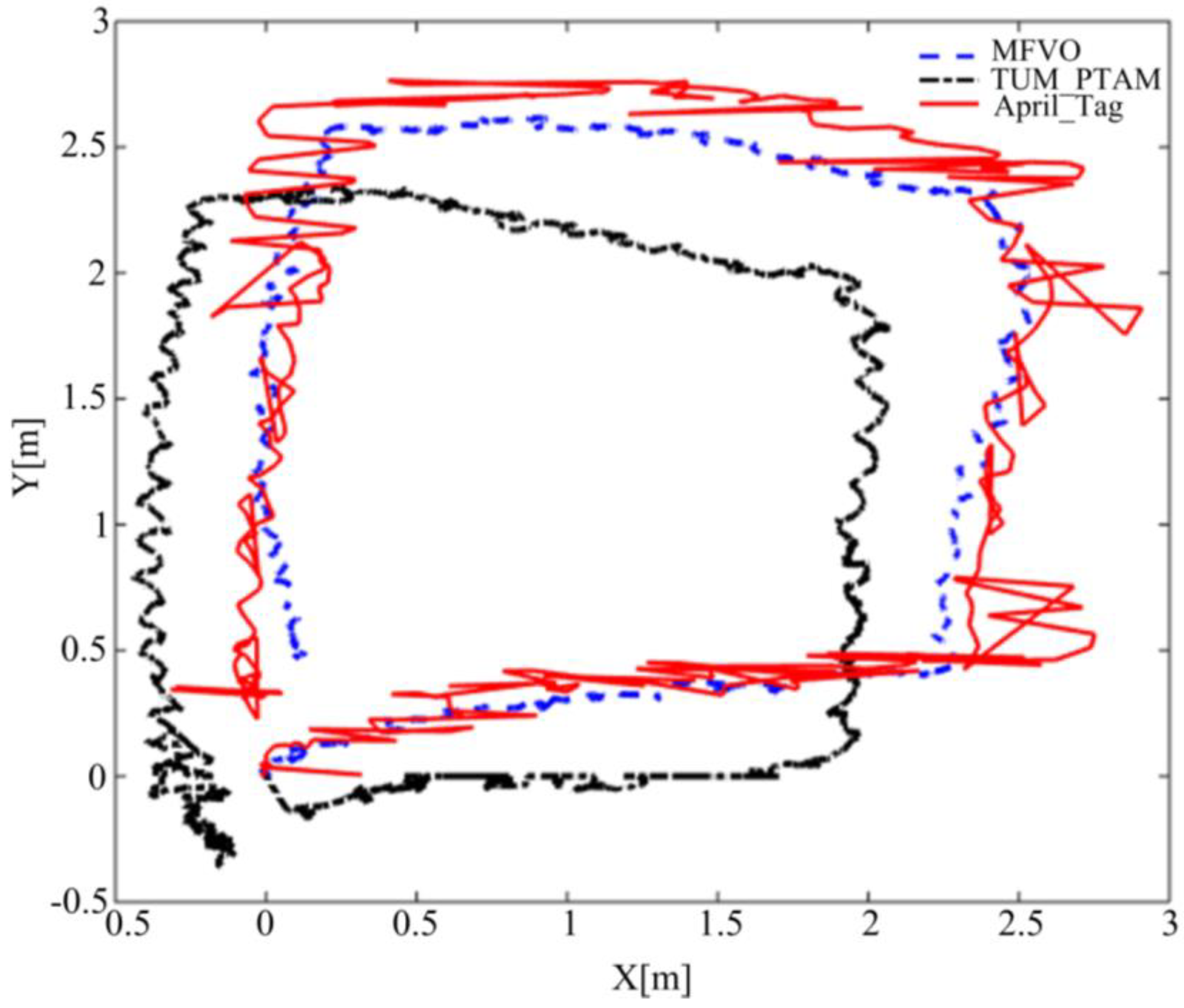

4.2. Algorithm Accuracy Assessment

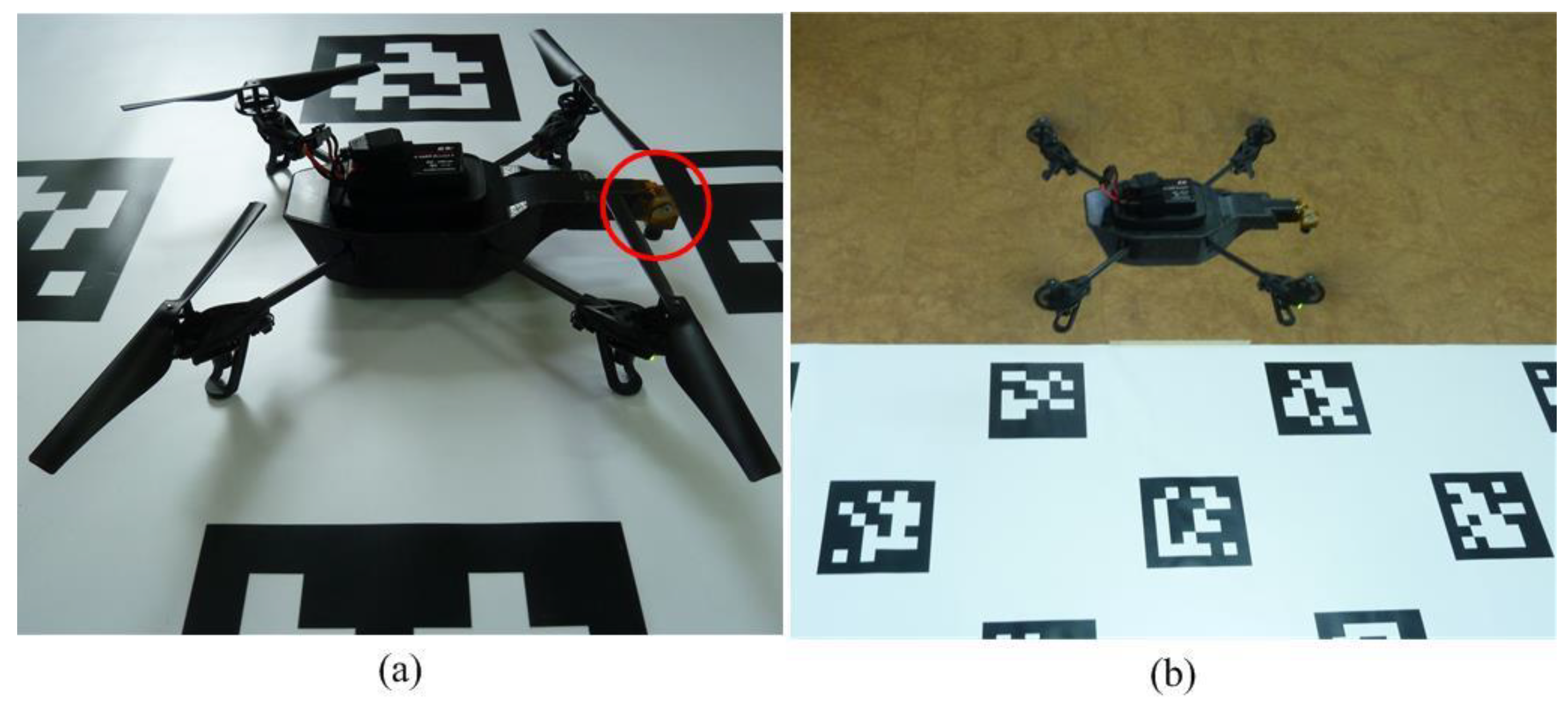

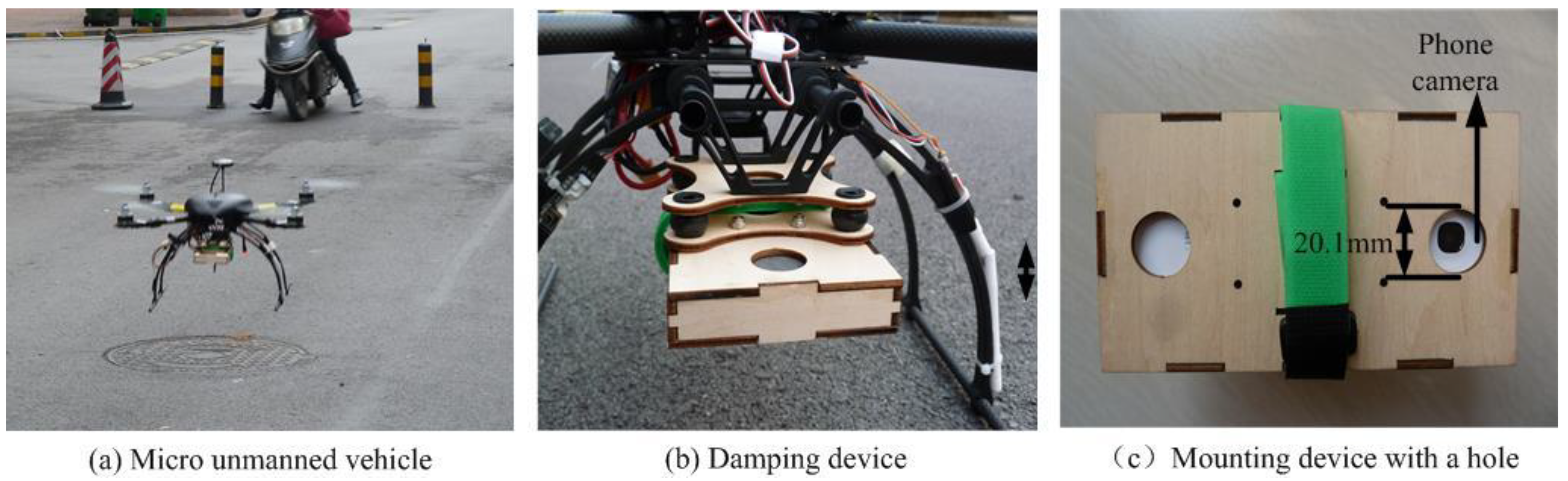

4.2.1. Parrot AR Drone Experiment

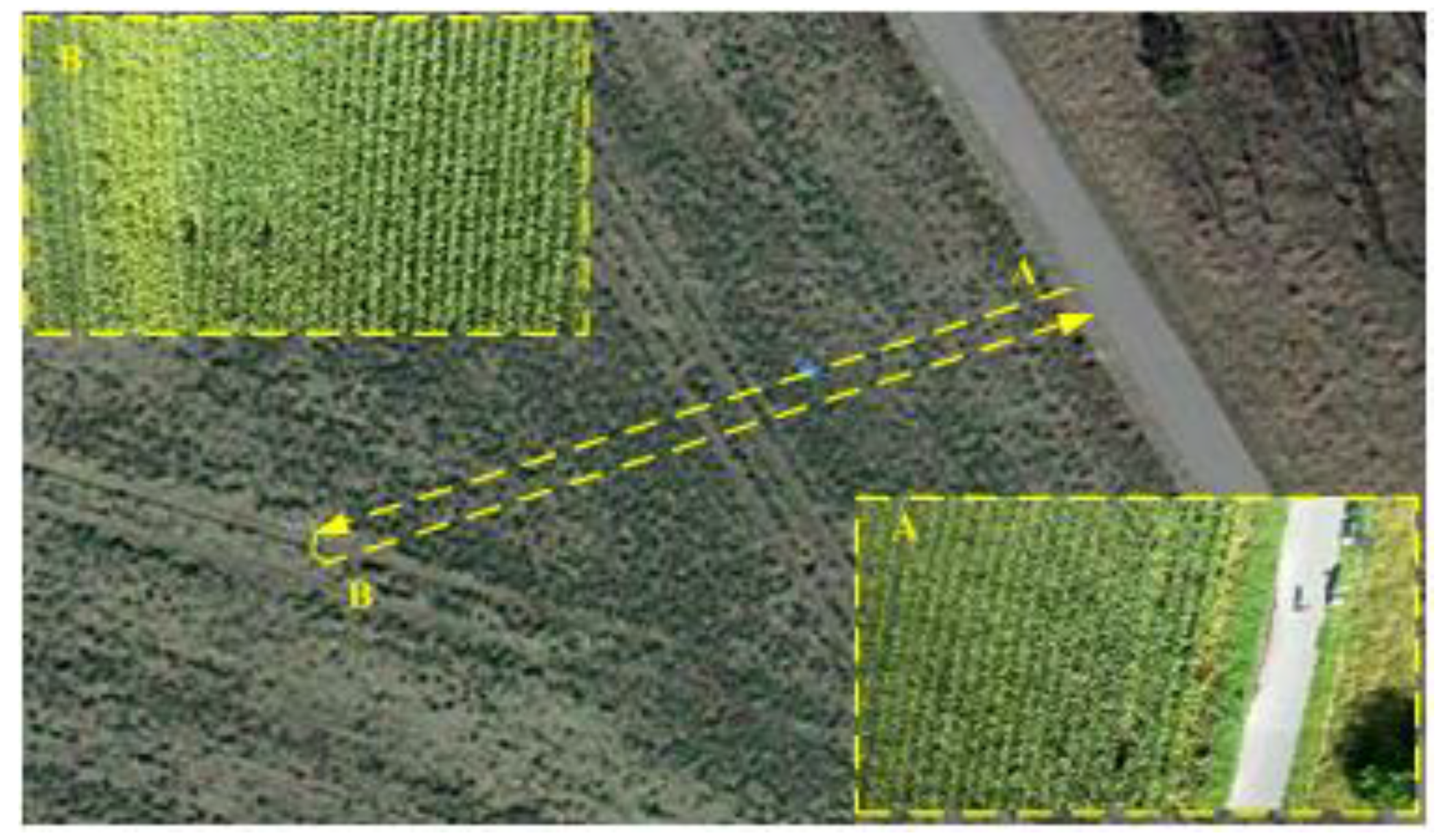

4.2.2. Flying Experiment in the Wheat Fields

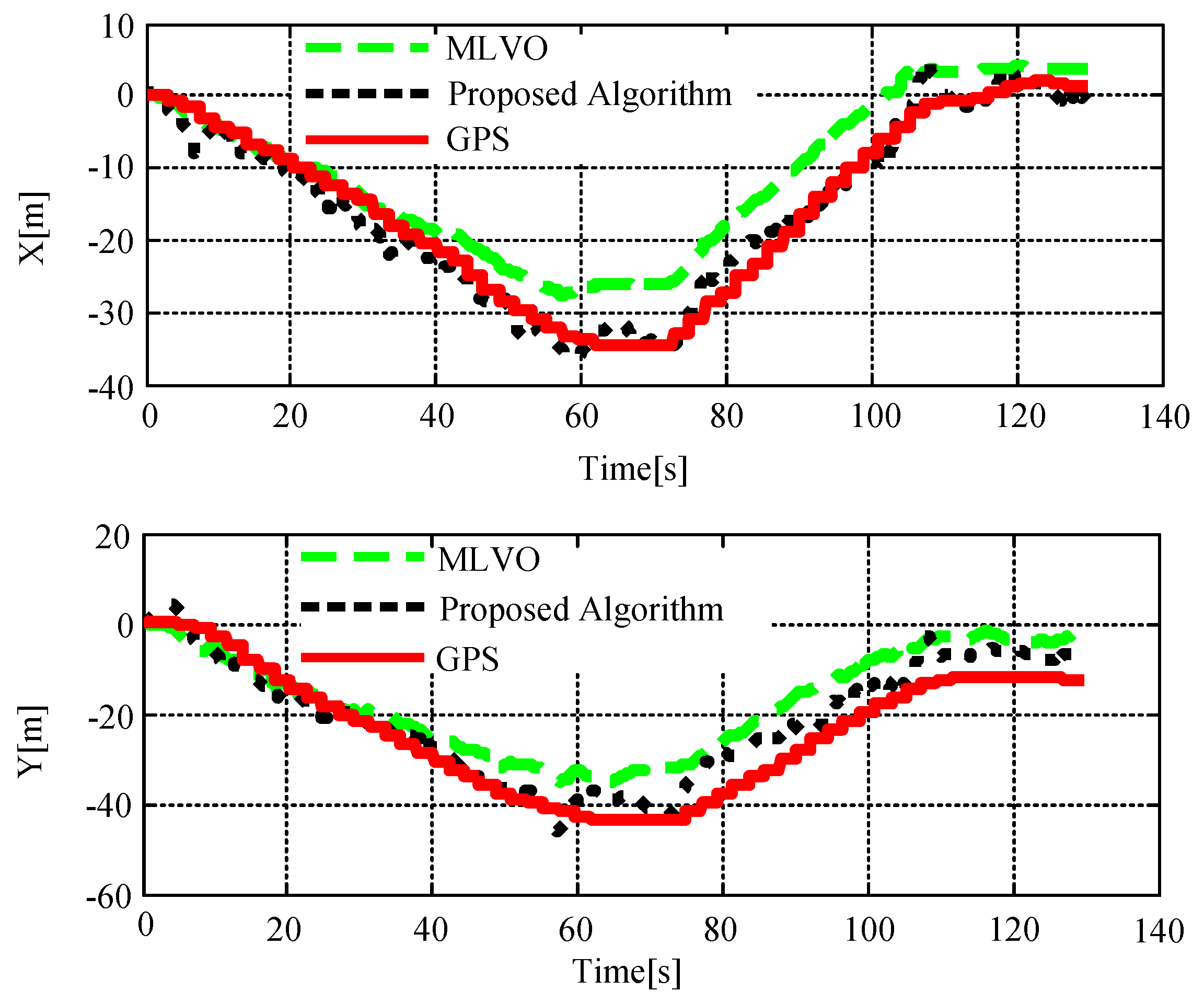

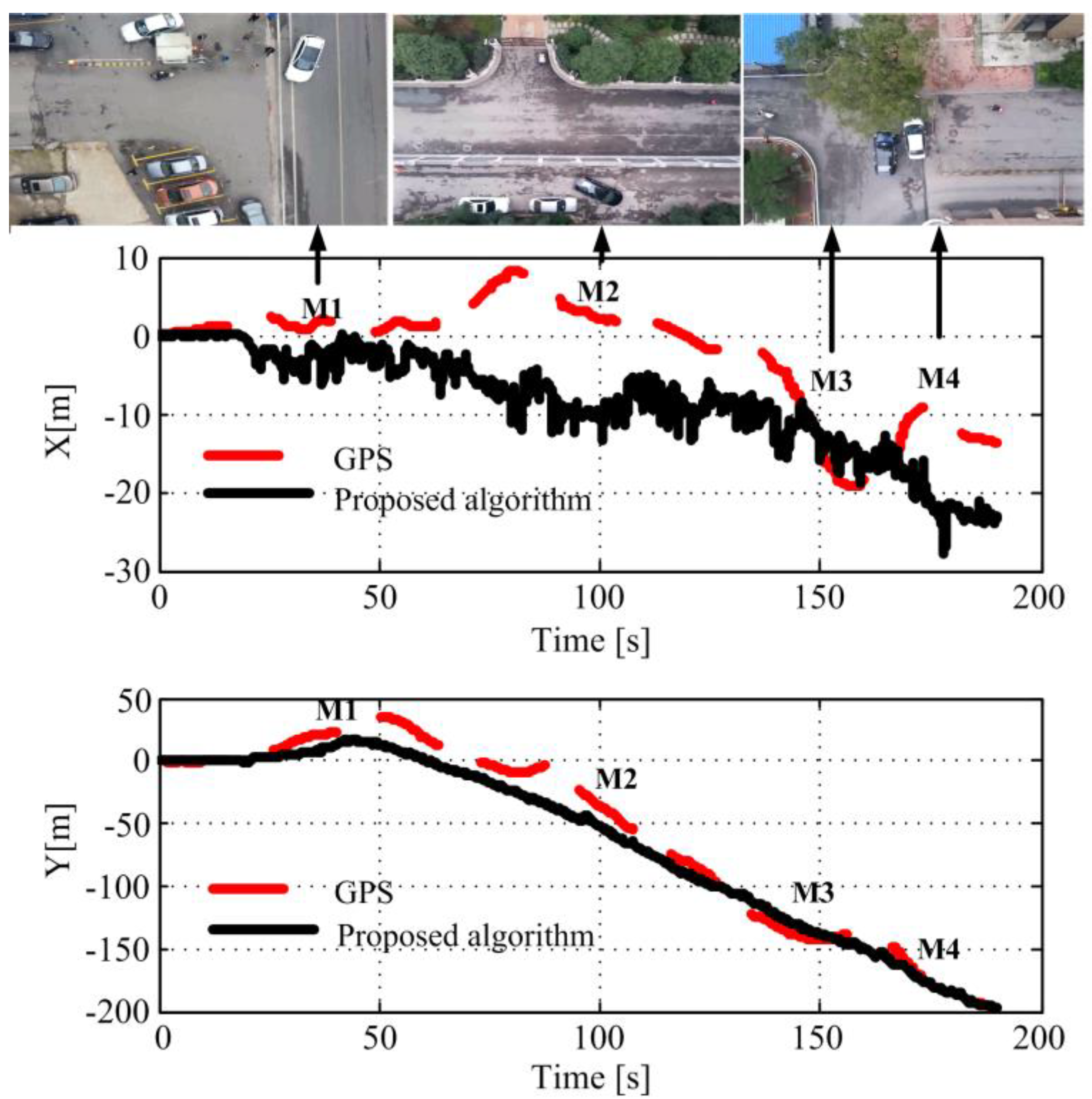

4.2.3. Flying Experiment in the Urban Environment

5. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Suresh, M.; Ghose, D. UAV Grouping and Coordination Tactics for Ground Attack Missions. IEEE Trans. Aerosp. Electron. Syst. 2012, 48, 673–692.

2. Cesare, K.; Skeele, R.; Yoo, S.H.; Zhang, Y.; Hollinger, G. Multi-UAV exploration with limited communication and battery. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015.

3. Chmaj, G.; Selvaraj, H. Distributed Processing Applications for UAV/drones: A Survey; Springer International Publishing: Cham, Switzerland, 2015.

4. Bahnik, P.; Gerke, M.; Masár, I.; Jelenčiak, F.; Beyer, V. Collaborative data-exchange architecture for crisis management using an UAV as a mobile sensor platform. In Proceedings of the 2013 International Conference on Process Control (PC), Strbske Pleso, Slovakia, 18–21 June 2013; pp. 365–370.

5. Weiss, S.; Achtelik, M.W.; Lynen, S.; Achtelik, M.C.; Kneip, L.; Chli, M.; Siegwart, R. Monocular Vision for Long-term Micro Aerial Vehicle State Estimation: A Compendium. J. Field Robot. 2013, 30, 803–831.

6. Lutwak, R. Micro-technology for positioning, navigation, and timing towards PNT everywhere and always. In Proceedings of the 2014 International Symposium on Inertial Sensors and Systems (ISISS), Laguna Beach, CA, USA, 25–26 February 2014.

7. Zhao, B.; Hellwich, O.; Hu, T.; Zhou, D.; Niu, Y.; Shen, L. Employing smartphone as on-board navigator in unmanned aerial vehicles: implementation and experiments. Ind. Robot 2015, 42, 306–313.

8. Forster, C.; Pizzoli, M.; Scaramuzza, D. SVO: Fast semi-direct monocular visual odometry. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 15–22.

9. Engel, J.; Sturm, J.; Cremers, D. Semi-dense Visual Odometry for a Monocular Camera. In Proceedings of the 2013 IEEE International Conference on Computer Vision (ICCV), Sydney, NSW, Australia, 1–8 December 2013; pp. 1449–1456.

10. Koletschka, T.; Puig, L.; Daniilidis, K. MEVO: Multi-environment stereo visual odometry. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2014), Chicago, IL, USA, 14–18 September 2014; pp. 4981–4988.

11. Wang, T.; Wang, C.; Liang, J.; Zhang, Y. Rao-Blackwellized visual SLAM for small UAVs with vehicle model partition. Ind. Robot 2014, 41, 266–274.

12. Klein, G.; Murray, D. Parallel Tracking and Mapping for Small AR Workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 225–234.

13. Li, B.; Liu, H.; Zhang, J.; Zhao, X.; Zhao, B. Small UAV autonomous localization based on multiple sensors fusion. In Proceedings of the 2017 IEEE 2nd Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 25–26 March 2017; pp. 296–303.

14. Google. Project Tango. February 2014. Available online: https://www.google.com/atap/projecttango/ (accessed on 15 August 2017).

15. Lee, J. 4-1: Invited Paper: Mobile AR in Your Pocket with Google Tango. SID Symp. Dig. Tech. Pap. 2017, 48, 17–18.

16. Chen, Z.; Zou, H.; Jiang, H.; Zhu, Q.; Chai, Y.; Xie, L. Fusion of WiFi, Smartphone Sensors and Landmarks Using the Kalman Filter for Indoor Localization. Sensors 2015, 15, 715–732.

17. Bo, C.; Li, X.Y.; Jung, T.; Mao, X.; Tao, Y.; Yao, L. SmartLoc: Push the limit of the inertial sensor based metropolitan localization using smartphone. In Proceedings of the 19th Annual International Conference on Mobile Computing & Networking, Miami, FL, USA, 30 September–4 October 2013; pp. 195–198.

18. Yang, M.D.; Su, T.C.; Lin, H.Y. Fusion of Infrared Thermal Image and Visible Image for 3D Thermal Model Reconstruction Using Smartphone Sensors. Sensors 2018, 18, 2003.

19. Erhard, S.; Wenzel, K.E.; Zell, A. Flyphone: Visual Self-Localisation Using a Mobile Phone as Onboard Image Processor on a Quadrocopter. J. Intell. Robot. Syst. 2010, 57, 451–465.

20. Yun, M.H.; Kim, J.; Seo, D.; Lee, J.; Choi, C. Application Possibility of Smartphone as Payload for Photogrammetric UAV System. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, XXXIX-B4, 349–352.

21. Kim, J.; Lee, S.; Ahn, H.; Seo, D.; Park, S.; Choi, C. Feasibility of employing a smartphone as the payload in a photogrammetric UAV system. ISPRS J. Photogramm. Remote Sens. 2013, 79, 1–18.

22. Mossel, A.; Leichtfried, M.; Kaltenriner, C.; Kaufmann, H. SmartCopter: Enabling autonomous flight in indoor environments with a smartphone as on-board processing unit. Int. J. Pervasive Comput. Commun. 2014, 10, 92–114.

23. Loianno, G.; Mulgaonkar, Y.; Brunner, C.; Ahuja, D.; Ramanandan, A.; Chari, M.; Diaz, S.; Kumar, V. Smartphones power flying robots. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015.

24. Astudillo, A.; Muñoz, P.; Álvarez, F.; Rosero, E. Altitude and attitude cascade controller for a smartphone-based quadcopter. In Proceedings of the 2017 International Conference on Unmanned Aircraft Systems (ICUAS), Miami, FL, USA, 13–16 June 2017; pp. 1447–1454.

25. Pedro, D.; Tomic, S.; Bernardo, L.; Beko, M.; Oliveira, R.; Dinis, R.; Pinto, P. Localization of Static Remote Devices Using Smartphones. In Proceedings of the 2018 IEEE 87th Vehicular Technology Conference (VTC Spring), Porto, Portugal, 3–6 June 2018.

26. Wu, C.; Yang, Z.; Xiao, C. Automatic Radio Map Adaptation for Indoor Localization using Smartphones. IEEE Trans. Mob. Comput. 2018, 17, 517–528.

27. Zhang, Y.; Chao, A.; Zhao, B.; Liu, H.; Zhao, X. Migratory birds-inspired navigation system for unmanned aerial vehicles. In Proceedings of the 2016 IEEE International Conference on Information and Automation (ICIA), Ningbo, China, 1–3 August 2016; pp. 276–281.

28. Engel, J.; Sturm, J.; Cremers, D. Scale-Aware Navigation of a Low-Cost Quadrocopter with a Monocular Camera. Robot. Auton. Syst. 2014, 62, 1646–1656.

29. Bian, C.; Xia, S.; Xu, Y.; Chen, S.; Cui, Z. A Micro Amperometric Immunosensor Based on MEMS. Rare Metal Mater. Eng. 2006, 28, 339–341. (In Chinese)

30. Zhang, Z. Eight-Point Algorithm; Springer US: New York, NY, USA, 2014.

31. Welch, G.; Bishop, G. An Introduction to the Kalman Filter. Univ. North Carol. Chapel Hill 2001, 8, 127–132.

32. Olson, E. AprilTag: A robust and flexible visual fiducial system. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 3400–3407.

33. Zhao, B.; Hu, T.; Zhang, D.; Shen, L.; Ma, Z.; Kong, W. 2D monocular visual odometry using mobile-phone sensors. In Proceedings of the 2015 34th Chinese Control Conference (CCC), Hangzhou, China, 28–30 July 2015; pp. 5919–5924.

34. Cai, G.; Chen, B.M.; Dong, X.; Lee, T.H. Design and implementation of a robust and nonlinear flight control system for an unmanned helicopter. Mechatronics 2011, 21, 803–820.

35. Elbanna, A.E.A.; Soliman, T.H.M.; Ouda, A.N.; Hamed, E.M. Improved Design and Implementation of Automatic Flight Control System (AFCS) for a Fixed Wing Small UAV. Radioengineering 2018, 27, 882–890.

| Sensor | Sampling Frequency [Hz] |
|---|---|
| Camera | 30 |
| Orientation sensor | 20 |
| Pressure sensor | 40 |
| Accelerometer | 50 |
| Gyroscope | 50 |
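The sensors in the table run at different rates, so any fusion step needs a common time base. The following is a minimal sketch that assumes each stream is resampled onto the camera frame timestamps by linear interpolation; this section does not describe the paper's synchronization scheme. Orientation angles are unwrapped before interpolation so that a jump between +π and −π is not averaged through zero. The function names and the yaw signal are hypothetical.

```python
import numpy as np

# Nominal sampling rates from the table above [Hz]
RATES_HZ = {"camera": 30, "orientation": 20, "pressure": 40,
            "accelerometer": 50, "gyroscope": 50}


def timestamps(rate_hz, duration_s):
    """Ideal, jitter-free sample times for a stream (real logs carry jitter)."""
    return np.arange(0.0, duration_s, 1.0 / rate_hz)


def align_to_frames(frame_t, sensor_t, sensor_v, angular=False):
    """Linearly interpolate one scalar sensor stream onto camera frame times.

    With angular=True the values are treated as angles in radians: they are
    unwrapped before interpolation and wrapped back to [-pi, pi) afterwards.
    """
    v = np.asarray(sensor_v, dtype=float)
    if angular:
        v = np.unwrap(v)
    out = np.interp(frame_t, sensor_t, v)
    if angular:
        out = (out + np.pi) % (2.0 * np.pi) - np.pi
    return out


# Hypothetical example: yaw turning at 2 rad/s, crossing the +pi/-pi boundary
frame_t = timestamps(RATES_HZ["camera"], 2.0)
yaw_t = timestamps(RATES_HZ["orientation"], 2.0)
yaw = (3.0 + 2.0 * yaw_t + np.pi) % (2.0 * np.pi) - np.pi
yaw_at_frames = align_to_frames(frame_t, yaw_t, yaw, angular=True)
```

`np.interp` holds the first and last sensor values constant outside the sensor's time span. Frames after the last orientation sample (here, after 1.95 s) therefore reuse that sample; a real-time implementation might instead buffer frames until the next sample arrives.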

| Parameter | Setting |
|---|---|
| Camera resolution [pixels] | |
| Image segmentation N | 4 |
| Orientation threshold | |
| Manhattan distance | |
| Maximum number of features | 200 |
| Minimum number of features for keyframe selection | 100 |
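The parameters in the table suggest a grid-based feature budget and a feature-count keyframe rule. The sketch below shows one possible reading under stated assumptions. N = 4 is taken to mean a 2 × 2 grid with an equal share of the 200 features per cell. A keyframe is inserted when fewer than 100 features remain tracked. The orientation threshold and Manhattan distance, whose values are missing from the table, are treated as hypothetical triggers on yaw change and on mean L1 feature displacement. None of this is confirmed as the MFVO implementation. The class and function names, the threshold values, and the 640 × 480 image size are all hypothetical.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class KeyframeParams:
    orientation_threshold: float  # value missing in the table; radians assumed
    manhattan_threshold: float    # value missing in the table; pixels assumed
    n_segments: int = 4           # "Image segmentation N", read as a 2 x 2 grid
    max_features: int = 200       # "Maximum number of features"
    min_keyframe_features: int = 100


def cap_features_per_cell(pts, scores, width, height, p):
    """Keep at most max_features // n_segments of the strongest features per cell.

    pts: (K, 2) array of pixel coordinates (x, y); scores: (K,) detector responses.
    Returns the sorted indices of the features that are kept.
    """
    side = int(round(np.sqrt(p.n_segments)))  # assumes N is a perfect square
    per_cell = p.max_features // p.n_segments
    cx = np.minimum((pts[:, 0] * side // width).astype(int), side - 1)
    cy = np.minimum((pts[:, 1] * side // height).astype(int), side - 1)
    cell = cy * side + cx
    keep = []
    for c in range(side * side):
        idx = np.flatnonzero(cell == c)
        idx = idx[np.argsort(-scores[idx])][:per_cell]  # strongest first
        keep.extend(idx.tolist())
    return np.sort(np.array(keep, dtype=int))


def is_new_keyframe(prev_pts, curr_pts, yaw_change, p):
    """Hypothetical keyframe rule; prev_pts/curr_pts are matched (K, 2) arrays."""
    if len(curr_pts) < p.min_keyframe_features:
        return True  # too few tracked features left
    if abs(yaw_change) > p.orientation_threshold:
        return True  # large rotation reported by the orientation sensor
    # Mean Manhattan (L1) displacement of the tracked features
    parallax = np.mean(np.abs(curr_pts - prev_pts).sum(axis=1))
    return bool(parallax > p.manhattan_threshold)


# Usage with hypothetical values (640 x 480 image, thresholds chosen arbitrarily)
rng = np.random.default_rng(1)
p = KeyframeParams(orientation_threshold=0.2, manhattan_threshold=15.0)
pts = rng.uniform([0.0, 0.0], [640.0, 480.0], size=(500, 2))
kept = cap_features_per_cell(pts, rng.random(500), 640, 480, p)
print(len(kept), is_new_keyframe(pts[kept], pts[kept] + 3.0, 0.05, p))
```

Capping features per cell keeps them spread across the image, which usually conditions pose estimation better than letting one textured region supply all 200 features. The L1 (Manhattan) displacement is cheaper to compute than the Euclidean norm, which matters on a phone.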

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhao, B.; Chen, X.; Zhao, X.; Jiang, J.; Wei, J. Real-Time UAV Autonomous Localization Based on Smartphone Sensors. Sensors 2018, 18, 4161. https://doi.org/10.3390/s18124161
