Abstract

Gaofen-4 is China’s first geosynchronous orbit high-definition optical imaging satellite with extremely high temporal resolution. Its staring imaging mode and high temporal resolution make it possible to apply super-resolution to multiple images of the same scene. In this paper, we propose a super-resolution (SR) technique to reconstruct a higher-resolution image from multiple low-resolution (LR) satellite images. The method first performs image registration in both the spatial and range domains. Then the point spread function (PSF) of the LR images is parameterized by a Gaussian function and estimated by a blind deconvolution algorithm based on maximum a posteriori (MAP) estimation. Finally, the high-resolution (HR) image is reconstructed by a MAP-based SR algorithm. The MAP cost function includes a data fidelity term and a regularization term. The data fidelity term uses the L2 norm, and the regularization term employs the Huber-Markov prior, which reduces noise and artifacts while preserving image edges. Experiments with real Gaofen-4 images show that the reconstructed images are sharper and contain more details than Google Earth ones.

1. Introduction

Images with higher resolution are required in most electronic imaging applications, such as remote sensing, medical diagnostics, and video surveillance. During the past decades, considerable advances have been realized in imaging systems. However, the quality of images is still limited by the cost and the manufacturing technology [1]. Super-resolution (SR) is a promising digital image processing technique to construct high-resolution (HR) images from several blurred low-resolution (LR) images.

The basic idea of SR is that the LR images of the same scene contain different information because of relative sub-pixel shifts; thus, an HR image with more spatial information can be reconstructed by image fusion. Sub-pixel motion can occur due to the movement of local objects, vibration of the imaging system, or even controlled micro-scanning [2,3]. Numerous SR algorithms have been proposed since the concept of SR was introduced by Tsai and Huang [4] in the frequency domain. However, most researchers nowadays address the problem mainly in the spatial domain, because it is more flexible for modeling all kinds of image degradations [5]. Ur and Gross [6] proposed a non-uniform interpolation of multiple spatially-shifted LR images based on the generalized multichannel sampling theorem, followed by a deblurring process. Stark et al. [7] were the first to suggest an SR algorithm based on the projection onto convex sets (POCS) approach. Patti et al. [8] extended the method to handle arbitrary sampling lattices and non-zero aperture time. The advantage of the POCS method is that it allows a convenient inclusion of prior knowledge into the reconstruction process. Irani and Peleg [9] estimated the HR image by back projecting the error between re-projected LR images and the observed LR images. Because the SR problem is ill-posed, regularization methods are widely used in SR reconstruction. Schultz and Stevenson [10] reconstructed a single HR video frame from a short image sequence using the maximum a posteriori (MAP) approach with a discontinuity-preserving image prior. They used a block-matching method to estimate the sub-pixel displacement vectors between LR images. A MAP framework for jointly estimating image registration parameters and the HR image was presented by Hardie et al. [11]. The sub-pixel displacement vectors were iteratively updated along with the HR image in a cyclic coordinate-descent optimization procedure. Zomet et al. [12] proposed a robust SR algorithm in which a median estimator was used in computing the gradient of the L2 norm cost function. Farsiu et al. [13] used a cost function in the L1 norm, rather than modifying the gradient directly, to further increase the robustness.

For remote sensing applications, researchers have mainly focused on single-frame SR based on learning or sparse representation, because multiple images of the same scene are usually lacking [14,15,16,17]. Nevertheless, some researchers have contributed multi-frame methods. Merino and Núñez [18] proposed a method named Super-Resolution Variable-Pixel Linear Reconstruction (SRVPLR) to reconstruct an HR image from multiple LR images acquired over a long period. This algorithm was derived from drizzle [19], which was designed to work with dithered, under-sampled astronomical images. A prior histogram matching of the LR images and highly accurate geometric corrections were introduced to make it compatible with remotely-sensed images. Shen et al. [20] proposed an SR method for MODIS remote sensing images, where image registration in the range and spatial domains was performed before image reconstruction. Li et al. [21] proposed a MAP-based SR algorithm with a universal hidden Markov tree model, and tested it with Landsat7 panchromatic images captured on different days. Fan et al. [22] proposed a POCS-based SR algorithm with a point spread function (PSF) estimated by a slant knife-edge method. The method was tested on Airborne Digital Sensor 40 (ADS40) three-line array images and the overlapped areas of two adjacent Gaofen-2 images.

This paper presents a technique that performs multi-frame super-resolution of Gaofen-4 satellite images. Gaofen-4 is a staring imaging satellite with high temporal resolution, but its high orbit limits its spatial resolution; multi-frame SR is therefore both possible and essential. First, we build the observation model for the Gaofen-4 satellite; then we propose the pipeline of our MAP-based SR algorithm. Our algorithm consists of three steps: image registration, PSF estimation, and image fusion. Image registration is performed in both the range and spatial domains, and the Gaussian PSFs of the LR images are estimated via a modified MAP-based blind deconvolution algorithm. Finally, the HR image is estimated by an image fusion method based on the MAP method with a Huber image prior, while the registration parameters are further refined along with the HR image.

2. Materials and Methods

2.1. Observation Model

To analyze the SR problem comprehensively, an image observation model must be formulated first. For notational simplicity, each image in our method is represented as a lexicographically-ordered vector. Let us consider the desired HR image $\mathbf{x}$ of size $LN_1 \times LN_2$, where $L$ denotes the downsampling factor in the observation model, and $N_1$ and $N_2$ are the number of rows and columns of the LR images, respectively. Generally, the observation model relating the HR image and the $k$-th LR image is given by [1]:

$$\mathbf{y}_k = \mathbf{D}_k \mathbf{B}_k \mathbf{M}_k \mathbf{x} + \mathbf{n}_k \tag{1}$$

where $\mathbf{M}_k$ is the subpixel motion matrix of size $L^2N_1N_2 \times L^2N_1N_2$; $\mathbf{B}_k$ represents the blur matrix of size $L^2N_1N_2 \times L^2N_1N_2$; $\mathbf{D}_k$ denotes the downsampling matrix of size $N_1N_2 \times L^2N_1N_2$; $\mathbf{n}_k$ is the lexicographically-ordered additive Gaussian noise vector with zero mean; and $\mathbf{y}_k$ represents the lexicographically-ordered LR image vector of size $N_1N_2 \times 1$.

The observation model represented by Equation (1) assumes an illumination-invariant environment. However, this assumption may not be valid due to the variation of light conditions over time. The modified observation model is given by:

$$\mathbf{y}_k = \alpha_k \mathbf{D} \mathbf{B} \mathbf{M}_k \mathbf{x} + \beta_k \mathbf{1} + \mathbf{n}_k \tag{2}$$

where $\alpha_k$ and $\beta_k$ denote the contrast gain and illumination offset of the $k$-th LR image, respectively, and $\mathbf{1}$ is a vector of ones with the same size as the LR image vector. The motion matrix could vary in time, while the downsampling matrix and blur matrix remain constant over time for most situations. Therefore, $\mathbf{D}$ and $\mathbf{B}$ are used instead of $\mathbf{D}_k$ and $\mathbf{B}_k$, respectively. In the remainder of this section, we build the specific model for the Gaofen-4 satellite.
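As a rough illustration of this observation model, the following Python sketch simulates one LR frame from an HR image. It is not the authors' implementation: the function name `observe_lr_frame` and all of its parameters are hypothetical, the motion is simplified to an integer circular shift on the HR grid, and block averaging stands in for the blur and downsampling operators.

```python
import numpy as np

def observe_lr_frame(hr, shift, gain, offset, factor, noise_std, rng):
    """Simulate one LR frame: warp, blur + downsample, gain/offset, noise."""
    hr = np.asarray(hr, dtype=float)
    # Motion: an integer circular shift on the HR grid (assumption); a shift
    # of one HR pixel corresponds to 1/factor of an LR pixel.
    warped = np.roll(hr, shift, axis=(0, 1))
    # Blur and downsampling: averaging factor x factor blocks acts as a box
    # PSF followed by decimation (assumption, in place of a Gaussian PSF).
    rows = warped.shape[0] // factor
    cols = warped.shape[1] // factor
    blocks = warped[:rows * factor, :cols * factor]
    blocks = blocks.reshape(rows, factor, cols, factor)
    lr = blocks.mean(axis=(1, 3))
    # Photometric change and additive zero-mean Gaussian noise.
    return gain * lr + offset + rng.normal(0.0, noise_std, lr.shape)

rng = np.random.default_rng(0)
hr = np.arange(64, dtype=float).reshape(8, 8)
frame = observe_lr_frame(hr, shift=(1, 0), gain=1.05, offset=2.0,
                         factor=2, noise_std=0.5, rng=rng)
print(frame.shape)  # (4, 4)
```

Calling the function several times with different shifts, gains, and offsets yields a small stack of LR frames whose sub-pixel offsets carry complementary information about the HR image.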

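The imaging geometry of remote sensing sensors can be modeled by rational polynomial coefficients (RPC). However, for registering images taken by the Gaofen-4 staring imaging satellite, the scene can be effectively modeled as a planar region because of the high orbit, provided that the region of interest (ROI) is not too large; hence, a simple affine camera model is sufficient to describe the image geometry. The affine transformation contains six parameters and can be written as:

$$\begin{bmatrix} u' \\ v' \end{bmatrix} = \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix} \begin{bmatrix} u \\ v \end{bmatrix} + \begin{bmatrix} t_u \\ t_v \end{bmatrix} \tag{3}$$

where $(u, v)$ and $(u', v')$ are the pixel coordinates before and after the transformation; $t_u$ and $t_v$ denote translation along the $u$ and $v$ directions, respectively; and $a_{11}$, $a_{12}$, $a_{21}$, and $a_{22}$ are the parameters representing rotation, scale and deformation. Using matrix notation, the entire set of affine motion parameters is represented by $\mathbf{A} = \begin{bmatrix} a_{11} & a_{12} & t_u \\ a_{21} & a_{22} & t_v \end{bmatrix}$.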
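The six-parameter affine model can be made concrete with a short Python sketch. The function name `affine_apply` and the 2x3 parameter layout (linear part in the first two columns, translation in the third) are assumptions chosen for illustration.

```python
import numpy as np

def affine_apply(params, points):
    """Map (N, 2) points with a 2x3 affine matrix [linear part | translation]."""
    params = np.asarray(params, dtype=float)
    points = np.asarray(points, dtype=float)
    linear = params[:, :2]       # rotation, scale and deformation
    translation = params[:, 2]   # shifts along the two axes
    return points @ linear.T + translation

# A pure translation by (2, -1) pixels.
shift_only = [[1.0, 0.0, 2.0],
              [0.0, 1.0, -1.0]]
print(affine_apply(shift_only, [[0.0, 0.0], [1.0, 1.0]]))
```

With the identity as the linear part, the model reduces to a pure translation; small deviations from the identity express the slight rotation and scale changes between frames.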

We assume a shift-invariant, convolutional blur, which can be described by the PSF in the spatial domain or the modulation transfer function (MTF) in the frequency domain. Remote sensing images can be blurred by the atmosphere, the optical system, relative motion, and pixel integration. The overall PSF is the successive convolution of all of the individual terms:

$$h = h_{\mathrm{atm}} * h_{\mathrm{opt}} * h_{\mathrm{mot}} * h_{\mathrm{sen}} \tag{4}$$

where $*$ denotes convolution, and $h_{\mathrm{atm}}$, $h_{\mathrm{opt}}$, $h_{\mathrm{mot}}$, and $h_{\mathrm{sen}}$ respectively summarize the atmospheric, optical system, motion and sensor contributions.

The atmosphere contributes through turbulence, light scattering, and attenuation. The spatial resolution of Gaofen-4 in the visible and near-infrared band is 50 m, and the contribution of turbulence at this spatial resolution is insignificant. The scattering and absorption of energy by aerosols affects all spatial frequencies and therefore blurs the edges in the image. The aerosol MTF can be approximated by a Gaussian form for spatial frequencies below the cut-off frequency [23].

The limited aperture size of the optical system causes a diffraction blur, and the corresponding PSF is given by the Airy disk. Other optical aberrations, such as defocus and spherical aberrations, also contribute to the PSF of the optical system, and can be seen as perturbations to the Airy disk. The PSF of a general optical system can be approximated by a Gaussian function:

$$h_{\mathrm{opt}}(u, v) = \frac{1}{2\pi\sigma_u\sigma_v} \exp\left(-\frac{u^2}{2\sigma_u^2} - \frac{v^2}{2\sigma_v^2}\right) \tag{5}$$

where $\sigma_u$ and $\sigma_v$ denote the standard deviations of the two-dimensional Gaussian function along the $u$ and $v$ directions, respectively.

The motion-related blur results from shift and vibrations. For pushbroom and whiskbroom sensors, the PSF can be characterized by a rectangular function along the scanning direction. We assume the motion blur of the Gaofen-4 satellite can be ignored for it is a staring imager equipped with focal-plane arrays.

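The finite size of the sensor pixels results in sensor blur, which represents the integration of irradiance over each pixel. The PSF is given by:

$$h_{\mathrm{sen}}(u, v) = \mathrm{rect}\left(\frac{u}{w}\right) \mathrm{rect}\left(\frac{v}{w}\right) \tag{6}$$

where $\mathrm{rect}(\cdot)$ is the rectangular function and $w$ is the size of a pixel.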

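All PSF terms can be gathered into a single Gaussian term, which is given by:

$$h(u, v) = \frac{1}{2\pi\sigma^2} \exp\left(-\frac{u^2 + v^2}{2\sigma^2}\right) \tag{7}$$

where we further assume that the standard deviations along the $u$ and $v$ directions are equal and denoted by $\sigma$.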
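A single isotropic Gaussian PSF of this kind can be sampled on a pixel grid as in the sketch below. The function name `gaussian_psf`, the three-sigma truncation radius, and the unit-sum normalization are assumptions made for illustration; the deviation value 1.21 is the one reported later for the real Gaofen-4 data.

```python
import numpy as np

def gaussian_psf(sigma, radius=None):
    """Sample an isotropic 2-D Gaussian PSF on an integer grid, normalized to sum 1."""
    if radius is None:
        radius = int(np.ceil(3.0 * sigma))  # truncate at about three sigma (assumption)
    axis = np.arange(-radius, radius + 1)
    xx, yy = np.meshgrid(axis, axis)
    kernel = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()

psf = gaussian_psf(1.21)
print(psf.shape)  # (9, 9)
```

Normalizing the kernel to unit sum keeps the mean image intensity unchanged when the PSF is applied by convolution.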

2.2. Image Registration

The success of the SR technique relies on the subpixel motion between images, and the image registration accuracy affects the quality of the reconstructed HR image significantly. Geometric registration and photometric registration are interdependent. Most photometric registration approaches require accurate geometric registration, and geometric registration may fail when images are not aligned photometrically. We first perform geometric registration coarsely using speeded up robust features (SURF) [24], which is invariant to image contrast gain and illumination bias. Then photometric and geometric registration are jointly performed using alternating minimization.

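The relationship between the target image $I_t$ and the reference image $I_r$ is given by the following model:

$$I_r(\mathbf{p}) = P\big(I_t(G(\mathbf{p}; \mathbf{A})); \boldsymbol{\theta}\big) + n(\mathbf{p}) \tag{8}$$

where $\mathbf{p}$ denotes the spatial coordinates; $\mathbf{A}$ and $\boldsymbol{\theta}$ are geometric and photometric parameters in matrix form, respectively; $G(\cdot)$ and $P(\cdot)$ are geometric and photometric models, respectively; and $n$ is the additive Gaussian noise. In this paper, we focus on the linear photometric model and affine motion. Thus, Equation (8) becomes:

$$I_r(\mathbf{p}) = \alpha I_t(G(\mathbf{p}; \mathbf{A})) + \beta + n(\mathbf{p}) \tag{9}$$

where $\alpha$ and $\beta$ denote the contrast gain and illumination bias, respectively, and $G(\mathbf{p}; \mathbf{A})$ is now the affine transformation with parameters $\mathbf{A}$. The goal of the image registration is to estimate these parameters via minimization of an objective function in the least squares sense:

$$(\hat{\mathbf{A}}, \hat{\alpha}, \hat{\beta}) = \arg\min_{\mathbf{A}, \alpha, \beta} \sum_{\mathbf{p} \in \Omega} \big[\tilde{I}_t(\mathbf{p}) - I_r(\mathbf{p})\big]^2 \tag{10}$$

where $\tilde{I}_t$ is the transformation of the target image, which is expressed as:

$$\tilde{I}_t(\mathbf{p}) = \alpha I_t(G(\mathbf{p}; \mathbf{A})) + \beta \tag{11}$$

and $\Omega$ represents the region of inliers. To detect inliers, we use a geometric criterion and a photometric criterion. The geometric criterion requires that the pixels of the warped image lie within the same geometric field as the reference image, i.e., with $(u', v') = G(\mathbf{p}; \mathbf{A})$,

$$1 \le u' \le N_2, \quad 1 \le v' \le N_1 \tag{12}$$

where $N_1$ and $N_2$ are the number of rows and columns of the reference image, respectively. The photometric criterion requires that the intensity difference between the reference image and the registered target image should be small enough, that is to say:

$$\big|\tilde{I}_t(\mathbf{p}) - I_r(\mathbf{p})\big| < T \tag{13}$$

where $T$ is the intensity threshold and is set to 8.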

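The solution of Equation (10) can be obtained by an optimization algorithm, such as the conjugate gradient algorithm or the Gauss-Newton method. However, such methods easily fall into a local optimum when the misalignment between the two images is large. Thus, SURF is employed to estimate the geometric parameters coarsely, and then an iterative updating strategy is used to estimate the photometric and geometric parameters more precisely. Specifically, at each iteration, the affine motion parameters are first updated with the photometric parameters held fixed, using the variation of the Marquardt-Levenberg algorithm proposed by Thévenaz et al. [25]; then the photometric parameters are updated by the conjugate gradient method with the affine motion parameters held fixed. With the objective function written as $E = \sum_{\mathbf{p} \in \Omega} [\alpha I_g(\mathbf{p}) + \beta - I_r(\mathbf{p})]^2$, its gradients with respect to the contrast gain $\alpha$ and the illumination bias $\beta$ are given by:

$$\frac{\partial E}{\partial \alpha} = 2 \sum_{\mathbf{p} \in \Omega} \big[\alpha I_g(\mathbf{p}) + \beta - I_r(\mathbf{p})\big] I_g(\mathbf{p}), \qquad \frac{\partial E}{\partial \beta} = 2 \sum_{\mathbf{p} \in \Omega} \big[\alpha I_g(\mathbf{p}) + \beta - I_r(\mathbf{p})\big]$$

where $I_g$ is the target image after geometric registration, but without photometric registration; $I_r$ is the reference image; and $\Omega$ is the region of inliers.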
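For fixed geometric parameters, the photometric step is a linear least-squares problem in the contrast gain and illumination bias. The sketch below solves it in closed form with `numpy.linalg.lstsq` instead of the conjugate gradient method used in the paper, and re-selects photometric inliers with an intensity threshold. The function name `fit_gain_bias` and its parameters are hypothetical.

```python
import numpy as np

def fit_gain_bias(target, reference, threshold=None, n_iter=5):
    """Least-squares gain and bias so that gain * target + bias matches reference.

    If a threshold is given, pixels whose absolute residual is not below it
    are treated as photometric outliers and the fit is repeated on inliers.
    """
    t = np.asarray(target, dtype=float).ravel()
    r = np.asarray(reference, dtype=float).ravel()
    mask = np.ones(t.size, dtype=bool)
    gain, bias = 1.0, 0.0
    for _ in range(n_iter):
        design = np.column_stack([t[mask], np.ones(int(mask.sum()))])
        gain, bias = np.linalg.lstsq(design, r[mask], rcond=None)[0]
        if threshold is None:
            break
        new_mask = np.abs(gain * t + bias - r) < threshold
        # Stop when too few inliers remain or the inlier set is stable.
        if new_mask.sum() < 2 or np.array_equal(new_mask, mask):
            break
        mask = new_mask
    return float(gain), float(bias)

rng = np.random.default_rng(0)
target = rng.uniform(0.0, 255.0, size=(40, 25))
reference = 1.05 * target + 3.0
print(fit_gain_bias(target, reference, threshold=8.0))
```

The closed-form solve gives the same minimizer that an iterative method would approach on this quadratic cost; the threshold of 8 mirrors the intensity threshold stated for the photometric criterion.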

The image registration algorithm flow is shown in Algorithm 1. In the feature-matching step, the random sample consensus (RANSAC) method [26] is used to eliminate matching outliers. The stop criterion of this image registration algorithm is that values of the estimated parameters do not change anymore, which can be expressed by:

where means the sum of all elements; represents the estimated geometric parameters after k times cycles, and so on. is the precision threshold; here, is used.

| Algorithm 1. Image registration algorithm. | |
| --- | --- |
| 1 | Input: LR images. |
| 2 | Output: Geometric and photometric parameters. |
| 3 | Select one image as the reference image, and detect its SURF features. |
| 4 | for each remaining LR image do |
| 5 | Detect SURF features of the current LR image. |
| 6 | Match the features of the current LR image and the reference image, then estimate the geometric parameters based on RANSAC. |
| 7 | do |
| 8 | Estimate the photometric parameters using the conjugate gradient method for fixed geometric parameters. |
| 9 | Estimate the geometric parameters using the Marquardt-Levenberg method for fixed photometric parameters. |
| 10 | until the geometric and photometric parameters do not change anymore. |
| 11 | endfor |

2.3. PSF Estimation

The PSF of remote sensing images can be measured after launch, or estimated from sharp edges in the image. When appropriate sharp edges do not exist, a blind PSF estimation method has to be employed. We estimate the PSF with a modified version of the MAP-based multi-frame blind deconvolution algorithm originally proposed by Matson et al. [27]. The forward model used in this algorithm is:

$$g_k = f * h_k + n_k$$

where $g_k$ is the $k$-th measurement image; $f$ is the true object; $h_k$ is the $k$-th PSF; $n_k$ is the $k$-th additive Gaussian noise; and $*$ denotes convolution. The cost function to generate estimates of the PSFs is given by:

where is the regularized parameter and is the image noise variance of the -th frame. The minimization of the cost function is performed via the conjugate gradient method in a cyclic coordinate-descent optimization procedure. Readers can refer to [27] for more details of this blind deconvolution algorithm.

To make the above algorithm compatible with our application, we use the bicubic upsampled registered LR images as the measurement images. In order to save computational resources, no more than four measurement images are used. Each estimated PSF is then fitted with a Gaussian PSF of the form given by Equation (7). The mean of the standard deviations of these Gaussian PSFs is used in image fusion. We use the mean because we assume there are no significant differences between the PSFs.
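The paper does not state how the Gaussian fit is carried out. One simple possibility, shown here only as an assumption, is moment matching: the standard deviation of each estimated PSF is taken from its second central moments, and the per-frame values are then averaged. The names `gaussian_sigma_from_psf` and `_demo_gaussian` are hypothetical.

```python
import numpy as np

def gaussian_sigma_from_psf(psf):
    """Estimate an isotropic Gaussian standard deviation by moment matching."""
    psf = np.clip(np.asarray(psf, dtype=float), 0.0, None)
    psf = psf / psf.sum()
    rows, cols = np.indices(psf.shape)
    r0 = (rows * psf).sum()
    c0 = (cols * psf).sum()
    var_r = ((rows - r0) ** 2 * psf).sum()
    var_c = ((cols - c0) ** 2 * psf).sum()
    # Average the two axis variances, since the model is isotropic.
    return float(np.sqrt(0.5 * (var_r + var_c)))

def _demo_gaussian(sigma, radius=12):
    """Synthetic Gaussian PSF used only to exercise the estimator."""
    axis = np.arange(-radius, radius + 1)
    xx, yy = np.meshgrid(axis, axis)
    kernel = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()

estimated_psfs = [_demo_gaussian(1.0), _demo_gaussian(1.4)]
sigmas = [gaussian_sigma_from_psf(p) for p in estimated_psfs]
mean_sigma = float(np.mean(sigmas))
print(round(mean_sigma, 3))
```

Moment matching is sensitive to noise in the PSF tails, so a least-squares fit of the Gaussian profile may be preferable on real estimates.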

2.4. Image Fusion

Mathematically, the SR problem is an inverse problem whose objective is to estimate an HR image from multiple observed blurry LR images. The MAP estimator is a powerful tool to solve this type of problem. The MAP optimization problem can be represented by:

Under the assumption of additive Gaussian noise, the likelihood term can be expressed as:

The term represents the probability density of the HR image, which restricts the solution space and introduces prior knowledge to the reconstruction. In particular, we employ a Huber Markov random field (HMRF) as the image prior. HMRF encourages piecewise smoothness, and can preserve image edges as well. The expression of is given by:

where denotes a gradient operator; denotes the prior strength; is a parameter of the Huber function; and is a normalization factor. The Huber function is defined as:

where is the -th element of the vector .
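To illustrate why the Huber prior preserves edges, the sketch below implements one common convention of the Huber function, quadratic for small arguments and linear beyond the threshold. The exact scaling used in the paper is not recoverable from the text, so this form, the function names, and the parameter name `delta` are assumptions.

```python
import numpy as np

def huber(z, delta):
    """Huber function: z**2 for |z| <= delta, 2*delta*|z| - delta**2 otherwise."""
    z = np.asarray(z, dtype=float)
    quadratic = z ** 2
    linear = 2.0 * delta * np.abs(z) - delta ** 2
    return np.where(np.abs(z) <= delta, quadratic, linear)

def huber_grad(z, delta):
    """Derivative of the Huber function: 2*z clipped to [-2*delta, 2*delta]."""
    z = np.asarray(z, dtype=float)
    return 2.0 * np.clip(z, -delta, delta)

gradients = np.array([0.02, 0.04, 1.0])
print(huber(gradients, 0.04))
print(huber_grad(gradients, 0.04))
```

Small image gradients (noise) are penalized quadratically and smoothed, while large gradients (edges) incur only a linear penalty, so they are not flattened as strongly as under a purely quadratic prior.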

By substituting Equations (21)–(24) into Equation (20) and neglecting the constant term, the MAP estimator can be converted to the minimization of a cost function, which is given by:

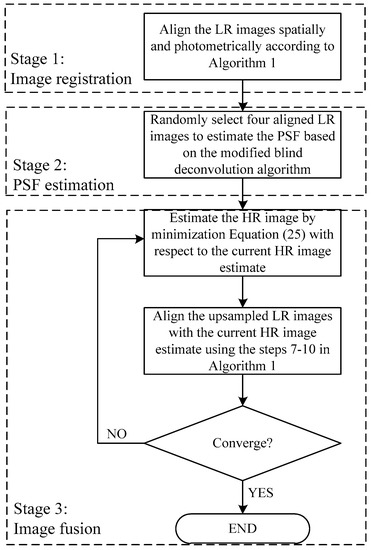

The accuracy of the geometric and photometric registration is limited by the low quality of the LR images. Thus, an iterative update of the registration parameters, along with the HR image, is required. Because the HR image, the geometric parameters, and the photometric parameters converge at different rates, a cyclic coordinate-descent optimization procedure is adopted. At each iteration, the HR image is first updated by minimizing Equation (25) with respect to the HR image using a scaled conjugate gradient optimization method, with the registration parameters held fixed; then the registration parameters are updated by minimizing the cost function with respect to the registration parameters, with the HR image estimate held fixed. This second minimization is carried out by an image registration process that is the same as the registration method proposed in Section 2.2, except that feature-based registration is no longer needed and the current HR image estimate is used as the reference image. The algorithm stops when the value of the cost function no longer changes. The proposed algorithm as a whole system is illustrated in Figure 1. The algorithm consists of three stages: image registration, PSF estimation and image fusion.
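The structure of a cyclic coordinate-descent loop can be shown on a deliberately small toy problem, which is not the SR cost function itself: each frame is modeled as an unknown gain times a common unknown signal, and the signal and the gains are updated in turn until the cost stops changing. The function name `alternate_signal_and_gains` and the toy model are assumptions made for illustration.

```python
import numpy as np

def alternate_signal_and_gains(frames, n_iter=50, tol=1e-12):
    """Alternately estimate a common signal x and per-frame gains g, frames[k] ~ g[k] * x."""
    frames = np.asarray(frames, dtype=float)  # shape (K, N)
    gains = np.ones(frames.shape[0])
    previous_cost = np.inf
    for _ in range(n_iter):
        # Signal step: least-squares signal for fixed gains.
        x = gains @ frames / (gains @ gains)
        # Gain step: least-squares gains for a fixed signal.
        gains = frames @ x / (x @ x)
        # The first frame is the reference: fix its gain to 1 and move the
        # scale into x, which leaves every product gains[k] * x unchanged.
        scale = gains[0]
        gains = gains / scale
        x = x * scale
        cost = np.sum((frames - np.outer(gains, x)) ** 2)
        if abs(previous_cost - cost) <= tol:  # stop when the cost stops changing
            break
        previous_cost = cost
    return x, gains

rng = np.random.default_rng(0)
x_true = rng.uniform(1.0, 2.0, size=50)
g_true = np.array([1.0, 0.9, 1.1])
x_est, g_est = alternate_signal_and_gains(np.outer(g_true, x_true))
print(np.round(g_est, 3))
```

Each step solves an easy sub-problem exactly, so the cost never increases; the same pattern applies when the sub-problems are the HR image update and the registration update.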

Figure 1. Diagram of the proposed algorithm.

3. Results and Discussion

3.1. Simulation Images

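We first test our algorithm using synthetic images. The quantitative evaluation of the algorithm is achieved by computing the root mean square error (RMSE) and the structural similarity (SSIM) index. RMSE is defined as:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} (x_i - \hat{x}_i)^2}$$

where $\mathbf{x}$ and $\hat{\mathbf{x}}$ are the original image and the reconstructed HR image, respectively, and $N$ is the total number of pixels of the original image. The SSIM index is defined as [28]:

$$\mathrm{SSIM} = \frac{(2\mu_x \mu_{\hat{x}} + C_1)(2\sigma_{x\hat{x}} + C_2)}{(\mu_x^2 + \mu_{\hat{x}}^2 + C_1)(\sigma_x^2 + \sigma_{\hat{x}}^2 + C_2)}$$

where $\mu_x$ and $\mu_{\hat{x}}$ are the means and $\sigma_x$ and $\sigma_{\hat{x}}$ are the standard deviations of the original and the reconstructed HR image, $\sigma_{x\hat{x}}$ is the covariance of $\mathbf{x}$ and $\hat{\mathbf{x}}$, and $C_1$ and $C_2$ are small constants that stabilize the division.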
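Both metrics are straightforward to compute. The sketch below evaluates RMSE and a single-window (global) SSIM over the whole image; SSIM is usually averaged over local windows, so the global form is a simplification. The constants follow the common choice $C_1 = (0.01R)^2$ and $C_2 = (0.03R)^2$ for a data range $R$, which is an assumption here, as are the function names.

```python
import numpy as np

def rmse(original, reconstructed):
    """Root mean square error between two images of the same shape."""
    x = np.asarray(original, dtype=float)
    y = np.asarray(reconstructed, dtype=float)
    return float(np.sqrt(np.mean((x - y) ** 2)))

def global_ssim(original, reconstructed, data_range=255.0, k1=0.01, k2=0.03):
    """SSIM computed from global image statistics (one window covering the image)."""
    x = np.asarray(original, dtype=float)
    y = np.asarray(reconstructed, dtype=float)
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    numerator = (2.0 * mx * my + c1) * (2.0 * cov + c2)
    denominator = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(numerator / denominator)

rng = np.random.default_rng(0)
image = rng.uniform(0.0, 255.0, size=(32, 32))
noisy = image + rng.normal(0.0, 5.0, size=image.shape)
print(rmse(image, noisy), global_ssim(image, noisy))
```

A lower RMSE and an SSIM closer to 1 both indicate a reconstruction closer to the original image.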

A sequence of LR images is generated by the following procedure:

- (i) Apply random affine transformations generated by their SVD decomposition with translations added, where the two orthogonal factors are rotation matrices with rotation angles uniformly distributed in the range of ; the middle factor is a diagonal matrix with diagonal elements uniformly distributed in the range of (0.95, 1.05); and the additive horizontal and vertical displacements are uniformly distributed in the range of (–5, 5) pixels.

- (ii) Blur the images with a Gaussian point spread function (PSF) with the standard deviation equal to 1.

- (iii) Clip the images to 0.8 times their size both horizontally and vertically, so that only overlapped regions are left.

- (iv) Downsample the images by the downsampling factor.

- (v) Apply photometric parameters with uniformly distributed contrast gain in the range of (0.9, 1.1) and bias in the range of .

- (vi) Set the SNR of the images to 30 dB by adding white Gaussian noise with zero mean.
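The last step of the procedure, adding white Gaussian noise for a target SNR, can be sketched as follows. The SNR convention is not spelled out in the text; this sketch assumes SNR in dB equals ten times the base-10 logarithm of the mean squared pixel value divided by the noise variance. The function name `add_noise_for_snr` is hypothetical.

```python
import numpy as np

def add_noise_for_snr(image, snr_db, rng):
    """Add zero-mean white Gaussian noise so that the image has the given SNR in dB."""
    image = np.asarray(image, dtype=float)
    signal_power = np.mean(image ** 2)  # assumed definition of signal power
    noise_std = np.sqrt(signal_power / 10.0 ** (snr_db / 10.0))
    return image + rng.normal(0.0, noise_std, size=image.shape)

rng = np.random.default_rng(0)
clean = rng.uniform(50.0, 200.0, size=(256, 256))
noisy = add_noise_for_snr(clean, 30.0, rng)
measured = 10.0 * np.log10(np.mean(clean ** 2) / np.mean((noisy - clean) ** 2))
print(round(float(measured), 1))
```

If signal power is instead defined from the image variance, only the `signal_power` line needs to change.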

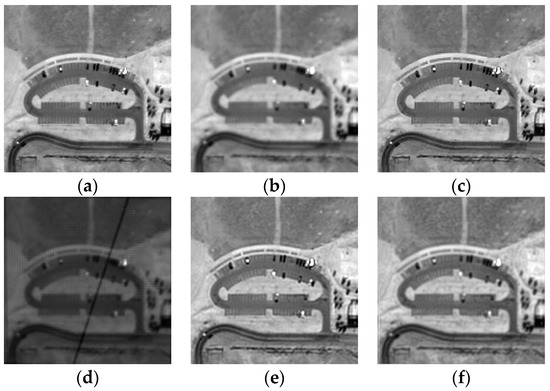

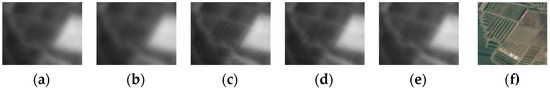

A QuickBird 2 remote sensing image with a size of is used as the original HR image, and the downsampling factor is equal to 2. We use six LR images to reconstruct an HR image. The Huber function parameter is set to 0.04, and the prior strength parameter is set to 10. The estimated deviation of the total PSF is 1.12. Our method is compared with bicubic interpolation, the iterative back projection (IBP) approach proposed by Irani and Peleg [9], and Farsiu’s robust SR algorithm [13]. Note that the original IBP and Farsiu’s algorithm do not consider variations of the illumination in the image registration stage; thus, we use our image registration algorithm instead. Figure 2 shows the original image and the images reconstructed by the different methods. Compared with the bicubic upsampled image, the HR images reconstructed by all SR methods are sharper and contain more details. The reconstructed images are further compared quantitatively in terms of RMSE and SSIM, as listed in Table 1. The performance of our method is close to that of IBP, and better than that of bicubic interpolation and Farsiu’s algorithm. Additionally, without photometric registration, many artifacts appear in the reconstructed HR image, as shown in Figure 2d.

Figure 2. Simulation results of different methods. (a) Original HR image; (b) the bicubic upsampled reference image; (c) the proposed method; (d) the proposed method without photometric registration; (e) IBP; (f) Farsiu’s algorithm.

Table 1. The RMSE and SSIM between the original and reconstructed images obtained from bicubic interpolation, IBP, Farsiu’s method and the proposed method.

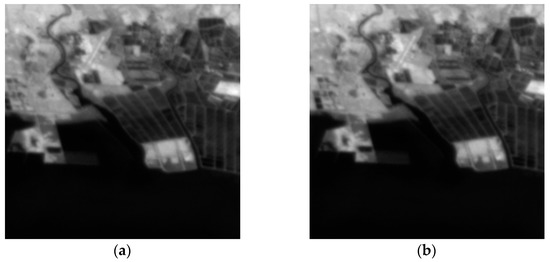

3.2. Real Gaofen-4 Images

The second experiment is carried out with ten 5-band Gaofen-4 images without ortho-rectification. The images were all captured on 3 March 2017, from 11:10:20 to 11:20:21. The original size of full-view images is 10,240 × 10,240, and we chip the region of interest (ROI) with a size of 256 × 256. This region is an offshore aquaculture area, and the images captured at 11:10:20 and 11:20:21 are shown in Figure 3.

Figure 3. Two LR images: (a) captured at 11:10:20; and (b) captured at 11:20:21.

The image captured at 11:10:20 is used as the reference image, and the other images are aligned to it. Although the images were captured over a short period, photometric registration is needed. The criterion used to evaluate the registration accuracy is signal to noise ratio (SNR), which is defined as:

where is the target image after registration, and is the reference image. The registration results are listed in Table 2. The images are numbered according to their capture time and the first LR image is used as the reference image. All images have similar registration accuracy with both geometric and photometric registration while, without photometric registration, the SNRs are lower, and become lower and lower as time progresses.

Table 2. Registration results. The row of SNR 1 lists the registration results with both geometric and photometric registration, and the row of SNR 2 lists the registration results with only geometric registration.

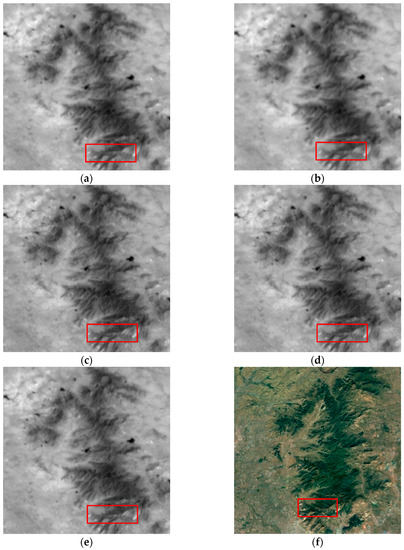

The Huber function parameter is set to 0.04, and the prior strength parameter is set to 10. The estimated deviation of the total PSF is 1.21. The experimental results are shown in Figure 4.

Figure 4. Experimental results of an offshore aquaculture area. (a) The bicubic upsampled reference image; (b) the added image after registration; (c) the HR image reconstructed by the proposed method; (d) the HR image reconstructed by IBP; (e) the HR image reconstructed by Farsiu’s algorithm; and (f) the image chipped from Google Earth.

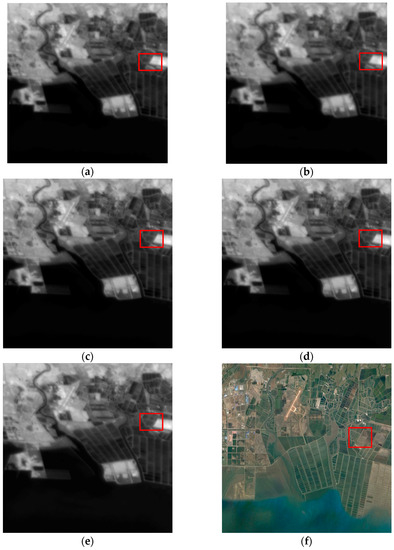

To validate our reconstructed HR image, we chip an image of the same zone from Google Earth, which was captured on 16 September 2015. The reconstructed HR image is clearer and contains more details, which correspond to those visible in the Google Earth image. For a closer look, the regions denoted by the rectangles in Figure 4 are shown in Figure 5 without zooming. It is clear that the HR image reconstructed by our method is the sharpest.

Figure 5. The regions denoted by the rectangles in Figure 4. (a) The bicubic upsampled reference image; (b) the added image after registration; the HR images reconstructed by (c) the proposed method, (d) IBP and (e) Farsiu’s algorithm; (f) the image chipped from Google Earth.

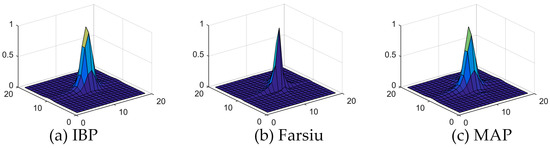

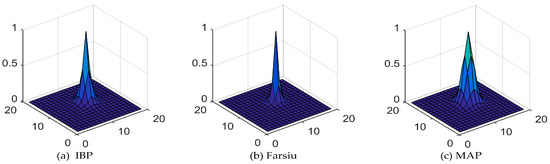

Quantitatively evaluating the performance of the proposed algorithm is not easy in real image applications, because there are no true original images. A commonly used strategy to solve the problem is to treat an image of a finer resolution level, such as the panchromatic image, as the true original image. However, this strategy is not applicable to Gaofen-4 images, because the panchromatic and multi-spectral images of Gaofen-4 have the same spatial resolution. Theoretically, the reconstructed HR image should be sharper than the bicubic upsampled image. Thus, we assume that the upsampled image is a blurred version of the reconstructed HR image and estimate the PSF between them. The PSF estimation is performed by the algorithm proposed by Matson et al., which has been described in Section 2.3. Then the PSF is fitted to a Gaussian function. A larger deviation of the Gaussian function means a sharper reconstructed HR image. The estimated PSFs for IBP, Farsiu’s algorithm and our MAP-based SR algorithm are shown in Figure 6. The deviations of the fitted PSFs are 1.0, 0.9 and 1.0, respectively, which means that our method performs comparably to IBP and better than Farsiu’s algorithm.

Figure 6. The PSFs between the upsampled reference image and the reconstructed HR images of the offshore aquaculture area.

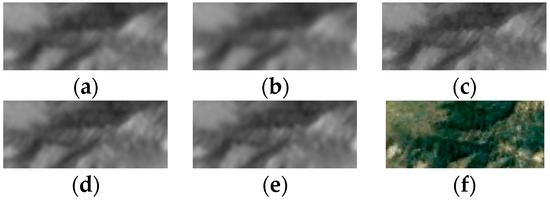

We extract another region of interest, a hilly area with a size of 256 × 256, to further test our algorithm. The parameters are set the same as in the previous experiment. The estimated deviation of the total PSF is 1.19, which is almost the same as in the previous experiment. The experimental results are shown in Figure 7. The Google Earth image was captured on 20 April 2017. For a closer look, the regions denoted by the rectangles in Figure 7 are shown in Figure 8. Compared with other methods, our method results in the sharpest edges.

Figure 7. Experimental results of the hilly area. (a) The bicubic upsampled reference image; (b) the added image after registration; the HR images reconstructed by (c) the proposed method, (d) IBP and (e) Farsiu’s algorithm; (f) the image chipped from Google Earth.

Figure 8. The regions denoted by the rectangles in Figure 7. (a) The bicubic upsampled reference image; (b) the added image after registration; the HR images reconstructed by (c) the proposed method, (d) IBP and (e) Farsiu’s algorithm; (f) the image chipped from Google Earth. The Google Earth image is rotated to be compared with the Gaofen-4 images without ortho-rectification.

The PSFs between the HR images and the upsampled reference image of the hilly area are shown in Figure 9. The deviations of the fitted PSFs for IBP, Farsiu’s algorithm and our MAP-based SR algorithm are 0.98, 0.82 and 1.0 respectively, which means our method yields the best SR result.

Figure 9. The PSFs between the upsampled reference image and the reconstructed HR images of the hilly area.

3.3. Further Discussion

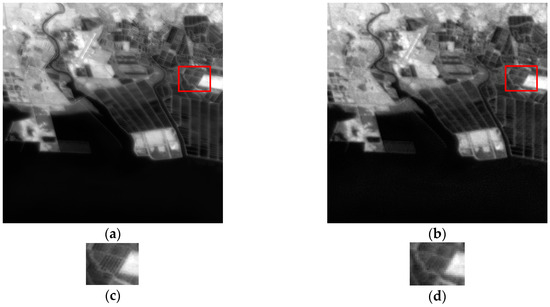

The deviation of the PSF between the HR image and the upsampled image is less than the deviation estimated in the PSF estimation stage, i.e., the blur is not removed completely. A visually better image can be generated by contrast manipulation plus sharpening after the SR reconstruction. We take the real Gaofen-4 images of the offshore aquaculture area as an example. The result of enhancing the HR image reconstructed by our SR method (Figure 4c) is shown in Figure 10a. For comparison, the reference LR image is enhanced directly without SR reconstruction, and the result is shown in Figure 10b. The regions denoted by the rectangles in Figure 10a,b are shown in Figure 10c,d. Comparing Figure 4, Figure 5 and Figure 10, we note that the enhanced LR image is visually better than the LR image and the reconstructed HR image, but contains fewer details than the enhanced HR image. That is to say, the SR algorithm reveals more of the information contained in the LR images.

Figure 10. Results of image enhancement. (a) SR + image enhancement; (b) image enhancement of the reference image; (c) and (d) are the regions denoted by the rectangles in (a) and (b) respectively.

4. Conclusions

In this paper, we propose an SR algorithm to reconstruct an HR image from multiple LR images captured by the Gaofen-4 staring imaging satellite. An appropriate observation model with photometric parameters is built. The registration with photometric adjustment improves the registration accuracy and the quality of the final reconstructed HR image. A MAP-based blind deconvolution algorithm is modified to estimate the imaging PSF which is reparameterized by a Gaussian function. Finally, the PSF is used to estimate the HR image. Experimental results with synthetic and real images show that the reconstructed HR image reveals more details which cannot be seen in LR images.

Author Contributions

Jieping Xu is the first author and the corresponding author of this paper. His main contributions include the basic idea and writing of this paper. The main contributions of Yonghui Liang and Jin Liu include analyzing the basic idea and checking the experimental results. The main contribution of Zongfu Huang was in providing the real Gaofen-4 images and refining the paper. All authors have read and approved the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Park, S.C.; Park, M.K.; Kang, M.G. Super-resolution image reconstruction: A technical overview. IEEE Signal Process. Mag. 2003, 20, 21–36.

2. Alam, M.S.; Bognar, J.G.; Hardie, R.C.; Yasuda, B.J. High resolution infrared image reconstruction using multiple, randomly shifted, low resolution, aliased frames. Proc. SPIE 1997, 3063, 102–112.

3. Alam, M.S.; Bognar, J.G.; Hardie, R.C.; Yasuda, B.J. Infrared image registration and high-resolution reconstruction using multiple translationally shifted aliased video frames. IEEE Trans. Instrum. Meas. 2000, 49, 915–923.

4. Tsai, R.Y.; Huang, T.S. Multiframe image restoration and registration. Adv. Comput. Vis. Image Process. 1984, 1, 317–339.

5. Yang, J.; Huang, T. Image super-resolution: Historical overview and future challenges. In Super-Resolution Imaging; Milanfar, P., Ed.; CRC Press: Boca Raton, FL, USA, 2010; pp. 1–33. ISBN 978-1-4398-1931-9.

6. Ur, H.; Gross, D. Improved resolution from subpixel shifted pictures. CVGIP Graph. Models Image Process. 1992, 54, 181–186.

7. Stark, H.; Oskoui, P. High-resolution image recovery from image-plane arrays, using convex projections. JOSA A 1989, 6, 1715–1726.

8. Patti, A.J.; Sezan, M.I.; Tekalp, A.M. Superresolution video reconstruction with arbitrary sampling lattices and nonzero aperture time. IEEE Trans. Image Process. 1997, 6, 1064–1076.

9. Irani, M.; Peleg, S. Improving resolution by image registration. CVGIP Graph. Models Image Process. 1991, 53, 231–239.

10. Schultz, R.R.; Stevenson, R.L. Extraction of high-resolution frames from video sequences. IEEE Trans. Image Process. 1996, 5, 996–1011.

11. Hardie, R.C.; Barnard, K.J.; Armstrong, E.E. Joint MAP registration and high-resolution image estimation using a sequence of undersampled images. IEEE Trans. Image Process. 1997, 6, 1621–1633.

12. Zomet, A.; Rav-Acha, A.; Peleg, S. Robust Super-Resolution. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001.

13. Farsiu, S.; Robinson, M.D.; Elad, M.; Milanfar, P. Fast and robust multiframe super resolution. IEEE Trans. Image Process. 2004, 13, 1327–1344.

14. Yang, S.; Sun, F.; Wang, M.; Liu, Z.; Jiao, L. Novel Super Resolution Restoration of Remote Sensing Images Based on Compressive Sensing and Example Patches-aided Dictionary Learning. In Proceedings of the International Workshop on Multi-Platform/Multi-Sensor Remote Sensing and Mapping (M2RSM), Xiamen, China, 10–12 January 2011.

15. Sreeja, S.J.; Wilscy, M. Single Image Super-Resolution Based on Compressive Sensing and TV Minimization Sparse Recovery for Remote Sensing Images. In Proceedings of the IEEE Recent Advances in Intelligent Computational Systems (RAICS), Trivandrum, India, 19–21 December 2013.

16. Yang, Q.; Wang, H.; Luo, X. Study on the super-resolution reconstruction algorithm for remote sensing image based on compressed sensing. Int. J. Signal Process. Image Process. Pattern Recognit. 2015, 8, 1–8.

17. Pan, Z.; Yu, J.; Huang, H.; Hu, S.; Zhang, A.; Ma, H.; Sun, W. Super-resolution based on compressive sensing and structural self-similarity for remote sensing images. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4864–4876.

18. Merino, M.T.; Núñez, J. Super-resolution of remotely sensed images with variable-pixel linear reconstruction. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1446–1457.

19. Fruchter, A.S.; Hook, R.N. Drizzle: A method for the linear reconstruction of undersampled images. Publ. Astron. Soc. Pac. 2002, 114, 144.

20. Shen, H.; Ng, M.K.; Li, P.; Zhang, L. Super-resolution reconstruction algorithm to MODIS remote sensing images. Comput. J. 2009, 52, 90–100.

21. Li, F.; Jia, X.; Fraser, D.; Lambert, A. Super resolution for remote sensing images based on a universal hidden Markov tree model. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1270–1278.

22. Fan, C.; Wu, C.; Li, G.; Ma, J. Projections onto convex sets super-resolution reconstruction based on point spread function estimation of low-resolution remote sensing images. Sensors 2017, 17, 362.

23. Jalobeanu, A.; Zerubia, J.; Blanc-Féraud, L. Bayesian estimation of blur and noise in remote sensing imaging. In Blind Image Deconvolution: Theory and Applications; Campisi, P., Egiazarian, K., Eds.; CRC Press: Boca Raton, FL, USA, 2007; pp. 239–275.

24. Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded Up Robust Features. In Proceedings of the European Conference on Computer Vision 2006 (ECCV 2006), Graz, Austria, 7–13 May 2006; pp. 404–417.

25. Thévenaz, P.; Ruttimann, U.E.; Unser, M. A pyramid approach to subpixel registration based on intensity. IEEE Trans. Image Process. 1998, 7, 27–41.

26. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Comm. ACM 1981, 24, 381–395.

27. Matson, C.L.; Borelli, K.; Jefferies, S.; Beckner, C.C., Jr.; Hege, E.K.; Lloyd-Hart, M. Fast and optimal multiframe blind deconvolution algorithm for high-resolution ground-based imaging of space objects. Appl. Opt. 2009, 48, A75–A92.

28. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).