Cross-Correlation-Based Structural System Identification Using Unmanned Aerial Vehicles

Abstract

1. Introduction

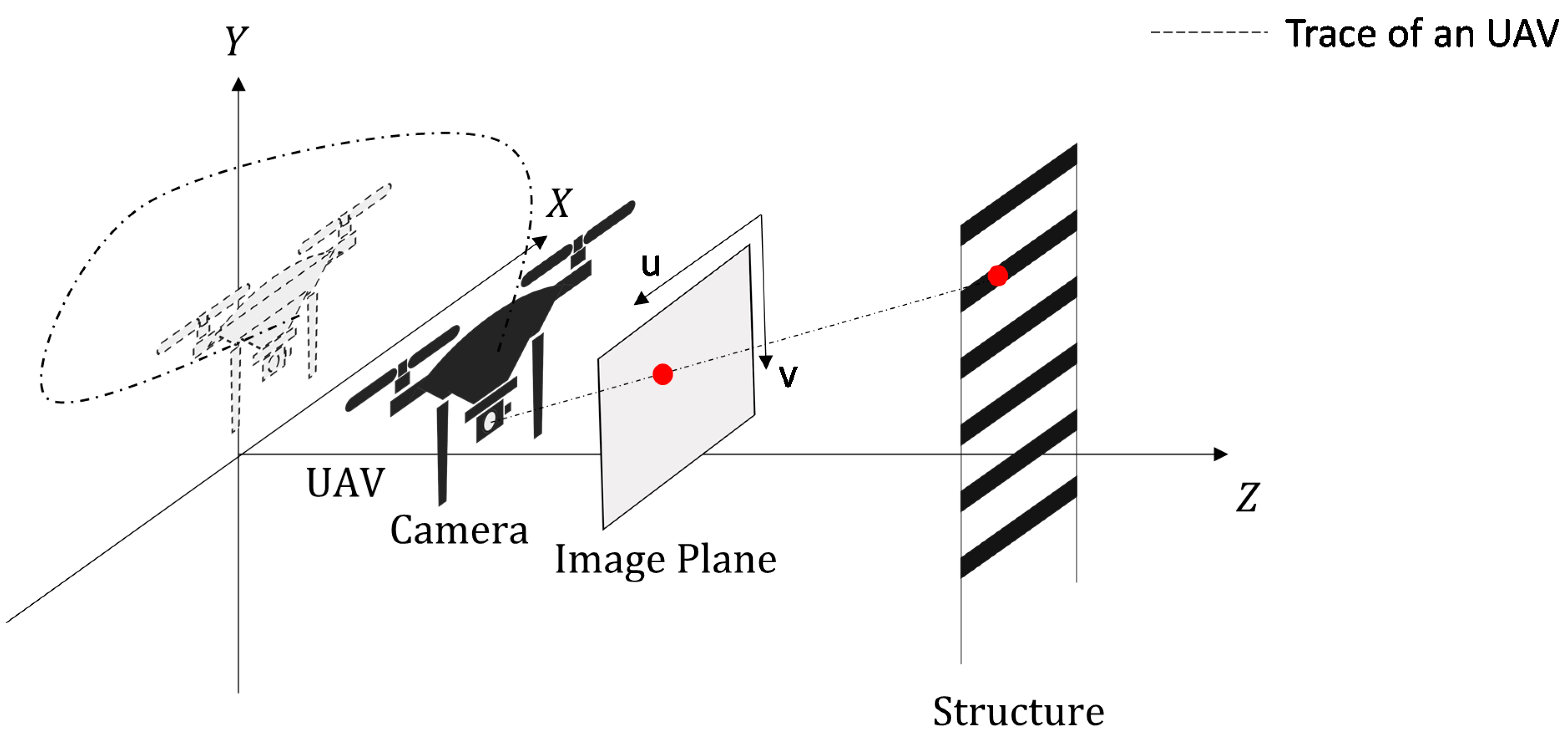

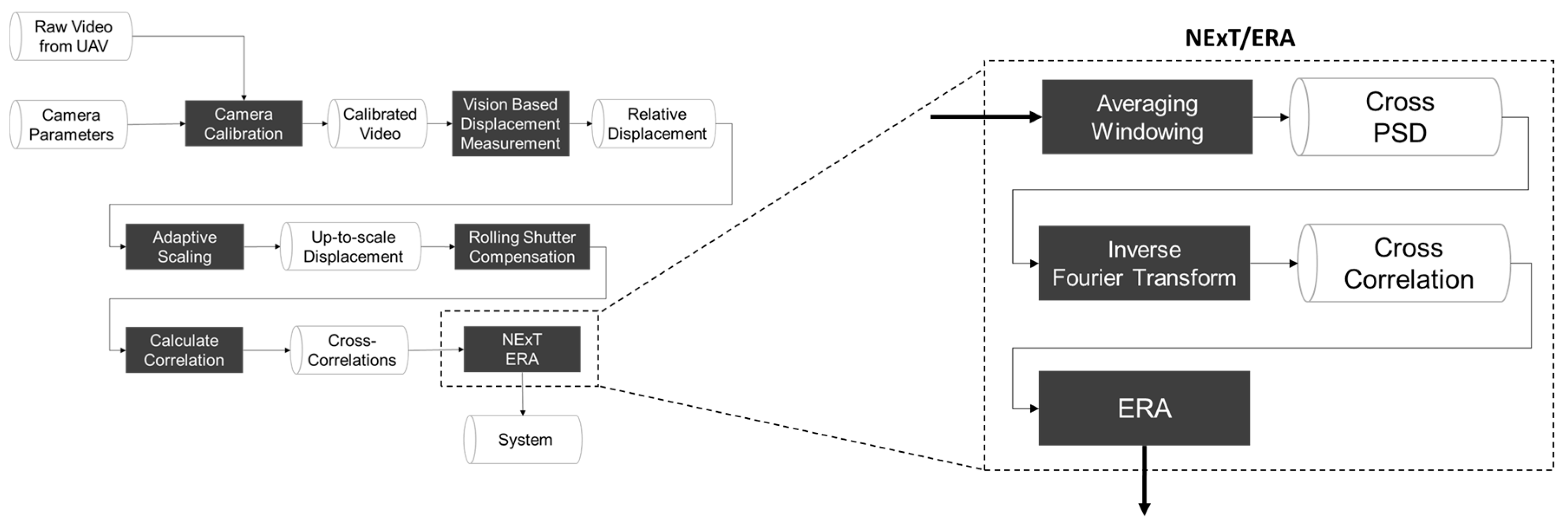

2. Proposed Methodology

2.1. Overview

2.2. Conventional Vision-Based Displacement Measurement

2.3. Displacement Measurement Using a UAV

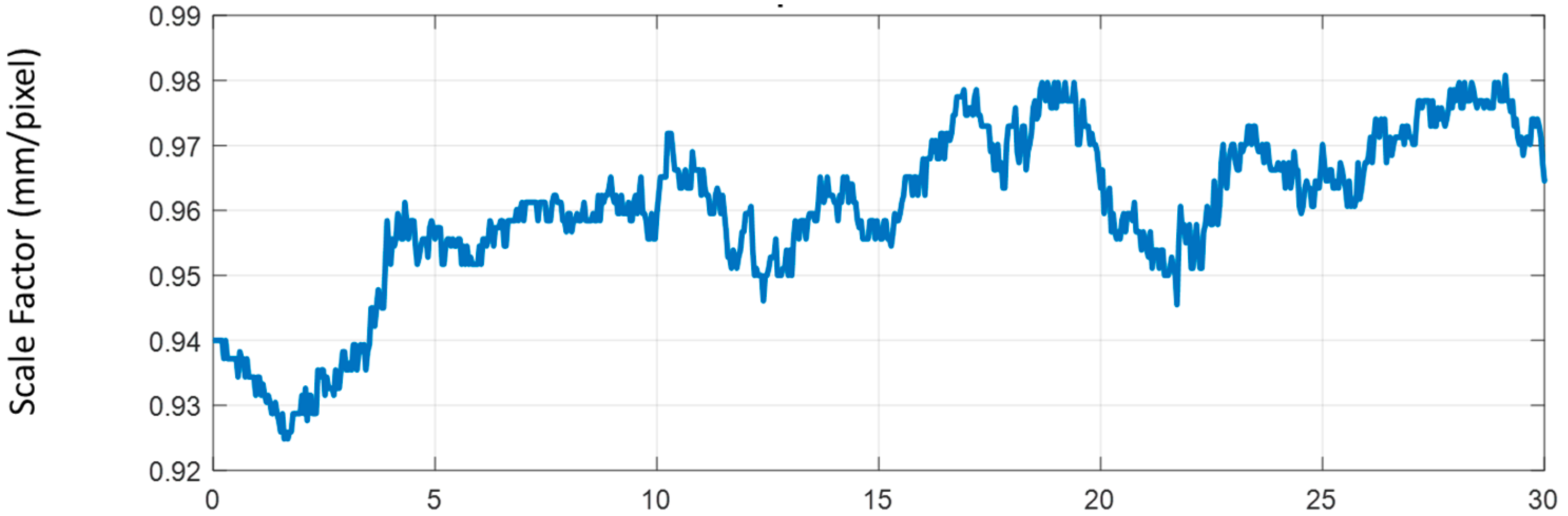

2.4. Adaptive Scale Factor

2.5. Rolling Shutter Compensation

2.6. Natural Excitation Technique (NExT) for System Identification

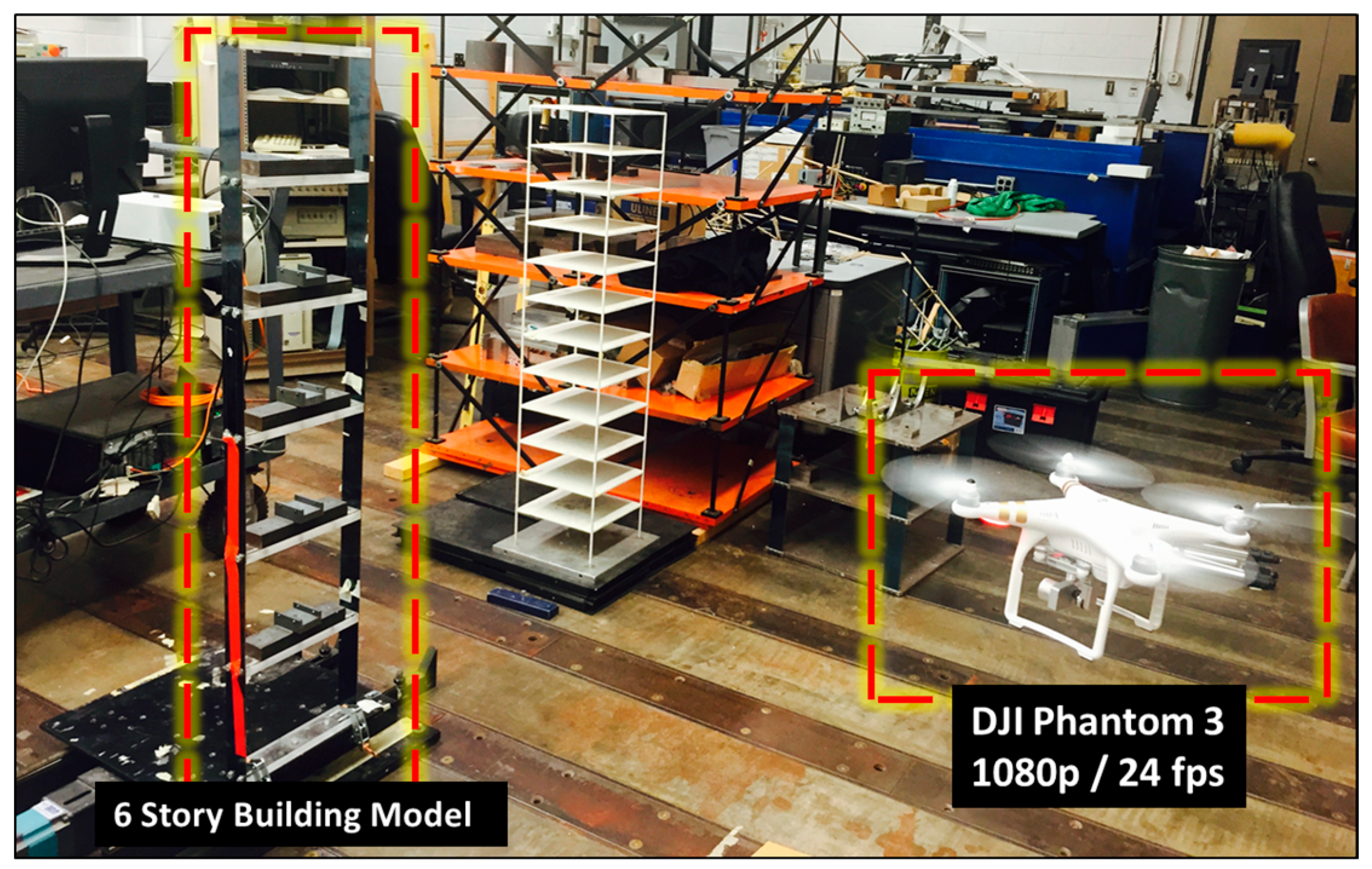

3. Experimental Validation

3.1. Experimental Setup

3.2. Analyzed Adaptive Scale Factor

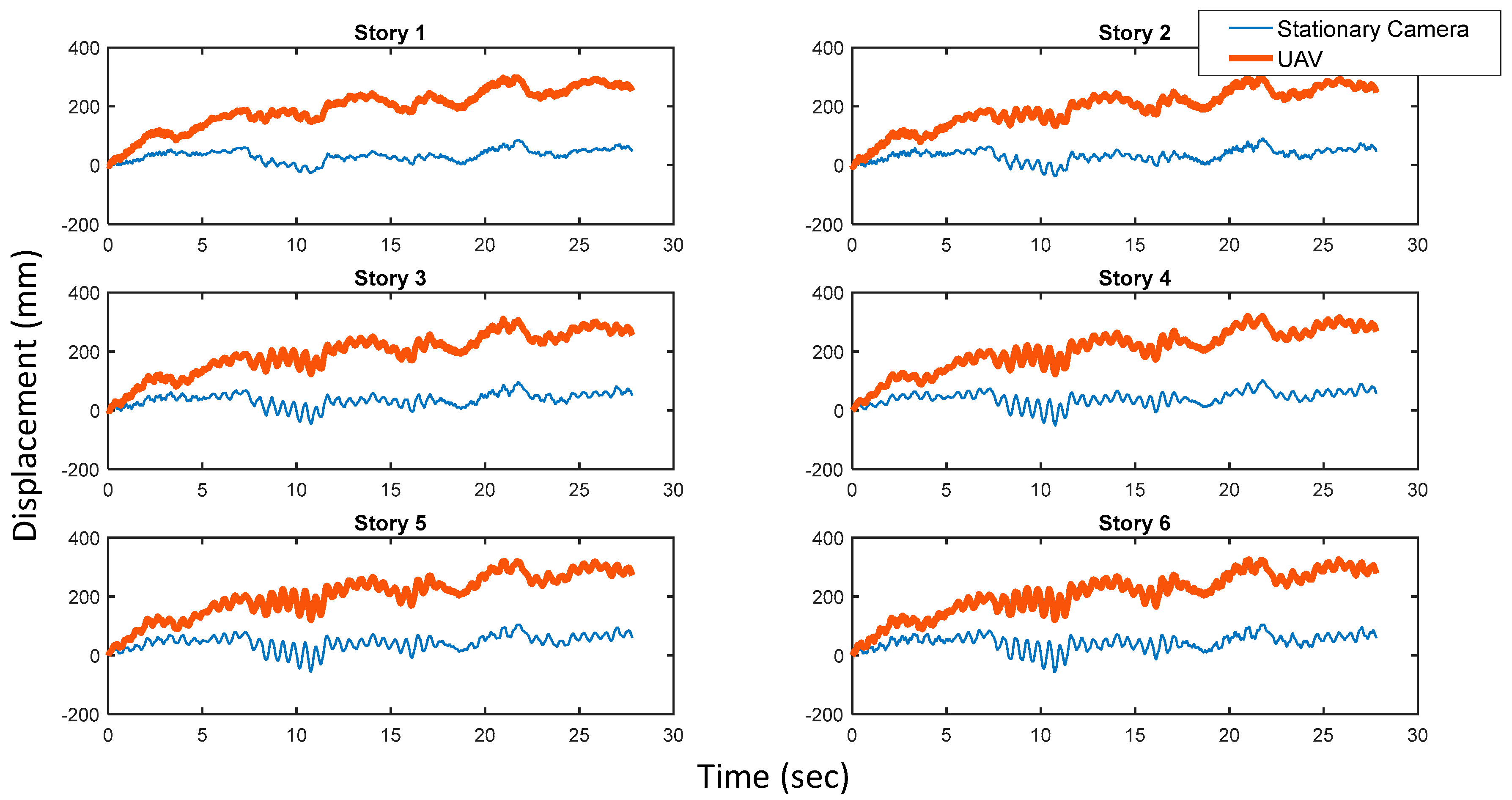

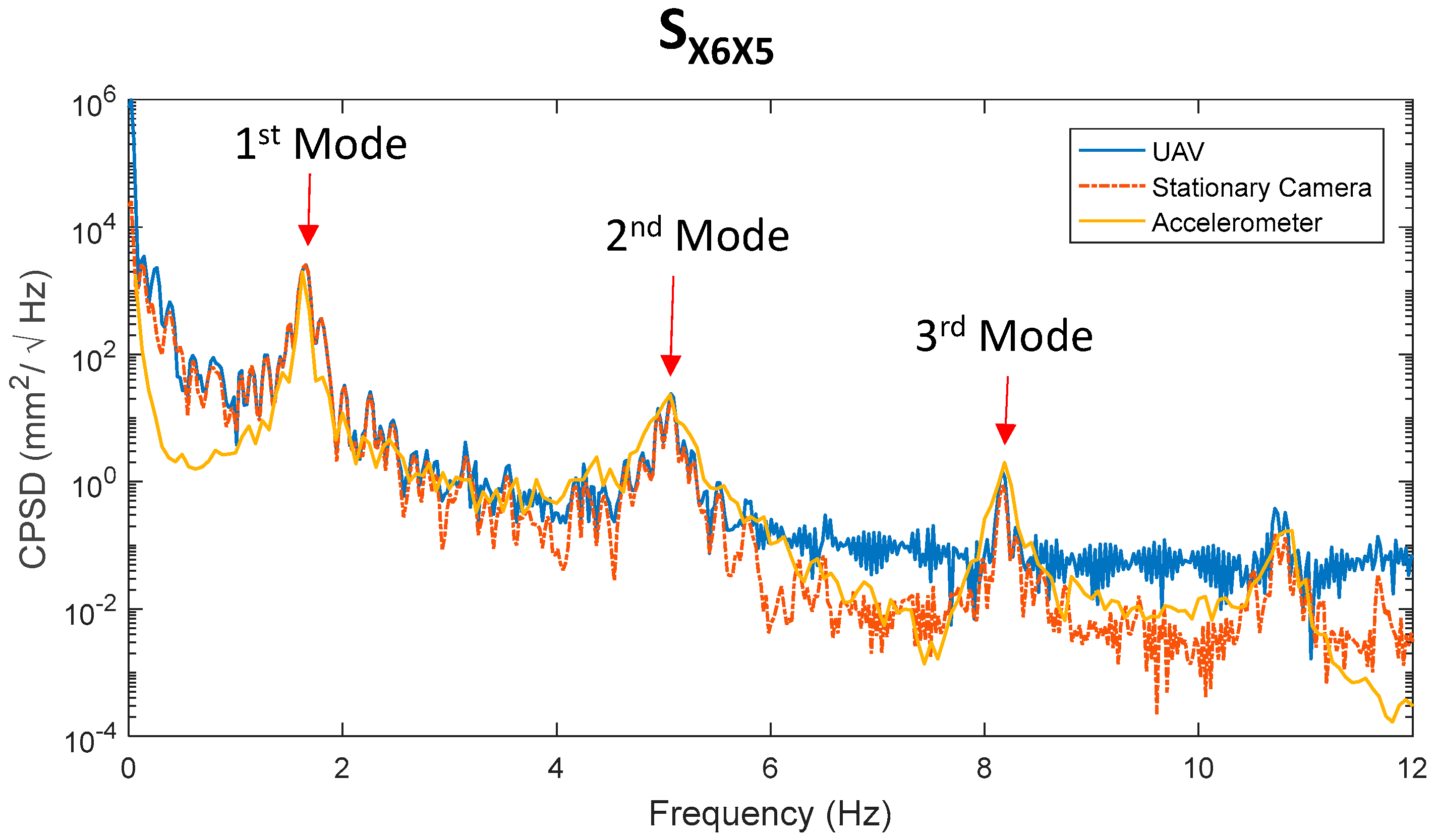

3.3. Comparison of the Dynamic Responses

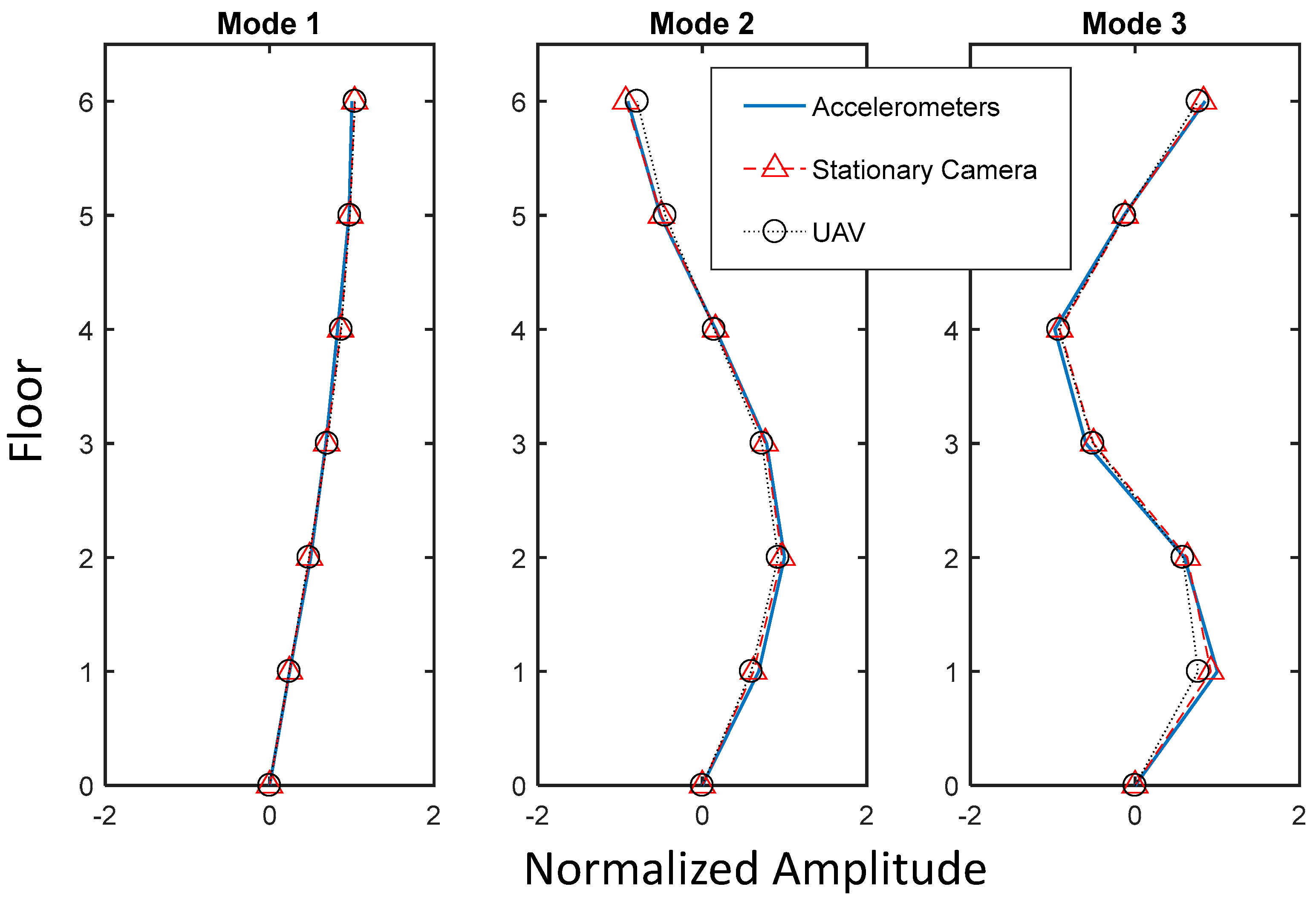

- Taking the system identification results from the accelerometers as the most reliable reference, both the stationary camera and the UAV estimated the natural frequencies with a maximum error of about 1%. This comparison indicates that the natural modes identified with the proposed UAV-based method are as accurate as those obtained from the stationary camera and agree closely with the accelerometer results.

- Mode shapes extracted by the proposed UAV-based method were compared with those from the stationary camera and the accelerometers using the modal assurance criterion (MAC). All three mode shapes had MAC values above 99% with respect to the reference, as shown in Figure 9.
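For real-valued mode shapes, the standard MAC between two shape vectors φ_a and φ_b is (φ_aᵀφ_b)² / ((φ_aᵀφ_a)(φ_bᵀφ_b)), which equals 1 (100%) when the shapes are identical up to a scale factor and 0 when they are orthogonal. The sketch below is a minimal pure-Python implementation of that textbook formula, not the authors' code; the function name `mac` and the example vectors are hypothetical and chosen only to show the two limiting cases.

```python
def mac(phi_a, phi_b):
    """Modal assurance criterion between two real-valued mode shapes.

    Returns a value in [0, 1]; multiply by 100 to express it in percent.
    """
    if len(phi_a) != len(phi_b):
        raise ValueError("mode shapes must have the same number of DOFs")
    cross = sum(a * b for a, b in zip(phi_a, phi_b))  # phi_a^T phi_b
    norm_a = sum(a * a for a in phi_a)                 # phi_a^T phi_a
    norm_b = sum(b * b for b in phi_b)                 # phi_b^T phi_b
    return cross ** 2 / (norm_a * norm_b)


# Hypothetical 4-DOF shapes, for illustration only (not measured data).
reference_shape = [0.25, 0.50, 0.75, 1.00]
scaled_shape = [0.50, 1.00, 1.50, 2.00]  # same shape, different normalization
print(100 * mac(reference_shape, scaled_shape))                  # 100.0
print(100 * mac([1.0, 0.0, -1.0, 0.0], [0.0, 1.0, 0.0, -1.0]))  # 0.0
```

Because MAC is insensitive to scaling, mode shapes from the UAV, the stationary camera, and the accelerometers can be compared without first normalizing them to a common amplitude.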

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Mode | Natural frequency (Hz), accelerometers (reference) | Natural frequency (Hz), stationary camera | Natural frequency (Hz), UAV | MAC (%), stationary camera | MAC (%), UAV | Error (%), stationary camera | Error (%), UAV |
|---|---|---|---|---|---|---|---|
| 1 | 1.632 | 1.649 | 1.649 | 99.99 | 99.99 | 1.04 | 1.04 |
| 2 | 5.054 | 5.060 | 5.043 | 99.99 | 99.86 | 0.12 | 0.22 |
| 3 | 8.175 | 8.166 | 8.170 | 99.99 | 99.67 | 0.11 | 0.06 |
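The Error (%) columns appear consistent with the absolute relative error |f − f_ref| / f_ref × 100, with the accelerometer frequencies as f_ref. That definition is inferred from the tabulated values rather than stated in this excerpt, so the short Python check below recomputes the columns under that assumption; the helper names `relative_error_pct` and `error_table` are hypothetical.

```python
# Natural frequencies (Hz) copied from the table above, keyed by mode number.
accelerometers = {1: 1.632, 2: 5.054, 3: 8.175}  # reference
stationary_camera = {1: 1.649, 2: 5.060, 3: 8.166}
uav = {1: 1.649, 2: 5.043, 3: 8.170}


def relative_error_pct(estimate, reference):
    """Absolute relative error of a frequency estimate, in percent."""
    return abs(estimate - reference) / reference * 100.0


def error_table(reference, estimates):
    """Per-mode relative error of `estimates` against `reference`."""
    return {mode: relative_error_pct(estimates[mode], f_ref)
            for mode, f_ref in reference.items()}


for name, estimates in (("Stationary camera", stationary_camera), ("UAV", uav)):
    errors = error_table(accelerometers, estimates)
    print(name, {mode: round(err, 2) for mode, err in errors.items()})
```

Rounded to two decimals, this reproduces the tabulated errors, including the 1.04% maximum for mode 1 that the text describes as about 1%.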

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yoon, H.; Hoskere, V.; Park, J.-W.; Spencer, B.F., Jr. Cross-Correlation-Based Structural System Identification Using Unmanned Aerial Vehicles. Sensors 2017, 17, 2075. https://doi.org/10.3390/s17092075