Real-Time External Respiratory Motion Measuring Technique Using an RGB-D Camera and Principal Component Analysis

Abstract

1. Introduction

2. Related Work

3. Overview of the Proposed Method

4. Materials and Methods

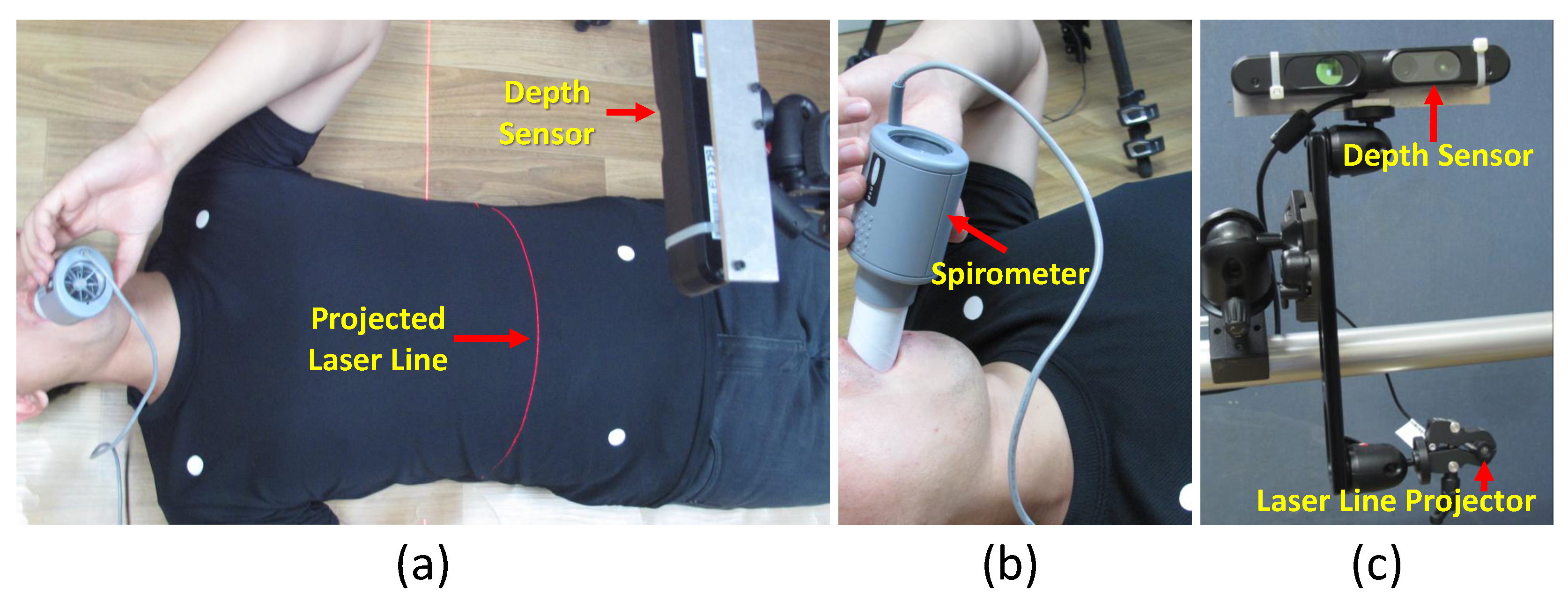

4.1. Data Acquisition

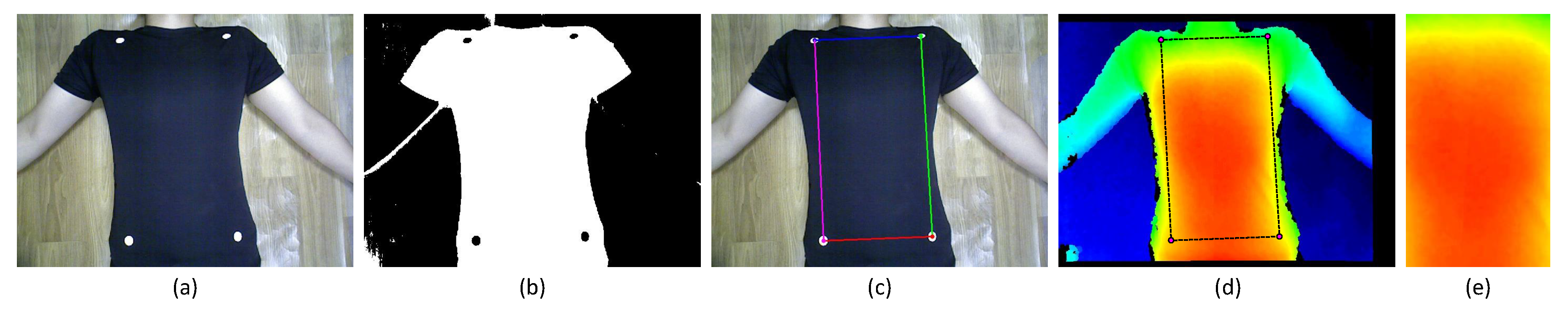

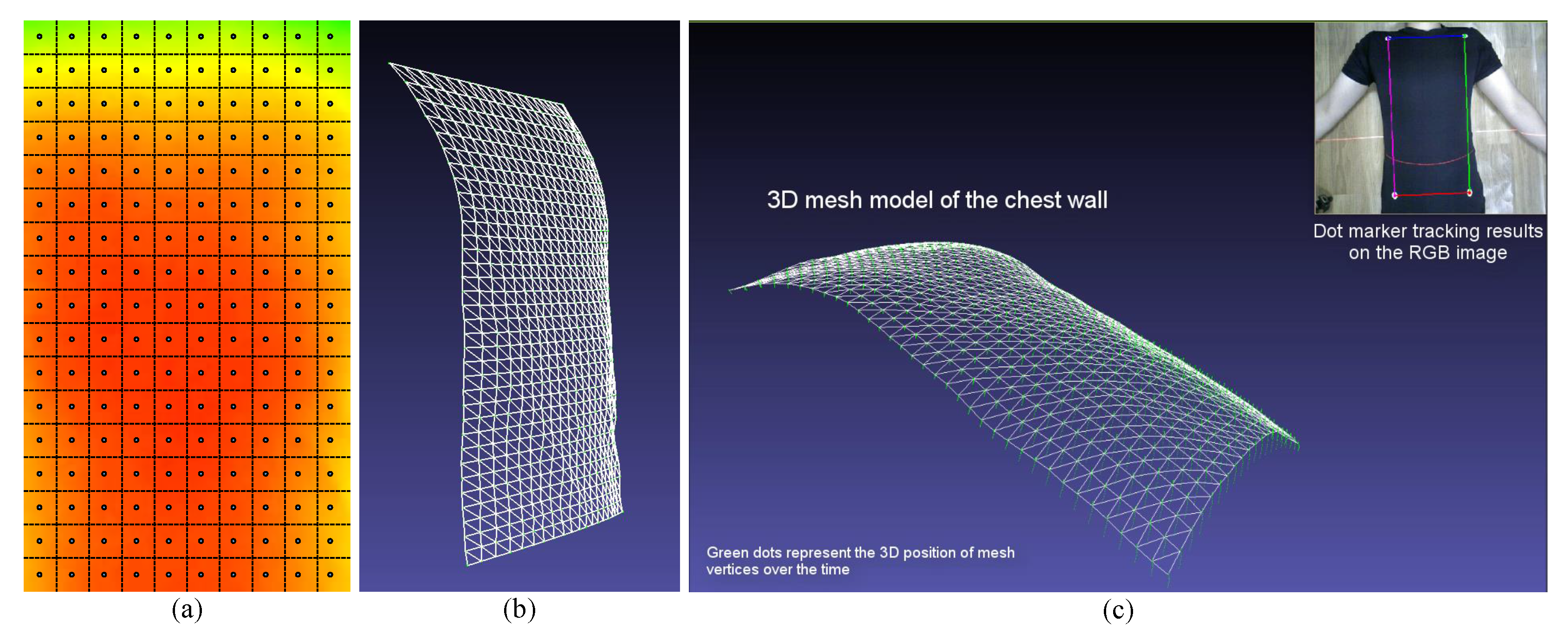

4.2. Measuring Region

4.3. Respiratory Motion Modeling Using PCA

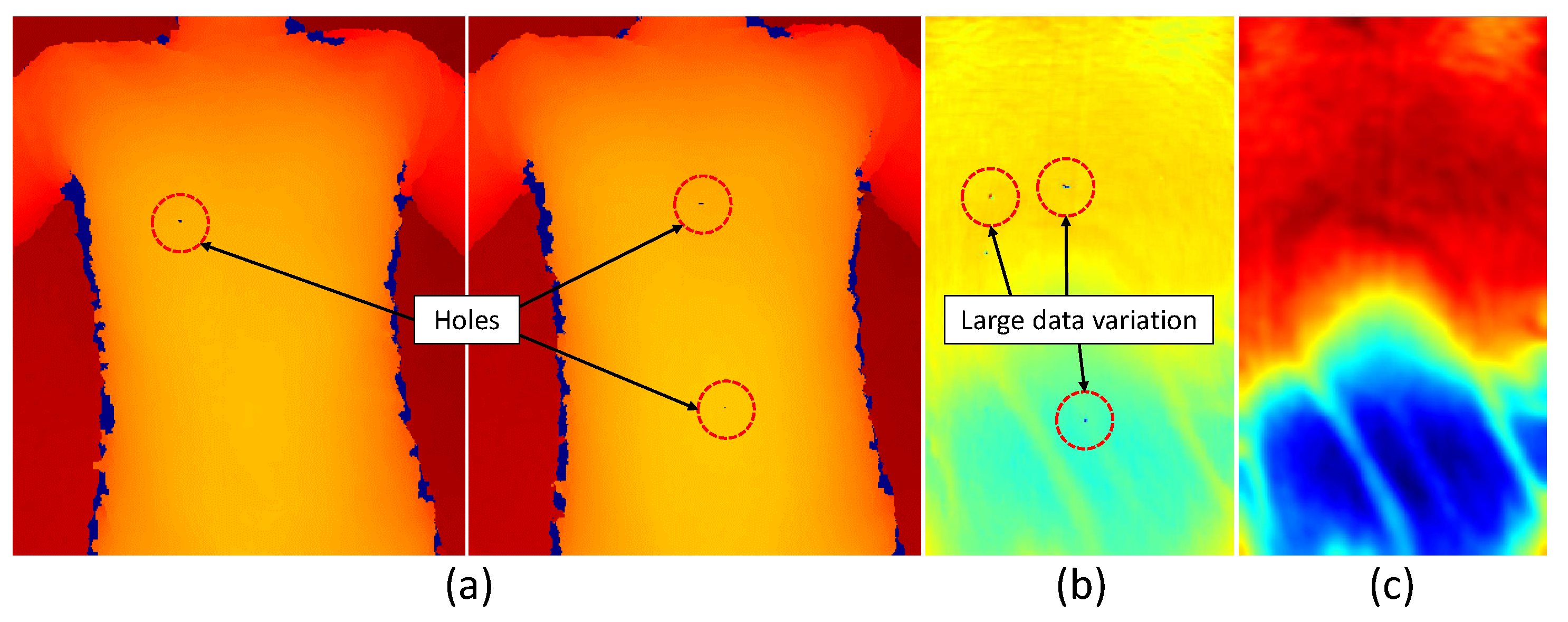

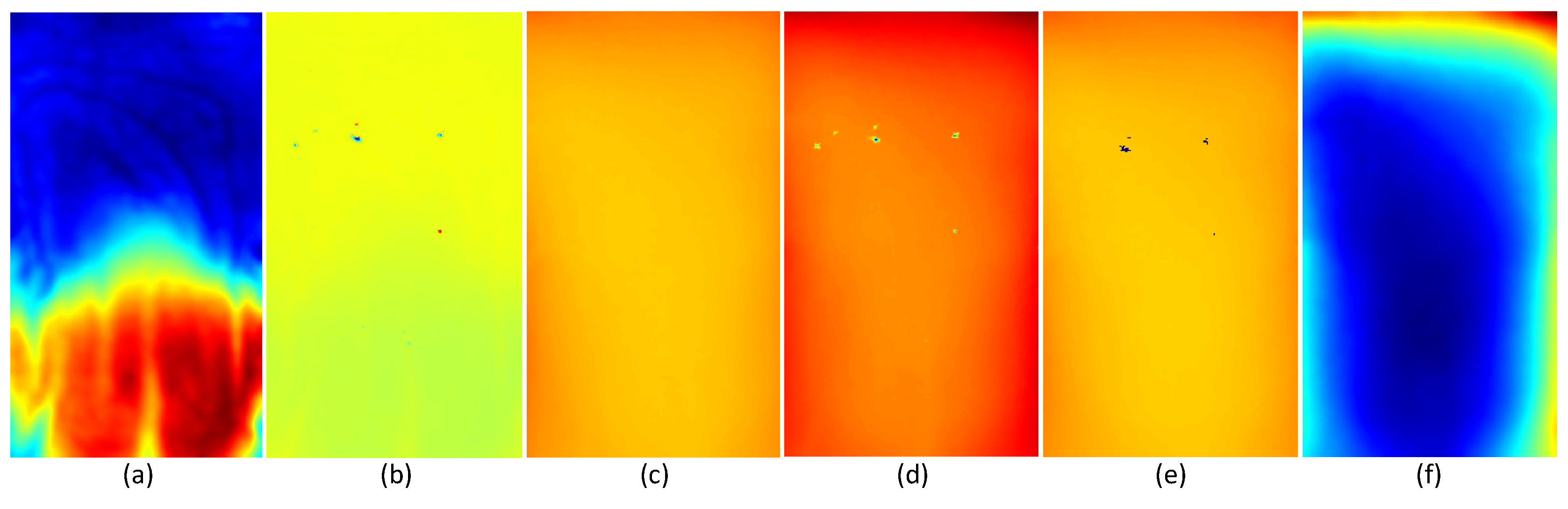

4.3.1. Depth Data Pre-Processing

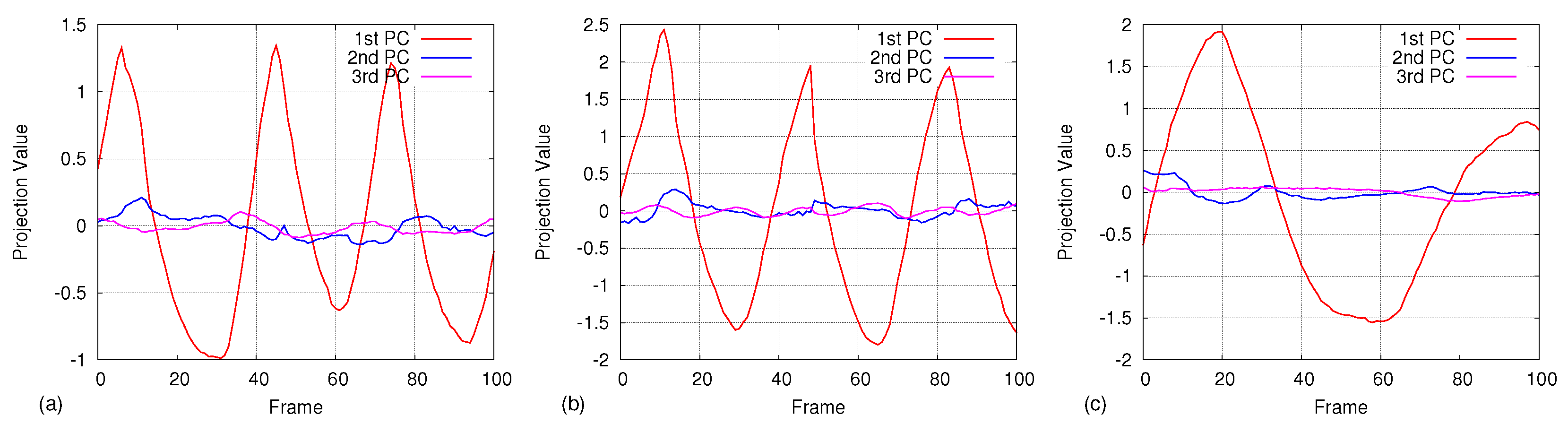

4.3.2. Principal Component Analysis

4.4. Real-Time Respiratory Motion Measurement

4.4.1. Projection and Reconstruction

4.4.2. Motion Measurement

4.5. Evaluation of the Accuracy

4.5.1. Comparison with Spirometer

4.5.2. Comparison with Laser Line Scanning

5. Results

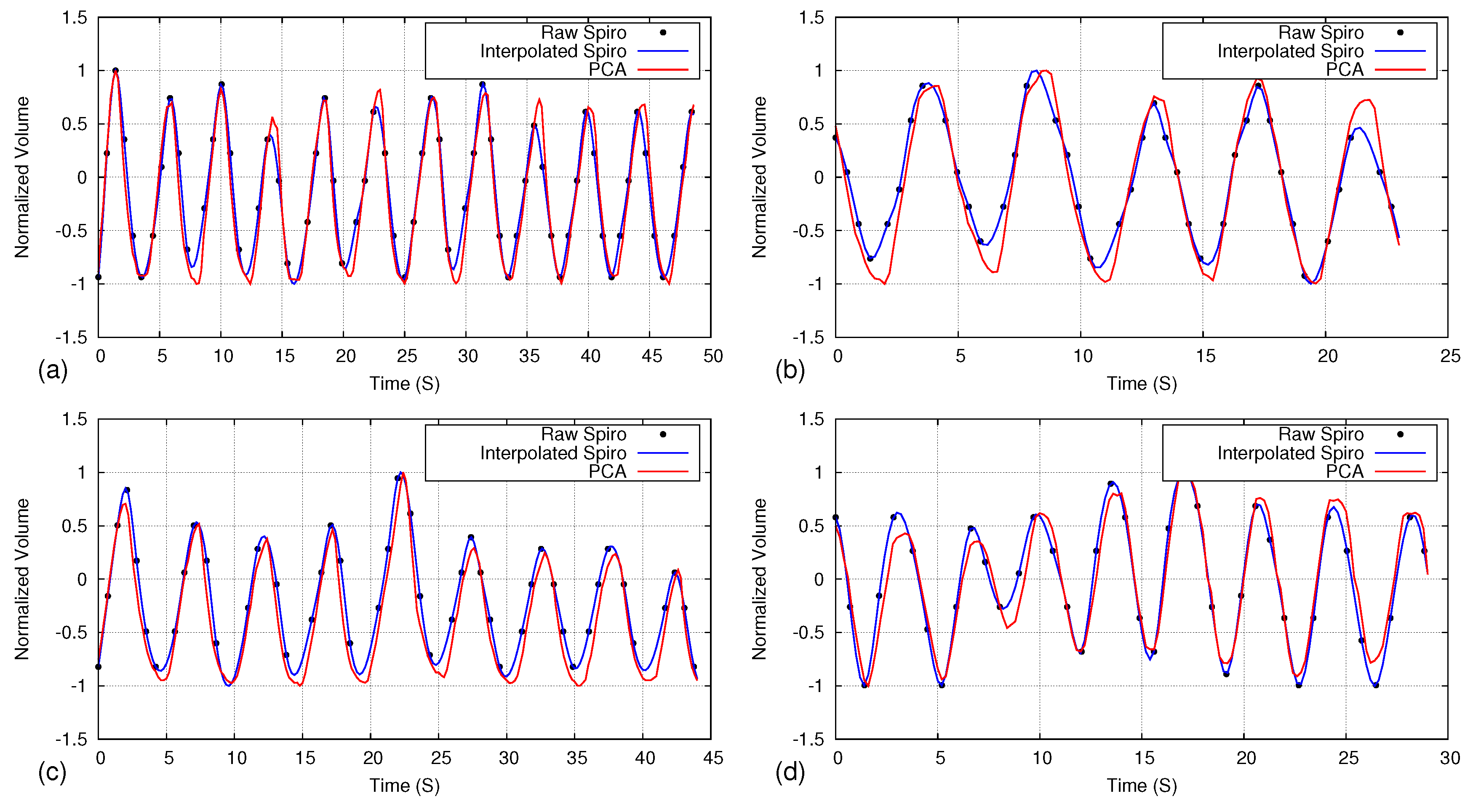

5.1. Comparison of Respiratory Pattern with Spirometer

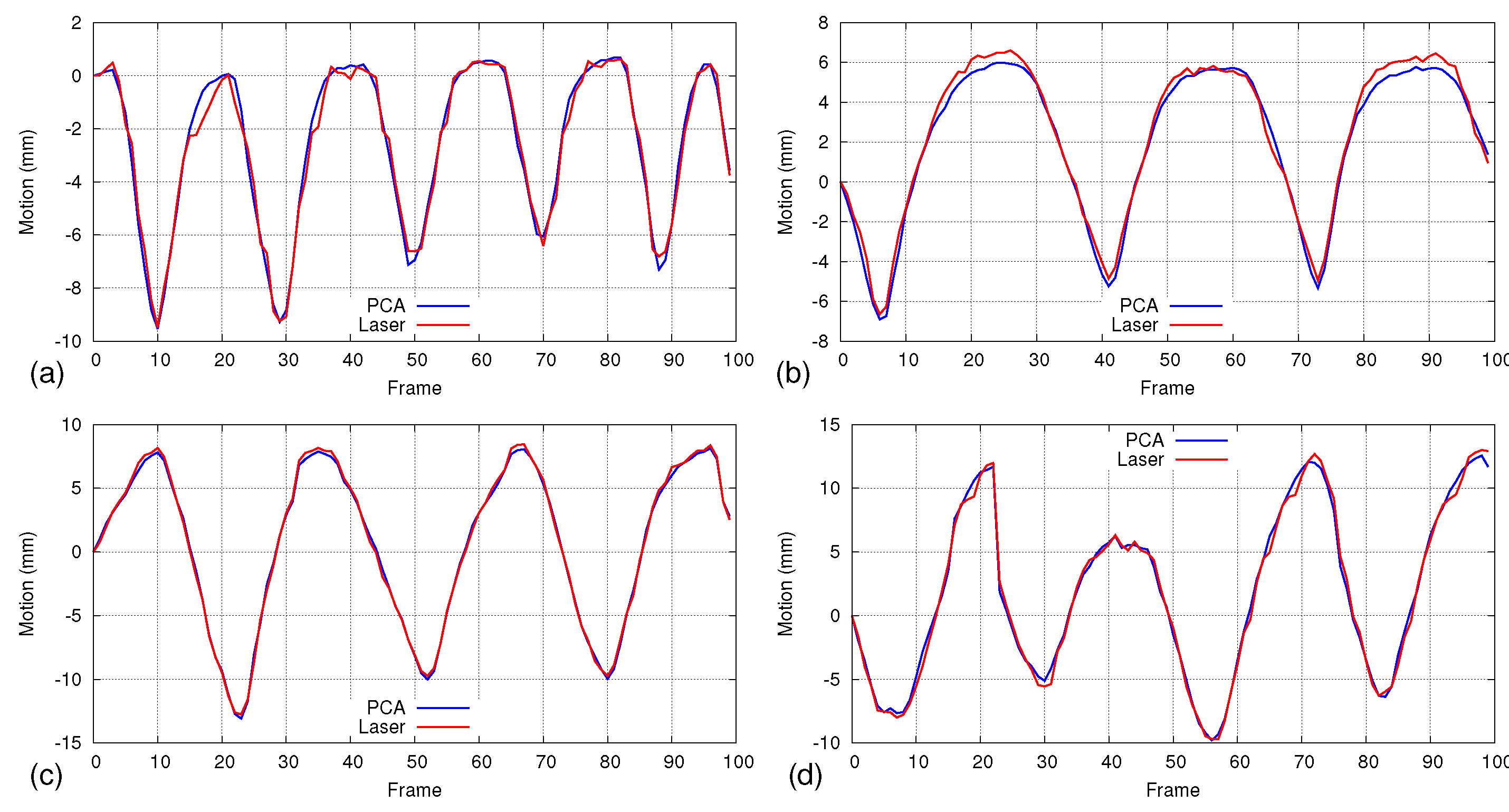

5.2. Accuracy Analysis Using Laser Line Scanning

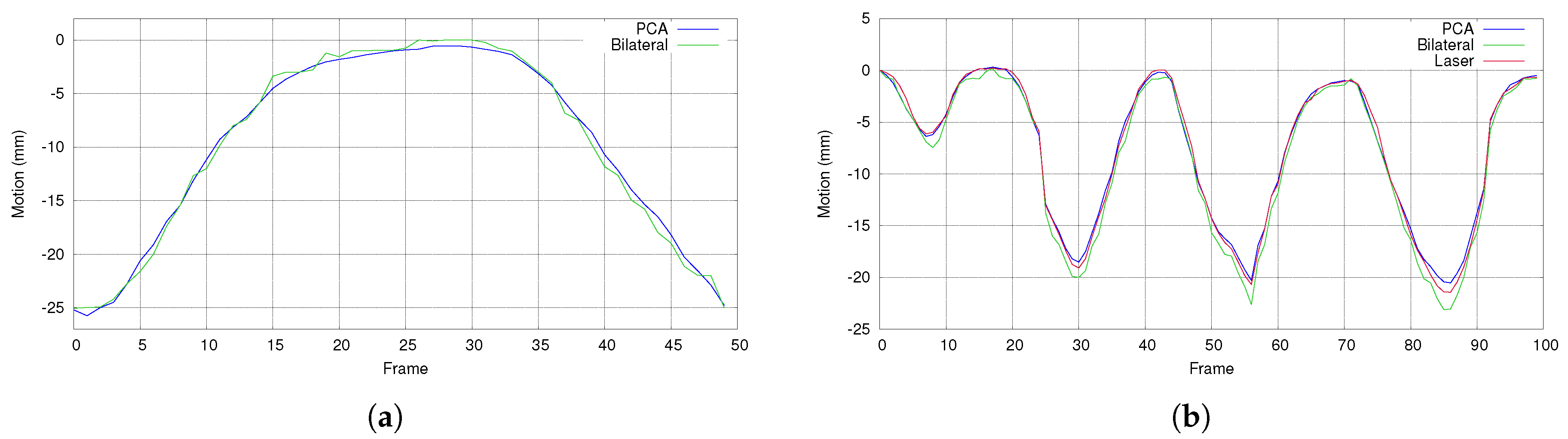

5.3. Comparison with Bilateral Filtering

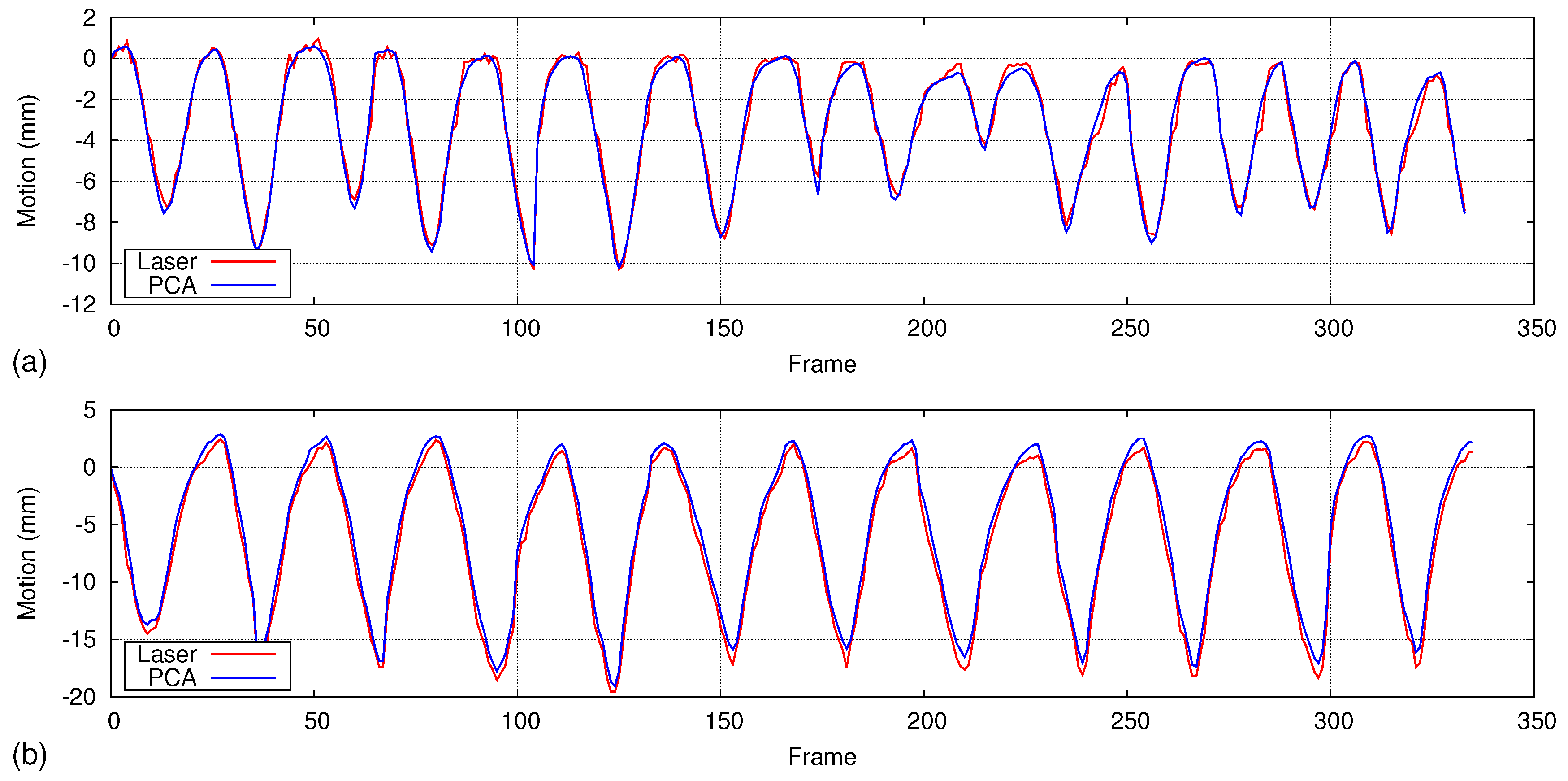

5.4. Isovolume Maneuver

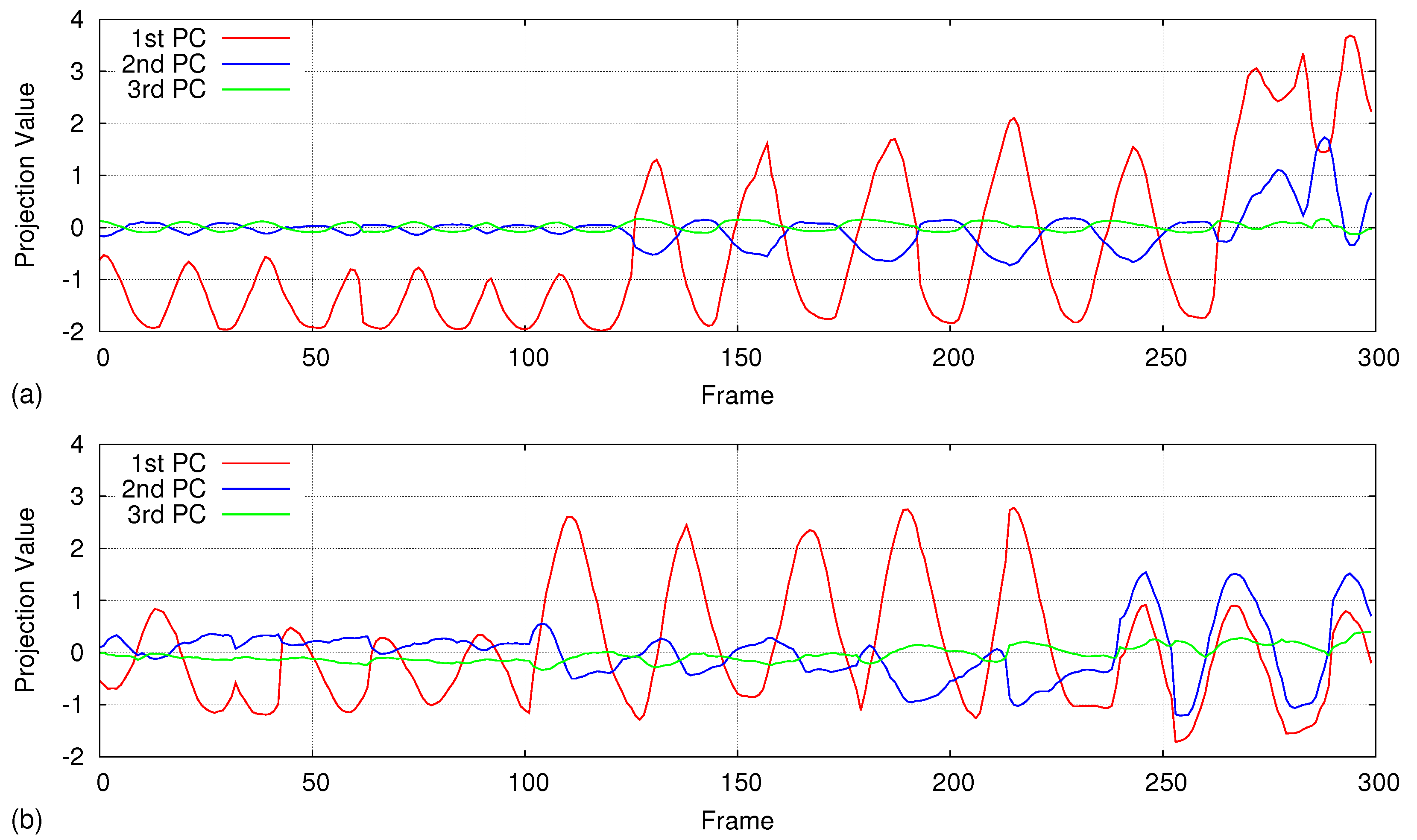

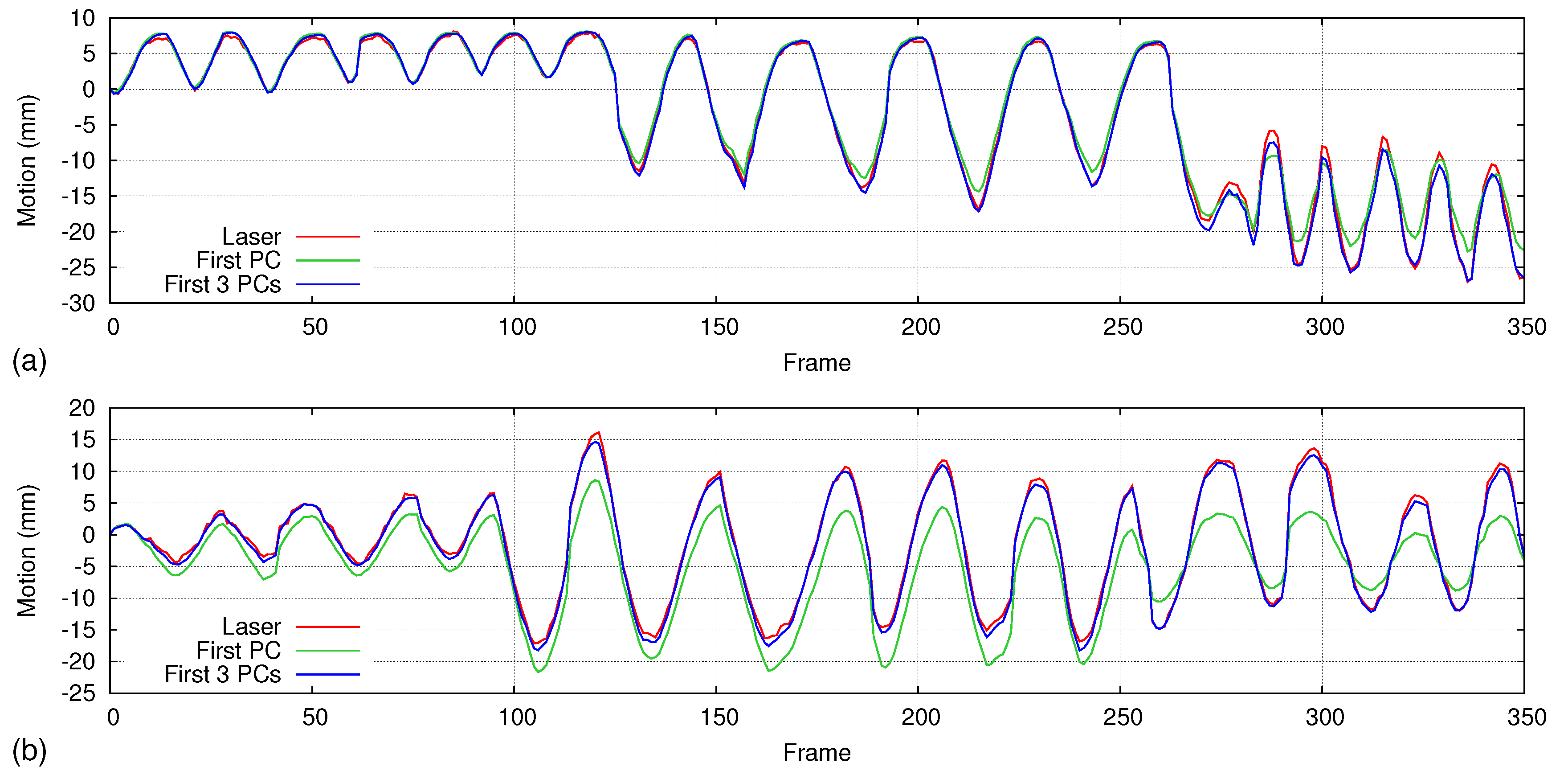

5.5. Handling Irregular Breathing

6. Discussion and Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest


| Volunteer | Gender | Age (years) | BMI (kg/m²) | Datasets |
|---|---|---|---|---|
| 1 | M | 29 | 26.4 | D01, D02 |
| 2 | M | 32 | 28.7 | D03 |
| 3 | M | 26 | 27.4 | D04, D05 |
| 4 | M | 27 | 21.5 | D06, D07 |
| 5 | M | 25 | 26.9 | D08 |
| 6 | M | 28 | 26.5 | D09 |
| 7 | M | 27 | 19.3 | D10, D11 |
| 8 | M | 24 | 24.3 | D12, D13 |
| 9 | M | 30 | 20.9 | D14 |
| 10 | M | 25 | 24.0 | D15 |

| Position | Parameter | D01 | D02 | D03 | D04 | D05 | D06 | D07 | D08 | D09 | D10 | D11 | D12 | D13 | D14 | D15 | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| P1 | Average | 0.23 | 0.66 | 0.18 | 0.27 | 0.39 | 0.36 | 0.36 | 0.21 | 0.83 | 0.45 | 0.32 | 0.36 | 0.94 | 0.43 | 0.55 | 0.44 |
| | Max. | 0.92 | 2.69 | 0.66 | 1.14 | 0.96 | 1.41 | 1.41 | 0.77 | 1.91 | 1.45 | 1.05 | 1.47 | 1.89 | 1.24 | 1.51 | 1.37 |
| | Standard deviation | 0.19 | 0.66 | 0.13 | 0.24 | 0.22 | 0.32 | 0.32 | 0.16 | 0.47 | 0.31 | 0.23 | 0.29 | 0.50 | 0.27 | 0.38 | 0.31 |
| P2 | Average | 0.39 | 0.34 | 0.33 | 0.52 | 0.22 | 1.09 | 0.47 | 0.47 | 0.85 | 0.46 | 0.30 | 0.50 | 0.52 | 0.97 | 0.38 | 0.52 |
| | Max. | 1.10 | 1.34 | 0.84 | 1.62 | 0.66 | 1.87 | 1.37 | 1.31 | 1.72 | 1.34 | 0.79 | 1.55 | 1.56 | 2.51 | 1.38 | 1.40 |
| | Standard deviation | 0.25 | 0.31 | 0.21 | 0.38 | 0.16 | 0.40 | 0.30 | 0.33 | 0.46 | 0.32 | 0.19 | 0.34 | 0.40 | 0.66 | 0.29 | 0.33 |
| P3 | Average | 0.31 | 0.85 | 0.42 | 0.50 | 0.59 | 0.41 | 0.44 | 0.74 | 0.78 | 0.70 | 0.40 | 0.63 | 1.04 | 0.57 | 0.64 | 0.60 |
| | Max. | 1.09 | 1.90 | 1.18 | 1.29 | 1.39 | 1.28 | 1.59 | 1.81 | 1.97 | 1.83 | 1.03 | 1.89 | 2.55 | 1.56 | 1.82 | 1.61 |
| | Standard deviation | 0.25 | 0.44 | 0.26 | 0.32 | 0.34 | 0.31 | 0.34 | 0.44 | 0.50 | 0.46 | 0.26 | 0.47 | 0.65 | 0.36 | 0.40 | 0.39 |
| P4 | Average | 0.42 | 0.27 | 0.28 | 0.51 | 0.34 | 0.40 | 0.36 | 0.38 | 1.18 | 1.55 | 0.69 | 0.50 | 0.74 | 0.49 | 0.41 | 0.57 |
| | Max. | 0.95 | 1.38 | 0.77 | 1.72 | 0.91 | 0.90 | 1.03 | 1.24 | 2.45 | 3.18 | 1.52 | 1.52 | 1.86 | 1.11 | 1.02 | 1.44 |
| | Standard deviation | 0.21 | 0.27 | 0.19 | 0.46 | 0.23 | 0.24 | 0.26 | 0.27 | 0.71 | 0.67 | 0.32 | 0.43 | 0.43 | 0.32 | 0.24 | 0.35 |
| P5 | Average | 0.32 | 0.43 | 0.33 | 0.29 | 0.51 | 0.89 | 0.53 | 0.70 | 0.37 | 0.43 | 0.38 | 0.70 | 0.87 | 0.63 | 0.73 | 0.54 |
| | Max. | 0.89 | 2.23 | 0.96 | 1.61 | 0.97 | 1.68 | 1.46 | 1.59 | 1.27 | 1.35 | 1.02 | 2.04 | 2.16 | 1.63 | 1.79 | 1.51 |
| | Standard deviation | 0.22 | 0.49 | 0.21 | 0.27 | 0.23 | 0.37 | 0.40 | 0.40 | 0.29 | 0.33 | 0.25 | 0.59 | 0.56 | 0.37 | 0.42 | 0.36 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wijenayake, U.; Park, S.-Y. Real-Time External Respiratory Motion Measuring Technique Using an RGB-D Camera and Principal Component Analysis. Sensors 2017, 17, 1840. https://doi.org/10.3390/s17081840