Development of Three-Dimensional Dental Scanning Apparatus Using Structured Illumination

Abstract

1. Introduction

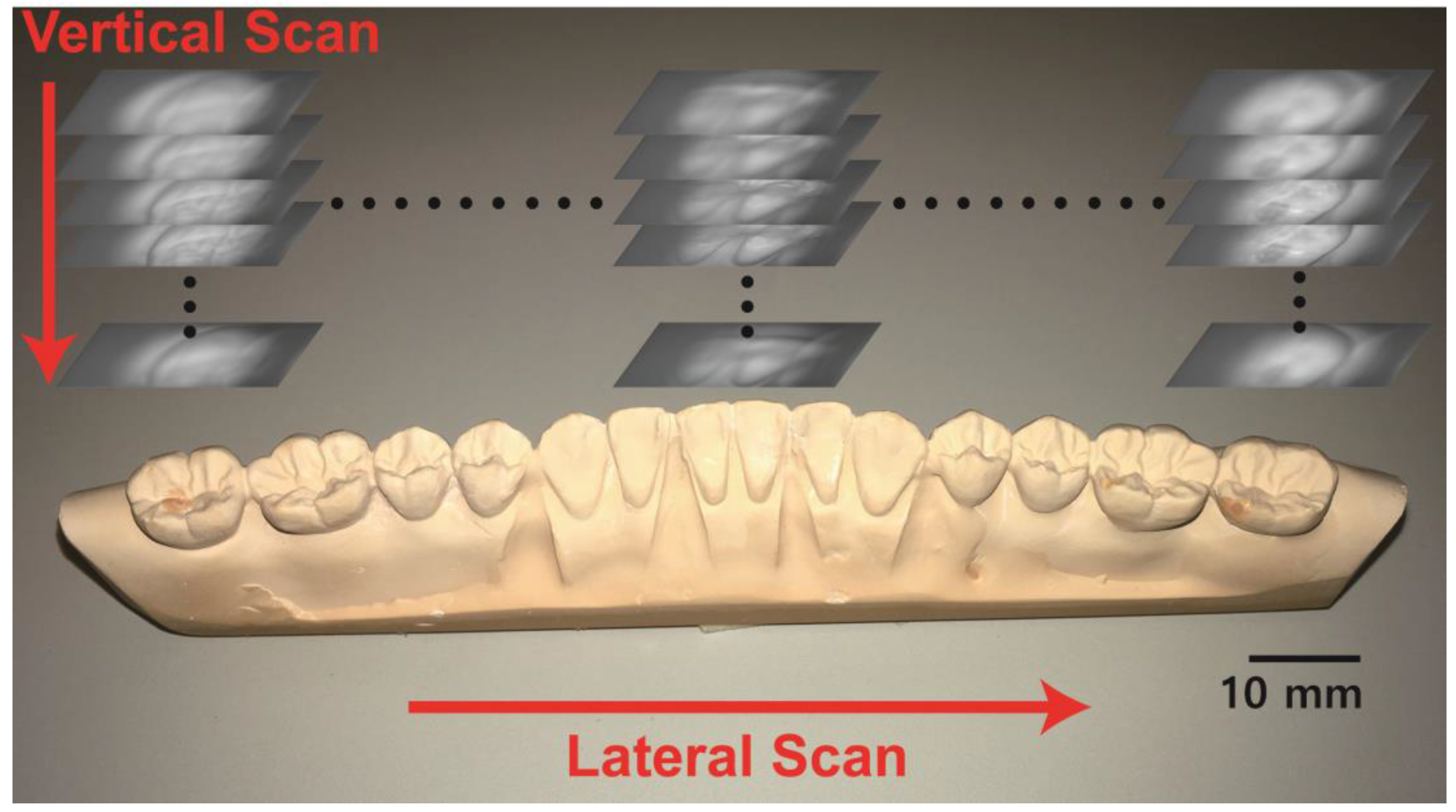

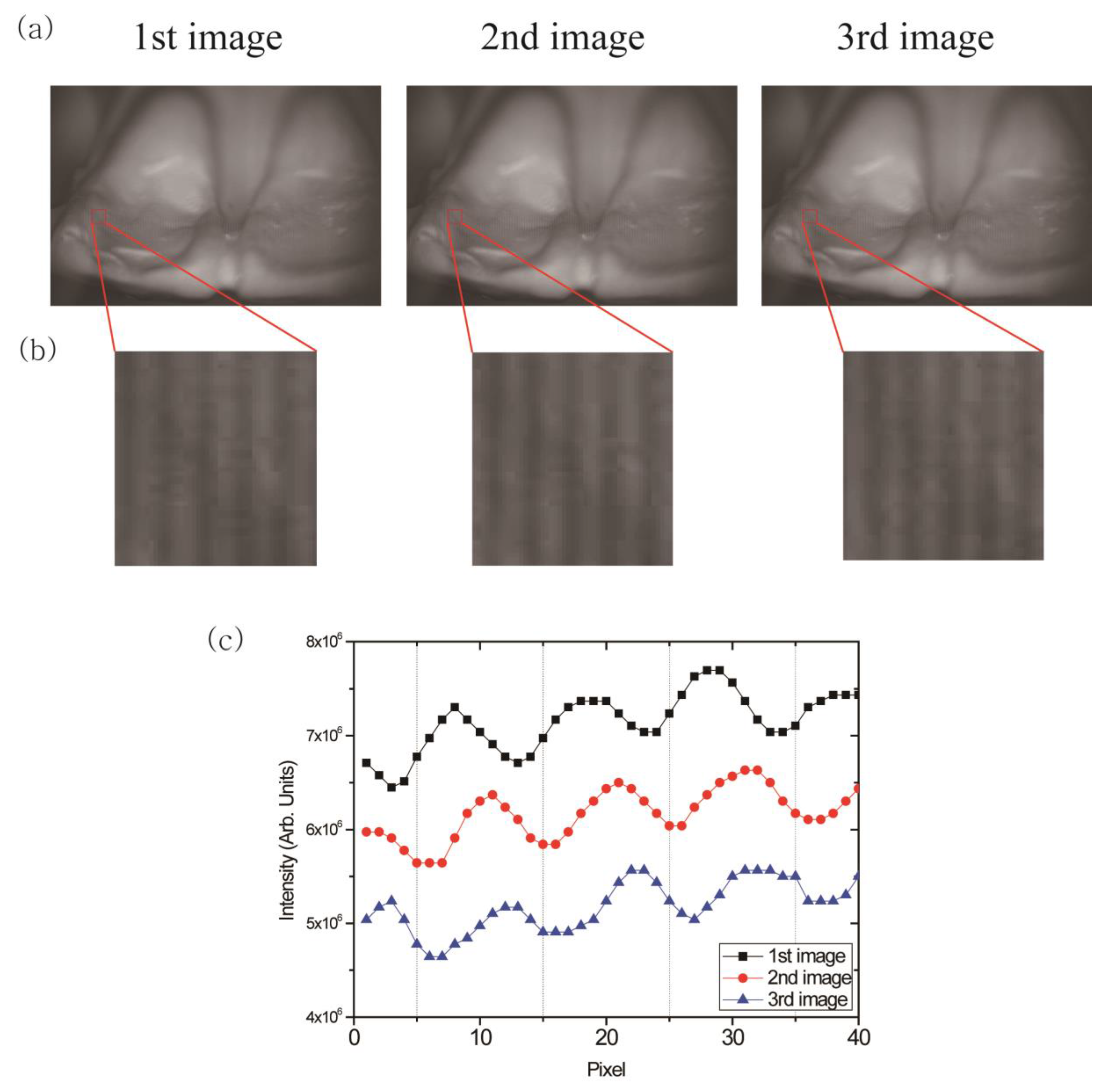

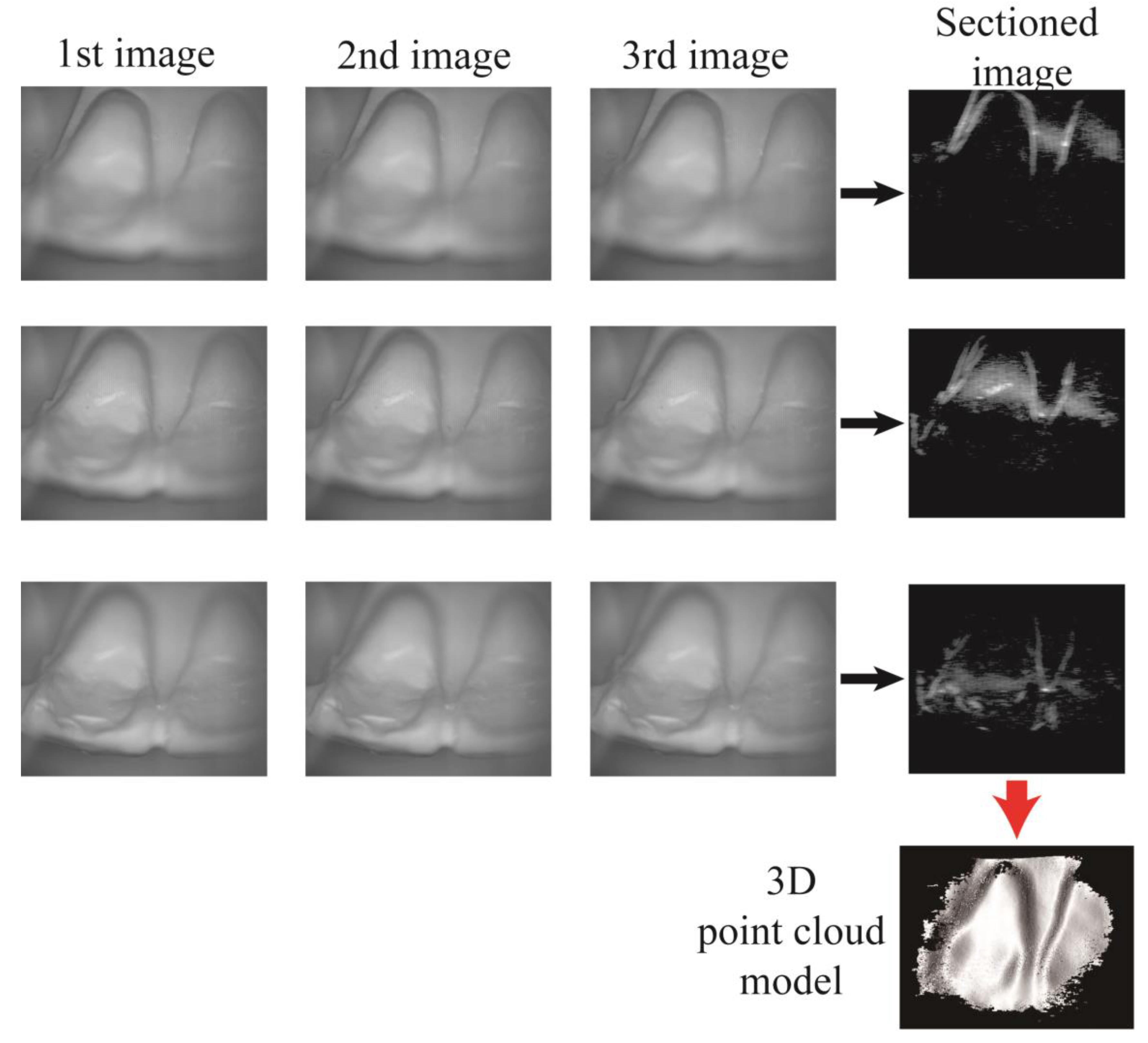

2. Methods

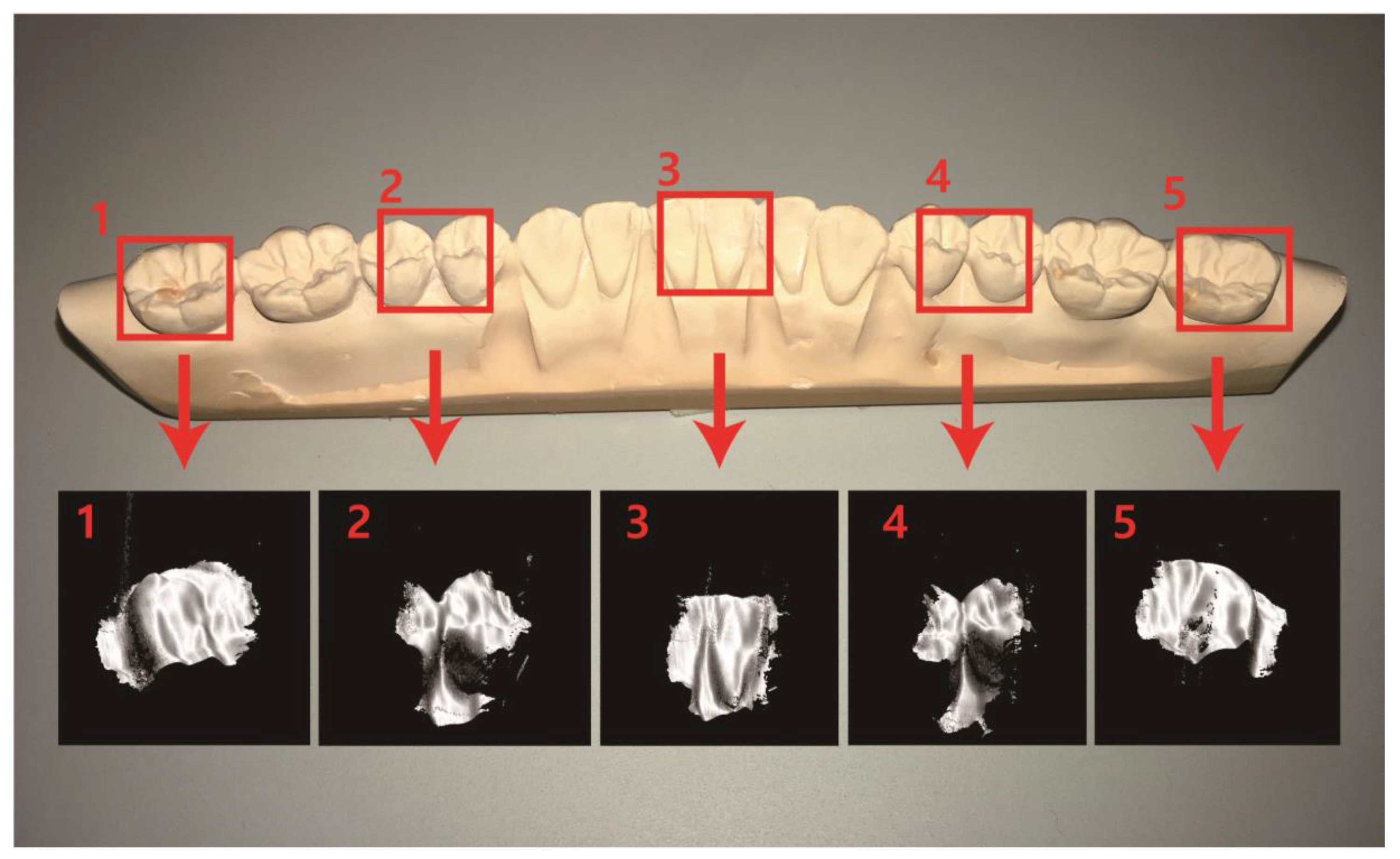

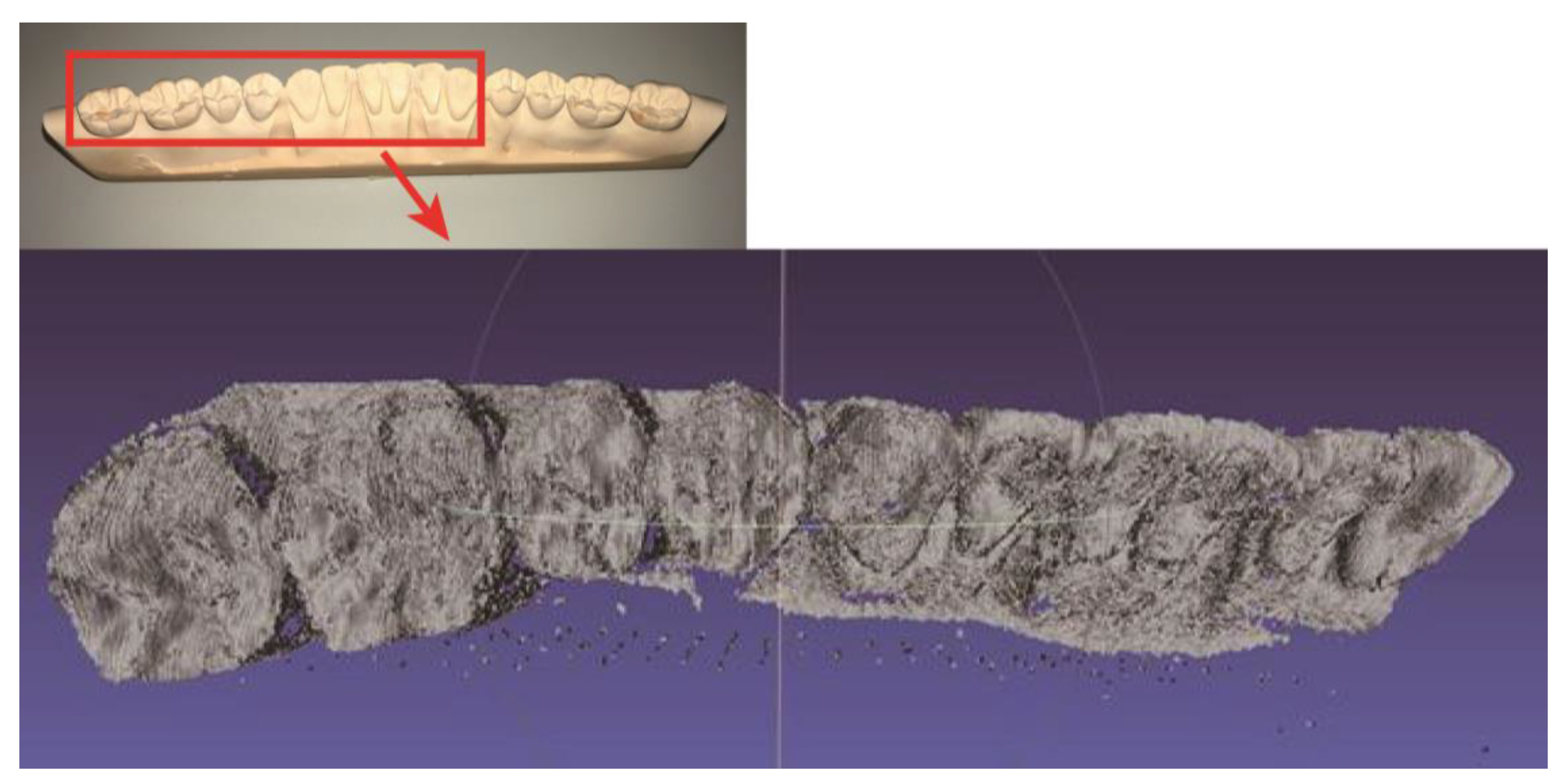

3. Results

4. Discussion

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest


© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ahn, J.S.; Park, A.; Kim, J.W.; Lee, B.H.; Eom, J.B. Development of Three-Dimensional Dental Scanning Apparatus Using Structured Illumination. Sensors 2017, 17, 1634. https://doi.org/10.3390/s17071634