A Multi-Disciplinary Approach to Remote Sensing through Low-Cost UAVs

Abstract

1. Introduction

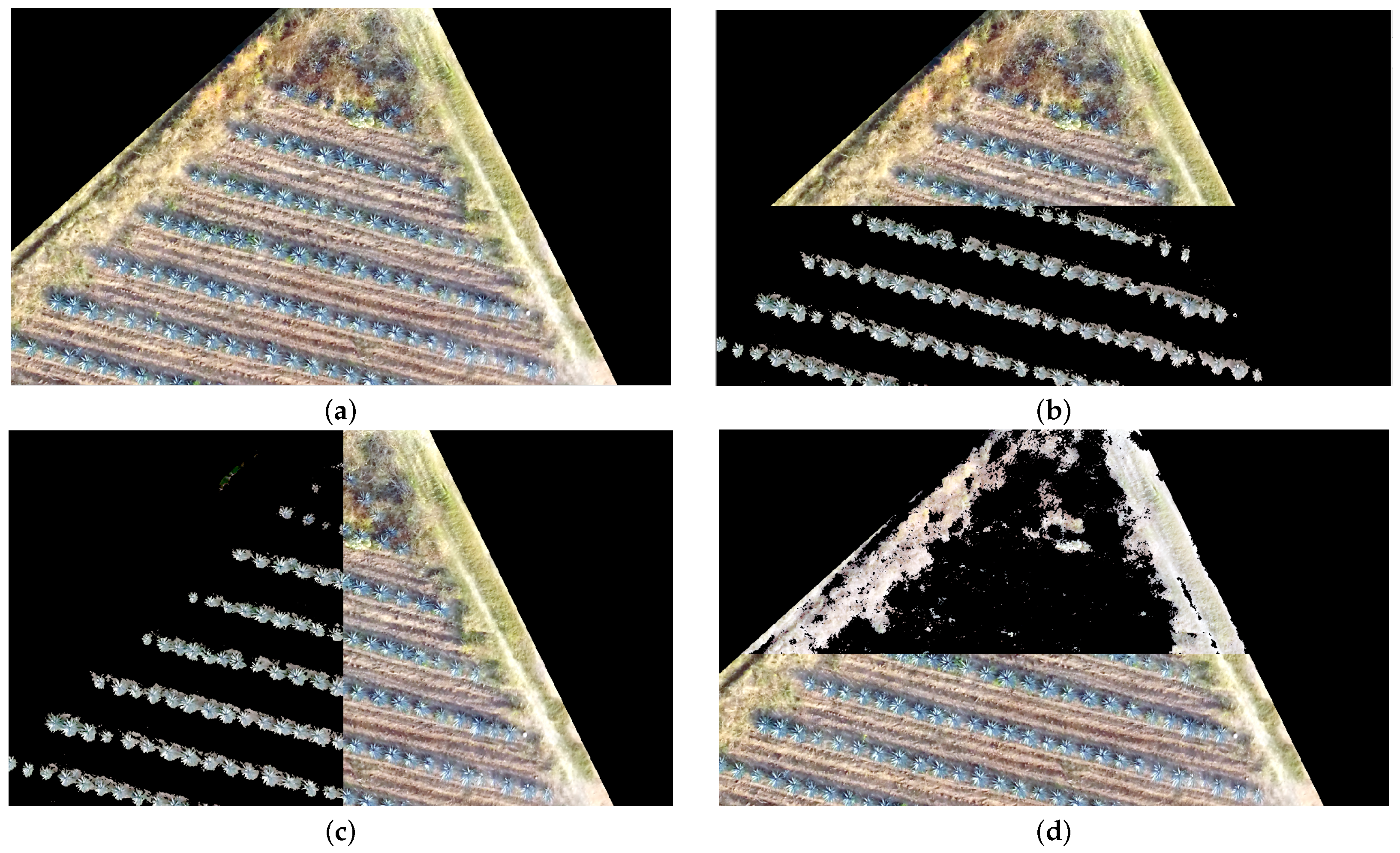

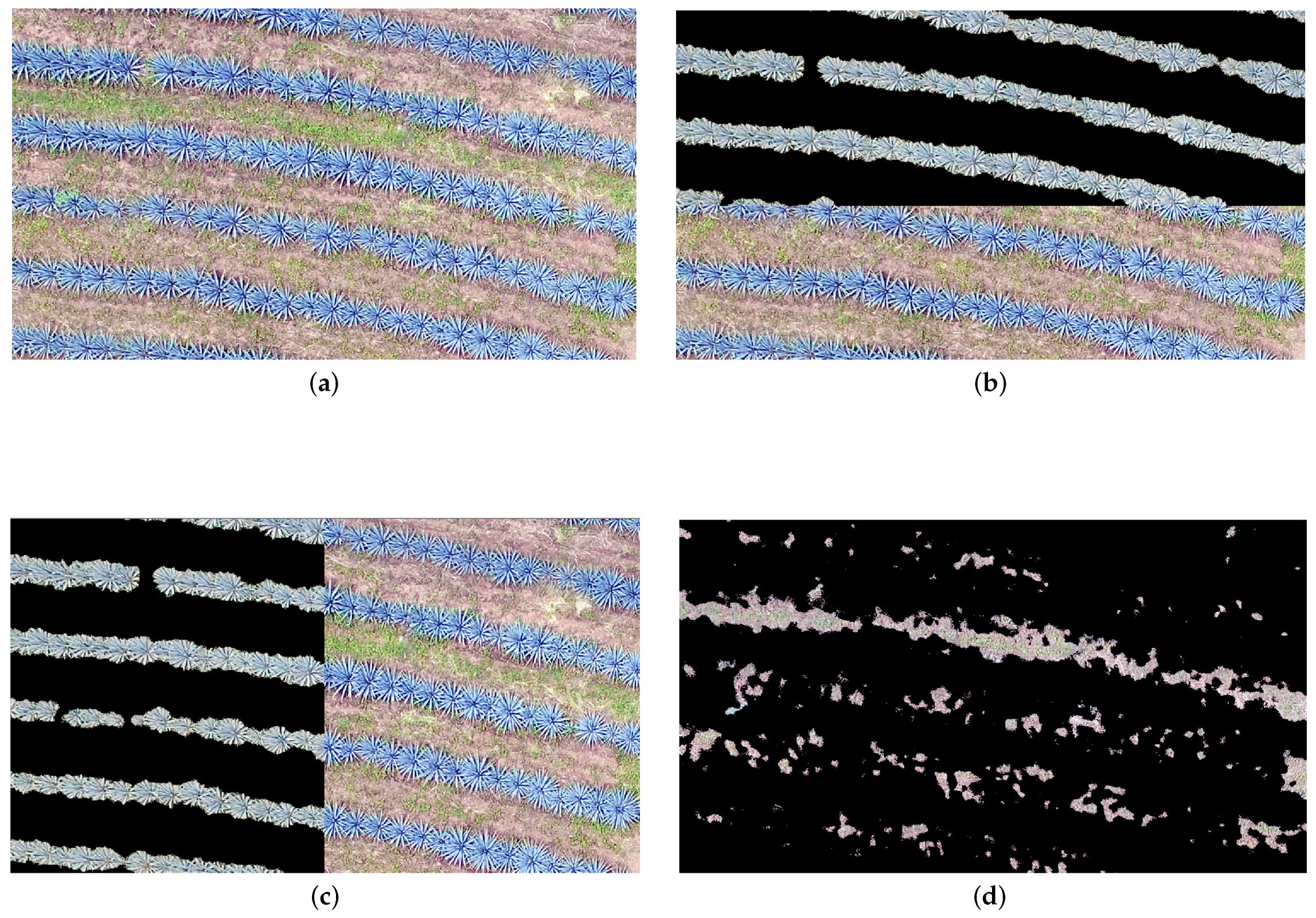

2. Materials and Methods

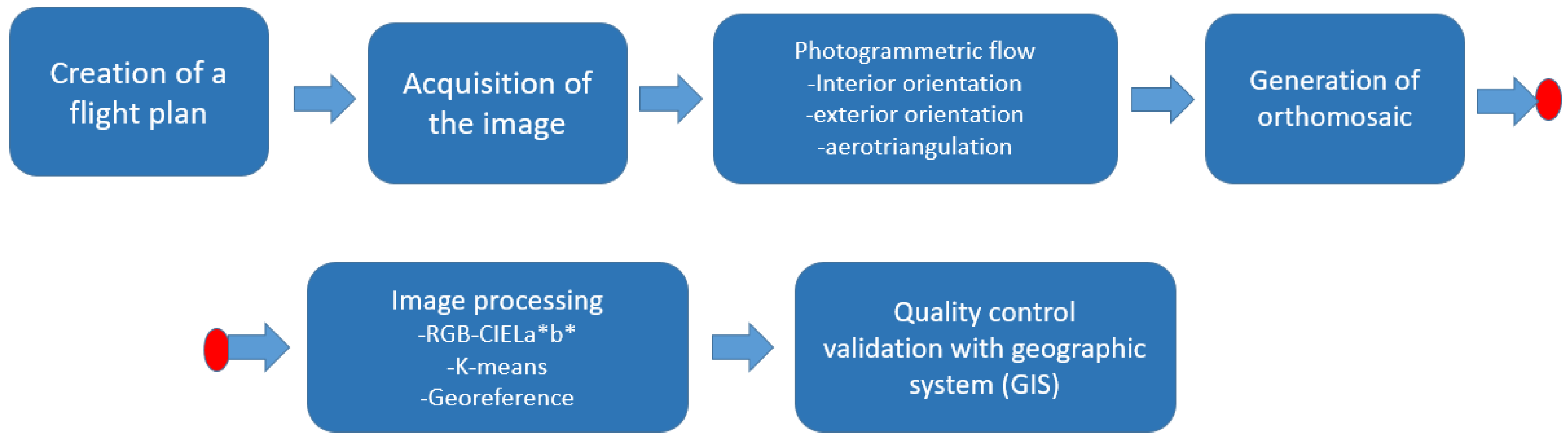

2.1. Work-Flow

2.2. Study Areas and UAV Flight Plan

2.3. Description of the Sensor

2.4. Camera Calibration

2.5. Photogrammetric Flow

- The interior orientation: this describes the internal geometry of the camera and is defined by the coordinates of the principal point and the focal length.

- The aerial triangulation: this determines the 3D positions, in a ground control coordinate system, of points measured on the images. The process requires correct overlap between images [46]; in our case, the horizontal overlap was 70% and the vertical overlap was 30%.
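As a rough illustration of what these overlap figures imply for flight planning, the sketch below converts an overlap fraction into the spacing between consecutive exposures or flight lines. The inputs are assumptions chosen for illustration: 4000 × 3000 px images, a ground sample distance of 2.60 cm per pixel (the image resolution reported for study area a), the 70% overlap applied along the 4000 px image axis and the 30% overlap along the 3000 px axis. The helper names `footprint_m` and `spacing_m` are hypothetical.

```python
def footprint_m(pixels: int, gsd_cm: float) -> float:
    """Ground length (m) covered by `pixels` image pixels at a GSD given in cm/pixel."""
    return pixels * gsd_cm / 100.0


def spacing_m(footprint: float, overlap: float) -> float:
    """Distance between consecutive images (or flight lines) giving `overlap`.

    `overlap` is a fraction in [0, 1); each new image only adds
    (1 - overlap) of a fresh footprint.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint * (1.0 - overlap)


# Illustrative assumptions: 4000 x 3000 px images at 2.60 cm/pixel,
# 70% overlap along the 4000 px axis, 30% along the 3000 px axis.
width_fp = footprint_m(4000, 2.60)
height_fp = footprint_m(3000, 2.60)
print(f"footprint: {width_fp:.1f} m x {height_fp:.1f} m")
print(f"spacing for 70% overlap: {spacing_m(width_fp, 0.70):.1f} m")
print(f"spacing for 30% overlap: {spacing_m(height_fp, 0.30):.1f} m")
```

Under these assumptions each image covers about 104 m × 78 m, so 70% overlap corresponds to roughly 31 m between exposures and 30% overlap to roughly 55 m between adjacent flight lines.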

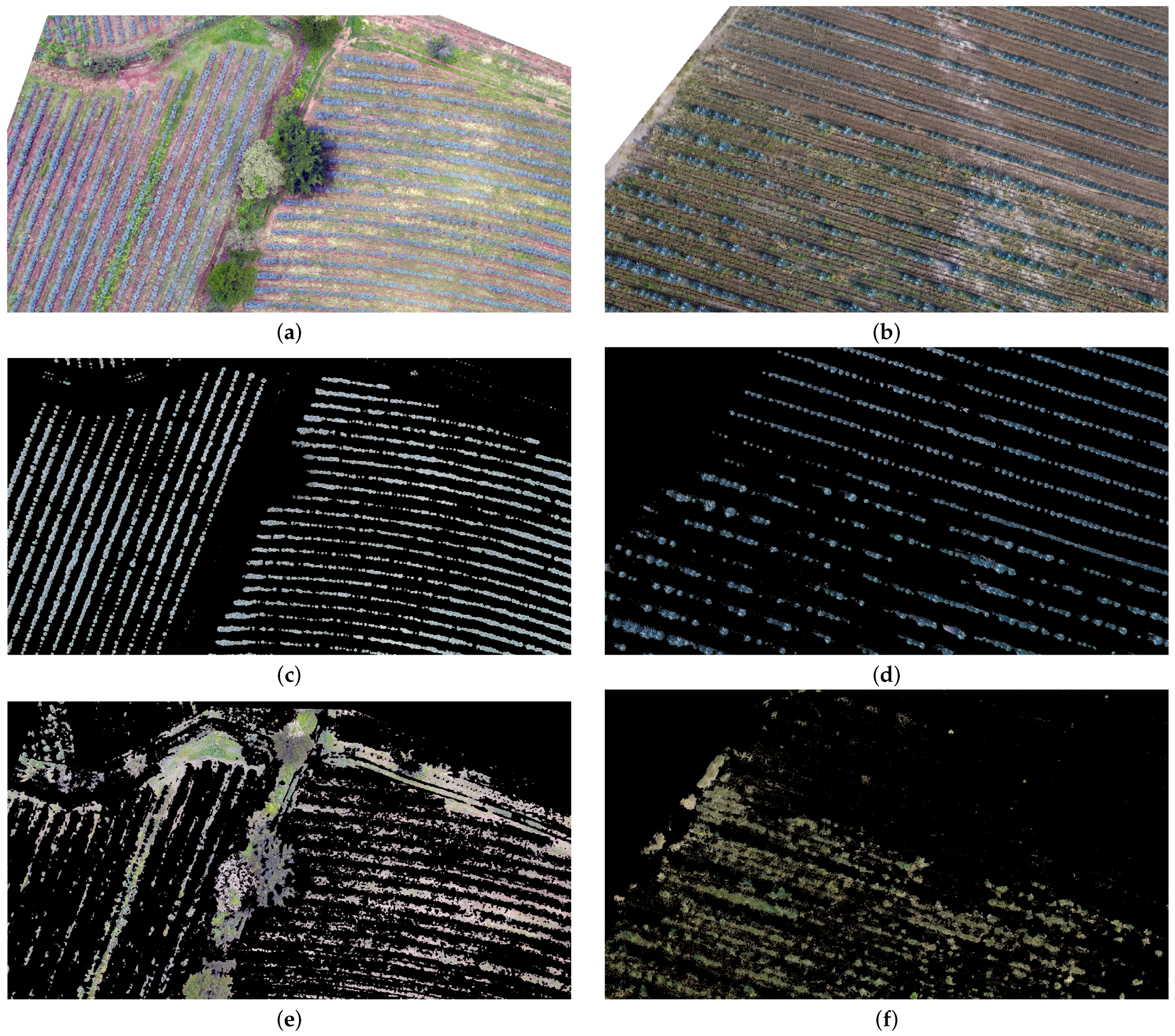

3. Image Processing

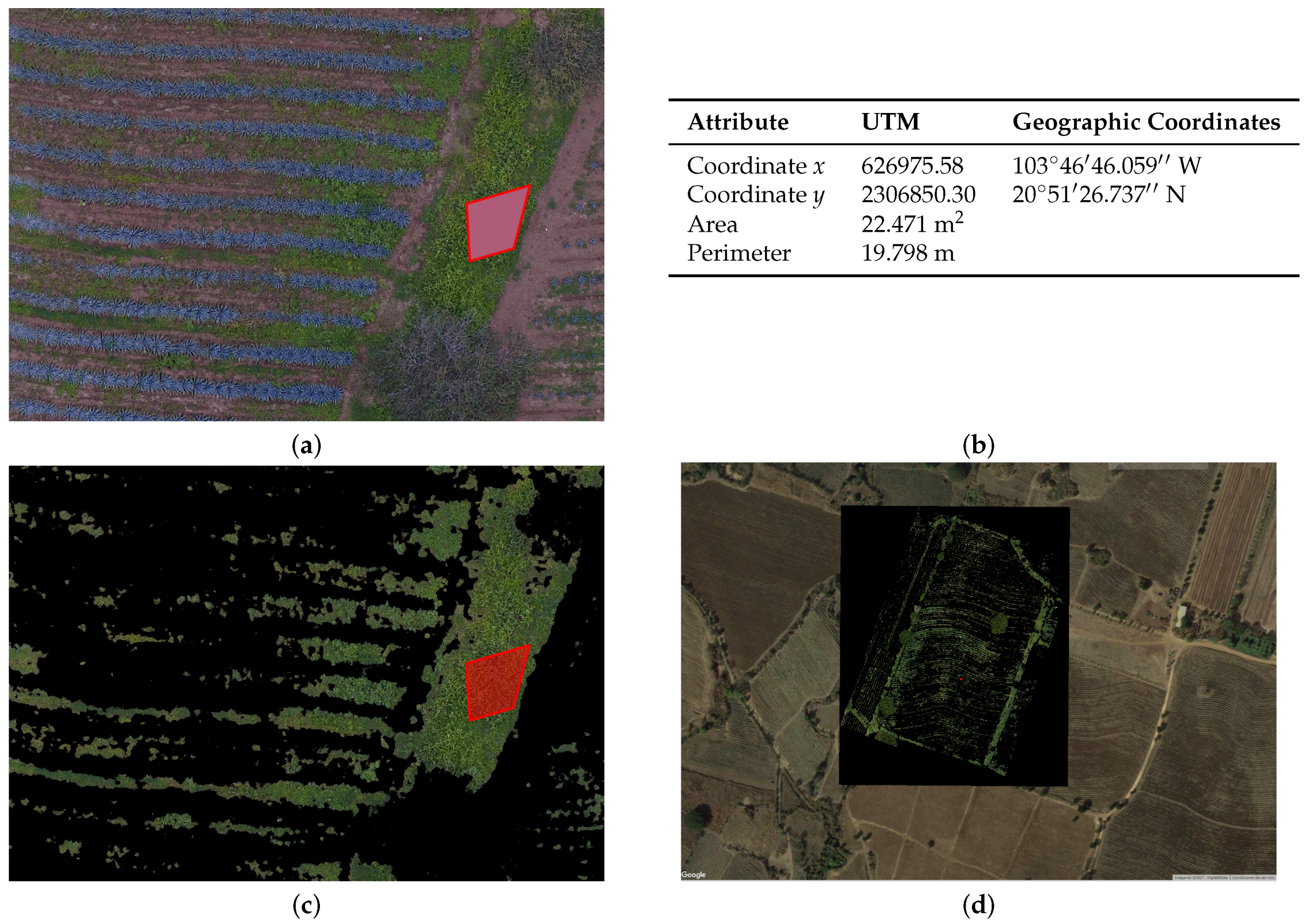

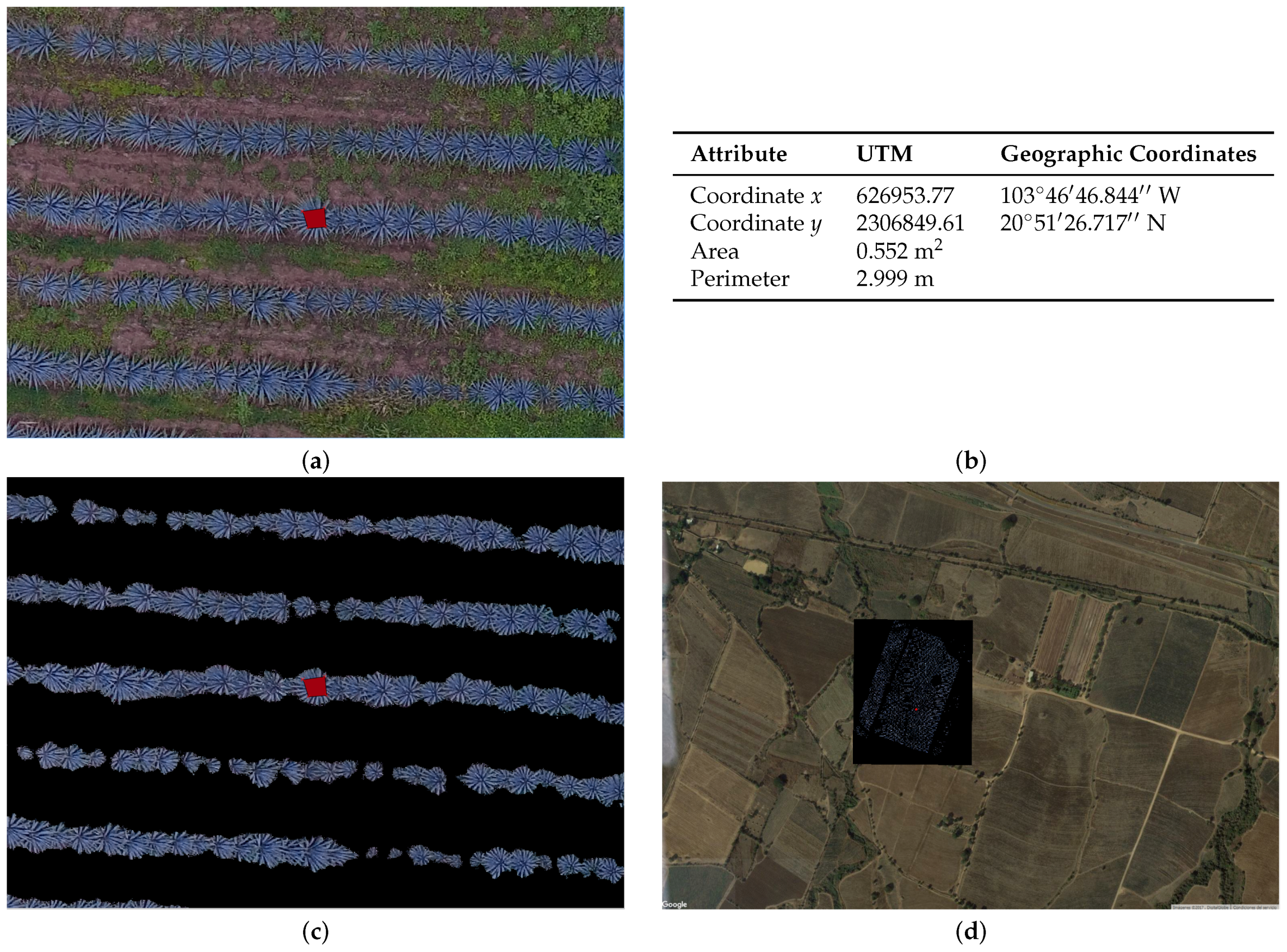

4. Evaluation of Methodology

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Aasen, H.; Burkart, A.; Bolten, A.; Bareth, G. Generating 3D hyperspectral information with lightweight UAV snapshot cameras for vegetation monitoring: From camera calibration to quality assurance. ISPRS J. Photogramm. Remote Sens. 2015, 108, 245–259.

2. Horcher, A.; Visser, R.J. Unmanned aerial vehicles: Applications for natural resource management and monitoring. In Proceedings of the Council on Forest Engineering Conference, Hot Springs, AR, USA, 27–30 April 2004.

3. Tsach, S.; Penn, D.; Levy, A. Advanced technologies and approaches for next generation UAVs. In Proceedings of the International Council of the Aeronautical Sciences, Congress ICAS, Toronto, ON, Canada, 8–13 September 2002.

4. Gonçalves, J.; Henriques, R. UAV photogrammetry for topographic monitoring of coastal areas. ISPRS J. Photogramm. Remote Sens. 2015, 104, 101–111.

5. Kimball, B.; Idso, S. Increasing atmospheric CO2: Effects on crop yield, water use and climate. Agric. Water Manag. 1983, 7, 55–72.

6. Seelan, S.K.; Laguette, S.; Casady, G.M.; Seielstad, G.A. Remote sensing applications for precision agriculture: A learning community approach. Remote Sens. Environ. 2003, 88, 157–169.

7. Weibel, R.; Hansman, R.J. Safety considerations for operation of different classes of UAVs in the NAS. In Proceedings of the AIAA 3rd “Unmanned Unlimited” Technical Conference, Workshop and Exhibit, Chicago, IL, USA, 20–22 September 2004; p. 6421.

8. Tetracam INC. Available online: http://www.tetracam.com/Products1.htm (accessed on 22 March 2017).

9. Resonon Systems. Available online: http://www.resonon.com (accessed on 25 March 2017).

10. Zhou, G.; Yang, J.; Li, X.; Yang, X. Advances of flash LiDAR development onboard UAV. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, 193–198.

11. Geodetics Incorporated. Available online: http://geodetics.com/wp-content/uploads/2016/10/Geo-MMS.pdf (accessed on 22 March 2017).

12. Skycatch. Available online: https://www.skycatch.com/ (accessed on 22 March 2017).

13. Dronedeploy. Available online: https://www.dronedeploy.com/ (accessed on 22 March 2017).

14. marketsandmarkets.com. Unmanned Aerial Vehicle (UAV) Market, by Application, Class (Mini, Micro, Nano, Tactical, MALE, HALE, UCAV), SubSystem (GCS, Data Link, Software), Energy Source, Material Type, Payload and Region—Global Forecast to 2022. Available online: http://www.marketsandmarkets.com/Market-Reports/unmanned-aerial-vehicles-uav-market-662.html (accessed on 22 December 2016).

15. Andersson, P.J. Hazard: A Framework Towards Connecting Artificial Intelligence And Robotics. Available online: https://www.cs.auckland.ac.nz/courses/compsci777s2c/ijcai05.pdf#page=5 (accessed on 15 June 2017).

16. Colomina, I.; de la Tecnologia, P.M. Towards A New Paradigm for High-Resolution Low-Cost Photogrammetry and Remote Sensing. In Proceedings of the International Society for Photogrammetry and Remote Sensing, (ISPRS) XXI Congress, Beijing, China, 3–11 July 2008; pp. 1201–1206.

17. Ustuner, M.; Esetlili, M.T.; Sanli, F.B.; Abdikan, S.; Kurucu, Y. Comparison of crop classification methods for the sustainable agriculture management. J. Environ. Prot. Ecol. 2016, 17, 648–655.

18. Gould, W.; Pitblado, J.; Sribney, W. Maximum Likelihood Estimation With Stata; Stata Press: College Station, TX, USA, 2006.

19. Mather, P.; Tso, B. Classification Methods for Remotely Sensed Data, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2009.

20. Richards, J.A. Remote Sensing Digital Image Analysis; Springer: Berlin, Germany, 2013.

21. Kruse, F.A.; Lefkoff, A.B.; Boardman, J.W.; Heidebrecht, K.B.; Shapiro, A.T.; Barloon, P.J.; Goetz, A.F.H. The Spectral Image Processing System (SIPS)—Interactive Visualization and Analysis of Imaging Spectrometer Data. Remote Sens. Environ. 1993, 44, 145–163.

22. Kruse, F.A.; Lefkoff, A.B.; Boardman, J.W.; Heidebrecht, K.B.; Shapiro, A.T.; Barloon, P.J.; Goetz, A.F.H. Comparison of Spectral Angle Mapper and Artificial Neural Network Classifiers Combined with Landsat TM Imagery Analysis for Obtaining Burnt Area Mapping. Sensors 2010, 10, 1967–1985.

23. Mather, P.M.; Koch, M. Computer Processing of Remotely-sensed Images: An Introduction, 4th ed.; Wiley-Blackwell: Chichester, UK, 2011.

24. Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

25. Huang, C.; Davis, L.S.; Townshend, J.R.G. An Assessment of Support Vector Machines for Land Cover Classification. Int. J. Remote Sens. 2002, 23, 725–749.

26. Melgani, F.; Bruzzone, L. Classification of Hyperspectral Remote Sensing Images with Support Vector Machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

27. Zhang, C.; Xie, Z. Data fusion and classifier ensemble techniques for vegetation mapping in the coastal Everglades. Geocarto Int. 2014, 29, 228–243.

28. Pinter, P.J., Jr.; Hatfield, J.L.; Schepers, J.S.; Barnes, E.M.; Moran, M.S.; Daughtry, C.S.; Upchurch, D.R. Remote sensing for crop management. Photogramm. Eng. Remote Sens. 2003, 69, 647–664.

29. Yu, K.; Li, F.; Gnyp, M.L.; Miao, Y.; Bareth, G.; Chen, X. Remotely detecting canopy nitrogen concentration and uptake of paddy rice in the Northeast China Plain. ISPRS J. Photogramm. Remote Sens. 2013, 78, 102–115.

30. Farifteh, J.; Van der Meer, F.; Atzberger, C.; Carranza, E. Quantitative analysis of salt-affected soil reflectance spectra: A comparison of two adaptive methods (PLSR and ANN). Remote Sens. Environ. 2007, 110, 59–78.

31. Motohka, T.; Nasahara, K.N.; Oguma, H.; Tsuchida, S. Applicability of green-red vegetation index for remote sensing of vegetation phenology. Remote Sens. 2010, 2, 2369–2387.

32. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

33. Bautista-Justo, M.; García-Oropeza, L.; Barboza-Corona, J.; Parra-Negrete, L. El Agave Tequilana Weber y la Producción de Tequila; Red Acta Universitaria: Guanajuato, Mexico, 2000.

34. Garnica, J.F.; Reich, R.; Zuñiga, E.T.; Bravo, C.A. Using Remote Sensing to Support different Approaches to identify Agave (Agave tequilana Weber) CROPS. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, XXXVII, 941–944.

35. Vallet, J.; Panissod, F.; Strecha, C.; Tracol, M. Photogrammetric performance of an ultra light weight swinglet UAV. In Proceedings of the UAV-g (Unmanned Aerial Vehicle in Geomatics) Conference, Zurich, Switzerland, 14–16 September 2011.

36. DJI. Available online: https://www.dji.com/phantom-4/info (accessed on 23 February 2017).

37. Bouguet, J.Y. Camera Calibration Toolbox for Matlab. Available online: http://www.vision.caltech.edu/bouguetj/calib_doc/ (accessed on 25 April 2017).

38. MathWorks. Single Camera Calibration App. Available online: https://es.mathworks.com/help/vision/ug/single-camera-calibrator-app.html (accessed on 25 April 2017).

39. Gašparović, M.; Gajski, D. Two-step camera calibration method developed for micro UAV’s. In Proceedings of the XXIII ISPRS Congress, Prague, Czech Republic, 12–19 July 2016.

40. Bath, W.; Paxman, J. UAV localisation & control through computer vision. In Proceedings of the Australasian Conference on Robotics and Automation, Sydney, Australia, 7 December 2005.

41. Snavely, N.; Seitz, S.M.; Szeliski, R. Modeling the world from internet photo collections. Int. J. Comput. Vis. 2008, 80, 189–210.

42. Sani, S.; Carlos, J. Vehículos Aéreos no Tripulados-UAV Para la Elaboración de Cartografía Escalas Grandes Referidas al Marco de Referencia Sirgas-Ecuador. Ph.D. Thesis, Universidad de las Fuerzas Armadas ESPE, Sangolquí, Ecuador, 2015.

43. Cohen, K. Digital still camera forensics. Small Scale Digit. Device Forensics J. 2007, 1, 1–8.

44. Laliberte, A.S.; Winters, C.; Rango, A. UAS remote sensing missions for rangeland applications. Geocarto Int. 2011, 26, 141–156.

45. Gašparović, M.; Jurjević, L. Gimbal Influence on the Stability of Exterior Orientation Parameters of UAV Acquired Images. Sensors 2017, 17, 401.

46. García, J.L.L. Aerotriangulación: Cálculo y Compensación de un Bloque Fotogramétrico; Universidad Politécnica de Valencia: Valencia, Spain, 1999.

47. Laliberte, A.S.; Herrick, J.E.; Rango, A.; Winters, C. Acquisition, orthorectification, and object-based classification of unmanned aerial vehicle (UAV) imagery for rangeland monitoring. Photogramm. Eng. Remote Sens. 2010, 76, 661–672.

48. Wyszecki, G.; Stiles, W. Color Science: Concepts and Methods, Quantitative Data and Formulae, 2nd ed.; Wiley: New York, NY, USA, 1982.

49. Wright, W.D. A re-determination of the trichromatic coefficients of spectral colours. Trans. Opt. Soc. 1929, 30, 141.

50. Guild, J. The colorimetric properties of the spectrum. Philos. Trans. R. Soc. London 1932, 230, 149–187.

51. Hutson, G.H. Teoría de la Televisión en Color, 2nd ed.; S.A. MARCOMBO: Barcelona, Spain, 1984.

52. Alarcón, T.E.; Marroquín, J.L. Linguistic color image segmentation using a hierarchical Bayesian approach. Color Res. Appl. 2009, 34, 299–309.

53. Jain, A.K. Data clustering: 50 years beyond K-means. Pattern Recognit. Lett. 2010, 31, 651–666.

54. Martins, O.; Braz Junior, G.; Corrêa Silva, A.; Cardoso de Paiva, A.; Gattass, M. Detection of masses in digital mammograms using K-means and support vector machine. ELCVIA: Electron. Lett. Comput. Vis. Image Anal. 2009, 8, 39–50.

55. Grama, A. Introduction to Parallel Computing; Pearson Education: Harlow, UK, 2003.

56. Zhao, W.; Ma, H.; He, Q. Parallel k-means clustering based on MapReduce. In IEEE International Conference on Cloud Computing; Springer: Berlin/Heidelberg, Germany, 2009; pp. 674–679.

57. Ritter, N.; Ruth, M.; Grissom, B.B.; Galang, G.; Haller, J.; Stephenson, G.; Covington, S.; Nagy, T.; Moyers, J.; Stickley, J.; et al. GeoTIFF Format Specification GeoTIFF Revision 1.0; SPOT Image Corp.: Reston, VA, USA, 28 December 2000.

58. Mahammad, S.S.; Ramakrishnan, R. GeoTIFF-A standard image file format for GIS applications. Map India 2003, 28–31.

59. Bonilla Romero, J.H. Método Para Generar Modelos Digitales de Terreno con Base en Datos de Escáner láser Terrestre. Ph.D. Thesis, Universidad Nacional de Colombia-Sede Bogotá, Bogotá, Colombia, 2016.

60. Langley, R.B. The UTM grid system. GPS World 1998, 9, 46–50.

| Parameter | Value |
|---|---|
| RGB sensor size | 6.25 mm × 4.68 mm |
| Weight | 25 g |
| Sensor resolution | 12.4 megapixels |
| Lens | FOV |
| Focal length | 20 mm (35 mm format equivalent), f/2.8, focus at ∞ |
| Pixel size | 1.5625 µm |
| Image size (pixels) | 4000 × 3000 |
| Image type | JPEG, DNG (RAW) |
| Temperature | to |
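The pixel size in the table follows from the sensor dimensions and the image size: 6.25 mm divided by 4000 pixels gives 1.5625 µm. The sketch below reproduces that arithmetic and also converts the 35 mm-equivalent focal length into an approximate physical focal length. That conversion assumes the common diagonal-based crop factor with a 36 × 24 mm reference frame; it is a spec-sheet estimate, not a calibrated value, and the function names are illustrative.

```python
import math

# Diagonal of the 36 mm x 24 mm frame used as the "35 mm equivalent" reference.
FULL_FRAME_DIAG_MM = math.hypot(36.0, 24.0)


def pixel_pitch_um(sensor_mm: float, pixels: int) -> float:
    """Pixel pitch in micrometres: one sensor dimension divided by its pixel count."""
    return sensor_mm * 1000.0 / pixels


def physical_focal_mm(equiv_focal_mm: float, sensor_w_mm: float, sensor_h_mm: float) -> float:
    """Approximate physical focal length from a 35 mm-equivalent value.

    Assumption: crop factor = full-frame diagonal / sensor diagonal.
    """
    crop_factor = FULL_FRAME_DIAG_MM / math.hypot(sensor_w_mm, sensor_h_mm)
    return equiv_focal_mm / crop_factor


pitch_w = pixel_pitch_um(6.25, 4000)   # along the 4000 px side
pitch_h = pixel_pitch_um(4.68, 3000)   # along the 3000 px side
f_mm = physical_focal_mm(20.0, 6.25, 4.68)
f_px = f_mm * 1000.0 / pitch_w          # focal length expressed in pixels

print(f"pixel pitch: {pitch_w:.4f} um x {pitch_h:.4f} um")
print(f"focal length: {f_mm:.2f} mm, about {f_px:.0f} px")
```

With these inputs the estimate is about 3.6 mm, or roughly 2310 px; a focal length obtained by calibration can be expected to differ somewhat from such a nominal figure.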

| Parameter | Values |
|---|---|
| Focal length (pixels) | (2.2495 × 10³, 2.2498 × 10³) |
| Principal point coordinates (pixels) | (2.0159 × 10³, 1.5088 × 10³) |
| Skew | −7.2265 |
| Lens distortion | |
| Tangential distortion coefficients | (0.0011, 5.6749) |
| Radial distortion coefficients | (−0.0160, −0.0336) |
| Number of patterns | 16 |
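The calibration parameters describe a pinhole camera with radial and tangential lens distortion. The sketch below shows how such parameters map a point in the camera frame to pixel coordinates, following the radial/tangential formulation documented for MATLAB's camera calibration tools [38]. Assumptions: the focal length and principal point are read as pixel values scaled by 10³ (fx = 2249.5, fy = 2249.8, cx = 2015.9, cy = 1508.8, which places the principal point near the centre of a 4000 × 3000 image); the skew and the second tangential coefficient are set to zero because their magnitudes cannot be recovered from the table; no third radial coefficient is used. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole intrinsics plus two radial (k1, k2) and two tangential (p1, p2) terms."""
    fx: float
    fy: float
    cx: float
    cy: float
    skew: float = 0.0
    k1: float = 0.0
    k2: float = 0.0
    p1: float = 0.0
    p2: float = 0.0


def distort(x: float, y: float, c: Intrinsics) -> tuple[float, float]:
    """Apply radial and tangential distortion to normalized image coordinates."""
    r2 = x * x + y * y
    radial = 1.0 + c.k1 * r2 + c.k2 * r2 * r2
    xd = x * radial + 2.0 * c.p1 * x * y + c.p2 * (r2 + 2.0 * x * x)
    yd = y * radial + c.p1 * (r2 + 2.0 * y * y) + 2.0 * c.p2 * x * y
    return xd, yd


def project(point_cam: tuple[float, float, float], c: Intrinsics) -> tuple[float, float]:
    """Project a 3D point given in the camera frame (Z forward) to pixel coordinates."""
    X, Y, Z = point_cam
    if Z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    xd, yd = distort(X / Z, Y / Z, c)
    u = c.fx * xd + c.skew * yd + c.cx
    v = c.fy * yd + c.cy
    return u, v


# Values read from the calibration table under the assumptions stated above.
cam = Intrinsics(fx=2249.5, fy=2249.8, cx=2015.9, cy=1508.8,
                 k1=-0.0160, k2=-0.0336, p1=0.0011)

print(project((0.0, 0.0, 10.0), cam))   # a point on the optical axis
print(project((2.0, 1.5, 10.0), cam))   # an off-axis point
```

A point on the optical axis lands on the principal point, and a negative k1, as in the table, corresponds to barrel distortion: off-axis points are imaged slightly closer to the principal point than an ideal pinhole camera would place them.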

| Study area | Images (low-cost camera) | Flight lines | Image resolution (cm) | RMSE (pixels/cm) |
|---|---|---|---|---|
| a | 146 | 10 | 2.60 | 1.4 |
| b | 140 | 8 | 1.63 | 1.7 |
| c | 266 | 18 | 2.10 | 1.6 |
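The RMSE column summarises residual errors for each study area. The table does not state which residuals were used, so the sketch below assumes the common case of 2D image residuals, i.e. distances in pixels between observed and predicted (reprojected) point positions. The function name and the sample coordinates are illustrative, not data from this study.

```python
import math


def rmse_2d(observed, predicted):
    """Root-mean-square error of 2D residuals between paired (u, v) points.

    Each residual is the Euclidean distance between an observed point and its
    predicted counterpart; RMSE = sqrt(mean(distance ** 2)).
    """
    if len(observed) != len(predicted) or not observed:
        raise ValueError("need two non-empty lists of equal length")
    squared = [
        (uo - up) ** 2 + (vo - vp) ** 2
        for (uo, vo), (up, vp) in zip(observed, predicted)
    ]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical measurements in pixels, for illustration only.
obs = [(100.0, 200.0), (1500.0, 900.0), (3200.0, 2400.0)]
pred = [(101.0, 201.0), (1499.0, 902.0), (3200.0, 2399.0)]
print(f"RMSE: {rmse_2d(obs, pred):.3f} px")
```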

| Study area | Precision | Overall accuracy |
|---|---|---|
| a | 0.99995 | 0.99994 |
| b | 0.99998 | 0.99998 |
| c | 0.99961 | 0.99961 |
| d | 0.99991 | 0.99998 |
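Precision and overall accuracy are both derived from a confusion matrix. The sketch below assumes rows hold reference (true) classes and columns hold predicted classes, and reports precision as the unweighted mean over classes (macro average). The table does not say which averaging was used, so this is an assumption, and the matrix in the example is invented for illustration.

```python
def overall_accuracy(cm):
    """Fraction of correctly classified samples: trace / total."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def precision_per_class(cm):
    """TP / (TP + FP) for each class; column j holds samples predicted as class j."""
    n = len(cm)
    result = []
    for j in range(n):
        predicted_j = sum(cm[i][j] for i in range(n))
        # Convention (assumption): a class that is never predicted gets precision 0.
        result.append(cm[j][j] / predicted_j if predicted_j else 0.0)
    return result


def macro_precision(cm):
    """Unweighted mean of the per-class precisions."""
    p = precision_per_class(cm)
    return sum(p) / len(p)


# Invented two-class confusion matrix: rows = reference, columns = predicted.
cm = [[50, 2],
      [1, 47]]
print(f"overall accuracy: {overall_accuracy(cm):.4f}")
print(f"macro precision:  {macro_precision(cm):.4f}")
```

Precision can also be weighted by class size, so the same matrix may yield slightly different precision values depending on the averaging convention.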

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Calvario, G.; Sierra, B.; Alarcón, T.E.; Hernandez, C.; Dalmau, O. A Multi-Disciplinary Approach to Remote Sensing through Low-Cost UAVs. Sensors 2017, 17, 1411. https://doi.org/10.3390/s17061411
