Emotional Self-Regulation of Individuals with Autism Spectrum Disorders: Smartwatches for Monitoring and Interaction

Abstract

1. Introduction

1.1. Autism Spectrum Disorders and Emotions

1.1.1. Self-Determination and Executive Dysfunction

1.1.2. Do I Need Assistance?

1.2. Autism Spectrum Disorders and Technology

1.2.1. Acceptance and Social Stigma

1.2.2. The Role of Caregivers and Family

1.3. Autism Spectrum Disorders and Intervention Strategies

Procedures and Media

1.4. From Inward State to Interaction

1.4.1. What Is Inward State?

1.4.2. Wearables as Translators of Inward State

1.4.3. Smartwatches: Towards Acceptance

1.5. Taimun-Watch

1.5.1. The System

1.5.2. Wearable Paradigm

- Standalone apps: they run on the smartwatch itself and do not need to interact with smartphones, tablets, or other supporting devices (e.g., Endomondo for Android Wear or Apple Pay for watchOS).

- Smartphone-dependent apps: they are distributed as smartphone apps but include a smartwatch module that complements the system (as a notification reader, alarm, or reminder). They are usually existing smartphone apps to which smartwatch functionality has been added to keep pace with new devices, and they are often called cross-device apps (e.g., Google Keep for Android Wear, iTunes for watchOS).

- Dual apps: they are designed so that the smartphone and the smartwatch work together, and neither half can work standalone (e.g., PixtoCam for Android Wear, Slopes for watchOS).
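The three paradigms can be read as a decision rule over which half of an app is able to run on its own. The following is a minimal Python sketch of that rule; the enum and the two boolean flags (`watch_runs_alone`, `phone_runs_alone`) are hypothetical names introduced only for illustration and are not part of Taimun-Watch or of any platform API.

```python
from enum import Enum


class WearableAppType(Enum):
    STANDALONE = "standalone"
    SMARTPHONE_DEPENDENT = "smartphone-dependent"
    DUAL = "dual"


def classify_app(watch_runs_alone: bool, phone_runs_alone: bool) -> WearableAppType:
    """Map two hypothetical 'can this half run by itself?' flags to a paradigm."""
    if watch_runs_alone:
        # Runs on the watch without a smartphone, tablet or other supporting device.
        return WearableAppType.STANDALONE
    if phone_runs_alone:
        # A complete smartphone app whose watch module only complements it.
        return WearableAppType.SMARTPHONE_DEPENDENT
    # Neither half works on its own: phone and watch must cooperate.
    return WearableAppType.DUAL


if __name__ == "__main__":
    print(classify_app(watch_runs_alone=False, phone_runs_alone=False))
```

For example, a notification reader bundled with a phone app would be `classify_app(False, True)`, i.e., smartphone-dependent.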

1.5.3. Smartwatch: Assistive Device

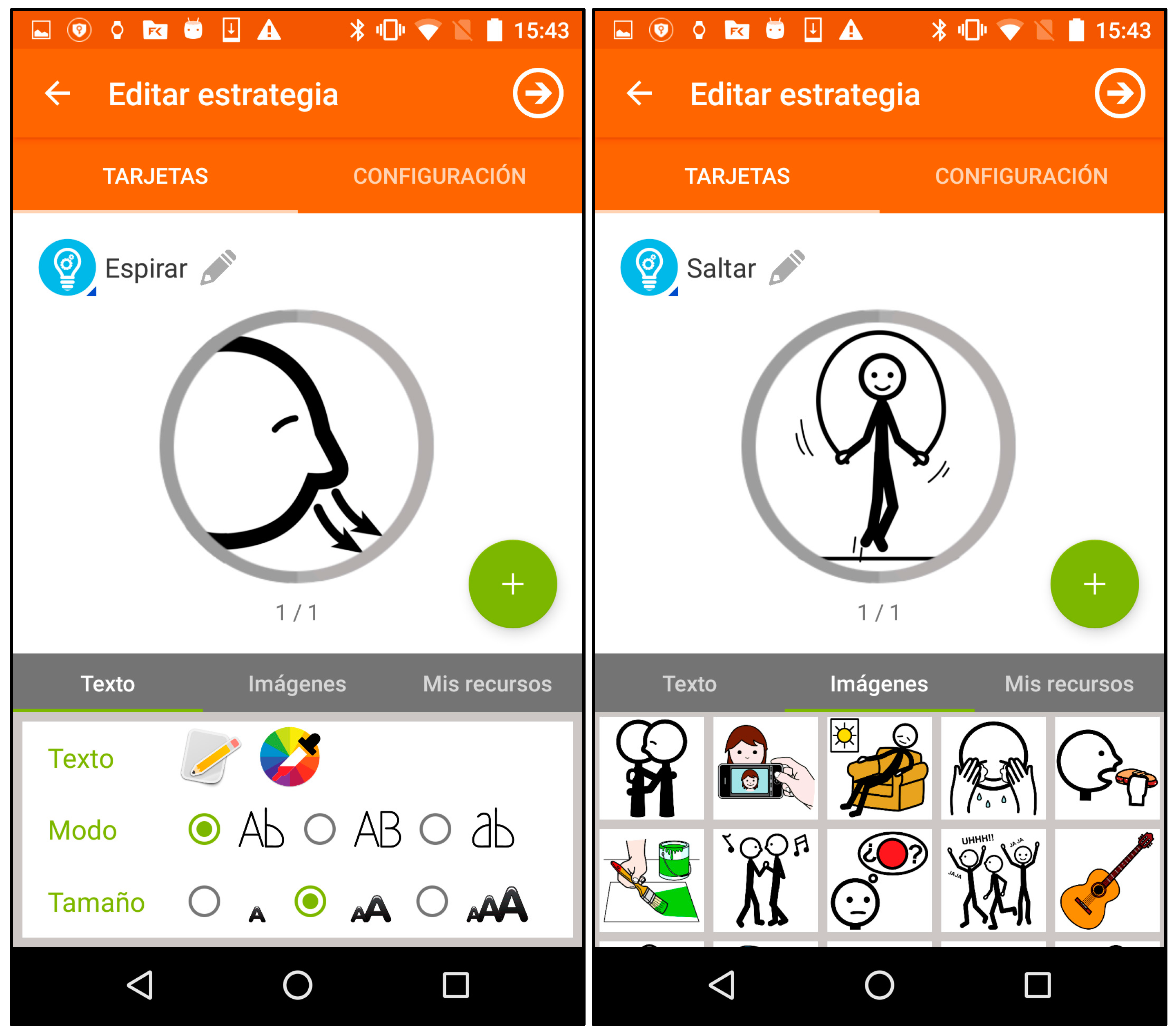

1.5.4. Smartphone: Authoring Tool

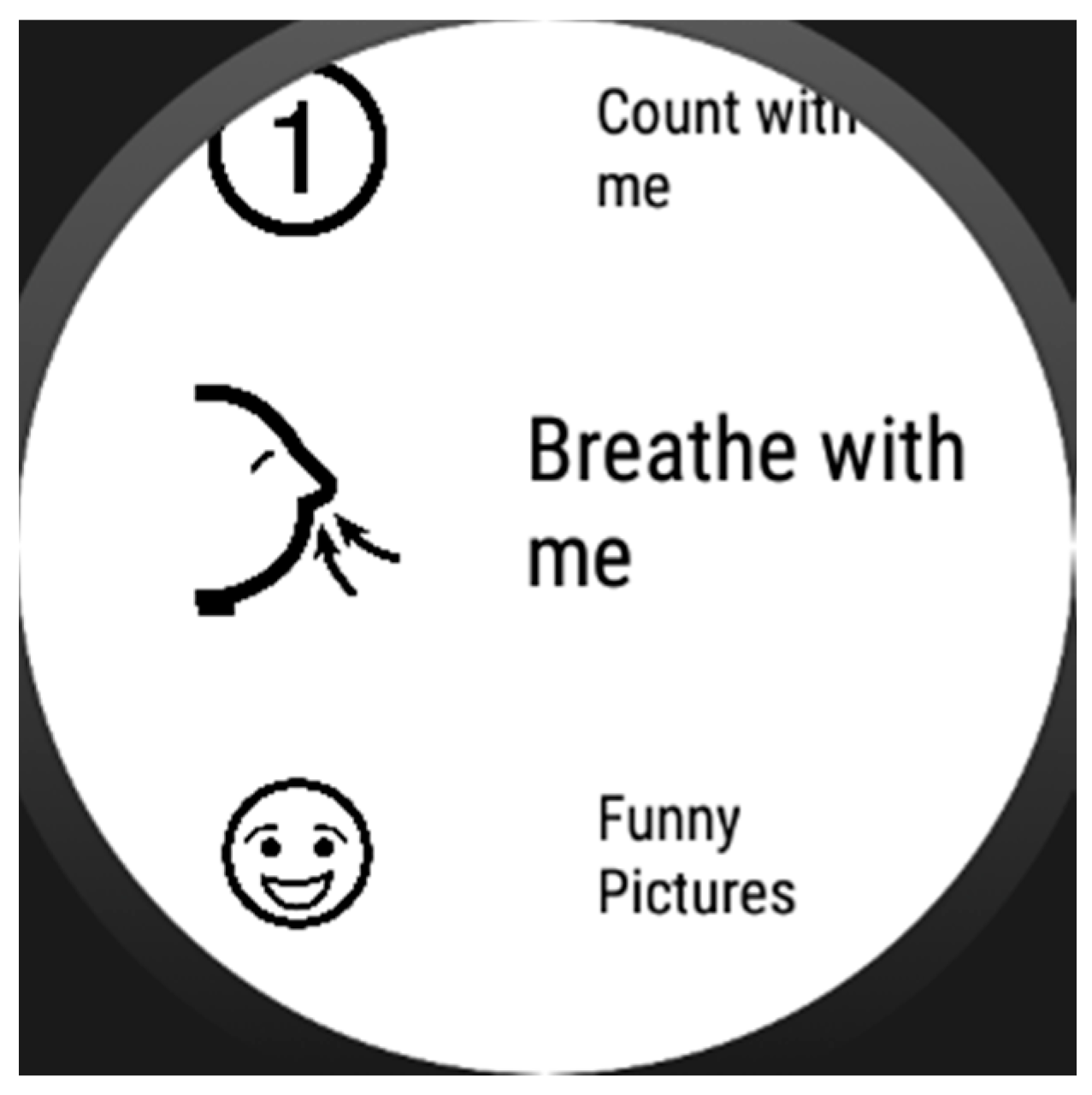

1.5.5. Self-Regulation Strategies

1.5.6. Use Cases

1.5.7. Contribution

2. Materials and Methods

- (a) Study how executive dysfunction manifests in the individual.

- (b) Analyze the effects of emotional dysregulation on the individual’s behavior.

- (c) Analyze the interventions that caregivers previously employed in those cases (if any).

- (d) Adapt those interventions, case by case, to the tool’s format.

- (e) Test the tool with the individual while thoroughly observing the effects of the intervention.

- (f) Analyze all data gathered after each session.

2.1. Users

- Lack of organizational skills.

- Disproportionate attention to irrelevant aspects of a given task.

- Difficulty keeping an instruction in mind while inhibiting a problematic response.

- Lack of abstract and conceptual thinking.

- Literal comprehension of a given problem.

- Strong difficulty coping with changes in the environment in which certain tasks are performed.

- Lack of initiative in problem solving.

- Lack of knowledge transfer between tasks.

- Insertion of pointless activity between instructions.

2.2. Materials

2.3. Methodology

- Ground-truth problem: prior to the experiment, we gathered sensor data from the individuals while they wore the smartwatch, and their caregivers used smartphone annotation software to report outbursts, temper tantrums, and anger episodes. Machine learning on these data, though useful for other purposes, misses episodes that have no visible manifestation. For instance, user A showed episodes of fear and stress that manifested only subtly, such as slightly paler skin and lip tightening; caregivers’ reports would not have captured them during ground-truth data gathering.

- Underrepresentation: even if the ground-truth data gathering were perfectly accurate, it would contain far fewer time windows labeled “stress-positive” than windows in which the user is calm and does not need assistance. This class imbalance severely limits the reliability of any classifier trained on such data.
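Both issues can be made concrete with a small worked example. The Python sketch below assumes fixed-length time windows that are labeled positive when they overlap a caregiver-annotated episode; the windowing scheme, the 30 s window length, the session length, and the episode times are all hypothetical and are not the procedure or data used in this study. It then scores a trivial classifier that always predicts “calm”.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Episode:
    """A caregiver-annotated episode (e.g., an outburst), in seconds from recording start."""
    start: float
    end: float


def label_windows(duration: float, window: float, episodes: list[Episode]) -> list[int]:
    """Label each fixed-length window 1 if it overlaps an annotated episode, else 0.

    Episodes the caregiver never annotated (e.g., subtle signs of stress)
    silently end up labeled 0, which is the ground-truth problem.
    """
    n_windows = int(duration // window)
    labels = []
    for i in range(n_windows):
        w_start, w_end = i * window, (i + 1) * window
        overlaps = any(ep.start < w_end and ep.end > w_start for ep in episodes)
        labels.append(1 if overlaps else 0)
    return labels


def always_calm_baseline(labels: list[int]) -> tuple[float, float]:
    """Accuracy and recall of a trivial classifier that always predicts 'calm' (0)."""
    positives = sum(labels)
    accuracy = (len(labels) - positives) / len(labels)
    # It never predicts a positive window, so it detects none of them.
    recall = 0.0 if positives else float("nan")
    return accuracy, recall


if __name__ == "__main__":
    # Hypothetical 2-hour session, 30 s windows, two annotated outbursts.
    labels = label_windows(7200, 30, [Episode(600, 660), Episode(3000, 3090)])
    acc, rec = always_calm_baseline(labels)
    print(len(labels), sum(labels), round(acc, 3), rec)  # 240 5 0.979 0.0
```

With these made-up numbers, only 5 of 240 windows are stress-positive, so a classifier that never flags stress is right about 98% of the time while detecting none of the episodes. This is why recall-oriented metrics are more informative than raw accuracy on such data.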

3. Results

- Does the self-regulation assistance from the smartwatch help the user regain a state of calm?

- Can the user interact with the device so that the assistance is delivered and the strategies are completed?

- What level of autonomy can they achieve with the system? Are they able to use it themselves?

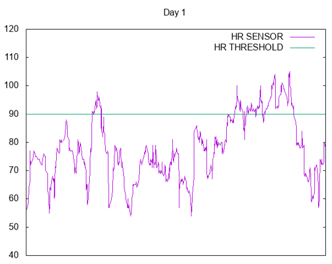

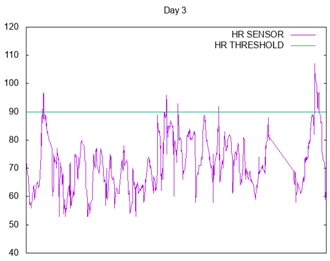

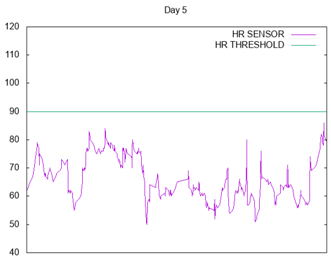

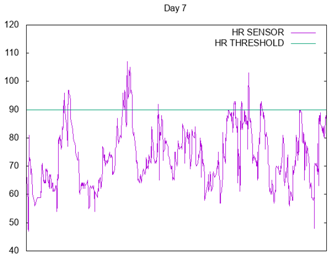

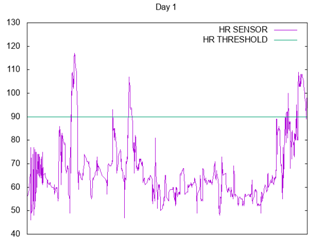

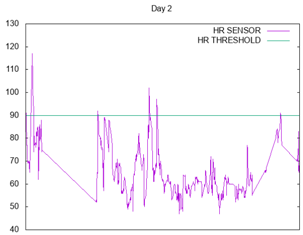

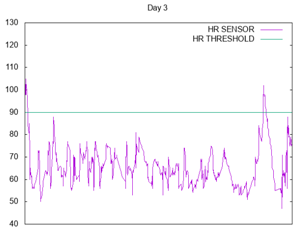

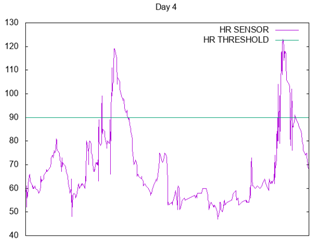

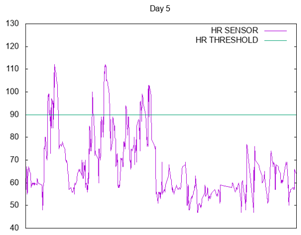

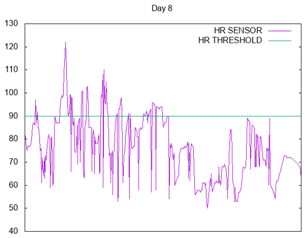

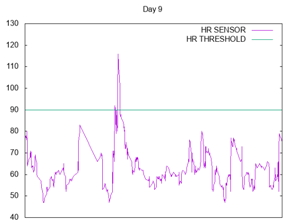

3.1. User A

3.2. User B

4. Conclusions

5. Future Work

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A


Appendix B



| Self-Regulation Strategy | Implementation |

|---|---|

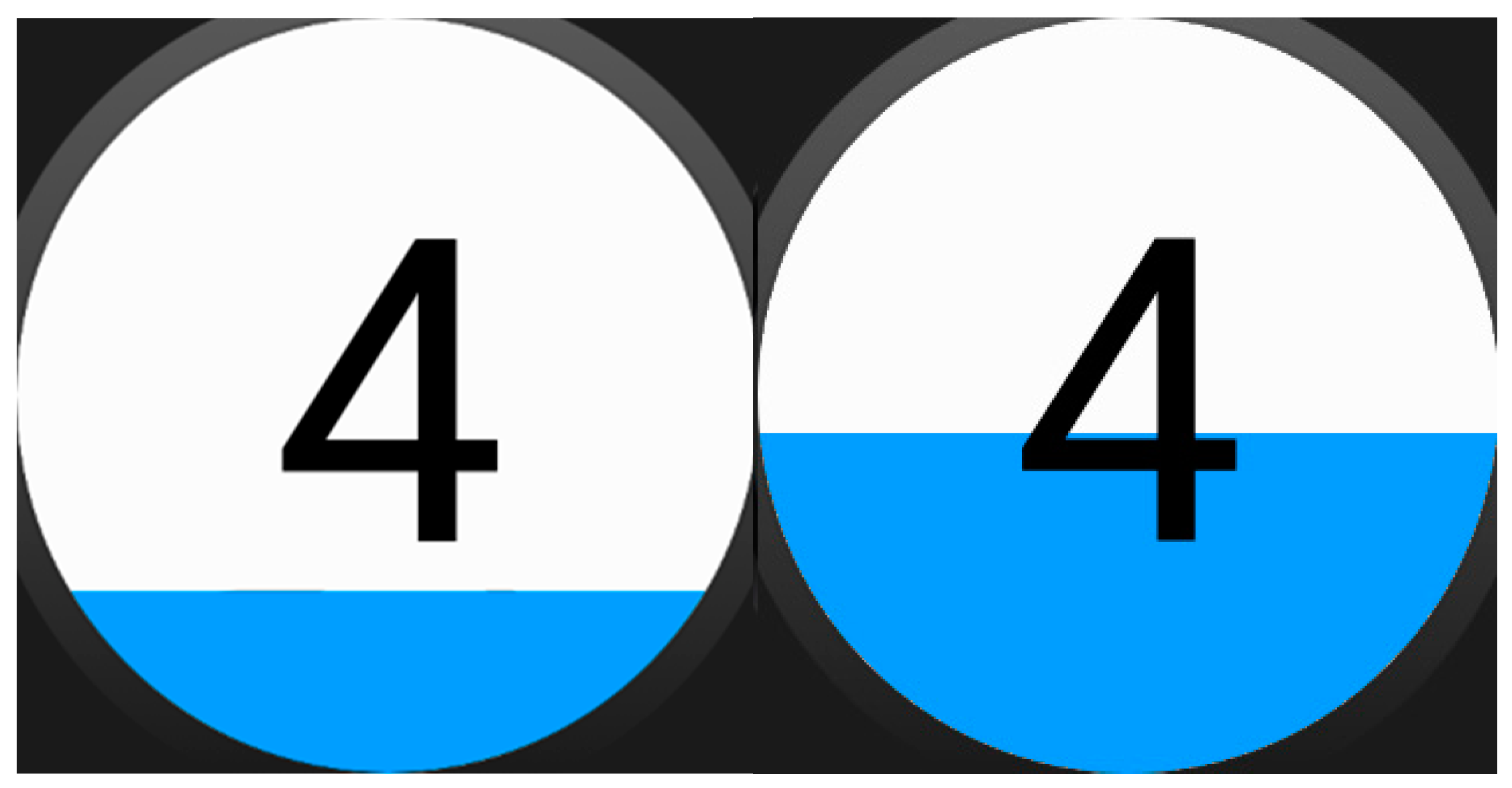

| Counting numbers | Sequence of (numbers/picture representation of numbers/picture representation of quantities). It has timeout if the counting is automatic, it does not if the user is intended to touch the screen with each number |

| Sitting and relaxing | Sequence of pictograms telling the user to sit and relax |

| Grasping a certain object | Sequence of pictograms telling the user to look for the object and grasp it |

| Going for a walk | Sequence of pictograms and animated GIFs telling the user to walk or run |

| Asking an adult for help | Sequence of pictograms telling the user to look for an adult, combined with other timed strategy meanwhile |

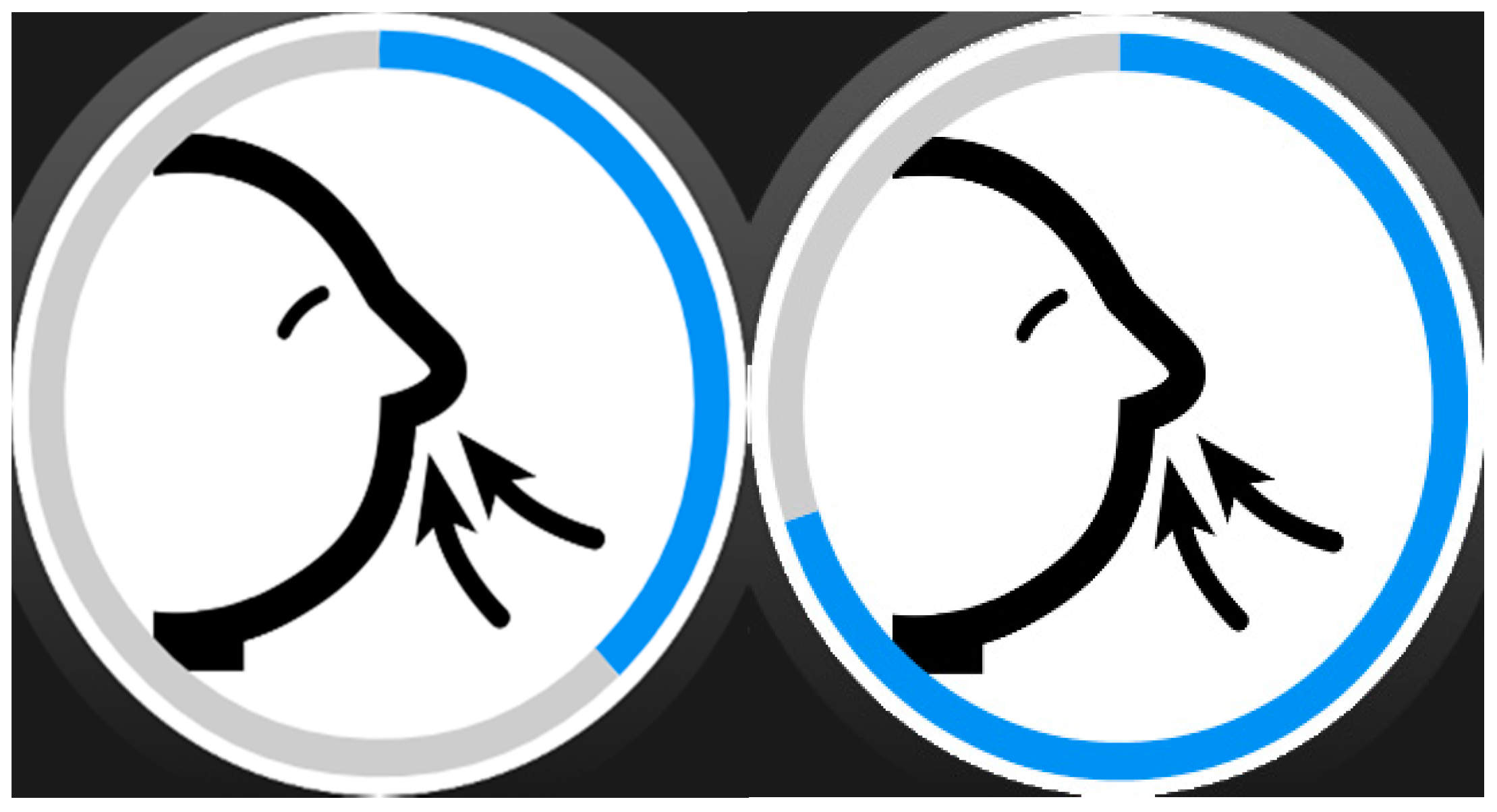

| Two-phase breathing | Sequence of pairs of timed pictograms with indications of breathing in and out |

| Asking for a hug | Sequence of pictograms telling the user to look for an adult and asking him for a hug, combined with other timed strategy meanwhile |

| Looking funny/relaxing pictures | Sequence of such images, with or without timing |
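Every strategy in the table reduces to an ordered sequence of pictogram steps, each of which either advances after a timeout or waits for the user to touch the screen. A minimal Python sketch of such a representation follows; the class names, pictogram identifiers, and default durations (1 s per number, 4 s per breathing phase) are hypothetical and are not taken from the Taimun-Watch implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Step:
    pictogram: str                     # hypothetical image identifier
    timeout_s: Optional[float] = None  # None: wait for the user to touch the screen


@dataclass
class Strategy:
    name: str
    steps: list[Step] = field(default_factory=list)

    def timed_duration(self) -> float:
        """Seconds the strategy takes if every timed step runs to its timeout."""
        return sum((s.timeout_s for s in self.steps if s.timeout_s is not None), 0.0)

    def requires_touch(self) -> bool:
        """True if at least one step only advances on user input."""
        return any(s.timeout_s is None for s in self.steps)


def counting_strategy(upto: int, automatic: bool, seconds_per_number: float = 1.0) -> Strategy:
    """Counting numbers: timed when automatic, touch-driven otherwise."""
    timeout = seconds_per_number if automatic else None
    steps = [Step(f"number_{n}", timeout) for n in range(1, upto + 1)]
    return Strategy("Counting numbers", steps)


def breathing_strategy(cycles: int, inhale_s: float = 4.0, exhale_s: float = 4.0) -> Strategy:
    """Two-phase breathing: pairs of timed 'breathe in' / 'breathe out' pictograms."""
    steps: list[Step] = []
    for _ in range(cycles):
        steps.append(Step("breathe_in", inhale_s))
        steps.append(Step("breathe_out", exhale_s))
    return Strategy("Two-phase breathing", steps)


if __name__ == "__main__":
    print(breathing_strategy(3).timed_duration())  # 24.0
```

Keeping the timeout optional per step lets a single structure cover both automatic and touch-driven variants of the same strategy, such as the two forms of counting described in the table.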

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Torrado, J.C.; Gomez, J.; Montoro, G. Emotional Self-Regulation of Individuals with Autism Spectrum Disorders: Smartwatches for Monitoring and Interaction. Sensors 2017, 17, 1359. https://doi.org/10.3390/s17061359
