Abstract

An adaptive optics (AO) system provides real-time compensation for atmospheric turbulence. However, an AO image is usually of poor contrast because of the nature of the imaging process, meaning that the image contains information coming from both out-of-focus and in-focus planes of the object, which also brings about a loss in quality. In this paper, we present a robust multi-frame adaptive optics image restoration algorithm via maximum likelihood estimation. Our proposed algorithm uses a maximum likelihood method with image regularization as the basic principle, and constructs the joint log likelihood function for multi-frame AO images based on a Poisson distribution model. To begin with, a frame selection method based on image variance is applied to the observed multi-frame AO images to select images with better quality to improve the convergence of a blind deconvolution algorithm. Then, by combining the imaging conditions and the AO system properties, a point spread function estimation model is built. Finally, we develop our iterative solutions for AO image restoration addressing the joint deconvolution issue. We conduct a number of experiments to evaluate the performances of our proposed algorithm. Experimental results show that our algorithm produces accurate AO image restoration results and outperforms the current state-of-the-art blind deconvolution methods.

1. Introduction

Because of the turbulent flow field, an imaging system with adaptive optics (AO) is interfered by heat, thermal radiation, and image transmission. The observed image contains pointing jitters and is fuzzy, and this effect is known as the aero optical effect [1,2]. However, the AO correction within the system is often only partial, and the long-exposure images must be restored by postprocessing, where a deconvolution is required for reaching the diffraction limit. The AO images in a real system are usually represented by the convolution of an ideal image with a point spread function (PSF) [3]. The AO image is also contaminated by other noises, such as read-out, photon counting, multiplicative, and compression noise [4,5]. In most practical applications, however, finding the real PSF is impossible and an estimation must be carried out. Because the observed AO image is corrupted by various sources of noise, the estimation of both the object image and the PSF should be carried out at the same time, and this process is called blind deconvolution [6].

Blind deconvolution is also very sensitive to the noise present in the observed images [7,8]. To handle this problem, researchers have been making efforts in developing AO image restoration algorithms under various conditions. Using a total variation (TV) prior on the variational Bayesian blind deconvolution algorithm (VBBD-TV algorithm), Babacan et al. [9] developed an algorithm which shows good restoration performance for total variation based blind deconvolution and parameter estimation utilizing a variational framework. Katsaggelos and Lay proposed a maximum likelihood (ML) blur identification and image restoration method using the Expectation-Maximization algorithm (ML-EM algorithm) [10], which uses no knowledge about the type of distortion or its support region during iteration.

Zhu et al. presented an adaptive algorithm for image restoration using combined penalty functions (CPF-adaptive algorithm) [11]. This algorithm is adaptive and is used to estimate the smoothing and roughness parameters. The advantage of this method is that it is able to remove noise at the same time when restoring the image information around edges.

Zhang et al. proposed a multi-frame iterative blind deconvolution algorithm based on an improved expectation maximization algorithm for AO image restoration (RT-IEM algorithm) [12], which represents a more complex function to minimize. This algorithm contains a cost function for the joint-deconvolution of multi-frame AO images, and it estimates the regularization terms. The major limitation for this algorithm is its computational speed. Robust blind deconvolution methods optimize a data fidelity term, which can be stabilized by some additional regularization terms. Yap and Guan [13] proposed an approach for adaptive image regularization based on a neural network and a hierarchical cluster model. The main advantage of the method is that the empirical relationship between the optimized regularization vector and the local perception measure can be reused in the restoration of other degraded images. The major limitation for the algorithm is its convergence speed.

Although a number of blind deconvolution methods have been proposed, a good knowledge of the PSF is always desirable in order to achieve an accurate restoration. For example, Deforest et al. [14] modeled the scattering portion of the transition region and coronal explorer (TRACE) PSF as the sum of a measured diffraction pattern and a circularly symmetric scattering profile. In addition to constructing a parametric model of the PSF using the specification instrument, Poduval et al. modeled the PSF with a diffraction kernel and an isotropic scattering term representing stray light [15]. In these works, the parametric methods have the limitation that they depend on a particular instrument and the PSF models need to be available [16].

Our goal in this paper is to propose a new deconvolution scheme based on our frame selection method and the initial PSF estimation approach. The frame selection method is used to choose the better quality images from the multi-frame short exposure image sequence, which is initially corrected by the AO system. We take the variance of the AO image as the evaluation method for frame selection. The turbulence effect of an AO imaging system refers to the fact that when a reference star is regarded as the center for wavefront detection, the fluctuation of wavefront is no longer consistent with the detected wavefront for the regions that are beyond an isoplanatic angle. If this effect exists for an imaging process, it affects the restoration of AO images. Therefore, we develop the method for initial PSF estimation, which is a parametric method based on the physics of the instrument, and we provide a more precise PSF model containing some instrument’s properties. The initial estimation of the PSF model takes into account the a priori information on the object and the AO system parameters. A theoretical expression of the PSF is derived. The proposed PSF initial estimation is also evaluated by sensitivity analysis techniques and an error analysis is carried out based on simulation results (with and without noise).

The outline of our paper is as follows. Section 2 describes the frame selection method and the PSF model. Section 3 presents our new algorithm for joint blind deconvolution based on Poisson distribution and gives several methods for improved ML estimation and regularization. The restoration algorithm is applied to real AO images and the results are presented in Section 4. Finally in Section 5 we conclude.

2. Frame Selection Method and PSF Model

2.1. AO Image Degradation Model

Affected by atmospheric turbulence, observed images from astronomical imaging and remote sensing often suffer from image quality degradation. The readout noise satisfies an additive Gaussian model [17], and the degradation process can be represented as

where is the spatial coordinate in the image, is the original image, refers to the PSF, represents the degraded image acquired by the image sensor, is the noise, is the region of the object image, and ⊗ stands for the convolution operation.

A multi-frame AO image degradation model can be represented as

where denotes the kth frame of the observed AO images, represents its corresponding PSF, and M is the total number of frames. In this paper, we use the sequence of the degraded multi-frame images of the same object to restore the original image o.

2.2. Frame Selection Technique Based on Variance

In an experiment, the system can collect thousands of images for the same object. Interfered by atmospheric turbulence with various strengths, the AO system compensates the turbulence effects, and the image restoration results are directly affected by each frame. We select better quality frames in a sequence of images for image restoration [18].

A frame selection technique is used to select the high quality images from the multi-frame images that are initially corrected by an AO system. There are a number of evaluation criteria that can be used to evaluate the quality of degraded images. In this paper, we use the variance of an image as the frame evaluation method. The variance of the kth frame of an observed degraded image can be calculated by

where and represent the width and height of the observed image, respectively, and refers to the mean of an observed image

The mean of variance of the multi-frame observed images is defined as

The constraint for frame selection is defined as

where is the threshold for frame selection. In this method, the variance value of the degraded image should be close to the mean of variance . Note that can be higher than or lower than it. We only keep those images with variance values in the middle. Images with too low variance will be removed, and images with too high ones will also be removed.

The variance for the observed multi-frame images is defined as a sequence of data samples , and the variance of the data samples is

In the frame selection method, we choose the threshold T according to the variance . In the frame selection process, we have carried out a lot of experiments and chosen different T values for obtaining high quality images from the collected thousands of degraded AO images for the same object. Finally, we found that the threshold is approximately 2–3 times of , which can obtain better frames in a sequence of degraded AO images.

The frame selection process is based on an iterative algorithm. That is, when a better frame has been selected, the threshold T is recalculated. Then, the remaining frames will be checked by this method until the constraint is satisfied. The frame selection algorithm is shown in Algorithm 1.

| Algorithm 1 Frame selection |

|

In Algorithm 1, the threshold was estimated experimentally by running the frame selection method with different Ts and selecting the one with the most visually acceptable results.

2.3. PSF Model

According to Veran et al. [19], the wavefront phase that is corrected by the AO system is still a quasi-stationary random process. The optical transfer function (OTF) of an AO system is defined as

where f is the focal length of the imaging system, is the phase structure function of the wavefront, and its calculation formula can be expressed as

where denotes the expectation over turbulence realizations, z is a space vector within the telescope pupil, is a space interval vector within the telescope pupil, and denotes the residual wavefront phase after an AO system correction.

According to the analysis in [20,21], the residual wavefront phase structure function is the sum of an isoplanatic and an anisoplanatic structure functions, which is defined as

where is the off-axis angle interval, represents the on-axis isoplanatic structure function, and represents the anisoplanatic structure function, which is defined as

According to Zernike polynomial theory and assuming that an AO system can fully compensate for the first n order Zernike polynomials, the residual wavefront phase of isoplanatic and residual wavefront phase of anisoplanatic are expressed as [22]

where and represent the coefficients of the on-axis and off-axis Zernike polynomial, respectively, and is the jth order Zernike polynomial.

Under the anisoplanatic situation, the OTF of the imaging system is defined as

Similarly, modifying Equation (8) and the atmospheric environment, an OTF of an AO system is defined as

where is an OTF for the anisoplanatic effect.

Therefore, an OTF of the whole AO system is

where is the telescope diffraction-limited OTF, which is [20]

where D is the telescope diameter, and will return a circle mask array with a circle of radius and the default array size is with a central location at the middle of the array.

Modifying Equation (11) according to [11], we obtain the expression for the anisoplanatic structure function, which is

where , is the angular correlation function between coefficients and of the Zernike polynomial, and are functions defined as [21]

where is the telescope pupil function.

Based on Equations (13), (15) and (17), the OTF of an AO imaging system can be calculated. Therefore, the OTF can be transformed to PSF by the inverse Fourier transform, which is

In this paper, we adopt the above method to rebuild the AO-PSF estimated model as an initial estimation for the AO image restoration. Considering that the isoplanatic angle is , it is equivalent to a square isoplanatic area with . Assuming that the PSF is with circular symmetry, we build the PSF model when the field-of-view is , and the grid is . Based on Equation (1) for the image deconvolution reconstruction, we will describe our AO image restoration method in Section 3.

3. Joint Blind Deconvolution Algorithm Based on Poisson Distribution

3.1. Estimators with Poisson Statistics

A blind deconvolution algorithm is to estimate both the object and the PSF at the same time. The noise in an AO image is mainly photon noise that has a Poisson distribution. However, an AO astronomy image is dominated by a quite homogeneous background [23,24]. The intensity convolution based on the Shell theorem describes the non-coherent imaging system, the object image which is a nonnegative function, and the PSF (D is the region of the observed image) which is affected by atmospheric turbulence. Then, we define the object intensity at point in the kth frame as [25]

where , and is the region of the object image. In fact, the value of cannot be detected perfectly because it is always contaminated by some noise.

Considering the cases where the degraded image with atmosphere turbulence has noise, the intensity at each point in the observed image is a random variable that follows an independent Poisson distribution. The ML estimator based on Poisson distribution can be expressed as [23,25]

The intensity in Equation (20) is taken into account in the calculation of the ML estimator as in Equation (21), which is

Assuming that the pixels of the observed image are independent from each other [15], the joint likelihood function can be defined as

3.2. Algorithm Implementation for AO Image Restoration

To estimate and , an iterative deconvolution algorithm can be used. The and are based on the maximum likelihood estimation criterion, and the cost function can be written as

Taking the logarithm on both sides of Equation (23), the log-likelihood function is defined as

It is assumed that the M observed images , , ⋯, , are statistically independent [26], and the log-likelihood function of the multi-frame joint estimator is expressed as

However, it has been shown that the restoration algorithm does not converge to the solution because the noise is amplified during iterations [25]. To avoid excessive noise amplification, regularization on the cost function is required. We combine Equation (27) with regularization parameters to establish the cost function for joint deconvolution for multi-frame AO images, which is

where the second term is a regularization term that can be defined as

and

where has a variable regularization coefficient associated with the gradient of each point with [12,27]; parameter is the smoothing coefficient; and is the gradient for point , where v is a gray pixel value.

In order to minimize the cost function, Equation (28) can be differentiated with respect to and , and then make the differentiation equal to zero, which is

In order to take the derivative easily, can be formulated by Equation (20), and then brought into Equation (26). According to Equations (26), (28), and (31), the derivation process is

With the condition of image energy conservation, the sum value of each frame PSF of the turbulence degraded images is 1, namely . According to conservation of energy, the image energy before and after degradation remains unchanged, that is

Therefore, an iterative relationship can be established as follows:

where and are the results of the nth iteration.

In order to take the derivative easily, can be formulated by Equation (20). According to Equations (28) and (32), the solution is

Because the object image is within the support region of the observed image, the original object image is normalized in advance, namely . Therefore, within the support region for the observed image, the energy sum value for the original object images is 1, which is

When the object is estimated by Equation (35), we can build the iterative relationship of the new PSF, , which is

where is a constant.

In summary, the object image and the estimation of the PSF model, , are obtained by multiple iterations of Equations (35) and (38). The specific implementation steps for our algorithm are summarized in Algorithm 2.

| Algorithm 2 Steps for our proposed restoration algorithm |

|

4. Experimental Results

In this section, we use simulated images and a set of real AO images to verify our proposed restoration algorithm. We present experimental results on AO images taken by a 1.2 m AO telescope (Yunnan Observatory, Yunnan, China) which uses the Hartmann–Shack wavefront sensor from the Chinese Academy of Sciences at Yunnan Observatory on 3 December 2006. The second set of AO images are from the observations of celestial bodies on 13 January 2007. The values of the main parameters for the AO imaging system are shown in Table 1.

Table 1.

The values of the main parameters for an AO imaging system.

In order to verify the restoration effect and the reliability of our algorithm, we implement it using MATLAB 6.5 (The MathWorks, Natick, MA, USA) and test it on a 2.5 GHz Intel i5-2525M CPU (Intel Corporation, Santa Clara, CA, USA) with 4.0 GB of RAM running on a 32 bit Windows 7 operating system (Microsoft Corporation, Redmond, WA, USA).

In order to evaluate the image restoration result, we adopt the objective evaluation criteria Normalized Mean Square Error () [28] and Full Width at Half Maximum () [29] in this paper. The is defined as

is an estimator of the overall deviations between the original image and the object image. A lower value indicates that the deviation between the original image and the object image is small and a better restored image quality is obtained.

is a parameter commonly used to describe the width of a “bump” on a curve or function. It is given by the distance between points on the curve at which the function reaches half its maximum value. For a point-like object in an astronomical image, is two times that of the pixel distance between the image peak value and half its peak value [29]. It is used to measure for the quality of an image in astronomical observations. The components along the x- and y-directions of an image are used for evaluation in astronomical observations with the following formula:

where and represent the peak pixels for the on the x- and y-directions, respectively. The closer the value of is to the optical diffraction limit for the AO imaging system, the better it is for the image restoration quality.

4.1. The Restoration Experiment on Simulated Images

In the simulation experiments in this paper, the original images (“House” with 256 × 256 pixels, “Chemical Plant” with 256 × 256 pixels, and “The Little Girl” with 256 × 256 pixels) are selected from [30]. Ten frames of simulation degraded images are generated by image degradation software from the Key Optical Laboratory of the Chinese Academy of Sciences [25], and then Gaussian white noise is added and the signal-to-noise ratio (SNR) of the images is set to be 20 dB, 25 dB, and 30 dB with real AO imaging conditions including atmospheric turbulence. The equivalent parameters for the four layers turbulence model are the same as those in Reference [22], and we set the parameters to be the same as the 1.2 m AO telescope at the observatory in Yunnan, China. The main parameters of the telescope imaging system are the atmospheric coherence as shown in Table 1. The simulated degraded images are assumed to be at certain distances so that one arcsecond imaging angle corresponds to degradation of a certain linear scale. Experiments on the ML-EM algorithm [10], the CPF-adaptive algorithm [11], the RT-IEM algorithm [12], the VBBD-TV algorithm [9], and our algorithm are compared.

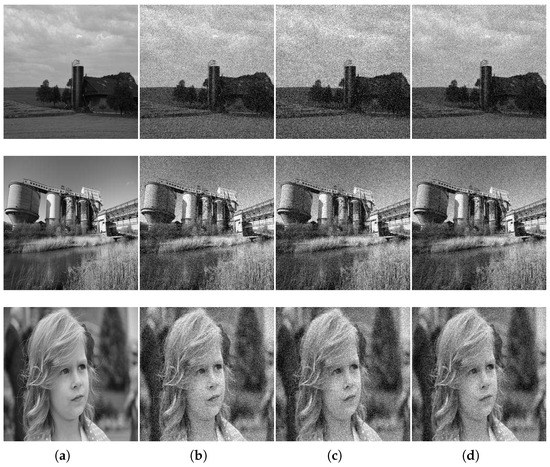

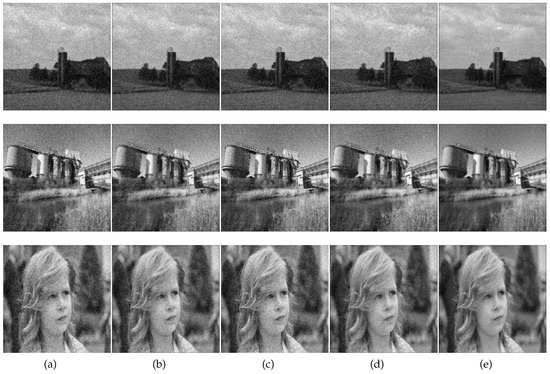

Figure 1 is the original image and simulated multi-frame degradation images. Figure 1a is the original images, and example frames with turbulence and noise are shown in Figure 1b–d (to save space, only three frames are shown). The comparison results based on the five algorithms are shown in Figure 2. In our algorithm, the parameters and were selected experimentally for visually acceptable results. Table 2 gives the results of our algorithm and those of the ML-EM [10], CPF-adaptive [11], RT-IEM [12], and VBBD-TV [9] methods, and the number of iterations for the four algorithms is 300. Our algorithm ranks on the top of the list, and the results demonstrate the superiority of our method.

Figure 1.

The original images and the simulated multi-frame degradation images. (a) input images of the datasets; (b) images degraded by the 5 × 5 PSF at SNR of 20 dB under ideal conditions; (c) images degraded by the 5 × 5 PSF at SNR of 25 dB under ideal conditions; (d) images degraded by the 5 × 5 PSF at SNR of 30 dB under ideal conditions.

Figure 2.

The comparison results of the restored images based on four algorithms (with 300 iterations). (a) by the ML-EM algorithm; (b) by the CPF-adaptive algorithm; (c) by the RT-IEM algorithm; (d) by the VBBD-TV algorithm; (e) by our algorithm with and .

Table 2.

Comparison results on and running time of different restoration algorithms.

4.2. Restoration Experiments on Binary-Star AO Images

For adaptive optics compensated images, the degradation is dominated by the residual fine-scale wavefront error, which requires several parameters to characterize and varies from frame to frame. Now, we show the restoration results of our proposed algorithm on binary-star AO images that were taken by a 1.2 m AO imaging system on 3 December 2006. The adaptive optics bench is a system with 88 actuators, with a Shack–Hartman wave-front sensor (64 sub-apertures). The values of the main parameters for the AO imaging system are shown in Table 1. The estimated PSF model for an AO image is under the following conditions: the full field-of-view for the system is ; the Zernike model for the fully corrected turbulence effect is with the first 35 orders; the field-of-view is ; the size of the space-variant PSF is pixels; and the isoplanatic angle is . The AO image restoration experiments based on the ML-EM algorithm [10], the CPF-adaptive algorithm [11], the RT-IEM algorithm [12], the VBBD-TV algorithm [9], and our algorithm are compared.

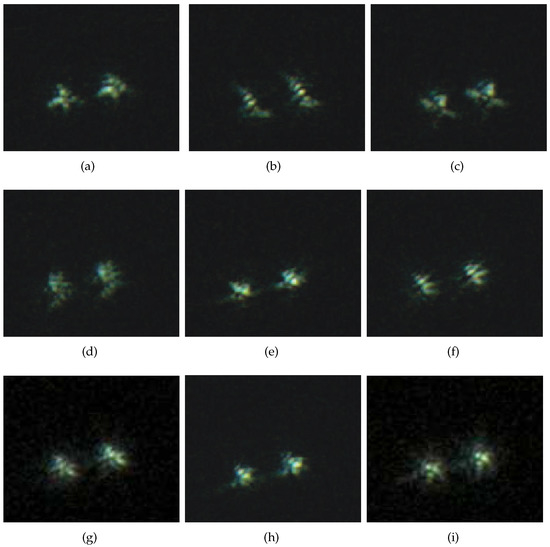

We apply our frame select technique, which is introduced in Section 2.2, and select 50 frames from 200 of the observed AO images as the input images for blind convolution. Figure 3 shows the observed multi-frame degraded binary-star AO images (only nine frames are shown). In order to obtain better restoration results, our frame selection technique is used to select a group of high-quality degraded AO images to improve the stability of the algorithm.

Figure 3.

Nine frames of binary-star images by an AO system and their variances. (a) Frame 1 with variance ; (b) Frame 2 with variance ; (c) Frame 3 with variance ; (d) Frame 4 with variance ; (e) Frame 5 with variance ; (f) Frame 6 with variance ; (g) Frame 7 with variance ; (h) Frame 8 with variance ; (i) Frame 9 with variance .

In Figure 3, frame selection experiment based on image variance is carried out for binary-star AO images. The variance of each degraded image is calculated using Equation (5). The size of the binary-star AO image is pixels.

The variance value for each observed AO image is given in the caption of Figure 3. The variance values show that most of the variance values for the images are close to each other, and their values are between 500 and 570. While affected by Gaussian noise, the variance of the 3rd frame is 566.23, which is higher than the others. The variance of the 7th frame is influenced by the PSF blurring effect and it is 336.34, so its value is much lower than that of the other images. Therefore, the 3rd and 7th frames should be eliminated for estimation purposes.

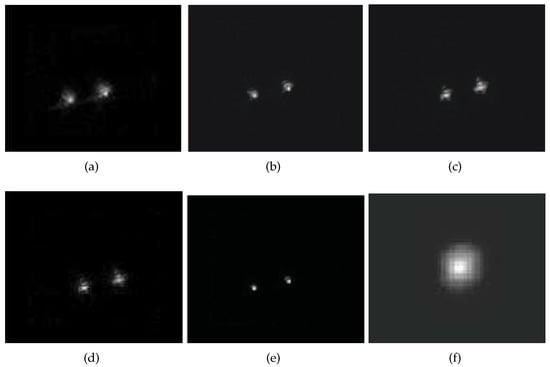

To test the performance of our proposed algorithm, image restoration experiments were carried out, and the selected AO images for the experiments were shown in Figure 3 (Frames 1, 2, 4, 5, 6, 8, 9 were selected in the experiment). We choose , and the parameter for the regularization function is with 300 iterations. Figure 4 shows the restoration results on degraded AO images based on five algorithms. Figure 4a is the restored image based on the ML-EM algorithm with and ; Figure 4b is the restored image based on the CPF-adaptive algorithm with and ; Figure 4c is the restored image based on the RT-IEM algorithm with and ; Figure 4d is the restored image based on the VBBD-TV algorithm with and ; and Figure 4e is the restored image based on our algorithm with and . The total number of iteration is 200, and the restoration results are very close to the diffraction limit of the 1.2 m AO telescope. Figure 4f is the estimation of the PSF based on our algorithm.

Figure 4.

The restoration results comparison on multi-frames binary-star AO images. (a) restored image by the ML-EM algorithm; (b) restored image by the CPF-adaptive algorithm; (c) restored image by the RT-IEM algorithm; (d) restored image by the VBBD-TV algorithm; (e) restored image by our algorithm; (f) estimated PSF by our algorithm.

In order to verify our algorithm, we compare our algorithm with four other algorithms (the ML-EM, CPF-adaptive, RT-IEM, and VBBD-TV algorithms) for the binary-star images, and the objective evaluation criteria for the experimental results are measured by , , and the computation time, which are shown in Table 3, and the number of iterations for the five algorithms is 300.

Table 3.

The , , and the computation time comparison for the five algorithms for binary-star images restoration (iteration number is 300).

Comparing with the ML-EM, CPF-adaptive, RT-IEM, and VBBD-TV algorithms, we can see that the measures from our algorithm are decreased by 19%, 4.2%, 2.8%, and 7.7%, respectively. It is shown in Table 3 that our algorithm can obtain a , which is closer to the diffraction limit of the AO system and can obtain lower measures. Therefore, our proposed method can restore binary-star degraded images effectively where the degradation was due to the effect of atmospheric turbulence acting on the optical telescope. The computation load of our method is slightly higher than the other four restoration algorithms, and we plan to further improve the performance of our algorithm in the future.

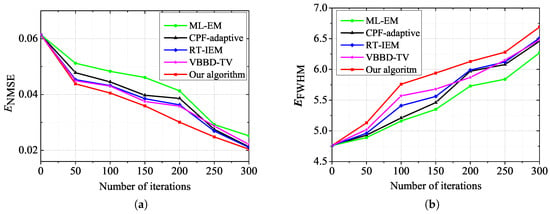

Moreover, the and measures of the best restored images by the five algorithms are compared in Figure 5. The measure versus the iteration number for the five algorithms on the binary-star AO images are plotted in Figure 5a. Figure 5b is the results for the five restoration algorithms. It can be seen from Figure 5b that our algorithm has values that are close to the diffraction limit of the AO system when applied to binary-star images. This shows that our restoration algorithm can effectively restore the degraded AO images.

Figure 5.

The restoration comparisons on and measures versus the iteration number for the five methods. (a) versus the iteration number of the five algorithms; (b) versus the iteration number of the five algorithms.

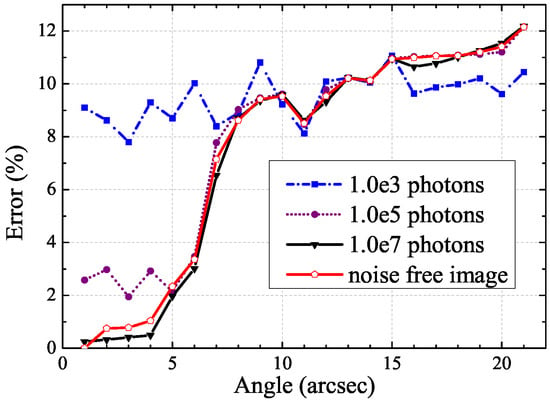

4.3. Sensitivity Analysis

Sensitivity analysis is the study of how the uncertainty in the output of a model can be portioned to different sources of variation in the model inputs [31]. In order to evaluate the benefits of using the proposed PSF initial estimation, the computation of the sensitivity indices of a PSF model was performed. In an AO image restoration process, we initialize the restoration algorithm with a perfect PSF. In this work, extensive simulations are performed to test the behaviour of the proposed PSF estimation. In a realistic case, the degraded AO image is affected by the turbulence effect, the photon, and detector noise. We assume that the dominant noise is the photon noise. The simulation conditions are the followings: the simulation is performed on three different photon noise levels varying in: photons, photons, and photons; and the angle varies from 1 arcsec for the minimum value to 21 arcsec for the maximum value. Figure 6 shows the error on the estimation of the PSF model in the case of a noise free image, and in the cases of photon noises with , , and photons in the whole image, respectively. We plot in Figure 6 the errors which are given in percentages on the magnitude estimation of star images using an isoplanatic deconvolution process. In the case of an isoplanatic deconvolution, the PSF is constant in the whole field of view [21]. For a given noise level, a limit angle can be defined below when the error is lower than the noise error. The lower the noise level, the greater the limit angle (see Figure 6). For instance, the error becomes greater than the noise error in the case of photons for angle . This limit angle can be used to define an isoplanatic angle for our AO image restoration method.

Figure 6.

Error on the estimation of the PSF model in the case of a noise free image, and in the cases of photon noises with , , and photons in the whole image, respectively.

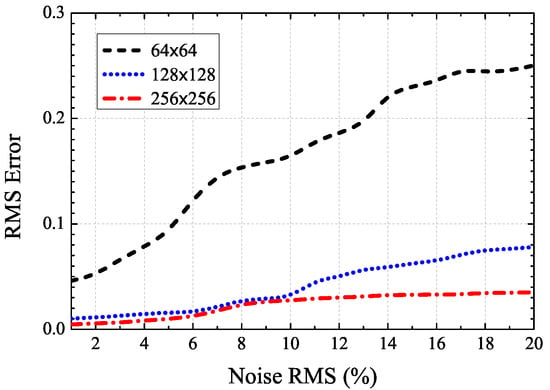

Furthermore, we perform a sensitivity analysis on the PSF starting values for our proposed AO image restoration algorithm. To validate the sensitivity behaviour of the PSF model, the experiment is performed and the conditions are:

- Noise root mean square (RMS) changes from one percent for the minimum value of the image to 20 percent for the maximum value for the image;

- Fifty noise realizations are calculated for each RMS noise value;

- The simulation is performed on three different sub-images varying in size: a pixels central region of the image, a pixels central region of the image, and the whole pixels image.

Figure 7 shows the RMS error on the estimation of the initial PSF values for different levels of noise and varying data size. It indicates that the initial value of our PSF estimation is useful to provide less sensitive final PSF estimation in our proposed restoration algorithm. This simulation shows that the PSF estimator exhibits a lower sensitivity to noises, which opens the way to its use for real AO images.

Figure 7.

RMS error on estimation of the PSF initial value for different noise levels (in percentage).

5. Conclusions

This paper presents a novel adaptive optics images restoration algorithm based on our frame selection method and the PSF model for the turbulence effect. There are two main contributions in this paper. The proposed frame selection method provides a reasonable criterion to evaluate the quality of a degraded AO image based on the variance of the image. We also propose a PSF reconstruction method for AO images, which can accurately estimate the PSF model in a blind deconvolution process using the initial estimate value. A theoretical expression of the PSF is derived. According to the Poisson distribution model for establishing a joint log-likelihood function for the multi-frame AO images, the proposed algorithm for the maximum likelihood estimator is used for the object image and the PSF estimation for each frame. A series of experiments were carried out to verify the effectiveness of the proposed algorithm and show its superiority over the current state-of-the-art blind deconvolution methods. The comparisons with the ML-EM, CPF-adaptive, RT-IEM, and VBBD-TV methods also indicate that our proposed algorithm is competitive on AO image restoration. Future works should focus on reducing the computation time by employing parallel computing. Our proposed algorithm can be used for restoring observed AO images, and it has the potential for real applications.

Acknowledgments

This research is supported by the National Science Foundation of China (Grant No. 61402193, 61401347), National Spark Program of China (Grant No. 2015GA66004), the National University Students Innovation Training Project of China (Grant No. 201610193048, 201610193053), the Scientific and Technological Research Project of the Department of Education in Jilin Province, China. We thank Wei Su of Changchun University of Science and Technology for his comments on this paper. The authors are grateful to the reviewers for the valuable suggestions. The input images used in Figure 1 and Figure 2 are obtained from https://pixabay.com/ which can be used free for any purposes.

Author Contributions

Dongming Li conceived and designed the research; Dongming Li and Lijuan Zhang designed and performed the experiments; Jinhua Yang contributed to the theory studies; Huan Liu and Jiaqi Peng analyzed the data; Dongming Li and Changming Sun wrote the manuscript; and all the authors reviewed and revised the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, L.; Yang, J.; Su, W.; Wang, X.; Jiang, Y.; Jiang, C.; Liu, Z. Research on blind deconvolution algorithm of multiframe turbulence-degraded images. J. Inf. Comput. Sci. 2013, 10, 3625–3634. [Google Scholar] [CrossRef]

- Cheong, H.; Chae, E.; Lee, E.; Jo, G.; Paik, J. Fast image restoration for spatially varying defocus blur of imaging sensor. Sensors 2015, 15, 880–898. [Google Scholar] [CrossRef] [PubMed]

- El-Sallam, A.; Boussaid, F. Spectral-based blind image restoration method for thin TOMBO imagers. Sensors 2008, 8, 6108–6124. [Google Scholar] [CrossRef] [PubMed]

- Christou, J.; Hege, E.; Jefferies, S.; Keller, C. Application of multiframe iterative blind deconvolution for diverse astronomical imaging. Proceedings of SPIE 2200, Amplitude and Intensity Spatial Interferometry II, Kailua Kona, HI, USA, 9 June 1994; pp. 433–444. [Google Scholar]

- Conan, J.; Mugnier, L.; Fusco, T.; Michau, V.; Rousset, G. Myopic deconvolution of adaptive optics images by use of object and point-spread function power spectra. Appl. Opt. 1998, 37, 4614–4622. [Google Scholar] [CrossRef] [PubMed]

- Kundur, D.; Hatzinakos, D. A novel blind deconvolution scheme for image restoration using recursive filtering. IEEE Trans. Signal Process. 1998, 46, 375–390. [Google Scholar] [CrossRef]

- Gerwe, D.; Lee, D.; Barchers, J. Supersampling multiframe blind deconvolution resolution enhancement of adaptive optics compensated imagery of low earth orbit satellites. Opt. Eng. 2002, 41, 2238–2251. [Google Scholar]

- Gallé, R.; Núñez, J.; Gladysz, S. Extended-object reconstruction in adaptive-optics imaging: the multiresolution approach. Astron. Astrophys. 2013, 555, A69. [Google Scholar] [CrossRef]

- Babacan, S.; Molina, R.; Katsaggelos, A. Variational Bayesian blind deconvolution using a total variation prior. IEEE Trans. Image Process. 2009, 18, 12–26. [Google Scholar] [CrossRef] [PubMed]

- Katsaggelos, A.; Lay, K. Maximum likelihood blur identification and image restoration using the EM algorithm. IEEE Trans. Signal Process. 1991, 39, 729–733. [Google Scholar] [CrossRef]

- Zhu, D.; Razaz, M.; Fisher, M. An adaptive algorithm for image restoration using combined penalty functions. Pattern Recognit. Lett. 2006, 27, 1336–1341. [Google Scholar] [CrossRef]

- Zhang, L.; Li, D.; Su, W.; Yang, J.; Jiang, Y. Research on Adaptive Optics Image Restoration Algorithm by Improved Expectation Maximization Method. Abstr. Appl. Anal. 2014, 2014, 781607. [Google Scholar] [CrossRef]

- Yap, K.; Guan, L. Adaptive image restoration based on hierarchical neural networks. Opt. Eng. 2000, 39, 1877–1890. [Google Scholar] [CrossRef]

- DeForest, C.; Martens, P.; Wills-Davey, M. Solar coronal structure and stray light in TRACE. Astrophys. J. 2008, 690, 1264. [Google Scholar] [CrossRef]

- Poduval, B.; DeForest, C.; Schmelz, J.; Pathak, S. Point-spread functions for the extreme-ultraviolet channels of SDO/AIA telescopes. Astrophys. J. 2013, 765, 144. [Google Scholar] [CrossRef]

- González, A.; Delouille, V.; Jacques, L. Non-parametric PSF estimation from celestial transit solar images using blind deconvolution. J. Space Weather Space Clim. 2016, 6, 492–504. [Google Scholar] [CrossRef]

- Li, D.; Zhang, L.; Yang, J.; Su, W. Research on wavelet-based contourlet transform algorithm for adaptive optics image denoising. Opt. Int. J. Light Electron Opt. 2016, 127, 5029–5034. [Google Scholar] [CrossRef]

- Tian, Y.; Rao, C.; Wei, K. Adaptive Optics Image Restoration Based on Frame Selection and Multi-frame Blind Deconvolution. Chin. Astron. Astrophy. 2009, 33, 223–230. [Google Scholar]

- Veran, J.; Rigaut, F.; Maitre, H.; Rouan, D. Estimation of the adaptive optics long-exposure point spread function using control loop data: recent developments. Proceedings of SPIE 3126, Adaptive Optics and Applications, San Diego, CA, USA, 17 October 1997; pp. 3057–3069. [Google Scholar]

- Voitsekhovich, V.; Bara, S. Effect of anisotropic imaging in off-axis adaptive astronomical systems. Astron. Astrophys. Suppl. Ser. 1999, 137, 385–389. [Google Scholar] [CrossRef]

- Fusco, T.; Conan, J.; Mugnier, L.; Michau, V.; Rousset, G. Characterization of adaptive optics point spread function for anisoplanatic imaging: Application to stellar field deconvolution. Astron. Astrophys. Suppl. Ser. 2000, 142, 149–156. [Google Scholar] [CrossRef]

- Chang, X.; Li, R.; Xiong, Y. Predicted space varying point spread function model for anisoplanatic adaptive optics imaging. Acta Opt. Sin. 2011, 12, 003. [Google Scholar] [CrossRef]

- Blanco, L.; Mugnier, L. Marginal blind deconvolution of adaptive optics retinal images. Opt. Express 2011, 19, 23227–23239. [Google Scholar] [CrossRef] [PubMed]

- Prato, M.; Camera, A.; Bonettini, S.; Bertero, M. A convergent blind deconvolution method for post-adaptive optics astronomical imaging. Inverse Prob. 2013, 29, 065017. [Google Scholar] [CrossRef]

- Hong, H.; Zhang, T.; Yu, G. Iterative multi-frame restoration algorithm of turbulence-degraded images based on Poisson model. J. Astronaut. (Chin.) 2004, 25, 649–654. [Google Scholar]

- Sheppard, D.; Hunt, B.; Marcellin, M. Iterative multiframe superresolution algorithms for atmospheric-turbulence-degraded imagery. J. Opt. Soc. Am. A 1998, 15, 978–992. [Google Scholar] [CrossRef]

- Yang, H.; Li, X.; Gong, C.; Jiang, W. Restoration of turbulence-degraded extended object using the stochastic parallel gradient descent algorithm: Numerical simulation. Opt. Express 2009, 17, 3052–3062. [Google Scholar] [CrossRef] [PubMed]

- Gabarda, S.; Cristóbal, G. Blind image quality assessment through anisotropy. J. Opt. Soc. Am. A 2007, 24, B42–B51. [Google Scholar] [CrossRef]

- Chen, B.; Cheng, C.; Guo, S.; Pu, G.; Geng, Z. Unsymmetrical multi-limit iterative blind deconvolution algorithm for adaptive optics image restoration. High Power Laser Part. Beams (Chin.) 2011, 2, 313–318. [Google Scholar] [CrossRef]

- Pixabay. Available online: https://pixabay.com/en/ (accessed on 3 November 2016).

- Figueiro, T.; Saib, M.; Tortai, J.; Schiavone, P. PSF calibration patterns selection based on sensitivity analysis. Microelectron. Eng. 2013, 112, 282–286. [Google Scholar] [CrossRef]

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).