Abstract

In wireless sensor networks (WSNs), sensor nodes are deployed for collecting and analyzing data. These nodes use limited energy batteries for easy deployment and low cost. The use of limited energy batteries is closely related to the lifetime of the sensor nodes when using wireless sensor networks. Efficient-energy management is important to extending the lifetime of the sensor nodes. Most effort for improving power efficiency in tiny sensor nodes has focused mainly on reducing the power consumed during data transmission. However, recent emergence of sensor nodes equipped with multi-cores strongly requires attention to be given to the problem of reducing power consumption in multi-cores. In this paper, we propose an energy efficient scheduling method for sensor nodes supporting a uniform multi-cores. We extend the proposed T-Ler plane based scheduling for global optimal scheduling of a uniform multi-cores and multi-processors to enable power management using dynamic power management. In the proposed approach, processor selection for a scheduling and mapping method between the tasks and processors is proposed to efficiently utilize dynamic power management. Experiments show the effectiveness of the proposed approach compared to other existing methods.

1. Introduction

WSNs consist of a number of moblile sensor nodes which are tiny, multi-functional, and low-power. Table 1 lists mobile sensing platforms with various sensors. It is widely used in various applications to collect and process data, such as various types of physical and environment information. Recently, sensor nodes in WSNs have evolved for multimedia streaming and image processing. In response to these high performance demands, sensor nodes with multi-processors have emerged. A multi-processor sensor node platform, mPlatform, which is capable of parallel processing for computationally intensive signal processing, was proposed by Lymberopoulos et al. [1]. These platforms operate with limited batteries, as shown in Table 1. The use of a multi-cores in the sensor node makes energy consumption more serious. Power management among sensor nodes is of critical importance for several reasons: limited energy batteries and ensuring longevity [2,3,4], meeting performance requirements [2,5,6], inefficiency arising because of over provisioning resources [2], power challenges posed by CMOS scaling [2,7], and enabling green computing [2]. Recent advances in CMOS technology have improved the density and speeds for on-chip transistors. These trends limit the fraction of chips that can be used at maximum speeds within limited power. Therefore, power challenges in CMOS have been addressed for processor performance. Transistor performance scaling in the future may end if left unaddressed [8,9]. Battery-operated embedded systems are sensitive to high power consumption, which leads to heating and reduced battery lifetime. Thus, energy-efficient management is essential in several embedded systems such as wearable devices. Improving energy efficiency leads to scale performance without violating the power budget. In recent advances, mobile computing devices with a multi-cores have been dynamically increased for mobile convergence applications (e.g., video streaming and web browsing). Power management in embedded systems contributes to achieve nearly 3% of the overall carbon footprint in green computing [10]. Energy efficiency scheduling algorithms for the sensor node with multi-processors are necessary. The scheduling algorithms must be able to keep battery lifetime longer while meeting the time constraints.

Table 1.

Mobile sensing platforms.

The Asymmetric Multi-core Platform (AMP) is capable of parallelism with different performance levels. The examples of AMP include mobile phones, tablets, and high-end mobile sensor nodes. These devices are equipped with cores capable of handling tasks requiring high-performance processing. Note that not all tasks need the high-performance processing and energy efficient schemes are adopted even for the cores consuming low power. The problem of scheduling AMP for high-performance mobile sensors is important in terms of performance and energy efficiency. The scheduler can switch the high-performance cores to a low power state by assigning tasks to the low power cores when processing the tasks requiring low loads. It is also possible that powerful cores are changed into simpler cores to adapt the system to varying loads. ARM’s big.LITTLE architecture [17,18] is a representative example. In ARM’s big.LITTLE architecture, there are three modes for task migration: cluster migration, CPU migration, and global task scheduling. The scheduler improves energy efficiency by migrating tasks between big and little cores. In this paper, we discuss real-time scheduling problems in the context of AMP. We adopt a scheme using the T-Ler plane to develop energy-efficient scheduling algorithms for real-time tasks on uniform multi-core systems.

The T-Ler plane extends the T-L plane using a T-L abstraction strategy to fit uniform multi-core systems. The Voltage Frequency Scale (VFS) is exploited on energy-efficient scheduling algorithms using the T-L plane. On the other hand, there are not many studies related to Dynamic Power Management (DPM). Sensor network applications with varying loads depending on the situation can take advantage of the energy by switching the state of unnecessarily used processors. Kim et al. [19,20] proposed several T-L plane based energy-efficient algorithms using DPM for sensor nodes with identical multi-processors. However, these algorithms are not suitable for uniform multi-processor systems. In particular, it is hard to select the set of processors with the lowest power consumption among the multiple sets of processors that have the same capacity. We propose a new algorithm suitable for sensor node with uniform multi-processors, called Uniform-DPM. More specifically, we extend the previous approaches [19,20] by considering the characteristics of uniform multi-processors in terms of energy efficiency as follows:

- At the beginning of each T-Ler plane, select the processors operating with a low frequency and minimize the processing capacity as much as possible.

- Reduce the complexity of scheduling and fragments of idle time, and classify the processors and tasks into processor sets and task sets at the beginning of the T-Ler plane, respectively.

- At each event in the T-Ler plane, utilize constrained migration to reduce the complexity of scheduling and fragments of idle time.

The first extension is to reduce the power loss caused by uniform multi-processors that consist of processors with difference processing capacities. The previous approach [20], as shown in Section 2, focuses solely on minimizing the number of processors. It is not suitable for uniform multi-processors. In the case of uniform multi-processors, the processors must be selected considering the processing capacity and the frequency of each processor. The second extension is to classify processors and tasks for limited scheduling, where tasks in a set are only scheduled to processors in the according processor set. The third extension is to adjust the sets in each event and to assign tasks to the processors using the limited scheduling. These prevent the unnecessary migration of tasks and enables the collection of idle time on particular processors.

We organize this paper as follows. In Section 2, we introduce related works, including the approaches previously based on T-L plane targeting uniform multi-processors. In Section 3, we propose mechanisms to select processors and allocate tasks for energy-efficient scheduling in uniform multi-processors. We extend the proposed events in identical multi-processors to ones in uniform multi-processors. In Section 4, we perform experimental evaluations by comparing our proposed algorithms with other algorithms. Lastly, we present the conclusions and future works in Section 5.

2. Related works

2.1. Power Management Techniques

Due to the advancements in semiconductor process technologies, there have been more high-end processors available that integrate more transistors. Recently, real-time embedded systems have been increasingly adopting high-end processors. In addition, to improve the performance, real-time embedded systems are also adopting multi-processors. However, this increases the processor power consumption significantly. The power consumption of CMOS chips is as follows [21]:

is the static power consumption which is calculated as the sum of the leakage power and short current power. is the dynamic power consumption by charging and discharging of the output capacitance for processing time. It is not easy to reduce the static power consumption which depends on various parameters in the semiconductor process. Therefore, we focus on reducing the dynamic power consumption. Dynamic power is defined as:

where f is the frequency, is the switching activity factor, V is the supply voltage, and C is the capacitive load. DVFS is a method used to adjust the supply voltage and frequency of a CMOS chip by utilizing the slack time that occurs when scheduling tasks. On the other hand, DPM is a method of reducing energy consumption by switching to a low power state when slack time occurs. However, if a sufficient slack time is not guaranteed over the break-even time, the energy overhead caused by the state transition will cause loss. The break-even time is determined by Equation (3) [22].

The transition energy overhead and recovery time are denoted as and , respectively. denotes the idle power. The sleep power is denoted by . The break-even time should be considered when developing a scheduling algorithm that not only uses the sleep mode, but also guarantees real-time responsiveness.

2.2. Global Scheduling Approaches on Multi-Processors

Scheduling disciplines can be categorized by considering the complexity of the priority mechanisms and the degree of job migration. Considering how task priorities are determined, Carpenter et al. [23] have categorized the schemes to static, dynamic but fixed within a job, or fully dynamic.

- Static: A single fixed priority is applied to all jobs for each task in the system. e.g., Rate Monotonic (RM) scheduling.

- Dynamic but fixed within a job: Different priorities may be assigned for the jobs of a task, but a job has a fixed priority at different times. e.g., Earliest Deadline First (EDF) scheduling.

- Fully dynamic: Different priorities may be assigned for a single job at different times, e.g., Least Laxity First (LLF) scheduling.

Depending on the degree of job migration, Carpenter et al. [23] have categorized the migration criterion to no migration, restricted migration, and unrestricted migration.

- No migration: The set of tasks in the system is partitioned into some subsets for available processors, a scheduler schedules a subset on a unique processor. The jobs of a task in a subset are executed on the corresponding processor.

- Restricted migration: Each job of a task must be scheduled entirely on a single processor. However, other jobs of the same task may be executed on different processors. Therefore, migrations among processors are allowed at the task-level context, but not at job boundaries.

- Unrestricted migration: Any jobs is also allowed to migrate among processors during its lifetime.

Note that our proposed scheduling algorithm supports fully dynamic and unrestricted migration.

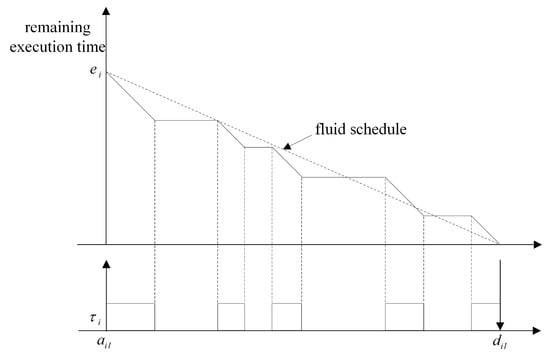

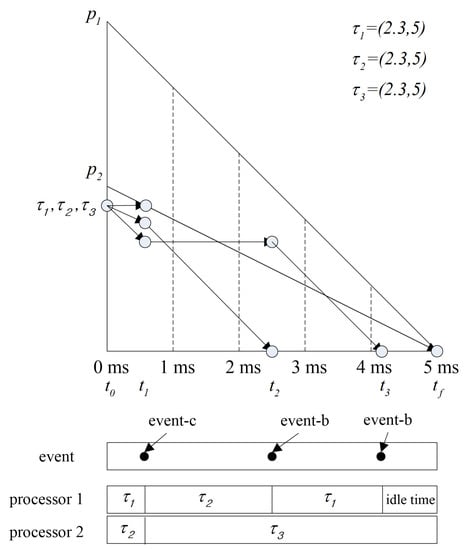

Various global scheduling algorithms for multi-processors have been studied. In global scheduling, all eligible jobs waiting for execution are in a single priority-ordered queue shared by all of the processors in the system; the highest priority job is dispatched from this queue by the global scheduler. Most of early the studies on global scheduling extended optimal scheduling algorithms known well for a single processor, such as RM and EDF, to multi-processors. However, these extensions can result in wasted utilization of resources. The fluid scheduling model with fairness notion, where each task is always executed at a fixed rate, emerged to overcome the limitation [24]. Figure 1 compares the fluid scheduling concept and the practical scheduling. There is a gap between fluid scheduling and practical scheduling, as shown in Figure 1. There are some algorithms extending the fluid scheduling model for achieving optimality on multi-processors. Proportionate fair (P-fair) scheduling has produced a feasible schedule for periodic tasks on multi-processors, and it has shown considerable promise in multi-processor scheduling [25]. However, extensive amount of migrations and preemption are needed to follow the fluid schedule. Much effort has been made to overcome this problem in global optimal scheduling. Thereafter, Deadline Partitioning-fair (DP-fair) and Deadline Partitioning-warp (DP-wrap) algorithms were proposed, and they exhibited better performance with respect to preemption in [26]. The method of allocating tasks to the processors supported by these scheduling algorithms is not suitable for uniform multi-processors. Cho et al. [27] proposed Largest Nodal Remaining Execution-time First (LNREF) using a T-L plane abstraction and it performs well with uniform multi-processors. Funk and Meka [28] proposed a T-L plane based scheduling algorithm, U-LLREF, that extends LNREF algorithm for uniform parallel machines. In U-LLREF, a uniform multi-processors provides a condition for determining event-c. Chen et al. [29] proposed Precaution Cut Greedy (PCG), a T-L plane based scheduling algorithm for uniform multi-processors. PCG uses a modified T-L plane, a T-Ler plane. Figure 2 shows how the PCG schedules in the first T-Ler plane. When event-c occurs, is assigned to until the end of the T-Ler plane. Thus, in PCG, a task monopolizes a single processor, thereby preventing unnecessary task migration.

Figure 1.

Fluid schedule model.

Figure 2.

A scheduling example in the 1st T-Ler plane.

2.3. T-L Plane Based Energy-Efficient Global Optimal Scheduling Approaches

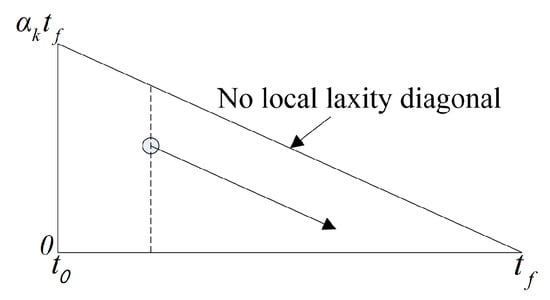

Energy-efficient scheduling based on the T-L plane for uniform parallel machines has been proposed due to the demand for energy efficiency. Uniform RT-SVFS [30] reduces the energy consumption by scaling the frequency of all processors with a constant rate. By scaling the height of the T-L plane, as shown in Figure 3, scheduling is enabled at the changed frequency. represents the normalized frequency of the processor. In addition, energy-efficient T-L plane based scheduling algorithms for unrelated parallel machines have emerged. Independent RT-SVFS [30] determines the frequency by statically scaling each processor. This algorithm has been proposed to overcome the heavy task bottlenecks that can occur when using the frequency scaling technique. The Growing Minimum Frequency (GMF) [31], which is a state-of-the-art algorithm for T-L plane based non-uniform frequency scaling for saving energy on VFS embedded multi-processors, has been proposed for the frequency control of multi-processors using U-LLREF, and the global optimal frequency can be determined. RT-DVFS [32] allows you to dynamically adjust the frequency of each processor in the event of scheduling.

Figure 3.

Transistion of the T-L plane (frequency ).

It is difficult to consider DPM due to the idle time fragmentation problem that occurs when using the T-L plane based scheduling algorithm. In addition, since scheduling is performed using all processors existing in the system, a considerable energy overhead due to unnecessary state transition occurs when DPM is used. TL-DPM [19] solves the idle time fragmentation problem by using a new event to retrieve tokens, which is performed in the next plane. However, since only the token of the next plane is targeted, there is room for solving the idle time fragmentation problem. Kim et al. [20] proposed a generalized method for executing tokens to be scheduled in the later plane in the current plane in order to solve this problem. To reduce the number of state transitions, scheduling is performed using only the minimum number of processors.

3. Proposed Energy Efficient Approach on Uniform Multi-Processors

3.1. Feasibility Conditions

Theorems 1 and 2 represent the conditions that must be met to obtain schedules satisfying the time constraints when uniform multi-processors are used for scheduling the given task set.

Theorem 1.

(Horvath et al. [33]) The level algorithm constructs a minimal length schedule for the set of independent tasks with service requirements on the processing system , where . The schedule length is given by

Theorem 2.

(Funk et al. [34]) Consider a set of periodic tasks indexed according to non-increasing utilization (i.e., for all i, , where ). Let for all i, . Let π denote a system of uniform processors with capacities , , …, , for all i, . Periodic task system τ can be scheduled to meet all deadlines on the uniform multi-processor platform π if and only if the following constraints hold:

where , for all .

Selecting processors for the scheduling tasks at the beginning of each T-L plane is divided into the case where tasks are allocated to the processors with the same capacity as the utilization, and the case where they are not.

3.2. Processor Selections and Classification

3.2.1. Simple Case: Exact Match

Table 2 shows some examples of processor selections for scheduling the task set shown in Table 3. In a CMOS chip, power consumption is determined by the operating frequency and supply voltage. The relationship between the power consumption and the supply voltage in the processor is as follows.

Table 2.

An example of the available processor sets.

Table 3.

Task properties.

In addition, according to the relationship between the supply voltage and the operating frequency in a processor, shown in Equation (7), a processor operating at a higher frequency requires a higher supply voltage than that operating at a lower frequency. Therefore, as shown in Table 2, a processor with a higher supply voltage will have a higher capacity.

where is the threshold voltage of transistors and is a measure of the velocity saturation in COMS transistors.

, , and shown in Table 2 satisfy Theorems 1 and 2 presented above. Since the processing capacity of , , and is equal to the total utilization of the task set, there is no idle time when the task set is scheduled. However, since the number and capacity of processors is not the same in each processor, the power consumed by , , and is different. The energy consumption for scheduling the task set in Table 3 on , , and is shown in Table 4. The lowest power consumption can be observed on , where each task is independently assigned to a processor whose capacity is equal to the utilization of each task in the task set. If the total capacity of a processor set is equal to the total utilization of a task set, then there is no idle time because all processors always perform their tasks. Therefore, the power consumption of each processor is dependent on the processed workload. High-capacity processors can handle more work in terms of processor workloads. Equation (8) shows the power consumption needed to process in a processor whose operating frequency and supply voltage are and , respectively.

where and denote the voltage and capacity of the k-th processor, respectively. Lemma 1 shows the power consumption characteristics of processor sets whose total capacity is equal to the total utilization of a task set.

Table 4.

Dynamic power consumption of some feasible processor sets.

Lemma 1.

If , when scheduling a task set with on two processor sets and , the power consumption satisfies .

Proof of Lemma 1.

According to Equation (8), the power consumption measures of and are and respectively. Since and , there is no idle time when the tasks are scheduled. In addition, , where and . Hence, . ☐

According to Lemma 1, selecting the 0.8 capacity processor for scheduling the 0.6 and 0.2 utilization tasks in the task set, as shown in Table 1, will result in higher power consumption than selecting the 0.6 and 0.2 capacity processors for the scheduling the tasks. Therefore, assigning each task to the processor sets whose capacity is equal to its utilization is the most energy-efficient way when there are enough processors. Under the condition of , the processor whose capacity is equal to shows the lowest power consumption to execute the task with the utilization . Lemma 2 shows these characteristics.

Lemma 2.

When a task with utilization is executed on two processors under the condition of , their power consumption for processing the allocated workload during the task period is .

Proof of Lemma 2.

When a task set with the total time of is scheduled on n processors whose capacity is different, the power consumption required for processing the allocated workload on n processors is shown in Equation (9). , , …, represents the workload assigned to each processor.

If the total capacity of n processors is greater than the total utilization of a task set to be scheduled, there will be idle time during task scheduling. This means that the power consumption during the idle time should be taken into account to measure the processors’ power consumption required for scheduling the task set. The power consumption of n processors is shown in Equation (10). denotes the power consumption of the i-th processor during the idle time.

Lemma 3 and Theorem 3 show the power consumption required for scheduling a task set on a set of n processors with different capacities.

Lemma 3.

When the task set is scheduled with the processor set , the lowest power is consumed, where the total capacity of is .

Proof of Lemma 3.

If , scheduling involves no idle time, so the processor power consumption is . If , scheduling involves some idle time, so the processor power consumption based on Equation (10) is . Hence, if , then the lowest power consumption will be observed. ☐

Theorem 3.

Independently assigning each task in the task set τ to processors whose capacity is equal to the utilization of the task shows the lowest power consumption for scheduling a set of tasks.

Proof of Theorem 3.

This is easily proven by Lemmas 1–3. ☐

Therefore, selecting processors whose capacity is equal to the utilization of each task shows the lowest power consumption for scheduling a set of tasks.

3.2.2. Generalized Solution

When not assignable to a processor with the same capacity as the task’s utilization, it is necessary to select a processor set available for scheduling with the limited processors. Table 5 shows the characteristics of processors used for task scheduling. Table 6 shows the processor sets selected from the processors in Table 5 for scheduling the task set shown in Table 7.

Table 5.

Processor properties.

Table 6.

Selecting processors for scheduling a task set.

Table 7.

Task properties.

Since the processor sets , , , and shown in Table 6 satisfy Theorems 1 and 2, they can be used for task scheduling. However, since the processor sets are differently configured, the idle time during the task scheduling and the difference in their supply voltages result in their different power consumption. Therefore, the following two strategies should be considered to select energy-efficient processors.

Selecting a processor set for task scheduling in consideration of all the problems presented above is a NP-hard problem. Therefore, in this paper, we propose a heuristic method for selecting an energy-efficient processor set. In the proposed method, if the size of the current plane is smaller than , the processor in active mode is added to a processor set for task scheduling because it cannot be switched to sleep mode at the end of the previous plane. If the preferentially selected processors are not enough for scheduling the given task set, additional processors will be selected. Processors for scheduling are selected in terms of the local utilization of tasks from highest to lowest. Selecting processors for scheduling depends on the difference between the total local utilization of tasks in at the start time in each plane and the total capacity of the processors in . The selected processors are moved to . The following describes how to select processors.

- If , the processor with the smallest capacity is selected for scheduling in the given processor set, .

- If , the previously selected processor is used for scheduling without selecting an additional processor.

is the set of all the processors in the system. is the set of the selected processors for task scheduling. Algorithm 1 shows how to select processors for scheduling at the beginning of each plane. The function getMinimumCapacityProcessor(availableCapacity, τ, ) takes the available capacity (availableCapacity) of the previously selected processor into account to return the lowest capacity processor for scheduling the task set from the given processor set . The function add() adds elements to the set, and the function erase() removes elements from the set. The processors in indicate the processor in the sleep state in the plane. It is necessary to ensure a break-even time longer than the idle time in order to use DPM techniques for switching the state of a processor. To ensure the idle timeis long enough to enter the sleep mode, the idle time in the plane should be generated as much as possible on a single processor. To prevent unnecessary power consumption, a task is assigned to the selected processor whose capacity is the lowest for scheduling the task. For this reason, in the proposed method, the processors in the selected processor set are classified into the following categories: processors that can be used to the maximum extent in the plane and processors that can be used exclusively by a single task in the plane. That is, the processors in are classified into the following categories: , , and . The processors in represent a set of processors exclusively used by a single task. is the set of processors used to the maximum extent in the plane. is the set of processors that may result in idle time in the plane during task scheduling. The tasks to be executed on the classified processor sets are divided into the following categories: , , and . Tasks assigned to a processor set cannot be moved to another processor set. The following describes how to classify the processor sets.

| Algorithm 1 Processor selection of the beginning time of a T-L plane |

|

- To select a processor for scheduling a task in where the difference between the total local utilization of the tasks in at () and the total capacity of the processors in () is greater than zero: .

- If , the task is additionally assigned to a previously selected processor without selecting an additional processor. The assigned task is moved from to .

- If , the task is additionally assigned to a previously selected processor without selecting an additional processor. The assigned task is moved from to .

- If ,

- All processors and tasks in and are moved to and .

- The processor whose capacity is the lowest for scheduling a task , is selected from the following processor set, . If the capacity of the selected processor is equal to the local utilization () of the task , the processor and the task are moved to and , respectively. Otherwise, they are moved to and , respectively.

Algorithm 2 shows how to classify the processor set into the following categories: , , and . The task with the highest local utilization is considered first to classify the processor set and the task set. The function getFirstLocalUtilizationTask() returns the task with the highest local utilization in . The function getMinimumCapacityProcessor(availableCapacity, τ, ) takes availableCapacity into account to return the processor whose capacity is the lowest for scheduling a task in . If the capacity of the returned processor is equal to the local utilization of the task, the processor and the task is moved to and , respectively. If availableCapacity is 0, the processors in and the tasks in are moved to and .

3.3. Scheduling Strategy

In the paper written by Chen et al. [29] , there are two suggested methods for scheduling on a uniform multi-processors. However, event-t, event-s, and event-r presented above are not taken into account in these scheduling methods. In this section, we propose a new T-L plane based scheduling method in which event-t, event-s, and event-r are used to reduce the power consumption of a uniform multi-processors. When the , , and tasks are scheduled with the , , and processor sets, the tasks cannot be moved from one processor set to another in order to generate no idle time on the processors in and . The remaining part shows the movement of elements between task sets and processor sets and the processor assignment when a rescheduling event occurs. Since event-t as defined above targets identical multi-processors is not suitable for uniform multi-processors, it is redefined as in Definition 1.

Definition 1.

An event-t in uniform multi-processors occurs at if the following conditions are met.

- .

- where .

Algorithm 3 shows the process of assigning tasks to processors when a rescheduling event occurs. All the tasks in the set are moved to the set , and all the tasks in the set are deleted. The function eraseAll() removes all elements in the set . Tasks are assigned to processors in each processor set in the following order: , , and . The function getMaximumLocalUtilizationTask(p.c, , ) returns the task with the highest local utilization in and where the task can be performed on the processor with the capacity of p.c. The function getFirstLocalUtilizationTask( ) returns the task with the highest local utilization in and . The function allocateTaskToProcessor(, p) assigns the task to the processor p.

| Algorithm 2 Classification of selected processors for scheduling |

|

Algorithm 4 shows the movement of the elements between processor sets and task sets. When an event-b occurs, all the tasks which have triggered an event-b are moved to and are removed from . The function getEventTasks() returns all the tasks that have triggered the event-b. When an event-c or an event-f occurs, all the tasks that have triggered the event are moved to , and the processors that have triggered the event are moved to . The function getProcessor(, ) returns the processor with the capacity in . When an event-t occurs, the processors which can be switched to sleep mode are moved to and are removed from . When an event-s or an event-r occurs, all the tasks that have triggered the event are moved to and are removed from . The function reallocateProcessorTime() assigns the available processing time to a task with remaining execution time in . The assigned task is moved to .

| Algorithm 3 Assignment of tasks to processors at rescheduling |

|

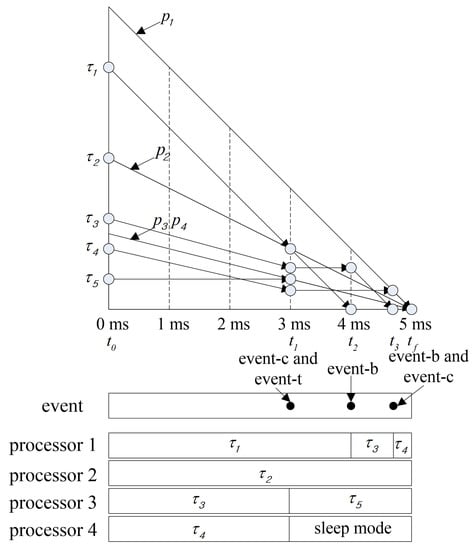

Figure 4 shows the scheduling in the first plane from the proposed method when scheduling the tasks of Table 8 on the processors listed in Table 9. Algorithm 2 is used to categorize the processor sets and ready tasks selected by Algorithm 1 at . Task that has triggered an event-c at and the processor whose capacity is equal to the local utilization of are moved to and , respectively. At the same time, the processor is moved to by event-t. Task that has triggered an event-b at is moved to . Task that has triggered an event-b at is moved to . At the same time, task that has triggered an event-c and the processor whose capacity is equal to the local utilization of are moved to and , respectively. Table 10 shows the elements added to the processor and task sets by Algorithm 4 at each event in the 1st plane. Tasks are assigned to processors by Algorithm 3. As shown in Figure 4, tasks assigned to processors move diagonally along the slope of the processor capacity and tasks unassigned to processors will move horizontally.

Figure 4.

A scheduling in the first plane.

Table 8.

Task properties.

Table 9.

Processor properties.

Table 10.

Example of sets at events in the plane.

| Algorithm 4 Movement of elements during rescheduling in the T-L plane |

|

4. Energy Efficiency on Uniform Multi-Processors

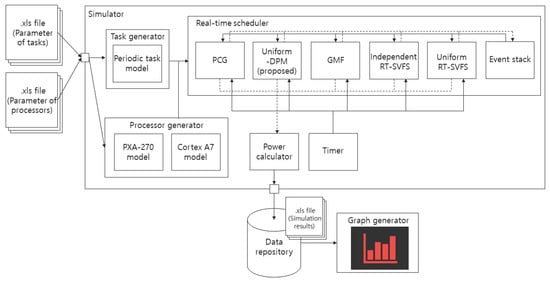

In this section, the performance of the proposed algorithm is compared with the major algorithms previously developed for power management. We implemented a simulator operating in Windows 10 using the Ruby language (version 2.4.1) for the experiments. Figure 5 illustrates the architecture of the simulator. The results of the simulation show the energy consumption for task executions, as well as the energy overheads associated with the state transitions.

Figure 5.

The architecture of the simulator.

4.1. Experiment Environment

The characteristics of the cortex-A7 core in Marvell’s MV78230, which is the Multi-Core ARMv7 system based on the chip processor, is used to set the experimental parameters of the processor in the simulator. This core supports dynamic frequency scaling and dynamic power down options. Table 11 and Table 12 show that cortex-A7 supports six frequency levels and five processor states. Run thermal is used in the stress test of the CPU. The deep idle and sleep modes consume the same energy with respect to the CPU. We consider the run typical, idle, and sleep modes in Table 12 for our experiment. WolfBot [16], which is a distributed mobile sensing platform, has ARMv7 based cortex processors.

Table 11.

Frequency levels of the cortex-A7 core.

Table 12.

Power states of the cortex-A7 core.

To confirm the scalability of the proposed algorithm, we change the number of available processors within the range 8–32. Then, we use the Emberson procedure to construct 100 task sets on each available processor. The total utilization of the task set is equal to 8, and the task has a utilization within 0.01–0.99. The period of each task is evenly distributed within 10–150 and simulated for 1000 system units.

4.2. Experiment Results and Analysis

Table 13 shows the platform type and power management technique of the algorithm to be simulated. The algorithm’s platform type is called “non-uniform” when the associated frequency of each processor is independently adjustable, and is called “uniform” when it can change all the frequencies at a constant rate when scaling the frequency of the processor. It is possible for each processor among the uniform multi-processors to operate at a different frequency. A job has a different execution time depending on which processor is allocated. These platforms are otherwise called “unrelated”.

Table 13.

Summary of the energy-efficient scheduling algorithms.

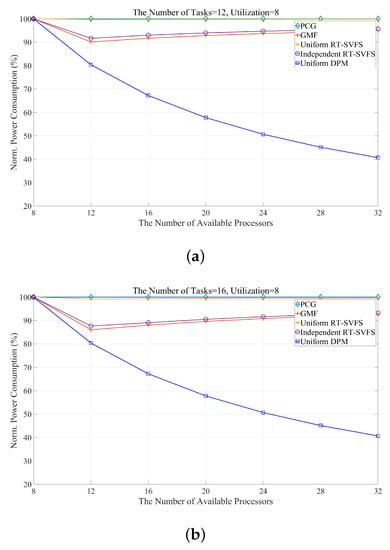

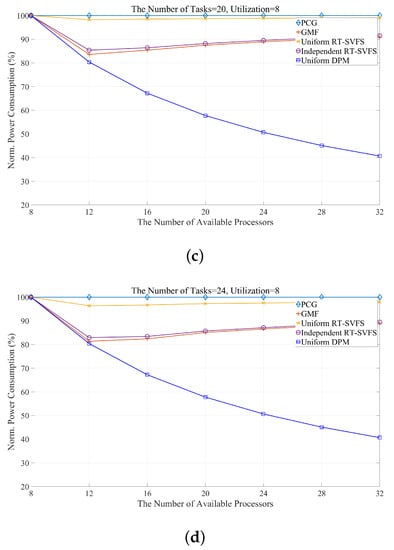

Figure 6 shows the power efficiency obtained by simulating the five algorithms mentioned in Table 13 while varying the number of available processors and the number of tasks. We implement our proposed algorithm as well as the following models: PCG, the original uniform algorithm without any power management [29]; Uniform-DPM, our proposed scheduling algorithm for DPM-embedded uniform multi-processors; GMF [31]; Independent RT-SVFS [30]; and Uniform RT-SVFS [30]. The x-axis of Figure 6 represents the number of available processors, and the y-axis represents the normalized power consumption (NPC). The power consumption consumed by the PCG is measured by the reference consumption and the power consumption rate of each algorithm. Figure 6 show the results when the number of tasks composing a task set is 12, 16, 20, and 24, respectively. All of the algorithms to be simulated is global optimal scheduling. Thus, since the total utilization of the task set used in the simulation is fixed at 8, the power efficiency of all algorithms shows 100% energy consumption in all scheduling using eight processors. As shown in Figure 6, the GMF and RT-SVFS algorithms change the power efficiency according to the number of tasks, while the proposed algorithm, Uniform-DPM, consumes the same a mount of power. This is because they always generate the same idle time. In addition, in the case of many available processors, the proposed algorithm shows high power efficiency by preventing unnecessary processor activation and idle time fragmentation, and by preventing frequent state transitions of the processor. GMF and independent RT-SVFS have similar power efficiencies because they determine the frequency of each processor independently. GMF finds a global optimal solution in the search spaces, but not Independent-SVFS. Thus, GMF is better than Independent-SVFS, as shown in Figure 6. Uniform RT-SVFS adjusts the frequency of all processors to a certain ratio, so if the number of tasks is small, the energy efficiency is not good because the work can be concentrated on some processor and the frequency of the processor cannot be lowered. However, as the number of tasks increases, the number of tasks can be divided and processed simultaneously by multiple processors, which can reduce the frequency of the processor. Table 14 and Table 15 show the energy efficiency characteristics of this proposed algorithm. Table 14 shows that Uniform-DPM always shows constant energy efficiency regardless of the number of tasks. Table 15 shows that the energy efficiency increases as the number of processors increases.

Figure 6.

Comparing the energy consumption of an energy-efficient approach while varying the number of tasks: (a) 12; (b) 16; (c) 20; and (d) 24.

Table 14.

Summary of the experimental results by varying the number of tasks.

Table 15.

Summary of the experimental results by varying the number of uniform processors.

5. Conclusions and Future Works

The lifetime of WSNs is closely related to the management of sensor nodes operating at limited energy. In this paper, we propose a power management method for sensor nodes supporting DPM-enabled uniform multi-processors. In the proposed approach, the selection of processors to process a set of tasks and the assignment of tasks to the selected processors have been proposed in terms of energy efficiency. In addition, we implement a simulator to measure the power consumption of various scheduling algorithms. The experimental results show that the proposed algorithms provide better scalability to the number of available processors than DVFS-based approaches. Currently, our proposed algorithms can handle periodic tasks with implicit deadlines. In future work, we plan to extend our algorithms to handle sporadic tasks with time constraint. We are very interested in combining the DVFS and DPM approaches for T-L plane abstraction as well. In addition, studies on trade-offs between the power usage and computational complexity, as well as performance evaluations on overloaded situations, would be interesting problems for potential future research.

Acknowledgments

This work was supported by the National Research Foundation of Korea (NRF) grant (NRF-2017R1D1A1B03029552, NRF-2017R1E1A1A01075803) funded by the Korea government (MSIP).

Author Contributions

Youngmin Kim and Chan-Gun Lee conceived and developed the algorithm; Ki-Seong Lee performed the experiments; Youngmin Kim and Ki-Seong Lee analyzed the data; Youngmin Kim and Chan-Gun Lee verified the results and finalized the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lymberopoulos, D.; Priyantha, N.B.; Zhao, F. mPlatform: A reconfigurable architecture and efficient data sharing mechanism for modular sensor nodes. In Proceedings of the 6th International Conference on Information Processing in Sensor Networks, Cambridge, MA, USA, 25–27 April 2007. [Google Scholar]

- Mittal, S. A survey of techniques for improving energy efficiency in embedded computing systems. Int. J. Comput. Aided Eng. Technol. 2014, 6, 440–459. [Google Scholar] [CrossRef]

- Tutuncuoglu, K.; Yener, A. Optimum transmission policies for battery limited energy harvesting nodes. IEEE Trans. Wirel. Commun. 2012, 11, 1180–1189. [Google Scholar] [CrossRef]

- Zhang, Y.; He, S.; Chen, J.; Sun, Y.; Shen, X.S. Distributed sampling rate control for rechargeable sensor nodes with limited battery capacity. IEEE Trans. Wirel. Commun. 2013, 12, 3096–3106. [Google Scholar] [CrossRef]

- Tan, Y.K.; Panda, S.K. Energy harvesting from hybrid indoor ambient light and thermal energy sources for enhanced performance of wireless sensor nodes. IEEE Trans. Ind. Electron. 2011, 58, 4424–4435. [Google Scholar] [CrossRef]

- Wang, Y.; Liu, X.; Yin, J. Requirements of quality of service in wireless sensor network. In Proceedings of the International Conference on Systems and International Conference on Mobile Communications and Learning Technologies (ICN/ICONS/MCL 2006), Morne, Mauritius, 23–29 April 2006. [Google Scholar]

- Krishnarnurthy, R.; Alvandpour, A.; De, V.; Borkar, S. High-performance and low-power challenges for sub-70 nm microprocessor circuits. In Proceedings of the IEEE Custom Integrated Circuits Conference, Orlando, FL, USA, 15 May 2002. [Google Scholar]

- Venkatesh, G.; Sampson, J.; Goulding, N.; Garcia, S.; Bryksin, V.; Lugo-Martinez, J.; Swanson, S.; Taylor, M.B. Conservation cores: Reducing the energy of mature computations. In ACM SIGARCH Computer Architecture News; ACM: New York, NY, USA, 2010; pp. 205–218. [Google Scholar]

- Esmaeilzadeh, H.; Blem, E.; St Amant, R.; Sankaralingam, K.; Burger, D. Dark silicon and the end of multicore scaling. In ACM SIGARCH Computer Architecture News; ACM: New York, NY, USA, 2011; pp. 365–376. [Google Scholar]

- Smarr, L. Project Greenlight: Optimizing cyber-infrastructure for a carbon-constrained world. Computer 2010, 43, 22–27. [Google Scholar] [CrossRef]

- McLurkin, J.; Lynch, A.J.; Rixner, S.; Barr, T.W.; Chou, A.; Foster, K.; Bilstein, S. A low-cost multi-robot system for research, teaching, and outreach. In Distributed Autonomous Robotic Systems; Springer: New York, NY, USA, 2013; pp. 597–609. [Google Scholar]

- Mondada, F.; Bonani, M.; Raemy, X.; Pugh, J.; Cianci, C.; Klaptocz, A.; Magnenat, S.; Zufferey, J.C.; Floreano, D.; Martinoli, A. The e-puck, a robot designed for education in engineering. In Proceedings of the 9th Conference on Autonomous Robot Systems and Competitions, Castelo Branco, Portugal, 7 May 2009. [Google Scholar]

- Bonani, M.; Longchamp, V.; Magnenat, S.; Rétornaz, P.; Burnier, D.; Roulet, G.; Vaussard, F.; Bleuler, H.; Mondada, F. The marXbot, a miniature mobile robot opening new perspectives for the collective-robotic research. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2010), Taipei, Taiwan, 18–22 October 2010. [Google Scholar]

- Brutschy, A.; Pini, G.; Decugniere, A. Grippable Objects for the Foot-Bot; IRIDIA Technical Report, Technical Report TR/IRIDIA/2012-001; Université Libre de Bruxelles: Brussels, Belgium, 2012. [Google Scholar]

- Chen, P.; Ahammad, P.; Boyer, C.; Huang, S.I.; Lin, L.; Lobaton, E.; Meingast, M.; Oh, S.; Wang, S.; Yan, P.; et al. CITRIC: A low-bandwidth wireless camera network platform. In Proceedings of the Second ACM/IEEE International Conference on Distributed Smart Cameras (ICDSC 2008), Stanford, CA, USA, 7–11 September 2008. [Google Scholar]

- Betthauser, J.; Benavides, D.; Schornick, J.; O’Hara, N.; Patel, J.; Cole, J.; Lobaton, E. WolfBot: A distributed mobile sensing platform for research and education. In Proceedings of the 2014 Zone 1 Conference of the American Society for Engineering Education (ASEE Zone 1), Bridgeport, CT, USA, 3–5 April 2014. [Google Scholar]

- Chung, H.; Kang, M.; Cho, H.D. Heterogeneous Multi-Processing Solution of Exynos 5 Octa with ARM® big. LITTLE™ Technology. Samsung White Paper. 2012. Available online: https://www.arm.com/files/pdf/Heterogeneous_Multi_Processing_Solution_of_Exynos_5_Octa_with_ARM_bigLITTLE_Technology.pdf (accessed on 31 October 2017).

- Kamdar, S.; Kamdar, N. big. LITTLE architecture: Heterogeneous multicore processing. Int. J. Comput. Appl. 2015, 119, 1. [Google Scholar] [CrossRef]

- Youngmin, K.; Ki-Seong, L.; Byunghak, K.; Chan-Gun, L. TL Plane Based Real-Time Scheduling Using Dynamic Power Management. IEICE Trans. Inf. Syst. 2015, 98, 1596–1599. [Google Scholar]

- Kim, Y.; Lee, K.S.; Pham, N.S.; Lee, S.R.; Lee, C.G. TL Plane Abstraction-Based Energy-Efficient Real-Time Scheduling for Multi-Core Wireless Sensors. Sensors 2016, 16, 1054. [Google Scholar] [CrossRef] [PubMed]

- Jan, M.R.; Anantha, C.; Borivoje, N. Digital Integrated Circuits—A Design Perspective; Pearson Publishing: London, UK, 2003. [Google Scholar]

- Chen, G.; Huang, K.; Knoll, A. Energy optimization for real-time multiprocessor system-on-chip with optimal DVFS and DPM combination. ACM Trans. Embed. Comput. Syst. 2014, 13, 111. [Google Scholar] [CrossRef]

- Carpenter, J.; Funk, S.; Holman, P.; Srinivasan, A.; Anderson, J.H.; Baruah, S.K. A Categorization of Real-Time Multiprocessor Scheduling Problems and Algorithms In Handbook on Scheduling Algorithms, Methods, and Models; Chapman Hall/CRC: Boca Raton, FL, USA, 2004; Volume 19, pp. 1–30. [Google Scholar]

- Holman, P.; Anderson, J.H. Adapting Pfair scheduling for symmetric multiprocessors. J. Embed. Comput. 2005, 1, 543–564. [Google Scholar]

- Anderson, J.H.; Srinivasan, A. Pfair scheduling: Beyond periodic task systems. In Proceedings of the 2000 Seventh International Conference on Real-Time Computing Systems and Applications, Cheju Island, Korea, 12–14 December 2000. [Google Scholar]

- Levin, G.; Funk, S.; Sadowski, C.; Pye, I.; Brandt, S. DP-FAIR: A simple model for understanding optimal multiprocessor scheduling. In Proceedings of the 2010 22nd Euromicro Conference on Real-Time Systems (ECRTS), Brussels, Belgium, 6–9 July 2010. [Google Scholar]

- Cho, H.; Ravindran, B.; Jensen, E.D. An optimal real-time scheduling algorithm for multiprocessors. In Proceedings of the 27th IEEE International Real-Time Systems Symposium (RTSS’06), Rio de Janeiro, Brazil, 5–8 December 2006. [Google Scholar]

- Funk, S.H.; Meka, A. U-LLREF: An optimal scheduling algorithm for uniform multiprocessors. In Proceedings of the 9th Workshop on Models and Algorithms for Planning and Scheduling Problems, Abbey Rolduc, The Netherlands, 29 June–3 July 2009. [Google Scholar]

- Chen, S.Y.; Hsueh, C.W. Optimal dynamic-priority real-time scheduling algorithms for uniform multiprocessors. In Proceedings of the Real-Time Systems Symposium, Barcelona, Spain, 30 November–3 December 2008. [Google Scholar]

- Funaoka, K.; Kato, S.; Yamasaki, N. Energy-efficient optimal real-time scheduling on multiprocessors. In Proceedings of the 2008 11th IEEE International Symposium on Object Oriented Real-Time Distributed Computing (ISORC), Orlando, FL, USA, 5–7 May 2008. [Google Scholar]

- Moreno, G.A.; De Niz, D. An optimal real-time voltage and frequency scaling for uniform multiprocessors. In Proceedings of the 2012 IEEE 18th International Conference on Embedded and Real-Time Computing Systems and Applications (RTCSA), Seoul, Korea, 19–22 August 2012. [Google Scholar]

- Funaoka, K.; Takeda, A.; Kato, S.; Yamasaki, N. Dynamic voltage and frequency scaling for optimal real-time scheduling on multiprocessors. In Proceedings of the International Symposium on Industrial Embedded Systems (SIES 2008), Le Grande Motte, France, 11–13 June 2008. [Google Scholar]

- Horvath, E.C.; Lam, S.; Sethi, R. A level algorithm for preemptive scheduling. J. ACM 1977, 24, 32–43. [Google Scholar] [CrossRef]

- Funk, S.; Goossens, J.; Baruah, S. On-line scheduling on uniform multiprocessors. In Proceedings of the 22nd IEEE Real-Time Systems Symposium (RTSS 2001), London, UK, 3–6 December 2001. [Google Scholar]

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).