Augmented Reality as a Telemedicine Platform for Remote Procedural Training

Abstract

1. Introduction

1.1. Rural Healthcare Problems

1.2. Current Limitations

1.3. Explosion of Computer-Mediated Reality

1.4. Research Contributions

2. Background and Related Work

2.1. Augmented Reality Research in Medicine

2.2. Augmented Reality Research in Telemedicine

2.3. Remote Collaboration

2.4. Google Glass and Microsoft HoloLens

2.5. Advantages of the HoloLens

2.6. Disadvantages of the HoloLens

2.7. Research Focus

3. System Design

3.1. Prototypes

- Gyroscope-Controlled Probe: We connected an Android phone to a HoloLens using Apache Thrift, a binary communication protocol. The Android application collected the phone's orientation and sent it to the HoloLens application, which rendered a hologram of a virtual ultrasound transducer in the corresponding orientation. In this prototype, users rotate the hologram by rotating the phone, whose built-in gyroscope supplies the orientation.

- Video Conferencing: We established video conferencing between a desktop computer and a HoloLens over a local area network. Microsoft provides a built-in function called mixed reality capture (MRC) for HoloLens users. MRC captures the first-person view of the HoloLens and presents it to a remote computer. It does so by slicing the mixed reality video into pieces and exposing those pieces through a built-in web server. Other devices can then play a smooth live video using HTTP progressive download. However, this approach can introduce noticeable latency between the two ends.

- AR together with VR: This prototype kept the same structure as the previous one; the only difference was the remote player. A virtual reality player on a mobile phone played the mixed reality video, and a mobile-based headset was used to watch the VR version of the HoloLens first-person view. Because the mixed reality view is not a 360-degree video, the VR user could not control the viewpoint inside the headset; the content was controlled by the HoloLens user.
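The orientation transfer in the gyroscope-controlled probe prototype can be illustrated with a minimal sketch. It does not reproduce the authors' Thrift schema, which is not given here. As a stand-in, it assumes a hypothetical 16-byte message holding a unit quaternion, packed with Python's `struct` module, and shows how a receiver could apply that orientation to the long axis of a virtual probe. The function names and the wire format are illustrative assumptions.

```python
import math
import struct

# Hypothetical wire format (an assumption, not the authors' Thrift schema):
# four little-endian 32-bit floats holding a unit quaternion (w, x, y, z).
ORIENTATION_FORMAT = "<4f"


def pack_orientation(w, x, y, z):
    """Normalize the quaternion and serialize it to 16 bytes."""
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("zero-length quaternion")
    return struct.pack(ORIENTATION_FORMAT, w / norm, x / norm, y / norm, z / norm)


def unpack_orientation(payload):
    """Deserialize 16 bytes back into a (w, x, y, z) tuple."""
    return struct.unpack(ORIENTATION_FORMAT, payload)


def rotate_vector(q, v):
    """Rotate 3-vector v by unit quaternion q = (w, u).

    Uses v' = v + w * t + cross(u, t), where t = 2 * cross(u, v).
    """
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * cross(u, v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + cross(u, t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


# Sender side: a 90-degree rotation about the z axis.
payload = pack_orientation(math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))

# Receiver side: apply the orientation to the probe's long axis.
probe_axis = rotate_vector(unpack_orientation(payload), (1.0, 0.0, 0.0))
```

In this sketch the receiver ends up with the probe axis pointing along y (up to 32-bit float rounding). A real implementation would also need to map the phone's coordinate frame to the HoloLens frame, which is outside the scope of this illustration.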

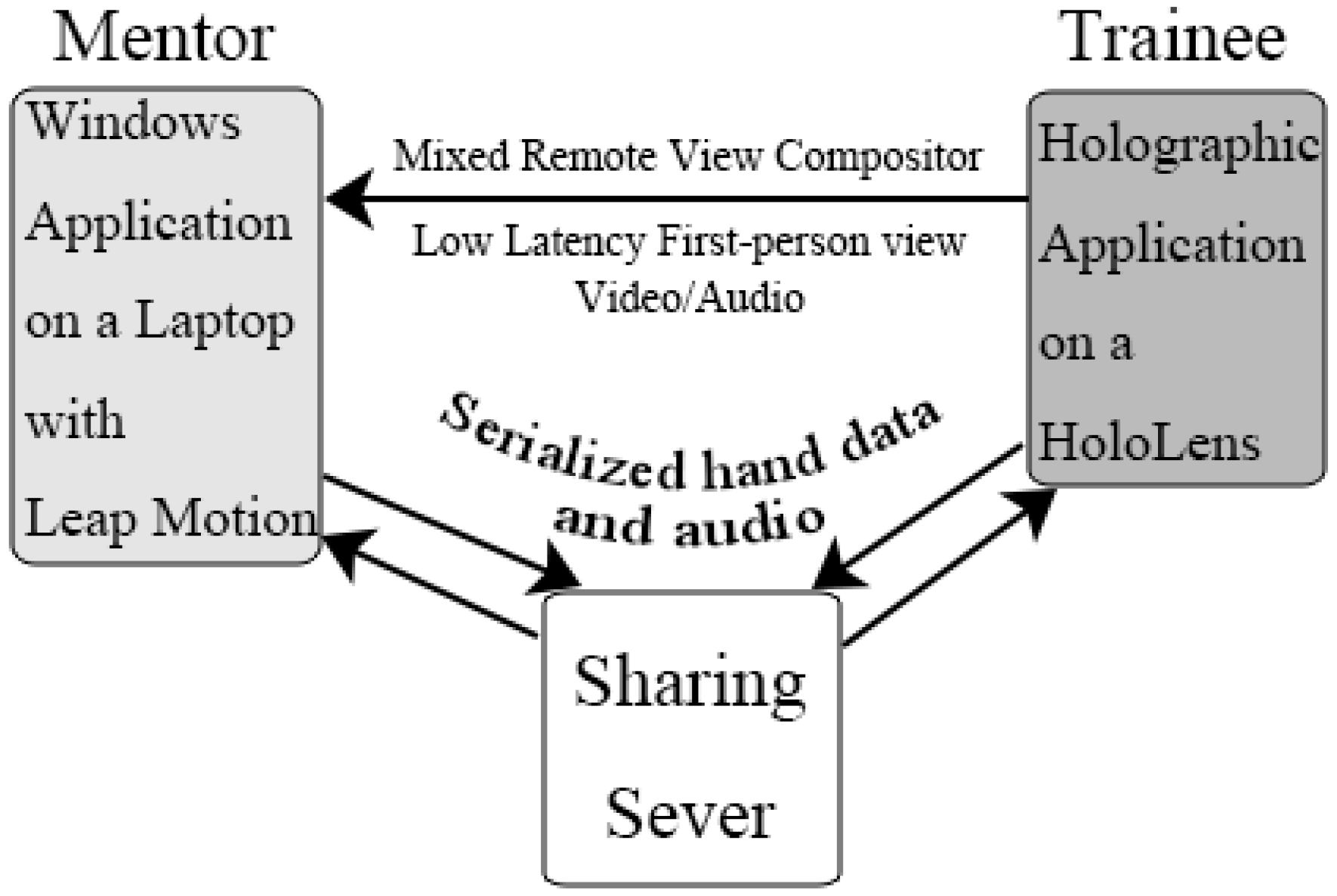

3.2. Final Design

- Latency is an important factor in the quality of the teleconference experience and should be kept to a minimum.

- Verbal communication is critical for mentoring. Video conferencing within AR without two-way voice communication was generally found to be less valuable.

- An immersive VR HMD for the mentor creates more challenges and requires significant technical development before it can enhance telemedicine.

- The simplicity and familiarity of conventional technology for the mentor was an important aspect that should remain in the proposed solution.

- Remote pointing and display of hand gestures from the mentor to the trainee would be helpful for training purposes.

- Specific to ultrasound teaching, a hologram with a hand model provided additional context for remote training.

- The Leap Motion sensor was used to capture the hand and finger motion of the mentor in order to project into the AR space of the trainee.

- Three static holograms depicting specific hand positions holding the ultrasound probe were generated and controlled by the mentor through the Leap Motion.

- MRC (video, hologram and audio) was streamed to the mentor, while the mentor’s voice and hologram representations of the mentor’s hand(s) were sent to the trainee to support learning.

- Hand model data captured by Leap Motion was serialized and bundled together with the mentor’s audio at a controlled rate to minimize latency while maintaining adequate communications.
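The last point, bundling serialized hand data with audio at a controlled rate, can be sketched as follows. This is an illustration under stated assumptions, not the project's actual code: the packet header layout, the class name `RateLimitedBundler`, and the policy of keeping only the newest hand frame while never dropping audio are all hypothetical choices made for the example.

```python
import struct

# Hypothetical packet header (an assumption for illustration):
# send time in milliseconds, hand-payload length, audio-payload length.
HEADER = struct.Struct("<III")


class RateLimitedBundler:
    """Bundle the newest hand frame with pending audio at a fixed send interval."""

    def __init__(self, interval_ms):
        self.interval_ms = interval_ms
        self._last_send_ms = None
        self._hand = b""           # only the newest hand frame is kept
        self._audio = bytearray()  # audio is accumulated, never dropped

    def push_hand(self, joints):
        """Serialize a list of (x, y, z) joint positions as 32-bit floats."""
        flat = [c for joint in joints for c in joint]
        self._hand = struct.pack("<%df" % len(flat), *flat)

    def push_audio(self, pcm_bytes):
        """Queue raw audio bytes for the next packet."""
        self._audio.extend(pcm_bytes)

    def poll(self, now_ms):
        """Return one packet if the send interval has elapsed, else None."""
        if (self._last_send_ms is not None
                and now_ms - self._last_send_ms < self.interval_ms):
            return None
        packet = (HEADER.pack(now_ms, len(self._hand), len(self._audio))
                  + self._hand + bytes(self._audio))
        self._last_send_ms = now_ms
        self._hand = b""
        self._audio.clear()
        return packet


def unbundle(packet):
    """Split a packet back into (send_ms, joints, audio_bytes)."""
    send_ms, hand_len, audio_len = HEADER.unpack_from(packet, 0)
    start = HEADER.size
    floats = struct.unpack_from("<%df" % (hand_len // 4), packet, start)
    joints = [tuple(floats[i:i + 3]) for i in range(0, len(floats), 3)]
    audio = packet[start + hand_len:start + hand_len + audio_len]
    return send_ms, joints, audio
```

Sending at a fixed interval caps the packet rate regardless of how fast the sensor produces frames. Dropping stale hand frames keeps the displayed hand current, whereas audio is queued because gaps in speech are more disruptive than a skipped hand pose. Whether the original system made the same trade-off is not stated in this section.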

3.2.1. The Mentor’s End

3.2.2. The Trainee’s End

3.2.3. Settings

4. Experimental Validation

4.1. Methods

4.1.1. Participants

4.1.2. Experimental Control

4.1.3. Procedure

4.1.4. Ethics Approvals

4.1.5. System Setup and Performance

4.1.6. Data and Analysis

5. Results

5.1. Trainees

5.2. Mentor

5.3. GRS

5.4. Completion Time, Mental Effort and Task Difficulty Ratings

6. Discussion

6.1. The Performance of the System

6.2. General Insights

6.3. Limitations

6.4. Privacy

6.5. Future Work

7. Conclusions

7.1. Main Contributions of this Research

- We have developed one of the first telemedicine mentoring systems using the Microsoft HoloLens. We then demonstrated its viability and evaluated its suitability for practical use through a user study.

- We have tested various techniques and combined them on the HoloLens, including overlaying holograms, controlling a hologram with a smartphone, implementing a videoconference with minimal latency, and projecting gestures recognized by the Leap Motion inside the HoloLens. Because these techniques are novel, they should be useful to other HoloLens developers.

- We have found that the performance of the AR setup using the HoloLens and Leap Motion did not show a statistically significant difference when compared to a full telemedicine setup, demonstrating the viability of the system.

- As of August 2017, documentation on HoloLens development was still scarce, and lack of support was a primary problem when planning a new HoloLens application. We have provided a large amount of supporting material to follow up on this work, which could be a valuable asset for the research community.

Supplementary Materials

- A video about this study is available online at http://www.wsycarlos.com/teleholo_video.html.

- To provide an overview of the lessons learned in this research, the advantages and disadvantages of the prototypes we tried on the way to our proposed solution are described in Appendix A.

- Specific technical details about the video streaming solutions explored for the HoloLens are discussed in Appendix B.

- Source code of the whole project can be accessed via https://bitbucket.org/wsycarlos/mrcleaphand.

- Mixed Remote View Compositor in HoloLensCompanionKit:

- Sharing Server in HoloToolkit:

- Leap Motion for Unity Development

- AVPro Video plugin developed by RenderHeads

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A. Development of a Telemedicine Prototype Using the HoloLens

Appendix A.1. Gyroscope-Controlled Probe

Appendix A.2. Video Conferencing

Appendix A.3. AR Together with VR

Appendix B. Video Streaming on the HoloLens

Appendix B.1. Web Real-Time Communication (WebRTC)

Appendix B.2. HTTP Live Streaming (HLS)

Appendix B.3. Real-Time Messaging Protocol (RTMP) and Real-Time Streaming Protocol (RTSP)

Appendix B.4. Dynamic Adaptive Streaming over HTTP (DASH)


| | HoloLens Score Out of 5 (Standard Deviation) | Full Telemedicine Set-Up Score Out of 5 (Standard Deviation) | p-Value | t-Value | Degrees of Freedom |
|---|---|---|---|---|---|
| The technology was easy to setup and use | 4.08 (0.90) | 4.67 (0.49) | 0.065 | 1.969 | 17.039 |
| The technology enhanced my ability to generate a suitable ultrasound image | 4.50 (0.67) | 4.58 (0.51) | 0.737 | 0.340 | 22 |
| The technology was overly complex | 1.92 (0.79) | 1.42 (0.51) | 0.081 | −1.832 | 22 |
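The fractional degrees of freedom in some rows (for example 17.039) appear consistent with Welch's unequal-variance t-test, while the rows with 22 degrees of freedom match a pooled-variance test on two groups of 12. The sketch below recomputes the first row from its summary statistics under that reading. The group size of 12 is an inference from the reported 22 degrees of freedom, not a figure stated in this section, and the function name is illustrative.

```python
import math


def welch_from_summary(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Return (t, degrees_of_freedom) for Welch's unequal-variance t-test."""
    var_a = sd_a ** 2 / n_a  # squared standard error of group A's mean
    var_b = sd_b ** 2 / n_b  # squared standard error of group B's mean
    t = (mean_b - mean_a) / math.sqrt(var_a + var_b)
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = (var_a + var_b) ** 2 / (
        var_a ** 2 / (n_a - 1) + var_b ** 2 / (n_b - 1)
    )
    return t, df


# "Easy to setup and use": HoloLens 4.08 (0.90) vs. full set-up 4.67 (0.49).
# n = 12 per group is an assumption inferred from the reported df of 22.
t, df = welch_from_summary(4.08, 0.90, 12, 4.67, 0.49, 12)
print(round(t, 2), round(df, 1))
```

Under these assumptions the recomputed values come out near t = 1.99 with about 17.0 degrees of freedom, close to the reported 1.969 and 17.039. The small gap is plausibly due to the published means and standard deviations being rounded to two decimals.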

| | HoloLens Score Out of 5 (Standard Deviation) | Full Telemedicine Set-Up Score Out of 5 (Standard Deviation) | p-Value | t-Value | Degrees of Freedom |
|---|---|---|---|---|---|
| I was able to telementor the student effectively | 2.92 (1.00) | 3.67 (0.65) | 0.04 | 2.183 | 22 |
| The technology was effective in enhancing remote ultrasound training | 2.50 (1.17) | 3.75 (0.45) | 0.004 | 3.458 | 14.227 |
| I would be able to mentor a trainee in a real-life stressful situation with this technology | 2.25 (1.14) | 3.42 (0.67) | 0.007 | 3.062 | 17.783 |

| | HoloLens Score Out of 5 (Standard Deviation) | Full Telemedicine Set-Up Score Out of 5 (Standard Deviation) | p-Value | t-Value | Degrees of Freedom |
|---|---|---|---|---|---|
| Preparation for Procedure | 2.92 (0.79) | 3.00 (0.60) | 0.775 | 0.290 | 22 |
| Patient Interaction | 3.00 (0.43) | 3.08 (0.51) | 0.670 | 0.432 | 22 |
| Image Optimization | 3.00 (0.60) | 3.08 (0.51) | 0.719 | 0.364 | 22 |
| Probe Technique | 2.83 (0.58) | 2.83 (0.72) | 1.000 | 0.000 | 22 |
| Overall Performance | 2.75 (0.62) | 2.91 (0.67) | 0.534 | 0.632 | 22 |

| | HoloLens Score (Standard Deviation) | Full Telemedicine Set-Up Score (Standard Deviation) | p-Value |
|---|---|---|---|
| Completion Time (Seconds) | 536.00 (142.11) | 382.25 (124.09) | 0.008 |

| | HoloLens Score (Standard Deviation) | Full Telemedicine Set-Up Score (Standard Deviation) | p-Value | t-Value | Degrees of Freedom |
|---|---|---|---|---|---|
| Mental Effort Score out of 9 | 3.83 (1.59) | 4.58 (1.73) | 0.280 | 1.107 | 22 |
| Task Difficulty Score out of 9 | 3.42 (1.31) | 4.25 (1.66) | 0.186 | 1.365 | 22 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, S.; Parsons, M.; Stone-McLean, J.; Rogers, P.; Boyd, S.; Hoover, K.; Meruvia-Pastor, O.; Gong, M.; Smith, A. Augmented Reality as a Telemedicine Platform for Remote Procedural Training. Sensors 2017, 17, 2294. https://doi.org/10.3390/s17102294
