Euro Banknote Recognition System for Blind People

Abstract

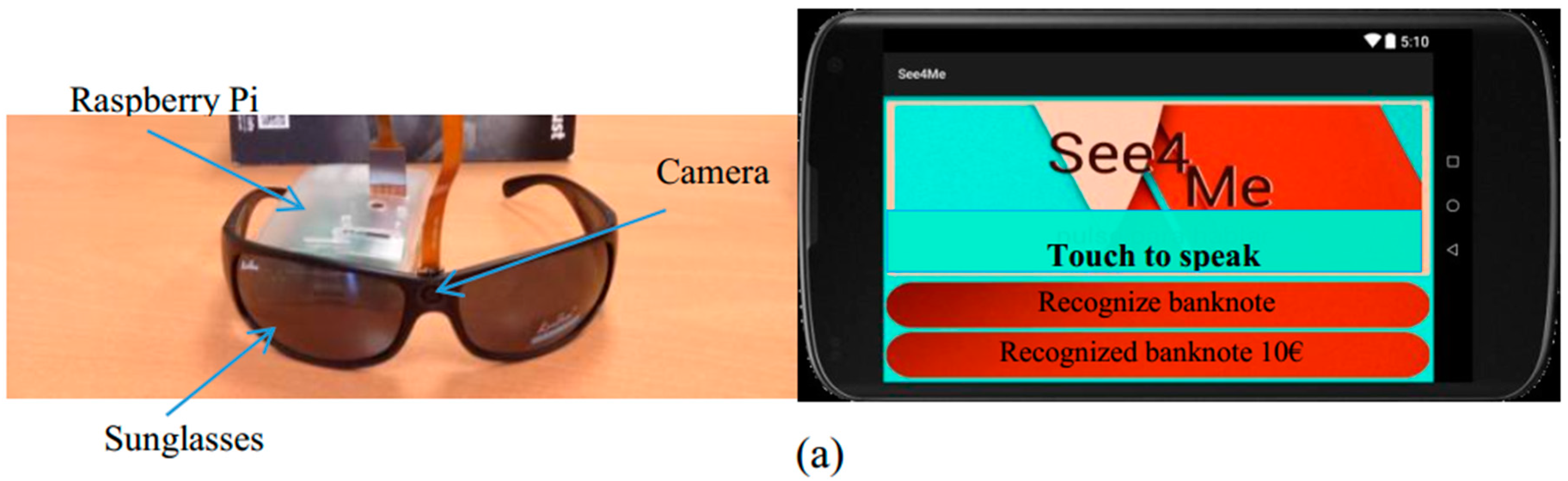

1. Introduction

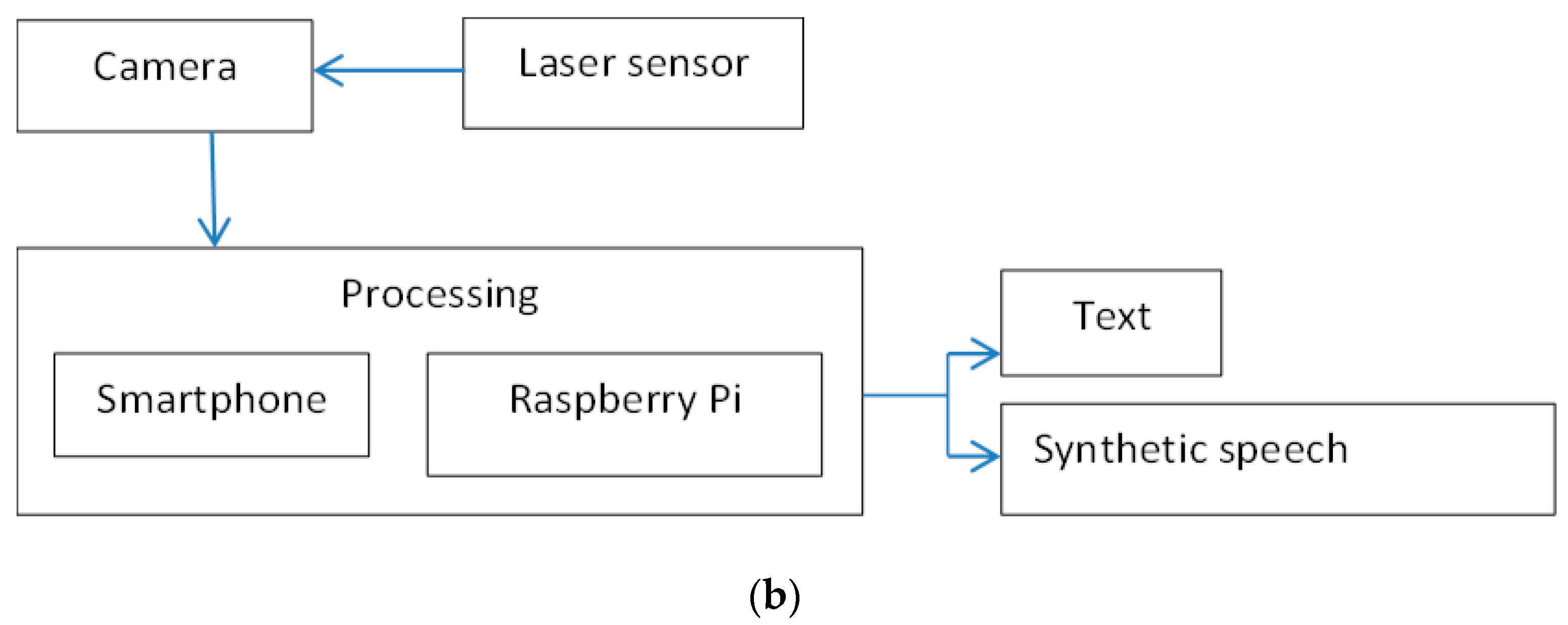

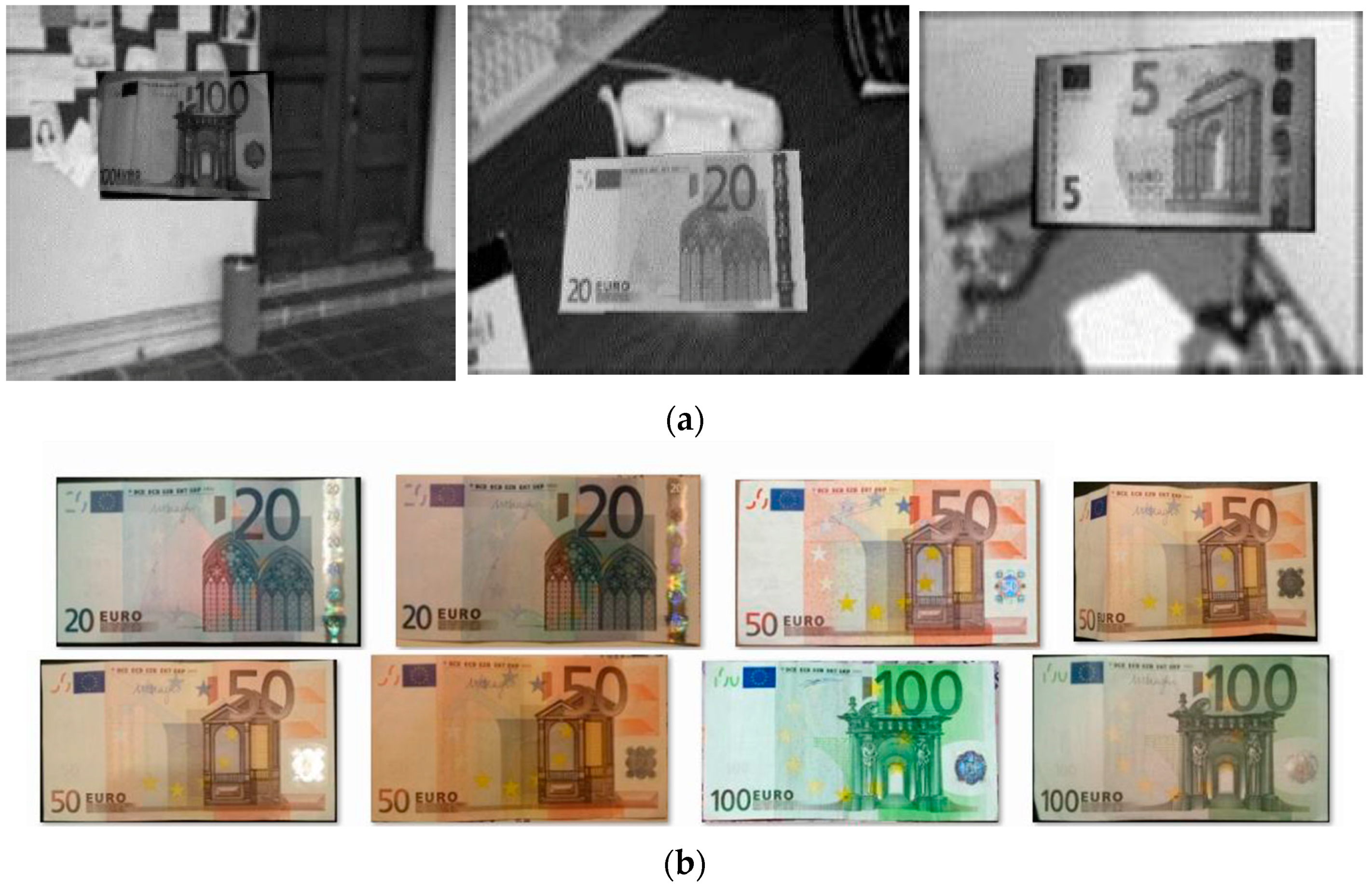

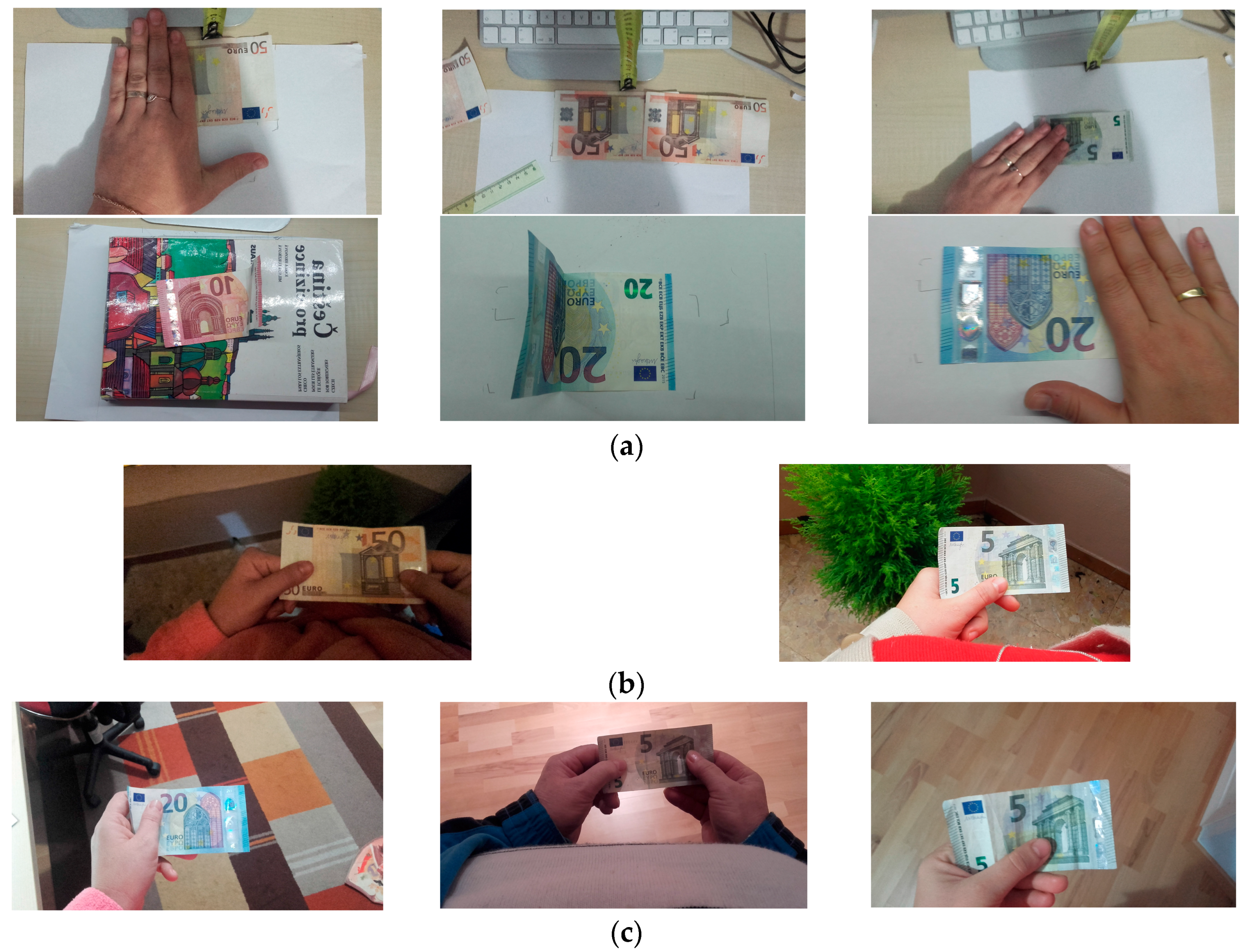

2. Materials

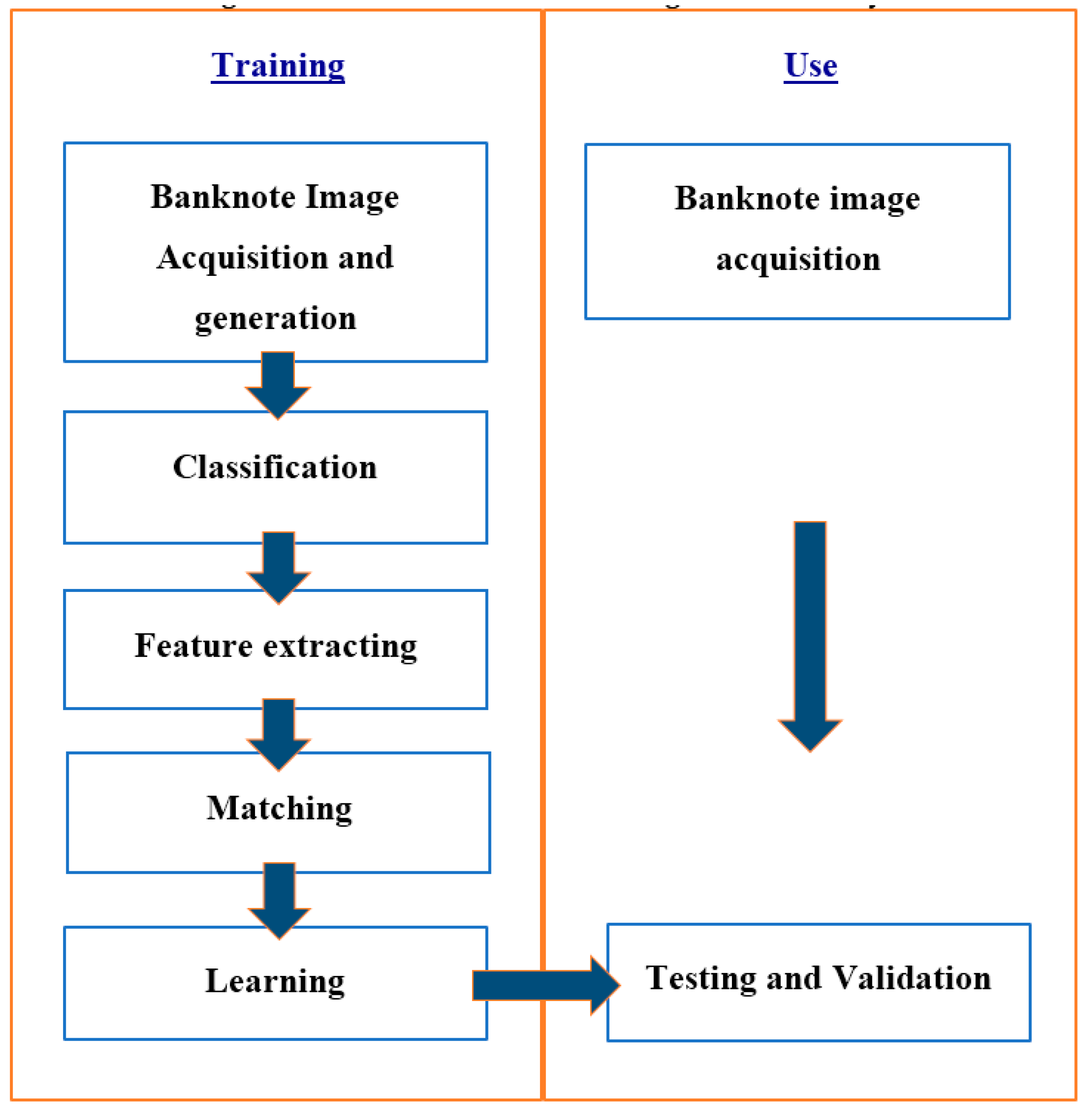

3. Methods

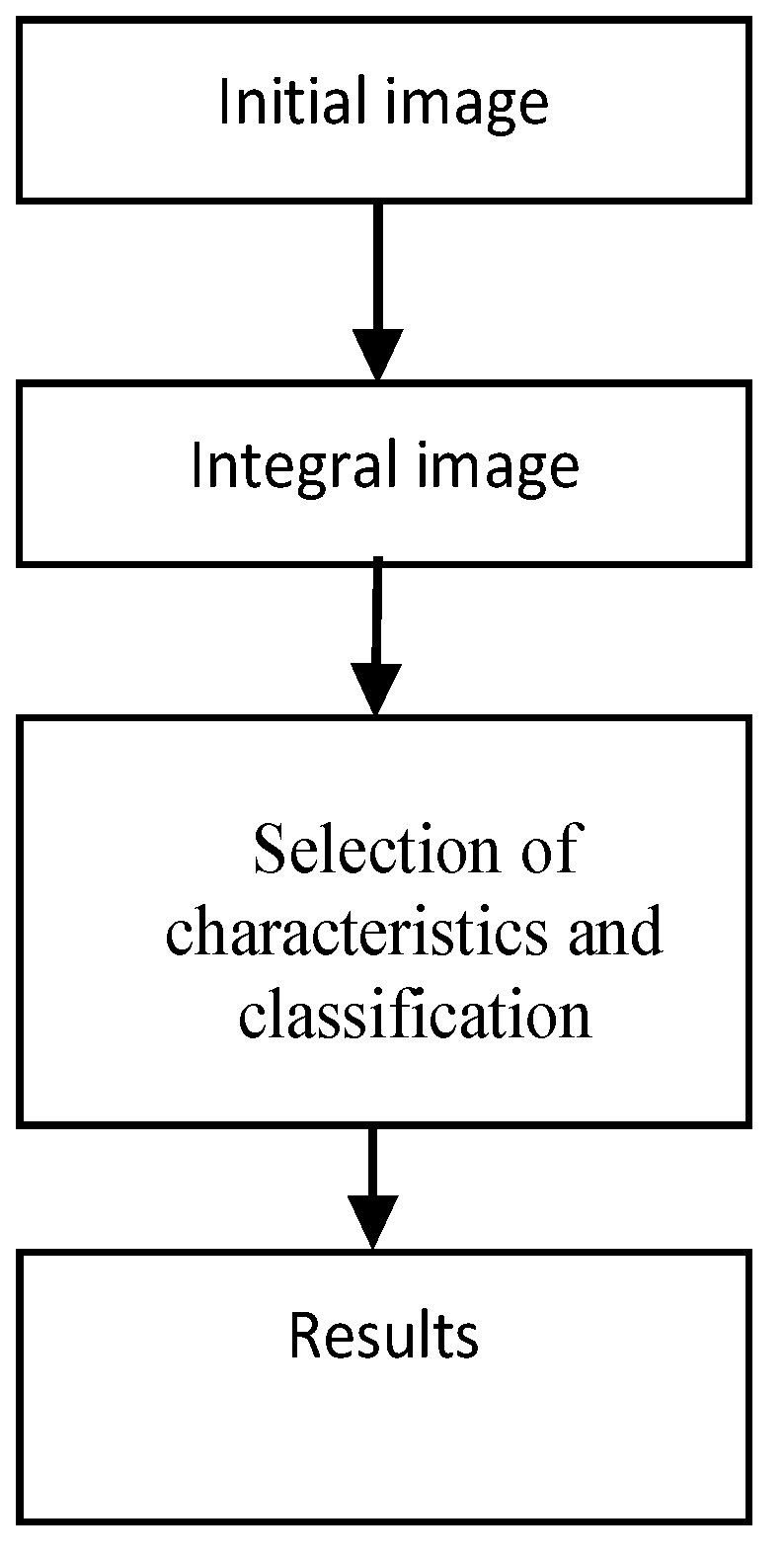

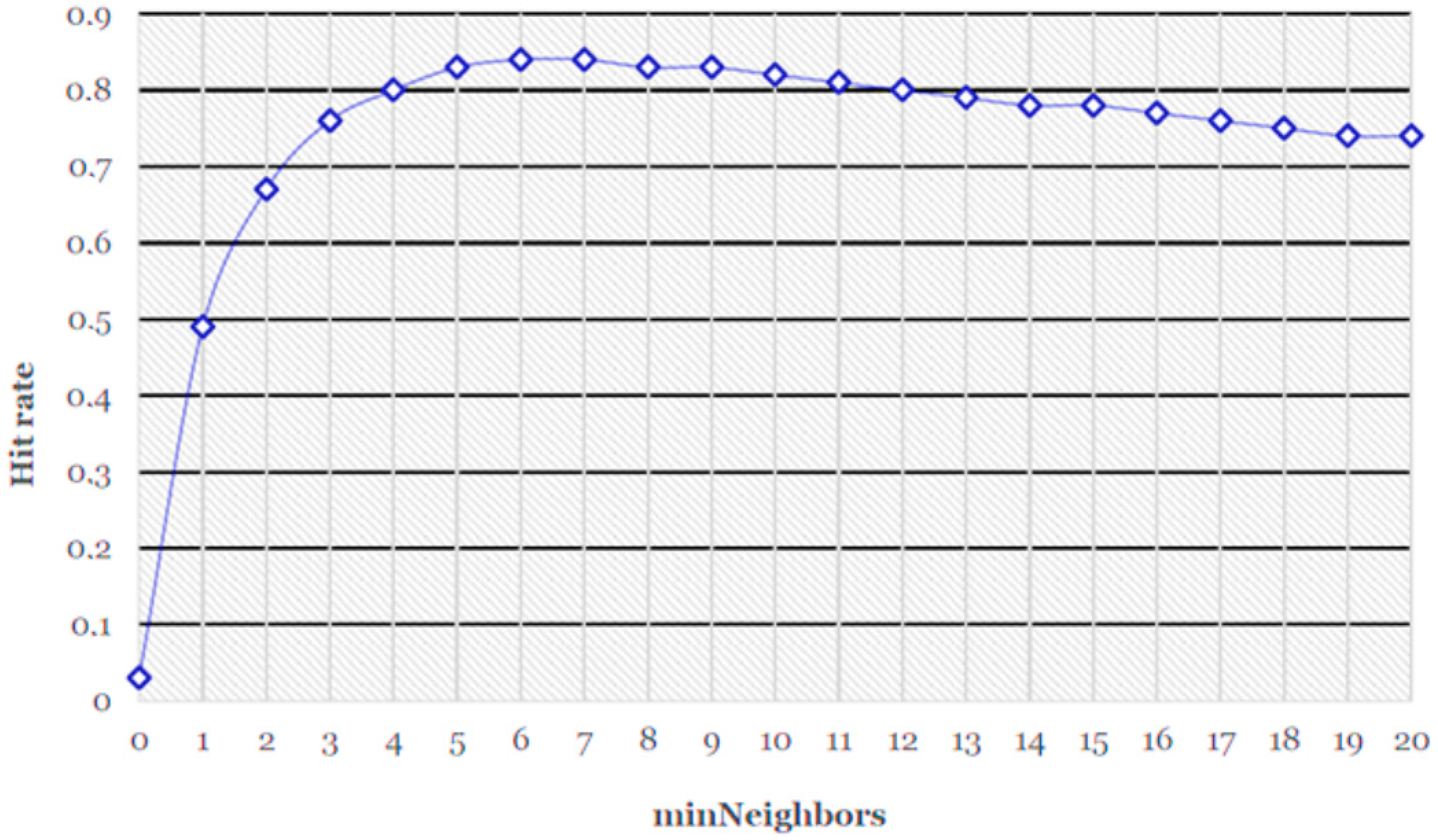

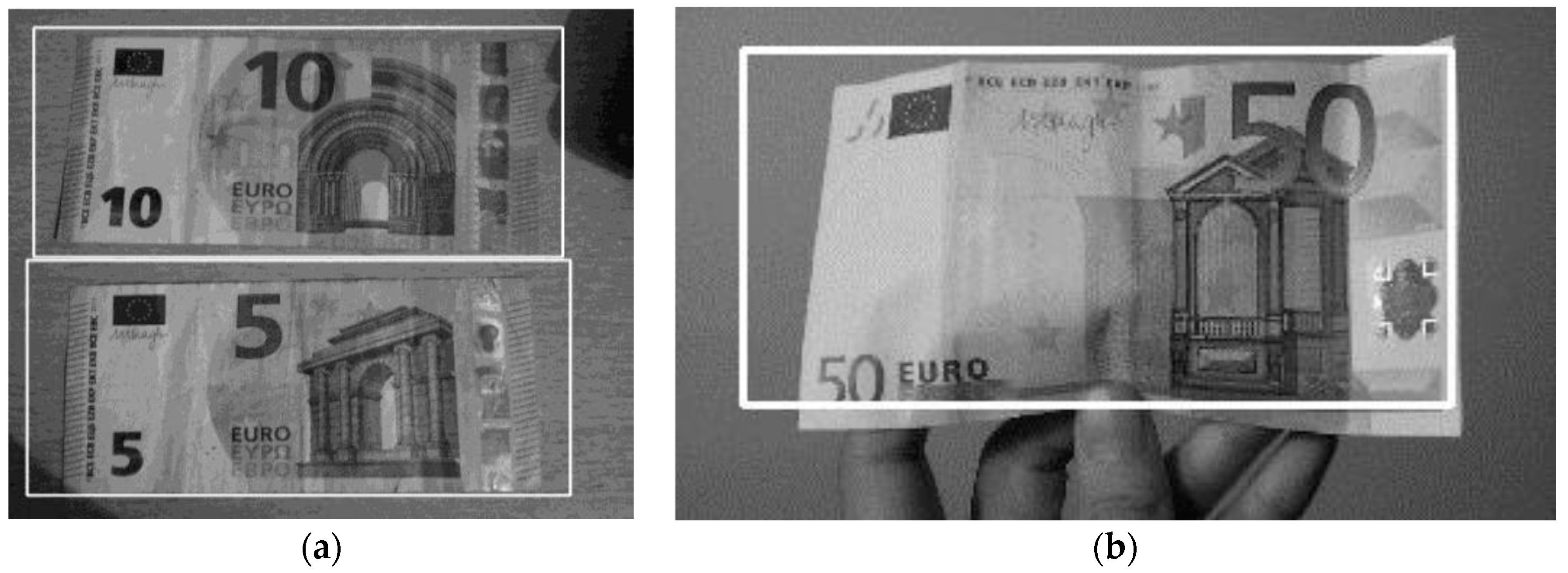

3.1. Euro Banknote Detection

3.1.1. Integral Image
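The integral image (summed-area table) used by the Viola and Jones detector cited in the references stores, at each position, the sum of all pixels above and to the left of it. Once it is built, the sum over any axis-aligned rectangle takes four lookups regardless of the rectangle's size. The following is a minimal pure-Python sketch of that general technique, not the authors' implementation. The function names `integral_image` and `rect_sum` and the list-of-lists grayscale image format are illustrative assumptions.

```python
from typing import List

Image = List[List[int]]  # assumed grayscale image: rows of pixel intensities


def integral_image(img: Image) -> Image:
    """Return a summed-area table with one extra leading row and column of zeros.

    ii[y][x] holds the sum of img[r][c] for all r < y and c < x; the zero
    padding removes boundary checks when querying rectangles.
    """
    h = len(img)
    w = len(img[0]) if h else 0
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0  # cumulative sum along the current image row
        for x in range(w):
            row_sum += img[y][x]
            # rows above (already in ii) plus the running sum of this row
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: Image, x: int, y: int, w: int, h: int) -> int:
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y).

    Four table lookups, independent of the rectangle size.
    """
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


if __name__ == "__main__":
    img = [
        [1, 2, 3],
        [4, 5, 6],
        [7, 8, 9],
    ]
    ii = integral_image(img)
    print(rect_sum(ii, 1, 1, 2, 2))  # 5 + 6 + 8 + 9 = 28
```

The table costs one pass over the image; after that, every rectangle feature evaluated by the detector, at any position and scale, costs a constant number of operations.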

3.1.2. Selection of Characteristics and Classification
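In the Viola and Jones framework, classification relies on Haar-like rectangle features (see also Papageorgiou et al. and Lienhart and Maydt in the references). Each feature becomes a weak classifier through a threshold. AdaBoost selects and weights these weak classifiers, and the resulting boosted stages are chained into a cascade that discards most background windows early. The sketch below illustrates that general scheme under stated assumptions. It shows only one two-rectangle feature type, and the names (`Stump`, `stage_passes`, `cascade_detect`) and the threshold and weight values are hypothetical; a real detector learns them with AdaBoost from positive and negative training images. It is not the authors' trained banknote cascade.

```python
from dataclasses import dataclass
from typing import List

Image = List[List[int]]


def integral_image(img: Image) -> Image:
    """Summed-area table padded with a leading row and column of zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        for x in range(w):
            ii[y + 1][x + 1] = img[y][x] + ii[y][x + 1] + ii[y + 1][x] - ii[y][x]
    return ii


def rect_sum(ii: Image, x: int, y: int, w: int, h: int) -> int:
    """Sum of the w-by-h rectangle with top-left pixel (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: Image, x: int, y: int, w: int, h: int) -> int:
    """Edge-type Haar-like feature: left half minus right half (w even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


@dataclass
class Stump:
    """Hypothetical weak classifier: one feature, threshold, polarity, vote weight."""
    x: int
    y: int
    w: int
    h: int
    threshold: float
    polarity: int  # +1 or -1, selects the direction of the inequality
    alpha: float   # weight AdaBoost would assign to this weak classifier

    def predict(self, ii: Image) -> int:
        f = two_rect_feature(ii, self.x, self.y, self.w, self.h)
        return 1 if self.polarity * f < self.polarity * self.threshold else 0


def stage_passes(ii: Image, stumps: List[Stump]) -> bool:
    """Boosted stage: weighted vote compared with half the total weight.

    0.5 is the plain AdaBoost rule; cascades usually lower a stage's
    threshold so that almost no true positives are rejected.
    """
    score = sum(s.alpha * s.predict(ii) for s in stumps)
    return score >= 0.5 * sum(s.alpha for s in stumps)


def cascade_detect(ii: Image, stages: List[List[Stump]]) -> bool:
    """Accept a window only if every stage accepts it; all() stops at the
    first rejecting stage, which is what makes the cascade cheap."""
    return all(stage_passes(ii, stage) for stage in stages)


if __name__ == "__main__":
    stump = Stump(x=0, y=0, w=4, h=2, threshold=-10.0, polarity=1, alpha=1.0)
    edge = integral_image([[0, 0, 9, 9], [0, 0, 9, 9]])  # dark | bright
    flat = integral_image([[5, 5, 5, 5], [5, 5, 5, 5]])  # uniform patch
    print(two_rect_feature(edge, 0, 0, 4, 2))  # -36
    print(cascade_detect(edge, [[stump]]))     # True
    print(cascade_detect(flat, [[stump]]))     # False
```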

3.2. Banknote Recognition Algorithms
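The references list SURF (Bay et al.), FLANN approximate nearest-neighbour matching (Muja and Lowe) and Lowe's SIFT paper, which introduced the nearest-neighbour distance-ratio test. The sketch below shows one plausible way to turn local descriptor matches into a denomination decision. It assumes descriptors have already been extracted; SURF descriptors are 64-dimensional by default, but toy 2-D vectors keep the example small. Exhaustive search stands in for FLANN. The ratio value 0.7, the `min_matches` cutoff, the function names and the "N/A" fallback label are illustrative assumptions, not the paper's parameters.

```python
import math
from typing import Dict, List, Sequence

Descriptor = Sequence[float]


def two_nearest(query: Descriptor, refs: List[Descriptor]) -> List[float]:
    """Distances from query to its two closest reference descriptors.

    Exhaustive search; FLANN would replace this with an approximate index.
    """
    return sorted(math.dist(query, r) for r in refs)[:2]


def good_matches(query_desc: List[Descriptor], ref_desc: List[Descriptor],
                 ratio: float = 0.7) -> int:
    """Count query descriptors that pass the nearest-neighbour ratio test."""
    if len(ref_desc) < 2:
        return 0  # the ratio test needs a second-nearest neighbour
    count = 0
    for q in query_desc:
        d1, d2 = two_nearest(q, ref_desc)
        # Keep the match only if the best neighbour is clearly closer
        # than the runner-up, i.e. the match is distinctive.
        if d1 < ratio * d2:
            count += 1
    return count


def recognize(query_desc: List[Descriptor],
              references: Dict[str, List[Descriptor]],
              min_matches: int = 10, ratio: float = 0.7) -> str:
    """Return the label with the most good matches, or "N/A" if too few.

    `references` maps a hypothetical denomination label to descriptors
    extracted from a reference image of that banknote (assumed non-empty).
    """
    scores = {label: good_matches(query_desc, refs, ratio)
              for label, refs in references.items()}
    best = max(scores, key=lambda label: scores[label])
    return best if scores[best] >= min_matches else "N/A"


if __name__ == "__main__":
    references = {
        "€5": [(0.0, 0.0), (10.0, 10.0), (20.0, 0.0)],
        "€10": [(100.0, 100.0), (110.0, 90.0), (90.0, 110.0)],
    }
    query = [(0.1, 0.2), (10.2, 9.9), (19.8, 0.3)]
    print(recognize(query, references, min_matches=2))  # €5
    print(recognize(query, references, min_matches=5))  # N/A
```

Exhaustive search is quadratic in the number of descriptors; an approximate index such as FLANN is the usual replacement once reference sets grow to thousands of descriptors per banknote.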

4. Experiments and Results

4.1. Banknote Detection Results

4.2. Banknote Recognition Results

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- WBU (World Blind Union). Available online: http://www.who.int/mediacentre/factsheets/fs282/en/ (accessed on 28 April 2013).

- Koenes, S.G.; Karshmer, J.F. Depression: A comparison study between blind and sighted adolescents. Issues Ment. Health Nurs. 2000, 21, 269–279.

- Augustin, A.; Sahel, J.A.; Bandello, F.; Dardennes, R.; Maurel, F.; Negrini, C.; Hieke, K.; Berdeaux, G. Anxiety and depression prevalence rates in age-related macular degeneration. Investig. Ophthalmol. Vis. Sci. 2007, 48, 1498–1503.

- Burmedi, D.; Becker, S.; Heyl, V.; Wahl, H.-W.; Himmelsbach, I. Emotional and social consequences of age-related low vision: A narrative review. Vis. Impair. Res. 2002, 4, 47–71.

- European Central Bank (Banco Central Europeo). Our Currency. Available online: https://www.ecb.europa.eu/euro/banknotes/html/index.es.html (accessed on 5 April 2016).

- Klatzky, R.L.; Lederman, S.J.; Reed, C. Haptic integration of object properties: Texture, hardness, and planar contour. J. Exp. Psychol. Hum. Percept. Perform. 1989, 5, 385–395.

- Aoba, M.; Kikuchi, T.; Takefuji, Y. Euro banknote recognition system using a three-layered perceptron and RBF networks. IPSJ Trans. Math. Model. Its Appl. 2003, 44, 99–108.

- Vila, A.; Ferrer, N.; Mantecon, J.; Bretón, D.; García, J.F. Development of a fast and non-destructive procedure for characterizing and distinguishing original and fake euro notes. Anal. Chim. Acta 2006, 559, 257–263.

- Lee, J.-K.; Jeon, S.-G.; Kim, I.-H. Distinctive point extraction and recognition algorithm for various kinds of euro banknotes. Int. J. Control Autom. Syst. 2004, 2, 201–206.

- Hasanuzzaman, F.M.; Yang, X.; Tian, Y.-L. Robust and effective component-based banknote recognition for the blind. IEEE Trans. Syst. Man Cybern. C Appl. Rev. 2012, 42, 1021–1030.

- Takeda, F.; Omatu, S. High speed paper currency recognition by neural networks. IEEE Trans. Neural Netw. 1995, 6, 73–77.

- Llive, C.; Roberto, Ch.; Ayala, G.; Andrés, C. Desarrollo e Implementación de un Software de Reconocimiento de Dólares Americanos Dirigido a Personas con Discapacidad Visual Utilizando Teléfonos Móviles Inteligentes con Sistema Operativo Android [Development and Implementation of US Dollar Recognition Software for Visually Impaired People Using Smartphones with the Android Operating System]. Available online: http://repositorio.espe.edu.ec/xmlui/handle/21000/4752 (accessed on 5 April 2016). (In Spanish)

- Young Ho, P.; Seung Yong, K.; Tuyen Danh, P.; Kang Ryoung, P.; Dae Sik, J.; Sungsoo, Y. A high performance recognition system based on a one-dimensional visible light line sensor. Sens. J. 2015, 15, 14093–14115.

- Mumle, D.; Dravid, A. A study of computer vision techniques for currency recognition on mobile phone for the visually impaired. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2014, 4, 160–165.

- García-Lamont, F.; Cervantes, J.; López, A. Recognition of Mexican banknotes via their color and texture features. Expert Syst. Appl. 2012, 39, 9551–9660.

- Sarfraz, M. An intelligent paper currency recognition system. Procedia Comput. Sci. 2015, 65, 538–545.

- Hasanuzzaman, F.M.; Yang, X.; Tian, Y. Robust and effective component-based banknote recognition by SURF features. In Proceedings of the 20th Annual Wireless and Optical Communications Conference, Newark, NJ, USA, 15–16 April 2011; pp. 1–6.

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Papageorgiou, C.P.; Oren, M.; Poggio, T. A general framework for object detection. In Proceedings of the Sixth International Conference on Computer Vision (ICCV), Bombay, India, 4–7 January 1998; pp. 555–562.

- Lienhart, R.; Maydt, J. An extended set of Haar-like features for rapid object detection. In Proceedings of the International Conference on Image Processing (ICIP 2002), Rochester, NY, USA, 22–25 September 2002; Volume 1, pp. I-900–I-903.

- Weber, M. Background. Available online: http://www.vision.caltech.edu/Image_Datasets/background/background.tar (accessed on 1 August 2015).

- Muja, M.; Lowe, D.G. Fast Approximate Nearest Neighbors with Automatic Algorithm Configuration. In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP’09), Lisboa, Portugal, 5–8 February 2009.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

| Classification | Positive | Negative |
|---|---|---|
| Banknote Correctly Classified | Banknote Incorrectly Classified | |
| Positive | 76.05 | 23.95 |
| Negative | 8.25 | 91.75 |

| Images | Positive | Negative |
|---|---|---|
| 200 | 195 | 5 |

| Banknote | €5 | €10 | €20 | €50 | N/A |
|---|---|---|---|---|---|
| €5 | 100 | 0 | 0 | 0 | 0 |
| €10 | 0 | 100 | 0 | 0 | 0 |
| €20 | 0 | 0 | 93.3 | 0 | 6.7 |
| €50 | 0 | 0 | 0 | 100 | 0 |

| Banknote | €5 | €10 | €20 | €50 | N/A |
|---|---|---|---|---|---|
| €5 | 100 | 0 | 0 | 0 | 0 |
| €10 | 0 | 82.35 | 0 | 0 | 17.65 |
| €20 | 0 | 0 | 100 | 0 | 0 |
| €50 | 0 | 0 | 0 | 100 | 0 |

© 2017 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Dunai Dunai, L.; Chillarón Pérez, M.; Peris-Fajarnés, G.; Lengua Lengua, I. Euro Banknote Recognition System for Blind People. Sensors 2017, 17, 184. https://doi.org/10.3390/s17010184
