Classification of Hyperspectral or Trichromatic Measurements of Ocean Color Data into Spectral Classes

Abstract

1. Introduction

- without requiring atmospheric correction for satellite sensors, even though the remote sensing reflectance contributes only a small part of the top-of-atmosphere (TOA) radiance; or

- without requiring multispectral sensors, so that airborne broadband sensors such as consumer cameras can be used.

2. Information about Datasets and Sensors for Synthetic Experiments

3. Proposed Classification Approach

3.1. Radiometric Measurements as Functions of Remote Sensing Reflectance Spectra

3.1.1. Satellite Sensors

3.1.2. Airborne Sensors

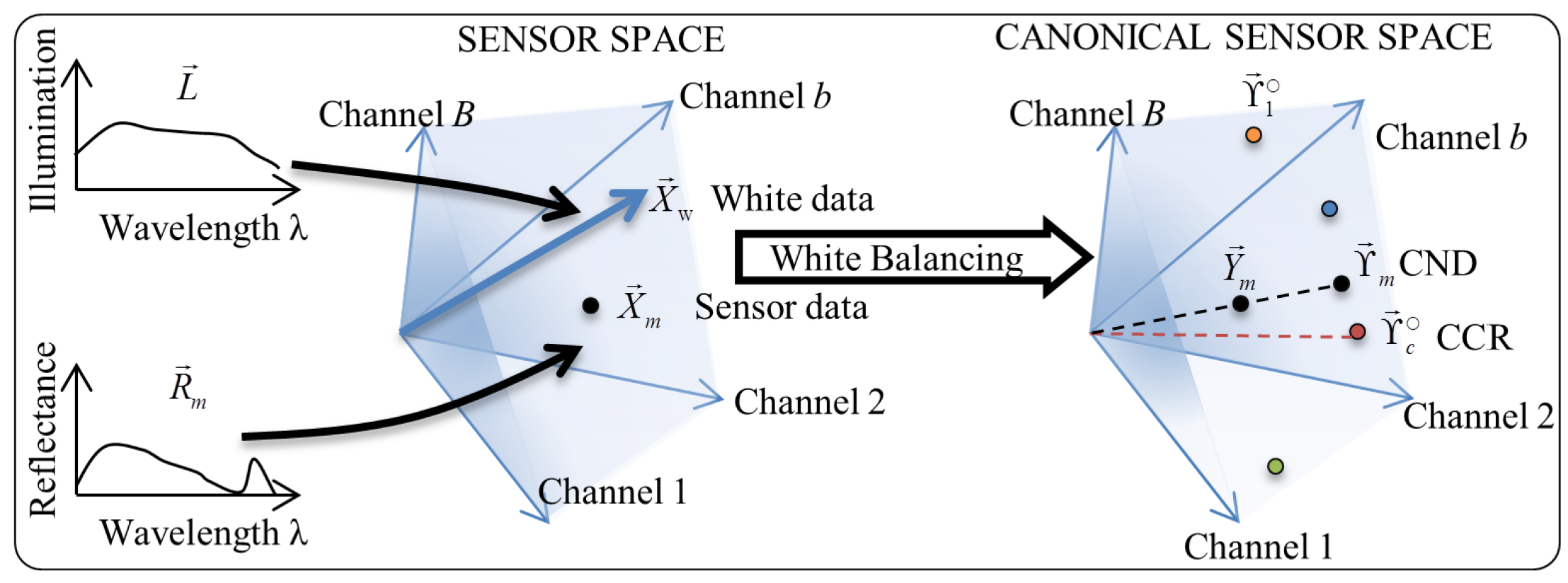

3.2. Classification Approach

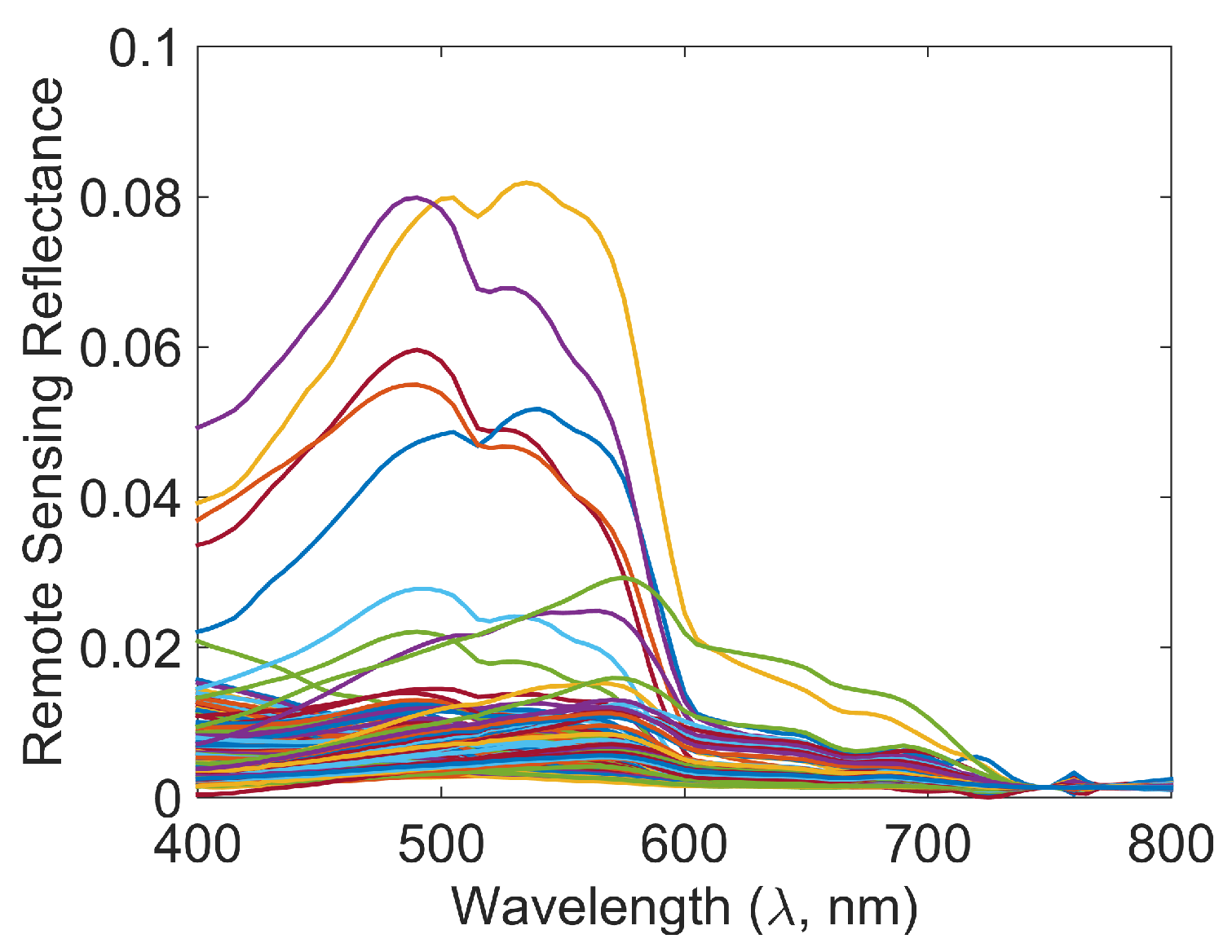

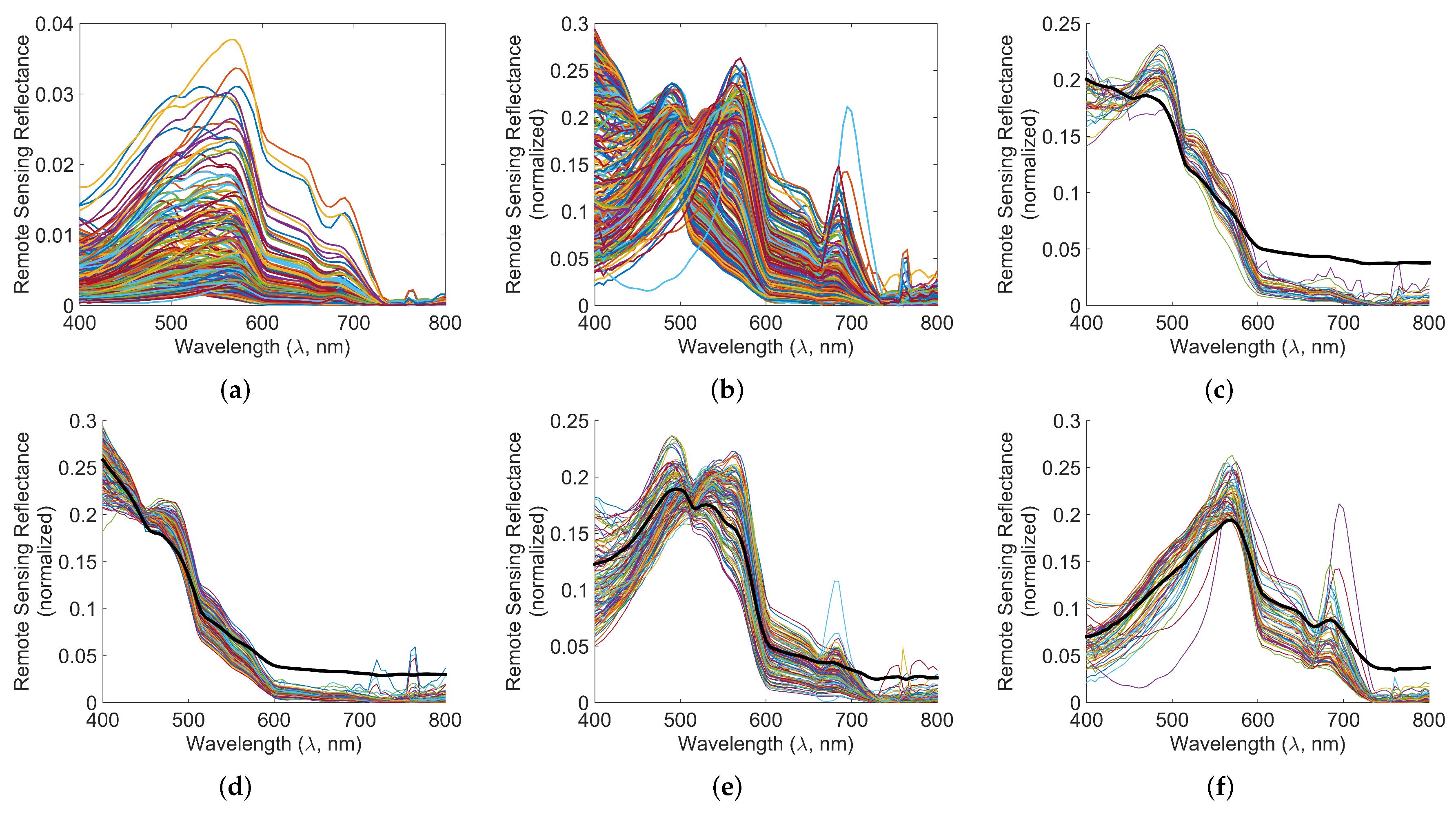

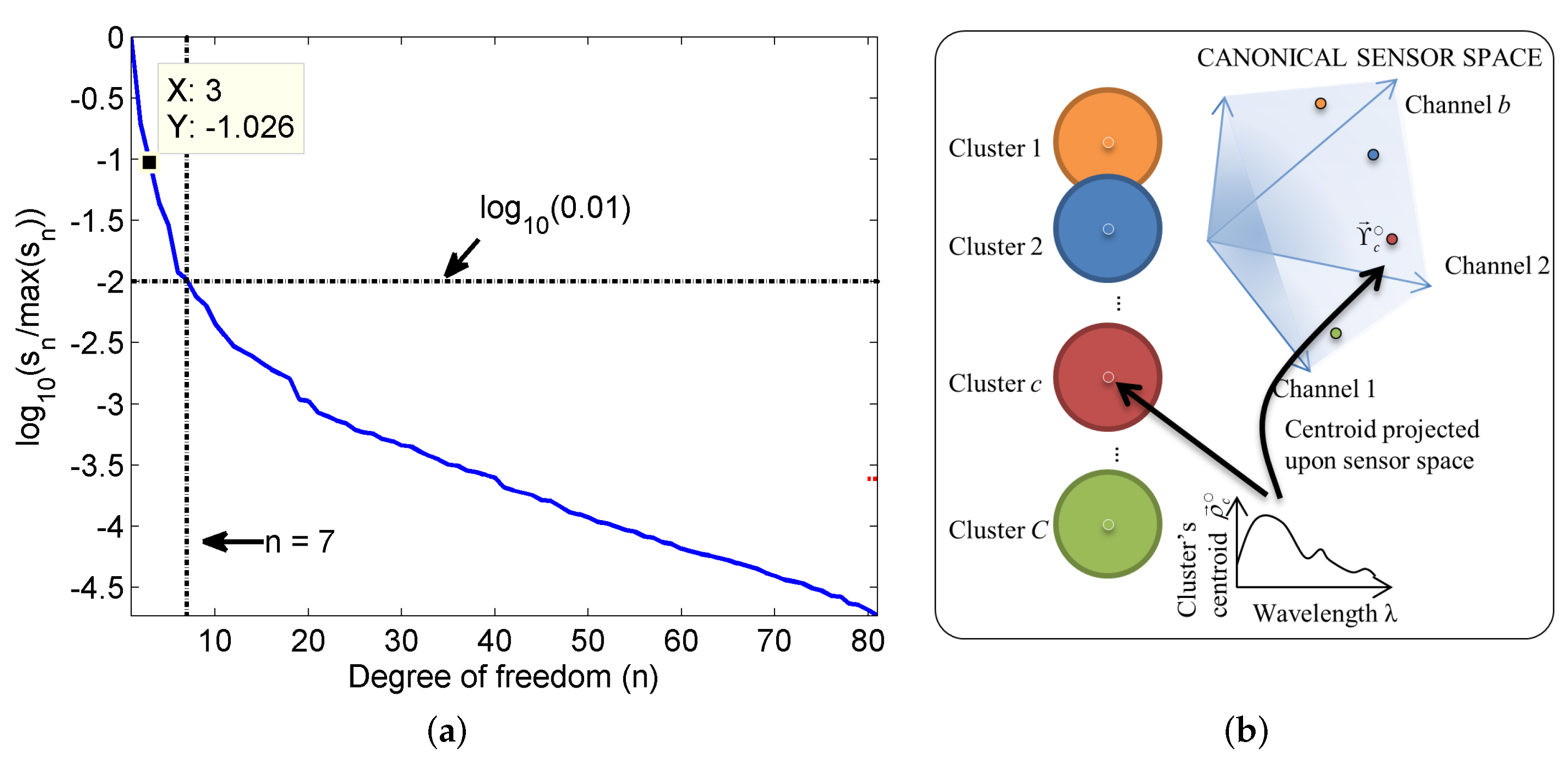

3.3. An Example of Finding Characteristic Spectra for the Lookup Table

4. Results

4.1. Synthetic Experiments

4.1.1. Classification Results for Dataset 1

4.1.2. Classification Results for Dataset 2

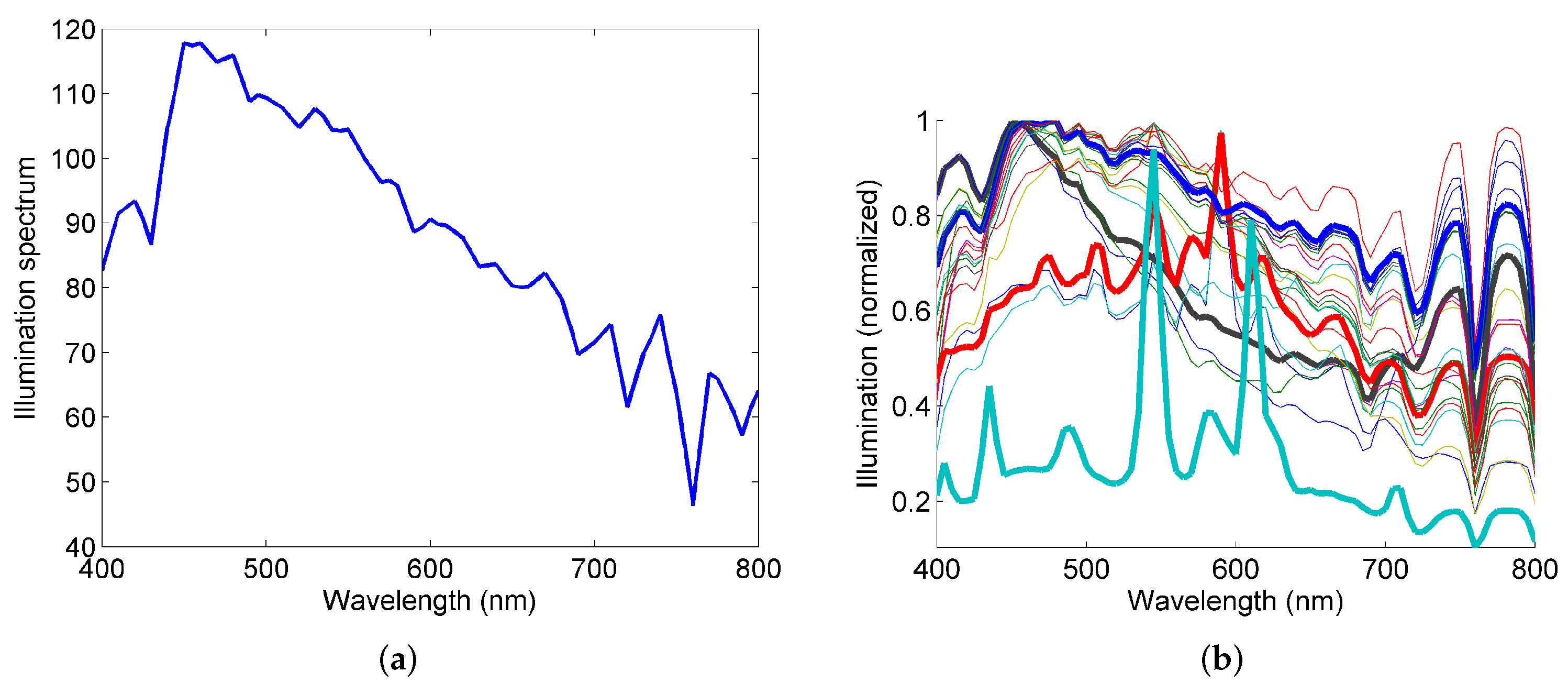

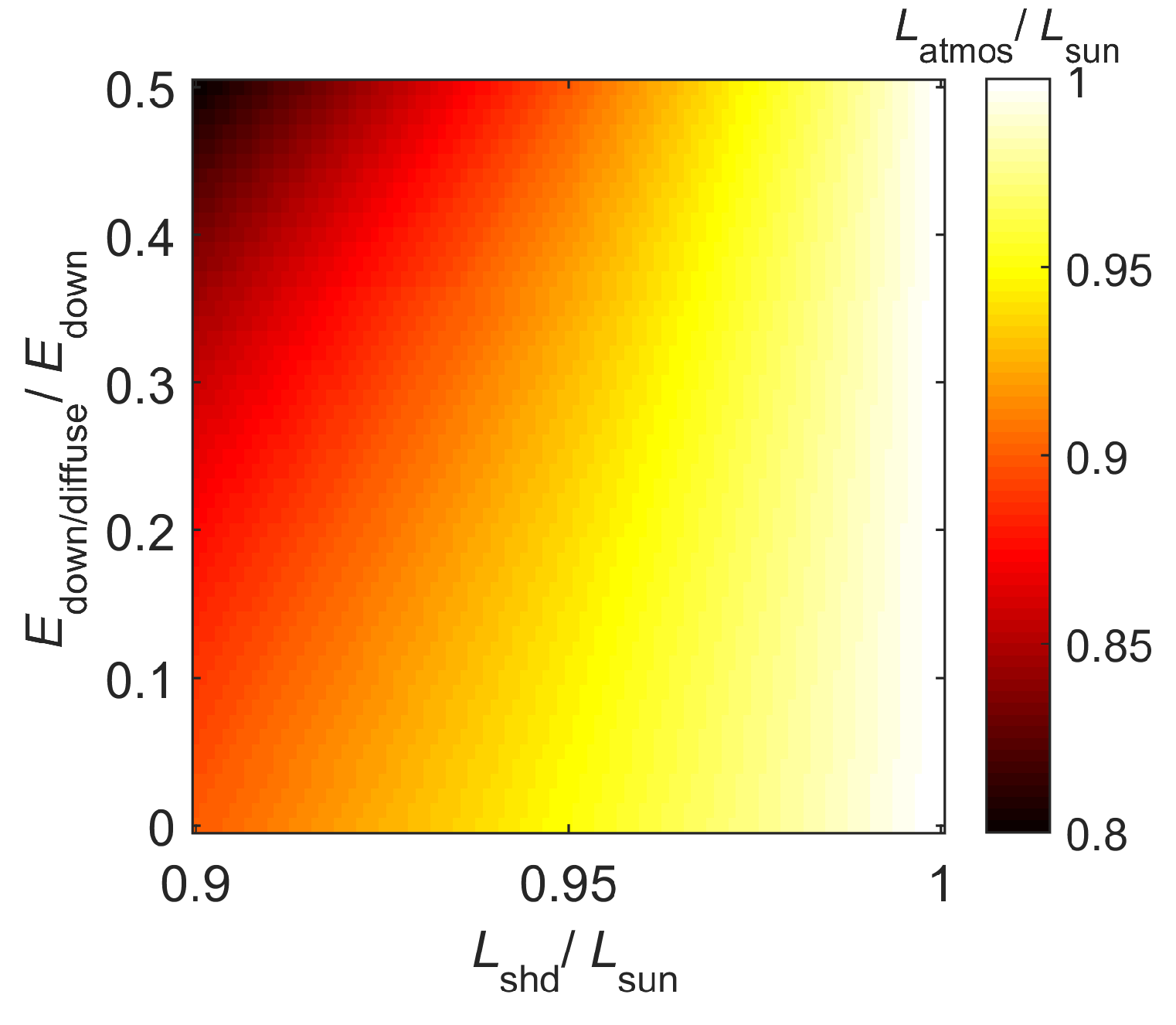

4.1.3. Different Illuminations and Classification Accuracy

4.1.4. Classification Using Consumer Cameras

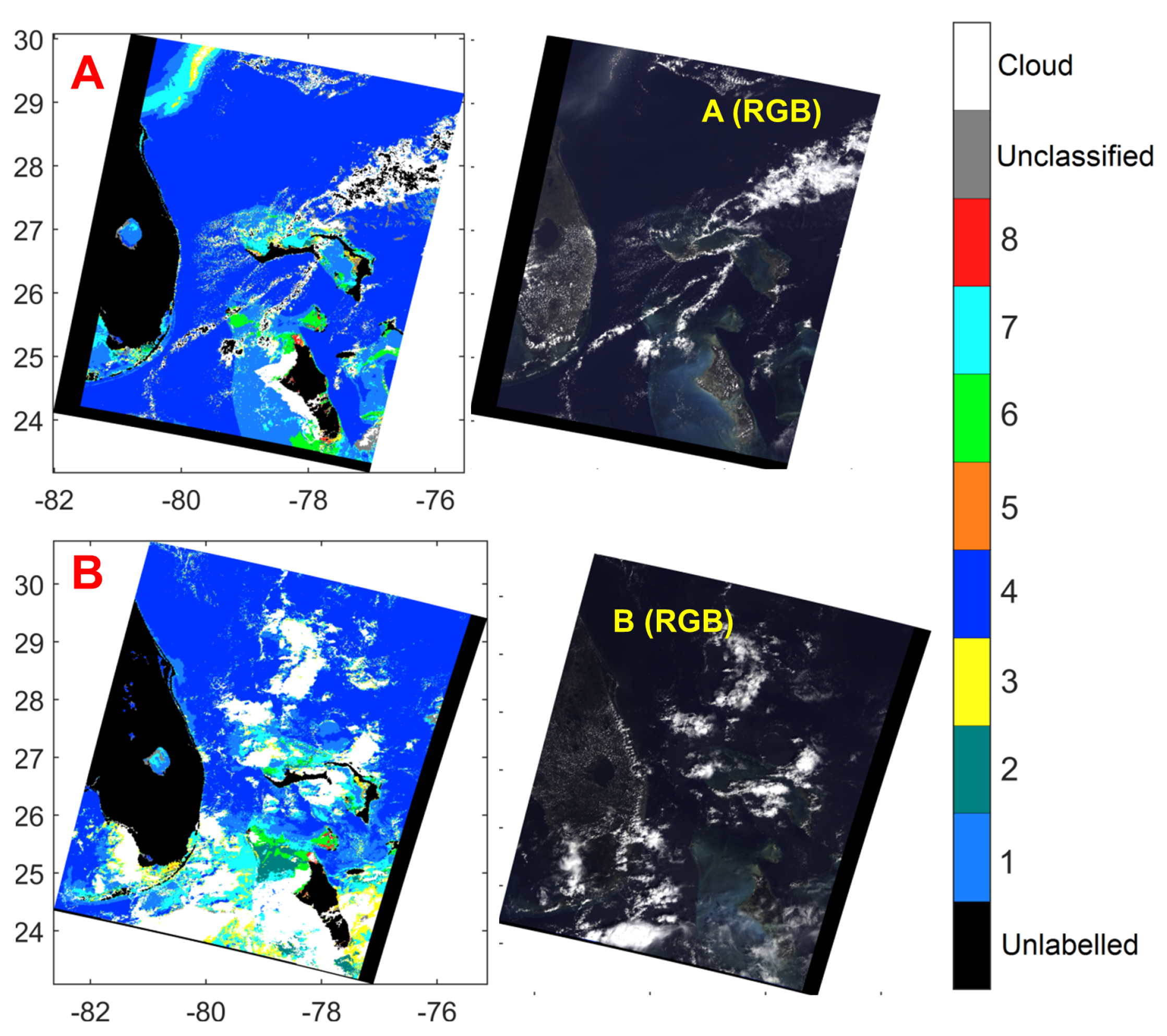

4.2. Classification of Real Satellite Data

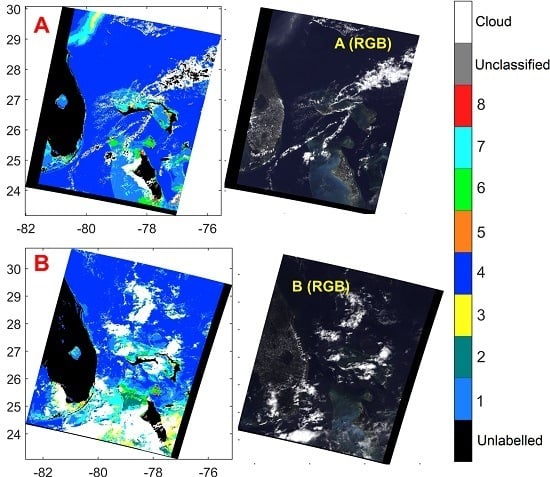

4.2.1. Classification of MERIS Data over Different Scenes

4.2.2. Correlation with IOP Results in the Same Data

4.2.3. Similarity between Estimated Remote Sensing Reflectances Using MERIS Level 2 Data and the Proposed Method

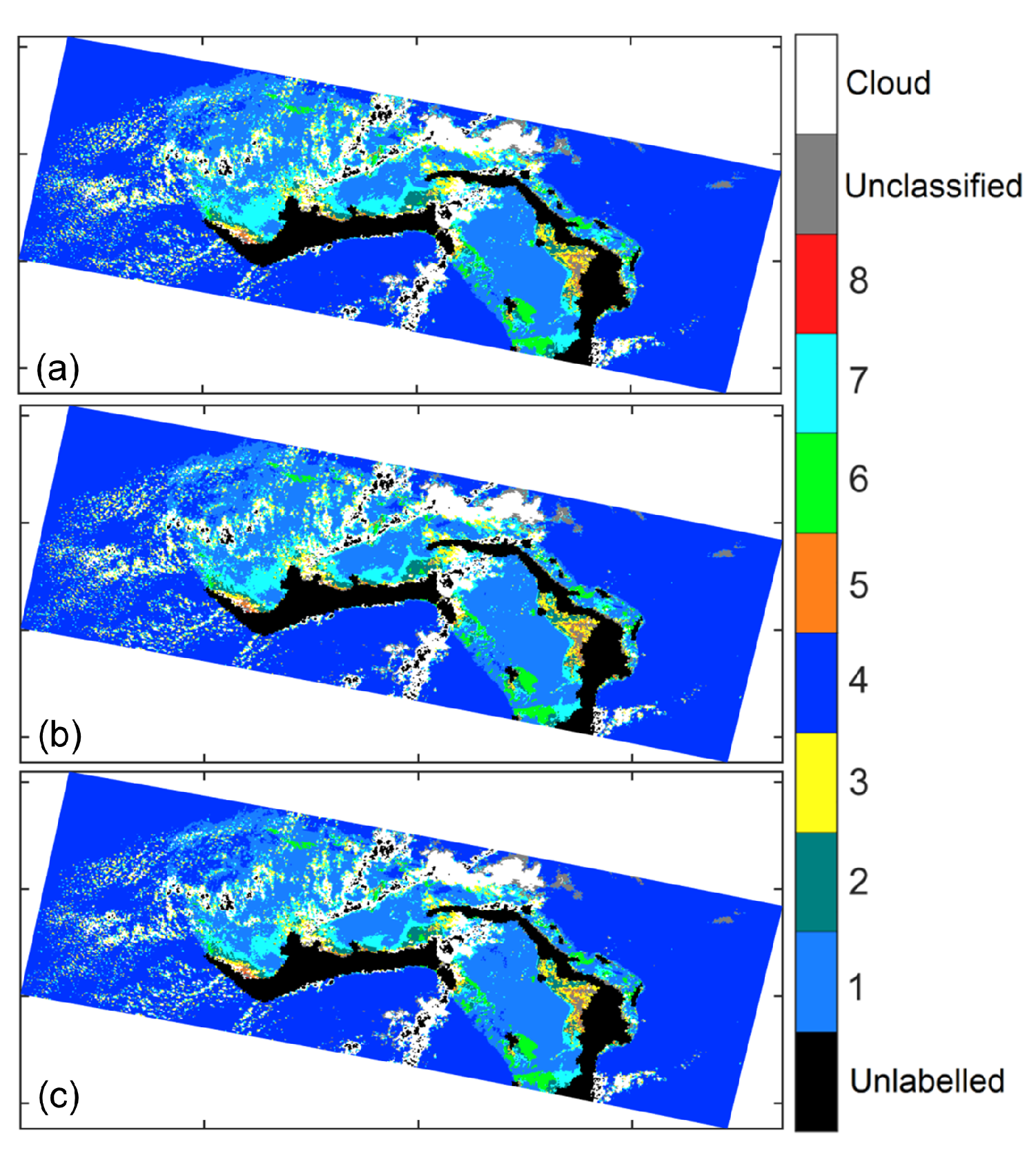

4.2.4. Effect of the Value β on Classification

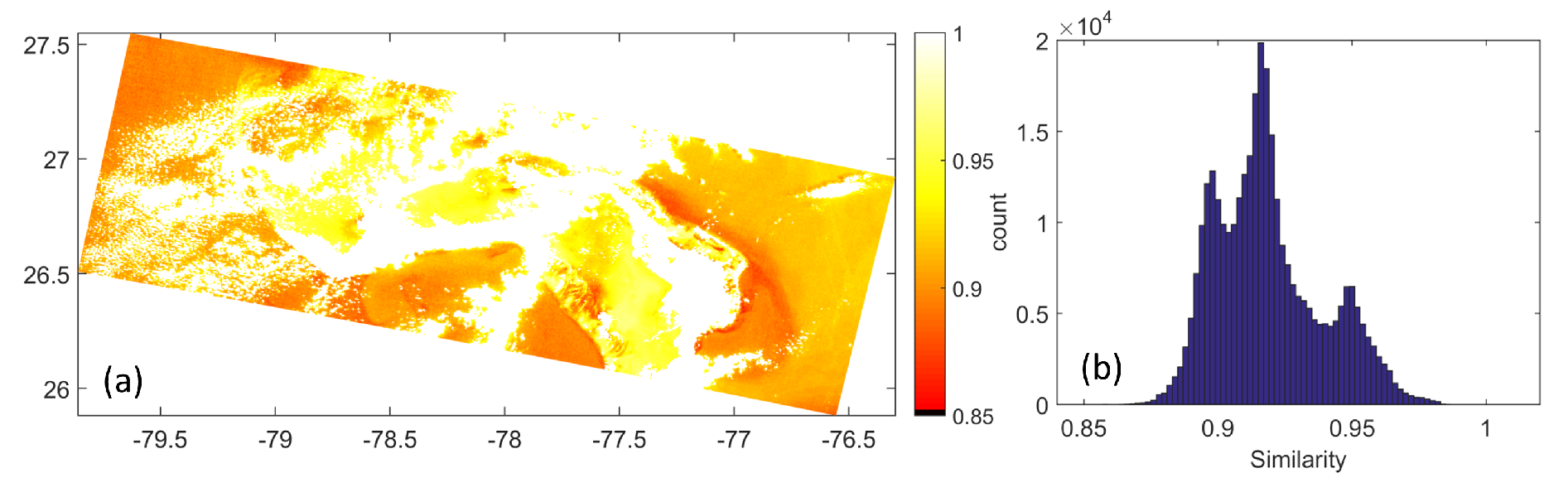

4.2.5. Assumption of Local Uniformity

4.2.6. Consideration of Glint

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Sensor | No. of bands | No. used (B) | Bands used (nm) | Bands unused (nm) |
|---|---|---|---|---|
| MODIS | 9 | 8 | 405–420, 438–448, 483–493, 526–536, 546–556, 662–672, 673–683, 743–753 | 862–877 |
| MERIS | 11 | 11 | 405.2–419.6, 435.0–449.5, 482.3–496.9, 502.2–516.8, 552.1–566.7, 612.0–626.6, 657.0–671.6, 674.5–686.6, 700.7–715.3, 747.0–759.0, 755.8–764.1 | − |
| SeaWiFS | 8 | 7 | 402–422, 433–453, 480–500, 500–520, 545–565, 660–680, 745–785 | 845–885 |
| CZCS | 4 | 4 | 425–460, 500–535, 535–565, 650–685 | − |
| OLCI | 21 | 15 | 407.5–417.5, 437.5–447.5, 485–495, 505–515, 555–565, 615–625, 660–670, 670–677.5, 677.5–685, 703.75–713.75, 750–757.5, 760–762.5, 762.5–766.25, 766.25–768.75, 771.25–786.25 | 392.5–407.5, 855–875, 880–890, 895–905, 930–950, 1000–1040 |
| VIIRS (VisNIR) | 9 | 7 | 402–422, 436–454, 478–498, 545–565, 600–680, 662–682, 739–754 | 846–885 (I2), 846–885 (M7) |
| CIMEL SeaPRISM | 9 | 6 | 407–417, 435–445, 495–505, 526–536, 545–555, 670–680 | 865–875, 931–941, 1015–1025 |
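The bands listed above define, for each sensor, which wavelength ranges contribute to each of its B channels, and the synthetic experiments simulate sensor measurements from hyperspectral spectra. A minimal sketch of that forward step, assuming idealized rectangular (boxcar) channel responses over the listed band edges and a spectrum sampled every 1 nm; real sensors have non-rectangular spectral response functions, and the helpers `boxcar_response_matrix` and `simulate_measurement` are hypothetical names, not the authors' code.

```python
def boxcar_response_matrix(wavelengths, bands):
    """Build a B x N response matrix with an idealized rectangular response per band.

    Hypothetical helper: each row is 1 inside [low, high] nm and 0 elsewhere, then
    scaled to sum to 1, so applying it yields the band-averaged value of a spectrum.
    """
    matrix = []
    for low, high in bands:
        row = [1.0 if low <= w <= high else 0.0 for w in wavelengths]
        total = sum(row)
        if total == 0:
            raise ValueError(f"band {low}-{high} nm contains no wavelength samples")
        matrix.append([v / total for v in row])
    return matrix


def simulate_measurement(response, spectrum):
    """Return one value per channel: the response-weighted sum of the spectrum."""
    if any(len(row) != len(spectrum) for row in response):
        raise ValueError("response rows and spectrum must share the wavelength grid")
    return [sum(s * r for s, r in zip(row, spectrum)) for row in response]


# Usage: the eight MODIS bands used, on a 1 nm grid from 400 to 800 nm.
wavelengths = list(range(400, 801))
modis_used = [(405, 420), (438, 448), (483, 493), (526, 536),
              (546, 556), (662, 672), (673, 683), (743, 753)]
S = boxcar_response_matrix(wavelengths, modis_used)
flat_rrs = [0.01] * len(wavelengths)   # spectrally flat reflectance, sr^-1
d = simulate_measurement(S, flat_rrs)  # every channel ~0.01 for a flat spectrum
```

Normalizing each row makes a spectrally flat input map to the same value in every channel, which is a convenient sanity check; a measured response curve could replace the boxcar rows without changing `simulate_measurement`.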

| Symbol | Meaning |
|---|---|
| n; N | Index of the wavelength sample; total number of wavelength samples |
| m; M | Index of the location of measurement; total number of location samples |
| c; C | Index of the spectral class; total number of spectral classes |
| b; B | Index of the channel in a sensor; total number of channels in a sensor |
| | Upwelling radiance measured at the sensor |
| | Upwelling radiance leaving the water column |
| | Upwelling radiance at the sensor due to atmospheric scattering and reflection from the water surface |
| , , | Upwelling radiance at the sensor in sunny, shadowed, and cloud regions, respectively |
| | Downwelling radiance at the water column |
| | Portion of corresponding to atmospheric scattering |
| ; | Remote sensing reflectance at wavelength λ; |
| | Remote sensing reflectance at the mth location, Equation (1) |
| | Normalized remote sensing reflectance at the mth location, Equation (20) |
| | Normalized remote sensing reflectance representing the cth spectral class |
| | Spectrally flat remote sensing reflectance of cloud |
| ; β | Ratio; constant approximation of |
| α; | Constant; class assigned to the mth data point by our algorithm using Equation (19) |
| | Sensor's spectral response matrix |
| | Spectral sensitivity of the bth channel of the sensor |
| | Sensor's radiometric measurement (data) |
| | Sensor's white data, computed differently for satellite and airborne sensors |
| | Canonical class representative (CCR) of the cth class stored in the lookup table |
| | Canonical data obtained using data and in Equation (14) |
| | Canonical normalized data (CND) computed using Equation (16) |
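Per the symbol table, each data point is assigned a class using Equation (19) together with the canonical class representatives (CCRs) stored in the lookup table. Equations (14), (16) and (19) are not reproduced in this text, so the sketch below substitutes stated assumptions: unit-norm scaling stands in for the normalization that produces the canonical normalized data, the nearest CCR in Euclidean distance stands in for the assignment rule, and an optional distance threshold returns `None` to mimic an unclassified pixel. The functions `normalize` and `classify` are hypothetical illustrations, not the authors' implementation.

```python
import math


def normalize(vector):
    """Scale a B-channel vector to unit Euclidean norm (assumed stand-in for Eq. (16))."""
    norm = math.sqrt(sum(v * v for v in vector))
    if norm == 0:
        raise ValueError("cannot normalize an all-zero vector")
    return [v / norm for v in vector]


def classify(data, representatives, max_distance=None):
    """Return the index of the nearest normalized representative, or None if too far.

    The nearest-neighbour rule and the optional threshold are assumptions, not Eq. (19).
    """
    x = normalize(data)
    best_class, best_dist = None, math.inf
    for c, rep in enumerate(representatives):
        r = normalize(rep)
        dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(x, r)))
        if dist < best_dist:
            best_class, best_dist = c, dist
    if max_distance is not None and best_dist > max_distance:
        return None  # treated as an unclassified point
    return best_class


# Usage: three hypothetical 3-channel representatives (e.g. an RGB camera, B = 3).
ccr = [[1.0, 1.0, 1.0], [3.0, 2.0, 1.0], [1.0, 2.0, 3.0]]
label_a = classify([2.0, 2.1, 1.9], ccr)                    # nearest to class 0
label_b = classify([6.0, 4.0, 2.2], ccr)                    # nearest to class 1
label_c = classify([1.0, 0.0, 0.0], ccr, max_distance=0.1)  # too far from all
```

Normalizing both the data and the representatives makes the match depend on spectral shape rather than overall brightness, which is one simple way to reduce sensitivity to illumination level.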

| Sensor | Measure | Class 1 | Class 2 | Class 3 | Class 4 | Class 5 | Class 6 | Class 7 | Class 8 | Overall |
|---|---|---|---|---|---|---|---|---|---|---|
| MODIS | Precision | 0.8889 | 0.8810 | 1.0000 | 0.9545 | 0.9667 | 0.9706 | 0.9636 | 0.9744 | − |
| | Recall | 0.9231 | 0.9487 | 0.9143 | 1.0000 | 0.9667 | 1.0000 | 0.8983 | 1.0000 | 0.9502 |
| MERIS | Precision | 0.9630 | 0.9286 | 1.0000 | 1.0000 | 0.9655 | 0.9706 | 1.0000 | 0.9737 | − |
| | Recall | 1.0000 | 1.0000 | 0.9429 | 1.0000 | 0.9333 | 1.0000 | 0.9661 | 0.9737 | 0.9751 |
| SeaWiFS | Precision | 0.8214 | 0.8372 | 1.0000 | 0.9545 | 0.9375 | 0.9412 | 0.9434 | 1.0000 | − |
| | Recall | 0.8846 | 0.9231 | 0.8857 | 1.0000 | 1.0000 | 0.9697 | 0.8475 | 1.0000 | 0.9288 |
| CZCS | Precision | 0.8065 | 0.8372 | 1.0000 | 1.0000 | 0.9355 | 0.9394 | 0.9455 | 0.9744 | − |
| | Recall | 0.9615 | 0.9231 | 0.8857 | 0.8571 | 0.9667 | 0.9394 | 0.8814 | 1.0000 | 0.9253 |
| OLCI | Precision | 0.8667 | 0.8864 | 1.0000 | 1.0000 | 0.9333 | 0.9706 | 1.0000 | 0.9730 | − |
| | Recall | 1.0000 | 1.0000 | 0.9143 | 0.9048 | 0.9333 | 1.0000 | 0.9322 | 0.9474 | 0.9502 |
| VIIRS | Precision | 0.9259 | 0.9750 | 1.0000 | 0.9545 | 0.9375 | 1.0000 | 1.0000 | 1.0000 | − |
| | Recall | 0.9615 | 1.0000 | 0.9429 | 1.0000 | 1.0000 | 1.0000 | 0.9661 | 0.9737 | 0.9786 |
| SeaPRISM | Precision | 0.7931 | 0.8205 | 1.0000 | 0.9545 | 0.9355 | 0.8421 | 0.9245 | 0.9737 | − |
| | Recall | 0.8846 | 0.8205 | 0.8857 | 1.0000 | 0.9667 | 0.9697 | 0.8305 | 0.9737 | 0.9039 |
| Canon | Precision | 0.8889 | 0.6667 | 0.6923 | 1.0000 | 0.7895 | 0.9167 | 0.9483 | 1.0000 | − |
| | Recall | 0.9231 | 0.7179 | 0.7714 | 0.8571 | 1.0000 | 0.6667 | 0.9322 | 0.9211 | 0.8505 |
| Nikon | Precision | 0.8929 | 0.7632 | 0.7027 | 1.0000 | 0.7692 | 0.9310 | 0.9655 | 1.0000 | − |
| | Recall | 0.9615 | 0.7436 | 0.7429 | 0.8571 | 1.0000 | 0.8182 | 0.9492 | 0.8947 | 0.8719 |
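The per-class scores in these tables use the standard definitions: precision is the fraction of points assigned to a class that truly belong to it, and recall is the fraction of a class's true members that are assigned to it. A short sketch computing both from paired label lists, together with overall accuracy as one common aggregate; treating that aggregate as equivalent to the Overall column is an assumption. `precision_recall` is a hypothetical helper.

```python
from collections import Counter


def precision_recall(true_labels, predicted_labels):
    """Return per-class precision, per-class recall, and overall accuracy."""
    if len(true_labels) != len(predicted_labels) or not true_labels:
        raise ValueError("need two non-empty label lists of equal length")
    correct = Counter()    # true positives per class
    predicted = Counter()  # points assigned to each class
    actual = Counter()     # points truly in each class
    for t, p in zip(true_labels, predicted_labels):
        actual[t] += 1
        predicted[p] += 1
        if t == p:
            correct[t] += 1
    classes = set(actual) | set(predicted)
    # A class that is never predicted (or never present) gets 0.0 instead of a division error.
    precision = {c: correct[c] / predicted[c] if predicted[c] else 0.0 for c in classes}
    recall = {c: correct[c] / actual[c] if actual[c] else 0.0 for c in classes}
    accuracy = sum(correct.values()) / len(true_labels)
    return precision, recall, accuracy


# Usage with six hypothetical labelled points.
truth = [1, 1, 2, 2, 2, 3]
pred = [1, 2, 2, 2, 3, 3]
precision, recall, accuracy = precision_recall(truth, pred)
```

Here class 1 has precision 1.0 but recall 0.5, since its only prediction is correct while one of its two members is misassigned; the tables above show the same kind of asymmetry.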

| Sensor | Measure | Class 1 | Class 4 | Class 6 | Class 8 | Overall |
|---|---|---|---|---|---|---|
| MODIS | Precision | 0.8000 | 1.0000 | 1.0000 | 0.8438 | − |
| | Recall | 1.0000 | 0.9507 | 0.9040 | 1.0000 | 0.9468 |
| MERIS | Precision | 1.0000 | 1.0000 | 0.8986 | 0.9773 | − |
| | Recall | 0.9167 | 1.0000 | 0.9920 | 0.7963 | 0.9580 |
| SeaWiFS | Precision | 0.6122 | 1.0000 | 0.9904 | 0.7297 | − |
| | Recall | 0.8333 | 0.8662 | 0.8240 | 1.0000 | 0.8683 |
| CZCS | Precision | 0.9286 | 0.9929 | 1.0000 | 0.7941 | − |
| | Recall | 0.7222 | 0.9859 | 0.8640 | 1.0000 | 0.9188 |
| OLCI | Precision | 1.0000 | 0.9861 | 0.8052 | 0.9737 | − |
| | Recall | 0.5833 | 1.0000 | 0.9920 | 0.6852 | 0.9468 |
| VIIRS | Precision | 0.8182 | 1.0000 | 1.0000 | 0.9000 | − |
| | Recall | 1.0000 | 0.9648 | 0.9280 | 1.0000 | 0.9608 |
| SeaPRISM | Precision | 0.5294 | 1.0000 | 1.0000 | 0.6341 | − |
| | Recall | 0.5000 | 0.8873 | 0.7200 | 0.9630 | 0.8011 |
| Canon 1Ds Mark III | Precision | 0.8065 | 0.9342 | 1.0000 | 1.0000 | − |
| | Recall | 0.6944 | 1.0000 | 0.5520 | 0.6852 | 0.7647 |
| Nikon D40 | Precision | 0.7576 | 0.9281 | 1.0000 | 1.0000 | − |
| | Recall | 0.6944 | 1.0000 | 0.6000 | 0.6852 | 0.7815 |

| Dataset | MODIS | MERIS | SeaWiFS | CZCS | OLCI | VIIRS | SeaPRISM | Canon | Nikon |
|---|---|---|---|---|---|---|---|---|---|
| Dataset 1 | 0.9502 | 0.9749 | 0.9307 | 0.9267 | 0.9537 | 0.9751 | 0.9004 | 0.8424 | 0.8596 |
| Dataset 2 | 0.9447 | 0.9581 | 0.8681 | 0.9175 | 0.9107 | 0.9580 | 0.8011 | 0.7622 | 0.7692 |

| β | 0.80 | 0.85 | 0.9 |
|---|---|---|---|
| 0.75 | 2.88 | 5.97 | 9.30 |
| 0.80 | 0 | 3.10 | 6.45 |
| 0.85 | 3.10 | 0 | 3.37 |

| | , | , | , |
|---|---|---|---|
| Including unclassified pixels | 15.08 | 14.72 | 4.52 |
| Excluding unclassified pixels | 13.06 | 12.87 | 4.06 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Prasad, D.K.; Agarwal, K. Classification of Hyperspectral or Trichromatic Measurements of Ocean Color Data into Spectral Classes. Sensors 2016, 16, 413. https://doi.org/10.3390/s16030413