Expansion of Smartwatch Touch Interface from Touchscreen to Around Device Interface Using Infrared Line Image Sensors

Abstract

1. Introduction

2. Background

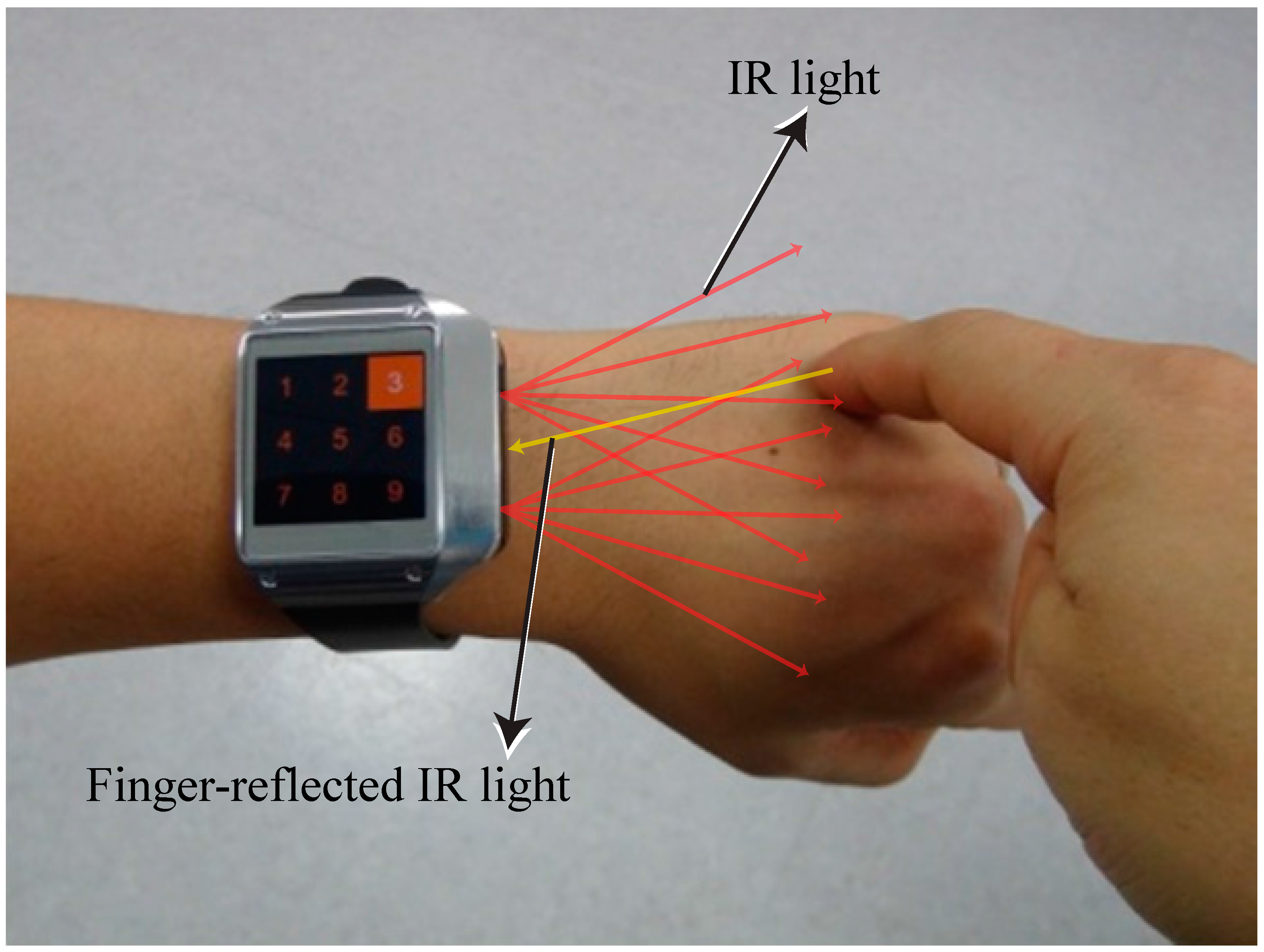

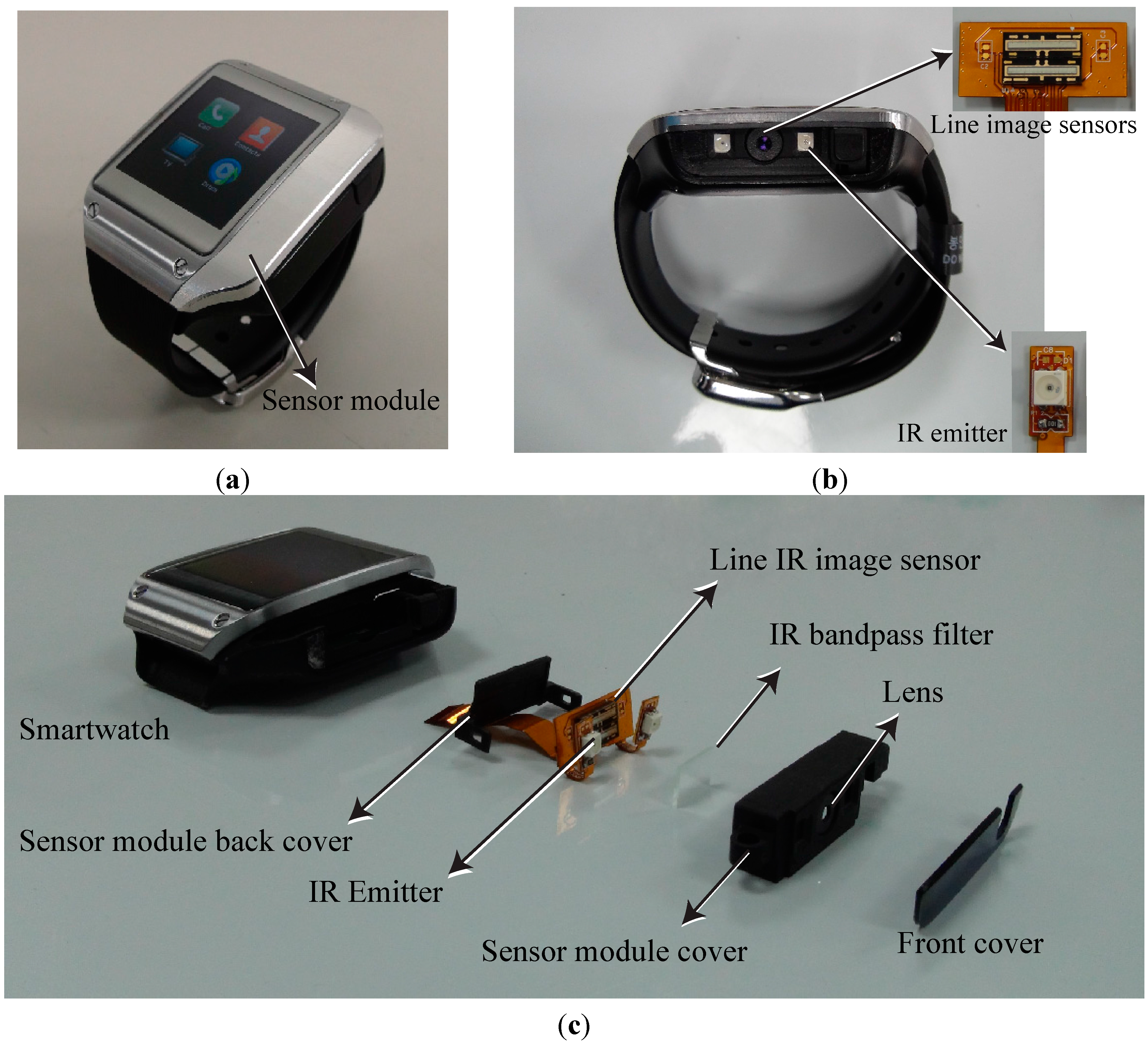

3. Around Device Interface

3.1. Around Device Interface System

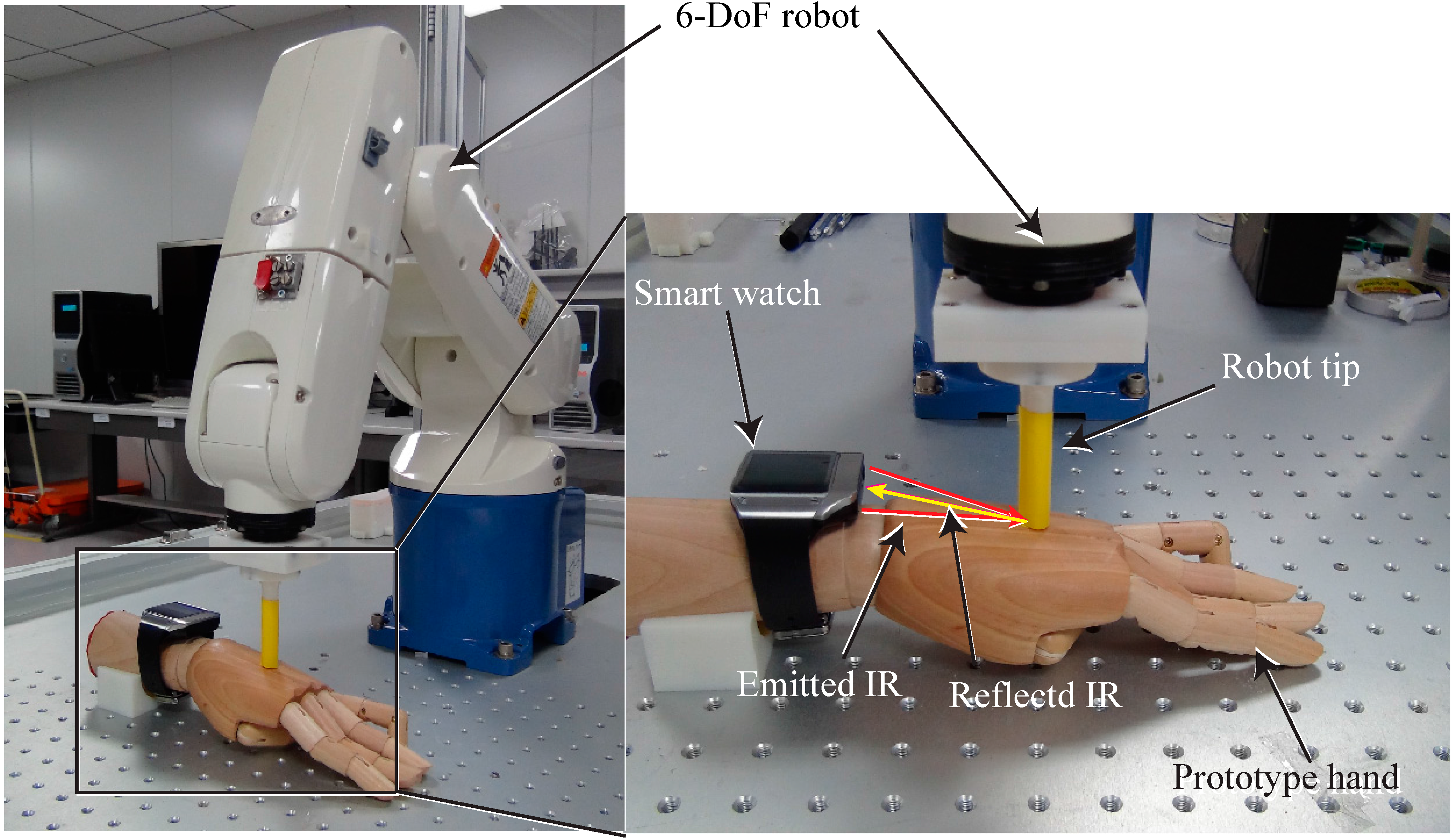

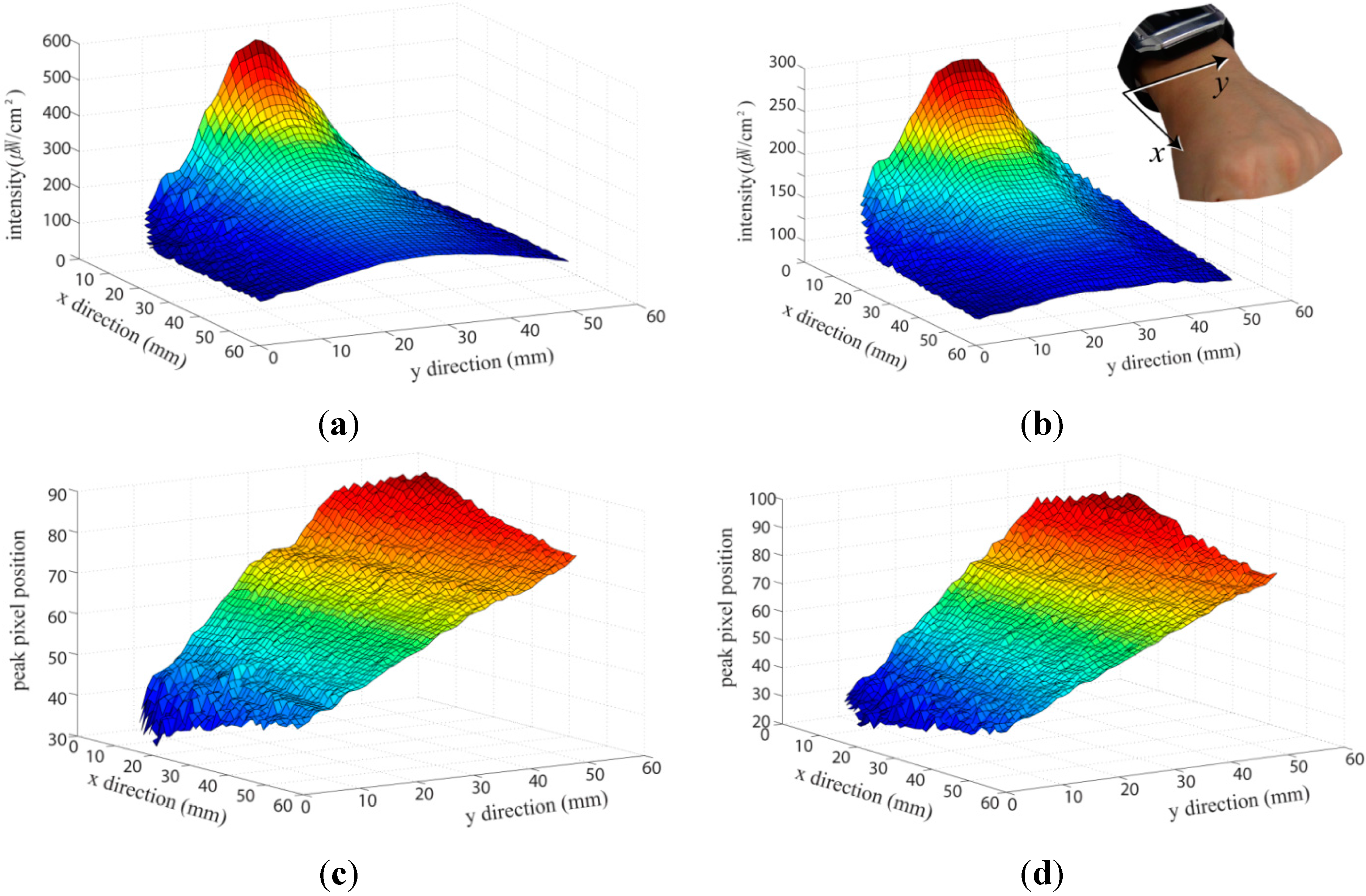

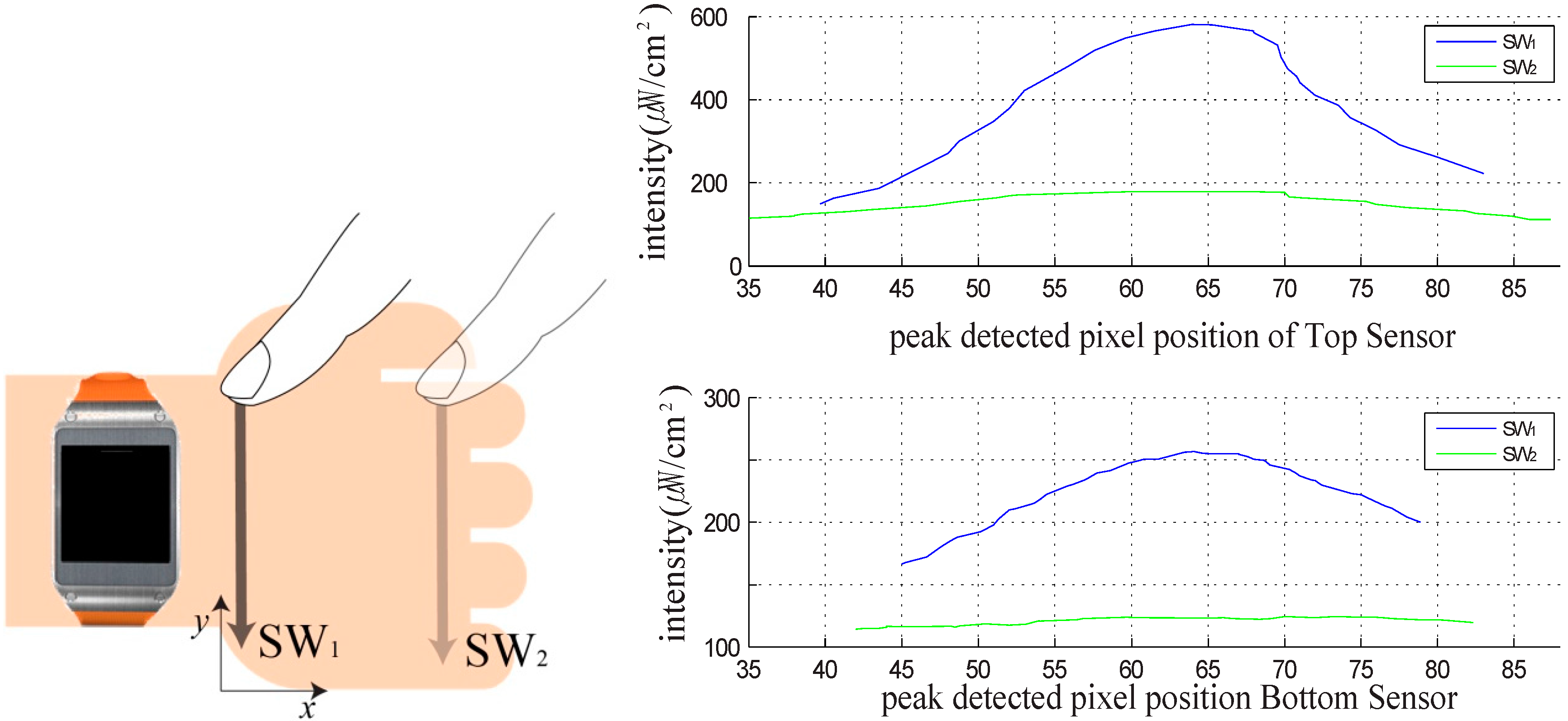

3.2. Finger Position Measurement and Performance Evaluation

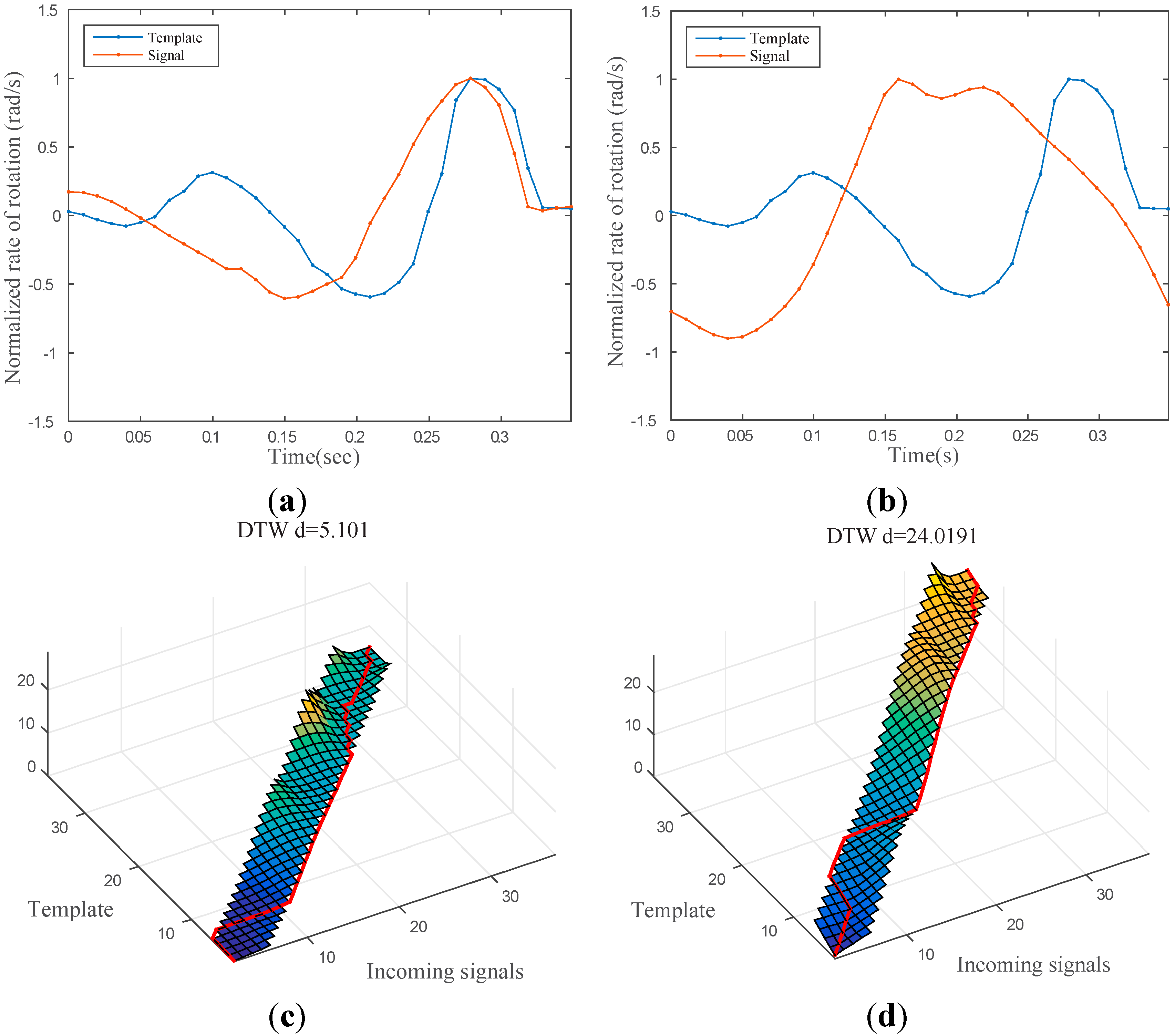

3.3. Finger Touch Recognition

4. Implementation of Smartwatch Interface with Around Touch

5. Conclusions

Acknowledgments

Supplementary Materials

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Lim, S.-C.; Shin, J.; Kim, S.-C.; Park, J. Expansion of Smartwatch Touch Interface from Touchscreen to Around Device Interface Using Infrared Line Image Sensors. Sensors 2015, 15, 16642-16653. https://doi.org/10.3390/s150716642