1. Introduction

With the rapid development of the 3-dimensional (3D) industry, significantly more people have access to 3D content from movies, games, home videos, and TV. Parallel to this development, the problem of eye fatigue with 3D displays has been identified, which is induced by the discrepancy between accommodation and convergence, binocular parallax, viewing distance, and viewing position [1]. Therefore, significant research for assessing eye fatigue has been initiated, which includes subjective evaluation-, camera-, and biosignal-based methods. This research on eye fatigue measurement can be classified into two categories: single modality-based and multiple modality-based methods.

The former methods measure eye fatigue using a single modality such as the image obtained with a camera [2,3] or using biosignals [4,5,6,7,8]. In previous studies, user eye blinks were measured using camera images for assessing eye fatigue [2,3]. Other studies proposed that visual fatigue could be measured using electroencephalogram (EEG) signals when watching 3D displays [4]. Chen et al. showed that the gravity frequency of the power spectrum and power spectral entropy of EEG signals can be used to measure visual fatigue for 2-dimensional (2D) TV and 3D TV [5]. Park et al. proposed that user electrocardiography (ECG) signals can be used for measuring visual fatigue while watching 3D TV [6]. In another study, EEG based on an event-related potential (ERP) was used to measure 3D visual fatigue [7]. Yu et al. proposed a method of measuring eye movement using electro-oculography (EOG) signals for evaluating visual fatigue on 2D and 3D displays [8]. However, none of the previous research based on a single modality can guarantee the credibility of eye fatigue measurement because the performance of a single sensor can be influenced by various factors including face movement.

To measure eye fatigue with higher credibility, methods based on multiple modality sensors have been proposed [9,10,11,12]. In a previous study, video-oculography (VOG) and EOG were used for measuring visual fatigue on 3D images [9]. Bang et al. proposed a method of measuring eye fatigue with 3D displays using EEG, eye blinking rate (BR), facial temperature (FT), and subjective evaluation (SE) [10]. In [11], ECG sensors, galvanic skin response (GSR), and skin temperature (SKT) with SE were used to measure eye fatigue on 2D and 3D displays. The power of the beta bands of EEGs and BRs, and a Bayesian network have been utilized for measuring eye fatigue when viewing 3D displays [12]. Although the multiple modality-based methods enhance the credibility of eye fatigue measurement compared to single modality-based methods, they do not combine the measured values from multiple modalities. Moreover, they do not consider the qualities of the measured values.

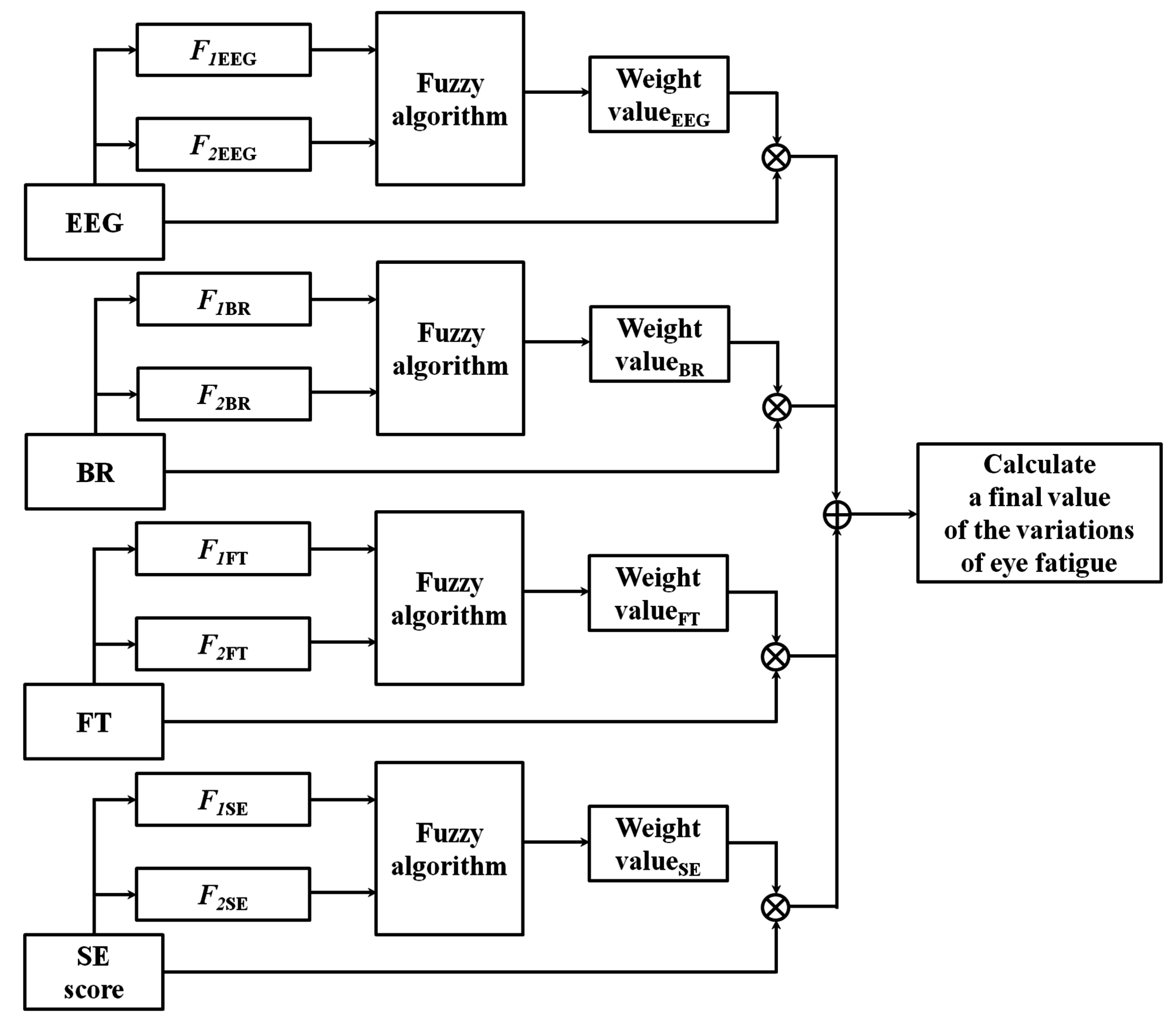

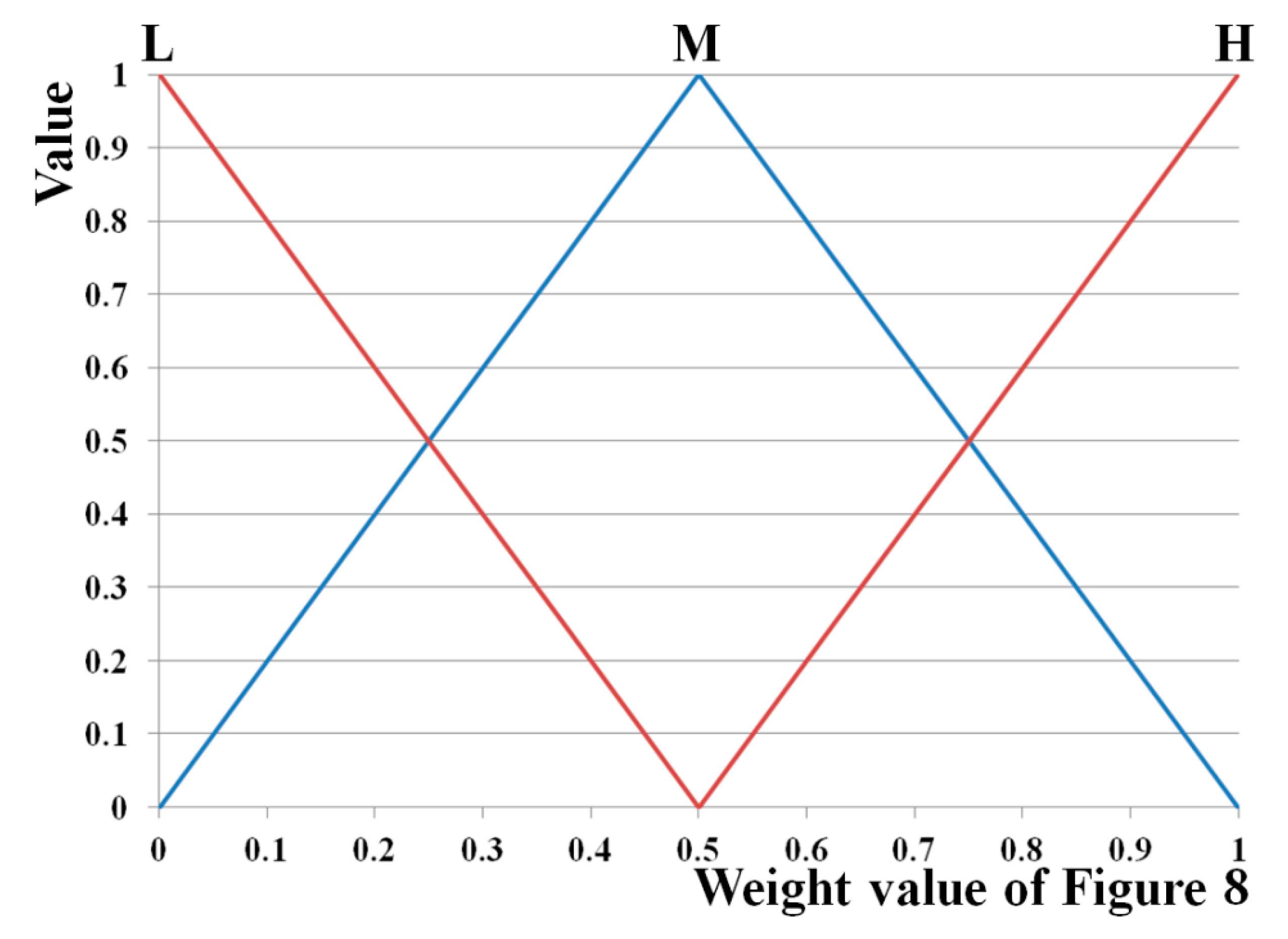

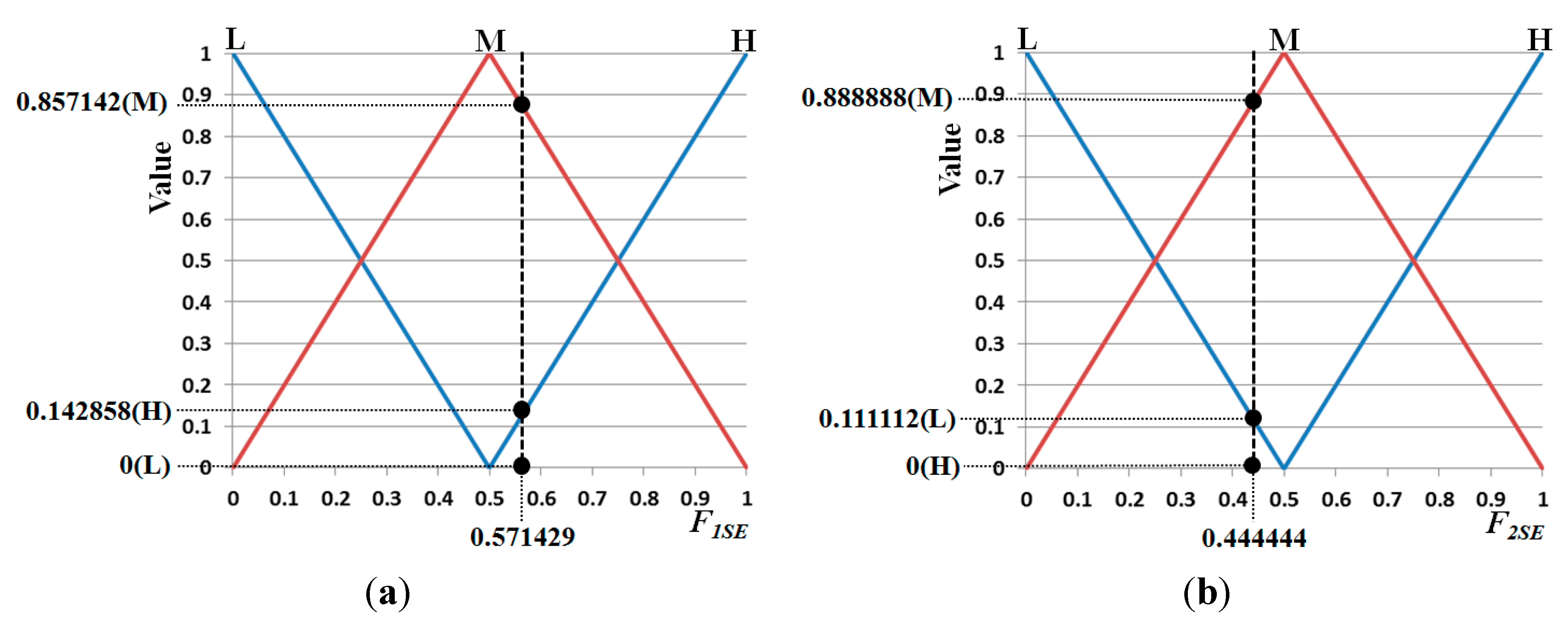

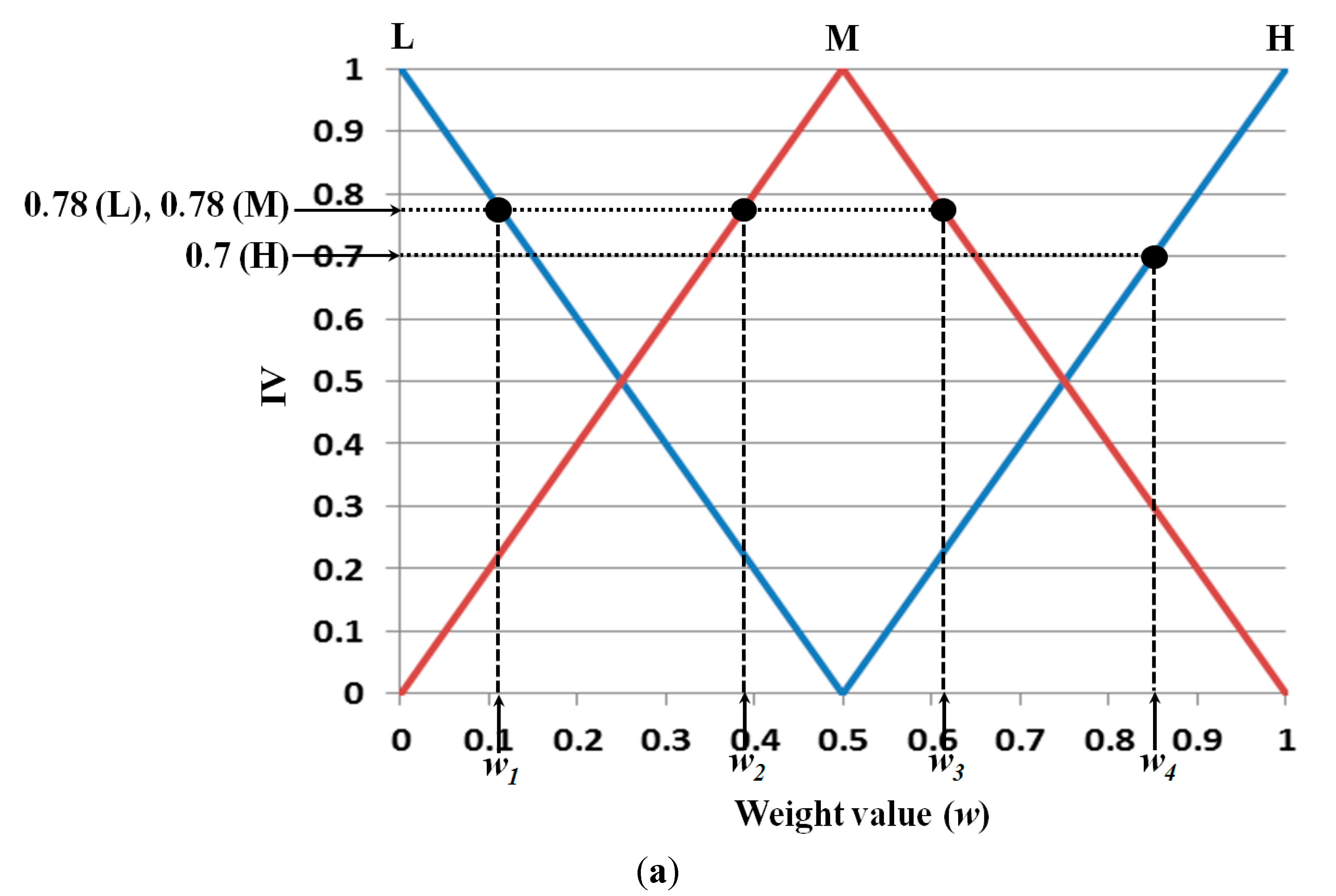

Therefore, we propose a new method for quantitatively evaluating the variation of eye fatigue before and after watching 3D displays by combining multimodal measurements. For the evaluation of the variation of eye fatigue with high credibility on 3D displays, a fuzzy-based fusion method (FBFM) is proposed based on the multimodalities of EEG signals, eye BR, FT, and SE. To measure the variation of eye fatigue more accurately, we obtain the quality scores of EEG signals, eye BR, FT, and SE. For combining the values of the four modalities, we obtain the optimal weights of the EEG signals, BR, FT, and SE using a fuzzy system based on the quality scores. Then, the quantitative level of the variation of eye fatigue is finally obtained using the weighted sum of the values measured by the four modalities. In Table 1, we compare the previous and proposed methods for measuring eye fatigue.

Table 1. Comparison of previous and proposed methods to measure eye fatigue.

| Category | Subcategory | Method | Advantages | Disadvantages |
|---|---|---|---|---|
| Single modality-based methods | Camera-based method [2,3] | Eye blink is measured | Less influenced by movement of muscle, head, or body because of contactless method | Data capturing speed is less than biosignal-based method |
| Single modality-based methods | Biosignal-based method [4,5,6,7,8] | EEG [4,5,7], ECG [6], and EOG [8] are measured | Causes less discomfort to user than multiple modality-based methods because of smaller number of sensors attached to body | Contains noise caused by movements of muscle, head, or body |
| Multiple modality-based methods | Method not combining information of multiple modalities [9,10,11,12] | VOG and EOG [9], EEG, BR, and FT [10], ECG, GSR, and SKT [11], and EEG and BR [12] are measured | Measurement of eye fatigue is more accurate with multiple modalities than with single modality | Does not consider qualities and weights of measured values |
| Multiple modality-based methods | Method combining information of multiple modalities (proposed method) | Combining multiple modalities based on FBFM considering quality of the measured values | Accuracy of eye fatigue measurement is enhanced by combining multiple modalities considering the quality of the measured values | Additional procedures are necessary for quality measures and combining multiple modalities |

The remainder of this paper is organized as follows: in Section 2, the proposed system and the analysis of the quality measurements are described. In Section 3, the experimental setup and results are discussed. Finally, the conclusions are presented in Section 4.

3. Experimental Setup and Results

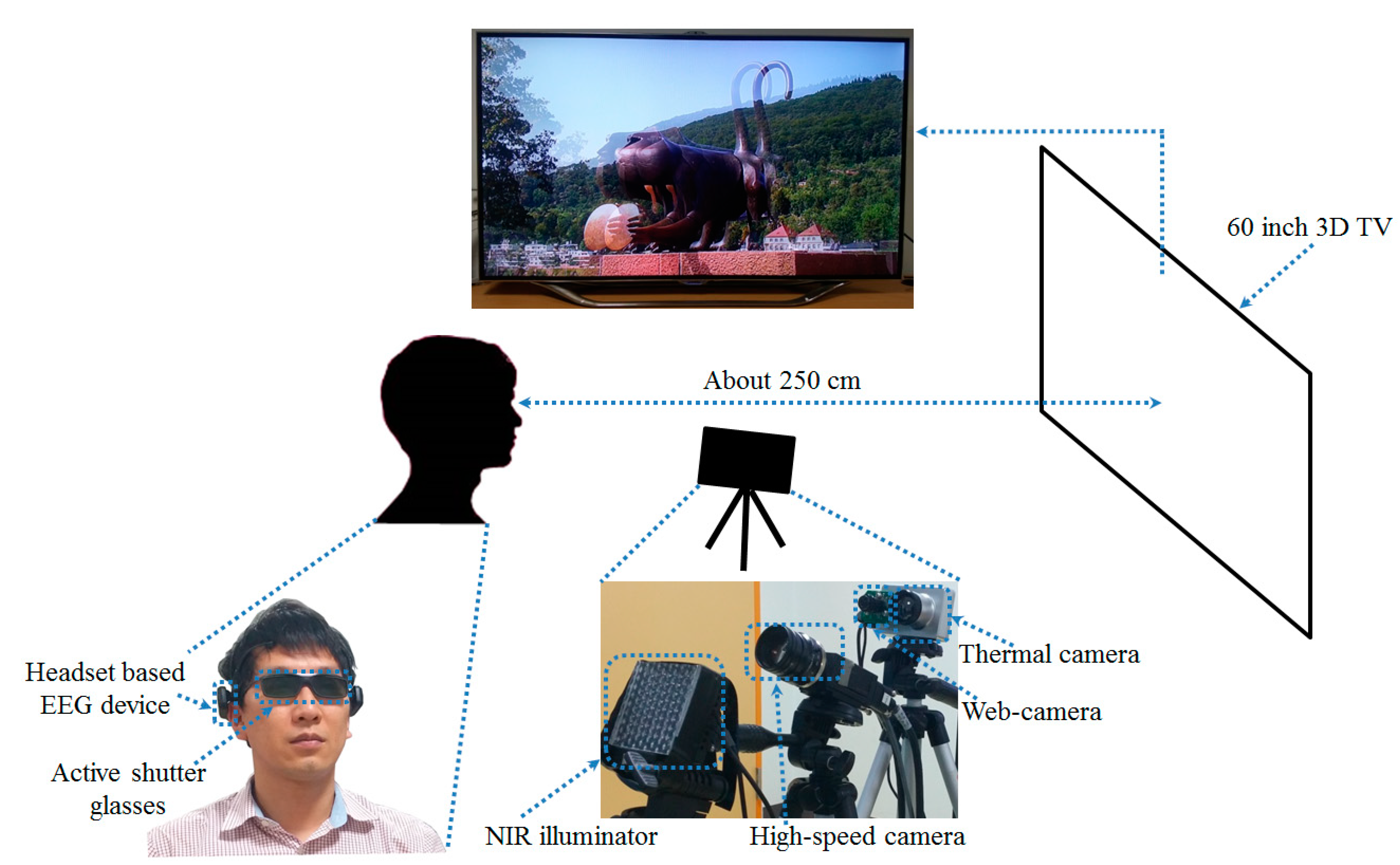

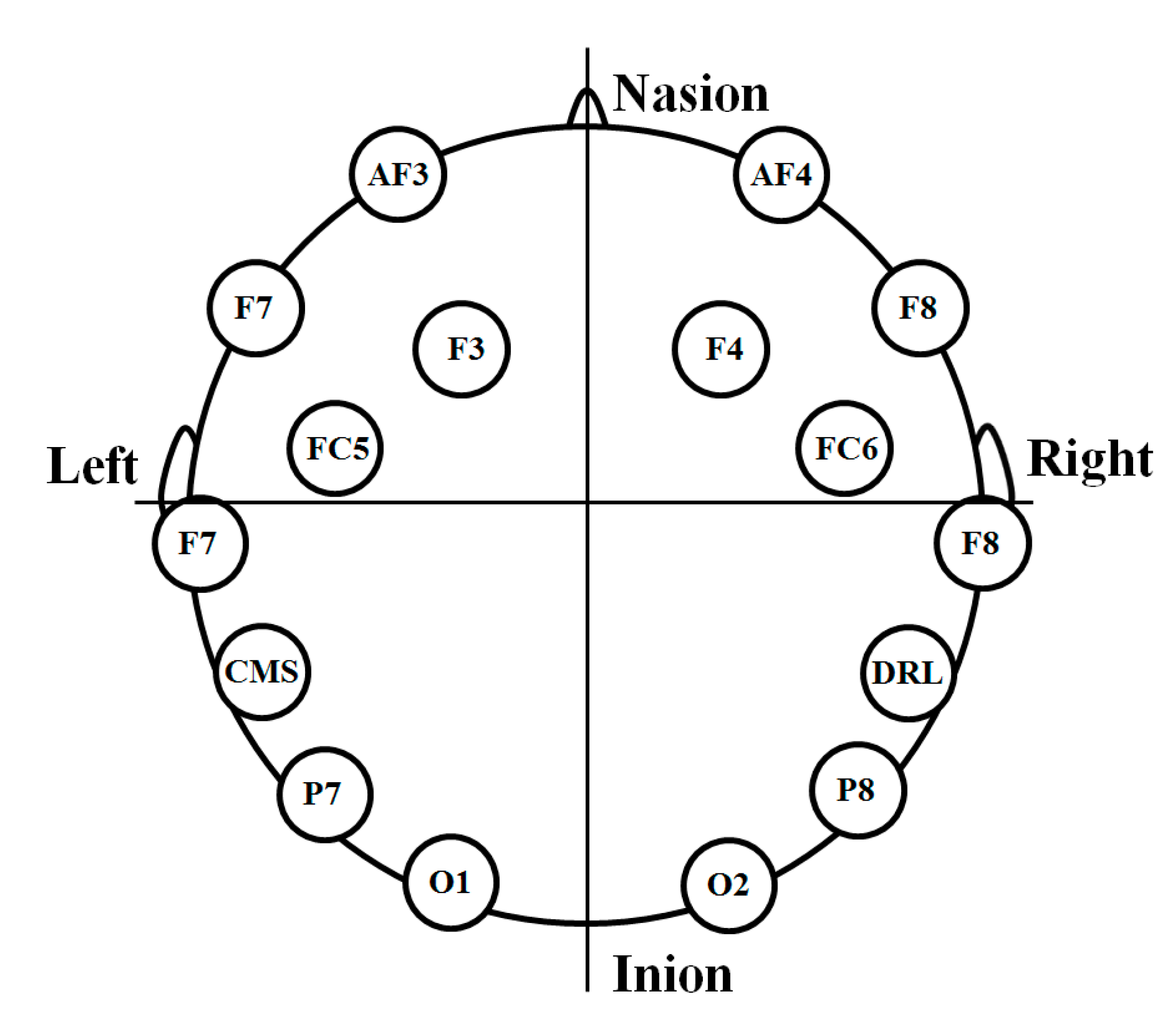

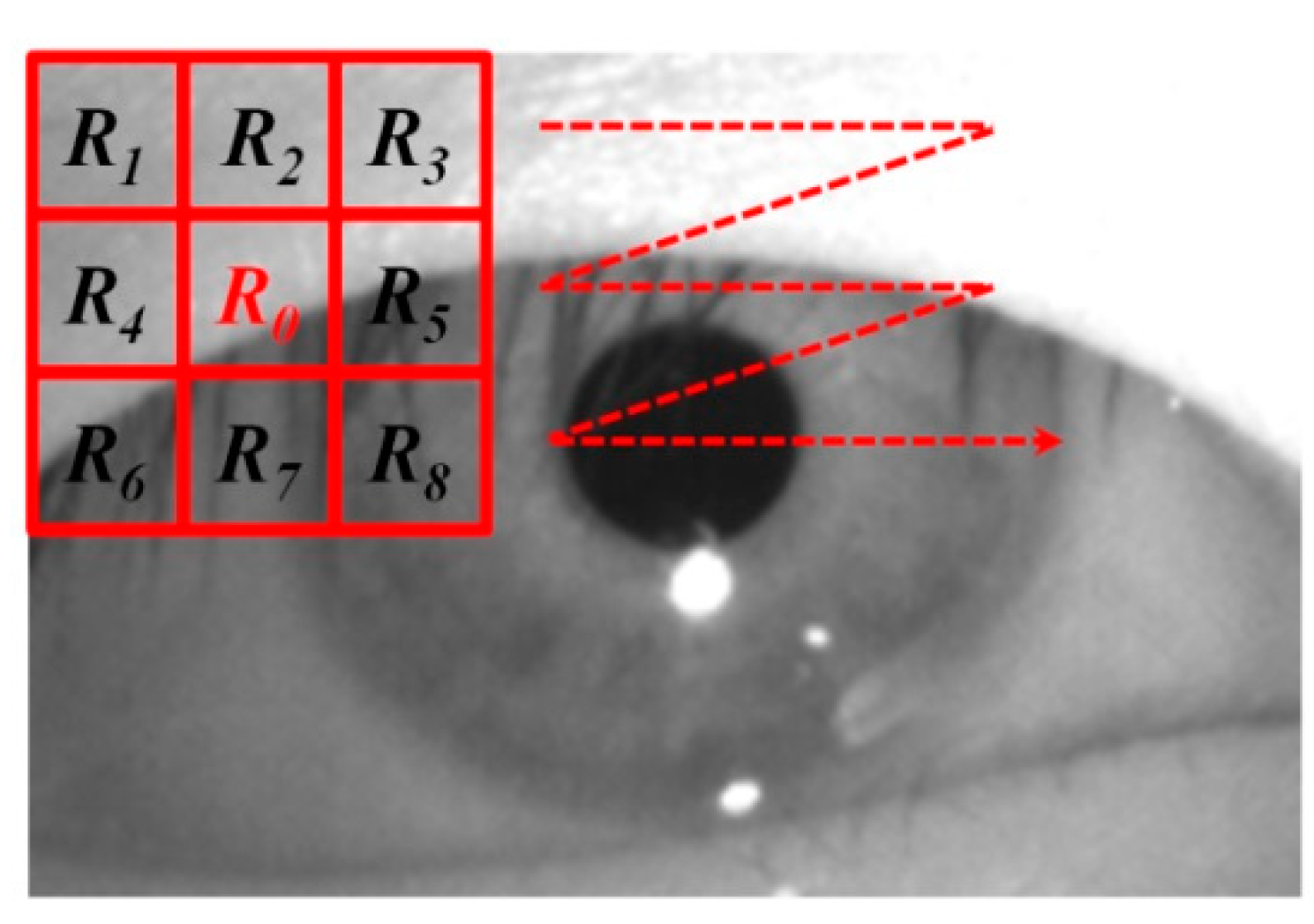

To simultaneously acquire the data for EEG, FT, and eye image for BR without time delay, two desktop computers with an additional laptop computer were used. The first desktop computer (used to capture the images of both eyes using a high-speed camera) had a 3.07 GHz CPU (Intel (R) Core (TM) i7 CPU 950) and 6 GB RAM. The second desktop computer (used to acquire the EEG signals using the Emotiv EPOC headset) had a 2.33 GHz CPU (Intel (R) Core (TM) 2 Quad CPU Q8200) and 4 GB RAM. The laptop computer (used to capture the images from the web-camera and thermal camera) had a 2.50 GHz CPU (Intel (R) Core (TM) i5-2520M) and 4 GB RAM. The proposed method for measuring eye fatigue was implemented with a C++ program using the Microsoft Foundation Class (MFC) and OpenCV library (ver. 2.3.1).

A detailed description of the participants and sample images for the experiments can be found in [10]. A group of 15 subjects (male: 12, female: 3) participated in the experiments (average age: 26.89, standard deviation: 1.96). We obtained written informed consent from each participant. We used 3D content entitled “Summer in Heidelberg” for our experiments. It consists primarily of landscape scenes as illustrated in Figure 2. We obtained the permission of the copyright owner of the content [34]. The highest brightness of the display used for experiments was 99.546 cd/m² and the illuminance of the room was measured as 321 lux.

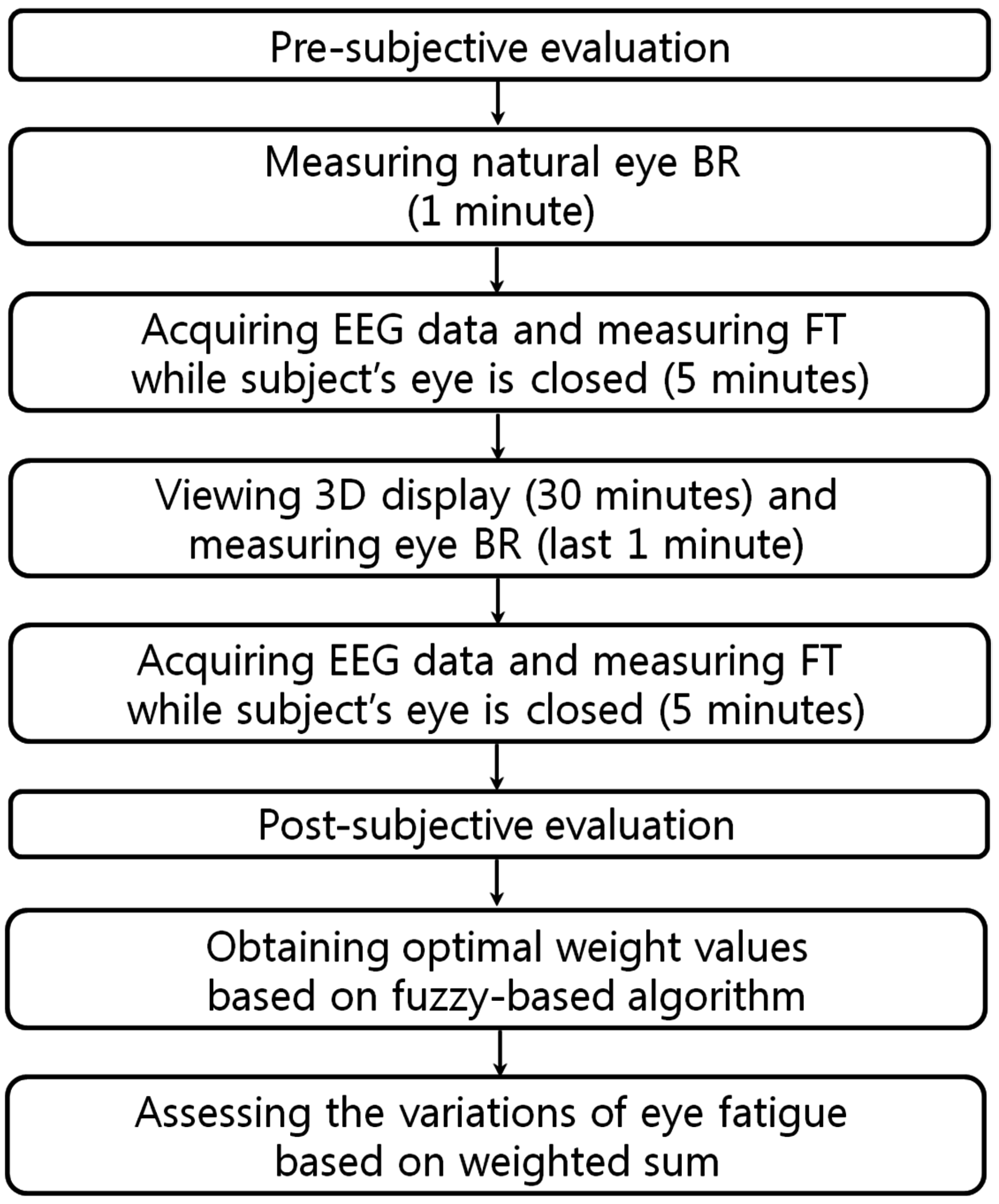

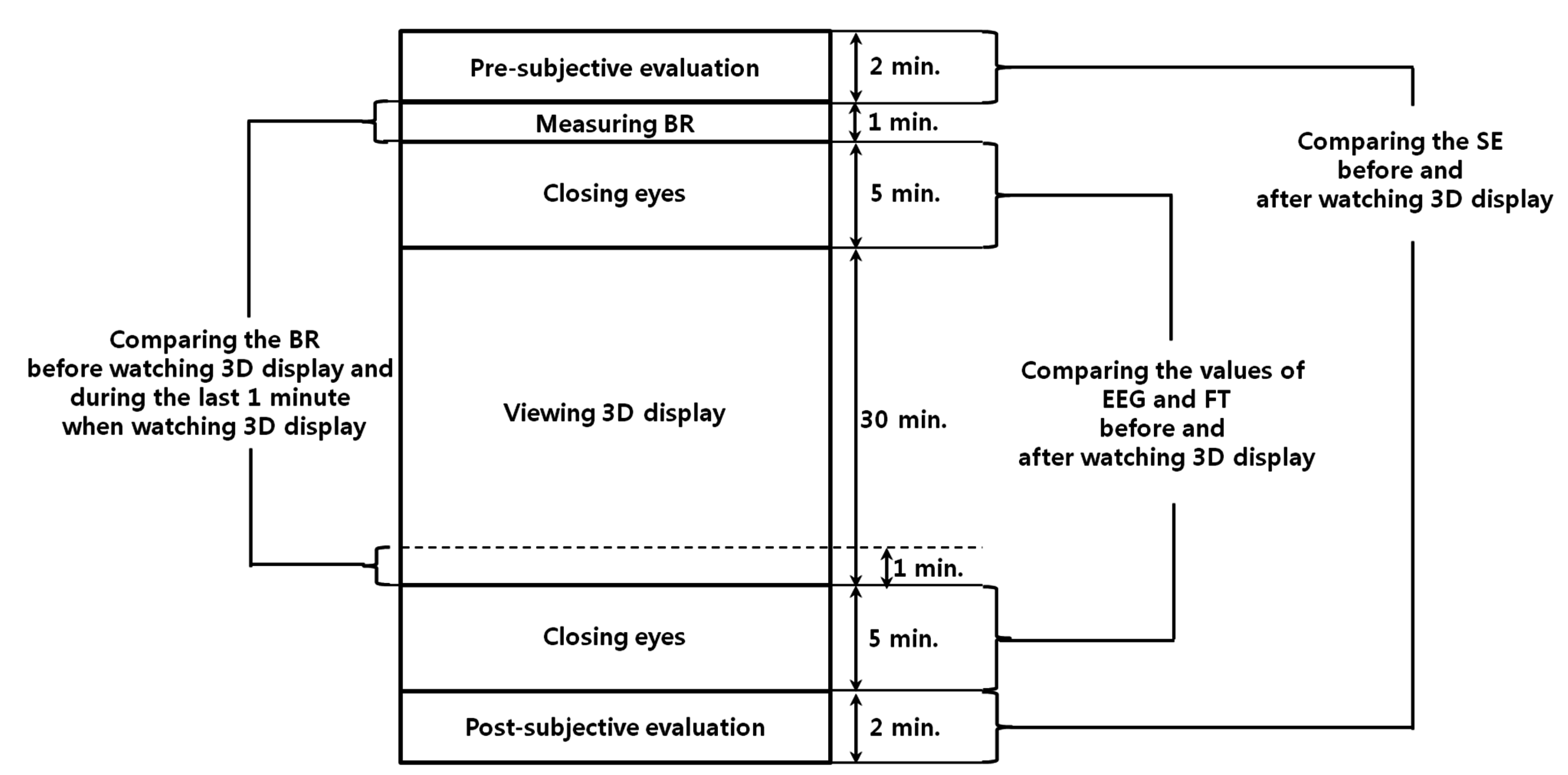

Figure 13 presents the experimental procedures. To guarantee the accuracy of the eye fatigue measurement, the variations of EEG signal, eye BR, and FT were measured with SE before and after watching the 3D display [10]. To measure the natural BR in the last minute of Figure 13, we did not provide any artificial indication or instruction for the user’s alertness. There were no dozing or drowsy participants in the experiments. To compare the status of each participant before and after watching the 3D display, an SE was also performed with the questionnaire form displayed in Table 5 using a 10-point scale (1: Not at all to 10: Yes, very much). Based on previous research [10,35], these questions were made to measure the status of each participant.

Figure 13. Experimental procedures.

Table 5. Questionnaire for SE.

| Six questions for SE |
|---|
| I have difficulties seeing |
| I have a strange feeling around my eyes |
| My eyes feel tired |
| I feel numb |
| I feel dizzy looking at the screen |
| I have a headache |

In previous research [10], the variations of EEG data, eye BR, FT, and an SE score caused by eye fatigue before and after watching 3D TV were measured. However, the researchers did not obtain one final value for the variation of eye fatigue by combining the values of the EEG data, eye BR, FT, and an SE score considering the qualities of each modality. Unlike [10], we propose a method of obtaining one final value for the variation of eye fatigue based on FBFM considering the qualities of EEG data, eye BR, FT, and an SE score.

Based on FBFM, the sum of the correlation values with other data was calculated as presented in Table 6. The sum of the correlation values with other data was highest when obtaining the final value of the variation of eye fatigue using the MAX rule and COG defuzzification method. Therefore, all the following experiments using FBFM were performed based on the MAX rule and COG defuzzification method.
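As a rough illustration of the defuzzification step, the sketch below computes the center of gravity (COG) of a sampled output membership function, together with the first and last of maxima (FOM, LOM). The triangular output sets, the inference values, and all function names are hypothetical and are not taken from this paper. The pointwise maximum used to aggregate the clipped sets is a common textbook choice and is not necessarily identical to the MAX rule referred to above.

```python
def triangle(x, a, b, c):
    """Triangular membership function with feet at a and c and peak at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def aggregate(xs, clipped_sets):
    """Clip each output set at its inference value, then take the pointwise maximum."""
    return [max(min(level, triangle(x, *abc)) for abc, level in clipped_sets) for x in xs]


def cog(xs, mus):
    """Center of gravity: membership-weighted mean of the sampled outputs."""
    total = sum(mus)
    if total == 0:
        raise ValueError("membership function is zero everywhere")
    return sum(x * m for x, m in zip(xs, mus)) / total


def first_and_last_of_maxima(xs, mus, tol=1e-9):
    """Smallest and largest outputs at which the membership reaches its maximum."""
    peak = max(mus)
    at_peak = [x for x, m in zip(xs, mus) if abs(m - peak) <= tol]
    return at_peak[0], at_peak[-1]


# Hypothetical output sets on [0, 1] ("low", "medium", "high" weight),
# each paired with an assumed inference value used as the clipping level.
xs = [i / 1000 for i in range(1001)]
sets = [((-0.5, 0.0, 0.5), 0.2), ((0.0, 0.5, 1.0), 0.7), ((0.5, 1.0, 1.5), 0.4)]
mus = aggregate(xs, sets)
weight = cog(xs, mus)
fom, lom = first_and_last_of_maxima(xs, mus)
```

With these assumed inputs, COG yields a single weight that reflects the whole shape of the aggregated function, whereas FOM and LOM depend only on the edges of its plateau. That difference may be one reason the methods in Table 6 give different sums.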

Table 6. Sum of the correlation values with other data for EEG, BR, FT, and SE according to various defuzzification methods and min or max rule.

| Rule | Defuzzification Method | EEG | BR | FT | SE | Sum of the Correlation Values |
|---|---|---|---|---|---|---|
| Min rule | FOM | 0.3969 | 0.2742 | 0.5977 | 0.663 | 1.9318 |
| Min rule | MOM | 0.3405 | 0.3312 | 0.6202 | 0.7204 | 2.0123 |
| Min rule | LOM | 0.2908 | 0.3733 | 0.6308 | 0.7581 | 2.053 |
| Min rule | MeOM | 0.3405 | 0.3312 | 0.6202 | 0.7204 | 2.0123 |
| Min rule | COG | 0.298 | 0.4255 | 0.6736 | 0.7683 | 2.1654 |
| Max rule | FOM | −0.0275 | 0.4176 | 0.6563 | 0.6871 | 1.7335 |
| Max rule | MOM | 0.0914 | 0.5136 | 0.7091 | 0.8094 | 2.1235 |
| Max rule | LOM | 0.1508 | 0.5564 | 0.681 | 0.8486 | 2.2368 |
| Max rule | MeOM | 0.1315 | 0.5254 | 0.7002 | 0.8303 | 2.1874 |
| Max rule | COG | 0.2268 | 0.5464 | 0.6381 | 0.8413 | 2.2526 |

For the next experiment, we measured the gradient, R², and correlation value between the value of the eye fatigue using the proposed FBFM and each of the EEG, BR, FT, and SE data as presented in Table 7. Linear regression is the usual approach for obtaining the line that is optimally fitted with the data distribution in 2D space. The gradient and R², which are calculated from the line, represent the steepness of the data distribution and the confidence level of the fitted data in the predicted regression line, respectively. In general, the more reliably the data fit the regression line, the larger the R² value becomes [10]. The correlation value ranges from −1 to 1 [36]; −1 and 1 represent strong negative and strong positive relationships, respectively. If the correlation value is 0, the data are linearly uncorrelated.
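The three quantities described above can be sketched as follows. This is a minimal ordinary least-squares example in plain Python; the function name `linear_fit` and the sample values are hypothetical and do not reproduce the measurements of this study.

```python
import math


def linear_fit(xs, ys):
    """Least-squares line y = gradient * x + intercept, plus R^2 and Pearson r."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equally long sequences with at least two points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0 or syy == 0:
        raise ValueError("both variables must vary")
    gradient = sxy / sxx
    intercept = mean_y - gradient * mean_x
    r = sxy / math.sqrt(sxx * syy)
    # For a simple linear regression, R^2 equals the squared Pearson correlation.
    return gradient, intercept, r * r, r


# Hypothetical per-participant values (not the paper's data).
se_change = [0.1, 0.4, 0.35, 0.8, 0.6]
fused_change = [0.3, 0.5, 0.7, 1.2, 0.9]
gradient, intercept, r_squared, r = linear_fit(se_change, fused_change)
```

Because R² is the square of the correlation value in a simple linear regression, the two columns of Table 7 can be cross-checked against each other; for example, 0.8413² ≈ 0.7078 for FBFM vs. SE.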

In

Table 7, we also include the gradient, R

2, and correlation value of each modality, which were measured in [

10]. As illustrated in

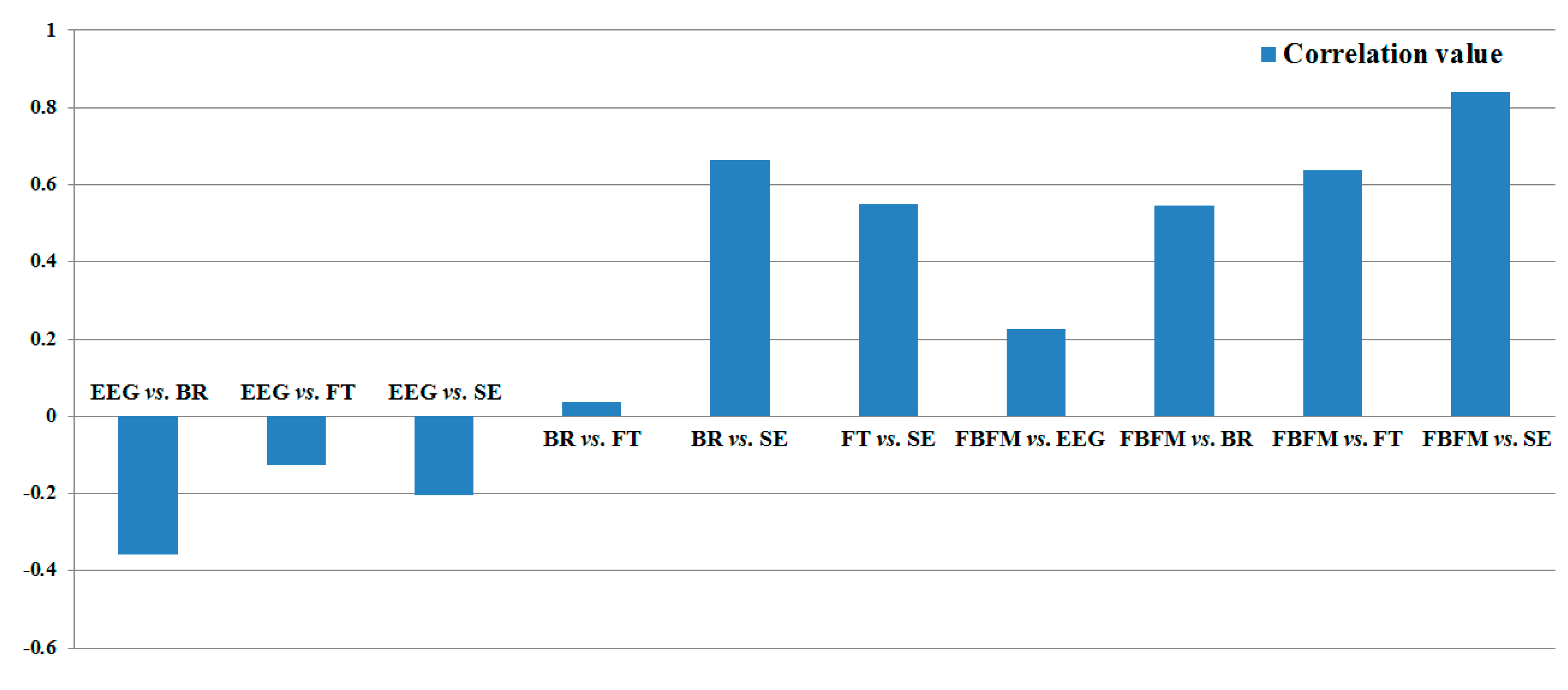

Table 7 and

Figure 14, the correlation and R

2 values between the value of eye fatigue using the proposed FBFM and SE are the highest and between the BR and FT are the lowest.

Table 7. Results of gradient, R², and correlation value between each modality and the value of eye fatigue using the proposed FBFM. Value of eye fatigue using the proposed FBFM is labeled FBFM.

| Method | Pair | Gradient | R² | Correlation Value |
|---|---|---|---|---|
| Previous research [10] | EEG vs. BR | −0.33 | 0.1285 | −0.3585 |
| Previous research [10] | EEG vs. FT | −0.1154 | 0.0156 | −0.125 |
| Previous research [10] | EEG vs. SE | −0.1584 | 0.0421 | −0.2052 |
| Previous research [10] | BR vs. FT | 0.0381 | 0.0014 | 0.038 |
| Previous research [10] | BR vs. SE | 0.5582 | 0.4427 | 0.6653 |
| Previous research [10] | FT vs. SE | 0.4593 | 0.3015 | 0.5491 |
| Proposed method | FBFM vs. EEG | 0.4804 | 0.0515 | 0.2268 |
| Proposed method | FBFM vs. BR | 1.0654 | 0.2986 | 0.5464 |
| Proposed method | FBFM vs. FT | 1.2478 | 0.4072 | 0.6381 |
| Proposed method | FBFM vs. SE | 1.3762 | 0.7078 | 0.8413 |

Figure 14. Result of correlation value between each modality and the value of eye fatigue using the proposed FBFM.

To compare the correlation values between each data and the value using FBFM, we obtained the correlation matrix presented in Table 8. The correlations between the EEG and the other measured data (BR, FT, and SE) were negative because EEG signals contain noise caused by, for example, movements of the head or facial muscles. As indicated in Table 8, the absolute correlation value between FBFM and SE is highest (0.8413), and that between BR and FT is lowest (0.038) for the measurement of eye fatigue for the 3D content.

As illustrated in Table 7 and Figure 14, the gradient, R², and correlation values obtained using the proposed FBFM and SE are greater than those of the previous method (EEG vs. SE, BR vs. SE, and FT vs. SE) [10]. The values using the proposed FBFM and FT are greater than those using the previous method (EEG vs. FT, BR vs. FT, and SE vs. FT). Similarly, the majority of the gradient, R², and correlation values obtained using the proposed FBFM and BR and the proposed FBFM and EEG are also greater than those using the previous method. From this, we can confirm that the eye fatigue value obtained using the proposed FBFM is more correlated with each modality (EEG, BR, FT, and SE) than the values of the previous method, which uses each modality without fusion [10]. This means that the credibility of the eye fatigue value using the proposed FBFM is higher than that of using each modality without fusion, and that eye fatigue can be measured more accurately using the proposed FBFM than with the previous method.

Table 8. Correlation matrix of four measured data and the value using FBFM before and after (or in the final one minute) watching 3D content.

| | EEG | BR | FT | SE | FBFM | Sum of the Correlation Values with Other Data |
|---|---|---|---|---|---|---|
| EEG | 1 | −0.3585 | −0.125 | −0.2052 | 0.2268 | −0.4619 |
| BR | −0.3585 | 1 | 0.038 | 0.6653 | 0.5464 | 0.8912 |
| FT | −0.125 | 0.038 | 1 | 0.5491 | 0.6381 | 1.1002 |
| SE | −0.2052 | 0.6653 | 0.5491 | 1 | 0.8413 | 1.8505 |
| FBFM | 0.2268 | 0.5464 | 0.6381 | 0.8413 | 1 | 2.2526 |

To quantitatively assess the correlation values between each data, we summed the correlation values of each row, excluding the auto-correlation value of 1 (for example, the correlation value between EEG and EEG). As presented in Table 8 and Figure 15, the sum of the correlation values of the proposed method (FBFM) with other data is highest (2.2526). Those of the SE, FT, BR, and EEG with other data are second, third, fourth, and fifth highest, respectively. From these results, we can confirm that the value of eye fatigue using the proposed FBFM is more correlated with the value of each modality compared to not combining each modality. This indicates that the value of eye fatigue using the proposed FBFM is more credible than not combining each modality.
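The row sums in the last column of Table 8 can be reproduced with a few lines of code. The sketch below copies the pairwise correlation values from Table 8; the helper names are illustrative only.

```python
# Pairwise correlation values copied from Table 8 (each pair listed once).
LABELS = ["EEG", "BR", "FT", "SE", "FBFM"]
PAIRS = {
    ("EEG", "BR"): -0.3585,
    ("EEG", "FT"): -0.125,
    ("EEG", "SE"): -0.2052,
    ("EEG", "FBFM"): 0.2268,
    ("BR", "FT"): 0.038,
    ("BR", "SE"): 0.6653,
    ("BR", "FBFM"): 0.5464,
    ("FT", "SE"): 0.5491,
    ("FT", "FBFM"): 0.6381,
    ("SE", "FBFM"): 0.8413,
}


def correlation(a, b):
    """Look up the symmetric correlation between two different measures."""
    return PAIRS[(a, b)] if (a, b) in PAIRS else PAIRS[(b, a)]


def sum_with_others(label):
    """Sum one measure's correlations with all others, skipping the self-correlation of 1."""
    return sum(correlation(label, other) for other in LABELS if other != label)


sums = {label: round(sum_with_others(label), 4) for label in LABELS}
ranking = sorted(LABELS, key=sums.get, reverse=True)
```

Sorting by these sums gives the order FBFM, SE, FT, BR, EEG, which matches the ranking stated above.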

In the next analysis, we performed an independent two-sample t-test, which has been widely used as a statistical hypothesis test [37], with the variations of eye fatigue using the proposed FBFM. The null hypothesis (the two scores of eye fatigue using the FBFM are equal before and after watching the 3D display) was used for the t-test. The significance thresholds corresponding to the confidence levels of 99% and 95% were 0.01 and 0.05, respectively. In general, if the calculated p-value is less than the threshold of 0.01 or 0.05, the null hypothesis is rejected at the confidence level of 99% or 95%, respectively [37]. This means that the two scores of eye fatigue using the FBFM before and after watching the 3D display are significantly different at that confidence level. The calculated p-value of the FBFM was 0.0471, which is less than the threshold of 0.05. Therefore, we can confirm that, with a confidence level of 95%, the two eye fatigue scores using FBFM were significantly different before and after watching the 3D display.

For the last test, we analyzed the variations of the eye fatigue using the FBFM before and after watching the 3D display using the effect size in descriptive statistics. The effect size is usually accepted as a descriptive statistic and has been widely used to represent the power of a measured phenomenon in statistics [38]. Based on previous research [39], we defined Cohen’s d values of 0.2, 0.5, and 0.8 as small, medium, and large, respectively. Cohen’s d value is calculated as the difference between two means divided by the standard deviation of the measured data. If the calculated Cohen’s d value is close to 0.2, the measured data are regarded as having a small effect size. If the value is close to 0.8, the measured data can be regarded as having a large effect size. In our experiments, Cohen’s d value was 0.7704, which is closer to 0.8 than to 0.2 or 0.5, so the variations of the eye fatigue using FBFM before and after watching the 3D display can be regarded as having a large effect size.
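The effect-size calculation can be sketched as follows. The text above does not specify which standard deviation is used; this example assumes the pooled sample standard deviation of the two groups, which is one common convention. The function names and the sample scores are hypothetical.

```python
import math


def cohens_d(before, after):
    """Cohen's d using the pooled sample standard deviation (an assumed convention)."""
    n1, n2 = len(before), len(after)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two values")
    m1, m2 = sum(before) / n1, sum(after) / n2
    var1 = sum((x - m1) ** 2 for x in before) / (n1 - 1)
    var2 = sum((x - m2) ** 2 for x in after) / (n2 - 1)
    pooled_sd = math.sqrt(((n1 - 1) * var1 + (n2 - 1) * var2) / (n1 + n2 - 2))
    if pooled_sd == 0:
        raise ValueError("pooled standard deviation is zero")
    return (m2 - m1) / pooled_sd


def label_effect(d):
    """Name the nearest of the conventional anchors 0.2, 0.5, and 0.8."""
    anchors = {0.2: "small", 0.5: "medium", 0.8: "large"}
    nearest = min(anchors, key=lambda a: abs(abs(d) - a))
    return anchors[nearest]


# Hypothetical fused scores before and after viewing (not the paper's data).
d = cohens_d([1.0, 2.0, 3.0], [2.0, 3.0, 4.0])
reported_label = label_effect(0.7704)  # value reported in the text
```

Applied to the reported value of 0.7704, the nearest-anchor rule returns "large", in line with the interpretation given above.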

Figure 15. Result of the sum of the correlation values with other data.

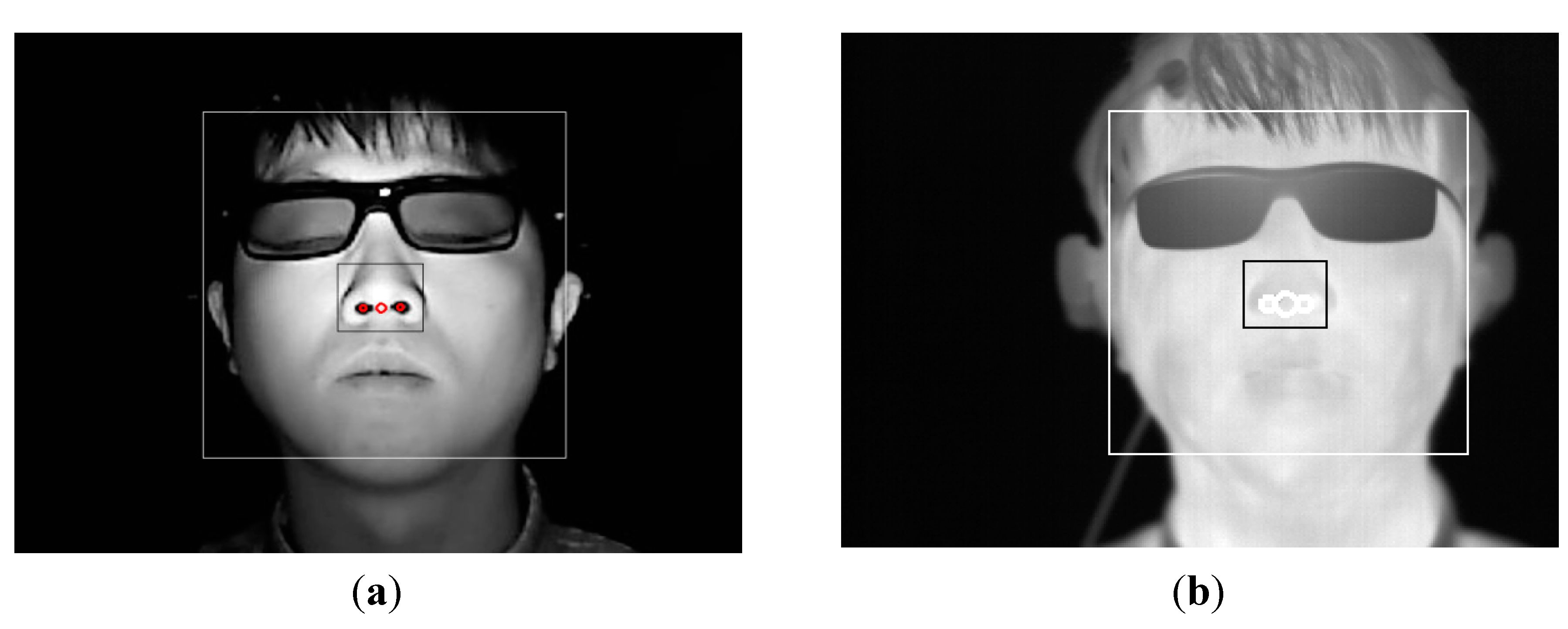

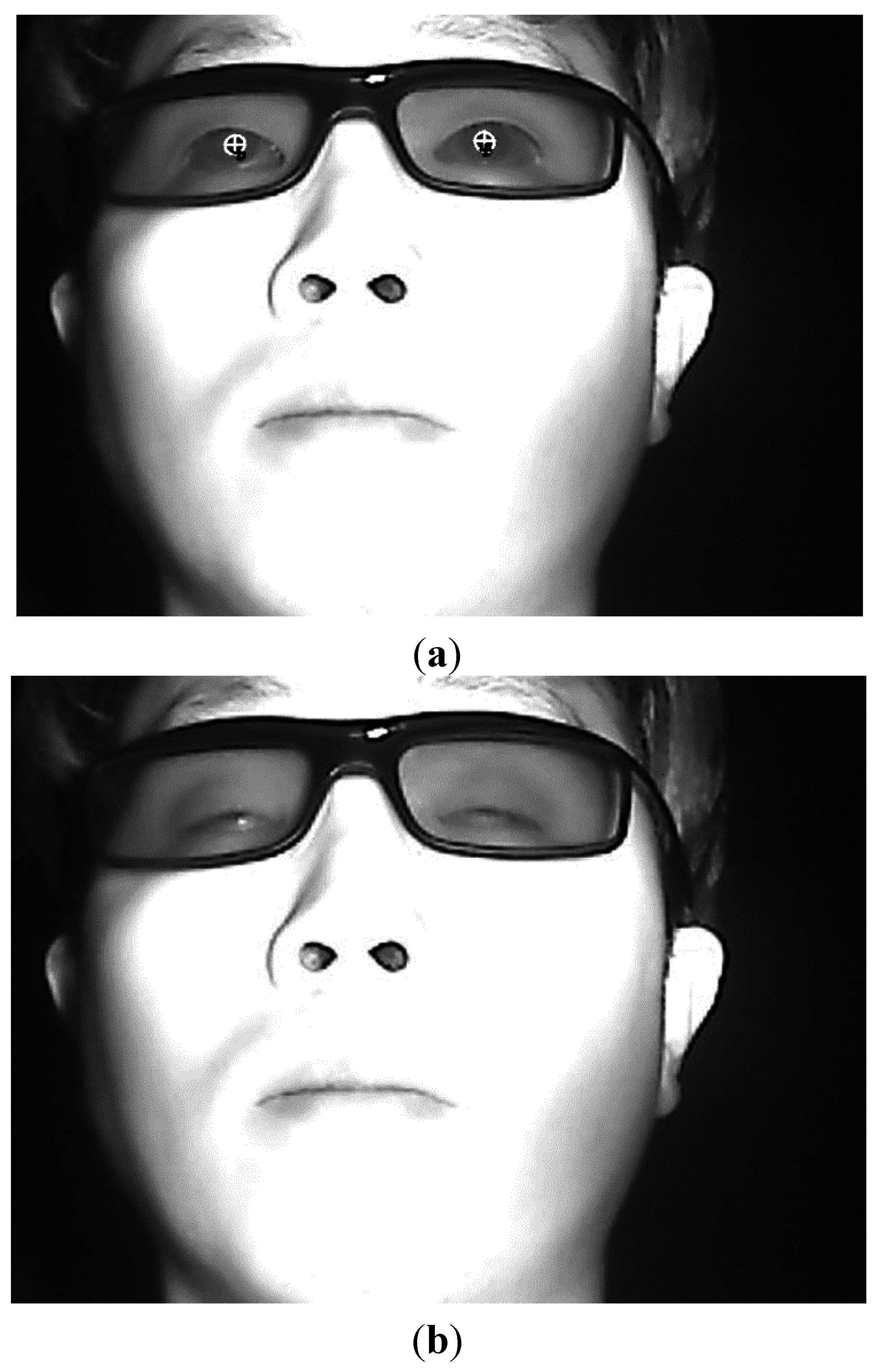

In addition, we simplified the capturing system of Figure 2 as follows. In the simplified system, we combined two functionalities of Figure 2: measuring eye BR with the high-speed camera, and detecting the face and nostrils with the web-camera in order to define the cheek regions (where facial temperature is measured). That is, we removed the large high-speed camera and had a single web-camera perform both functions. The revised capturing system is shown in Figure 16, and the images captured by this system are shown in Figure 17. As in the previous system of Figure 2, an NIR illuminator is also used in the revised system of Figure 16 in order to detect the pupil area accurately through active shutter glasses. By attaching the NIR illuminator to the left side of the web-camera as shown in Figure 16, we built a small capturing system whose size is 18 × 7 × 5 cm³ (width × height × depth) and whose weight is about 330 g.

In order to show that the performance of the simplified capturing system of Figure 16 is similar to that of the previous system of Figure 2, we measured the accuracy of eye BR with this system. Because the eye BR is measured by counting the number of closed eyes during one minute, the accuracy of eye BR can be measured by Type 1 and 2 errors. A Type 1 error is the misclassification of an open eye as a closed one, whereas a Type 2 error is the misclassification of a closed eye as an open one. Experimental results (with the simplified capturing system of Figure 16 and 15 people) showed that the Type 1 and 2 errors are 99.2% and 99.1%, respectively, which are similar to those (99.2% and 99%) of the previous system of Figure 2.

As the next test of whether the performance of the simplified capturing system is similar to that of the previous system of Figure 2, we measured the accuracies of the face and nostrils detected in the web-camera image and of the cheek regions detected in the thermal image for measuring facial temperature. Experimental results (with the simplified capturing system of Figure 16 and 15 people) showed that the accuracies of the detected face, nostril, and cheek regions are 99.9%, 99.8%, and 99.5%, respectively, which are similar to those (99.9%, 99.7%, and 99.3%) of the previous system of Figure 2. Because the EEG signal is measured by the same EEG measurement device as in Figure 2, the performance of EEG measurement by the system of Figure 16 is the same as that of the previous system of Figure 2.

Figure 16. Simplified capturing system for measuring eye fatigue on 3D display.

Figure 17. Examples of images captured by the simplified capturing system: (a) Detected pupil center and specular reflection center in case of open eye in the image by web-camera; (b) Closed eye in the image by web-camera; (c) Image by the thermal camera for defining the cheek regions for measuring facial temperature.

In our research, we normalized the BR considering the bias in our original experimental results. That is, in order to measure the bias value, which represents the change of BR caused by exposure to the NIR illuminator (excluding the change of BR caused by eye fatigue from the 3D display), we performed an experiment with two images that are comfortable to the user’s eyes. Based on previous research showing that the green color can be more comfortable to the user’s eye [40], we used two sample images for the experiment. These images are free of copyright [41], and they are shown in Figure 18.

In detail, before performing the experiments of Figure 13, we measured the BR of the 15 participants (who took part in our experiments of Figure 13) with the two images of Figure 18 under the same experimental environment (illuminance of the room, display size, distance from user to display, etc.) as that of Figure 13.

At first, BR was measured for 1 min before each participant looked at the two images, which corresponds to measuring BR before viewing the 3D display in Figure 13. Then, each participant closed their eyes for 5 min, as in the experimental procedure of Figure 13. After that, each participant looked at the images for 30 min, and BR was measured again during the last 1 min, which corresponds to measuring BR in the last 1 min of viewing the 3D display in Figure 13. After that, each participant took sufficient rest before doing the experiments of Figure 13.

From this experiment with the two images of Figure 18, we obtained the two average graphs of BR of the 15 participants shown in Figure 19. In both cases of Figure 19a,b, the BRs during the last 1 min of looking at the image are slightly higher than those before looking at the image. Then, we averaged the graphs of Figure 19a,b and obtained two average BRs (before and during the last 1 min of looking at the image, respectively). The difference between these two average BRs was used as the bias value, which represents the change of BR caused by exposure to the NIR illuminator (excluding the change of eye BR caused by eye fatigue from the 3D display).

Figure 18. Examples of two images which were used for measuring the bias value of the change of BR caused by being exposed to the NIR illuminator.

In conclusion, this bias value was already reflected in the measured eye BRs of our original experiments of Figure 13, from which we obtained corrected BRs that are not biased by the change of BR caused by exposure to the NIR illuminator.
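One way to picture this bias correction is sketched below. The text does not state exactly how the bias value is applied to the measured BRs; subtracting it from the measured change in BR is an assumption made here for illustration, and the function names and blink counts are hypothetical.

```python
def mean(values):
    """Arithmetic mean of a non-empty sequence."""
    return sum(values) / len(values)


def illuminator_bias(before_rates, last_minute_rates):
    """Average change in blinks per minute observed with the comfortable images."""
    return mean(last_minute_rates) - mean(before_rates)


def corrected_change(before_3d, last_minute_3d, bias):
    """Change in BR for the 3D content with the assumed illuminator bias subtracted."""
    return (last_minute_3d - before_3d) - bias


# Hypothetical average BRs (blinks per minute) for the two comfortable images.
bias = illuminator_bias(before_rates=[10.0, 12.0], last_minute_rates=[11.0, 15.0])
# Hypothetical BRs of one participant before and in the last minute of 3D viewing.
change = corrected_change(before_3d=10.0, last_minute_3d=18.0, bias=bias)
```

In this made-up example the comfortable images alone raise BR by 2 blinks per minute, so a measured increase of 8 is attributed as 6 to the 3D content.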

Figure 19. Two average graphs of BR before looking at the images and in the last 1 min while looking at the images. Graphs when people looked at (a) the left image of Figure 18, (b) the right image of Figure 18.