Camera Calibration for Water-Biota Research: The Projected Area of Vegetation

Abstract

1. Introduction

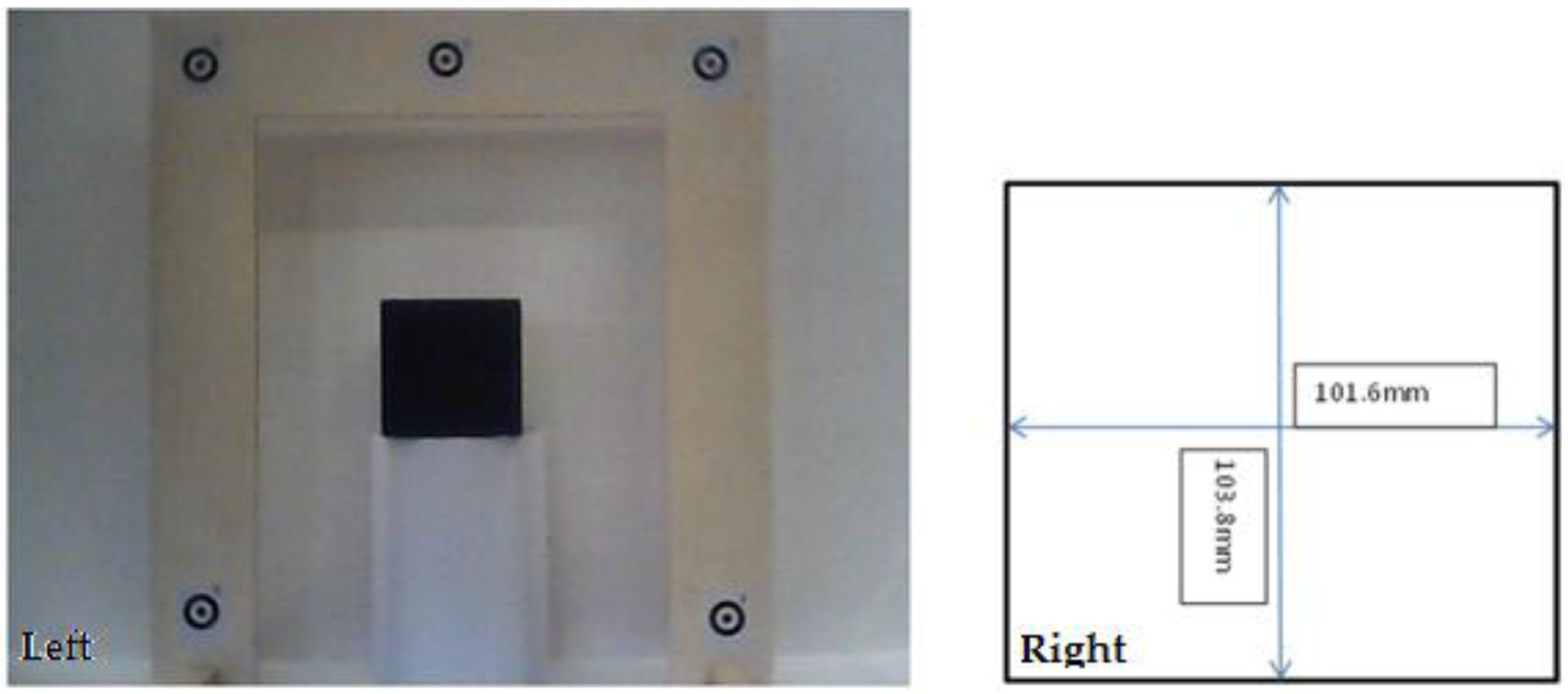

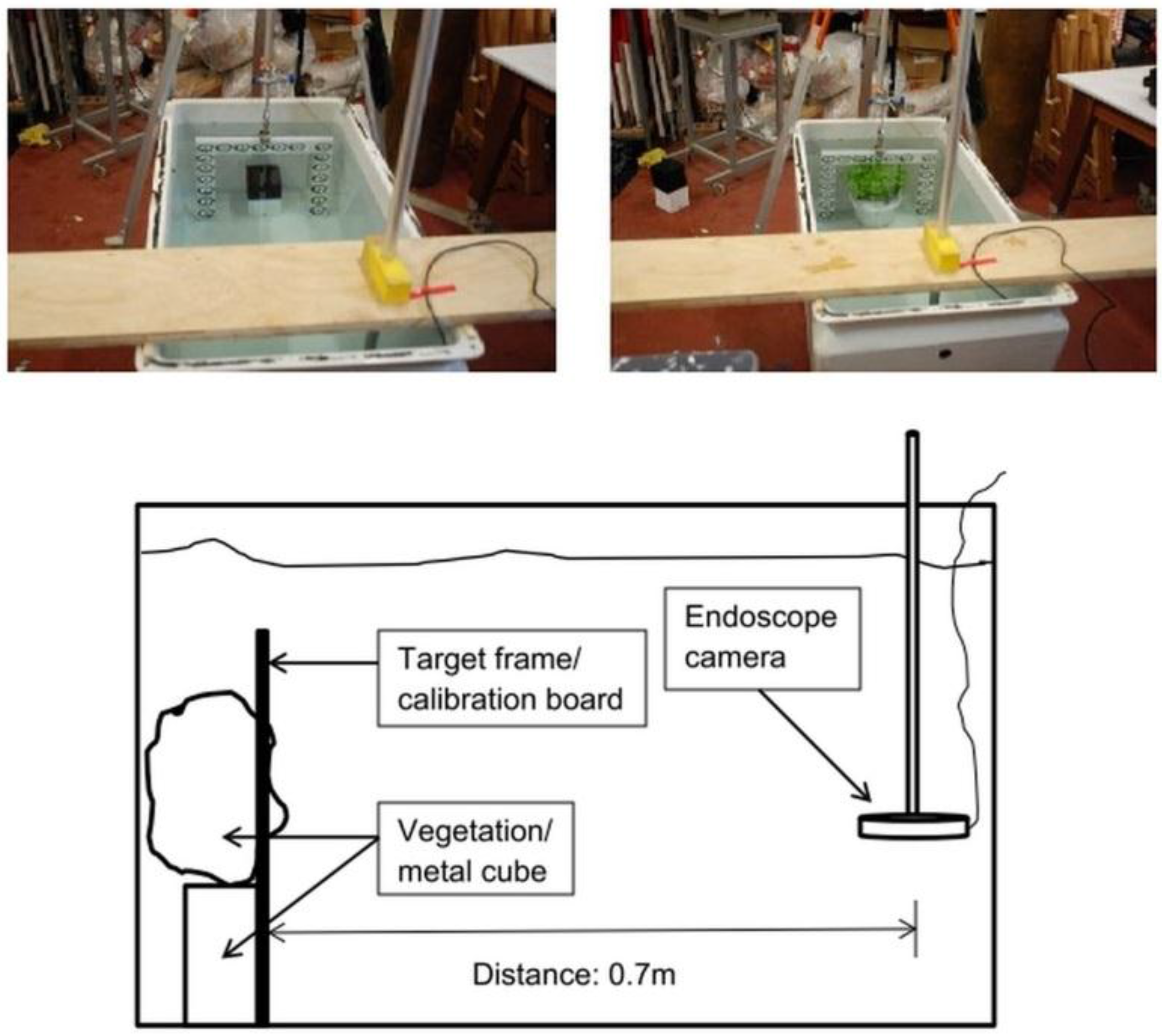

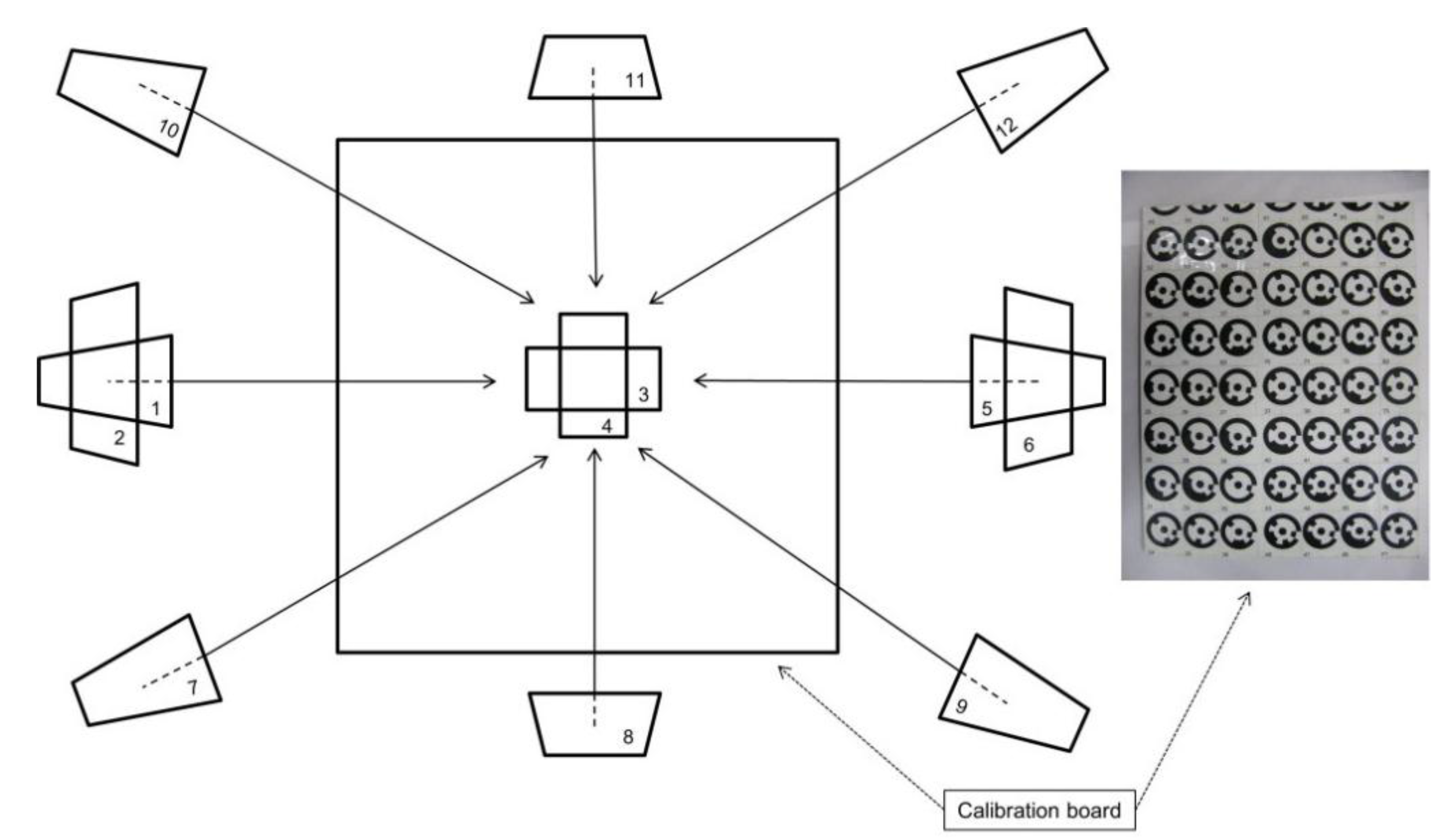

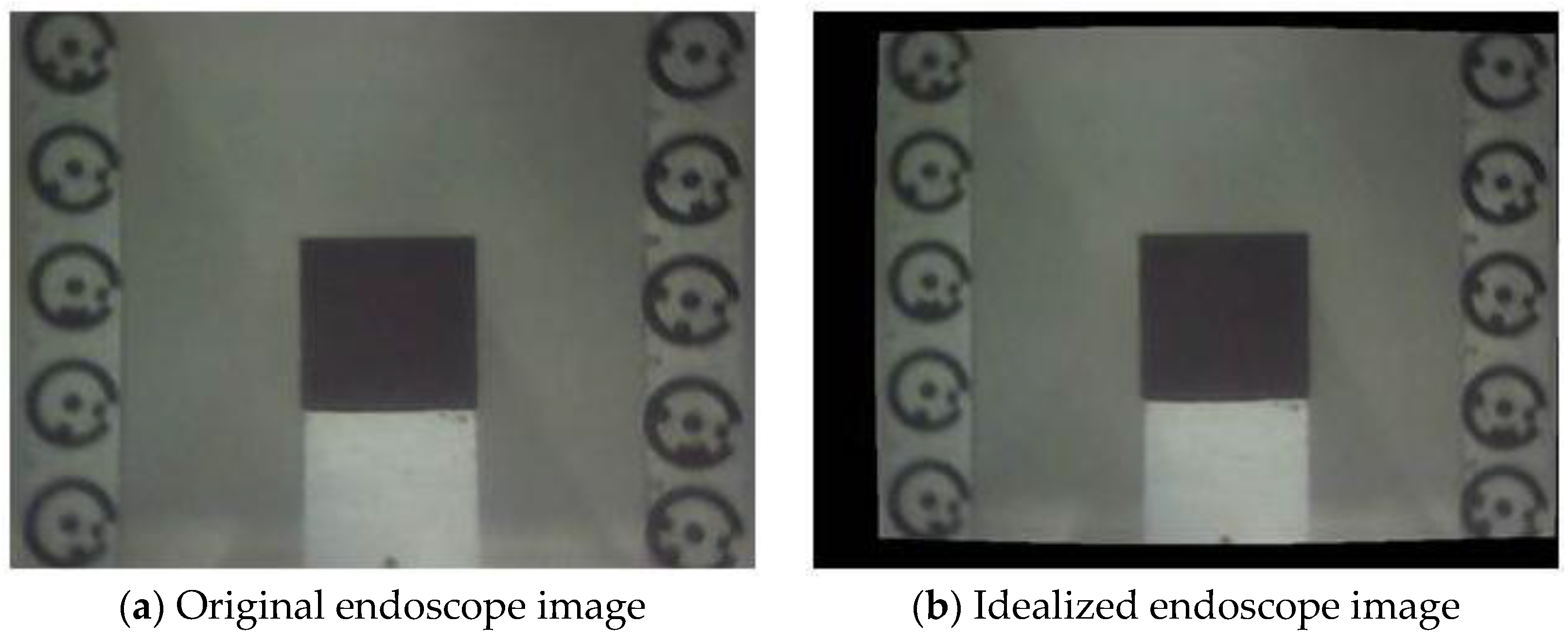

2. Experimental Setup

3. Camera Calibration

4. Results and Discussion

4.1. Dry Case

| Camera Calibration | Area D80 Camera [m²] | Error D80 Camera [%] | Area Endoscope Camera [m²] | Error Endoscope Camera [%] |
|---|---|---|---|---|
| Not calibrated dry | 0.01063 | 0.8 | 0.01040 | 1.4 |
| Calibrated dry | 0.01056 | 0.1 | 0.01056 | 0.1 |
| Not calibrated tank | 0.0096 | 9.0 | | |
| Calibrated tank | 0.0104 | 1.4 | | |
| Not calibrated flume | 0.0098 | 7.1 | | |
| Calibrated flume | 0.0107 | 1.4 | | |

| Camera Calibration | Area D80 Camera [m²] | Area Endoscope Camera [m²] | Difference D80-Endoscope [%] |
|---|---|---|---|
| Not calibrated dry | 0.0324 | 0.0333 | 2.7 |
| Calibrated dry | 0.0318 | 0.0317 | 0.3 |

4.2. Plastic Water Tank

| Camera Calibration | Area Bush Submerged [m²] | Area Bush Dry [m²] | Difference Submerged-Dry [%] |
|---|---|---|---|
| Not calibrated tank | 0.0292 | 0.0333 | 12.3 |
| Calibrated tank | 0.0312 | 0.0317 | 1.6 |
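The difference column in the water tank table can be checked with a few lines of arithmetic. The sketch below is illustrative only. It assumes the difference is the absolute gap between the submerged and dry areas, expressed as a percentage of the dry area. That normalization is not stated in the table and is only inferred because it reproduces the rounded values. The function name `percent_difference` is hypothetical.

```python
def percent_difference(area, reference_area):
    """Absolute difference between two areas as a percentage of reference_area.

    Assumption: the dry-case area is used as the reference (not stated in the table).
    """
    return abs(area - reference_area) / reference_area * 100.0


# (submerged area, dry area) in square metres, copied from the water tank table
tank_rows = {
    "Not calibrated tank": (0.0292, 0.0333),
    "Calibrated tank": (0.0312, 0.0317),
}

for label, (submerged, dry) in tank_rows.items():
    print(f"{label}: {percent_difference(submerged, dry):.1f} %")
# prints:
# Not calibrated tank: 12.3 %
# Calibrated tank: 1.6 %
```

Under this assumption, calibration reduces the gap between submerged and dry areas from about 12 % to under 2 %, matching the tabulated figures.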

4.3. Open-Channel Flume

| Camera Calibration | Area Bush Submerged [m²] | Area Bush Dry [m²] | Difference Flowing-Dry [%] |
|---|---|---|---|
| Not calibrated flume | 0.0284 | 0.0333 | 17.3 |
| Calibrated flume | 0.0299 | 0.0317 | 5.7 |
| Difference calibrated-not calibrated [%] | 5.0 | 4.9 | |

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wackrow, R.; Ferreira, E.; Chandler, J.; Shiono, K. Camera Calibration for Water-Biota Research: The Projected Area of Vegetation. Sensors 2015, 15, 30261-30269. https://doi.org/10.3390/s151229798
