Can Smartwatches Replace Smartphones for Posture Tracking?

Abstract

1. Introduction

2. Related Works

2.1. Activity Recognition

2.2. Wearable Activity Recognition

2.3. Smartwatch Activity Recognition

3. Smartwatch Tracking System

3.1. Hardware Platform and Data Collection

3.2. Feature Extraction and Selection

| Phase | Movement State | Activity Description |
|---|---|---|
| Transitions | Sit-Stand | Minimal Movement Transition |
| | Stand-Sit | |
| | Sit-Lie | |
| | Lie-Sit | |
| | Stand-Lie | |
| | Lie-Stand | |
| Activities of Daily Living | Standing | Using Phone (10 s) |
| | | Brushing Teeth (10 s) |
| | | Lifting Cup (10 times) |
| | | Swinging Arms (10 times) |
| | | Walk (10 s) |
| | | Open Door (10 times) |
| | | Look at Watch (10 times) |
| | | Clean with Broom (10 s) |
| | Sitting | Typing (10 s) |
| | | Reading Book (10 s) |
| | | Brushing Teeth (10 s) |
| | | Look at Watch (10 times) |
| | | Bicep Curl (10 times) |
| | | Use TV Remote (10 s) |
| | Lying | Adjust Pillow (10 s) |
| | | Text with Phone (10 s) |
| | | Adjust in Bed (10 s) |
| | | Reading Book (10 s) |
| | | Adjust Blanket (10 s) |
| | Walk | Step Forward (10 times) |
| | | Step Backward (10 times) |

| Feature | Description (Domain) |
|---|---|
| Minimum | Minimum value obtained over the movement window (time) |
| Maximum | Maximum value obtained over the movement window (time) |
| Sum | Sum of values obtained over the movement window (time) |
| Mean | Mean value obtained over the movement window (time) |
| Standard Deviation | Standard deviation of values obtained over the movement window (time) |
| Kurtosis | Peakedness of the distribution (time) |
| Skewness | Asymmetry of the distribution (time) |
| Energy | Calculation of the energy (sum of the absolute value of the FFT components) (frequency) |
| Variance | Variance of values obtained over the movement window (time) |
| Median | Median value obtained over the movement window (time) |
| Root Mean Square (RMS) | Root mean square of values over the movement window (time) |
| Average Difference | Average difference of values (pairwise) in window (time) |
| Interquartile Range | Dispersion of data and elimination of outlier points (time) |
| Zero Crossing Rate | Rate of sign changes in signal (time) |
| Mean Crossing Rate | Rate of crossing the mean value of signal (time) |
| Eigenvalues of Dominant Directions | Corresponds to dominant direction of movement (time) |
| CAGH | Correlation coefficient of acceleration between gravity and heading directions (time) |
| Average Mean Intensity | Mean intensity of the signal (time) |
| Average Rotation Angles | Calculates rotation based on gravity (time) |
| Dominant Frequency | Dominant frequency in transform (frequency) |
| Peak Difference | Peak difference of frequencies (frequency) |
| Peak RMS | Root mean square of peak frequencies (frequency) |
| Root Sum of Squares | Root sum squares of frequencies (frequency) |
| First Peak (Energy) | First peak found in energy (frequency) |
| Second Peak (Energy) | Second peak found in energy (frequency) |
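
Several of the tabulated features are simple statistics over a single movement window, so a short sketch may help make the definitions concrete. The following is a minimal illustration in Python with NumPy, not the authors' implementation. The function names `window_features` and `crossing_rate` are hypothetical, the window is assumed to be one axis of one sensor, and two details are assumptions where the table is silent: the population form (`ddof=0`) of standard deviation and variance, and the one-sided FFT for the energy sum.

```python
import numpy as np


def crossing_rate(x):
    """Fraction of consecutive sample pairs whose sign differs."""
    x = np.asarray(x, dtype=float)
    if x.size < 2:
        return 0.0
    sign_changes = np.signbit(x[1:]) != np.signbit(x[:-1])
    return float(np.count_nonzero(sign_changes)) / (x.size - 1)


def window_features(x):
    """Compute a subset of the tabulated features for one movement window.

    x is a 1-D sequence of samples (assumed: one axis of one sensor).
    """
    x = np.asarray(x, dtype=float)
    # Assumption: one-sided FFT magnitudes; the table only says
    # "sum of the absolute value of the FFT components".
    spectrum = np.abs(np.fft.rfft(x))
    q75, q25 = np.percentile(x, [75, 25])
    return {
        "minimum": float(x.min()),
        "maximum": float(x.max()),
        "sum": float(x.sum()),
        "mean": float(x.mean()),
        "std": float(x.std()),        # assumption: population std (ddof=0)
        "variance": float(x.var()),   # assumption: population variance
        "median": float(np.median(x)),
        "rms": float(np.sqrt(np.mean(x ** 2))),
        "iqr": float(q75 - q25),
        "zero_crossing_rate": crossing_rate(x),
        "mean_crossing_rate": crossing_rate(x - x.mean()),
        "energy": float(spectrum.sum()),
    }


if __name__ == "__main__":
    # Toy window for illustration only; not data from the study.
    for name, value in window_features([1.0, -1.0, 1.0, -1.0]).items():
        print(f"{name}: {value:.3f}")
```

In practice such a function would be applied per axis and per sensor, and the resulting values concatenated into one feature vector per window.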

3.3. Training the Algorithm

3.4. Testing the Algorithm

Cross-Validation

4. Results

4.1. Experimental Setup

4.2. Summary View

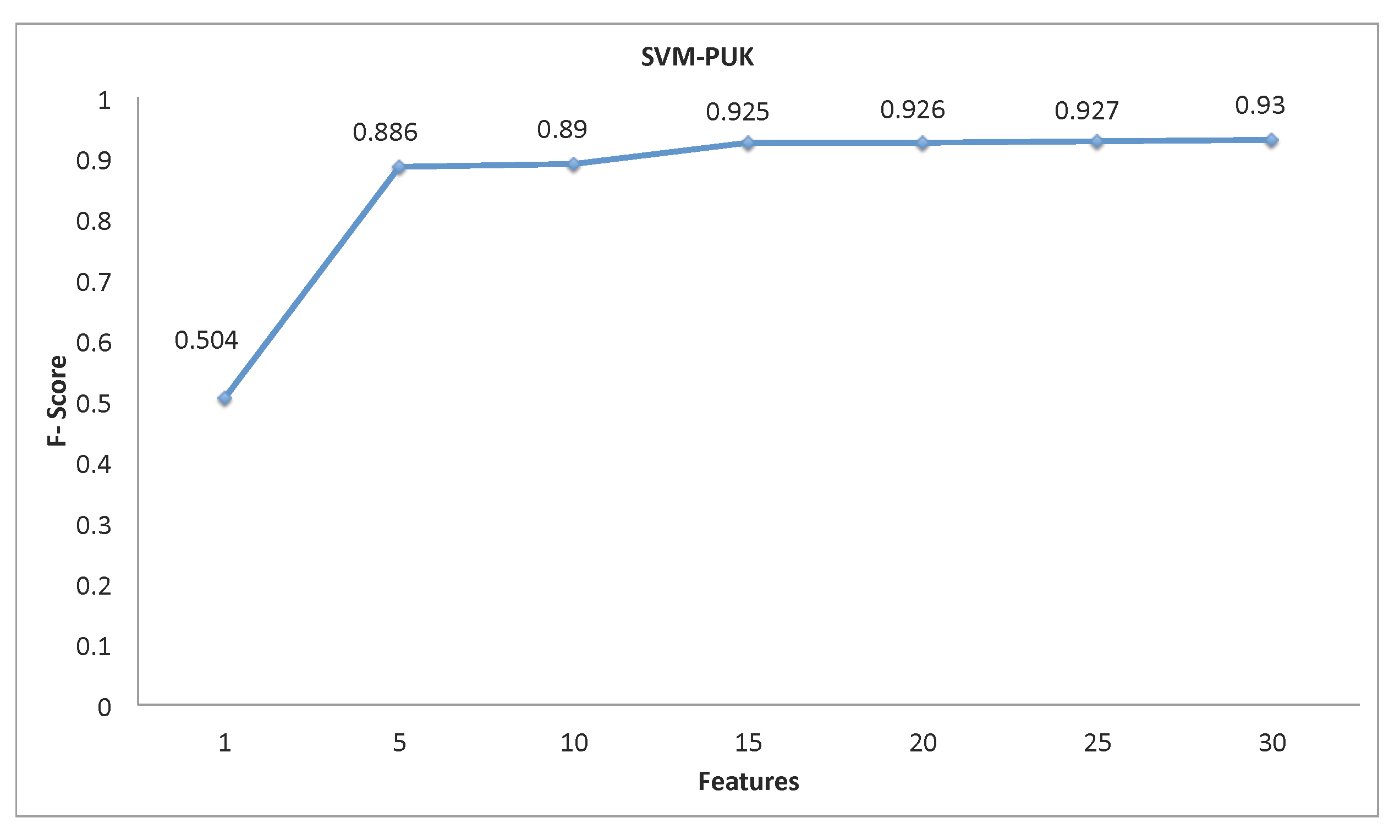

4.3. Feature Selection

| Features 1–10 | Features 11–20 | Features 21–30 |
|---|---|---|
| Average Difference () | Mean () | Mean () |
| Average Difference () | Sum () | Sum () |
| Median of Intensity of Gyroscope () | Eigenvalues () | Dominant Frequency () |
| Mean () | Root Mean Square () | Energy () |
| Sum () | Energy () | Root Mean Square () |
| Dominant Frequency () | Root Sum of Squares () | Root Sum of Squares () |
| Energy () | Standard Deviation () | Peak Difference () |
| Root Sum of Squares () | Variance () | Peak Difference () |
| Root Mean Square () | Variance () | Dominant Frequency () |
| Peak Difference () | Standard Deviation () | First Peak () |

4.4. Cross-Validation Results

4.5. Comparison of Methods

| Algorithm | F-Score |
|---|---|
| SVM (PUK) | 0.930 |
| SVM (RBF) | 0.812 |
| pADL (Accel Only) | 0.702 |
| pADL (Accel + Gyro) | 0.783 |
| wADL (Accel Only) | 0.814 |
| wADL (Accel + Gyro) | 0.908 |
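
For readers unfamiliar with the metric in the table above, the F-score combines precision and recall into a single value. The sketch below shows the standard definition in Python. The helper names `f_score` and `macro_f_score` are hypothetical, and the macro averaging over classes is an assumption made for illustration; this section does not state which averaging scheme produced the reported values.

```python
def f_score(tp, fp, fn, beta=1.0):
    """F-beta score from true positives, false positives, false negatives.

    With beta=1 this is the harmonic mean of precision and recall.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        return 0.0
    b2 = beta ** 2
    return (1.0 + b2) * precision * recall / (b2 * precision + recall)


def macro_f_score(per_class_counts, beta=1.0):
    """Unweighted mean of per-class F-scores.

    per_class_counts is a list of (tp, fp, fn) tuples, one per class.
    Assumption: macro averaging; other schemes (e.g., weighted) exist.
    """
    scores = [f_score(tp, fp, fn, beta) for tp, fp, fn in per_class_counts]
    return sum(scores) / len(scores) if scores else 0.0


if __name__ == "__main__":
    # Toy counts for illustration only; not results from the study.
    print(f_score(tp=8, fp=2, fn=2))
    print(macro_f_score([(8, 2, 2), (5, 0, 5)]))
```

Because the F-score penalizes both missed detections and false alarms, it is less sensitive to class imbalance than plain accuracy, which matters when some postures occur far more often than others.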

5. Discussion

5.1. Review of the Results

5.2. Limitations and Future Work

| ECOG Value | ECOG Description |
|---|---|
| 0 | Fully active, able to carry on all pre-disease performance without restriction. |
| 1 | Restricted in physically strenuous activity, but ambulatory and able to carry out work of a light or sedentary nature. |
| 2 | Ambulatory and capable of all self-care, but unable to carry out any work activities. Up and about more than 50% of waking hours. |
| 3 | Capable of only limited self-care, confined to bed or chair more than 50% of waking hours. |
| 4 | Completely disabled. Cannot carry out self-care. Totally confined to bed or chair. |

5.3. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Mortazavi, B.; Nemati, E.; VanderWall, K.; Flores-Rodriguez, H.G.; Cai, J.Y.J.; Lucier, J.; Naeim, A.; Sarrafzadeh, M. Can Smartwatches Replace Smartphones for Posture Tracking? Sensors 2015, 15, 26783-26800. https://doi.org/10.3390/s151026783
