Machine Learning Approach to Metabolomic Data Predicts Type 2 Diabetes Mellitus Incidence

Abstract

1. Introduction

2. Results

2.1. Patient Characteristics

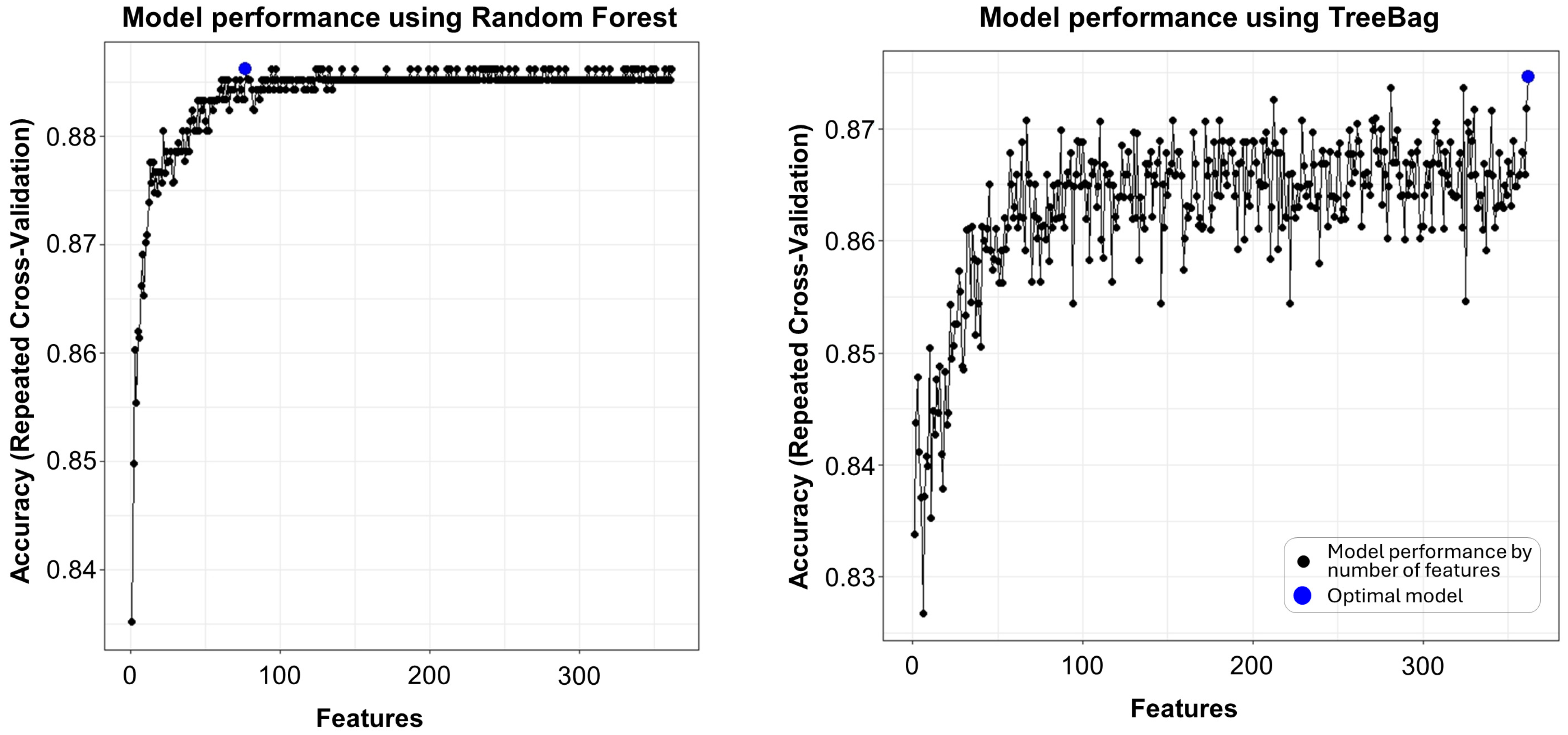

2.2. Feature Selection

2.3. ML Model Comparison

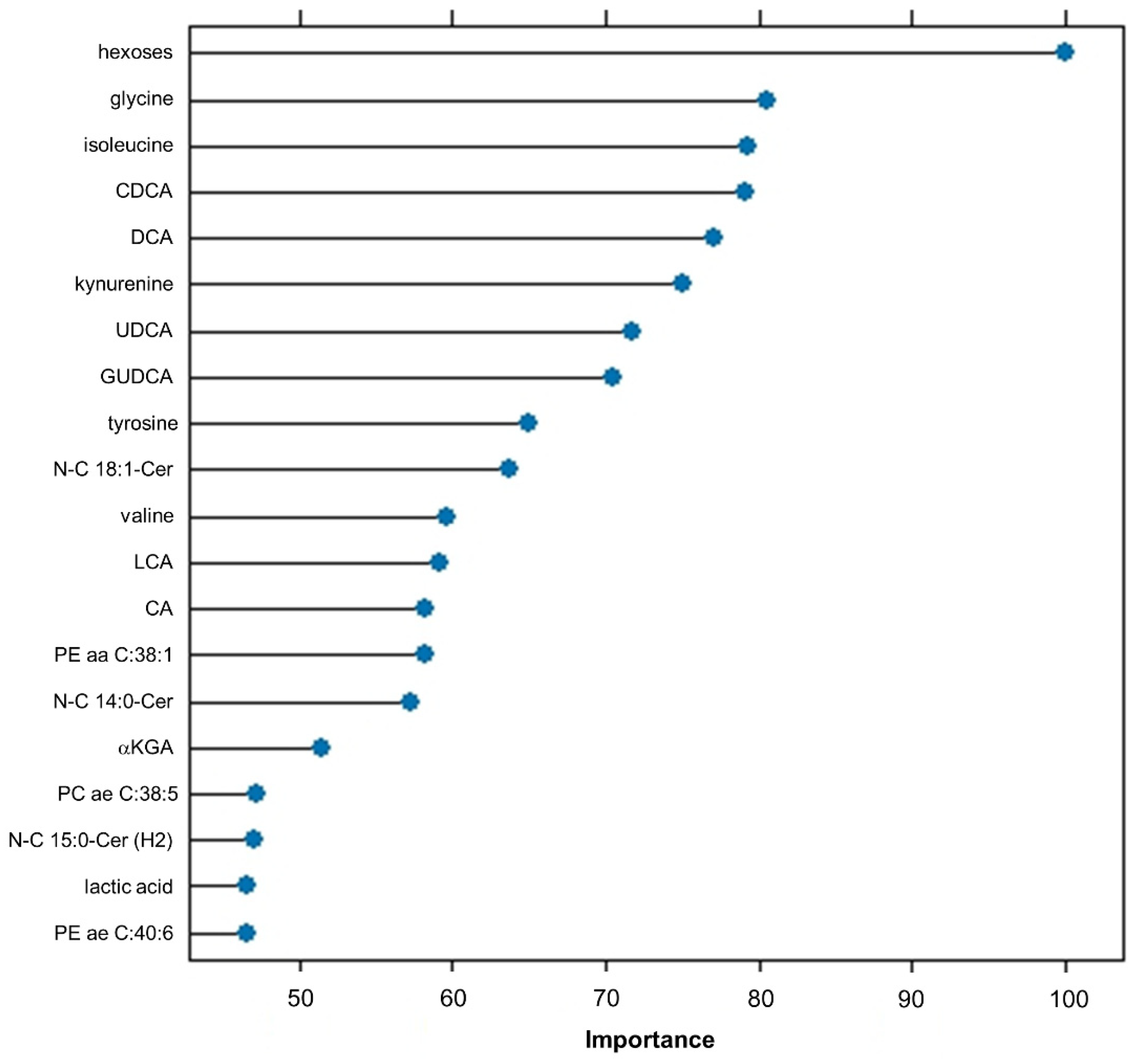

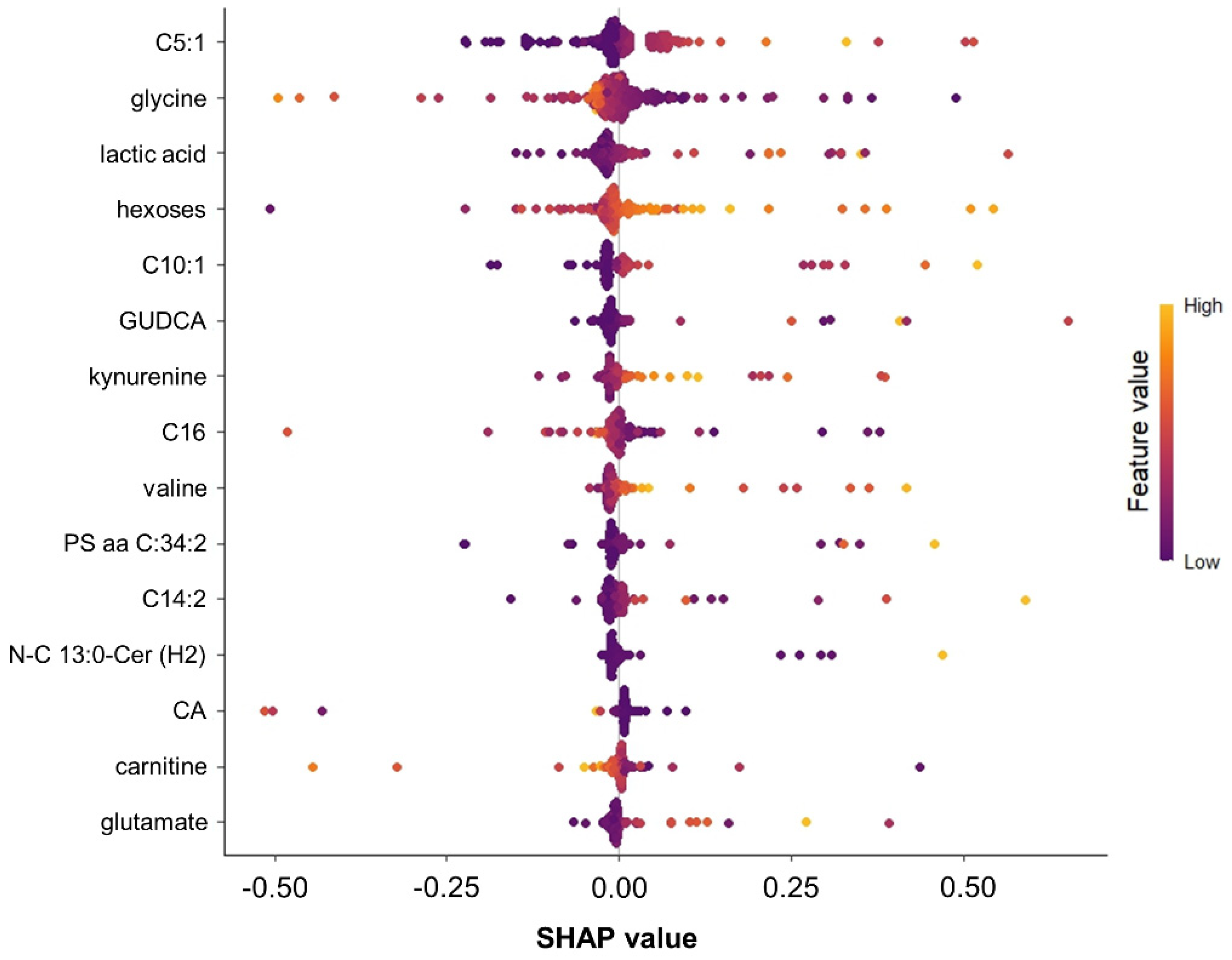

2.4. Important Features Ranking

2.5. Validation Model Output with a Linear Regression Approach

3. Discussion

3.1. Main Findings

3.2. The Role of Metabolites in Predicting New-Onset Diabetes

3.3. The Role of ML Models in Predicting New-Onset Diabetes

3.4. Strengths and Limitations

4. Materials and Methods

4.1. Study Subjects and Patient Selection for Metabolomic Analysis

4.2. Data Analysis Steps

4.2.1. Preprocessing and Recursive Feature Elimination

4.2.2. Statistical Analysis

4.2.3. Managing Data Imbalance

4.2.4. ML Analysis for Predictive Classification Modeling

4.2.5. ML Model Performance Evaluation

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Total | No T2DM Incidence | T2DM Incidence | p-Value |
|---|---|---|---|---|
| | n = 279 | n = 247 | n = 32 | |
| Age (years) * | 65 [59–73] | 65 [59–74] | 68 [66–72] | 0.460 |
| Male sex (%) * | 50 | 49 | 59 | 0.269 |
| BMI (kg/m²) * | 28 [25–31] | 28 [25–30] | 29 [27–31] | 0.155 |
| Waist circumference (cm) * | 99 [93–106] | 99 [92–106] | 102 [96–109] | 0.083 |
| Waist–hip ratio * | 0.97 [0.91–1.00] | 0.96 [0.91–1.00] | 0.98 [0.94–1.02] | 0.203 |
| LDL-C (mg/dL) | 134 [107–162] | 134 [105–162] | 135 [115–165] | 0.495 |
| Fasting glucose (mg/dL) | 98 [90–106] | 97 [90–105] | 108 [99–115] | <0.001 |
| HbA1c (%) | 5.70 [5.50–5.90] | 5.70 [5.50–5.90] | 5.85 [5.70–6.00] | 0.052 |
| Hypertension (%) | 89 | 87 | 97 | 0.115 |
| Smoking, current (%) | 19 | 17 | 28 | 0.143 |
| Statin treatment (%) | 42 | 41 | 44 | 0.791 |

| Function (ML Algorithm) | Validation Technique | Range of Input Variable Subsets (n) | Size of Best Model (n) | Top 5 Variables |
|---|---|---|---|---|
| rfFuncs (Random Forest) | Repeated CV (10-fold repeated 5 times) | 1 to 362 | 77 | N-C15:0-OH-Cer, N-C19:0-Cer, N-C15:1-Cer, Hexoses, C14:2 |
| treebagFuncs (Treebag) | Repeated CV (10-fold repeated 5 times) | 1 to 362 | 362 | Hexoses, TLCAS, N-C15:1-Cer, Gly, C14:2 |
| nbFuncs (Naive Bayes) | Repeated CV (10-fold repeated 5 times) | 1 to 362 | 1 | Hexoses |
| caretFuncs (Caret default) | Repeated CV (10-fold repeated 5 times) | 1 to 362 | 362 | Hexoses, Gly, TLCAS, N-C15:1-Cer, C10:1 |
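The validation technique in the table above, 10-fold cross-validation repeated 5 times, gives 50 resamples per candidate subset size. The following is a minimal standard-library sketch of how such splits can be generated. It is not the caret implementation: the function name `repeated_kfold`, the seed, and the use of 279 samples (the total cohort size, which may differ from the number of subjects actually used for feature elimination) are assumptions made for illustration, and the folds are not stratified by outcome.

```python
import random


def repeated_kfold(n_samples, k=10, repeats=5, seed=0):
    """Yield (repeat, fold, train_idx, test_idx) for repeated k-fold CV.

    Hypothetical helper for illustration; plain (unstratified) folds.
    """
    rng = random.Random(seed)
    for r in range(repeats):
        # Reshuffle once per repeat so each repeat uses a different partition.
        idx = list(range(n_samples))
        rng.shuffle(idx)
        # Deal the shuffled indices into k folds of near-equal size.
        folds = [idx[i::k] for i in range(k)]
        for f, test in enumerate(folds):
            held_out = set(test)
            train = [i for i in idx if i not in held_out]
            yield r, f, train, test


# Assumed sample count: the full cohort of 279 subjects.
splits = list(repeated_kfold(n_samples=279, k=10, repeats=5))
print(len(splits))  # number of resamples: k * repeats
```

Within each repeat every subject is held out exactly once, so a performance estimate for a given subset size is averaged over all 50 held-out folds.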

| ML Algorithm (Method) | Variable Set (n) | F1 | Accuracy | Balanced Accuracy | Precision | Sens. | Spec. | AUC |
|---|---|---|---|---|---|---|---|---|
| Random Forest (“rf”) | 362 | 0% | 88.4% | 50.0% | 0% | 0% | 100% | 0.5789 |
| Random Forest (“rf”) | 77 | 22.2% | 89.9% | 56.3% | 100% | 12.5% | 100% | 0.5840 |
| Rotation Forest with complexity parameter tuning (“rotationForestCp”) | 362 | 40.0% | 91.3% | 62.5% | 100% | 25.0% | 100% | 0.7039 |
| Rotation Forest with complexity parameter tuning (“rotationForestCp”) | 77 | 16.7% | 85.5% | 53.8% | 25.0% | 12.5% | 95.1% | 0.7295 |
| Naive Bayes classifier (“naive_bayes”) | 362 | 20.8% | 11.6% | 50.0% | 11.6% | 100% | 0% | 0.5000 |
| Naive Bayes classifier (“naive_bayes”) | 77 | 0% | 85.5% | 48.4% | 0% | 0% | 96.7% | 0.4344 |
| Multi-Layer Perceptron (“mlp”) | 362 | 38.5% | 76.8% | 70.6% | 27.8% | 62.5% | 78.7% | 0.6516 |
| Multi-Layer Perceptron (“mlp”) | 77 | 40.0% | 87.0% | 65.5% | 42.9% | 37.5% | 93.4% | 0.7213 |
| Feedforward Neural Networks with a Principal Component Step (“nnet”) | 362 | 33.3% | 82.6% | 63.0% | 30.0% | 37.5% | 88.5% | 0.6814 |
| Feedforward Neural Networks with a Principal Component Step (“nnet”) | 77 | 30.8% | 73.9% | 63.5% | 22.2% | 50.0% | 77.1% | 0.6680 |
| Bootstrap bagging of decision trees (“treebag”) | 362 | 16.7% | 85.5% | 53.8% | 25.0% | 12.5% | 95.1% | 0.5666 |
| Bootstrap bagging of decision trees (“treebag”) | 77 | 23.5% | 81.2% | 56.8% | 22.2% | 25.0% | 88.5% | 0.6250 |
| Support Vector Machine with linear kernels (“svmLinear”) | 362 | 34.8% | 78.3% | 66.0% | 26.7% | 50.0% | 82.0% | 0.5430 |
| Support Vector Machine with linear kernels (“svmLinear”) | 77 | 30.8% | 73.9% | 63.5% | 22.2% | 50.0% | 77.0% | 0.6352 |
| Support Vector Machine with 2 linear kernels (“svmLinear2”) | 362 | 34.8% | 78.3% | 66.0% | 26.7% | 50.0% | 82.0% | 0.5389 |
| Support Vector Machine with 2 linear kernels (“svmLinear2”) | 77 | 50.0% | 88.4% | 71.7% | 50.0% | 50.0% | 93.4% | 0.7254 |
| Tree-based extreme gradient boosting (“xgbTree”) | 362 | 36.4% | 89.9% | 61.7% | 66.7% | 25.0% | 98.4% | 0.6680 |
| Tree-based extreme gradient boosting (“xgbTree”) | 77 | 34.8% | 78.3% | 66.0% | 26.7% | 50.0% | 82.0% | 0.6270 |
| Gradient boosting on decision trees (“catboost”) | 362 | 0% | 88.4% | 50.0% | 0% | 0% | 100% | 0.4795 |
| Gradient boosting on decision trees (“catboost”) | 77 | 33.3% | 87.0% | 60.9% | 50.0% | 25.0% | 96.7% | 0.6311 |
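The threshold-based columns of the table above (F1, accuracy, balanced accuracy, precision, sensitivity, specificity) all follow from four confusion-matrix counts. The sketch below shows that arithmetic in plain Python. The counts are assumptions: the table does not report them, but a hold-out set of 69 subjects with 8 incident cases is consistent with the reported percentages, and the names `Confusion`, `ratio` and `metrics` are hypothetical. Ratios with a zero denominator are reported as 0, which matches the 0% precision shown for models that never predict incident T2DM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Confusion-matrix counts, with incident T2DM as the positive class."""
    tp: int
    fp: int
    fn: int
    tn: int


def ratio(num, den):
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def metrics(c):
    """Compute the threshold-based metrics used in the comparison table."""
    sens = ratio(c.tp, c.tp + c.fn)
    spec = ratio(c.tn, c.tn + c.fp)
    prec = ratio(c.tp, c.tp + c.fp)
    return {
        "f1": ratio(2 * prec * sens, prec + sens),
        "accuracy": ratio(c.tp + c.tn, c.tp + c.fp + c.fn + c.tn),
        "balanced_accuracy": (sens + spec) / 2,
        "precision": prec,
        "sensitivity": sens,
        "specificity": spec,
    }


# Assumed counts chosen to be consistent with the svmLinear2 (n = 77) row.
svm = metrics(Confusion(tp=4, fp=4, fn=4, tn=57))
# Assumed counts for a model that never predicts the positive class.
all_negative = metrics(Confusion(tp=0, fp=0, fn=8, tn=61))

for name, m in (("svmLinear2, 77", svm), ("all negative", all_negative)):
    print(name, {k: round(100 * v, 1) for k, v in m.items()})
```

The second case illustrates why accuracy alone is misleading with roughly 12% incident cases: a model that labels every subject as non-incident still reaches 88.4% accuracy, while balanced accuracy falls to 50% and F1 to 0%.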

| Rank | Feature | Metabolite Class | FIS |
|---|---|---|---|
| 1 | Hexoses | Hexoses | 354 |
| 2 | Gly | Amino acids | 309 |
| 3 | Ile | Amino acids | 259 |
| 4 | CDCA | Bile acids | 258 |
| 5 | GUDCA | Bile acids | 213 |
| 6 | DCA | Bile acids | 211 |
| 7 | N-C15:1-Cer | Ceramides | 177 |
| 8 | UDCA | Bile acids | 140 |
| 9 | Kynurenine | Biogenic amines | 137 |
| 10 | N-C14:1-Cer (2H) | Ceramides | 105 |
| 11 | N-C19:0(OH)-Cer (2H) | Ceramides | 102 |
| 12 | Tyr | Amino acids | 96 |
| 13 | N-C18:1-Cer | Ceramides | 95 |
| 14 | Val | Amino acids | 82 |
| 15 | N-C14:0-Cer | Ceramides | 77 |
| 16 | GCDCA | Bile acids | 76 |
| 17 | C16 | Acylcarnitines | 70 |
| 18 | PE aa C34:0 | Glycerophospholipids | 56 |
| 19 | N-C25:1-Cer | Ceramides | 55 |
| 20 | N-C12:0-Cer | Ceramides | 45 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Leiherer, A.; Muendlein, A.; Mink, S.; Mader, A.; Saely, C.H.; Festa, A.; Fraunberger, P.; Drexel, H. Machine Learning Approach to Metabolomic Data Predicts Type 2 Diabetes Mellitus Incidence. Int. J. Mol. Sci. 2024, 25, 5331. https://doi.org/10.3390/ijms25105331
