Abstract

Nuclear magnetic resonance (NMR) is a crucial technique for analyzing mixtures of small molecules because it is non-destructive, fast, reproducible, and unbiased. However, mixture identification is challenging because chemical shift offsets and peak overlaps often occur in mixtures such as plant flavors. Here, we propose a deep-learning-based mixture identification method (DeepMID) that can identify plant flavors (mixtures) in a formulated flavor (a mixture of several plant flavors) without the need to know the specific components of the plant flavors. A pseudo-Siamese convolutional neural network (pSCNN) and a spatial pyramid pooling (SPP) layer were used to address these problems because of their high accuracy and robustness. The DeepMID model was trained, validated, and tested on an augmented data set containing 50,000 pairs of formulated and plant flavors. DeepMID achieves excellent prediction results on the augmented test set (ACC = 99.58%, TPR = 99.48%, FPR = 0.32%) and on two experimentally obtained data sets (ACC = 97.60%, TPR = 92.81%, FPR = 0.78% for one; ACC = 92.31%, TPR = 80.00%, FPR = 0.00% for the other). In conclusion, DeepMID is a reliable method for identifying plant flavors in formulated flavors based on NMR spectroscopy, which can assist researchers in accelerating the design of flavor formulations.

1. Introduction

Nuclear magnetic resonance (NMR) is an essential tool for obtaining valuable information about the composition, structure, and dynamics of molecules. It can analyze mixtures composed of small molecules, offering non-destructive, rapid, and accurate advantages [1]. NMR can detect most organic compounds, making it widely applicable in various fields such as chemistry [2], medicine [3], metabolomics [4,5], food [6], and flavors [7]. NMR is considered less sensitive than mass spectrometry [8,9]. However, it has higher repeatability and can serve as a fingerprint technology to compare, differentiate, or classify samples. Extracting meaningful information from complex spectral data of mixtures is an interesting research area. Metabolomics technology based on NMR [10] has been developed to analyze the composition of multiple metabolites in biological fluids, cells, and tissues. These metabolites contain bioactive small molecules that can be widely applied in various industrial fields, ranging from fragrances, flavors, and sweeteners to natural insecticides and pharmaceuticals [11]. There is a wide variety of small molecule metabolites, many of which are produced through plant secondary metabolism pathways [12]. This technology has led to using NMR fingerprinting and profiling to study plant metabolites such as flavors and fragrances [13,14]. The NMR spectra generated from these complex samples should be analyzed using data processing methods [15], even machine learning [16] and deep learning methods [17] in recent years.

Machine learning is a critical component of artificial intelligence [18,19]. It enables machines to learn and extract information and feature patterns from vast amounts of data for further processing [20,21,22,23,24,25]. Recently, machine learning has witnessed significant advancements due to the availability of enhanced computational resources and novel deep learning algorithms [26,27]. These developments have enabled researchers to better deal with the challenges in chemistry, especially analytical chemistry [28], such as near infrared spectroscopy [29], Raman spectroscopy [30,31,32,33], mass spectrometry [34,35,36,37,38,39,40], chromatography [41,42,43,44,45], and ion mobility spectrometry [46,47,48]. Various machine learning methods have also been gradually applied in NMR spectroscopy [49,50], including complex mixture analysis in omics [16,51]. Recent advances in deep learning have attracted attention in various fields of NMR, including fast field homogenization [52], spectrum reconstruction [53], peak picking [54,55], denoising [56], chemical shift prediction [57], functional group recognition [58], protein assignments [59], and mixture component identification [60].

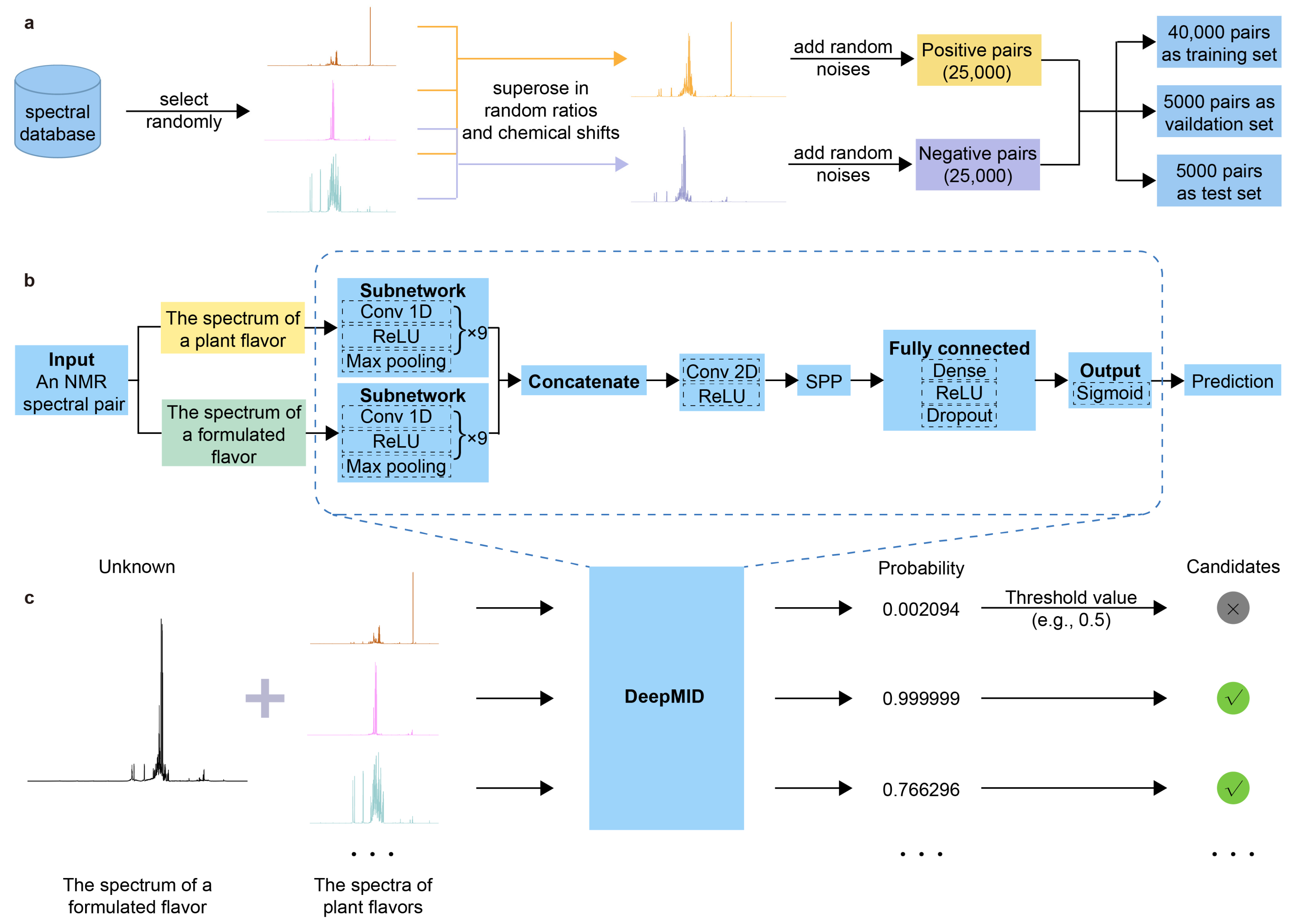

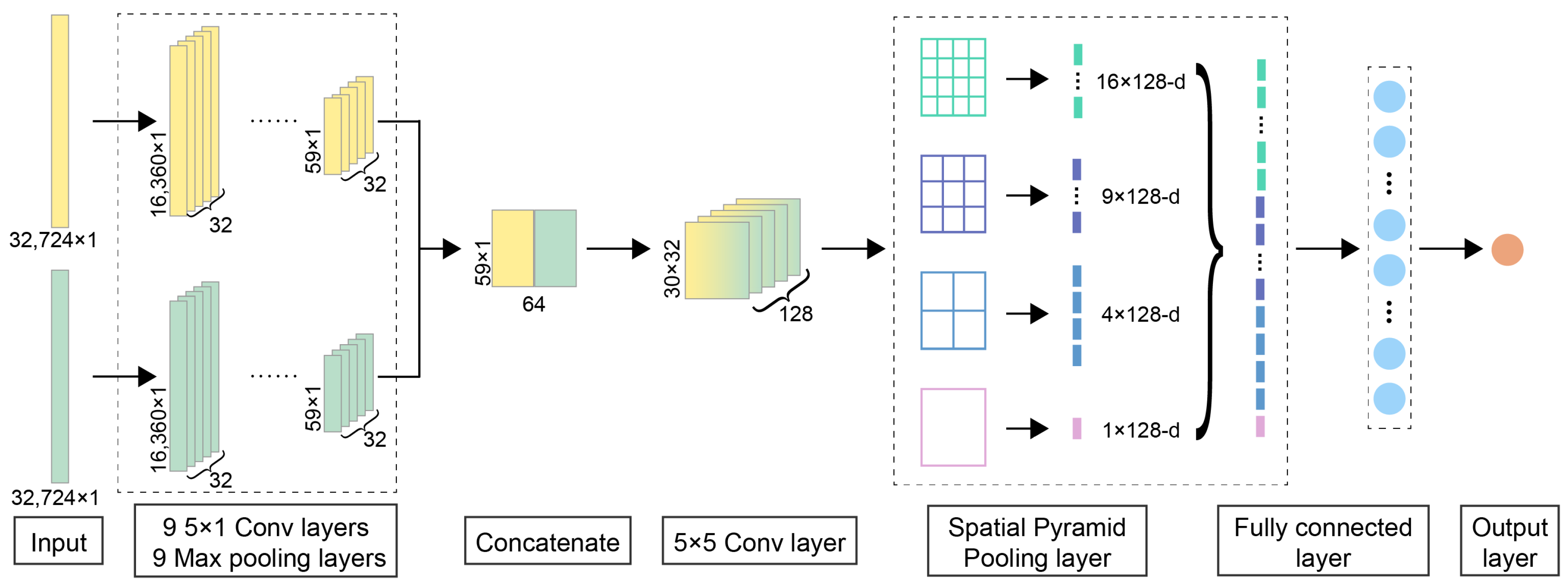

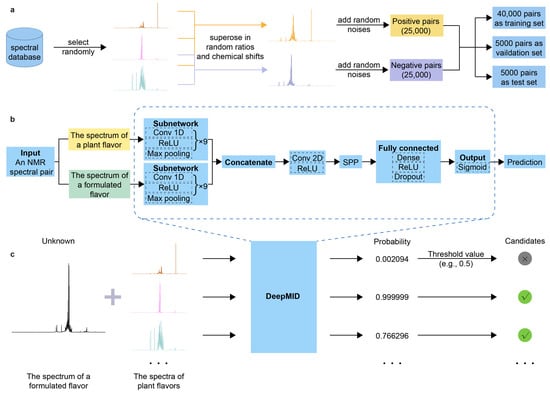

In this study, a method named DeepMID was developed for identifying plant flavors in a formulated flavor with NMR spectroscopy, inspired by the pseudo-Siamese convolutional neural network (pSCNN) [61] and DeepRaman [62]. The schematic diagram of DeepMID is shown in Figure 1. It consists of three main components: data augmentation, a pseudo-Siamese convolutional neural network, and model-based mixture identification. As shown in Figure 1a, the NMR spectra from the plant flavor database were augmented to form NMR spectral pairs, which were then fed into the network. The data augmentation is performed by superimposing several NMR spectra sampled from the plant flavor database at random ratios and adding random noise to generate negative and positive spectral pairs. Then, the data set is divided into a training set, a validation set, and a test set. Figure 1b shows the network architecture of DeepMID, which takes NMR spectral pairs as inputs, extracts high-level features using convolutional layers, reorganizes these features in the SPP layer, and finally predicts the probability of plant flavors in the formulated flavors with dense layers. Figure 1c shows the mixture identification based on the DeepMID model, which predicts the probability of each plant flavor in a mixture of plant flavors. The possible plant flavors of the formulated flavor can be obtained by filtering the predicted probabilities using a threshold value. The principles of each part of the method are described in Section 3.

Figure 1.

Schematic diagram of DeepMID. (a) A spectral database is used for data augmentation, and 50,000 augmented spectral pairs are used for model training, validation, and testing. (b) The network architecture of DeepMID. (c) DeepMID-based mixture identification.

2. Results and Discussion

2.1. Implementation, Optimization, and Training of DeepMID

The neural networks were implemented in Python (version 3.10.5) and TensorFlow (version 2.5.0-GPU). The NMR spectra were read into Python by nmrglue (version 0.8) [63]. The computing tasks were submitted to the Inspur TS10000 high-performance computing (HPC) cluster of Central South University using the Slurm workload manager (version 20.02.3). This HPC cluster has 1022 central processing unit (CPU) nodes, 10 fat nodes, and 26 graphics processing unit (GPU) nodes. The DeepMID models were trained on a GPU node with 2 × Intel(R) Xeon(R) Gold 6248R processors, 2 × Nvidia Tesla V100s, 384 GB of DDR4 memory, and a CentOS 7.5 operating system.

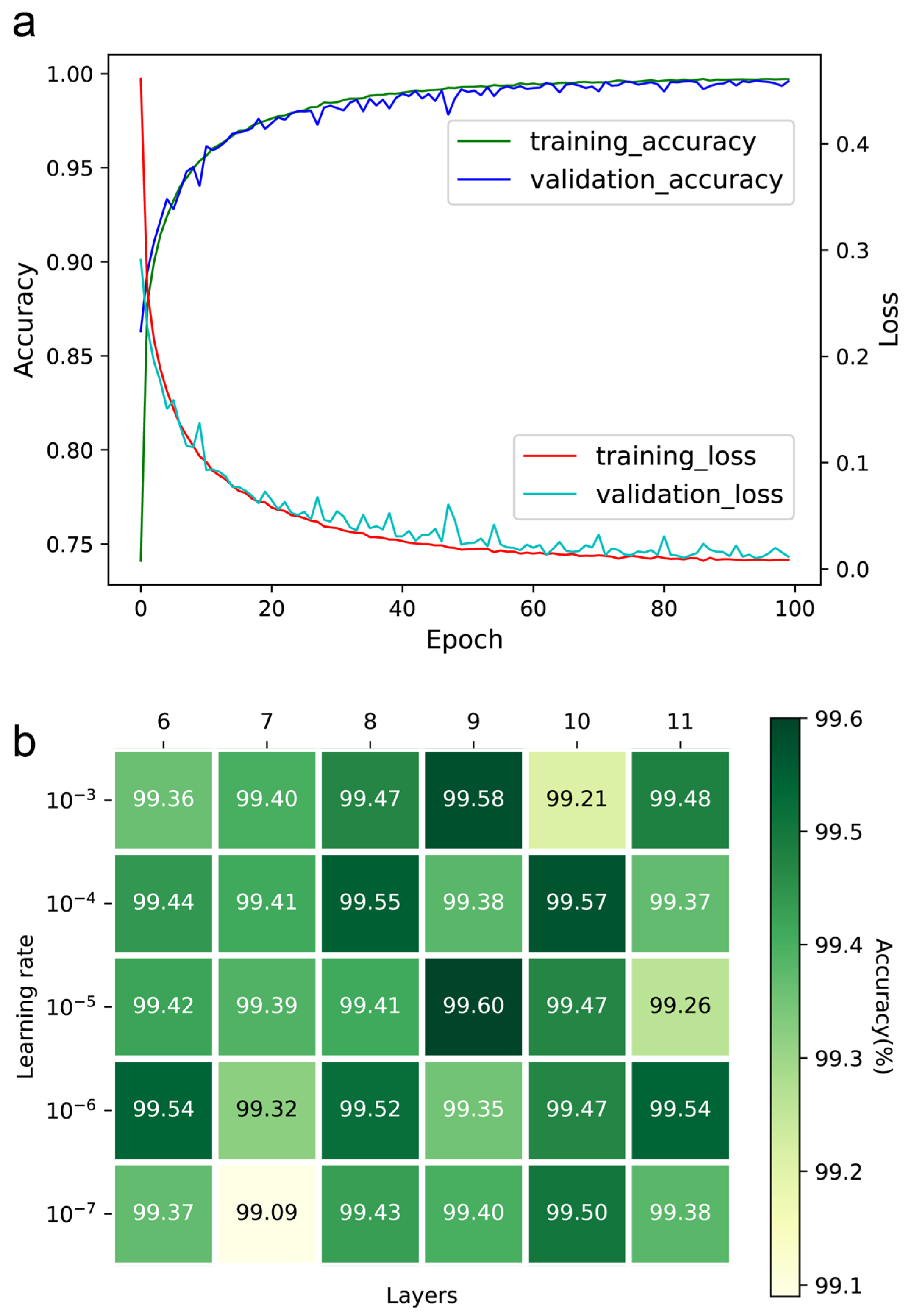

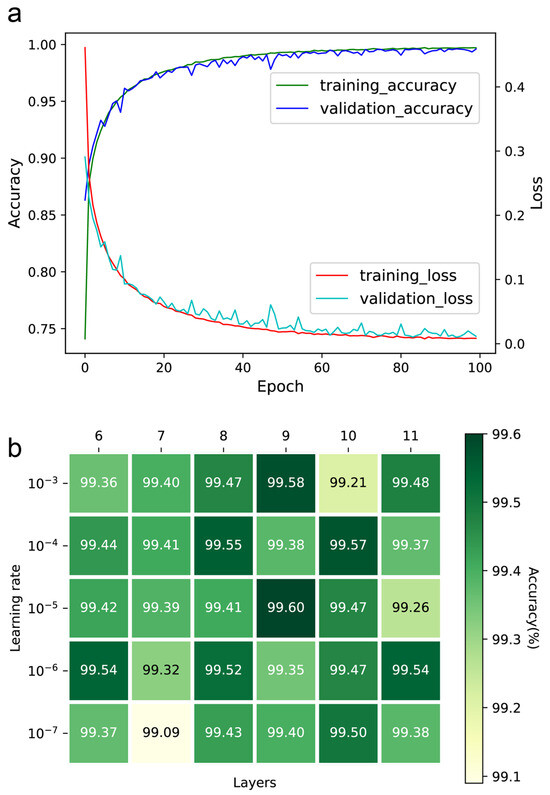

The number of epochs, learning rate, and number of convolution layers were optimized to ensure the performance of the DeepMID model. The accuracy and loss curves of the training and validation sets can be found in Figure 2a. Ultimately, the number of epochs was set to 100, as further increases did not significantly contribute to accuracy. Setting the learning rate too low will increase training time and may result in overfitting, while setting it too high makes it difficult for the model to converge. The learning rates were optimized in the range from $10^{-3}$ to $10^{-7}$, while the number of convolution layers ranged from 6 to 11. The accuracies of the models are shown in Figure 2b. The highest accuracy was achieved with a learning rate of $10^{-5}$ and 9 convolution layers. Thus, the learning rate was set to $10^{-5}$, and the number of convolution layers was set to 9.

Figure 2.

Hyperparameter optimization of the DeepMID model. (a) The accuracy curves and loss curves of the training set and validation set. (b) The accuracy of different models on the validation set with different hyperparameters.

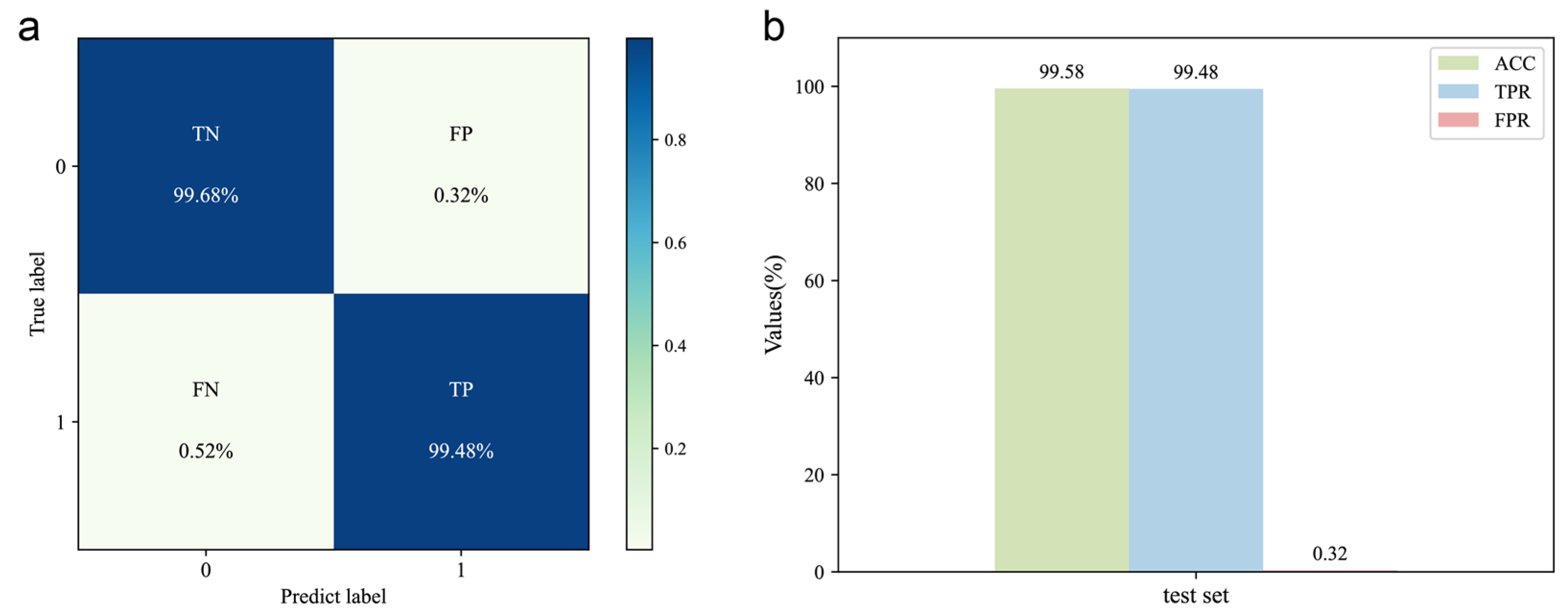

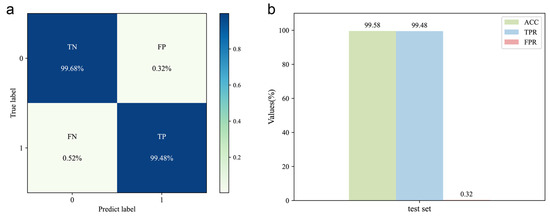

2.2. Evaluation of the DeepMID Model

The DeepMID model was trained on the training set (40,000 data pairs) obtained by data augmentation, and the hyperparameters were optimized on the validation set (5000 data pairs). The confusion matrix obtained on the test set is shown in Figure 3a, and the corresponding evaluation results are shown in Figure 3b. The results show ACC = 99.58%, TPR = 99.48%, and FPR = 0.32%, indicating high accuracy and generalization capability. Therefore, the model can be used for mixture identification of the formulated flavors.

Figure 3.

Evaluation of the DeepMID model. (a) Confusion matrix of the DeepMID model on the test set. (b) Performance evaluation on the test set.

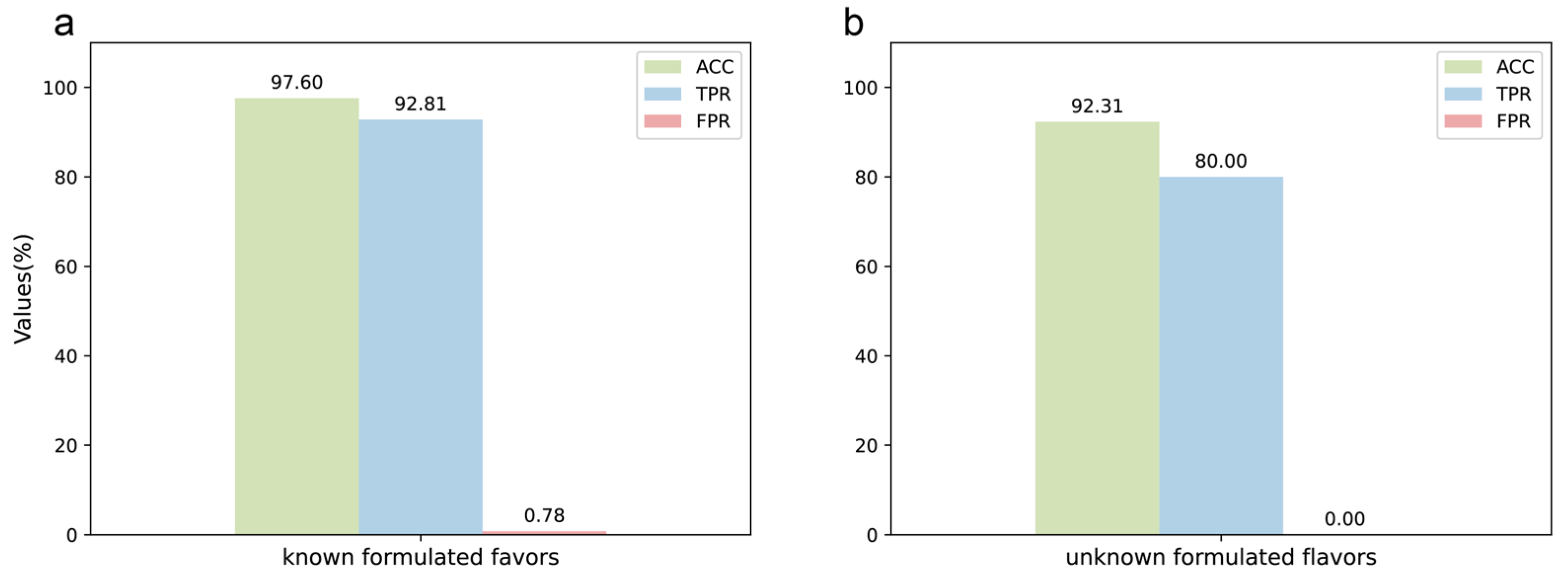

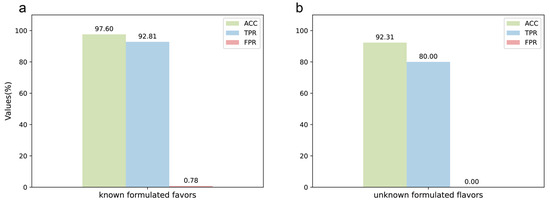

2.3. Results of Mixture Identification

The DeepMID model performed well on the augmented test set, so it was further applied to the known formulated flavor data set. The known formulated flavors were made by experimentally blending plant flavors together. Because their formulations are known, they can be used to test the performance of the model on real experimental data. The preparation methods of the known formulated flavors are described in Section 4.2, and their formulations are listed in Table S1. The result is shown in Figure 4a: ACC = 97.60%, TPR = 92.81%, and FPR = 0.78%. The detailed results can be found in Table S2. The model has high accuracy and specificity on the experimental data set and can identify plant flavors in the formulated flavors well without the need to know the specific components of the plant flavors.

Figure 4.

Application of the DeepMID model. (a) The result of the known formulated flavors. (b) The result of the unknown formulated flavors.

2.4. Elucidation of Unknown Formulated Flavors

Finally, the model was used to predict candidates of unknown formulated flavors provided by the Technology Center of China Tobacco Hunan Industrial Co., Ltd, Changsha, China. The unknown formulated flavors were used for practical applications, and the formulations of these formulated flavors were unknown before submitting the results. Therefore, the unknown formulated flavors data set could be used to check the performance of the model in practical applications, including ACC, TPR, and FPR. As Figure 4b shows, ACC = 92.31%, TPR = 80.00%, and FPR = 0.00%. The information on the unknown formulated flavors is shown in Table S3. There were two unknown formulated flavors, L1 and L2. L1 was predicted by DeepMID first, and it was found that all the plant flavors predicted were correct against the results sent by the Technology Center of China Tobacco Hunan Industrial Co., Ltd, Changsha, China. The result on L1 could achieve ACC = 100%, TPR = 100%, and FPR = 0.00%. Therefore, DeepMID was further applied to the unknown formulated flavor L2. L2 was a formulated flavor that was used in the actual production application. L1 and L2 were both formulated by five plant flavors. L1 was not diluted with propylene glycol, while L2 was diluted with 50% propylene glycol. The results of the DeepMID model in L2 were ACC = 84.62%, TPR = 60.00%, and FPR = 0.00%. Although the results predicted by the DeepMID model decreased after a large amount of propylene glycol dilution, it still correctly predicted three components, which can assist in the manual formulation elucidation of plant flavors in a formulated flavor to some extent. The detailed results are listed in Table S4.

2.5. Stability of the DeepMID Model

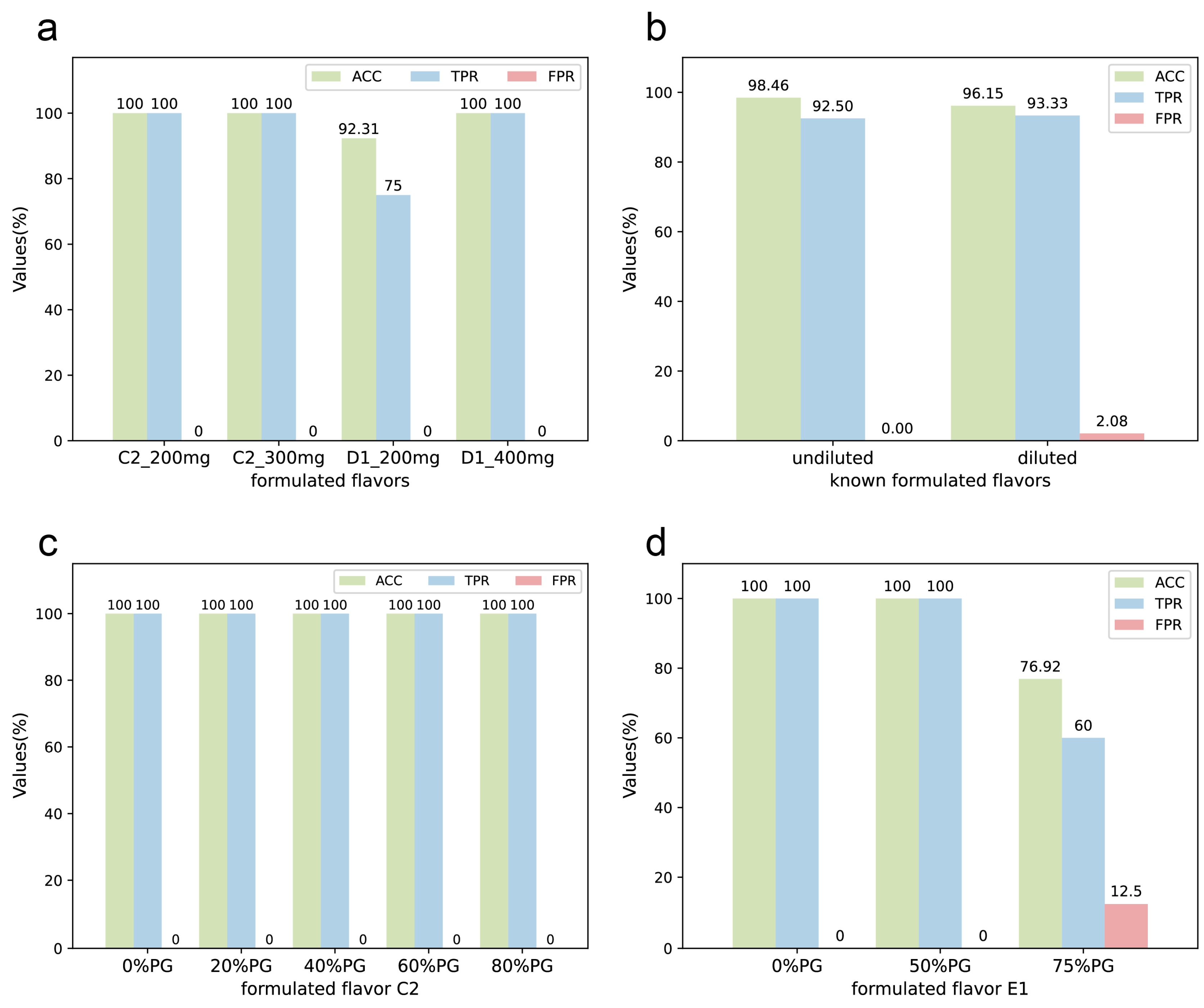

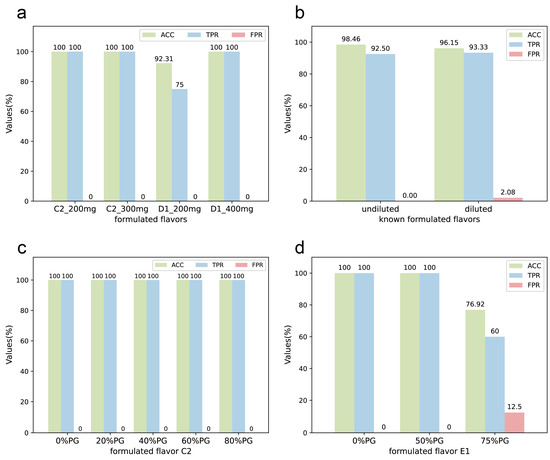

To investigate the performance of the model for formulated flavors prepared with different sample masses, we prepared two sets of known formulated flavors with the same formula but different masses of plant flavors: C2 and C2-300, and D1 and D1-400, as shown in Table S5. The total weight of C2 and of D1 is 200 mg, while C2-300 is 300 mg and D1-400 is 400 mg. The results are presented in Figure 5a. For the 3-component formulated flavor C2, the different masses had no significant effect on the results. For the 4-component formulated flavor D1, the results became more accurate when the amount of plant flavor in the formulated flavor was increased. However, most of the experimental plant flavors are viscous and not highly soluble, so using too much material in the preparation of NMR test samples may cause precipitation, and an overly viscous solution may also decrease the quality of the NMR spectra. The total weight of the samples was therefore set to 200 mg. Overall, the model was able to provide accurate predictions even when samples of different masses were used in the preparation process.

Figure 5.

The stability of the DeepMID model. (a) For formulated flavor C2, the results for the different masses of formulated flavors are the same. For D1, better results are obtained with larger masses. (b) The results of undiluted and diluted known formulated flavors differ only slightly. (c) The results of the 3-component formulated flavors C1 diluted with different concentrations of PG are the same. (d) For the 5-component formulated flavor E1, the results were unaffected when the PG ≤ 50%, but the results decreased when the dilution reached 75%.

In practical applications and production, the formulated flavors are usually diluted with propylene glycol (PG), which may mask characteristic peaks and make it challenging to identify the plant flavors in them. Therefore, a gradient experiment with PG-diluted formulated flavors was conducted. Two sets of known formulated flavors were prepared with different concentrations of PG dilution. C2 was a mixture of three plant flavors; 20%, 40%, 60%, and 80% PG was added to C2 to dilute it, and the diluted formulated flavors were named C2-20%, C2-40%, C2-60%, and C2-80%. Similarly, E1 was a known formulated flavor prepared by mixing five plant flavors. After dilution with 50% and 75% PG, the diluted formulated flavors were named E1-50% and E1-75%. The results are shown in Figure 5b, indicating that although PG dilution poses some challenges to the identification performance of DeepMID, the accuracy remains above 80%. The details of the formulations and the results of mixture identification can be found in Table S1 and Table S2. As shown in Figure 5c,d, the accuracy of the DeepMID model can reach 100% at dilutions up to 50%, and only the 5-component formulated flavor shows a decrease at higher dilution. Hence, dilution has a greater effect on formulated flavors with more components. Though the results decrease as the dilution increases, they are still acceptable, which shows the stability of the DeepMID model in predicting diluted formulated flavors.

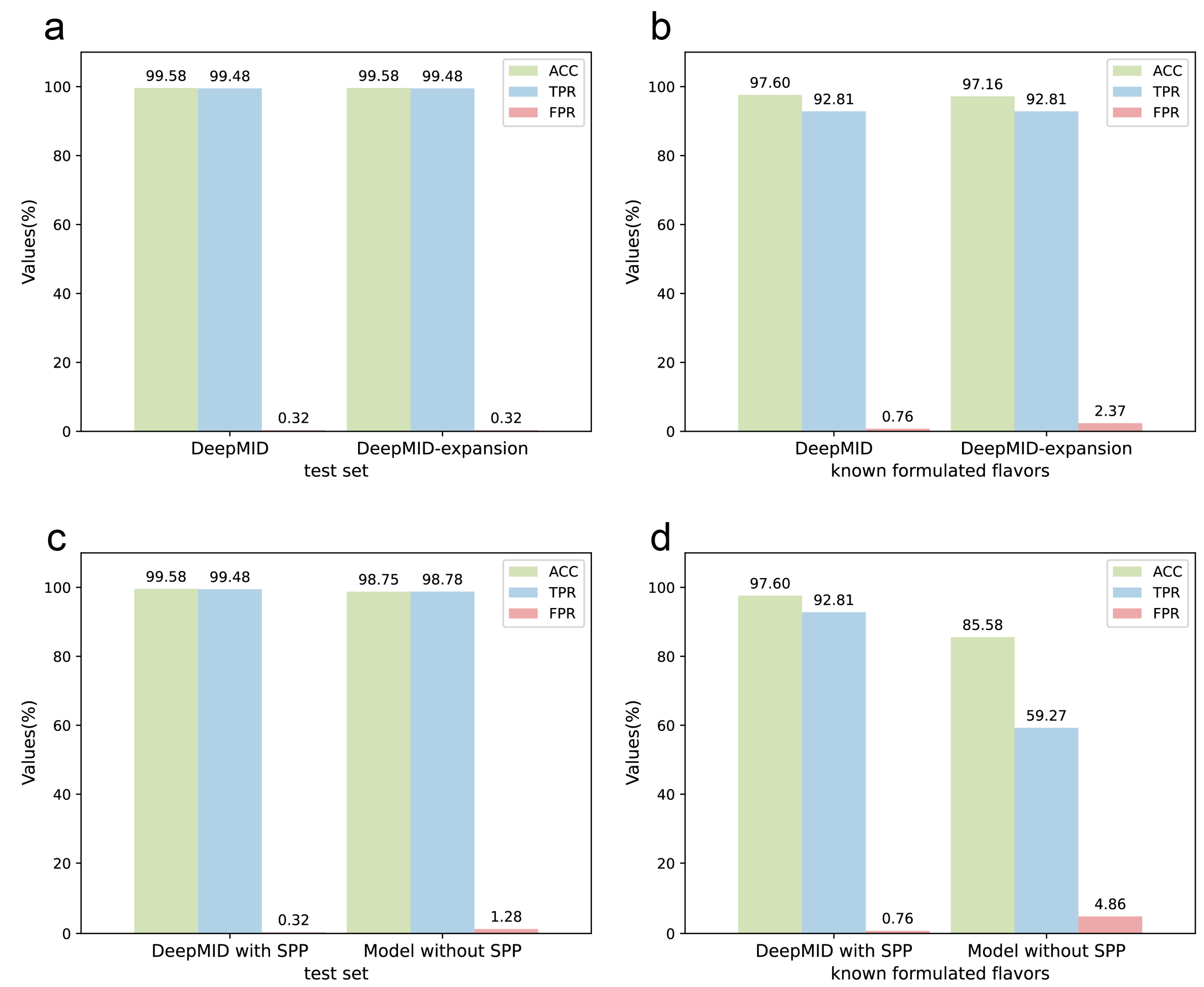

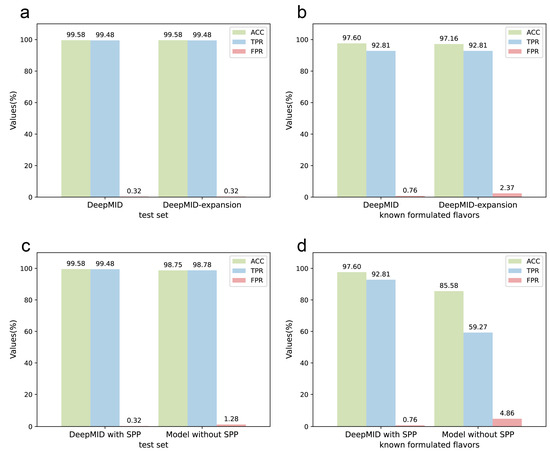

2.6. Expansion of the Spectral Database

Furthermore, 20 plant flavors were added to the database, which initially contained 13 plant flavors, expanding it to a total of 33. The details of the database before and after the expansion are listed in Table S6. Figure 6a,b show the results of DeepMID on the expanded database for mixture identification. The details of the results can be found in Table S7. The results of DeepMID on the test set after expanding the spectral database are the same as before the expansion. For the data set obtained from the experiments, the sensitivity does not change, while the accuracy decreases slightly. The newly added 20 plant flavors have a negligible impact on the results, indicating that the model is reasonably stable and that adding plant flavors to the database later will not noticeably deteriorate the prediction performance of the DeepMID model.

Figure 6.

The discussions of the DeepMID model. (a) The results of the NMR data sets after expanding the spectral database are the same as before the expansion on the test set. (b) The results of the NMR data sets after expanding the spectral database on the known formulated flavors data set show that ACC decreases slightly and FPR increases marginally while TPR does not change compared to before expanding. (c) The comparison of the DeepMID model with and without the SPP layer on the test set shows that the SPP layer can improve the results. (d) The comparison of the DeepMID model with and without the SPP layer on the known formulated flavors set shows that the results of the model without the SPP layer are much worse than DeepMID with the SPP layer.

2.7. Comparison with the Model without the SPP Layer

In Figure 6c,d, the results of DeepMID with and without the SPP layer are compared. The model without the SPP layer performs much worse on the experimental data set than the DeepMID model with the SPP layer. While DeepMID achieves ACC = 97.60%, TPR = 92.81%, and FPR = 0.78% on the known formulated flavors, the model without the SPP layer only reaches ACC = 85.58%, TPR = 59.27%, and FPR = 4.86%. The detailed results of the model without SPP can be found in Table S8. The SPP layer can extract spatial feature information at different scales, which improves the robustness of the model to chemical shift variation. The addition of the SPP layer gives the DeepMID model better generalization ability and prediction performance on experimental NMR spectra of real formulated flavors.

3. Methods

3.1. Data Set Curation

Many interferences make it challenging to analyze and extract features from the original NMR spectra. The following steps are taken to remove these interferences. During NMR experiments, the baselines of the spectra may be distorted for many complex reasons. Therefore, adaptive iteratively reweighted penalized least squares (airPLS) [64] is used to correct the baselines of the NMR spectra. Plant flavors are mixtures of biologically active small molecules extracted from plants. In the extraction and preparation of plant flavors, a large amount of solvent is often added for dilution, which makes it challenging to identify the substances in the plant flavors. To solve this problem, the solvent peak is set to zero to enlarge the relative intensity of the characteristic peaks of each plant flavor. Since signal intensities in NMR spectra vary widely, it is necessary to normalize them. Normalization brings the intensities of the NMR spectra to the same order of magnitude and makes them comparable. In addition, normalization can speed up convergence during gradient descent. Here, the spectra are normalized after zeroing the solvent peaks, which further amplifies the characteristic peaks of plant flavors. Finally, the informative interval containing NMR peaks (0.300–10.700 ppm) is cut out of each spectrum and used for data augmentation, since deep learning requires a large amount of data.
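The preprocessing order described above (zero the solvent peaks, normalize, then cut out the informative interval) can be sketched as follows. This is a minimal illustration, not the authors' code: baseline correction with airPLS is assumed to have been applied already, the solvent window `(3.2, 3.4)` is a hypothetical value, and max normalization is an assumption because the text does not name the normalization scheme.

```python
import numpy as np

def preprocess(ppm, intensity, solvent_regions, lo=0.300, hi=10.700):
    """Zero solvent windows, normalize, then crop to the informative interval."""
    ppm = np.asarray(ppm, dtype=float)
    y = np.asarray(intensity, dtype=float).copy()
    # Zero every solvent window (start_ppm, end_ppm); the windows are assumptions.
    for start, end in solvent_regions:
        y[(ppm >= start) & (ppm <= end)] = 0.0
    # Max normalization (assumed scheme), applied after zeroing the solvent peaks.
    peak = np.max(np.abs(y))
    if peak > 0:
        y = y / peak
    # Keep only the informative interval reported in the text.
    keep = (ppm >= lo) & (ppm <= hi)
    return ppm[keep], y[keep]

# Toy spectrum: a weak flavor peak at 2.0 ppm and a strong solvent peak at 3.3 ppm.
ppm = np.linspace(0.0, 12.0, 1201)
spectrum = (1.0 * np.exp(-((ppm - 2.0) / 0.02) ** 2)
            + 50.0 * np.exp(-((ppm - 3.3) / 0.02) ** 2))
ppm_out, clean = preprocess(ppm, spectrum, solvent_regions=[(3.2, 3.4)])
```

In the toy spectrum, the flavor peak is 50 times weaker than the solvent peak before preprocessing and becomes the tallest peak afterwards, which is the effect the text attributes to zeroing the solvent signal before normalization.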

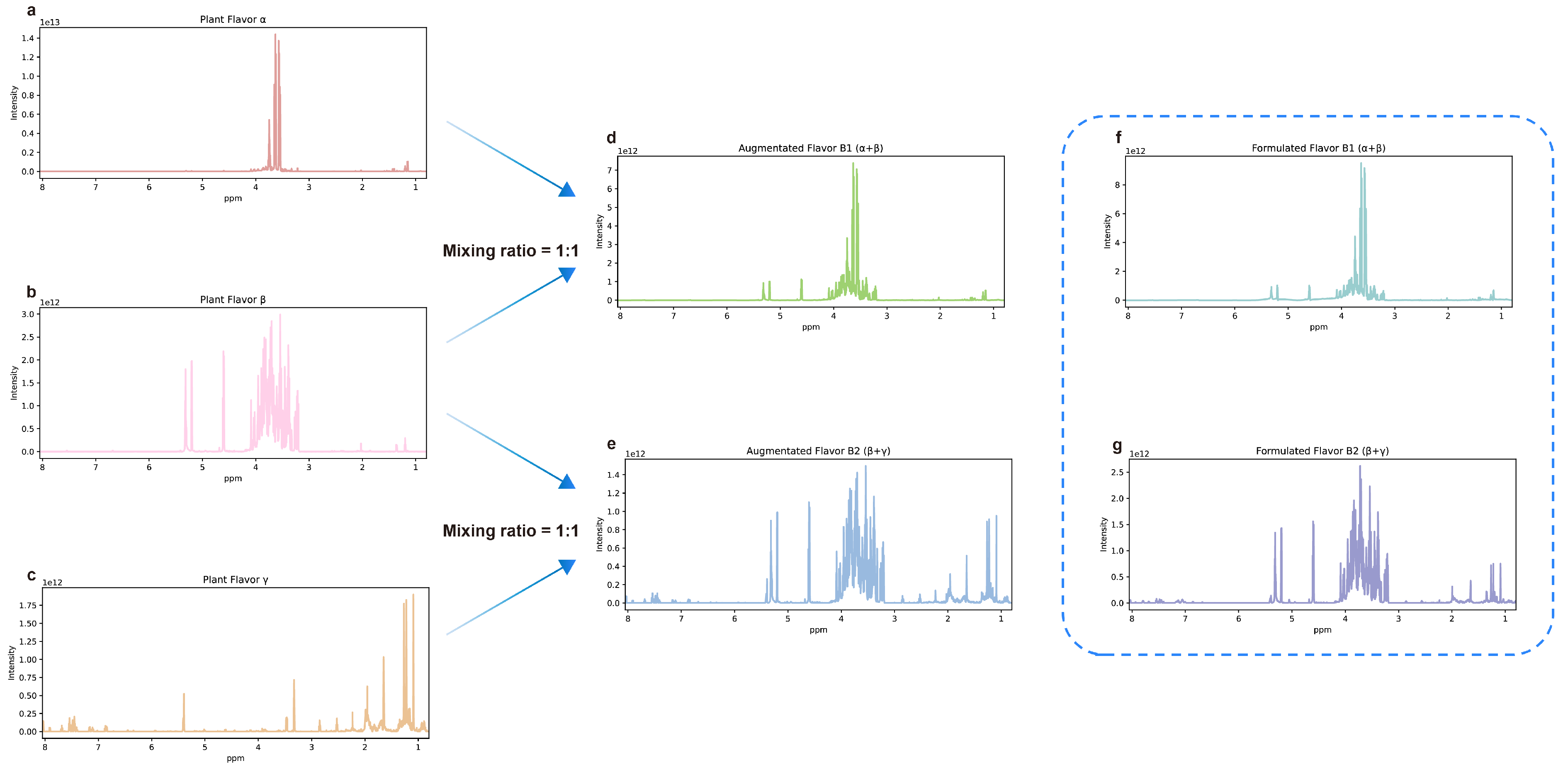

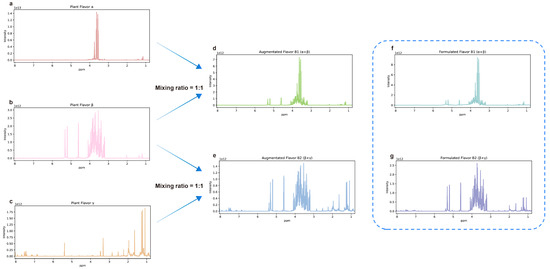

After the preprocessing, a data augmentation method was developed to generate NMR spectral pairs based on the characteristics of NMR spectra. The generated NMR spectral pairs have two different types: positive and negative. Several (1–5) NMR spectra (sampled spectra) are sampled from the plant flavor database and superposed to obtain the NMR spectrum of the formulated flavor. For a positive pair, its plant flavor is a component of its formulated flavor, and the NMR spectrum of its plant flavor is sampled from the sampled spectra. For a negative pair, its plant flavor is not a component of its formulated flavor, and the NMR spectrum of its plant flavor is sampled from the remaining spectra in the database. If the plant flavor is in the formulated flavor, the corresponding label of the NMR spectral pair is 1. Otherwise, it is 0. For example, Figure 7a shows the NMR spectrum of hops extract, named plant flavor α. Figure 7b shows the NMR spectrum of fig extract, named plant flavor β. Figure 7c shows the NMR spectrum of Roman chamomile extract, named plant flavor γ. The spectra of hops extract (α) and fig extract (β) were multiplied by a ratio of 0.5 and then superimposed to obtain the augmented NMR spectra of the formulated flavor B1 (α + β), as shown in Figure 7d. In the same way, the spectra of fig extract (β) and Roman chamomile extract (γ) were superimposed to obtain the augmented NMR spectra of the formulated flavor B2 (β + γ). For plant flavor α, the spectra pair that it combines with B1 (α + β) is a positive pair, while the spectra pair that it forms with B2 (β + γ) is a negative pair. For plant flavor β, both formulated flavors B1 and B2 combined with it are positive pairs. Formulated flavors B1 and B2 were formulated according to the above formula, and the NMR spectra are shown in Figure 7f,g. It can be observed that the augmented data can represent the experimental data to some extent. 
Generally speaking, the solvent peaks in the experimental data tend to be higher, while the characteristic peaks of plant flavors are lower than them. Because of the noise and chemical shift drift in real NMR spectra, random Gaussian noise and chemical shift drift (between $-3 \times 10^{-4}$ and $3 \times 10^{-4}$ ppm) are injected into the plant flavor NMR spectra for each spectral pair separately. To account for varied concentrations, each NMR spectrum is multiplied by a random scale factor (0.2–1.0) when generating the mixture NMR spectrum. With the proposed data augmentation method and the plant flavor database, 50,000 NMR spectral pairs were generated, with 50% each of positive and negative samples. These spectral pairs were randomly divided into the training, validation, and test sets in the ratio of 8:1:1.
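The augmentation procedure can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions rather than the authors' implementation: the function name `make_pair`, the noise standard deviation `noise_sd`, the use of linear interpolation to apply the chemical shift drift, and the random stand-in library are all hypothetical. The 1–5 components, the 0.2–1.0 scale factor, the drift range, the 50/50 class balance, and the 8:1:1 split follow the text.

```python
import numpy as np

def make_pair(library, ppm, rng, positive, noise_sd=0.01, max_shift=3e-4):
    """Return (plant_spectrum, mixture_spectrum, label) for one augmented pair."""
    n = library.shape[0]
    k = rng.integers(1, 6)                       # 1-5 components per formulated flavor
    chosen = rng.choice(n, size=k, replace=False)
    rest = np.setdiff1d(np.arange(n), chosen)    # flavors absent from the mixture

    def perturb(spec):
        # Chemical shift drift: resample on a slightly shifted (increasing) ppm axis.
        shift = rng.uniform(-max_shift, max_shift)
        shifted = np.interp(ppm, ppm + shift, spec)
        # Additive Gaussian noise; noise_sd is an assumed value.
        return shifted + rng.normal(0.0, noise_sd, size=spec.shape)

    # Superimpose the sampled spectra, each with a random scale factor in [0.2, 1.0].
    mixture = sum(rng.uniform(0.2, 1.0) * perturb(library[i]) for i in chosen)
    query = rng.choice(chosen) if positive else rng.choice(rest)
    return perturb(library[query]), mixture, int(positive)

rng = np.random.default_rng(0)
ppm = np.linspace(0.3, 10.7, 512)
library = rng.random((13, 512))                  # stand-in for 13 plant flavor spectra
# Small demo set (100 pairs instead of 50,000), half positive and half negative.
pairs = [make_pair(library, ppm, rng, positive=(i % 2 == 0)) for i in range(100)]
order = rng.permutation(len(pairs))
train, val, test = np.split(order, [80, 90])     # 8:1:1 split of the pair indices
```

Splitting shuffled indices rather than the pairs themselves keeps the spectra in one place and makes the split reproducible from the random seed.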

Figure 7.

Schematic diagram of NMR spectra and data augmentation. (a) The NMR spectrum of plant flavor α. (b) The NMR spectrum of plant flavor β. (c) The NMR spectrum of plant flavor γ. (d) The augmented NMR spectrum of B1 (α + β) obtained by superimposing the spectra of α and β. (e) The augmented NMR spectrum of B2 (β + γ) obtained by superimposing the spectra of β and γ. (f) The NMR spectrum of formulated flavor B1 (α + β) obtained by experiments. (g) The NMR spectrum of formulated flavor B2 (β + γ) obtained by experiments.

3.2. Pseudo-Siamese Neural Network

The Siamese network (SNN) [65] consists of two identical subnetworks that share weights, taking in two input samples and producing their corresponding embeddings. These embeddings are then fed into a similarity function to compute the similarity or dissimilarity between the inputs. However, the spectra of the plant flavor and the formulated flavor in an NMR spectral pair differ significantly, so a single set of shared weights is not well suited to both inputs. The pseudo-Siamese neural network (pSNN) is a variant of the SNN architecture for similarity-based tasks. It contains two convolutional neural networks with the same architecture that do not share their weights. The pSNN used here has two such subnetworks to extract feature vectors from the spectral pairs. Then, the features are concatenated and fed to the next layer for comparison. Compared to the SNN architecture, pSNN is therefore a more suitable architecture for the mixture identification task.
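The difference between shared and unshared weights can be illustrated with a toy NumPy sketch. It is not the DeepMID network: each branch here is a single one-dimensional convolution followed by ReLU and max pooling, and the inputs and kernels are random placeholders.

```python
import numpy as np

def branch(x, kernel):
    """One toy feature extractor: 1-D convolution, ReLU, then max pooling by 2."""
    h = np.convolve(x, kernel, mode="same")
    h = np.maximum(h, 0.0)
    return h[: len(h) // 2 * 2].reshape(-1, 2).max(axis=1)

rng = np.random.default_rng(0)
plant, mixture = rng.random(64), rng.random(64)          # placeholder spectra
k_plant, k_mix = rng.normal(size=5), rng.normal(size=5)  # two independent kernels

# Pseudo-Siamese: same architecture, independent weights for each input.
features = np.concatenate([branch(plant, k_plant), branch(mixture, k_mix)])
# Siamese: one kernel is reused for both inputs.
shared = np.concatenate([branch(plant, k_plant), branch(mixture, k_plant)])
```

Both variants produce a concatenated feature vector of the same length; only the mixture half differs, because the pseudo-Siamese branch for the formulated flavor has its own weights.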

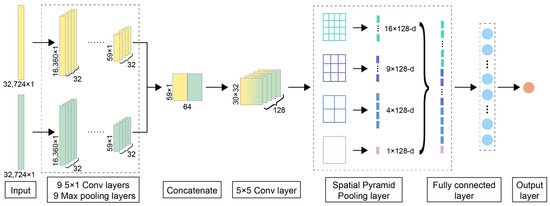

3.3. Spatial Pyramid Pooling

The spatial pyramid pooling [66] layer can pool feature maps of varied sizes and produce fixed-length outputs by dividing the feature maps into regions and pooling features in each region. The basic idea behind SPP is to divide the input feature maps into several grids or levels, with each level capturing features at a different scale. Feature pooling is performed within each grid or level to generate a fixed-length representation. These representations are then concatenated to form the final feature representation. SPP allows the network to capture both global information and local details, regardless of the size or aspect ratio of the input feature maps. As shown in Figure 8, the two-dimensional feature maps obtained after the two-dimensional convolution are pooled in the SPP layer with multi-level windows (4 × 4, 3 × 3, 2 × 2, 1 × 1). This yields 4 × 4 = 16 feature vectors of 1 × 128, 3 × 3 = 9 feature vectors of 1 × 128, 2 × 2 = 4 feature vectors of 1 × 128, and one 1 × 128 feature vector pooled over the entire feature map. These are stitched together to obtain a (16 + 9 + 4 + 1) × 128 = 30 × 128 feature matrix, which is flattened into a one-dimensional 3840 × 1 feature vector and fed into the following fully connected layer. Multi-level windows are more robust to object deformation than a conventional moving-window pool with only a single window size. Thus, SPP can improve scale invariance, avoid distortions caused by traditional moving windows, and reduce overfitting, because multi-scale features are extracted to a fixed size before being fed into the fully connected layer.
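The bin arithmetic above can be checked with a small NumPy sketch of a four-level pyramid. It is an illustration only: max pooling within each bin is an assumption (the text does not state the pooling operation), and the two input sizes are arbitrary examples with at least four rows and columns.

```python
import numpy as np

def spp(feature_map, levels=(4, 3, 2, 1)):
    """Max-pool an (H, W, C) feature map over n x n grids and concatenate."""
    h, w, c = feature_map.shape
    pooled = []
    for n in levels:
        # Bin edges cover the whole map even when H or W is not divisible by n.
        rows = np.linspace(0, h, n + 1).astype(int)
        cols = np.linspace(0, w, n + 1).astype(int)
        for i in range(n):
            for j in range(n):
                region = feature_map[rows[i]:rows[i + 1], cols[j]:cols[j + 1]]
                pooled.append(region.max(axis=(0, 1)))   # one vector of length C
    return np.concatenate(pooled)

rng = np.random.default_rng(0)
a = rng.random((10, 12, 128))   # two feature maps of different spatial sizes
b = rng.random((23, 7, 128))
out_a, out_b = spp(a), spp(b)   # both have (16 + 9 + 4 + 1) * 128 = 3840 entries
```

Because the number of bins is fixed by the pyramid levels rather than by the input size, both feature maps are reduced to vectors of the same length, which is what allows a dense layer to follow.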

Figure 8.

The detailed neural network architecture of DeepMID. DeepMID takes NMR spectral pairs as inputs, each comprising two NMR spectra: a plant flavor spectrum and a formulated flavor spectrum. The high-level feature maps from the spectral pairs are extracted by two subnetworks with the same architecture and then flattened and concatenated. Further features are extracted by a two-dimensional convolutional layer. An SPP layer pools these feature maps to produce a fixed-length feature, which then passes through a dense layer for comparison. The final output is obtained from an output layer with one unit.

3.4. Detailed Network Architecture

The detailed network architecture of DeepMID is shown in Figure 8. The inputs of DeepMID are NMR spectral pairs, and each spectral pair consists of two NMR spectra: the NMR spectrum of a plant flavor and the NMR spectrum of a formulated flavor. Two subnetworks with the same architecture (9 one-dimensional convolutional blocks) extract the high-level feature maps from the two NMR spectra in each spectral pair. Each convolutional block of the subnetworks has a convolutional layer followed by a rectified linear unit (ReLU) activation layer and a max pooling layer. The weights and biases of the convolutional kernels were initialized by the He normal initializer [67]. The number of convolutional kernels is 32, and the kernel size is 5 × 1. The feature maps extracted by the subnetworks are flattened and concatenated. A two-dimensional convolutional layer is used for further feature extraction; the number of kernels is 128, and the kernel size is 5 × 5. Then, the feature maps are pooled by an SPP layer to generate a feature with a fixed length; the SPP layer is a 4-level pyramid with 30 bins (4 × 4, 3 × 3, 2 × 2, 1 × 1). The fixed-length feature is fed into a dense layer with 100 hidden units for comparison. Its activation function is also the ReLU function, and a dropout layer with a dropout rate of 0.2 is used to reduce the risk of overfitting. Finally, the output layer with one unit forms the final output, and its activation function is the sigmoid function. The loss function is the binary cross-entropy, which is suited to binary classification. The model is optimized with the Adam [68] optimizer.

3.5. Mixture Identification

After the DeepMID model is established, it can be used to elucidate the formulated flavors. A formulated flavor is composed of multiple plant flavors according to a formula. Plant flavors are themselves mixtures, so a formulated flavor is a mixture of mixtures, and identifying the plant flavors in a formulated flavor amounts to identifying mixtures in a mixture of mixtures. This process is therefore termed mixture identification. The detailed identification process is as follows. The NMR spectrum of a formulated flavor is denoted as a vector s. All the NMR spectra of plant flavors in a spectral library can be represented as a matrix D (N × P), where N is the number of plant flavors in the library and P is the number of data points in each NMR spectrum. Combining the NMR spectrum (s) of a formulated flavor with each NMR spectrum (Di) in the database yields N spectral pairs (s, D1), …, (s, DN). Each spectral pair is predicted by the DeepMID model to obtain the probability that the plant flavor is in the formulated flavor. A threshold value (e.g., 0.5) can be set for this probability to control the number of false positives. If the probability of a plant flavor in the formulated flavor is larger than the threshold, it is identified as a candidate. After completing the prediction and filtering of the N spectral pairs, a list of candidate plant flavors present in the formulated flavor is obtained.
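The identification loop can be sketched as follows. The function `identify`, the flavor names, and the toy spectra are hypothetical, and `toy_predict` is only a stand-in that squashes cosine similarity into a probability so that the example runs without a trained model; in practice the scoring function would be the trained DeepMID model.

```python
import numpy as np

def identify(s, D, names, predict_proba, threshold=0.5):
    """Score each pair (s, D_i) and keep plant flavors above the threshold."""
    probs = np.array([predict_proba(D_i, s) for D_i in D])
    return [(name, float(p)) for name, p in zip(names, probs) if p > threshold]

def toy_predict(plant, mixture):
    # Hypothetical stand-in for a trained model: sigmoid of the cosine similarity.
    cos = plant @ mixture / (np.linalg.norm(plant) * np.linalg.norm(mixture))
    return 1.0 / (1.0 + np.exp(-20.0 * (cos - 0.5)))

# Library D (N = 4 flavors, P = 16 points), one non-overlapping peak per flavor.
names = ["alpha", "beta", "gamma", "delta"]
D = np.eye(4, 16)
s = 0.5 * D[0] + 0.5 * D[2]          # formulated flavor made of alpha and gamma
candidates = identify(s, D, names, toy_predict, threshold=0.5)
```

Raising `threshold` trades sensitivity for fewer false positives, which is the role the text assigns to the threshold value.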

3.6. Evaluation Metrics

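Accuracy rate (ACC), true positive rate (TPR, sensitivity), and false positive rate (FPR, 1 − specificity) were used to evaluate the performance of the models in this study. The formulas for ACC, TPR, and FPR are as follows:

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{TPR} = \frac{TP}{TP + FN}$$

$$\mathrm{FPR} = \frac{FP}{FP + TN}$$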

Here, TP, FP, TN, and FN stand for true positive, false positive, true negative, and false negative, respectively. In binary classification, samples are marked as positive or negative. If the plant flavor is in the formulated flavor, the sample is marked as positive; otherwise, it is marked as negative. If the predicted and actual values are both positive, it is a true positive (TP). If they are both negative, it is a true negative (TN). If the predicted value is positive and the actual value is negative, it is a false positive (FP). Conversely, if the predicted value is negative but the actual value is positive, it is a false negative (FN). For example, if the model predicts that the probability of a plant flavor being in a formulated flavor is >0.5, the predicted value is positive; if the plant flavor is actually present in the formulated flavor, the true value is also positive, and the pair is a true positive.
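As a worked example, the three metrics can be computed from confusion-matrix counts with the standard definitions. The counts below are an assumption chosen for illustration: they correspond to a library of 13 plant flavors queried against a formulated flavor with five components, of which three are recovered and none is falsely reported, which is consistent with the values reported for L2 in Section 2.4.

```python
def metrics(tp, fp, tn, fn):
    """Return (ACC, TPR, FPR) as percentages from confusion-matrix counts."""
    acc = 100.0 * (tp + tn) / (tp + fp + tn + fn)
    tpr = 100.0 * tp / (tp + fn)
    fpr = 100.0 * fp / (fp + tn)
    return acc, tpr, fpr

# Assumed counts: 13 spectral pairs, 5 true components, 3 found, no false positives.
acc, tpr, fpr = metrics(tp=3, fp=0, tn=8, fn=2)
print(f"ACC = {acc:.2f}%, TPR = {tpr:.2f}%, FPR = {fpr:.2f}%")
# prints "ACC = 84.62%, TPR = 60.00%, FPR = 0.00%"
```

The example also shows why ACC stays fairly high when TPR drops: most pairs in a library query are true negatives, so missed components weigh less in ACC than in TPR.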

4. Experiments

4.1. Plant Flavors

The plant flavors were provided by third-party personnel in the Technology Center of China Tobacco Hunan Industrial Co., Ltd., Changsha, China. These plant flavors are often used to prepare formulated flavors. Most of these plant flavors were mixtures of metabolites extracted from plants containing bioactive small molecules, and their compositions were complex and unidentified. The samples of plant flavors were prepared by dissolving 200 mg of the natural plant flavor in a mixture of 0.6 mL CH3OD and 0.6 mL phosphate buffer (PB); the details of the PB are shown in Figure S1. After the sample was vortexed at 500 rpm for 1 min, ultrasonicated for 15 min, and centrifuged at 13,000 rpm for 10 min at 15 °C, 550 μL of the supernatant was taken for NMR measurement. Sample measurements were performed at 25 °C on a Bruker AVANCE NEO 600 MHz NMR spectrometer with the Prodigy cryoprobe (Bruker Scientific Instruments, Fällanden, Switzerland), and the cryoprobe was operated at the temperature of liquid nitrogen. The 1H measurement frequency was 600.12 MHz, and the number of scans was 128. The pulse program noesypprld was chosen to suppress the water peak, TSP was the internal standard, and MeOD was used for the NMR field locking. The information on each flavor is listed in Table S9, and the information on the reagents is listed in Table S10.

4.2. Known Formulated Flavors

In addition, 16 mixtures with known plant flavors were prepared and measured experimentally as another data set to test the DeepMID model. These blends, formulated from plant flavors (mixtures), were called known formulated flavors. They were numbered according to their composition. For instance, the formulated flavors consisting of two plant flavors were numbered B1 and B2, and those consisting of three plant flavors were numbered C1 and C2. The formulated flavors with more components were named similarly. The specific formulations are listed in Table S1. The formulated flavors were dissolved in a solution of 0.6 mL CH3OD and 0.6 mL PB. The subsequent steps and the NMR measurement conditions were the same as for the plant flavors.

4.3. Unknown Formulated Flavors

Two unknown formulated flavors were provided by third-party personnel in the Technology Center of China Tobacco Hunan Industrial Co., Ltd., Changsha, China. We were told the plant flavors of the formulated flavors only after submitting the predicted results. The samples were prepared by dissolving 200 mg of the unknown formulated flavors in a mixture of 0.6 mL CH3OD and 0.6 mL PB and were then processed in the same steps as the plant flavors. The NMR measurement conditions were also the same as for the plant flavors.

5. Conclusions

In this research, DeepMID was proposed to identify plant flavors in formulated flavors using a pseudo-Siamese neural network and 1H NMR spectroscopy.

First, we iteratively refined the experimental protocol for the plant flavors to obtain high-quality NMR spectra. Compared to mass spectrometry, NMR has a lower sensitivity, which makes it difficult to accurately identify plant flavors with complex compositions and low concentrations in formulated flavors. To address this issue, a 600 MHz NMR spectrometer was chosen instead of a 400 MHz NMR spectrometer, and a Prodigy cryoprobe operated at liquid nitrogen temperature was used. Additionally, the number of scans was increased, and the noesypprld pulse program was chosen to suppress the water peak. Furthermore, we conducted experimental comparisons to determine the deuterated solvent that dissolves the widest range of plant flavors.

Next, the high-quality NMR spectra were used to train the network. DeepMID takes a spectral pair consisting of a plant flavor and a formulated flavor as input and outputs the probability that the plant flavor is present in the formulated flavor. For this purpose, 50,000 pairs of spectra were generated by superposing the NMR spectra in the plant flavor database. They were randomly divided into a training set, a validation set, and a test set in the ratio of 8:1:1. The performance of the model was evaluated on the test set and the experimental data sets in terms of accuracy (ACC), true positive rate (TPR), and false positive rate (FPR).
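The augmentation and splitting steps can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `make_pairs` and `split_8_1_1`, the number of components per mixture, the range of mixing ratios, the normalization, and the alternation of positive and negative pairs are all assumptions made for illustration. Only the superposition of library spectra and the 8:1:1 ratio come from the text.

```python
import numpy as np

def make_pairs(library, n_pairs, max_components=5, seed=0):
    """Build (mixture, candidate, label) triples by superposing library spectra.

    library: array of shape (n_flavors, n_points) with non-negative intensities.
    All sampling choices below are hypothetical, not taken from the paper.
    """
    rng = np.random.default_rng(seed)
    n_lib, n_points = library.shape
    mixtures = np.empty((n_pairs, n_points))
    candidates = np.empty((n_pairs, n_points))
    labels = np.empty(n_pairs, dtype=int)
    for i in range(n_pairs):
        k = rng.integers(1, max_components + 1)           # assumed component count
        members = rng.choice(n_lib, size=k, replace=False)
        ratios = rng.uniform(0.2, 1.0, size=k)            # assumed mixing ratios
        mix = ratios @ library[members]                   # weighted superposition
        peak = mix.max()
        mixtures[i] = mix / peak if peak > 0 else mix     # assumed max-normalization
        if i % 2 == 0:                                    # positive pair: flavor is in the mixture
            cand, labels[i] = rng.choice(members), 1
        else:                                             # negative pair: flavor is absent
            others = np.setdiff1d(np.arange(n_lib), members)
            cand, labels[i] = rng.choice(others), 0
        candidates[i] = library[cand]
    return mixtures, candidates, labels

def split_8_1_1(n, seed=0):
    """Return shuffled index arrays for an 8:1:1 train/validation/test split."""
    idx = np.random.default_rng(seed).permutation(n)
    n_train, n_val = n * 8 // 10, n // 10
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]

# Usage with a synthetic stand-in for the plant flavor database.
library = np.random.default_rng(1).random((20, 64))
mixtures, candidates, labels = make_pairs(library, n_pairs=100)
train_idx, val_idx, test_idx = split_8_1_1(50_000)
```

For 50,000 pairs the split yields 40,000 training, 5,000 validation, and 5,000 test pairs.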

Finally, DeepMID achieved good results on the augmented test set (ACC = 99.58%, TPR = 99.48%, and FPR = 0.32%) and on the known formulated flavors (ACC = 97.60%, TPR = 92.81%, and FPR = 0.78%). The DeepMID model also achieved acceptable performance on mixtures with different qualities, on PG dilution mixtures, and after expansion of the spectral database. For the unknown formulated flavors, the model identified the plant flavors with ACC = 92.31%, TPR = 80.00%, and FPR = 0.00%.
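For reference, the three metrics follow directly from the confusion-matrix counts: ACC = (TP + TN) / (TP + TN + FP + FN), TPR = TP / (TP + FN), and FPR = FP / (FP + TN). The sketch below computes them from predicted probabilities. The function name `acc_tpr_fpr`, the 0.5 decision threshold, and the example labels and probabilities are assumptions for illustration and are not the paper's data.

```python
import numpy as np

def acc_tpr_fpr(y_true, y_prob, threshold=0.5):
    """Compute accuracy, true positive rate, and false positive rate.

    threshold=0.5 is an assumed decision cutoff, not a value from the paper.
    """
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_prob) >= threshold
    tp = int(np.sum(y_pred & y_true))      # present and predicted present
    tn = int(np.sum(~y_pred & ~y_true))    # absent and predicted absent
    fp = int(np.sum(y_pred & ~y_true))     # absent but predicted present
    fn = int(np.sum(~y_pred & y_true))     # present but predicted absent
    acc = (tp + tn) / (tp + tn + fp + fn)
    tpr = tp / (tp + fn) if (tp + fn) else float("nan")
    fpr = fp / (fp + tn) if (fp + tn) else float("nan")
    return acc, tpr, fpr

# Made-up example: 13 candidate plant flavors, 5 truly present, one of them missed.
y_true = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_prob = [0.9, 0.8, 0.95, 0.7, 0.2, 0.1, 0.05, 0.3, 0.0, 0.2, 0.1, 0.4, 0.15]
acc, tpr, fpr = acc_tpr_fpr(y_true, y_prob)
```

With these made-up values, one missed flavor and no false alarms give ACC = 12/13, TPR = 4/5, and FPR = 0.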

In summary, DeepMID is a valuable method for identifying plant flavors in formulated flavors based on NMR spectroscopy, which can effectively identify plant flavors containing small molecules and can be extended to more application scenarios for NMR spectroscopy.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/molecules28217380/s1, Figure S1. The preparation method of phosphate buffer; Table S1. The information of the known formulated flavors; Table S2. The detailed results of the known formulated flavors data set; Table S3. The information of the unknown formulated flavors; Table S4. The detailed results of the unknown formulated flavors data set; Table S5. The information of the formulated flavors with different qualities; Table S6. The plant flavors of the database before and after the extension; Table S7. The detailed results of the DeepMID model after extension on the known formulated flavors data set; Table S8. The detailed results of the DeepMID model without SPP layer on the known formulated flavors data set; Table S9. The information about plant flavors; Table S10. Detailed information of the reagents.

Author Contributions

Conceptualization, Z.Z.; methodology, Y.W., Y.L. and H.L.; software, Y.W. and Y.L.; validation, Y.W., Y.L. and H.L.; formal analysis, Y.W.; investigation, Y.W. and Y.L.; resources, Y.W., B.K., W.D., J.C. and W.W.; data curation, Y.W. and Z.Z.; writing—original draft preparation, Y.W.; writing—review and editing, Z.Z. and B.K.; visualization, Y.W. and B.K.; supervision, Z.Z. and B.K.; project administration, Y.W. and Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by Exxon Mobil Asia Pacific Research and Development Company Ltd. (A4011260).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The source code, model, spectra, manual, and tutorial are available at https://github.com/yfWang01/DeepMID and https://doi.org/10.5281/zenodo.10033745 (accessed on 31 October 2023).

Acknowledgments

We are grateful for the resources from the High-Performance Computing Center of Central South University. We are grateful to all employees of this institute for their encouragement and support of this research.

Conflicts of Interest

The authors declare no conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).