The Application of Cameras in Precision Pig Farming: An Overview for Swine-Keeping Professionals

Abstract

Simple Summary

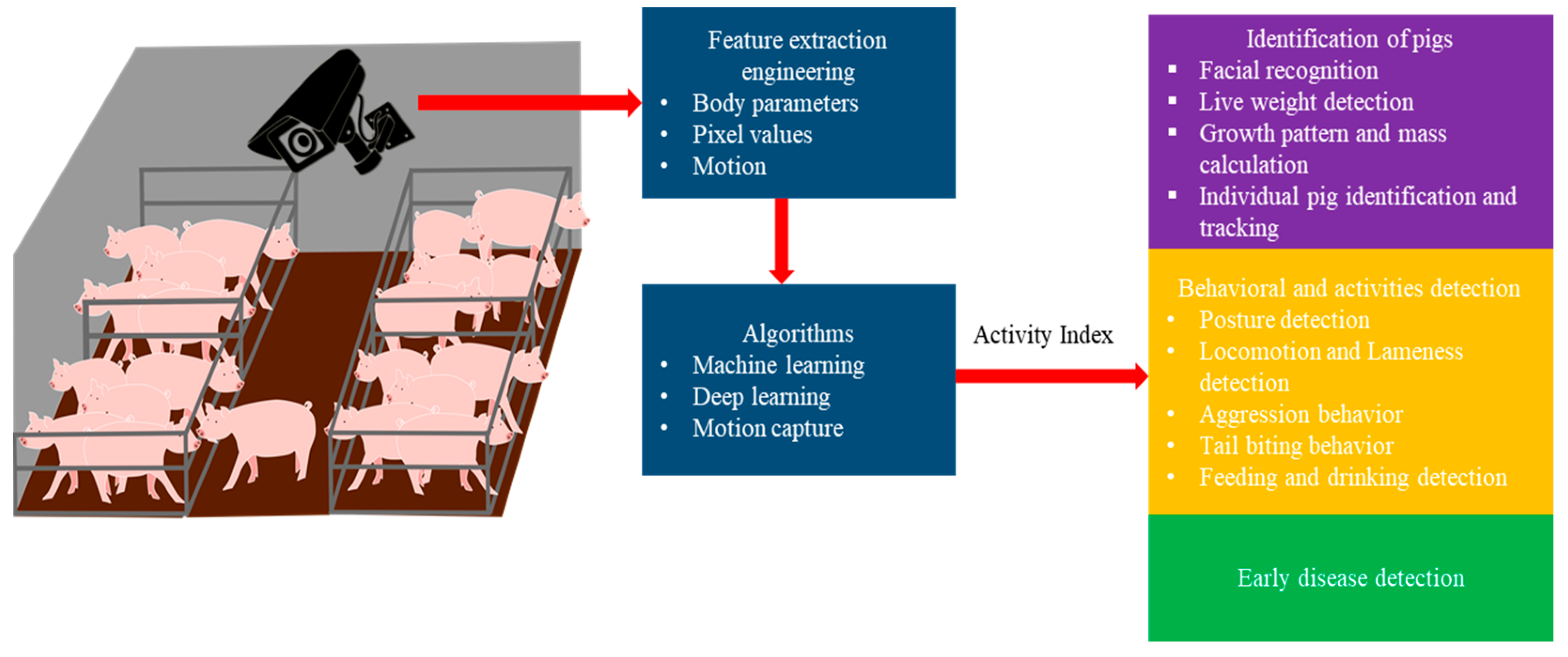

1. Introduction

1.1. The Key Drivers of Adaptations in Precision Farming

- Information and individual monitoring: large volumes of data can be stored on a computer and used to visualize conditions on the farm. For example, feeding efficiency can be calculated from feed intake and pig weight data [17,18,19], and body surface temperature data can be used to track heat stress [15]. Data on physical movement, performance, biological information, phenotypic properties, and so on can be visualized not only at the group or house level but also for individual animals [20,21,22].
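As a rough illustration of the feeding-efficiency calculation mentioned above, the sketch below computes a feed conversion ratio (feed consumed divided by weight gained) per pig. The text only states that feeding efficiency can be derived from feed intake and weight data; the specific ratio, the `PigRecord` structure, and the sample values are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PigRecord:
    """Hypothetical per-pig record; field names are illustrative only."""
    pig_id: str
    feed_intake_kg: float    # total feed consumed over the period
    start_weight_kg: float   # live weight at the start of the period
    end_weight_kg: float     # live weight at the end of the period


def feed_conversion_ratio(rec: PigRecord) -> Optional[float]:
    """Return kg of feed per kg of weight gain, or None if the pig did not gain."""
    gain = rec.end_weight_kg - rec.start_weight_kg
    if gain <= 0:
        return None  # ratio is undefined without positive weight gain
    return rec.feed_intake_kg / gain


# Made-up sample data, not measurements from any cited study.
records = [
    PigRecord("pig-01", feed_intake_kg=52.0, start_weight_kg=30.0, end_weight_kg=50.0),
    PigRecord("pig-02", feed_intake_kg=48.0, start_weight_kg=31.0, end_weight_kg=47.0),
]

fcr_by_pig = {r.pig_id: feed_conversion_ratio(r) for r in records}
```

A lower ratio means less feed was needed per kilogram of gain, so a per-pig dictionary like `fcr_by_pig` is one way individual-level data could be compared against the group.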

1.2. Review Objectives

2. Introduction to Cameras Used for PLF

3. An Overview of Software Used to Analyze an Image or Video

4. Identification of Pigs

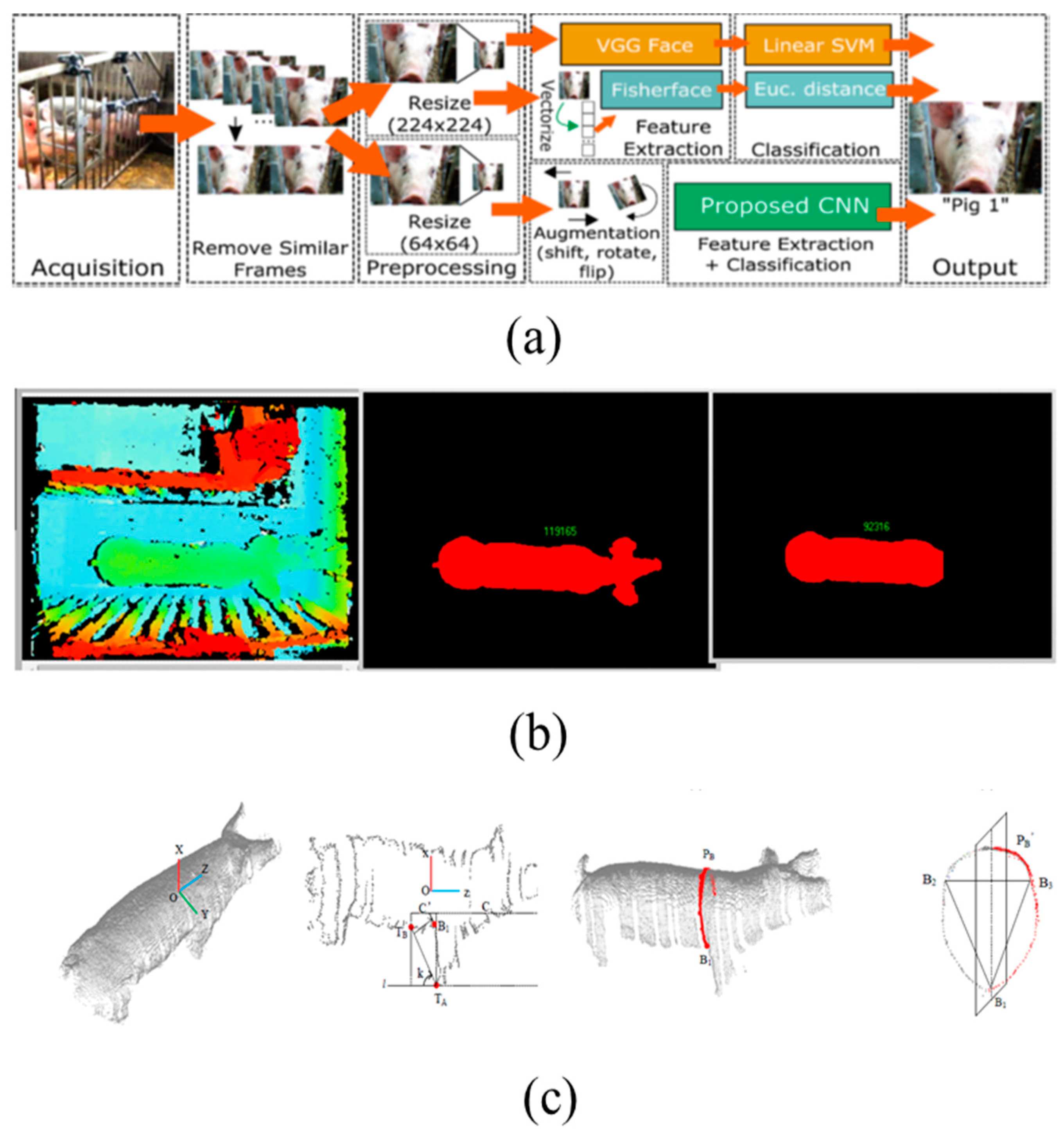

4.1. Facial Recognition

- (1) Range: RFID tags can be activated and read successfully only within a limited range (even long-range readers state a maximum distance of 120 cm) [39]. In addition, multiple tags cannot be read concurrently, so the data may be unreliable because pigs are playful and tend to bunch together.

- (2) Readability: RFID ear tags can become unreadable because of wear and tear, breakage, and soiling [42].

- (3) Loss: Tags may be lost when ears tear during fighting or playing [39]. This is possible because pig barns contain metal objects and pigs play with plastic objects. RFID tags are also often exposed to harsh environments with excessive dirt, dust, and moisture, and they must function in extreme heat and cold, from −30 °C to 70 °C [42].

- (4) Welfare: Poor application of RFID tags can result in infection or ear damage. In addition, ear tags can be transferred from one animal to another, which increases the possibility of infection [42].

4.2. Live Weight Detection

4.3. Growth Patterns and Mass Calculation

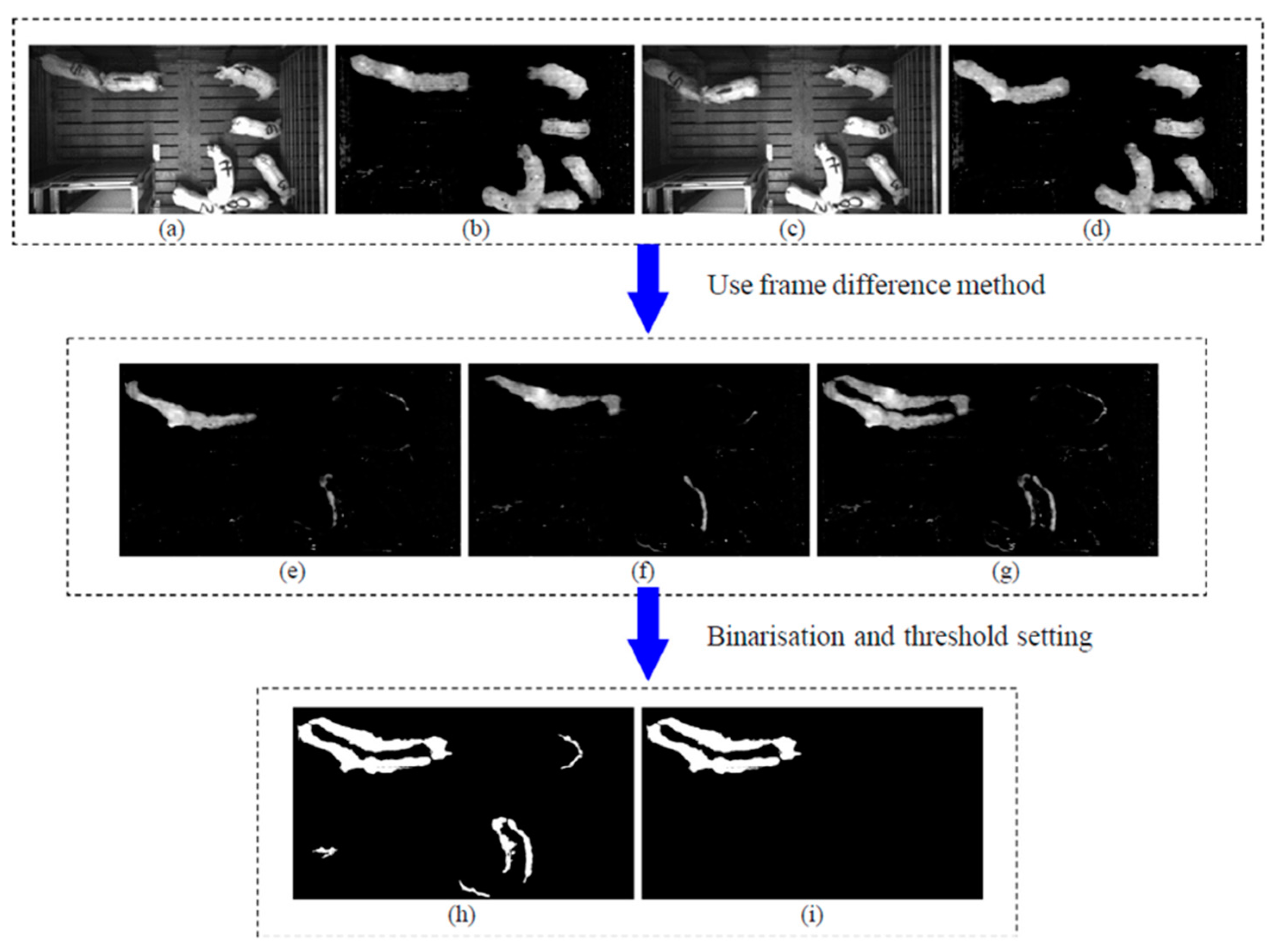

4.4. Individual Pig Identification and Tracking

5. Behavioral and Activity Detection

5.1. Posture Detection

| No. | Posture Name | Description |
|---|---|---|
| 1 | Sternal lying | Lying with the chest and belly on the floor; no legs visible because they are folded under the body; udder totally hidden |
| 2 | Lateral lying | Lying sideways (on either the right or left side); two or more legs visible; udder clearly visible |
| 3 | Standing | No part of the pig's body other than the legs is in contact with the floor; the end of the body should be visible |
| 4 | Sitting | Part of the body is in contact with the floor, with the two front legs stretched out; the end of the body is not visible |
| 5 | Feeding | Pig with its head in the food box/feeder for more than 2 s |
| 6 | NNV (non-nutritive visit) | Pig enters the feeding area with two or more feet, then leaves the feeding area without having consumed any food |
| 7 | Drinking | Pig touches one or more drinkers for more than 2 s |
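The duration-based definitions in the table (feeding, NNV, drinking) can be read as simple labelling rules. The following is a minimal sketch under stated assumptions: the `ZoneVisit` structure, its field names, and the idea that a tracker already supplies per-visit durations are all hypothetical. Treating "head in feeder for more than 2 s" as the proxy for food consumption is also an assumption, not something the table states.

```python
from dataclasses import dataclass

MIN_DURATION_S = 2.0  # the "more than 2 s" threshold used in the table


@dataclass
class ZoneVisit:
    """Hypothetical summary of one visit, as an upstream tracker might report it."""
    feet_in_feeding_area: int   # number of feet inside the feeding area
    head_in_feeder_s: float     # seconds with the head in the feeder
    drinker_contact_s: float    # seconds touching a drinker


def label_visit(visit: ZoneVisit) -> str:
    """Label a visit as 'feeding', 'NNV', 'drinking', or 'other'."""
    if visit.head_in_feeder_s > MIN_DURATION_S:
        return "feeding"
    if visit.feet_in_feeding_area >= 2:
        # Entered the feeding area but (by our proxy) did not eat.
        return "NNV"
    if visit.drinker_contact_s > MIN_DURATION_S:
        return "drinking"
    return "other"


labels = [
    label_visit(ZoneVisit(2, 3.5, 0.0)),
    label_visit(ZoneVisit(2, 1.0, 0.0)),
    label_visit(ZoneVisit(0, 0.0, 2.5)),
    label_visit(ZoneVisit(0, 0.0, 2.0)),
]
```

The thresholds are strict ("more than 2 s"), so a contact of exactly 2.0 s is not counted as drinking in this sketch.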

5.2. Locomotion and Lameness

5.3. Aggressive Behaviors

5.4. Tail Biting

- Tail pointed down

- Hyperactivity

- Uncertainty of object-directed behavior

- Outrage prevents victims from reacting.

5.5. Eating and Drinking Solutions

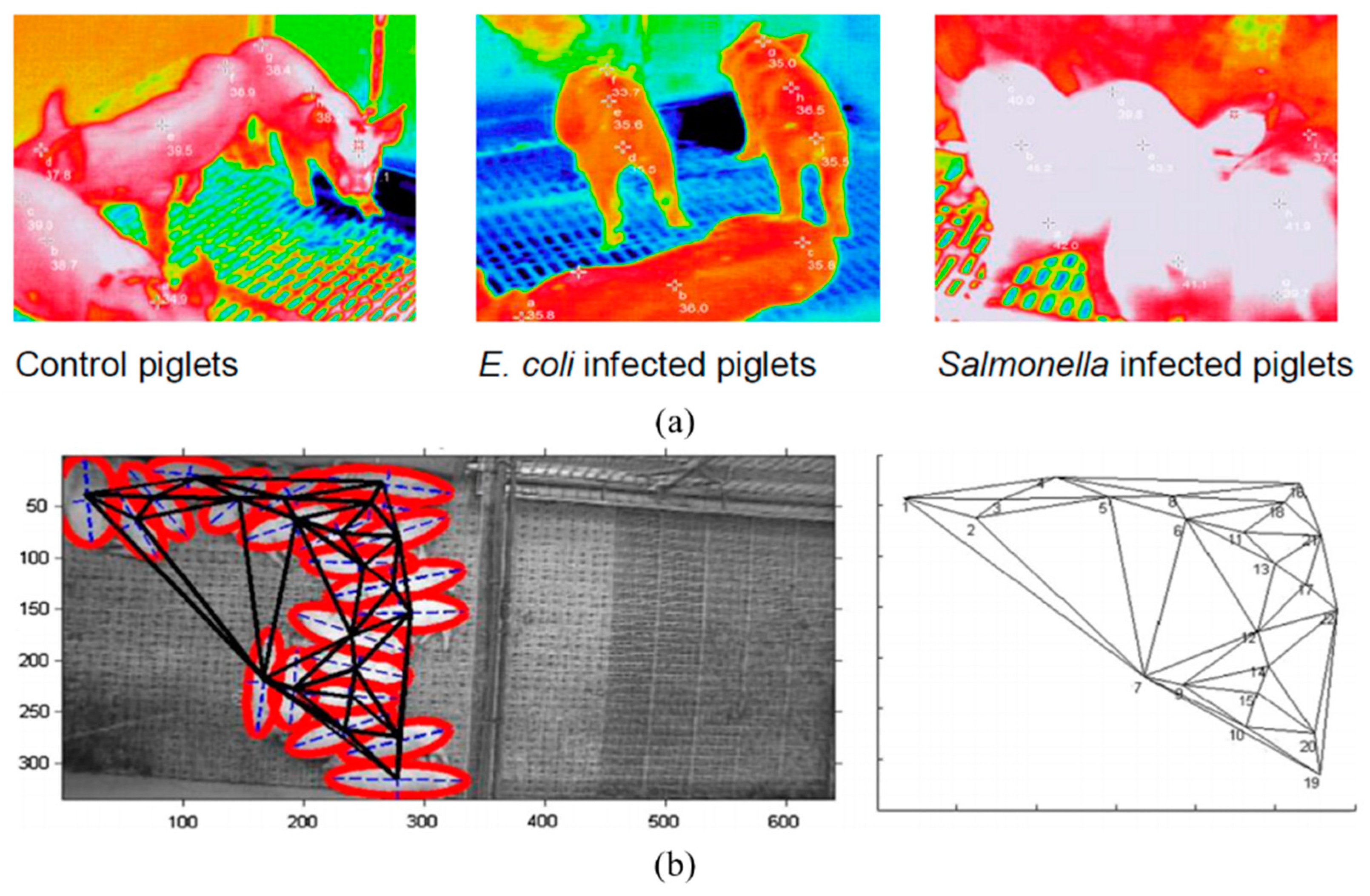

6. Early Disease Detection at a Farm Level

7. Discussion of the Real-Time Challenges and Limitations of Camera Sensors

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Conflicts of Interest

References

- Berckmans, D. Precision livestock farming technologies for welfare management in intensive livestock systems. OIE Rev. Sci. Tech. 2014, 33, 189–196.

- Rosa, G.J.M. Grand Challenge in Precision Livestock Farming. Front. Anim. Sci. 2021, 2, 1–3.

- Arulmozhi, E.; Moon, B.E.; Basak, J.K.; Sihalath, T.; Park, J.; Kim, H.T. Machine learning-based microclimate model for indoor air temperature and relative humidity prediction in a swine building. Animals 2021, 11, 222.

- Benjamin, M.; Yik, S. Precision livestock farming in swine welfare: A review for swine practitioners. Animals 2019, 9, 133.

- Mutua, F.K.; Dewey, C.E.; Arimi, S.M.; Schelling, E.; Ogara, W.O. Prediction of live body weight using length and girth measurements for pigs in rural Western Kenya. J. Swine Health Prod. 2011, 19, 26–33.

- Schofield, C.P. Evaluation of image analysis as a means of estimating the weight of pigs. J. Agric. Eng. Res. 1990, 47, 287–296.

- Walugembe, M.; Nadiope, G.; Stock, J.D.; Stalder, K.J.; Pezo, D.; Rothschild, M.F. Prediction of live body weight using various body measurements in Ugandan village pigs. Livest. Res. Rural. Dev. 2014, 26, 20.

- Neethirajan, S. Transforming the adaptation physiology of farm animals through sensors. Animals 2020, 10, 1512.

- McManus, C.; Tanure, C.B.; Peripolli, V.; Seixas, L.; Fischer, V.; Gabbi, A.M.; Menegassi, S.R.O.; Stumpf, M.T.; Kolling, G.J.; Dias, E.; et al. Infrared thermography in animal production: An overview. Comput. Electron. Agric. 2016, 123, 10–16.

- Ayaz, M.; Ammad-Uddin, M.; Sharif, Z.; Mansour, A.; Aggoune, E.H.M. Internet-of-Things (IoT)-based smart agriculture: Toward making the fields talk. IEEE Access 2019, 7, 129551–129583.

- Frost, A.R.; Tillett, R.D.; Welch, S.K. The development and evaluation of image analysis procedures for guiding a livestock monitoring sensor placement robot. Comput. Electron. Agric. 2000, 28, 229–242.

- Yang, Q.; Xiao, D.; Lin, S. Feeding behavior recognition for group-housed pigs with the Faster R-CNN. Comput. Electron. Agric. 2018, 155, 453–460.

- Zhu, W.X.; Guo, Y.Z.; Jiao, P.P.; Ma, C.H.; Chen, C. Recognition and drinking behaviour analysis of individual pigs based on machine vision. Livest. Sci. 2017, 205, 129–136.

- Vranken, E.; Berckmans, D. Precision livestock farming for pigs. Anim. Front. 2017, 7, 32–37.

- Nasirahmadi, A.; Edwards, S.A.; Sturm, B. Implementation of machine vision for detecting behaviour of cattle and pigs. Livest. Sci. 2017, 202, 25–38.

- Granatosky, M.C. Encyclopedia of Animal Cognition and Behavior. Encycl. Anim. Cogn. Behav. 2020.

- Lu, M.; Norton, T.; Youssef, A.; Radojkovic, N.; Fernández, A.P.; Berckmans, D. Extracting body surface dimensions from top-view images of pigs. Int. J. Agric. Biol. Eng. 2018, 11, 182–191.

- Kashiha, M.; Bahr, C.; Ott, S.; Moons, C.P.H.; Niewold, T.A.; Ödberg, F.O.; Berckmans, D. Automatic weight estimation of individual pigs using image analysis. Comput. Electron. Agric. 2014, 107, 38–44.

- Matthews, S.G.; Miller, A.L.; Plötz, T.; Kyriazakis, I. Automated tracking to measure behavioural changes in pigs for health and welfare monitoring. Sci. Rep. 2017, 7, 1–12.

- Li, Y.Y.; Sun, L.Q.; Zou, Y.B.; Li, Y. Individual pig object detection algorithm based on Gaussian mixture model. Int. J. Agric. Biol. Eng. 2017, 10, 186–193.

- Kashiha, M.; Bahr, C.; Ott, S.; Moons, C.P.H.; Niewold, T.A.; Ödberg, F.O.; Berckmans, D. Automatic identification of marked pigs in a pen using image pattern recognition. Comput. Electron. Agric. 2013, 93, 111–120.

- Adrion, F.; Kapun, A.; Eckert, F.; Holland, E.M.; Staiger, M.; Götz, S.; Gallmann, E. Monitoring trough visits of growing-finishing pigs with UHF-RFID. Comput. Electron. Agric. 2018, 144, 144–153.

- Fernandes, A.F.; Dorea, J.R.; Rosa, G.J. Image Analysis and Computer Vision Applications in Animal Sciences: An Overview. Front. Vet. Sci. 2020, 7, 1–18.

- Kongsro, J. Estimation of pig weight using a Microsoft Kinect prototype imaging system. Comput. Electron. Agric. 2014, 109, 32–35.

- Lee, W.; Ham, Y.; Ban, T.W.; Jo, O. Analysis of Growth Performance in Swine Based on Machine Learning. IEEE Access 2019, 7, 161716–161724.

- Kongsro, J. Development of a computer vision system to monitor pig locomotion. Open J. Anim. Sci. 2013, 3, 254–260.

- Kashiha, M.A.; Bahr, C.; Ott, S.; Moons, C.P.H.; Niewold, T.A.; Tuyttens, F.; Berckmans, D. Automatic monitoring of pig locomotion using image analysis. Livest. Sci. 2014, 159, 141–148.

- McFarlane, N.J.B.; Schofield, C.P. Segmentation and tracking of piglets in images. Mach. Vis. Appl. 1995, 8, 187–193.

- Schofield, C.P.; Marchant, J.A.; White, R.P.; Brandl, N.; Wilson, M. Monitoring pig growth using a prototype imaging system. J. Agric. Eng. Res. 1999, 72, 205–210.

- Brandl, N.; Jørgensen, E. Determination of live weight of pigs from dimensions measured using image analysis. Comput. Electron. Agric. 1996, 15, 57–72.

- Wang, Y.; Yang, W.; Winter, P.; Walker, L. Walk-through weighing of pigs using machine vision and an artificial neural network. Biosyst. Eng. 2008, 100, 117–125.

- Zhang, X.; Liu, G.; Jing, L.; Chen, S. Automated measurement of heart girth for pigs using two Kinect depth sensors. Sensors 2020, 20, 3848.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Khan, A.; Sohail, A.; Zahoora, U.; Qureshi, A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2020, 53, 5455–5516.

- Patil, A.; Rane, M. Convolutional Neural Networks: An Overview and Its Applications in Pattern Recognition. Smart Innov. Syst. Technol. 2021, 195, 21–30.

- Brodzicki, A.; Jaworek-Korjakowska, J.; Kleczek, P.; Garland, M.; Bogyo, M. Pre-trained deep convolutional neural network for Clostridioides difficile bacteria cytotoxicity classification based on fluorescence images. Sensors 2020, 20, 6713.

- Small, R.W. Review of Livestock Identification and Traceability in the UK. Development of Co-Ordinated In Situ and Ex Situ UK Farm Animal Genetic Resources Conservation Strategy And Implementation Guidance. Available online: https://webarchive.nationalarchives.gov.uk/ukgwa/20170207052351/https://www.nao.org.uk/wp-content/uploads/2003/11/02031144.pdf (accessed on 1 June 2021).

- Ahmed, S.T.; Mun, H.S.; Yoe, H.; Yang, C.J. Monitoring of behavior using a video-recording system for recognition of Salmonella infection in experimentally infected growing pigs. Animal 2014, 9, 115–121.

- Kapun, A.; Adrion, F.; Gallmann, E. Case study on recording pigs’ daily activity patterns with a UHF-RFID system. Agriculture 2020, 10, 542.

- Marsot, M.; Mei, J.; Shan, X.; Ye, L.; Feng, P.; Yan, X.; Li, C.; Zhao, Y. An adaptive pig face recognition approach using Convolutional Neural Networks. Comput. Electron. Agric. 2020, 173, 105386.

- Maselyne, J.; Saeys, W.; De Ketelaere, B.; Mertens, K.; Vangeyte, J.; Hessel, E.F.; Millet, S.; Van Nuffel, A. Validation of a high frequency radio frequency identification (HF RFID) system for registering feeding patterns of growing-finishing pigs. Comput. Electron. Agric. 2014, 102, 10–18.

- Ruiz-Garcia, L.; Lunadei, L. The role of RFID in agriculture: Applications, limitations and challenges. Comput. Electron. Agric. 2011, 79, 42–50.

- Hansen, M.F.; Smith, M.L.; Smith, L.N.; Salter, M.G.; Baxter, E.M.; Farish, M.; Grieve, B. Towards on-farm pig face recognition using convolutional neural networks. Comput. Ind. 2018, 98, 145–152.

- Wada, N.; Shinya, M.; Shiraishi, M. Pig face recognition using eigenspace method. ITE Trans. Media Technol. Appl. 2013, 1, 328–332.

- Wongsriworaphon, A.; Arnonkijpanich, B.; Pathumnakul, S. An approach based on digital image analysis to estimate the live weights of pigs in farm environments. Comput. Electron. Agric. 2015, 115, 26–33.

- Pezzuolo, A.; Milani, V.; Zhu, D.H.; Guo, H.; Guercini, S.; Marinello, F. On-barn pig weight estimation based on body measurements by structure-from-motion (SfM). Sensors 2018, 18, 3603.

- Bracke, M.B.M.; Spruijt, B.M.; Metz, J.H.M.; Scheuten, W.G.P. Decision support system for overall welfare assessment in pregnant sows A: Model structure and weighting procedure. J. Anim. Sci. 2002, 80, 1819–1834.

- Sungirai, M.; Masaka, L.; Benhura, T.M. Validity of weight estimation models in pigs reared under different management conditions. Vet. Med. Int. 2014, 2014, 3–7.

- McGlone, J.J.; Vines, B.; Rudine, A.C.; DuBois, P. The physical size of gestating sows. J. Anim. Sci. 2004, 82, 2421–2427.

- Doeschl-Wilson, A.B.; Whittemore, C.T.; Knap, P.W.; Schofield, C.P. Using visual image analysis to describe pig growth in terms of size and shape. Anim. Sci. 2004, 79, 415–427.

- Cang, Y.; He, H.; Qiao, Y. An Intelligent Pig Weights Estimate Method Based on Deep Learning in Sow Stall Environments. IEEE Access 2019, 7, 164867–164875.

- Zhu, Q.; Ren, J.; Barclay, D.; McCormack, S.; Thomson, W. Automatic animal detection from Kinect sensed images for livestock monitoring and assessment. In Proceedings of the 2015 IEEE International Conference on Computer and Information Technology; Ubiquitous Computing and Communications; Dependable, Autonomic and Secure Computing; Pervasive Intelligence and Computing, Liverpool, UK, 26–28 October 2015; pp. 1154–1157.

- Shi, C.; Teng, G.; Li, Z. An approach of pig weight estimation using binocular stereo system based on LabVIEW. Comput. Electron. Agric. 2016, 129, 37–43.

- Condotta, I.C.F.S.; Brown-Brandl, T.M.; Silva-Miranda, K.O.; Stinn, J.P. Evaluation of a depth sensor for mass estimation of growing and finishing pigs. Biosyst. Eng. 2018, 173, 11–18.

- Van Steenbergen, E.J. Description and evaluation of a linear scoring system for exterior traits in pigs. Livest. Prod. Sci. 1989, 23, 163–181.

- Wang, Y.; Yang, W.; Walker, L.T.; Rababah, T.M. Enhancing the accuracy of area extraction in machine vision-based pig weighing through edge detection. Int. J. Agric. Biol. Eng. 2008, 1, 37–42.

- Horgan, G.W.; Murphy, S.V.; Simm, G. Automatic assessment of sheep carcasses by image analysis. Anim. Sci. 1995, 60, 197–202.

- Doeschl, A.B.; Green, D.M.; Whittemore, C.T.; Schofield, C.P.; Fisher, A.V.; Knap, P.W. The relationship between the body shape of living pigs and their carcass morphology and composition. Anim. Sci. 2004, 79, 73–83.

- Wang, K.; Guo, H.; Ma, Q.; Su, W.; Chen, L.; Zhu, D. A portable and automatic Xtion-based measurement system for pig body size. Comput. Electron. Agric. 2018, 148, 291–298.

- Win, K.D.; Kawasue, K.; Yoshida, K.; Lee, G. Automatic pig selection system based on body size using a camera: Rotating mechanics for pig selection. Artif. Life Robot. 2020, 26, 155–161.

- Yoshida, K.; Kawasue, K. Robust 3D pig measurement in pig farm. Lect. Notes Comput. Sci. 2019, 11129, 387–400.

- Guo, H.; Ma, X.; Ma, Q.; Wang, K.; Su, W.; Zhu, D.H. LSSA_CAU: An interactive 3D point clouds analysis software for body measurement of livestock with similar forms of cows or pigs. Comput. Electron. Agric. 2017, 138, 60–68.

- Nilsson, M.; Herlin, A.H.; Ardö, H.; Guzhva, O.; Aström, K.; Bergsten, C. Development of automatic surveillance of animal behaviour and welfare using image analysis and machine learned segmentation technique. Animal 2015, 9, 1859–1865.

- Nasirahmadi, A.; Hensel, O.; Edwards, S.A.; Sturm, B. A new approach for categorizing pig lying behaviour based on a Delaunay triangulation method. Animal 2017, 11, 131–139.

- Ahrendt, P.; Gregersen, T.; Karstoft, H. Development of a real-time computer vision system for tracking loose-housed pigs. Comput. Electron. Agric. 2011, 76, 169–174.

- Zhang, L.; Gray, H.; Ye, X.; Collins, L.; Allinson, N. Automatic individual pig detection and tracking in pig farms. Sensors 2019, 19, 1188.

- Cowton, J.; Kyriazakis, I.; Bacardit, J. Automated Individual Pig Localisation, Tracking and Behaviour Metric Extraction Using Deep Learning. IEEE Access 2019, 7, 108049–108060.

- Chung, Y.; Kim, H.; Lee, H.; Park, D.; Jeon, T.; Chang, H.H. A cost-effective pigsty monitoring system based on a video sensor. KSII Trans. Internet Inf. Syst. 2014, 8, 1481–1498.

- Guo, Y.Z.; Zhu, W.X.; Jiao, P.P.; Ma, C.H.; Yang, J.J. Multi-object extraction from topview group-housed pig images based on adaptive partitioning and multilevel thresholding segmentation. Biosyst. Eng. 2015, 135, 54–60.

- Tu, G.J.; Karstoft, H.; Pedersen, L.J.; Jørgensen, E. Foreground detection using loopy belief propagation. Biosyst. Eng. 2013, 116, 88–96.

- van der Zande, L.E.; Guzhva, O.; Rodenburg, T.B. Individual Detection and Tracking of Group Housed Pigs in Their Home Pen Using Computer Vision. Front. Anim. Sci. 2021, 2, 1–10.

- Tillett, R.D.; Onyango, C.M.; Marchant, J.A. Using model-based image processing to track animal movements. Comput. Electron. Agric. 1997, 17, 249–261.

- Sa, J.; Choi, Y.; Lee, H.; Chung, Y.; Park, D.; Cho, J. Fast pig detection with a top-view camera under various illumination conditions. Symmetry 2019, 11, 266.

- Ekkel, E.D.; Spoolder, H.A.M.; Hulsegge, I.; Hopster, H. Lying characteristics as determinants for space requirements in pigs. Appl. Anim. Behav. Sci. 2003, 80, 19–30.

- Dekker, M. The Effect of Temperature and Activity Patterns on Lying Behaviour and Space Use in Conventional Housed Fattening Pigs. Master’s Thesis, Wageningen University, Wageningen, The Netherlands, 2015.

- Xin, H. Assessing swine thermal comfort by image analysis of postural behaviors. J. Anim. Sci. 1999, 77, 1–9.

- Shao, B.; Xin, H. A real-time computer vision assessment and control of thermal comfort for group-housed pigs. Comput. Electron. Agric. 2008, 62, 15–21.

- Nasirahmadi, A.; Richter, U.; Hensel, O.; Edwards, S.; Sturm, B. Using machine vision for investigation of changes in pig group lying patterns. Comput. Electron. Agric. 2015, 119, 184–190.

- Zheng, C.; Zhu, X.; Yang, X.; Wang, L.; Tu, S.; Xue, Y. Automatic recognition of lactating sow postures from depth images by deep learning detector. Comput. Electron. Agric. 2018, 147, 51–63.

- Nasirahmadi, A.; Sturm, B.; Olsson, A.C.; Jeppsson, K.H.; Müller, S.; Edwards, S.; Hensel, O. Automatic scoring of lateral and sternal lying posture in grouped pigs using image processing and Support Vector Machine. Comput. Electron. Agric. 2019, 156, 475–481.

- Geers, R.; Ville, H.; Goedseels, V.; Houkes, M.; Goossens, K.; Parduyns, G.; Van Bael, J. Environmental temperature control by the pig’s comfort behavior through image analysis. Trans. Am. Soc. Agric. Eng. 1991, 34, 2583–2586.

- Hu, J.; Xin, H. Image-processing algorithms for behavior analysis of group-housed pigs. Behav. Res. Methods Instrum. Comput. 2000, 32, 72–85.

- Riekert, M.; Klein, A.; Adrion, F.; Hoffmann, C.; Gallmann, E. Automatically detecting pig position and posture by 2D camera imaging and deep learning. Comput. Electron. Agric. 2020, 174, 105391.

- Shao, H.; Pu, J.; Mu, J. Pig-Posture Recognition Based on Computer Vision: Dataset and Exploration. Animals 2021, 11, 1295.

- Zhang, K.; Li, D.; Huang, J.; Chen, Y. Automated video behavior recognition of pigs using two-stream convolutional networks. Sensors 2020, 20, 1085.

- Li, D.; Zhang, K.; Li, Z.; Chen, Y. A spatiotemporal convolutional network for multi-behavior recognition of pigs. Sensors 2020, 20, 2381.

- Supakorn, C.; Stock, J.D.; Garay, E.; Johnson, A.K.; Stalder, K.J. Lameness: A principle problem to sow longevity in breeding herds. CAB Rev. Perspect. Agric. Vet. Sci. Nutr. Nat. Resour. 2018, 13, 3023.

- Stavrakakis, S.; Guy, J.H.; Syranidis, I.; Johnson, G.R.; Edwards, S.A. Pre-clinical and clinical walking kinematics in female breeding pigs with lameness: A nested case-control cohort study. Vet. J. 2015, 205, 38–43.

- Gronskyte, R.; Clemmensen, L.H.; Hviid, M.S.; Kulahci, M. Pig herd monitoring and undesirable tripping and stepping prevention. Comput. Electron. Agric. 2015, 119, 51–60.

- Gronskyte, R.; Clemmensen, L.H.; Hviid, M.S.; Kulahci, M. Monitoring pig movement at the slaughterhouse using optical flow and modified angular histograms. Biosyst. Eng. 2016, 141, 19–30.

- Wutke, M.; Schmitt, A.O.; Traulsen, I.; Gültas, M. Investigation of Pig Activity Based on Video Data and Semi-Supervised Neural Networks. AgriEngineering 2020, 2, 581–595.

- Stavrakakis, S.; Li, W.; Guy, J.H.; Morgan, G.; Ushaw, G.; Johnson, G.R.; Edwards, S.A. Validity of the Microsoft Kinect sensor for assessment of normal walking patterns in pigs. Comput. Electron. Agric. 2015, 117, 1–7.

- Viazzi, S.; Ismayilova, G.; Oczak, M.; Sonoda, L.T.; Fels, M.; Guarino, M.; Vranken, E.; Hartung, J.; Bahr, C.; Berckmans, D. Image feature extraction for classification of aggressive interactions among pigs. Comput. Electron. Agric. 2014, 104, 57–62.

- Oczak, M.; Viazzi, S.; Ismayilova, G.; Sonoda, L.T.; Roulston, N.; Fels, M.; Bahr, C.; Hartung, J.; Guarino, M.; Berckmans, D.; et al. Classification of aggressive behaviour in pigs by activity index and multilayer feed forward neural network. Biosyst. Eng. 2014, 119, 89–97.

- Chen, C.; Zhu, W.; Liu, D.; Steibel, J.; Siegford, J.; Wurtz, K.; Han, J.; Norton, T. Detection of aggressive behaviours in pigs using a RealSence depth sensor. Comput. Electron. Agric. 2019, 166, 105003.

- Chen, C.; Zhu, W.; Ma, C.; Guo, Y.; Huang, W.; Ruan, C. Image motion feature extraction for recognition of aggressive behaviors among group-housed pigs. Comput. Electron. Agric. 2017, 142, 380–387.

- Lee, J.; Jin, L.; Park, D.; Chung, Y. Automatic recognition of aggressive behavior in pigs using a kinect depth sensor. Sensors 2016, 16, 631.

- Llonch, P.; Mainau, E.; Temple, D.; Manteca, X. Aggression in Pigs and Its Welfare Consequences the Farm Animal Welfare Fact Sheet. Technical Document. Available online: https://www.fawec.org/en/technical-documents-pigs/249-aggression-pigs-welfare (accessed on 8 August 2021).

- Ursinus, W.W.; Van Reenen, C.G.; Kemp, B.; Bolhuis, J.E. Tail biting behaviour and tail damage in pigs and the relationship with general behaviour: Predicting the inevitable? Appl. Anim. Behav. Sci. 2014, 156, 22–36.

- D’Eath, R.B.; Jack, M.; Futro, A.; Talbot, D.; Zhu, Q.; Barclay, D.; Baxter, E.M. Automatic early warning of tail biting in pigs: 3D cameras can detect lowered tail posture before an outbreak. PLoS ONE 2018, 13, e0194524.

- Taylor, N.R.; Main, D.C.J.; Mendl, M.; Edwards, S.A. Tail-biting: A new perspective. Vet. J. 2010, 186, 137–147.

- Islam, M.M.; Ahmed, S.T.; Mun, H.-S.; Bostami, A.B.M.R.; Kim, Y.-J.; Yang, C.-J. Use of thermal imaging for the early detection of signs of disease in pigs challenged orally with Salmonella typhimurium and Escherichia coli. Afr. J. Microbiol. Res. 2015, 9, 1667–1674.

- Andersen, H.M.L.; Dybkjær, L.; Herskin, M.S. Growing pigs’ drinking behaviour: Number of visits, duration, water intake and diurnal variation. Animal 2014, 8, 1881–1888.

- Alameer, A.; Kyriazakis, I.; Dalton, H.A.; Miller, A.L.; Bacardit, J. Automatic recognition of feeding and foraging behaviour in pigs using deep learning. Biosyst. Eng. 2020, 197, 91–104.

- Arulmozhi, E.; Basak, J.K.; Park, J.; Okyere, F.G.; Khan, F.; Lee, Y.; Lee, J.; Lee, D.; Kim, H.T. Impacts of nipple drinker position on water intake, water wastage and drinking duration of pigs. Turk. J. Vet. Anim. Sci. 2020, 44, 562–572.

- Maselyne, J.; Adriaens, I.; Huybrechts, T.; De Ketelaere, B.; Millet, S.; Vangeyte, J.; Van Nuffel, A.; Saeys, W. Measuring the drinking behaviour of individual pigs housed in group using radio frequency identification (RFID). Animal 2016, 10, 1557–1566.

- Lao, F.; Brown-Brandl, T.; Stinn, J.P.; Liu, K.; Teng, G.; Xin, H. Automatic recognition of lactating sow behaviors through depth image processing. Comput. Electron. Agric. 2016, 125, 56–62.

- Kashiha, M.; Bahr, C.; Haredasht, S.A.; Ott, S.; Moons, C.P.H.; Niewold, T.A.; Ödberg, F.O.; Berckmans, D. The automatic monitoring of pigs water use by cameras. Comput. Electron. Agric. 2013, 90, 164–169.

- Alameer, A.; Kyriazakis, I.; Bacardit, J. Automated recognition of postures and drinking behaviour for the detection of compromised health in pigs. Sci. Rep. 2020, 10, 1–15.

- Nasirahmadi, A.; Edwards, S.A.; Sturm, B. Investigating the effect of rooting substrate provision on the group lying behaviour of pigs using machine vision. In Proceedings of the CIGR-AgEng Conference, Aarhus, Denmark, 26–29 June 2016; pp. 1–5.

- Martínez-Avilés, M.; Fernández-Carrión, E.; López García-Baones, J.M.; Sánchez-Vizcaíno, J.M. Early Detection of Infection in Pigs through an Online Monitoring System. Transbound. Emerg. Dis. 2017, 64, 364–373.

- Fernández-Carrión, E.; Martínez-Avilés, M.; Ivorra, B.; Martínez-López, B.; Ramos, Á.M.; Sánchez-Vizcaíno, J.M. Motion-based video monitoring for early detection of livestock diseases: The case of African swine fever. PLoS ONE 2017, 12, e0183793.

- Fernández-Carrión, E.; Barasona, J.Á.; Sánchez, Á.; Jurado, C.; Cadenas-Fernández, E.; Sánchez-Vizcaíno, J.M. Computer vision applied to detect lethargy through animal motion monitoring: A trial on African swine fever in wild boar. Animals 2020, 10, 2241.

- Li, D.; Chen, Y.; Zhang, K.; Li, Z. Mounting behaviour recognition for pigs based on deep learning. Sensors 2019, 19, 4924.

- Amezcua, R.; Walsh, S.; Luimes, P.H.; Friendship, R.M. Infrared thermography to evaluate lameness in pregnant sows. Can. Vet. J. 2014, 55, 268–272.

- Zhang, Z.; Wang, H.; Liu, T.; Wang, Y.; Zhang, H.; Yuan, F.; Yang, X.; Xu, S.; Meng, Y. Accurate detection method of pig’s temperature based on non-point source thermal infrared image. CAAI Trans. Intell. Technol. 2021.

- Mason-D’Croz, D.; Bogard, J.R.; Herrero, M.; Robinson, S.; Sulser, T.B.; Wiebe, K.; Willenbockel, D.; Godfray, H.C.J. Modelling the global economic consequences of a major African swine fever outbreak in China. Nat. Food 2020, 1, 221–228.

- Schaefer, A.L.; Cook, N.; Tessaro, S.V.; Deregt, D.; Desroches, G.; Dubeski, P.L.; Tong, A.K.W.; Godson, D.L. Early detection and prediction of infection using infrared thermography. Can. J. Anim. Sci. 2004, 84, 73–80.

- Schmidt, M.; Lahrmann, K.H.; Ammon, C.; Berg, W.; Schön, P.; Hoffmann, G. Assessment of body temperature in sows by two infrared thermography methods at various body surface locations. J. Swine Health Prod. 2013, 21, 203–209.

- Siewert, C.; Dänicke, S.; Kersten, S.; Brosig, B.; Rohweder, D.; Beyerbach, M.; Seifert, H. Difference method for analysing infrared images in pigs with elevated body temperatures. Z. Med. Phys. 2014, 24, 6–15.

| Category | Parameters | Used Cameras | Used Tools and Techniques | Reference(s) |
|---|---|---|---|---|
| Identification | Face recognition | 2D | Eigenspace method | [44] |
| | | | CNN (SVM) | [43] |
| | | Smartphone | Deep CNN | [40] |
| | Live weight | 2D, CCD | Relating pixel values to morphological parameter dimensions | [6,29,30,50] |
| | | | ANN | [31] |
| | | | Transfer function model (by using body area) | [18] |
| | | | Vector-quantified temporal associative memory (VQTAM) | [45] |
| | | Depth images | Relating pixel values to morphological parameter dimensions | [24] |
| | | | Machine vision module of the LabVIEW system | [53] |
| | | | Faster R-CNN | [51] |
| | | 2D (reconstructed as 3D) | Structure-from-Motion | [46] |
| | Growth and mass | CCD | Relating pixel values to morphological parameter dimensions | [29,60] |
| | | 3D, Xtion, depth images | Extract pig body surface dimension parameters | [17] |
| | | | Relating pixel values to morphological parameter dimensions | [54] |
| | | | Point cloud method | [32,59,62] |
| | | | Point cloud method | [61] |
| | Individual identification | 2D | Machine learning | [63] |
| | | | Ellipse fitting technique | [21,64] |
| | | | Gaussian mixture model | [20] |
| | | | R-CNN | [67] |
| | | | CNN (tag-box method) | [66] |
| | | | Adaptive partitioning and multilevel thresholding segmentation | [69] |
| | | | Machine vision (YOLOv3 and SORT) | [71] |
| | | | Blob detection | [28,72] |
| | | Depth images | Image segmentation (Otsu’s thresholding) | [73] |
| Behavior and activities | Posture detection | 2D | ANN (perceptron) | [76] |
| | | | SVM | [80] |
| | | | Faster R-CNN | [67,83] |
| | | | Ellipse fitting method | [20,80,110] |
| | | | Motion detection | [77] |
| | | | YOLOv5 | [84] |
| | | | Two-stream convolutional networks | [85] |
| | | | Spatiotemporal convolutional network | [86] |
| | | 3D, depth images | Otsu’s algorithm | [68] |
| | | | Faster R-CNN (bounding box) | [79] |
| | | | Point cloud method | [19] |
| | Locomotion and lameness | 2D | CNN | [91] |
| | | | Ellipse fitting method, image locomotion | [27] |
| | | | Delaunay triangulation method | [64] |
| | | | Multivariate image analysis | [26] |
| | | GoPro | Optical flow filtering | [89] |
| | | | Optical flow and angular histogram | [90] |
| | | 3D and depth images | Motion capture | [92] |
| | Aggression | 2D | Activity index | [94] |
| | | | Motion detection, linear discriminant analysis | [93] |
| | | | Sequence extraction and motion analysis | [13] |
| | | Depth sensor | SVM | [97] |
| | | | Sequence extraction and motion analysis | [95] |
| | Tail biting | 3D | Tail injury scoring by tail posture-detecting algorithm | [100] |
| | Feeding and drinking | 2D | Transfer function modeling | [108] |
| | | | Object extraction | [13] |
| | | | Faster R-CNN | [12] |
| | | | CNN (GoogLeNet) | [104] |
| | | | CNN (YOLO) | [109] |
| | | Depth image | Movement detection using centroids | [107] |
| | | | 3D point clouds | [19] |
| Early disease detection | Abnormal behavior | 2D | Activity monitoring for 24 h | [68] |
| | African swine fever | | Motion computation | [111,112,113] |
| | Mounting behavior | | Mask R-CNN | [114] |
| | Infections from Salmonella typhimurium and Escherichia coli | Infrared thermography | Correlation between body temperature and average daily gain | [102] |
| | Lameness | | Temperature changes in leg area | [115] |
| | Body temperature changes | | Gray–temperature conversion model (G–T model) | [116] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Arulmozhi, E.; Bhujel, A.; Moon, B.-E.; Kim, H.-T. The Application of Cameras in Precision Pig Farming: An Overview for Swine-Keeping Professionals. Animals 2021, 11, 2343. https://doi.org/10.3390/ani11082343