Complex Intelligent Systems: Juxtaposition of Foundational Notions and a Research Agenda

Abstract

1. Backdrop remarks

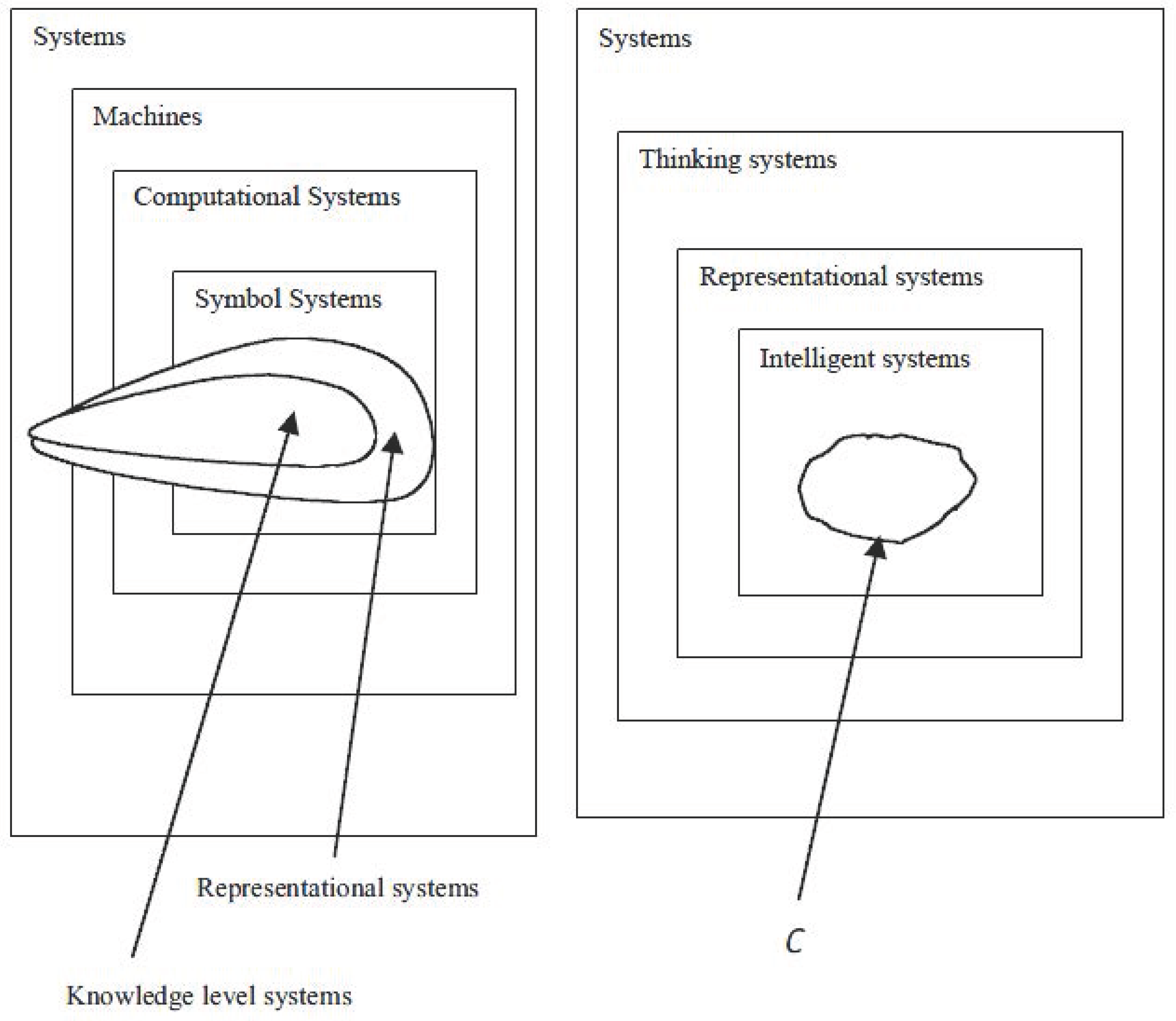

2. Juxtaposition of Foundational Notions

The aim of this section is to initiate a discussion on the modelling of complex intelligent systems. Accordingly, the minimal information given on the ideas introduced below is offered either as a memory refresher (re: Newell’s Soar) or as an agent provocateur for some of the underlying issues. As Torr [21] put it: “the recognition that two theories contain like terms that mean different things can facilitate comparison and communication.”

“We have then, in the current industrial revolution with information at its core, a source of great technical and social change with which we need to come to terms. But to do so entails exploring and clarifying a set of concepts and activities which are currently both confused and confusing.” (Checkland and Howell [20])

- On the nature of intelligence:

- a) possesses sensors.

- b) is able to act on its environment.

- c) possesses its own representational system Rs, i.e., Rs is independent of the language of another kind of entity S*.

- d) is able to connect sensory, representational, and motor information.

- e) is able to communicate with other systems within its own class.

- On the nature of representation, thinking, and meaning:

- Re: symbol for the representational system of entity E.

- Re =df a thought system of E able to create representations, where a representation of a situation, say S1, is another situation, say S2, characterised by the properties:

- S2 simplifies S1; and

- S2 preserves the essential characteristics of S1.

- On the nature of communication and understanding:

- Definition of communication: H1 communicates with H2 on a topic T if, and only if: (i) H1 understands T {Symbol: U (H1 T)}; (ii) H2 understands T {Symbol: U (H2 T)}; (iii) U (H1 T) is describable to and understood by H2; and (iv) U (H2 T) is describable to and understood by H1.

- Definition of understanding: An entity E has understood something, S, if and only if E can describe S in terms of a system of its own primitives (p is a primitive if and only if p’s understanding is immediate).
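The two definitions above can be restated compactly in symbols. What follows is only a sketch under stated assumptions: the predicates C (communicates), D (is describable to and understood by) and the primitive set P_E are notation introduced here for illustration and do not appear in the original; U(H, T) follows the text's own symbol. The block assumes the amsmath package for \text.

```latex
% C(H1, H2, T): H1 communicates with H2 on topic T (assumed notation)
% D(x, H):      x is describable to and understood by H (assumed notation)
\[
C(H_1, H_2, T) \iff
  U(H_1, T) \,\land\, U(H_2, T)
  \,\land\, D\bigl(U(H_1, T),\, H_2\bigr)
  \,\land\, D\bigl(U(H_2, T),\, H_1\bigr)
\]

% P_E: the system of E's own primitives (assumed notation)
\[
U(E, S) \iff \exists\, d \;
  \bigl[\, d \text{ describes } S \;\land\;
  d \text{ is expressed in terms of } P_E \,\bigr]
\]
```

Written this way, the four conditions are visibly symmetric in H1 and H2, so under this reading communication on T is a two-way relation.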

3. Research Agenda

- I1: The single most promising and, at the same time, most difficult research objective is to synthesise mathematical methods devised to explore complexity (for an introduction see [35]) with simulation models and an enhanced conceptual framework for the study of C and its members. Most likely, such an endeavour will require as yet untried mathematical tools and quite possibly the invention of new ones.

- I2: Synthesise the objective and subjective viewpoints in the modelling of complex intelligent systems. In other words, reconcile hard and soft science, facts and values, qualities and quantities, and criteria and measures of successful design or theoretical study.

- I3: In what specific sense is the whole greater than its parts? This brings in the issues of emergent properties and irreducibility (that is, explanatory, not constitutive, reduction).

- I4: Appropriate placing of an artificial system within its sociotechnical environment requires overcoming the environment’s resistance to change. Naturally, this resistance grows with how extensive and radical the changes to the structure and functioning of the environment must be in order to accommodate the new entrant. In other words, the consequences of incorporating the newly designed system into its sociotechnical environment should constitute part of the overall development process. How?

- I5: Following from the above, how can one manage the changes brought about in a system by instabilities purposefully created by an intervention (e.g., the Kosovo war)? How can one avoid singularities of C?

- I6: Classification of classes of systems (37 different classes are introduced in [1]) will enforce conceptual clarification.

- I7: A theory of C and its members requires regularities. The finding of such regularities should be a major research objective.

- I8: Given that a group mind is too far from the truth to be a useful scientific approximation, how could unified theories of cognition be related to social components (e.g., values, morals) for successful modelling of groups?

- I9: Discover or design the fundamental relations among the key members of C (i.e., the structure of C = the space of complex intelligent systems = Sc).

Appendix

| According to Allen Newell [23] | According to P.A.M. Gelepithis |
| --- | --- |
| 1. Behaving systems, | 1. Perception, |
| 2. Knowledge, | 2. Action, |
| 3. Representation, | 3. Growth (e.g., self-organisation), |
| 4. Machine* (e.g., computation), | 4. Meaning, |
| 5. Symbol, | 5. Thinking (e.g., computation), |
| 6. Architecture, | 6. Understanding, |
| 7. Intelligence, | 7. Communication, |
| 8. Search, | 8. Representation, |
| 9. Preparation vs. deliberation*. | 9. Intelligent system, |
| | 10. Purpose (inc. expectation), |
| | 11. Emotion, |
| | 12. Human language, |
| | 13. Consciousness (inc. moral principles), |
| | 14. Beauty. |

- Machine: A mathematical function that produces its output given its input. Computational system: A machine that can produce many functions.

- Preparation: Knowledge encoded in a system’s memory.

- Deliberation: Use of knowledge to choose one operation rather than others.
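The three glosses above can be made concrete with a small sketch. It is illustrative only and is not taken from Newell [23] or from the present paper: the names `doubler`, `ComputationalSystem`, `Agent`, `produce` and `deliberate` are hypothetical, and the dictionary-based "memory" is an assumed stand-in for whatever encoding a real system uses.

```python
from typing import Callable, Dict

# A "machine" in the appendix's sense: a fixed mapping from input to output.
Machine = Callable[[int], int]


def doubler(x: int) -> int:
    """One particular machine: it always computes the same function."""
    return 2 * x


class ComputationalSystem:
    """A machine that can produce many functions (hypothetical sketch).

    The program table is an assumed mechanism for selecting which
    function the system realises.
    """

    def __init__(self, programs: Dict[str, Machine]) -> None:
        self.programs = dict(programs)

    def produce(self, name: str) -> Machine:
        # Return the function associated with the requested program name.
        return self.programs[name]


class Agent:
    """Toy contrast between preparation and deliberation."""

    def __init__(self, system: ComputationalSystem, memory: Dict[str, str]) -> None:
        self.system = system
        # Preparation: knowledge encoded in the system's memory
        # (here, which program serves which goal).
        self.memory = dict(memory)

    def deliberate(self, goal: str) -> Machine:
        # Deliberation: use stored knowledge to choose one operation
        # rather than the others.
        return self.system.produce(self.memory[goal])
```

For example, an `Agent` whose memory maps the goal `"area"` to a squaring program would select that program, rather than `doubler`, when asked to deliberate on `"area"`.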

References

- [1] Skyttner, L. General Systems Theory: An Introduction; Macmillan Press Ltd, 1996.

- [2] Stein, D. L. (Ed.) Lectures in the Sciences of Complexity; Addison-Wesley Publishing Company, 1989.

- [3] Schweitzer, F. (Ed.) Self-Organisation of Complex Structures; Gordon and Breach Publishers, 1997.

- [4] Atmanspacher, H. Cartesian Cut, Heisenberg Cut, and the Concept of Complexity. In The Quest for a Unified Theory of Information; Hofkirchner, W., Ed.; Gordon and Breach Publishers, 1997*1999.

- [5] Piaget, J. The Psychology of Intelligence (original in French); Routledge and Kegan Paul, 1947*1972.

- [6] Hebb, D. O. The Organisation of Behaviour; John Wiley and Sons, 1949.

- [7] Guilford, J. P. The nature of intelligence; McGraw-Hill, 1967.

- [8] Hunt, E. B. Intelligence as an information-processing concept. British Journal of Psychology 1980, 71, 449–474.

- [9] Nilsson, N. J. Logic and Artificial Intelligence. Artificial Intelligence 1991, 47, 31–56.

- [10] Rumelhart, D. E.; Hinton, G. E.; McClelland, J. L. A General Framework for Parallel Distributed Processing. In Parallel Distributed Processing: Explorations in the Microstructure of Cognition; Rumelhart, D. E., McClelland, J. L., the PDP research group, Eds.; 1986.

- [11] Chandrasekaran, B. What kind of information processing is intelligence? A perspective on AI paradigms and a proposal. In Foundations of Artificial Intelligence: A Source Book; Partridge, D., Wilks, Y., Eds.; Cambridge University Press, 1990.

- [12] Hofkirchner, W. (Ed.) The Quest for a Unified Theory of Information; Gordon and Breach Publishers, 1997*1999.

- [13] Wiener, N. Cybernetics; or control and communication in the animal and the machine, Second edition; The MIT Press, 1948*1961.

- [14] Pareto, V.D.F. Trattato di Sociologia Generale (English translation: The Mind and Society; Livingston, A., Ed.); New York, 1916*1935.

- [15] Parsons, T. The Structure of Social Action; Chicago Press, 1937*1949.

- [16] Laszlo, E. A Note in Evolution. In The Quest for a Unified Theory of Information; Hofkirchner, W., Ed.; Gordon and Breach Publishers, 1997*1999.

- [17] Simon, H.A. Models of Thought: Vol. 2; Yale University Press, 1989.

- [18] Langton, C. G. (Ed.) Artificial Life: An Overview; MIT Press, 1995.

- [19] Adami, C. Introduction to Artificial Life; Springer-Verlag New York, Inc, 1998.

- [20] Checkland, P.; Howell, S. Information, Systems and Information Systems: making sense of the field; John Wiley & Sons, 1998.

- [21] Torr, C. Equilibrium and Incommensurability. In Contingency, Complexity and the Theory of the Firm; Dow, S. C., Earl, P. E., Eds.; Edward Elgar Publishing, Inc, 1998.

- [22] Anderson, J. R.; Lebiere, C. The atomic components of thought; Lawrence Erlbaum, 1998.

- [23] Newell, A. Unified theories of cognition; Harvard University Press, 1990.

- [24] Newell, A.; Simon, H. A. Computer Science as Empirical Inquiry: Symbols and Search. Communications of the ACM 1976, 19, 113–126.

- [25] Anderson, J. R. The Architecture of Cognition; Harvard University Press, 1983.

- [26] Newell, A.; Simon, H. A. Human Problem Solving; Prentice Hall, 1972.

- [27] Gelepithis, P.A.M. Conceptions of Human Understanding: A critical review. Cognitive Systems 1986, 1, 295–305.

- [28] Gelepithis, P.A.M. Survey of Theories of Meaning. Cognitive Systems 1988, 2, 141–162.

- [29] Gelepithis, P.A.M. Knowledge, Truth, Time, and Topological spaces. In Proceedings of the 12th International Congress on Cybernetics; Namur, Belgium, 1989; pp. 247–256.

- [30] Gelepithis, P.A.M. The possibility of Machine Intelligence and the impossibility of Human-Machine Communication. Cybernetica 1991, XXXIV, 255–268.

- [31] Gelepithis, P.A.M.; Goodfellow, R. An alternative architecture for intelligent tutoring systems. Interactive Learning International 1992, 8, 29–36.

- [32] Gelepithis, P.A.M.; Goodfellow, R. An alternative architecture for intelligent tutoring systems: Theoretical and Implementational Aspects. Interactive Learning International 1992, 8, 171–175.

- [33] Gelepithis, P.A.M. Aspects of the theory of the human and the human-made minds. In 13th International Workshop of the European Society for the Study of Cognitive Systems, Wadham College, University of Oxford, 5-8 September 1995. A revised version was given as an Invited Lecture to the Faculty of the Department of Methodology, History and Philosophy of Science, National and Kapodistrian University of Athens, Athens, 29 February 1996.

- [34] Gelepithis, P.A.M. A Rudimentary Theory of Information: Consequences for Information Science and Information Systems. World Futures 1997, 49, 263–274. Also published in The Quest for a Unified Theory of Information; Hofkirchner, W., Ed.; Gordon and Breach Publishers.

- [35] Nicolis, G.; Prigogine, I. Exploring Complexity: An Introduction; W. H. Freeman and Company, 1989.

- *This is a revised version of a paper of the same title delivered at the 17th Annual Workshop of the European Society for the Study of Cognitive Systems, 26-29 August 2000, Wadham College, Oxford, England. ESSCS Abstracts p. 9.

© 2001 by the author. Reproduction for noncommercial purposes permitted.

Share and Cite

Gelepithis, P.A.M. Complex Intelligent Systems: Juxtaposition of Foundational Notions and a Research Agenda. Entropy 2001, 3, 247-258. https://doi.org/10.3390/e3040247
