Abstract

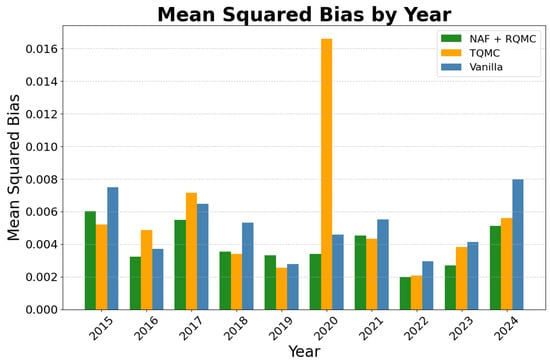

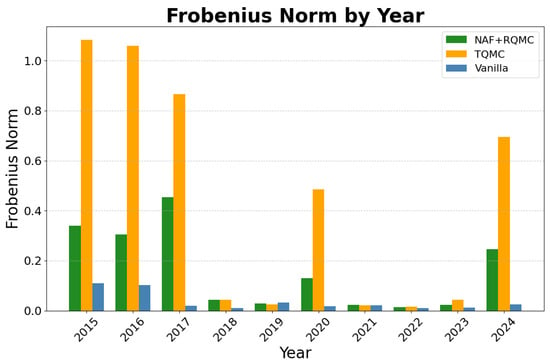

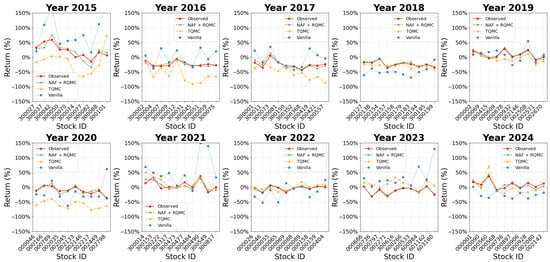

This paper proposes a novel transport quasi-Monte Carlo framework that combines randomized quasi-Monte Carlo sampling with a neural autoregressive flow architecture for efficient sampling and integration over complex, high-dimensional distributions. The method constructs a sequence of invertible transport maps to approximate the target density by decomposing it into a series of lower-dimensional marginals. Each sub-model leverages normalizing flows parameterized via monotonic beta-averaging transformations and is optimized using forward Kullback–Leibler (KL) divergence. To enhance computational efficiency, a hidden-variable mechanism that transfers optimized parameters between sub-models is adopted. Numerical experiments on a banana-shaped distribution demonstrate that this new approach outperforms standard Monte Carlo-based normalizing flows in both sampling accuracy and integral estimation. Further, the model is applied to A-share stock return data and shows reliable predictive performance in semiannual return forecasts, while accurately capturing covariance structures across assets. The results highlight the potential of transport quasi-Monte Carlo (TQMC) in financial modeling and other high-dimensional inference tasks.

1. Introduction

1.1. Quasi-Monte Carlo

For a wide range of problems in quantitative finance such as option pricing under stochastic volatility, portfolio risk estimation, Value-at-Risk (VaR) and Expected Shortfall (ES) computation, and optimal asset allocation under uncertainty, one often needs to compute expectations with respect to high-dimensional probability distributions. These expectations can typically be rewritten as integrals over a d-dimensional Gaussian or log-normal measure, where d reflects the number of underlying assets, discretized time steps, and model complexity. In realistic settings, d can reach into the hundreds or even thousands. Under such conditions, classical numerical quadrature methods, such as Gauss–Hermite or sparse grid integration, suffer from the curse of dimensionality and become computationally intractable [1].

To overcome this barrier, the Monte Carlo (MC) method has become a cornerstone of computational finance. Originally developed by Ulam and von Neumann during the Manhattan Project and later formalized for integration by Metropolis and Rosenbluth, MC methods use random sampling to approximate integrals and expectations. Their convergence rate, $O(N^{-1/2})$ in the number of samples $N$, is independent of dimension, making MC particularly attractive in high-dimensional settings [2]. MC methods have been applied to simulate geometric Brownian motion and price path-dependent derivatives such as Asian and barrier options, and to compute Greeks via pathwise or likelihood ratio methods [3,4]. The method’s generality and flexibility allow it to accommodate non-smooth payoffs, stochastic volatility, jump diffusion models, and more.

However, a major drawback of the MC method is its slow convergence: reducing the standard error by a factor of 10 requires 100 times more samples. This computational inefficiency becomes especially pronounced when simulating rare events or computing tail risk measures. To address this limitation, the field has increasingly turned to quasi-Monte Carlo (QMC) methods. QMC replaces random sampling with low-discrepancy sequences: deterministic point sets that fill the integration domain more uniformly than purely random points [5,6]. Common sequences include Sobol’, Halton, and Faure sequences. For sufficiently smooth integrands, QMC achieves a convergence rate of $O(N^{-1}(\log N)^{d})$, which is asymptotically faster than MC for fixed dimension $d$. Applications in finance have demonstrated the superior efficiency of QMC in pricing exotic options, computing risk measures, and solving portfolio optimization problems [7,8].

Nonetheless, QMC’s error is deterministic and thus lacks a statistical interpretation. Moreover, its performance is sensitive to the dimension and smoothness of the integrand, and error bounds depend on complex measures like variation in the sense of Hardy and Krause. To remedy this, Randomized Quasi-Monte Carlo (RQMC) methods were developed which combine the structure of QMC with randomization (e.g., digital scrambling, shifting, or lattice shifting) to generate unbiased estimators [9]. This allows for standard error estimation and the use of variance-based stopping rules while retaining the variance reduction benefits of QMC. RQMC has been particularly successful in financial simulations with smooth payoff structures and moderate effective dimension, as demonstrated in the pricing of mortgage-backed securities, insurance products, and risk aggregation models [3,10,11].
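The contrast between MC and RQMC described above can be illustrated with a minimal sketch. It assumes SciPy's `scipy.stats.qmc.Sobol` generator with scrambling as the RQMC method and a hypothetical smooth test integrand whose exact integral over the unit cube is 1; neither choice comes from the paper.

```python
import numpy as np
from scipy.stats import qmc

def integrand(u):
    # Hypothetical smooth test function on [0,1]^d; each factor (0.5 + u_j)
    # integrates to 1, so the exact integral is 1.
    return np.prod(1.0 + (u - 0.5), axis=1)

def mc_estimate(n, d, rng):
    # Plain Monte Carlo: average over i.i.d. uniform points.
    return integrand(rng.random((n, d))).mean()

def rqmc_estimate(n, d, seed):
    # Scrambled Sobol' points: each seed is an independent randomization,
    # so repeated estimates are unbiased and give a standard error.
    sampler = qmc.Sobol(d=d, scramble=True, seed=seed)
    return integrand(sampler.random_base2(m=int(np.log2(n)))).mean()

d, n, reps = 5, 1024, 20
rng = np.random.default_rng(0)
mc = np.array([mc_estimate(n, d, rng) for _ in range(reps)])
rq = np.array([rqmc_estimate(n, d, seed) for seed in range(reps)])

# Root-mean-squared error over independent replications.
mc_rmse = np.sqrt(np.mean((mc - 1.0) ** 2))
rq_rmse = np.sqrt(np.mean((rq - 1.0) ** 2))
print(f"MC RMSE: {mc_rmse:.2e}, RQMC RMSE: {rq_rmse:.2e}")
```

Because every replication uses a fresh scrambling, the spread of the RQMC estimates can be used as an error estimate in the same way as for MC.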

Despite their success, MC, QMC, and RQMC methods often rely on the assumption that the target distribution is known in closed form, at least up to a normalizing constant. This enables efficient sampling and importance weighting. However, in modern finance and econometrics, models are increasingly complex, with intractable or implicit densities as in latent variable models, generative stochastic networks, or when modeling real-world asset returns under heavy tails and asymmetries. In such contexts, classical sampling and quadrature approaches fail to scale or adapt. To address this, this paper proposes a learning-based method that estimates the target distribution through a transport map: a deterministic, invertible transformation that pushes forward a simple base distribution (such as a uniform distribution on the unit hypercube) onto the target distribution.

1.2. Normalizing Flows and Neural Autoregressive Flow

Normalizing flows (NFs) are a class of generative models that represent complex probability distributions through a sequence of invertible, differentiable transformations. Given a base distribution, typically a standard multivariate Gaussian or uniform distribution, NFs construct a bijective mapping to a target distribution; the change-of-variables formula then gives exact and tractable computation of the density function, and sampling reduces to pushing base samples through the map. These two properties, tractable likelihood evaluation and efficient sample generation, distinguish NFs from many other generative models.
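As a small illustration of the change-of-variables formula, the sketch below uses a one-dimensional affine map $x = az + b$ applied to a standard Gaussian base. The map and its parameters are illustrative assumptions; the resulting density can be checked against the closed-form Gaussian $N(b, a^2)$.

```python
import numpy as np
from scipy.stats import norm

def flow_log_density(x, a, b):
    # Change of variables for x = a*z + b with z ~ N(0, 1):
    # log q(x) = log p_base(z) - log|dx/dz|, where z = (x - b) / a.
    z = (x - b) / a
    return norm.logpdf(z) - np.log(abs(a))

x = np.linspace(-3.0, 5.0, 9)
log_q = flow_log_density(x, a=2.0, b=1.0)

# The pushed-forward density coincides with the Gaussian N(1, 2^2).
reference = norm.logpdf(x, loc=1.0, scale=2.0)
print(np.max(np.abs(log_q - reference)))
```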

The foundational theory behind NFs emerged from the field of optimal transport and statistical mechanics, particularly in the work of Tabak and Vanden-Eijnden (2010) [12], who introduced a method for transforming probability densities using transport maps governed by differential equations. Later, Tabak and Turner (2013) [13] generalized this approach into a family of density estimators based on nonparametric optimal transport, proposing an iterative learning mechanism for constructing transport maps via convex optimization and regularization. Normalizing flows offer a powerful framework for density modeling and variational inference, using compositions of invertible transformations to model complex distributions while enabling exact likelihood computation and efficient sampling [14,15]. These works laid the theoretical foundation for using invertible maps to perform density estimation and generation.

Early applications of NFs demonstrated their potential across tasks such as clustering, classification, and nonlinear dimensionality reduction. For example, Agnelli et al. (2010) [16] explored using transformations for feature-space clustering. Subsequently, Rippel and Adams (2013) [17] investigated NFs for high-dimensional density estimation, showing that NFs could transform structured, real-world datasets into simple latent representations that are more amenable to analysis and modeling.

A major breakthrough came with the introduction of NFs to variational inference by Rezende and Mohamed (2015) [15]. In their work, they introduced Planar and Radial Flows, simple invertible transformations that enhanced the expressiveness of variational posteriors in Variational Autoencoders (VAEs). These flows enabled gradient-based optimization and reparameterization while preserving tractable density evaluation, addressing a key limitation in standard VAEs, where the posterior is often overly simplistic (e.g., mean-field Gaussian).

In parallel, Dinh et al. (2014, 2016) introduced the NICE and RealNVP models [18], which popularized the use of coupling layers—a type of invertible transformation with triangular Jacobians enabling efficient computation of both forward transformations and Jacobian determinants. These architectures supported the high-capacity modeling of complex data such as images and audio, and became widely adopted due to their simplicity and tractability.
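A coupling layer can be sketched in a few lines. The example below is an affine coupling layer in the style of RealNVP for two-dimensional inputs; `scale_net` and `shift_net` are hypothetical stand-ins for learned networks. Because the first coordinate passes through unchanged, the Jacobian is triangular and its log-determinant is simply the sum of the log-scales.

```python
import numpy as np

def scale_net(x1):
    # Hypothetical stand-in for a learned log-scale network.
    return np.tanh(x1)

def shift_net(x1):
    # Hypothetical stand-in for a learned shift network.
    return 0.5 * x1 ** 2

def coupling_forward(x):
    # y1 = x1, y2 = x2 * exp(s(x1)) + t(x1); Jacobian is lower-triangular.
    x1, x2 = x[:, :1], x[:, 1:]
    s, t = scale_net(x1), shift_net(x1)
    y2 = x2 * np.exp(s) + t
    log_det = s.sum(axis=1)  # log|det J| = sum of log-scales
    return np.hstack([x1, y2]), log_det

def coupling_inverse(y):
    # The inverse reuses s and t, which depend only on the untouched half.
    y1, y2 = y[:, :1], y[:, 1:]
    s, t = scale_net(y1), shift_net(y1)
    return np.hstack([y1, (y2 - t) * np.exp(-s)])

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 2))
y, log_det = coupling_forward(x)
x_back = coupling_inverse(y)
```

Neither direction requires inverting `scale_net` or `shift_net`, which is why both passes are cheap.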

Building on these developments, the field progressed to more expressive architectures such as autoregressive flows. These models decompose a joint distribution into a sequence of conditionals, enabling expressive modeling of dependencies across dimensions. A notable instance is the Inverse Autoregressive Flow (IAF) by Kingma et al. (2016) [19], which conditions transformations on an auxiliary autoregressive network such as MADE (Masked Autoencoder for Distribution Estimation; [20]). IAFs preserve fast parallel sampling from the approximate posterior, making them suitable for training large-scale VAEs. However, IAFs rely on affine transformations, which may be insufficient to represent distributions with highly nonlinear structure unless many layers are stacked, resulting in deep and computationally expensive architectures.

To address the limitations of affine transformations in autoregressive flows, Huang et al. (2018) introduced the neural autoregressive flow (NAF) [21]. In NAF, each affine transformation is replaced with a strictly monotonic neural network, allowing for a universal approximation of continuous distributions under mild assumptions. The model preserves the autoregressive structure of IAF while greatly enhancing flexibility. However, NAF introduces an architectural bottleneck: each transformation is governed by a separate transformer network whose parameters are predicted by a distinct conditioner network, resulting in a quadratic parameter complexity in terms of model width. While this structure improves expressiveness, it can hinder scalability, particularly in high-dimensional settings where memory and computation are constrained.

Various techniques have been proposed to reduce the redundancy and inefficiency of this dual-network architecture. For instance, conditional weight normalization and sparsity regularization [22] help mitigate overparameterization. However, these fixes only partially resolve the scalability issues inherent in NAF’s design. In response, De Cao, Aziz, and Titov (2019) proposed Block Neural Autoregressive Flow (B-NAF) [23], which unifies the conditioner and transformer into a single feedforward neural network with carefully structured block-wise transformations. B-NAF achieves similar expressiveness to NAF while drastically reducing parameter count, training time, and memory usage. This innovation allows for tractable high-dimensional density estimation with improved computational efficiency, making B-NAF a practical candidate for tasks such as likelihood-based generative modeling, Bayesian posterior estimation, and density-based clustering in complex domains.

Overall, the development of normalizing flows represents a confluence of deep learning, probability theory, and numerical analysis, providing a flexible and theoretically grounded framework for density modeling and transformation-based sampling. Their applications now span generative modeling, Bayesian inference, density ratio estimation, and scientific computing, demonstrating wide applicability beyond the original scope of variational inference.

Building on the developments in normalizing flows and inspired in particular by the NAF architecture of Huang et al. [21], the next section introduces a quasi-Monte Carlo (QMC) method guided by transport maps parameterized through NAF-like structures. This approach leverages the core insight from optimal transport theory that complex distributions can be obtained by deterministically pushing forward a simple base distribution, such as the uniform distribution on the unit hypercube, through a sequence of invertible transformations across dimensions. By training a transport map using neural autoregressive architectures, this method learns to approximate the target distribution from data or samples, even when its density is analytically intractable. The resulting map allows quasi-Monte Carlo techniques to be applied within this learned representation. This fusion of function approximation and low-discrepancy sampling extends QMC methods to settings with implicitly defined, non-Gaussian, and high-dimensional target distributions, which are common in modern financial modeling, thus bridging the gap between classical numerical integration and deep generative modeling.

2. Methodology

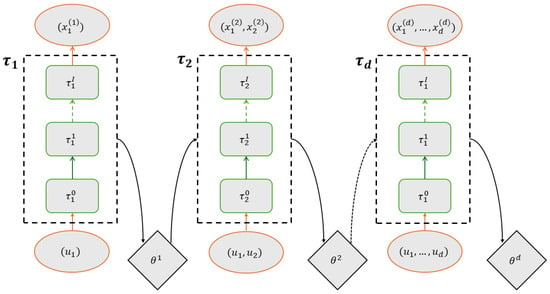

2.1. Neural Autoregressive Flow Architecture

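Suppose $u = (u_1, \dots, u_d) \in [0,1]^d$ is a $d$-dimensional sample generated from RQMC. Our goal is to design an autoregressive flow $T: [0,1]^d \to \mathbb{R}^d$ such that $T(u) \sim P$, where $P$ is a target distribution defined on $\mathbb{R}^d$ with density function $p$. To achieve this, a neural autoregressive flow (NAF) based on the architecture in [21] is built. The overview is shown in Figure 1. This architecture consists of $d$ individual neural network sub-models. The $k$-th sub-model takes as input the first $k$ coordinates of the sample, $u_{1:k}$, and a hidden variable $h_{k-1}$ from the $(k-1)$-th sub-model, and aims to approximate the marginal distribution of the target distribution $P$ on its first $k$ dimensions, denoted by $P_k$ with density $p_k$. The hidden variable is explained in Section 2.3. The $k$-th sub-model pushes the inputs through a sequence of reversible transport maps $T_{k,0}, T_{k,1}, \dots, T_{k,I}$ to obtain a set of samples $x_k = T_k(u_{1:k})$, where $T_k = T_{k,I} \circ \cdots \circ T_{k,1} \circ T_{k,0}$ is the composition of all mappings. Each $T_{k,i}$ is a transport map as defined in the normalizing-flow literature [13]. Because $u_{1:k}$ is uniform on $[0,1]^k$, the density of $x_k$, which is induced by the transport maps, can be written as

$$q_k(x_k) = \left|\det J_{T_k}(u_{1:k})\right|^{-1}, \qquad u_{1:k} = T_k^{-1}(x_k),$$

where $J_{T_k}$ is the Jacobian matrix of the projection $T_k$.

Figure 1. Neural autoregressive flow architecture.

To drive the training, the model uses the forward Kullback–Leibler (KL) divergence as the objective function to measure the difference between the target and approximated distributions in the $k$-th sub-model:

$$D_{\mathrm{KL}}(q_k \,\|\, p_k) = \int q_k(x) \log \frac{q_k(x)}{p_k(x)} \, dx.$$

A numerical calculation of the KL divergence from the trained samples would be $\frac{1}{N}\sum_{n=1}^{N}\left[\log q_k\big(x_k^{(n)}\big) - \log p_k\big(x_k^{(n)}\big)\right]$. $N$ is the total number of training data, and $x_k^{(n)} = T_k\big(u_{1:k}^{(n)}\big)$ is the $n$-th trained sample from the $k$-th sub-model. Using the composition $T_k = T_{k,I} \circ \cdots \circ T_{k,0}$, there is a sequence of samples during the transport maps:

$$u_{1:k}^{(n)} \xrightarrow{\;T_{k,0}\;} z_{k,0}^{(n)} \xrightarrow{\;T_{k,1}\;} z_{k,1}^{(n)} \xrightarrow{\;T_{k,2}\;} \cdots \xrightarrow{\;T_{k,I}\;} z_{k,I}^{(n)} = x_k^{(n)}.$$

$z_{k,i}^{(n)}$ is a $k$-dimensional variable generated after the transport map $T_{k,i}$. With this sequence of midway samples and applying the chain rule, $\log q_k\big(x_k^{(n)}\big) = -\sum_{i=0}^{I} \log\big|\det J_{k,i}^{(n)}\big|$, so the objective function becomes

$$\mathcal{L}_k = -\frac{1}{N}\sum_{n=1}^{N}\left[\sum_{i=0}^{I}\log\left|\det J_{k,i}^{(n)}\right| + \log p_k\big(x_k^{(n)}\big)\right].$$

$J_{k,i}^{(n)}$ is the Jacobian matrix resulting from the $i$-th transport map in the $k$-th sub-model at the $n$-th sample. Therefore, the calculation of $\log|\det J_{T_k}|$ reduces to the individual terms $\log\big|\det J_{k,i}^{(n)}\big|$. In practice, $J_{k,i}$ is often constructed as a lower-triangular matrix to further simplify the calculation. By parameterizing the maps $T_{k,i}$ with parameters $\theta_k$, the model training process is solving for

$$\theta_k^{*} = \arg\min_{\theta_k} \mathcal{L}_k(\theta_k).$$

At each $k$, the explicit form of $T_{k,i}$ and $J_{k,i}$ depends on the parameterization. The entire autoregressive flow architecture starts from $k = 1$ and proceeds to the next sub-model until the $d$-th one finishes training.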
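The sample-based objective described in this subsection can be sketched as a generic routine that accumulates the log-determinants of successive transport maps and adds the log target density at the final samples. The function names and the layer interface (each layer returns its output together with a per-sample log-determinant) are assumptions made for illustration. As a sanity check, when the only map is the inverse Gaussian CDF and the target is a standard Gaussian, the map is exact and the objective is zero.

```python
import numpy as np
from scipy.stats import norm, qmc

def base_layer(u):
    # Inverse Gaussian CDF applied element-wise; d/du Phi^{-1}(u) = 1/phi(z).
    z = norm.ppf(u)
    log_det = -norm.logpdf(z).sum(axis=1)
    return z, log_det

def kl_objective(u, layers, log_target):
    # -(1/N) sum_n [ sum_i log|det J_i| + log p(x_n) ], with x_n = T(u_n).
    z, total_log_det = u, np.zeros(u.shape[0])
    for layer in layers:
        z, log_det = layer(z)
        total_log_det += log_det
    return -np.mean(total_log_det + log_target(z))

def std_normal_logpdf(x):
    # Log-density of a standard Gaussian with independent coordinates.
    return norm.logpdf(x).sum(axis=1)

# Scrambled Sobol' points play the role of the RQMC training set.
u = qmc.Sobol(d=2, scramble=True, seed=0).random_base2(m=8)
loss = kl_objective(u, [base_layer], std_normal_logpdf)
print(loss)
```

Further layers can be appended to the `layers` list as long as each returns its own log-determinant, which mirrors the layer-by-layer sum in the objective.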

In the next subsections, we introduce a parameterization of the transport maps using averaged Beta distribution CDFs and a choice of the hidden variable. We also show how the training is carried out under this design and how the hidden variable facilitates the training of each sub-model.

2.2. Parameterization of Transport Map

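Focusing on the $k$-th sub-model, the purpose of $T_k$ is to map uniformly distributed samples $u_{1:k} \in [0,1]^k$ to samples $x_k \sim P_k$. A design similar to that of Liu (2024) [24] is adopted. Starting from the base transformation, $T_{k,0}$ is parameterized as the element-wise inverse Gaussian CDF $\Phi^{-1}$, which builds a projection from the hypercube $[0,1]^k$ to the full space $\mathbb{R}^k$. The following transport maps $T_{k,i}$, $i = 1, \dots, I$, perform projections from $\mathbb{R}^k$ to $\mathbb{R}^k$. To introduce the dependence structure into the samples and construct an autoregressive relationship, this method uses the form $T_{k,i}(z) = L_{k,i} F_{k,i}(z) + b_{k,i}$ to induce the variable correlations. Here, $F_{k,i}$ is an element-wise transform such that

$$F_{k,i}(z) = \left(f_{k,i,1}(z_1), f_{k,i,2}(z_2), \dots, f_{k,i,k}(z_k)\right)^{\top},$$

where $z = (z_1, \dots, z_k)^{\top}$ is a notation for any $k$-dimensional vector. $L_{k,i}$ is a $k \times k$ lower-triangular matrix, and $b_{k,i}$ is a shift applied to each dimension. Given the properties of $L_{k,i}$ and $F_{k,i}$, the lower-triangular structure is inherited by the Jacobian of $T_{k,i}$. With this parameterization, it can be derived that $\log|\det J_{k,i}|$ is a summation of the log derivatives on each element and the contribution of the main diagonal of $L_{k,i}$:

$$\log\left|\det J_{k,i}(z)\right| = \sum_{j=1}^{k} \log f'_{k,i,j}(z_j) + \sum_{j=1}^{k} \log (L_{k,i})_{jj},$$

where $f'_{k,i,j}$ is the derivative of $f_{k,i,j}$, $z_j$ is the $j$-th element of $z$, and $(L_{k,i})_{jj}$ are the elements on the diagonal of $L_{k,i}$. To ensure the invertibility of $T_{k,i}$, each $(L_{k,i})_{jj}$ has to be positive.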
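The log-determinant identity for a layer of the form "lower-triangular matrix times element-wise transform plus shift" can be checked numerically. The sketch below uses a hypothetical monotone function `f` as a stand-in for the element-wise transform (not the Beta-averaged form of the paper) and compares the closed-form log-determinant against a finite-difference Jacobian.

```python
import numpy as np

def f(z):
    # Hypothetical strictly increasing element-wise map (stand-in only).
    return z + 0.5 * np.tanh(z)

def f_prime(z):
    # Derivative of the stand-in map; always positive.
    return 1.0 + 0.5 / np.cosh(z) ** 2

def layer(z, L, b):
    # T(z) = L F(z) + b for a single point z of shape (k,).
    return L @ f(z) + b

def layer_log_det(z, L):
    # Sum of element-wise log-derivatives plus log of the diagonal of L.
    return np.sum(np.log(f_prime(z))) + np.sum(np.log(np.diag(L)))

def numerical_jacobian(fun, z, eps=1e-6):
    # Central finite differences, one input coordinate at a time.
    k = z.size
    J = np.zeros((k, k))
    for j in range(k):
        e = np.zeros(k)
        e[j] = eps
        J[:, j] = (fun(z + e) - fun(z - e)) / (2 * eps)
    return J

L = np.array([[1.2, 0.0, 0.0], [0.4, 0.8, 0.0], [-0.3, 0.5, 1.5]])
b = np.array([0.1, -0.2, 0.3])
z = np.array([0.3, -1.0, 0.7])

J = numerical_jacobian(lambda v: layer(v, L, b), z)
log_det_numeric = np.log(abs(np.linalg.det(J)))
log_det_closed = layer_log_det(z, L)
print(log_det_numeric, log_det_closed)
```

The closed form costs $O(k)$ per sample, whereas a general determinant would cost $O(k^3)$, which is the computational benefit of the triangular structure.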

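Each element-wise function $f$ (a component of $F_{k,i}$) introduces nonlinearity to the transport map by taking a sandwich form on each element:

$$f = \Phi^{-1} \circ g \circ \Phi.$$

$\Phi^{-1}$ is the base transformation, and $g$ is a mapping from $[0,1]$ to $[0,1]$. More specifically, we parameterize $g$ to be the weighted average of a number of Beta distribution CDFs, i.e.,

$$g(u) = \sum_{s=1}^{S} w_s \, B(u; \alpha_s, \beta_s).$$

$B(\cdot\,; \alpha_s, \beta_s)$ is the CDF of the Beta distribution with shape parameters $(\alpha_s, \beta_s)$, which are pre-defined. $w = (w_1, \dots, w_S)$ is a vector of nonnegative weights that sum to 1. The pairs $(\alpha_s, \beta_s)$ can be shared across all $g$, but the weights $w$ are unique for each $g$.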
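A minimal sketch of the sandwich transform with a Beta-CDF average is given below. The shape parameters in `ALPHAS` and `BETAS` and the weight vector are hypothetical choices, not the values used in the paper. The derivative follows from the chain rule, $f'(z) = g'(\Phi(z))\,\phi(z)/\phi(f(z))$, and is checked against a finite difference.

```python
import numpy as np
from scipy.stats import beta, norm

# Assumed pre-defined Beta shape parameters (illustrative values only).
ALPHAS = np.array([1.0, 2.0, 2.0, 1.0])
BETAS = np.array([1.0, 2.0, 1.0, 2.0])

def g(u, w):
    # Weighted average of Beta CDFs: a monotone map from [0,1] to [0,1].
    return beta.cdf(u[..., None], ALPHAS, BETAS) @ w

def g_prime(u, w):
    # Derivative of g: the same weighted average of Beta densities.
    return beta.pdf(u[..., None], ALPHAS, BETAS) @ w

def f(z, w):
    # Sandwich form: Gaussian CDF, then g, then inverse Gaussian CDF.
    return norm.ppf(g(norm.cdf(z), w))

def log_f_prime(z, w):
    # Chain rule: log g'(Phi(z)) + log phi(z) - log phi(f(z)).
    u = norm.cdf(z)
    return np.log(g_prime(u, w)) + norm.logpdf(z) - norm.logpdf(f(z, w))

w = np.array([0.4, 0.3, 0.2, 0.1])
z = np.linspace(-2.0, 2.0, 9)

eps = 1e-5
finite_diff = (f(z + eps, w) - f(z - eps, w)) / (2 * eps)
identity_w = np.array([1.0, 0.0, 0.0, 0.0])  # Beta(1,1) CDF is the identity
```

With all weight on the Beta(1, 1) component, `g` is the identity on $[0,1]$ and `f` reduces to the identity on $\mathbb{R}$, which gives a natural starting point for optimization.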

In the $k$-th sub-model, the parameters to be determined are the matrices $L_{k,i}$, the shifts $b_{k,i}$, and the Beta weights $w$ in each transport map $T_{k,i}$. Since all the steps are parameterized, the optimization problem can be solved using the BFGS algorithm. A full expression of the objective function can be found in Appendix A. The derivatives of the objective function with respect to the parameters are complex, since each parameter enters through multiple transport maps; in practice, those derivatives are calculated numerically. The hyperparameters $I$ and $S$ control the depth of the neural architecture and the flexibility of the transport maps, respectively. Larger values allow a more precise approximation of any continuous target distribution.
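To make the optimization step concrete, the following sketch fits a one-dimensional sub-model with a single layer after the base map, using `scipy.optimize.minimize` with `method="BFGS"` and its default finite-difference gradients. Several ingredients are assumptions made for illustration: the toy Gaussian target, the Beta shape parameters, the softmax reparameterization that keeps the weights on the simplex, and the exponential reparameterization that keeps the diagonal entry positive.

```python
import numpy as np
from scipy.optimize import minimize
from scipy.special import softmax
from scipy.stats import beta, norm, qmc

ALPHAS = np.array([1.0, 2.0, 2.0, 1.0])  # assumed Beta shapes (S = 4)
BETAS = np.array([1.0, 2.0, 1.0, 2.0])

# RQMC training points and precomputed Beta CDF/PDF values, shape (N, S).
u = qmc.Sobol(d=1, scramble=True, seed=0).random_base2(m=8).ravel()
cdfs = beta.cdf(u[:, None], ALPHAS, BETAS)
pdfs = beta.pdf(u[:, None], ALPHAS, BETAS)

def log_target(x):
    # Toy target N(1, 0.5^2); an assumption, not the paper's target.
    return norm.logpdf(x, loc=1.0, scale=0.5)

def loss(theta):
    # theta = (log l, b, weight logits) for one layer after the base map.
    log_l, b, w = theta[0], theta[1], softmax(theta[2:])
    g = np.clip(cdfs @ w, 1e-12, 1 - 1e-12)  # guard against ppf(0) or ppf(1)
    y = norm.ppf(g)                          # equals f(Phi^{-1}(u))
    x = np.exp(log_l) * y + b
    # log|det| of the full map u -> x: log g'(u) - log phi(y) + log l.
    log_det = np.log(pdfs @ w) - norm.logpdf(y) + log_l
    return -np.mean(log_det + log_target(x))

theta0 = np.zeros(2 + ALPHAS.size)
initial_loss = loss(theta0)
result = minimize(loss, theta0, method="BFGS")
print(initial_loss, result.fun)
```

The unconstrained reparameterization is one simple way to use an unconstrained optimizer such as BFGS while respecting the positivity and simplex constraints; other choices are possible.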

2.3. Hidden Variables

From the parameterization above, we can observe that as the sub-model index $k$ goes from 1 to $d$, the dimensions of $L$ and $b$ increase by one at a time. Since the autoregressive feature is preserved along the flows, the training of the $k$-th sub-model depends only on the first $k$ dimensions. When training $T_k$ to approximate $P_k$, we can take advantage of the fact that $T_{k-1}$ already yields an approximation of the marginal distribution $P_{k-1}$. At the beginning of the training, the model borrows the already optimized parameters $\theta_{k-1}$ as the initial value of $\theta_k$. At each transport map $T_{k,i}$, we fill in the upper-left $(k-1) \times (k-1)$ block of $L_{k,i}$ with $L_{k-1,i}$ and the first $k-1$ elements of $b_{k,i}$ with $b_{k-1,i}$. The weights in the Beta averaging can be carried over directly. The $k$-th sub-model starts from this initialization and optimizes $\theta_k$, then passes it to the next sub-model as the starting value again. By including $\theta_k$ as the hidden variable passed through the flow, we can speed up the training process and stabilize the results.
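The warm start can be sketched as a block embedding of the previous sub-model's matrix and shift into the next, larger ones. How the newly added row and shift entry are initialized is not specified here; the sketch assumes an identity row (unit diagonal, zero off-diagonals) and a zero shift. For brevity it uses only the affine part of a layer and omits the element-wise transform.

```python
import numpy as np

def extend_layer(L_prev, b_prev):
    # Embed the (k-1)-dimensional parameters into k-dimensional ones.
    k = L_prev.shape[0] + 1
    L_new = np.eye(k)                 # assumed initialization of the new row
    L_new[:k - 1, :k - 1] = L_prev    # upper-left block carried over
    b_new = np.zeros(k)               # assumed zero shift for the new entry
    b_new[:k - 1] = b_prev
    return L_new, b_new

L2 = np.array([[1.3, 0.0], [0.4, 0.7]])
b2 = np.array([0.2, -0.1])
L3, b3 = extend_layer(L2, b2)

z = np.array([0.5, -1.0, 2.0])
old_output = L2 @ z[:2] + b2
new_output = L3 @ z + b3
print(old_output, new_output[:2])
```

Because the matrix is lower-triangular, the first two outputs of the extended layer do not depend on the new coordinate, so the fit already obtained for the lower-dimensional marginal is preserved at initialization.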

3. Simulation

Building on the methodology introduced in the previous section, we conduct numerical experiments to evaluate the practical performance of the proposed model. Specifically, we consider the two-dimensional banana-shaped normal distribution, a canonical example of a complex distribution with strong nonlinear dependencies, to assess the model’s effectiveness in sampling and integral estimation. Training datasets are generated using RQMC methods and used to fit the model with fixed hyperparameters $I$ and $S$ and a maximum of 150 iterations on each dimension. For comparison purposes, we trained another neural autoregressive flow on a training set generated by the vanilla MC method with the same set of hyperparameters. Both training sets have 1024 samples. Since the banana function is a two-dimensional distribution ($d = 2$), there are two sub-models in each method. As pointed out in the previous section, the first sub-model minimizes the KL divergence between the trained distribution and the marginal banana distribution on the first dimension. The second sub-model utilizes the result from the first dimension and approximates the joint target distribution on both dimensions.

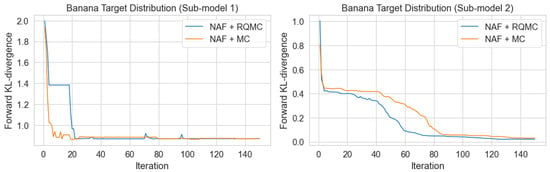

Figure 2 illustrates the progression of the forward KL divergence during training for both models as the number of iterations increases. In the first sub-model, where the marginal target distribution has a relatively simple structure, both methods exhibit rapid convergence. Although the NAF + MC method converges slightly faster than NAF + RQMC, both reach convergence within 20 iterations. This fast convergence can be attributed to the simplicity of the target distribution on the low dimension. In contrast, the second sub-model incorporates dependence structures into the training process, resulting in a slower convergence rate. Under this more complex joint distribution, the NAF + RQMC method converges more quickly than NAF + MC. While both approaches stabilize after approximately 100 iterations and ultimately attain similar final values, NAF + RQMC demonstrates greater efficiency in complex scenarios, achieving comparable performance with fewer computational resources.

Figure 2. The trend in the loss function as the number of iterations increases.

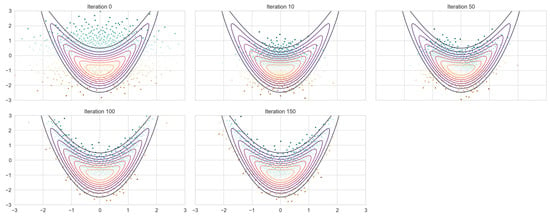

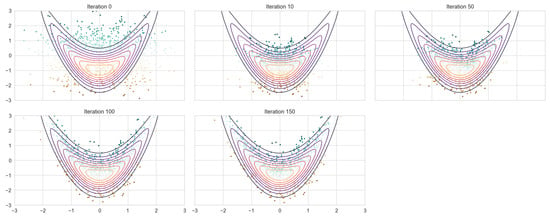

The trained model is then applied to independent testing sets generated via RQMC and MC, according to each method's design. The resulting transformed samples approximate the banana-shaped distribution, as illustrated in Figure 3. As the number of iterations increases, the shape of the transformed samples gradually shifts from a circular form to the characteristic banana shape. In parallel, the empirical mean curve converges toward the true trajectory of the banana distribution. When compared to the neural autoregressive flow trained on Monte Carlo samples (NAF + MC) with the same hyperparameters, both approaches capture the target distribution well; however, the RQMC-based model (NAF + RQMC) exhibits superior performance in capturing the tail regions (Figure 4). Its generated samples spread more evenly around the shape of the target distribution instead of gathering in small clusters.

Figure 3. NAF + RQMC fitting on 1024 samples.

Figure 4. NAF + MC fitting on 1024 samples.

We further evaluate the model’s utility in integral estimation by comparing it with NAF + MC and a standard importance sampling method. Specifically, we estimate three second-order moments of the target distribution. Each experiment is repeated 10 times, and the mean squared errors (MSEs) of the estimates are reported in Table 1. The proposed method achieves notable improvements in MSE over both baselines, demonstrating enhanced accuracy and stability in high-variance regions of the target distribution.
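The evaluation protocol (repeat the estimate several times and report the MSE against the true value) can be sketched as follows. This is not a reproduction of Table 1: instead of a trained flow it uses an exact transport map for a commonly used "twisted Gaussian" form of the banana distribution, and the parameters `SIGMA` and `C` as well as the three moments chosen are assumptions made for illustration.

```python
import numpy as np
from scipy.stats import norm, qmc

SIGMA, C = 1.0, 0.5  # hypothetical banana parameters

def banana_map(u):
    # Exact transport map from [0,1]^2 to the assumed banana distribution:
    # x1 = SIGMA * z1, x2 = z2 + C * (x1^2 - SIGMA^2), z = Phi^{-1}(u).
    z = norm.ppf(u)
    x1 = SIGMA * z[:, 0]
    x2 = z[:, 1] + C * (x1 ** 2 - SIGMA ** 2)
    return np.column_stack([x1, x2])

def moments(x):
    # Sample estimates of E[x1^2], E[x2^2], and E[x1 x2].
    return np.array([np.mean(x[:, 0] ** 2), np.mean(x[:, 1] ** 2),
                     np.mean(x[:, 0] * x[:, 1])])

# True values for this map: Var(x1^2) = 2 SIGMA^4 gives the second entry.
TRUE = np.array([SIGMA ** 2, 1.0 + 2.0 * C ** 2 * SIGMA ** 4, 0.0])

def mse(estimates):
    # Mean squared error over replications, one value per moment.
    return np.mean((estimates - TRUE) ** 2, axis=0)

reps, m = 10, 10  # 10 replications of 2^10 = 1024 points each
rng = np.random.default_rng(0)
mc = np.array([moments(banana_map(rng.random((2 ** m, 2))))
               for _ in range(reps)])
rq = np.array([moments(banana_map(
    qmc.Sobol(d=2, scramble=True, seed=s).random_base2(m=m)))
    for s in range(reps)])

print("MC   MSE:", mse(mc))
print("RQMC MSE:", mse(rq))
```

Replacing `banana_map` with a trained transport map would give the corresponding comparison for a learned flow.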

Table 1. Mean squared error (MSE) comparison for different integrals and methods.

5. Conclusions and Discussion

This paper proposed a transport quasi-Monte Carlo method built on a neural autoregressive flow architecture. The key idea of the model is to approximate the marginal density of the target distribution in the first few dimensions. As the model progresses, one more dimension is added at each step. The network construction of the transport map using normalizing flows within each sub-model and the flexible beta averaging bijection grants the model the ability to approximate any continuous target distribution. The unique feature of the lower triangular matrix introduces the dependence relationship among the elements and significantly reduces the computation burden for the optimization process. The utilization of parameters as hidden variables passing along the model further increases the efficacy and accuracy of the model. The choice of RQMC to generate initial samples from the hypercube ensures the sample coverage on the probability space, reducing the sample size required in each training and providing adequate samples at the tails of the target distribution. This is especially helpful when estimating integrals of high degrees or complex continuous distributions. In the simulation part, we compared the neural autoregressive flow applied to RQMC with the one applied to traditional Monte Carlo.

From the fitting results, it can be seen that the neural autoregressive flow pushes the samples to the designated shapes, while NAF + RQMC-generated samples show better performance at the edge of the target distribution compared to NAF + MC. In the high-dimension optimization process, NAF + RQMC exhibits faster convergence. In terms of integral estimation, we calculated the first and second moments for both methods and added the estimates given by the standard importance sampling method. The proposed method improves the estimation of all moments in all dimensions. Compared with vanilla importance sampling in particular, the MSE drops sharply when the neural autoregressive flow architecture is used. In the A-share stock return prediction experiment, the model predicted the daily return and cumulative semiannual return for the stocks with high accuracy. The individual stock predictions also reflect the overall trend in the whole market. More importantly, the model captures the variance structures well even when the market fluctuates. All prediction errors are kept within a low range, and the model performs well in medium-term predictions for the market.

Several directions for future work and potential improvements remain. First, when dealing with a high-dimensional dataset, the model training becomes unstable as the sub-model index reaches a high dimension. The optimization process may fail due to excessive training in the first few dimensions. A possible solution is to use an adaptive iteration schedule: by controlling the number of iterations and changing the learning rate, the training can be made more balanced across dimensions. Second, quasi-Monte Carlo usually has good convergence rates, so it would be interesting to explore the rate of parameter convergence of the neural autoregressive flow as the sample size grows. Last but not least, the neural autoregressive flow provides a powerful architecture for the model, and other constructions of the transport maps within each sub-model are worth exploring.

Author Contributions

Conceptualization, Y.W.; Methodology, Y.W.; Software, Y.W.; Validation, Y.W. and W.X.; Formal analysis, Y.W.; Investigation, Y.W.; Data curation, Y.W.; Writing—original draft, Y.W.; Writing—review & editing, Y.W. and W.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

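Here, we show the full expression of the loss function at the $k$-th sub-model:

$$\mathcal{L}_k = -\frac{1}{N}\sum_{n=1}^{N}\left[\sum_{i=0}^{I}\log\left|\det J_{k,i}\big(z_{k,i-1}^{(n)}\big)\right| + \log p_k\big(z_{k,I}^{(n)}\big)\right], \qquad z_{k,-1}^{(n)} = u_{1:k}^{(n)}, \quad z_{k,i}^{(n)} = T_{k,i}\big(z_{k,i-1}^{(n)}\big).$$

The transport maps involved are

$$T_{k,0}(u) = \left(\Phi^{-1}(u_1), \dots, \Phi^{-1}(u_k)\right)^{\top}, \qquad T_{k,i}(z) = L_{k,i} F_{k,i}(z) + b_{k,i}, \quad i = 1, \dots, I.$$

Since $L_{k,i}$ are lower-triangular matrices and $F_{k,i}$ are element-wise transforms, the Jacobians are lower-triangular as well:

$$J_{k,0}(u) = \operatorname{diag}\!\left(\frac{1}{\phi(\Phi^{-1}(u_1))}, \dots, \frac{1}{\phi(\Phi^{-1}(u_k))}\right), \qquad J_{k,i}(z) = L_{k,i}\, \operatorname{diag}\!\left(f'_{k,i,1}(z_1), \dots, f'_{k,i,k}(z_k)\right),$$

where

$$f'_{k,i,j}(z) = \frac{g'_{k,i,j}(\Phi(z))\, \phi(z)}{\phi\big(f_{k,i,j}(z)\big)}, \qquad g'_{k,i,j}(u) = \sum_{s=1}^{S} w_{k,i,j,s}\, B'(u; \alpha_s, \beta_s).$$

Here $\phi$ and $\Phi$ are the standard Gaussian density and CDF, $f_{k,i,j} = \Phi^{-1} \circ g_{k,i,j} \circ \Phi$ is the $j$-th element-wise function of $F_{k,i}$, $g_{k,i,j}$ is its weighted average of Beta CDFs with weights $w_{k,i,j,s}$, and $B'(\cdot\,; \alpha_s, \beta_s)$ is the Beta density.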

References

1. Caflisch, R.E. Monte Carlo and quasi-Monte Carlo methods. Acta Numer. 1998, 7, 1–49.

2. Kalos, M.H.; Whitlock, P.A. Monte Carlo Methods; John Wiley & Sons: Hoboken, NJ, USA, 2009.

3. Glasserman, P. Monte Carlo Methods in Financial Engineering; Springer: New York, NY, USA, 2004; Volume 53.

4. Boyle, P.; Broadie, M.; Glasserman, P. Monte Carlo methods for security pricing. J. Econ. Dyn. Control 1997, 21, 1267–1321.

5. Niederreiter, H. Random Number Generation and Quasi-Monte Carlo Methods; SIAM: Philadelphia, PA, USA, 1992.

6. Dick, J.; Kuo, F.Y.; Sloan, I.H. High-dimensional integration: The quasi-Monte Carlo way. Acta Numer. 2013, 22, 133–288.

7. Papageorgiou, A.; Paskov, S. Deterministic simulation for risk management. J. Portf. Manag. 1999, 25, 122–127.

8. Leobacher, G.; Pillichshammer, F. Introduction to Quasi-Monte Carlo Integration and Applications; Springer: Cham, Switzerland, 2014.

9. Owen, A.B. Randomly permuted (t, m, s)-nets and (t, s)-sequences. In Monte Carlo and Quasi-Monte Carlo Methods in Scientific Computing: Proceedings of a Conference at the University of Nevada, Las Vegas, NV, USA, 23–25 June 1994; Springer: New York, NY, USA, 1995; pp. 299–317.

10. Lemieux, C. Using quasi–Monte Carlo in practice. In Monte Carlo and Quasi-Monte Carlo Sampling; Springer: New York, NY, USA, 2008; pp. 1–46.

11. Wang, X.; Han, Y.; Leung, V.C.; Niyato, D.; Yan, X.; Chen, X. Convergence of edge computing and deep learning: A comprehensive survey. IEEE Commun. Surv. Tutor. 2020, 22, 869–904.

12. Tabak, E.G.; Vanden-Eijnden, E. Density estimation by dual ascent of the log-likelihood. Commun. Math. Sci. 2010, 8, 217–233.

13. Tabak, E.G.; Turner, C.V. A family of nonparametric density estimation algorithms. Commun. Pure Appl. Math. 2013, 66, 145–164.

14. Papamakarios, G.; Nalisnick, E.; Rezende, D.J.; Mohamed, S.; Lakshminarayanan, B. Normalizing flows for probabilistic modeling and inference. J. Mach. Learn. Res. 2021, 22, 1–64.

15. Rezende, D.; Mohamed, S. Variational inference with normalizing flows. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 1530–1538.

16. Agnelli, J.P.; Cadeiras, M.; Tabak, E.G.; Turner, C.V.; Vanden-Eijnden, E. Clustering and classification through normalizing flows in feature space. Multiscale Model. Simul. 2010, 8, 1784–1802.

17. Rippel, O.; Adams, R.P. High-dimensional probability estimation with deep density models. arXiv 2013, arXiv:1302.5125.

18. Dinh, L.; Krueger, D.; Bengio, Y. NICE: Non-linear independent components estimation. arXiv 2014, arXiv:1410.8516.

19. Kingma, D.P.; Salimans, T.; Jozefowicz, R.; Chen, X.; Sutskever, I.; Welling, M. Improved variational inference with inverse autoregressive flow. In Advances in Neural Information Processing Systems 29 (NIPS 2016); NeurIPS: La Jolla, CA, USA, 2016; Volume 29.

20. Germain, M.; Gregor, K.; Murray, I.; Larochelle, H. MADE: Masked autoencoder for distribution estimation. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 881–889.

21. Huang, C.W.; Krueger, D.; Lacoste, A.; Courville, A. Neural autoregressive flows. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 2078–2087.

22. Steinley, D.; Hoffman, M.; Brusco, M.J.; Sher, K.J. A method for making inferences in network analysis: Comment on Forbes, Wright, Markon, and Krueger. J. Abnorm. Psychol. 2017, 126, 1000–1010.

23. De Cao, N.; Aziz, W.; Titov, I. Block neural autoregressive flow. In Proceedings of the Uncertainty in Artificial Intelligence, Virtual, 3–6 August 2020; pp. 1263–1273.

24. Liu, S. Transport Quasi-Monte Carlo. arXiv 2024, arXiv:2412.16416.

25. Markowitz, H. Modern portfolio theory. J. Financ. 1952, 7, 77–91.

26. Jin, Y.; Jorion, P. Firm value and hedging: Evidence from US oil and gas producers. J. Financ. 2006, 61, 893–919.

27. Cont, R. Empirical properties of asset returns: Stylized facts and statistical issues. Quant. Financ. 2001, 1, 223–236.

28. Sharpe, W.F. Capital asset prices: A theory of market equilibrium under conditions of risk. J. Financ. 1964, 19, 425–442.

29. Black, F.; Scholes, M. The pricing of options and corporate liabilities. J. Political Econ. 1973, 81, 637–654.

30. Fama, E.F. The behavior of stock-market prices. J. Bus. 1965, 38, 34–105.

31. Elton, E.J.; Gruber, M.J.; Blake, C.R. Common Factors in Mutual Fund Returns; New York University: New York, NY, USA, 1997.

32. Mandelbrot, B. The variation of certain speculative prices. J. Bus. 1963, 36, 394–419.

33. Praetz, P.D. The distribution of share price changes. J. Bus. 1972, 45, 49–55.

34. Lange, K.L.; Little, R.J.; Taylor, J.M. Robust statistical modeling using the t distribution. J. Am. Stat. Assoc. 1989, 84, 881–896.

35. Azzalini, A.; Capitanio, A. Statistical applications of the multivariate skew normal distribution. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 1999, 61, 579–602.

36. Azzalini, A.; Capitanio, A. Distributions generated by perturbation of symmetry with emphasis on a multivariate skew t-distribution. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2003, 65, 367–389.

37. Nelsen, R.B. An Introduction to Copulas; Springer: New York, NY, USA, 2006.

38. McNeil, A.J.; Frey, R.; Embrechts, P. Quantitative Risk Management: Concepts, Techniques and Tools; Princeton University Press: Princeton, NJ, USA, 2015.

39. Bollerslev, T. Generalized autoregressive conditional heteroskedasticity. J. Econom. 1986, 31, 307–327.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).