1. Introduction

A defining characteristic of probability distributions is the presence of inherent inequality; some outcomes occur with higher probability than others. This asymmetry manifests in the distribution's shape and is typically interpreted using global measures like the center and spread. However, a more refined understanding requires both quantification and localization of this inequality. Quantification, which has been the primary focus of our previous work [1,2,3,4], provides a rigorous, scale-free means of measuring how uniformly probability is distributed, either across the entire space or within specific measurable subsets, based solely on their inherent uncertainty. This enables comparisons of inequality both within a single distribution and across different distributions. The present paper, by contrast, centers on localization: the systematic identification of where in the probability space inequality is most pronounced. Without localization, global measures risk masking critical disparities within the distribution. By applying the Hahn decomposition theorem to the degree of uniformity measure, we introduce a recursive, principled framework that decomposes the distribution into disjoint regions of varying inequality. This shift in focus from quantifying how much inequality exists to revealing where it resides is essential for applications that demand both interpretability and targeted insights, such as diagnostics in statistical modeling, fairness analysis, or information-driven decision-making.

To situate our contribution within the broader literature, we briefly review prior entropy-based approaches to diversity and complexity. Entropy-based measures have long served as foundational tools for quantifying diversity, inequality, and complexity across disciplines. Shannon entropy captures the uncertainty of a distribution, while Hill numbers, widely used in ecology and information theory, translate entropy into effective counts of equally likely outcomes. These Hill numbers are mathematically linked to Rényi entropies: the Hill number of order q is the exponential of the Rényi entropy of the same order, offering a unified framework for interpreting diversity and concentration.

Building on this lineage, LMC-type statistical complexity measures [5] combine entropy with disequilibrium to quantify non-uniformity in probability densities. Recent refinements [6] use differential-escort transformations to ensure monotonicity of these complexity metrics, particularly in systems governed by power-law or non-extensive statistics. However, these approaches remain scalar in nature, offering global summaries of complexity without spatial or hierarchical localization.

In contrast, our contribution introduces a recursive Hahn decomposition of an entropy-derived signed measure, enabling structural localization of inequality within the probability space. This method partitions the space into disjoint subsets with monotonic ordering of uniformity, explicitly identifies null sets, and constructs a ternary tree that reveals the geometry of inequality across scales. By shifting from scalar quantification to recursive decomposition, we offer a new lens for analyzing distributions where localized disparities matter.

1.1. Ideas So Far

Uniform distributions, by their nature, exhibit a mathematical diversity that aligns directly with the support of the distribution. For discrete uniform distributions supported on $\{1, \dots, K\}$, the diversity ${}^{1}D$, defined as the exponential of Shannon entropy and corresponding to the Hill number of order $q = 1$, is precisely K. Similarly, for continuous uniform distributions over the interval $(a, b)$, the diversity ${}^{1}D$ is equal to $b - a$. Any departure from uniformity results in a reduced diversity when compared to a uniform distribution with the same support.
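As a small illustration of the statement above, the following sketch computes the exponential of Shannon entropy for a discrete distribution. The function name `shannon_diversity` and the two example distributions are hypothetical and chosen only for illustration.

```python
import math

def shannon_diversity(probs):
    """Return exp(H), the Hill number of order 1, for a discrete distribution."""
    # Zero-probability outcomes contribute nothing to the entropy.
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return math.exp(entropy)

uniform = [0.25] * 4            # uniform on K = 4 outcomes
skewed = [0.7, 0.1, 0.1, 0.1]   # same support, unequal masses

print(shannon_diversity(uniform))  # approximately K = 4
print(shannon_diversity(skewed))   # strictly smaller than 4
```

The uniform case recovers the support size, while the skewed distribution on the same support yields a smaller diversity.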

The concept of diversity ${}^{1}D$ [7,8,9,10] carries an intuitive interpretation: one can conceptually transform the original non-uniform distribution into a Shannon Equivalent Equi-probable (SEE) uniform distribution, where the size of the support matches ${}^{1}D$. Although this equivalence is abstract, it proves valuable for visualizing the notion of diversity.

Diversity can also be analyzed in the context of sub-components of a distribution, as discussed in [1,2]. Specifically, a subset P with cumulative probability $c_P$ and Shannon diversity $D_P = e^{H_P}$, where $H_P$ is the entropy of the part P, can be evaluated using the ratio $D_P/c_P$ to assess its degree of uniformity.
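The ratio for a part can be sketched for a discrete distribution as follows. This is a minimal illustration, assuming that the entropy of a part is taken with respect to the probabilities renormalized by the cumulative probability of that part; the helper name `degree_of_uniformity` and the example masses are hypothetical.

```python
import math

def degree_of_uniformity(probs, part):
    """Return D_P / c_P for the indices in `part` (hypothetical helper)."""
    c_p = sum(probs[i] for i in part)                      # cumulative probability of the part
    cond = [probs[i] / c_p for i in part if probs[i] > 0]  # conditional distribution on the part
    d_p = math.exp(-sum(q * math.log(q) for q in cond))    # Shannon diversity of the part
    return d_p / c_p

probs = [0.4, 0.4, 0.1, 0.1]
head = degree_of_uniformity(probs, [0, 1])  # D_P = 2, c_P = 0.8
tail = degree_of_uniformity(probs, [2, 3])  # D_P = 2, c_P = 0.2
print(head, tail, tail / head)
```

Both parts are internally uniform over two outcomes, but the tail carries a quarter of the mass of the head, so its ratio is four times larger.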

The term Shannon Equivalent is used to emphasize that the original part, or the entire distribution, shares the same conditional Shannon entropy as its SEE counterpart. We have selected the exponential of Shannon entropy, also known as mathematical diversity ${}^{1}D$ (or $D_P$ if it is a part P and not the whole), to quantify the degree of inequality present in distributions, as outlined in [1,2]. This choice is primarily motivated by the fact that, among the Hill numbers ${}^{q}D$, where q is a parameter governing various diversity measures, the value corresponding to $q = 1$ strikes an equal balance between the richness and evenness of the distribution. Furthermore, Shannon entropy is widely recognized as a fundamental measure of probabilistic, or information-theoretic, uncertainty in a distribution.

Rather than directly quantifying the inequality of the original part or whole, which may not exhibit uniformity, the abstract SEE equivalent enables us to assess and compare the degree of uniformity on a common ground. This is because the redrawn SEE equivalents are (a) uniform distributions and (b) possess the same entropic uncertainty as the original part or whole from which they were derived. The uniformity inherent in the distribution of SEE parts (albeit abstract) makes it significantly easier to compare and quantify their degree of uniformity.

Much of our previous work [1,2,3,4] was focused on the quantification of inequality in probability distributions. This was an important first step, where, given a probability distribution or a part (or parts from different distributions), we aimed to compute and compare the degree of inequality of the given part(s) by computing $D_P/c_P$. We use the terms degree of uniformity and degree of inequality interchangeably in this paper since we have shown these two concepts to be equivalent in [1,2,3,4].

To quickly reiterate the idea, if we assume that $P_1$ and $P_2$ are two parts with $\frac{D_{P_1}/c_{P_1}}{D_{P_2}/c_{P_2}} = R$, say, then this means that, with respect to the entropy-derived measure used in this paper (the degree of uniformity defined as $D_P/c_P$), the part $P_1$ is R times more uniformly distributed than $P_2$. We emphasize that this interpretation is specific to the Shannon entropy framework adopted here. Other complementary measures of uniformity exist, such as LMC-type statistical complexity metrics [5,6], which combine entropy with disequilibrium to quantify non-uniformity from a different perspective. Our method focuses on structural localization of inequality using recursive Hahn decomposition, whereas LMC complexity offers a scalar summary of global non-uniformity. To bring in the idea of inequality here, we also note that, equivalently, this means that $P_2$ is R times less uniformly distributed compared to $P_1$. Hence, $P_2$ is R times more concentrated than $P_1$ as well. This is also clear by noting that $\frac{c_{P_2}/D_{P_2}}{c_{P_1}/D_{P_1}} = R$. In other words, a larger ratio of $c_P/D_P$ means a higher concentration of probability (or frequency) over the part P.

Given this explanation, we use the terms degree of uniformity and degree of inequality interchangeably for the ratio $D_P/c_P$ of a given part P, with the caveat that the two concepts are inverses of each other, i.e., a higher degree of uniformity means a lower degree of inequality and vice versa. This makes intuitive sense, as more uniformity for a part P means that the mass is more spread out in its equivalent equiprobable form. Hence, the opposite concept, inequality, should mean that the part P is more concentrated rather than spread out in its equivalent equiprobable form.

1.2. Potential Applications

The computation and comparison of inequality or uniformity within parts of a distribution have significant applications across various fields. In structural engineering, analyzing stress or strain distribution along beams or surfaces is crucial to identify regions prone to failure or excessive loading, ensuring structural safety and efficiency. In economics, measuring income inequality across different regions or demographic groups helps policymakers design equitable taxation and social welfare programs. In environmental science, the study of pollutant concentration distributions across ecosystems aids in identifying hotspots of contamination and planning remediation efforts. Similarly, in health care, the uniformity of drug distribution in the bloodstream or across a target organ can influence the effectiveness of treatment, such as chemotherapy or targeted drug delivery. In manufacturing, uniformity in thickness or material properties in products like films or coatings ensures quality and durability, while inequality could indicate defects or inefficiencies. In data communication, assessing the distribution of traffic in networks helps optimize load balancing and prevent bottlenecks. In physics, comparing energy or particle density distributions within systems can reveal insights into underlying processes, such as in plasma physics or astrophysics. In education, analyzing the distribution of test scores across schools or regions can highlight disparities in educational access or effectiveness. Across all these domains, understanding and comparing inequality or uniformity of distributions is integral to decision-making and optimization.

Entropy-based techniques have previously been applied to epidemiological modeling and mechanical stress analysis, often through scalar metrics such as Shannon entropy or LMC-type complexity measures [5,6]. Our recursive decomposition offers a structural alternative that localizes inequality and reveals null regions, potentially enhancing interpretability in these domains.

1.3. New in This Paper

Given that we can now precisely measure the degree of inequality of a probability distribution or its parts using the ratio $D_P/c_P$ for the part P, and given that the degree of uniformity of a part is the weighted geometric mean of the degrees of uniformity of its sub-parts, as seen in Theorem 2, we pondered the following two questions:

1. Is there a way to systematically locate inequality in a given distribution, i.e., divide a given probability distribution into subsets with monotonically decreasing degrees of uniformity (or equivalently increasing degrees of inequality)?

2. Can we do this in a recursive way and maintain the nested ordering of degrees of inequality of parts? In other words, measurable subsets $P_1' \subseteq P_1$ and $P_2' \subseteq P_2$ of two different measurable sets $P_1$ and $P_2$ with the ordering $D_{P_1}/c_{P_1} \geq D_{P_2}/c_{P_2}$ should also follow the same ordering of degree of inequality, i.e., $D_{P_1'}/c_{P_1'} \geq D_{P_2'}/c_{P_2'}$ (here $D_P/c_P$ is the degree of uniformity of the part P).

We emphasize the importance of preserving the ordering of inequality when subdividing a distribution. While one might use arbitrary cutoff points, such as visual cues from probability values, to define subsets, this does not guarantee monotonicity in the degree of inequality. In contrast, our method ensures that the monotonicity of the degree of inequality is preserved, i.e., subsets of $P_1$ and $P_2$ with the ordering $D_{P_1}/c_{P_1} \geq D_{P_2}/c_{P_2}$ will automatically follow the same ordering of degree of inequality, as stated in the second question above.

Maintaining the monotonicity of the degree of inequality during subdivision is crucial for revealing the granularity of its localization. The method introduced in this paper enables a recursive partitioning of the probability space, where the cutoff points are determined by recursively employing the Hahn decomposition of the degree of uniformity measure. This approach ensures that each step refines the space into subsets with a well-defined and preserved ordering of inequality, derived from mathematical diversity, a concept rooted in entropy. The process continues until no further meaningful subdivisions arise, all while maintaining the hierarchy of inequality across levels. This allows for a coherent, scalable, and information-theoretically grounded localization of inequality.

The paper is organized as follows: In Section 2.1, we briefly introduce the idea of a general signed measure and its Hahn decomposition. For a detailed discussion on measure theory, we refer to [11]. In Section 2.2, we recall some of the important background material related to the idea of mathematical diversity that is relevant to this paper. In Section 3, we state and prove the main results of this paper related to the Hahn decomposition of the degree of uniformity measure. In Section 4, we show some applications of our method to specific examples. In Section 5, we conclude with some observations related to the main results in the paper.

2. Preliminaries

This section consolidates all foundational concepts required for the main results. We begin with signed measures and Hahn decomposition and proceed to diversity indices.

2.1. Signed Measures and Hahn Decomposition

A signed measure is an extension of the mathematical concept of measure, allowing values to be both positive and negative. Unlike a traditional measure, which assigns non-negative values to sets in a sigma-algebra, a signed measure can assign negative values, enabling it to model a broader range of phenomena. Key to understanding a signed measure are the notions of positive, negative, and null sets. A positive set is one where the signed measure assigns a non-negative value to every measurable subset, while a negative set has the signed measure assigning non-positive values to all measurable subsets. A null set, on the other hand, has a signed measure of zero for all its measurable subsets, making it distinct from a set of measure zero, which might still carry subsets with non-zero measures in the signed measure framework. The Hahn decomposition theorem formalizes the fundamental structure of a signed measure by asserting the existence of a partition of the space into two disjoint sets: one positive and one negative. This decomposition highlights the duality within a signed measure and provides a powerful tool for analyzing its properties.

In our context, we will apply the theory of Hahn decomposition to our degree of uniformity measure, which is a signed measure on a given probability space. As we will see later, we are able to apply the Hahn decomposition in a nested fashion in order to quantify and locate the concentration of inequality in different regions of a given probability space.

Next, we briefly define some key concepts to provide a clear foundation for introducing the Hahn decomposition.

Definition 1 (Signed Measure). A signed measure μ on a measurable space $(X, \Sigma)$ is a function $\mu: \Sigma \to [-\infty, \infty]$ such that:

$\mu(\emptyset) = 0$;

μ takes at most one of the values $-\infty$ and $+\infty$;

$\mu\left(\bigcup_{n} E_n\right) = \sum_{n} \mu(E_n)$ for every countable collection of pairwise disjoint sets $E_n \in \Sigma$.

Definition 2 (Positive (negative, null) Set). A set A is called a positive (negative, null) set with respect to a signed measure μ if for every measurable subset $E \subseteq A$, we have $\mu(E) \geq 0$ ($\mu(E) \leq 0$, $\mu(E) = 0$).

We note that in Definition 2 above, the word positive can be replaced with strictly positive when $\mu(E) > 0$, and negative can be replaced with strictly negative when $\mu(E) < 0$. We emphasize the difference between a positive set and a set that has a positive value for the signed measure. A positive set must satisfy the property that all of its measurable subsets also have positive or zero measure. However, the total measure of a set can be positive merely due to cancellation of positive and negative measure values of its disjoint parts, for example. Analogous statements are true for negative and null sets, which are, respectively, different from sets that have a measure value that is negative or zero.

Definition 3 (Hahn Decomposition). Given a signed measure μ defined on a measurable space $(X, \Sigma)$, a partition of X into two disjoint sets P and N such that:

P is a positive set, i.e., $\mu(E) \geq 0$ for all measurable subsets $E \subseteq P$,

N is a negative set, i.e., $\mu(E) \leq 0$ for all measurable subsets $E \subseteq N$,

is defined as the Hahn decomposition of the space X with respect to the signed measure μ.

We state a theorem from [11] below that guarantees the existence of the Hahn decomposition for a given signed measure on a measurable space.

Theorem 1. Given a signed measure μ on a measurable space $(X, \Sigma)$, there exists a Hahn decomposition $(P, N)$ which is unique up to null sets.

Theorem 1 means that given a Hahn decomposition $(P, N)$, we could take a null set from P and transfer it to N (or vice versa) to obtain another decomposition $(P', N')$. Hence, any two decompositions differ only by null sets. However, as we will see later while applying this theorem to the degree of uniformity measure on a given probability space, the null set can be explicitly identified. This allows us to partition the original probability space into a disjoint triple $(P, Z, N)$, where P is a strictly positive set, Z is a null set, and N is a strictly negative set. This removes the ambiguity related to the null set that is present in Theorem 1.
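The distinctions above can be made concrete on a finite space, where a signed measure is simply a sum of point weights. The sketch below is illustrative only: the weights and all function names are hypothetical, and the finite setting sidesteps the measure-theoretic subtleties of the general theorem.

```python
from itertools import combinations

# Hypothetical point weights defining a signed measure on a four-point space.
weights = {"a": 2.0, "b": -2.0, "c": 0.0, "d": 1.0}

def mu(subset):
    """Signed measure of a subset: the sum of its point weights."""
    return sum(weights[x] for x in subset)

def measurable_subsets(s):
    """Enumerate every subset of a finite set (all are measurable here)."""
    items = sorted(s)
    for r in range(len(items) + 1):
        yield from combinations(items, r)

def is_positive_set(s):
    return all(mu(t) >= 0 for t in measurable_subsets(s))

def is_null_set(s):
    return all(mu(t) == 0 for t in measurable_subsets(s))

def hahn_triple(space):
    """Split the space into strictly positive, null, and strictly negative points."""
    P = {x for x in space if weights[x] > 0}
    Z = {x for x in space if weights[x] == 0}
    N = {x for x in space if weights[x] < 0}
    return P, Z, N

# Positive total measure, yet not a positive set (the subset {"b"} is negative).
print(mu({"a", "b", "d"}), is_positive_set({"a", "b", "d"}))
# Zero total measure, yet not a null set (cancellation of "a" and "b").
print(mu({"a", "b"}), is_null_set({"a", "b"}))
print(hahn_triple(weights))
```

Placing the null point "c" in either P or N yields two valid Hahn decompositions; separating it as Z removes that ambiguity.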

In the next section, we introduce some background on the topic of mathematical diversity and the newly discovered degree of uniformity measure.

2.2. Shannon Diversity and Hill Numbers

Mathematical diversity (denoted as ${}^{1}D$ or just D) quantifies both the variety of categories within a distribution (richness) and how uniformly they are represented (evenness), as described in [7,8,9,10]. A probability distribution that is uneven can be converted into a Shannon Equivalent Equiprobable (SEE) distribution, which is uniform but reflects the same amount of informational uncertainty. For a discrete distribution where all categories have equal probabilities, diversity corresponds to the total count of categories. In continuous distributions, it is determined by the length of the interval on which the distribution is uniform, represented by the Lebesgue measure of the support. Any lack of uniformity reduces the computed diversity. For further details on mathematical diversity, refer to [7,12,13,14,15].

NOTE: We will summarize the key definitions and theorems on mathematical diversity from [1,2] that are pertinent to this study. In this paper, the subscript K is used to denote a discrete distribution, and separate subscripts denote a set of contiguous indices in a discrete subset and continuous distributions or intervals representing parts of these distributions. When a result applies to both discrete and continuous cases, we will specify this explicitly and omit subscripts. The notation I will be used for the full support of a distribution, discrete or continuous, as applicable.

Definition 4. (Shannon Diversity corresponding to $q = 1$ for Hill numbers) Consider a discrete random variable X with support $\{1, \dots, K\}$ (with $K = \infty$ allowed) and its probabilities $p_i$, or a continuous random variable X with support $(a, b)$ (with $a = -\infty$ and $b = \infty$ allowed) and its probability density $f(x)$. The diversity of the entire distribution ${}^{1}D$ is defined as the length of the support of an equivalent uniform distribution that yields the same value of Shannon entropy H.

Shannon entropy for discrete and continuous distributions is defined as below:

$$H = -\sum_{i=1}^{K} p_i \ln p_i, \qquad H = -\int_{a}^{b} f(x) \ln f(x)\, dx.$$

To avoid mathematical pathologies, we only consider probability distributions for which the entropy H is finite. It has been shown [7,8,9,10] that Definition 4 implies that the total diversity ${}^{1}D$ (for both continuous and discrete distributions) is given by:

$${}^{1}D = e^{H}.$$

We will focus exclusively on the case $q = 1$ for the Hill numbers and, hence, will omit the left superscript 1, referring to the diversity simply as D. The choice of $q = 1$ is made because it assigns equal weight to both richness and evenness.

For any subset $P \subseteq I$, we have already defined the partwise entropy $H_P$ and diversity $D_P$. Let $c_P$ denote the partwise mass. While we do not introduce a separate symbol, the ratio $D_P/c_P$ serves as a useful interpretive quantity; it reflects the effective diversity per unit mass within P, and will play a role in the analysis of uniformity and concentration.

We have spent considerable effort constructing and justifying the name degree of uniformity or degree of inequality for the ratio $D_P/c_P$ in [1,2,3]. We have also discussed the scale-free and self-contained nature of the ratio $D_P/c_P$ in [4]. We recall a few of the ideas from our previous papers here for the sake of continuity.

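Theorem 2. Let $\{p_i\}_{i=1}^{K}$ with $\sum_{i=1}^{K} p_i = 1$ be a discrete probability distribution, with $K = \infty$ permitted. Let $f(x)$ be a probability density function (pdf) on $(a, b)$, with $a = -\infty$ and $b = \infty$ permitted. Let $\{P_1, \dots, P_n\}$ be a disjoint partition of a part P. Then the following is true for both discrete and continuous distributions:

$$\frac{D_P}{c_P} = \prod_{j=1}^{n} \left(\frac{D_{P_j}}{c_{P_j}}\right)^{c_{P_j}/c_P}.$$

We next define the degree of uniformity of a part P.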

Definition 5. Let P denote a measurable subset of the support of a discrete or continuous probability distribution. The ratio $D_P/c_P$ is termed the degree of uniformity or degree of inequality of the part P.
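The weighted geometric mean property of Theorem 2 can be checked numerically in the discrete case. The sketch below assumes that the weights are the relative masses $c_{P_j}/c_P$ of the sub-parts; the distribution, the chosen part, and the helper name are hypothetical.

```python
import math

def degree_of_uniformity(probs, part):
    """Return (D_P / c_P, c_P) for the indices in `part` (hypothetical helper)."""
    c_p = sum(probs[i] for i in part)
    d_p = math.exp(-sum((probs[i] / c_p) * math.log(probs[i] / c_p) for i in part))
    return d_p / c_p, c_p

probs = [0.4, 0.3, 0.2, 0.1]
part = [0, 1, 2]          # the part P
pieces = [[0], [1, 2]]    # a disjoint partition of P

lhs, c_part = degree_of_uniformity(probs, part)

# Weighted geometric mean of the sub-part ratios, weights c_{P_j} / c_P (assumed).
rhs = 1.0
for piece in pieces:
    ratio, c_piece = degree_of_uniformity(probs, piece)
    rhs *= ratio ** (c_piece / c_part)

print(lhs, rhs)  # the two values agree up to rounding
```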

We define the degree of uniformity measure [4] as follows:

Definition 6. Let with be a discrete probability distribution, with permitted. Alternatively, let be a probability density function (pdf) on , with and permitted. Let P be a general measurable subset (not necessarily contiguous) of I. We define a new signed measure on I called the degree of uniformity or degree of inequality measure for such a measurable subset (irrespective of whether it is from a discrete or continuous distribution) by the following: Remark 1. The degree of uniformity measure in Definition 6 is with respect to the entire probability space I, where we have replaced the diversity with simply the letter D. This measure can also be defined for restrictions on proper subsets with D replaced by in Definition 6. The degree of uniformity measure for the restriction to the set P will then be denoted by . For the entire probability space I, we have that since and .
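For readers who wish to experiment, the following sketch evaluates a candidate form of the degree of uniformity measure in the discrete case. It is an assumption, not a restatement of Definition 6: the form $c_P \ln\big(D_P/(c_P D)\big)$ is used because it is additive over disjoint parts (by Theorem 2), vanishes on the whole space I, and is positive exactly when $D_P/c_P > D$. All function names are hypothetical.

```python
import math

def diversity_and_mass(probs, part):
    """Return (D_P, c_P) for the indices in `part`."""
    part = list(part)
    c_p = sum(probs[i] for i in part)
    d_p = math.exp(-sum((probs[i] / c_p) * math.log(probs[i] / c_p) for i in part))
    return d_p, c_p

def uniformity_measure(probs, part):
    """ASSUMED form of the measure: c_P * ln(D_P / (c_P * D))."""
    d, _ = diversity_and_mass(probs, range(len(probs)))  # diversity D of the whole space
    d_p, c_p = diversity_and_mass(probs, part)
    return c_p * math.log(d_p / (c_p * d))

probs = [0.4, 0.4, 0.1, 0.1]
whole = uniformity_measure(probs, [0, 1, 2, 3])
head = uniformity_measure(probs, [0, 1])   # less uniform than the whole
tail = uniformity_measure(probs, [2, 3])   # more uniform than the whole
print(whole, head, tail, head + tail)
```

Under this assumed form, the values on the two disjoint parts cancel, consistent with the measure of the whole space being zero.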

The degree of uniformity measure is a signed measure, as seen in Definition 6. Given a measurable subset P, the sign of its measure indicates whether P has a degree of uniformity that is less than, equal to, or greater than D, which is the degree of uniformity of the entire distribution. If we replace the D in the definition of the measure with the ratio $D_{P_2}/c_{P_2}$ of a second part, then the measure can be used to compare the degree of uniformity of the parts $P_1$ and $P_2$, which can actually be parts from two different distributions. We state a theorem below that shows this fact, in addition to other properties of the measure, which were proved in [4].

Theorem 3. Let with and with be two different discrete probability distributions corresponding to random variables and , respectively, with and permitted. Similarly let and be two different continuous probability distributions on and corresponding to random variables and respectively, with and allowed. Let and be measurable subsets from the probability spaces of and respectively. Then the following is true:

This completes our brief introduction to the background material on mathematical diversity that is useful for this paper. In the next section, we try to connect the idea of Hahn decomposition to the degree of uniformity measure.

3. Hahn Decomposition of the Degree of Uniformity Measure

The most important part of Theorem 3 is Equation (8). It says that if the measure has a positive (zero or negative) value for a given set $P_1$, then the degree of uniformity of $P_1$ is larger than (equal to or smaller than) the degree of uniformity of $P_2$. This fact was explored in [4]. In this paper, however, we are motivated to take advantage of the Hahn decomposition as stated in Theorem 1, which guarantees the division of the probability space I into positive and negative sets P and N using the degree of uniformity measure, i.e., $I = P \cup N$. The key observation here is that $D_P/c_P \geq D$ and $D_N/c_N \leq D$. Although Theorem 1 guarantees that the decomposition is unique only up to null sets, we can, as we will see later, explicitly obtain a description of the null set Z, which satisfies $D_Z/c_Z = D$.

Hence, in our specific case of application of the Hahn decomposition theorem to the degree of uniformity measure on the probability space I, we actually obtain a disjoint triplet $(P, Z, N)$. The set P in our case is called strictly positive and N is called strictly negative, and the null sets have been removed and placed into the set Z. In addition, for all measurable subsets $P' \subseteq P$, $Z' \subseteq Z$, and $N' \subseteq N$, the measure is positive, zero, and negative, respectively. Hence, the decomposition preserves the monotonicity with respect to the degree of uniformity measure in the following sense. The original probability space is split into three subsets: P has a larger degree of uniformity than I, Z has the same degree of uniformity as I, and N has a lesser degree of uniformity than I. Hence, $D_P/c_P > D_Z/c_Z = D > D_N/c_N$. Furthermore, for subsets $P' \subseteq P$, $Z' \subseteq Z$, and $N' \subseteq N$, we have that $D_{P'}/c_{P'} > D_{Z'}/c_{Z'} > D_{N'}/c_{N'}$, i.e., all measurable subsets of P, Z, and N also follow the same monotonicity of order with respect to degree of uniformity. The monotonicity of ordering is preserved because all measurable subsets of positive sets with respect to a given signed measure are necessarily positive as well, by definition.

In what follows, we outline the general idea of the nested Hahn decomposition that we will construct in the rest of the paper. This will set the stage for the main results in this paper.

We can use Equation (8) in a nested fashion in the following way:

1. First, we start with the probability space I that is given to us, for which we have $c_I = 1$ and $D_I = D$, the diversity of the entire distribution. We apply the Hahn decomposition method for signed measures to the degree of uniformity measure on the entire probability space I and split it into a strictly positive set P and a strictly negative set N. As we will see during the construction, the null set can also be explicitly calculated, and we will denote it by Z.

2. The strictly positive set P has a larger degree of uniformity, the null set Z has an equal degree of uniformity, and the strictly negative set N has a lesser degree of uniformity than the entire distribution. This is the first step in the decomposition.

3. Since the strictly positive set P has a degree of uniformity equal to $D_P/c_P$, we can use the degree of uniformity measure restricted to P to further subdivide P into three parts using the Hahn decomposition for that restricted measure. The three parts will have a larger, equal, and lesser degree of uniformity, respectively, than P. Note that the measure restricted to P is different from the measure on I. The latter splits the entire distribution into three parts and the former splits just the positive set P into three parts.

4. The set Z obtained from step 1 cannot be split any further because it is uniform, and every measurable subset of a uniform distribution has the same degree of uniformity as the entire distribution. However, due to the preservation of monotonicity, Z is carried over into the next level at the same position as before.

5. The set N obtained in step 1 can be split in a similar way to the set P in step 3, as a result of the Hahn decomposition of the measure restricted to N, leading to three subsets of N.

6. Hence, in the second level, we would in general have 7 partitions: the three parts that came from P, the Z from the previous level that cannot be split any further, and the three parts that came from N.

This procedure can be continued with the caveat that all the

Zs obtained in previous levels get carried over into the next level and all the

Ps and

Ns get split into

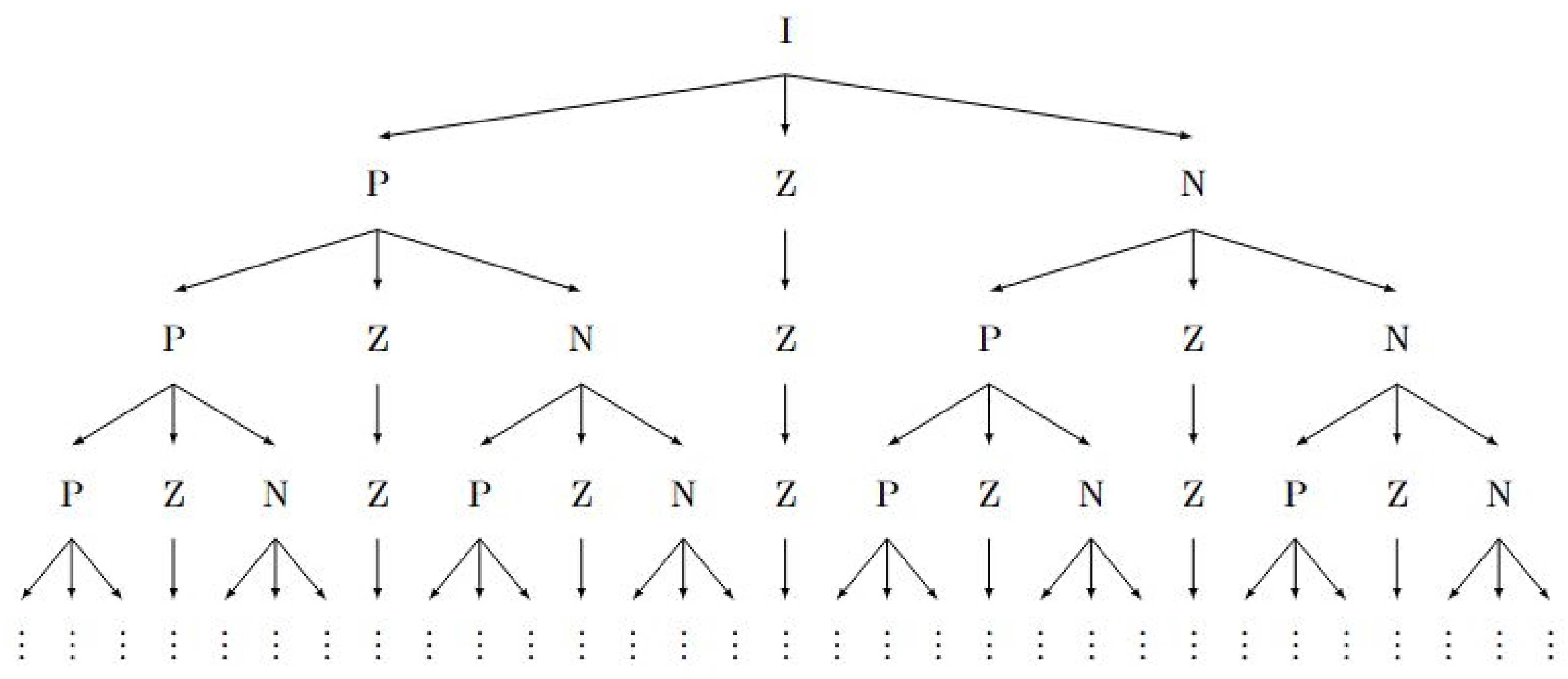

. We use arrows to indicate parent and children in the form of a tree diagram as shown in

Figure 1.
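The nested procedure outlined above can be sketched for a discrete distribution as follows. This is a minimal illustration under a stated assumption: a part A is split by comparing each probability $p_i$ with the threshold $c_A/D_A$, which is what comparing the degree of uniformity $1/p_i$ of a singleton with $D_A/c_A$ suggests. The function names, the tolerance `tol`, and the example distribution are hypothetical.

```python
import math

def degree_of_uniformity(probs, part):
    """Return D_A / c_A for the indices in `part`."""
    c = sum(probs[i] for i in part)
    d = math.exp(-sum((probs[i] / c) * math.log(probs[i] / c) for i in part))
    return d / c

def split(probs, part, tol=1e-12):
    """Split a part into (positive, null, negative) index lists (assumed rule)."""
    threshold = 1.0 / degree_of_uniformity(probs, part)  # equals c_A / D_A
    pos = [i for i in part if probs[i] < threshold - tol]
    nul = [i for i in part if abs(probs[i] - threshold) <= tol]
    neg = [i for i in part if probs[i] > threshold + tol]
    return pos, nul, neg

def leaves(probs, part):
    """Recursively decompose `part`; return leaves from most to least uniform."""
    if not part:
        return []
    pos, nul, neg = split(probs, part)
    # A uniform part (or a degenerate split) is a leaf and is carried over as is.
    if (not pos and not neg) or len(pos) == len(part) or len(neg) == len(part):
        return [part]
    return leaves(probs, pos) + ([nul] if nul else []) + leaves(probs, neg)

probs = [0.4, 0.25, 0.15, 0.1, 0.05, 0.05]
result = leaves(probs, list(range(len(probs))))
ratios = [degree_of_uniformity(probs, leaf) for leaf in result]
print(result)
print(ratios)
```

Each recursive call corresponds to one node of the ternary tree; uniform parts terminate the recursion, and the left-to-right order of the leaves follows decreasing degree of uniformity.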

We next state and prove an important result relating to strictly positive, strictly negative, and null sets with respect to the degree of uniformity measure. We present the theorems for the discrete and continuous cases separately for clarity of reading:

Theorem 4. Let with be a discrete probability distribution, with permitted. Alternatively, let be a probability mass function (pmf) on , with and permitted. Let P be a general measurable subset (not necessarily contiguous) of I, and let denote the degree of uniformity measure on I. Then we have the following: Proof. We will prove the theorem for the strictly positive property. The proof for strictly negative and null properties follow the same lines.

□

Theorem 5. Let with be a discrete probability distribution, with permitted. Alternatively, let be a probability density function (pdf) on , with and permitted. Let P be a general measurable subset (not necessarily contiguous) of I, and let denote the degree of uniformity measure on I. Then we have the following: We have the following immediate corollary:

Corollary 1. Let with be a discrete probability distribution, with permitted. Alternatively, let be a probability density function (pdf) on , with and permitted. Let A and P with be general measurable subsets (not necessarily contiguous) of I, and let denote the degree of uniformity measure on the restriction . Then we have the following: Proof. The proof follows the same lines as Theorems 4 and 5 by applying those steps to the measure . □

The main points of Corollary 1 are the following:

1. We first note that for $A = I$, the total probability is 1, i.e., $c_I = 1$, and the diversity is $D_I$ (which we have written as D in this paper). Hence, we recover Theorems 4 and 5.

2. If $A = P$ is a proper subset of I, then the degree of uniformity measure restricted to P is as seen in Remark 1. Hence, we can now obtain the Hahn decomposition of the proper subset P with respect to this restricted measure, leading to three subsets that are more uniformly, equally uniformly, or less uniformly distributed, respectively, than P.

3. From point number 2, it is clear that the subsets obtained after decomposing P can be decomposed further using the degree of uniformity measure restricted to those subsets. Hence, this decomposition process is nested and can be continued until no new sets are created. This is the main idea of the nested Hahn decomposition in this paper.

Next, we state and prove a theorem that explicitly calculates the Hahn decomposition of the probability space I or any of its subsets. We state and prove the theorem for an arbitrary measurable subset $A \subseteq I$. This means that the starting point of the decomposition can be any measurable subset of I and not just I itself. We will revisit this idea later.

Theorem 6. Let $\{p_i\}_{i=1}^{K}$ with $\sum_{i=1}^{K} p_i = 1$ be a discrete probability distribution, with $K = \infty$ permitted. Alternatively, let $f(x)$ be a probability density function (pdf) on $(a, b)$, with $a = -\infty$ and $b = \infty$ permitted. Let $A \subseteq I$ be an arbitrary measurable subset and consider the degree of uniformity measure restricted to A. Then, A has the following disjoint Hahn decomposition triplet $(A^{+}, A^{0}, A^{-})$ with respect to this measure, where $A^{+}$ is a strictly positive set, $A^{0}$ is a null set, and $A^{-}$ is a strictly negative set:

$$A^{+} = \{i \in A : p_i < c_A/D_A\} \quad \text{or} \quad A^{+} = \{x \in A : f(x) < c_A/D_A\}, \tag{15}$$

$$A^{0} = \{i \in A : p_i = c_A/D_A\} \quad \text{or} \quad A^{0} = \{x \in A : f(x) = c_A/D_A\}, \tag{16}$$

$$A^{-} = \{i \in A : p_i > c_A/D_A\} \quad \text{or} \quad A^{-} = \{x \in A : f(x) > c_A/D_A\}. \tag{17}$$

Furthermore, $A^{+}$, $A^{0}$, and $A^{-}$ are the largest strictly positive, null, and strictly negative subsets of A with respect to this signed measure, respectively.

Proof. We first note that the same proof works for $A = I$ as well. Given that $c_A < 1$ in general, we can consider a renormalization of the probabilities to $p_i/c_A$ in the discrete case and of the density to $f(x)/c_A$ in the continuous case. With the renormalization, A is now a bona fide probability space in its own right. Let us denote the sigma-algebra of all events in the sample space A by $\Sigma_A$. An application of Theorem 1 to the degree of uniformity measure restricted to A on the measurable space $(A, \Sigma_A)$ splits the set A into $A = A^{+} \cup A^{-}$, where $A^{+}$ is a positive set and $A^{-}$ is a negative set. The decomposition is unique up to null sets. However, we note that from Corollary 1, the null set part of A with respect to this signed measure (denoted by $A^{0}$) is given by the following:

$$A^{0} = \{i \in A : p_i = c_A/D_A\}, \qquad A^{0} = \{x \in A : f(x) = c_A/D_A\},$$

for the discrete and continuous cases, respectively. Hence, the ambiguity of where to put the null set can be resolved by simply removing $A^{0}$ and treating it as a third part of the decomposition. After removing $A^{0}$ (the null set portions from $A^{+}$ and $A^{-}$), $A^{+}$ will be strictly positive and $A^{-}$ will be strictly negative, where we use the same notation for $A^{+}$ and $A^{-}$ even after removing $A^{0}$ from them. Equations (15) and (17) will then follow from Corollary 1. Hence, for both the discrete and continuous cases, we have a disjoint Hahn decomposition given by $A = A^{+} \cup A^{0} \cup A^{-}$.

The fact that

is the largest strictly positive subset of A follows from Corollary 1: all strictly positive subsets

should necessarily satisfy

, or

, so we can collect all such indices i in the discrete case or points x in the continuous case, and that will be the largest strictly positive set. However,

is precisely this set by Equation (15). The same line of argument shows that

is the largest null subset and

is the largest strictly negative subset.

This proves the theorem. □

Theorem 6 allows us to decompose any subset into a disjoint triplet where is strictly positive, is null and is strictly negative with respect to the measure , which is the degree of uniformity measure for the set . This lays down a concrete framework to recursively decompose and further into and with respect to the measures and , respectively. A few things are of importance here:

Since is strictly positive with respect to , it has a larger degree of uniformity than the set A. Furthermore, every subset of is also strictly positive with respect to and hence the sets from the next level of decomposition are guaranteed to be strictly positive with respect to and hence are guaranteed to have a higher degree of uniformity than A. By the same token, has a lower degree of uniformity than A and so do its subsets . Hence, the degree of uniformity preserves the ordering within subsets.

The set

is a uniform distribution, and by definition every subset of

has the same degree of uniformity as

itself. Hence, it cannot be decomposed any further. However, since

, to preserve the ordering of degree of uniformity in the subsequent levels,

needs to be carried over into all subsequent levels, maintaining its position in the ordering. This will preserve the decreasing order of degree of uniformity for sets in each level from left to right in Figure 1.

We reiterate that the degree of uniformity and the degree of inequality carry the same information, in the sense that they are reciprocals of each other. The ratio

tells us the amount of SEE equivalent extent of the random variable situated per unit cumulative frequency in P and is a direct indication of how uniform the random variable is in P. Hence, the tree diagram in Figure 1 is actually a decomposition of the original probability space I into subsets with increasing degree of inequality

from left to right in a given horizontal level, and an increasing level of granularity as we go down the levels. This justifies the term

localization of inequality since we can pinpoint the subsets in a given level where there is a larger localization of inequality. In fact, for each of the subsets in a given level, we can explicitly calculate the degree of inequality

and compare it with that of other subsets in the same level. We can form ratios of degrees of inequality of subsets in a level to describe how much more or less unequally distributed two sets are compared to each other, or equivalently how much more or less concentrated the random variable is on the two sets.
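For concreteness, the ratio discussed above can be written out in the discrete case. The following is only a sketch under assumed notation: the symbols $c_P$ (cumulative probability of a part $P$) and $D_P$ (Shannon-equivalent diversity of $P$ after renormalizing its probabilities) are chosen here to match the verbal description and may differ from the symbols used elsewhere in the paper.

```latex
\begin{align*}
c_P &= \sum_{i \in P} p_i, \\
D_P &= \exp\Bigl(-\sum_{i \in P} \frac{p_i}{c_P}\,\ln\frac{p_i}{c_P}\Bigr), \\
\frac{D_P}{c_P} &= \exp\Bigl(-\frac{1}{c_P}\sum_{i \in P} p_i \ln p_i\Bigr).
\end{align*}
```

Under this reading, the ratio is a weighted geometric mean of the reciprocals $1/p_i$ over the part, with weights $p_i/c_P$. In particular, a singleton $P = \{i\}$ has ratio $1/p_i$, and a part on which every probability equals $p$ has ratio $1/p$.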

4. Examples

We chose the exponential and binomial distributions as quick, classical warm-up demonstrations of the nested Hahn decomposition method. The third example is a hypothetical distribution of disease contraction across age groups, and the fourth is a hypothetical distribution of stress on a beam. These two hypothetical examples illustrate how the method could be applied to distributions obtained from real-life data. We end the examples section by showing a bona fide null set, given that the previous examples did not admit a null set during the decomposition. For the disease contraction and the beam examples in this section, a standalone tree structure from [16] was used to do the nested Hahn decomposition in MATLAB, whereas the exponential and the binomial examples were done by hand. We state the recursive step to compute the subdivisions of a general measurable subset A below:

(1) Compute .

(2) Divide A into , and in the discrete case or , and in the continuous case.

(3) Repeat steps (1) and (2) with the set A replaced by and to compute the partitions in the next level. Carry over to the next level at the same horizontal position as the current level.
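The recursive step above can be sketched in Python for the discrete case. This is a minimal illustration, not the MATLAB implementation used for the examples. It assumes that the degree of uniformity of a part A is exp(-(1/c_A) Σ p_i ln p_i) with c_A the total probability of A, and that an index i goes to the strictly positive, null, or strictly negative part according to whether 1/p_i is larger than, equal to, or smaller than that value. The function names, the tolerance `rel_tol`, and the toy distribution are all hypothetical.

```python
import math

def degree_of_uniformity(probs, part):
    """Assumed ratio D_A / c_A = exp(-(1/c_A) * sum_{i in A} p_i ln p_i).

    Requires every probability in `part` to be strictly positive.
    """
    c = sum(probs[i] for i in part)
    return math.exp(-sum(probs[i] * math.log(probs[i]) for i in part) / c)

def split(probs, part, rel_tol=1e-9):
    """Steps (1)-(2): compare 1/p_i with the degree of uniformity of the part."""
    u = degree_of_uniformity(probs, part)
    pos, null, neg = [], [], []
    for i in part:
        r = 1.0 / probs[i]
        if math.isclose(r, u, rel_tol=rel_tol):
            null.append(i)      # same degree of uniformity as the parent
        elif r > u:
            pos.append(i)       # more uniform than the parent
        else:
            neg.append(i)       # less uniform than the parent
    return pos, null, neg

def next_level(probs, level):
    """Step (3): split every part, keeping the left-to-right order P, Z, N."""
    out = []
    for part in level:
        pos, null, neg = split(probs, part)
        out.extend(s for s in (pos, null, neg) if s)
    return out

def nested_decomposition(probs, max_levels=20):
    """Return the list of levels; stop when a level repeats the previous one."""
    levels = [[list(range(len(probs)))]]
    for _ in range(max_levels):
        nxt = next_level(probs, levels[-1])
        if nxt == levels[-1]:
            break
        levels.append(nxt)
    return levels

probs = [0.5, 0.25, 0.125, 0.125]   # hypothetical toy distribution
for depth, level in enumerate(nested_decomposition(probs)):
    print(depth, [(part, round(degree_of_uniformity(probs, part), 2)) for part in level])
```

Uniform parts and singletons fall entirely into the null list, so they are carried over unchanged, and the loop stops once a level repeats. For this toy input the last level consists of the parts [2, 3], [1], and [0], whose degrees of uniformity decrease from left to right.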

4.1. Exponential Distribution with

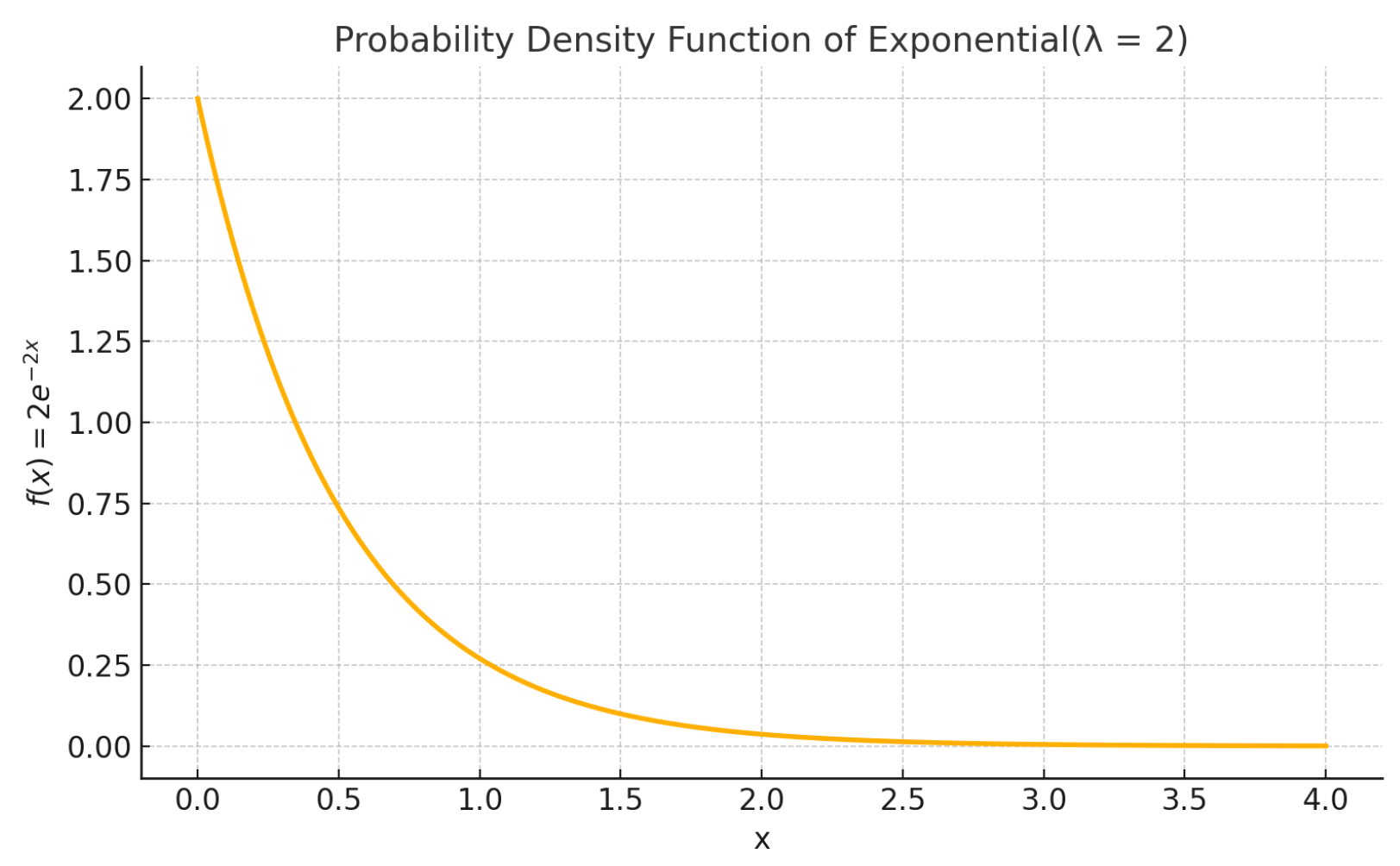

In the first example, we apply the nested Hahn decomposition method to the exponential distribution with

. We show the probability density function in

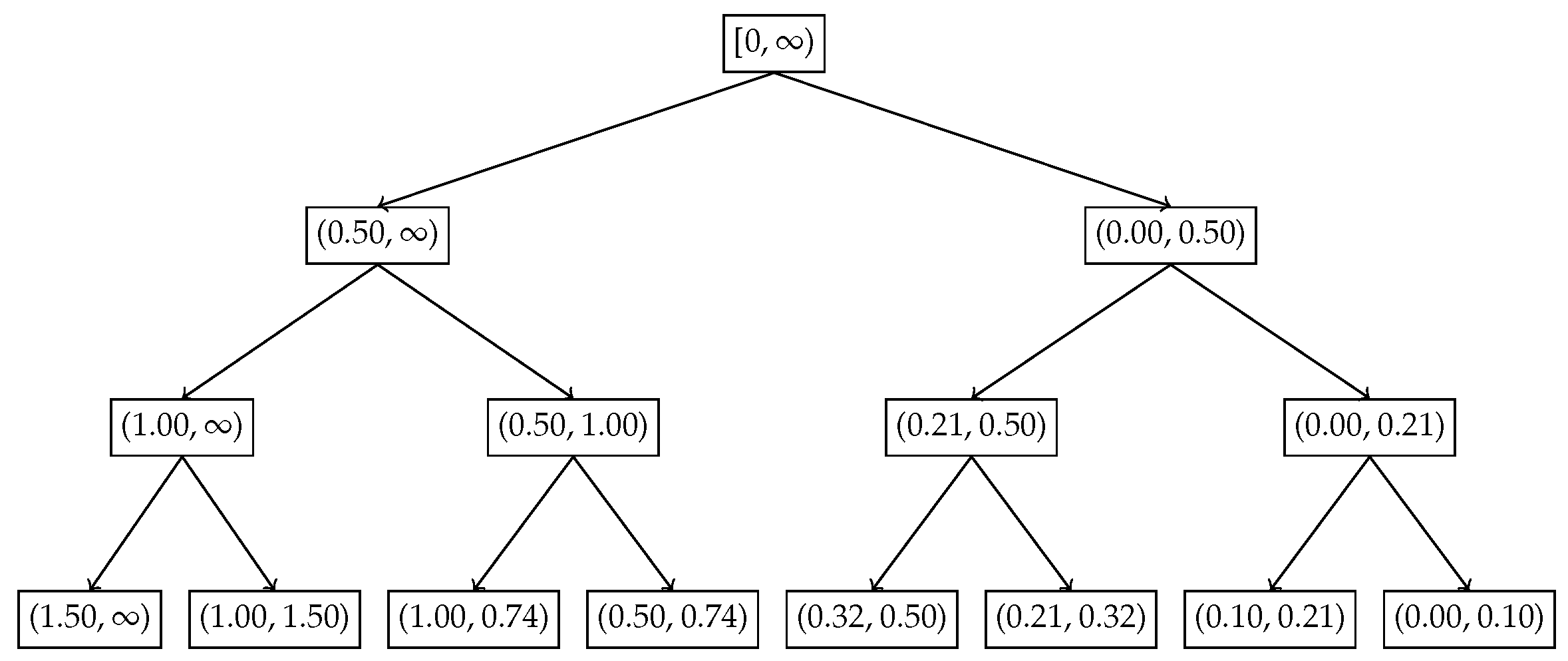

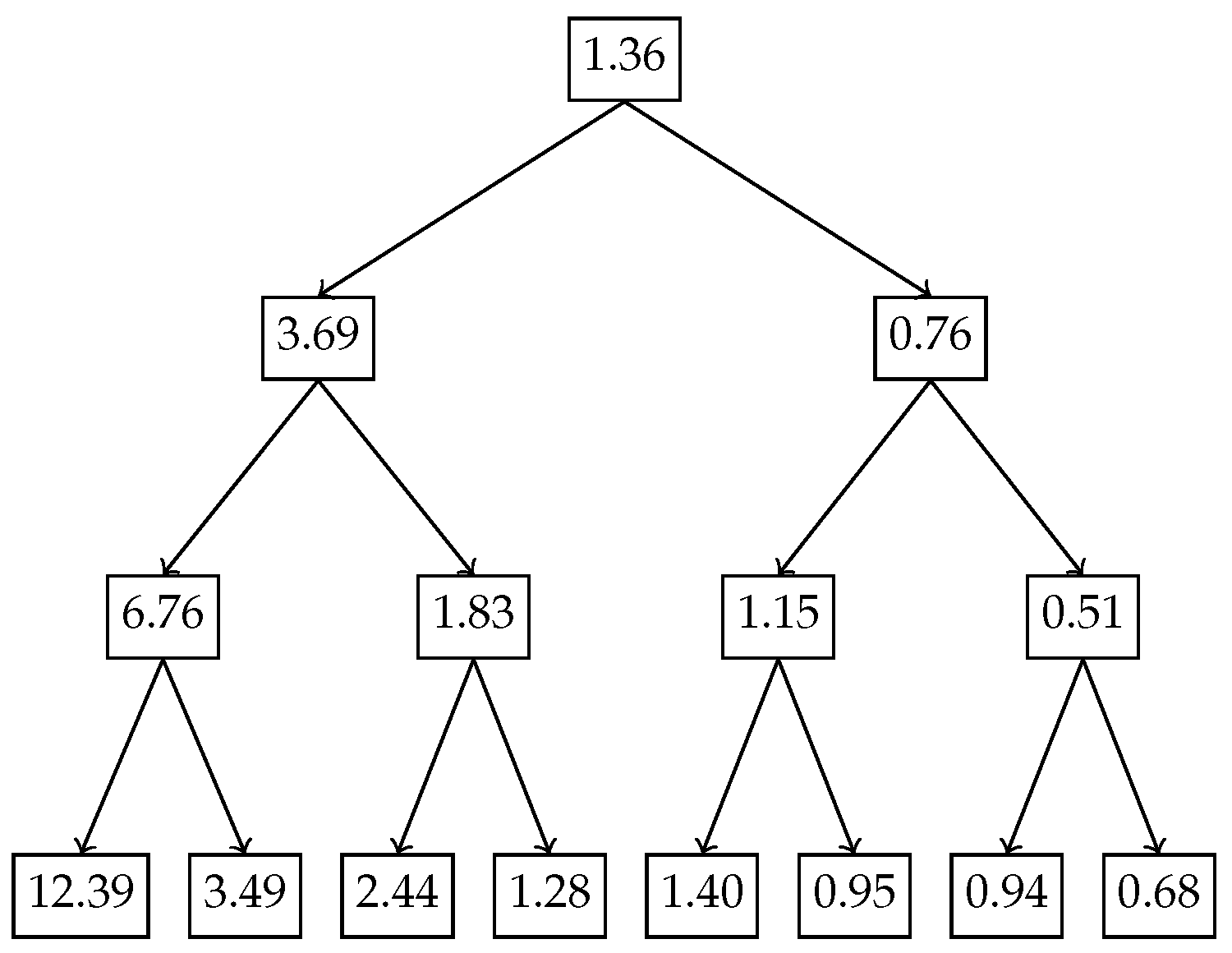

Figure 2, the tree with the subsets in

Figure 3 and the tree with the degrees of uniformity show in

Figure 4, respectively. Intuitively, from

Figure 2, it is clear that the distribution becomes more and more uniform as

. Hence, we expect that the subsets to the right have a higher degree of uniformity as opposed to the ones closer to

.

Figure 3 and Figure 4 illustrate how the inequality is localized across recursive subdivisions of the exponential distribution using the recursive Hahn decomposition. The Hahn decomposition of the exponential distribution with rate parameter

exhibits a consistent monotonic ordering of the degree of uniformity, quantified by the ratio

, across all levels of refinement. At the second level, the probability space

is split into four disjoint intervals:

,

,

, and

, with corresponding

values of 6.76, 1.83, 1.15, and 0.51. These values decrease steadily from left to right, and the ratios between them are revealing: the uniformity in

is approximately

times greater than in

, over

times greater than in

, and over 13 times greater than in

, underscoring the decrease in degree of uniformity toward the origin. At the third level, each Level 2 part is further refined, producing eight sub-intervals:

,

,

,

,

,

,

, and

, with

values of 12.39, 3.49, 2.44, 1.28, 1.40, 0.95, 0.94, and 0.68, respectively. Here too, the most uniform region,

, has a

value nearly 5 times that of

and over 18 times that of the least uniform region

. These sharp contrasts quantitatively reinforce how the Hahn decomposition isolates areas of varying inequality, with the recursive partitioning homing in on regions of concentrated probability and diminished uniformity. Although the decomposition can be continued further, we chose to stop after 3 levels since there is no natural end to the process due to the continuous nature of the distribution.

4.2. Binomial Example

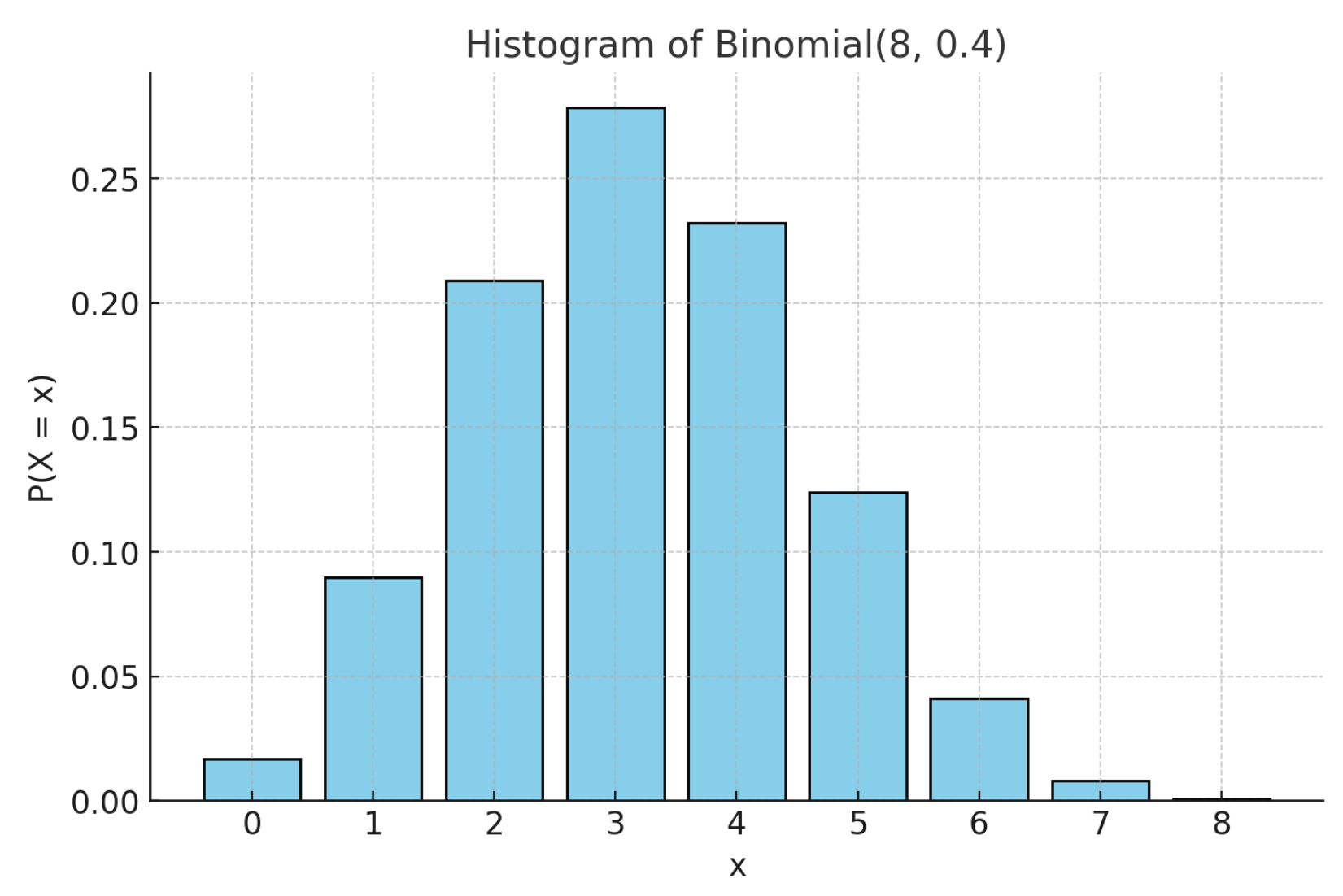

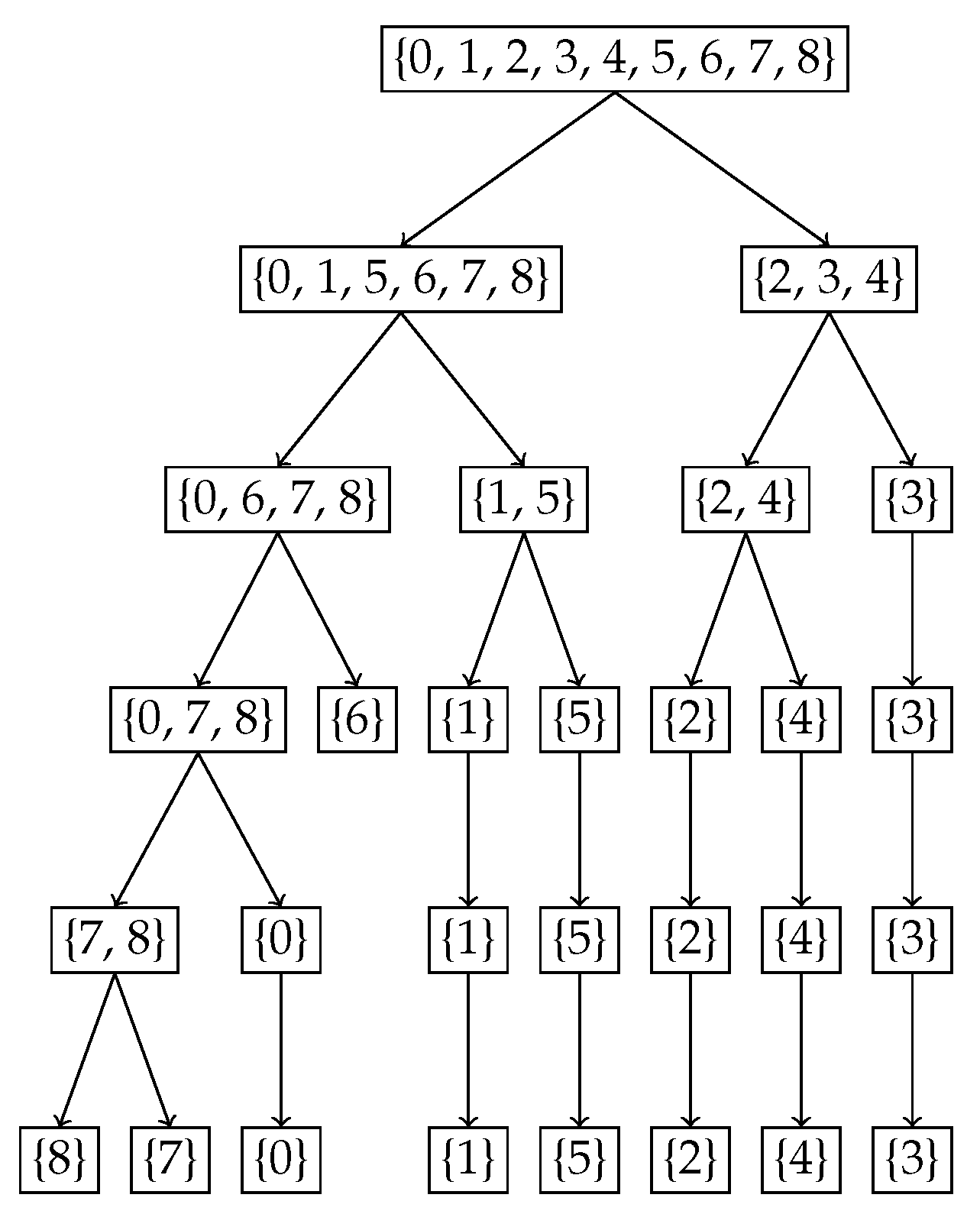

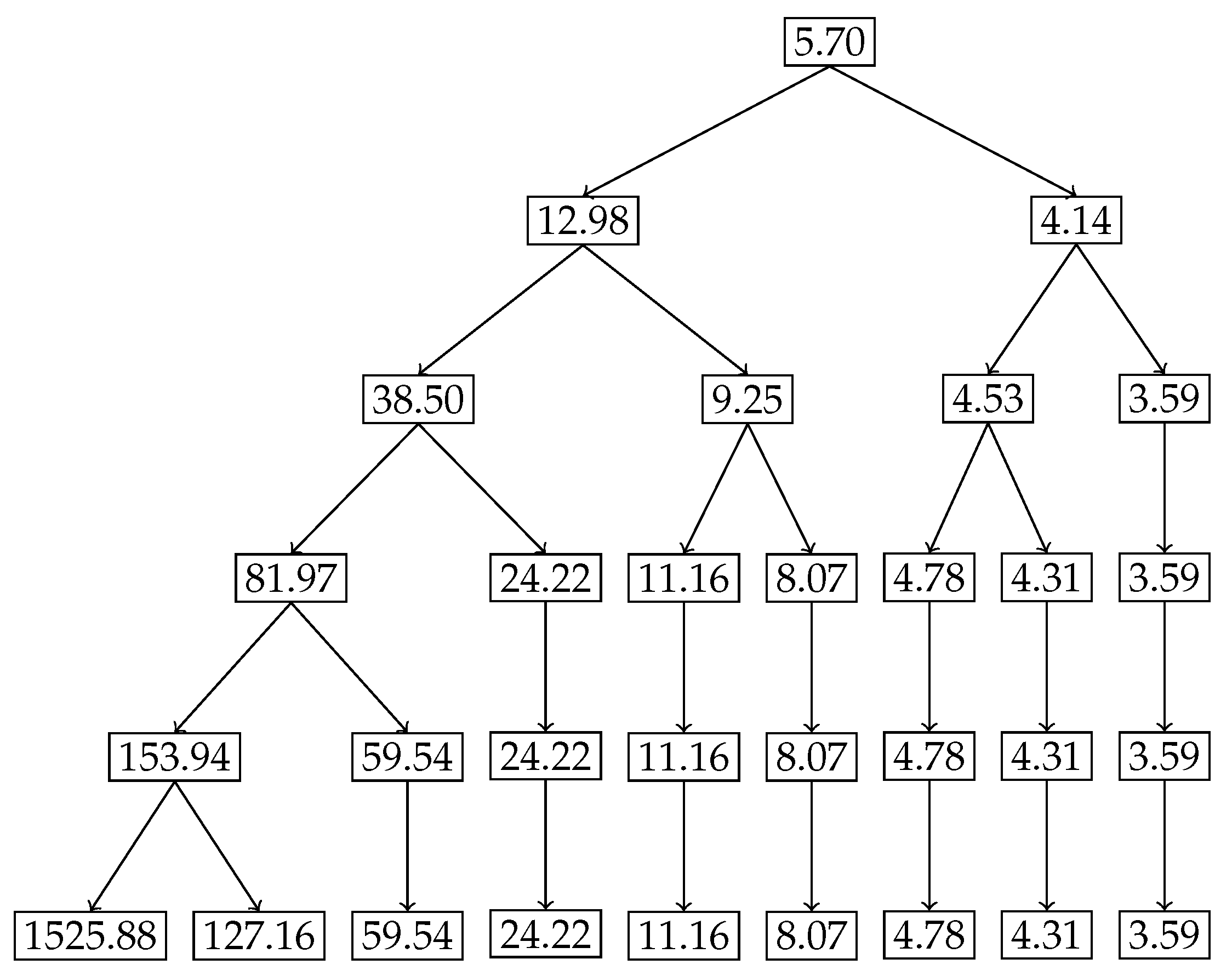

For the second example, we chose the binomial distribution

. Given the discrete and finite nature of this distribution, we expect the nested Hahn decomposition to stop after a few levels. We show the histogram of the original distribution in Figure 5, the tree showing the subsets from the nested Hahn decomposition in Figure 6, and the tree showing the corresponding degrees of uniformity of subsets in Figure 7. Intuitively, we expect the center portion of the distribution to be less uniformly distributed compared to the tails. This is precisely what we see in both trees. The decomposition stops eventually when all the subsets are singletons.

Figure 6 and Figure 7 demonstrate how the monotonicity of the degree of uniformity is preserved across recursive subdivisions for the binomial distribution

. In the Hahn decomposition of the

distribution, the sample space

is partitioned at Level 2 into four disjoint subsets:

,

,

, and

, with corresponding

values of 81.97, 24.22, 8.07, and 3.59, respectively. These values decrease consistently from left to right, indicating increasing inequality and decreasing degree of uniformity. Comparing the ratios, the first part

has a

over

times greater than

, over 10 times greater than

, and over 22 times greater than

, which captures the point of maximum probability and hence has the lowest degree of uniformity. At the next level of refinement, the subsets are further decomposed into

,

,

,

,

,

, and

, with associated

values of 153.94, 59.54, 24.22, 11.16, 8.07, 4.78, 3.59. The singleton

stands out with a strikingly high

value of 1525.88, reflecting maximal uniformity in an outcome with very low probability mass. This sharp contrast further illustrates how the recursive Hahn decomposition localizes regions of high and low uniformity: subsets with rare outcomes (e.g.,

and

) exhibit high entropy per unit mass due to their dispersion in a skewed distribution, while concentrated mass points (e.g.,

) correspond to minimal uniformity. These results echo the patterns seen in the exponential case, but within a discrete framework, reinforcing the mathematical and intuitive consistency of the approach.
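Some of the values quoted above can be checked numerically. The sketch below rests on two assumptions that are not stated in this text. First, the degree of uniformity of a subset A is taken to be exp(-(1/c_A) Σ p_k ln p_k), with c_A the total probability of A. Second, the binomial parameters are taken to be n = 8 trials with success probability 0.6. That choice is inferred only from the singleton value 1525.88, which equals 0.4 to the power -8; it is not quoted from the paper. The particular subsets evaluated are likewise illustrative guesses.

```python
import math

def degree_of_uniformity(probs, part):
    """Assumed ratio D_A / c_A = exp(-(1/c_A) * sum_{k in A} p_k ln p_k)."""
    c = sum(probs[k] for k in part)
    return math.exp(-sum(probs[k] * math.log(probs[k]) for k in part) / c)

# Assumed parameters (inferred, not quoted): n = 8 trials, success probability 0.6.
n, q = 8, 0.6
pmf = [math.comb(n, k) * q**k * (1 - q)**(n - k) for k in range(n + 1)]

# Illustrative subsets of outcomes; a singleton {k} reduces to 1 / pmf[k].
for part in ([0], [0, 1], [0, 1, 8], [2], [5]):
    print(part, round(degree_of_uniformity(pmf, part), 2))
```

Under these assumptions the five subsets give approximately 1525.88, 153.94, 81.97, 24.22, and 3.59, which agree with values quoted in the discussion above. This agreement supports the assumed form of the ratio, but it does not establish which subsets appear at which level of the tree.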

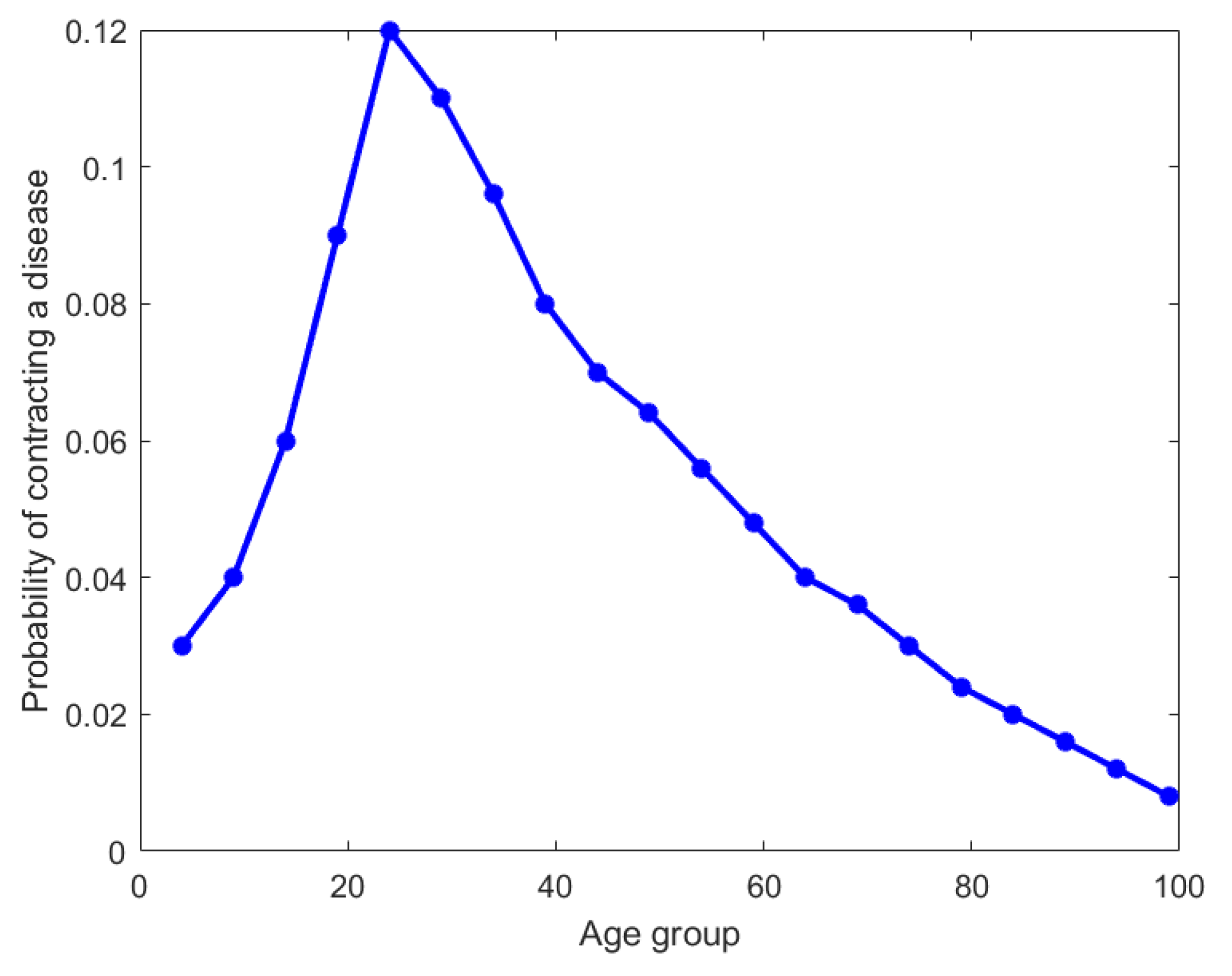

4.3. Disease Distribution Across Age Groups

For the third example, we analyze a hypothetical dataset that is a distribution of incidences of a particular disease across 20 age groups. As seen in Figure 8 (the original distribution), the age groups are 0–4, 5–9, etc., until 95–99. For brevity, we have chosen to plot the upper bound of each interval on the x-axis. It is clear that this disease has a maximum incidence of

for the age group of 20–24 and tapers off on either side. We have applied our nested Hahn decomposition algorithm to quantify and locate the inequality of incidence in disease.

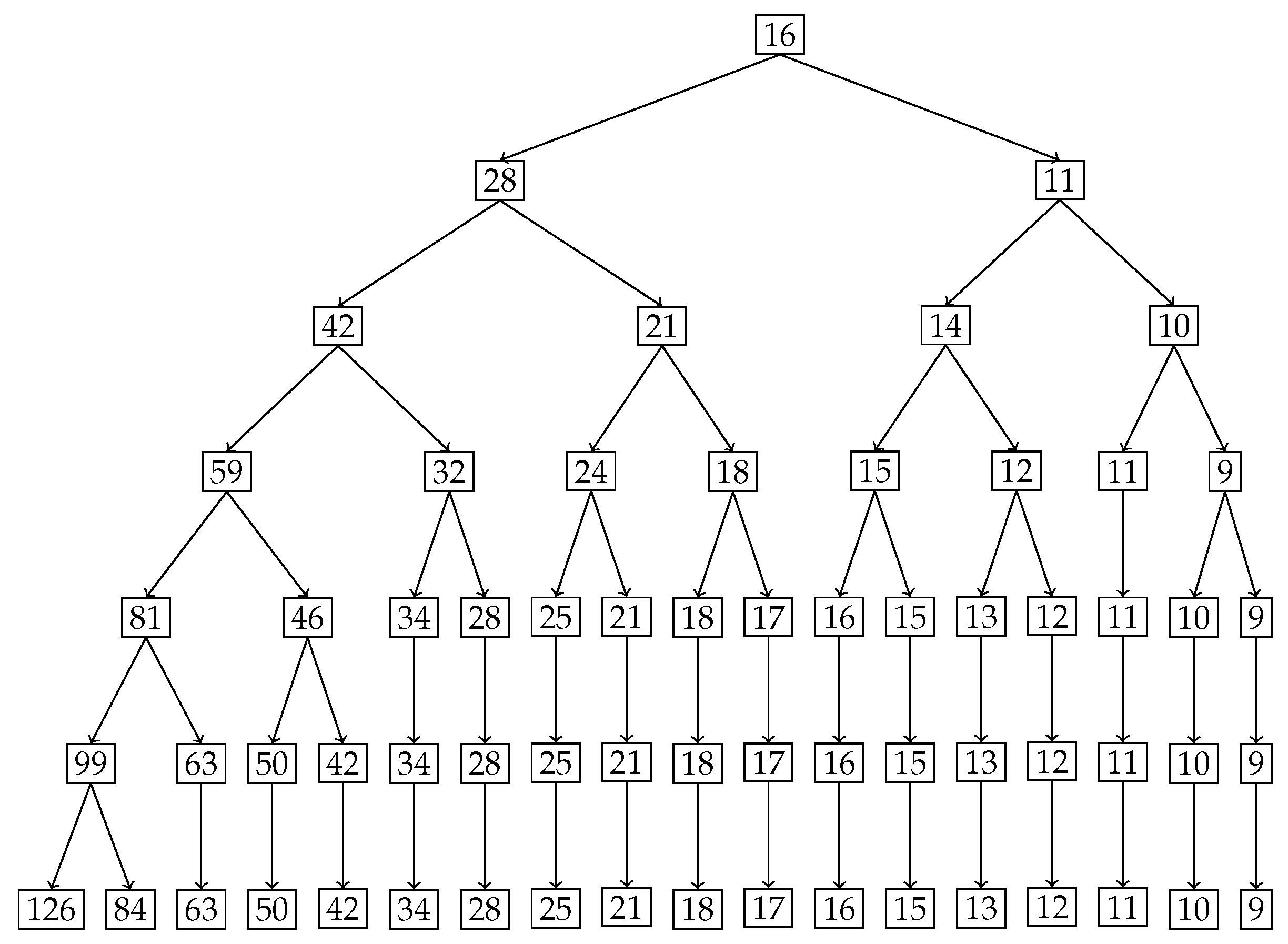

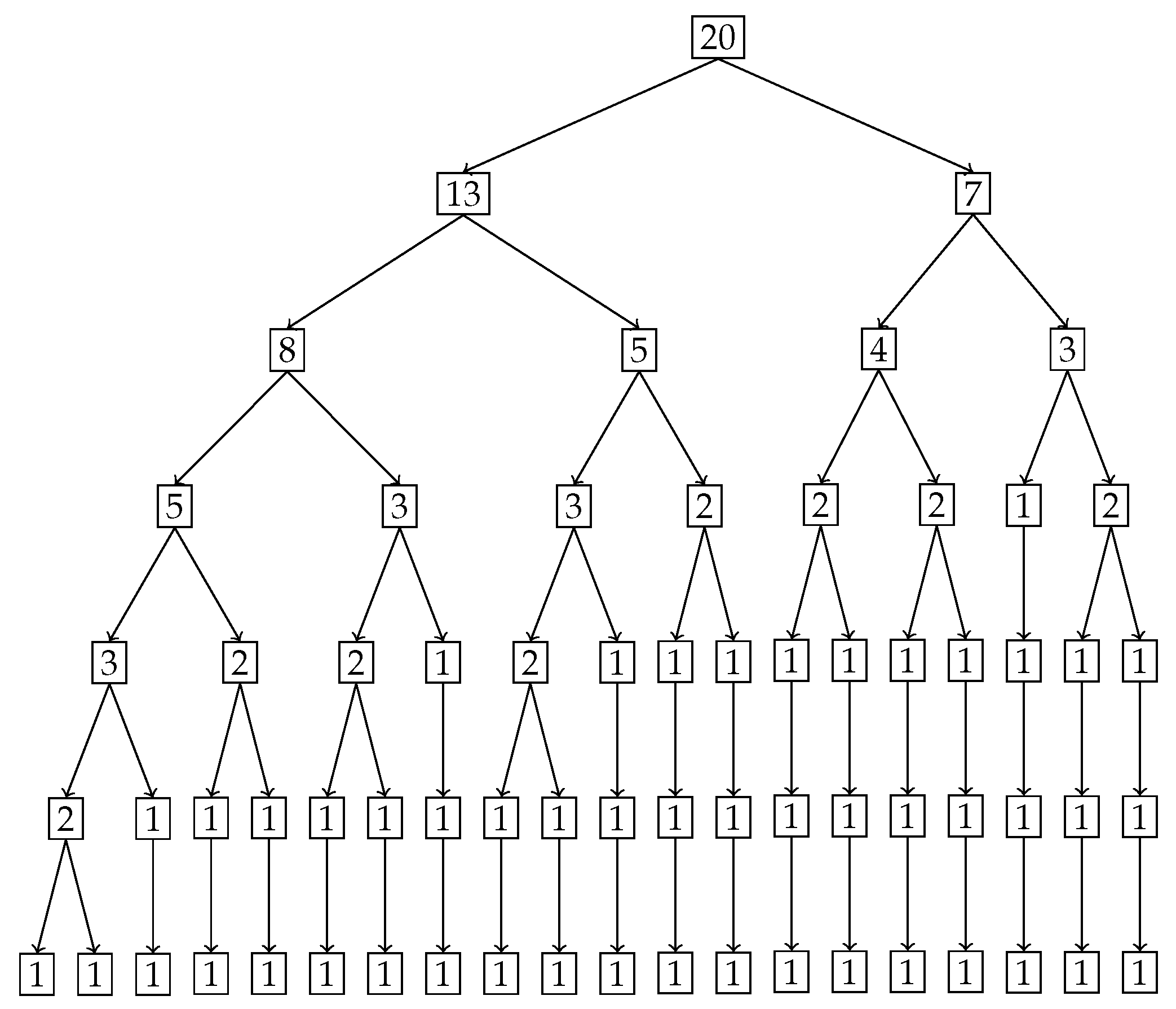

In Figure 9, the tree diagram obtained from the nested Hahn decomposition algorithm is shown with the degree of uniformity of parts shown as numbers. For example, the entire distribution has a degree of uniformity of 16, which after splitting in the first level gives two parts, one with a

on the left and

on the right. There are no Z (uniform) parts in this example. We see that there are 6 unique levels with the 7th level being a repeat, thereby serving as a stopping criterion for the algorithm. To keep our example simple, we only consider integer ages and assume that the ages are rounded down to the nearest integer. Hence an age of

years will be recorded as 3 years. We also chose to round up the degree of uniformity to the closest integer to avoid writing decimals in the tree diagram.

Figure 9 shows the degree of uniformity of each part at each level. Figure 10 shows the number of elements in each part on the same tree diagram as Figure 9.

We explore the meaning of the tree diagram in Figure 9. The original dataset has 20 elements. We see that after level 4, there are too many sub-parts containing a single age group. We choose to discuss levels 2 and 3 to show how our method quantifies and locates the inequality of incidence of disease. We also note that after level 3, the right branch divides into sub-parts that all have more or less the same degree of uniformity. However, the left sub-part has a lot of inequality left, as seen in the wide variation of degree of uniformity. We could discuss all the sub-parts in all levels, but in general, we choose levels containing age groups that are of interest from prior knowledge, or levels with reasonably sized sub-parts if needed.

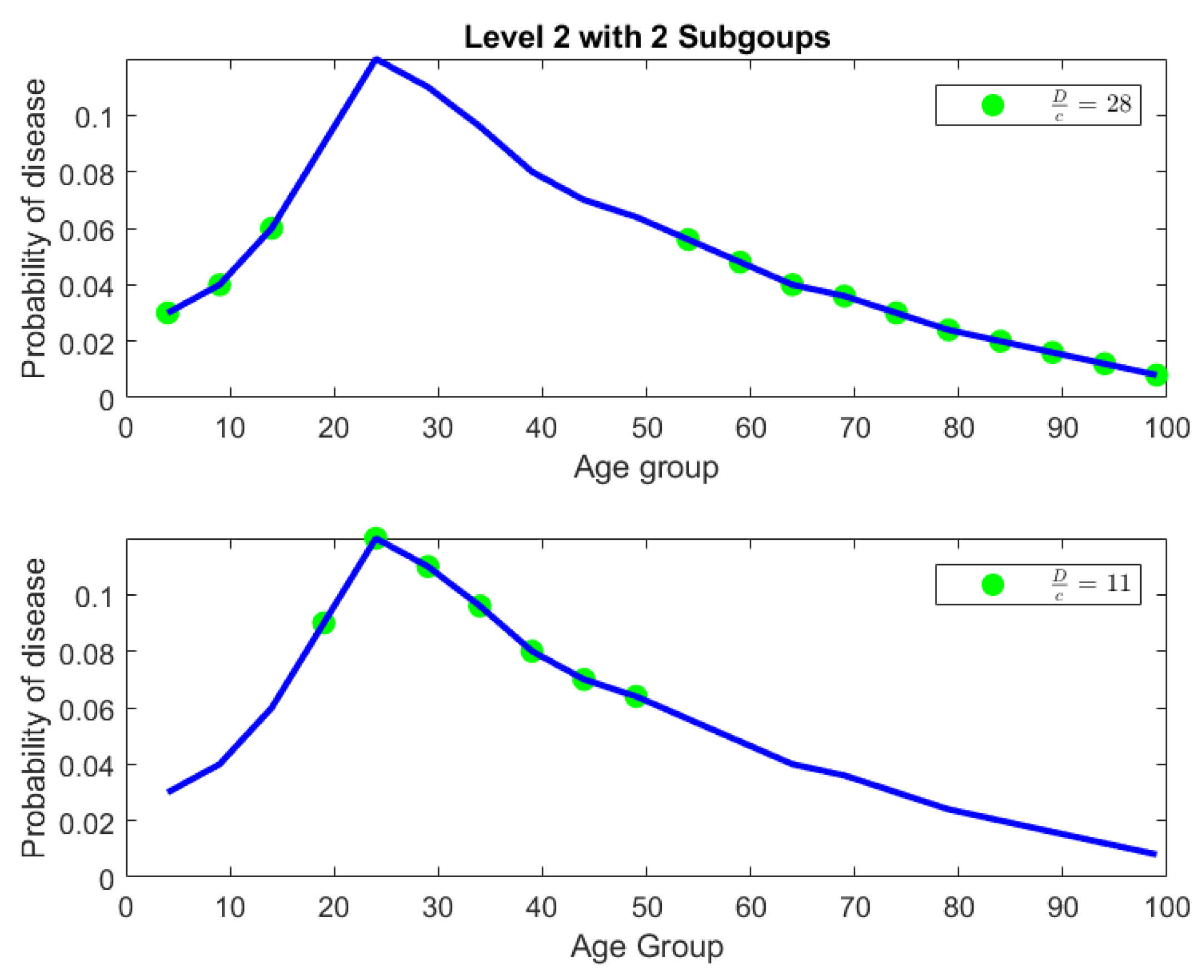

Level 2: In level 2, we have two parts. The first part is ages

with a degree of uniformity of 28. The second part is ages

with a degree of uniformity of 11. Both parts in Level 2 are shown in

Figure 11. There is a concentration of incidence of disease in the age group

that is more than twice (in fact the ratio is

) the concentration of disease in the age group

. Alternatively speaking, the age group

is

times more uniformly distributed compared to the age group

. It is already clear at this point that the age group

contains the bulk of incidence of disease, entropically speaking. We also note that by construction of the Hahn decomposition, we are automatically guaranteed that all sub-parts of the age group

will have a larger degree of uniformity than the entire distribution (the previous level). Similarly all sub-parts of

are guaranteed to automatically have a degree of uniformity smaller than the entire distribution (the previous level). In fact, it is clear from

Figure 9 that monotonicity of degree of uniformity is preserved as we go down the levels, which was an important consideration as explained in the introduction.

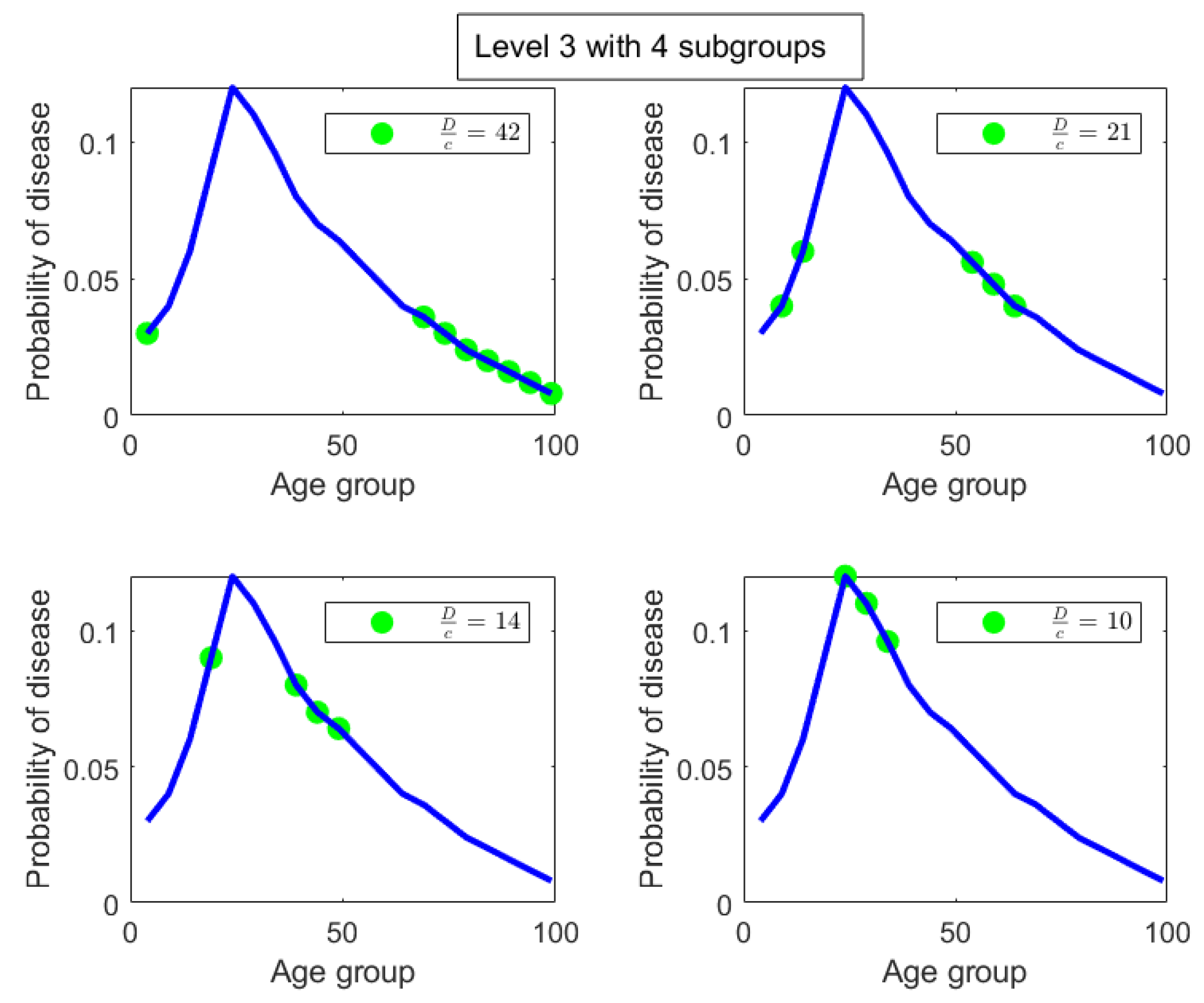

Level 3: We note that in Level 3, the age group

from Level 2 splits into 2 sub-parts, namely,

and

. Those have

values of 42 and 21, respectively, and are shown in the top-left and top-right pictures in

Figure 12 along with their degrees of uniformity labeled in the respective figures. Clearly we are further isolating the inequality within the

age group from Level 2, as the new sub-part

is twice as concentrated in disease incidence as the new sub-part

. The other part from Level 2, namely the age group

, splits into 2 sub-parts as well, given by

and

. These two sub-parts are shown in the bottom-left and bottom-right pictures in

Figure 12. Those have

values of 14 and 10, respectively, which are shown in the labels of the pictures. Hence, these two new sub-parts have comparable concentration of disease incidence. However, the sub-part

has approximately twice the concentration of disease incidence as the subpart

in the same level (Level 3).
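The comparisons in Levels 2 and 3 are simple ratios of the reported degrees of uniformity. The short sketch below redoes that arithmetic with the integer values quoted in the text (28 and 11 at Level 2; 42, 21, 14, and 10 at Level 3). Because those values were rounded up to integers, the ratios are only approximate. The function and variable names are hypothetical.

```python
def concentration_ratio(u_more_uniform, u_less_uniform):
    """How many times more concentrated the less uniform part is.

    Both arguments are degrees of uniformity; the ratio of uniformities equals
    the reciprocal ratio of concentrations.
    """
    return u_more_uniform / u_less_uniform

# Degrees of uniformity as quoted in the text (rounded up to integers there).
level2_ratio = concentration_ratio(28, 11)        # the two Level 2 parts
level3_left_ratio = concentration_ratio(42, 21)   # sub-parts of the first Level 2 part
level3_right_ratio = concentration_ratio(14, 10)  # sub-parts of the second Level 2 part

print(round(level2_ratio, 2), level3_left_ratio, round(level3_right_ratio, 2))
```

The Level 2 ratio is about 2.55, which is the "more than twice" comparison made above. The first Level 3 pair differs by a factor of 2, and the second pair by a factor of about 1.4, hence "comparable".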

We stop the analysis with these two levels but we make the following points:

We started with entire distribution of 20 age groups. However, we could have started with any sub-part of the distribution and the same algorithm can be used to construct a tree diagram.

It is clear from the analysis of the two levels (Level 2 and Level 3) that not only are we able to locate the inequality in the form of explicit age groups, but we are also able to quantify the relative concentrations of inequality between each sub-part.

In this example, there were no null sets that were formed since all the splits were binary. That is due to the monotonicity of the probability values. We do see in the last level (Level 6) that there are some sub-parts with two age groups that do not split. They are uniform distributions and they appear only at the end; however, they are not null sets as seen by their non-zero degree of uniformity values seen in

Figure 9.

To reiterate, we have successfully maintained the monotonicity of degree of uniformity across subsets, i.e., measurable subsets of two different measurable sets and with the ordering also follow the same ordering of degree of inequality, i.e., .

We also reiterate that the ratio is the degree of uniformity, whereas its reciprocal is the degree of localization in the part P. For example, in Level 2, with and , we have that and for the two sub-parts generated. This means that is times more uniformly distributed than , or equivalently is times more concentrated (or unequally distributed) than . This means that the population sub-group in part is approximately times more susceptible to the disease than the population sub-group in part .

Level 2 tells us that the age group is more than twice as vulnerable to this disease compared to the ages given by .

Level 3 delves deeper into both these subgroups to further isolate the inequality. In particular, ages are twice as vulnerable as ages . Also, ages are twice as vulnerable as .

We can continue to dig deeper by analyzing further levels, repeating the analysis presented for Levels 2 and 3, if the goal is to compare age groups with smaller intervals. The quantification of inequality through the degree of uniformity is not visually apparent just by looking at the sub-parts, especially given that these are unions of disjoint age groups and not a single contiguous age group. We have used the idea of entropy and diversity to systematically locate and quantify the inequality of incidence of disease in the original distribution.

We end the example by stating that the algorithm definitively identifies the vulnerable age groups within the original distribution and quantifies the degree of inequality (vulnerability) with respect to incidence of disease. This information can be invaluable for medical practitioners while making health care decisions for an individual patient (or groups of patients in the case of community care) based on the age (or age groups) present.

4.4. Stress Distribution Across a Beam

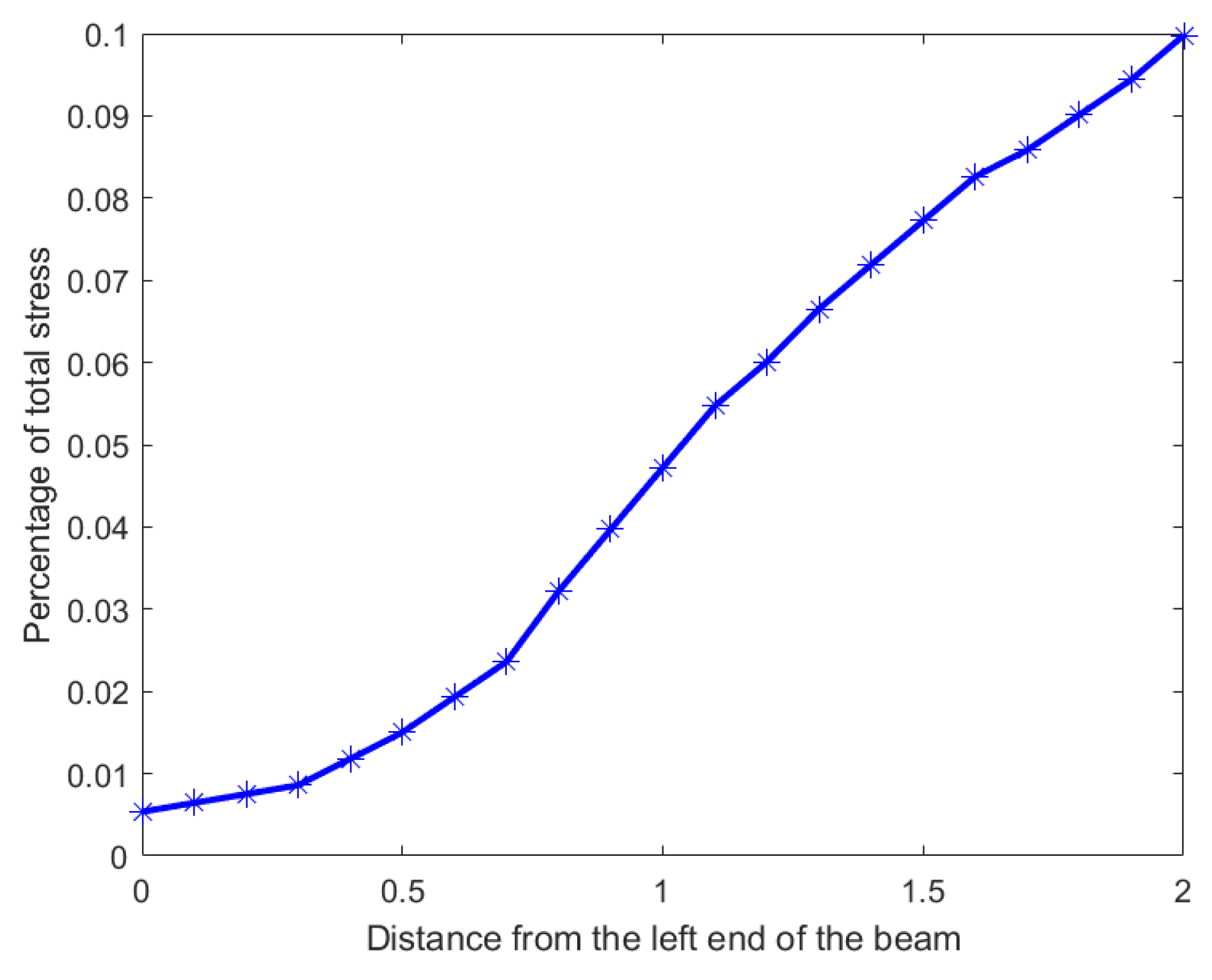

In our fourth example, we use a hypothetical dataset depicting the stress distribution across a 2 m beam. Analyzing the inequality of the stress distribution across such a beam will allow engineers to predict and design beams with material compositions that can withstand the stress distribution. The original stress distribution is shown in Figure 13; the x-axis denotes the position on the beam from 0 m to 2 m in

m increments, and the y-axis shows the percentage of total stress at each position. It is clear from the distribution that the stress percentages increase monotonically from left to right with a maximum of 10 percent at the right end, namely at 2 m. To locate and quantify the inequality in distribution of stress across the beam, we have applied our nested Hahn decomposition to this distribution.

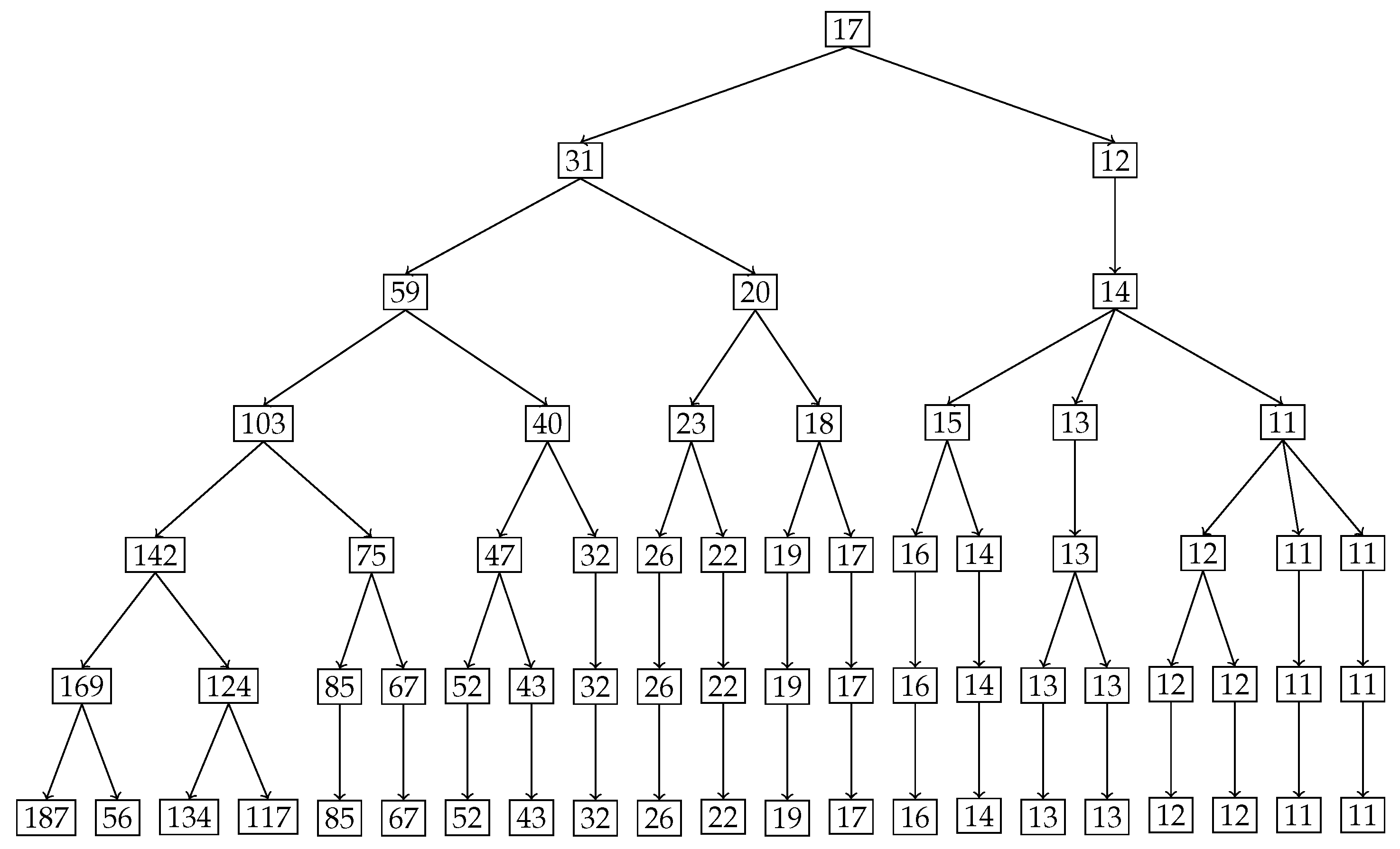

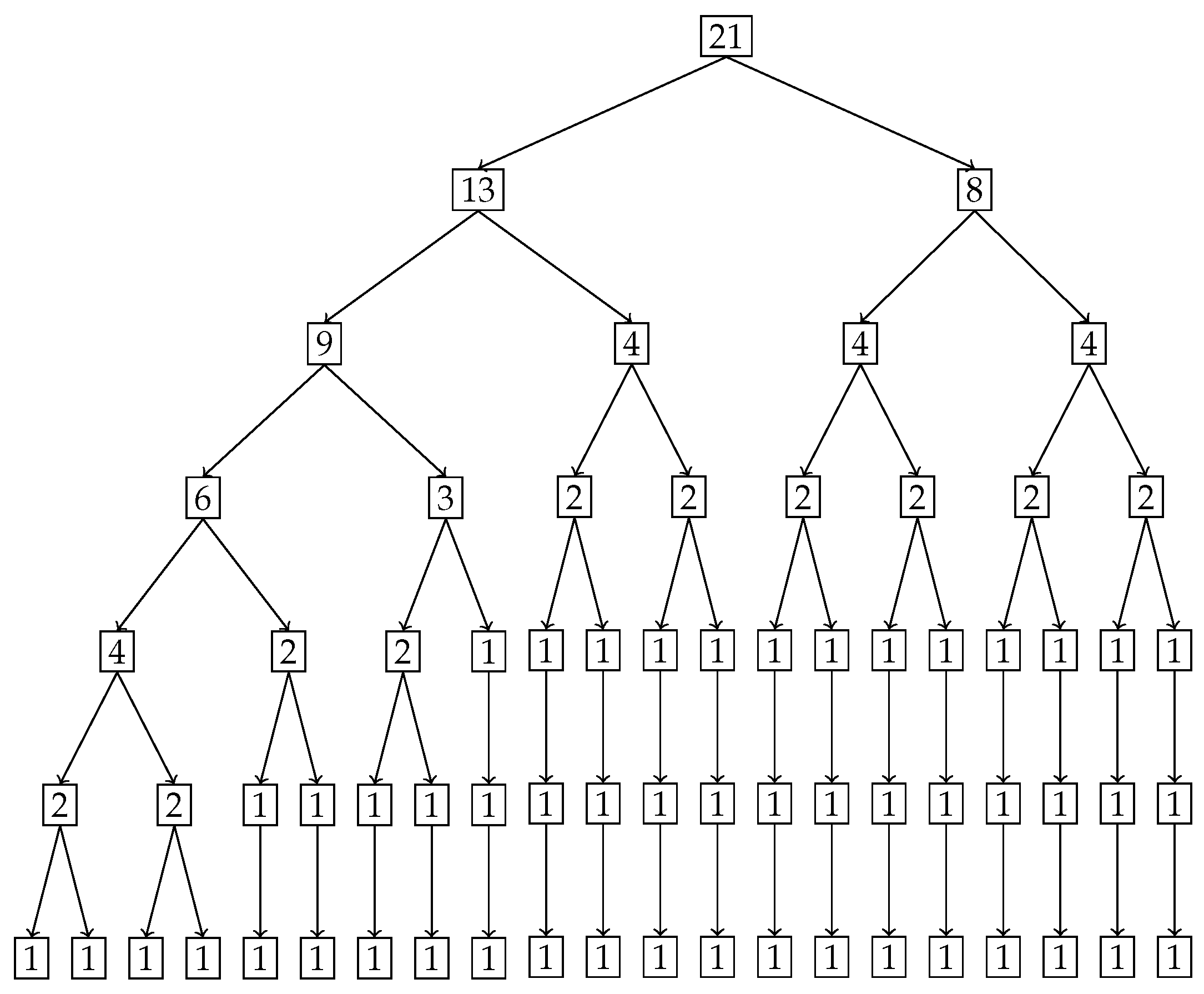

In Figure 14, the degree of uniformity of parts is shown on the tree diagram obtained from the nested Hahn decomposition algorithm. It is clear from the tree diagram that there are 6 levels obtained from the decomposition, not including the entire distribution. We see that the degree of uniformity of the entire distribution is 17. In level 2, the entire beam is split into two parts, i.e.,

and

meters. Looking at the subdivisions of the

meter part across further levels, almost every sub-part has the same degree of uniformity. However, the

meter part sub-divides into sub-parts across further levels with large variations in degree of uniformity. This indicates that the

meter part has a larger concentration of stress within it as seen in the segregation of values in degree of uniformity within its further sub-parts.

Figure 15 shows the number of elements in each sub-part generated by the tree diagram. We note that after level 4, the right half of the tree diagram contains singletons and hence there are no more subdivisions, whereas the left half has a few more levels of subdivisions until singletons appear there as well. Due to the monotonically increasing nature of the original distribution in Figure 13, there are no Z parts in the tree in this example as well, i.e., all splits are binary and not ternary.

As in the disease contraction example, we could discuss all the sub-parts across all levels, but such a discussion would be too tedious. Hence, we choose to discuss levels 2 and 3 from Figure 14 and Figure 15 below:

Level 2: This is the first division of the entire distribution. There are two sub-parts in this level as stated before, namely

meters and

meters. The

meter part (

) is around

times more uniformly distributed than the

meter part (

). Alternatively, the

meter part is

times as heavily concentrated with stress compared to the

part, entropically speaking. The

meter part clearly has the bulk of the stress. Both these parts are shown in

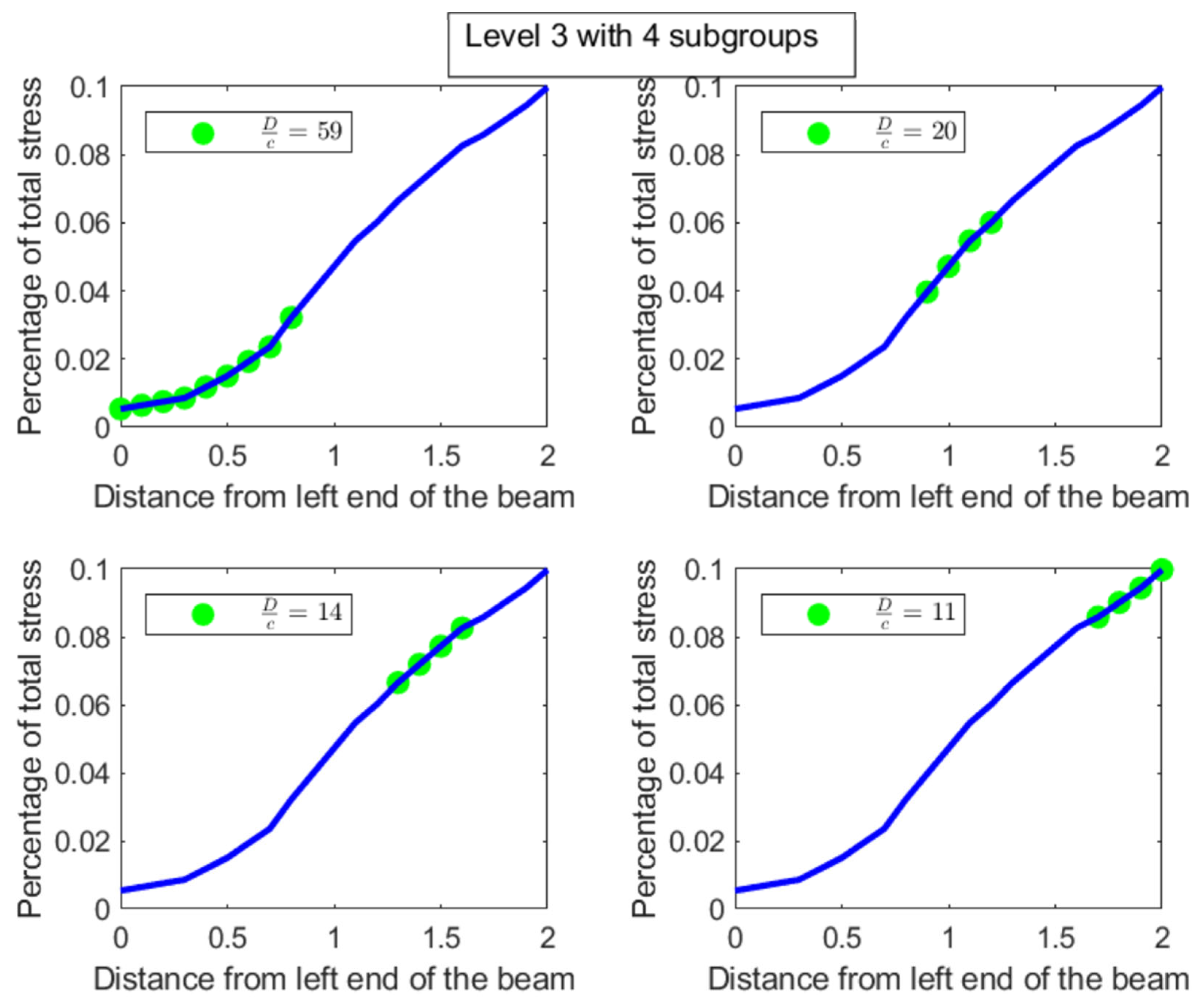

Figure 16, with the green dots denoting the sub-parts overlayed on the blue line which is the original distribution.

Level 3: In level 3, the

meter part from level 2 splits into two sub-parts, namely

meters with a degree of uniformity

and

meters with a degree of uniformity

. Hence, the

meter part is almost 3 times more heavily stressed than the

meter part. These two sub-parts are shown in the top-left and top-right pictures in

Figure 17. Similarly, the

meter part from level 2 splits into two parts, namely

meters with

and

meters with

, showing that these two parts are more or less equally distributed in their stress load. However, it is interesting to note that both these parts,

and

meters, are at least 4 times more concentrated than the

part in their stress load. These two sub-parts in level 3 are shown in the bottom-left and bottom-right pictures of

Figure 17. The material composition of the beam should be designed to withstand such inequalities in stress concentration in the parts described with higher stress loads. Similar analysis and comparison of stress loads can be done at a higher level of granularity by looking at levels 4 through 6 if needed by the designer.

We end by noting that the inequality in the distribution of stress across the 2 m beam has been quantified and located. We reiterate that, as in the previous disease contraction example, the monotonicity of degree of uniformity is maintained across the levels in the tree. Furthermore, we could have started the process with any sub-part of the original distribution (not necessarily contiguous) and not just the entire distribution.

4.5. Null Set Example

In this final example, we explore the possibility of bona fide null sets for the degree of uniformity measure . We first note that the , i.e., the degree of uniformity of the entire distribution is zero with respect to the diversity D. This is because the entire distribution (denoted by the index set I) has diversity equal to D. We are not interested in this type of set because I is actually not a null set per se, since there can be subsets that have higher and lower degrees of uniformity in general. We explore the possibility of subsets of I (i.e., sub-parts of the original distribution) that are null sets, i.e., sets that satisfy the property that all measurable subsets satisfy . Our main goal in this example is to explore the relationships between the probabilities of parts of and N that need to be satisfied to guarantee the existence of a proper subset that is a null set. With this in mind, we set the stage for our exploration as follows:

Let I denote the full set of indices in the given distribution, i.e., .

Let P, Z and N denote the sub-parts in the next level which are more, equally and less uniformly distributed respectively compared to the entire distribution I.

Let D denote the degree of uniformity of the entire distribution. This also means that the degree of uniformity of Z is also equal to D, i.e., . We also have that . Let be such that . Similarly let be such that . We also have that , and by construction.

Let and be the probabilities of and N respectively. Hence .

With the above setup in mind, we first note the following from Theorem 2:

Equations (19) and (20) can be solved simultaneously to give the following relationship between

and

:

We can now try to choose values for and and try to compute and . With those in hand, we can try to create sub-parts and N. To keep things simple, we choose and . This gives .

Discrete null set: We choose 6 indices for

Z, say

and place a probability of

for each of those values of

Z. This means that

, i.e., the degree of uniformity of

Z is the same as for the entire distribution, thereby confirming that

Z is indeed a null set. This gives

as needed. Since we need

, we choose

. This automatically gives us

. And hence

is satisfied automatically. We let

. This means

as needed. Lastly, we let

and

. That automatically gives

and

. Hence

as needed as well. Hence, putting

and

N together we have

.

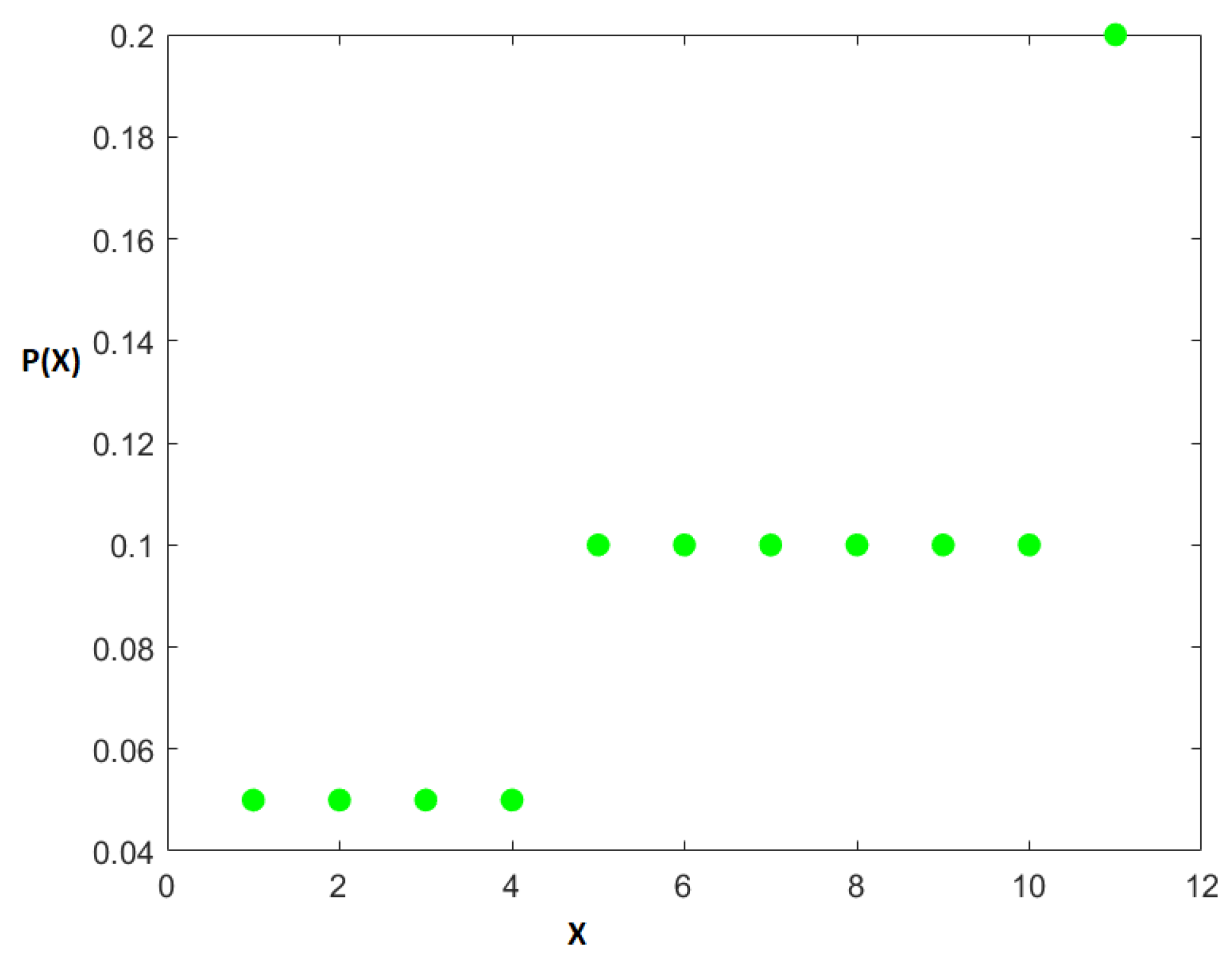

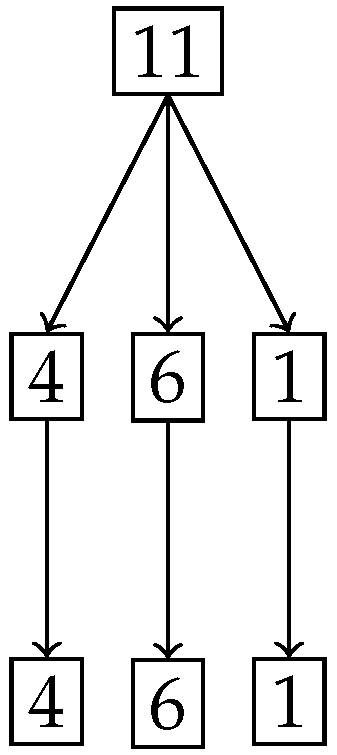

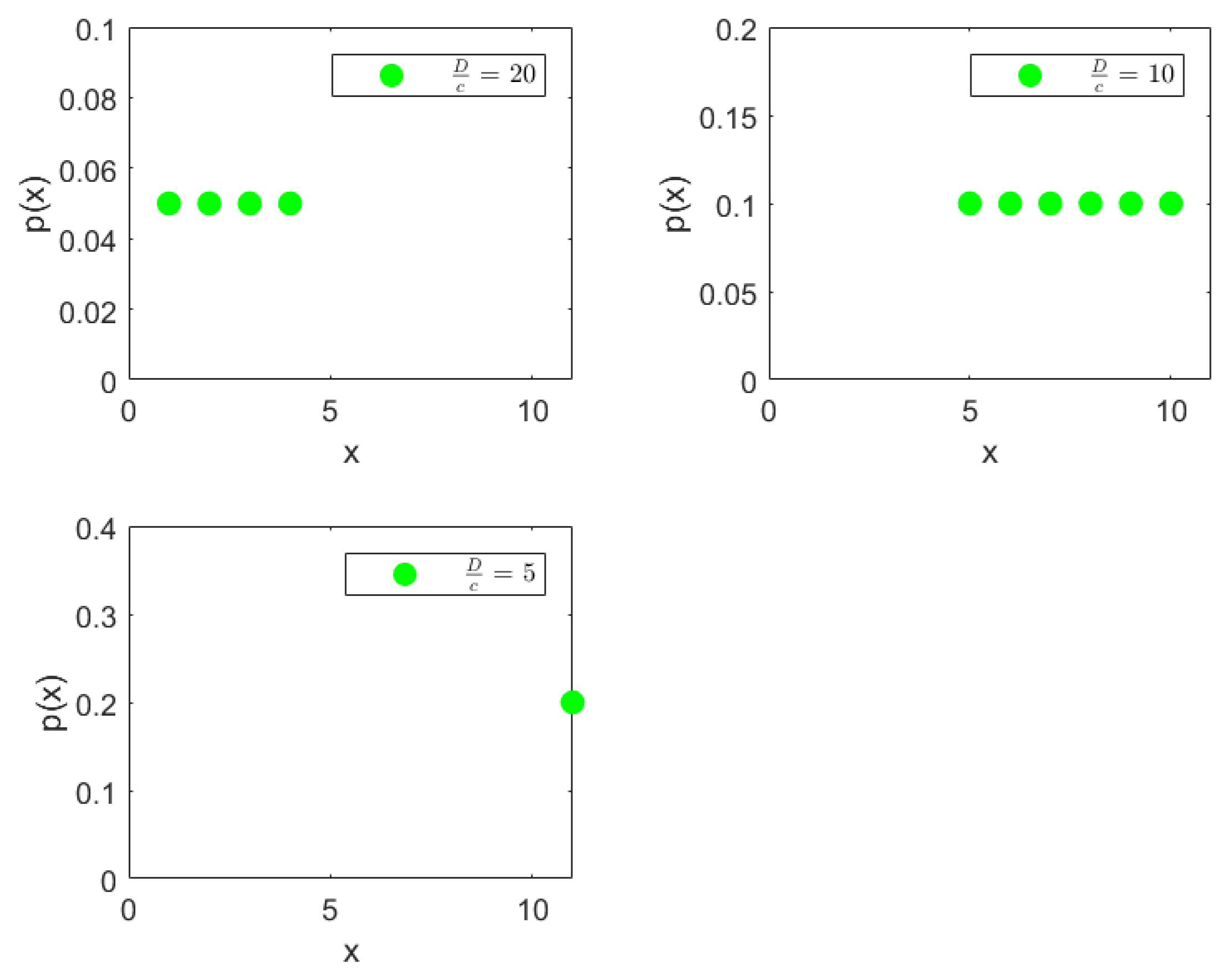

Figure 18 shows this entire distribution with green dots for probabilities. Figure 19 shows the splitting of the degree of uniformity and Figure 20 shows the number of elements in the split. It is clear from Figure 19 that we have a ternary split and the middle portion Z has the same degree of uniformity 10 as the entire distribution.

Finally, Figure 21 shows the three actual parts obtained after the ternary split, shown as green dots for the probabilities, with the degree of uniformity of parts ordered from left to right across rows. The positive part with

is on the top-left, the null set with

is on the top-right and the negative set with

is on the bottom-left of

Figure 21.
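A ternary split of this kind can also be reproduced on a much smaller toy distribution. The sketch below is illustrative only. The six probabilities are hypothetical and are not those of the example above. They were picked so that the Shannon entropy is exactly 2 bits, which makes the assumed degree of uniformity of the whole distribution equal to 4 and matches the reciprocal of one of the probabilities. The degree of uniformity formula, the pointwise comparison rule, and the tolerance used to detect equality are assumptions of this sketch.

```python
import math

def degree_of_uniformity(probs, part):
    """Assumed ratio D_A / c_A = exp(-(1/c_A) * sum_{i in A} p_i ln p_i)."""
    c = sum(probs[i] for i in part)
    return math.exp(-sum(probs[i] * math.log(probs[i]) for i in part) / c)

def split(probs, part, rel_tol=1e-9):
    """Ternary split: compare 1/p_i with the degree of uniformity of the part."""
    u = degree_of_uniformity(probs, part)
    pos, null, neg = [], [], []
    for i in part:
        r = 1.0 / probs[i]
        if math.isclose(r, u, rel_tol=rel_tol):
            null.append(i)
        elif r > u:
            pos.append(i)
        else:
            neg.append(i)
    return pos, null, neg

# Hypothetical distribution with entropy of exactly 2 bits.
probs = [1/2, 1/4, 1/16, 1/16, 1/16, 1/16]
whole = list(range(len(probs)))

pos, null, neg = split(probs, whole)
print("whole", round(degree_of_uniformity(probs, whole), 6))
for name, part in (("P", pos), ("Z", null), ("N", neg)):
    print(name, part, round(degree_of_uniformity(probs, part), 6))
```

Here the index with probability 1/4 forms the null part, the four indices with probability 1/16 form the positive part, and the index with probability 1/2 forms the negative part. The floating-point tolerance is needed because the computed degree of uniformity of the whole distribution may differ from 4 in the last digits.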


Continuous null set: We can easily modify the discrete null set example to a continuous one with a density

as follows:

Just like in the discrete null set example, it can be easily shown that is a null set, is a positive set and is a negative set with respect to the measure . It can also be easily shown that the diversity of the entire sample space is given by , , and just like in the discrete null set example.

5. Conclusions

In this paper, we presented a novel approach for the localization of inequality within a given probability distribution. Our method leverages the Hahn decomposition applied to the newly introduced degree of uniformity measure, enabling the systematic partitioning of the probability space into three key subsets: P, Z, and N. These subsets correspond to regions with higher, equal, and lower degrees of uniformity compared to the original distribution, respectively. By recursively applying the Hahn decomposition, we demonstrated that this process generates a specialized ternary tree structure where the degree of uniformity decreases monotonically from left to right within each level.

The following are the salient points of the paper:

Granular Analysis of Inequality: The recursive decomposition reveals increasingly detailed information about the distribution of inequality. At deeper levels, subsets are refined to pinpoint regions of greater or lesser concentration of probability.

Monotonic Preservation: We showed that the subdivision method ensures monotonicity in the degree of inequality across subsets. This nested ordering guarantees that measurable subsets inherit the inequality hierarchy from their parent sets, preserving consistency and interpretability.

Explicit Null Sets: Unlike the traditional Hahn decomposition theorem, which leaves ambiguity regarding null sets, our approach explicitly identifies these sets Z and isolates them from positive P and negative N regions, enhancing analytical clarity. These null sets Z have the same degree of uniformity as their parent. This is a unique property of the degree of uniformity measure .

Versatility Across Domains: Applications to various fields, including disease distribution analysis and stress distribution in engineering beams, demonstrated the utility of our method. The ability to locate and quantify inequality enables tailored interventions in fields such as health care, manufacturing, and environmental science.

Example Validations: Through detailed examples, we illustrated how the algorithm effectively identifies regions of concentration and uniformity in distributions. The case studies highlighted the method’s robustness in quantifying relative degrees of inequality and isolating key regions for analysis.

Our findings have significant implications across diverse domains. In economics, the ability to pinpoint localized inequality in income distributions can inform targeted policy interventions. In health care, identifying concentrated areas of disease prevalence enables more focused resource allocation and preventive strategies. Moreover, the application to engineering stress distributions demonstrates how material design can be optimized by understanding stress localization.

Future work can extend this framework to multivariate distributions and dynamic settings where probability distributions evolve over time. Another promising avenue involves exploring the implications of the decomposition in machine learning models for feature importance analysis, where understanding the localization of information can enhance interpretability.

Our approach can complement the Gini index by uncovering localized disparities in income or wealth distribution that a single scalar value often conceals. Using recursive Hahn decomposition and the degree of uniformity measure, we can pinpoint where inequality is most pronounced, for example in the middle-income range, offering more targeted insights for policy. This could support more precise interventions, such as tax adjustments or wage reforms, and allows tracking of inequality over time, providing a deeper, more actionable assessment than the Gini index alone.

What sets this paper apart is its introduction of a recursive Hahn decomposition applied to a novel signed measure—the degree of uniformity derived from Shannon entropy—to systematically localize inequality within probability distributions. Unlike traditional approaches that offer only global summaries of inequality, this method constructs a hierarchical, multi-scale partition of the probability space that preserves the ordering of inequality across all levels. It uniquely identifies not only regions of higher and lower concentration but also explicit null sets where uniformity matches that of the whole distribution—resolving ambiguities inherent in classical Hahn decomposition. This framework provides a principled, interpretable, and scalable tool for dissecting the internal structure of inequality, with broad applicability across domains such as epidemiology, engineering, and economics.

In conclusion, the recursive Hahn decomposition of the degree of uniformity measure provides a systematic and insightful tool for dissecting probability distributions. Its ability to partition distributions while preserving the hierarchical order of inequality makes it a valuable framework for both theoretical research and practical applications. By offering a nuanced view of inequality, this approach enables more precise analyses and decision-making across various domains, from economics to health care and engineering. The capacity to track and quantify changes in inequality over time further enhances its utility, making it possible to monitor the effectiveness of interventions or the evolution of inequality in complex systems. As this research reveals new applications, it holds significant potential for advancing equitable and data-driven optimization strategies across multiple fields.