Abstract

In the industrial IoT, it is vital to detect anomalies in multivariate time series, yet it faces numerous challenges, including highly imbalanced datasets, complex and high-dimensional data, and large disparities across variables. Despite the recent surge in proposals for deep learning-based methods, these approaches typically treat the multivariate data at each point in time as a unique token, weakening the personalized features and dependency relationships between variables. As a result, their performance tends to degrade under highly imbalanced conditions, and reconstruction-based models are prone to overfitting abnormal patterns, leading to excessive reconstruction of anomalous inputs. In this paper, we propose ITMMG, an inverted Transformer with a multivariate memory gate. ITMMG employs an inverted token embedding strategy and multivariate memory to capture deep dependencies among variables and the normal patterns of individual variables. The experimental results obtained demonstrate that the proposed method exhibits superior performance in terms of detection accuracy and robustness compared with existing baseline methods across a range of standard time series anomaly detection datasets. This significantly reduces the probability of misclassifying anomalous samples during reconstruction.

1. Introduction

Time series data has extensive applications across a wide range of disciplines, such as financial markets, industrial manufacturing, intelligent transportation, medical monitoring, and environmental monitoring [1]. Anomaly detection is a pivotal component of time series analysis, which pertains to the identification of points or intervals in the time series data that deviate from the norm expected features to detect potential fault risks [2]. With the rapid development of the IoT in industrial environments, an increasing number of sensors are being deployed for machine condition monitoring, generating vast amounts of multivariate time series data.

However, time series anomaly detection is complex and challenging, with diverse data, large scale, and various anomaly types, and there is a serious imbalance between normal and abnormal data. There are many normal samples and few abnormal samples, which often makes rare anomaly signals concealed by a large quantity of normal data; thus, it is difficult to accurately mark all historical data. In order to meet this challenge, unsupervised anomaly detection methods for multivariate time series have gradually become a focal point for research. Anomaly detection methods in the classical tradition, based on statistics and machine learning methods [3] (such as isolated forest [4], support vector machine [5], and local outlier factor [6]), perform well in dealing with low-dimensional and univariate time series. However, when faced with complex and high-dimensional time series, these methods are usually difficult to capture time dependence and variable correlation effectively and are easily affected by data noise.

Therefore, more research turns to using a deep learning model to build a more complex and flexible anomaly detection model. Advances in the field of artificial intelligence technology have precipitated a series of significant developments in the method of deep learning [7]. Reconstruction-based techniques constitute a fundamental paradigm in multivariate time series anomaly detection, leveraging the ability to faithfully reconstruct normal patterns while exhibiting significant reconstruction deviations for anomalous inputs. Because of the strong interpretability of reconfiguration-based methods, some research works have performed anomaly detection tasks in a reconfiguration-based manner. For example, TranAD [8] combines Transformer with Generative Adversarial Networks (GANs) to detect anomalies through a reconfiguration-based encoder–decoder architecture. Anomaly Transformer [9] introduces an innovative Anomaly Attention mechanism, which combines correlation differences and refactoring to distinguish outliers. Wang et al. propose a frequency-oriented VAE framework revisiting anomaly detection from spectral perspectives. Because the time series data often presents an extreme imbalance between typical and atypical samples in the actual scene, the reconstruction method has certain challenges in modeling.

In constructing time series embeddings, the conventional method represents all variables at each time point as a monolithic entity (a single token), which ignores the individualized characteristics and diversity among variables and has shortcomings in accurately capturing the complex associations of different variables [10]. Different points of the same time step often represent the physical quantities measured by different sensors, and these variables may have a unique correlation. However, embedding these measured values directly into a single word containing multivariate data will weaken this correlation and cover up the characteristics of individual variables. In addition, the markers formed by a single time step are highly localized in the receptive field, which not only limits the model’s capability of identifying cross-variables and long-term dependencies, but is also easily affected by misaligned events at time points, which further weakens the effectiveness of the model. Existing reconstruction-based methods are prone to over-generalization [11,12]. Because the encoder may extract abnormal features or the decoder has too strong reconstruction ability, the abnormal input may be accurately reconstructed. Such methods usually assume that the characteristics of all time steps can be represented by a unified reconstruction pattern, ignoring the differences in the relationship between different variables. In fact, each variable often presents a unique normal pattern, and the characteristics of different variables are easily confused, which affects the precision of anomaly recognition.

To tackle the identified challenges, we propose an inverted Transformer framework combined with improved multi-memory gating (ITMMG) for the detection of abnormal patterns in multivariate temporal data. ITMMG uses an inverted token encoding strategy. Specifically, the inverted token strategy encodes the time step data in the window of each variable into a token independently, which makes the model flexibly deal with complex multidimensional time series data. In view of the unique normal pattern of different variables, this paper proposes a new multivariate memory gate, which sets independent memory units for each variable. Each variable learns its normal pattern through its unique memory item. It can better adapt to the variable differences in multivariate time series in order to better deal with the feature confusion caused by the dependent variable differences and more accurately identify instances with notable discrepancies compared to normal patterns during anomaly detection.

While the proposed framework may exhibit reduced sensitivity to gradual trend anomalies—a subtype of collective anomalies characterized by slow-evolving deviations—it maintains robust overall effectiveness on datasets encompassing such patterns. Empirical validation of SWaT and NIPS-TS-SWAN (where trend anomalies challenge detection models) demonstrates competitive F1 scores of 70–90%, indicating practical utility despite theoretical limitations. This balanced performance across diverse anomaly types underscores our model’s viability as a general-purpose detection solution.

The main contributions of this paper can be summarized as follows:

- We propose a multi-memory gated Transformer framework based on inverted embedding. Through fine-grained modeling of complex interactions and dependencies among variables, global correlation learning is strengthened;

- We propose an improved multi-memory unit and self-supervised modeling mechanism, which can capture the specific normal pattern of each variable and adaptively adjust the memory state;

- The most advanced performance is achieved in a large number of experiments on four widely used benchmark datasets.

The remainder of this paper is structured as follows: The Section 2 of this text is dedicated to an examination of the literature surrounding the subject. Section 3 provides a detailed description of the problem and of the proposed ITMMG method. In Section 4, we describe the experimental methodology, summarize the evaluation findings, and conduct an ablation study. The paper’s final conclusions are drawn in Section 5.

2. Related Work

2.1. Detection of Time Series Anomalies

Finding anomalous samples that differ from the majority of data that are frequently linked to problems like equipment failures or structural flaws is the main goal of research in the subject of time series anomaly detection. Univariate anomaly detection focuses on abnormal behaviors of a single parameter. In contrast, multivariate anomalies involve joint abnormal behavior across multiple parameters, which makes the model construction more complicated because of the complex correlation between sequences.

Depending on the data labels, the current anomaly detection algorithms can be classified as either supervised or unsupervised. Because it is difficult to obtain data labels in practical applications, unsupervised methods are more common. Algorithms can also be classified according to classic machine learning and deep learning. The former includes a linear model, distance method and probability density estimation. For example, the Gaussian mixture model and deep neural network are combined in DAGMM [13]. With the development of neural networks, methods based on deep learning also appear in this field, such as employing deep learning models like graph neural networks (GNN [14]). LSTM-VAE [15] is a variational self-encoder model, replacing the traditional feedforward network with LSTM, and Omnianomaly [16] uses random cyclic neural networks, similar to LSTM variational automatic encoder and planar normalized flow, to generate reconstruction probability. Graph Learning with Transformer for Anomaly detection (GTA [17]) learns the link between several IoT sensors by using graph structure. TimeMixer [18] and HDMixer [19] recently demonstrated the efficacy of multiscale mixing in forecasting. In addition, a lot of work uses the method based on generating a confrontation network (GAN) [20,21,22] and the method based on deep reinforcement learning (DRL) [23]. Reconstruction techniques are the foundation of the majority of these deep learning models. Excellent accuracy in detection is achievable due to deep learning’s potent representation learning capability. Researchers have recently suggested a technique that uses self-supervised learning to increase the generalization capacity of unsupervised detection of anomalies. Recent studies have extended these methods to critical infrastructure domains, such as railway operations leveraging edge computing [24] and structural health monitoring systems for bridges [25].

2.2. Embedding and Tokenization of Time Series Data

Embedding technology is fundamentally a mathematical framework for mapping structured objects into low-dimensional continuous vector spaces [26]. Initially achieving breakthroughs in natural language processing, it successfully transformed discrete symbols (e.g., words, phrases) into dense vector representations to capture semantic relationships. With advancements in deep learning, the applicability of embeddings has significantly expanded to continuous data domains, including patch embedding representations in computer vision [27], node and edge embeddings in graph neural networks [28], speech recognition [29], etc.

While established segmentation/tokenization algorithms underpin effective word embeddings for natural language, the analogous partitioning of continuous objects—such as images, audio, and time series data—does not readily present equally simple and effective solutions, making the segmentation of time series particularly worthy of attention. The pursuit of versatile representations for heterogeneous time series data continues to pose significant hurdles, as highlighted in contemporary literature reviews [30]. This persistent challenge substantiates our specialized approach toward anomaly-centric feature learning, diverging from universal representation paradigms.

In multivariate time series modeling, data exhibits dual dimensions: temporal steps and variables. Conventional Transformer architectures typically adopt the temporal tokenization paradigm [31], where all variables at the same timestep are encoded into a single token vector. This representation treats timesteps as fundamental units, modeling temporal dependencies through attention mechanisms. In contrast, iTransformer innovatively proposes variable tokenization [10], treating entire temporal sequences of individual variables as independent tokens to achieve entity-centric embedding construction, explicit inter-variable interaction modeling, and preservation of variable-specific patterns. This paradigm shift demonstrates embedding techniques’ adaptability to domain-specific inductive biases through aligned vectorized representations, providing novel multivariate time series feature-learning perspectives with validated superiority. Unlike T-Rep’s time embeddings [32] that explicitly encode temporal positions, our inverted embedding discards absolute timing to prioritize cross-variable dependencies.

2.3. Transformer-Based Time Series Analysis

Transformer [33] has been successfully deployed in the area of natural language processing for the first time. Thanks to its powerful ability in sequential data processing, Transformer has also achieved considerable success in a variety of domains, including but not limited to audio processing, natural language processing, and computer vision [27,34]. The efficacy of the self-attention mechanism in addressing the long-distance dependence of time series data has led to its extensive utilization in the domain of time series analysis in recent years. Anomaly Transformer [9], TranAD [8], AnomalyBert [35] and so on have extensively explored the application of Transformer in anomaly detection methods. MEMTO [36] uses a Transformer encoder as feature extraction and proposes a module including memory gating to solve the detection error based on the reconstructed model. These modeling methods usually embed multivariate data through time steps. Although this method can capture the dependencies of time dimensions, it is insufficient for modeling the feature association between variables. In contrast, this paper uses an inverted embedding strategy to enhance the capture ability of complex interactions between variables and combines the method of multivariate memory units to capture the personalized features of variables more accurately.

3. Methods

3.1. Definition of the Problem

The dataset is separated into training and test sets based on the unsupervised time series anomaly detection task. The training set contains no anomaly labels. During training, the model learns the typical data patterns solely by leveraging intrinsic data associations, such as temporal dependencies and inter-variable relationships. The test set contains labels to evaluate the anomaly scores inferred by the model and assess its detection performance.

We formalize the core notation for multivariate temporal segmentation and anomaly detection mechanics to ensure mathematical clarity throughout this work.

Definition 1

(Temporal Partitioning). Given a multivariate time series with variables and timesteps, we partition S into contiguous windows , where each window spans exactly T timesteps, such that ,

where denotes the count of distinct timesteps.

Definition 2

(Anomaly Detection Criterion). Given , the anomaly detection task reduces to identifying windows (from Definition 1), where the anomaly score ϕ() exceeds a predefined threshold . The set of anomalous windows is

A time series S is flagged as anomalous if is non-empty.

This window-based formulation enables localized anomaly detection while maintaining computational tractability. Its efficacy stems from capturing local temporal dependencies, though the global context beyond window boundaries may be attenuated. The original multivariate time series data is partitioned into fixed-length, contiguous windows . The collection of these windows {} forms the dataset for both training and test. For each window X, our model aims to learn its underlying normal patterns.

3.2. Model Overview

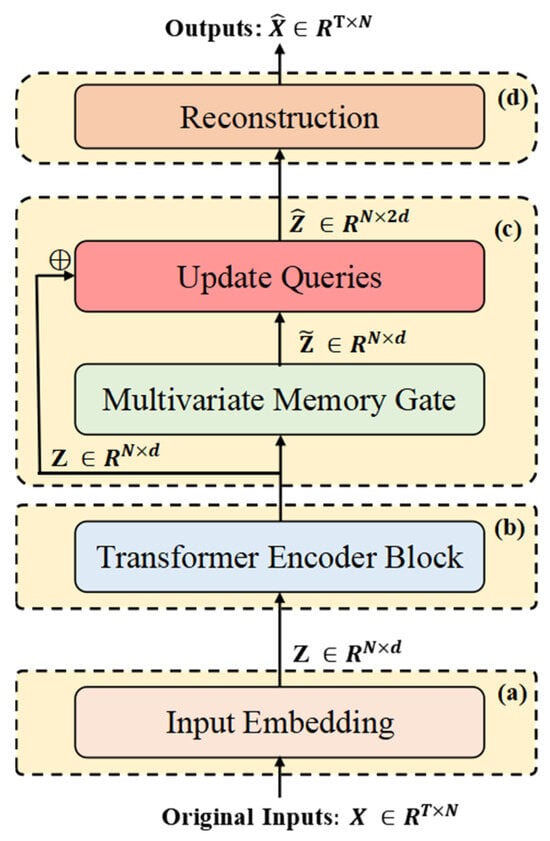

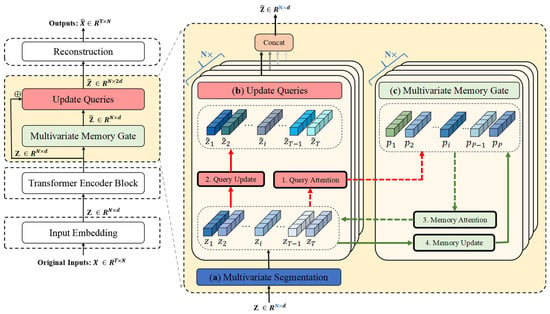

Figure 1 illustrates the overall architecture of ITMMG. The framework comprises four core components: (1) multi-dimensional time series embedding, (2) Transformer encoder, (3) multivariate memory gate, and (4) reconstruction decoder.

Figure 1.

The architecture of ITMMG. (a) Raw data of different variables are used as markers and independently embedded to obtain different representations. (b) Transformer encoder as the backbone for feature extraction. (c) The multivariate memory re-expresses the latent states output by the Transformer encoder in its learned latent state space. (d) The reconstruction module, as a simple decoder, is incapable of effectively reconstructing features of anomalous patterns, thus amplifying reconstruction errors for anomalous data.

Input windows X undergo tokenization to generate variable-oriented representations. This transformation enhances the capture of intra-window dependencies, enabling precise characterization of normal behavioral patterns within individual windows—essential for window-level anomaly detection during inference. The Transformer encoder performs feature extraction, capturing horizontal dependencies between variables and encoding the input data within the time series window into latent vector representations. Within the multi-query gating module, (1) dedicated memory modules are assigned per variable, (2) latent representations are decomposed into asynchronous variable-specific vectors, (3) these vectors update corresponding variable memory modules, (4) and the updated representations fuse with original latent vectors. Final fused vectors enter the reconstruction decoder to recover the original subsequence. Anomaly scores derive jointly from reconstruction error and latent spatial bias.

3.3. Input Embedding

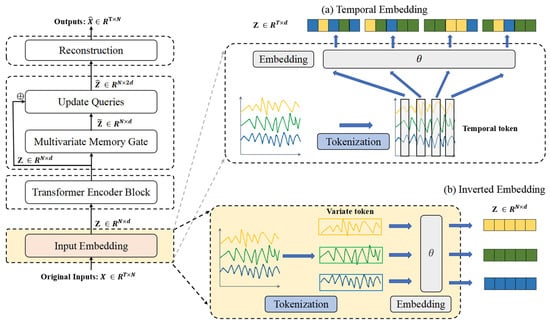

In multivariate time series modeling, data exhibits dual dimensions: temporal steps and variables. This work employs an inverted embedding strategy (visualized in Figure 2b), where feature dimensions—rather than temporal steps—serve as the primary embedding axis.

Figure 2.

The input embedding. (a) Temporal embedding: Adopts temporal tokenization (aggregating all variables per timestep into a single token) to construct embeddings, primarily excelling in directly modeling temporal dependencies. (b) Inverted embedding: Utilizes variable tokenization (treating each variable’s full time series as an independent token) with dimension swapping to formulate embeddings, whose key strength lies in explicitly capturing cross-variable interactions.

This approach projects multivariate observations at each timestep into a latent space , where d denotes embedding dimensionality. This technique fundamentally diverges from sequential tokenization by explicitly prioritizing cross-variable dependency modeling over temporal pattern extraction. Through dimension-swapping operations, it transforms each variable’s full time series into an independent latent representation, thereby directly capturing synchronous interactions between channels. Consequently, it circumvents the computational redundancy of temporal-step embeddings while enhancing sensitivity to synchronous anomalies across channels—a critical advantage for high-dimensional industrial sensors like the dataset SWaT’s 51-variable system.

3.4. Feature Extraction Encoder

The Transformer encoder is used to perform deep feature extraction on the token set of time series, which is dominated by variables. The main goal of this component is to reveal the horizontal relationships and dynamic features between multi-dimensional time series variables. This enhances the model’s capacity to capture the complex interactions between multiple variables.

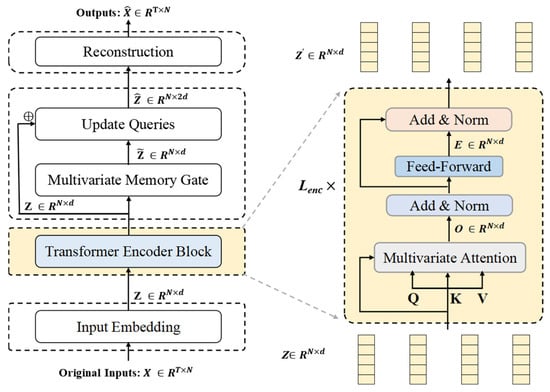

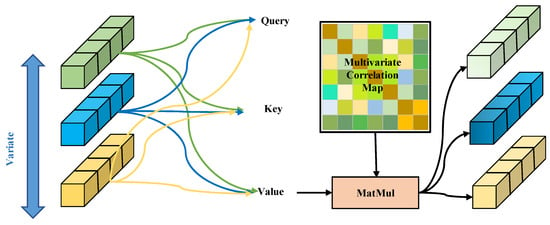

The structural composition of the Transformer encoder block is presented in Figure 3. A schematic diagram illustrating the multi-head attention mechanism under multivariate attention circumstances is provided in Figure 4. Note that the variable z in Figure 4 has dimensions N × d, indicating that each input vector represents an embedding of a specific variable rather than a temporal embedding. The inverted embedding applies a linear transformation to each subsequence: Z = , where , is the projection matrix, output preserves original timesteps, and no activation function or bias term is applied.

Figure 3.

The structure of the Transformer encoder block. The encoder employs Lenc stacked Transformer layers, with the internal structure of each layer illustrated in the right panel of the figure.

Figure 4.

Multivariate attention. Multiple variables are embedded using multi-head self-attention to capture their complex interdependencies.

3.4.1. Encoder Structure

The input data is embedded into a token set dominated by variables, forming the initial embedding representation , where is the embedding dimension for each variable. This embedding is then passed through the Transformer encoder consisting of Lenc layers. Each layer contains multi-head self-attention, layer normalization, multi-head self-attention, and a feed-forward neural network.

In the multi-head self-attention mechanism, each head performs a linear projection to obtain , , and , where represents the projection dimension for each head, where . The attention outputs are computed using scaled dot-product attention:

The encoder module also includes layer normalization and a feed-forward network with residual connections. It generates a representation for each variable . In each layer of the encoder, due to the inverted sequence dimension of the input, each token represents the sequence of a variable along the time dimension. This allows the multi-head self-attention to effectively analyze the dependencies between variables and generate new representations for each variable. After the multi-head attention mechanism, the newly generated representations undergo nonlinear mapping and feature compression through the feed-forward network, enabling the model to extract complex representations from the original features to describe the time series. This process aids in identifying complex anomaly patterns that are difficult for linear models to capture.

3.4.2. Reconstruction Decoder

Two fully connected layers make up the decoder. This design aims to balance the capabilities of the encoder and decoder, ensuring that the output accurately reflects the encoder’s processing results. This approach prevents an overly powerful decoder from accurately reconstructing random noise devoid of input data during the reconstruction process, which could introduce biases into the model during anomaly detection [36].

3.5. Multivariate Memory Module

To address the issues of overgeneralization in reconstruction-based models and variability among different variables in multivariate time series, where each variable may display specific normal pattern characteristics, we propose a solution. The differences in feature distribution and patterns across variables make it challenging for the model to learn and adapt to the distinct characteristics of each variable. Sharing a single memory module may cause the model to overlook certain variable patterns or confuse the features of different variables. Therefore, we introduce a novel multivariate gating module, which allows for the allocation of independent memory modules for each variable. The multivariate memory update requires calculating similarity across all memory units, which slightly reduces inference speed. This design allows the model to more effectively handle the variability among variables in multivariate time series.

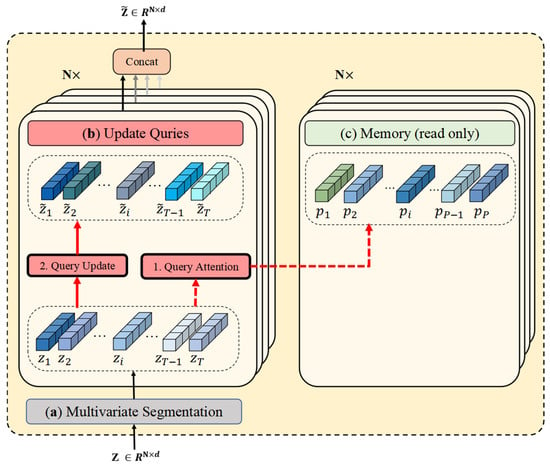

As delineated in Figure 5, the memory update via the multivariate gate is exclusively executed during the unsupervised learning phase. During inference, however, the memory module operates in the read-only mode solely for query processing. Figure 6 depicts the architecture of the multivariate memory module under this inference paradigm.

Figure 5.

Multivariate memory module during training. (a) Multivariate segmentation: Each variable is assigned a dedicated memory module, thus preserving its unique pattern characteristics without cross-variable interference. (b) The Update Queries operation reconstructs the input embedding (denoted as query) by computing attention scores between the query and memory vectors, followed by weighted aggregation. (c) The multivariate memory gate leverages all queries to refresh memory vectors through attention-based weighting.

Figure 6.

Multivariate memory module in inference mode. (a) Multivariate segmentation. (b) The Update Queries operation. (c) The multivariate memory gate (read only).

3.5.1. Multivariate Memory Update

The memory units are denoted as , where indexes the memory unit and indexes the variable. Additionally, denotes the number of memory units per variable. The memory units are trained during the model training process to hold the typical data patterns for various variables. We train the model’s memory units such that each variable’s memory unit contains prototype features corresponding to the latent vectors of normal timesteps. The memory items for different variables are updated incrementally. The dot product is computed between each memory unit and the latent representation, followed by normalization using the Softmax function. Regarding the -th variable’s data, the formula is as follows:

where represents the temperature hyperparameter. In the memory module, we utilize an update gate to flexibly train each memory unit, making it suitable for diverse normal patterns. Based on the data, the model may then adaptively learn the update magnitude of each memory unit. Updating of the memory module unit occurs solely during training.

The following is the formula for the memory update:

where and represent the projections, and and denote the sigmoid and element-wise multiplication.

3.5.2. Latent Vector Update

In the latent vector update phase, an updated latent vector is generated for each variable. The query attention weight is defined and computed by applying the function to the dot product, as shown below:

Next, the retrieved memory item is weighted by , and the corresponding latent vector is obtained.

The retrieved latent vector from the memory unit for each variable is concatenated with the original latent vector, forming the updated final latent vector . This updated latent vector serves as the input to the decoder. Since each variable memory unit continuously provides normal features, it results in significant bias in the abnormal data during reconstruction.

3.5.3. Loss Function

In the training phase, we adopt reconstruction loss as the primary optimization objective and utilize entropy loss as an auxiliary regularization term to achieve sparsity in memory attention. The following is the definition of the total loss function, where is the hyperparameter to maintain balance.

The reconstruction loss, which is defined as follows, guarantees that the model can reconstruct the input data precisely:

where denotes the input data and denotes the reconstructed data. By minimizing , the model learns to achieve low reconstruction error for normal samples, while significantly increasing the reconstruction error for anomalous data, thus assisting in locating outlier samples in the dataset.

To enhance the attention to the memory units allocated for each variable, we introduce entropy loss regularization. This regularization term encourages the attention weights to exhibit a sparse distribution. The entropy loss is defined as

The complete training process is shown in Algorithm 1.

| Algorithm 1 ITMMG Training Algorithm |

| Required: Training data ; ITMMG model; number of epochs; block number Lenc. Train encoder after first phase -) 1 04: while n ≤ epoch do: = Embedding(X) = 1 to Lenc: )) )) 09: End for 10: for each variable in do: 11: Compute attention scores 12: Update memory p 13: End for 14: 15: 16: 17: 18: 19: 20: n + 1 21: End while |

3.6. Anomaly Criterion

For the anomaly scoring criteria, we also adopt a dual standard based on reconstruction deviation and latent space distance, combined with threshold setting.

Anomalous data points typically exhibit a larger distance in the latent space compared to normal data points, as each memory unit contains a prototype of normal data patterns. The latent space distance is represented as the sum of the distances between each variable and its corresponding memory unit. The final anomaly score is defined as follows:

where is the element-wise multiplication. , the anomaly scoring function, scores the input sequence at each window . Anomalous data points receive a higher score compared to typical data points.

The entire testing process is shown in Algorithm 2.

| Algorithm 2 ITMMG Testing Algorithm |

| Required: Training data ; trained model parameters; block number Lenc. 01: = Embedding(X) 02: = 1 to Lenc: 03: Self-attention layer is applied on variate tokens. 04: )) 05: )) 06: End for 07: do: 08: Compute attention scores between 09: 10: End for 11: 12: 13: (X) 14: ) |

4. Experiment

4.1. Benchmark Dataset

We conducted experiments on four publicly available real-world datasets. A description of these datasets is provided below, and their characteristics are summarized in Table 1.

Table 1.

Dataset information.

- PSM (Pooled Server Metrics [37]): The PSM dataset, which comes from eBay server computers, is openly accessible. It has 25 characteristics that describe server machine metrics, including memory and CPU use.

- SMAP (Soil Moisture Active Passive [38]): The SMAP dataset is used by NASA and contains soil samples and telemetry information obtained from the Mars rover. It consists of 25 features, primarily used for studying the spatiotemporal variations of soil moisture.

- SWaT (Smart Water Treatment [39]): This dataset is collected from a real-world water treatment plant and contains sensor data with 51 dimensions, collected from continuously operating critical infrastructure systems.

- NIPS-TS-SWAN (Server Computer): This is a publicly available comprehensive multivariate time series benchmark dataset, derived from the solar photospheric vector magnetogram images extracted from the Spaceweather HMI Active Region Patch series [40,41].

4.2. Evaluation Metrics

We use precision (P), recall (R), and F1 score (F1) to assess the model’s anomaly detection capabilities. In the following text, they are denoted as P, R, and F1, respectively. TP denotes true positives, FP denotes false positives, TN denotes true negatives, and FN denotes false negatives.

Higher values of these metrics indicate better detection performance by the model. The F1 score offers a more impartial assessment. Therefore, the F1 score is considered a more comprehensive metric.

Since anomaly samples are typically in the form of continuous anomalous segments, we adopt a point adjustment method. If a single timestep is identified as anomalous, it is presumed that all associated timesteps within the corresponding anomalous interval are likewise recognized accurately [42].

4.3. Implementation Details

Our experimental setup is summarized as follows. We employed non-overlapping sliding windows to extract subsequences. The sliding window size was fixed at 64, with 8 attention heads, 3 encoder layers, an embedding dimension of 512, and 10 memory units per variable. The temperature hyperparameter was set to 0.1, and the loss balancing coefficient was set to 0.01 to balance the two components of the loss function. All experiments were implemented using PyTorch 2.1.0 and optimized with the Adam [43] optimizer. The batch size was set to 32, the initial learning rate was configured to 10−4, and the experiments were conducted on a single NVIDIA GeForce RTX 3060Ti GPU (Colorful Technology Co., Ltd., Shenzhen, Guangdong, China).

4.4. Detection Results

Our model was compared extensively with 10 baseline methods, including classical methods: OC-SVM [44] and Isolation Forest [4]; density-based model: DAGMM [13]; clustering-based methods: ITAD [45] and THOC [46]; reconstruction-based models: LSTM-VAE [15], Anomaly Transformer [9], OmniAnomaly [16], BeatGAN [22]; autoregressive models: LSTM and VAR; and contrastive representation learning methods: DCdetector [47]. The evaluation of multivariate time series anomaly detection is shown in Table 2. Overall, ITMMG demonstrates performance comparable with that of SOTA methods, confirming its effectiveness.

Table 2.

Overall results for real-world multivariate datasets. Precision (P), Recall (R), and F1 scores are used as evaluation metrics. All results are in %. For these three metrics, higher values indicate better performance, and the best ones are in bold.

Additionally, we assessed the NIPS-TS-SWAN dataset from Table 1, which, compared to the other three datasets, is more challenging due to a higher variety of anomaly types. As shown in Table 3, ITMMG’s performance metrics exceed the DCdetector method.

Table 3.

Overall results for NIPS-TS-SWAN. All results are in %, and the best ones are in bold.

4.5. Ablation Studies

We conducted a series of ablation experiments to evaluate the effectiveness of the key components of the proposed model. By progressively removing or replacing different modules, we comprehensively evaluated the impact of each component on performance, as shown in Table 4.

Table 4.

Performance results of the ablation experiment. The best F1 score is in bold.

When the Inverted Tokenization module was removed (w/o Inverted Tokenization), the model adopted a timestep-driven embedding method, which failed to capture the fine-grained relationships between variables using the multivariate attention mechanism. Similarly, when the multi-memory module was removed (w/o multi-memory), the model was unable to establish independent multi-memory gates for each variable. Instead, it used a shared global memory unit, causing the specific features of different variables to become mixed. Therefore, performance significantly decreased. In contrast, our model consistently achieved the highest performance across all datasets, demonstrating that the proposed approach effectively captures both temporal dependencies in time series and inter-variable dependencies. The model’s capacity to identify anomalous characteristics is improved by the multivariate memory gates for multivariate time series, which efficiently recognize the typical characteristics of several variables.

In addition, we explored the effectiveness of the model across various anomaly evaluation standards, including standalone reconstruction performance, standalone latent space deviation, and different combination methods for reconstruction performance and latent space deviation: multiplication and multiplication.

As shown in Table 5, the multiplicative combination achieves the highest overall performance, outperforming other fusion strategies across all evaluation metrics, as it enables better collaboration between reconstruction performance and latent space deviation. Numbers in bold represent the best-performing values.

Table 5.

Ablation of criterion definition.

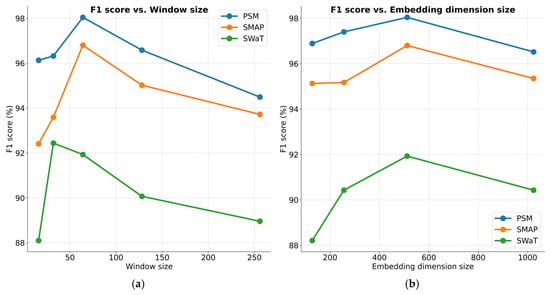

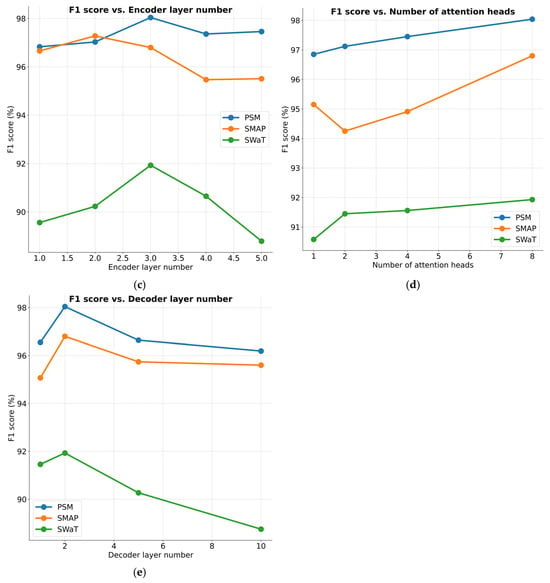

4.6. Parameter Sensitivity

This section further explores how critical hyperparameter configurations influence the performance of ITMMG. These hyperparameters include window size , embedding dimension size , number of encoder layers, number of attention heads, and number of decoder layers. We conducted hyperparameter analysis experiments on three datasets as follows.

Figure 7a presents a comparative analysis of model effectiveness under varying window lengths. When the window size is smaller than 32, performance significantly drops. A small window size provides limited contextual time information, but within the range of window sizes from 32 to 256, the model shows robustness, with performance fluctuations of less than 2.31%. Figure 7b shows the performance results for different embedding dimension sizes. Overall, the effectiveness of the proposed method remains consistent across varying conditions. However, for the SWaT dataset, performance drops noticeably when the embedding dimension is reduced to 128. A very small embedding dimension may result in insufficient extraction of window timestep information, affecting the learning of representations in conjunction with the multivariate memory units. In the implementation, we used a window size of 64 to balance memory consumption and computational efficiency. Figure 7c,d illustrate the performance results across varying numbers of encoder layers and attention heads, respectively. Overall, the model’s performance remains stable. Figure 7e highlights the impact of different decoder layer numbers on performance. A smaller number of decoder layers leads to performance degradation due to insufficient reconstruction capability for input data, while an excessive number of layers increases the risk of over-generalization and reduces computational efficiency.

Figure 7.

Parameter sensitivity studies of main hyper-parameters.

5. Conclusions

This paper proposes a multivariate time series anomaly detection method based on an inverted Transformer framework and a multi-memory gating mechanism. By employing an inverted token encoding strategy, the method encodes each variable in the time series independently, enabling finer-grained feature representations. This architectural choice enhances the model’s ability to model intricate variable-level relationships with greater precision. Meanwhile, the multi-memory gating mechanism assigns independent memory units to each variable, adaptively extracting normal patterns for different variables. This strengthens the model’s capability to detect outlier samples effectively. Empirical evaluations conducted on four publicly available datasets reveal that ITMMG surpasses many advanced methods in both detection precision and model robustness. Ablation experiments confirm the contribution of each individual module to the overall system performance.

Future research will focus on assessing the potential of ITMMG across various application domains, as well as enhancing its computational performance.

Author Contributions

Conceptualization, L.B. and Y.M.; methodology, W.L. and C.X.; software, Y.M. and W.L.; validation, E.Z. and J.W.; investigation, W.L. and C.X.; data curation, Y.M.; writing—original draft preparation, W.L.; funding acquisition, L.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the open project fund of the Center of National Railway Intelligent Transportation System Engineering and Technology (RITS2023KF04), the key project fund of China Academy of Railway Sciences Corporation Limited (2023YJ363).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

Author Yuan Ma is employed by Academy of Railway Sciences Corporation Limited. This study was funded by China Academy of Railway Sciences Corporation Limited. Yuan Ma contributed to this work in his capacity as a researcher specializing in time-series anomaly detection and led the preliminary research phase. The remaining authors declare that the research was conducted in the absence of any other commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Gong, D.; Liu, L.; Le, V.; Saha, B.; Mansour, M.R.; Venkatesh, S.; Hengel, A.V.D. Memorizing normality to detect anomaly: Memory-augmented deep autoencoder for unsupervised anomaly detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1705–1714. [Google Scholar]

- Park, H.; Noh, J.; Ham, B. Learning memory-guided normality for anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14372–14381. [Google Scholar]

- Chen, X.; Deng, L.; Huang, F.; Zhang, C.; Zhang, Z.; Zhao, Y.; Zheng, K. Daemon: Unsupervised anomaly detection and interpretation for multivariate time series. In Proceedings of the 2021 IEEE 37th International Conference on Data Engineering (ICDE), Chiang Mai, Thailand, 13–16 April 2021. [Google Scholar]

- Li, D.; Chen, D.; Jin, B.; Shi, L.; Goh, J.; Ng, S.-K. MAD-GAN: Multivariate anomaly detection for time series data with generative adversarial networks. In Proceedings of the International Conference on Artificial Neural Networks, Munich, Germany, 12–15 September 2019; Springer International Publishing: Cham, Switzerland, 2019. [Google Scholar]

- Zhou, B.; Liu, S.; Hooi, B.; Cheng, X.; Ye, J. Beatgan: Anomalous rhythm detection using adversarially generated time series. In Proceedings of the IJCAI, Macao, China, 10–16 August 2019. [Google Scholar]

- Bao, H.; Dong, L.; Piao, S.; Ye, Q.; Wei, F. BEiT v2: Masked Image Modeling with Vector-Quantized Visual Tokenizers. arXiv 2022, arXiv:2208.09210. [Google Scholar]

- Abdulaal, A.; Liu, Z.; Lancewicki, T. Practical approach to asynchronous multivariate time series anomaly detection and localization. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery Data Mining, Singapore, 14–18 August 2021. [Google Scholar]

- Hundman, K.; Constantinou, V.; Laporte, C.; Colwell, I.; Soderstrom, T. Detecting spacecraft anomalies using lstms and nonparametric dynamic thresholding. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery Data Mining, London, UK, 19–23 August 2018. [Google Scholar]

- Mathur, A.P.; Tippenhauer, N.O. SWaT: A water treatment testbed for research and training on ICS security. In Proceedings of the 2016 International Workshop on Cyber-Physical Systems for Smart Water Networks (CySWater), Vienna, Austria, 11 April 2016. [Google Scholar]

- Angryk, R.; Martens, P.; Aydin, B.; Kempton, D.; Mahajan, S.; Basodi, S.; Ahmadzadeh, A.; Cai, X.; Filali Boubrahimi, S.; Hamdi, S.M.; et al. SWAN-SF. Available online: https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/EBCFKM (accessed on 20 May 2025).

- Lai, K.H.; Zha, D.; Xu, J.; Zhao, Y.; Wang, G.; Hu, X. Revisiting time series outlier detection: Definitions and benchmarks. In Proceedings of the Thirty-Fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (Round 1), Virtual Event, 7–10 December 2021. [Google Scholar]

- Xu, H.; Chen, W.; Zhao, N.; Li, Z.; Bu, J.; Li, Z. Unsupervised anomaly detection via variational auto-encoder for seasonal kpis in web applications. In Proceedings of the 2018 World Wide Web Conference, Lyon, France, 23–27 April 2018. [Google Scholar]

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference for Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Shin, Y.; Lee, S.; Tariq, S.; Lee, M.S.; Jung, O.; Chung, D.; Woo, S.S. Itad: Integrative tensor-based anomaly detection system for reducing false positives of satellite systems. In Proceedings of the 29th ACM International Conference on Information Knowledge Management, Virtual Event, 19–23 October 2020. [Google Scholar]

- Shen, L.; Li, Z.; Kwok, J. Timeseries anomaly detection using temporal hierarchical one-class network. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 6–14 December 2020. [Google Scholar]

- Manevitz, L.M.; Yousef, M. One-class SVMs for document classification. J. Mach. Learn. Res. 2001, 2, 139–154. [Google Scholar]

- Borghesi, M.; Molan, M.; Milano, A.; Bartolini, A. Anomaly detection and anticipation in high performance computing systems. In Proceedings of the 2021 International Conference on High Performance Computing Systems, New York, NY, USA, 20–22 April 2021. [Google Scholar]

- Fraikin, A.; Bennetot, A.; Allassonnière, S. T-Rep: Representation Learning for Time Series using Time-Embeddings. arXiv 2024, arXiv:2310.04486. [Google Scholar]

- Song, J.; Kim, K.; Oh, J.; Cho, S. Memto: Memory-guided transformer for multivariate time series anomaly detection. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023. [Google Scholar]

- Yang, Y.; Zhang, C.; Zhou, T.; Wen, Q.; Sun, L. Dcdetector: Dual attention contrastive representation learning for time series anomaly detection. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Singapore, 28 August–1 September 2023. [Google Scholar]

- Cook, A.A.; Mısırlı, G.; Fan, Z. Anomaly detection for IoT time-series data: A survey. IEEE Internet Things J. 2019, 7, 6481–6494. [Google Scholar] [CrossRef]

- Schmidl, S.; Wenig, P.; Papenbrock, T. Anomaly detection in time series: A comprehensive evaluation. Proc. VLDB Endow. 2022, 15, 1779–1797. [Google Scholar] [CrossRef]

- Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation forest. In Proceedings of the of the 8th IEEE International Conference on Data Mining, Eighth IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008. [Google Scholar]

- Schölkopf, B.; Platt, J.C.; Shawe-Taylor, J.; Smola, A.J.; Williamson, R.C. Estimating the support of a high-dimensional distribution. Neural Comput. 2001, 13, 1443–1471. [Google Scholar] [CrossRef] [PubMed]

- Breunig, M.M.; Kriegel, H.P.; Ng, R.T.; Sander, J. LOF: Identifying density-based local outliers. In Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data, Dallas, TX, USA, 16–18 May 2000. [Google Scholar]

- Canizo, M.; Triguero, I.; Conde, A.; Onieva, E. Multi-head CNN–RNN for multi-time series anomaly detection: An industrial case study. Neurocomputing 2019, 363, 246–260. [Google Scholar] [CrossRef]

- Park, D.; Hoshi, Y.; Kemp, C.C. A multimodal anomaly detector for robot-assisted feeding using an lstm-based variational autoencoder. IEEE Robot. Autom. Lett. 2018, 3, 1544–1551. [Google Scholar] [CrossRef]

- Tuli, S.; Casale, G.; Jennings, N.R. Tranad: Deep transformer networks for anomaly detection in multivariate time series data. arXiv 2022, arXiv:2201.07284. [Google Scholar] [CrossRef]

- Xu, J. Anomaly transformer: Time series anomaly detection with association discrepancy. arXiv 2021, arXiv:2110.02642. [Google Scholar]

- Jeong, Y.; Yang, E.; Ryu, J.H.; Park, I.; Kang, M. Anomalybert: Self-supervised transformer for time series anomaly detection using data degradation scheme. arXiv 2023, arXiv:2305.04468. [Google Scholar]

- Liu, Y.; Hu, T.G.; Zhao, H.R.; Wu, H.X.; Wang, S.Y.; Ma, L.T.; Long, M.S. iTransformer: Inverted Transformers Are Effective for Time Series Forecasting. In Proceedings of the 12nd International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024. [Google Scholar]

- Vaswani, A. Attention is all you need. In Proceedings of the Advances in Neural Information Systems, Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Zong, B.; Song, Q.; Min, M.R.; Cheng, W.; Lumezanu, C.; Cho, D.; Chen, H. Deep autoencoding gaussian mixture model for unsupervised anomaly detection. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018. [Google Scholar]

- Deng, A.; Hooi, B. Graph neural network-based anomaly detection in multivariate time series. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021. [Google Scholar]

- Su, Y.; Zhao, Y.; Niu, C.; Liu, R.; Sun, W.; Pei, D. Robust anomaly detection for multivariate time series through stochastic recurrent neural network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery Data Mining, Anchorage, AK, USA, 4–8 August 2019. [Google Scholar]

- Chen, Z.; Chen, D.; Zhang, X.; Yuan, Z.; Cheng, X. Learning graph structures with transformer for multivariate time-series anomaly detection in IoT. IEEE Internet Things J. 2021, 9, 9179–9189. [Google Scholar] [CrossRef]

- Wang, S.Y.; Wu, H.X.; Shi, X.M.; Hu, T.G.; Luo, H.K.; Ma, L.T.; Zhang, J.Y.; Zhou, J. Timemixer: Decomposable multiscale mixing for time series forecasting. In Proceedings of the Twelfth International Conference on Learning Representations (ICLR), Vienna Austria, 7–11 May 2024. [Google Scholar]

- Huang, Q.; Shen, L.; Zhang, R.; Cheng, J.; Ding, S.; Zhou, Z.; Wang, Y. HDMixer: Hierarchical dependency with extendable patch for multivariate time series forecasting. In Proceedings of the 38th AAAI Conference on Artificial Intelligence (AAAI), Vancouver, BC, Canada, 20–27 February 2024. [Google Scholar]

- Yu, M.; Sun, S. Policy-based reinforcement learning for time series anomaly detection. Eng. Appl. Artif. Intell. 2020, 95, 103919. [Google Scholar] [CrossRef]

- Ping, C.X. Application Research of Edge Computing Technology Oriented to 5G in Railway Marshalling Stations. Proc. Railw. Signal. Commun. 2023, 59, 7–14. [Google Scholar]

- Fang, S.S. Research on Structural Strain Threshold Setting of Bridge Health Monitoring System Based on Deep Learning. Master’s Thesis, China Three Gorges University, Yichang, China, May 2023. [Google Scholar]

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed Representations of Words and Phrases and Their Compositionality. In Proceedings of the 26th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013; Curran Associates Inc.: Red Hook, NY, USA; pp. 3111–3119. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 3–7 May 2021. [Google Scholar]

- Hamilton, W.L. Graph Representation Learning; Morgan & Claypool Publishers: San Rafael, CA, USA, 2020. [Google Scholar]

- Yeh, C.-F.; Mahadeokar, J.; Kalgaonkar, K.; Wang, Y.; Le, D.; Jain, M.; Schubert, K.; Fuegen, C.; Michael; Seltzer, L. Transformer-transducer: End-to-end speech recognition with self-attention. arXiv 2019, arXiv:1910.12977. [Google Scholar]

- Trirat, P.; Shin, Y.; Kang, J.; Nam, Y.; Na, J.; Bae, M.; Kim, J.; Kim, B.; Lee, J.-G. Universal Time-Series Representation Learning: A Survey. arXiv 2024, arXiv:2401.03717. [Google Scholar] [CrossRef]

- Li, S.; Jin, X.; Xuan, Y.; Zhou, X.; Chen, W.; Wang, Y.X.; Yan, X. Enhancing the locality and breaking the memory bottleneck of transformer on time series forecasting. Adv. Neural Inf. Process. Syst. 2019, 32, 5243–5253. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).