The following subsections first formally define this hierarchical network structure, then introduce the two core analytical methods that operate upon it, and finally integrate everything into a complete, step-by-step procedural framework.

4.1. The Multi-Level Hierarchical BN Structure

To systematically translate a multicriteria decision-making problem into a probabilistic inference model, we first formally define the multi-level hierarchical Bayesian Network structure that serves as the backbone of our framework.

This architecture explicitly organizes the evaluation system into three distinct levels, as shown in Figure 10. At the apex is the Goal Layer, consisting of a single leaf node, $G$, which represents the final evaluation objective (e.g., ‘Swarm Performance’). This goal is directly influenced by the Criteria Layer, a set of intermediate nodes, $C_1, \dots, C_m$, where each $C_i$ represents a high-level evaluation criterion like ‘Swarm Mobility.’ Finally, the foundation of the network is the Factor Layer, which contains all root nodes, $F_1, \dots, F_K$. Each factor, such as ‘Path Planning Efficiency,’ represents a fundamental performance metric and serves as a parent to one and only one criterion node.

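Based on this hierarchical structure, with criterion nodes $C_1, \dots, C_m$ and factor nodes $F_1, \dots, F_K$, the joint probability distribution of the entire network, $P(G, C_1, \dots, C_m, F_1, \dots, F_K)$, can be factorized into a product of local conditional probabilities according to the chain rule of BNs. This factorization is the mathematical core of the entire framework, and its final form is expressed as

$$P(G, C_1, \dots, C_m, F_1, \dots, F_K) = P(G \mid C_1, \dots, C_m) \prod_{i=1}^{m} P(C_i \mid \mathrm{Pa}(C_i)) \prod_{j=1}^{K} P(F_j),$$

where the left-hand side represents the joint probability of all nodes in the network, $P(G \mid C_1, \dots, C_m)$ is the conditional probability for the Goal Layer, quantifying how the criteria jointly influence the final goal, and $\prod_{i} P(C_i \mid \mathrm{Pa}(C_i))$ is the product of conditional probabilities for the Criteria Layer. Here, $\mathrm{Pa}(C_i)$ denotes the set of parent factor nodes for the criterion $C_i$, and $\prod_{j} P(F_j)$ is the product of prior probabilities for the Factor Layer (root nodes).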

The significance of this factorization is that it decomposes a complex, high-dimensional joint probability problem into a combination of smaller, more manageable local probability tables. This decomposability, combined with the conditional independence assumptions inherent in the model, justifies simplifying our subsequent analysis to focus on generic parent–child sub-networks. These sub-networks can represent either the ‘Factor-to-Criterion’ relationships or the ‘Criteria-to-Goal’ relationship. Therefore, for the sake of generality and notational simplicity in the subsequent mathematical derivations, we will use the generic symbols $X_1, \dots, X_n$ to represent a set of parent nodes in an arbitrary sub-network, and $Y$ to represent its corresponding child node. This approach ensures that our derived methods, Hierarchical Bayesian Network Sensitivity (HBNS) and Multicriteria Hierarchical Bayesian Network Sensitivity (M-HBNS), are generalizable and can be applied to any hierarchical relationship within the network. Moreover, the entire BNDM framework is, in essence, built around learning and constructing these local probability terms from expert knowledge and data.
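The factorization can be illustrated with a minimal sketch on a hypothetical toy network (factors F1 and F2 feed criterion C1, factor F3 feeds criterion C2, and both criteria feed the goal G). All node names and probability values below are illustrative assumptions, not values from this study; the point is only that the joint probability is the product of the local terms and sums to one.

```python
from itertools import product

# Illustrative (assumed) parameters for a toy three-level network with binary nodes.
prior = {"F1": 0.7, "F2": 0.6, "F3": 0.8}                      # P(F = 1)
cpt_c1 = {(0, 0): 0.1, (0, 1): 0.5, (1, 0): 0.6, (1, 1): 0.9}  # P(C1 = 1 | F1, F2)
cpt_c2 = {(0,): 0.2, (1,): 0.85}                               # P(C2 = 1 | F3)
cpt_g = {(0, 0): 0.05, (0, 1): 0.4, (1, 0): 0.5, (1, 1): 0.95}  # P(G = 1 | C1, C2)


def bern(p_one, value):
    """Probability of a binary value given P(value = 1)."""
    return p_one if value == 1 else 1.0 - p_one


def joint(g, c1, c2, f1, f2, f3):
    """Joint probability as goal term x criteria terms x factor priors."""
    goal_term = bern(cpt_g[(c1, c2)], g)
    criteria_term = bern(cpt_c1[(f1, f2)], c1) * bern(cpt_c2[(f3,)], c2)
    factor_term = (bern(prior["F1"], f1) * bern(prior["F2"], f2)
                   * bern(prior["F3"], f3))
    return goal_term * criteria_term * factor_term


# The factorized joint must sum to one over all 2^6 configurations.
total = sum(joint(*state) for state in product((0, 1), repeat=6))
# Marginal P(G = 1) by brute-force summation over the remaining five nodes.
p_goal = sum(joint(1, *state) for state in product((0, 1), repeat=5))
print(total, p_goal)
```

Brute-force summation is used here only because the toy network is tiny; it is not meant to represent the inference procedure used in the framework.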

4.2. Hierarchical Bayesian Network Sensitivity Method

Once the hierarchical network structure is defined, the primary analytical task is to quantify the influence of each parent node $X_i$ on its child node $Y$. To this end, the Hierarchical Bayesian Network Sensitivity (HBNS) method is developed. It provides an analytical solution for computing sensitivity indices by adapting principles from variance decomposition. This approach enables the exact computation of sensitivities directly from the model’s parameters, thereby obviating the need for computationally expensive Monte Carlo simulations.

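To demonstrate the derivation process in a hierarchical network, we define a simple BN in which node $Y$ has $n$ parents. For simplicity in expressing conditional probabilities, the parent set $\mathrm{Pa}(Y) = \{X_1, \dots, X_n\}$ is specified.

First, we calculate the mean values of $Y$ and $Y^2$ as follows:

$$E(Y) = \sum_{k} \sum_{j} y_k \, P(Y = y_k \mid \mathrm{Pa}(Y) = \pi_j) \, P(\mathrm{Pa}(Y) = \pi_j) \tag{13}$$

$$E(Y^2) = \sum_{k} \sum_{j} y_k^2 \, P(Y = y_k \mid \mathrm{Pa}(Y) = \pi_j) \, P(\mathrm{Pa}(Y) = \pi_j) \tag{14}$$

Similar to (10), $y_k$ is the $k$-th state of $Y$ and $\pi_j$ is the $j$-th configuration of the parent set $\mathrm{Pa}(Y)$. Further, we can obtain the variance of $Y$ using (13) and (14):

$$V(Y) = E(Y^2) - [E(Y)]^2 \tag{15}$$

Expanding (15) through (13) and (14) is cumbersome. Instead, the marginal $P(Y = y_k)$ can be obtained by BN inference, which simplifies (15) to

$$V(Y) = \sum_{k} y_k^2 \, P(Y = y_k) - \Big[ \sum_{k} y_k \, P(Y = y_k) \Big]^2 \tag{16}$$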

Equations (17)–(19) are necessary for the further derivation of (9). In these equations, the index $j$ runs over the values of $X_i$ and the index $t$ runs over the values of the node combination that excludes $X_i$.

The simplification of (19), which is necessary for deriving an analytical expression for sensitivity, relies on established principles for decomposing joint conditional probabilities in BNs under certain assumptions, as formalized in Theorem 1.

Theorem 1. (Zhang and Poole [54]) Let $Y$ be a node in a BN and let $X_1, X_2, \dots, X_n$ be the parents of $Y$. If $X_1, X_2, \dots, X_n$ are causally independent (d-separation [12]) and $Y$ has $m$ states, the BN parameters can be obtained from the conditional probabilities of $Y$ given each individual parent through (20). Here, $*$ is the combination operator of $Y$.

Theorem 1 presents a general formulation relating the BN parameters to these per-parent conditional probabilities. Moreover, we give the specific form under the hierarchical BN structure in Corollary 1.

Corollary 1. The joint probability can be calculated according to the marginal probability when the structure of the BN is hierarchical. The specific form is given by (21).

Proof. First, (22) holds according to the conditional probability formula. Then, since $X_1, X_2, \dots, X_n$ are independent of each other, (23) holds. By the same independence, (24) holds. Finally, (21) is obtained by combining (23) and (24). □

According to Corollary 1, we can simplify (19) as (25). Then, (26) holds according to the law of total probability. Combining (17), (18), and (26), we obtain (27). Furthermore, considering (9), (16), and (27), (28) is given.

In this paper, we define $X$ and $Y$ to have two states each, which are represented by numbers. Under this assumption, we can further simplify (28) as (29), where $S_i$ refers to the main Sobol index obtained in this case using conditional probability calculations. Equations (28) and (29) thus provide a method for calculating the main Sobol sensitivity indices.
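As a hedged illustration of the binary case, the sketch below computes a first-order (main) Sobol index for a binary parent and child in two ways: from a closed form that follows from the variance decomposition, and by direct enumeration of $V(E(Y \mid X_i)) / V(Y)$. It assumes the two states are coded as 0 and 1 and that the parents are independent; the function names and all numbers are illustrative, and the closed form is our own restatement rather than a reproduction of (29).

```python
from itertools import product


def binary_main_index(p, a, b):
    """Closed-form main index for binary X and Y (states coded 0/1).

    p = P(X=1), a = P(Y=1 | X=1), b = P(Y=1 | X=0).
    """
    q = p * a + (1 - p) * b  # P(Y=1) by the law of total probability
    return p * (1 - p) * (a - b) ** 2 / (q * (1 - q))


def enumerated_main_index(priors, cpt, i):
    """V(E[Y | X_i]) / V(Y) by enumeration, assuming independent binary parents.

    priors[k] = P(X_k=1); cpt[config] = P(Y=1 | parents = config).
    """
    def weight(x):
        w = 1.0
        for k, v in enumerate(x):
            w *= priors[k] if v == 1 else 1.0 - priors[k]
        return w

    configs = list(product((0, 1), repeat=len(priors)))
    mean_y = sum(weight(x) * cpt[x] for x in configs)  # E[Y] = P(Y=1)
    var_y = mean_y * (1.0 - mean_y)                    # variance of a 0/1 variable
    var_cond = 0.0
    for v in (0, 1):
        p_v = priors[i] if v == 1 else 1.0 - priors[i]
        cond_mean = sum(weight(x) * cpt[x] for x in configs if x[i] == v) / p_v
        var_cond += p_v * (cond_mean - mean_y) ** 2
    return var_cond / var_y


# Illustrative two-parent example (assumed numbers).
priors = [0.6, 0.3]
cpt = {(0, 0): 0.1, (0, 1): 0.4, (1, 0): 0.7, (1, 1): 0.9}
# Marginalize the second parent to get P(Y=1 | X_1) for the closed form.
a = 0.7 * cpt[(1, 0)] + 0.3 * cpt[(1, 1)]
b = 0.7 * cpt[(0, 0)] + 0.3 * cpt[(0, 1)]
closed_form = binary_main_index(priors[0], a, b)
enumerated = enumerated_main_index(priors, cpt, 0)
print(closed_form, enumerated)
```

Both routes agree up to floating-point error, which is the practical meaning of computing the index from the model parameters rather than by sampling.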

Building upon these derivations, Theorem 2 formally articulates the HBNS method for calculating sensitivity indices directly from BN parameters under hierarchical network conditions.

In this work, we assume that there is a mapping relationship between the values obtained by MCDM and SA. This assumption is intuitive, and we present it as Definition 1.

Definition 1. The weight measures the relative importance of the factors. AHP applies expert intuition to assess this weight, and HBNS introduces conditional variance to obtain the corresponding sensitivity index $S_i$. Their mapping relationship is expressed by (30), where $U$ is the scope of $S_i$ and $\mu$ is the scaling factor, whose default value is 1.

Theorem 2. Consider a Bayesian Network $(L, \theta)$, where $L$ denotes the set of nodes $N$ and edges $E$ in the network, and $\theta$ denotes the parameters of the network, specifically the conditional probability tables. Suppose there exists a system $f$ that can be expressed by (3), and that $X$ and $Y$ can be represented by the nodes $N$. We define an operator $H$ that measures the contribution of $X$ to $Y$, as expressed in (31). Under the assumption of parent node independence (as utilized in Corollary 1), the main sensitivity indices can be derived using (28). This expression further simplifies to (29) when the nodes $X_i$ and $Y$ are binary.

The resulting computational efficiency is a key consideration for engineering managers, as it ensures that the framework remains scalable and practical for evaluating increasingly complex swarm configurations without prohibitive computational overhead. For a naïve Bayesian network with $N$ binary nodes, if no independence assumptions are made, the number of parameters required for the CPTs grows exponentially, i.e., on the order of $2^N$. However, by introducing the assumption of parent node independence, each node’s CPT can be decomposed into the product of its marginal conditional probabilities, reducing the number of parameters to a quantity that grows only linearly with $N$. This reduction in the parameter space enhances the scalability of our model for multicriteria decision making in UAV swarm management and improves computational efficiency.
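The scale of this reduction can be illustrated with a small count for a single binary child with $n$ binary parents. The counting convention below (one free parameter per parent configuration for a full CPT, and two conditional probabilities per parent under the decomposition) is our assumption for illustration; the exact expressions used in the paper may differ by constant factors.

```python
def full_cpt_parameters(n_parents):
    """Free parameters of a full CPT: one P(Y=1 | config) per parent configuration."""
    return 2 ** n_parents


def decomposed_parameters(n_parents):
    """Parameters under the per-parent decomposition: P(Y=1 | X_i=0) and P(Y=1 | X_i=1)."""
    return 2 * n_parents


for n in (2, 5, 10, 20):
    print(n, full_cpt_parameters(n), decomposed_parameters(n))
```

With ten parents, for example, the full table needs 1024 entries under this convention, whereas the decomposed form needs 20.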

4.3. Multicriteria Hierarchical Bayesian Network Sensitivity Analysis

While HBNS establishes a method to derive sensitivity indices from BN probabilities, a crucial step for practical application, particularly when initializing a model with expert knowledge, is the inverse process: deriving BN conditional probabilities from expert-assigned importance weights. This subsection introduces the Multicriteria Hierarchical Bayesian Network Sensitivity (M-HBNS) method, which is designed to systematically convert such weights into consistent BN CPT parameters by leveraging the variance decomposition principles.

First, to obtain the conditional probabilities with respect to $X_i$, we use (29) and the probability constraints to build a system of equations, as shown in (32). The solution of this system is given by (33). It has two branches: one implies that the effect of $X_i$ on $Y$ is positive, i.e., $X_i$ and $Y$ are positively correlated, whereas the other implies that $X_i$ and $Y$ are negatively correlated. A sign indicator is set to distinguish a positive correlation from a negative correlation between $X_i$ and $Y$. According to (33), the conditional probabilities of $Y$ given $X_i$ are calculated when the sensitivity index $S_i$ and the marginal probabilities $P(X_i)$ and $P(Y)$ are known.
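The inverse mapping can be sketched for the binary case as follows. This is a minimal illustration derived from the variance-decomposition definition of the main index together with the law of total probability, assuming states coded 0/1; it is not a reproduction of (33), and the function name `mhbns_binary` and the `sign` argument (+1 for positive, -1 for negative correlation) are hypothetical.

```python
import math


def mhbns_binary(s, p, q, sign=1):
    """Recover (P(Y=1 | X=1), P(Y=1 | X=0)) from a target main index.

    s = target sensitivity index, p = P(X=1), q = P(Y=1),
    sign = +1 (positive correlation) or -1 (negative correlation).
    """
    if not (0.0 < p < 1.0 and 0.0 < q < 1.0 and 0.0 <= s <= 1.0):
        raise ValueError("p and q must lie in (0, 1) and s in [0, 1]")
    if sign not in (1, -1):
        raise ValueError("sign must be +1 or -1")
    # s = p(1-p)(a-b)^2 / (q(1-q))  =>  a - b = +/- sqrt(s q (1-q) / (p (1-p)))
    gap = sign * math.sqrt(s * q * (1.0 - q) / (p * (1.0 - p)))
    # p*a + (1-p)*b = q fixes the two conditionals around the marginal q.
    a = q + (1.0 - p) * gap
    b = q - p * gap
    if not (0.0 <= a <= 1.0 and 0.0 <= b <= 1.0):
        raise ValueError("target index is infeasible for these marginals")
    return a, b


a_pos, b_pos = mhbns_binary(0.25, 0.5, 0.5, sign=1)
a_neg, b_neg = mhbns_binary(0.25, 0.5, 0.5, sign=-1)
print(a_pos, b_pos, a_neg, b_neg)
```

The two branches mirror each other around the marginal $P(Y)$, and an infeasible target is reported rather than silently clipped, since clipping would break the total-probability constraint.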

The mathematical formulation for this inverse mapping from sensitivity indices (weights) to conditional probabilities is encapsulated in Theorem 3.

Theorem 3. Consider the Bayesian Network $(L, \theta)$ and the system $f$ expressed by (3). Let $\Omega$ denote the set of weights, and let the set of probabilities associated with the nodes be given. We define an operator $R$ that maps the relationship between the CPTs $\theta$ and the weights $\Omega$, as expressed in (34). When the parent nodes are independent and each node has two states, the parameters can be derived by (33).

Additionally, we need to constrain the range of variables in (33): each probability is a real number between 0 and 1, and the argument of the square root must be non-negative. These conditions are expressed as (35).

The solution space of $S_i$, $P(X_i)$, and $P(Y)$ is shown in Figure 4. In Figure 4, the solution space is the solid bounded by the surface and the base plane. When the value of $S_i$ is larger, the value range of $P(X_i)$ and $P(Y)$ is smaller, and vice versa. In the case where $S_i$ is known, the value range of $P(Y)$ is the largest when $P(X_i) = 0.5$; when $P(X_i)$ is closer to 0 or 1, this range decreases and is no longer centered on 0.5. $P(X_i)$ has the same features when $P(Y) = 0.5$. In the plane spanned by two of these quantities, different curves reflect their relationship as the third quantity takes different values: when the third quantity is relatively large, one tends to become smaller as the other becomes larger, and in the opposite case it tends to become larger.
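The shape of this solution space can be explored numerically with a short sketch. It assumes binary nodes coded 0/1 and derives the feasibility bound by requiring both conditional probabilities to stay in $[0, 1]$; the function names are hypothetical, and the bound is our own restatement rather than a reproduction of (35).

```python
def max_feasible_index(p, q, sign=1):
    """Largest main index attainable for marginals p = P(X=1), q = P(Y=1)."""
    if sign == 1:
        r = p * (1.0 - q) / (q * (1.0 - p))    # odds(p) / odds(q)
    else:
        r = p * q / ((1.0 - p) * (1.0 - q))    # odds(p) * odds(q)
    return min(r, 1.0 / r)


def feasible_q_range(s, p):
    """Interval of P(Y=1) for which index s is attainable (positive sign, 0 < s <= 1)."""
    odds = p / (1.0 - p)
    low, high = s * odds, odds / s             # bounds on odds(q)
    return low / (1.0 + low), high / (1.0 + high)


def width(interval):
    return interval[1] - interval[0]


centered = feasible_q_range(0.5, 0.5)   # parent marginal at 0.5
skewed = feasible_q_range(0.5, 0.9)     # parent marginal near 1
print(max_feasible_index(0.5, 0.8), centered, skewed)
```

In this sketch the admissible interval for $P(Y)$ is widest when $P(X_i) = 0.5$ and shrinks as $P(X_i)$ moves toward 0 or 1, which matches the qualitative behaviour described for Figure 4.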

This relationship, depicted in Figure 4, aligns with an intuitive understanding: a factor ($X_i$) is considered highly influential if its state significantly alters the probability of the desired outcome ($Y$). Engineering managers can leverage this understanding when providing initial expert judgments (hyperparameters for $P(X_i)$ and $P(Y)$), ensuring that the model’s initial configuration reflects realistic operational sensitivities.

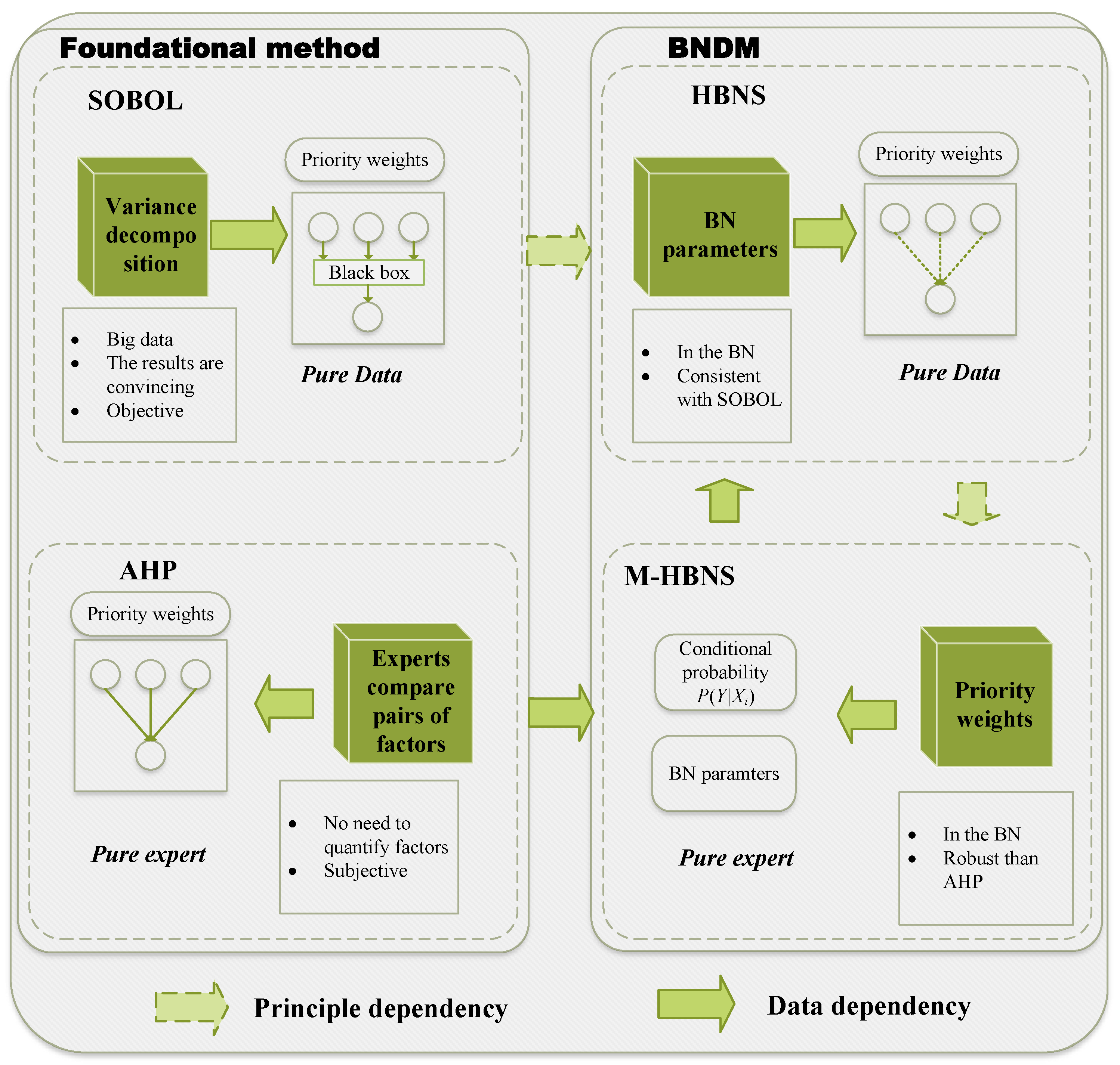

4.4. BNDM Framework

The preceding subsections defined the model’s structure and its core analytical components. We now integrate them into a comprehensive, four-phase procedure for practical application, as illustrated in Figure 5. To establish the criteria and factor lists, we used a two-round process: in Round 1, experts proposed and edited the criteria–factor lists, drawing on the literature cited earlier; in Round 2, disagreements were resolved in a moderated session by majority vote, with the rationale recorded. This framework guides a manager from initial problem structuring with expert knowledge to a refined, data-driven performance model.

The four-phase procedure is outlined as follows.

Phase 1 Expert evaluation: This phase leverages the structured AHP methodology to systematically capture initial expert judgments on criteria importance, forming the foundational expert-driven input for the subsequent BN construction. This ensures that managerial priorities are embedded from the outset.

Step 1: After discussion, experts determine the criteria and factors that should be considered for each goal $G$.

Step 2: Organize the identified goal, criteria, and factors into a hierarchical structure. This AHP-based hierarchy provides a clear visual representation of performance dependencies and forms the basis for structured expert judgment elicitation, aligning the model with how managers often conceptualize complex problems.

Step 3: The criteria and factors are used to construct the pairwise comparison matrices, one for the criteria with respect to the goal and one for the factors under each criterion.

Step 4: Compute the weight vector corresponding to each pairwise comparison matrix.

Step 5: Calculate the consistency index and go to the next step if the requirements are met.
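Steps 3 to 5 can be sketched as follows. This minimal example estimates the principal eigenvector of a pairwise comparison matrix by power iteration and computes the consistency index and consistency ratio using Saaty's random index values. The example matrix, the function name, and the acceptance threshold of 0.1 are assumptions for illustration; the threshold is the conventional AHP value and is not stated in this section.

```python
# Saaty's random consistency index for matrix sizes 3 to 9.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def ahp_weights(matrix, iterations=100):
    """Return (weights, lambda_max, consistency_index) for a pairwise comparison matrix."""
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        # Power iteration: multiply by the matrix and renormalize to sum to one.
        v = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        w = [x / total for x in v]
    aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    consistency_index = (lambda_max - n) / (n - 1)
    return w, lambda_max, consistency_index


# Illustrative, perfectly consistent judgments implying weights (0.6, 0.3, 0.1).
comparison = [
    [1.0, 2.0, 6.0],
    [1.0 / 2.0, 1.0, 3.0],
    [1.0 / 6.0, 1.0 / 3.0, 1.0],
]
weights, lambda_max, ci = ahp_weights(comparison)
cr = ci / RANDOM_INDEX[len(comparison)]
accepted = cr < 0.1  # conventional threshold, assumed here
print(weights, lambda_max, ci, cr, accepted)
```

For real expert judgments the matrix is rarely perfectly consistent, so the consistency ratio is positive and the check in Step 5 decides whether the comparisons need to be revisited.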

Phase 2 M-HBNS: This phase translates the qualitative hierarchy from AHP into a quantitative, probabilistic model.

Step 6: Translate the AHP hierarchy directly into a multi-level hierarchical BN Structure. Each element (goal, criterion, factor) from the AHP becomes a node in the BN, and the hierarchical relationships define the directed edges in the DAG. This step formally encodes the multicriteria evaluation problem into a probabilistic model.

Step 7: Using the M-HBNS method, the AHP weights are converted into the initial CPTs that parameterize the BN, effectively translating expert beliefs into a probabilistic format.

Step 8: Calculate the scope $U$ and the value of $\mu$. Here, $U$ denotes the admissible interval of the target sensitivity $S_i$ that is compatible with the probability constraints and the chosen correlation sign. In practice, $U$ is obtained from the feasibility conditions in Equation (35), applied to Equation (33): it is the set of $S_i$ values for which there exist conditional probabilities $P(Y \mid X_i)$ and marginals $P(X_i)$ and $P(Y)$ satisfying the BN constraints. The scalar $\mu$ then rescales sensitivities onto a common decision scale; throughout, we set $\mu = 1$ and normalize by $U$ for a conservative scaling.

Step 9: Employ the M-HBNS method (Equations (33) and (35)) to calculate the initial conditional probabilities $P(Y \mid X_i)$ for each parent–child relationship in the BN, based on $P(X_i)$, $P(Y)$, the correlation sign, and the target $S_i$. This critical step translates abstract importance weights into concrete probabilistic parameters for the BN.

Step 10: Utilize the derived values and Corollary 1 to construct the complete CPTs for all nodes in the BN. This completes the initialization of the BN with expert-derived parameters, creating a baseline model that reflects current managerial understanding.

At this point, the structure and the parameters of the BN have been constructed in Steps 6 and 10, respectively.

Phase 3 Parameter construction: This phase focuses on refining the expert-initialized BN by incorporating empirical evidence from UAV swarm operations, allowing the model to adapt and improve its accuracy over time.

Step 11: Convert the initial CPTs (from Step 10) into Dirichlet hyperparameters for Equation (11). As new operational data (observed counts) from UAV swarm missions become available, MAP estimation is employed to update the BN CPTs. This systematically integrates empirical evidence with initial expert beliefs, allowing managers to evolve the model from a primarily expert-driven one to a more data-informed one.
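Step 11 can be sketched as follows. The example uses the standard Dirichlet-multinomial MAP estimate, $(\alpha_k + N_k - 1) / (\sum_k \alpha_k + \sum_k N_k - K)$, which may differ in detail from Equation (11). The conversion of a CPT row into hyperparameters through an equivalent sample size is an assumption made here for illustration, as are the function names and numbers.

```python
def cpt_row_to_dirichlet(probs, equivalent_sample_size):
    """Hyperparameters whose prior mode equals the expert CPT row (assumed mapping).

    alpha_k = 1 + ess * p_k, so that (alpha_k - 1) / (sum(alpha) - K) = p_k.
    """
    return [1.0 + equivalent_sample_size * p for p in probs]


def map_estimate(alpha, counts):
    """Standard MAP estimate of a categorical distribution under a Dirichlet prior."""
    k = len(alpha)
    denominator = sum(alpha) + sum(counts) - k
    return [(a + c - 1.0) / denominator for a, c in zip(alpha, counts)]


# Expert-initialized row P(Y | X_i = 1) = (0.75, 0.25), trusted like 10 observations.
alpha = cpt_row_to_dirichlet([0.75, 0.25], equivalent_sample_size=10.0)
no_data = map_estimate(alpha, [0, 0])    # falls back to the expert row
updated = map_estimate(alpha, [2, 8])    # ten new observations pull the row toward the data
print(alpha, no_data, updated)
```

The equivalent sample size controls how quickly data override the expert prior: a larger value keeps the updated row closer to the initial CPT for the same counts.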

Phase 4 Refined Sensitivity Analysis using HBNS: The final phase uses the data-updated BN to re-evaluate factor sensitivities, providing managers with refined insights into current performance drivers.

Step 12: After learning the BN parameters, the marginal probabilities $P(X_i)$ and $P(Y)$ and the conditional probabilities $P(Y \mid X_i)$ change, and their new values are obtained by variable elimination (VE). Then, (29) is used to calculate the optimized sensitivity indices. These updated indices reflect the influence of factors based on the latest available evidence.

Step 13: The optimized indices are normalized to give the final results of BNDM. These refined indices provide engineering managers with an up-to-date understanding of the most critical drivers of UAV swarm performance, guiding targeted interventions, resource adjustments, or strategic reviews.

The flowchart of the BNDM is given in Figure 5. The multi-phase BNDM procedure detailed above outlines a systematic workflow for integrating expert knowledge with empirical data to produce a refined assessment of UAV swarm performance factor sensitivities. Theorem 4 provides a formal aggregation of this entire process, illustrating how initial expert judgments (represented by weights $\Omega$) are transformed into BN parameters, subsequently updated by data $D$, and finally used to derive optimized sensitivity indices. This theorem highlights the BNDM framework’s core capability of achieving a data-informed synthesis.

Theorem 4. Consider the Bayesian Network $(L, \theta)$ and the system $f$ expressed by (3). Let $\Omega$ denote the set of weights, let the set of probabilities associated with the nodes be given, and let $D$ denote the data. We define an operator $H$ that measures the contribution of $X$ to $Y$ considering the data and expert judgments. Given operational data $D$, consisting of observed counts, the posterior CPT parameters are obtained via MAP estimation (11). Finally, the optimized sensitivity indices are derived from the set of these updated CPTs using the HBNS operator $H$ (Theorem 2). Thus, the entire BNDM process can be viewed as an overarching function $B$, given in (38). This demonstrates the framework’s capacity to integrate expert intuition ($\Omega$) with empirical data ($D$) within a unified Bayesian pipeline to produce refined sensitivity assessments.

In Theorem 4, $\Omega$ is derived from expert judgments based on MCDM and thus represents expert intuition. $D$ is the set of observed counts, denoting the data. Based on the formulation (38), $B$ integrates expert intuition and data within a BN, that is, within a single pipeline.
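The composition behind $B$ can be sketched for a single binary parent–child pair. The three helpers below are simplified stand-ins for the operators $R$ (weights to CPT entries), the MAP update, and $H$ (CPT entries to a main index); they assume states coded 0/1, hold the parent marginal fixed during the update, and use a hypothetical equivalent sample size `ess`. None of this reproduces (38) exactly.

```python
import math


def R(s, p, q, sign=1):
    """Target index s -> (P(Y=1 | X=1), P(Y=1 | X=0)), given p = P(X=1), q = P(Y=1)."""
    gap = sign * math.sqrt(s * q * (1.0 - q) / (p * (1.0 - p)))
    return q + (1.0 - p) * gap, q - p * gap


def map_update(prior_p1, ess, n_y1, n_y0):
    """MAP estimate of P(Y=1 | x) with a Dirichlet prior whose mode is prior_p1."""
    return (ess * prior_p1 + n_y1) / (ess + n_y1 + n_y0)


def H(p, a, b):
    """CPT entries -> main sensitivity index for binary X and Y."""
    q = p * a + (1.0 - p) * b
    return p * (1.0 - p) * (a - b) ** 2 / (q * (1.0 - q))


def B(s, p, q, counts, ess=10.0, sign=1):
    """Expert index s plus data counts -> data-updated index."""
    a0, b0 = R(s, p, q, sign)
    a1 = map_update(a0, ess, *counts["x1"])   # (count of Y=1, count of Y=0) when X=1
    b1 = map_update(b0, ess, *counts["x0"])   # same counts when X=0
    return H(p, a1, b1)


no_data = B(0.25, 0.5, 0.5, {"x1": (0, 0), "x0": (0, 0)})
with_data = B(0.25, 0.5, 0.5, {"x1": (18, 2), "x0": (3, 17)})
print(no_data, with_data)
```

With no data, the pipeline returns the expert-specified index unchanged, so in this sketch $H$ undoes $R$ when the marginals are held fixed. With data that separate the two parent states more strongly than the experts expected, the updated index increases.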

Note that, in general, the formulation (31) in Theorem 2 and the formulation (34) in Theorem 3 are not strictly inverse functions. However, when $U$ is fixed, they can be considered inverse functions of each other.

In summary, this section has detailed the BNDM model, a comprehensive framework that integrates expert knowledge with data-driven learning through its core HBNS and M-HBNS components. By establishing the formal structure, analytical methods, and a procedural workflow, we have laid the foundation for a transparent, adaptable, and robust tool for UAV swarm performance evaluation. The subsequent section will present a comprehensive experimental evaluation designed to validate the framework’s effectiveness and practical applicability.