In this section, we illustrate the feasibility and effectiveness of the proposed estimation procedure through the analysis of two real-world datasets. Applying the methodology to empirical data demonstrates its practical ability to capture the patterns and dependencies present in complex data. The analysis serves both to assess the performance of the estimation approach and to illustrate its applicability across diverse domains. It also highlights the model's flexibility and robustness in handling intricate, time-dependent, and non-Euclidean data structures, such as time series of density functions, which makes it a versatile tool for real-world applications.

6.1. COVID-19 Data

On 11 March 2020, the World Health Organization (WHO) officially declared COVID-19, a contagious disease caused by the severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), a global pandemic. The rapid and widespread transmission of the virus presented unprecedented challenges to global public health, prompting countries worldwide to implement lockdowns and other measures aimed at controlling the spread of the disease. As of 15 August 2021, WHO reports indicated a staggering 221,885,822 confirmed cases and 4,583,539 deaths spanning nearly all countries, underscoring the extensive and profound impact of the pandemic. Given the scale of this crisis, it is crucial for international health organizations and research institutions to continuously monitor the evolving global trends of COVID-19. Such monitoring enables timely, accurate analysis that supports effective public health responses, informs medical treatment strategies, and guides prevention and control measures for future outbreaks. Understanding the epidemic’s dynamics through data-driven modeling is therefore essential for shaping informed policy decisions and improving health outcomes worldwide amid this ongoing global crisis.

To this end, we focus on the mortality rate as a key indicator for tracking the global trend of the COVID-19 pandemic. The mortality rate of a country on a given day is defined as the ratio of its cumulative number of deaths up to that day to its total population; it serves as a critical measure of the disease's lethality and spread. Because the numerator is cumulative, each day's rate builds on the counts of all previous days, so the calculation inherently involves temporal dependence. Consequently, the mortality rates, and thus the global epidemic trend, exhibit temporal autocorrelation.
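As a minimal illustration of this definition, the Python sketch below turns one country's daily death counts into cumulative counts and then into a mortality rate in per mille (‰), the unit used in Figure 1. The function name and the input numbers are hypothetical; the running sum makes explicit why each day's rate depends on all previous days.

```python
import numpy as np

def mortality_rate_per_mille(daily_deaths, population):
    """Cumulative deaths divided by population, expressed in per mille (‰).

    `daily_deaths` holds new deaths per day for one country; the running
    total (np.cumsum) is what ties each day's rate to all earlier days.
    """
    cum_deaths = np.cumsum(np.asarray(daily_deaths, dtype=float))
    return 1000.0 * cum_deaths / float(population)

# Hypothetical country with 2 million inhabitants (illustrative numbers only).
rates = mortality_rate_per_mille([0, 5, 7, 18], population=2_000_000)
print(rates)
```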

The data on COVID-19-related deaths used in our analysis are sourced from the publicly accessible Johns Hopkins University repository, which provides a dynamic tracking map offering comprehensive insights into global pandemic trends. The dataset, available at https://www.jhu.edu/ (accessed on 25 July 2021), covers the period from 22 January 2020 through 15 April 2021. The most recent population data required to compute mortality rates for each country are obtained from the World Bank's online platform, accessible at https://data.worldbank.org (accessed on 17 September 2021). These publicly available datasets form a valuable foundation for monitoring the pandemic's progression and for conducting rigorous statistical and epidemiological analyses of the disease's behavior across regions.

Because the timing of outbreaks varies between countries and regions, we standardize the time scale by defining day zero as the date on which each country reached 100 cumulative confirmed COVID-19 cases. Our analysis considers daily cumulative death data from 189 countries over the subsequent 100-day period. At each time point $t$, we estimate the density function of the mortality rate from the values observed across these countries.
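The day-zero standardization can be sketched in Python as follows. This is an illustrative sketch, not the authors' code: the function name is hypothetical, we assume day zero itself is the first day of the 100-day window, and we assume a country whose record ends before the window closes simply contributes the days that are available.

```python
import numpy as np

def align_to_day_zero(cum_cases, cum_deaths, threshold=100, horizon=100):
    """Cumulative deaths over the `horizon` days starting at day zero.

    Day zero is the first day on which cumulative confirmed cases reach
    `threshold`. Returns None if the threshold is never reached; if the
    record ends early, the returned window is shorter than `horizon`.
    """
    cum_cases = np.asarray(cum_cases)
    cum_deaths = np.asarray(cum_deaths)
    reached = np.flatnonzero(cum_cases >= threshold)
    if reached.size == 0:
        return None
    day0 = reached[0]
    return cum_deaths[day0 : day0 + horizon]

# Toy series: cumulative cases first reach 100 at index 2, so day zero is index 2.
window = align_to_day_zero([10, 50, 120, 150, 200], [0, 0, 1, 2, 3], horizon=3)
print(window)  # [1 2 3]
```

Aligning on a case threshold rather than on calendar dates puts countries at comparable stages of their outbreaks before the cross-country densities are formed.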

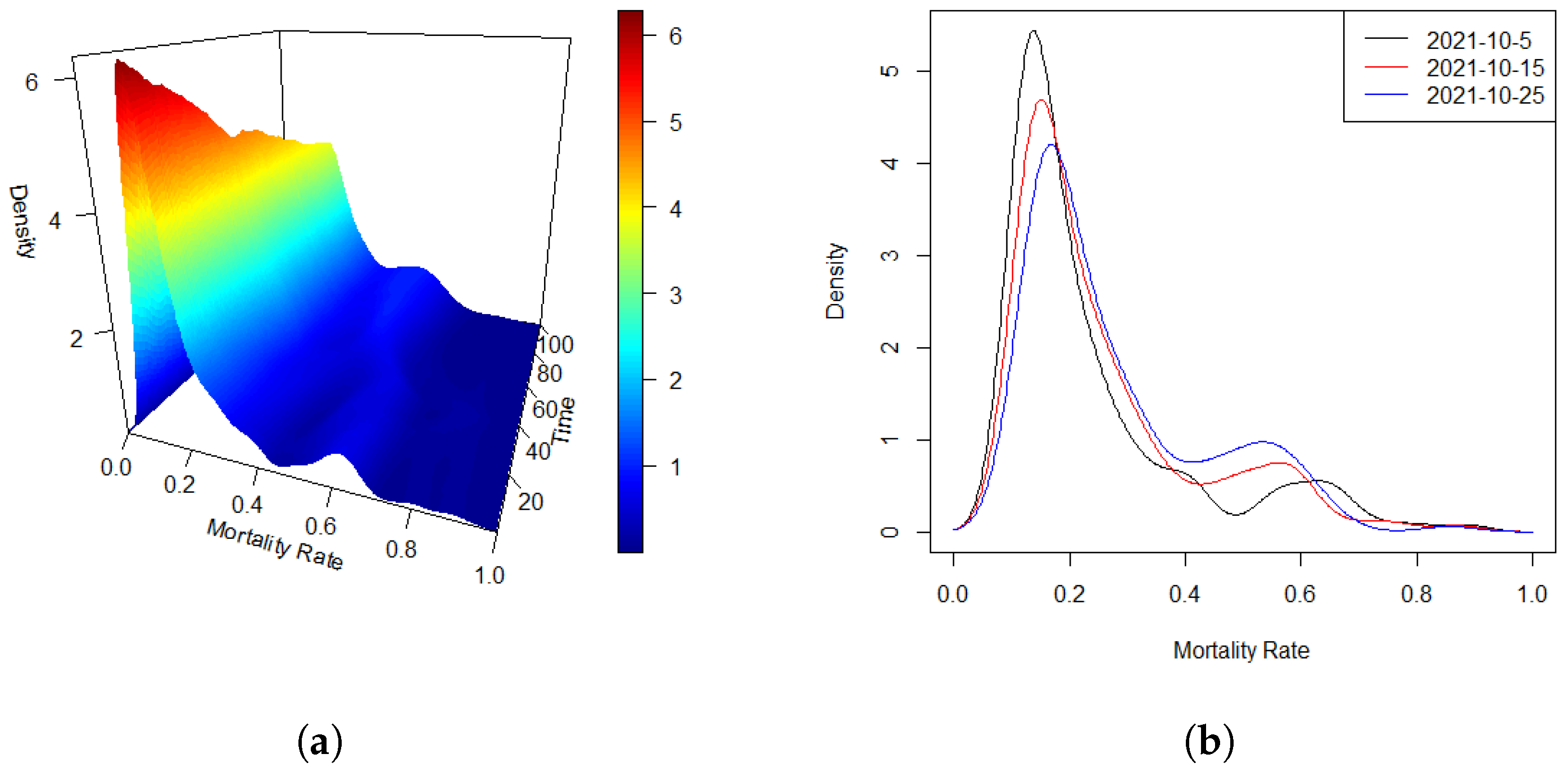

Figure 1a displays the estimated densities of the global mortality rate (‰) across the 100 days, with data from up to 189 countries at each time point.

Figure 1b presents an alternative view by showing the estimated densities on three selected days. These visualizations show that the mortality rate densities remain well-defined throughout the observed period. Moreover, the distributions exhibit clear temporal dependence, suggesting an auto-regressive structure in the data and supporting the use of a functional auto-regressive (FAR) error process.

The main objective of this analysis of the COVID-19 data is to identify the FAR process underlying the mortality rate and to estimate its component functions. For simplicity, we begin with a special case in which the covariate $z$ is constant ($z = 1$) and $x$ is a scaled time variable. In this case, the model reduces to a single bivariate varying-coefficient function of the density argument $u$ and the scaled time $x$, with errors following a FAR process.

Using the initial spline-based estimate, we apply a testing algorithm to determine the order $p$ of the FAR process. Table 7 reports the corresponding $p$-values under different hypotheses. The results provide strong evidence of significant autocorrelation in the data and support a first-order functional auto-regressive process, FAR(1), that is, $p = 1$. These findings empirically confirm the presence of temporal dependence in the COVID-19 mortality rates and further justify the use of a FAR error structure to capture the evolving epidemic dynamics.
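For readers less familiar with FAR processes, the sketch below simulates a generic FAR(1) error process, $\varepsilon_t(u) = \int \psi(u,v)\,\varepsilon_{t-1}(v)\,dv + \eta_t(u)$, on a grid over $[0,1]$. The kernel $\psi$, the grid, the noise level and the Riemann-sum discretization of the integral are all illustrative assumptions; the operator estimated from the COVID-19 data is not reproduced here.

```python
import numpy as np

def simulate_far1(psi, grid, n_times, noise_sd=0.1, seed=0):
    """Simulate eps_t(u) = ∫ psi(u, v) eps_{t-1}(v) dv + eta_t(u) on `grid`.

    The integral is approximated by an equal-weight Riemann sum (weight 1/G),
    so the operator becomes a G x G matrix acting on the curve values.
    """
    rng = np.random.default_rng(seed)
    G = grid.size
    K = psi(grid[:, None], grid[None, :]) / G  # discretized integral operator
    eps = np.zeros((n_times, G))               # eps_0 = 0 as a starting value
    for t in range(1, n_times):
        eta = noise_sd * rng.standard_normal(G)  # innovation curve on the grid
        eps[t] = K @ eps[t - 1] + eta
    return eps, K

def psi(u, v):
    # Illustrative Gaussian-shaped kernel bounded by 0.5, which keeps the
    # discretized operator contractive (spectral norm below 1).
    return 0.5 * np.exp(-((u - v) ** 2) / 0.1)

grid = np.linspace(0.0, 1.0, 50)
eps, K = simulate_far1(psi, grid, n_times=100)
```

Each simulated curve depends on the previous one only through the operator `K`, which is the first-order dependence that the test above selects.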

Figure 4 presents a heat map of the estimated bivariate varying-coefficient function, obtained after accounting for the functional error process and determining the auto-regressive order. The heat map reveals a relatively stable temporal pattern: the function initially attains a minimum at lower values of $u$, gradually increases, and reaches a maximum at later time points. This pattern reflects the underlying dynamics of the COVID-19 mortality rate over successive days. The correlation between consecutive days further supports the notion that the global mortality rate exhibits substantial temporal dependence, consistent with the cumulative construction of the mortality measure from prior daily data.

To evaluate the uncertainty associated with these estimates, we conducted a residual-based bootstrap analysis. Specifically, we first fitted the model to obtain the estimated coefficient surface and the residual functions. Then, using the estimated functional auto-regressive operator of the FAR(1) error process, we recursively generated bootstrap residual samples by resampling the innovation functions with replacement. For each of the 500 bootstrap replications, new response functions were constructed by adding the bootstrap residuals to the fitted values based on the estimated coefficient surface, and the entire estimation procedure was repeated on the bootstrap dataset to obtain a replicate of the coefficient surface. The variability among these bootstrap replicates was then used to calculate point-wise standard errors and confidence intervals. The resulting standard errors were generally small across the domain, typically ranging between 0.04 and 0.07, indicating stable estimates throughout. The 95% confidence intervals for the bivariate varying-coefficient surface consistently excluded zero along the increasing temporal trend, confirming its statistical significance. Moreover, the identified pattern, a minimum at early $u$ values followed by a gradual rise, was robust across bootstrap replications, indicating that the observed dynamic reflects a meaningful underlying structure rather than random noise. These findings underscore the reliability of the estimated surface and the necessity of accounting for the temporal dependence captured by the FAR(1) process when modeling the temporal structure of the mortality rate. The clear and significant progression observed in the heat map further validates the model's ability to capture the global evolution of the pandemic over time.
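The residual-based bootstrap described above can be sketched in Python for a general FAR($p$) error process on a common grid. This is a simplified illustration under stated assumptions, not the authors' implementation: `fit` is a hypothetical placeholder for the full estimation procedure (a pointwise temporal mean stands in for it below), the FAR operators are assumed to be already estimated and discretized as matrices, innovations are centered before resampling, the recursion starts from the observed residuals, and the 95% intervals are taken as percentile intervals.

```python
import numpy as np

def far_residual_bootstrap(y, fit, ops, n_boot=500, alpha=0.05, seed=0):
    """Residual bootstrap for a functional regression with FAR(p) errors.

    y   : (T, G) response curves evaluated on a common grid.
    fit : callable, y -> (estimate, fitted); `fitted` has shape (T, G).
          Hypothetical placeholder for the full estimation procedure.
    ops : list of p (G, G) matrices, the discretized FAR operators.
    Returns the estimate, pointwise bootstrap standard errors and
    percentile confidence bounds.
    """
    rng = np.random.default_rng(seed)
    p, T = len(ops), y.shape[0]
    est, fitted = fit(y)
    eps = y - fitted
    # Innovations eta_t = eps_t - sum_j K_j eps_{t-1-j}, t = p, ..., T-1.
    eta = np.array([eps[t] - sum(ops[j] @ eps[t - 1 - j] for j in range(p))
                    for t in range(p, T)])
    eta -= eta.mean(axis=0)  # center before resampling
    reps = []
    for _ in range(n_boot):
        draw = eta[rng.integers(0, len(eta), size=T - p)]  # resample with replacement
        eps_b = eps.copy()  # the first p residual curves start the recursion
        for t in range(p, T):
            eps_b[t] = sum(ops[j] @ eps_b[t - 1 - j] for j in range(p)) + draw[t - p]
        est_b, _ = fit(fitted + eps_b)  # refit on the bootstrap response
        reps.append(est_b)
    reps = np.asarray(reps)
    se = reps.std(axis=0, ddof=1)
    lower, upper = np.quantile(reps, [alpha / 2, 1 - alpha / 2], axis=0)
    return est, se, lower, upper

def mean_fit(y):
    """Toy stand-in estimator: pointwise mean curve over time."""
    m = y.mean(axis=0)
    return m, np.tile(m, (y.shape[0], 1))

rng = np.random.default_rng(1)
y = 1.0 + 0.1 * rng.standard_normal((60, 20))
est, se, lower, upper = far_residual_bootstrap(y, mean_fit, [0.3 * np.eye(20)], n_boot=200)
excludes_zero = (lower > 0) | (upper < 0)  # pointwise "interval excludes zero" check
```

Regenerating the residuals through the FAR recursion, rather than resampling residual curves directly, preserves the serial dependence in each bootstrap dataset.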

6.2. USA Income Data

Personal income statistics are essential for enabling governments to understand the interplay between national income, consumption, and saving. These statistics also serve as a valuable tool for assessing and comparing economic well-being across different regions or countries. In this study, we focus on the density time series of per capita personal income, defined as the total personal income of a region divided by its population. This metric offers a detailed perspective on the economic conditions within a region by capturing the distribution and evolution of income on a per-person basis over time. Analyzing such time series allows policymakers and researchers to gain insights into the long-term economic trends of a region, evaluate income disparities, and make more informed decisions regarding fiscal policies, social welfare programs, and strategies for economic development.

Income data for the United States are publicly accessible through the official website of the United States Bureau of Economic Analysis (BEA) (http://www.bea.gov/, accessed on 16 October 2021). We consider the quarterly per capita personal income of all 50 states in the USA from the first quarter of 2010 through the fourth quarter of 2020, resulting in 44 time points, $t = 1, \ldots, 44$. At each quarter $t$, we estimate the density function of per capita income from these 50 observations. Because quarterly personal income reflects broader national economic conditions, we incorporate two related covariates, ‘GDP’ (quarterly gross domestic product of the USA) and ‘Population’ (quarterly total population of the USA), both also available from the BEA (http://www.bea.gov/, accessed on 16 October 2021).
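The text does not state which density estimator is applied at each time point. As one common choice, the Python sketch below uses a Gaussian kernel density estimate with Silverman's rule-of-thumb bandwidth to turn a cross-section of 50 state-level values into a density curve on a grid, repeated for each of the 44 quarters. The income values are simulated placeholders, not BEA data, and the grid range is an assumption. The same construction would apply to the 189 country-level mortality rates in Section 6.1.

```python
import numpy as np

def gaussian_kde_on_grid(x, grid, bandwidth=None):
    """Gaussian kernel density estimate of sample `x`, evaluated on `grid`.

    The default bandwidth is Silverman's rule of thumb:
    h = 0.9 * min(sd, IQR / 1.34) * n ** (-1/5).
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    if bandwidth is None:
        sd = x.std(ddof=1)
        q75, q25 = np.percentile(x, [75, 25])
        bandwidth = 0.9 * min(sd, (q75 - q25) / 1.34) * n ** (-0.2)
    z = (grid[:, None] - x[None, :]) / bandwidth
    return np.exp(-0.5 * z**2).sum(axis=1) / (n * bandwidth * np.sqrt(2.0 * np.pi))

# Simulated per capita incomes: 44 quarters (2010Q1-2020Q4) x 50 states.
rng = np.random.default_rng(0)
incomes = rng.normal(loc=50_000, scale=8_000, size=(44, 50))
grid = np.linspace(0, 100_000, 501)
densities = np.array([gaussian_kde_on_grid(incomes[t], grid) for t in range(44)])
```

Stacking the 44 curves row by row gives a (quarters × grid points) array, the form in which a density time series such as the one in Figure 5a can be passed to a functional model.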

Traditionally, income curves are studied as panel data in economics, focusing on the relationship between consumers’ equilibrium points. As individual incomes fluctuate, the connections among these equilibrium points form trajectories that represent not only income growth but also increased consumer satisfaction. This perspective highlights the dynamic nature of income changes and their impact on well-being, offering valuable insights into consumer behavior over time.

In contrast, the income density curve, treated here as functional data, captures the distribution of income within a region or demographic group. It visually represents the shape and trends of income across different intervals, providing a more comprehensive view of the socio-economic environment. By examining income density curves, one can effectively observe income inequality within a population and identify key patterns of wealth distribution. Such curves are critical for economic research as they facilitate a deeper understanding of consumption behavior, socio-economic status, and the design of social policies.

Furthermore, income density curves are important tools for economic forecasting and analysis. By tracking changes in income distribution over time, economists can integrate insights about consumer preferences and consumption habits at different income levels. This approach enhances the ability to predict future economic conditions and shifts in consumption patterns, making income density curves indispensable for both microeconomic and macroeconomic analyses. Consequently, these curves play a crucial role in shaping policy decisions, economic planning, and our broader comprehension of economic well-being.

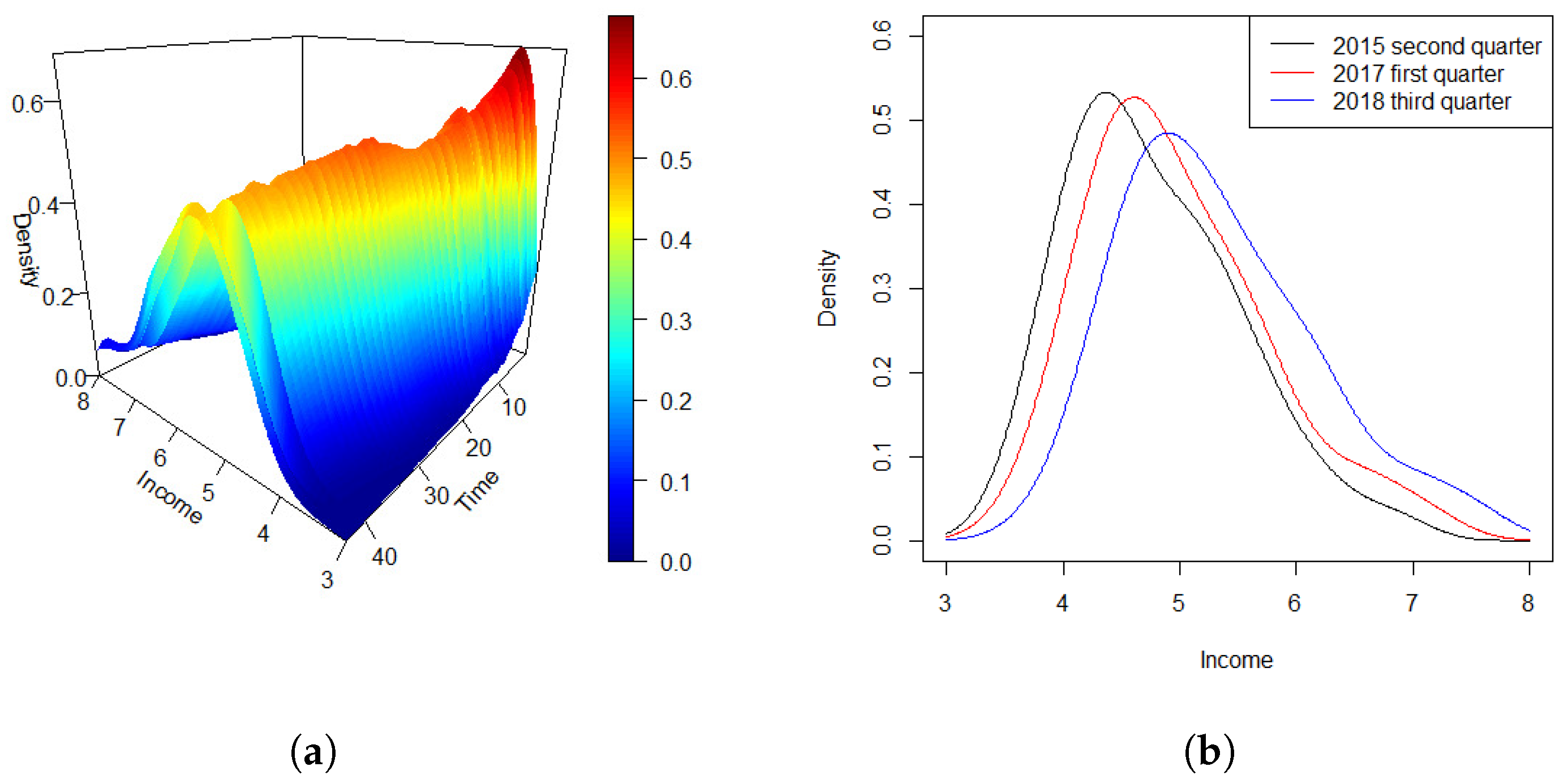

Figure 5a depicts the density time series of quarterly per capita personal income across the 44 quarters. The density curves indicate a consistent pattern in the distribution of per capita income across states from 2010 to 2020. Specifically, relatively few states fall in the high-income and upper-middle-income brackets, a moderate number in the middle-income category, and a larger share in the lower-middle-income range.

To illustrate the temporal changes in income distribution more clearly, Figure 5b shows density curves at three distinct time points: the second quarter of 2015, the first quarter of 2017, and the third quarter of 2018. The curves reveal a gradual shift toward higher income levels over time, accompanied by a decrease in the peak density. This trend aligns with broader economic and technological progress in recent years. As the economy develops, the proportion of states in lower per capita income brackets steadily decreases, while the middle-to-high income groups grow. As a result, the distribution of per capita income across states is becoming more balanced, with an increasing share of states moving into the middle- and higher-income categories. This pattern reflects general trends of economic growth and income redistribution over the period.

We model the income density curves using the dynamic varying-coefficient auto-regressive functional regression (DVCA-FAR) model, with two covariates: the quarterly gross domestic product of the USA and the quarterly total population of the USA. The varying-coefficient functions depend on scaled time variables.

Following the initial spline-based estimation, we apply a testing procedure to determine the appropriate order $p$ of the functional auto-regressive error process. The $p$-values in Table 8 indicate that an FAR(2) model best captures the autocorrelation structure in the error terms. This finding suggests that a second-order functional auto-regressive process effectively accounts for the dynamic dependencies within the income data.
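To make the FAR($p$) error structure concrete, the Python sketch below estimates discretized FAR($p$) operators from residual curves by ridge-regularized least squares, regressing each residual curve on its $p$ lagged curves. This is an illustrative stand-in, not the estimator or the order-selection test used here: the ridge penalty replaces the dimension reduction (for example, truncation to a few functional principal components) that is usually needed when the number of grid points exceeds the number of time points, as it would with 44 quarters. The simulated data and the `ridge` value are assumptions.

```python
import numpy as np

def estimate_far_operators(eps, p, ridge=1e-3):
    """Ridge least-squares estimate of discretized FAR(p) operators.

    Fits eps_t ≈ sum_{j=1..p} K_j eps_{t-j} for t = p, ..., T-1, where
    eps is a (T, G) array of residual curves on a common grid.
    Returns [K_1, ..., K_p], each a (G, G) matrix.
    """
    T, G = eps.shape
    # Row r of the j-th block holds the lag-(j+1) curve eps_{r+p-1-j}.
    X = np.hstack([eps[p - 1 - j : T - 1 - j] for j in range(p)])  # (T-p, pG)
    Y = eps[p:]                                                    # (T-p, G)
    A = X.T @ X + ridge * np.eye(p * G)
    B = np.linalg.solve(A, X.T @ Y)                                # (pG, G)
    return [B[j * G : (j + 1) * G].T for j in range(p)]

# Simulated FAR(1) residuals with K = 0.5 * I on a 5-point grid.
rng = np.random.default_rng(0)
T, G = 2000, 5
eps = np.zeros((T, G))
for t in range(1, T):
    eps[t] = 0.5 * eps[t - 1] + rng.standard_normal(G)

K1_hat = estimate_far_operators(eps, p=1)
K2_hat = estimate_far_operators(eps, p=2)  # the second-lag operator should be near zero
```

Fitting both orders to data generated with $p = 1$ shows the intuition behind order selection: the extra lag operator is estimated close to zero when it is not needed.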

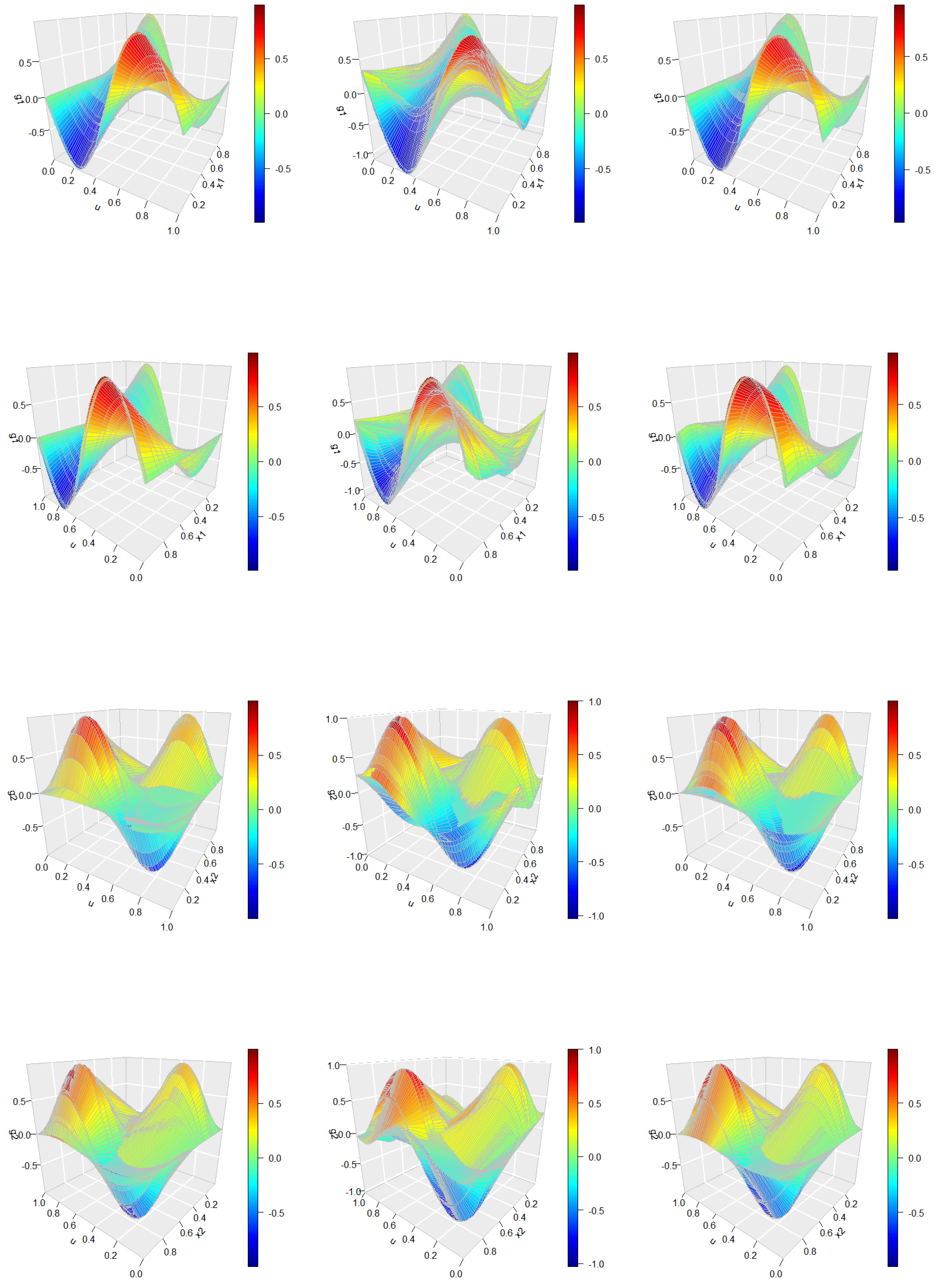

Using the three-step estimation procedure, we estimate the bivariate varying-coefficient functions. Figure 6 presents heat maps of the three estimated functions, which represent, respectively, the baseline effect over time, the influence of GDP, and the impact of population. The heat map of the baseline function shows an alternating pattern over time between high and low values, indicating that states with both high and low per capita income levels experience similar effects, whereas those in the middle-income range tend to display the opposite trend. The GDP coefficient function exhibits a consistent pattern across income levels $u$ that changes over time, with an initial peak followed by a dip and a subsequent rise toward the end of the period. Conversely, the population coefficient function generally opposes the baseline pattern and remains relatively stable across both high- and low-income groups.

To evaluate the uncertainty associated with these estimated varying-coefficient surfaces, we conducted a residual-based bootstrap analysis analogous to that described in Section 6.1. Specifically, we used the estimated FAR(2) operators and innovation residuals to generate 500 bootstrap samples, each time reconstructing the response functions and refitting the entire model. Point-wise standard errors and confidence intervals for all estimated coefficient functions obtained from these bootstrap replications show that the estimation uncertainty is generally low across most of the domain and that the main features of the estimated functions are statistically significant and robust. For example, the standard errors of the baseline function remain below 0.25 in regions corresponding to the high- and low-income groups, supporting the stability of the observed alternating pattern over time. Similarly, the GDP coefficient function exhibits standard errors typically under 0.38 near the temporal peaks and troughs, confirming that the initial peak, mid-period dip, and late-period rise are statistically significant features rather than random fluctuations. The population coefficient function has slightly larger uncertainty in the mid-income range but remains stable and significant across most of the income spectrum. Overall, the 95% bootstrap confidence intervals exclude zero in these key regions, reinforcing the robustness and reliability of the identified macroeconomic influences on quarterly personal income distributions. Together, these findings highlight a significant dependence of quarterly personal income distributions in the United States on their prior values, with dynamics closely linked to key macroeconomic factors such as GDP and demographic change represented by population growth.