The cognitive strategy directs the agent to move in a predefined direction with a fixed step size. The optional action set $A$ contains five actions, i.e., $A = \{\uparrow, \downarrow, \leftarrow, \rightarrow, \cdot\}$, where ↑ denotes forward, ↓ backward, ← leftward, and → rightward movement, and · denotes staying in the original position. Assume that the agent moves with a fixed step size. In the source confirmation method (Section 4.3), a fixed movement step length of 2 m was set, and the distance threshold conditions were calculated based on this value. This step length is consistent with the step-size parameter used throughout the decision-making process; in this study, it was uniformly set to 2 m. At time k+1, the agent's position is calculated as follows:

Note that although the step size is set to 2 m, agents may also remain stationary in certain situations, which corresponds to a step size of 0.
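The action set and fixed step size can be illustrated with a minimal sketch. It assumes a 2-D plane in which "forward" maps to +y and "rightward" to +x; the names `ACTIONS` and `next_position` are hypothetical, and the paper's own position-update equation is not reproduced here.

```python
from typing import Dict, Tuple

STEP_SIZE = 2.0  # metres, the step length used in this study

# Hypothetical mapping of the five actions to unit displacements (dx, dy).
# Treating "forward" as +y and "rightward" as +x is an assumption.
ACTIONS: Dict[str, Tuple[float, float]] = {
    "forward": (0.0, 1.0),    # ↑
    "backward": (0.0, -1.0),  # ↓
    "left": (-1.0, 0.0),      # ←
    "right": (1.0, 0.0),      # →
    "stay": (0.0, 0.0),       # · (equivalent to a step size of 0)
}


def next_position(pos: Tuple[float, float], action: str,
                  step: float = STEP_SIZE) -> Tuple[float, float]:
    """Move one fixed step from `pos` in the direction given by `action`."""
    dx, dy = ACTIONS[action]
    return (pos[0] + step * dx, pos[1] + step * dy)


if __name__ == "__main__":
    print(next_position((0.0, 0.0), "forward"))  # (0.0, 2.0)
    print(next_position((3.0, 1.0), "stay"))     # (3.0, 1.0)
```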

In multi-agent motion scenarios, collision detection is essential to ensure safe and efficient navigation. A collision is considered to occur if the distance between any two agents is less than 1 m. When such collisions are detected, the corresponding positions of the agents involved are removed to maintain operational integrity and avoid conflicts in the system.
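A minimal sketch of the 1 m collision check follows. The text does not state exactly which positions are removed, so this assumes one plausible reading: candidate positions that would bring an agent within 1 m of another agent are discarded before the decision is made. The function names are hypothetical.

```python
import math
from itertools import combinations
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
COLLISION_DISTANCE = 1.0  # metres, from the text


def colliding_pairs(positions: Sequence[Point],
                    d_min: float = COLLISION_DISTANCE) -> List[Tuple[int, int]]:
    """Return index pairs of agents whose positions are closer than d_min."""
    return [(i, j)
            for (i, p), (j, q) in combinations(enumerate(positions), 2)
            if math.dist(p, q) < d_min]


def prune_candidates(candidates: Sequence[Point], others: Sequence[Point],
                     d_min: float = COLLISION_DISTANCE) -> List[Point]:
    """Keep only candidate positions at least d_min away from every other agent."""
    return [c for c in candidates
            if all(math.dist(c, o) >= d_min for o in others)]


if __name__ == "__main__":
    print(colliding_pairs([(0.0, 0.0), (0.5, 0.0), (3.0, 0.0)]))      # [(0, 1)]
    print(prune_candidates([(0.0, 2.0), (2.0, 0.0)], [(0.0, 2.5)]))  # [(2.0, 0.0)]
```

Because the text defines a collision as a distance *less than* 1 m, the comparison is strict: two agents exactly 1 m apart are not treated as colliding.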

4.5.1. Classical Cognitive Strategy

When the agent moves according to the optional action set, it uses its sensors to gather useful information about its surroundings. In the classic infotaxis algorithm [17], Shannon entropy reduction is employed to represent the agent's information state concerning the source location, thereby quantifying the uncertainty about the source. The core concept is to select the next optimal action within a Markov decision process framework. As the agent's decision criterion, the reduction in information entropy must clearly reveal the advantages and disadvantages of the alternative movement directions so that the optimal one can be chosen. The formula is provided below:

In Equation (15), the first term is relevant only when the source location coincides with the agent's next position, so it is discarded. The infotaxis II reward [28] is therefore represented solely by the second term of Equation (15), i.e., for the case in which the source is not at the agent's next position:

In this expression, the reward equals the Shannon entropy at time k minus the expected entropy at time k+1. The expectation is taken over the hit value at time k+1, whose probability distribution follows a Poisson distribution, as described in Section 4.1. The entropy at time k+1 is given by Equation (17):

where the posterior probability of the source location can be approximated by a particle filter. According to Equation (11), the non-normalized weight of each potential source term at time k+1 can be updated as:

After these weights are normalized (each divided by their sum), the Shannon entropy at time k+1 is:

To simplify the calculations, the sensor model is divided into two cases: F (non-zero measurements) and its complement (zero measurements). The expected entropy at time k+1 depends on the random measurement and can be expressed as follows:

The probability of each case can be obtained from Equation (8). The reward function of infotaxis II is then given by:

In 2018, Hutchinson et al. proposed a framework based on the maximum entropy sampling principle, referred to as entrotaxis [29]. The maximum entropy sampling principle is newly applied here to guide the searcher. The approach follows a procedure similar to that of infotaxis II and uses the same probabilistic representation of the source. However, its reward function considers the entropy of the predictive measurement distribution rather than the expected entropy of the posterior. Essentially, entrotaxis directs the searcher to locations where the next measurement is most uncertain, whereas infotaxis II moves the searcher to locations where the next measurement is expected to minimize the posterior uncertainty. The reward function of entrotaxis, simplified using the sensor model and approximated using the particle filter, is expressed as follows:

When information entropy is used as the decision metric, it must clearly distinguish the advantages and disadvantages of the alternative movement directions so that the agent can make optimal decisions. However, in the initial search stage, far from the source, the probability of detecting odor particles is nearly zero. The posterior probability map of the source location is then updated only gradually, through particle-filter weight updates driven by odor-free (zero) measurements. Because the number of particles is much smaller than the number of grid cells, it is difficult to assess accurately the quality of a selected movement direction, and this limited particle count adversely affects search efficiency.
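The infotaxis II and entrotaxis rewards described above share the same two-case sensor model and particle approximation, so both can be sketched together. This is a minimal illustration, not the authors' implementation: it assumes each particle already supplies a Poisson mean hit count `mu` for the candidate position (the dispersion model of Section 4.1 is not reproduced), lumps all non-zero counts into the event F, and uses hypothetical function names.

```python
import numpy as np


def shannon_entropy(w: np.ndarray) -> float:
    """Entropy of normalized particle weights; zero weights contribute nothing."""
    w = w[w > 0]
    return float(-np.sum(w * np.log(w)))


def split_by_measurement(w: np.ndarray, mu: np.ndarray):
    """Two-case sensor model: zero measurement (miss) vs. non-zero (hit, event F).

    w  : normalized particle weights at time k
    mu : Poisson mean hit count predicted by each particle at the candidate position
    """
    p_miss_i = np.exp(-mu)                 # P(z = 0 | particle i)
    p_miss = float(np.sum(w * p_miss_i))   # predictive probability of a miss
    p_hit = 1.0 - p_miss                   # predictive probability of F
    w_miss = w * p_miss_i / p_miss if p_miss > 0 else w
    w_hit = w * (1.0 - p_miss_i) / p_hit if p_hit > 0 else w
    return p_miss, p_hit, w_miss, w_hit


def infotaxis2_reward(w: np.ndarray, mu: np.ndarray) -> float:
    """Current entropy minus the expected posterior entropy at the next step."""
    p_miss, p_hit, w_miss, w_hit = split_by_measurement(w, mu)
    expected = p_miss * shannon_entropy(w_miss) + p_hit * shannon_entropy(w_hit)
    return shannon_entropy(w) - expected


def entrotaxis_reward(w: np.ndarray, mu: np.ndarray) -> float:
    """Entropy of the two-case predictive measurement distribution."""
    p_miss, p_hit, _, _ = split_by_measurement(w, mu)
    return float(-sum(p * np.log(p) for p in (p_miss, p_hit) if p > 0))


if __name__ == "__main__":
    w = np.full(4, 0.25)                 # four equally likely source hypotheses
    mu = np.array([0.0, 0.0, 2.0, 2.0])  # two hypotheses predict hits here
    print(infotaxis2_reward(w, mu), entrotaxis_reward(w, mu))
```

Because the two-case measurement is binary, the infotaxis II reward computed this way is the mutual information between the source hypotheses and the hit/miss outcome, so it can never exceed the entrotaxis reward for the same candidate position.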

In comparison to information entropy, the Rényi divergence offers broader applicability as an information measure. The information increment derived from the Rényi measure can focus on low-probability regions and distinguish small differences between probability distributions. In contrast, the information increment derived from Shannon entropy may lead the agent to suboptimal decisions and reduce source-tracing efficiency. Therefore, the Rényi divergence can be used as the reward function, and a Rényi-divergence-based cognitive strategy can be established on this increment. The formula is provided below:

In Equation (

23),

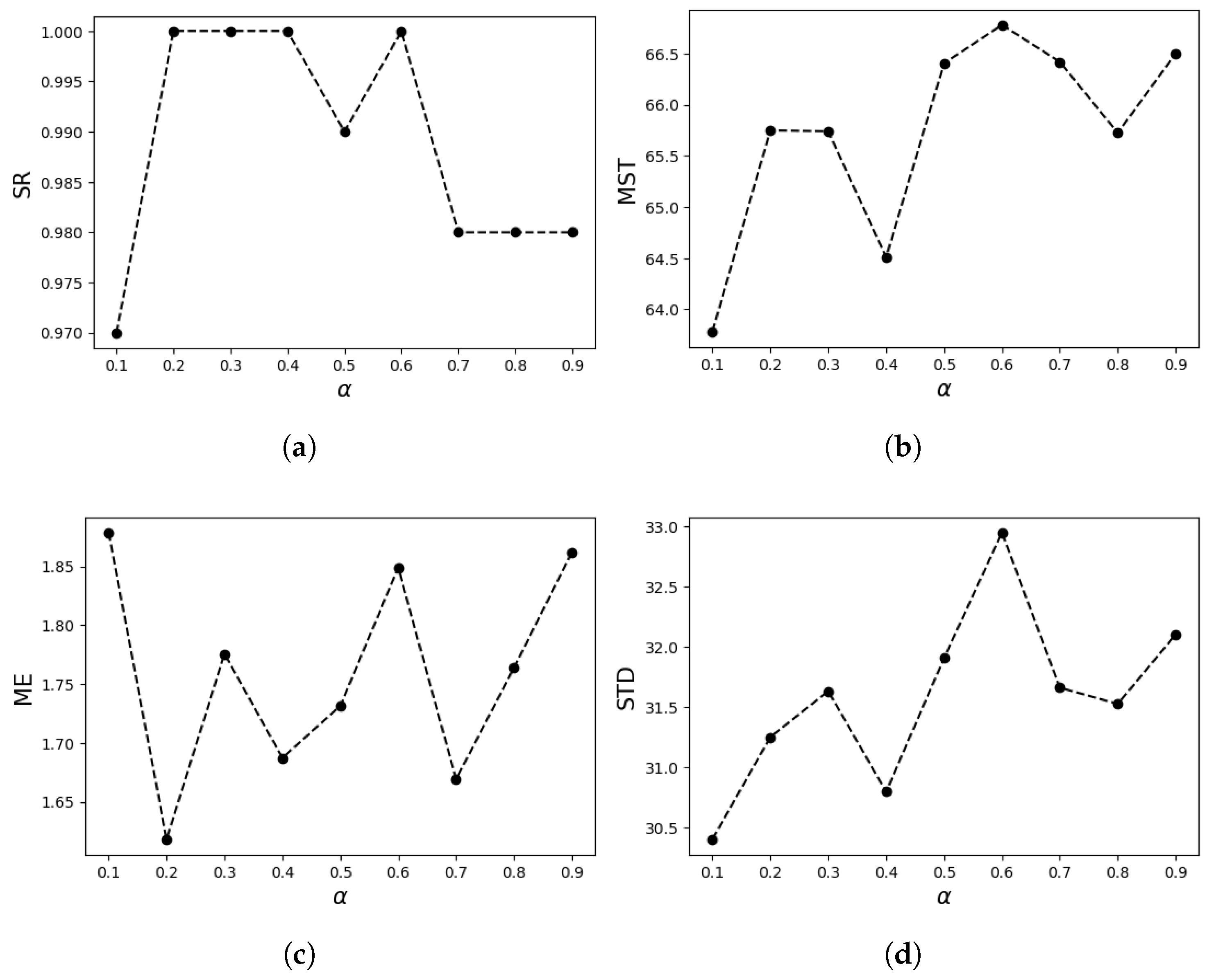

is a hyperparameter, where typically

and

. When

, the

divergence degenerates to the Kullback–Leibler (K-L) divergence. When

, the

divergence becomes more sensitive to low-probability regions, while for

the Rényi divergence becomes more sensitive to high-probability regions. After approximating the posterior probability using the particle filter,

is defined as follows:

After dividing the sensor model into two cases, the equation becomes:

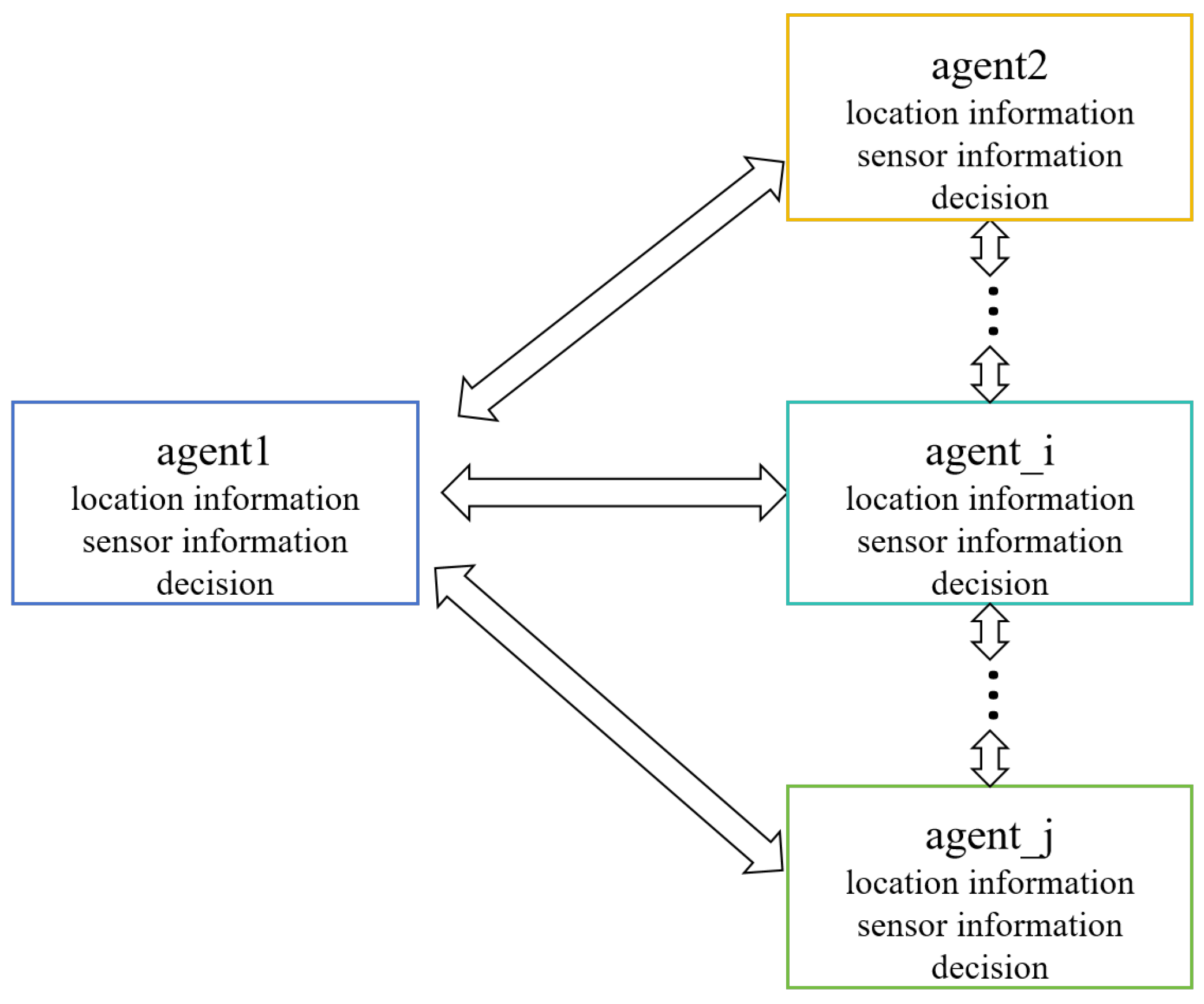

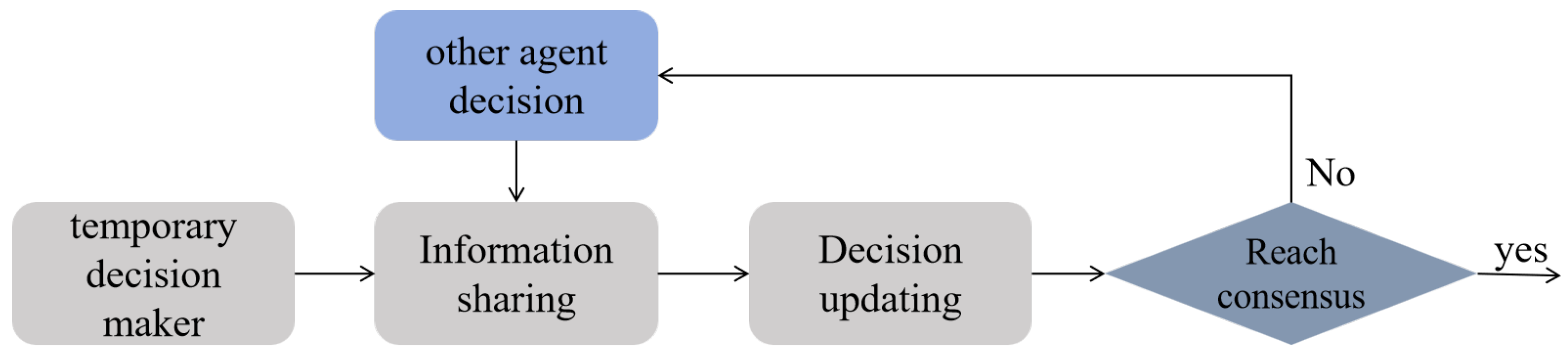
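A particle-based Rényi reward can be sketched as follows. As one plausible reading of the two-case formulation, this assumes the reward is the expected Rényi divergence of order α between the posterior after a hypothetical miss or hit and the current particle weights; the Poisson means `mu`, the default order 0.5, and the function names are illustrative assumptions, not values from the paper.

```python
import numpy as np


def renyi_divergence(p: np.ndarray, q: np.ndarray, alpha: float) -> float:
    """D_alpha(p || q) between two weight vectors defined on the same particles."""
    mask = q > 0
    p, q = p[mask], q[mask]
    if np.isclose(alpha, 1.0):             # alpha -> 1 limit: K-L divergence
        nz = p > 0
        return float(np.sum(p[nz] * np.log(p[nz] / q[nz])))
    return float(np.log(np.sum(p**alpha * q**(1.0 - alpha))) / (alpha - 1.0))


def expected_renyi_reward(w: np.ndarray, mu: np.ndarray, alpha: float = 0.5) -> float:
    """Expected divergence between the next-step posterior and the current weights.

    w     : normalized particle weights at time k
    mu    : Poisson mean hit count of each particle at the candidate position
    alpha : Renyi order (0.5 is an illustrative choice, not the paper's value)
    """
    p_miss_i = np.exp(-mu)                   # P(z = 0 | particle i)
    reward = 0.0
    for lik in (p_miss_i, 1.0 - p_miss_i):   # miss case, then hit case (F)
        joint = w * lik
        p_case = float(joint.sum())          # predictive probability of this case
        if p_case > 1e-12:                   # skip (numerically) impossible cases
            reward += p_case * renyi_divergence(joint / p_case, w, alpha)
    return reward
```

With `alpha = 1.0` the sketch falls back to the K-L divergence, and the expected K-L divergence between posterior and prior equals the expected entropy reduction, i.e., the infotaxis II reward of the previous section.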

4.5.4. Cooperative Gravitational-Rényi Infotaxis Cognitive Strategy

Estimating and searching for odor sources with multi-agent systems requires a distributed decision-making structure in which the agents coordinate through mutual information exchange among their sensors. This study extends the hybrid cognitive strategy to the multi-agent domain and proposes a new collaborative hybrid cognitive strategy, termed Cooperative Gravitational-Rényi Information Infotaxis (CGRInfotaxis). During the search, the agents exchange measurement values, which allows the source to be estimated faster and more accurately. Each agent performs a temporary update of its posterior probability distribution based on its own measurements and those shared by neighboring agents, and from this update derives a provisional optimal decision. The provisional decisions are then shared across the team, and the posterior probabilities are refined accordingly. This process is repeated until all agents reach a consensus decision, ensuring coordinated and efficient source estimation. The entire process is illustrated in Figure 7.

When multi-agent systems are used for odor source detection, the posterior probabilities are updated based on real-time measurements from multiple sensors that can communicate with one another. At time k, if the n-th agent moves to a new position and obtains a measurement, where n = 1, …, N and N is the number of agents, the posterior probability update for the n-th agent is given by Equation (27):

Equation (27) is derived from Equation (7) and involves the likelihood function of the n-th agent at time k. When the likelihood is updated with sensor measurements from multiple agents, the measurements acquired by each agent are assumed to be mutually independent and influenced solely by the release of the odor source, not by the other agents. Specifically, if the sensor measurements taken at different time steps and locations are independent, the joint likelihood of multiple sensors is the product of the individual sensor likelihoods at time k, as expressed in Equation (28). Consequently, the joint posterior probability distribution for N agents is given by Equation (29), which includes a normalization constant.
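Under the independence assumption, the joint likelihood is a product over agents, so the particle weights can be updated by summing per-agent log-likelihoods. The sketch below assumes Poisson hit counts (Section 4.1) and that each particle already supplies a predicted mean count for each agent's position; the function names are hypothetical. Working in the log domain is a design choice that avoids numerical underflow when many agents report at once.

```python
from math import lgamma

import numpy as np


def poisson_log_lik(z: int, mu: np.ndarray) -> np.ndarray:
    """log P(z | mu) for a Poisson hit count, one value per particle."""
    mu = np.maximum(mu, 1e-12)             # avoid log(0) for particles predicting no hits
    return z * np.log(mu) - mu - lgamma(z + 1)


def joint_weight_update(w: np.ndarray, log_liks: np.ndarray) -> np.ndarray:
    """Bayes update of particle weights with N independent agents.

    w        : (M,) normalized particle weights
    log_liks : (N, M) log-likelihood of each agent's measurement under each particle
    """
    with np.errstate(divide="ignore"):
        log_w = np.log(w) + log_liks.sum(axis=0)  # product of likelihoods = sum of logs
    log_w -= log_w.max()                           # rescale to avoid underflow
    w_new = np.exp(log_w)
    return w_new / w_new.sum()                     # normalization constant


if __name__ == "__main__":
    w = np.full(3, 1 / 3)
    mu = np.array([[0.5, 2.0, 4.0],    # agent 1: predicted mean hits per particle
                   [1.0, 1.0, 3.0]])   # agent 2
    z = [1, 3]                         # hit counts reported by the two agents
    log_liks = np.stack([poisson_log_lik(z_n, mu_n) for z_n, mu_n in zip(z, mu)])
    print(joint_weight_update(w, log_liks))
```

Because the likelihoods multiply, updating with both agents at once gives the same weights as updating with one agent and then the other, which is exactly what the independence assumption implies.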

The posterior probability is approximated through particle filtering, yielding:

The normalized weights are expressed as:

In the multi-agent odor source detection process, each potential source term exerts an attractive force on each agent. The total gravitational potential serves as the exploitation term and drives the agents toward the potential source. The total gravitational potential is expressed as follows:

where the gravitational potential acting on the n-th agent is multiplied by a weight, and the weights of all agents sum to 1. Each weight changes with the magnitude of the gravitational force experienced by the corresponding agent: the larger the force, the higher the weight. The gravitational potential serves as the exploitation term of the decision function, and the Rényi divergence serves as its exploration term. To improve the adaptability of the algorithm, a dynamic weight adjustment mechanism is incorporated: a time-dependent adjustment factor regulates the relative strength of the gravitational potential and the Rényi divergence in the decision function, to better balance exploitation and exploration. The decision function is as follows:

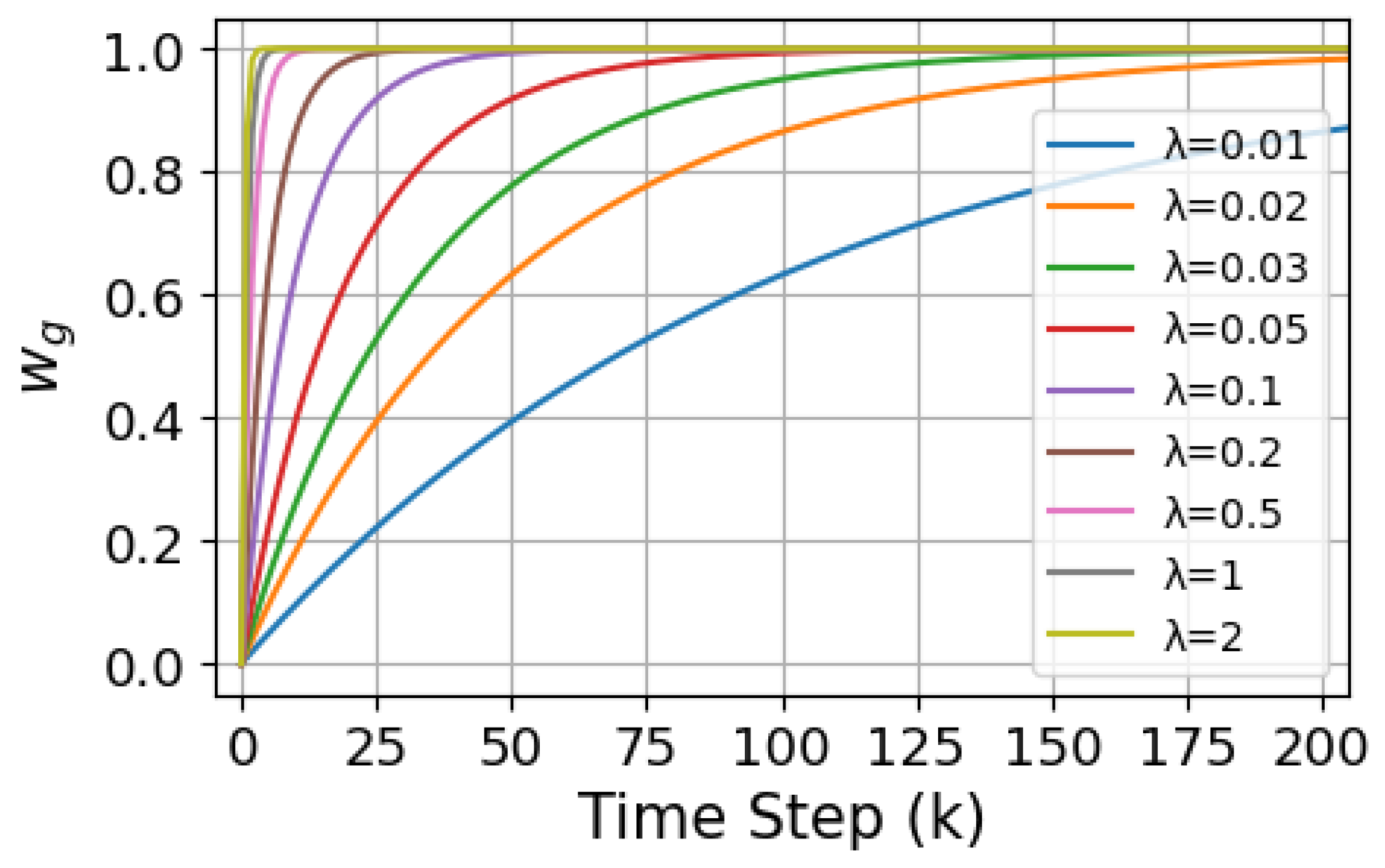

where the weights of the two terms depend on the time step k; in particular, the weight of the attractive force changes with k. In the early stages of the search, the agent has little useful information and therefore focuses on exploring unknown regions, so the exploration term dominates. As the search progresses, the agent gathers more useful information and gradually focuses on known regions; the weight of the exploitation term then increases, facilitating faster identification of the odor source. A balance factor controls the decay rate of this adjustment: through it, the weights vary as an exponential function of the time step k, enabling a dynamic transition from exploration to exploitation. Based on the number of search time steps and the expected point of transition between exploration and exploitation, the value of the balance factor can be estimated theoretically in advance. For time steps k = 0–200, the resulting weight curve is plotted in Figure 8:

From the curve, it can be observed that the value of the balance factor has a critical impact on the exploitation weight. As k approaches infinity, this weight tends towards 1. When the balance factor is small, the weight approaches 1 quickly, leading to an early bias towards exploiting the information already gathered. This may cause premature particle convergence during the search, resulting in insufficient information collection and thereby lowering search efficiency or causing instability. Assuming that the transition from exploration to exploitation is to be completed within a specified range of time steps, the following condition must be satisfied, as shown in Equation (34):

Then, . It is anticipated that will rise to around at approximately steps and drop to around . Therefore, . To maintain the balance between exploration and exploitation, we set , which ensures a smoother dynamic adjustment of , preventing over-adjustment due to fluctuations in distance and maintaining the system’s stability.

Note that the balance factor is defined statically on the basis of this mathematical derivation, and this static parameter design offers a twofold advantage. First, the smoothing property of the exponential function effectively mitigates parameter oscillations caused by system fluctuations, ensuring the stability of the search process. Second, the fixed-parameter configuration significantly reduces algorithmic complexity, facilitating efficient implementation and validation in a numerical simulation environment.

Nevertheless, this approach also has inherent drawbacks. Static parameters exhibit significant limitations when the environmental context changes, particularly when the simulation range varies. In this work, static parameters are adopted to preliminarily verify the theoretical efficiency and fundamental validity of the proposed CGRInfotaxis strategy through numerical simulations. Future work will focus on developing adaptive parameter tuning algorithms to further enhance the engineering applicability of the strategy.
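The interplay between the weighted gravitational potential and the time-varying weight can be sketched as follows. The exponential form 1 − exp(−k/τ) is an assumption, chosen only because it matches the behaviour described above (near 0 early in the search, tending to 1 as k grows, and rising faster for a smaller balance factor τ). The per-agent weights proportional to the potential, the symbol τ, and all function names are likewise illustrative and are not the paper's exact equations.

```python
import math
from typing import Sequence


def exploitation_weight(k: int, tau: float) -> float:
    """Assumed schedule 1 - exp(-k / tau): 0 at k = 0, tending to 1 as k grows."""
    return 1.0 - math.exp(-k / tau)


def total_potential(potentials: Sequence[float]) -> float:
    """Weighted sum of non-negative per-agent potentials.

    Weights are taken proportional to each potential and sum to 1, an
    illustrative stand-in for "the larger the force, the higher the weight".
    """
    s = sum(potentials)
    if s <= 0:
        return 0.0
    return sum((p / s) * p for p in potentials)


def decision_value(k: int, tau: float, potentials: Sequence[float],
                   renyi_reward: float) -> float:
    """Assumed form J = lambda_k * exploitation + (1 - lambda_k) * exploration."""
    lam = exploitation_weight(k, tau)
    return lam * total_potential(potentials) + (1.0 - lam) * renyi_reward


if __name__ == "__main__":
    for tau in (10.0, 50.0):               # hypothetical balance factors
        print(tau, [round(exploitation_weight(k, tau), 3) for k in (0, 50, 100, 200)])
```

The sketch does not rescale the two terms, so any mismatch in magnitude between the potential and the Rényi reward also shifts the balance; the paper's formulation may handle this differently.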

To select the optimal decision, the value of J is calculated for each possible movement direction. Through the iterative process, the agents collaboratively choose the direction that maximizes the collective reward, expressed as:

where the maximizing direction is the best common movement direction of the agents. The agents iteratively seek the optimal movement strategy, step by step, until the odor source is located. If the odor source is not found within the specified maximum search time, the search is deemed unsuccessful. Algorithm 1 describes the entire interactive decision-making process of CGRInfotaxis.
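One way to realize the iterative consensus described above is a sequence of best responses: each agent, holding the other agents' temporary decisions fixed, picks the action with the largest J, and the loop ends when no agent changes its decision. This is a sketch under that assumption, not necessarily the authors' procedure; J is passed in as an arbitrary function of the joint action, and all names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Joint = Tuple[str, ...]


def best_response(joint: List[str], n: int, actions: Sequence[str],
                  J: Callable[[Joint], float]) -> str:
    """Action for agent n that maximizes J while the other agents' decisions are fixed."""
    def value(a: str) -> float:
        trial = list(joint)
        trial[n] = a
        return J(tuple(trial))

    best = max(actions, key=value)
    # Switch only on a strict improvement, so ties cannot cause oscillation.
    return best if value(best) > value(joint[n]) else joint[n]


def consensus_decision(actions: Sequence[str], J: Callable[[Joint], float],
                       initial: Sequence[str], max_rounds: int = 100) -> Joint:
    """Share and revise temporary decisions until no agent changes its own."""
    joint = list(initial)                  # initial temporary decisions
    for _ in range(max_rounds):
        changed = False
        for n in range(len(joint)):
            a = best_response(joint, n, actions, J)
            if a != joint[n]:
                joint[n] = a               # broadcast the revised decision
                changed = True
        if not changed:                    # consensus reached
            break
    return tuple(joint)


def toy_reward(joint: Joint) -> float:
    """Toy collective reward: prefer that all agents pick the same move."""
    return -len(set(joint))


if __name__ == "__main__":
    moves = ["forward", "backward", "left", "right", "stay"]
    print(consensus_decision(moves, toy_reward, ["forward", "left", "stay"]))
    # ('left', 'left', 'left')
```

Because every agent maximizes the same collective reward and switches only on a strict improvement, J increases with every change; with finitely many joint actions the loop must stop, although it may settle on a local rather than a global optimum.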

| Algorithm 1 CGRInfotaxis for Multi-Agent System |

| Input: Searching environment parameters, particle filter parameters, and agent parameters. |

| Output: Actions of the agents and the position of the predicted source |

- 1: for each time step k up to the maximum search time do
- 2: for all agents do
- 3: Observation: read the measurements of the agents;
- 4: Share sensor measurements: broadcast to all agents and receive the other agents' measurements;
- 5: end for
- 6: Update the particle weights using Equations (30) and (31);
- 7: if the resampling condition is met then
- 8: Resample and reset the weights to uniform;
- 9: end if
- 10: Compute initial temporary decisions for all agents
- 11: while decisions have not reached consensus do
- 12: for all agents do
- 13: Decision sharing: broadcast the temporary decision and receive the other agents' decisions
- 14: for all candidate actions do
- 15: Compute the temporary posterior from the other agents' decisions and the candidate action using Equation (29);
- 16: Update the temporary particle weights using Equation (30);
- 17: Computation using Equation (25);
- 18: Computation using Equation (26);
- 19: Compute J using Equation (33);
- 20: end for
- 21: Select the action that maximizes J as the motion control;
- 22: Update the decision of agent n
- 23: end for
- 24: Check consensus status:
- 25: if decisions reach consensus then
- 26: Output the actions of the agents
- 27: Compute the position of every agent using Equation (14)
- 28: end if
- 29: end while
- 30: if the source confirmation conditions are reached then
- 31: Output the position of the predicted source
- 32: break
- 33: end if
- 34: end for
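The resampling step of Algorithm 1 (lines 7–8) can be sketched as below. The algorithm's exact resampling condition is not recoverable from the text, so this assumes the common effective-sample-size test with a threshold of half the particle count, followed by systematic resampling and a reset to uniform weights; both choices and the function names are illustrative.

```python
from typing import Optional, Tuple

import numpy as np


def effective_sample_size(w: np.ndarray) -> float:
    """N_eff = 1 / sum(w_i^2) for normalized weights."""
    return float(1.0 / np.sum(w**2))


def systematic_resample(particles: np.ndarray, w: np.ndarray,
                        rng: np.random.Generator) -> Tuple[np.ndarray, np.ndarray]:
    """Draw M particles using one random offset and M evenly spaced pointers."""
    m = len(w)
    pointers = (rng.random() + np.arange(m)) / m
    cumulative = np.cumsum(w)
    cumulative[-1] = 1.0                    # guard against round-off
    idx = np.searchsorted(cumulative, pointers)
    return particles[idx], np.full(m, 1.0 / m)


def maybe_resample(particles: np.ndarray, w: np.ndarray, ratio: float = 0.5,
                   rng: Optional[np.random.Generator] = None):
    """Resample only when the effective sample size drops below ratio * M."""
    rng = np.random.default_rng() if rng is None else rng
    if effective_sample_size(w) < ratio * len(w):
        return systematic_resample(particles, w, rng)
    return particles, w
```

Systematic resampling is used here because it needs only one random number per step and keeps the number of copies of each particle close to its expected value; other resampling schemes would fit the same step of the algorithm.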