Abstract

Outlier detection plays a key role in data analysis by improving data quality, uncovering data entry errors, and spotting unusual patterns, such as fraudulent activities. Choosing the right detection method is essential, as some approaches may be too complex or ineffective depending on the data distribution. In this study, we explore a simple yet powerful approach using the range distribution to identify outliers in univariate data. We compare the effectiveness of two range statistics: we normalize the range by the standard deviation () and the interquartile range (IQR) across different types of distributions, including normal, logistic, Laplace, and Weibull distributions, with varying sample sizes (n) and error rates (). An evaluation of the range behavior across multiple distributions allows for the determination of threshold values for identifying potential outliers. Through extensive experimental work, the accuracy of both statistics in detecting outliers under various contamination strategies, sample sizes, and error rates () is investigated. The results demonstrate the flexibility of the proposed statistic, as it adapts well to different underlying distributions and maintains robust detection performance under a variety of conditions. Our findings underscore the value of an adaptive method for reliable anomaly detection in diverse data environments.

1. Introduction

In data analysis, ensuring the quality and reliability of data is important for making informed decisions and extracting meaningful insights [1]. However, datasets often contain irregularities known as outliers, which can significantly distort statistical analyses and mislead predictive models. An outlier is a data point that deviates substantially from other observations, either due to errors, natural variation, or rare events [2]. If not properly identified and handled, outliers can affect the results of studies, making outlier detection a critical task in data analysis.

Outlier detection, also known as anomaly detection, refers to the process of identifying data points that deviate significantly from the majority of the data. These points often “lie outside” the expected pattern or distribution, making them potential anomalies. Outliers can skew analyses, especially in fields such as fraud detection [3,4], medical diagnosis, network security, and scientific research. For instance, outlier detection can be used to uncover fraudulent transactions, diagnose potential health issues [5], and detect cyberattacks [6], and it can even lead to groundbreaking scientific discoveries. Identifying outliers is challenging due to the unique characteristics of each dataset. While some outliers may be the result of measurement errors or data corruption, others may represent valuable insights. Statistical methods can be used to flag these anomalies, but interpretation often requires domain expertise. Outliers can occur in both univariate and multivariate contexts, necessitating specialized techniques to detect and analyze them.

The role of outlier detection has become increasingly vital in today’s world, where diverse fields rely on accurate data analysis to make informed decisions. As digital data continue to expand in volume and complexity, the efficient detection of outliers is fundamental to managing unexpected patterns and extracting meaningful knowledge from vast datasets, ultimately reinforcing the dependability of data-driven systems across sectors.

Numerous outlier detection methods are found in the literature. The most widely used outlier method is the boxplot, which has been applied in various fields of study. Tukey’s boxplot is a graphical tool used to visualize the distribution of data, highlighting the median, quartiles, and potential outliers based on the interquartile range (IQR) [7]. An IQR defined by is used as a robust measure of statistical dispersion; and denote the first and third quartiles, respectively.

Tukey proposed identifying outliers as observations lying outside the interval . However, its effectiveness diminishes when dealing with skewed data distributions. One of the primary issues with Tukey’s boxplot is that it often constructs fences (thresholds for identifying outliers) that extend too far from the data on the compressed side of the distribution while declaring erroneous outliers on the extended side. To address this problem, Ref. [8] introduced an adjustment to Tukey’s technique, incorporating a robust measure of skewness known as “Medcouple.” However, this adjustment sometimes leads to the construction of fences that extend beyond the extremes of the data, rendering it ineffective in detecting outliers. Findings demonstrate that the modified technique’s fences are closer to the true 95 percent values compared to the adjusted boxplot, indicating its superiority in outlier detection, especially for skewed data. Another useful outlier detection method is Grubbs’ test, which is commonly used in various engineering fields, particularly in quality control and industrial engineering [9]. Grubbs developed a statistical test to determine whether a single outlier exists in a univariate dataset. For a sample of n observations () assumed to be normally distributed, with representing the most extreme observation, this test assesses the null hypothesis that the dataset contains no outliers, contrasting it with the alternative hypothesis that an outlier is present.

More recently, the authors of [10] proposed a boxplot-based outlier detection method that enhances the traditional IQR approach by modifying the fences with semi-interquartile ranges tailored to specific location-scale distributions, such as logistic and exponential distributions. By using distribution-specific constants for the fence boundaries, their approach accounts for the underlying shape of the data, which improves the accuracy of identifying outliers across skewed and symmetric distributions. This work demonstrates the importance of adjusting detection methods based on distributional properties to maintain a controlled error rate, particularly the “some-outside rate,” which measures the probability of falsely labeling a data point as an outlier within a given sample size.

One significant advancement in outlier detection can be found in [11]; this work proposed the standardized range statistic, which is a range of the observations relative to the standard deviation. This approach was a step forward, yet its applicability remains limited for skewed distributions. Building on these methods, Ref. [12] recently introduced the relative range statistic, designed to enhance the standardized range approach by offering a more adaptable measure for outlier detection. The statistic was initially applied to the normal and Weibull distributions [13,14], chosen to represent the symmetric and skewed cases, respectively. Through simulation studies, the statistic demonstrated advantages in accuracy and robustness over traditional methods, especially in its ability to accurately detect outliers without disproportionately flagging observations in skewed data. The promising results of this proposed technique in handling varied distributions suggest potential for further application across other location-scale families.

The current study seeks to examine the applicability of the statistic by extending it to additional distributions, namely, logistic and Laplace distributions, each characterized by unique location-scale properties and tail behaviors. Inspired by the work in [10], this paper examines how the relative range performs when applied to logistic and Laplace distributions. The goal is to evaluate the flexibility and robustness of the proposed statistic across a broader spectrum of distributions, providing a more comprehensive approach to outlier detection that can adapt to varying shapes and scale characteristics.

The remainder of this paper is organized as follows: Section 2 introduces the range test statistic used for outlier detection and empirically investigates its distributional characteristics using randomly generated samples from normal, logistic, Laplace, and Weibull distributions. Section 3 presents a methodology to assess the performance of the proposed range statistic in detecting outliers, with comparisons to the the performance of the standardized range introduced in [11]. Section 4 discusses the experimental results, demonstrating that the proposed statistic is more robust and effective than the standardized range. Finally, Section 5 provides some conclusions and insights for future work.

2. Methodology

In statistics, the range is a statistical measure that indicates how diverse the data values are in a dataset. It can be a useful tool for detecting outliers, where the existence of one extreme value at either end of the dataset results in an entirely different and inflated range. The range statistic was introduced in [11], primarily for identifying outliers in a sample from a normal distribution. The author proposed the following statistic:

where R is the sample range, and is the population standard deviation.

A table of percentage points of the range distribution was constructed for normally distributed data with different sample sizes up to n = 100. While W is a useful tool for detecting outliers, it has a significant drawback. As noted in [14], if is estimated externally (such as from the sample itself), W can become highly sensitive to outliers. This is because outliers can inflate the standard deviation, which may lead W to falsely identify outliers, even when their impact is minimal.

In an attempt to overcome this issue, a more robust measure of variation, namely, the interquartile range, is proposed here. In [14], the authors proposed standardizing the range, R, by the interquartile range, . They defined the K statistic as

Unlike the standard deviation, which takes all values into account and can be heavily influenced by extreme points, the IQR focuses on the middle 50 percent of the data. As a result, it provides a more stable summary of variability, especially in datasets that contain outliers. Using the IQR instead of the standard deviation allows for a more accurate representation of the data’s core structure, reducing the risk of distortion caused by unusually large or small values.

The authors called K the relative range because it adjusts the range of the entire dataset relative to the range of the middle half of the dataset. The authors first explored the distributional behavior of K and its performance in detecting anomalous observations by constructing the probability density function (PDF) and cumulative distribution function (CDF) using the concept of order statistics. Looking at the CDFs, they concluded that the analytical construction and interpretation of the probability behavior of K can be challenging. Therefore, the probability behavior of the relative range, K, was explored empirically through simulation experiments in order to shed some light on its probability distribution characteristics and establish some understanding of its performance. The simulation experiments in [13,14] were conducted using data generated from the normal and Weibull distributions for a fixed error rate of . The resulting probability density functions (PDFs) for W and K exhibited consistent behavior across different sample sizes, with K showing a slightly greater rightward skewness than W for smaller sample sizes. Numerical simulations have shown that the W statistic is particularly sensitive in detecting outliers for , whereas the K statistic provides a more stable and precise identification of anomalous observations in that range. Interestingly, for sample sizes greater than 100, the performance of W improves and becomes comparable to that of K, which may explain why the generated tables in [11] were limited to : beyond that point, the statistical advantage of K in detecting outliers becomes comparable to that of W.

In this work, we aim to explore the distributional behavior of K and its performance in detecting outliers, and we compare it to the performance of the standardized range, W. We first examine the probability behavior of the relative range, K, for various distributions. Unlike previous studies, which primarily focused on normal and Weibull distributions, this work extends the analysis to also include logistic and Laplace distributions. The behavior of K is explored empirically through simulation experiments in order to shed light on its probability distribution characteristics and to better understand its performance in detecting anomalous observations. Furthermore, while earlier work evaluated the range statistics at a single error rate, our study investigates the performance of both W and K across multiple error rates: , and . An important advantage of this approach is that it leverages the underlying distributional characteristics of the data to inform the detection of outliers, providing an adaptable framework for anomaly identification.

2.1. Distributional Behavior of W and K from Different Distributions

We utilize the program RStudio version 4.4.1 as our programming tool to conduct a simulation experiment aimed at investigating the probability distribution of W and K. This experiment aims to explore how these statistics behave across various distributions and sample sizes, providing insights into their properties and potential applications.

2.1.1. Normal Distribution

The simulation process involves random sampling from the standard normal distribution, with a location of 0 and a scale of 1. We generate multiple random samples, each of a specified size denoted as n, and we calculate the statistics W and K for each sample. We perform 10,000 simulations for each of the chosen sample sizes. To gain insights into their distributions, we visualize them by empirically plotting their distributions using the kernel density function.

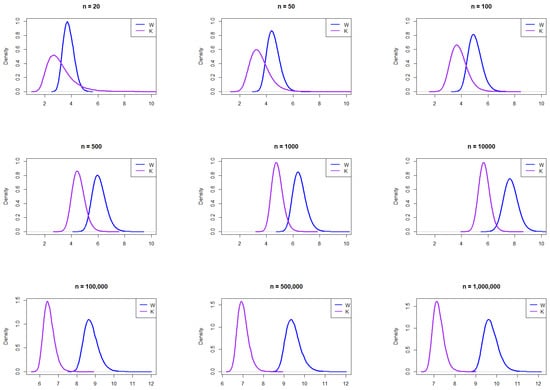

Figure 1 illustrates the empirical PDFs of W and K from the normal distribution for various sample sizes. The PDFs for W and K exhibit consistent behavior across sample sizes. The relative range shows slightly more right skewness than W, indicating that K can take larger values for smaller sample sizes, making it more capable of capturing outlying values in bell-shaped curves than W. Beyond a sample size of 100, W exhibits right skewness and shifts further to the right of K assuming larger values.

Figure 1.

Empirical exploration of the distributions of W and K for , 50, 100, 500, 1000, 10,000, 100,000, 500,000, and 1,000,000 from the normal distribution .

2.1.2. Logistic Distribution

To further explore how distributional differences influence the behavior of W and K, we extend our analysis to the logistic distribution. Following the same simulation procedure, we generate random samples from the logistic distribution, with a location of 0 and a scale of 1.

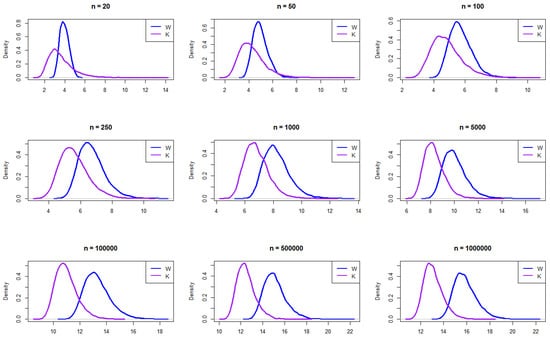

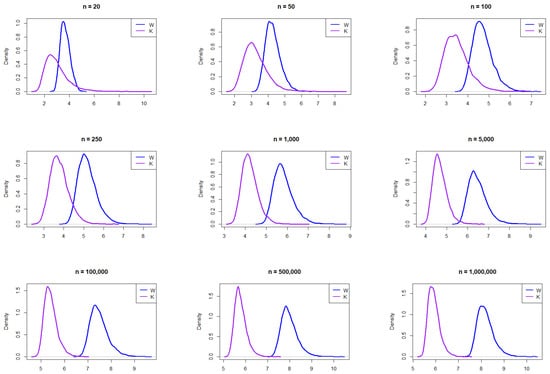

The resulting empirical PDFs of W and K from the logisitic distribution for the different n values are plotted in Figure 2. The figure demonstrates that the PDFs for W and K generally align in behavior across sample sizes. Similar to the normal distribution, K exhibits more right skewness than W, particularly for smaller sample sizes. Beyond , W shows a subtle shift to the right and becomes slightly skewed.

Figure 2.

Empirical exploration of the distributions of W and K for 20, 50, 100, 250, 1000, 100,000, 500,000, and 1,000,000 from the logistic distribution.

2.1.3. Laplace Distribution

The Laplace distribution has heavier tails than both the normal and logistic distributions. Multiple random samples are generated from the Laplace distribution, with a location parameter of 0 and a scale parameter of 1, following the same simulation procedure.

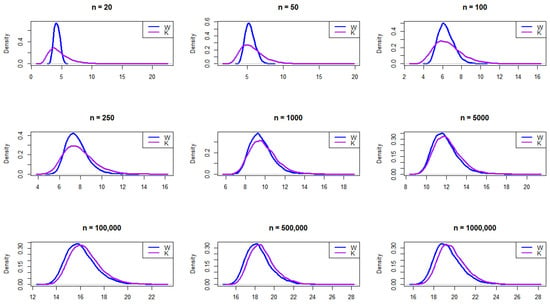

The resulting empirical PDFs of W and K for the different sample sizes from a Laplace distribution are plotted in Figure 3. The figure demonstrates that, overall, at smaller sample sizes (, 50, and 100), the distributions exhibit noticeable differences, with W appearing to have a sharper peak and K showing a broader, lower curve. As the sample size increases, the densities of both distributions converge closer together, indicating greater similarity in shape. By and beyond, both distributions become almost identical, suggesting that, with large sample sizes, the distributions of W and K approach similar density functions. This trend implies that, while the distributions differ significantly at small sample sizes, asymptotically, they exhibit similar distributional characteristics.

Figure 3.

Empirical exploration of the distributions of W and K for 20, 50, 100, 250, 1000, 100,000, 500,000, and 1,000,000 from the Laplace distribution.

2.1.4. Weibull Distribution

Finally, we analyze the two-parameter Weibull distribution, which is defined by a shape parameter and a scale parameter. Unlike the previous distributions, the Weibull distribution exhibits skewness that is directly influenced by its shape parameter. This parameter is particularly important because it influences the form of the probability density function (PDF) and, as a result, the overall behavior of the distribution. Depending on its value, the Weibull distribution can resemble other well-known distributions. For instance, when the shape parameter is equal to 1, it simplifies to the exponential distribution. This adaptability makes the Weibull distribution a useful tool in both statistical analysis and engineering applications. While the shape parameter controls the tail behavior and thus affects skewness and kurtosis, the scale parameter adjusts the spread of the distribution without changing its overall shape.

In this study, we decided to generate samples from two Weibull distributions: one with a shape parameter less than 1 (shape = 0.5), indicating greater skewness, and another with a shape parameter greater than 1 (shape = 2), indicating moderate skewness. In both cases, the scale parameter was fixed at 1.

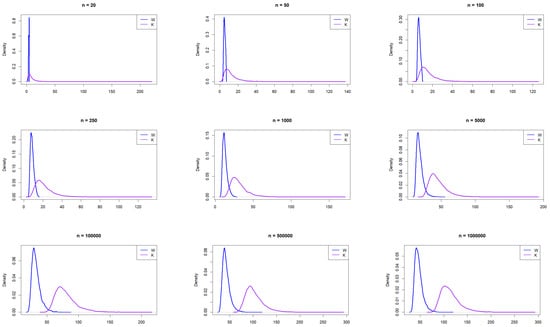

Figure 4 presents the empirical PDFs for W and K sampled from the Weibull distribution with shape = 0.5. Looking at the figure, it can be seen that K consistently exhibits a much more pronounced right skewness than W. Its density curves have long, heavy tails stretching toward larger values, and they are particularly visible, even as the sample size increases. In contrast, W is more concentrated, with a sharper peak and lighter tail, indicating less variability and skewness. This contrast suggests that K is more sensitive to extreme values or outliers, while W maintains a more compact distribution as the sample size increases.

Figure 4.

Empirical exploration of the distributions of W and K for 20, 50, 100, 250, 1000, 100,000, 500,000, and 1,000,000 from the Weibull distribution with shape = 0.5.

Figure 5 presents the empirical PDFs for W and K sampled from the Weibull distribution. Both have comparable shapes. Moreover, we can see that the overlap decreases as the sample size increases, suggesting that sample size plays a crucial role in determining their behavior.

Figure 5.

Empirical exploration of the distributions of W and K for 20, 50, 100, 250, 1000, 100,000, 500,000, and 1,000,000 from the Weibull distribution with shape = 2.

3. Experimental Result

With the empirical distributions of W and K constructed in the preceding section, it is evident that large values of these two non-negative statistics indicate the potential presence of outliers. In this section, we present a comparison of the performance of W and K in detecting outliers based on the upper 1 percent, 5 percent, and 10 percent tail thresholds defining the outlier region. Table 1 gives the threshold values of the two range-based statistics, W and K, for each type of data, with a varying sample size ( to ) and level of error (). represents the probability that at least one or more observations are wrongly declared outliers in the entire random sample of n regular observations. Smaller values imply that very extreme data points would be flagged as outliers.

Table 1.

The 99th, 95th, and 90th percentiles of W and K from Normal, Logistic, Laplace and Weibull Distributions.

A quick examination of Table 1 reveals that, for a smaller sample size, K tends to produce higher threshold values than W, making it more conservative and less likely to classify data points as outliers unless their deviation is substantial. As the sample size increases, the thresholds of both statistics increase, reflecting the greater variability expected in larger datasets. For instance, the threshold for is significantly lower than that for , as smaller datasets are less likely to exhibit extreme variations.

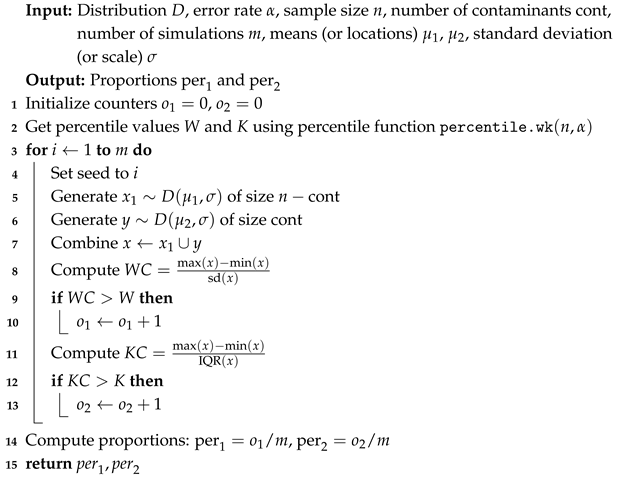

The authors in [14] developed an algorithm to examine the performance of the range statistics W and K in detecting outliers in a skewed distribution with a single error rate of 5 percent. Algorithm 1 is generalized here for any distribution and error rate () as follows:

| Algorithm 1: General Outlier Detection Algorithm for Any Distribution |

|

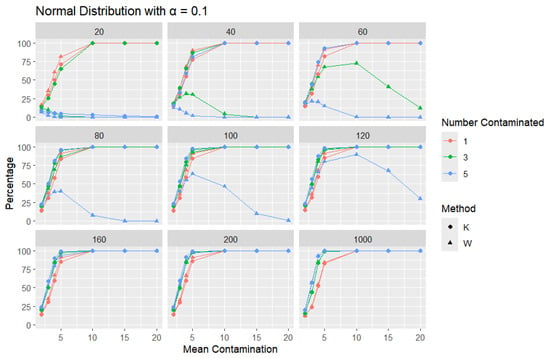

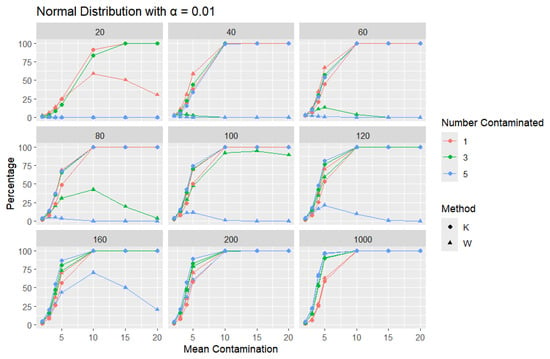

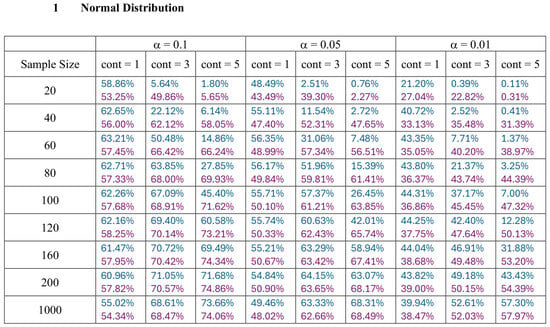

Normal Distribution: For each sample size, n, we contaminate each sample originally generated from the standard normal distribution ( = 0, scale = 1) with 1, 3, and 5 points and different means ( = 2, 3, 4, 5, 10, 15, and 20). The algorithm is applied, and the relative frequency of detecting at least one outlier is calculated for each sample size and each mean contamination. The results are plotted in Figure 6, Figure 7 and Figure 8.

Figure 6.

Line graphs of a 10% error rate for the percentage of detecting at least one outlier for the selected mean contamination values (x-axis). The bullet point line represents K percentages, and the triangle point line represents W percentages.

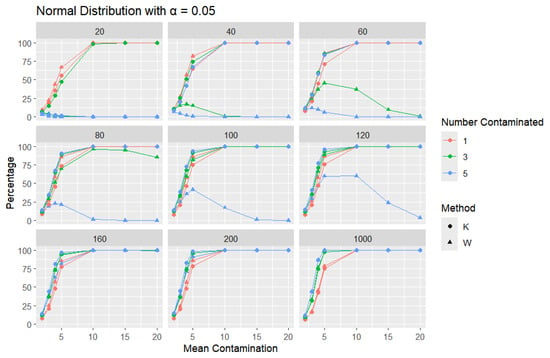

Figure 7.

Line graphs of a 5% error rate for the percentage of detecting at least one outlier for the selected mean contamination values (x-axis). The bullet point line represents K percentages, and the triangle point line represents W percentages.

Figure 8.

Line graphs of a 1% error rate for the percentage of detecting at least one outlier for the selected mean contamination values (x-axis). The bullet point line represents K percentages, and the triangle point line represents W percentages.

The x-axis of each of the figures represents the selected contamination values of the location parameter, , and the y-axis represents the percentage of detecting at least one outlier. It is expected that, when contamination produces a distribution that deviates significantly from the original data distribution, the likelihood of outliers existing in the dataset increases, making the identification of such outlier values easier.

The results clearly show that K consistently outperforms W in detecting outliers. This disparity can be illustrated with a specific example: when looking at a sample size of 20, the contamination of five data points constitutes a significant portion, amounting to 25% of the data. As a result, these five points become indistinguishable from the rest of the dataset and are no longer flagged as outliers, even though they originate from a different mean. This leads W to detect a low percentage of outliers. The W statistic becomes highly influenced by the existence of unusual values in the data because the standard deviation is inflated in value by the unusual or outlier values. This trend is observable throughout but diminishes as the sample size increases. For large sample sizes, the statistics exhibit comparable behavior.

Moreover, K’s robustness is particularly evident at a smaller error rate (), where K remains more reliable, while W struggles. This is a distinct advantage of K, especially that a lower error rate () produces a lower percentage than or , particularly for small sample sizes and small mean contamination. For lower means (e.g., mean = 2), the detection percentages are generally low, as contamination may not deviate significantly from the central tendency. These findings highlight K’s superior ability to detect outliers across a range of conditions, making it a more effective choice.

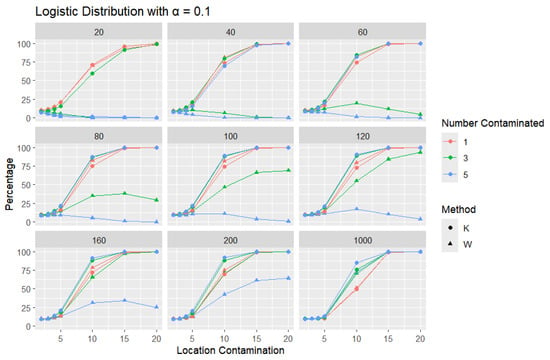

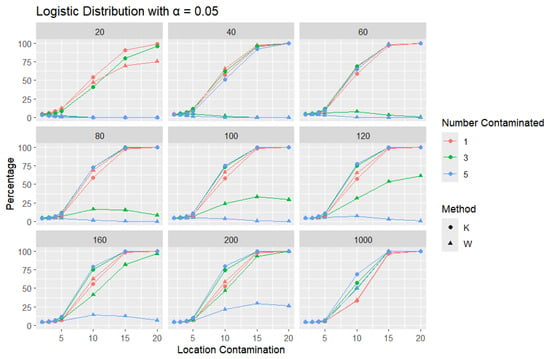

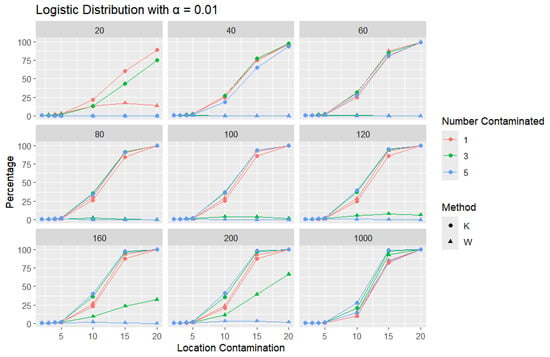

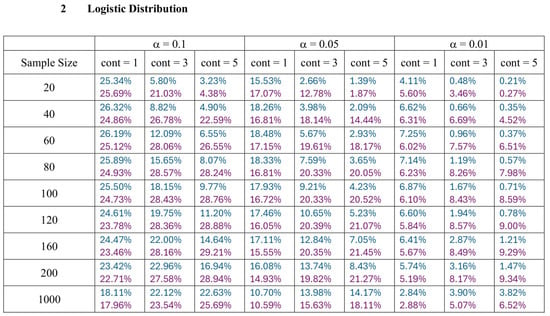

Logistic Distribution: For each sample size, n, we contaminate each sample originally generated from the logistic distribution ( = 0, scale = 1), with 1, 3, and 5 points and different locations ( 2, 3, 4, 5, 10, 15, and 20). The relative frequency of detecting at least one outlier is calculated for each sample size and each location contamination. The results are presented in Figure 9, Figure 10 and Figure 11.

Figure 9.

Line graphs of a 10% error rate for the percentage of detecting at least one outlier for the selected location contamination values (x-axis). The bullet point line represents K percentages, and the triangle point line represents W percentages.

Figure 10.

Line graphs of a 5% error rate for the percentage of detecting at least one outlier for the selected location contamination values (x-axis). The bullet point line represents K percentages, and the triangle point line represents W percentages.

Figure 11.

Line graphs of a 1% error rate for the percentage of detecting at least one outlier for the selected location contamination values (x-axis). The bullet point line represents K percentages, and the triangle point line represents W percentages.

In all scenarios, K consistently detects a higher percentage of outliers than W, particularly as the level of contamination and sample size increase. The difference in detecting outliers becomes more evident for higher contamination levels and lower values of . Once again, this trend suggests that K is more sensitive to outliers, making it a preferable approach over W. It is important to note that the logistic distribution is considered a heavy-tailed distribution, meaning that its tails extend farther on both sides compared to those of the normal distribution. This characteristic is reflected in the high threshold values reported in Table 1. The figures show that, for smaller contamination values, the detection rate is very low (almost zero) for both statistics. However, at large contamination values, that is, 15 and 20, as the contamination value increases substantially, K outperforms W at small sample sizes, after which their performance becomes comparable.

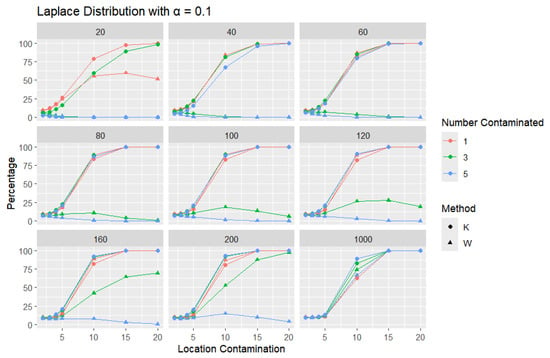

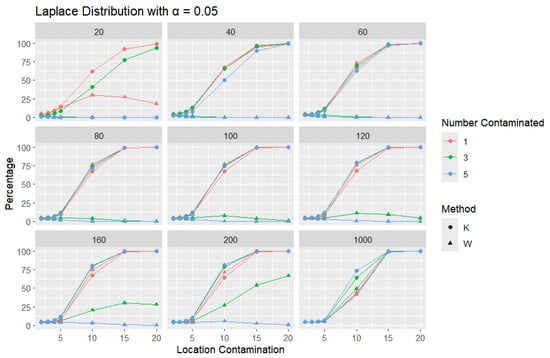

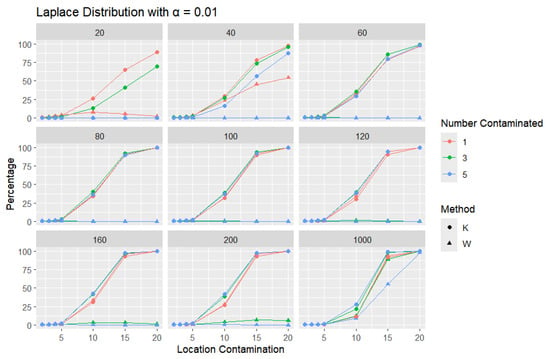

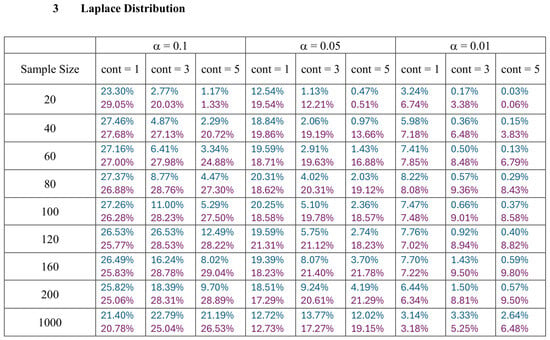

Laplace Distribution: For each sample size, n, we contaminate each generated sample with 1, 3, and 5 points from a Laplace distribution with different locations ( = 2, 3, 4, 5, 10, 15, and 20). The relative frequency of detecting at least one outlier is calculated for each sample size and each location contamination. The results are presented in Figure 12, Figure 13 and Figure 14.

Figure 12.

Line graphs of a 10% error rate for the percentage of detecting at least one outlier for the selected location contamination values (x-axis). The bullet point line represents K percentages, and the triangle point line represents W percentages.

Figure 13.

Line graphs of a 5% error rate for the percentage of detecting at least one outlier for the selected location contamination values (x-axis). The bullet point line represents K percentages, and the triangle point line represents W percentages.

Figure 14.

Line graphs of a 1% error rate for the percentage of detecting at least one outlier for the selected location contamination values (x-axis). The bullet point line represents K percentages, and the triangle point line represents W percentages.

A trend similar to that observed in the normal distribution can be seen in the figures for the Laplace distribution, where K outperforms W. However, the overall detection percentages are generally lower than those for the normal distribution. Specifically, with a smaller error rate (, we see that the percentage of detecting at least one outlier is much lower than with a higher error rate (, though K still maintains better performance than W. The Laplace distribution, just like the logistic distribution, is a heavy-tailed distribution, as reflected in the high threshold values shown in Table 1. This heavy-tailed nature makes outlier detection more challenging, especially when contaminating with small location shifts. The figures reveal that, for smaller contamination values, the detection rate is almost zero for both statistics. At higher mean values, such as 15 and 20, the detection rate increases, with K outperforming W with a smaller sample size. Therefore, K is more accurate in detecting outliers than W (has a high level of accuracy), showing its flexibility in terms of adjusting the detection method to the distribution of the data.

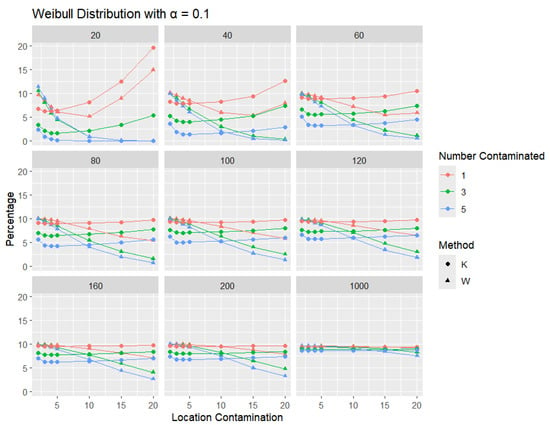

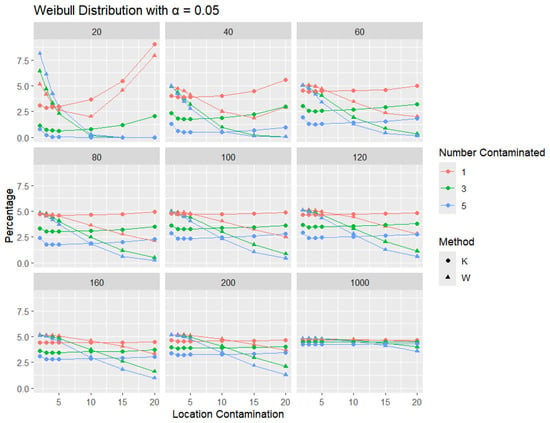

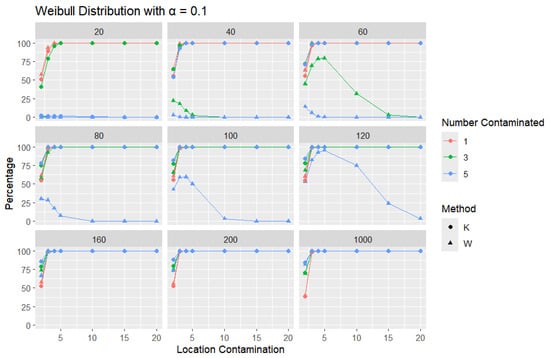

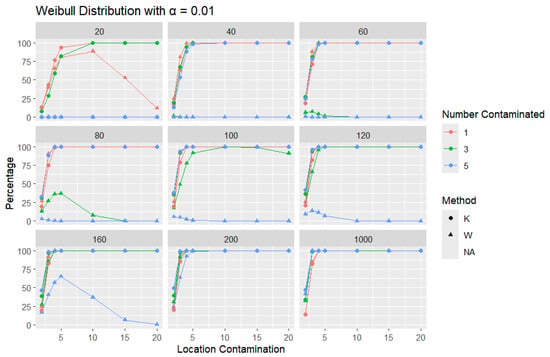

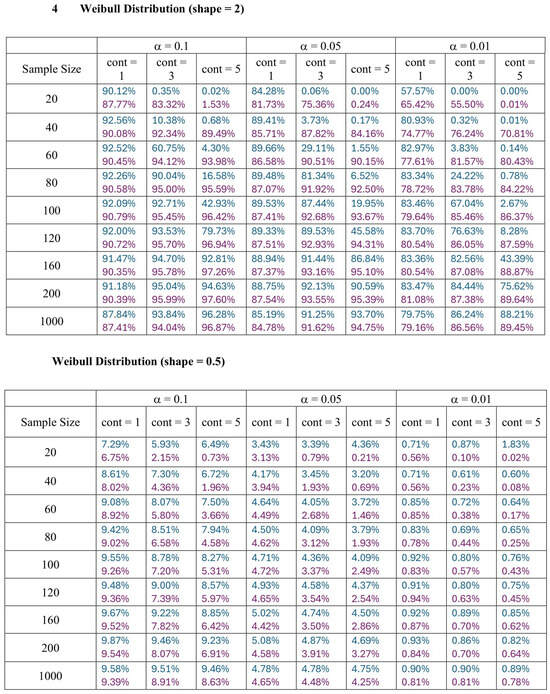

Weibull Distribution: For each sample size, n, we contaminate each generated sample with 1, 3, and 5 points from two different shape parameters of a Weibull distribution (shape = 0.5 and shape = 2) shifted by different locations ( = 2, 3, 4, 5, 10, 15, and 20). The relative frequency of detecting at least one outlier is calculated for each sample size and each mean contamination. The results for a shape parameter of 0.5 are presented in Figure 15, Figure 16 and Figure 17.

Figure 15.

Line graphs of a 10% error rate for the percentage of detecting at least one outlier for the selected location contamination values (x-axis) from Weibull shape = 0.5. The bullet point line represents K percentages, and the triangle point line represents W percentages.

Figure 16.

Line graphs of a 5% error rate for the percentage of detecting at least one outlier for the selected location contamination values (x-axis) from Weibull shape = 0.5. The bullet point line represents K percentages, and the triangle point line represents W percentages.

Figure 17.

Line graphs of a 1% error rate for the percentage of detecting at least one outlier for the selected location contamination values (x-axis) from Weibull shape = 0.5. The bullet point line represents K percentages, and the triangle point line represents W percentages.

Across all sample sizes, K consistently shows higher detection rates than W, particularly at smaller sample sizes and lower contamination levels. As the number of contaminated points increases, both methods tend to detect outliers more frequently; however, the relative advantage of K over W remains evident. As expected, with a stricter significance level, the detection rates of both methods are generally lower than in the 5% case. Nonetheless, K consistently demonstrates slightly higher detection percentages than W, especially at small sample sizes and when the contamination is mild. The performance gap gradually narrows with an increasing sample size, and both methods converge toward similar detection rates when the sample size reaches 1000. These results illustrate the robustness and sensitivity of K, especially under strong right-skewness and small-sample conditions.

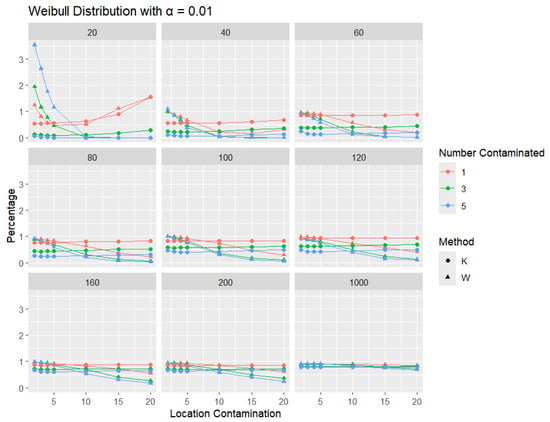

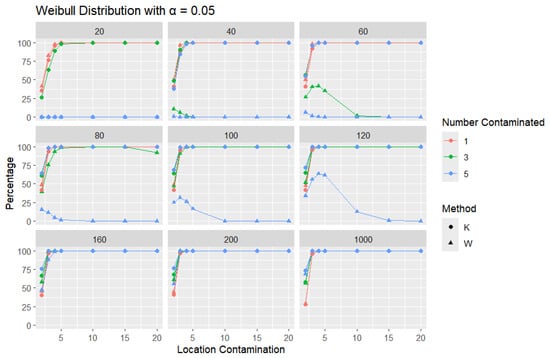

Figure 18.

Line graphs of a 10% error rate for the percentage of detecting at least one outlier for the selected location contamination values (x-axis) from Weibull shape = 2. The bullet point line represents K percentages, and the triangle point line represents W percentages.

Figure 19.

Line graphs of a 5% error rate for the percentage of detecting at least one outlier for the selected location contamination values (x-axis) from Weibull shape = 2. The bullet point line represents K percentages, and the triangle point line represents W percentages.

Figure 20.

Line graphs of a 1% error rate for the percentage of detecting at least one outlier for the selected location contamination values (x-axis) from Weibull shape = 2. The bullet point line represents K percentages, and the triangle point line represents W percentages.

Across all error rates and sample sizes, the K statistic consistently outperforms W in detecting outliers in the Weibull distribution. Notably, in the Weibull case, both K and W generally achieve much higher detection rates than in the normal, logistic, and Laplace distributions. This can be attributed to the skewed nature of the Weibull distribution, where the asymmetry makes outliers more distinguishable from the rest of the data. For small sample sizes (), K detects outliers at a very high rate, even with small increases in location contamination, while the performance of W remains extremely low, often close to zero. As the sample size increases (), both K and W detect outliers more effectively, but K still identifies them more quickly and consistently, especially at lower contamination levels.

4. Discussion

The experimental results in this study provide important insights into the performance of the standardized range statistic W and the relative range statistic K in detecting outliers across different probability distributions. While both statistics aim to identify anomalous observations in datasets, an analysis revealed clear distinctions in their behavior and effectiveness depending on the underlying distribution, sample size, contamination level, and error rate. Empirical analyses across four distributions, namely, normal, logistic, Laplace, and Weibull distributions, consistently highlighted the relative range statistic K as a more robust outlier detection method than the standardized range W, particularly in skewed distributions and under small error rates.

For the normal distribution, K consistently detected a higher proportion of outliers across sample sizes and contamination levels, particularly at a lower error rate of . This advantage became more prominent as the contamination mean increased, demonstrating K’s superiority to subtle anomalies in normally distributed data. Interestingly, for larger samples (e.g., ), both methods showed convergence in performance, which might explain why earlier works, such as that by Harter [11], focused only on sample sizes up to 100, where the sensitivity and variability of range-based statistics are the most noticeable. In the logistic and Laplace distributions, which are symmetric but have heavier tails than the normal distribution, K clearly outperformed W in almost all scenarios. Both distributions exhibited low detection rates, particularly at smaller contamination levels and lower error rates. This was expected, as heavy tails tend to hide moderate outliers. The advantage was particularly evident for smaller sample sizes and higher location contamination values, where W’s detection rates increased only marginally, while K continued to show improved performance. Interestingly, even when the error rate decreased or the sample size increased, K kept performing better, while W stayed small, with an insignificant increase in the percentage. This shows that K handles data spread and variability more effectively. The most remarkable results emerged from the Weibull distribution with shape = 2, which is positively skewed. The detection rates for both statistics were significantly higher than in the other distributions. Even for small contamination values and small samples, K achieved an almost 100% detection rate in many scenarios. This suggests that skewed distributions may inherently increase the visibility of outliers, making range-based methods more effective.

In terms of performance across multiple error rates ( = 0.1, 0.05, 0.01), K appeared to maintain a more stable behavior than W, further establishing its robustness under various testing conditions. Lower values were associated with lower detection rates for both K and W. An important advantage of our simulation-based approach is its reliance on the empirical probability characteristics of the data. This is particularly useful when working with real-world datasets where the underlying distribution is unknown or deviates from normality. By leveraging the distributional behavior of K, this method provides a flexible and adaptable framework for practical outlier detection. In general, the K statistic proves to be a more flexible and reliable method, especially when working with data that are not normally distributed. K is robust in terms of maintaining accuracy in outlier detection under various conditions. It adapts better across different distributions and sample sizes, making it a solid choice for feasible outlier detection. This is also reflected in the numerical summaries of the line graphs, presented as the average percentage detected for each distribution, which can be found in the Appendix Figure A1, Figure A2, Figure A3 and Figure A4. In addition to its statistical performance, the relative range statistic K is computationally efficient and straightforward to implement. It requires only the calculation of the sample maximum, minimum, and quartiles.

Analytical Justification of K’s Performance

The use of the IQR to standardize the range statistic plays a critical role in enhancing the performance and robustness of K for outlier detection. Unlike the standard deviation, which is highly sensitive to extreme values, the IQR is a robust measure of scale that focuses on the middle 50% of the data and is therefore resistant to distortion by outliers. When the standard deviation is used, a single extreme observation can inflate the estimate of variability, masking the presence of additional outliers and leading to inconsistent performance. In contrast, dividing by the IQR stabilizes the behavior of the statistic across different distributional shapes and tail behaviors. This robustness is particularly important when dealing with skewed or heavy-tailed distributions, where the presence of extreme values is more common. As a result, K offers a more consistent detection of outliers across a wide range of settings, which is supported by the empirical findings presented in this study.

5. Conclusions

This study evaluated the effectiveness of two range-based statistics, the standardized range W and the relative range K, for outlier detection across four common distributions and a variety of sample sizes. By normalizing the range by the interquartile range, K proved to be better than W at handling outlier values in different data scenarios. Across all distributions, K showed higher detection rates than W, with the difference being more noticeable in heavy-tailed or skewed distributions like Laplace and Weibull distributions. In the Weibull case, both methods performed better than in the other distributions, but K still produced higher and more stable detection rates than W. These results consistently remained true for different error rates () and varying numbers of contaminants. Consequently, the findings confirm that K is a more effective and reliable tool for detecting outliers, particularly for small sample sizes and non-normally distributed datasets.

It would be of interest for future work to see how K performs in more complex situations such as multivariate data or real-world applications where the underlying distribution is unknown. But for univariate outlier detection, especially in exploratory analysis, K appears to be a strong contender.

Author Contributions

Conceptualization, D.D., H.S., A.A.Z. and F.K.; formal analysis, D.D., H.S. and A.A.Z.; methodology, D.D. and H.S.; software, D.D., A.A.Z. and F.K.; writing—original draft, D.D. and H.S.; writing—review and editing, D.D., H.S. and F.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the open access program from the American University of Sharjah (AUS) and by the AUS Faculty research grant FRG22-C-S60/AS1624.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Numerical descriptions of the line graphs are represented in the tables below as the average percentages of each distribution.

Figure A1.

Average percentages of W and K for each number of contaminated points and each sample size from the normal distribution: blue indicates the average percentage of W, and purple indicates the average percentage of K.

Figure A2.

Average percentages of W and K for each number of contaminated points and each sample size from the logistic distribution: blue indicates the average percentage of W, and purple indicates the average percentage of K.

Figure A3.

Average percentages of W and K for each number of contaminated points and each sample size from the Laplace distribution: blue indicates the average percentage of W, and purple indicates the average percentage of K.

Figure A4.

Average percentage of W and K for each number of contaminated points and each sample size from the Weibull distribution with shape parameters of 0.5 and 2: blue indicates the average percentage of W, and purple indicates the average percentage of K.

References

- Dastjerdy, B.; Saeidi, A.; Heidarzadeh, S. Review of applicable outlier detection methods to treat geomechanical data. Geotechnics 2023, 3, 375–396. [Google Scholar] [CrossRef]

- Saleem, S.; Aslam, M.; Shaukat, M. A Review and Empirical Comparison of Univariate Outlier Detection Methods. Pak. J. Statist. 2021, 37, 447–462. [Google Scholar]

- Massi, M.; Ieva, F.; Lettieri, E. Data mining application to healthcare fraud detection: A two-step unsupervised clustering method for outlier detection with administrative databases. BMC Med. Inform. Decis. Mak. 2020, 20, 160. [Google Scholar] [CrossRef]

- Malini, N.; Pushpa, M. Analysis on credit card fraud identification techniques based on KNN and outlier detection. In Proceedings of the 2017 Third International Conference on Advances in Electrical, Electronics, Information, Communication and Bio-Informatics (AEEICB), Chennai, India, 27–28 February 2017; pp. 255–258. [Google Scholar]

- Capelleveen, G.; Poel, M.; Mueller, R.; Thornton, D.; Hillegersberg, J. Outlier detection in healthcare fraud: A case study in the Medicaid dental domain. Int. J. Account. Inf. Syst. 2016, 21, 18–31. [Google Scholar] [CrossRef]

- Chakhchoukh, Y.; Liu, S.; Sugiyama, M.; Ishii, H. Statistical outlier detection for diagnosis of cyber attacks in power state estimation. In Proceedings of the 2016 IEEE Power And Energy Society General Meeting (PESGM), Boston, MA, USA, 17–21 July 2016; pp. 1–5. [Google Scholar]

- Tukey, J. Exploratory Data Analysis; Addison-Wesley Pub. Co.: Reading, MA, USA, 1977. [Google Scholar]

- Hubert, M.; Vandervieren, E. An adjusted boxplot for skewed distributions. Comput. Stat. Data Anal. 2008, 52, 5186–5201. [Google Scholar] [CrossRef]

- Grubbs, F. Sample Criteria for Testing Outlying Observations; University of Michigan: Ann Arbor, MI, USA, 1949. [Google Scholar]

- Dovoedo, Y.; Chakraborti, S. Boxplot-based outlier detection for the location-scale family. Commun. Stat.-Simul. Comput. 2015, 44, 1492–1513. [Google Scholar] [CrossRef]

- Harter, H. Use of tables of percentage points of range and studentized range. Technometrics 1961, 3, 407–411. [Google Scholar] [CrossRef]

- Dallah, D.; Sulieman, H. Outlier Detection Using the Range Distribution. In Proceedings of the International Conference on Recent Developments in Mathematics, Dubai, United Arab Emirates, 24–26 August 2022; pp. 687–697. [Google Scholar]

- Dallah, D.; Sulieman, H.; Alzaatreh, A. Outlier Detection Based on Range Statistic. In Statistical Outliers and Related Topics; CRC Press: Boca Raton, FL, USA, 2025; p. 153. [Google Scholar] [CrossRef]

- Dallah, D.; Sulieman, H.; Alzaatreh, A. Relative range for skewed distributions: A tool for outlier detection. Gulf J. Math. 2024, 16, 298–312. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).