Abstract

Online recruitment platforms are transforming talent acquisition paradigms, where a precise person-job fit plays a pivotal role in intelligent recruitment systems. However, current methodologies predominantly rely on coarse-grained semantic analysis, failing to address the textual structural dependencies and noise inherent in resumes and job descriptions. To bridge this gap, the novel fine-grained semantics-enhanced graph neural network for person-job fit (FSEGNN-PJF) framework is proposed. First, graph topologies are constructed by modeling word co-occurrence relationships through pointwise mutual information and sliding windows, followed by graph attention networks to learn graph structural semantics. Second, to mitigate textual noise and focus on critical features, a differential transformer and self-attention mechanism are introduced to semantically encode resumes and job requirements. Then, a novel fine-grained semantic matching strategy is designed, using the enhanced feature fusion strategy to fuse the semantic features of resumes and job positions. Extensive experiments on real-world recruitment datasets demonstrate the effectiveness and robustness of FSEGNN-PJF.

1. Introduction

Online recruitment platforms (e.g., LinkedIn, Zhipin, and Liepin) have emerged as mainstream tools for talent recruitment due to their efficiency [1]. As of July 2023, LinkedIn reported over 930 million global users across 200+ countries, underscoring its widespread adoption. However, this growth has exacerbated information overload, necessitating advanced methods to accurately align job requirements with candidate capabilities from massive datasets, a critical challenge in person-job fit research.

Early approaches framed person-job fit as a recommendation task [2,3]. For instance, Malinowski et al. [2] proposed a bilateral recommendation model via mining key characteristics such as candidate skills and educational backgrounds. While effective, such methods incur high costs and subjective biases due to their reliance on domain expertise. Subsequent studies shifted toward supervised text matching.

More recently, deep learning-based person-job fit methods have garnered significant attention from researchers. Zhu et al. [4] employed parallel convolutional neural networks (CNNs) to extract the features of resumes and job positions and measured their similarity via cosine similarity. Qin et al. [1] integrated bidirectional long short-term memory (BiLSTM) with hierarchical attention to prioritize critical skills, while Shao et al. [5] utilized bidirectional encoder representations from transformers (BERT) and multi-head attention to explore internal and external interactions for multivariate attributes in job-resume matching. Despite this progress, these methods still face two notable challenges. First, candidate resumes and job requirement descriptions often contain noisy information, and existing methods have limited capacity to filter it out, which undermines matching performance. Second, current approaches predominantly rely on coarse-grained semantics to match resumes with job requirements, which prevents them from fully exploiting the matching signals between the two parties.

To capture the deep semantic associations and textual structural information between job seekers’ resumes and job requirements, graph attention networks (GATs) are introduced. A differential attention mechanism is incorporated to mitigate the impact of noise, enabling more accurate semantic representations of both resumes and job requirements. To further capture the global dependencies within the texts of resumes and job requirements and to focus on the importance weights of different words, a self-attention mechanism is employed to semantically encode the resumes and job positions. To facilitate semantic matching between job seekers’ resumes and job requirements from a multi-granularity perspective, a fine-grained semantic matching strategy at the resume-job position level is devised. Inspired by the successful application of the Kolmogorov-Arnold network (KAN) to capturing nonlinear relationships, we introduce it into the person-job matching task.

To this end, this paper proposes a framework termed the fine-grained semantics-enhanced graph neural network for person-job fit (FSEGNN-PJF). Inspired by the successful application of graph neural network (GNN)-based methods [6,7] in other tasks, a GNN is introduced into the person-job fit task. Specifically, key features, including skills and experiences, are first extracted from both resumes and job postings and defined as nodes within a graph structure. The edges connecting these nodes are established through pointwise mutual information (PMI) and a sliding window method, which captures the contextual associations between job requirements and resume attributes. Subsequently, GATs are employed to learn the structural representations of the constructed graph. To further optimize the encoding process, a differential transformer (DIFF Transformer) is introduced. This model encodes both resume and job posting inputs and is combined with a self-attention mechanism, thereby enhancing the framework’s ability to focus on critical features relevant to job-resume fit. Finally, a novel fine-grained semantic matching computation method is designed. This method leverages Kolmogorov-Arnold networks (KANs) to deeply fuse semantic features from applicants’ resumes and job requirements, thereby improving person-job fit performance. The experimental results validate the effectiveness of the FSEGNN-PJF framework.

The principal contributions of this paper are as follows:

- (1) An innovative graph construction methodology, grounded in the principles of co-occurrence windows and PMI, has been meticulously developed to construct graph representations for job seekers’ resumes and job requirement texts. The overarching objective of this approach is to conduct an in-depth exploration of the semantic structural interdependencies embedded within these texts. By leveraging GATs, the graph structures are encoded, facilitating the enhancement of node feature representations through the aggregation of pertinent neighborhood information.

- (2) A sophisticated semantic encoding framework tailored to resumes and job requirements and integrating a hybrid attention mechanism is proposed. This framework is designed to adeptly capture the semantic dependencies between job seekers’ resumes and job requirements, dynamically allocate feature weights, and effectively filter out noise within data. Consequently, it significantly bolsters the semantic representations of both resumes and job requirements, thereby refining their semantic fidelity.

- (3) A meticulously crafted fine-grained semantic matching computation methodology is devised which synergistically combines multi-granularity text similarity measurement strategies. A KAN is introduced to optimize the activation function expression and augment the fine-grained semantic representations inherent in job seekers’ resumes and job requirements, culminating in the attainment of highly precise person-job matching performance.

- (4) Empirical validation showing the novel FSEGNN-PJF framework achieves state-of-the-art performance.

The rest of this paper is organized as follows. Section 2 reviews the recruitment analysis and text matching literature. Section 3 formalizes the problem and details the FSEGNN-PJF framework. Section 4 presents the experimental set-up, baselines, evaluation metrics, overall performance, ablation study, and case study. Section 5 concludes this work.

2. Literature Review

The related work in this paper is categorized into two main areas: recruitment analysis and text matching.

2.1. Recruitment Analysis

Recruitment serves as the fundamental method for organizational talent acquisition, playing a pivotal role in enterprise success. As a critical task in talent management, effective recruitment strategies aim to optimize candidate-position matches, with empirical evidence demonstrating that employee-position mismatches cause significant turnover [8,9]. The burgeoning field of person-job fit research has emerged as a focal point in contemporary human resource analytics.

Several researchers have studied person-job fit from a performance prediction [10,11] perspective. In particular, the matching of recruitment positions with job seekers’ resumes has received great attention from researchers. Recommendation system paradigms have yielded notable contributions, including hybrid collaborative filtering techniques combining K-nearest neighbor algorithms with clustering approaches to enhance recommendation accuracy [12]. Bilateral recommendation methods have further advanced the field through separate modeling of candidates’ and job positions’ preference relationships [2]. A job recommendation model combining gradient-boosted regression trees (GBRTs) with neighborhood-based filtering and time factors demonstrated improved preference prediction through temporal feature extraction [13]. In the LinkedIn recommendation system, a generalized linear mixed model achieved scalable job recommendations through user- and item-level personalization [3].

Recently, researchers have studied the person-job fit task from another perspective. Deep learning technology has achieved impressive performance in the person-job fit task. A parallel CNN enabled semantic matching of resumes and position descriptions through shared latent space mapping and cosine similarity methods [4]. An ability-aware person-job fit model employing BiLSTM networks with multi-level hierarchical attention mechanisms introduced encoding representation and ability-aware representation learning of job seekers’ resumes and job requirements [1]. Feature fusion approaches combining a factorization machine-based neural network and CNN with long short-term memory network modeling effectively integrated explicit and implicit characteristics from historical recruitment data to extract the global features of resumes and job requirements [14]. Subsequent studies addressed critical limitations in preference modeling through gated recurrent unit-based frameworks incorporating historical interaction patterns [15], while reinforcement learning strategies introduced a dynamic matching optimization strategy considering the labor market fluctuations [16].

Current research frontiers focus on sophisticated semantic modeling through transformer architectures. The BERT framework has demonstrated superior contextual understanding in cross-domain matching tasks. Recent studies employed BERT to project attribute keys, values, and their sources from both resumes and job postings into a unified semantic space and then employed multi-head attention mechanisms to model intra-attribute and inter-attribute interactions across candidate resumes and position requirements [5]. This progression from traditional recommendation systems to deep semantic modeling reflects the field’s evolution toward sophisticated context-aware matching paradigms.

2.2. Text Matching

Text-based person-job matching constitutes a specialized text mining task fundamentally aligned with natural language processing (NLP) paradigms, particularly text classification [17]. As a core NLP task, text matching has witnessed substantial methodological evolution through deep learning advancements, with contemporary research predominantly leveraging deep learning technology for text matching.

Current methodologies bifurcate into representation-based and interaction-based paradigms. The former employs Siamese networks for semantic similarity computation through two-sentence encoding. Pioneering work includes deep structured semantic models (DSSMs) utilizing deep neural networks for semantic vector projection and cosine similarity measurement [18], and convolutional neural network architectures extracting lexical matching patterns from pretrained embeddings via convolution layers and pooling layers [19]. While effective in individual text representation, these approaches exhibit limitations in modeling cross-text interactions.

Interaction-based methods address this gap through explicit inter-sentence relationship modeling. Enhanced sequential inference frameworks combine BiLSTM-processed embeddings with soft attention mechanisms for high-order interaction extraction [20]. Iterative interaction modules with multi-perspective pooling layers enable progressive relational understanding between text pairs [21]. Graph-based relevance models introduce structural flexibility through term-level interaction and document-level relationship modeling, effectively addressing long-distance semantic matching challenges [22]. Advanced architectures integrate frame semantics with multi-level attention mechanisms, capturing contextualized frame elements and hierarchical semantic relationships [23]. Recent innovations employ keyword-centric attention strategies to simultaneously model word-level alignments and sentence-level semantic dependencies [24]. This paradigm shift from isolated representation learning to explicit interaction modeling has significantly advanced text matching precision in complex semantic environments.

3. Method

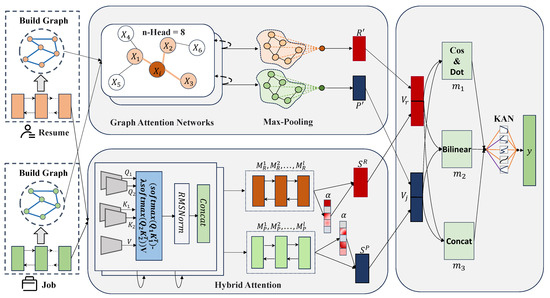

The proposed fine-grained semantics-enhanced graph neural network for person-job fit framework comprises three structured components: graph-based semantic encoding for resumes and job positions, hybrid attention mechanism-based semantic encoding for resumes and job positions, and person-job fit. Figure 1 illustrates the architecture of the proposed FSEGNN-PJF framework.

Figure 1. The architecture of the proposed FSEGNN-PJF framework.

The workflow of the FSEGNN-PJF framework is described below using an example resume and job description. The framework takes the applicant’s resume (Resume) and the job requirements (Job) posted by the enterprise as inputs and outputs the prediction result for person-job fit.

Specifically, in the graph-based semantic encoding of resumes and job descriptions, text graph structures for both the applicant’s resume and the job requirements are first constructed. The texts from the resume and the job description are preprocessed through tokenization and other necessary steps, yielding word sequences for the resume (“Asp”, “SQLServer”, “database”, “proficient in”, etc.) and for the job requirements (“database”, “programming language”, “JavaScript”, etc.). The words in these sequences serve as nodes in the graph. After that, the PMI values between nodes, which determine the weights of the edges, are calculated, thereby highlighting the semantic association strengths among key skills (e.g., “SQLServer”, which is one of the “database programming languages”). This approach provides more effective structural information for the graph neural network. GATs are then employed to encode and learn from the constructed text graphs, aiming to capture the semantic structures within both the resume and job requirements.

In the semantic encoding module for resumes and job descriptions based on a hybrid attention mechanism, a differential attention encoder is primarily employed to encode both the resume and job requirements. This encoder is capable of effectively reducing the attention weights assigned to misspelled or noisy words, such as “jingxing”. Subsequently, a self-attention mechanism is utilized to further focus on the key skill features within both the resume and job requirements (“JavaScript”, “SQLServer”, “database”, etc.). Finally, considering that terms like “.Net platform” and “.Net framework” are highly similar in semantics but exhibit low similarity in their word vector representations, adversely affecting the matching results, a feature fusion strategy with fine-grained semantic enhancement is designed to effectively integrate the graph-based semantic encoding features and hybrid attention-based semantic encoding features, thereby determining the match between the applicant’s resume and the job posting.

3.1. Problem Definition

This paper aims to study the matching between job seekers’ resumes and job requirements in person-job fit tasks. Specifically, a job seeker’s resume contains n skills, denoted as $R = \{r_1, r_2, \dots, r_n\}$, and each skill $r_l$ consists of s words, represented by $r_l = \{w_{l,1}, w_{l,2}, \dots, w_{l,s}\}$. Similarly, a job posting contains m requirements, denoted as $P = \{p_1, p_2, \dots, p_m\}$, with each job requirement $p_k$ comprising v words, represented by $p_k = \{w'_{k,1}, w'_{k,2}, \dots, w'_{k,v}\}$. A label $y \in \{0, 1\}$ is used to indicate the recruitment outcome, where $y = 1$ signifies a successful match and $y = 0$ indicates a mismatch.

Based on the above definitions, the person-job fit task can be formulated as learning a predictive model from the interaction records of existing job seekers’ resumes and job requirements. This model calculates the matching score between R and P and subsequently predicts the corresponding outcome label y.

3.2. Graph-Based Semantic Encoding for Resumes and Job Positions

Enterprises primarily rely on keyword-based matching of skills and experiences to identify suitable candidates. However, this approach struggles to accurately capture the deep semantic associations and textual structural information between job seekers’ resumes and job requirements. To address this issue, graph networks need to be introduced into the person-job matching task. The initial step in employing graph networks involves graph construction.

3.2.1. Graph Construction Methods for Resumes and Job Positions

The resumes R and the job requirements P to be matched need to be transformed into graph structures. Next, taking resumes as an example, the specific way to construct a graph structure is as follows.

First, an empty undirected graph G is initialized. Each skill in the job seekers’ resume texts R is segmented into a word sequence. The word sequences of the resumes are traversed in order. If a word exists in the pretrained Word2Vec word embedding model, then its corresponding $d$-dimensional feature vector is obtained. In this context, the word vectors for all nodes in both the resume graph and the job graph are derived from the same pretrained Word2Vec model. The words are added as nodes to the graph G, with the corresponding word vectors serving as the nodes’ representations.

Second, edges are constructed using a co-occurrence window approach. Specifically, for the segmented sequence of the job seekers’ resumes, edges are formed between words within the co-occurrence window range. The above steps are repeated until a complete graph structure is constructed.

Finally, PMI is utilized to quantify the semantic association strength between key features. The edges constructed between nodes with the sliding window approach are assigned weights so that the graph focuses on the strong associative relationships among local features. The probability that the words of each pair of connected nodes co-occur in the resumes in graph G is calculated and denoted as $p(w_i, w_j)$. The probabilities of each node’s word occurring individually are calculated as $p(w_i)$ and $p(w_j)$. The PMI between adjacent words is computed using the following formula:

$$\mathrm{PMI}(w_i, w_j) = \log \frac{p(w_i, w_j)}{p(w_i)\, p(w_j)}$$

where $w_i$ represents the word corresponding to the ith node in graph G and $w_j$ represents the word corresponding to the jth node. When the PMI is positive, a weighted edge is added between the nodes corresponding to the two words, with the weight being the PMI value.

The graph construction method for the job requirements P to be matched is similar to the above method for the job seekers’ resumes.
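The graph construction steps above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `build_pmi_graph` is hypothetical, and the probabilities are assumed to be estimated from sliding-window counts (the fraction of windows containing a word or a word pair), which the text does not specify.

```python
import math
from collections import Counter
from itertools import combinations


def build_pmi_graph(sequences, window_size=2):
    """Return {(word_a, word_b): pmi} for word pairs with positive PMI.

    Assumption: probabilities are estimated as the fraction of sliding
    windows that contain the word (or the word pair).
    """
    window_count = 0
    word_windows = Counter()  # number of windows containing each word
    pair_windows = Counter()  # number of windows containing each word pair
    for tokens in sequences:
        # Slide a fixed-size window over the sequence (one window if it is short).
        n_windows = max(1, len(tokens) - window_size + 1)
        for start in range(n_windows):
            window = sorted(set(tokens[start:start + window_size]))
            window_count += 1
            word_windows.update(window)
            pair_windows.update(combinations(window, 2))

    edges = {}
    for (a, b), n_ab in pair_windows.items():
        p_ab = n_ab / window_count
        p_a = word_windows[a] / window_count
        p_b = word_windows[b] / window_count
        pmi = math.log(p_ab / (p_a * p_b))
        if pmi > 0:  # only positively associated pairs become weighted edges
            edges[(a, b)] = pmi
    return edges


# Toy word sequences (one per skill), loosely based on the running example.
resume_skills = [
    ["proficient in", "SQLServer", "database"],
    ["SQLServer", "database"],
    ["Asp", "JavaScript"],
]
for (a, b), weight in build_pmi_graph(resume_skills, window_size=2).items():
    print(f"{a} -- {b}: {weight:.3f}")
```

The window size of 2 matches the co-occurrence window reported in the experimental set-up. Each edge key is an alphabetically sorted word pair, which keeps the graph undirected.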

3.2.2. Graph Attention Network-Based Semantic Encoding for Resumes and Job Positions

GATs [25], by stacking multiple layers of network structures, enable each node to dynamically attend to the features of its neighboring nodes. This approach adaptively learns the importance weights between nodes, effectively extracting and encoding key features from job seekers’ resumes and job requirements.

Taking job seekers’ resumes as an example, the resume graph is represented by $G = (V, E)$, where V denotes the node set and E denotes the edge set. The similarity between node i (with features $h_i$) and node j (with features $h_j$) is calculated via linear transformation and concatenation using the formula:

$$e_{ij}^{l} = \sigma\left( (a^{l})^{\top} \left[ W^{l} h_i \,\|\, W^{l} h_j \right] \right)$$

where $W^{l} h_i$ and $W^{l} h_j$ are the linearly transformed features of $h_i$ and $h_j$, respectively, $W^{l}$ represents the trainable weight matrix of the lth attention head, $a^{l}$ represents the attention parameters of the lth attention head, $\sigma$ is the LeakyReLU activation function, and ‖ denotes the concatenation operation.

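The softmax function is then applied to normalize the similarity between $h_i$ and $h_j$, yielding the attention coefficient $\alpha_{ij}^{l}$. The formula is as follows:

$$\alpha_{ij}^{l} = \frac{\exp\left(e_{ij}^{l}\right)}{\sum_{k \in \mathcal{N}_i} \exp\left(e_{ik}^{l}\right)}$$

where $e_{ij}^{l}$ is the similarity between nodes i and j under the lth attention head and $\mathcal{N}_i$ is the set of neighboring nodes of node i.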

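Next, the features of each node’s neighboring nodes are weighted and summed using the attention coefficients. The feature representations from each attention head are then concatenated to obtain the node features of the resume. The formula for this is as follows:

$$h_i' = \Big\Vert_{l=1}^{L} \sigma\Big( \sum_{j \in \mathcal{N}_i} \alpha_{ij}^{l} W^{l} h_j \Big)$$

where L is the total number of attention heads, $\alpha_{ij}^{l}$ is the attention coefficient of the lth attention head, $W^{l}$ is its trainable weight matrix, $\mathcal{N}_i$ is the set of neighboring nodes of node i, $\sigma$ is the LeakyReLU activation function, and ‖ denotes vector concatenation.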

Through the above method, a single GAT layer is obtained. In the experiments, two stacked GAT layers are employed, where each GAT layer updates and aggregates the node features to obtain the refined node representations $H_R$.

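Subsequently, global max pooling is used to refine and compress the information within the graph attention network structure. Specifically, the element-wise maximum over the node feature vectors is selected as the global feature representation of the graph. The formula for this is as follows:

$$g_R = \mathrm{MaxPool}(H_R)$$

where $H_R$ denotes the node representations output by the stacked GAT layers and $g_R$ is the resulting global feature vector of the resume graph.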

The semantic encoding method for the job requirements to be matched is similar to the above semantic encoding method for the job seekers’ resumes.
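To make the attention computation concrete, the following sketch implements a single attention head followed by global max pooling in plain Python. It is an illustration under stated assumptions, not the authors' code: the helper names (`gat_head`, `global_max_pool`), the inclusion of each node in its own neighborhood, and the toy parameters are all hypothetical, and the PMI edge weights are not used inside the attention score here.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def matvec(W, h):
    """Multiply matrix W (list of rows) by vector h."""
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def gat_head(features, neighbors, W, a):
    """One attention head: returns (updated node features, attention coefficients)."""
    z = {i: matvec(W, h) for i, h in features.items()}  # linear transformation
    out, alpha = {}, {}
    for i, nbrs in neighbors.items():
        # Unnormalised similarity: LeakyReLU(a^T [z_i || z_j]) for each neighbor j.
        scores = [leaky_relu(sum(p * q for p, q in zip(a, z[i] + z[j]))) for j in nbrs]
        top = max(scores)  # subtract the maximum for numerical stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        alpha[i] = {j: e / total for j, e in zip(nbrs, exps)}
        # Attention-weighted sum of transformed neighbor features, then LeakyReLU.
        dim = len(z[i])
        agg = [sum(alpha[i][j] * z[j][k] for j in nbrs) for k in range(dim)]
        out[i] = [leaky_relu(v) for v in agg]
    return out, alpha


def global_max_pool(node_features):
    """Element-wise maximum over all node vectors."""
    return [max(column) for column in zip(*node_features.values())]


# Toy graph with three word nodes; each node lists itself as a neighbor (assumption).
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
neighbors = {0: [0, 1], 1: [0, 1, 2], 2: [1, 2]}
W = [[1.0, 0.0], [0.0, 1.0]]   # hypothetical weight matrix
a = [0.5, -0.5, 1.0, 0.25]     # hypothetical attention parameters
node_out, coefficients = gat_head(features, neighbors, W, a)
graph_vector = global_max_pool(node_out)
```

A multi-head layer would run `gat_head` once per head with separate parameters and concatenate the per-node outputs. Stacking two such layers corresponds to the two-layer configuration described above.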

3.3. Hybrid Attention Mechanism-Based Semantic Encoding for Resumes and Job Positions

In this subsection, a semantics-focused hybrid attention mechanism encoding method is introduced to capture the semantics of job seekers’ resumes and enterprise recruitment requirements.

3.3.1. Differential Attention Mechanism-Based Semantic Encoding for Resumes and Job Positions

The differential attention mechanism mitigates the impact of noise, enabling more accurate semantic representation of the content within job seekers’ resumes and job requirements. Taking job seekers’ resumes as an example, the resume semantic encoding method using the differential attention mechanism is as follows.

First, the pretrained word embedding matrix is used to map job seekers’ skills into a continuous vector space:

$$x_{l,s} = W_e\, w_{l,s}$$

where $w_{l,s}$ denotes the sth word in the lth skill, $W_e$ is the pretrained word embedding matrix, and $x_{l,s}$ represents the vector of the sth word within the skill $r_l$. It is crucial to emphasize that the word embedding matrix is shared between enterprise recruitment requirements and job seekers’ resumes.

Subsequently, the representation of the job seeker’s resume is obtained, and the calculation formula is as follows:

$$X_R = [x_{1,1:s}, x_{2,1:s}, \dots, x_{n,1:s}]$$

where $x_{n,1:s}$ represents the embedded representation of the nth skill. For example, in $x_{1,1:s}$, the first index 1 refers to the first skill, and 1:s refers to the first through sth words in that skill. The rest follow similarly.

Next, the multi-head differential attention mechanism from the differential transformer (DIFF Transformer) [26] is introduced to encode the job seekers’ resumes. To remove noise from the attention scores, the difference between a pair of softmax attention maps is used.

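Specifically, the embedded representation of the job seekers’ resumes $X_R$ is projected into a query, key, and value. By multiplying $X_R$ with the weight matrices of the query, key, and value ($W^{Q}$, $W^{K}$, and $W^{V}$, respectively), the representations of the query $Q_1$ and $Q_2$, key $K_1$ and $K_2$, and value V are obtained. The differential attention operation is calculated using the following formulas:

$$[Q_1; Q_2] = X_R W^{Q}, \quad [K_1; K_2] = X_R W^{K}, \quad V = X_R W^{V}$$

$$\mathrm{DiffAttn}(X_R) = \left( \mathrm{softmax}\left( \frac{Q_1 K_1^{\top}}{\sqrt{d}} \right) - \lambda\, \mathrm{softmax}\left( \frac{Q_2 K_2^{\top}}{\sqrt{d}} \right) \right) V$$

where $W^{Q}$, $W^{K}$, and $W^{V}$ are the projection matrices for each of the h attention heads, h represents the number of differential multi-head attention heads, $d$ is the dimension of the query and key vectors, and $\lambda$ is a learnable scalar used to control the balance between the two softmax functions.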

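Next, root mean square layer normalization (RMSNorm) is applied to the output of each attention head, using the fixed multiplier $(1 - \lambda_{\mathrm{init}})$ as the normalization scaling factor. The outputs of multiple heads are concatenated and linearly transformed through a learnable projection matrix $W^{O}$ to obtain the output result of the differential multi-head attention $\mathrm{MultiHead}(X_R)$, as shown in the following formulas:

$$\mathrm{head}_i = (1 - \lambda_{\mathrm{init}}) \cdot \mathrm{RMSNorm}\left( \mathrm{DiffAttn}_i(X_R) \right)$$

$$\mathrm{MultiHead}(X_R) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^{O}$$

where $\mathrm{head}_i$ represents the attention head representation of the input $X_R$ after differential attention calculation and normalization for the ith attention head, $\mathrm{DiffAttn}_i$ denotes the differential attention of the ith head, Concat denotes the concatenated output result of the h attention heads, and $\lambda_{\mathrm{init}}$ is a hyperparameter used to initialize $\lambda$.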

Finally, the output of the multi-head attention is fed into a feedforward neural network (FFN) to obtain the semantic representation of the resumes $Z_R$:

$$Z_R = \mathrm{FFN}\left( \mathrm{MultiHead}(X_R) \right)$$

The differential attention semantic encoding method for the recruitment requirements is similar to the above semantic encoding method for resumes.
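The noise-cancelling effect of subtracting two softmax attention maps can be illustrated with a small plain-Python sketch. The function names and toy inputs below are hypothetical, and the sketch covers only the core operations of a single head (the attention difference and RMSNorm without a learnable gain), not the full DIFF Transformer layer.

```python
import math


def softmax(row):
    top = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_map(Q, K):
    """Row-wise softmax of the scaled dot products between queries and keys."""
    scale = math.sqrt(len(Q[0]))
    return [softmax([sum(q * k for q, k in zip(qi, kj)) / scale for kj in K]) for qi in Q]


def diff_attention(Q1, K1, Q2, K2, V, lam):
    """(softmax(Q1 K1^T / sqrt(d)) - lam * softmax(Q2 K2^T / sqrt(d))) V."""
    A1 = attention_map(Q1, K1)
    A2 = attention_map(Q2, K2)
    out = []
    for r1, r2 in zip(A1, A2):
        weights = [x - lam * y for x, y in zip(r1, r2)]
        out.append([sum(w * v[c] for w, v in zip(weights, V)) for c in range(len(V[0]))])
    return out


def rms_norm(vec, eps=1e-6):
    """Root mean square normalization without a learnable gain (simplification)."""
    rms = math.sqrt(sum(v * v for v in vec) / len(vec) + eps)
    return [v / rms for v in vec]


# Toy example: three tokens, query/key dimension 2 (all values are illustrative).
Q1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K1 = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
Q2 = [[0.2, 0.1], [0.1, 0.2], [0.3, 0.3]]
K2 = [[0.1, 0.0], [0.0, 0.1], [0.1, 0.1]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
lam, lam_init = 0.5, 0.8  # 0.8 is assumed to be the lambda setting reported in the experiments

attended = diff_attention(Q1, K1, Q2, K2, V, lam)
head = [[(1.0 - lam_init) * v for v in rms_norm(row)] for row in attended]
```

When both softmax maps assign similar weight to an irrelevant token, the subtraction cancels most of that weight, which is the intuition behind using the mechanism to suppress noisy words.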

3.3.2. Self-Attention Mechanism-Based Semantic Encoding for Resumes and Job Positions

The self-attention mechanism dynamically allocates weights to capture the global dependencies inherent in job seekers’ resumes and job requirements, thereby further excavating the semantic structures among skills and enhancing the representation of key features.

Taking job seekers’ resumes as an example, the resume semantic encoding produced by the differential attention mechanism is used as input. The resume semantic representation $Z_R = \{z_1, z_2, \dots, z_n\}$ consists of the sequence of semantic representations of the n skills in the resume, where $z_l$ represents the semantic representation of the lth skill.

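The softmax function is used to calculate the attention scores $\beta_l$, and the formulas are as follows:

$$u_l = \tanh\left( W_a z_l + b_a \right)$$

$$\beta_l = \frac{\exp\left( u_l^{\top} u_a \right)}{\sum_{k=1}^{n} \exp\left( u_k^{\top} u_a \right)}$$

Here, $z_l$ is the semantic representation of the lth skill, $W_a$ and $u_a$ are learnable parameters during training, $b_a$ is the bias, Tanh represents the hyperbolic tangent activation function, and $\beta_l$ indicates the importance of the lth skill in the resumes.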

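Finally, the resume representation of the job seeker $s_R$ is generated by calculating the importance-weighted sum of the skill representations:

$$s_R = \sum_{l=1}^{n} \beta_l z_l$$

where $z_l$ is the semantic representation of the lth skill and $\beta_l$ is its importance weight.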

The semantic encoding for recruitment requirements is similar to the above semantic encoding method for job seekers’ resumes.
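The weighting step described in this subsection can be sketched as attention pooling over skill vectors. The sketch assumes an additive attention form (a tanh projection scored against a learnable context vector); the function name `attention_pool` and all parameter values are hypothetical.

```python
import math


def attention_pool(skills, W, b, context):
    """Weight skill vectors by attention and return (pooled vector, weights).

    Assumed form: u_l = tanh(W z_l + b), score_l = u_l . context,
    weights = softmax(scores), pooled = sum_l weights_l * z_l.
    """
    hidden = [
        [math.tanh(sum(w * x for w, x in zip(row, z)) + bias) for row, bias in zip(W, b)]
        for z in skills
    ]
    scores = [sum(u * c for u, c in zip(h, context)) for h in hidden]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(skills[0])
    pooled = [sum(wt * z[k] for wt, z in zip(weights, skills)) for k in range(dim)]
    return pooled, weights


# Two toy skill vectors; the context vector favours the first dimension.
skill_vectors = [[1.0, 0.0], [0.0, 1.0]]
W = [[1.0, 0.0], [0.0, 1.0]]
b = [0.0, 0.0]
context = [1.0, 0.0]
resume_vector, importance = attention_pool(skill_vectors, W, b, context)
```

In this toy setting the first skill receives the larger weight because its projection aligns with the context vector, mirroring how key skills are meant to dominate the pooled resume representation.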

3.4. Person-Job Fit

3.4.1. Fine-Grained Semantics

In person-job matching tasks, cosine similarity is frequently employed to gauge the similarity between job seekers’ resumes and job requirements. However, relying solely on cosine similarity overlooks the fine-grained semantic matching between job seekers’ resumes and job requirements. To address this, we further introduce dot product similarity in addition to cosine similarity. The following describes the calculation process of fine-grained semantic matching.

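First, the resume representation of job seekers obtained through hybrid attention mechanism encoding is concatenated with the resume features extracted by the GATs to obtain the fused resume representation $F_R$. The fused representation of job requirements $F_P$ is obtained in the same way. The calculation formulas for the resume representation and job requirements are as follows:

$$F_R = [s_R \,\|\, g_R], \quad F_P = [s_P \,\|\, g_P]$$

where $s_R$ and $g_R$ denote the hybrid attention-based and GAT-based representations of the resume, respectively, $s_P$ and $g_P$ denote the corresponding representations of the job requirements, and ‖ denotes concatenation.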

Second, cosine similarity is used to calculate the overall semantic similarity between the resumes and job requirements. Then, the dot product function is used to individually calculate the similarity of key features such as skills and work experience in the resumes and job requirements. After that, the fine-grained semantic similarity obtained by is used to supplement the overall similarity . The and features are concatenated to obtain a fine-grained semantic feature vector , which captures the fine-grained differences between the work experience in the job seeker’s resumes and the business needs in the job requirements. The formulas for this are as follows:

where and are trainable weight matrices.

The fine-grained semantic feature vector primarily focuses on the fine-grained directional differences in the representation vectors of job seekers’ resumes and job requirements while being insensitive to distance. To complement it, the bilinear distance between resumes and job requirements is computed based on vector distances. The bilinear distance is calculated as follows:

$$D = \mathrm{Bilinear}(F_R, F_P)$$

where $F_R$ and $F_P$ are the fused representations of the resume and the job requirements, respectively, and Bilinear denotes the bilinear function.

The aforementioned vector representations measure the alignment between resumes and job requirements from the perspectives of fine-grained semantics and bilinear distance semantics, yet they neglect the interactive relationships at the feature level. To this end, we construct an interaction vector $I$. By combining the representation vectors of resumes and job positions, we calculate the element-wise absolute differences, thereby achieving fine-grained semantic interactions at the level of resume-job position pairs. Finally, the resume representation $F_R$, job requirements representation $F_P$, and absolute value of the difference between these two vectors are concatenated to obtain $I$, and the calculation formula is as follows:

$$I = [F_R \,\|\, F_P \,\|\, |F_R - F_P|]$$
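The similarity measures and the interaction vector discussed in this subsection can be illustrated as follows. This is a simplified sketch: it omits the trainable weight matrices and the bilinear term, and the function names are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    norm = math.sqrt(dot(u, u)) * math.sqrt(dot(v, v))
    return dot(u, v) / norm if norm else 0.0


def interaction_vector(f_r, f_p):
    """Concatenate both vectors with their element-wise absolute difference."""
    return f_r + f_p + [abs(a - b) for a, b in zip(f_r, f_p)]


# Toy fused representations of a resume and a job posting.
resume_vec = [1.0, 2.0]
job_vec = [2.0, 4.0]

overall = cosine(resume_vec, job_vec)       # direction only, ignores magnitude
fine = dot(resume_vec, job_vec)             # also sensitive to magnitude
interaction = interaction_vector(resume_vec, job_vec)
```

The two toy vectors point in the same direction, so their cosine similarity is maximal even though they differ in magnitude. This illustrates why cosine similarity alone is insensitive to distance and why the dot product and the absolute-difference terms carry complementary information.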

3.4.2. Enhanced Feature Fusion

The multilayer perceptron (MLP) has limited ability to capture the nonlinear relationships between job seekers’ resumes and job requirements. Therefore, the feature fusion strategy of the KAN [27] is introduced to more effectively capture the nonlinear relationships between job seekers’ work experience and job requirements.

A KAN comprising L layers adopts a form of multi-layer composite functions, and its computational formula is as follows:

$$\mathrm{KAN}(x) = \left( \Phi_{L-1} \circ \Phi_{L-2} \circ \cdots \circ \Phi_{1} \circ \Phi_{0} \right)(x)$$

Here, $\Phi_l$ denotes the activation function matrix of the lth layer, where each activation function is constructed from a learnable parameterized spline curve, which is calculated as follows:

$$\phi(x) = w \left( b(x) + \mathrm{spline}(x) \right)$$

$$\mathrm{spline}(x) = \sum_{i} c_i B_i(x)$$

where $\phi(x)$ represents the activation function, w is the trainable weight matrix, b(x) is the basis function, and spline(x) is a nonlinear function formed by the linear combination of B-spline basis functions, while $c_i$ consists of trainable parameters and $B_i$ is the ith B-spline basis function. To ensure the expressiveness and smoothness of the activation functions, in the experiments, the order of the B-spline functions was set to three (i.e., cubic B-splines), and the grid size for spline interpolation was set to five.
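A single learnable activation of this kind can be sketched with the Cox-de Boor recursion for B-spline basis functions. The sketch assumes a uniform knot grid on [-1, 1] extended by three knots on each side and takes b(x) to be the SiLU function; both choices, like the function names, are assumptions rather than details given in the text.

```python
import math


def uniform_knots(lo, hi, grid_size, degree):
    """Uniform knot vector with `degree` extra knots on each side of [lo, hi]."""
    step = (hi - lo) / grid_size
    return [lo + step * (i - degree) for i in range(grid_size + 2 * degree + 1)]


def bspline_basis(x, knots, degree):
    """Evaluate all B-spline basis functions of the given degree at x (Cox-de Boor)."""
    # Degree-0 bases are indicators of the half-open knot intervals.
    basis = [1.0 if knots[i] <= x < knots[i + 1] else 0.0 for i in range(len(knots) - 1)]
    for d in range(1, degree + 1):
        nxt = []
        for i in range(len(knots) - d - 1):
            left = (x - knots[i]) / (knots[i + d] - knots[i]) * basis[i]
            right = (knots[i + d + 1] - x) / (knots[i + d + 1] - knots[i + 1]) * basis[i + 1]
            nxt.append(left + right)
        basis = nxt
    return basis


def kan_activation(x, w, coeffs, knots, degree=3):
    """phi(x) = w * (b(x) + spline(x)), with b(x) assumed to be SiLU."""
    silu = x / (1.0 + math.exp(-x))
    spline = sum(c * b for c, b in zip(coeffs, bspline_basis(x, knots, degree)))
    return w * (silu + spline)


# Cubic B-splines on a grid of size five, as in the reported settings.
knots = uniform_knots(-1.0, 1.0, grid_size=5, degree=3)
coeffs = [0.1 * i for i in range(8)]  # hypothetical trainable coefficients
value = kan_activation(0.3, w=1.0, coeffs=coeffs, knots=knots)
```

With a grid size of five and cubic splines, each activation has eight spline coefficients (grid size plus degree). Training would adjust these coefficients and w by gradient descent, which is how the shape of each activation is learned.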

In the person-job fit task, the KAN enhances feature fusion by dynamically adjusting the learning strategy for B spline curves. It integrates the fine-grained semantic feature vectors of resumes and job positions, the bilinear distance vectors between resumes and job positions, and the fine-grained semantic interaction vectors at the resume-job position level. This integration yields the final matching results y between resumes and job positions:

The cross-entropy loss (CEL) function is a commonly employed loss function in the training process of neural networks, serving to measure the discrepancy between the predicted values and true values. During the model training phase, the CEL guides the model to continuously optimize network parameters, reduce the output loss, and enhance the performance of person-job fitting. The CEL used to train the FSEGNN-PJF framework is calculated as follows:

$$\mathcal{L} = -\frac{1}{g} \sum_{i=1}^{g} \left[ y_i \log \hat{y}_i + (1 - y_i) \log\left(1 - \hat{y}_i\right) \right]$$

where g represents the number of batches in the training process, $\hat{y}$ denotes the predicted value, $y$ denotes the true value, $\hat{y}_i$ is the predicted similarity value, and $y_i$ represents the true similarity value between $R_i$ and $P_i$.
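For a binary match label, the loss can be computed as in the following sketch. The clipping constant `eps` is an implementation detail added here for numerical safety and is not part of the description above.

```python
import math


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean binary cross-entropy between labels in {0, 1} and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)


labels = [1, 0, 1, 0]                      # 1 = successful match, 0 = mismatch
confident = [0.9, 0.1, 0.8, 0.2]           # predictions close to the labels
uncertain = [0.6, 0.4, 0.55, 0.45]         # predictions close to 0.5

loss_confident = binary_cross_entropy(labels, confident)
loss_uncertain = binary_cross_entropy(labels, uncertain)
```

Predictions closer to the true labels yield a smaller loss, so minimizing this quantity pushes the predicted match probability toward 1 for successful recruitments and toward 0 for unsuccessful ones.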

4. Experiments

In this section, the experimental set-up, baselines, and evaluation metrics are introduced first. Then, the performances of the proposed FSEGNN-PJF framework and baselines are reported. Finally, an ablation study and case study are designed to demonstrate the effectiveness of the proposed components and the interesting characteristics of the FSEGNN-PJF framework.

4.1. Dataset

All of the records in a real-world recruitment dataset were anonymized by removing user profiles. The experimental dataset included information on recruitment job positions, job seekers’ resumes, and the matching label for recruitment results, consisting of 4278 samples. The first job position column represents the recruitment requirements for the job position, the second resume column describes the experiences in the job seeker’s resume (work experience, etc.), and the third matching label column indicates the label for the recruitment result. Essentially, the person-job fit task is a binary classification problem. If the recruitment is successful, then the label is 1, and if unsuccessful, then the label is 0.

4.2. Experimental Set-Up

All experiments were conducted on a computer equipped with a 12th Gen Intel(R) Core(TM) i7-12700H processor, 16.0 GB of onboard RAM, and an NVIDIA GeForce RTX 3060 Laptop GPU.

In the experiments, the resumes and job requirements were initialized using the Word2Vec word embedding method, with a word embedding dimension of 128. The co-occurrence window size was set to 2. The hidden layer size of the GATs was 128. The number of differential transformer layers was 2, the number of attention heads in the differential multi-head attention mechanism was 8, the dimension of the differential transformer feedforward layer was 2048, and the weight coefficient lambda value in the differential loss function was 0.8. The AdamW optimizer was chosen to optimize the framework, with a training batch size of 128 and a learning rate of 0.0005. Table 1 shows the parameter settings for the experiments.

Table 1.

Parameter settings.
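For reference, the settings stated in the text above can be gathered into a single configuration object. This is a sketch only: the class name `FSEGNNConfig` and the field names are hypothetical, while the values are those reported in this subsection.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class FSEGNNConfig:
    """Hypothetical container for the reported hyperparameters."""
    embedding_dim: int = 128        # Word2Vec word embedding dimension
    cooccurrence_window: int = 2    # co-occurrence window size
    gat_hidden_size: int = 128      # hidden layer size of the GATs
    d_transformer_layers: int = 2   # number of differential transformer layers
    attention_heads: int = 8        # heads in differential multi-head attention
    feedforward_dim: int = 2048     # differential transformer feedforward size
    diff_lambda: float = 0.8        # lambda weight coefficient
    optimizer: str = "AdamW"
    batch_size: int = 128
    learning_rate: float = 0.0005


config = FSEGNNConfig()
for name, value in asdict(config).items():
    print(f"{name}: {value}")
```

Freezing the dataclass keeps the settings immutable during a run, which makes it easier to confirm that all compared configurations share the same values.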

4.3. Baselines

To validate the efficacy of the FSEGNN-PJF framework, comprehensive comparisons were conducted with three methodological categories: conventional supervised learning approaches, deep learning methods, and state-of-the-art person-job matching models. All methods can be described as follows:

- Conventional supervised learning methods include decision tree (DT), support vector machine (SVM), AdaBoost (AB), and gradient boosting decision tree (GBDT), implemented using Doc2Vec vectors as feature inputs.

- DSSM utilizes dual deep neural networks for semantic vector projection with cosine similarity measurement [18].

- Siamese-LSTM involves inputting two sentences into the same LSTM model to obtain forward and backward sentence vectors and finally inputting the sentence pair representations into the softmax layer [28].

- ABCNN implements attention-based convolutional neural networks for sentence pair modeling [29].

- MatchPyramid takes sentence pairs as input, extracts features via a CNN, and outputs the matching scores using an MLP [30].

- PJFNN involves taking resume and job requirement pairs as input, encoding resume and job requirement texts by employing a parallel CNN, and calculating the matching results by using the cosine similarity [4].

- BPJFNN [1] is a simplified version of APJFNN. It takes resumes and recruitment requirements as the input sequences and employs BiLSTM to learn the semantic representation of the job seekers’ resumes and job requirements.

- APJFNN considers resumes and job requirements as input sequences, uses BiLSTM to encode job seekers’ resumes and job requirements, and adopts hierarchical ability-aware attention mechanisms to learn word-level semantic representations of resumes and job requirements [1].

- IPJF uses a CNN and collaborative attention mechanism to represent resumes and job requirements, and it employs an MLP to predict the matching between resumes and job positions [31].

- conSultantBERT employs a fine-tuned Siamese Sentence-BERT to match job seekers and jobs [32].

- MKPM combines BiLSTM encoding with an attention mechanism for keyword-pair extraction and interaction feature generation [24].

- InEXIT leverages BERT and a multi-head attention mechanism for encoding and cross-attribute interaction modeling and uses an aggregation matching layer to predict the matching between resumes and job positions [5].

- FSEGNN-PJF is the proposed framework in this paper.

4.4. Evaluation Metrics

The binary classification nature of the person-job fit task calls for five evaluation metrics: accuracy, precision, recall, F1 score (F1), and AUC. Accuracy refers to the proportion of correctly predicted positive (successful hiring) and negative (unsuccessful hiring) samples to the total number of samples. Precision is defined as the proportion of correctly predicted successful hiring samples among all samples predicted as successful hirings. Recall is the proportion of correctly predicted successful hiring samples to all actual successful hiring samples. The F1 score is the harmonic mean of precision and recall. AUC is the area under the ROC curve, which is determined by the true positive rate (TPR) and false positive rate (FPR). These metrics collectively assess model performance. The formulas for these evaluation metrics are as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

$$TPR = \frac{TP}{TP + FN}, \quad FPR = \frac{FP}{FP + TN}$$

Here, true positive (TP) represents the number of samples that are actual successful hirings and are also predicted to be successful hirings. True negative (TN) represents the number of samples that are actually unsuccessful hirings and are also predicted to be unsuccessful hirings. False negative (FN) represents the number of samples that are actually successful hirings but are predicted to be unsuccessful hirings. False positive (FP) represents the number of samples that are actually unsuccessful hirings but are predicted to be successful hirings.

The accuracy, precision, recall, F1 score, and AUC values range from 0 to 1. The higher the value, the better the performance of the method.
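The metric definitions above can be sketched in a few lines of plain Python. The toy labels, scores, and the 0.5 decision threshold below are illustrative assumptions and are not data from the experiments; AUC is computed here through its pairwise-ranking interpretation (the probability that a random positive sample is scored above a random negative one), which equals the area under the ROC curve.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for 0/1 labels and 0/1 predictions."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, and F1 from confusion counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def auc(y_true, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly
    (ties count as half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy example (hypothetical values).
y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5

counts = confusion_counts(y_true, y_pred)
metrics = classification_metrics(*counts)
print(counts, metrics, auc(y_true, scores))
```

Unlike the other four metrics, AUC is computed from the raw scores rather than the thresholded predictions, so it does not depend on the choice of decision threshold.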

4.5. Overall Performance

To verify the effectiveness of the proposed FSEGNN-PJF framework on real recruitment datasets, the framework was compared with several baselines. Table 2 lists the performance of the FSEGNN-PJF framework and the baselines in terms of accuracy, precision, recall, F1 score, and AUC. The first column shows the 14 baselines for comparison and the proposed method, including a variant of the proposed method that utilizes the traditional transformer. FSEGNN-PJF is the final framework selected in this paper, which employs the differential transformer. Columns 2–6 show the experimental results for the accuracy, precision, recall, F1 score, and AUC. The optimal results for each evaluation metric are shown in bold.

Table 2.

Overall performance of FSEGNN-PJF framework and all baselines for person-job fit in terms of accuracy, precision, recall, F1 score, and AUC.

As can be observed from Table 2, among all the baselines, the classic supervised learning methods exhibited the worst overall performance. For the classic supervised learning methods, in terms of accuracy, the AB model performed the worst, while the GBDT model performed the best.

Compared with the classic supervised learning methods, the deep matching models demonstrated superior overall performance in person-job matching tasks. The classic deep semantic matching model DSSM exhibited the poorest performance among all deep matching models, yet it still performed significantly better than GBDT, the best-performing model among the classic supervised learning methods. In terms of accuracy, DSSM was 16.97% higher than GBDT, and in terms of F1 score, DSSM showed an improvement of 13.94% over GBDT. This may be because GBDT relies on text feature embeddings generated by the Doc2Vec method, which yields noisier feature vectors that struggle to capture subtle semantic differences between job seekers’ resumes and job requirements.

Among deep matching models, MKPM demonstrated further improved performance compared with DSSM. This performance improvement may be attributed to the fact that MKPM can more accurately identify matching relationships when associating job seekers’ resumes with job requirements, whereas the DNN structure of DSSM cannot fully reflect the matching degree between resumes and jobs when dealing with complex matching relationships.

Driven by the demand for complex person-job matching relationships, the APJFNN model, designed for person-job matching tasks, demonstrated improvements of 1.63% in accuracy and 1.25% in F1 score compared with MKPM. The experimental results indicate that the APJFNN model has advantages in modeling person-job matching interaction information.

Further observation of the experimental results revealed that the pretrained model conSultantBERT performed better than the APJFNN model. This suggests that BERT-based person-job matching methods can effectively capture the semantics of job seekers’ resumes and job requirements, indicating that the BERT method is beneficial for modeling person-job matching tasks.

In terms of accuracy and F1 score, the traditional-transformer variant of the proposed method outperformed all baselines in person-job matching tasks. Its accuracy, precision, recall, F1 score, and AUC were 0.9182, 0.9220, 0.9178, 0.9199, and 0.9203, respectively. These results demonstrate the effectiveness of this variant in capturing deep semantic and structural information in resumes and job titles.

The accuracy, precision, recall, F1 score, and AUC of the FSEGNN-PJF framework were 0.9369, 0.9486, 0.9269, 0.9376, and 0.9248, respectively, which indicates that FSEGNN-PJF outperformed all baselines. Compared with the traditional-transformer variant of the proposed method, the accuracy, precision, recall, and F1 score of the FSEGNN-PJF framework improved by 1.87, 2.66, 0.91, and 1.77 percentage points, respectively. This suggests that the differential transformer in the FSEGNN-PJF framework reduces invalid noise, enabling the method to focus on the matching between job seekers’ core skills and job requirements in complex person-job matching scenarios, which is conducive to achieving a precise person-job fit.
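The reported gains can be checked directly from the scores quoted in the two preceding paragraphs. The short sketch below recomputes the differences between the two configurations and confirms that each reported F1 score is consistent with the harmonic mean of the reported precision and recall; the dictionary names are illustrative only.

```python
# Scores quoted in the text for the traditional-transformer variant
# and for the final FSEGNN-PJF framework (differential transformer).
traditional_variant = {"accuracy": 0.9182, "precision": 0.9220,
                       "recall": 0.9178, "f1": 0.9199}
fsegnn_pjf = {"accuracy": 0.9369, "precision": 0.9486,
              "recall": 0.9269, "f1": 0.9376}

# Absolute gains expressed in percentage points.
gains = {name: round((fsegnn_pjf[name] - traditional_variant[name]) * 100, 2)
         for name in traditional_variant}
print(gains)


def f1_from(precision, recall):
    """Harmonic mean of precision and recall, rounded to four decimals."""
    return round(2 * precision * recall / (precision + recall), 4)


# Consistency check of the reported F1 scores.
print(f1_from(fsegnn_pjf["precision"], fsegnn_pjf["recall"]))
print(f1_from(traditional_variant["precision"], traditional_variant["recall"]))
```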

4.6. Ablation Study

To evaluate the impact of each component on the performance of the FSEGNN-PJF framework, ablation experiments were conducted. Five variants of the FSEGNN-PJF framework were designed: (1) FSEGNN-PJF w/o KAN replaces the KAN with an MLP for feature fusion; (2) FSEGNN-PJF w/o GAT removes the GAT, i.e., the graph-based resume-job semantic encoding; (3) FSEGNN-PJF w/o D-Transformer removes the differential transformer; (4) FSEGNN-PJF w/o FGSM removes the fine-grained semantic matching module; and (5) FSEGNN-PJF w/o Satt removes the self-attention mechanism. The results of the ablation experiments are shown in Table 3.

Table 3.

Ablation study on FSEGNN-PJF framework with various modules for person-job fit.

The experimental results of the ablation study show the following:

- (1) Regardless of which component was removed, the performance decreased, indicating that each component was useful in the overall framework.

- (2) The FSEGNN-PJF w/o KAN variant experienced decreases in accuracy, precision, recall, and F1 score of 2.57%, 3.54%, 1.37%, and 2.44%, respectively. This degradation when an MLP is used for feature fusion indicates that the MLP is insufficient for modeling the nonlinear relationships among job seekers’ experiences, skills, and job requirements, whereas the KAN can effectively capture these multi-dimensional, complex nonlinear features.

- (3) The FSEGNN-PJF w/o GAT variant showed decreases in accuracy, precision, recall, and F1 score of 2.10%, 1.08%, 3.19%, and 2.17%, respectively. The results show that the resume-job semantic encoding module based on the graph attention network could effectively capture deep semantic associations and structural information between resumes and job requirements.

- (4) The FSEGNN-PJF w/o D-Transformer variant demonstrated decreases in accuracy and F1 score of 2.80% and 2.52%, respectively. The results indicate that the differential transformer could reduce data noise and allow the framework to focus more on the matching between job seekers’ core skills and job requirements.

- (5) The performance degradation of the FSEGNN-PJF w/o FGSM variant demonstrates that the fine-grained semantic matching module can effectively capture the fine-grained semantics of job seekers’ resumes and job requirements.

- (6) The FSEGNN-PJF w/o Satt variant exhibited decreases of 7.71%, 6.82%, 8.67%, 7.78%, and 6.45% in accuracy, precision, recall, F1 score, and AUC, respectively. These results demonstrate the critical role of the self-attention mechanism in capturing intra-sentential semantic dependencies within resumes and job descriptions, as well as perceiving key features such as skills.

4.7. Robustness to Noise

To validate the robustness of the proposed framework against input noise in real-world recruitment application scenarios, three common types of noise (word deletion, spelling errors, and word repetition) were simulated in experiments. Specifically, word deletion was achieved by randomly removing some words to simulate situations where job seekers or recruiters provide incomplete or disorganized inputs. Spelling errors were simulated by substituting characters or words to mimic typing mistakes or non-standard spellings. Word repetition was simulated by randomly duplicating and inserting existing words to represent redundant expressions or repeated information caused by copy-pasting. These noise perturbations simulated common spelling errors or formatting issues in job seekers’ resumes or job descriptions. Artificial noise was introduced into the original test dataset, and the performance of the proposed framework was evaluated under the same settings. The experimental results for robustness, with the highest scores displayed in bold font, are shown in Table 4.
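The three perturbations described above can be sketched as simple token-level functions. This is an illustrative approximation only: the function names, the per-token noise rate `rate`, and the choice to insert each duplicate directly after the original word are assumptions, since the exact perturbation procedure and noise ratios are not specified here.

```python
import random


def delete_words(tokens, rate, rng):
    """Randomly drop tokens with probability `rate`; keep at least one token."""
    kept = [t for t in tokens if rng.random() >= rate]
    return kept or tokens[:1]


def misspell_words(tokens, rate, rng):
    """Substitute one character in randomly chosen tokens."""
    alphabet = "abcdefghijklmnopqrstuvwxyz"
    noisy = []
    for t in tokens:
        if t and rng.random() < rate:
            i = rng.randrange(len(t))
            # Pick a replacement that differs from the current character.
            options = [c for c in alphabet if c != t[i].lower()]
            t = t[:i] + rng.choice(options) + t[i + 1:]
        noisy.append(t)
    return noisy


def repeat_words(tokens, rate, rng):
    """Duplicate randomly chosen tokens (simplification: the copy is
    inserted immediately after the original)."""
    noisy = []
    for t in tokens:
        noisy.append(t)
        if rng.random() < rate:
            noisy.append(t)
    return noisy


rng = random.Random(0)  # fixed seed so the perturbed test set is reproducible
tokens = "familiar with sql server and web page development".split()
print(delete_words(tokens, 0.2, rng))
print(misspell_words(tokens, 0.2, rng))
print(repeat_words(tokens, 0.2, rng))
```

Passing an explicit `random.Random` instance keeps the perturbed samples fixed across runs, so the clean and noisy test sets can be evaluated under the same settings.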

Table 4.

Performance of the FSEGNN-PJF framework on the test sets and perturbed samples of the person-job fit task.

As can be seen from the table above, the framework demonstrated the best overall performance across various evaluation metrics on the original data. After introducing noise, there was a slight decline in performance, but it remained generally stable. Word deletion led to a relatively more pronounced performance drop, possibly because the randomly removed words contained information expressing core semantics or key entities, resulting in incomplete semantic representation. In contrast, the impact of word repetition noise was relatively minor, indicating that the framework has a certain degree of robustness against redundant information. The impact of spelling error noise was the smallest, suggesting that the proposed framework possesses a certain spelling error tolerance capability.

Under the three types of noise perturbations, the proposed framework’s performance remained relatively stable, with the F1 score consistently maintained at about 92%. The experimental results demonstrate that the FSEGNN-PJF framework exhibits good robustness in real-world scenarios.

4.8. Case Study

The attention mechanism helps enhance the interpretation ability of person-job matching tasks. In this subsection, the experimental results will be illustrated through visualization of the attention results.

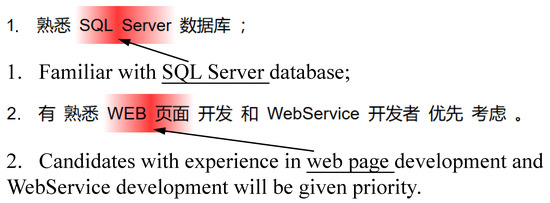

The attention mechanism can intuitively display the importance of different words in job posting requirements, as shown in Figure 2. In Sentence 1, some words are highlighted as key phrases, such as “SQL Server”. The highlighted key phrases are shown in dark red, indicating that their attention weights are higher. These experimental results indicate that the proposed FSEGNN-PJF framework pays a great deal of attention to job skills. In Sentence 2, the skill of “WEB page” is highlighted as a key phrase. In contrast, some more general or common words, such as “WebService development”, are not focused on. Analysis of the experimental results shows that the proposed FSEGNN-PJF framework performs well in capturing key skills.

Figure 2.

Ability-aware examples.

Through the visualization results of the attention mechanism, it can be clearly seen that higher weights were assigned to the key skills that were focused on. This case study demonstrates that the proposed FSEGNN-PJF framework can provide a good interpretation ability for person-job fit tasks.
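To make the idea of attention-based highlighting concrete, the sketch below turns raw attention scores into normalized weights with a softmax and lists the most heavily weighted tokens. The tokens and scores are hypothetical values chosen for illustration; they are not the weights produced by the FSEGNN-PJF framework, and the helper names are assumptions.

```python
import math


def softmax(scores):
    """Normalize raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_tokens(tokens, scores, k=2):
    """Return the k tokens with the largest attention weights."""
    weights = softmax(scores)
    ranked = sorted(zip(tokens, weights), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical job requirement phrase and raw attention scores.
tokens = ["proficient", "in", "SQL Server", "and", "database", "design"]
scores = [0.2, -1.0, 2.5, -1.2, 1.1, 0.4]

for token, weight in top_tokens(tokens, scores, k=3):
    print(f"{token}: {weight:.3f}")
```

In a visualization such as Figure 2, these normalized weights would typically be mapped to color intensity, so that tokens with larger weights appear in darker shades.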

5. Conclusions

This paper proposes a novel fine-grained semantics-enhanced graph neural network for person-job fit (FSEGNN-PJF) framework. Specifically, given a pair of resumes and job positions, first, a GAT was used to semantically encode the job seekers’ resumes and job requirements. Second, a hybrid attention mechanism was employed to semantically encode the job seekers’ resumes and job requirements. Third, a fine-grained semantic matching calculation method was designed to deeply fuse the semantic features of the job seekers’ resumes and job requirements using an enhanced feature fusion strategy. Finally, the experimental results validated the effectiveness and robustness of the proposed FSEGNN-PJF framework.

In the future, we will explore person-job fit tasks oriented toward multimodal data (e.g., audio or video data from interviews). Further research will be conducted on other text representation methods for resumes and job requirements to improve the performance of person-job fitting.

Author Contributions

Methodology and writing—original manuscript, X.X.; methodology, performing experiments, and writing—original manuscript, J.W.; performing experiments and writing manuscript, B.M., J.R. and W.Z.; conceptualization and review and editing, S.G.; writing editing, M.T. and C.W.; debugging code and validation, Y.C.; data preprocessing and validation, H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Fundamental Research Program of Shanxi Province (No. 202203021212174), the Research Project of Humanities and Social Sciences of the Ministry of Education in China (No. 24YJCZH289), the Scientific and Technological Innovation Programs of Higher Education Institutions in Shanxi (No. 2022L474), the 2024 Undergraduate Talent Cultivation Project of Northwest University (No. JX2024008), the Yuncheng University Scientific Research Project (No. YY-202508), the Special Fund for Science and Technology Innovation Teams of Yuncheng University (No. YCXYTD-202402), and the Yuncheng University Doctoral Research Foundation Program (No. YQ-2022003).

Institutional Review Board Statement

The study did not require ethical approval.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Qin, C.; Zhu, H.; Xu, T.; Zhu, C.; Jiang, L.; Chen, E.; Xiong, H. Enhancing Person-Job Fit for Talent Recruitment: An Ability-aware Neural Network Approach. In Proceedings of the 41st International ACM SIGIR Conference on Research & Development in Information Retrieval, SIGIR 2018, Ann Arbor, MI, USA, 8–12 July 2018; pp. 25–34.

- Malinowski, J.; Keim, T.; Wendt, O.; Weitzel, T. Matching people and jobs: A bilateral recommendation approach. In Proceedings of the 39th Annual Hawaii International Conference on System Sciences (HICSS’06), Kauai, HI, USA, 4–7 January 2006; Volume 6, p. 137c.

- Zhang, X.; Zhou, Y.; Ma, Y.; Chen, B.; Zhang, L.; Agarwal, D. GLMix: Generalized Linear Mixed Models for Large-Scale Response Prediction. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 363–372.

- Zhu, C.; Zhu, H.; Xiong, H.; Ma, C.; Xie, F.; Ding, P.; Li, P. Person-Job Fit: Adapting the Right Talent for the Right Job with Joint Representation Learning. ACM Trans. Manag. Inf. Syst. 2018, 9, 12:1–12:17.

- Shao, T.; Song, C.; Zheng, J.; Cai, F.; Chen, H. Exploring Internal and External Interactions for Semi-Structured Multivariate Attributes in Job-Resume Matching. Int. J. Intell. Syst. 2023, 2023, 2994779.

- Zhao, C. Graph Adaptive Attention Network with Cross-Entropy. Entropy 2024, 26, 576.

- Li, Z.; Lei, H.; Ma, Z.; Zhang, F. Code Similarity Prediction Model for Industrial Management Features Based on Graph Neural Networks. Entropy 2024, 26, 505.

- Xue, X.; Sun, X.; Wang, H.; Zhang, H.; Feng, J. Neural network fusion with fine-grained adaptation learning for turnover prediction. Complex Intell. Syst. 2023, 9, 3355–3366.

- Teng, M.; Zhu, H.; Liu, C.; Zhu, C.; Xiong, H. Exploiting the Contagious Effect for Employee Turnover Prediction. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, AAAI 2019, The Thirty-First Innovative Applications of Artificial Intelligence Conference, IAAI 2019, The Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2019, Honolulu, HI, USA, 27 January–1 February 2019; pp. 1166–1173.

- Xue, X.; Feng, J.; Gao, Y.; Liu, M.; Zhang, W.; Sun, X.; Zhao, A.; Guo, S.X. Convolutional Recurrent Neural Networks with a Self-Attention Mechanism for Personnel Performance Prediction. Entropy 2019, 21, 1227.

- Xue, X.; Gao, Y.; Liu, M.; Sun, X.; Zhang, W.; Feng, J. GRU-based capsule network with an improved loss for personnel performance prediction. Appl. Intell. 2021, 51, 4730–4743.

- Rafter, R.; Bradley, K.; Smyth, B. Personalised retrieval for online recruitment services. In Proceedings of the 22nd Annual Colloquium on Information Retrieval, Cambridge, UK, 5–7 April 2000; pp. 151–163.

- Wang, P.; Dou, Y.; Xin, Y. The analysis and design of the job recommendation model based on GBRT and time factors. In Proceedings of the 2016 IEEE International Conference on Knowledge Engineering and Applications (ICKEA), Singapore, 28–30 September 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 29–35.

- Jiang, J.; Ye, S.; Wang, W.; Xu, J.; Luo, X. Learning Effective Representations for Person-Job Fit by Feature Fusion. In Proceedings of the CIKM ’20: The 29th ACM International Conference on Information and Knowledge Management, Virtual Event, Ireland, 19–23 October 2020; pp. 2549–2556.

- Yan, R.; Le, R.; Song, Y.; Zhang, T.; Zhang, X.; Zhao, D. Interview choice reveals your preference on the market: To improve job-resume matching through profiling memories. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 914–922.

- Fu, B.; Liu, H.; Zhao, H.; Zhu, Y.; Song, Y.; Zhang, T.; Wu, Z. Market-Aware Dynamic Person-Job Fit with Hierarchical Reinforcement Learning. In Proceedings of the Database Systems for Advanced Applications - 27th International Conference, DASFAA 2022, Virtual Event, 11–14 April 2022; Proceedings, Part II. Bhattacharya, A., Lee, J., Li, M., Agrawal, D., Reddy, P.K., Mohania, M.K., Mondal, A., Goyal, V., Kiran, R.U., Eds.; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2022; Volume 13246, pp. 697–705.

- Xue, X.; Feng, J.; Sun, X. Semantic-enhanced sequential modeling for personality trait recognition from texts. Appl. Intell. 2021, 51, 7705–7717.

- Huang, P.; He, X.; Gao, J.; Deng, L.; Acero, A.; Heck, L.P. Learning deep structured semantic models for web search using clickthrough data. In Proceedings of the 22nd ACM International Conference on Information and Knowledge Management, CIKM’13, San Francisco, CA, USA, 27 October–1 November 2013; pp. 2333–2338.

- Hu, B.; Lu, Z.; Li, H.; Chen, Q. Convolutional Neural Network Architectures for Matching Natural Language Sentences. In Proceedings of the Advances in Neural Information Processing Systems 27: Annual Conference on Neural Information Processing Systems 2014, Montreal, QC, Canada, 8–13 December 2014; pp. 2042–2050.

- Chen, Q.; Zhu, X.; Ling, Z.; Wei, S.; Jiang, H.; Inkpen, D. Enhanced LSTM for Natural Language Inference. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, ACL 2017, Vancouver, BC, Canada, 30 July–4 August 2017; Volume 1, pp. 1657–1668.

- Yu, C.; Xue, H.; Jiang, Y.; An, L.; Li, G. A simple and efficient text matching model based on deep interaction. Inf. Process. Manag. 2021, 58, 102738.

- Zhang, Y.; Zhang, J.; Cui, Z.; Wu, S.; Wang, L. A Graph-based Relevance Matching Model for Ad-hoc Retrieval. In Proceedings of the Thirty-Fifth AAAI Conference on Artificial Intelligence, AAAI 2021, Thirty-Third Conference on Innovative Applications of Artificial Intelligence, IAAI 2021, The Eleventh Symposium on Educational Advances in Artificial Intelligence, EAAI 2021, Virtual Event, 2–9 February 2021; pp. 4688–4696.

- Guo, S.; Guan, Y.; Li, R.; Li, X.; Tan, H. Frame-based Multi-level Semantics Representation for text matching. Knowl. Based Syst. 2021, 232, 107454.

- Lu, X.; Deng, Y.; Sun, T.; Gao, Y.; Feng, J.; Sun, X.; Sutcliffe, R.F.E. MKPM: Multi keyword-pair matching for natural language sentences. Appl. Intell. 2022, 52, 1878–1892.

- Velickovic, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. In Proceedings of the 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, 30 April–3 May 2018.

- Ye, T.; Dong, L.; Xia, Y.; Sun, Y.; Zhu, Y.; Huang, G.; Wei, F. Differential Transformer. arXiv 2024, arXiv:2410.05258.

- Liu, Z.; Wang, Y.; Vaidya, S.; Ruehle, F.; Halverson, J.; Soljačić, M.; Hou, T.Y.; Tegmark, M. KAN: Kolmogorov-Arnold Networks. arXiv 2024, arXiv:2404.19756.

- Mueller, J.; Thyagarajan, A. Siamese Recurrent Architectures for Learning Sentence Similarity. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 2786–2792.

- Yin, W.; Schütze, H.; Xiang, B.; Zhou, B. ABCNN: Attention-Based Convolutional Neural Network for Modeling Sentence Pairs. Trans. Assoc. Comput. Linguist. 2016, 4, 259–272.

- Pang, L.; Lan, Y.; Guo, J.; Xu, J.; Wan, S.; Cheng, X. Text Matching as Image Recognition. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 2793–2799.

- Le, R.; Hu, W.; Song, Y.; Zhang, T.; Zhao, D.; Yan, R. Towards Effective and Interpretable Person-Job Fitting. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, CIKM 2019, Beijing, China, 3–7 November 2019; pp. 1883–1892.

- Lavi, D.; Medentsiy, V.; Graus, D. conSultantBERT: Fine-tuned Siamese Sentence-BERT for Matching Jobs and Job Seekers. In Proceedings of the Workshop on Recommender Systems for Human Resources (RecSys in HR 2021) Co-Located with the 15th ACM Conference on Recommender Systems (RecSys 2021), Amsterdam, The Netherlands, 27 September–1 October 2021; Volume 2967.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).