1. Introduction

The age of information (AoI) [1], a metric for data freshness, has attracted significant research interest in diverse areas, including channel coding [2,3,4,5,6], network scheduling [7,8], remote estimation [9,10,11,12], and related fields. This interest stems from the surge of applications that demand timely status updates, such as the Internet of Things (IoT), vehicular ad hoc networks (VANETs), and surveillance networks [13,14,15]. In practice, many systems deploy multiple nodes to support complex monitoring tasks. In an industrial setting, for example, a sensor network of distributed nodes can monitor key indicators such as temperature and pressure in real time and transmit the data to a central monitoring system in a timely manner. Based on these real-time data streams, factories can further optimize production processes to improve efficiency. However, for analog (i.e., continuous-valued) sources, high-precision encoding typically requires longer transmission times, which inevitably makes the information at the receiver stale. It is therefore crucial to design efficient compression schemes that enable recovery that is both timely and accurate.

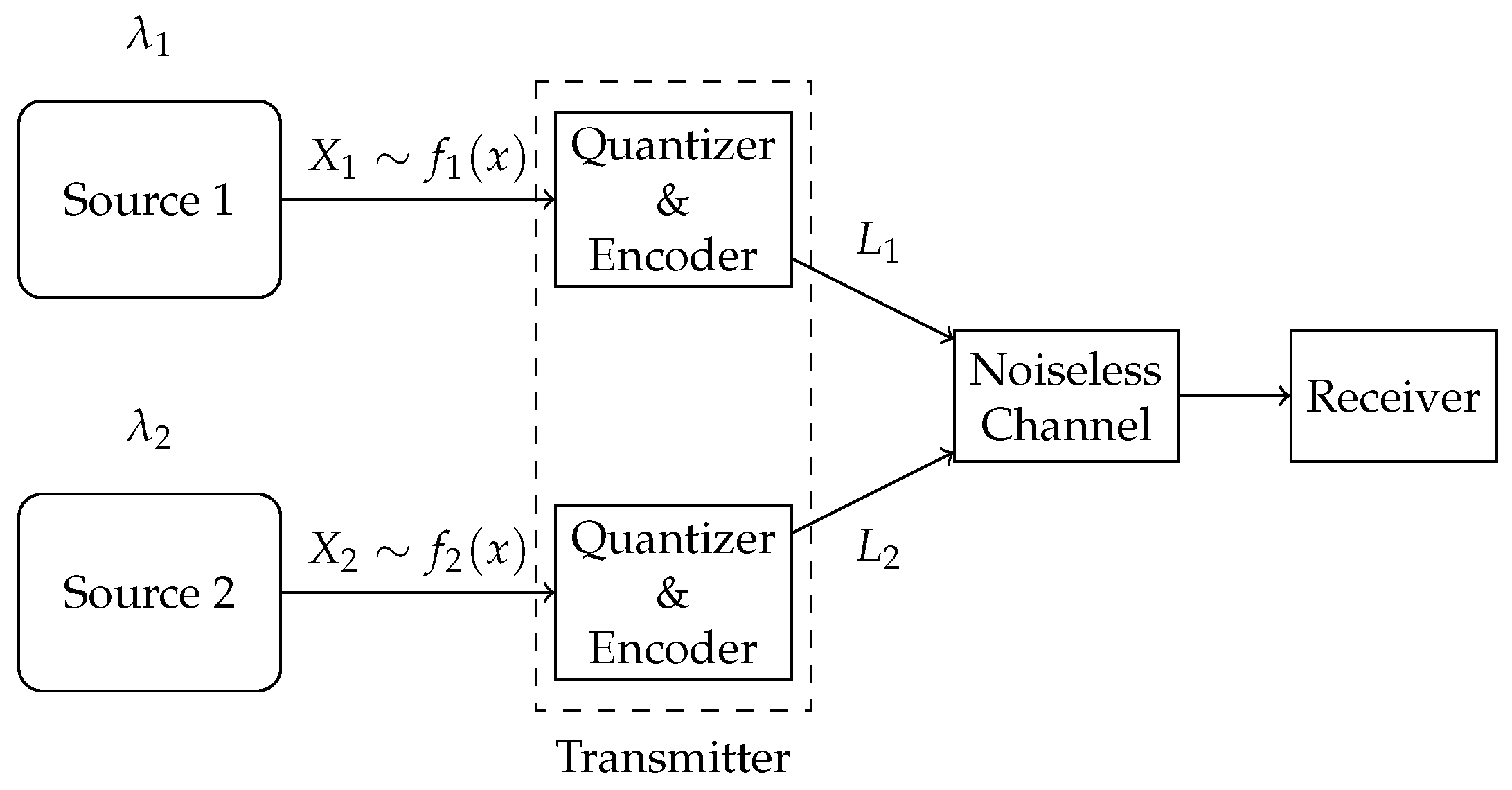

In this paper, we study a compression problem in a multi-source (multi-stream) status-updating system through a representative two-source scenario, as illustrated in Figure 1. The system consists of two independent continuous-time analog sources, each generating independent and identically distributed (i.i.d.) symbols with possibly different probability density functions (pdfs). Status updates from the two sources arrive according to Poisson processes with possibly different rates. The symbols are compressed into binary strings and sent over a shared error-free link with a rate of one bit per unit time. Updates that arrive while the channel is busy are discarded. The goal is to design the system so that the receiver obtains data that are both fresh and accurate.

References relevant to this work can be broadly categorized into three research directions.

The first research direction focuses on analyzing the average age in multi-source (multi-stream) status-updating systems. The exact average age of the multi-source M/M/1 non-preemptive queue was derived in [16]. The average age of queues with more general service processes has also been analyzed. Specifically, Najm and Telatar [17] investigated the average age of multi-source M/G/1/1 preemptive systems, while Chen et al. [18] derived the average age of multi-source M/G/1/1 non-preemptive systems, a result particularly relevant to our work. While these works provide fundamental queueing-theoretic insights, they do not address the coding aspects.

The second research direction concerns the timely lossless source coding problem under different queueing-theoretic considerations. In these works, transmitting one bit requires one unit of time, so the transmission time of a symbol equals its assigned codeword length. Unlike conventional queueing systems with fixed service times, these studies treat codeword lengths as design variables to maximize information freshness, given the symbol arrival processes and probability mass functions (pmfs). Existing work has studied various coding schemes under strict lossless reconstruction requirements for the entire data stream, including fixed-to-variable [19], variable-to-variable [20], and variable-to-fixed [21] lossless source coding schemes, as well as more flexible systems that permit symbol skipping when the channel is busy [22,23]. Specifically, Mayekar et al. [22] derived the optimal real-valued codeword lengths under a zero-wait policy, where a new update is generated immediately upon successful delivery of the previous one. In [23], a selective encoding policy was proposed, which discards updates during busy periods and encodes only the k most probable realizations; the corresponding optimal real-valued codeword lengths were also derived. However, these works consider only single-source scenarios, leaving the multi-source source coding problem unexplored. They also do not consider analog sources, so distortion plays no role.

The third research direction explores the age–distortion tradeoff, where distortion is defined differently across studies. In [24], distortion was modeled as a monotonically decreasing function of the symbol processing time, and optimal update policies were derived under distortion constraints. In [25], distortion was measured by the mean-squared error (MSE), and the age–distortion tradeoff was studied in a sensing system where an energy-harvesting sensor node monitors a process and transmits status updates to a remote monitor. In [26], distortion was modeled in terms of the importance of the data, and the age–distortion tradeoff was studied using dynamic programming. Another work [27] proposed a cross-layer framework to jointly optimize AoI and compression distortion for real-time monitoring over fading channels. However, none of these works considers the design of variable-rate quantizers.

An important case of discrete sources arises from the output of a quantizer. Therefore, we focus on the natural combination of timely lossless source coding and quantization. In our earlier work [

28], a joint sampling and compression problem involving the age–distortion tradeoff for a single-source system was investigated, where the arrival process was controlled by the sampler. While [

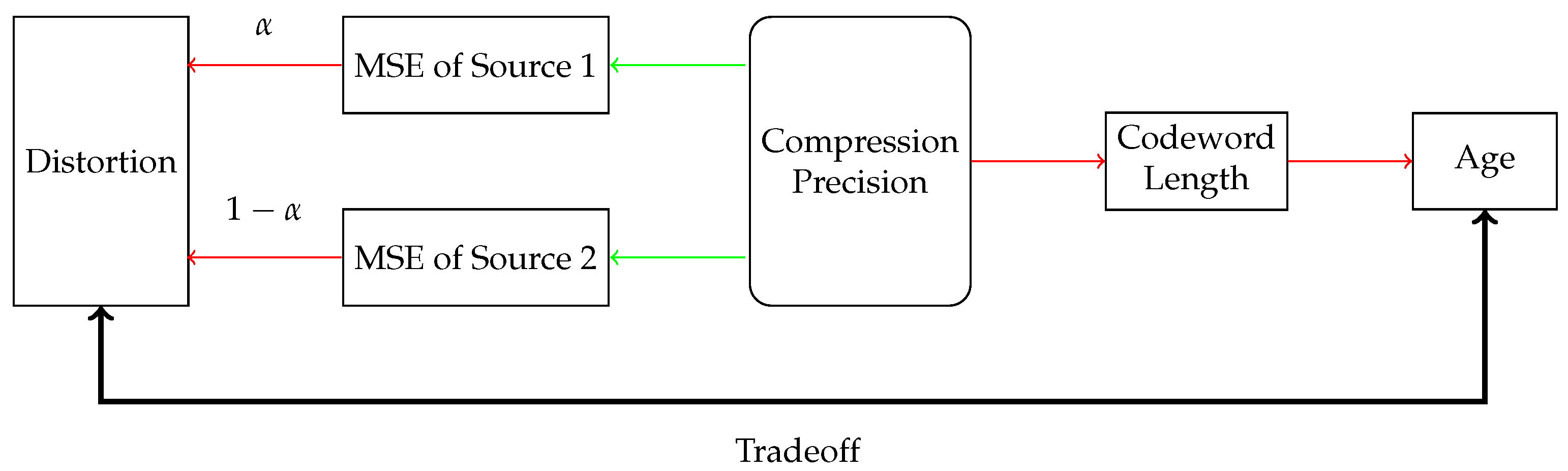

28] established a series of results for single-source systems, multi-source scenarios introduce new issues in the following four aspects: (1) The deployment of multiple quantizers and encoders increases the number of optimization parameters, which are often interdependent. (2) Age evolution in multi-source systems exhibits intrinsic interdependencies distinct from the single-source case. The average age of any individual source may be a nonlinear multivariate function of service times for all sources, which cannot be decoupled, thereby increasing system design complexity. (3) System performance metrics inherently depend on collective behavior across all sources. (4) Significant heterogeneity among sources—in terms of probability distribution characteristics, arrival rates, and other parameters—further complicates the design task. The age–distortion tradeoff visualization is illustrated in

Figure 2.

To accommodate heterogeneous requirements on accuracy, we introduce weights

and

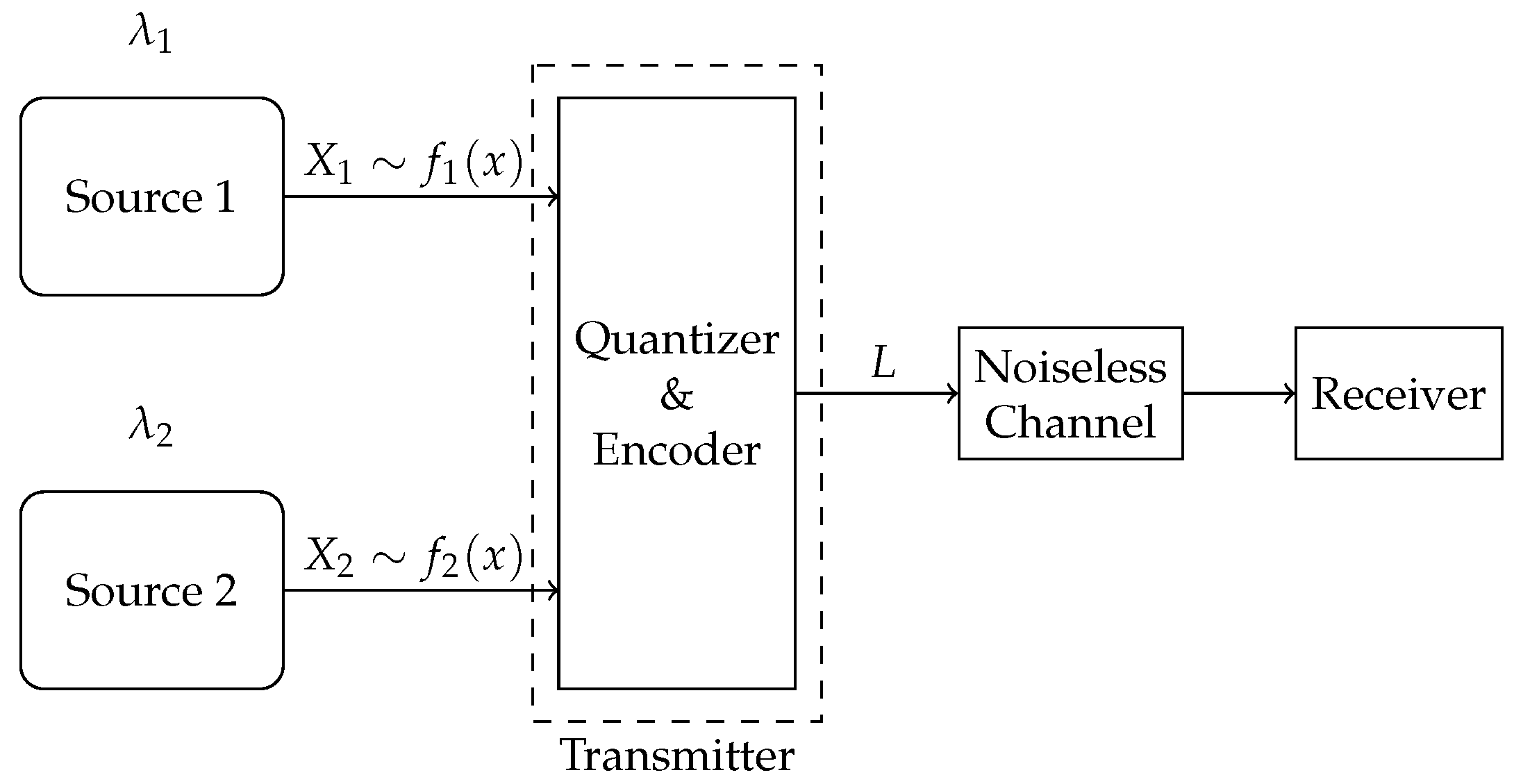

, and use the weighted sum of mean-squared errors (WSMSE) as the system distortion measure. We propose two compression schemes—a multi-quantizer compression scheme with dedicated quantizer–encoder pairs for each source, and a single-quantizer compression scheme, employing a unified quantizer–encoder pair shared across both sources, as illustrated in

Figure 3 and

Figure 4, respectively.

For each compression scheme, we formulate a joint optimization problem for designing the quantizers and encoders that minimizes the sum of the average ages under a given distortion constraint. For the multi-quantizer compression scheme, two uniform quantizers with different parameters, combined with the corresponding AoI-optimal encoders, provide an asymptotically optimal solution. For the single-quantizer compression scheme, a piecewise uniform w-quantizer combined with the corresponding AoI-optimal encoder provides an asymptotically optimal solution. Our analysis reveals that, under both schemes, the optimal sum of the average ages is asymptotically linear in log WSMSE with a common slope, which is determined by the arrival rates of both sources. In comparison, [28] established that in the single-source case the optimal average age versus log MSE is also asymptotically linear. A classical result in high-resolution quantization theory states that entropy versus log MSE is asymptotically linear with a slope of $-1/2$ [29].

The remainder of this paper is organized as follows. In Section 2, we describe the system model and propose two compression schemes. In Section 3, we study the multi-quantizer compression scheme and develop its asymptotically optimal solution. In Section 4, we turn to the single-quantizer compression scheme and develop the corresponding results. The influence of different parameters on system performance is studied in Section 5. Numerical results are provided in Section 6. Finally, we conclude the paper in Section 7.

2. System Model

We consider a continuous-time status-updating system with two independent analog sources, as shown in Figure 1. For each source i ∈ {1, 2}, update symbols are generated as i.i.d. random variables with a known pdf and arrive according to a Poisson process with a source-specific rate. We assume that the pdfs satisfy the following conditions, which are typically employed in high-resolution quantization theory [30]:

(1) Each pdf is continuous and sufficiently smooth, and both pdfs share the same bounded support interval U. (2) The quantization cells are sufficiently small that each pdf can be considered approximately constant within each cell. (3) Each reproduction point is positioned at the centroid of its quantization cell. (4) The quantization rate R is sufficiently high (i.e., $R \to \infty$).

We propose two compression schemes, shown in Figure 3 and Figure 4, respectively: a multi-quantizer compression scheme, in which a dedicated quantizer–encoder pair is assigned to each source, and a single-quantizer compression scheme, in which both sources share a single quantizer–encoder pair. The two schemes differ in the number of quantizer–encoder pairs they require. The multi-quantizer scheme offers greater design flexibility because each pair can be designed separately for its source. In contrast, the single-quantizer scheme requires only one quantizer–encoder pair, whose design must account for the characteristics of both sources simultaneously, which can limit its performance.

During idle channel periods, arriving symbols are quantized and then assigned binary prefix-free codewords by an encoder. The compressed symbols are sent to the receiver over a shared noiseless channel at a rate of one bit per unit time, and any new arrivals generated while the channel is busy are discarded. Therefore, the transmission time of a symbol equals its assigned codeword length, and the system can be modeled as a multi-source (multi-stream) M/G/1/1 non-preemptive system [18]. We assume that the receiver can identify the source of each received symbol.
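Because updates that arrive during a transmission are discarded, only a fraction of each source's updates is ever encoded. The Monte Carlo sketch below illustrates this loss-system behavior under stated assumptions: the arrival rates and codeword-length distributions are hypothetical, and `simulate_acceptance` and `sample_length` are illustrative names. For a single-server loss system with Poisson arrivals, the probability that an arrival finds the link idle is 1/(1 + ρ), where ρ is the sum over sources of arrival rate times mean codeword length; this holds regardless of the length distribution and, by the PASTA property, for each source separately.

```python
import numpy as np

def simulate_acceptance(rates, sample_length, n_arrivals=200_000, seed=1):
    """Per-source fraction of updates that find the shared link idle.

    Model: independent Poisson arrivals per source, one shared unit-rate link,
    transmission time = codeword length, arrivals during a busy period are discarded.
    `sample_length(source, rng)` is a hypothetical codeword-length sampler."""
    rng = np.random.default_rng(seed)
    rates = np.asarray(rates, dtype=float)
    total = rates.sum()
    # Superposition of independent Poisson processes: exponential gaps with the
    # total rate, and each arrival belongs to source i w.p. rates[i] / total.
    times = np.cumsum(rng.exponential(1.0 / total, n_arrivals))
    sources = rng.choice(len(rates), size=n_arrivals, p=rates / total)
    offered = np.bincount(sources, minlength=len(rates))
    accepted = np.zeros(len(rates))
    busy_until = 0.0
    for t, s in zip(times, sources):
        if t >= busy_until:                  # link idle: the update is encoded and sent
            busy_until = t + sample_length(s, rng)
            accepted[s] += 1
        # otherwise the link is busy and the update is discarded
    return accepted / offered

def sample_length(source, rng):
    # Hypothetical length distributions (bits = time units):
    # source 0 uses 2, 3 or 4 bits (mean 3); source 1 uses 4 or 6 bits (mean 5).
    if source == 0:
        return int(rng.integers(2, 5))
    return 4 + 2 * int(rng.integers(0, 2))

rates = [0.2, 0.1]
accept = simulate_acceptance(rates, sample_length)
rho = 0.2 * 3 + 0.1 * 5                       # offered load: sum of rate * mean length
print(accept, 1 / (1 + rho))
```

Both estimated acceptance fractions should be close to the same value 1/(1 + ρ), even though the two sources have different length distributions.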

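We use AoI to quantify information freshness. For source i, the AoI at time t is defined as $\Delta_i(t) = t - u_i(t)$, where $u_i(t)$ denotes the generation time of the most recently received update at time t, and the average age is given by the following:

$$\Delta_i = \lim_{T \to \infty} \frac{1}{T} \int_0^T \Delta_i(t)\, \mathrm{d}t.$$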

For the multi-source M/G/1/1 non-preemptive system, the average age of source i is given by the following [18]:

where  and  denote the transmission times of symbols generated by sources 1 and 2, respectively.
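The average age is the time average of the sawtooth age process. The sketch below computes it directly from a sample path of (generation time, delivery time) pairs by integrating the piecewise-linear age curve between deliveries. The sample paths and the zero initial age are hypothetical, and `average_age` is an illustrative name; summing the per-source results gives the sum of the average ages.

```python
def average_age(updates, horizon):
    """Time-average AoI of one source over [0, horizon].

    `updates`: (generation_time, delivery_time) pairs sorted by delivery time.
    Assumption for illustration: the age is 0 at time 0."""
    area = 0.0
    last_time, last_gen = 0.0, 0.0
    for gen, dlv in updates:
        if dlv > horizon:
            break
        # Between deliveries the age grows with slope 1: age(t) = t - last_gen.
        area += ((dlv - last_gen) ** 2 - (last_time - last_gen) ** 2) / 2
        # A delivery resets the age only if it carries a fresher update.
        last_time, last_gen = dlv, max(last_gen, gen)
    area += ((horizon - last_gen) ** 2 - (last_time - last_gen) ** 2) / 2
    return area / horizon

# Hypothetical sample paths for two sources over [0, 6].
path1 = [(1.0, 3.0), (4.0, 5.0)]
path2 = [(0.5, 2.0)]
print(average_age(path1, 6.0))   # 2.0
total_age = average_age(path1, 6.0) + average_age(path2, 6.0)
```

For the first path, the areas under the age curve on [0, 3], [3, 5], and [5, 6] are 4.5, 6, and 1.5, so the average age is 12/6 = 2.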

The sum of the average ages, which we refer to simply as the AoI, is expressed as follows:

2.1. Multi-Quantizer Compression Scheme

In the multi-quantizer compression scheme, the symbols from the two sources are compressed by two separate quantizer–encoder pairs. For source i, the jth quantization cell of its quantizer is represented by a reproduction point and has a corresponding occurrence probability. The MSE for source i is expressed as follows:

To accommodate heterogeneous accuracy requirements, we assign a weight to the MSE of each source. For the multi-quantizer compression scheme, we define the WSMSE in Equation (5) as the system distortion measure. In this paper, we use the subscript “m” to denote variables under the multi-quantizer compression scheme and “s” for variables under the single-quantizer compression scheme.
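In the high-resolution regime, a uniform quantizer with cell size Δ and centroid reproduction points has an MSE close to Δ²/12, and the WSMSE is the weighted sum of the per-source MSEs. The sketch below checks this numerically on a fine grid. The two truncated Gaussian sources, the cell sizes, and the weights are hypothetical placeholders, and the helper names are illustrative.

```python
import numpy as np

LOW, HIGH, N_GRID = -4.0, 4.0, 400_000
X = LOW + (np.arange(N_GRID) + 0.5) * (HIGH - LOW) / N_GRID   # fine midpoint grid on U

def truncated_gaussian_mass(sigma):
    """Discretized mass of a zero-mean Gaussian truncated to [LOW, HIGH] (hypothetical pdf)."""
    p = np.exp(-X**2 / (2 * sigma**2))
    return p / p.sum()

def uniform_mse(p, step):
    """MSE of a uniform quantizer with cell size `step` and centroid reproduction points."""
    idx = np.floor((X - LOW) / step).astype(int)
    cell_p = np.bincount(idx, weights=p)
    centroid = np.bincount(idx, weights=p * X) / np.where(cell_p > 0, cell_p, 1.0)
    return float(np.sum(p * (X - centroid[idx]) ** 2))

step1, step2 = 0.1, 0.05          # hypothetical cell sizes for sources 1 and 2
d1 = uniform_mse(truncated_gaussian_mass(1.0), step1)
d2 = uniform_mse(truncated_gaussian_mass(0.6), step2)
w1, w2 = 0.7, 0.3                 # hypothetical weights on the two MSEs
wsmse = w1 * d1 + w2 * d2
print(d1 / (step1**2 / 12), d2 / (step2**2 / 12), wsmse)
```

Both printed ratios should be close to 1, which is the approximation underlying the distortion expressions of the high-resolution analysis.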

For a given pmf, we assign binary prefix-free codewords to the quantization cells, where the jth cell of source i receives its own codeword length. The codeword length of a quantized symbol of source i is therefore a random variable, and the design ranges over the set of all prefix-free codeword length assignments. As established in information theory, the codeword lengths of any prefix-free code must satisfy the Kraft inequality, i.e., the sum of 2 raised to the negative codeword lengths over all cells cannot exceed 1 ([29], p. 24). In our analysis, we focus on the high-resolution regime, where the average codeword lengths are sufficiently large. We therefore ignore the integer constraint and consider real-valued length assignments. Since the transmission time of a symbol equals its assigned codeword length, the AoI is given by the following:

The AoI is a nonlinear function of the codeword lengths of both sources, which is difficult to decouple directly. Furthermore, each quantizer determines the output pmf of its source. Therefore, for given quantizers (and hence given pmfs), the AoI minimization problem can be formulated as a joint codeword length assignment problem, a complex nonlinear fractional problem that involves the joint design of two sets of codeword lengths, as follows:

Given the arrival processes of both sources, the AoI is governed by the assigned codeword lengths, which in turn depend on the pmfs of the quantizer outputs. The WSMSE, which is determined by the MSEs of both sources, is directly influenced by the design of both quantizers. For analog sources, this framework therefore gives rise to an inherent tradeoff between AoI and distortion, which calls for the joint optimization of both quantizer–encoder pairs and leads to the following generalization of Problem (7):

2.2. Single-Quantizer Compression Scheme

In the single-quantizer compression scheme, the symbols generated by both sources are compressed by a shared quantizer–encoder pair. Therefore, the design of this pair depends simultaneously on both sources.
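A shared quantizer sees two different input pdfs, so the same cells induce two different output pmfs, and a single set of codeword lengths yields source-specific first and second moments of the transmission time. The sketch below illustrates this under hypothetical assumptions: two truncated Gaussian sources, a uniform shared quantizer, and, purely for illustration, Shannon lengths of the equal-weight mixture of the two pmfs as the shared length assignment. This is not the AoI-optimal assignment studied in the paper.

```python
import numpy as np

LOW, HIGH, N_GRID = -4.0, 4.0, 400_000
X = LOW + (np.arange(N_GRID) + 0.5) * (HIGH - LOW) / N_GRID   # fine grid on the common support

def truncated_gaussian_mass(mu, sigma):
    """Discretized mass of a Gaussian truncated to [LOW, HIGH] (hypothetical source pdf)."""
    p = np.exp(-(X - mu) ** 2 / (2 * sigma**2))
    return p / p.sum()

step = 0.25                                     # hypothetical cell size of the shared quantizer
idx = np.floor((X - LOW) / step).astype(int)    # the same cells are used for both sources
n_cells = int(idx.max()) + 1
pmf1 = np.bincount(idx, weights=truncated_gaussian_mass(0.0, 1.0), minlength=n_cells)
pmf2 = np.bincount(idx, weights=truncated_gaussian_mass(1.0, 0.5), minlength=n_cells)

# One shared set of real-valued codeword lengths (illustrative choice only):
# Shannon lengths of the equal-weight mixture of the two pmfs.
lengths = -np.log2(0.5 * (pmf1 + pmf2))

moments = {}
for name, pmf in (("source 1", pmf1), ("source 2", pmf2)):
    first = float(np.sum(pmf * lengths))        # mean transmission time of this source
    second = float(np.sum(pmf * lengths**2))    # second moment of its transmission time
    moments[name] = (first, second)
    print(f"{name}: E[L] = {first:.3f}, E[L^2] = {second:.3f}")
```

Every cell has the same length for both sources, yet the more concentrated source 2 ends up with a smaller mean length than source 1, so the two sources contribute different moments to the AoI.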

For a given quantizer Q, the jth quantization cell is represented by a reproduction point. The quantizer maps the continuous inputs of sources 1 and 2 to discrete outputs, whose pmfs are defined as follows:

These pmfs give the occurrence probabilities of the cells for sources 1 and 2, respectively. The MSE for source i is given by the following:

For the single-quantizer compression scheme, we similarly adopt the WSMSE as the system distortion metric, as follows:

We assign binary prefix-free codewords to the quantization cells, with one codeword length for each cell j. Since both sources share the same quantizer–encoder pair, the quantization cells, reproduction points, and codeword length assignments are identical for the two sources. However, the two sources induce different pmfs over the cells, which leads to different statistics of the transmission times: a given cell has the same transmission time for both sources, but the transmission-time distributions differ. To capture this difference explicitly, we define the first and second moments of the codeword lengths separately for each source i. The AoI is given by the following:

This AoI is a nonlinear function of the codeword length statistics of both sources, which is difficult to decouple directly. Furthermore, the quantizer Q determines the output pmf of each source i, so a single set of codeword lengths must be designed for both pmfs. This leads to the following AoI minimization problem:

When analog sources are considered, this framework gives rise to an inherent tradeoff between AoI and distortion. For both sources, the quantizer design and the codeword length assignment are intrinsically coupled: the quantizer determines both the distortion and the output pmfs, which in turn govern the optimal codeword length assignment and hence the achievable AoI. We therefore study a joint quantization and encoding problem that optimizes the AoI under a distortion constraint, as follows:

The encoders differ between the two compression schemes. The multi-quantizer scheme employs two distinct sets of codeword lengths (one for each source), whereas the single-quantizer scheme employs a single set of codeword lengths for both sources. For notational simplicity, in both schemes we refer to the encoders whose codeword lengths solve optimization problems (7) and (13) collectively as the AoI-optimal encoder. In addition, the Shannon encoder is defined as the encoder with real-valued lengths $\ell_j = -\log_2 p_j$, where $p_j$ represents the probability of the jth realization.
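The Shannon encoder's real-valued lengths satisfy the Kraft inequality with equality, and their mean equals the entropy of the pmf; rounding them up gives the classical integer Shannon code, which still satisfies the Kraft inequality. The sketch below uses a hypothetical four-point pmf and an illustrative helper, `shannon_lengths`.

```python
import numpy as np

def shannon_lengths(pmf):
    """Real-valued Shannon lengths l_j = -log2(p_j); the integer constraint is ignored."""
    return -np.log2(np.asarray(pmf, dtype=float))

pmf = np.array([0.4, 0.3, 0.2, 0.1])            # hypothetical pmf of a quantizer output
lengths = shannon_lengths(pmf)
kraft_sum = float(np.sum(2.0 ** -lengths))      # Kraft inequality holds with equality
mean_length = float(np.sum(pmf * lengths))      # equals the entropy H(p)
entropy = float(-np.sum(pmf * np.log2(pmf)))

# Rounding up yields integer lengths that still satisfy the Kraft inequality,
# at the cost of less than one extra bit on average.
int_lengths = np.ceil(lengths)
int_kraft_sum = float(np.sum(2.0 ** -int_lengths))
int_mean_length = float(np.sum(pmf * int_lengths))
print(kraft_sum, mean_length, entropy, int_lengths, int_kraft_sum, int_mean_length)
```

Here the rounded lengths are 2, 2, 3, and 4 bits, with a Kraft sum of 0.6875 and a mean length of 2.4 bits, compared with an entropy of about 1.85 bits.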

2.3. Preliminaries

In this section, we present some definitions that will be used throughout the rest of the text.

Definition 1. For the multi-quantizer compression scheme, given a distortion threshold D, the optimal AoI is defined as follows:

where the infimum is taken over all quantizers satisfying the distortion constraint and all codeword length assignments. A quintuple is asymptotically optimal under the distortion threshold D if:

For any distortion thresholds and satisfying , we define the constrained optimal AoI under the distortion thresholds and as follows:

Similarly, a triplet is asymptotically optimal under the distortion thresholds and if:

Remark 1. Since the system distortion metric is the WSMSE, the design problem in the multi-quantizer compression scheme under a distortion threshold D involves not only the design of the quantizers and the corresponding encoders but also the allocation of an appropriate distortion to each source. Consequently, the complete solution is formally represented as a quintuple.

Definition 2. For the single-quantizer compression scheme, given a distortion threshold D, the optimal AoI is given by the following:

A pair is asymptotically optimal under the distortion threshold D if:

Subsequently, we present some definitions and known results from high-resolution quantization theory. Given a quantizer Q, the output entropy is denoted by . A quantizer that achieves the minimum entropy for a given distortion threshold D is called the optimal quantizer and is denoted by . The uniform quantizer is denoted by . An asymptotically optimal quantizer is defined as follows:

Definition 3. A quantizer Q is asymptotically optimal if:

For a given pdf and weight function, the weighted mean-squared error (WMSE) distortion is defined as follows:

According to the results of [30], for a continuous and sufficiently smooth weight function and the corresponding WMSE distortion, a piecewise uniform quantizer, which we call the w-quantizer, can be constructed as follows. First, the support interval U is partitioned into intervals of equal step size. For a sufficiently small step size, the weight function is approximately constant within each interval. Then, each interval is subdivided into cells whose length depends on the approximately constant value of the weight function in that interval, and the midpoint of each cell is used as the reproduction point. The results are recapitulated as follows:

Lemma 1. ([30]). Let be a continuous, sufficiently smooth weight function with the bounded support interval U. Under the WMSE distortion, the w-quantizer is asymptotically optimal, as follows:

Furthermore, the asymptotic behavior of the distortion satisfies the following:

and

where h(X) is the differential entropy of the random variable X.

Remark 2. A well-known result in high-resolution quantization theory is that the uniform quantizer is asymptotically optimal for entropy-constrained quantization [31]; it is the special case of the w-quantizer with a constant weight function. Its key properties follow directly from Lemma 1 with a constant weight function, as follows:

3. Asymptotically Optimal Solution for Multi-Quantizer Compression Scheme

For the multi-quantizer compression scheme, we develop an asymptotically optimal solution. For notational simplicity, we define the following for use throughout the paper:

The results are then presented as follows:

Theorem 1. The uniform quantizer , with cell size and distortion for source 1, along with the uniform quantizer with cell size and distortion for source 2, as well as the AoI-optimal encoder, together provide an asymptotically optimal solution to problem (8), in the sense that this solution asymptotically achieves the optimal AoI as the distortion $D \to 0$. Under this solution, the asymptotic behavior of the optimal AoI satisfies the following:

and

Remark 3. Theorem 1 reveals that the performance of the multi-quantizer compression scheme depends strongly on the weight α and the ratio , since these parameters directly determine the distortion allocated to each source. The ratio quantifies the relative contribution of source 1 to the sum of the average ages, as implied by the proof below. Intuitively, a larger ratio requires a smaller average age for source 1 to minimize the sum of the average ages, while a smaller value of α indicates that the corresponding source can tolerate a larger distortion. This is consistent with the optimal distortion allocation . The analysis for follows analogously.

Remark 4. Let

As and , and . Then,

which yields

Thus, we define δ as the step size for the multi-quantizer compression scheme.

Remark 5. A classical result in high-resolution quantization theory is that entropy versus log MSE is asymptotically linear with a slope of $-1/2$ [29]. Similarly, our multi-quantizer scheme exhibits an analogous relationship: the optimal AoI versus log WSMSE is asymptotically linear, with a slope that depends explicitly on the source arrival rates.

The proof of Theorem 1 proceeds as follows. First, a lower bound on the AoI is constructed that decouples the design of the codeword length assignments for the two sources. Then, we obtain the upper and asymptotically lower bounds of the optimal AoI by (i) allocating an appropriate distortion to each source and (ii) designing the corresponding quantizer–encoder pairs. We further prove that the two bounds are asymptotically tight. In this process, to avoid solving Problem (8) directly, we use the performance of the Shannon encoder to approximate the solution of (8) in the high-resolution regime. Finally, we prove the asymptotic optimality of the solution and analyze its performance. The proof flowchart of Theorem 1 is shown in Figure 5.

Proof. We divide the proof of Theorem 1 into four steps.

Lemma 2. The AoI is lower bounded by the following:

For notational simplicity, we define the following:

where each of these quantities denotes the output entropy of the uniform quantizer for the corresponding source with its allocated distortion.

The following lemma gives an asymptotically lower bound of the optimal AoI:

Lemma 3. For any , there exists a distortion such that , the following inequality holds:

where and .

Given the optimal distortion allocation scheme, the AoI achieved by the corresponding uniform quantizers with the Shannon encoders is clearly an upper bound of the optimal AoI, expressed as follows:

Step 3. We proceed to prove that the upper bound and the asymptotically lower bound of the optimal AoI coincide asymptotically.

Lemma 4. If the uniform quantizers and the Shannon encoders for sources 1 and 2 are given, then

where and .

From Lemma 4, it follows that for any , there exists some such that implies the following:

Letting , for any , satisfying and , the following inequality holds:

Since can be arbitrarily small, for sufficiently small D, the following inequality holds:

Thus, the quintuple is an asymptotically optimal solution.

Next, we prove (32) and (33). We have the following:

Then, we derive the following:

From the results of high-resolution quantization theory, we obtain the following:

Equations (46)–(48) yield the following:

Then, (45), (49), and Lemma 4 imply the following:

Then, we can directly obtain the following result:

This completes the proof. □

4. Asymptotically Optimal Solution for Single-Quantizer Compression Scheme

For the single-quantizer compression scheme, we develop an asymptotically optimal solution. Let and introduce two random variables and , with the pdfs and , respectively.

Theorem 2. The pair , consisting of the w-quantizer and the AoI-optimal encoder, forms an asymptotically optimal solution to problem (14), as follows:

Under this solution, the asymptotic behavior of the optimal AoI satisfies the following:

where is the relative entropy between and . Furthermore, we have the following:

Remark 6. Theorem 2 reveals that the performance of the single-quantizer compression scheme also depends strongly on the weight α and the ratio . As implied by the proof below, this scheme can essentially be viewed as a single-source compression problem. The relative entropy characterizes the “mismatch” between the equivalent probability distribution in the objective function and that in the distortion constraint.
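Remark 6 interprets a relative entropy term as a measure of mismatch between two distributions. As a numerical aid, the sketch below evaluates the relative entropy D(f‖g) in bits by a Riemann sum and compares it with the closed form for two Gaussians. The two Gaussian densities are hypothetical stand-ins for the equivalent pdfs of Theorem 2, and `relative_entropy_bits` and `gaussian` are illustrative names.

```python
import numpy as np

def relative_entropy_bits(f, g, x):
    """D(f || g) in bits for two densities sampled on the uniform grid x (Riemann sum)."""
    dx = x[1] - x[0]
    f = f / (f.sum() * dx)          # renormalize on the truncated grid
    g = g / (g.sum() * dx)
    mask = f > 0                    # terms with f = 0 contribute nothing
    return float(np.sum(f[mask] * np.log2(f[mask] / g[mask])) * dx)

def gaussian(x, mu, sigma):
    return np.exp(-(x - mu) ** 2 / (2 * sigma**2)) / (sigma * np.sqrt(2 * np.pi))

x = np.linspace(-8.0, 8.0, 160_001)
f = gaussian(x, 0.0, 1.0)           # hypothetical stand-ins for the two equivalent pdfs
g = gaussian(x, 0.5, 1.2)
d_fg = relative_entropy_bits(f, g, x)

# Closed form D(N(0, 1) || N(0.5, 1.2^2)) in nats, converted to bits.
closed_form = (np.log(1.2) + (1.0 + 0.5**2) / (2 * 1.2**2) - 0.5) / np.log(2)
print(d_fg, closed_form)
```

The relative entropy is zero only when the two densities coincide, so a larger value indicates a larger mismatch between them.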

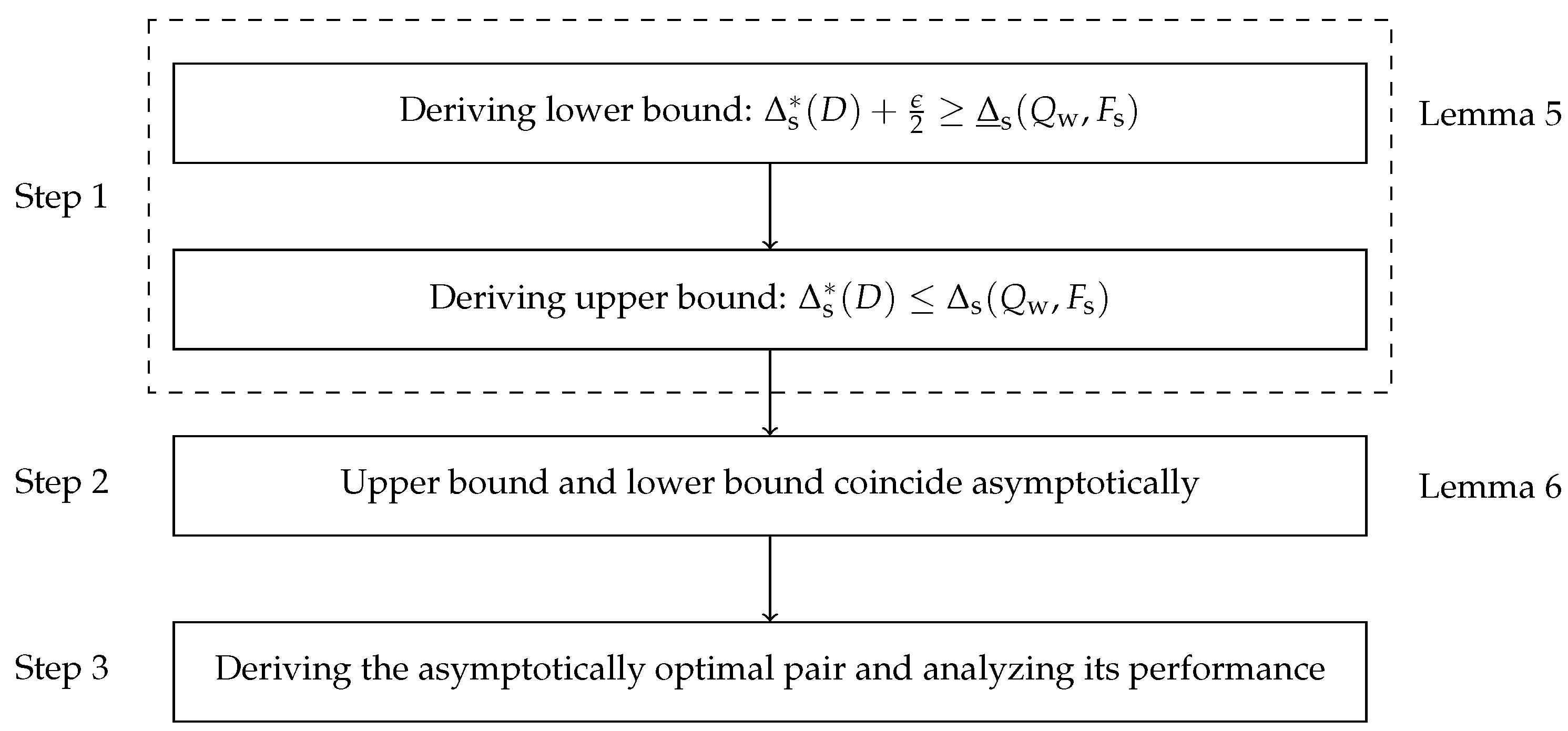

The proof of Theorem 2 proceeds as follows. First, for the single-quantizer compression scheme, we derive the upper and asymptotically lower bounds of the optimal AoI. Then, we prove that the two bounds asymptotically coincide. Crucially, this is achieved by using the performance of the Shannon encoder to approximate the solution of (14) in the high-resolution regime, which avoids solving the original nonlinear fractional optimization problem directly. Finally, we derive the asymptotically optimal pair and analyze its performance. The proof flowchart of Theorem 2 is shown in Figure 6.

Proof. We divide the proof into the following three steps:

Let . Then, the original WSMSE distortion metric in (11) is transformed into the following WMSE metric:

By treating as a weight function, the original optimization problem (14) can be reformulated as follows:

Then, we present an asymptotically lower bound of the optimal AoI, as follows:

Lemma 5. For any , there exists a distortion such that , the following inequality holds:

where

Proof. By introducing the pmf , we obtain the following:

where (a) follows from Lemma 2.

For any , from the definition of the infimum, there exists a pair satisfying . In addition, from Lemma 1, we know that the w-quantizer is asymptotically optimal under the WMSE distortion. Therefore, there exists some such that implies . Then

where (a) uses the fact that for a prefix-free code. This completes the proof. □
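Two standard properties of prefix-free codes are that their lengths satisfy the Kraft inequality and that their expected length is at least the entropy of the pmf. The sketch below checks both for a binary Huffman code, an optimal integer-length prefix-free code, on a hypothetical pmf. The helper `huffman_lengths` is illustrative and is not part of the paper's construction.

```python
import heapq
import math

def huffman_lengths(pmf):
    """Codeword lengths of a binary Huffman code for the given pmf."""
    if len(pmf) == 1:
        return [1]
    # Heap entries: (probability, unique tie-breaker, symbol indices in this subtree).
    heap = [(p, j, [j]) for j, p in enumerate(pmf)]
    heapq.heapify(heap)
    lengths = [0] * len(pmf)
    counter = len(pmf)
    while len(heap) > 1:
        p_a, _, syms_a = heapq.heappop(heap)
        p_b, _, syms_b = heapq.heappop(heap)
        for j in syms_a + syms_b:        # each merge adds one bit to every symbol below it
            lengths[j] += 1
        heapq.heappush(heap, (p_a + p_b, counter, syms_a + syms_b))
        counter += 1
    return lengths

pmf = [0.4, 0.3, 0.2, 0.1]                      # hypothetical pmf
lengths = huffman_lengths(pmf)
avg_len = sum(p * l for p, l in zip(pmf, lengths))
entropy = -sum(p * math.log2(p) for p in pmf)
kraft = sum(2.0 ** -l for l in lengths)
print(lengths, avg_len, entropy, kraft)
```

For this pmf, the Huffman lengths are 1, 2, 3, and 3 bits, giving an average length of 1.9 bits, which lies between the entropy (about 1.85 bits) and the entropy plus one bit.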

The AoI achieved by the w-quantizer and the Shannon encoder provides an upper bound of the optimal AoI. Then

Step 2. We prove that the two bounds asymptotically coincide.

Lemma 6. If the w-quantizer and the Shannon encoder are given, then we have the following:

For any , there exists some such that implies . Thus, we have the following:

Letting , for any D satisfying , we have the following:

Since can be arbitrarily small, for sufficiently small D, the following results can be obtained:

Thus, the w-quantizer and the AoI-optimal encoder are asymptotically optimal.

Next, we prove (53) and (54). We have the following:

By using Lemma 1, we obtain the following:

Then, (67), (68), and Lemma 6 imply the following:

From (69), the following result can be directly obtained:

This completes the proof. □

6. Numerical Results

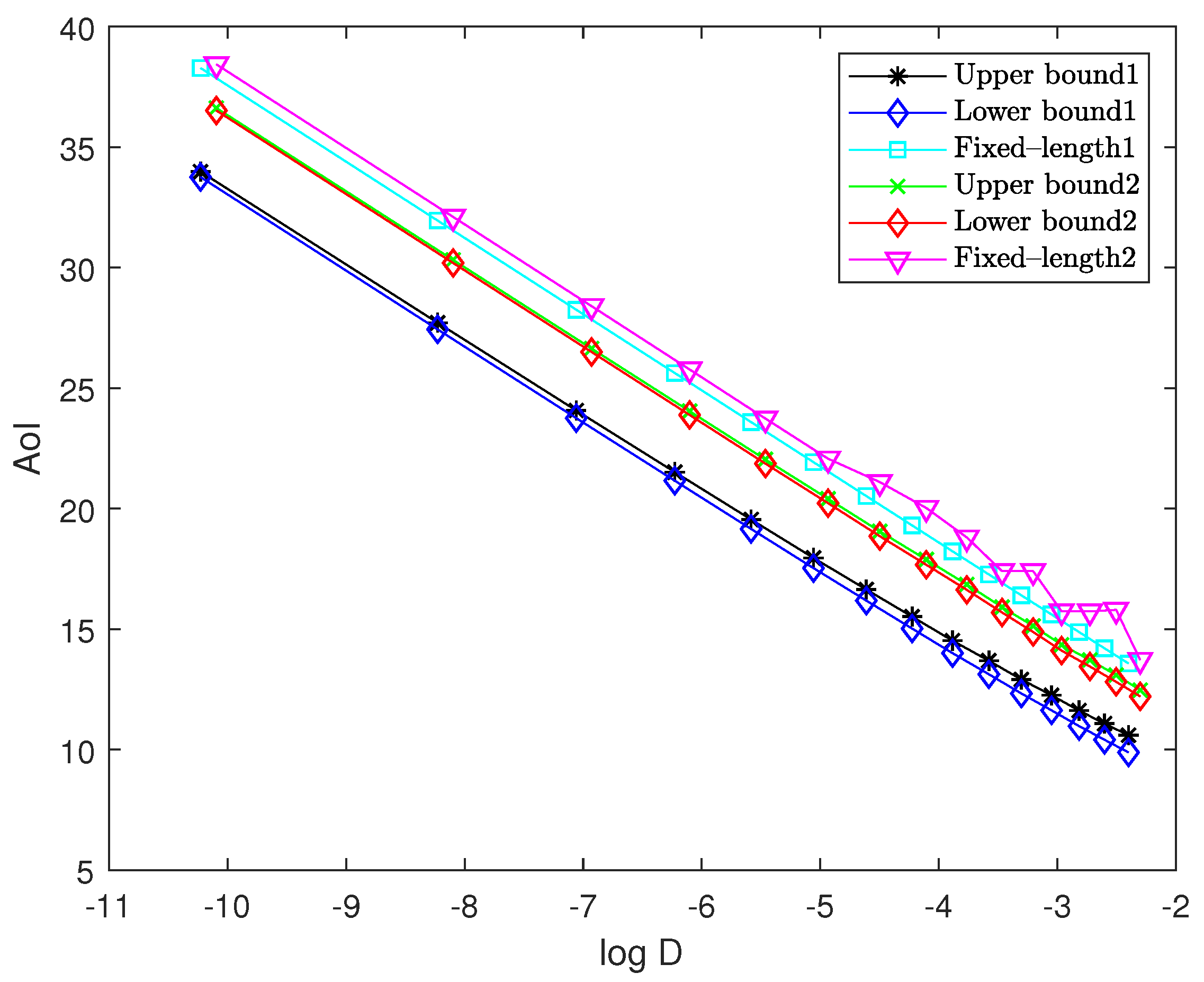

In this section, we present numerical results to evaluate the performance of the proposed solutions. To satisfy the assumptions on the pdfs, we truncate the pdfs of sources 1 and 2 to a common bounded interval. We use “Upper bound1” and “Lower bound1” to denote the upper bound and the asymptotically lower bound of the optimal performance for the multi-quantizer compression scheme, respectively, and “Upper bound2” and “Lower bound2” to denote the corresponding bounds for the single-quantizer compression scheme. In addition, we use “Fixed-length1” and “Fixed-length2” to denote fixed-length encoding for the multi-quantizer and the single-quantizer compression schemes, respectively.

Figure 7 illustrates the upper bound and the asymptotically lower bound of the optimal AoI versus log distortion for both compression schemes. The arrival rates for sources 1 and 2 are set to and , respectively, and the weight is set to . From these values, we compute the parameters and . As the step size decreases from 1.5 to 0.1, the performance curves move from the lower right to the upper left. For the multi-quantizer compression scheme, we implement uniform quantizers with cell sizes for source 1 and for source 2, each paired with its corresponding Shannon code. The resulting AoI, plotted as the black curve, serves as the upper bound of the optimal AoI, while the asymptotically lower bound of the optimal AoI is plotted as the blue curve.
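To see why fixed-length encoding leaves a gap, the sketch below compares, for a uniform quantizer, the length of a fixed-length code (enough bits to index every cell) with the mean length of the real-valued Shannon code, which equals the output entropy. The source is a hypothetical truncated standard Gaussian and `rates_for_step` is an illustrative name; the sketch compares code lengths only and does not evaluate the AoI expression behind the figure.

```python
import numpy as np

LOW, HIGH, N_GRID = -4.0, 4.0, 400_000
X = LOW + (np.arange(N_GRID) + 0.5) * (HIGH - LOW) / N_GRID
P = np.exp(-X**2 / 2)
P /= P.sum()           # hypothetical source: standard Gaussian truncated to [LOW, HIGH]

def rates_for_step(step):
    """Return (fixed-length bits, mean Shannon length in bits) for a uniform quantizer."""
    idx = np.floor((X - LOW) / step).astype(int)
    pmf = np.bincount(idx, weights=P)
    fixed = float(np.ceil(np.log2(pmf.size)))      # same length for every cell
    nz = pmf[pmf > 0]
    shannon = float(-np.sum(nz * np.log2(nz)))     # mean of -log2 p_j = output entropy
    return fixed, shannon

results = {step: rates_for_step(step) for step in (1.0, 0.5, 0.25, 0.1)}
for step, (fixed, shannon) in results.items():
    print(f"step={step}: fixed-length {fixed:.0f} bits, Shannon {shannon:.3f} bits")
```

Because the transmission time equals the codeword length, the extra bits of the fixed-length code translate directly into longer service times.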

For the single-quantizer compression scheme, we employ the w-quantizer with its corresponding Shannon code. The resulting AoI, plotted as the green curve, is the upper bound of the optimal AoI, while the asymptotically lower bound is plotted as the red curve. We observe that the gaps between the upper and lower bounds are remarkably small for both schemes. As the quantization step size decreases, the gaps approach zero, confirming Lemmas 4 and 6. In addition, all four curves exhibit asymptotically linear behavior with the same slope, confirming Theorems 1 and 2. Furthermore, keeping the quantizer structures described above, we evaluate the performance of fixed-length encoding for the multi-quantizer compression scheme (light blue curve) and the single-quantizer compression scheme (magenta curve). Significant performance gaps exist between fixed-length encoding and the theoretical asymptotic optimum for both schemes. Additionally, the single-quantizer scheme with fixed-length encoding (magenta curve) exhibits jitter at low bit rates. This jitter stems from the design principle of the w-quantizer, which subdivides each interval of a uniform partition into a number of cells determined by the local value of the weight function. At low bit rates (where the step size is large), the weight function cannot be treated as constant within each interval, which leads to poor approximation performance. As the step size decreases, the jitter gradually diminishes and eventually disappears once the quantization becomes sufficiently fine.
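The w-quantizer construction described in Section 2.3 can be sketched as follows. Since the construction leaves the cell length implicit, the sketch assumes cell lengths proportional to 1/√w within each interval, which matches the classical point density proportional to √w for entropy-constrained quantization under weighted MSE. The weight function, interval length, base cell size, and rounding rule are hypothetical, and `w_quantizer_cells` is an illustrative name.

```python
import numpy as np

def w_quantizer_cells(weight, low, high, interval_len, base_cell):
    """Hypothetical piecewise-uniform quantizer for a weight function `weight`.

    1. Split [low, high] into intervals of length `interval_len`; treat the weight as
       constant in each, using its value at the interval midpoint.
    2. Split interval k into n_k equal cells, n_k = round(interval_len * sqrt(w_k) / base_cell),
       i.e. target cell length base_cell / sqrt(w_k) (assumed point density ~ sqrt(w)).
    Returns the cell edges and the midpoints used as reproduction points."""
    n_intervals = int(round((high - low) / interval_len))
    edges = [low]
    for k in range(n_intervals):
        a = low + k * interval_len
        w_k = weight(a + interval_len / 2)
        n_k = max(1, int(round(interval_len * np.sqrt(w_k) / base_cell)))
        edges.extend(a + interval_len * np.arange(1, n_k + 1) / n_k)
    edges = np.array(edges)
    points = (edges[:-1] + edges[1:]) / 2
    return edges, points

def weight(x):
    # Hypothetical smooth weight function on U = [-4, 4].
    return 1.0 + np.exp(-x**2)

edges, points = w_quantizer_cells(weight, -4.0, 4.0, interval_len=0.5, base_cell=0.1)
print(len(points), np.diff(edges).min(), np.diff(edges).max())
```

Where the weight is larger (near 0), each interval receives more and shorter cells. Rounding to an integer number of cells per interval means the realized cells only approximate the target lengths when an interval holds few cells, which is one simple way to see why coarse settings behave less regularly.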

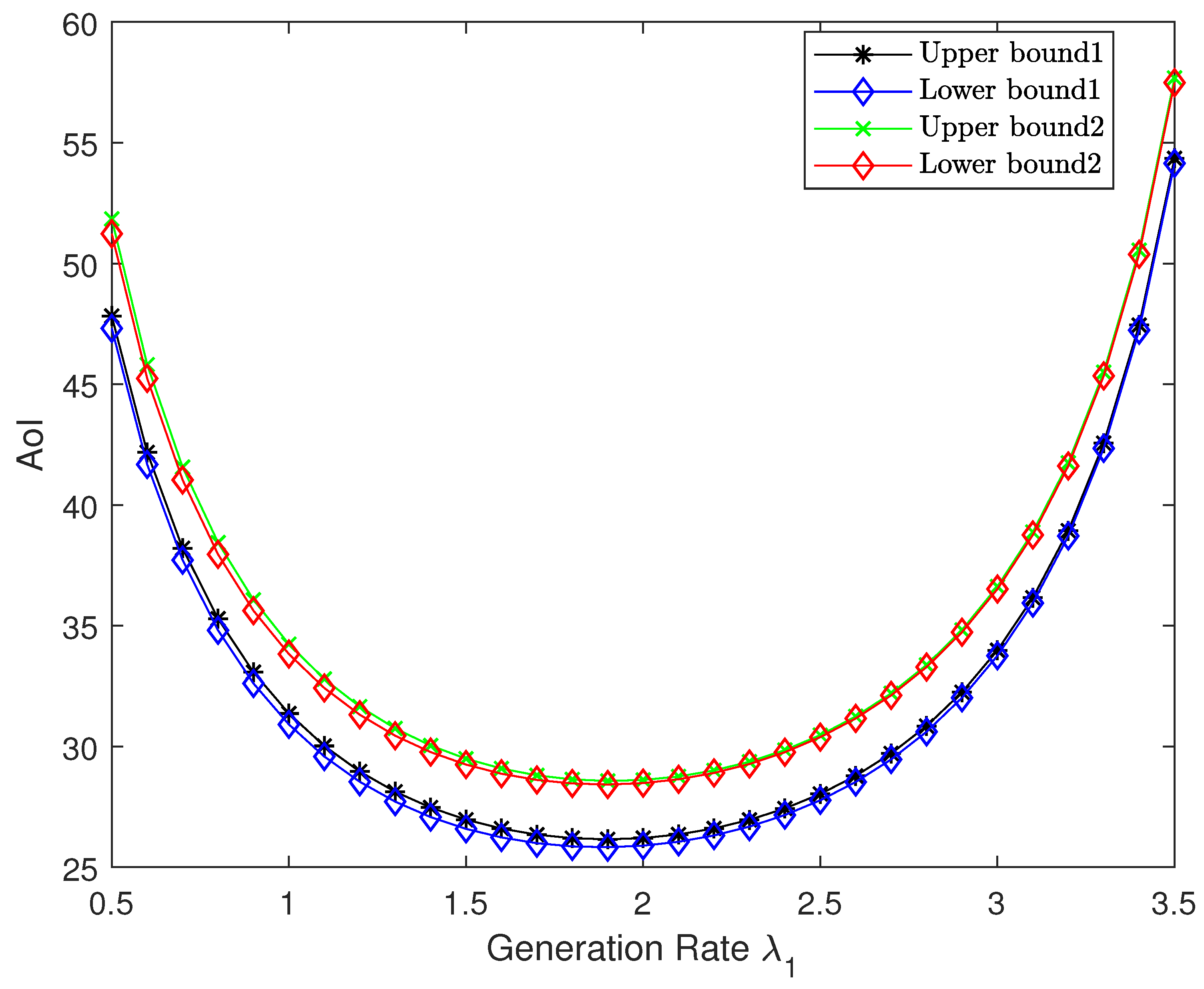

Figure 8 illustrates the impact of source 1’s arrival rate on the optimal AoI for both compression schemes in the high-resolution regime, with the total arrival rate fixed at , the weight set to , and the step size set to . We vary source 1’s arrival rate from 0.5 to 3.5 in steps of 0.1 and plot the corresponding upper bounds and asymptotically lower bounds for both compression schemes. Notably, the gap between the upper and lower bounds remains small for each scheme. In addition, the bounds for both compression schemes first decrease monotonically and then increase with respect to source 1’s arrival rate, with a unique minimum, confirming Proposition 1. This behavior can be explained by information freshness dynamics. When the arrival rate of either source becomes very small, that source is updated rarely, and its accumulated age dominates the overall AoI. Specifically, when source 1’s arrival rate is too small, its infrequent updates create an age bottleneck; conversely, when source 1’s arrival rate approaches the total arrival rate (so that source 2’s arrival rate becomes small), source 2 becomes the freshness-limiting factor. This dependency produces the observed profile, with the optimum at a balanced rate allocation that avoids either extreme.

Figure 9 analyzes the effect of the weight α on the optimal AoI for both schemes in the high-resolution regime. The arrival rates for sources 1 and 2 are set to and , respectively, and the step size is set to . We vary α from 0.1 to 0.9 in steps of 0.05 and plot the upper and the asymptotically lower bounds. The results reveal that the bounds are concave in α, with a unique maximum at , confirming Propositions 2 and 3.

The numerical results demonstrate that the multi-quantizer compression scheme, with its additional design flexibility, achieves better performance than the single-quantizer compression scheme. The multi-quantizer scheme can tailor the quantization cells to each source, whereas the single-quantizer scheme treats the two sources as a single equivalent source, which limits its adaptability.