Abstract

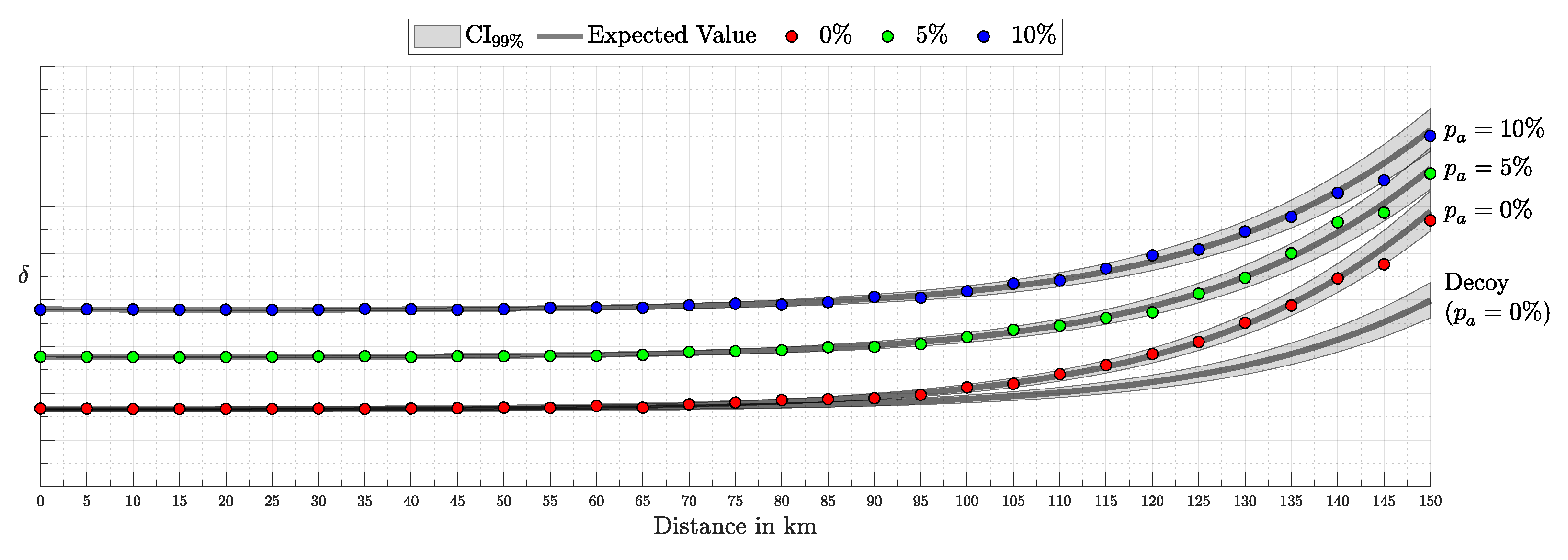

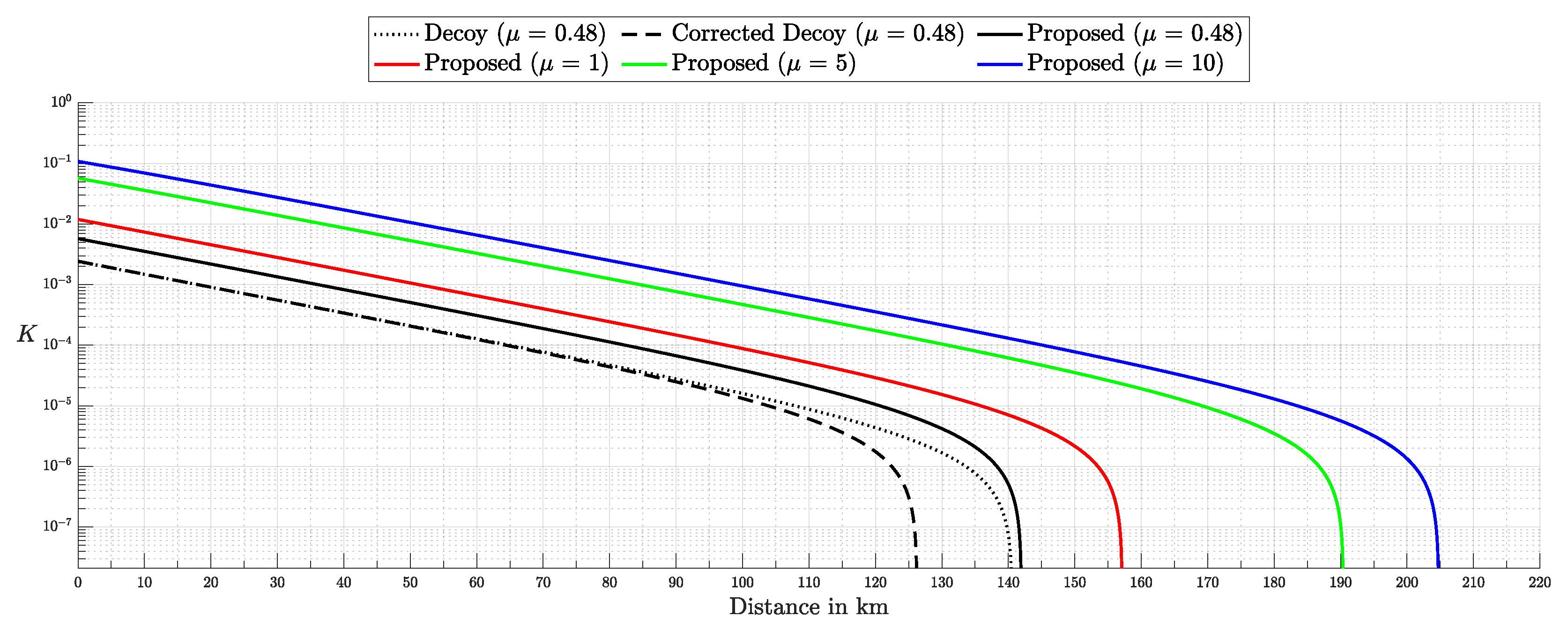

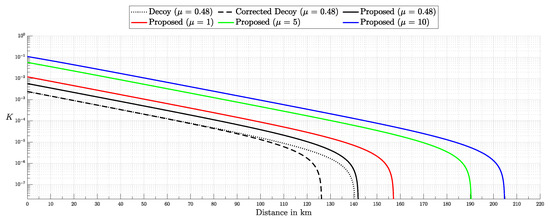

Quantum Key Distribution (QKD) enables the sharing of cryptographic keys secured by quantum mechanics. The BB84 protocol assumes single-photon sources, but practical systems rely on weak coherent pulses vulnerable to Photon-Number-Splitting (PNS) attacks. The Gottesman–Lo–Lütkenhaus–Preskill (GLLP) framework addresses these imperfections, deriving secure key rate bounds under limited PNS scenarios. The decoy-state protocol further improves performance by refining single-photon yield estimates, but still considers multi-photon states as insecure, thereby limiting intensities and constraining key rate and distance. More recently, finite-key security bounds for decoy-state QKD have been extended to address general attacks, ensuring security against adversaries capable of exploiting arbitrary strategies. In this work, we focus on a specific class of attacks, the generalized PNS attack, and demonstrate that higher pulse intensities can be securely used by employing Bayesian inference to estimate key parameters directly from observed data. By raising the mean pulse intensity to 10 photons per pulse, we achieve a 50-fold increase in key rate and a 62.2% increase in operational range (about 200 km) compared to the decoy-state protocol. Furthermore, we accurately model after-pulsing using a Hidden Markov Model (HMM) and reveal inaccuracies in decoy-state calculations that may produce erroneous key-rate estimates. While this methodology does not address all possible attacks, it provides a new approach to security proofs in QKD by shifting from worst-case assumption analysis to observation-dependent inference, advancing the reach and efficiency of discrete-variable QKD protocols.

1. Introduction

QKD allows two parties, Alice and Bob, to share a secret key secured by quantum mechanics. The BB84 protocol [1] introduced QKD using single-photon polarization states and its unconditional security was proven in [2] via quantum error correction and entanglement purification, assuming ideal single-photon sources. True single-photon sources are hard to implement. Instead, most QKD systems use weak coherent pulses from attenuated lasers, which produce multi-photon states. These enable a Photon-Number-Splitting (PNS) attack [3], where an eavesdropper, commonly referred to as Eve, intercepts some photons and forwards the rest, gaining key information undetected and compromising security.

The GLLP framework [4] addresses this by extending security proofs to imperfect devices and multi-photon pulses. It ensures secure QKD by treating multi-photon pulses as “tagged” and deriving a secure lower bound on the secret key rate that can be extracted from the sifted key:

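$$R \ge (1-\Delta) - H_2(\delta) - (1-\Delta)\,H_2\!\left(\frac{\delta}{1-\Delta}\right),$$

where $\delta$ is the overall error rate, $\Delta$ is the fraction of tagged photons, and $H_2(x) = -x\log_2 x - (1-x)\log_2(1-x)$ is the binary Shannon entropy function.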
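As a quick numerical illustration, the sketch below evaluates the GLLP bound in its commonly quoted form $R \ge (1-\Delta) - H_2(\delta) - (1-\Delta)H_2(\delta/(1-\Delta))$, with $\delta$ the error rate and $\Delta$ the tagged fraction. The function names are illustrative, and clipping negative values to zero is a presentational choice (a negative bound simply means no key can be distilled).

```python
import math


def h2(p: float) -> float:
    """Binary Shannon entropy in bits, with h2(0) = h2(1) = 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def gllp_rate(delta: float, tagged: float) -> float:
    """GLLP lower bound on the secret key rate per sifted bit.

    delta  -- overall (sifted) error rate
    tagged -- fraction of tagged (potentially compromised) pulses, in [0, 1)
    """
    if not 0.0 <= tagged < 1.0:
        raise ValueError("tagged fraction must lie in [0, 1)")
    untagged = 1.0 - tagged
    rate = untagged - h2(delta) - untagged * h2(delta / untagged)
    return max(rate, 0.0)  # a negative bound means no key can be extracted
```

For example, at an error rate of 2%, raising the tagged fraction from 0 to 0.2 lowers the bound from about 0.72 to about 0.52 bits per sifted bit, which is why a tighter estimate of $\Delta$ translates directly into a higher key rate.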

Building on GLLP’s framework, the decoy-state protocol [5,6,7,8,9] improved key rates and operational ranges by enhancing single-photon yield estimation through random pulse-intensity variations, and it was first successfully demonstrated in [10]. However, while it improves performance, the decoy-state approach relies on conservative assumptions for multi-photon pulses, treating them as fully compromised, which forces conservative intensity choices, limits maximum transmission distances, and imposes high detector efficiency requirements.

While decoy-state protocols extend BB84, other approaches, like SARG04 and Coherent One-Way (COW), also address PNS attacks. SARG04 [11] uses the original four BB84 states but alters the sifting procedure, improving resilience to multi-photon attacks at the expense of reduced key rates. The COW protocol [12] transmits weak coherent pulses in time-bin sequences and checks their coherence to detect eavesdropping. Although these methods enhance certain security aspects, they generally yield lower key rates and remain more susceptible to side-channel attacks compared to decoy-state protocols.

Despite its improved accuracy in estimating single-photon contributions, the decoy-state protocol still treats multi-photon pulses as insecure. By relying on additional intensity settings, it refines the statistical bounds on single-photon yields, but the need to suppress multi-photon probabilities enforces conservative intensity choices. This limits the maximum transmission distance and demands high detector efficiencies, posing significant experimental challenges for near-term implementations.

Advances such as those presented in [13] have extended the security analysis of the decoy-state framework to more general attack models, broadening the scope of adversarial strategies beyond those considered in the conventional decoy-state approach.

Although previous works have accounted for various device imperfections, the correlations induced by after-pulsing are often overlooked. After-pulsing, where a detector registers spurious clicks following a genuine detection [14], induces correlations between successive detection windows that violate the independent and identically distributed (i.i.d.) assumption. A common mitigation strategy is to increase the detector’s dead time, reducing after-pulse probabilities to negligible levels at the expense of a lower achievable key rate. Even then, neglecting residual after-pulsing can still distort error estimates and weaken QKD security [15].

Twin-Field QKD (TF-QKD) [16] exploits quantum interference at a central node to surpass conventional rate-loss limits. Alice and Bob both send weak coherent pulses to a measurement station that need not be trusted, so TF-QKD reduces reliance on trusted relays, and its key rate scales with the square root of the channel transmittance rather than linearly, enabling secure key distribution over longer distances.

This work reframes QKD security from worst-case assumptions to a probabilistic inference model. Treating eavesdropper detection as an inference task, we use Bayesian methods to estimate key parameters, such as the fraction of tagged photons $\Delta$, from observed data. This tighter parameter estimation refines the GLLP formula, enabling higher pulse intensities and improving both key rate and operational range.

Instead of limiting source intensity to reduce Eve’s potential interference, we recast her presence as an inference problem. By modeling the QKD protocol as a probability distribution over system parameters and detection outcomes and applying Bayesian inference [17], we directly estimate Eve’s interception rate from observed data. Notably, our analysis focuses on the generalized PNS attack model, which captures a broader spectrum of adversarial strategies than the conventional PNS attack, rather than the more comprehensive attack model discussed in [13]. This approach eliminates overly conservative assumptions, extends PNS-attack analysis to arbitrary interception scenarios and channel conditions, and enables secure operation at higher pulse intensities when the inferred $\Delta$ is negligible.

To account for temporal correlations introduced by detector effects like after-pulsing and device variabilities, we incorporate a Hidden Markov Model (HMM) [18]. This refinement yields more accurate error estimates and facilitates reliable key extraction.

Building on the GLLP framework without imposing intensity constraints, our approach supports greater operational distances, reduces reliance on high-efficiency detectors, and enhances key rates.

The remainder of this paper is organized as follows: Section 2 presents the probabilistic model; Section 3 outlines the Bayesian inference and HMM formulations; Section 4 provides simulation results and comparisons to the decoy-state protocol; Section 5 addresses time complexity; and Section 6 concludes with final remarks and potential future directions.

2. Probabilistic Modeling

To better analyze and secure Quantum Key Distribution (QKD) systems, a deeper understanding of the underlying processes is essential. Realistic devices are subject to various sources of noise and inherent randomness, making it necessary to adopt a probabilistic modeling approach. In this section, we construct a comprehensive probabilistic framework that captures these complexities, accounting for factors such as detector efficiency, dark counts, beam-splitter misalignment, laser intensity, and fiber attenuation. By modeling these random processes, we derive the probabilities of different click events, laying the foundation for a more rigorous security analysis of QKD implementations.

We begin by modeling each component of the QKD setup separately to understand how the distribution of photons evolves as they travel from Alice to Bob. This modular approach enables us to systematically build the complete probability distribution, ensuring that we incorporate all relevant factors that influence the detection process.

Next, we extend our analysis to incorporate eavesdropping scenarios, specifically generalizing the Photon-Number-Splitting (PNS) attack. By introducing Eve’s parameters into our model, we calculate how the probabilities of click events change under various strategies she might employ. This generalization enables us to quantify the security of the QKD system under more realistic and flexible attack models.

Following this, we address the temporal dependencies introduced by after-pulsing. We develop a Hidden Markov Model (HMM) to capture these dependencies and refine our probability estimates accordingly. The HMM framework allows us to model correlations between detection events, which are crucial for accurately assessing the impact of after-pulsing on error rates and key generation.

Finally, we derive the error and gain probabilities from both the independent and identically distributed (i.i.d.) model and the HMM-based model. These probabilities are essential for key rate calculations and form the basis for evaluating the security and performance of the QKD protocol under realistic conditions. By the end of this section, we will have constructed a detailed and adaptable probabilistic model that serves as the foundation for our Bayesian inference and subsequent analysis.

2.1. QKD Components

Accurately modeling the QKD system requires a detailed understanding of the components involved, including detectors, beam splitters, and the interaction with quantum channels. In this section, we construct a probabilistic framework to model the behavior of a QKD system, incorporating various noise sources and potential attack vectors.

2.1.1. Detectors

The detection process in QKD systems can be characterized by the following key probabilities:

- $p_a$ (After-pulse): probability of a click given a click in the previous detection window.

- $\eta$ (Efficiency): probability of a click given a single photon.

- $p_d$ (Dark count): probability of a click in the absence of a photon.

- $p_m$ (Misalignment): probability of a detection error due to misalignment.

Throughout the paper, we will also use the complement notation $\bar{x} = 1 - x$ for several parameters, for example, $\bar{\eta} = 1 - \eta$, $\bar{p}_d = 1 - p_d$, and so on.

For detectors $D_0$ and $D_1$, we denote their respective click probabilities (efficiencies) as $\eta_0$ and $\eta_1$, and similarly $p_{d,0}, p_{d,1}$ for the dark count and $p_{a,0}, p_{a,1}$ for the after-pulse probabilities. When $n$ photons reach a detector and each has an independent detection probability $\eta$, the detected signal photon count $s$ is binomially distributed: $s \sim \mathrm{Bin}(n, \eta)$. Similarly, the occurrence of a dark count follows a Bernoulli distribution with probability $p_d$: $c \sim \mathrm{Bernoulli}(p_d)$.

These distributions assume independence and fixed probabilities. To relax the fixed-probability assumption, we will use a Bayesian inference framework, modeling parameters as random variables with priors and updating posteriors based on observed data to incorporate uncertainties. Additionally, a Hidden Markov Model (HMM) will address dependencies between successive detection events, relaxing the independence assumption.

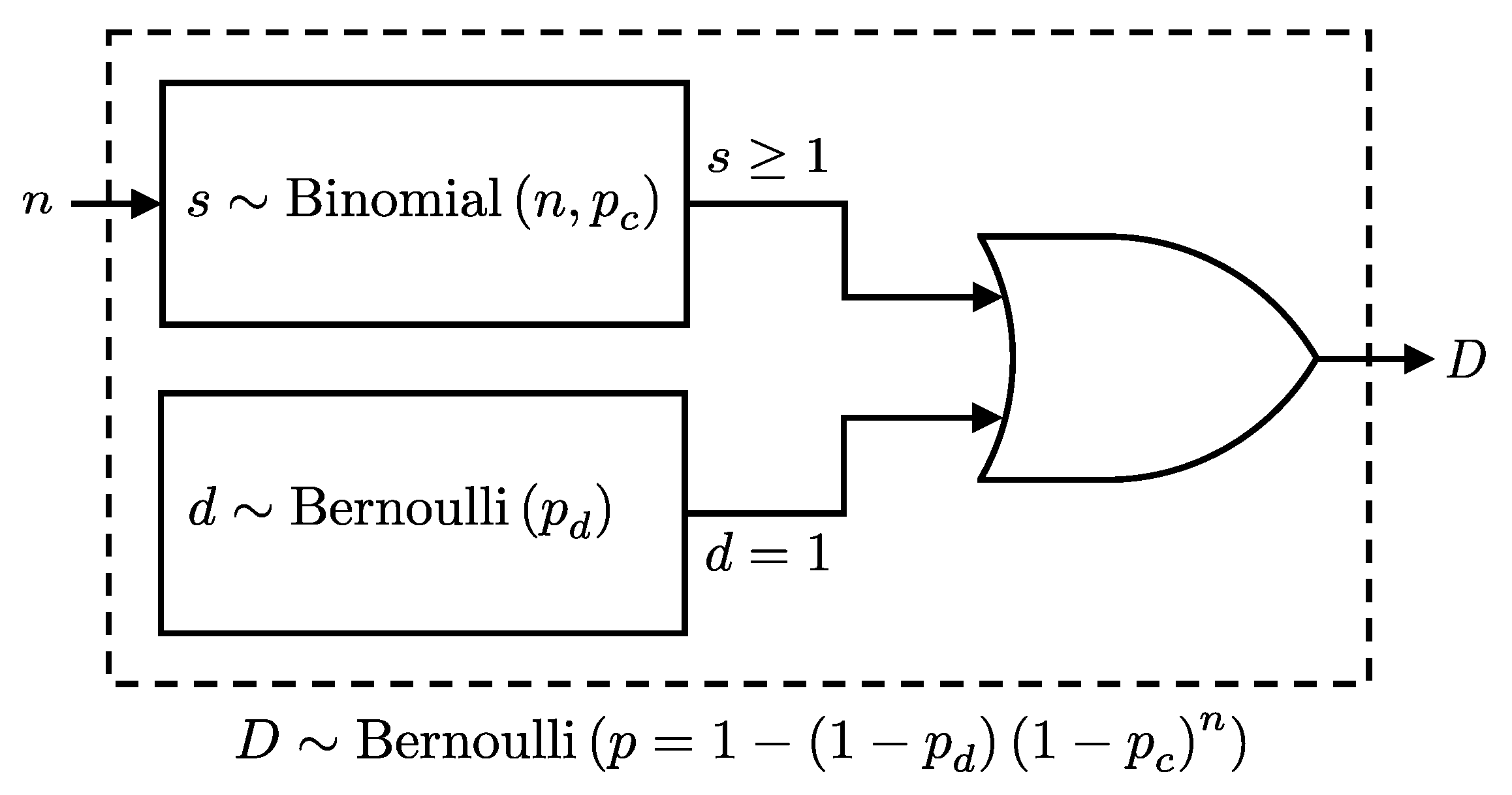

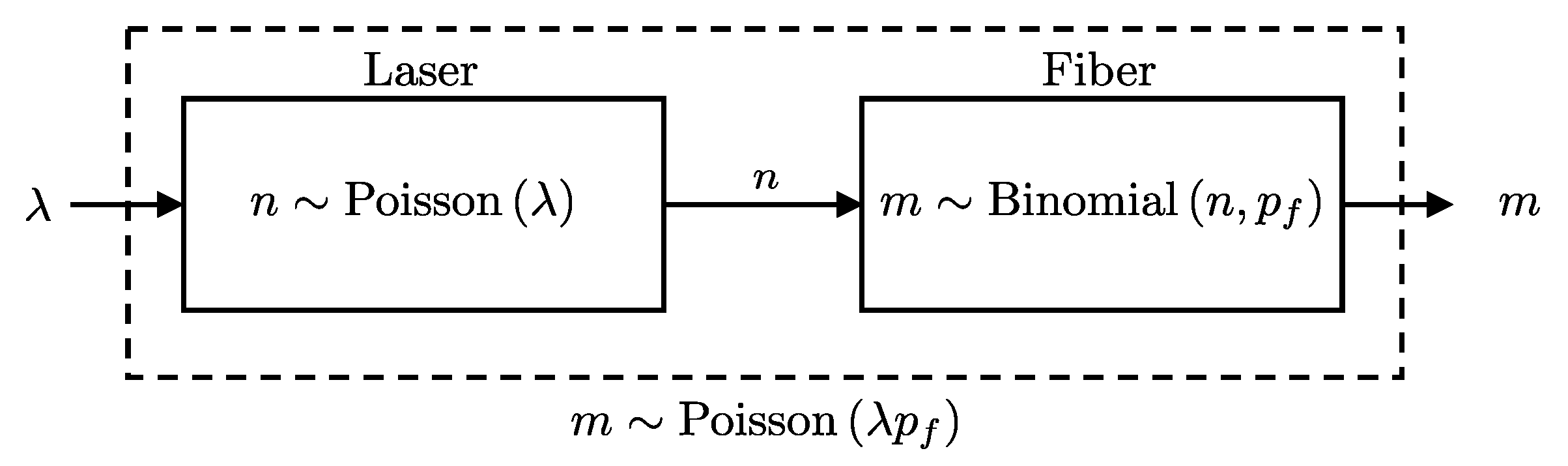

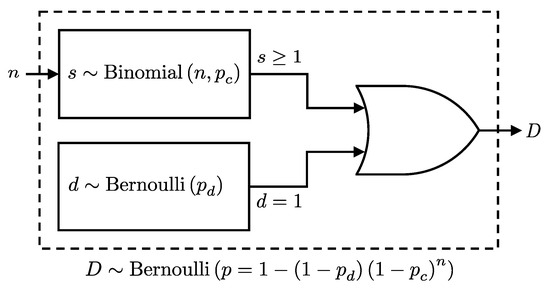

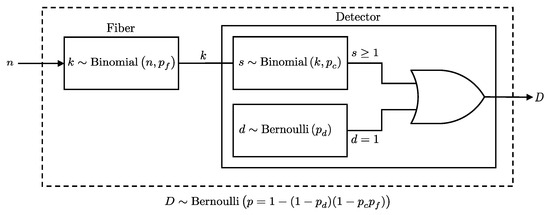

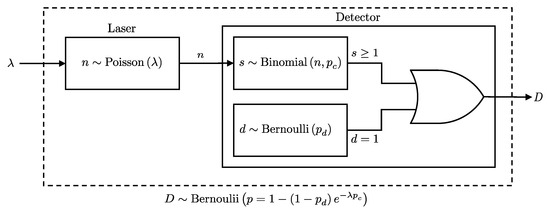

The probability of a click event at the detector, D, can be considered as the combined probability of detecting at least one photon or registering a dark count; see Figure 1. Given these considerations, we present the following lemma, which formalizes the combined click probability at a detector:

Figure 1.

Schematic representation of the detection process in a single-photon detector considering n incoming photons with a detector efficiency of $\eta$ and dark count probability $p_d$. The detection event, D, will follow a Bernoulli distribution as per Lemma 1.

Lemma 1.

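Given n photons incident on a detector, each detected independently with probability $\eta$, and a dark count probability $p_d$, the detection event D, detecting at least one photon or registering a dark count, follows a Bernoulli distribution:

$$D \sim \mathrm{Bernoulli}\big(p_{\mathrm{click}}(n; \eta, p_d)\big), \qquad p_{\mathrm{click}}(n; \eta, p_d) = 1 - \bar{\eta}^{\,n}\,\bar{p}_d,$$

where $\bar{\eta} = 1 - \eta$ and $\bar{p}_d = 1 - p_d$.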

Proof.

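The detection event D occurs if at least one signal photon is detected or a dark count occurs. Since the n photon detections and the dark count are independent events, the probability that neither occurs is:

$$P(D = 0) = (1-\eta)^n\,(1-p_d) = \bar{\eta}^{\,n}\,\bar{p}_d.$$

Therefore, the probability of a detection event is:

$$P(D = 1) = 1 - \bar{\eta}^{\,n}\,\bar{p}_d.$$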

□
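Lemma 1 can be sanity-checked by simulation. The sketch below (plain Python; the function names and parameter values are illustrative choices, not taken from the paper) samples the binomial signal count and the Bernoulli dark count described above and compares the empirical click frequency with the closed form $1 - (1-\eta)^n(1-p_d)$.

```python
import random


def click_prob(n: int, eta: float, p_dark: float) -> float:
    """Lemma 1: P(click) = 1 - (1 - eta)**n * (1 - p_dark)."""
    return 1.0 - (1.0 - eta) ** n * (1.0 - p_dark)


def simulate_click_prob(n: int, eta: float, p_dark: float,
                        trials: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo estimate: each photon is detected w.p. eta, plus an independent dark count."""
    rng = random.Random(seed)
    clicks = 0
    for _ in range(trials):
        detected = sum(rng.random() < eta for _ in range(n))  # binomial signal count s
        dark = rng.random() < p_dark                           # Bernoulli dark count
        clicks += (detected > 0) or dark                       # click if either occurs
    return clicks / trials
```

For n = 3, η = 0.1 and p_d = 10⁻³ the closed form gives about 0.2717, and with 10⁵ trials the simulated frequency should agree to within a few thousandths.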

2.1.2. Fiber-Detector

Optical fibers affect the probability of photons arriving at the detector by attenuating their intensity. The attenuation rate $\alpha$ (dB/km) determines the probability $t$ of a photon successfully traversing a distance $d$, given by:

$$t = 10^{-\alpha d / 10}.$$

Each photon independently emerges with probability $t$, making the number of photons $k$ exiting the fiber, given $n$ entered, follow a binomial distribution: $k \sim \mathrm{Bin}(n, t)$.
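The transmission formula and the binomial survival model can be written in a few lines. In this sketch the function names are illustrative, and the attenuation of 0.2 dB/km used in the example is an assumed, typical telecom-fiber value rather than a parameter taken from this paper.

```python
import random


def fiber_transmission(alpha_db_per_km: float, distance_km: float) -> float:
    """Probability that a single photon survives the fiber: 10**(-alpha * d / 10)."""
    return 10.0 ** (-alpha_db_per_km * distance_km / 10.0)


def photons_out(n: int, t: float, rng: random.Random) -> int:
    """Sample k ~ Binomial(n, t): each photon independently survives with probability t."""
    return sum(rng.random() < t for _ in range(n))
```

At 0.2 dB/km, a 50 km span gives t = 0.1, so on average only one photon in ten reaches the far end; `photons_out(10, 0.1, rng)` therefore averages about one photon.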

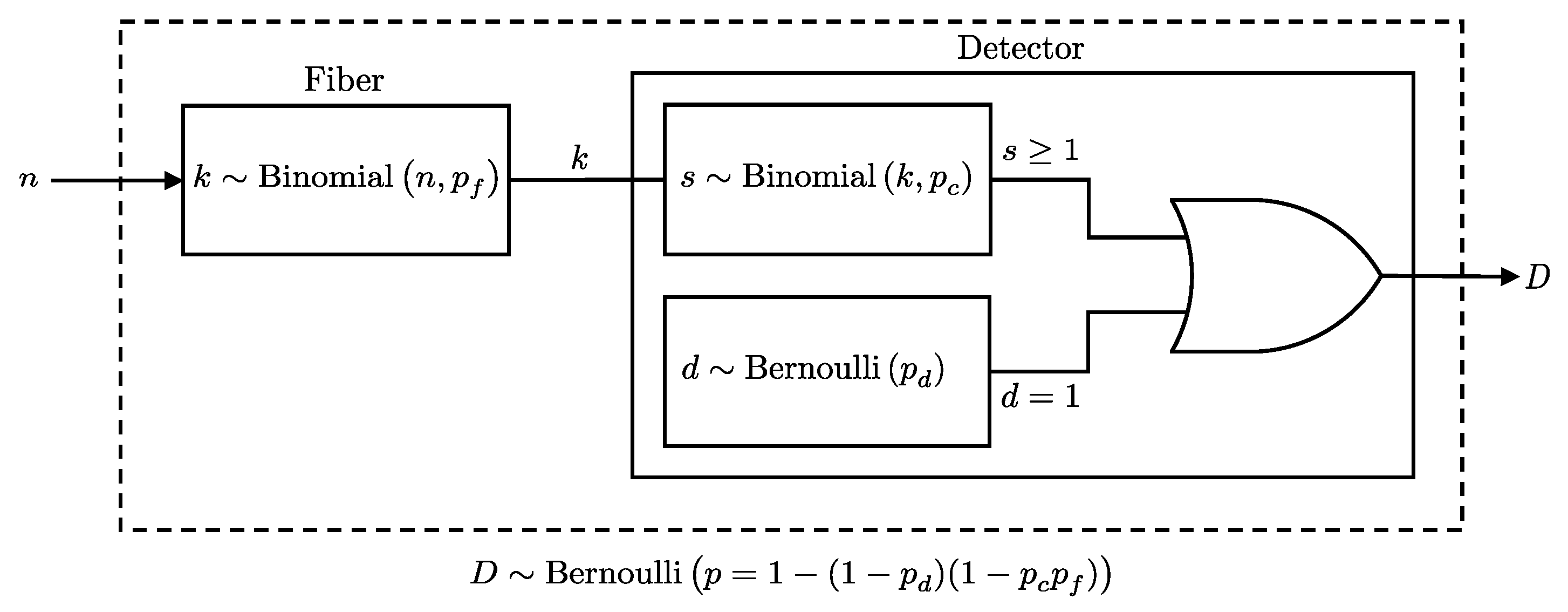

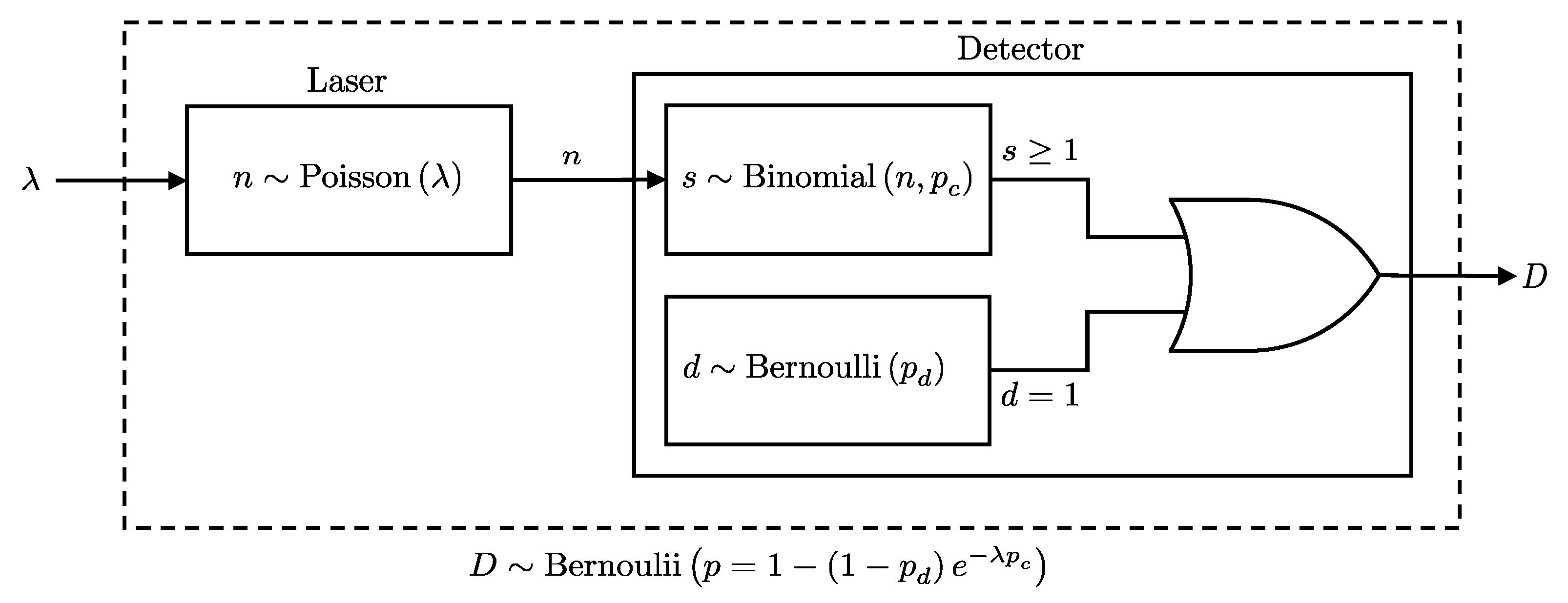

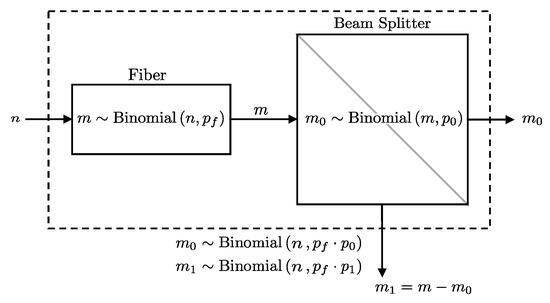

Given this model, the probability of a detection event at a detector with efficiency $\eta$ and dark count probability $p_d$, after $n$ photons pass through a fiber with transmission probability $t$, is derived using the binomial distribution of photons emerging from the fiber and their detection probabilities. This is formally presented in Lemma 2 (see also Figure 2).

Figure 2.

Illustration of the detection process considering both photon transmission through a fiber and the detector’s response. The detection event D will follow a Bernoulli distribution as per Lemma 2.

Lemma 2.

When n photons pass through a fiber before reaching a detector, the probability of a detection event at the detector, given the fiber transmission probability $t$, detector efficiency $\eta$, and dark count probability $p_d$, is equivalent to the probability of a detection event for n photons directly incident on a detector, with its efficiency scaled by the fiber transmission probability. Specifically, this means:

$$P(D = 1 \mid n;\, t, \eta, p_d) = p_{\mathrm{click}}(n;\, t\eta,\, p_d) = 1 - (1 - t\eta)^n\,(1 - p_d),$$

where the right-hand side is the detection probability derived in Lemma 1.

Proof.

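Since each photon has a probability $t$ of passing through the fiber and reaching the detector, the number of photons $k$ that reach the detector is a binomial random variable:

$$k \sim \mathrm{Bin}(n, t).$$

The probability of a click event at the detector is computed by marginalizing over all possible values of $k$, summing the probabilities of a click given $k$, weighted by the probability that exactly $k$ photons reach the detector:

$$P(D = 1 \mid n) = \sum_{k=0}^{n} P(D = 1 \mid k)\, P(k \mid n),$$

where (with $\bar{x} = 1 - x$):

- $P(D = 1 \mid k) = 1 - \bar{\eta}^{\,k}\,\bar{p}_d$ is the probability of a click given that $k$ photons reach the detector, as previously derived in Lemma 1.

- $P(k \mid n) = \binom{n}{k} t^k\, \bar{t}^{\,n-k}$ is the probability that exactly $k$ photons pass through the fiber, modeled as $\mathrm{Bin}(n, t)$.

Substituting these into the sum:

$$P(D = 1 \mid n) = \sum_{k=0}^{n} \left(1 - \bar{\eta}^{\,k}\,\bar{p}_d\right) \binom{n}{k} t^k\, \bar{t}^{\,n-k}.$$

Expanding and simplifying, using $\sum_{k=0}^{n} \binom{n}{k} t^k\, \bar{t}^{\,n-k} = 1$:

$$P(D = 1 \mid n) = 1 - \bar{p}_d \sum_{k=0}^{n} \binom{n}{k} (t\bar{\eta})^k\, \bar{t}^{\,n-k}.$$

Applying the binomial theorem [19]:

$$(x + y)^n = \sum_{k=0}^{n} \binom{n}{k} x^k y^{n-k},$$

where $x = t\bar{\eta}$ and $y = \bar{t}$, we get:

$$P(D = 1 \mid n) = 1 - \bar{p}_d\,(t\bar{\eta} + \bar{t})^n = 1 - \bar{p}_d\,(1 - t\eta)^n.$$

This matches the form in Lemma 1, where the efficiency is effectively $t\eta$. □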
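Because Lemma 2 is an exact identity, it can be checked by brute force. The sketch below performs the marginalization over k term by term with `math.comb` and compares it with Lemma 1 evaluated at the scaled efficiency tη; the function names and the parameter values in the check are arbitrary illustrative choices.

```python
from math import comb


def click_prob(n: int, eta: float, p_dark: float) -> float:
    """Lemma 1 closed form."""
    return 1.0 - (1.0 - eta) ** n * (1.0 - p_dark)


def click_prob_through_fiber(n: int, t: float, eta: float, p_dark: float) -> float:
    """Marginalize Lemma 1 over k ~ Binomial(n, t) photons that survive the fiber."""
    return sum(
        comb(n, k) * t**k * (1 - t) ** (n - k) * click_prob(k, eta, p_dark)
        for k in range(n + 1)
    )
```

For every n, `click_prob_through_fiber(n, t, eta, p_dark)` should match `click_prob(n, t * eta, p_dark)` up to floating-point rounding.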

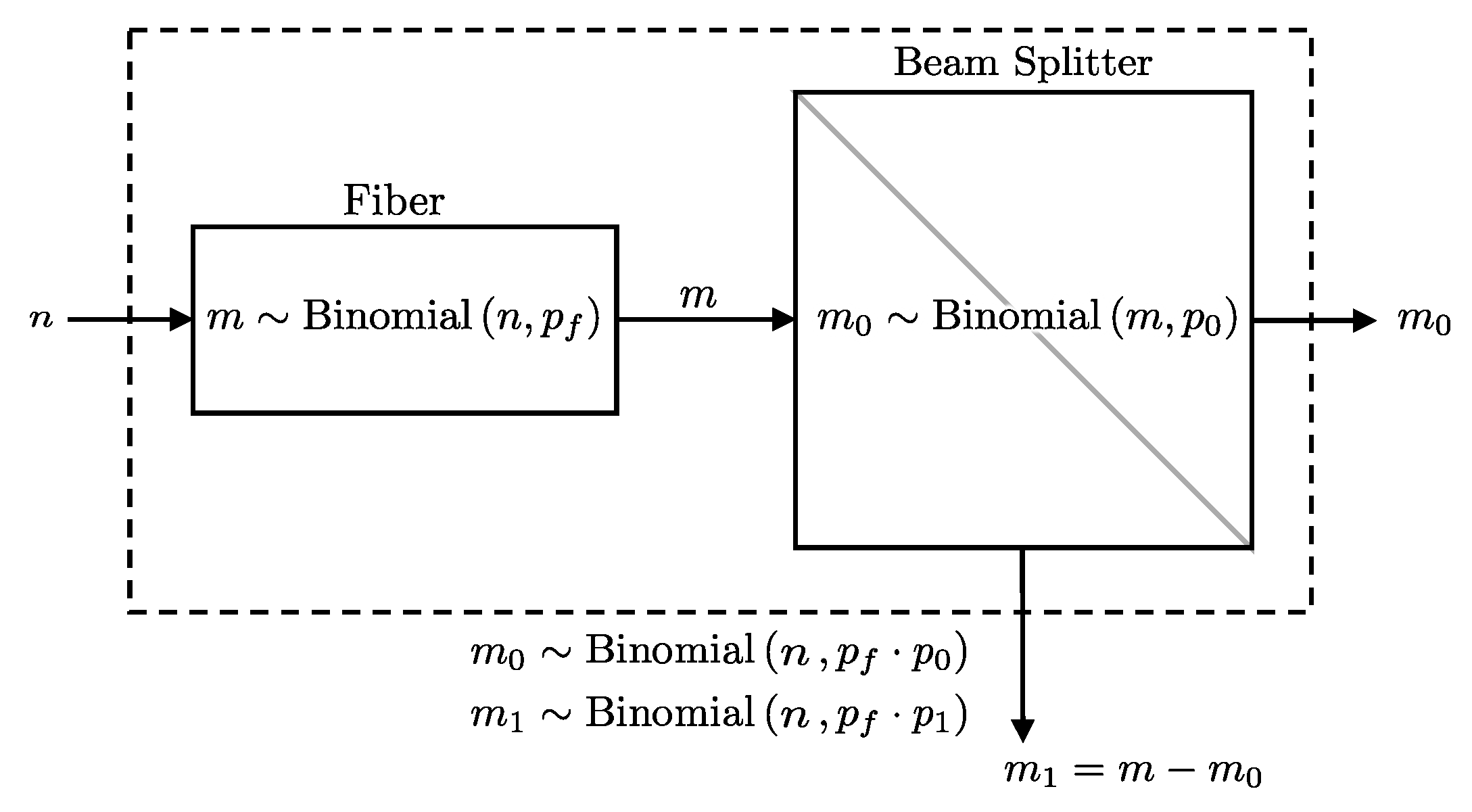

2.1.3. Fiber-Beam Splitter

If, after passing through the fiber, the photon stream encounters a beam splitter, each photon is independently directed to one of two paths, labeled 0 and 1, with probabilities $b_0$ and $b_1 = 1 - b_0$, respectively. Then, given $m$ photons leaving the fiber, the number of photons taking the 0 path follows $k_0 \sim \mathrm{Bin}(m, b_0)$, while those taking the 1 path follow $k_1 = m - k_0 \sim \mathrm{Bin}(m, b_1)$.

The objective is to derive the distribution of the number of photons $k_i$ directed to detector $D_i$, given n photons initially entering the fiber, combining the effects of fiber attenuation and the beam splitter. This is formally presented in Lemma 3 (see also Figure 3).

Figure 3.

A diagram representing the probabilistic model for a fiber followed by a beam splitter. The number of photons directed towards detector $D_i$ will follow a binomial distribution as per Lemma 3.

Lemma 3.

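If n photons go through a fiber with a transmission probability $t$ and then encounter a beam splitter that directs each photon to path $i$ with probability $b_i$, the number of photons $k_i$ directed towards detector $D_i$ follows a binomial distribution with a combined success probability of $t b_i$. Formally, this can be expressed as:

$$k_i \mid n \sim \mathrm{Bin}(n,\, t b_i).$$

Proof.

$k_i$ results from two successive binomial processes: first, the probability of $m$ successes in $n$ trials with probability $t$, and then the probability of $k_i$ successes in $m$ trials with probability $b_i$. To find the distribution of $k_i$, we marginalize over the latent variable $m$:

$$P(k_i \mid n) = \sum_{m=k_i}^{n} P(m \mid n)\, P(k_i \mid m).$$

The index starts at $m = k_i$, as $m < k_i$ is not possible. Since $P(m \mid n)$ and $P(k_i \mid m)$ are both binomial distributions, we substitute them as follows (with $\bar{x} = 1 - x$):

$$P(k_i \mid n) = \sum_{m=k_i}^{n} \binom{n}{m} t^m\, \bar{t}^{\,n-m} \binom{m}{k_i} b_i^{k_i}\, \bar{b}_i^{\,m-k_i}.$$

Using $\binom{n}{m}\binom{m}{k_i} = \binom{n}{k_i}\binom{n-k_i}{m-k_i}$, letting $j = m - k_i$ and $N = n - k_i$, and re-indexing:

$$P(k_i \mid n) = \binom{n}{k_i} (t b_i)^{k_i} \sum_{j=0}^{N} \binom{N}{j} (t\bar{b}_i)^j\, \bar{t}^{\,N-j}.$$

Recognizing the binomial theorem [19]:

$$\sum_{j=0}^{N} \binom{N}{j} (t\bar{b}_i)^j\, \bar{t}^{\,N-j} = (t\bar{b}_i + \bar{t})^N = (1 - t b_i)^N.$$

Simplifying and substituting back:

$$P(k_i \mid n) = \binom{n}{k_i} (t b_i)^{k_i} (1 - t b_i)^{n-k_i}.$$

This has the form of the binomial distribution with $n$ trials and success probability $t b_i$. □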
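The same brute-force check applies to Lemma 3: summing over the latent count m of photons leaving the fiber should reproduce a single binomial with success probability t·b_i. The helper names below are illustrative.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """Binomial probability mass function."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def path_count_pmf(k_i: int, n: int, t: float, b_i: float) -> float:
    """P(k_i | n) by marginalizing over m photons leaving the fiber (only m >= k_i contributes)."""
    return sum(binom_pmf(m, n, t) * binom_pmf(k_i, m, b_i) for m in range(k_i, n + 1))
```

For any n, `path_count_pmf(k, n, t, b)` should equal `binom_pmf(k, n, t * b)` for every k from 0 to n.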

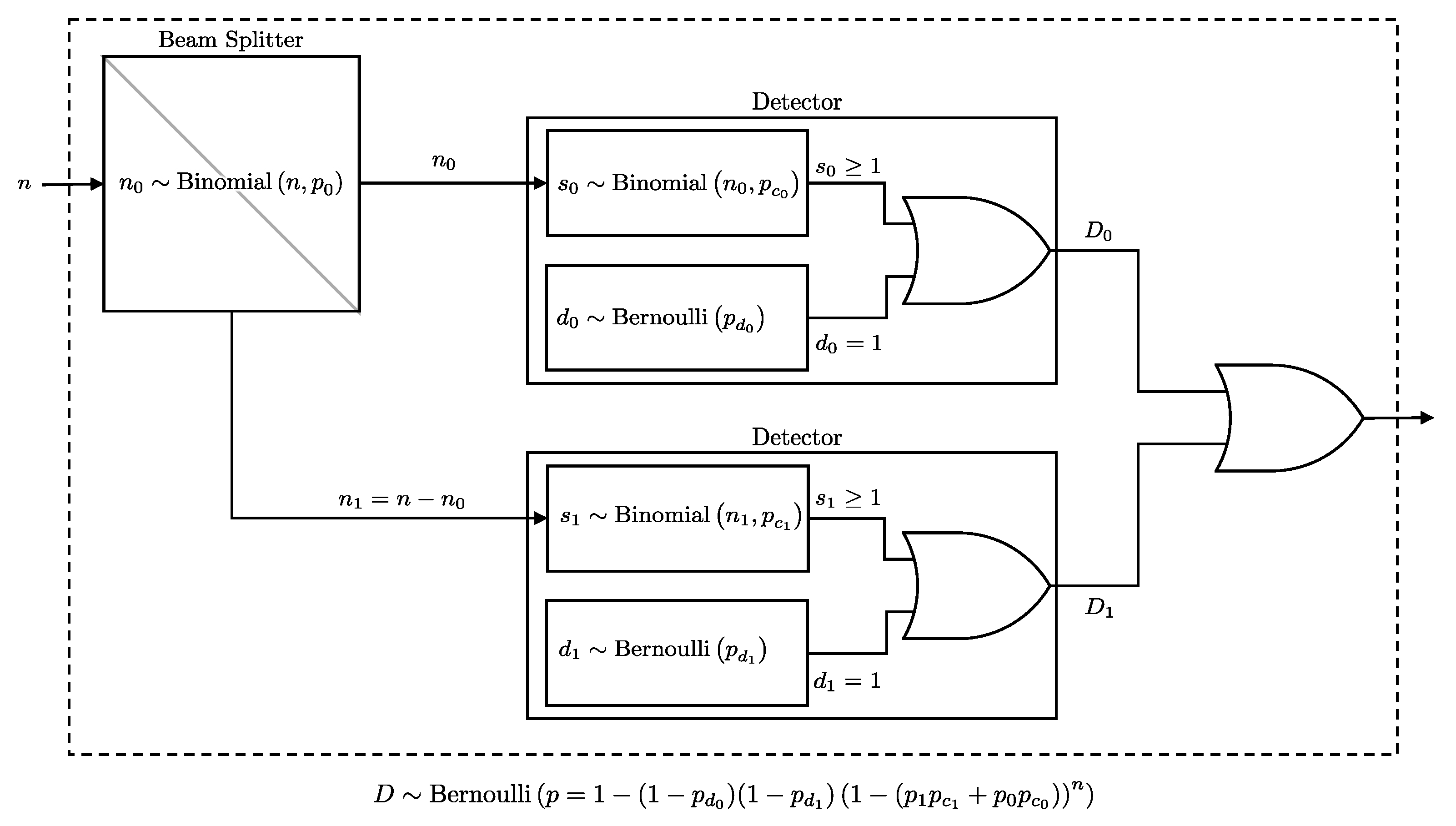

2.1.4. Pair of Detectors

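In practical BB84 implementations, two detectors are used to distinguish between the detection of a true 0 bit and the absence of photon arrival. Therefore, to fully characterize the detection system, we compute the four joint probabilities of detector outcomes, written $P_{c_0 c_1}$, where $c_i = 1$ if detector $D_i$ clicks and $c_i = 0$ otherwise:

- $P_{00}$: The probability of neither detector clicking.

- $P_{10}$: The probability of only $D_0$ clicking.

- $P_{01}$: The probability of only $D_1$ clicking.

- $P_{11}$: The probability of both detectors clicking.

If we have access to the marginal probabilities for each detector:

$$P(D_0 = 1), \qquad P(D_1 = 1),$$

as well as the union probability:

$$P(D_0 \cup D_1) = P(D_0 = 1 \ \text{or} \ D_1 = 1),$$

we can use these to infer the joint probabilities as follows:

$$\begin{aligned}
P_{00} &= 1 - P(D_0 \cup D_1), \\
P_{10} &= P(D_0 \cup D_1) - P(D_1 = 1), \\
P_{01} &= P(D_0 \cup D_1) - P(D_0 = 1), \\
P_{11} &= P(D_0 = 1) + P(D_1 = 1) - P(D_0 \cup D_1).
\end{aligned}$$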

The marginals are straightforward results of previously stated lemmas. From Lemma 3, we showed that the effect of a fiber with transmission $t$ followed by a beam-splitter path probability $b_i$ is essentially equivalent to a single fiber with transmission $t b_i$. Additionally, in Lemma 2, we showed that the effect of a fiber with transmission $t$ connected to a detector with efficiency $\eta$ is equivalent to a single detector with an efficiency of $t\eta$. Combining these results, a fiber connected to a beam splitter that is connected to detector $D_i$ is equivalent to a single detector with an efficiency of $t b_i \eta_i$, or more formally:

$$P(D_i = 1 \mid n) = 1 - (1 - t b_i \eta_i)^n\,(1 - p_{d,i}).$$

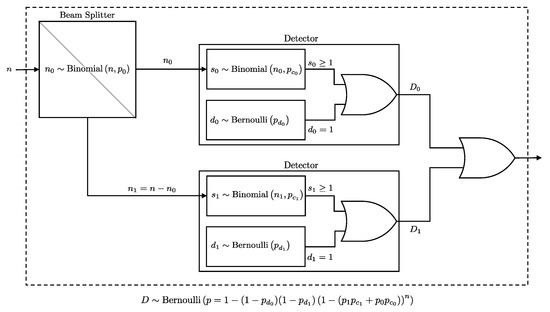
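The inclusion-exclusion step of Section 2.1.4 amounts to four subtractions. The sketch below (the function name and the tuple-keyed output are illustrative choices) returns the joint outcome probabilities of $D_0$ and $D_1$ from the two marginals and the union probability, with keys $(c_0, c_1)$ indicating whether each detector clicked.

```python
def joint_click_probs(p0: float, p1: float, p_union: float) -> dict:
    """Recover the four joint outcomes of detectors D0 and D1.

    p0, p1  -- marginal click probabilities of D0 and D1
    p_union -- probability that at least one detector clicks
    Keys are (D0 clicked, D1 clicked).
    """
    return {
        (0, 0): 1.0 - p_union,      # neither clicks
        (1, 0): p_union - p1,       # only D0 clicks
        (0, 1): p_union - p0,       # only D1 clicks
        (1, 1): p0 + p1 - p_union,  # both click (inclusion-exclusion)
    }
```

Feeding it the composite-efficiency marginals and the Lemma 4 union probability for a given photon number yields the four outcome probabilities, which always sum to one.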

In the following Lemma, we derive the union probability in order to derive the joint probabilities. A schematic representation of the two-detector system is presented in Figure 4.

Figure 4.

A diagram illustrating the detection setup for two detectors after a beam splitter. The detection event $D_0 \cup D_1$ (at least one detector clicks) follows a Bernoulli distribution as per Lemma 4.

Lemma 4.

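If n photons encounter a beam splitter that independently directs each photon to one of two paths, $i \in \{0, 1\}$, with probabilities $b_0$ and $b_1 = 1 - b_0$, and these paths lead to two detectors, $D_0$ or $D_1$, with click probabilities $\eta_0$ and $\eta_1$, and dark count probabilities $p_{d,0}$ and $p_{d,1}$, respectively, then the probability of at least one of the detectors clicking is equivalent to the click probability of a single pseudo detector with n photons directly incident on it, where the click probability is $\eta_\cup = b_0\eta_0 + b_1\eta_1$ and the dark count probability is $p_{d,\cup} = 1 - \bar{p}_{d,0}\,\bar{p}_{d,1}$. Formally, this probability is given by:

$$P(D_0 \cup D_1 \mid n) = p_{\mathrm{click}}(n;\, \eta_\cup,\, p_{d,\cup}) = 1 - (1 - \eta_\cup)^n\,\bar{p}_{d,\cup},$$

where

$$\eta_\cup = b_0\eta_0 + b_1\eta_1, \qquad \bar{p}_{d,\cup} = \bar{p}_{d,0}\,\bar{p}_{d,1},$$

and $p_{\mathrm{click}}$ is as defined in Lemma 1.

Proof.

To compute the probability that at least one detector, either $D_0$ or $D_1$, registers a click, we sum over all possible numbers of photons $k$ that could be passed to $D_0$ and marginalize over this variable:

$$P(D_0 \cup D_1 \mid n) = \sum_{k=0}^{n} \binom{n}{k} b_0^k\, b_1^{\,n-k}\, P(D_0 \cup D_1 \mid k, n-k).$$

Since after the beam-split, the click events at $D_0$ and $D_1$ become independent, the probability that neither detector clicks is the product of their independent non-click probabilities. Therefore, the probability that at least one detector clicks (the union probability) is:

$$P(D_0 \cup D_1 \mid k, n-k) = 1 - P(D_0 = 0 \mid k)\, P(D_1 = 0 \mid n-k).$$

We substitute the non-click probabilities:

$$P(D_0 = 0 \mid k) = \bar{\eta}_0^{\,k}\,\bar{p}_{d,0}, \qquad P(D_1 = 0 \mid n-k) = \bar{\eta}_1^{\,n-k}\,\bar{p}_{d,1}.$$

Thus, the probability of a click in either detector is:

$$P(D_0 \cup D_1 \mid n) = \sum_{k=0}^{n} \binom{n}{k} b_0^k\, b_1^{\,n-k} \left(1 - \bar{\eta}_0^{\,k}\,\bar{p}_{d,0}\,\bar{\eta}_1^{\,n-k}\,\bar{p}_{d,1}\right).$$

This can be rewritten by expanding and simplifying as:

$$P(D_0 \cup D_1 \mid n) = 1 - \bar{p}_{d,0}\,\bar{p}_{d,1} \sum_{k=0}^{n} \binom{n}{k} (b_0\bar{\eta}_0)^k\, (b_1\bar{\eta}_1)^{n-k}.$$

Recognizing the binomial theorem [19]:

$$\sum_{k=0}^{n} \binom{n}{k} (b_0\bar{\eta}_0)^k\, (b_1\bar{\eta}_1)^{n-k} = (b_0\bar{\eta}_0 + b_1\bar{\eta}_1)^n.$$

Further simplifying, since $b_0 + b_1 = 1$:

$$P(D_0 \cup D_1 \mid n) = 1 - \bar{p}_{d,0}\,\bar{p}_{d,1}\,(1 - b_0\eta_0 - b_1\eta_1)^n.$$

This has the same form as the probability in Lemma 1, but with the dark count probability defined as $p_{d,\cup} = 1 - \bar{p}_{d,0}\,\bar{p}_{d,1}$ and the click probability defined as $\eta_\cup = b_0\eta_0 + b_1\eta_1$. □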
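Lemma 4 can likewise be verified numerically. The sketch compares the explicit sum over the photon split k ~ Bin(n, b_0), with independent non-click probabilities at each detector, against a single pseudo detector with efficiency b_0η_0 + b_1η_1 and dark count probability 1 − (1 − p_{d,0})(1 − p_{d,1}). Function names and test values are illustrative.

```python
from math import comb


def click_prob(n: int, eta: float, p_dark: float) -> float:
    """Lemma 1 closed form."""
    return 1.0 - (1.0 - eta) ** n * (1.0 - p_dark)


def union_prob_direct(n, b0, eta0, eta1, pd0, pd1):
    """P(at least one click), marginalizing over k ~ Binomial(n, b0) photons sent to D0."""
    b1 = 1.0 - b0
    total = 0.0
    for k in range(n + 1):
        weight = comb(n, k) * b0**k * b1 ** (n - k)
        # Given the split, the two detectors fail to click independently.
        no_click = (1 - eta0) ** k * (1 - pd0) * (1 - eta1) ** (n - k) * (1 - pd1)
        total += weight * (1.0 - no_click)
    return total


def union_prob_pseudo(n, b0, eta0, eta1, pd0, pd1):
    """Lemma 4: one pseudo detector with averaged efficiency and combined dark counts."""
    eta_u = b0 * eta0 + (1.0 - b0) * eta1
    pd_u = 1.0 - (1.0 - pd0) * (1.0 - pd1)
    return click_prob(n, eta_u, pd_u)
```

The two functions should agree for every photon number n, confirming that the pair of detectors behaves as a single detector with respect to the union event.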

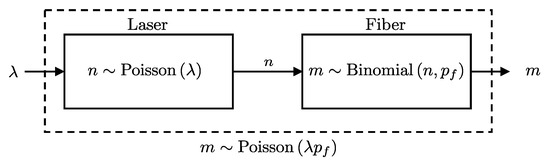

2.1.5. Laser-Fiber

In QKD systems, when using a weak coherent laser source with randomized phases, the number of photons emitted by the source follows a Poisson distribution [8]:

$$P(n \mid \lambda) = \frac{e^{-\lambda}\lambda^{n}}{n!},$$

where $\lambda$ is the average photon number per pulse, determined by the intensity of the laser source. Since each of the n photons emitted from the laser has an independent probability $t$ of passing through the optical fiber, the number of photons m that successfully exit the fiber follows a binomial distribution with parameters n and $t$.

To determine the distribution of m given $\lambda$ and $t$, we need to account for the combined effect of the Poisson-distributed photon source and the binomial transmission process through the fiber. This relationship is formally described in Lemma 5 and illustrated in Figure 5; in short, the fiber reduces the average number of photons by a factor of $t$.

Figure 5.

Schematic representation of a laser source connected to a fiber. The number of photons m that passes the fiber follows a Poisson distribution as per Lemma 5.

Lemma 5.

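If the number of photons n entering a fiber is Poisson-distributed with parameter λ, and each photon has an independent probability $t$ of being transmitted through the fiber, then the number of photons m exiting the fiber follows a Poisson distribution with parameter $\lambda t$:

$$m \sim \mathrm{Poisson}(\lambda t).$$

Proof.

To derive the distribution of m, we calculate the total probability of m photons exiting by summing over the probabilities of all possible photon counts n that could enter the fiber:

$$P(m) = \sum_{n=m}^{\infty} P(m \mid n)\, P(n),$$

where the indexing is set to start at m, since $P(m \mid n) = 0$ for $n < m$. Expanding the expressions for the binomial and Poisson probabilities (with $\bar{t} = 1 - t$):

$$P(m) = \sum_{n=m}^{\infty} \binom{n}{m} t^m\, \bar{t}^{\,n-m}\, \frac{e^{-\lambda}\lambda^{n}}{n!}.$$

Simplifying this expression:

$$P(m) = \frac{e^{-\lambda}(\lambda t)^m}{m!} \sum_{n=m}^{\infty} \frac{(\lambda\bar{t})^{n-m}}{(n-m)!}.$$

We define $j = n - m$ and re-index the sum:

$$P(m) = \frac{e^{-\lambda}(\lambda t)^m}{m!} \sum_{j=0}^{\infty} \frac{(\lambda\bar{t})^{j}}{j!}.$$

Recognizing the power series expansion for the exponential function [20]:

$$e^{x} = \sum_{j=0}^{\infty} \frac{x^j}{j!},$$

the expression simplifies to:

$$P(m) = \frac{e^{-\lambda}(\lambda t)^m}{m!}\, e^{\lambda\bar{t}} = \frac{e^{-\lambda t}(\lambda t)^m}{m!}.$$

This final form is the Poisson distribution with parameter $\lambda t$. □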
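Poisson thinning (Lemma 5) can be confirmed by truncating the infinite sum over n. In this sketch the function names are illustrative, and the cut-off `n_max = 150` is an assumption that is ample for the small λ used in the check; for very large λ the truncation point, or a log-space evaluation, would need revisiting.

```python
from math import comb, exp, factorial


def poisson_pmf(n: int, lam: float) -> float:
    """Poisson probability mass function."""
    return exp(-lam) * lam**n / factorial(n)


def thinned_pmf(m: int, lam: float, t: float, n_max: int = 150) -> float:
    """P(m photons exit) = sum over n >= m of Binomial(m; n, t) * Poisson(n; lam), truncated."""
    return sum(
        comb(n, m) * t**m * (1 - t) ** (n - m) * poisson_pmf(n, lam)
        for n in range(m, n_max + 1)
    )
```

With λ = 3 and t = 0.25, `thinned_pmf(m, 3.0, 0.25)` should reproduce `poisson_pmf(m, 0.75)` for each m.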

2.1.6. Laser-Detector

Consider a scenario where a Poisson-distributed photon stream is directly connected to a detector with efficiency $\eta$ and a dark count probability $p_d$. The objective is to derive the probability of a click event at the detector, considering the stochastic nature of both the photon emission process and the detection. This involves marginalizing over all possible numbers of photons n emitted by the source, weighting each by the probability that n photons cause a detection event. This process is formally presented in Lemma 6 and illustrated in Figure 6.

Figure 6.

Illustration of the detection process for photons emitted directly from a laser source. The detection event follows a Bernoulli distribution as per Lemma 6.

Lemma 6.

If a Poisson-distributed number of photons with mean λ enters a detector with efficiency $\eta$ and dark count probability $p_d$, then the click event D is Bernoulli distributed as follows:

$$D \sim \mathrm{Bernoulli}\!\left(1 - (1 - p_d)\, e^{-\eta\lambda}\right).$$

Proof.

The probability that the detector registers at least one click, since the processes of incoming photons and dark counts are independent, can be expressed as:

$$P(D = 1) = 1 - P(s = 0)\,(1 - p_d).$$

From Lemma 5, we know that if the laser source emits photons following a Poisson distribution with mean $\lambda$, and the signal detection process has efficiency $\eta$, then the number of detected signal photons s is Poisson-distributed with mean $\eta\lambda$. Thus, the probability that no photon is detected is:

$$P(s = 0) = e^{-\eta\lambda}.$$

Combining these results, we have:

$$P(D = 1) = 1 - (1 - p_d)\, e^{-\eta\lambda}.$$

□
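The closed form of Lemma 6 should equal Lemma 1 averaged over a Poisson photon number; the sketch below computes both. Function names are illustrative, and, as before, the truncation at `n_max = 150` is an assumption adequate for the intensities tested.

```python
from math import exp, factorial


def click_prob(n: int, eta: float, p_dark: float) -> float:
    """Lemma 1 closed form."""
    return 1.0 - (1.0 - eta) ** n * (1.0 - p_dark)


def laser_click_prob(lam: float, eta: float, p_dark: float) -> float:
    """Lemma 6 closed form: 1 - (1 - p_dark) * exp(-eta * lam)."""
    return 1.0 - (1.0 - p_dark) * exp(-eta * lam)


def laser_click_prob_sum(lam: float, eta: float, p_dark: float, n_max: int = 150) -> float:
    """Same quantity by averaging Lemma 1 over n ~ Poisson(lam), truncated at n_max."""
    return sum(
        exp(-lam) * lam**n / factorial(n) * click_prob(n, eta, p_dark)
        for n in range(n_max + 1)
    )
```

The agreement of the two functions across intensities is a direct numerical restatement of the proof: detection thins the Poisson stream to mean ηλ.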

2.2. PNS Attack

We now model Eve’s interception of the key, considering specific choices of the bit Alice sends (x) and the bases chosen by Alice (a) and Bob (b). The model integrates the effects of the laser source, fiber losses, Eve’s attack vector, misalignment in the optical components, and detector characteristics.

2.2.1. Eavesdropper Assumptions (The Generalized PNS Attack)

In our analysis, we consider a generalized Photon-Number-Splitting (PNS) attack, where Eve has a range of strategic choices that extend beyond the standard assumptions typically made in QKD security models. This model allows Eve to optimize her attack strategy according to a broader set of parameters, providing a more flexible and realistic scenario. Specifically, Eve can choose:

- $d_E$ (Distance from Alice): Eve’s interception point is between Alice and Bob, with $0 \le d_E \le d_{AB}$, where $d_{AB}$ is their total distance.

- $\Delta$ (Proportion of intercepted pulses): Eve intercepts a fraction $\Delta \in [0, 1]$ of pulses, balancing information gain and detection risk.

- $k$ (Number of intercepted photons): Eve intercepts up to $k$ photons per intercepted pulse.

- $t_E$ (Channel efficiency): Eve selects a channel efficiency $t_E$ to transmit intercepted pulses, simulating legitimate loss to avoid detection.

While many traditional PNS attack models assume that Eve optimizes the channel efficiency to maintain the appearance of normal channel loss, they often implicitly assume some or all of the following:

- Eve intercepts every pulse ($\Delta = 1$).

- Eve intercepts immediately after Alice ($d_E = 0$).

- Eve intercepts exactly one photon per pulse ($k = 1$).

By allowing Eve to vary these parameters beyond just optimizing $t_E$, the generalized PNS attack model captures a broader range of potential eavesdropping strategies, which is crucial for accurately inferring $\Delta$, the proportion of intercepted pulses. Assuming a fixed scenario for $d_E$, $k$, or $t_E$ could lead to incorrect inferences about the extent of Eve’s presence. Furthermore, this generalized model enhances the inference on $\Delta$; for instance, if Eve intercepts $k > 1$ photons per pulse while the model assumes $k = 1$, the model might compensate by predicting a larger $\Delta$ than actually exists. By considering a wider variety of possible attack scenarios, the generalized model provides a more comprehensive understanding of the robustness of QKD protocols and improves the accuracy of security assessments.

2.2.2. PNS Model

To simplify our derivation, we start with a basic scenario where Alice, Eve, and Bob are directly connected, with Bob using a single detector. This simplified model provides a foundational basis that can be extended to more complex and realistic setups using previously derived lemmas. More specifically, a detection setup that includes both a fiber and a detector can be modeled as a single detector with an effective efficiency that accounts for the fiber loss factor (Lemma 2). For setups involving multiple detectors, the beam-splitter probabilities can be integrated into the detection efficiencies (Lemmas 3 and 4). Additionally, a laser source followed by a fiber can be modeled as a source with reduced intensity, scaled by the fiber’s transmission probability (Lemma 5). This modular approach allows us to seamlessly extend the simplified model to more sophisticated real-world configurations without sacrificing analytical rigor.

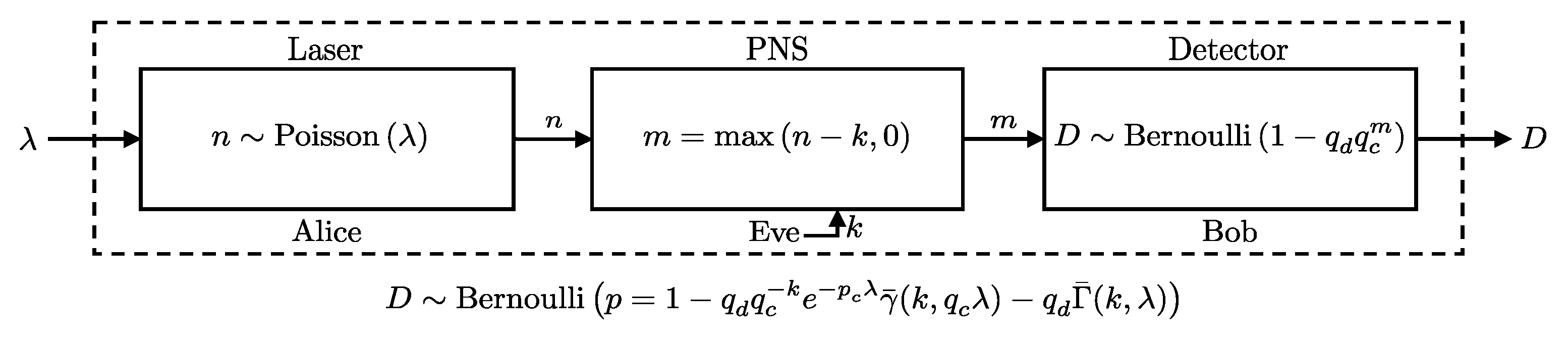

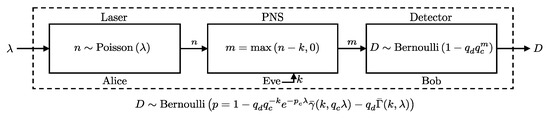

In this scenario, Alice sends n photons, sampled from a Poisson distribution with parameter $\lambda$, directly to Bob, while Eve intercepts the pulse by capturing up to k photons. If $n \le k$, Eve captures all n photons and Bob receives none; otherwise, Bob receives the remaining $n - k$ photons (see Figure 7). We present a closed-form expression for the probability of Bob’s detector clicking under these conditions in Theorem 1.

Figure 7.

Model representation of the Photon-Number-Splitting (PNS) attack scenario. Here, Alice uses a laser source emitting photons following a Poisson distribution with mean $\lambda$. Eve intercepts the communication, capturing up to k photons using a PNS attack strategy. The remaining photons, $\max(n - k, 0)$, reach Bob. The probability of a detection event at Bob’s detector follows a Bernoulli distribution as per Theorem 1.

Theorem 1.

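Consider a QKD setup where Alice’s photon source emits pulses with the number of photons following a Poisson distribution with mean λ. Eve intercepts up to k photons from each pulse. The remaining photons are sent to Bob, who uses a detector characterized by an efficiency $\eta$ and a dark count probability $p_d$. Under these conditions, the detection event D at Bob’s detector is distributed as follows:

$$D \sim \mathrm{Bernoulli}\!\left(1 - \bar{p}_d\left[Q(k+1, \lambda) + \bar{\eta}^{\,-k} e^{-\eta\lambda}\, \mathcal{P}(k+1, \bar{\eta}\lambda)\right]\right),$$

where $\bar{\eta} = 1 - \eta$ and $\bar{p}_d = 1 - p_d$. $Q$ and $\mathcal{P}$ are the upper and lower regularized incomplete Gamma functions, respectively [20]. The regularized upper incomplete Gamma function is defined as [20]:

$$Q(s, x) = \frac{\Gamma(s, x)}{\Gamma(s)} = \frac{1}{\Gamma(s)} \int_{x}^{\infty} u^{s-1} e^{-u}\, du, \qquad \mathcal{P}(s, x) = 1 - Q(s, x).$$

Proof.

To derive the click probability $P(D = 1)$, we consider all possible numbers of photons n that Alice could send, and we marginalize over these photon counts to account for the different scenarios of interception and detection. The total probability is computed as:

$$P(D = 1) = \sum_{n=0}^{\infty} P(D = 1 \mid n)\, \frac{e^{-\lambda}\lambda^{n}}{n!}.$$

In this expression, the first term represents the click probability given n photons and the detector’s characteristics. For $n \le k$, Eve intercepts all photons, leaving none for Bob, so the click probability reduces to the dark count probability $p_d$. For $n > k$, Eve intercepts exactly k photons, and the click probability is determined by the remaining $n - k$ photons. Therefore, we can split the summation into two parts based on the value of n:

$$P(D = 1) = \sum_{n=0}^{k} p_d\, \frac{e^{-\lambda}\lambda^{n}}{n!} + \sum_{n=k+1}^{\infty} P(D = 1 \mid n - k)\, \frac{e^{-\lambda}\lambda^{n}}{n!}.$$

Expanding this expression, we use the Bernoulli distribution for the click probability, from Lemma 1, and the Poisson distribution for the photon count; collecting terms with $\sum_{n>k} P(n) = 1 - \sum_{n \le k} P(n)$ gives:

$$P(D = 1) = 1 - \bar{p}_d \sum_{n=k+1}^{\infty} \bar{\eta}^{\,n-k}\, \frac{e^{-\lambda}\lambda^{n}}{n!} - \bar{p}_d \sum_{n=0}^{k} \frac{e^{-\lambda}\lambda^{n}}{n!}.$$

Recognizing that the second summation represents the Cumulative Distribution Function (CDF) of the Poisson distribution, and utilizing the following relationship [20]:

$$\sum_{n=0}^{k} \frac{e^{-\lambda}\lambda^{n}}{n!} = Q(k+1, \lambda),$$

we rewrite the expression as:

$$P(D = 1) = 1 - \bar{p}_d\, Q(k+1, \lambda) - \bar{p}_d\, \bar{\eta}^{\,-k} e^{-\lambda} \sum_{n=k+1}^{\infty} \frac{(\bar{\eta}\lambda)^{n}}{n!}.$$

To further simplify, we split the exponential series:

$$\sum_{n=k+1}^{\infty} \frac{(\bar{\eta}\lambda)^{n}}{n!} = e^{\bar{\eta}\lambda} - \sum_{n=0}^{k} \frac{(\bar{\eta}\lambda)^{n}}{n!} = e^{\bar{\eta}\lambda}\left[1 - Q(k+1, \bar{\eta}\lambda)\right] = e^{\bar{\eta}\lambda}\, \mathcal{P}(k+1, \bar{\eta}\lambda),$$

so that, using $e^{-\lambda} e^{\bar{\eta}\lambda} = e^{-\eta\lambda}$,

$$P(D = 1) = 1 - \bar{p}_d\left[Q(k+1, \lambda) + \bar{\eta}^{\,-k} e^{-\eta\lambda}\, \mathcal{P}(k+1, \bar{\eta}\lambda)\right].$$

□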

Notably, by utilizing the relationship between the Poisson CDF and the incomplete Gamma function, we extend the domain of k to the non-negative real numbers. This extension is particularly useful when computing gradients later, as k can now be treated as a continuous parameter rather than as a discrete summation index, and the derivatives of the incomplete Gamma functions with respect to it can be derived.
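A sketch of Theorem 1 in plain Python, restricted to integer k so that the regularized incomplete Gamma functions can be written as Poisson cumulative sums via $Q(k+1, x) = \Pr(N \le k)$ for $N \sim \mathrm{Poisson}(x)$. It evaluates $1 - \bar{p}_d[Q(k+1,\lambda) + \bar{\eta}^{-k}e^{-\eta\lambda}\mathcal{P}(k+1,\bar{\eta}\lambda)]$, the form obtained by carrying the proof through, and cross-checks it against the direct sum in which Bob receives max(n − k, 0) photons. Function names are illustrative.

```python
from math import exp, factorial


def poisson_cdf(k: int, x: float) -> float:
    """P(N <= k) for N ~ Poisson(x); equals the regularized upper gamma Q(k + 1, x)."""
    return sum(exp(-x) * x**j / factorial(j) for j in range(k + 1))


def pns_click_prob(lam: float, k: int, eta: float, p_dark: float) -> float:
    """Closed form for Bob's click probability when Eve removes up to k photons per pulse."""
    eta_bar = 1.0 - eta
    q_upper = poisson_cdf(k, lam)                   # Q(k + 1, lam)
    p_lower = 1.0 - poisson_cdf(k, eta_bar * lam)   # P(k + 1, (1 - eta) * lam)
    return 1.0 - (1.0 - p_dark) * (q_upper + eta_bar ** (-k) * exp(-eta * lam) * p_lower)


def pns_click_prob_sum(lam: float, k: int, eta: float, p_dark: float,
                       n_max: int = 150) -> float:
    """Direct marginalization over n ~ Poisson(lam); Bob receives max(n - k, 0) photons."""
    total = 0.0
    for n in range(n_max + 1):
        weight = exp(-lam) * lam**n / factorial(n)
        total += weight * (1.0 - (1.0 - eta) ** max(n - k, 0) * (1.0 - p_dark))
    return total
```

With k = 0 the closed form reduces to Lemma 6. For the continuous-k extension described above, the two cumulative sums could be replaced by SciPy’s `scipy.special.gammaincc(k + 1, x)` (regularized upper) and `scipy.special.gammainc(k + 1, x)` (regularized lower).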

Alice to Eve

According to Lemma 5, the combination of the laser source and the fiber from Alice to Eve can be modeled as a Poisson distribution with a reduced intensity parameter $\lambda t_{AE}$, where $t_{AE} = 10^{-\alpha d_E / 10}$, with $d_E$ being the distance between Alice and Eve, $\lambda$ the average photon number emitted by Alice, and $\alpha$ the attenuation coefficient in dB/km.

Eve to Bob

If the measured error probability due to misalignment in the beam splitter is , the corresponding angular misalignment can be computed as:

The probability that a photon reaches detector i for specific choices of bit x, and bases a and b, is given by:

We can continue modeling the photon transmission through the system by applying Lemmas 2 and 3 to combine the effects of the fiber loss from Eve to Bob, the beam splitter, and the detector into a single detector model with an effective efficiency scaled by both the fiber loss and the beam-splitter path probability. We then state the following corollary derived from Theorem 1:

Corollary 1.

Let θ represent the set of system parameters:

The probability of detection at detector for specific choices of bases (a and b), a bit choice (x), intensity (λ), and whether Eve intercepted or not (e), is given by:

where is the probability of detection from Theorem 1, and the adjusted parameters , , and are defined as:

where and are the channel efficiencies from Alice to Bob and Alice to Eve, respectively.

2.2.3. All Possible Detection Events

We now have all the necessary components to derive the probabilities of the four joint detection events that Bob can observe, given the set of session parameters, , and pulse parameters, namely a, b, x, and e. By deriving the marginal and union probabilities, as discussed in Section 2.1.4, we have:

which can also be expressed as:

and the joint probabilities can be reconstructed as:

From Lemma 4, we know that the union probability for a two-detector system can be modeled as a single detector with adjusted dark count and click probabilities:

We denote the set of four possible outcomes given the session and pulse parameters as:

2.2.4. Marginalization of Unknowns in the Sifting Phase

For the purpose of inferring the percentage of tagged photons $\Delta$, we rely only on the information available during the sifting phase, specifically the basis choices a and b, and marginalize over the variables x and e:

Assuming that Alice sends the bits with equal probabilities using a quantum random number generator, then $P(x = 0) = P(x = 1) = 1/2$. If Eve tags a pulse with probability $\Delta$, then $P(e = 1) = \Delta$ and $P(e = 0) = 1 - \Delta$. Note from Equation (20) that:

Thus, swapping the basis will either swap the terms of the sum in Equation (39) when , or it will have no effect when . Consequently, the probability only depends on whether the bases matched or not, or more formally:

Therefore, for analytical purposes, we can assume Bob always measures in a fixed basis (e.g., ), while Alice switches her basis with 50% probability. The observed probabilities for and will be identical.

In conclusion, for a specific intensity , Bob can observe two distinct probabilities for a given detector i based on whether the bases matched or not:

where m is a flag indicating whether Alice and Bob used matching bases. Finally, the set of probabilities for all possible outcomes is:

If we assume Alice and Bob switch bases with equal probability, , this simplifies to:

2.2.5. Multiple Intensities

Thus far, our probabilistic model has assumed a single intensity for the session. However, as discussed in Section 2.2.1, Eve can manipulate the channel by choosing a different attenuation rate, allowing her to disguise her presence as normal channel loss. With a single intensity, Eve can select a channel efficiency that minimizes the statistical differences between the click distributions with and without her interference, potentially reducing the distinguishability between and (e.g., by minimizing the KL divergence between them).

To mitigate this, we must use multiple intensities, ensuring that Eve cannot conceal herself by tuning a single channel efficiency value. By varying the intensities, it becomes more challenging for Eve to simultaneously minimize the statistical differences across all intensity settings, thereby making her presence more detectable.
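The following Python sketch illustrates this argument on a deliberately simplified toy model, which is not the detection model derived above: a single ideal detector without dark counts, a legitimate click probability of 1 - exp(-ηλ), and an Eve who sits at Alice's output, keeps one photon from every pulse (so single-photon pulses never reach Bob), and forwards the remaining photons over a channel of efficiency η_E. All function names and parameter values are hypothetical. By searching over η_E, Eve can drive the KL divergence to essentially zero at one intensity, but no single η_E matches several intensities at once.

```python
import math


def click_prob_no_eve(lam, eta):
    """Toy legitimate channel: ideal detector, no dark counts."""
    return 1.0 - math.exp(-eta * lam)


def click_prob_toy_pns(lam, eta_e):
    """Toy PNS attack at Alice's output: Eve keeps one photon of every pulse and
    forwards the remaining n - 1 photons over efficiency eta_e (0 < eta_e < 1)."""
    u = 1.0 - eta_e
    # P(no click) = P(n = 0) + sum_{n>=1} P(n) * u**(n - 1)
    #             = exp(-lam) * (1 + (exp(lam * u) - 1) / u)
    p_no_click = math.exp(-lam) * (1.0 + math.expm1(lam * u) / u)
    return 1.0 - p_no_click


def bernoulli_kl(p, q):
    """KL(Bernoulli(p) || Bernoulli(q)) for 0 < p, q < 1."""
    return p * math.log(p / q) + (1.0 - p) * math.log((1.0 - p) / (1.0 - q))


def best_eve_kl(intensities, eta, grid=5000):
    """Smallest total KL Eve can reach with one eta_e shared by all intensities."""
    best = math.inf
    for i in range(1, grid):
        eta_e = i / grid
        total = sum(
            bernoulli_kl(click_prob_no_eve(lam, eta), click_prob_toy_pns(lam, eta_e))
            for lam in intensities
        )
        best = min(best, total)
    return best
```

With η = 0.1, `best_eve_kl([0.5], 0.1)` is essentially zero, whereas `best_eve_kl([0.5, 1.0, 2.0, 4.0], 0.1)` stays well above zero, because in this toy model Eve's click probability grows faster with λ than the legitimate one.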

We adopt a straightforward strategy of selecting equally spaced intensities, using at least four to account for the four unknown parameters in Eve’s potential attack (i.e., , , k and ). The range for the minimum and maximum intensity values is determined using the following heuristics:

- : The lowest intensity where, if Eve intercepts a single photon immediately after Alice, she must use a channel with efficiency to avoid detection.

- : The highest intensity that maximizes the proportion of events where occurs, as cases where both detectors either click or do not click provide no useful information and appear uniformly random from Bob’s perspective.
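Both heuristics can be evaluated numerically. The sketch below uses hypothetical toy models rather than the full model of Section 2.2. For the lower bound, it assumes an ideal detector without dark counts and an Eve at Alice's output who keeps one photon per pulse and forwards the rest losslessly, so the bound solves 1 - e^(-λ)(1 + λ) = 1 - e^(-ηλ). For the upper bound, it assumes two independent threshold detectors with dark-count probability d and misalignment e_d, with matching and non-matching bases equally likely. Function names and grid resolution are illustrative choices.

```python
import math


def lambda_min_toy(eta, lo=1e-6, hi=50.0, iters=200):
    """Toy lambda_min: Eve keeps one photon per pulse and forwards the rest
    losslessly (eta_E = 1); find where her click rate 1 - exp(-lam) * (1 + lam)
    first reaches the legitimate rate 1 - exp(-eta * lam)."""
    def gap(lam):
        return math.exp(-eta * lam) - math.exp(-lam) * (1.0 + lam)

    for _ in range(iters):  # bisection with gap(lo) < 0 <= gap(hi)
        mid = 0.5 * (lo + hi)
        if gap(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def p_single_click(mu0, mu1, d):
    """P(exactly one of two independent threshold detectors clicks)."""
    p0 = 1.0 - (1.0 - d) * math.exp(-mu0)
    p1 = 1.0 - (1.0 - d) * math.exp(-mu1)
    return p0 * (1.0 - p1) + p1 * (1.0 - p0)


def lambda_max_toy(eta, d, e_d, lam_hi=100.0, grid=20000):
    """Toy lambda_max: grid search for the intensity maximizing the single-click
    fraction, averaging matching and non-matching bases with equal weight."""
    best_lam, best_val = lam_hi / grid, -1.0
    for i in range(1, grid + 1):
        lam = lam_hi * i / grid
        mu = eta * lam
        matched = p_single_click(mu * (1.0 - e_d), mu * e_d, d)
        unmatched = p_single_click(0.5 * mu, 0.5 * mu, d)  # photons split 50/50
        val = 0.5 * (matched + unmatched)
        if val > best_val:
            best_lam, best_val = lam, val
    return best_lam


def intensity_grid(lam_min, lam_max, n):
    """n >= 2 equally spaced intensities from lam_min to lam_max inclusive."""
    step = (lam_max - lam_min) / (n - 1)
    return [lam_min + i * step for i in range(n)]
```

For example, `intensity_grid(lambda_min_toy(0.1), lambda_max_toy(0.1, 1e-6, 0.01), 4)` returns four equally spaced intensities between the two toy bounds.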

To reflect the use of multiple intensities, we update our notation as follows:

where is the number of intensities. In this study, we assume that each intensity is equally likely to be selected. Note that while other protocols discard events with non-matching bases, we still model them probabilistically and use their statistics when inferring Eve's presence (though not for key generation), thus increasing the statistical power to detect eavesdropping.

2.3. After-Pulsing

In real-world QKD systems, photon detectors are affected by after-pulsing, a phenomenon where a click from a prior detection event increases the chance of another click in the next pulse, regardless of whether or not a new photon is actually detected [14]. This effect can introduce correlations between detection events, breaking the independence assumption that would otherwise imply each event is isolated from the others. After-pulsing can be reduced by increasing the detector’s dead time, which lowers the pulse frequency and consequently reduces the key rate. On the other hand, simply neglecting the impact of after-pulsing results in incorrect error rate assessments and inaccurate key rate calculations. Both approaches fail to fully address the complexities introduced by after-pulsing, potentially compromising the security analysis of a QKD system or the key generation rate.

To address this challenge, we employ a Hidden Markov Model (HMM) to rigorously capture the behavior of detectors affected by after-pulsing. This approach improves the accuracy of security analysis and key rate calculations. We treat after-pulsing as an intrinsic property of the detector—unaffected by Eve—analogous to the way we model the dark-count rate. The details of this HMM-based modeling are presented in the following subsections.

2.3.1. Single Detector, Single Probability

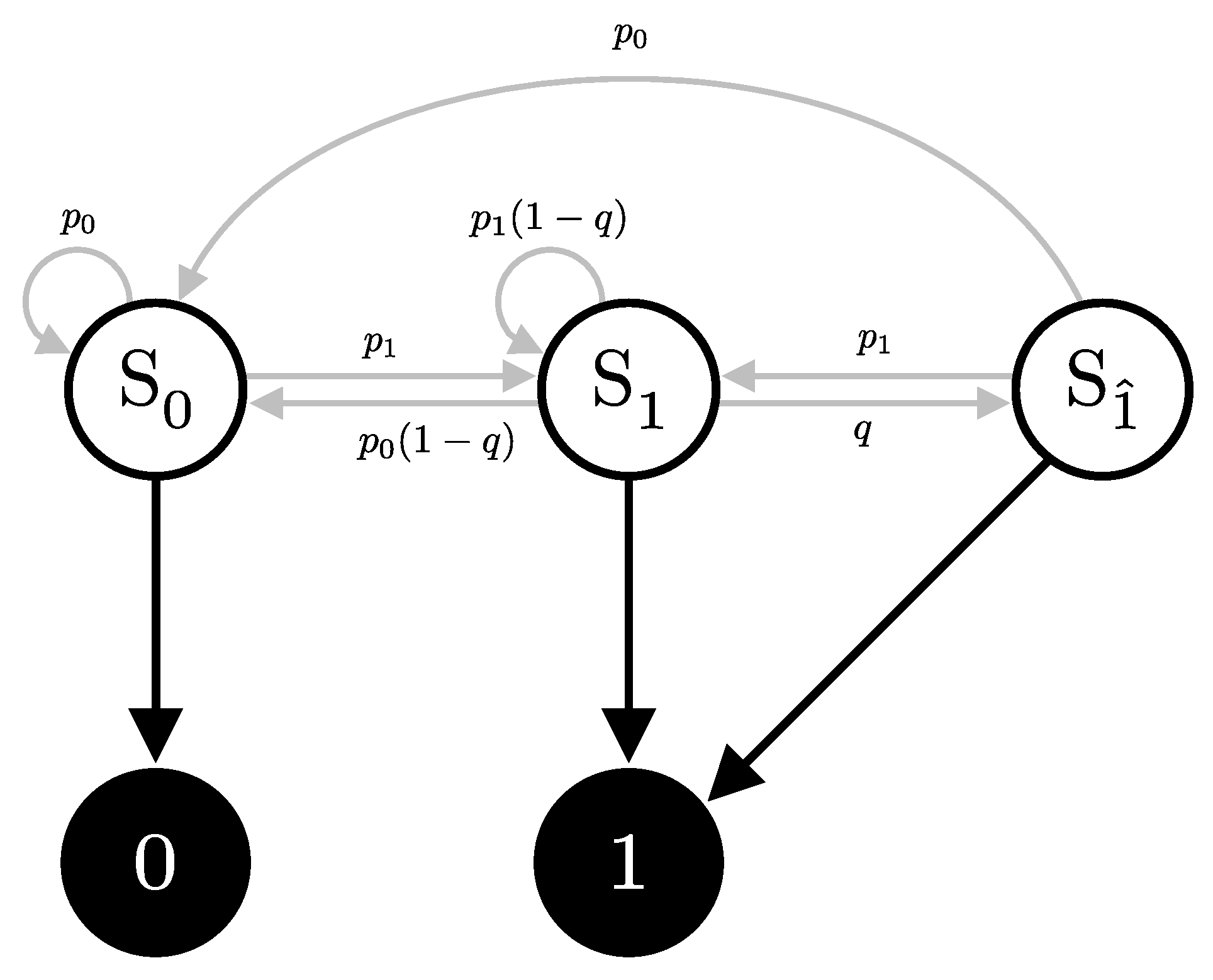

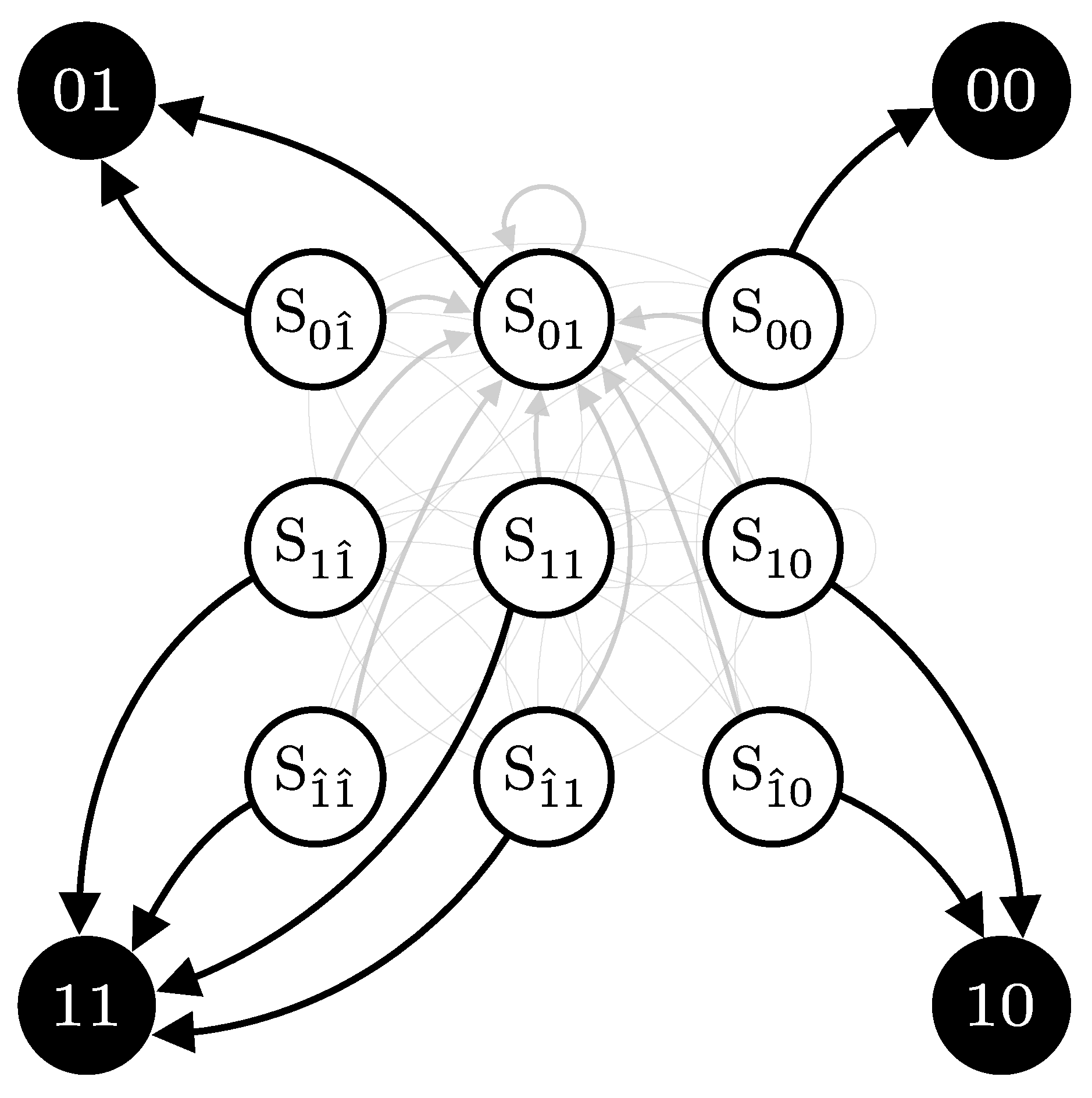

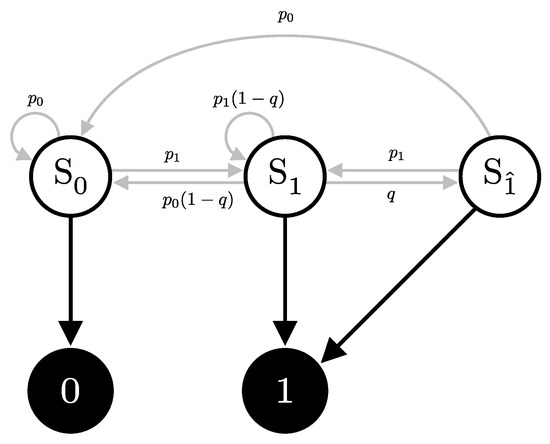

Consider a scenario where Alice repeatedly sends identical pulses to Bob, always choosing the same bit value x, basis a, and intensity . Bob, on the other hand, measures these pulses in a fixed basis b, with no interference from Eve. The signal causes the detector to click with probability and, consequently, not to click with probability . The detector can then be in one of three internal states, depending on its recent detection history (see Figure 8):

Figure 8.

Hidden Markov Model (HMM) representation of the single detector scenario. The states , , and represent no click, a click due to signal or dark count, and a click due to an after-pulse, respectively. Arrows indicate possible state transitions with associated probabilities.

- State : No click occurs. The detector remains in this state with probability or transitions to state with probability .

- State : A click due to either a signal or a dark count. The detector then transitions to the after-pulse state with probability q; otherwise, with probability it transitions to state , or with probability it remains in state .

- State : A click due to an after-pulse effect. This state can only follow a click in the previous detection window (state ). From it, the detector transitions to state with probability or to state with probability ; it cannot remain in this state.

Hidden Markov Models (HMMs) are characterized by three sets of probabilities:

- Transition matrix (): Probabilities of transitioning from one hidden state to another (gray arrows in Figure 8).

- Emission matrix (): Probabilities of observing specific outputs given the current hidden state (black arrows in Figure 8).

- Initial state probabilities (): The probabilities of starting in each hidden state at the beginning of the process (usually assumed uniform, or concentrated on a specific starting state).

For the HMM presented in Figure 8, the transition and emission matrices are defined as follows:

where . The emission matrix , which gives the probabilities of observing each possible outcome (0 or 1) given the detector's state (the black arrows in Figure 8), is structured as follows:

We assume a first-order Markov chain (i.e., the next state depends only on the current state) and disallow consecutive after-pulse states. This reflects typical detector behavior; however, the model is flexible: transition probabilities can be adjusted for different after-pulsing dynamics, and higher-order Markov chains can be implemented by expanding the state space [21].
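As a worked example, the single-detector chain admits a closed-form stationary distribution. The derivation below assumes that the transitions listed for Figure 8 read as follows, with the labels S0 (no click), S1 (signal or dark-count click), and S2 (after-pulse click) chosen here for illustration: from S0 or S2 the detector clicks with probability p; from S1 an after-pulse occurs with probability q, and otherwise the detector clicks with probability p; emissions are deterministic (S0 emits 0, while S1 and S2 emit 1).

$$
T=\begin{pmatrix}
1-p & p & 0\\
(1-q)(1-p) & (1-q)\,p & q\\
1-p & p & 0
\end{pmatrix},
\qquad
E=\begin{pmatrix}1 & 0\\ 0 & 1\\ 0 & 1\end{pmatrix}
$$

$$
\begin{aligned}
\pi_2 &= q\,\pi_1,\\
\pi_1 &= p\,\pi_0 + (1-q)\,p\,\pi_1 + p\,\pi_2 = p\,(\pi_0+\pi_1)
\;\Rightarrow\; \pi_1 = \frac{p}{1-p}\,\pi_0,\\
1 &= \pi_0 + (1+q)\,\pi_1
\;\Rightarrow\; \boldsymbol{\pi} = \frac{\bigl(1-p,\; p,\; q\,p\bigr)}{1+q\,p},\\
P(\text{click}) &= \pi_1+\pi_2 = \frac{p\,(1+q)}{1+p\,q} = p + \frac{p\,q\,(1-p)}{1+p\,q}.
\end{aligned}
$$

For q = 0 the click probability reduces to p, whereas for q > 0 and 0 < p < 1 it exceeds p by pq(1 - p)/(1 + pq), which is the bias that ignoring after-pulsing would leave in gain and error estimates.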

2.3.2. Two Detectors, Single Probability

For a two-detector setup, the click probabilities for each detector combination—namely (00), (01), (10), and (11)—have already been derived in Equation (38) for specific pulse parameters a, b, x, e, and . We denote these probabilities as , , , and , respectively. Let and represent the after-pulse probabilities for detectors and , respectively. We also define the following marginal probabilities:

For the after-pulse probabilities, we need the opposite: we already have the marginals, and , and wish to derive the joint distribution. Since the after-pulse triggers are assumed to be independent:

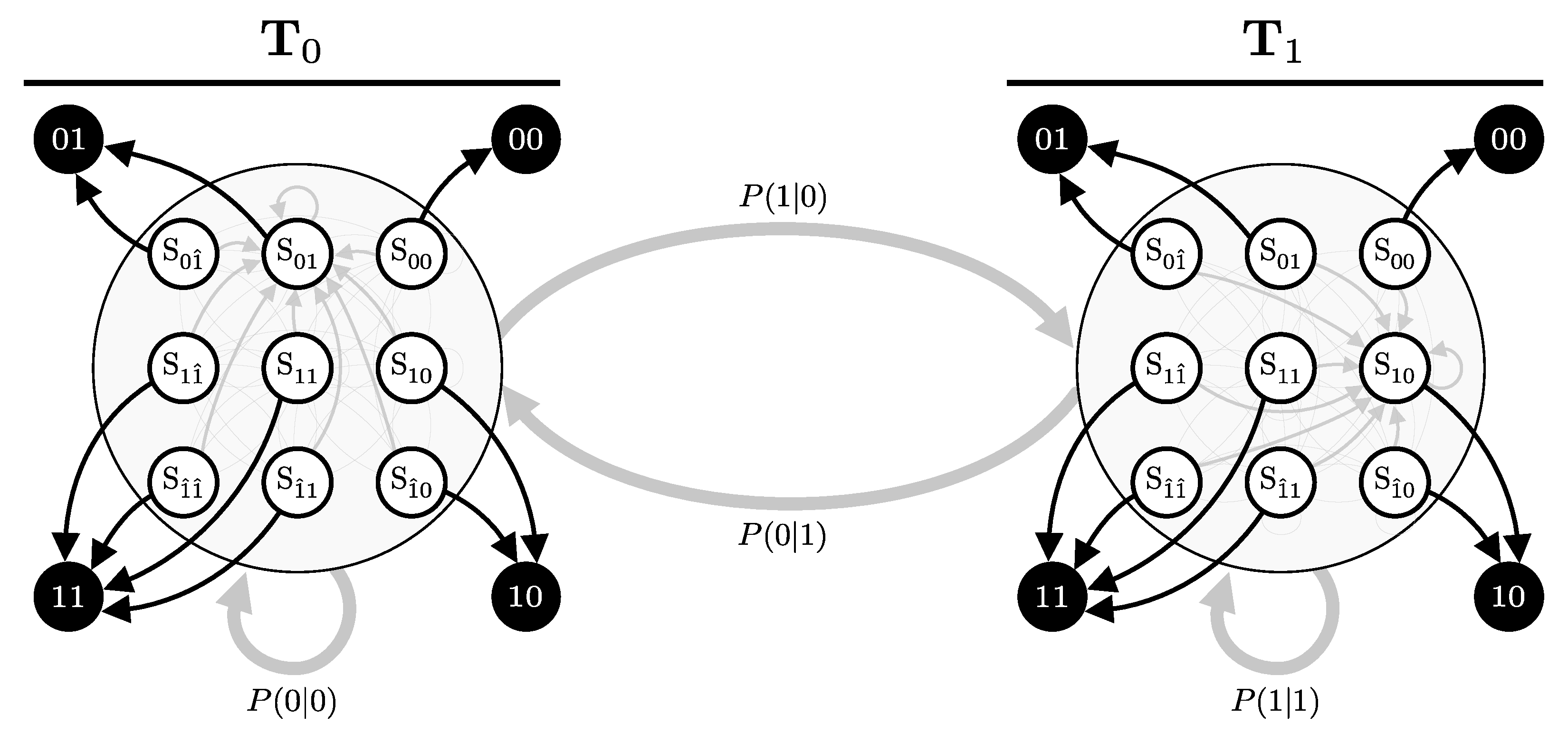

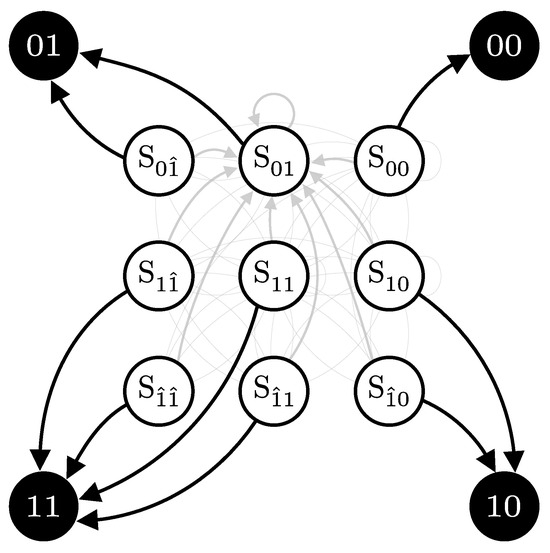

In this two-detector scenario, the state and observation spaces expand accordingly. The set of possible states S and observations O are given by:

where, for example, denotes the state where detector did not click and detector clicked due to an after-pulse.

The emission matrix is constructed to reflect the probabilities of each possible observation given the detector states:

Constructing the transition matrix is more complex due to the extended state space. The matrix captures the transition probabilities between each state, accounting for both the regular transitions and those influenced by after-pulsing effects:

where and . The order of states in both the rows and columns of the transition matrix is consistent with the order of the states in the rows of the emission matrix .

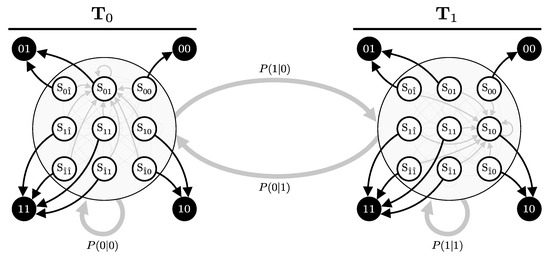

The matrix is organized into sub-blocks to highlight different transitions. The left sub-block represents the transitions without after-pulsing, while the right sub-blocks account for transitions influenced by after-pulsing. For instance, when the system is in state , second row, there is a probability of transitioning to an after-pulse state, either with probability or with probability . If no after-pulse occurs, the system transitions among states , , , and with their respective probabilities , , , and but scaled by (). An illustration for specific sets of p and q is provided in Figure 9.

Figure 9.

State transition diagram for a specific set of pulse parameters. The figure illustrates the transitions for the probabilities and after-pulse probabilities . Each state represents a unique combination of detector responses and after-pulse conditions. Arrows indicate the possible transitions between states, with the thickness of the lines being proportional to their associated transition probabilities.

2.3.3. Two Detectors, Multiple Probabilities

In the previous subsection, we considered the scenario where Bob receives a repeated pulse with fixed parameters (a, b, x, e, and ). Under this condition, the behavior of the system could be modeled using a Hidden Markov Model (HMM) with a single transition matrix that corresponds to these fixed parameters.

Now, we consider a more complex scenario where Alice alternates her bit choice x between 0 and 1. Although in QKD protocols this bit switching is typically assumed to be independent and occur with equal probability, we will formulate a more general case. We define the transition probabilities between bit states as follows:

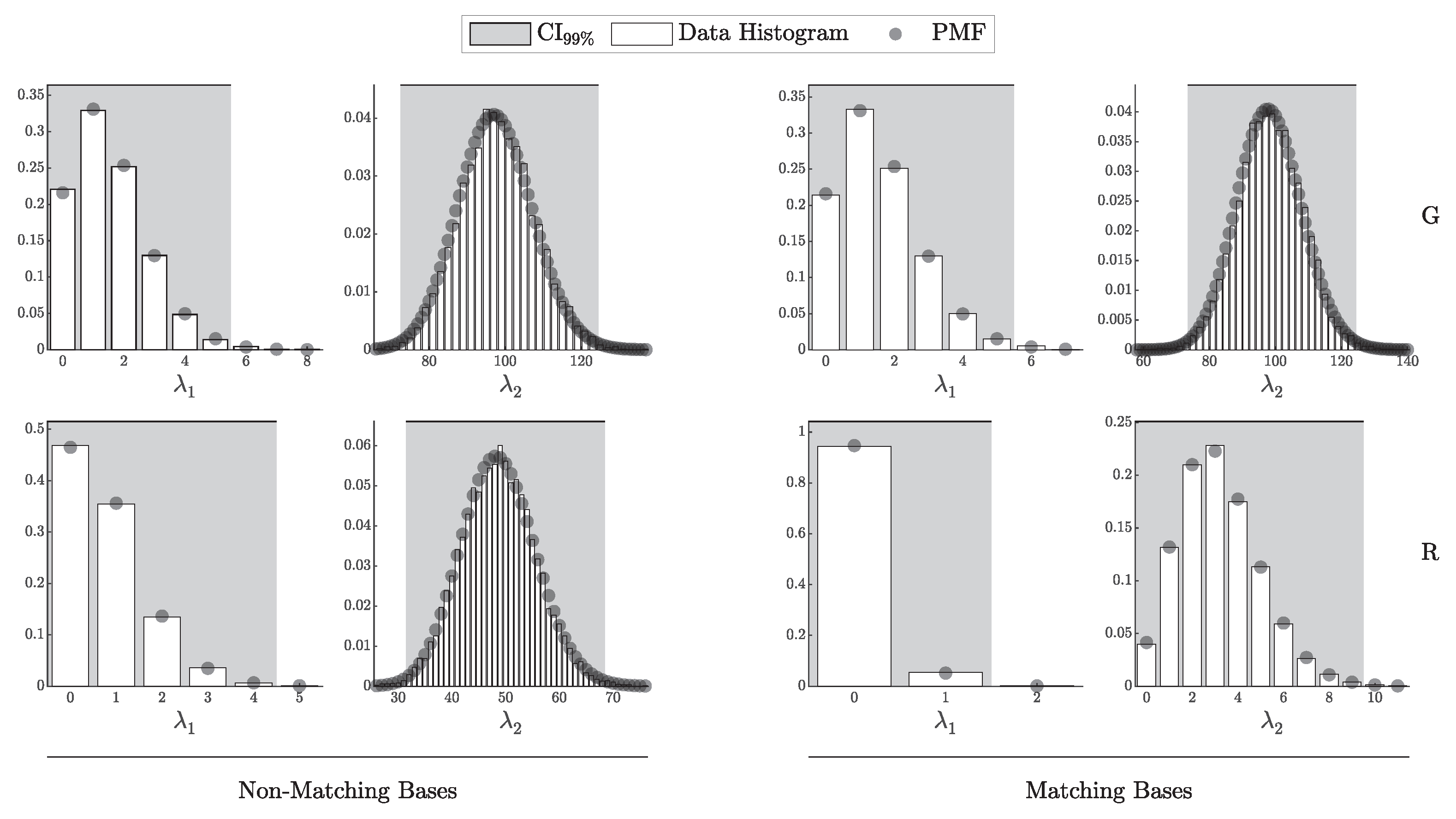

Let and represent the detector dynamics for cases where Alice’s bit x was consistently 0 or 1, respectively. Given this setup, our goal is to construct an HMM that captures the combined dynamics of the system as Alice’s bit choice switches over time. The key is to describe both the intra-mode transitions (transitions within the same bit choice) and inter-mode transitions (transitions between different bit choices), effectively combining the two HMMs ( and ) that represent the system’s behavior for and (see Figure 10).

Figure 10.

State transition diagram for a specific set of pulse parameters. The left and right clusters represent transitions within the HMMs corresponding to different pulse parameters, specifically for cases where Alice’s bit x changes. For illustrative purposes, was constructed using probabilities and using probabilities , both with after-pulse probabilities .

To demonstrate this, consider a generic state representing the detectors’ internal states in a particular mode, either or . The mode here indicates which of the two separate HMMs (either or ) currently governs the transitions.

If the system is in state and mode , there are two possible types of transitions:

- Intra-Mode Transition: With probability , the bit choice remains . The system transitions according to the probabilities defined by the current state’s corresponding entry in . For example, the probability of transitioning from in mode 0 to in mode 0 is given by .

- Inter-Mode Transition: With probability , the bit choice switches to . The system then transitions according to the probabilities defined by the corresponding entry in . For example, the probability of transitioning from in mode 0 to in mode 1 is .

The analysis is equivalent if the system is in mode ; however, the intra-mode transitions will involve and the inter-mode transitions will involve , transitioning according to either or , respectively.

Using this framework, the combined transition matrix can be constructed to account for both the detector state transitions and the changes in Alice’s bit choice. This leads to the following block matrix structure:

The combined transition matrix captures all possible transitions from any state in any mode to any state in any mode, encompassing the full dynamic process of the system as it evolves both within and between modes.
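The block construction can be sketched in Python for any number of modes. The function name `combine_modes` and the list-of-lists representation are illustrative choices; following the intra- and inter-mode transitions described above, the (m, m') block is the mode-switching probability multiplied by the transition matrix of the destination mode m'.

```python
def combine_modes(mode_matrices, mode_transition):
    """Combine per-mode HMM transition matrices into one block matrix.

    mode_matrices: list of M row-stochastic n x n matrices (one per mode).
    mode_transition: M x M row-stochastic matrix of mode-switching probabilities.
    Block (m, m2) equals mode_transition[m][m2] * mode_matrices[m2]: the next
    step is governed by the matrix of the mode being entered.
    """
    n_modes = len(mode_matrices)
    n = len(mode_matrices[0])
    size = n_modes * n
    combined = [[0.0] * size for _ in range(size)]
    for m in range(n_modes):
        for m2 in range(n_modes):
            w = mode_transition[m][m2]
            target = mode_matrices[m2]
            for i in range(n):
                for j in range(n):
                    combined[m * n + i][m2 * n + j] = w * target[i][j]
    return combined
```

Because every block row is a convex combination of row-stochastic matrices, the result is again row-stochastic. Applying the function repeatedly (uniform switching rows for intensities, bits, and bases, and rows (1 - Δ, Δ) for independent interceptions) mirrors the incremental construction of Section 2.3.4.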

2.3.4. Constructing the Full Transition Matrix

This section constructs the full HMM by incorporating transitions for all pulse parameters, such as basis choices (a and b), bit choices (x), the eavesdropping flag (e), and intensity settings (), to fully describe the detection dynamics in a Quantum Key Distribution (QKD) system. The transition matrix is built incrementally, starting with individual components and progressively incorporating all relevant variables.

Intensity-Based Transitions

We begin by constructing the transition matrix for a specific set of pulse parameters. This matrix captures the transition dynamics when Alice and Bob’s bases (), Alice’s bit choice (x), the presence or absence of eavesdropping (e), and the photon intensity () are all fixed:

Here, represents the click probabilities given the session and pulse parameters, as defined in Equation (38). The vector denotes the after-pulse probabilities for detectors and , respectively. The parameter set includes Alice’s, Bob’s, and Eve’s parameters, where Bob’s parameters are updated to include the after-pulse probabilities:

Next, we construct the transition matrix across all possible intensity settings , using the same approach outlined in Section 2.3.3, by combining the individual matrices for each . If Alice transitions between the different intensities uniformly and independently, the transition matrix simplifies to the following:

Eavesdropping Transitions

To incorporate the possibility of eavesdropping, we expand the matrix to account for scenarios where Eve transitions between intercepting () and not intercepting (), with probabilities and , respectively:

Bit Choice Transitions

To model transitions associated with Alice’s bit choices, we assume she switches between and with equal probability (50%). The corresponding transition matrix that captures this behavior is given by:

Basis Choice Transitions

Similarly, we can construct the transition matrix to account for the basis choices made by Alice (a) and Bob (b), assuming that both choose their bases independently and uniformly. We first incorporate Bob's basis transition:

and then Alice's basis transition:

This final matrix encapsulates the complete set of transition probabilities, integrating all pulse parameters—basis choices, bit choices, eavesdropping scenarios, and intensity settings—to model the full dynamics of the QKD system.

2.3.5. Extracting the Probabilities of Click Events

The probabilities of different click events in an HMM, denoted as , are derived by first computing the state probabilities from the transition matrix and then mapping these state probabilities to observations using the emission matrix . The stationary distribution, , represents the long-term probabilities of visiting each state and satisfies the following equation:

This is equivalent to finding the eigenvector corresponding to the eigenvalue 1. Since the transition matrix is irreducible (every state is reachable from every other state) and aperiodic (returns to a state are not restricted to multiples of a fixed period greater than one), the Perron–Frobenius theorem [22,23] guarantees that 1 is the eigenvalue of largest modulus and that its eigenvector is unique up to scaling and can be chosen with strictly positive entries. Consequently, the system converges to this equilibrium, which represents its long-term behavior, regardless of the initial state [24]. Furthermore, since we only need the eigenvector associated with the largest eigenvalue, we can use iterative algorithms that are more efficient than a full eigendecomposition, such as the power method or Krylov subspace methods [25,26].
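The power method can be sketched in a few lines. As a test case, the sketch uses the single-detector chain of Figure 8, reading its transitions as follows (an assumption about the symbols in the figure): from S0 and S2, a click (to S1) with probability p and otherwise S0; from S1, the after-pulse state S2 with probability q, otherwise S1 with probability (1 - q)p and S0 with probability (1 - q)(1 - p). For this chain, the stationary click probability π1 + π2 works out to p(1 + q)/(1 + pq), which the iteration should reproduce. Function names are hypothetical.

```python
def single_detector_transition(p, q):
    """Assumed single-detector after-pulse chain; state order (S0, S1, S2)."""
    return [
        [1.0 - p, p, 0.0],
        [(1.0 - q) * (1.0 - p), (1.0 - q) * p, q],
        [1.0 - p, p, 0.0],
    ]


def stationary_distribution(T, tol=1e-14, max_iter=100_000):
    """Power iteration pi <- pi T for a row-stochastic, irreducible, aperiodic T."""
    n = len(T)
    pi = [1.0 / n] * n
    for _ in range(max_iter):
        nxt = [sum(pi[i] * T[i][j] for i in range(n)) for j in range(n)]
        total = sum(nxt)
        nxt = [v / total for v in nxt]  # renormalize to control round-off
        if max(abs(a - b) for a, b in zip(nxt, pi)) < tol:
            return nxt
        pi = nxt
    raise RuntimeError("power iteration did not converge")
```

For p = 0.1 and q = 0.05, `pi = stationary_distribution(single_detector_transition(0.1, 0.05))` gives `pi[1] + pi[2]` equal to 0.1 × 1.05 / 1.005 up to rounding. For the much larger matrices of Section 2.3.4, a sparse representation would be the natural next step.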

By normalizing , we obtain a valid probability distribution over the hidden states:

The vector has a length of , corresponding to the number of states () times the possible choices of a, b, x, and e () times the number of intensities (). To correctly project the vector of state probabilities into observable probabilities using the emission matrix , which is of size , the vector is first “folded” into a matrix of size . This folding operation is defined by the mapping function:

where each column corresponds to a specific pulse configuration, and each row corresponds to one of the HMM states. We then compute the observable probabilities by projecting this folded matrix onto the emission matrix :

where and represents the adjusted probabilities of the observable detection events.
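The folding and projection steps can be sketched as follows, assuming the stationary vector is ordered configuration by configuration (all HMM states of the first pulse configuration, then all states of the next), as produced by block-wise construction. The helper names are hypothetical. Each column of the result holds joint probabilities of (outcome, configuration); dividing a column by its sum gives the outcome probabilities conditional on that configuration.

```python
def fold(pi, n_states):
    """Reshape a configuration-major state vector into an n_states x n_configs matrix."""
    if len(pi) % n_states:
        raise ValueError("vector length must be a multiple of n_states")
    n_cfg = len(pi) // n_states
    return [[pi[c * n_states + s] for c in range(n_cfg)] for s in range(n_states)]


def project(folded, E):
    """Observable probabilities: P[o][c] = sum_s E[s][o] * folded[s][c]."""
    n_states, n_cfg, n_obs = len(folded), len(folded[0]), len(E[0])
    return [
        [sum(E[s][o] * folded[s][c] for s in range(n_states)) for c in range(n_cfg)]
        for o in range(n_obs)
    ]
```

For example, with three single-detector states per configuration and the emission matrix [[1, 0], [0, 1], [0, 1]], `project(fold(pi, 3), E)` returns a 2 × (number of configurations) matrix of no-click and click probabilities.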

At this point, we have obtained the probabilities of the four possible detection outcomes given any configuration of . However, Bob only observes a, b, and . Therefore, we can marginalize over the variables x and e by summing over the corresponding columns in .

Applying this marginalization to x and e, and using the symmetry in Equation (41) so that only whether the bases matched matters, we obtain , which contains outcomes (4 detection events, 2 basis-alignment cases, and intensity levels).

While this approach provides a complete probabilistic view for analytical purposes, it may not be the most computationally efficient due to the large size of the transition matrix and the eigenvalue decomposition required. If we are only interested in instead of , we can construct a smaller transition matrix directly based on the matching or non-matching cases. This results in a much smaller matrix of size , significantly reducing computational complexity.

2.4. Error and Gain Probabilities

To accurately compute the secure-key rate in the QKD protocol, particularly following the Gottesman–Lo–Lütkenhaus–Preskill (GLLP) formula, it is essential to derive the probabilities associated with the gain (the probability of a detection event) and erroneous click events (the probability of a click at the incorrect detector). Our previous results, encapsulated in the probability vector , allow us to compute the detection probabilities under various configurations of basis settings, bit choices, intensities, and interception scenarios. From these configurations, we derive the gain and error probabilities required for the secure-key rate calculation in the following subsections.

2.4.1. Marginalizing over Eve’s Interception Flag

For each combination of basis settings (a and b), bit choice (x), and intensity (), we first marginalize over the possible states of Eve’s interception flag (e), where represents interception and represents no interception. This marginalization enables us to calculate the effective probabilities under the influence of Eve’s interception rate :

The resulting vector comprises four elements corresponding to each detection outcome:

where each element represents the probability of a particular click configuration (e.g., represents a click at detector only for a specific bit choice (x), bases configuration (a, b), and intensity ).

2.4.2. Gain and Error Probabilities

The gain probability for a given bit choice x is obtained by summing the probabilities of the events where only one detector clicks (i.e., single-click events):

The probability of an erroneous click event, , in which only the incorrect detector clicks, depends on the bit choice x. Specifically:

2.4.3. Marginalizing over Bit Choice

To obtain the total click and error probabilities for a given basis configuration and intensity, we marginalize over the bit choice x, assuming Alice’s bit choices are uniformly distributed. This leads to the overall gain and error probabilities given by:

These expressions give the gain (single-click) and erroneous-click probabilities under the conditions specified by . To further simplify notation, we consider the two distinct cases of basis alignment, where and . Referring to the symmetry from Equation (41), where , we redefine the gain and error probabilities in terms of alignment cases:

where denotes matching bases () and denotes non-matching bases ().
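A minimal sketch of Sections 2.4.1 to 2.4.3 for one basis configuration and intensity follows. The outcome ordering (P00, P01, P10, P11), with the first digit for detector D0 and bit x encoded by detector D_x, is an assumption about notation; double clicks are excluded from the gain, as in the text. The function names are hypothetical.

```python
def mix_over_eve(p_no_eve, p_eve, delta):
    """Marginalize Eve's flag: (1 - delta) * P(. | e=0) + delta * P(. | e=1)."""
    return [(1.0 - delta) * a + delta * b for a, b in zip(p_no_eve, p_eve)]


def gain_and_error(p_bit0, p_bit1):
    """Gain and error probabilities averaged over a uniform bit choice.

    Each argument lists (P00, P01, P10, P11); first digit = D0, second = D1
    (assumed ordering), and bit x is assumed to be encoded by detector D_x.
    """
    gain0 = p_bit0[1] + p_bit0[2]  # single-click events for x = 0
    gain1 = p_bit1[1] + p_bit1[2]  # single-click events for x = 1
    err0 = p_bit0[1]  # only D1 clicked although x = 0
    err1 = p_bit1[2]  # only D0 clicked although x = 1
    return 0.5 * (gain0 + gain1), 0.5 * (err0 + err1)
```

The error rate for that configuration is then `error / gain`; for instance, `gain_and_error([0.5, 0.01, 0.4, 0.09], [0.5, 0.4, 0.01, 0.09])` gives a gain of 0.41 and an error probability of 0.01.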

2.4.4. Distribution of Gain and Error Counts

The number of gain clicks, , and error clicks, , for a specific basis alignment m and intensity are distributed as follows (assuming i.i.d. pulses):

where is the total number of pulses with basis alignment m and intensity . Here, and are not independent, as the number of error clicks is a subset of the number of gain clicks.

The number of error clicks can be viewed as resulting from sequential binomial sampling. First, the gain clicks are selected from with probability , then is selected from with probability (the conditional probability of an error given a signal). More formally,

To derive the conditional error probability , we apply Lemma 3, which can be rephrased in terms of two sequential binomial sampling processes. If we first sample , and then sample , then

This lemma shows that the effective success probability for sequential binomial sampling is the product of the individual probabilities in each stage. Applying this to , we have:
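The thinning property can be checked exactly for small N by summing over the intermediate count. The sketch below (hypothetical helper names and parameter values) compares the two-stage probability mass function with Binomial(N, pq) term by term.

```python
from math import comb


def binom_pmf(k, n, p):
    return comb(n, k) * p**k * (1.0 - p) ** (n - k)


def two_stage_pmf(r, n, p, q):
    """P(r) when s ~ Binomial(n, p) and then r | s ~ Binomial(s, q)."""
    return sum(binom_pmf(s, n, p) * binom_pmf(r, s, q) for s in range(r, n + 1))


n, p, q = 30, 0.3, 0.2
max_gap = max(
    abs(two_stage_pmf(r, n, p, q) - binom_pmf(r, n, p * q)) for r in range(n + 1)
)
```

`max_gap` is at the level of floating-point round-off, consistent with the effective success probability pq.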

We can apply the same principle of sequential binomial sampling to express the distributions of and directly in terms of N, the number of session pulses. Since results from first selecting with probability (the probability of basis alignment) and then selecting with probability (assuming uniform intensity selection), we can also express them as:

While and are sufficient for secure-key rate calculations, we also derive and to facilitate a robust comparison between theoretical expectations and simulated data. The following theorem provides these values, establishing a basis for evaluating the agreement of our model with empirical results.

Theorem 2.

Let r be a random variable obtained through a two-step sampling process where first and then, given s, . Define when . Then, the expected value and variance of ρ are given by:

where is the expected value of the reciprocal of s.

Proof.

First, compute the expected value using the law of total expectation [25]:

Given s, since and , we have:

therefore,

The variance can be expressed using the law of total variance [25]:

Given s, the conditional variance is:

Thus,

Since is constant,

Combining these results, we obtain:

□

Note that the derivation in Theorem 2 assumes , ensuring that is well-defined. For clarity and simplicity, this condition has been omitted from the main expressions, but all expectations and variances are implicitly conditioned on . Additionally, does not have a closed-form expression for ; however, for large , it can be approximated as:

This leads to an approximate variance:

This approximation holds well when N is large and p is not too small, rendering the probability negligible.
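Theorem 2 can be checked numerically by exact enumeration for a modest N. The sketch below (hypothetical helper names) computes E[1/s | s > 0] for s ~ Binomial(N, p), verifies E[ρ] = q and Var[ρ] = q(1 - q)E[1/s | s > 0], and compares E[1/s] with the leading-order approximation 1/(Np); the approximation used in the text may include further terms.

```python
import math


def binom_pmf(k, n, p):
    """Binomial pmf via log-gamma (avoids overflow for large n); assumes 0 < p < 1."""
    log_c = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
    return math.exp(log_c + k * math.log(p) + (n - k) * math.log1p(-p))


def inverse_moment(N, p):
    """E[1/s | s > 0] for s ~ Binomial(N, p)."""
    p_pos = 1.0 - (1.0 - p) ** N
    return sum(binom_pmf(s, N, p) / s for s in range(1, N + 1)) / p_pos


def ratio_moments(N, p, q):
    """Exact mean and variance of rho = r / s given s > 0, with r | s ~ Binomial(s, q)."""
    p_pos = 1.0 - (1.0 - p) ** N
    m1 = m2 = 0.0
    for s in range(1, N + 1):
        w_s = binom_pmf(s, N, p) / p_pos
        for r in range(s + 1):
            w = w_s * binom_pmf(r, s, q)
            m1 += w * r / s
            m2 += w * (r / s) ** 2
    return m1, m2 - m1 * m1
```

For N = 5000 and p = 0.2, `inverse_moment(5000, 0.2) * 5000 * 0.2` is within one percent of 1, illustrating why 1/(Np) is adequate when N is large and p is not too small.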

Applying this result to Equations (86) and (90), we obtain the expected value and variance of the error rate for a basis alignment m and intensity as follows:

Since , it is natural to model it with a Beta distribution. Using Equation (129), we derive parameters for a Beta distribution that match the expected value and variance in Equations (94) and (95). This allows us to approximate the distribution of the error rate as follows:

where and are calculated to ensure that the Beta distribution’s mean and variance align with Equations (94) and (95).
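Moment matching gives the Beta parameters in closed form: for mean μ and variance v, α + β = μ(1 - μ)/v - 1, α = μ(α + β), and β = (1 - μ)(α + β). If the mean and variance take the form of Theorem 2 (mean q, variance q(1 - q)E[1/s]), this yields α + β = 1/E[1/s] - 1. A minimal sketch with a hypothetical function name:

```python
def beta_from_moments(mean, var):
    """Method of moments: Beta(alpha, beta) with the given mean and variance."""
    if not (0.0 < mean < 1.0 and 0.0 < var < mean * (1.0 - mean)):
        raise ValueError("need 0 < mean < 1 and 0 < var < mean * (1 - mean)")
    nu = mean * (1.0 - mean) / var - 1.0  # nu = alpha + beta
    return mean * nu, (1.0 - mean) * nu
```

The variance condition var < mean(1 - mean) is required for a valid Beta distribution; it holds whenever E[1/s] < 1, i.e., whenever more than one gain click is expected.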

3. Bayesian Inference

In this section, we aim to infer the unknown parameters of the QKD system based on the observed detection events. Recall that the parameter set encompasses all aspects of the QKD system, where represents Alice’s parameters, represents Bob’s parameters, and captures Eve’s potential strategies. Let C be the vector of counts for each detection outcome:

where , for example, represents the count of events where , , and the bases did not match.

The objective is to infer the unknown parameters associated with Eve, specifically , based on observed data C and the known parameters and . Utilizing Bayes’ theorem, the posterior distribution of Eve’s parameters, given the observed data and known parameters, is formulated as:

where

where is the likelihood of the data given the parameters, and is the prior distribution of Eve’s parameters, with representing the hyper-parameters of these priors, and is the marginal likelihood (or evidence) that normalizes the posterior distribution.

3.1. Constructing the Likelihood

To apply Bayesian inference, we first need to construct the likelihood function that describes the probability of observing the data given the parameters of the system. This is formally presented in the following theorem:

Theorem 3.

Let Alice emit a Poisson-distributed number of photons with average intensities selected uniformly at random from a set of intensities . For each pulse, Alice randomly selects a basis with equal probability, and Bob independently measures in a randomly chosen basis , also with equal probability.

Let Eve be positioned at a distance from Alice, potentially intercepting a fraction Δ of the total transmission and capturing k photons from each intercepted pulse. Bob is positioned at a distance from Alice, with fiber attenuation α. Bob uses two detectors characterized by detection efficiencies and , dark count probabilities and , and a beam splitter with a misalignment error . The detection events are assumed to be independent.

Under these conditions, the vector of counts for the distinct detection outcomes—corresponding to each combination of intensity, basis choices, and detection results—is distributed according to a multinomial distribution:

where is the vector of probabilities for each detection outcome, derived from the set of system parameters as in Equation (45).
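Evaluating this multinomial log-likelihood is straightforward once the probability vector is available. A minimal sketch with a hypothetical function name, using log-gamma for the multinomial coefficient to avoid overflow for the very large counts of a QKD session:

```python
import math


def multinomial_log_likelihood(counts, probs):
    """log Multinomial(counts | sum(counts), probs), using log-gamma for the coefficient."""
    n = sum(counts)
    ll = math.lgamma(n + 1)
    for c, p in zip(counts, probs):
        if c == 0:
            continue  # contributes c*log(p) - log(c!) = 0, even when p == 0
        if p <= 0.0:
            return -math.inf  # observed an outcome the model deems impossible
        ll += c * math.log(p) - math.lgamma(c + 1)
    return ll
```

The same function applies unchanged when the i.i.d. probabilities are replaced by adjusted probabilities.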

HMM Likelihood

The likelihood of observing a specific sequence of detection events given state transition probabilities , emission probabilities , and initial state probabilities can be computed using the forward algorithm [19]:

where is the number of states and the forward probability at time t for state i, is recursively expressed as:

and the initial forward probability

The forward algorithm requires a number of operations proportional to N times the square of the number of states, which is impractical for the large N of a QKD session. Additionally, our primary interest lies not in the likelihood of a specific sequence of detection events but in the aggregated counts of detection outcomes over the session.
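For reference, the forward recursion can be sketched with per-step rescaling, a standard way to avoid numerical underflow over long sequences; the rescaling and the helper names are additions for illustration. Each step performs a number of operations proportional to the square of the number of states, which is the cost that motivates working with aggregated counts instead.

```python
import itertools
import math


def forward_log_likelihood(obs, T, E, init):
    """Scaled forward algorithm: log P(obs) for an HMM with transition matrix T
    (rows = current state), emission matrix E[state][symbol], initial probs init."""
    n = len(T)
    alpha = [init[i] * E[i][obs[0]] for i in range(n)]
    log_lik = 0.0
    for t in range(len(obs)):
        if t > 0:
            o = obs[t]
            alpha = [sum(alpha[i] * T[i][j] for i in range(n)) * E[j][o] for j in range(n)]
        scale = sum(alpha)
        if scale == 0.0:
            return -math.inf  # sequence impossible under the model
        log_lik += math.log(scale)
        alpha = [a / scale for a in alpha]  # rescale to avoid underflow
    return log_lik


def total_probability(length, T, E, init, n_symbols=2):
    """Sanity check: probabilities of all sequences of a given length sum to 1."""
    return sum(
        math.exp(forward_log_likelihood(list(seq), T, E, init))
        for seq in itertools.product(range(n_symbols), repeat=length)
    )
```

For any valid model, `total_probability(4, T, E, init)` should equal 1 up to rounding.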

To address this, we can use the long-term stationary distribution as the parameter for the multinomial distribution. Although individual detection events are dependent, the aggregated counts over a large number of trials can be modeled using a multinomial distribution with these adjusted probabilities.

Theorem 4.

Given a Hidden Markov Model (HMM) with an irreducible and aperiodic state transition matrix , emission probabilities , and initial state probabilities , where the system accounts for dependencies induced by after-pulsing, the likelihood function for the aggregated counts C over N trials is formulated as:

where represents the adjusted probabilities derived from the stationary distribution of the Markov chain, as in Equation (72).

By replacing the i.i.d. probabilities with the adjusted probabilities from the stationary distribution, we effectively account for the dependencies introduced by after-pulsing. This allows us to use the multinomial likelihood function for the aggregated counts, enabling accurate and computationally efficient inference of the system parameters.

3.2. Constructing the Prior

To construct a robust Bayesian inference framework, it is essential to carefully choose priors for the unknown parameters . The parameters represent Eve’s distance from Alice, the channel efficiency chosen by Eve, the number of photons intercepted per pulse, and the fraction of intercepted pulses, respectively.

3.2.1. Assumptions and Independence

To address the uncertainty surrounding Eve's strategy, we encode a bias towards worst-case scenarios while incorporating practical constraints. We assume that Eve's choices are independent of Alice's and Bob's configurations, allowing her to select parameters freely from the allowable domains. Although a strategic Eve might optimize her settings to maximize information gain based on Alice's and Bob's choices, modeling such behavior would introduce another exploitable vulnerability. Instead, we take a precautionary approach, treating Eve's parameters as independent, so that she cannot exploit any alignment with Alice's and Bob's configurations.

3.2.2. Priors for Bounded Parameters

The parameters , , and are all bounded within specific ranges:

Let be a bounded parameter in . For such a parameter, we use the Beta distribution, which is flexible in modeling probabilities on a finite range once the parameter is rescaled to the unit interval [0, 1]. Specifically, the prior for :

where are the hyper-parameters of the prior distribution, namely the shape parameters of the Beta distribution ( and ), as well as the upper and lower bound of ( and ). The scaling factor ensures proper normalization over the interval .
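A scaled Beta log-prior of this form can be sketched as follows. The function name is hypothetical; the density is the standard Beta density of the rescaled variable divided by the interval width, which is the change-of-variables factor that keeps it normalized on the original interval.

```python
import math


def scaled_beta_logpdf(theta, a, b, lo, hi):
    """Beta(a, b) prior on (theta - lo) / (hi - lo); returns the log density in theta."""
    if not lo < theta < hi:
        return -math.inf
    z = (theta - lo) / (hi - lo)
    log_beta_fn = math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)
    return ((a - 1.0) * math.log(z) + (b - 1.0) * math.log1p(-z)
            - log_beta_fn - math.log(hi - lo))  # last term: change of variables
```

With a = b = 1, the log density is -log(hi - lo) everywhere inside the interval, i.e., a uniform prior.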

3.2.3. Prior for Semi-Bounded Parameters

The parameter k, representing the number of photons intercepted per pulse, is semi-bounded with . In Theorem 1, we used the incomplete Gamma function to extend the domain of k to the positive real numbers. This extension allows us to treat k as a continuous variable, which is useful for gradient-based optimization, numerical integration, and more nuanced probabilistic modeling.

Given this extended domain, we employ a Gamma distribution for k, which is suitable for modeling continuous variables that are bounded below. Although k is the only semi-bounded parameter in this setting, we anticipate a fully Bayesian approach in which other fixed variables may also be treated as random variables, and therefore denote a generic semi-bounded parameter modeled with a Gamma prior as :

where are the hyper-parameters of the Gamma distribution. In the case of k, the distribution is shifted by to ensure alignment with the lower bound , reflecting that if Eve intercepts, she must capture at least one photon.

3.2.4. Selection of Prior Parameters

For the bounded parameters, setting in the Beta distribution yields a uniform distribution, reflecting maximal uncertainty within the given bounds. We apply this uniform distribution to parameters like , encoding no prior preference over Eve’s channel efficiency. However, to introduce a cautious bias against Eve’s activity, we set and , making more likely a priori. This choice implies a precautionary stance, assuming Eve’s presence unless data suggests otherwise.

For the distance parameter , we set the prior parameters in the Beta distribution oppositely, with and . This encodes the assumption that Eve is more likely to position herself closer to Alice, where the signal intensity is higher, minimizing the efficiency requirements for her alternative channel when intercepting photons.

For the semi-bounded parameter k, setting in the Gamma distribution places the mode of the distribution at . This choice encodes the assumption that, in the absence of data, Eve is most likely to capture the minimum number of photons from an intercepted pulse, which is 1. To provide practical constraints within this framework, we define a useful pseudo upper bound on k:

- : The maximum number of photons Eve can intercept from a pulse immediately emitted by Alice such that she would require a channel efficiency of to avoid detection.

This pseudo upper bound, , serves as a guideline for setting the rate parameter of the Gamma distribution. Specifically, is chosen such that the expected value lies halfway between 1 and , ensuring that the prior realistically constrains Eve’s capabilities while remaining theoretically unbounded.
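Assuming a shape parameter of 1 (so that the mode of the shifted prior sits at k = 1) and a rate chosen so that the prior mean lies halfway between 1 and the pseudo upper bound, the rate follows from 1 + shape/rate = (1 + k_max)/2. A minimal sketch with hypothetical function names, using the rate (not scale) parameterization:

```python
import math


def gamma_rate_for_kmax(k_max, shape=1.0):
    """Rate b such that the shifted prior mean 1 + shape / b equals (1 + k_max) / 2."""
    if k_max <= 1.0:
        raise ValueError("k_max must exceed 1")
    return 2.0 * shape / (k_max - 1.0)


def shifted_gamma_logpdf(k, shape, rate, shift=1.0):
    """Log density of k where k - shift ~ Gamma(shape, rate); evaluated for k > shift."""
    x = k - shift
    if x <= 0.0:
        return -math.inf  # boundary and below excluded in this sketch
    return (shape * math.log(rate) - math.lgamma(shape)
            + (shape - 1.0) * math.log(x) - rate * x)
```

For example, a pseudo upper bound of k_max = 9 gives a rate of 0.25 and a prior mean of 5 photons.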

3.2.5. Final Formulation of the Prior

Combining the priors for each parameter, the joint prior distribution over Eve’s parameters is the product of the individual Beta and Gamma priors introduced above. Its hyper-parameters are collected in a single set, which also stores the upper and lower bounds of each parameter, because these bounds are used later to map the parameters to an unbounded space when constructing the posterior.
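A minimal sketch of the joint log-prior, assuming the individual priors are independent so that the joint density factorizes into Beta terms for the bounded parameters and shifted Gamma terms for the semi-bounded ones. Every parameter name, bound, and hyper-parameter in `PRIORS` is a hypothetical placeholder chosen only to mirror the qualitative choices described above (a uniform prior, an asymmetric prior favoring Eve’s activity, the reversed asymmetry for distance, and a shape-1 Gamma for k); none are values from this work.

```python
import numpy as np
from scipy import stats

# Hypothetical prior specification: names, bounds and hyper-parameters are
# illustrative placeholders, NOT the values used in this work.
PRIORS = {
    "eta_eve":  {"kind": "beta",  "a": 1.0, "b": 1.0, "lower": 0.0, "upper": 1.0},
    "p_attack": {"kind": "beta",  "a": 2.0, "b": 1.0, "lower": 0.0, "upper": 1.0},
    "distance": {"kind": "beta",  "a": 1.0, "b": 2.0, "lower": 0.0, "upper": 1.0},
    "k":        {"kind": "gamma", "a": 1.0, "rate": 0.5, "lower": 1.0},
}


def log_prior(theta: dict, priors: dict = PRIORS) -> float:
    """Sum of independent log-priors; returns -inf outside any parameter's bounds."""
    total = 0.0
    for name, spec in priors.items():
        x = theta[name]
        if spec["kind"] == "beta":
            # Beta prior rescaled to [lower, upper] via SciPy's loc/scale.
            width = spec["upper"] - spec["lower"]
            total += stats.beta.logpdf(x, spec["a"], spec["b"],
                                       loc=spec["lower"], scale=width)
        else:
            # Gamma prior shifted to start at `lower`, with scale = 1 / rate.
            total += stats.gamma.logpdf(x, spec["a"], loc=spec["lower"],
                                        scale=1.0 / spec["rate"])
    return float(total)
```

Keeping the bounds next to the hyper-parameters, as described in the text, lets the same dictionary drive both the prior and the later bounded-to-unbounded transformation.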

3.3. Constructing the Posterior

With the likelihood and prior established, the final step is to compute the marginal likelihood (the evidence), as defined in Equation (99), in order to derive the posterior distribution of Eve’s parameters conditioned on the system parameters and the observed data.

However, due to the complexity of the likelihood function in our model, stemming from the multiple parameters and the interactions modeled within the QKD system, analytically computing this marginal is infeasible. Additionally, an analytical approach might restrict our choice of priors to conjugate priors, which could fail to accurately represent the underlying physics and real-world constraints of the problem.

Methods for Approximating the Posterior

Given the challenges in deriving the posterior analytically, we consider several computational methods. One common approach is Markov Chain Monte Carlo (MCMC) [27,28], which can handle complex, high-dimensional distributions. MCMC methods, such as Metropolis–Hastings [27,28] or Gibbs sampling [29], do not require the posterior to have a specific form, allowing flexible modeling with realistic priors that reflect the physical constraints of the QKD system. Although MCMC converges to the true posterior with sufficient samples, it can be computationally intensive and requires careful tuning and convergence checks [30,31].

Variational Bayes (VB) [25] approximates the posterior by optimizing a simpler, parameterized distribution so as to minimize the Kullback–Leibler (KL) divergence between it and the true posterior. This can be more efficient than MCMC, especially with large datasets, but it yields only an approximate posterior whose quality depends on the chosen family of distributions. In security-critical QKD applications, where assumptions about the posterior can introduce vulnerabilities, we prefer methods that let the data define the distribution.

Bayesian Quadrature could be used for low-dimensional parameter spaces by modeling the integrand as a Gaussian process [32,33]. Although it may require fewer evaluations than traditional quadrature, it assumes a smooth integrand and might therefore miss important features in security-critical scenarios. Consequently, like VB, Bayesian Quadrature is less suitable when avoiding assumptions is essential for security.

Given the complexity of our parameter space, we also considered gradient-based numerical integration methods such as Hamiltonian Monte Carlo (HMC) [34,35] and its adaptive variant, the No-U-Turn Sampler (NUTS) [36]. To support them, we computed gradients with respect to all variables via the incomplete Gamma function, which yields a likelihood that is continuous in all variables and enables methods that exploit gradient information to navigate the parameter space more efficiently [37].
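The text does not spell out how the incomplete Gamma function enters the likelihood. One standard route, assumed here purely for illustration, is the identity $P(N \le k) = Q(k + 1, \mu)$ for $N \sim \mathrm{Poisson}(\mu)$, where $Q$ is the regularized upper incomplete Gamma function (`scipy.special.gammaincc`). It reproduces the Poisson CDF at integer k, extends it smoothly to real-valued k, and has a closed-form derivative in $\mu$. The helper names are hypothetical.

```python
import numpy as np
from scipy import special, stats


def poisson_cdf_continuous(k, mu):
    """P(N <= k) for N ~ Poisson(mu), extended to real k >= 0.

    Uses P(N <= k) = Q(k + 1, mu), with Q the regularized upper incomplete
    Gamma function (scipy.special.gammaincc).
    """
    return special.gammaincc(np.asarray(k, dtype=float) + 1.0, mu)


def d_cdf_d_mu(k, mu):
    """Analytic derivative of Q(k + 1, mu) with respect to mu.

    dQ(a, x)/dx = -x**(a - 1) * exp(-x) / Gamma(a); for integer k this equals
    minus the Poisson pmf at k.
    """
    a = np.asarray(k, dtype=float) + 1.0
    return -np.exp((a - 1.0) * np.log(mu) - mu - special.gammaln(a))
```

The derivative with respect to k (the shape argument) has no simple closed form and would in practice be obtained numerically or by automatic differentiation.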

Ultimately, we chose Covariance-Adaptive Slice Sampling [38], which incorporates gradient-based adaptation while retaining the robustness of slice sampling. Because it has few hyper-parameters, it minimizes manual tuning and enhances reliability, and by adapting to the local covariance structure it explores high-dimensional parameter spaces efficiently. Our fully Bayesian framework, in which any parameter can be switched from fixed to random by assigning it a prior, maintains security guarantees in QKD systems by avoiding restrictive modeling assumptions [17].
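The covariance-adaptive variant [38] is too involved for a short example. As a simpler stand-in, the sketch below implements the classic univariate slice sampler with stepping-out and shrinkage (Neal, 2003), the basic update on which more elaborate slice-sampling schemes build. It targets any log-density on the real line, such as a log-posterior already mapped to an unbounded space. The function name and default settings are illustrative, not the authors’ implementation.

```python
import numpy as np


def slice_sample(log_density, x0, n_samples, width=1.0, max_steps=50, rng=None):
    """Univariate slice sampler with stepping-out and shrinkage (Neal, 2003)."""
    rng = np.random.default_rng() if rng is None else rng
    x = float(x0)
    logp_x = log_density(x)
    samples = np.empty(n_samples)
    for i in range(n_samples):
        # 1. Slice height in log space: log(u * p(x)) = log p(x) - Exp(1).
        log_y = logp_x - rng.exponential()
        # 2. Step out: place an interval of size `width` randomly around x and
        #    expand it until both ends lie outside the slice (step budget split randomly).
        left = x - width * rng.uniform()
        right = left + width
        j = int(rng.integers(max_steps))
        k = max_steps - 1 - j
        while j > 0 and log_density(left) > log_y:
            left -= width
            j -= 1
        while k > 0 and log_density(right) > log_y:
            right += width
            k -= 1
        # 3. Shrink: sample uniformly in [left, right], shrinking toward x on rejection.
        while True:
            x_new = rng.uniform(left, right)
            logp_new = log_density(x_new)
            if logp_new > log_y:
                break
            if x_new < x:
                left = x_new
            else:
                right = x_new
        x, logp_x = x_new, logp_new
        samples[i] = x
    return samples
```

For instance, `slice_sample(lambda x: -0.5 * x * x, 0.0, 5000)` draws from a standard normal. Multivariate use would apply this update coordinate-wise, which is exactly the behavior covariance-adaptive methods improve on for correlated parameters.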

3.4. From Bounded to Unbounded Variables

To perform effective Bayesian inference, especially with gradient-based numerical integration methods, it is crucial to transform the parameter space so that all variables are unbounded. However, this is not as simple as applying a transformation step during posterior computation: a nonlinear transformation stretches and compresses the space non-uniformly, effectively mapping the original distribution into a different space. To ensure that sampling remains consistent with the true posterior, the Probability Density Function (PDF) must be adjusted to account for these changes. This adjustment is achieved through the change-of-variables method, which ensures an accurate and mathematically consistent mapping between the original and transformed spaces.

3.4.1. Defining Transformations and Their Inverses

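To transform the parameters into an unbounded space and back, we use the sigmoid, $\sigma(x) = 1/(1 + e^{-x})$, and logit, $\mathrm{logit}(p) = \ln\big(p/(1-p)\big)$, transformations for the bounded parameters, and the exponential and logarithmic transformations for the semi-bounded parameters.

Let b be the set of bounded parameters modeled with a Beta prior, and g the set of semi-bounded parameters modeled with a Gamma prior. For each bounded parameter $\theta_i$, $i \in b$, we define the width $w_i = u_i - l_i$, where $u_i$ and $l_i$ are the parameter’s upper and lower bounds, respectively. The transformation to the unbounded space and its inverse are given by:

$$z_i = \mathrm{logit}\!\left(\frac{\theta_i - l_i}{w_i}\right), \qquad \theta_i = l_i + w_i\,\sigma(z_i), \qquad i \in b,$$

and, for the semi-bounded parameters with lower bound $l_i$,

$$z_i = \ln(\theta_i - l_i), \qquad \theta_i = l_i + e^{z_i}, \qquad i \in g.$$

More generally, let

$$T:\ \boldsymbol{\theta} \mapsto \mathbf{z}, \qquad T^{-1}:\ \mathbf{z} \mapsto \boldsymbol{\theta}$$

be the two mapping functions that transform the variables between the original and the unbounded spaces. Applying these transformations defines the new set of transformed variables $\mathbf{z} = T(\boldsymbol{\theta})$, where each $z_i$ now lies in the real, unbounded space $\mathbb{R}$.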
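A minimal vectorized sketch of $T$ and $T^{-1}$, assuming a logit/sigmoid map on $[l_i, u_i]$ for bounded parameters and a shifted log/exp map for semi-bounded ones. The function names and the boolean mask `is_bounded` are hypothetical conveniences; for semi-bounded entries the upper bound is simply ignored.

```python
import numpy as np
from scipy.special import expit, logit


def to_unbounded(theta, lower, upper, is_bounded):
    """T: logit((theta - l) / w) for bounded entries, log(theta - l) otherwise."""
    theta = np.asarray(theta, dtype=float)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    b = np.asarray(is_bounded, dtype=bool)
    z = np.empty_like(theta)
    z[b] = logit((theta[b] - lower[b]) / (upper[b] - lower[b]))
    z[~b] = np.log(theta[~b] - lower[~b])
    return z


def to_bounded(z, lower, upper, is_bounded):
    """T^{-1}: l + w * sigmoid(z) for bounded entries, l + exp(z) otherwise."""
    z = np.asarray(z, dtype=float)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    b = np.asarray(is_bounded, dtype=bool)
    theta = np.empty_like(z)
    theta[b] = lower[b] + (upper[b] - lower[b]) * expit(z[b])
    theta[~b] = lower[~b] + np.exp(z[~b])
    return theta
```

Any real $\mathbf{z}$ maps back inside the original bounds, so a sampler working in $\mathbf{z}$ never proposes invalid parameter values.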

3.4.2. Jacobian of the Transformation

When transforming the variables of a probability distribution, it is essential to update the PDF as well. The original PDF is defined over the original variable space, and a transformation changes the volume element in this space: it can stretch, compress, or otherwise distort the space, altering the relative spacing between points.

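To ensure that the transformed PDF correctly reflects the distribution of probability over the new variable space, we must incorporate the Jacobian determinant of the transformation, which represents the factor by which the volume element changes. Mathematically, for a one-dimensional transformation $z = T(\theta)$ applied to a variable with original PDF $p_\theta(\theta)$, the transformed PDF is given by [19]:

$$p_z(z) = p_\theta\big(T^{-1}(z)\big)\left|\frac{\mathrm{d}T^{-1}(z)}{\mathrm{d}z}\right|.$$

This ensures that the total probability remains normalized to one after the transformation, preserving the properties of a probability distribution. For multidimensional distributions, it generalizes to the determinant of the Jacobian matrix of the transformation from $\mathbf{z}$ back to $\boldsymbol{\theta}$:

$$p_{\mathbf{z}}(\mathbf{z}) = p_{\boldsymbol{\theta}}\big(T^{-1}(\mathbf{z})\big)\,\big|\det \mathbf{J}(\mathbf{z})\big|, \qquad J_{ij} = \frac{\partial \theta_i}{\partial z_j}.$$

The individual partial derivatives, obtained from the inverse transformations $\theta_i = l_i + w_i\,\sigma(z_i)$ for $i \in b$ and $\theta_i = l_i + e^{z_i}$ for $i \in g$ (with $l_i$ the lower bound and $w_i$ the width of parameter i), are:

$$\frac{\partial \theta_i}{\partial z_i} = w_i\,\sigma(z_i)\big(1 - \sigma(z_i)\big), \quad i \in b, \qquad \frac{\partial \theta_i}{\partial z_i} = e^{z_i}, \quad i \in g.$$

Since each $\theta_i$ depends only on its own $z_i$, we have $\partial \theta_i / \partial z_j = 0$ for $i \neq j$, so the Jacobian matrix is diagonal with positive entries, and its determinant, $|\det \mathbf{J}|$, can be expressed as:

$$|\det \mathbf{J}(\mathbf{z})| = \prod_{i \in b} w_i\,\sigma(z_i)\big(1 - \sigma(z_i)\big) \prod_{i \in g} e^{z_i}.$$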
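Because the Jacobian is diagonal, its log-determinant reduces to a sum, which is the form a sampler would add to the log-posterior. The sketch below, assuming the sigmoid and exponential inverse maps above, computes this sum and checks it against a central finite-difference Jacobian evaluated with `numpy.linalg.slogdet`. All function names are hypothetical; `lower` and `width` are NumPy arrays, and `width` is ignored for semi-bounded entries.

```python
import numpy as np
from scipy.special import expit


def inverse_transform(z, lower, width, is_bounded):
    """T^{-1}: l + w * sigmoid(z) (bounded) or l + exp(z) (semi-bounded)."""
    z = np.asarray(z, dtype=float)
    b = np.asarray(is_bounded, dtype=bool)
    theta = np.empty_like(z)
    theta[b] = lower[b] + width[b] * expit(z[b])
    theta[~b] = lower[~b] + np.exp(z[~b])
    return theta


def log_abs_det_jacobian(z, width, is_bounded):
    """log|det J| = sum_b log(w * s * (1 - s)) + sum_g z, with s = sigmoid(z)."""
    z = np.asarray(z, dtype=float)
    b = np.asarray(is_bounded, dtype=bool)
    s = expit(z[b])
    return float(np.sum(np.log(width[b] * s * (1.0 - s))) + np.sum(z[~b]))


def numerical_log_abs_det(f, z, eps=1e-6):
    """Central finite-difference Jacobian of f at z, then log|det| via slogdet."""
    z = np.asarray(z, dtype=float)
    jac = np.empty((z.size, z.size))
    for j in range(z.size):
        dz = np.zeros_like(z)
        dz[j] = eps
        jac[:, j] = (f(z + dz) - f(z - dz)) / (2.0 * eps)
    _, logdet = np.linalg.slogdet(jac)
    return logdet
```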

3.4.3. Updating the Posterior with the Jacobian

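The posterior distribution with respect to the transformed variables is obtained by incorporating the Jacobian determinant to adjust for the change of variables:

$$p(\mathbf{z} \mid \mathcal{D}) \propto p\big(\mathcal{D} \mid T^{-1}(\mathbf{z})\big)\, p\big(T^{-1}(\mathbf{z})\big)\, \big|\det \mathbf{J}(\mathbf{z})\big|,$$

where $\mathcal{D}$ denotes the observed data (the conditioning on the system parameters is left implicit), $p(\mathcal{D} \mid \boldsymbol{\theta})$ is the likelihood as derived in Theorem 3, and $p(\boldsymbol{\theta})$ is the prior as in Equation (110), both evaluated at $\boldsymbol{\theta} = T^{-1}(\mathbf{z})$.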
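A one-dimensional numerical check of why the Jacobian term is needed, using a toy density rather than the QKD posterior: a hypothetical Beta(2, 5) density rescaled to $[0.1, 0.9]$ stands in for $p(\boldsymbol{\theta} \mid \mathcal{D})$. With the log-Jacobian added, the density in z integrates to one; without it, the integral is $\mathbb{E}[1/(w S(1-S))] = B(1,4)/(0.8\,B(2,5)) = 9.375$ for $S \sim \mathrm{Beta}(2,5)$, so the uncorrected function is not even a valid density.

```python
import numpy as np
from scipy import integrate, stats
from scipy.special import expit


def log_sigmoid_jacobian(z):
    """log[sigmoid(z) * (1 - sigmoid(z))] in a numerically stable form."""
    a = np.abs(z)
    return -a - 2.0 * np.log1p(np.exp(-a))


def transformed_log_density(z, log_density_theta, lower, width):
    """log p_z(z) for theta = lower + width * sigmoid(z), Jacobian included."""
    theta = lower + width * expit(z)
    return log_density_theta(theta) + np.log(width) + log_sigmoid_jacobian(z)


# Toy stand-in for a bounded posterior: Beta(2, 5) rescaled to [0.1, 0.9].
lower, width = 0.1, 0.8
log_p = lambda th: stats.beta.logpdf(th, 2.0, 5.0, loc=lower, scale=width)

with_jac, _ = integrate.quad(
    lambda z: np.exp(transformed_log_density(z, log_p, lower, width)), -np.inf, np.inf)
without_jac, _ = integrate.quad(
    lambda z: np.exp(log_p(lower + width * expit(z))), -np.inf, np.inf)
```

In a full model, `log_density_theta` would be the log-likelihood plus the log-prior, and the same Jacobian term would be added for every transformed parameter.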

3.5. From Fixed to Random Variables

Until now, our modeling approach has assumed that the parameters governing our system are fixed and known, which leads to treating detection events as independent and identically distributed (i.i.d.). Under the i.i.d. framework, each detection event is considered independent, and the probability distribution governing these events remains constant over time. However, the assumption of identically distributed data does not reflect the inherent stochasticity of real-world QKD systems, where quantities such as the intensity of the laser source or the detector efficiencies may vary due to external conditions or device imperfections.

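By moving from a fixed-parameter model to a Bayesian framework, we allow these parameters to be treated as random variables. This shift introduces prior distributions over the parameters, which are updated as more data become available, reflecting their actual variability. As an example, consider the intensity of the laser source, denoted here by $\mu$. Instead of assuming that $\mu$ is fixed and known, we can model it as a random variable with a Gamma prior, $\mu \sim \mathrm{Gamma}(\alpha_\mu, \beta_\mu)$, where $\alpha_\mu$ and $\beta_\mu$ are the shape and rate parameters of the Gamma distribution, respectively.

To illustrate, suppose the laser’s intensity has an expected value $\mathbb{E}[\mu]$ and a variance $\mathrm{Var}[\mu]$ based on the laser’s specifications. We can set the parameters of the Gamma prior to match these moments [25]:

$$\mathbb{E}[\mu] = \frac{\alpha_\mu}{\beta_\mu}, \qquad \mathrm{Var}[\mu] = \frac{\alpha_\mu}{\beta_\mu^{2}}.$$

Solving these equations for $\alpha_\mu$ and $\beta_\mu$ gives:

$$\alpha_\mu = \frac{\mathbb{E}[\mu]^2}{\mathrm{Var}[\mu]}, \qquad \beta_\mu = \frac{\mathbb{E}[\mu]}{\mathrm{Var}[\mu]}.$$

This formulation provides a prior distribution for $\mu$ that reflects both its expected value and the uncertainty due to its variability, allowing our model to capture the stochastic nature of the laser intensity. Similarly, a Beta distribution can be used as a prior for the detector efficiency, denoted here by $\eta$.

Applying the same method to the Beta distribution, whose mean and variance are $\mathbb{E}[\eta] = \alpha_\eta/(\alpha_\eta + \beta_\eta)$ and $\mathrm{Var}[\eta] = \alpha_\eta\beta_\eta/\big[(\alpha_\eta + \beta_\eta)^2(\alpha_\eta + \beta_\eta + 1)\big]$, the parameters $\alpha_\eta$ and $\beta_\eta$ can be derived from the expected value and variance as [25]:

$$\alpha_\eta = \mathbb{E}[\eta]\left(\frac{\mathbb{E}[\eta]\big(1 - \mathbb{E}[\eta]\big)}{\mathrm{Var}[\eta]} - 1\right), \qquad \beta_\eta = \big(1 - \mathbb{E}[\eta]\big)\left(\frac{\mathbb{E}[\eta]\big(1 - \mathbb{E}[\eta]\big)}{\mathrm{Var}[\eta]} - 1\right),$$

which requires $\mathrm{Var}[\eta] < \mathbb{E}[\eta]\big(1 - \mathbb{E}[\eta]\big)$.

By setting these parameters, we construct a Beta prior for $\eta$ that reflects both the expected efficiency and the uncertainty inherent in the detector’s performance.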
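The two moment-matching rules translate directly into code. In the sketch below, the helper names and the example moments (an intensity with mean 0.5 and variance 0.01, an efficiency with mean 0.1 and variance $10^{-4}$) are hypothetical, not the specifications of the devices considered here. The SciPy distributions built from the returned parameters reproduce the requested moments.

```python
from scipy import stats


def gamma_from_moments(mean: float, var: float):
    """Shape and rate of a Gamma with the given mean (a/b) and variance (a/b**2)."""
    if mean <= 0 or var <= 0:
        raise ValueError("mean and variance must be positive")
    return mean**2 / var, mean / var


def beta_from_moments(mean: float, var: float):
    """Shape parameters of a Beta with the given mean and variance."""
    if not 0 < mean < 1 or not 0 < var < mean * (1 - mean):
        raise ValueError("need 0 < mean < 1 and 0 < var < mean * (1 - mean)")
    common = mean * (1 - mean) / var - 1  # equals alpha + beta
    return mean * common, (1 - mean) * common


# Hypothetical device specifications, for illustration only.
a_mu, b_mu = gamma_from_moments(0.5, 0.01)    # -> approximately (25, 50)
a_eta, b_eta = beta_from_moments(0.1, 1e-4)   # -> approximately (89.9, 809.1)
intensity_prior = stats.gamma(a_mu, scale=1.0 / b_mu)  # SciPy uses scale = 1/rate
efficiency_prior = stats.beta(a_eta, b_eta)
```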

By adopting this approach, we move along a spectrum from deterministic models with fixed parameters to fully Bayesian models that account for uncertainty in all parameters. At one extreme, a fixed parameter can be viewed as having a Dirac delta function as its prior [25], reflecting complete certainty about its value. At the other extreme are models such as those used for Eve’s parameters, whose priors are non-informative or represent maximal uncertainty. In between, partially informative priors, such as those for the laser intensity and the detector efficiency, provide a balanced representation that combines prior knowledge with observed data.

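An alternative approach is to reformulate the likelihood by integrating over the prior distribution of the parameter, thereby accounting for its uncertainty directly in the likelihood function. For example, for a laser whose intensity $\mu$ follows a Gamma prior with shape $\alpha_\mu$ and rate $\beta_\mu$, the distribution of the photon number n becomes:

$$P(n \mid \alpha_\mu, \beta_\mu) = \int_0^\infty \frac{\mu^n e^{-\mu}}{n!}\,\frac{\beta_\mu^{\alpha_\mu}}{\Gamma(\alpha_\mu)}\,\mu^{\alpha_\mu - 1} e^{-\beta_\mu \mu}\,\mathrm{d}\mu.$$

This mixture is known to follow a negative binomial distribution with parameters $r = \alpha_\mu$ (number of successes) and $q = \beta_\mu/(1 + \beta_\mu)$ (success probability) [39], or more precisely:

$$P(n \mid \alpha_\mu, \beta_\mu) = \frac{\Gamma(n + \alpha_\mu)}{n!\,\Gamma(\alpha_\mu)}\left(\frac{\beta_\mu}{1 + \beta_\mu}\right)^{\alpha_\mu}\left(\frac{1}{1 + \beta_\mu}\right)^{n}.$$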
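The Gamma–Poisson identity can be checked numerically: integrating the Poisson photon-number distribution against the Gamma prior with `scipy.integrate.quad` should match SciPy’s negative binomial, whose `nbinom(n=r, p=q)` parameterization counts failures before the r-th success. The shape and rate values in the check are arbitrary illustrative choices, and the function names are hypothetical.

```python
import numpy as np
from scipy import integrate, stats


def gamma_poisson_pmf_numeric(n, shape, rate):
    """Integrate Poisson(n | mu) * Gamma(mu | shape, rate) over mu in [0, inf)."""
    integrand = lambda mu: stats.poisson.pmf(n, mu) * stats.gamma.pdf(mu, shape, scale=1.0 / rate)
    value, _ = integrate.quad(integrand, 0.0, np.inf)
    return value


def negative_binomial_pmf(n, shape, rate):
    """Closed form: NB with r = shape and success probability q = rate / (1 + rate)."""
    return stats.nbinom.pmf(n, shape, rate / (1.0 + rate))
```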

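A similar approach can be applied to the parameter p of a binomial distribution. If p is a random variable following a Beta distribution, $p \sim \mathrm{Beta}(\alpha, \beta)$, the probability of observing k successes in n trials with a variable success probability p can be expressed as:

$$P(k \mid n, \alpha, \beta) = \int_0^1 \binom{n}{k} p^k (1 - p)^{n - k}\,\frac{p^{\alpha - 1}(1 - p)^{\beta - 1}}{B(\alpha, \beta)}\,\mathrm{d}p.$$

This scenario is modeled by a Beta-Binomial distribution [25], specifically:

$$P(k \mid n, \alpha, \beta) = \binom{n}{k}\frac{B(k + \alpha,\, n - k + \beta)}{B(\alpha, \beta)},$$

where $B(\cdot, \cdot)$ denotes the Beta function:

$$B(x, y) = \int_0^1 t^{x - 1}(1 - t)^{y - 1}\,\mathrm{d}t = \frac{\Gamma(x)\,\Gamma(y)}{\Gamma(x + y)}.$$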
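The same kind of check applies to the Beta-Binomial form: the closed-form pmf, computed in log space with `scipy.special.betaln` and `gammaln` to avoid overflow, agrees with direct numerical integration of the binomial likelihood against the Beta prior and with `scipy.stats.betabinom`. The values n = 20, α = 2, β = 3 used in the check are arbitrary, and the helper names are hypothetical.

```python
import numpy as np
from scipy import integrate, special, stats


def beta_binomial_pmf(k, n, a, b):
    """C(n, k) * B(k + a, n - k + b) / B(a, b), evaluated in log space."""
    log_pmf = (special.gammaln(n + 1) - special.gammaln(k + 1) - special.gammaln(n - k + 1)
               + special.betaln(k + a, n - k + b) - special.betaln(a, b))
    return np.exp(log_pmf)


def beta_binomial_pmf_numeric(k, n, a, b):
    """Integrate Binomial(k | n, p) * Beta(p | a, b) over p in [0, 1]."""
    integrand = lambda p: stats.binom.pmf(k, n, p) * stats.beta.pdf(p, a, b)
    value, _ = integrate.quad(integrand, 0.0, 1.0)
    return value
```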

Modeling the photon distribution of the laser and the detector’s efficiency in this manner accounts for the uncertainty in the device parameters. However, while this approach is mathematically elegant and can simplify calculations under certain conditions, it constrains the choice of priors and necessitates a comprehensive reformulation of the likelihood function. By contrast, the first approach—placing priors directly on the parameters and updating them through the posterior—offers greater flexibility and allows for more nuanced modeling of the actual dynamics of the physical system. This flexibility is particularly crucial in security-critical applications like QKD, where the accurate modeling of all system components is essential to ensure robustness against potential attacks [40].

With this framework in place, we have moved beyond the assumption of identically distributed detection events by treating key parameters as random variables with their own distributions.

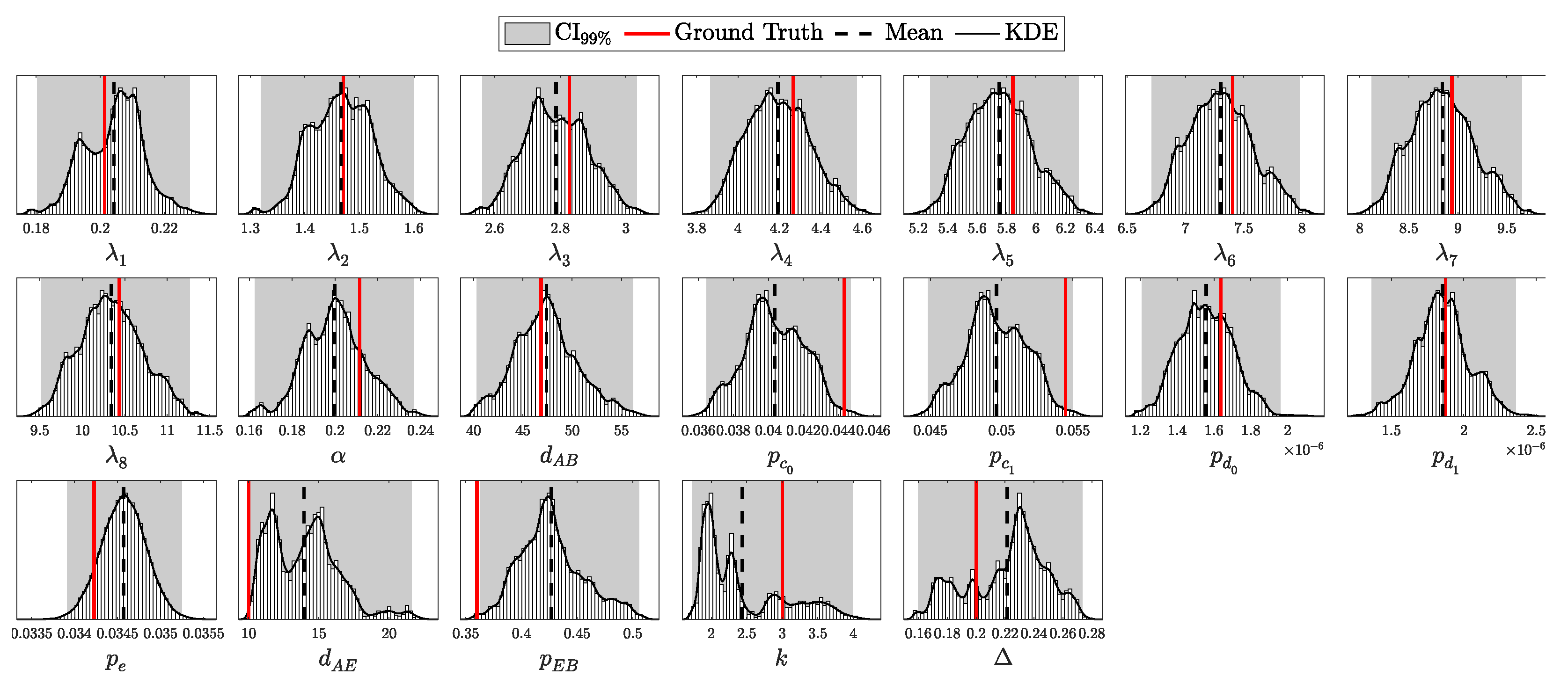

4. Experimental Results

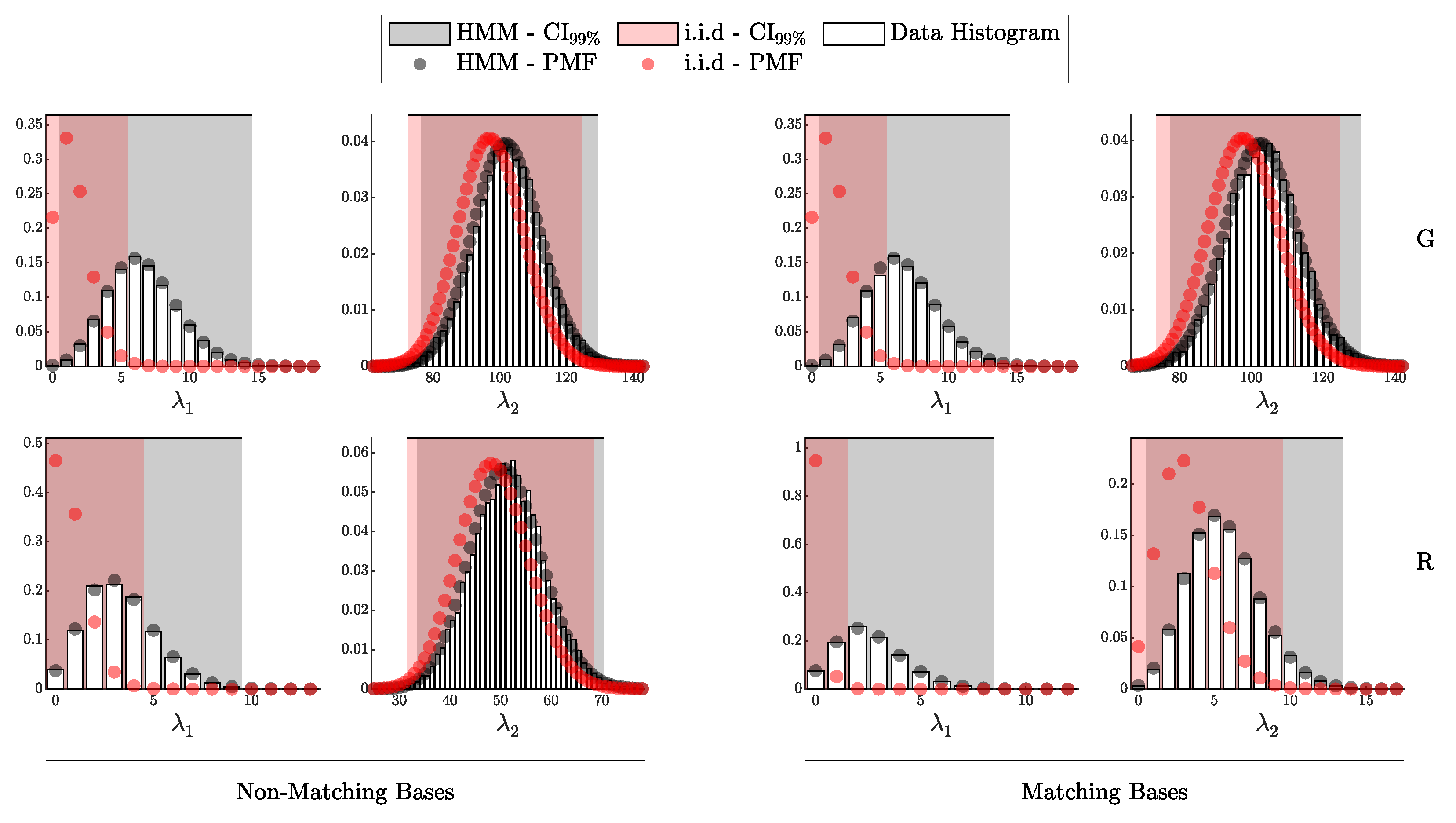

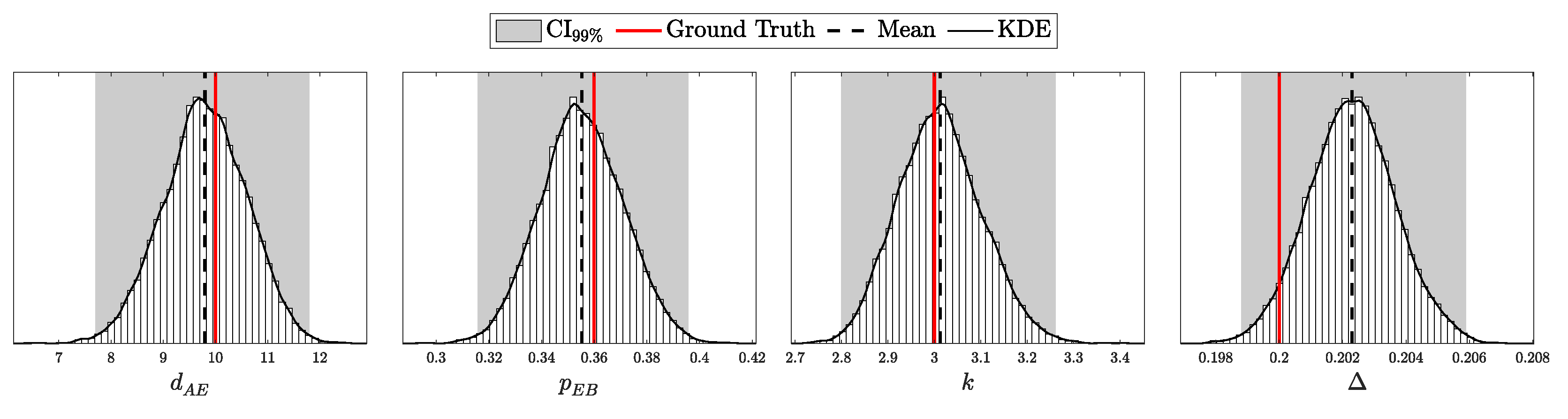

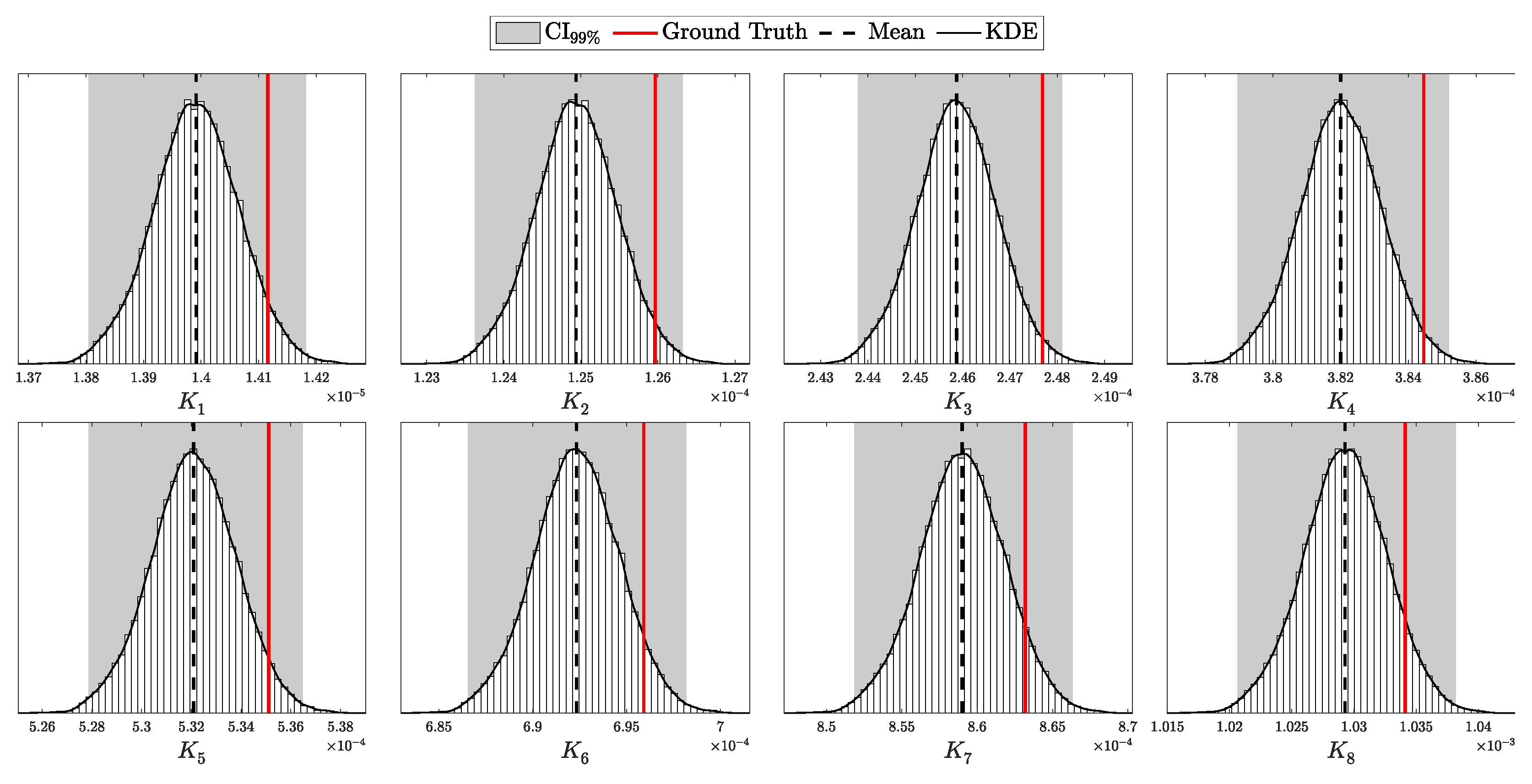

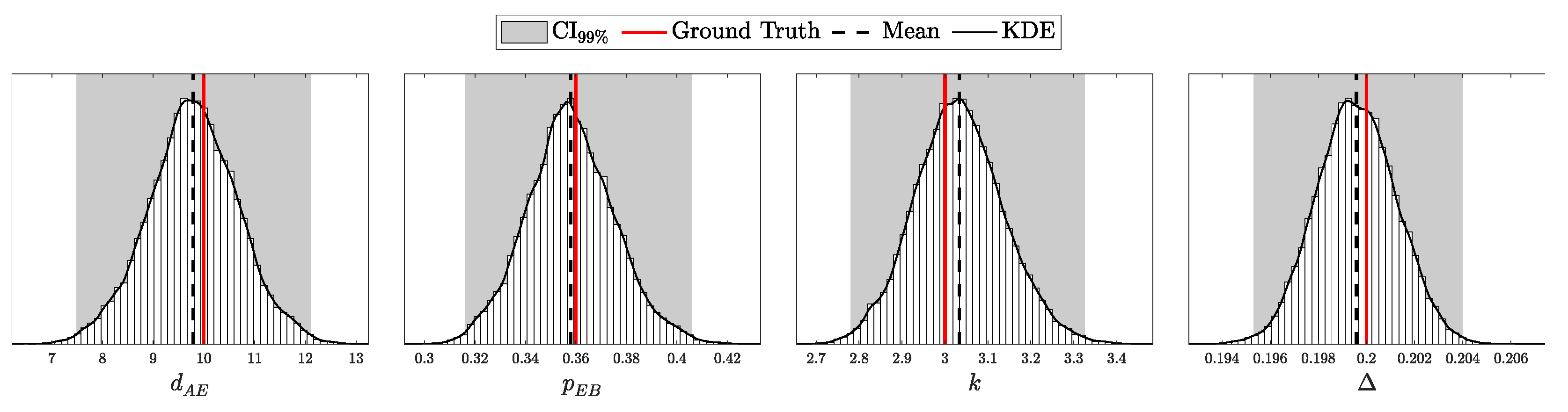

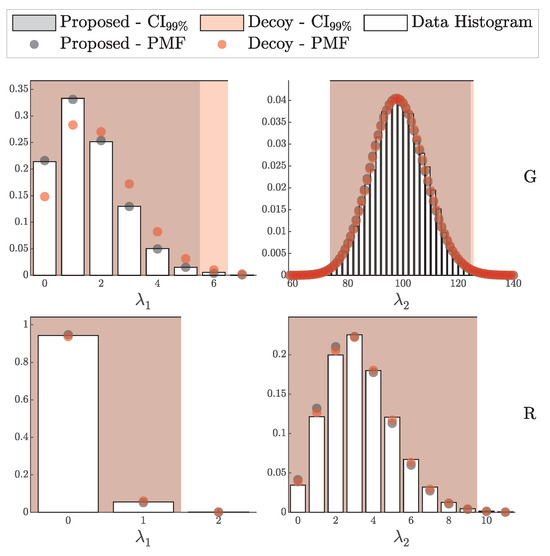

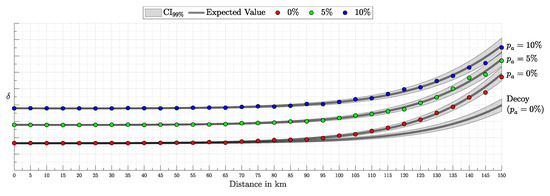

This section presents the validation and performance assessment of the proposed probabilistic framework through a series of simulations and comparisons with the decoy-state protocol. First, we test the accuracy of the probabilistic model (both i.i.d. and HMM) in predicting detection events and errors. Next, we evaluate the error modeling and its alignment with simulated results. The third subsection focuses on the secure key generation rate, highlighting improvements over the decoy-state approach. Finally, we explore the advantages of the Bayesian inference framework, demonstrating its robustness under after-pulsing scenarios (via HMM) and noisy conditions, emphasizing the superiority of the fully Bayesian approach.

4.1. Validation Through Simulation

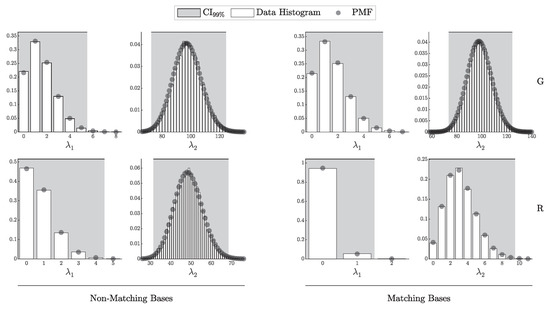

This subsection assesses how well the theoretical models developed in this work fit the data generated by simulations, that is, the extent to which they capture the observed behavior under both the i.i.d. and the Hidden Markov Model (HMM) conditions.

The first subsection focuses on validating the i.i.d. model as formalized in Theorem 3, using data generated by the simulation algorithm described in Algorithm 1. The second subsection examines the HMM model, as formulated in Theorem 4, with data produced according to Algorithm 2. By examining these models in turn, we determine the degree to which each theoretical framework explains the simulated detection events.

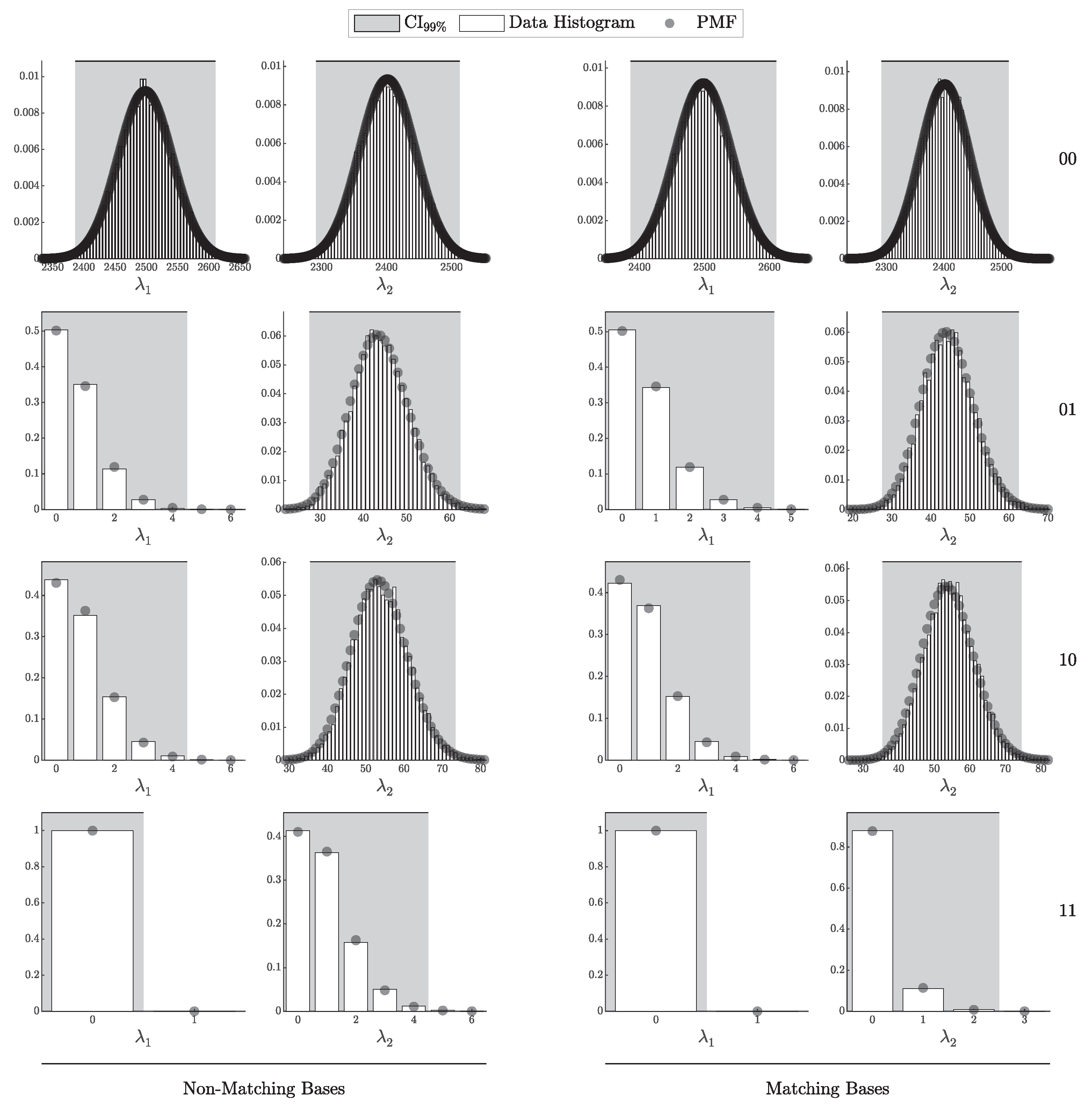

To model realistic QKD conditions, we adopted the Gobby–Yuan–Shields (GYS) configuration [41], which provides a parameterization suitable for real-world applications and a foundation for the later comparison with the decoy-state protocol [8]. The main simulation parameters are listed in Table 1. Additionally, we introduced variability in the detector-specific parameters to test asymmetric scenarios, as shown in Table 2. For the i.i.d. test, the after-pulse probability was set to 0. The intensities selected for this experiment correspond to the minimum and maximum values described in Section 2.2.5. Each simulation was run 10,000 times with 10,000 pulses per run, providing sufficient data for a comprehensive validation of the theoretical predictions.

Algorithm 1.

Simulation of QKD detection events under the i.i.d. assumption.

Algorithm 2.

Simulation of QKD detection events under the HMM assumption.

Table 1.

Parameter configuration for the simulation experiment, grouped into Alice’s parameters, Bob’s parameters, Eve’s parameters, and session parameters.

Table 2.

Detector-specific values of Bob’s parameters: after-pulse probability, detection efficiency, and dark-count probability for each of his two detectors.

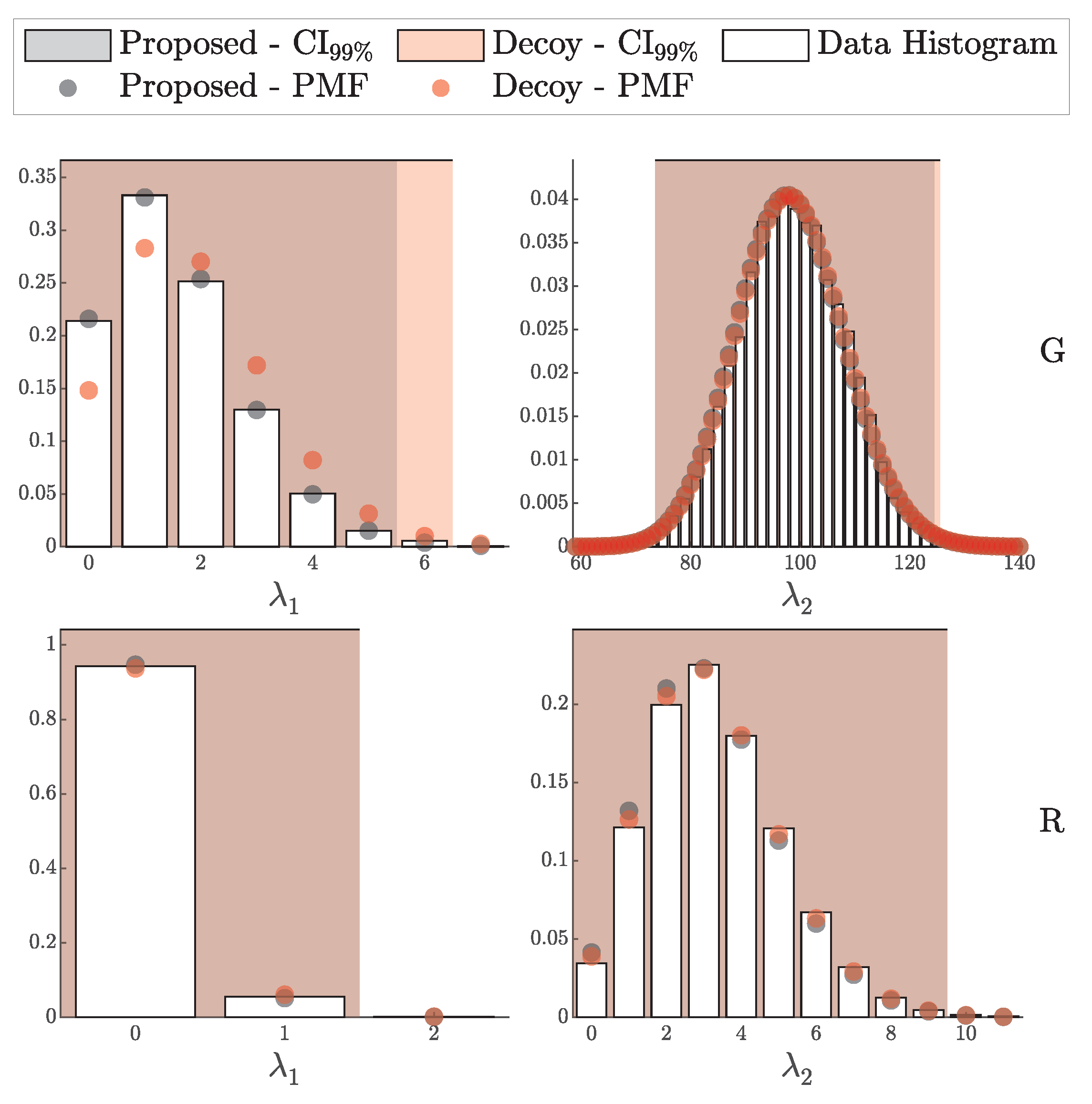

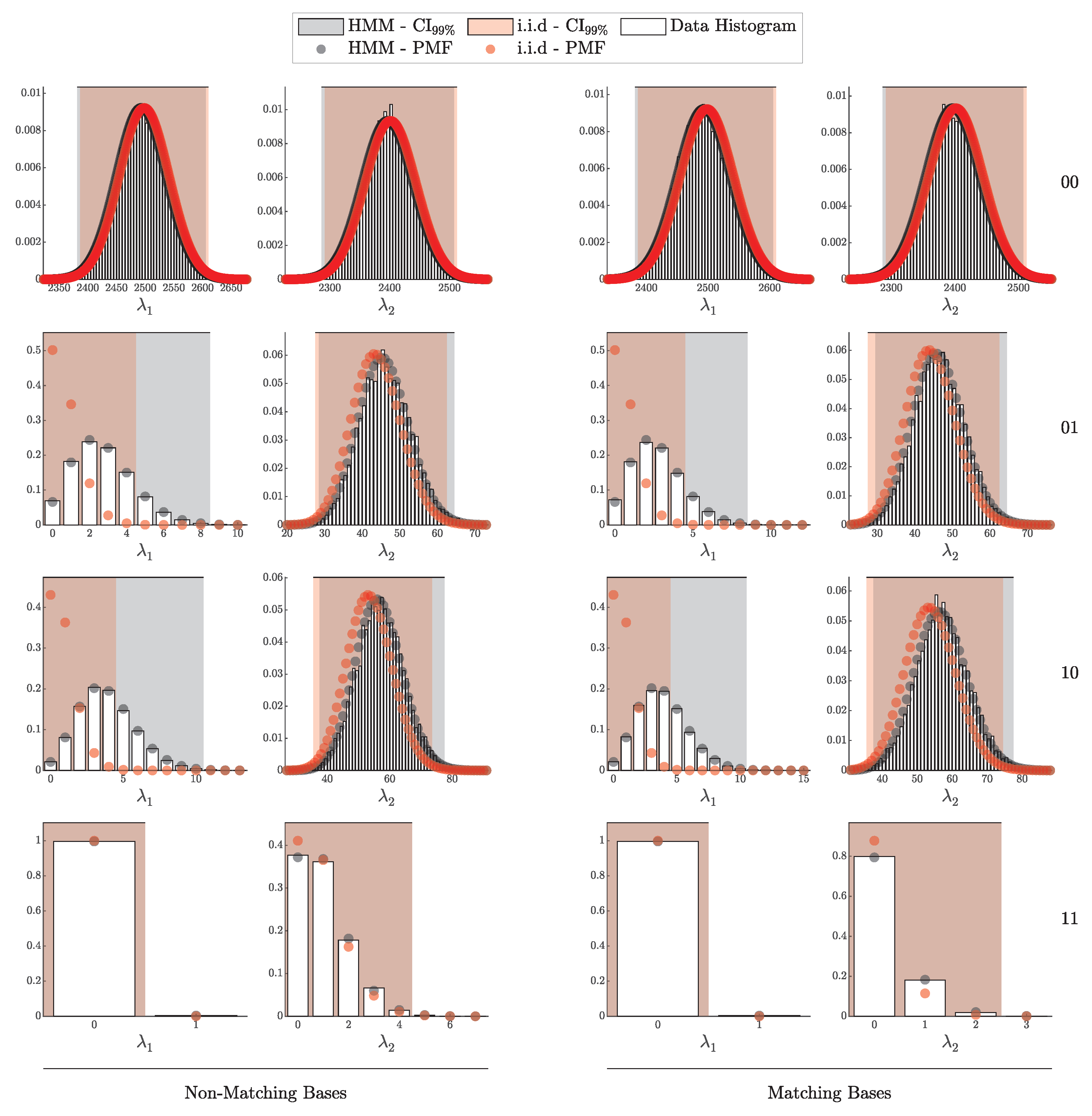

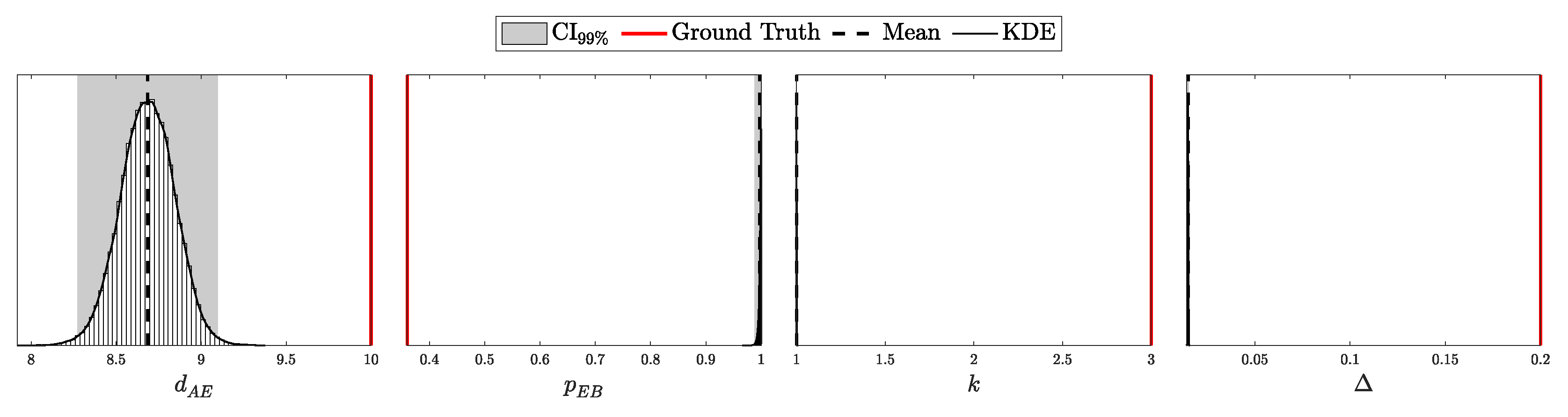

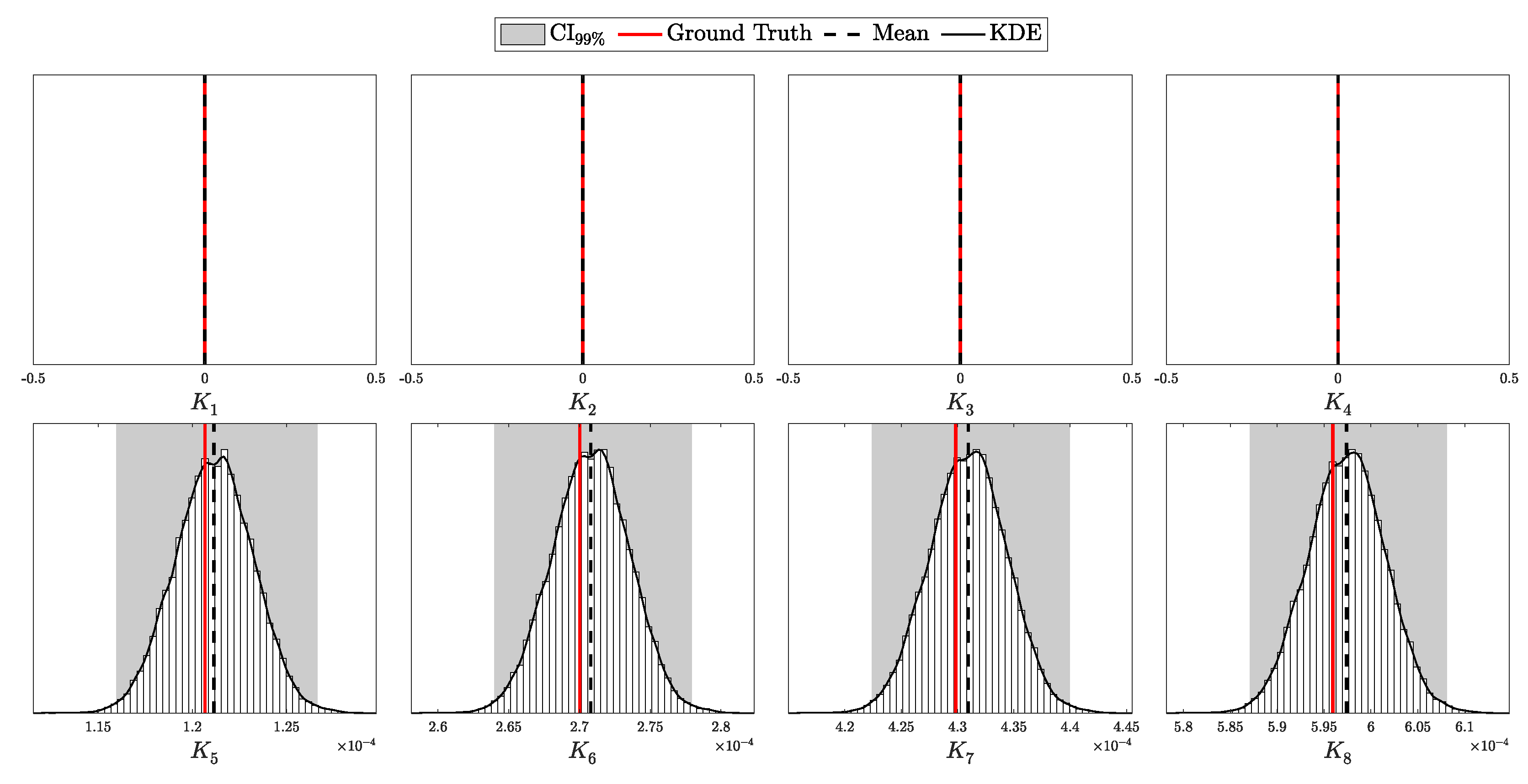

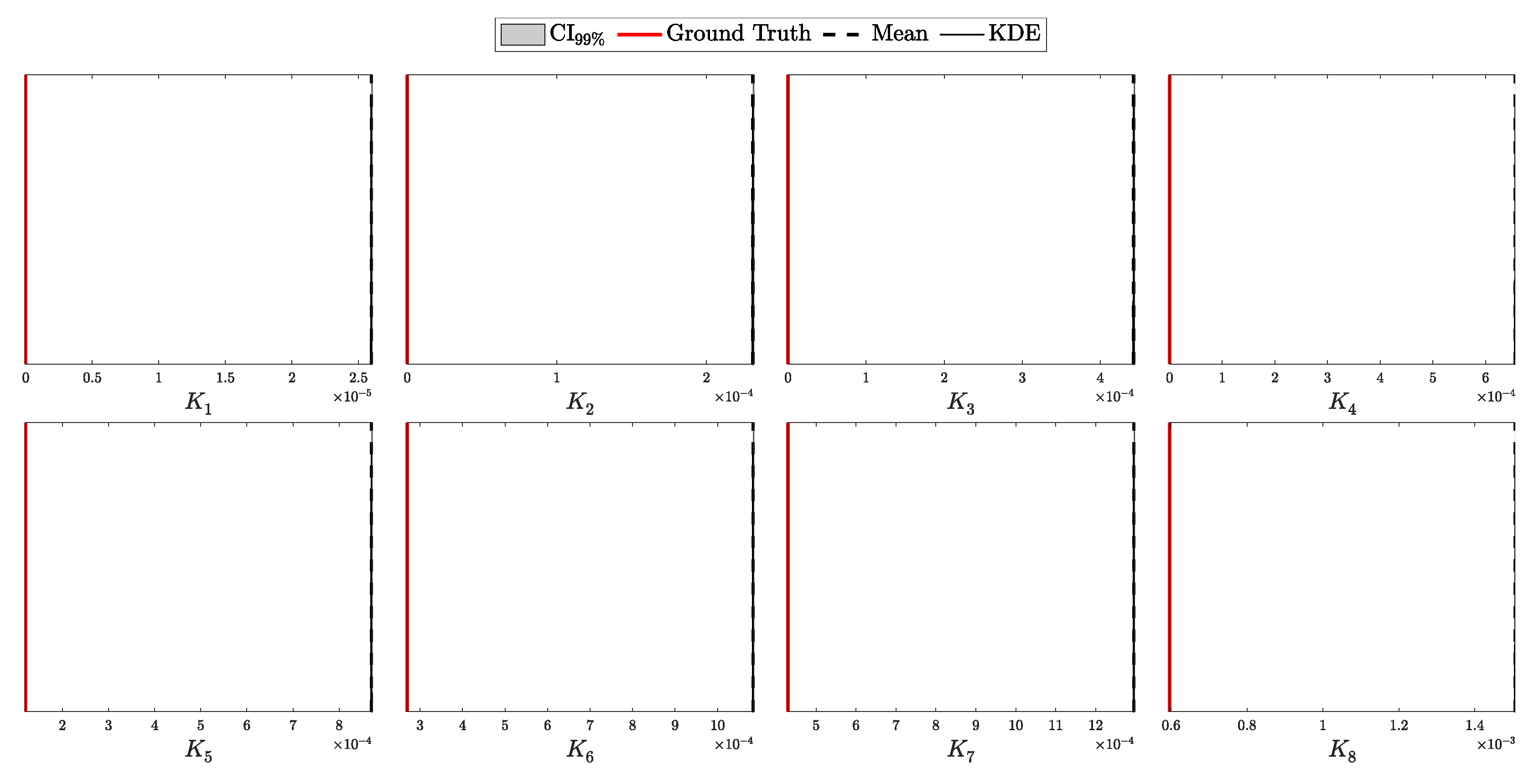

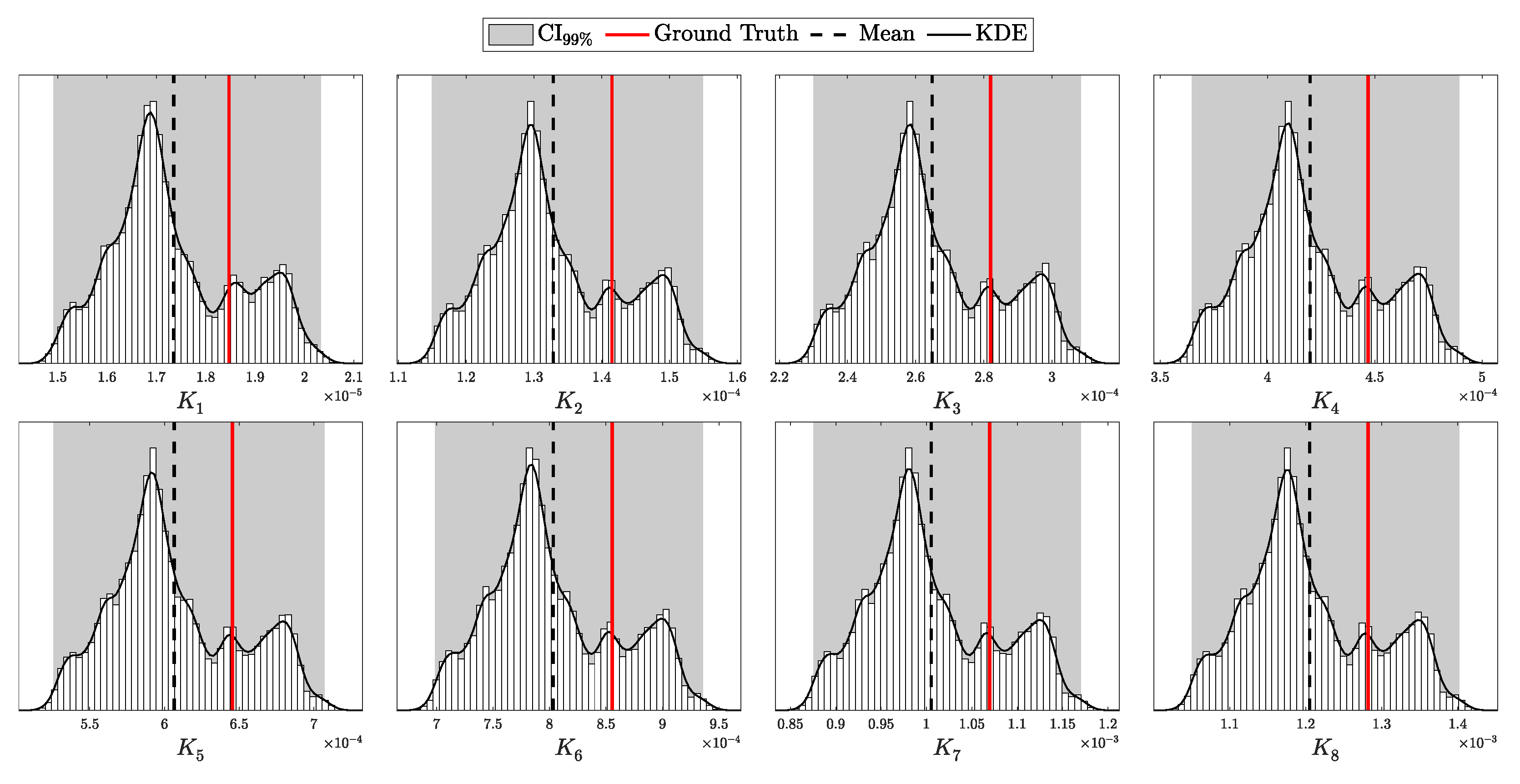

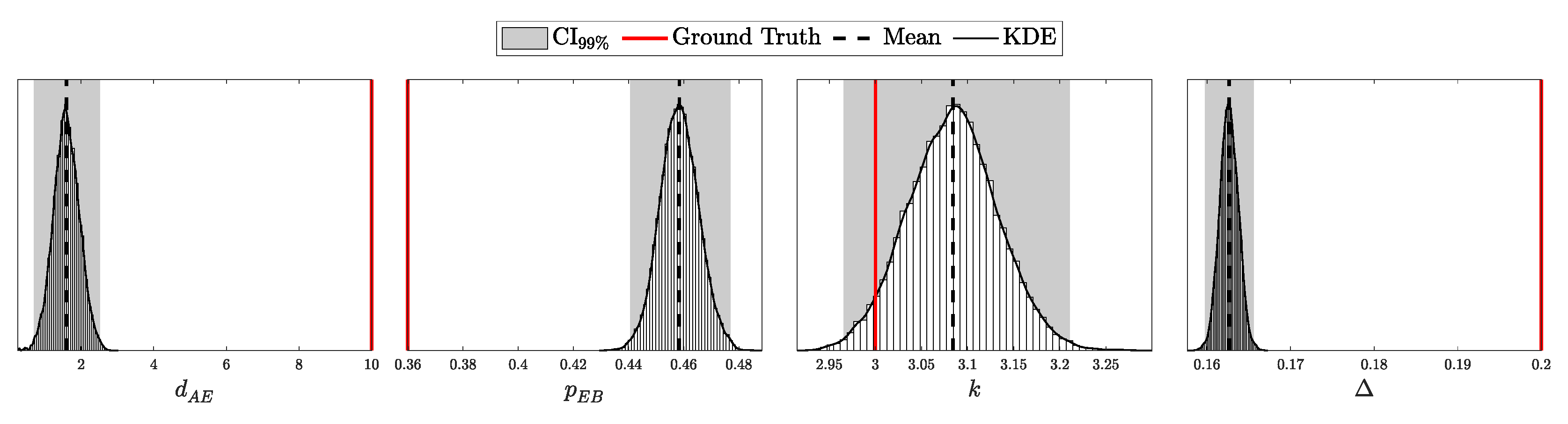

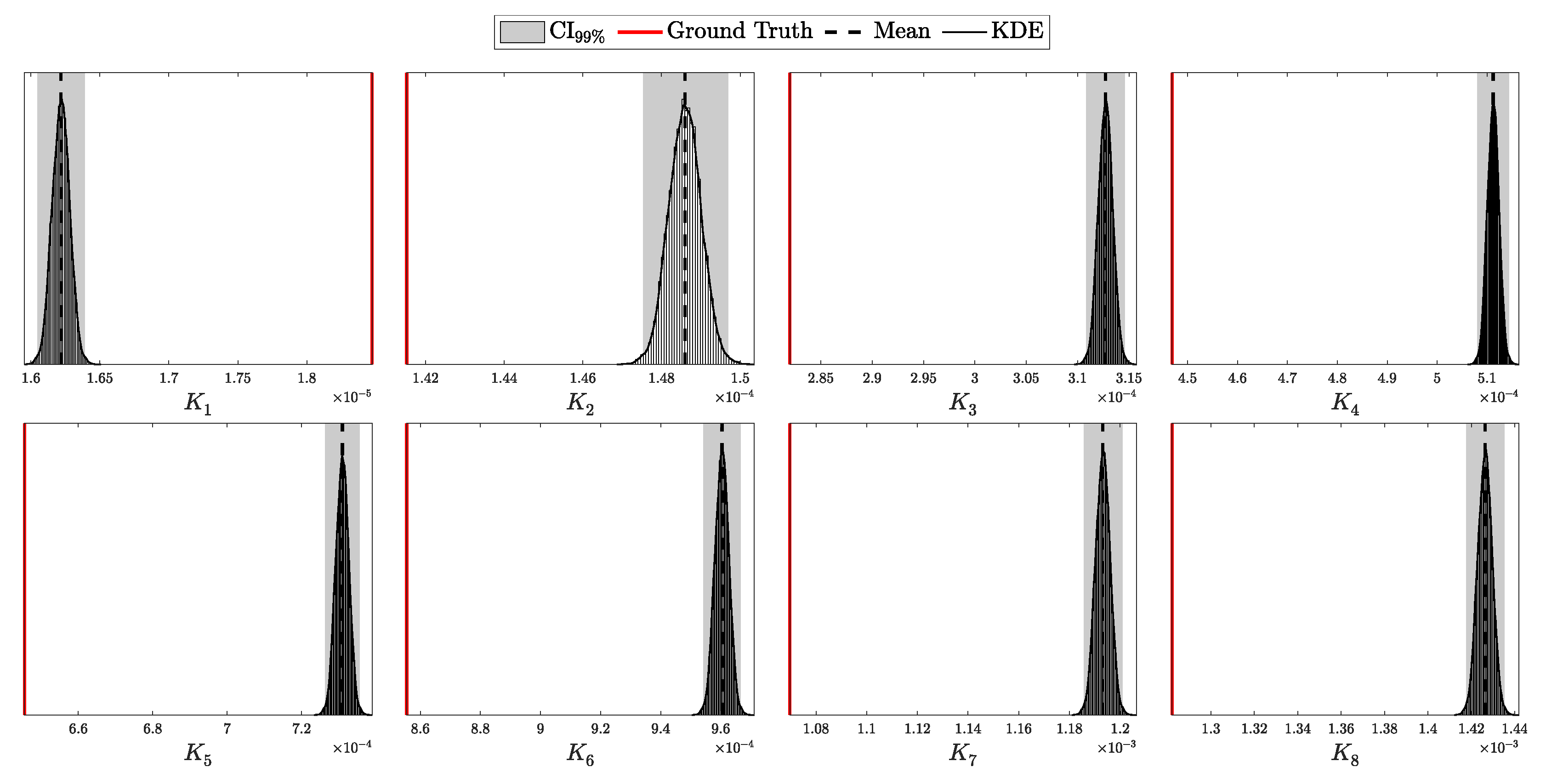

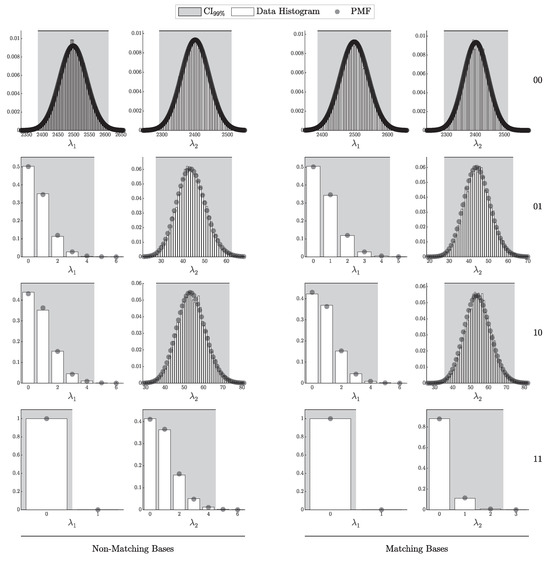

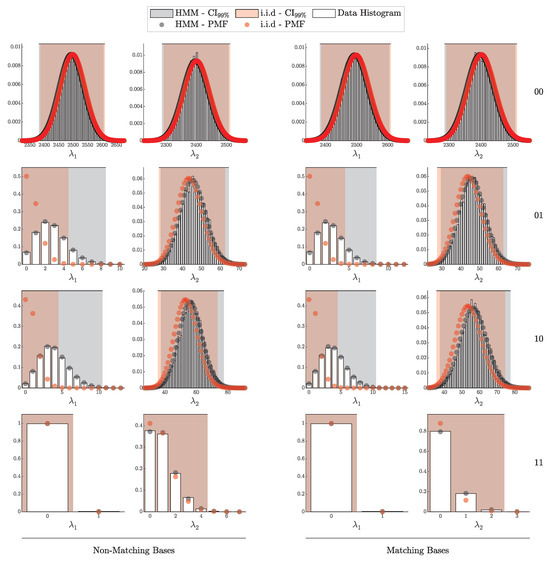

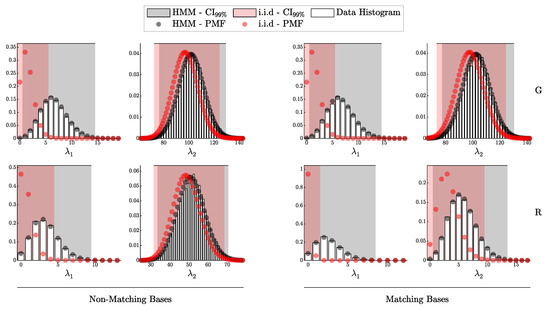

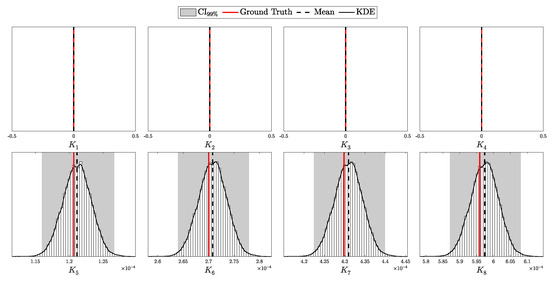

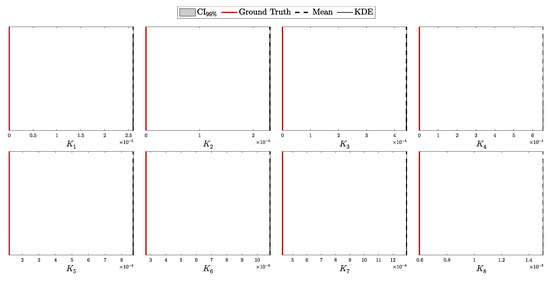

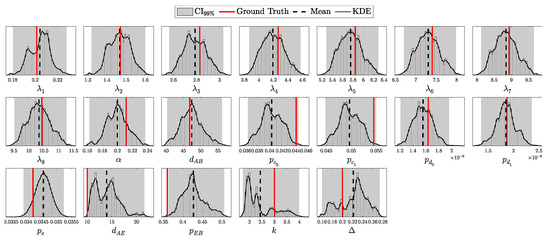

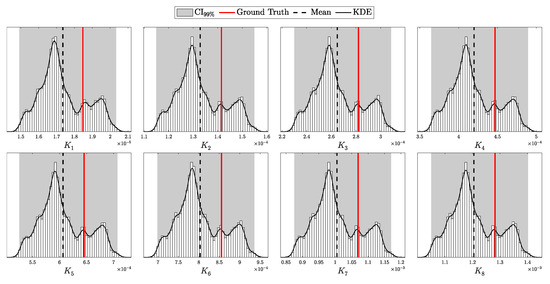

4.1.1. Validation of the i.i.d. Model

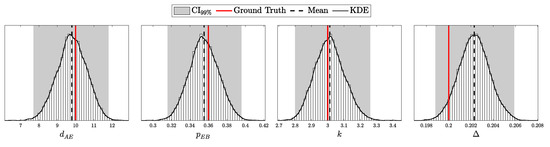

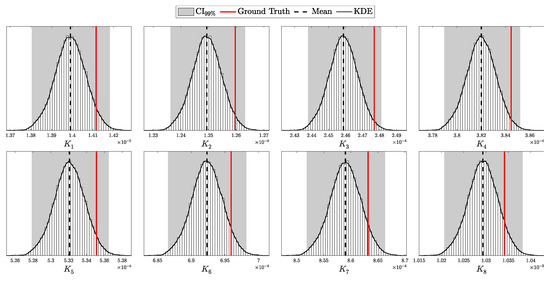

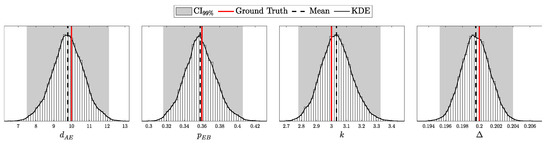

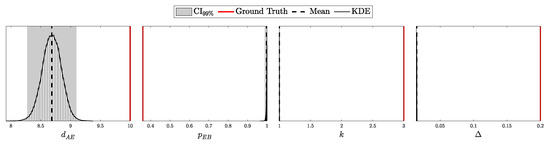

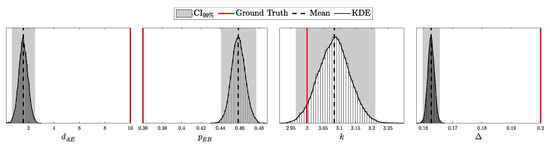

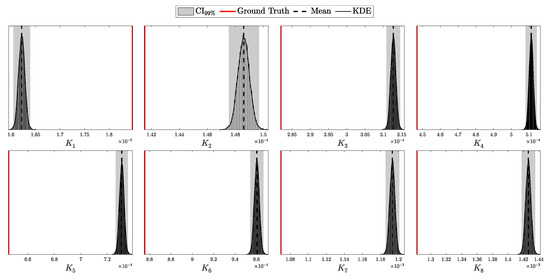

According to Theorem 3, the distribution of clicks in the i.i.d. scenario should follow a multinomial distribution with parameters N and $\mathbf{p}$. Here, $\mathbf{p}$ is a probability vector of length $2 \times 4 \times 2 = 16$, corresponding to two intensity levels, four possible detection outcomes (00, 01, 10, and 11), and two basis configurations (matching and non-matching).
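The multinomial bookkeeping behind this comparison can be sketched with NumPy’s `Generator.multinomial`. This is not Algorithm 1 and does not use the probabilities of Theorem 3: the 16-entry vector `p` is a hypothetical random draw, and only the run sizes (10,000 runs of 10,000 pulses) follow the setup described above. The sketch checks that per-category means and variances of the simulated counts match the multinomial predictions $Np_j$ and $Np_j(1 - p_j)$.

```python
import numpy as np

rng = np.random.default_rng(seed=1)

# Hypothetical probability vector over 16 categories
# (2 intensities x 4 outcomes x 2 basis configurations); NOT Theorem 3's values.
p = rng.dirichlet(np.full(16, 5.0))
N = 10_000      # pulses per run
runs = 10_000   # independent simulation runs

counts = rng.multinomial(N, p, size=runs)   # shape (runs, 16)

# Multinomial predictions for each category's marginal count.
mean_pred = N * p
var_pred = N * p * (1 - p)
mean_err = np.abs(counts.mean(axis=0) - mean_pred)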