1. Introduction

Causal discovery using methods such as FCI [1] or IC [2], as well as the many variants and extensions of these classic methods developed over the past several decades [3, 4, 5, 6, 7], involves searching super-exponential spaces, because the number of causal DAGs grows extremely rapidly with the number of variables. To reduce this intractable search space, it is often possible to form equivalence classes of observationally equivalent causal models (see Figure 2). There are approximately $3.16 \times 10^{22}$ DAG models on just 11 labeled variables. To make matters worse, DAG models capture only a tiny portion of the space of conditional independence structures: for $n = 4$ variables, there are 18,300 conditional independence structures, but DAG models capture only roughly 1% of this space. As we illustrate later in Section 2, constructing causal models for pancreatic cancer requires dealing with many thousands of potentially mutated genes that combine in a dozen known pathways, leading to an astronomically large search space of causal models. More powerful models like integer-valued multisets (imsets) [8], which model conditional independences by mapping the powerset of all variables into the integers, grow even larger still (of the order of

). Representing this space efficiently with categorical representations like affine CDU categories [9] or Markov categories [10] will require defining equivalence classes over string diagrams to combat this curse of dimensionality. This challenge motivates the need for a deeper categorical understanding of the equivalence classes of observationally indistinguishable models [11]. While allowing for arbitrary interventions on causal models enables accurate identification [6, 7], such interventions are rarely practical in the real world. Insights such as the Meek–Chickering theorem [3, 12, 13] allow a deeper understanding of connected paths among equivalent causal DAG models, which we propose to study using a homotopy framework in this paper.
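To give a feel for how quickly the space of DAG models grows, the number of DAGs on n labeled variables can be computed exactly with Robinson's recurrence. The following is a minimal illustrative sketch; the helper name `count_labeled_dags` is hypothetical and is not part of any method discussed in this paper.

```python
from functools import lru_cache
from math import comb


@lru_cache(maxsize=None)
def count_labeled_dags(n: int) -> int:
    """Number of DAGs on n labeled nodes (Robinson's recurrence).

    a(0) = 1
    a(n) = sum_{k=1..n} (-1)^(k+1) * C(n, k) * 2^(k*(n-k)) * a(n-k)
    """
    if n == 0:
        return 1
    return sum(
        (-1) ** (k + 1) * comb(n, k) * 2 ** (k * (n - k)) * count_labeled_dags(n - k)
        for k in range(1, n + 1)
    )


if __name__ == "__main__":
    for n in (3, 4, 5, 11):
        print(n, count_labeled_dags(n))
```

Python integers have arbitrary precision, so the count for 11 variables is computed exactly rather than approximated in floating point.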

To generalize the Meek–Chickering theorem to the categorical setting, some challenges need to be addressed. Figure 1 shows a string diagram representation of a pollution causal model first used in our previous paper on universal causality [14]. Such string diagrams are used in affine CDU [9] and Markov categories [10]. As the number of causal models grows exponentially, so does the number of string diagrams. To develop deeper insight into the underlying topological structure of causal equivalences, we introduce a coalgebraic theory of causal inference based on a categorical structure we call cPROP, which is defined as a functor category from a PROP [15] to a symmetric monoidal category [16].

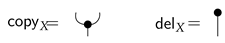

To help motivate the need for cPROPs, note that in a causal model, variables are “reused” across different local causal mechanisms. A simple example is the DAG

, whose joint distribution

decomposes in a way that reflects the conditional independence structure of the DAG. Here, the variable B is used twice, and to make it accessible across multiple expressions, any such variable must be “copied”. Such a copy mechanism has been used in previous work on categorical causal models based on string diagrams [9, 10, 17], which have been referred to as “copy-delete-uniform” (CDU) categories. Here, “deletion” refers to the requirement that any distribution P marginalizes to 1 when summed over all its values, which in categorical terms is modeled by a “delete” mechanism

(where X is some object that represents a distribution). cPROPs provide a way to define such an “internal” category over an external category that specifies such “copy” and “delete” mechanisms by modeling them as “comonoid” objects within a category.

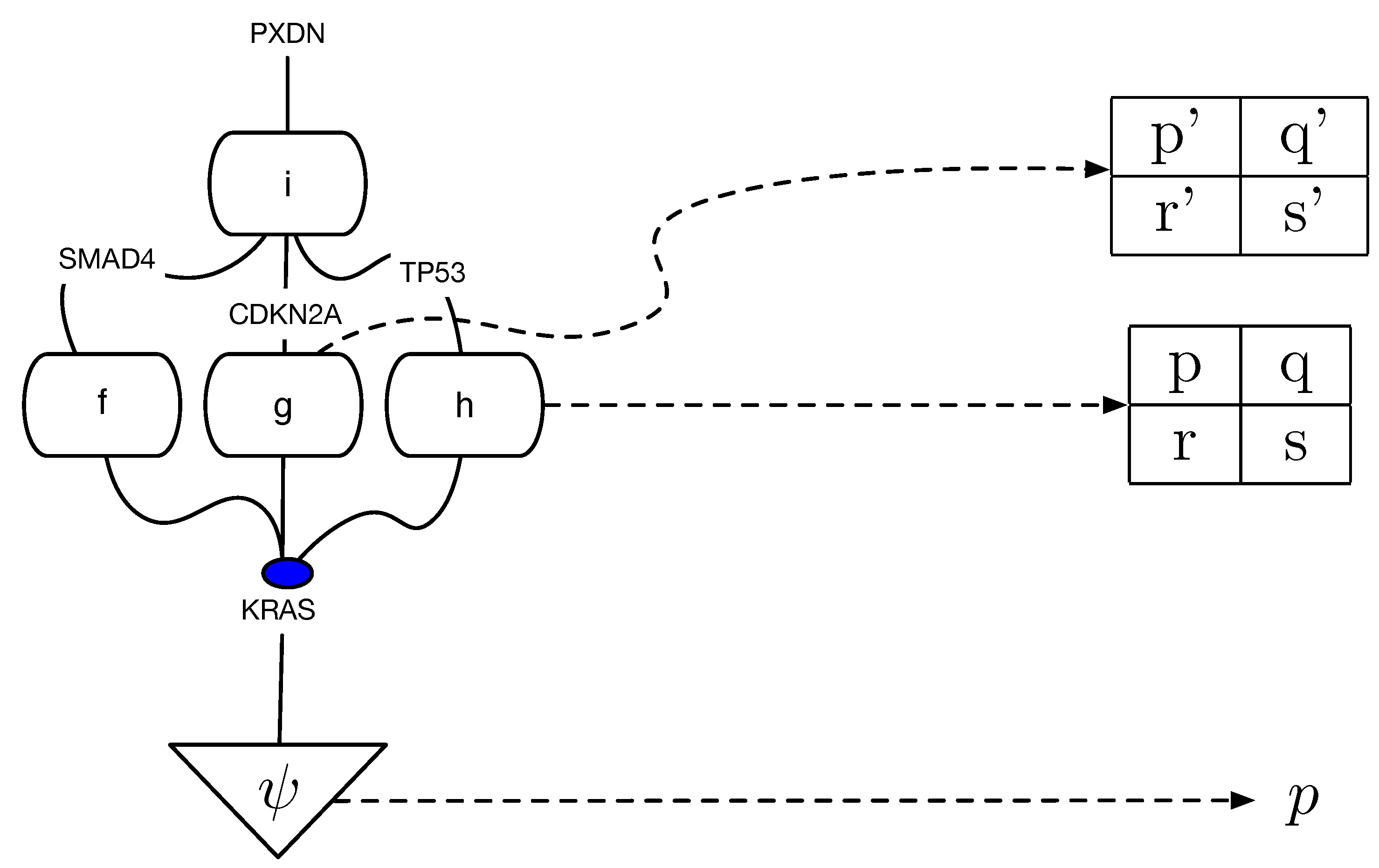

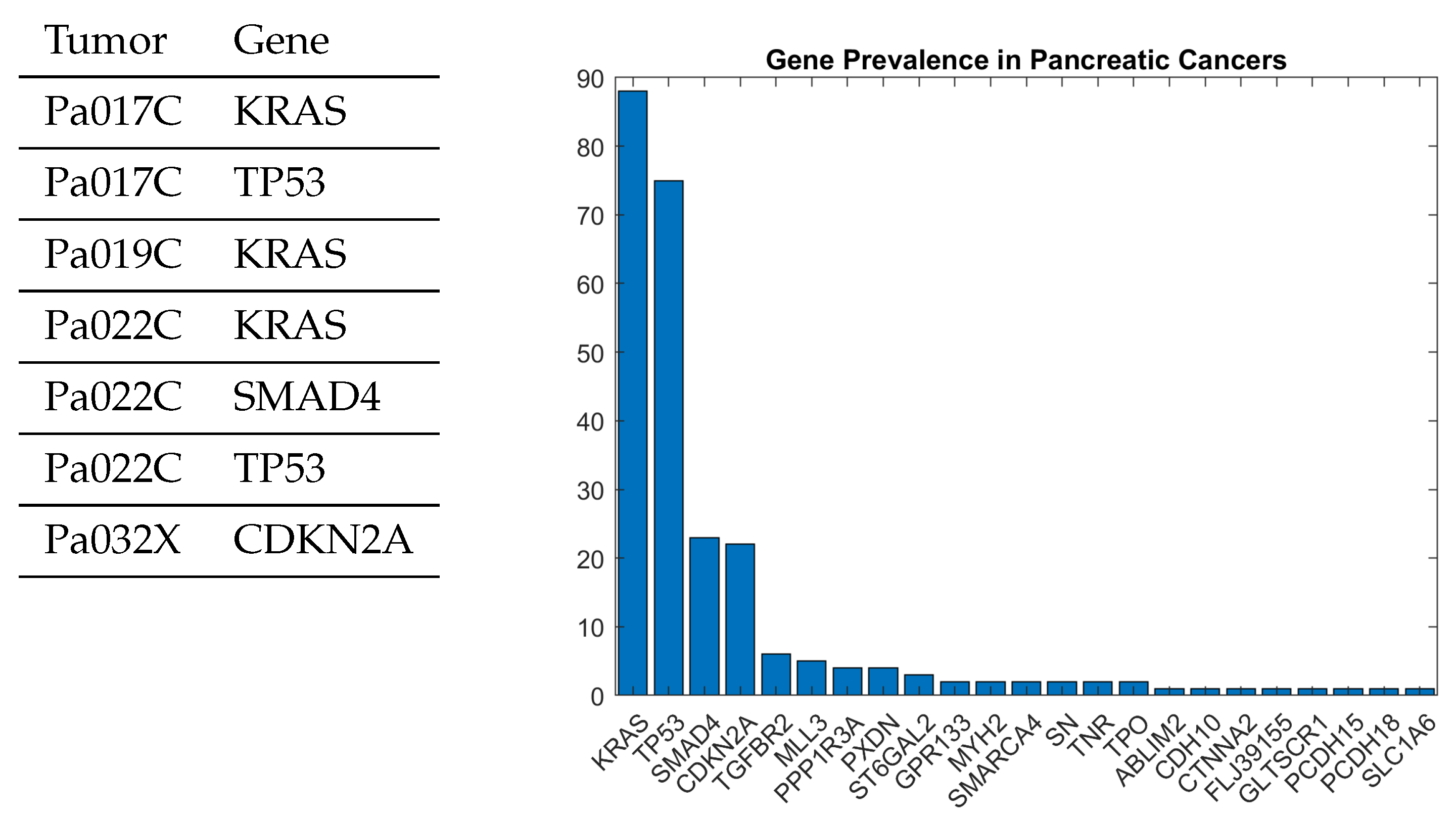

Causal discovery poses some unique challenges for categorical modeling.

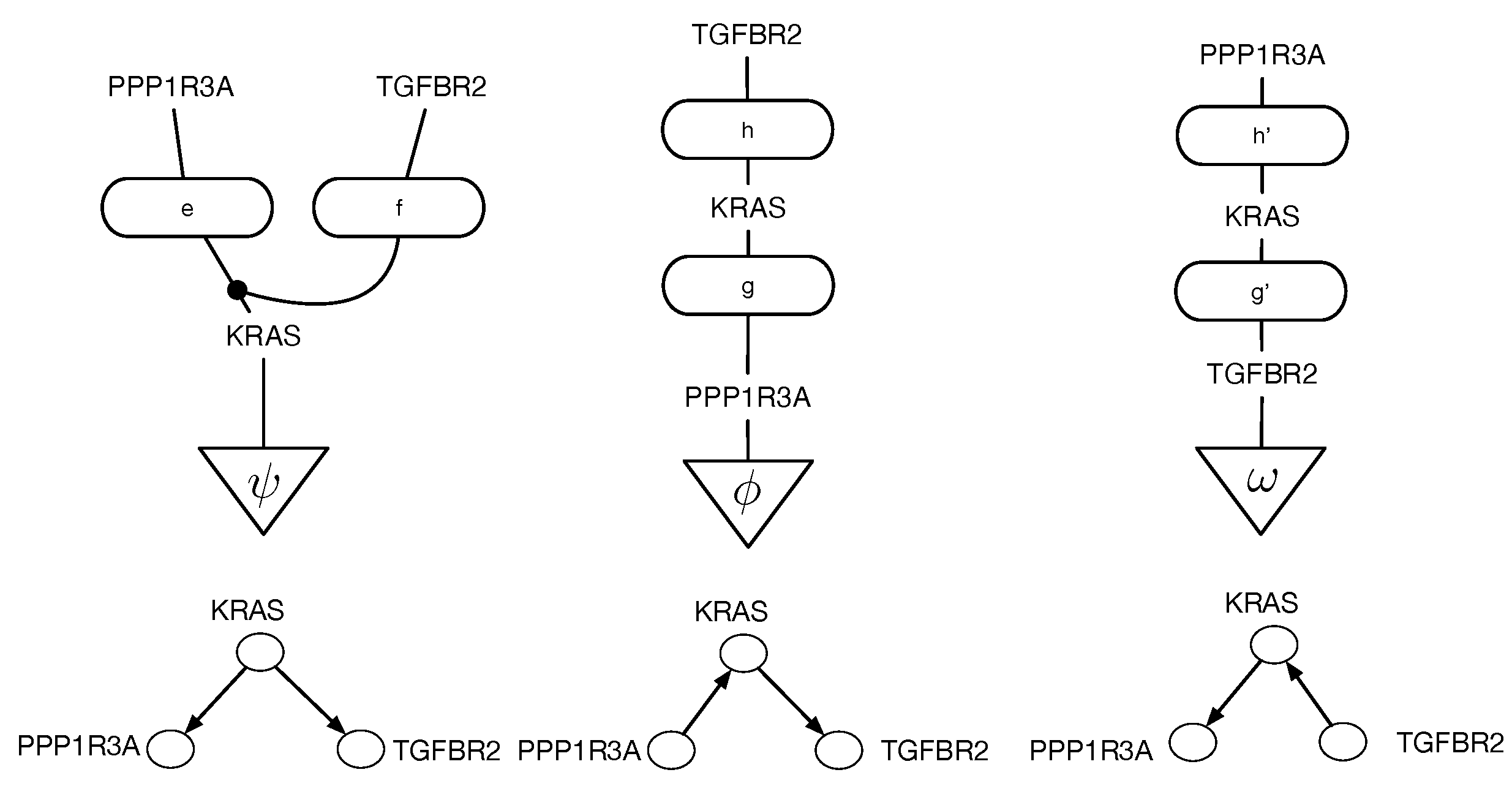

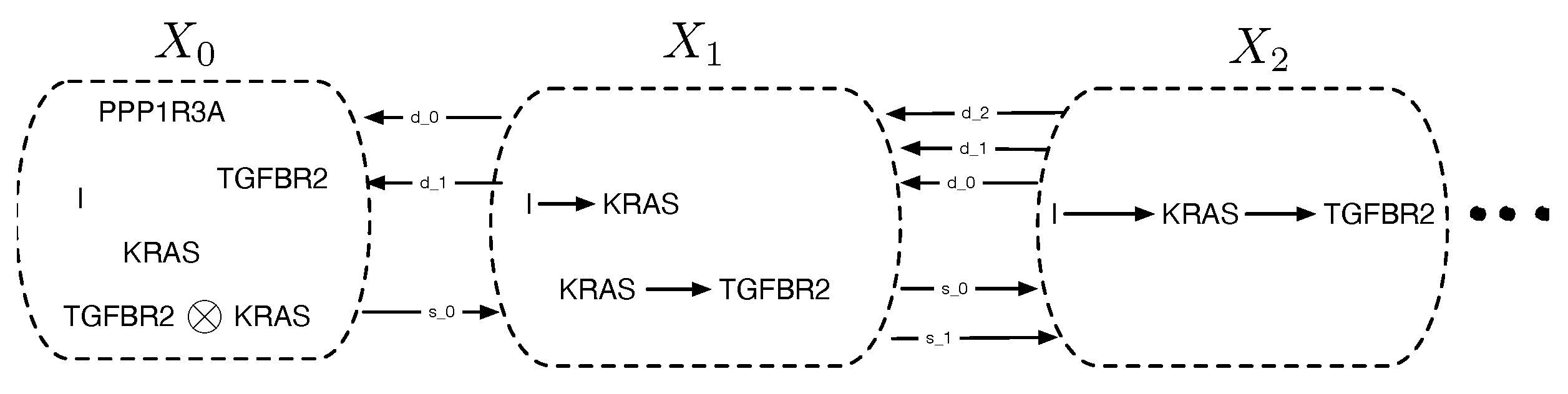

Figure 2 illustrates the structure of causal equivalence classes on causal DAGs for a simple causal model in the pancreatic cancer domain described in Section 2. As first observed by Verma and Pearl [11], two DAGs are equivalent if and only if their underlying skeletons (the undirected graph structure obtained by ignoring edge directions) and V-structures (colliders $A \to B \leftarrow C$ in which $A$ and $C$ are not adjacent) are the same. Our goal here is to build on the ideas in [3] on connected paths between observationally equivalent models, in particular the Meek–Chickering theorem, which we want to generalize to the categorical setting. As Chickering [3] notes, this theorem, which was originally a conjecture by Meek, implies that there exists a sparse search space, where each candidate model is connected to a small fraction of the total space, given a generative distribution that has a perfect map in a DAG defined over the observables. This property leads to a greedy search algorithm that, in the limit of large training data, can identify the correct model.
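The skeleton-plus-V-structure criterion is easy to check mechanically. The sketch below is illustrative only: it assumes a DAG is encoded as a dictionary mapping each node to its set of parents, and the function names are hypothetical.

```python
from itertools import combinations


def skeleton(dag):
    """Undirected edges of a DAG given as {child: set_of_parents}."""
    return {frozenset((p, c)) for c, parents in dag.items() for p in parents}


def v_structures(dag):
    """Colliders a -> c <- b in which a and b are not adjacent."""
    skel = skeleton(dag)
    return {
        (frozenset((a, b)), c)
        for c, parents in dag.items()
        for a, b in combinations(sorted(parents), 2)
        if frozenset((a, b)) not in skel
    }


def markov_equivalent(g, h):
    """Same skeleton and same V-structures."""
    return skeleton(g) == skeleton(h) and v_structures(g) == v_structures(h)


# Three DAGs on generic variables A, B, C (placeholders, not the genes of Figure 2).
chain = {"A": set(), "B": {"A"}, "C": {"B"}}      # A -> B -> C
fork = {"B": set(), "A": {"B"}, "C": {"B"}}       # A <- B -> C
collider = {"A": set(), "C": set(), "B": {"A", "C"}}  # A -> B <- C

print(markov_equivalent(chain, fork))      # chain and fork share skeleton and have no colliders
print(markov_equivalent(chain, collider))  # the collider introduces a V-structure
```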

Figure 2. Equivalence classes of causal DAGs and cPROP string diagrams on 3 variables from a pancreatic cancer domain described in more detail in Section 2. KRAS, TGFBR2, and PPP1R3A are three genes that are mutated in many pancreatic cancer tumors, and the challenge in causal modeling is to discover a partial ordering of the gene mutations. For each DAG at the bottom, the corresponding cPROP string diagram is shown above. The three DAGs shown form a single equivalence class, which implies that the three string diagrams are also equivalent. The causal discovery method GES [3], described in Section 3, searches in the space of such equivalence classes.

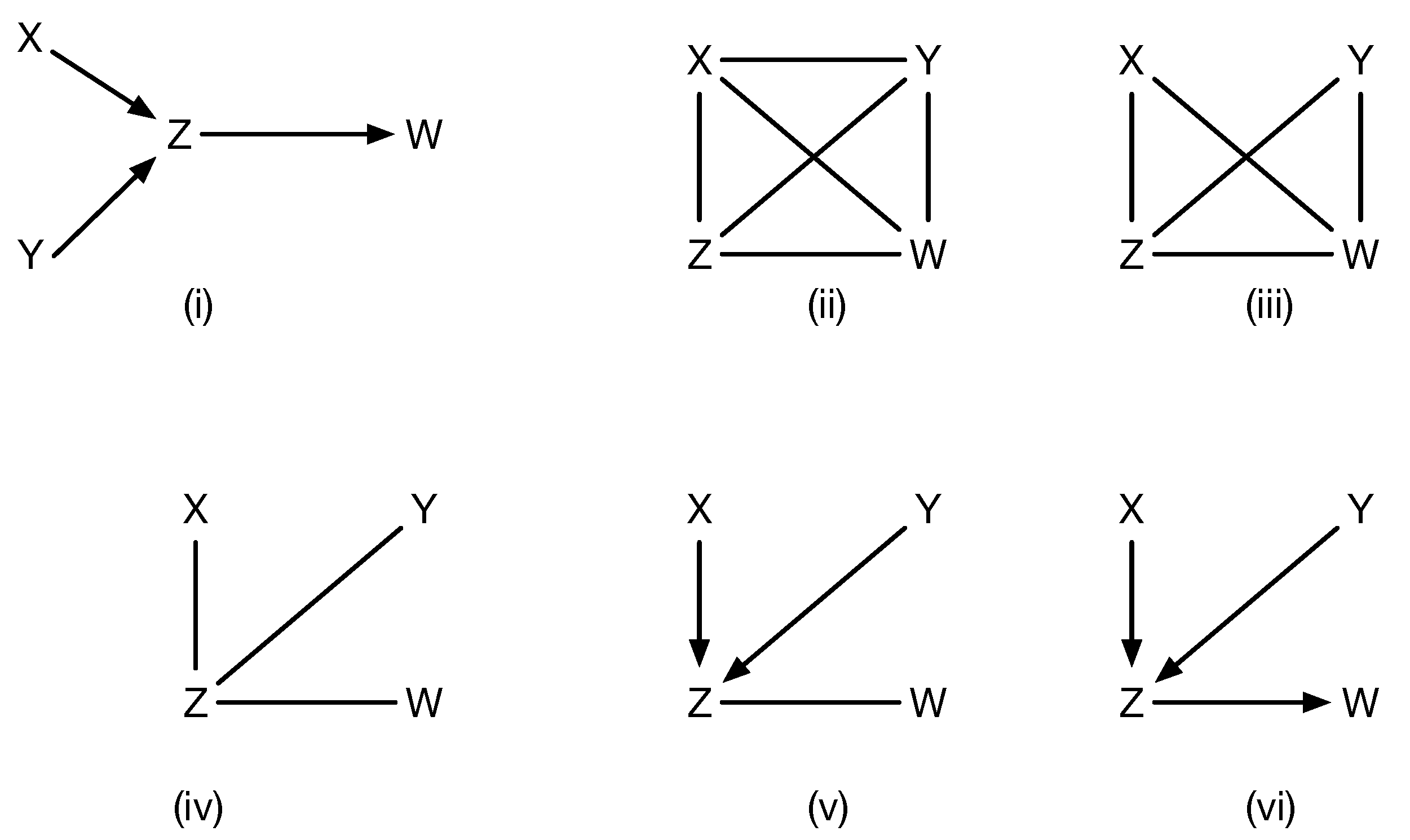

In practice, existing causal discovery algorithms, such as PC [1] or IC [2] or their many extensions and variants, combine both directional and non-directional encoding of causal models. Specifically, a common assumption, such as in PC, is that given an unknown true causal model (panel (i) in Figure 3), the initial causal model (panel (ii) in Figure 3) is an undirected graph connecting all variables to each other, which satisfies no conditional independences, and is progressively refined (panels (ii)–(vi) in Figure 3) based on conditional independence data and using edge orientation and propagation rules, such as the Meek rules [12]. For example, the initial stage simply checks all marginal independences, and finding that $X \perp\!\!\!\perp Y$ eliminates the undirected edge between X and Y. However, each undirected edge between two vertices, say A and B, that needs to be eliminated due to conditional independence must be checked against increasingly large conditioning subsets of the remaining variables. While methods like FCI and later enhancements [6, 7] incorporate rather sophisticated methods to prune the space, this process remains computationally expensive, and its practicality remains in question because, in the real world, interventions on arbitrary separating sets [6] may be infeasible. While remarkable progress has been made over the past few decades (see [7] for a state-of-the-art method), the search can still be prohibitively expensive and does not always recover the correct model. Edges that remain undirected are interpreted to indicate latent confounders.
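The edge-elimination stage described above can be sketched as follows. This is a simplified illustration rather than the PC algorithm as published: it assumes access to a perfect conditional independence oracle `indep(x, y, S)` (a hypothetical callback), omits edge orientation entirely, and makes no attempt at efficiency.

```python
from itertools import combinations


def pc_skeleton(nodes, indep):
    """Skeleton phase of a simplified PC-style search.

    indep(x, y, S) is an assumed oracle returning True when x is
    independent of y given the conditioning set S.
    """
    adj = {v: set(nodes) - {v} for v in nodes}  # start fully connected
    size = 0  # size of the conditioning sets currently being tried
    while any(len(adj[x]) - 1 >= size for x in nodes):
        for x in nodes:
            for y in sorted(adj[x]):
                others = sorted(adj[x] - {y})
                # Try every conditioning set of the current size drawn from
                # the remaining neighbours of x.
                if any(indep(x, y, frozenset(S)) for S in combinations(others, size)):
                    adj[x].discard(y)
                    adj[y].discard(x)
        size += 1
    return {frozenset((x, y)) for x in nodes for y in adj[x]}


def chain_oracle(x, y, S):
    """Oracle for the chain A -> B -> C: the only independence is A _||_ C | B."""
    return {x, y} == {"A", "C"} and "B" in S


print(pc_skeleton(["A", "B", "C"], chain_oracle))
```

The nested loop over conditioning sets of growing size is where the combinatorial cost arises: with many neighbours, the number of candidate subsets grows exponentially.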

This paper builds on the work of Fox [18], who studied functor categories mapping PROPs to symmetric monoidal categories in his PhD dissertation in 1976. Crucially, Fox [18] studied a particular functor category from a coalgebraic PROP to symmetric monoidal categories that defined a right adjoint from the category MON of all symmetric monoidal categories to CART, the category of all Cartesian categories. We use the term coalgebraic in the universal algebraic sense used by Fox [18], which differs from the modern interpretation as in [19]. In this sense, cPROPs are formally an algebraic theory in the sense of Lawvere [20].

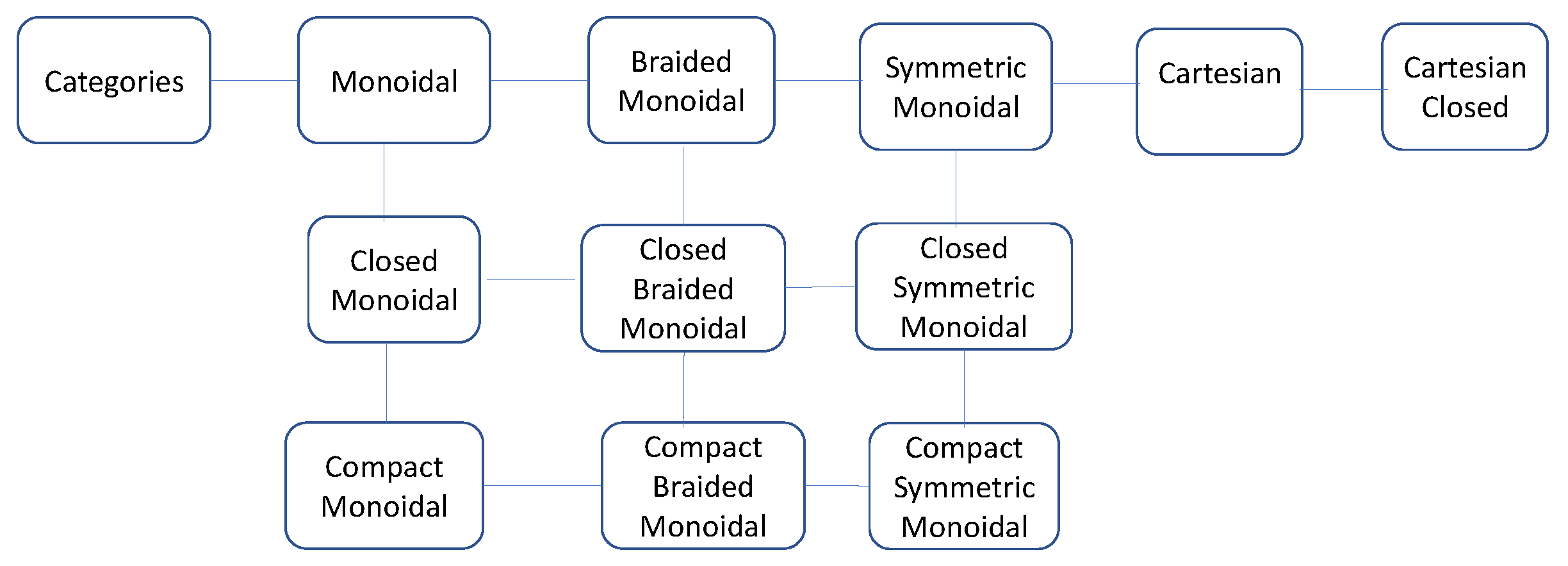

Objects in a cPROP are functors mapping a PROP P (a symmetric monoidal category over natural numbers) to a symmetric monoidal category C. The structure PROP (for Products and Permutations) was originally introduced by MacLane [15], and it has seen widespread use in many areas such as modeling connectivity in networks [21, 22]. A trivial example of a PROP is the free monoidal category

, whose objects can be interpreted as the natural numbers, the unit object is 0, and the tensor product is addition. More generally, a PROP

P is a small monoidal category with a strict monoidal functor

that is a bijection on objects. A cPROP is a functor category

, where C is a symmetric monoidal category, where in addition there are usually some constraints placed on the specific PROP

P.

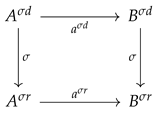

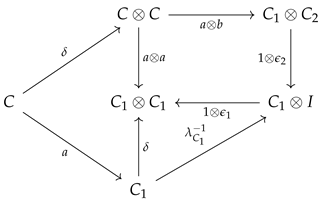

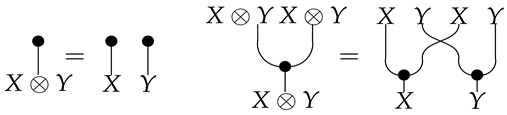

As a simple example, we consider cPROPs where the PROP

P is generated by a

coalgebraic structure defined by the maps

and

satisfying a set of commutative diagrams. Such cPROPs are closely related to symmetric monoidal category structures used in previous work on categorical models of causality, probability, and statistics [10, 14, 23, 24, 25]. In particular, Markov categories [10, 17] and affine CDU (“copy-delete-uniform”) categories used to model causal inference include a comonoidal “copy-delete” structure corresponding to such a cPROP, which we note is distinctive in that “delete” has a uniform structure but “copy” does not, leading to a semi-Cartesian category.

In my previous work on universal causality [14], I proposed the use of simplicial sets, which provide a way to encode both directional and non-directional edges, form the basis for the topological realization of cPROPs, and play a central role in higher-order ∞-categories [26, 27]. We study the classifying spaces [28] of cPROPs in this paper, showing that they provide deeper insight into the connections between different cPROP categories that correspond to Markov categories, such as FinStoch [17].

In particular, this work builds on longstanding ideas in abstract homotopy theory on modeling the equivalence classes of objects in a category [

29] by mapping a category into a topological space, where (weak) equivalences can be modeled in terms of topological structures, such as homotopies. To make this more concrete, Jacobs et al. [

9] modeled a Bayesian network as a CDU functor

between two affine CDU or Markov categories, with one specifying the graph structure of the model and the other modeling its semantics as an object in the category of finite stochastic processes defined as

FinStoch. A CDU functor is a special type of cPROP functor. Two Bayesian networks modeled as cPROP functors that are observationally equivalent—such as

and

, since the edge

is a covered edge that can be reversed—induce a natural transformation

. Using the associated classifying spaces

and

, the natural transformation induces a homotopy between

and

.

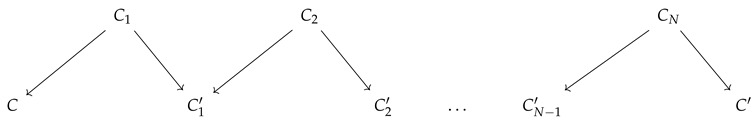

The idea of associating a topological space with a category goes back to Grothendieck but was popularized by Segal [

28]: map a category

to a sequence of sets (or objects)

, where the

k-simplex

represents composable morphisms of length

k. A standard topological realization proposed by Milnor [

30] constructs a topological CW complex out of simplicial sets. Segal called such a construction the classifying space

of category

. This paper can be seen as an initial step in building a higher algebraic K-theory [

31] for causal inference, using as a concrete example the study of classifying spaces of cPROPs. A 0-simplex in a simplicial cPROP would be defined by its objects

, which map to 0-cells in its classifying space. An example 2-simplex in a cPROP, such as

, maps to a 2 cell or simplicial triangle.

This paper builds on the insight underlying Fox’s dissertation on universal coalgebras [18], which shows that the subcategory of coalgebraic objects in a monoidal category forms its Cartesian closure. The adjoint functor theorems show that cofree algebras (right adjoints to forgetful functors) exist in such cases. In particular, Fox’s theorem implies that cPROPs that come with a type of “uniform copy-delete” structure [32] are Cartesian symmetric monoidal categories, where the tensor product $\otimes$ becomes a Cartesian product operation through natural transformations rather than the standard universal property. Note that Markov categories are semi-Cartesian because the comonoidal copy structure is not uniform; only delete is. However, they contain a subcategory of deterministic morphisms that induce a Cartesian category using the uniform copy-delete structure. It is worth noting here that Pearl [2] has long advocated viewing causality as intrinsically deterministic in his structural causal models (SCMs), where the role of probabilities is reflected in the uncertainty associated with exogenous variables that cannot be causally manipulated.

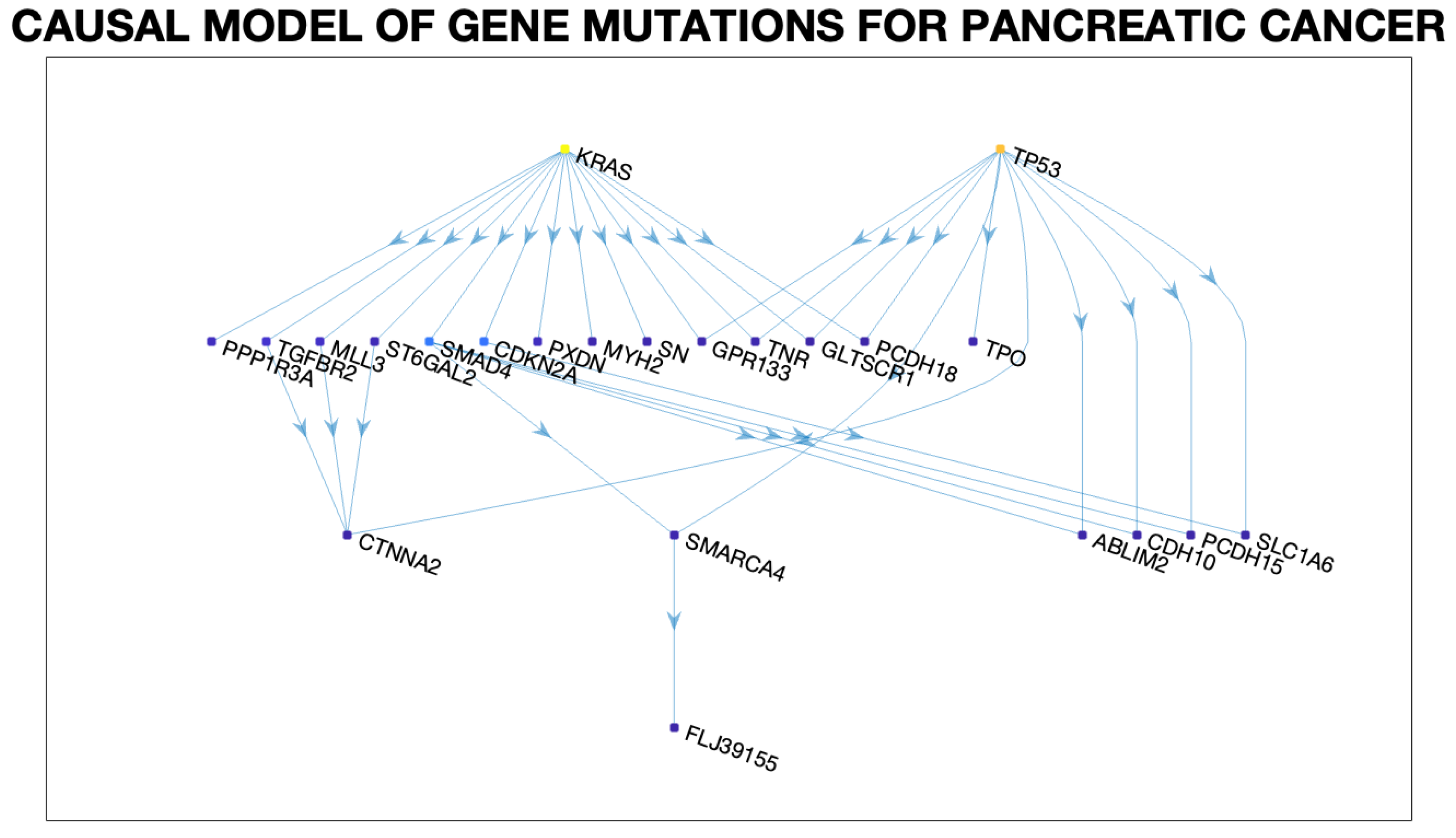

Here is a roadmap to the rest of the paper. To concretize the abstractions presented in the paper, it begins in Section 2 with an application to constructing causal models of pancreatic cancer [33, 34, 35]. Section 3 describes a concrete procedure for causal discovery called Greedy Equivalence Search (GES) [3, 12] that uses a specific notion of causal equivalence based on a transformational characterization of Bayesian networks, which is generalized to a homotopical setting. GES is also illustrative of a broad class of similar algorithms. Numerous refinements are possible, including the ability to intervene on arbitrary subsets [6, 7], which are omitted in the interest of simplicity. Section 4 begins with an introduction to algebraic theories of the type proposed by Lawvere [20], a brief review of symmetric monoidal categories, and an introduction to PROPs and cPROPs. Functor categories mapping a PROP to a symmetric monoidal category are defined. The central result of Fox is reviewed, showing that the inclusion of all Cartesian categories CART in the larger category of all symmetric monoidal categories MON has a right adjoint, which is defined by a coalgebraic PROP functor category. This coalgebraic structure relates to the “uniform copy-delete” structure studied by [32]. In Section 5, the relationships between cPROPs with uniform copy and delete natural transformations and previous work on affine CDU categories [25] and Markov categories [17] are explored. Section 6 explores the relationship between Pearl’s structural causal models (SCMs) and Cartesian cPROPs defined by deterministic morphisms, exploiting the property that SCMs are defined by purely deterministic mappings from exogenous variables to endogenous variables. In Section 7, simplicial objects in cPROP categories are defined. Section 8 defines the abstract homotopy of cPROPs at a high level. Section 9 drills down into the homotopic structure of cPROP functors that represent Bayesian networks, which closely relates to the work on CDU functors [9]. Natural transformations in the functor category of Bayesian networks modeled as cPROPs are characterized using Yoneda’s coend calculus [16], and an equivalence relationship among functors is defined. In particular, categorical generalizations of the definitions of equivalent causal models in [3, 12] are presented, and a homotopic generalization of the well-known Meek–Chickering theorem for cPROPs is stated. Each reversal of a covered edge corresponds to a natural transformation between the corresponding cPROP functors. This work formally characterizes the classifying spaces of cPROPs in terms of associative and commutative H-spaces [29]. Finally, Section 11 combines the results of the previous sections, stating the main result that the Grayson–Quillen procedure applied to cPROP yields a category

that represents a Grothendieck group completion of cPROP category

and whose connected components, which define the 0th order homology (loop) space, are isomorphic to the Meek–Chickering equivalence classes. In Section 12, a more advanced application of the framework to open games [36] and network economics [37] is described; both of these fields can be defined using symmetric monoidal categories and are therefore amenable to the approach given here. In Section 13, the paper is summarized, and a few directions for further work are outlined.

3. Greedy Equivalence Search

To motivate the theoretical development in subsequent sections, we focus our attention in this section on a specific causal discovery algorithm, Greedy Equivalence Search (GES), originally proposed by Meek [12], whose correctness and asymptotic optimality were subsequently shown by Chickering [3], constituting an algorithmic proof of the Meek–Chickering theorem. This framework is not presented as a state-of-the-art causal discovery algorithm (e.g., Zanga and Stella [4] provide a detailed survey of many causal discovery methods), but rather as an exemplar of the idea of searching in a space of equivalence classes of DAG models. The ultimate goal is to provide a topological and abstract homotopic characterization of the search space in causal discovery, both for DAG and non-DAG models. Grounding the ideas in a specific algorithm helps to concretize the theoretical abstractions that follow. The notion of a covered edge is fundamental to the work on causal equivalence classes in [3, 12].

Definition 1. Let $G$ be any causal DAG model. An edge $X \to Y$ in $G$ is covered if X and Y have identical parents, with the caveat that X is not a parent of itself. In other words, the parents of Y in $G$ are the parents of X along with X itself.
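As a concrete illustration of the covered-edge condition, the following minimal sketch checks the condition and reverses an edge. It assumes a DAG is encoded as a dictionary mapping each node to its set of parents; the function names are hypothetical.

```python
def is_covered(dag, x, y):
    """True if x -> y is an edge and Pa(y) == Pa(x) ∪ {x}."""
    return x in dag[y] and dag[y] == dag[x] | {x}


def reverse_edge(dag, x, y):
    """Return a copy of dag in which the edge x -> y is replaced by y -> x."""
    new = {v: set(parents) for v, parents in dag.items()}
    new[y].discard(x)
    new[x].add(y)
    return new


# A -> B -> C: the edge A -> B is covered, the edge B -> C is not,
# because C is missing the parent A that B has.
chain = {"A": set(), "B": {"A"}, "C": {"B"}}
print(is_covered(chain, "A", "B"), is_covered(chain, "B", "C"))

# Reversing the covered edge A -> B gives the fork A <- B -> C,
# in which B -> C has become covered.
fork = reverse_edge(chain, "A", "B")
print(fork, is_covered(fork, "B", "C"))
```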

For the sake of space, the discussion of GES will be brief, and we refer the reader to the original paper [3] for all missing details. Broadly, the idea underlying GES is to search over equivalence classes of DAGs by moving at each step to the neighbor (a model outside the current equivalence class, reached by edge addition or deletion) that has the highest Bayesian score on a given IID dataset, provided the move improves the score. In the first (forward) phase, GES searches in the space of DAGs that result from adding one edge to a given equivalence class (see Figure 7). Similarly, Figure 8 shows the two equivalence classes of DAGs that result from deleting a single edge from the DAGs in Figure 2; this illustrates the second (backward) phase of GES. It is proven in [3] that this two-phase procedure is asymptotically optimal in the limit of large datasets, provided the data were generated from some DAG. The challenge addressed in this paper is how to mathematically model the equivalence classes used in a method like GES by mapping them into equivalences among topological embeddings of string diagrams. Our motivation is to see whether these ideas can be generalized to a larger class of causal models than DAGs and ultimately used to design improved methods, although that goal lies beyond the scope of this paper.

Bayesian approaches to learning models from data use a scoring function, such as the Bayesian Information Criterion (BIC), denoted as $S(G, D)$, where D is an IID (independent and identically distributed) dataset sampled from the original (unknown) model. It is commonly assumed that such a score is locally decomposable, meaning that

$$S(G, D) = \sum_{i=1}^{n} s\left(X_i, \mathbf{Pa}_i^{G}\right).$$

The overall score of a candidate DAG G is the sum of local scores, one for each node $X_i$, each of which is purely a function of the data D projected onto the node and its parents $\mathbf{Pa}_i^{G}$. Given a DAG G and a probability distribution $p$, G is a perfect map of p if (i) every independence constraint in p is implied by the structure of G and (ii) every independence constraint implied by the structure of G holds in p. If there exists a DAG G that is a perfect map of a distribution $p$, then p is called DAG-perfect. Under the assumption that the dataset D is an IID sample from some DAG-perfect distribution $p$, the GES algorithm consists of two phases that are guaranteed to find the correct DAG G (up to equivalence) in the limit of large datasets. The precise statement is as follows.
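To make local decomposability concrete, here is a minimal sketch of a decomposable BIC score for fully observed discrete data. It is an illustration under stated assumptions, not the scoring code of any GES implementation: data are a list of dictionaries, a DAG maps each node to a list of parents, variable cardinalities are estimated from the observed values, and the names `local_bic` and `bic` are hypothetical.

```python
import math
from collections import Counter


def local_bic(data, child, parents):
    """BIC contribution of one node given its parents (discrete data)."""
    m = len(data)
    joint = Counter((tuple(r[p] for p in parents), r[child]) for r in data)
    parent_cfg = Counter(tuple(r[p] for p in parents) for r in data)
    # Maximized log-likelihood of the conditional distribution P(child | parents).
    loglik = sum(c * math.log(c / parent_cfg[cfg]) for (cfg, _), c in joint.items())
    # Free parameters: (|child| - 1) for every joint configuration of the parents.
    n_child = len({r[child] for r in data})
    n_cfg = 1
    for p in parents:
        n_cfg *= len({r[p] for r in data})
    return loglik - 0.5 * (n_child - 1) * n_cfg * math.log(m)


def bic(data, dag):
    """Total score: the sum of local scores, one per node."""
    return sum(local_bic(data, v, sorted(pa)) for v, pa in dag.items())


data = [
    {"A": 0, "B": 0}, {"A": 0, "B": 1}, {"A": 1, "B": 1},
    {"A": 1, "B": 1}, {"A": 0, "B": 0},
]
print(bic(data, {"A": [], "B": ["A"]}))  # A -> B
print(bic(data, {"A": ["B"], "B": []}))  # B -> A (same equivalence class)
```

The two DAGs in the usage example are Markov equivalent, and the two printed scores agree up to floating-point error, which illustrates the assumption that all DAGs in an equivalence class receive the same score.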

Theorem 1 ([3]). Let $\mathcal{E}^*$ denote the equivalence class that is a perfect map of the generative distribution $p$, and let m be the number of samples in a dataset D. Then in the limit of large m, $S(\mathcal{E}^*, D) > S(\mathcal{E}, D)$ for any equivalence class $\mathcal{E} \neq \mathcal{E}^*$.

Here, $S$ is a Bayesian scoring method, like the BIC, and it is assumed to score all DAGs in an equivalence class the same. The notion of equivalence classes is fundamental to GES, and its formal characterization comes from the following transformational characterization of Bayesian networks. As previously noted, a covered edge in a DAG G is an edge $X \to Y$ with the property that the parents of Y are the same as the parents of X along with X itself.

Theorem 2 (Meek–Chickering Theorem [3, 12]). Let $G$ and $H$ be any pair of DAGs such that $G \leq H$, meaning that $H$ is an independence map of $G$, that is, every independence property in $H$ holds in $G$. Intuitively, $G \leq H$ implies that $H$ contains more edges than $G$. Let r be the number of edges in $H$ that have opposite orientations in $G$, and let m be the number of edges in $H$ that do not exist in either orientation in $G$. Then, there is a sequence of at most $r + 2m$ edge reversals and additions in $G$ with the following properties:

(i) Each edge reversed is a covered edge.

(ii) After each reversal and addition, $G$ is a DAG, and $G \leq H$.

(iii) After all reversals and additions, $G = H$.
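In the special case where no edges need to be added, the theorem says that equivalent DAGs are connected by sequences of covered edge reversals. The sketch below illustrates this connectivity by breadth-first search; it is not part of GES itself, it assumes a DAG is encoded as a dictionary mapping each node to its set of parents, and the function names are hypothetical.

```python
from collections import deque


def covered_edges(dag):
    """Edges x -> y with Pa(y) == Pa(x) ∪ {x}; dag maps node -> set of parents."""
    return [(x, y) for y, pa in dag.items() for x in pa if pa == dag[x] | {x}]


def freeze(dag):
    """Hashable snapshot of a DAG, used to detect already-visited graphs."""
    return frozenset((v, frozenset(pa)) for v, pa in dag.items())


def equivalence_class(dag):
    """All DAGs reachable from `dag` by repeatedly reversing covered edges."""
    start = {v: set(pa) for v, pa in dag.items()}
    seen = {freeze(start)}
    queue = deque([start])
    while queue:
        g = queue.popleft()
        for x, y in covered_edges(g):
            h = {v: set(pa) for v, pa in g.items()}
            h[y].discard(x)  # remove x -> y
            h[x].add(y)      # add    y -> x
            key = freeze(h)
            if key not in seen:
                seen.add(key)
                queue.append(h)
    return seen


chain = {"A": set(), "B": {"A"}, "C": {"B"}}          # A -> B -> C
collider = {"A": set(), "C": set(), "B": {"A", "C"}}  # A -> B <- C
print(len(equivalence_class(chain)), len(equivalence_class(collider)))
```

Exhaustive enumeration like this is only feasible for very small graphs; it is meant to make the notion of a connected equivalence class tangible, not to suggest a practical search procedure.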

To relate this result and the ensuing GES algorithm to the original PC algorithm illustrated in Figure 3: unlike PC, GES begins at the opposite end of the lattice of DAG models shown in Figure 2, the empty DAG (which can be viewed as the DAG $G$ in Theorem 2), progressively adds edges in the first phase, and then deletes edges in the second phase. In Section 9, this theorem will be generalized to construct a topological and abstract homotopical equivalence across functors between cPROP categories. These functors are equivalent to the CDU functors proposed earlier by Jacobs et al. [9] to model Bayesian networks. Edge reversals or additions will correspond to natural transformations.

A further characterization of causal equivalence classes emerges from our application of higher algebraic K-theory [28, 31]. Informally, we can define the notion of connectedness of a category in terms of the equivalence classes of the relation defined over morphisms (two objects are in the same equivalence class if they are connected by a (perhaps zig-zag) path of morphisms). We can treat each equivalence class as a topologically locally connected space, and then the homotopy groups $\pi_n(BC)$ of the classifying space BC of the cPROP category $C$ give us an algebraic invariant of causal equivalence classes.

Exploiting Additional Constraints in Causal Discovery

The basic idea behind GES is to search in the space of causal equivalence classes and use a Bayesian scoring function to find the most plausible model. One significant challenge in applying GES to the cancer domain described in Section 2 is that the available datasets are of limited size (∼100 tumor samples) but high-dimensional (∼19,000 genes). The theoretical result stated in Theorem 1 may be of limited use in such situations. The approach generally taken in real-world applications of causal inference (e.g., [38]) is to bring in additional structural constraints motivated by the particular domain. Some of these are briefly described below:

Domain constraints: In cancer, genes mutate in particular sequences, and once a gene has mutated, it stays mutated. In other words, rather than searching over all possible DAG models, it may suffice to consider restricted subclasses of DAGs, such as conjunctive Bayesian networks [34] (these are also referred to as “noisy-AND” models in [40]). Such domain constraints are necessary in most real-world applications. This strategy was studied in our previous work [35] and applied to the pancreatic cancer domain.

Topological representations of causal DAG models: It is possible to convert a DAG model, viewed as a partially ordered set, into a finite space topology [41, 42] by using the Alexandroff topology. In simple terms, each variable in the model is associated with its downset (all variables that it dominates in the partial ordering) or upset (all variables that dominate it in the partial ordering). The intersection of all such open or closed sets defines the Alexandroff topology embedding for each variable. This transformation can be used to determine whether two DAG (poset) models are homotopic and to produce a more scalable way to enumerate posets. The results in [35, 41] show several orders of magnitude improvement, at least for relatively small models.

Asymptotic combinatorics: It is a classic result from extremal combinatorics [43] that almost all partial orders are comprised of just three levels. This result is initially surprising, but the intuition is that by carefully counting the set of all possible partial orders on N variables, it can be shown that as $N \to \infty$, a concentration phenomenon occurs where almost all partial orders are of height 3. An intriguing physical explanation is given in [44] based on phase transitions. The ramifications of this concentration phenomenon were explored in a previous paper on asymptotic causality [45].
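As a small illustration of the topological representation mentioned above, the sketch below computes the downset of every variable in a DAG and checks that these sets form a basis for a topology. It assumes that “X dominates Y” means Y is reachable from X along directed edges, and that the DAG is encoded as a dictionary mapping each node to its set of direct successors; both the encoding and the function names are hypothetical and are not taken from [35, 41].

```python
def downsets(children):
    """Map each node to the set of nodes reachable from it (itself included)."""
    def reach(v, seen):
        for w in children.get(v, ()):
            if w not in seen:
                seen.add(w)
                reach(w, seen)
        return seen

    return {v: frozenset(reach(v, {v})) for v in children}


def is_basis(basis):
    """Basis condition: every point of U ∩ W has its own minimal set inside U ∩ W."""
    return all(
        basis[x] <= (u & w)
        for u in basis.values()
        for w in basis.values()
        for x in (u & w)
    )


# Generic three-variable chain A -> B -> C (placeholder names).
chain = {"A": {"B"}, "B": {"C"}, "C": set()}
basis = downsets(chain)
print(basis)
print(is_basis(basis))
```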

To summarize, while we will focus in the remainder of the paper on characterizing the equivalence classes of causal models using categorical techniques, it is important to point out that real-world applications will invariably require bringing in other sources of knowledge. We will return to discuss this point in Section 13.

7. Simplicial Objects in cPROPs

We now turn to the embedding of cPROPs in the category of simplicial sets, which will be a prelude to constructing “nice” topological realizations and the study of their classifying spaces. Figure 11 gives the high-level intuition. A simplicial set X is defined as a collection of sets $X_n$, $n \geq 0$, combined with face maps (indicated as $d_i$ in the figure) and degeneracy maps (indicated as $s_i$ in the figure). As a simple guide to help build intuition, any directed graph can be viewed as a simplicial set, where $X_0$ is the set V of vertices, $X_1$ is the set E of edges, and the two face maps $d_0$ and $d_1$ from $X_1$ to $X_0$ yield the initial and final vertex of the edge. The single degeneracy map $s_0$ from $X_0$ to $X_1$ adds a self-loop to each vertex. Simplicial sets generalize graphs when we consider higher-order simplices. For example, between $X_2$ and $X_1$, there are three face maps, mapping a simplicial triangle (a 2-simplex) to each of its 1-simplicial components, namely, its edges.
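The graph example can be written out directly. The sketch below is illustrative only; it adopts the common convention that $d_1$ returns the source and $d_0$ the target of an edge (an assumption, since the assignment of indices is a matter of convention), and the function name is hypothetical.

```python
def graph_to_simplicial(vertices, edges):
    """Low-dimensional simplicial data (X_0, X_1, d_0, d_1, s_0) of a directed graph."""
    x0 = set(vertices)
    s0 = {v: (v, v) for v in x0}        # degeneracy: the self-loop at each vertex
    x1 = set(edges) | set(s0.values())  # 1-simplices include the degenerate loops
    d1 = {e: e[0] for e in x1}          # face map returning the source vertex
    d0 = {e: e[1] for e in x1}          # face map returning the target vertex
    return x0, x1, d0, d1, s0


x0, x1, d0, d1, s0 = graph_to_simplicial(["A", "B", "C"], [("A", "B"), ("B", "C")])

# Simplicial identity in low dimension: both faces of a degenerate edge
# return the vertex it came from.
print(all(d0[s0[v]] == v == d1[s0[v]] for v in x0))
```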

A brief review of simplicial sets is given, summarizing some points made in my previous paper on simplicial set representations in causal inference [14]. A more detailed review can be found in many references [29, 48]. Simplicial sets are higher-dimensional generalizations of directed graphs, partially ordered sets, and ordinary categories themselves. Importantly, simplicial sets and simplicial objects form a foundation for higher-order category theory [26, 27]. Using simplicial sets and objects enables a powerful machinery to reason about both directional and non-directional paths in causal models and to model equivalence classes of causal models.

Simplicial objects have long been a foundation for algebraic topology [48, 49] and more recently for higher-order category theory [26, 27, 50]. The category $\Delta$ has non-empty ordinals $[n] = \{0, 1, \ldots, n\}$ as objects and order-preserving maps $[m] \to [n]$ as arrows. An important property in $\Delta$ is that any order-preserving mapping is decomposable as a composition of an injective and a surjective mapping, each of which is decomposable into a sequence of elementary injections $\delta_i: [n-1] \to [n]$, called coface mappings, which omit $i \in [n]$, and a sequence of elementary surjections $\sigma_i: [n+1] \to [n]$, called co-degeneracy mappings, which repeat $i \in [n]$. The fundamental simplex $\Delta[n]$ is the presheaf of all morphisms into $[n]$, that is, the representable functor $\Delta(-, [n])$. The Yoneda Lemma [16] assures us that an n-simplex $x \in X_n$ can be identified with the corresponding map $\Delta[n] \to X$. Every morphism $f: [n] \to [m]$ in $\Delta$ is functorially mapped to the map $\Delta[n] \to \Delta[m]$ in the category of simplicial sets.

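Any morphism in the category $\Delta$ can be defined as a sequence of codegeneracy and coface operators, where the coface operator $\delta_i: [n-1] \to [n]$, $0 \leq i \leq n$, is defined as

$$\delta_i(j) = \begin{cases} j, & 0 \leq j \leq i - 1 \\ j + 1, & \text{otherwise.} \end{cases}$$

Analogously, the codegeneracy operator $\sigma_j: [n+1] \to [n]$, $0 \leq j \leq n$, is defined as

$$\sigma_j(k) = \begin{cases} k, & 0 \leq k \leq j \\ k - 1, & \text{otherwise.} \end{cases}$$

Note that under the contravariant mappings, coface mappings turn into face mappings, and codegeneracy mappings turn into degeneracy mappings. That is, for any simplicial object (or set) $X$, we have $X(\delta_i) = d_i: X_n \to X_{n-1}$, and likewise, $X(\sigma_j) = s_j: X_n \to X_{n+1}$.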

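The compositions of these arrows define certain well-known properties [29, 48]:

$$\delta_j \circ \delta_i = \delta_i \circ \delta_{j-1}, \quad i < j$$

$$\sigma_j \circ \sigma_i = \sigma_i \circ \sigma_{j+1}, \quad i \leq j$$

$$\sigma_j \circ \delta_i = \begin{cases} \delta_i \circ \sigma_{j-1}, & i < j \\ 1, & i = j \text{ or } i = j + 1 \\ \delta_{i-1} \circ \sigma_j, & i > j + 1 \end{cases}$$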
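The standard identities among coface and codegeneracy maps can be checked by brute force on small ordinals. The following sketch is illustrative: it represents an order-preserving map as a tuple of values, takes the coface map $\delta_i: [n-1] \to [n]$ to be the injection omitting i and the codegeneracy map $\sigma_j: [n+1] \to [n]$ to be the surjection repeating j, and uses hypothetical function names.

```python
def coface(i, n):
    """delta_i : [n-1] -> [n], the order-preserving injection that omits i."""
    return tuple(j if j < i else j + 1 for j in range(n))


def codegeneracy(j, n):
    """sigma_j : [n+1] -> [n], the order-preserving surjection that repeats j."""
    return tuple(k if k <= j else k - 1 for k in range(n + 2))


def compose(g, f):
    """Composite g after f, with maps stored as tuples of values."""
    return tuple(g[x] for x in f)


def check_identities(max_n):
    """Verify the cosimplicial identities for all ordinals up to [max_n]."""
    for n in range(max_n + 1):
        # delta_j delta_i = delta_i delta_{j-1} for i < j, as maps [n-1] -> [n+1]
        for j in range(n + 2):
            for i in range(j):
                lhs = compose(coface(j, n + 1), coface(i, n))
                rhs = compose(coface(i, n + 1), coface(j - 1, n))
                assert lhs == rhs
        # sigma_j sigma_i = sigma_i sigma_{j+1} for i <= j, as maps [n+2] -> [n]
        for j in range(n + 1):
            for i in range(j + 1):
                lhs = compose(codegeneracy(j, n), codegeneracy(i, n + 1))
                rhs = compose(codegeneracy(i, n), codegeneracy(j + 1, n + 1))
                assert lhs == rhs
        # sigma_j delta_i, as maps [n] -> [n]
        identity = tuple(range(n + 1))
        for j in range(n + 1):
            for i in range(n + 2):
                lhs = compose(codegeneracy(j, n), coface(i, n + 1))
                if i < j:
                    rhs = compose(coface(i, n), codegeneracy(j - 1, n - 1))
                elif i in (j, j + 1):
                    rhs = identity
                else:
                    rhs = compose(coface(i - 1, n), codegeneracy(j, n - 1))
                assert lhs == rhs
    return True


print(check_identities(5))
```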

Example 2. The “vertices” of a simplicial object X in a cPROP category are the objects in , and the “edges” are its arrows , where and are objects in . Note that is a contravariant functor , and since has only one object, the effect of this functor is to pick out objects in . The simplicial object is . Given any such arrow, the face operators and recover the source and target of each arrow. Also, given an object X of category , we can regard the degeneracy operator as its identity morphism .

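Example 3. Given a cPROP category $\mathcal{C}$, we can identify an n-simplex $\sigma$ of a simplicial object in the cPROP category $\mathcal{C}$ with the following sequence:

$$\sigma = C_0 \xrightarrow{f_1} C_1 \xrightarrow{f_2} \cdots \xrightarrow{f_n} C_n,$$

and the face operator $d_0$ applied to $\sigma$ yields the sequence

$$d_0 \sigma = C_1 \xrightarrow{f_2} C_2 \xrightarrow{f_3} \cdots \xrightarrow{f_n} C_n,$$

where the object $C_0$ is “deleted” along with the morphism $f_1$ leaving it.

Example 4. Given a cPROP category $\mathcal{C}$ and an n-simplex $\sigma$ of the simplicial object in the cPROP category $\mathcal{C}$, the face operator $d_n$ applied to $\sigma$ yields the sequence

$$d_n \sigma = C_0 \xrightarrow{f_1} C_1 \xrightarrow{f_2} \cdots \xrightarrow{f_{n-1}} C_{n-1},$$

where the object $C_n$ is “deleted” along with the morphism $f_n$ entering it.

Example 5. Given a cPROP category $\mathcal{C}$ and an n-simplex $\sigma$ of the simplicial object, the face operator $d_i$, $0 < i < n$, applied to $\sigma$ yields the sequence

$$d_i \sigma = C_0 \xrightarrow{f_1} \cdots \xrightarrow{f_{i-1}} C_{i-1} \xrightarrow{f_{i+1} \circ f_i} C_{i+1} \xrightarrow{f_{i+2}} \cdots \xrightarrow{f_n} C_n,$$

where the object $C_i$ is “deleted”, and the morphism $f_i$ is composed with the morphism $f_{i+1}$.

Example 6. Given a cPROP category $\mathcal{C}$ and an n-simplex $\sigma$ of the simplicial object defined over the cPROP category, the degeneracy operator $s_i$ applied to $\sigma$ yields the sequence

$$s_i \sigma = C_0 \xrightarrow{f_1} \cdots \xrightarrow{f_i} C_i \xrightarrow{1_{C_i}} C_i \xrightarrow{f_{i+1}} \cdots \xrightarrow{f_n} C_n,$$

where the object $C_i$ is “repeated” by inserting its identity morphism $1_{C_i}$.

Definition 23. Given a cPROP category $\mathcal{C}$ and an n-simplex $\sigma$ of the simplicial object associated with the category, $\sigma$ is a degenerate simplex if some $f_i$ in $\sigma$ is an identity morphism, in which case $C_{i-1}$ and $C_i$ are equal.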

7.1. Nerve of a Category

There is a general way to construct a simplicial set representation of any category by constructing its nerve functor [28]. This construction formalizes what was illustrated in the above examples.

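Definition 24. The nerve of a category $\mathcal{C}$ is the set of composable morphisms of length n for $n \geq 0$. Let $N_n(\mathcal{C})$ denote the set of sequences of composable morphisms of length n:

$$N_n(\mathcal{C}) = \{ C_0 \xrightarrow{f_1} C_1 \xrightarrow{f_2} \cdots \xrightarrow{f_n} C_n \mid C_i \text{ is an object in } \mathcal{C},\ f_i \text{ is a morphism in } \mathcal{C} \}.$$

The set of n-tuples of composable arrows in $\mathcal{C}$, denoted by $N_n(\mathcal{C})$, can be viewed as a functor from the simplicial object $[n]$ to $\mathcal{C}$. Note that any non-decreasing map $\alpha: [m] \to [n]$ determines a map of sets $N_n(\mathcal{C}) \to N_m(\mathcal{C})$. The nerve of a category $\mathcal{C}$ is the simplicial set $N_\bullet(\mathcal{C})$, which maps the ordinal number object $[n]$ to the set $N_n(\mathcal{C})$.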
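For a small category, the nerve can be enumerated explicitly. The sketch below is illustrative and makes a simplifying assumption: the category is the poset generated by a DAG, so that there is at most one morphism between any two objects and a composable sequence is determined by its chain of objects. The function names are hypothetical.

```python
from itertools import product


def leq_relation(nodes, edges):
    """Reflexive-transitive closure of a DAG: the morphisms of its poset category."""
    leq = {(v, v) for v in nodes} | set(edges)
    changed = True
    while changed:
        changed = False
        for (a, b), (c, d) in product(list(leq), repeat=2):
            if b == c and (a, d) not in leq:
                leq.add((a, d))
                changed = True
    return leq


def nerve(nodes, edges, n):
    """n-simplices: chains (C_0, ..., C_n) with a morphism C_k -> C_{k+1} for each k."""
    leq = leq_relation(nodes, edges)
    return [
        chain for chain in product(nodes, repeat=n + 1)
        if all((chain[k], chain[k + 1]) in leq for k in range(n))
    ]


def face(i, simplex):
    """d_i: delete the i-th object (composing the two adjacent morphisms)."""
    return simplex[:i] + simplex[i + 1:]


def degeneracy(i, simplex):
    """s_i: repeat the i-th object (inserting its identity morphism)."""
    return simplex[:i + 1] + simplex[i:]


def is_degenerate(simplex):
    """A simplex is degenerate if it contains an identity morphism."""
    return any(a == b for a, b in zip(simplex, simplex[1:]))


nodes, edges = ["A", "B", "C"], [("A", "B"), ("B", "C")]
triangles = [s for s in nerve(nodes, edges, 2) if not is_degenerate(s)]
print(triangles)
print(face(1, ("A", "B", "C")), degeneracy(0, ("A", "B")))
```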

The importance of the nerve of a category comes from a key result [29, 51], showing that it defines a full and faithful embedding of a category.

Theorem 10. The nerve functor $N_\bullet: \mathbf{Cat} \to \mathbf{Set}_\Delta$ is fully faithful. More specifically, there is a bijection θ defined as

$$\theta: \mathbf{Cat}(\mathcal{C}, \mathcal{C}') \to \mathbf{Set}_\Delta(N_\bullet(\mathcal{C}), N_\bullet(\mathcal{C}')).$$

Unfortunately, the left adjoint to the nerve functor is not a full and faithful encoding of a simplicial set back into a suitable category. Note that a functor G from a simplicial object X to a category $\mathcal{C}$ can be lossy. For example, we can define the objects of $\mathcal{C}$ to be the elements of $X_0$ and the morphisms of $\mathcal{C}$ as the elements $f \in X_1$, where $f: a \to b$, $d_0 f = b$, $d_1 f = a$, and define the identity morphisms $1_a = s_0 a$ for $a \in X_0$. Composition in this case can be defined as the free algebra defined over elements of $X_1$, which is subject to the constraints given by elements of $X_2$. For example, if $x \in X_2$, we can impose the requirement that $d_1 x = d_0 x \circ d_2 x$. Such a definition of the left adjoint would be quite lossy because it only preserves the structure of the simplicial object X up to the 2-simplices. The right adjoint from a category to its associated simplicial object, in contrast, constructs a full and faithful embedding of a category into a simplicial set. In particular, the nerve of a category is such a right adjoint.

7.2. Topological Embedding of cPROP Categories

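Simplicial objects in cPROP categories can be embedded in a topological space using a construction originally proposed by Milnor [30].

Definition 25. The geometric realization $|X|$ of a simplicial object X in the cPROP category $\mathcal{C}$ is defined as the topological space

$$|X| = \bigsqcup_{n \geq 0} X_n \times \Delta^n / \sim,$$

where the n-simplex $X_n$ is assumed to have a discrete topology (i.e., all subsets of $X_n$ are open sets), and $\Delta^n$ denotes the topological n-simplex

$$\Delta^n = \Big\{ (p_0, \ldots, p_n) \in \mathbb{R}^{n+1} \;\Big|\; 0 \leq p_i \leq 1, \ \sum_i p_i = 1 \Big\}.$$

The spaces $\Delta^n$, $n \geq 0$, can be viewed as cosimplicial topological spaces with the following degeneracy and face maps:

$$\delta_i(t_0, \ldots, t_n) = (t_0, \ldots, t_{i-1}, 0, t_i, \ldots, t_n), \qquad \sigma_j(t_0, \ldots, t_n) = (t_0, \ldots, t_j + t_{j+1}, \ldots, t_n).$$

Note that $\delta_i: \Delta^n \to \Delta^{n+1}$, whereas $\sigma_j: \Delta^n \to \Delta^{n-1}$.

The equivalence relation ∼ above that defines the quotient space is given as

$$(d_i(x), (t_0, \ldots, t_n)) \sim (x, \delta_i(t_0, \ldots, t_n)), \qquad (s_j(x), (t_0, \ldots, t_n)) \sim (x, \sigma_j(t_0, \ldots, t_n)).$$

7.3. Topological Embeddings as Coends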

We will now bring in the perspective that topological embeddings of simplicial objects in cPROP categories can be interpreted as a coend [16] as well. Consider the functor $F: \Delta^{op} \times \Delta \to \mathbf{Top}$, where $F([n], [m]) = X_n \times \Delta^m$, where F acts contravariantly as a functor from $\Delta$ to Sets mapping $[n] \mapsto X_n$, and covariantly mapping $[m] \mapsto \Delta^m$ acts as a functor from $\Delta$ to the category $\mathbf{Top}$ of topological spaces.

The coend defines a topological embedding of a simplicial object X in a cPROP category, where $X_n$ represents composable morphisms of length n. Given this simplicial object, we can now construct a topological realization of it as a coend object

$$|X| = \int^{n} X_n \times \Delta^n,$$

where $X$ is the simplicial object defined by the contravariant functor from the simplicial category $\Delta$ into the category of simplicial objects in cPROP categories, and $\Delta^\bullet$ is a functor from the topological n-simplex realization of the simplicial category $\Delta$ into topological spaces $\mathbf{Top}$. As MacLane [16] explains it picturesquely, the “coend formula describes the geometric realization in one gulp”. The formula says essentially to take the disjoint union of affine n-simplices, one for each $x \in X_n$, and glue them together using the face and degeneracy operations defined as arrows of the simplicial category $\Delta$.

9. Classifying Spaces of Bayesian Networks

In this section, we will drill down from the abstractions above to prove a set of more concrete results regarding the classifying spaces of cPROP functors that correspond to Bayesian networks [

40] and that can be seen as analogous to CDU functors in affine CD categories [

9]. In this section, we will restrict our attention to the cPROP category

defined by the coalgebraic PROP

defined by the PROP maps

and

, as discussed earlier in

Section 4. We will also build on the results of the previous sections to state a categorical generalization of the Meek–Chickering (MC) theorem for cPROP categories [

3,

12]. This theorem, originally stated as a conjecture in Meek’s dissertation [

12], was formally proved by Chickering [

3]. The MC theorem states the following. Let $\mathcal{G}$ and $\mathcal{H}$ be any two causal DAG models such that $\mathcal{H}$ is an independence map of $\mathcal{G}$; that is, any conditional independence implied by the structure of $\mathcal{H}$ is also implied by the structure of $\mathcal{G}$. Then there exists a finite sequence of edge additions and covered edge reversals in $\mathcal{G}$ such that after each edge change, $\mathcal{G}$ remains a DAG, $\mathcal{H}$ remains an independence map of $\mathcal{G}$, and, finally, $\mathcal{G} = \mathcal{H}$ after the sequence is completed.

To begin with, we build on the characterization of causal DAGs $\mathcal{G}$, or Bayesian networks [2, 40], as functors from the cPROP (or equivalently CDU) category associated with the DAG to FinStoch (see [9] for more details). It is assumed that the reader is familiar with the terminology of DAG models in this section, and the reader is referred to [3] for additional details that have been omitted in the interests of space. We give a brief overview of the Markov category FinStoch (which was called Stoch in [9]). Its objects are finite sets, and its morphisms $f: X \to Y$ are $|Y| \times |X|$ stochastic matrices. States are stochastic matrices from a trivial input $I = \{\star\}$, which are essentially column vectors representing marginal distributions. The counit is a stochastic matrix consisting of a single row vector of 1’s. The composition of morphisms is defined by matrix multiplication. The monoidal product ⊗ in FinStoch is the Cartesian product on the objects and the Kronecker product of matrices, $(f \otimes g)^{(k,l)}_{(i,j)} = f^{k}_{i} \, g^{l}_{j}$. The Kronecker product corresponds to taking product distributions. FinStoch realizes the “swap” operation defined by the string diagram in Definition 11 as $\sigma: X \otimes Y \to Y \otimes X$ given by $\sigma^{(y',x')}_{(x,y)} = \delta_{x,x'} \, \delta_{y,y'}$, making it into a symmetric monoidal category.
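The structure of FinStoch just described can be made concrete with a small Python sketch. It assumes morphisms are stored as column-stochastic matrices (lists of rows) with exact `Fraction` entries; the helper names and the numerical values below are hypothetical and are not taken from the pancreatic cancer data.

```python
from fractions import Fraction


def compose(g, f):
    """Composition in FinStoch: the matrix product g f."""
    return [[sum(g[i][k] * f[k][j] for k in range(len(f)))
             for j in range(len(f[0]))] for i in range(len(g))]


def kron(f, g):
    """Monoidal product on morphisms: the Kronecker product of matrices."""
    return [[a * b for a in row_f for b in row_g]
            for row_f in f for row_g in g]


def swap(nx, ny):
    """Permutation matrix for X (x) Y -> Y (x) X with |X| = nx, |Y| = ny."""
    m = [[0] * (nx * ny) for _ in range(nx * ny)]
    for x in range(nx):
        for y in range(ny):
            m[y * nx + x][x * ny + y] = 1  # basis vector (x, y) goes to (y, x)
    return m


def is_stochastic(m, tol=1e-9):
    """Every entry is non-negative and every column sums to 1."""
    return (all(v >= 0 for row in m for v in row) and
            all(abs(sum(row[j] for row in m) - 1) < tol
                for j in range(len(m[0]))))


# Hypothetical states (column vectors) and a hypothetical channel f: X -> Y.
p = [[Fraction(1, 4)], [Fraction(3, 4)]]
q = [[Fraction(1, 3)], [Fraction(2, 3)]]
f = [[Fraction(9, 10), Fraction(1, 5)],
     [Fraction(1, 10), Fraction(4, 5)]]
counit = [[1, 1]]            # a single row of 1's

pushforward = compose(f, p)  # marginal on Y obtained by pushing p through f
joint = kron(p, q)           # product distribution on X (x) Y
swapped = compose(swap(2, 2), joint)
```

Here `swapped` coincides with `kron(q, p)`, and composing any state with `counit` yields the 1 × 1 matrix with entry 1, reflecting that probabilities sum to one.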

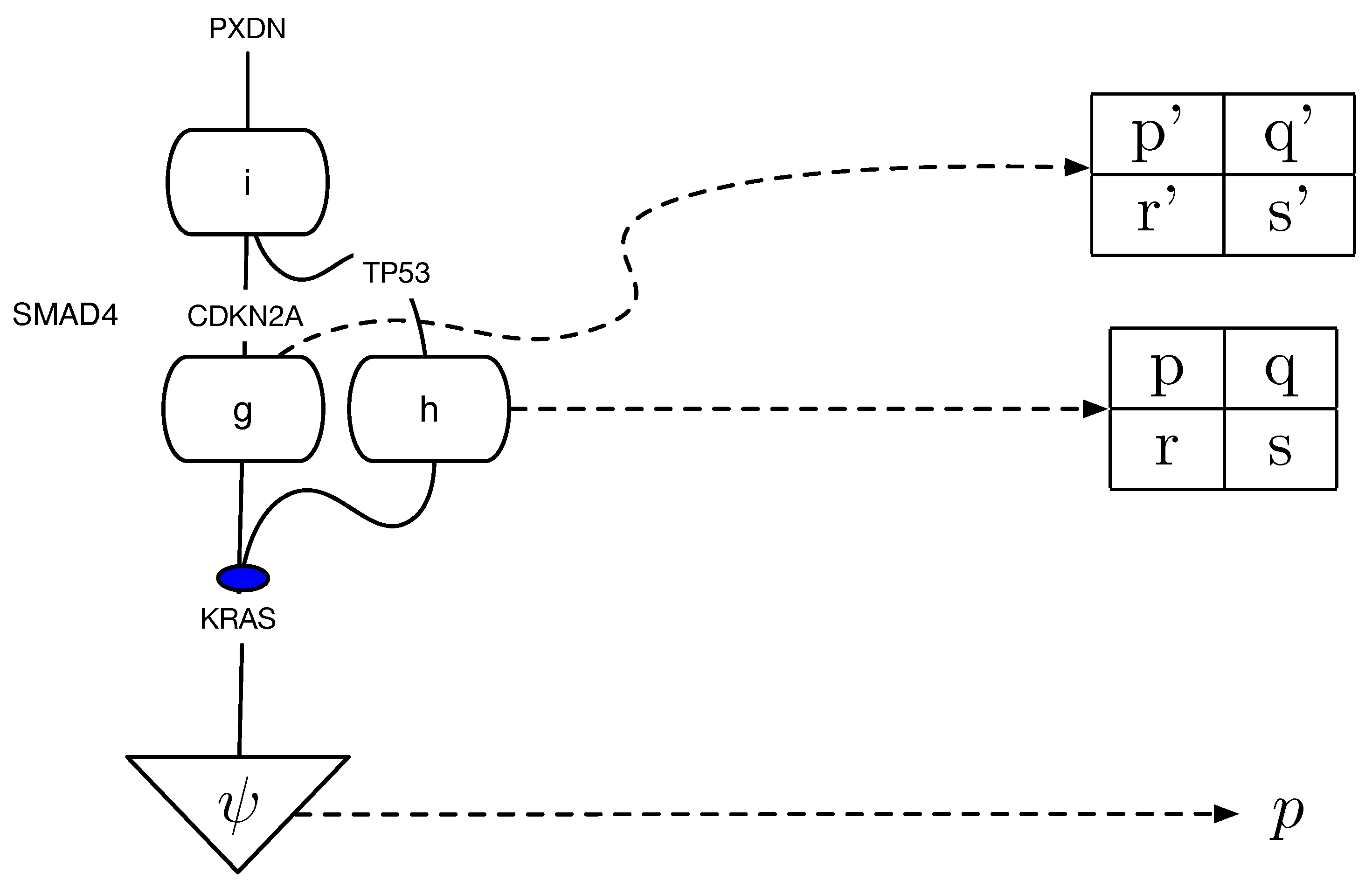

Figure 12 gives an example of such a functorial representation of Bayesian networks from the pancreatic cancer domain described in

Section 2. The probability values shown in the figure are estimated frequencies from actual data in [

38].

Figure 12. Example showing a cPROP functor modeling a causal model from the pancreatic cancer domain. The domain category is a string diagram represented as a Markov category [17]. The codomain category is FinStoch, the category of finite stochastic processes. The dashed arrows show how each morphism in the domain category is mapped to a corresponding conditional probability table, in the usual manner for Bayesian network models (each entry defines the conditional probability of whether a gene has mutated or not, depending on the mutation status of the gene that precedes it in the partial ordering). Not all object or morphism mappings are shown: to fully specify a functor, each object and morphism in the domain category must be mapped into a suitable object and morphism in the codomain category.


Theorem 11 (Proposition 3.1, [

9])

. There is a 1–1 correspondence between Bayesian networks based on a DAG and cPROP functors of the type FinStoch.

This theorem is essentially the same as that in [

9], since functors between the CDU categories

and

FinStoch are special types of functors between cPROP categories. We can model the category of all Bayesian networks as a functor category

on cPROP categories. In this section, we will explore the homotopic structure of this functor category, whose objects are Bayesian networks represented as functors and whose arrows are natural transformations.

Let us now build on the homotopic structures defined earlier in

Section 8 in terms of viewing each cPROP category

in terms of its classifying space

. The following theorem is straightforward to prove.

Theorem 12. Each Bayesian network encoded as a cPROP functor $F: \mathcal{C} \to$ FinStoch induces a continuous and cellular map $BF: B\mathcal{C} \to B\,$FinStoch of CW complexes (i.e., compactly generated spaces with a weak Hausdorff topology [55]).

Proof. Recall that $B$ is a functor from the category Cat to the category Top of topological spaces defined as the classifying space of a category, which is constructed by forming the simplicial set using the nerve of the category (where each n-simplex represents composable morphisms in a category of length n) and using its topological realization as defined by Milnor [30]. □

We can define an equivalence structure on cPROP functors representing DAG models, generalizing the classical definitions in Pearl [

2] and using Theorem 11 above.

Theorem 13. Two cPROP functors $F_{\mathcal{G}}$ and $F_{\mathcal{H}}$ into FinStoch are equivalent, denoted as $F_{\mathcal{G}} \approx F_{\mathcal{H}}$ (we use the same symbol ≈ used in [3] for DAG equivalence), if they are constructed from DAG models $\mathcal{G}$ and $\mathcal{H}$, respectively, that have the same skeletons and the same V-structures.

Proof. Two DAGs are known to be equivalent, meaning that they are both distributionally equivalent and independence equivalent, if their skeletons (the underlying undirected graphs obtained by ignoring edge orientations) are isomorphic and they have the same V-structures. Here, a V-structure is an ordered triple of nodes $(X, Y, Z)$ where $\mathcal{G}$ contains the edges $X \to Y$ and $Z \to Y$, and X and Z are not adjacent in $\mathcal{G}$. Given that Theorem 11 gives us a 1–1 correspondence between the DAG models and cPROP functors, the theorem follows straightforwardly. □
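The skeleton and V-structure criterion used in the proof can be checked mechanically. The following Python sketch assumes each DAG is given as a set of directed edges `(parent, child)` over a shared set of labeled nodes; the function names are hypothetical.

```python
from itertools import combinations


def skeleton(edges):
    """Underlying undirected graph: edges with orientation forgotten."""
    return {frozenset(e) for e in edges}


def v_structures(edges):
    """Triples (X, Y, Z) with X -> Y <- Z and X, Z non-adjacent."""
    skel = skeleton(edges)
    parents = {}
    for x, y in edges:
        parents.setdefault(y, set()).add(x)
    found = set()
    for y, pa in parents.items():
        for x, z in combinations(sorted(pa), 2):
            if frozenset((x, z)) not in skel:
                found.add((x, y, z))
    return found


def equivalent(edges_g, edges_h):
    """Same skeleton and same V-structures (labeled-node version)."""
    return (skeleton(edges_g) == skeleton(edges_h)
            and v_structures(edges_g) == v_structures(edges_h))


chain = {("X", "Y"), ("Y", "Z")}      # X -> Y -> Z
reversed_chain = {("Y", "X"), ("Z", "Y")}  # X <- Y <- Z
collider = {("X", "Y"), ("Z", "Y")}   # X -> Y <- Z
```

The two chains share a skeleton and have no V-structures, so they are equivalent; the collider has the same skeleton but introduces the V-structure $(X, Y, Z)$, so it is not equivalent to either chain.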

9.1. Natural Transformations Between Causal Models

We now introduce another significant concept from category theory, natural transformations, and use it to define the relationships between two causal models, such as Bayesian networks. In a range of situations in causal inference, from representing the effect of an intervention [2, 9] to searching the space of DAG models in the GES algorithm [3], it is necessary to relate two causal models with each other. The previous section showed how each causal model, such as a Bayesian network, can be viewed as a functor from a “syntactic” category to a “semantic” category. This section shows how natural transformations between two functors capture the relationships between two Bayesian networks or other causal models.

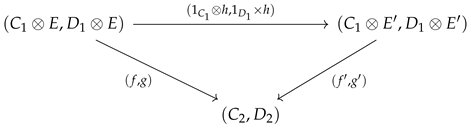

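Definition 34. Given any two functors $F: C \to D$ and $G: C \to D$ between the same pair of categories, a natural transformation $\eta: F \Rightarrow G$ from F to G is defined through a collection of mappings, one for each object c of C, thereby defining a morphism $\eta_c: Fc \to Gc$ in D for each object in C. These components are required to satisfy the naturality condition: for every morphism $f: c \to c'$ in C, the square commutes, that is, $Gf \circ \eta_c = \eta_{c'} \circ Ff$.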

A natural isomorphism is a natural transformation in which every component is an isomorphism.
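For functors into FinStoch, the naturality square can be verified numerically, since all morphisms are matrices. The sketch below assumes a tiny domain category with a single non-identity morphism `f: c -> c2`; the dictionaries, the name `is_natural`, and the numerical entries are hypothetical illustrations.

```python
from fractions import Fraction


def matmul(a, b):
    """Matrix product a b, used as composition of stochastic matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def is_natural(F_mor, G_mor, eta):
    """Check G f . eta_c == eta_c' . F f for every morphism f: c -> c'.

    F_mor and G_mor map a morphism name to (source, target, matrix);
    eta maps each object to the matrix of its component.
    """
    for name, (src, dst, Ff) in F_mor.items():
        Gf = G_mor[name][2]
        if matmul(Gf, eta[src]) != matmul(eta[dst], Ff):
            return False
    return True


M = [[Fraction(9, 10), Fraction(1, 5)],
     [Fraction(1, 10), Fraction(4, 5)]]
S = [[0, 1], [1, 0]]  # relabels the two outcomes; it is its own inverse

F = {"f": ("c", "c2", M)}
G = {"f": ("c", "c2", matmul(matmul(S, M), S))}  # G f = S M S
eta = {"c": S, "c2": S}

natural = is_natural(F, G, eta)
not_natural = is_natural(F, {"f": ("c", "c2", M)}, eta)
```

Because every component here is a permutation matrix, `eta` is in fact a natural isomorphism between `F` and `G`; keeping `G f = M` unchanged breaks the square, since `M S` and `S M` differ.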

To concretize this abstract notion of a natural transformation, let us define as the functor

F the causal model shown in

Figure 12, and let the functor

G be defined by the causal model in

Figure 13, which deletes the morphism from

to

. In terms of the natural transformation, note that each path in the above commutative diagram defines a morphism in the codomain “semantic” category. For the specific deleted morphism

f, note that

will continue to denote the original mapping from

to

, but

will now be mapped into the empty mapping, as there is now no causal pathway in the intervened model.

To generalize from the example just given, if we want to define the set of all possible natural transformations between two causal models represented as functors, we can use an elegant framework introduced by Yoneda called (co)end calculus [

16]. Specifically, we can characterize the interaction between two Bayesian networks represented as cPROP functors through Yoneda’s (co)end calculus, where for simplicity we use the same cPROP category

to denote that these DAGs have the same skeleton and V-structures.

Theorem 14. Given two cPROP functors $F: \mathcal{C} \to$ FinStoch and $F': \mathcal{C} \to$ FinStoch representing two DAG models, the set of natural transformations between them can be defined as an end

$$\mathrm{Nat}(F, F') = \int_{c \in \mathcal{C}} \mathrm{FinStoch}(Fc, F'c).$$

Proof. The proof of this result follows readily from the standard result that the set of natural transformations between two functors is an end (see page 223 in [16]). □

We can use this result to construct a homotopic structure on the topological space of all continuous and cellular maps of CW complexes defined in Theorem 12 above.

Theorem 15. The topological space of all continuous and cellular maps of CW complexes, where each map is induced by a cPROP functor into FinStoch as in Theorem 12, is decomposed into equivalence classes by the equivalence relation ≈ defined in Theorem 13.

Proof. The equivalence relation ≈ on cPROP functors is reflexive, symmetric, and transitive, because as Theorem 11 showed, there is a 1–1 correspondence between causal DAG models and cPROP functors. Each equivalence class of DAG models maps precisely into an equivalence class of cPROP functors. □

We can now bring to bear some properties of the classifying space developed by Segal [28] to construct a homotopy on cPROP categories and functors.

Theorem 16. For any two cPROP functors $F: \mathcal{C} \to$ FinStoch and $F': \mathcal{C} \to$ FinStoch, a natural transformation $\eta: F \Rightarrow F'$ induces a homotopy between $BF$ and $BF'$.

If $F: \mathcal{C} \to \mathcal{D}$ and $G: \mathcal{D} \to \mathcal{C}$ is an adjoint pair of functors, then $B\mathcal{C}$ is homotopy equivalent to $B\mathcal{D}$ (here, $\mathcal{D}$ is a subcategory of FinStoch that is defined by the mapping of each object and morphism in $\mathcal{C}$).

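Proof. We can think of the natural transformation $\eta: F \Rightarrow F'$ as a functor $H$ from $\mathcal{C} \times [1]$ to $\mathcal{D}$, where $[1]$ is the ordered set $\{0 \to 1\}$ viewed as a category. We define the action of $H$ on objects as $H(C, 0) = FC$ and $H(C, 1) = F'C$. On morphisms $f: C \to C'$, we can set $H(f, \mathbf{1}_0) = Ff$ and $H(f, \mathbf{1}_1) = F'f$. For the only non-trivial morphism $0 \to 1$ in $[1]$, we define $H(\mathbf{1}_C, 0 \to 1) = \eta_C$. The composite structure $B\mathcal{C} \times [0,1] \cong B\mathcal{C} \times B[1] \cong B(\mathcal{C} \times [1]) \xrightarrow{BH} B\mathcal{D}$ yields the desired homotopy.

Given any adjoint pair of functors $F: \mathcal{C} \to \mathcal{D}$ and $G: \mathcal{D} \to \mathcal{C}$, we can define the induced natural transformations $\mathbf{1}_{\mathcal{C}} \Rightarrow GF$ (the unit) and $FG \Rightarrow \mathbf{1}_{\mathcal{D}}$ (the counit). From the just established results on the natural transformation $\eta$, these induce homotopies between $BG \circ BF$ and the identity on $B\mathcal{C}$, and between $BF \circ BG$ and the identity on $B\mathcal{D}$, and the desired homotopy equivalence follows.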

□

9.2. Generalizing the Meek–Chickering Theorem to cPROP Categories

Since cPROP functors are in 1–1 correspondence with DAG models from Theorem 11, we can associate with any covered edge $X \to Y$ in a DAG model $\mathcal{G}$ an equivalent covered morphism in the Markov category associated with the DAG model $\mathcal{G}$.

Theorem 17. Let $\mathcal{G}$ be any DAG model, with associated cPROP functor $F_{\mathcal{G}}$, let $\mathcal{G}'$ be the result of reversing the edge $X \to Y$ in $\mathcal{G}$, and let $F_{\mathcal{G}'}$ be the corresponding modified cPROP functor. Then, there is an induced natural transformation $F_{\mathcal{G}} \Rightarrow F_{\mathcal{G}'}$ corresponding to reversing the edge, with $F_{\mathcal{G}} \approx F_{\mathcal{G}'}$ under the definition of cPROP functor equivalence in Theorem 13, if and only if the edge $X \to Y$ is a covered edge in $\mathcal{G}$.

Proof. The proof of this theorem follows readily from Lemma 2 in [3], showing that $\mathcal{G}'$ is a DAG model that is equivalent to $\mathcal{G}$ if and only if the edge that is reversed, namely, $X \to Y$, is covered in $\mathcal{G}$. □
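The covered-edge condition is easy to state in code. The sketch below uses the definition from [3] that an edge $X \to Y$ is covered when the parents of Y are exactly the parents of X together with X itself; the DAG encoding as a set of `(parent, child)` pairs and the function names are assumptions made for illustration.

```python
def parents(edges, node):
    """Set of parents of `node` in a DAG given as (parent, child) pairs."""
    return {x for x, y in edges if y == node}


def is_covered(edges, x, y):
    """Edge x -> y is covered when Pa(y) = Pa(x) together with x."""
    if (x, y) not in edges:
        raise ValueError(f"edge {x} -> {y} is not in the DAG")
    return parents(edges, y) == parents(edges, x) | {x}


def reverse_edge(edges, x, y):
    """Return a new edge set with x -> y replaced by y -> x."""
    if (x, y) not in edges:
        raise ValueError(f"edge {x} -> {y} is not in the DAG")
    return (set(edges) - {(x, y)}) | {(y, x)}


chain = {("X", "Y"), ("Y", "Z")}          # X -> Y -> Z
fork = reverse_edge(chain, "X", "Y")      # X <- Y -> Z
collider = reverse_edge(chain, "Y", "Z")  # X -> Y <- Z
```

In the chain, $X \to Y$ is covered, and reversing it yields a fork with the same skeleton and no V-structures. The edge $Y \to Z$ is not covered, and reversing it creates the V-structure $X \to Y \leftarrow Z$, so the result leaves the equivalence class.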

Theorem 18 ([3]). Let $F_{\mathcal{G}}$ and $F_{\mathcal{G}'}$ be a pair of equivalent cPROP functors corresponding to two equivalent DAG models $\mathcal{G}$ and $\mathcal{G}'$, for which there are δ edges in $\mathcal{G}$ that have the opposite orientation in $\mathcal{G}'$. Then, there exists a sequence of δ corresponding natural transformations transforming the functor $F_{\mathcal{G}}$ into the functor $F_{\mathcal{G}'}$, where each natural transformation can be implemented by constructing the cPROP functor for each intervening DAG model that is based on reversing a single additional edge, satisfying the following properties:

Each natural transformation in the sequence must correspond to a covered edge in $\mathcal{G}$.

After each natural transformation, the functors satisfy $F_{\mathcal{G}} \approx F_{\mathcal{G}'}$.

After all natural transformations are composed, the two functors satisfy $F_{\mathcal{G}} = F_{\mathcal{G}'}$.

Proof. Once again, the proof follows readily from the equivalent Theorem 3 in [3] exploiting the isomorphism between causal DAG models and cPROP functors from Theorem 11. □

To state the homotopic generalization of the Meek–Chickering theorem for functors between cPROP categories, we need to define the partial ordering on cPROP functors.

Definition 35. Define the partial ordering $F_{\mathcal{G}} \leq F_{\mathcal{H}}$ to indicate that the corresponding causal DAG $\mathcal{H}$ is an independence map of $\mathcal{G}$. Here, ≤ implies that if $\mathcal{G} \leq \mathcal{H}$, then $\mathcal{H}$ by necessity contains at least as many edges as $\mathcal{G}$.

Once again, it follows from the 1–1 correspondence between Bayesian networks and cPROP functors that the cPROP category corresponding to $\mathcal{H}$ must contain at least as many morphisms as the one corresponding to $\mathcal{G}$. We can now state the generalized Meek–Chickering theorem for functors between cPROP categories.

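Theorem 19. Let $\mathcal{C}_{\mathcal{G}}$ and $\mathcal{C}_{\mathcal{H}}$ be cPROP categories corresponding to any pair of DAGs $\mathcal{G}$ and $\mathcal{H}$ such that $\mathcal{G} \leq \mathcal{H}$. Let r be the number of edges in $\mathcal{H}$ that have the opposite orientation in $\mathcal{G}$, and let m be the number of edges in $\mathcal{H}$ that do not exist in either orientation in $\mathcal{G}$. These edges translate correspondingly to the differences in morphisms in $\mathcal{C}_{\mathcal{G}}$ and $\mathcal{C}_{\mathcal{H}}$. Then, there exists a sequence of at most $r + 2m$ natural transformations that map the cPROP functor $F_{\mathcal{G}}$ into the cPROP functor $F_{\mathcal{H}}$ satisfying the following properties:

Each edge reversal and corresponding natural transformation corresponds to a covered edge.

After each natural transformation corresponding to an edge reversal and edge addition, $F_{\mathcal{G}} \leq F_{\mathcal{H}}$.

After all natural transformations are composed, the resulting natural transformation from $F_{\mathcal{G}}$ to $F_{\mathcal{H}}$ is a natural isomorphism.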

Proof. The proof generalizes in a straightforward way from Theorem 4 in [3], since we are exploiting the 1–1 correspondences between causal DAG models and cPROP functors. The proof of this theorem in [3] is constructive, since it involves an algorithm, and it would take more space than we have to sketch out the entire process of categorifying it. However, each step in the algorithm APPLY-EDGE-OPERATION in [3] can be equivalently implemented for cPROP categories using the correspondences between causal DAGs and cPROP functors. □
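The quantities r and m in Theorem 19, and the resulting bound on the length of the sequence, can be computed directly from the two edge sets. The following Python sketch assumes DAGs are encoded as sets of `(parent, child)` pairs and takes the bound $r + 2m$ from Theorem 4 in [3]; the function names are hypothetical, and the sketch does not verify that $\mathcal{H}$ is an independence map of $\mathcal{G}$.

```python
def reversal_and_addition_counts(g_edges, h_edges):
    """Return (r, m) for DAGs G and H given as sets of (parent, child) pairs.

    r: edges of H that appear in G with the opposite orientation.
    m: edges of H that appear in G in neither orientation.
    """
    g = set(g_edges)
    r = sum(1 for (x, y) in h_edges if (y, x) in g)
    m = sum(1 for (x, y) in h_edges
            if (x, y) not in g and (y, x) not in g)
    return r, m


def max_sequence_length(g_edges, h_edges):
    """Upper bound r + 2m on the number of reversals and additions."""
    r, m = reversal_and_addition_counts(g_edges, h_edges)
    return r + 2 * m


# G has the single edge X -> Y; H is a complete DAG on X, Y, Z, so H is
# an independence map of every DAG on these three nodes.
G = {("X", "Y")}
H = {("Y", "X"), ("Y", "Z"), ("X", "Z")}
counts = reversal_and_addition_counts(G, H)
bound = max_sequence_length(G, H)
```

For this pair, one edge of $\mathcal{H}$ is reversed in $\mathcal{G}$ and two edges are missing, so at most five natural transformations are needed in the sense of Theorem 19.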

9.3. Homotopy Groups of Meek–Chickering Causal Equivalences

We can now define the equivalence classes under the Meek–Chickering formulation in a more abstract manner using abstract homotopy. First, we define the notion of an equivalence class of objects in any category $\mathcal{C}$ simply as that defined by the connectedness relation defined by the morphisms. Two objects $C$ and $C'$ are in the same equivalence class in a category $\mathcal{C}$ if they are joined by a finite chain of morphisms, each pointing in either direction:

$$C = C_0 \leftrightarrow C_1 \leftrightarrow \cdots \leftrightarrow C_k = C'.$$

Definition 36. Define the set of path components $\pi_0(\mathcal{C})$ of a category $\mathcal{C}$ as the set of equivalence classes of the morphism relation on the objects generated by $C \sim C'$ whenever there is a morphism $C \to C'$.

Theorem 20 ([29]). The set of path components of the topological space $B\mathcal{C}$, namely, $\pi_0(B\mathcal{C})$, is in bijection with the set of path components $\pi_0(\mathcal{C})$ of $\mathcal{C}$.

This relationship between the original category $\mathcal{C}$ and its topological realization $B\mathcal{C}$ now gives us a homotopic characterization of the GES algorithm described in Section 3. More formally, the GES proceeds by moving from one equivalence class of causal models to the next by addition or removal of (non-covered) edges. These steps can be characterized in terms of the natural transformations between equivalence classes of the cPROP (or CDU [9]) functors that define the causal DAGs. We treat the equivalence class of DAGs within each connected component as a locally connected topological space. Thus, the cardinality of the set $\pi_0(\mathcal{C})$ is exactly the number of equivalence classes, which is again the same as the number of connected components in $B\mathcal{C}$, defining the 0th homotopy group in the topological realization of the category $\mathcal{C}$.
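The set of path components of a small category can be computed with a union–find pass over its morphisms, since any morphism places its source and target in the same component. The sketch below assumes the category is presented by a finite list of objects and a list of `(source, target)` pairs for its non-identity morphisms; the function name and the example data are hypothetical.

```python
def path_components(objects, morphisms):
    """Equivalence classes of objects connected by zigzags of morphisms."""
    parent = {o: o for o in objects}

    def find(o):
        # Follow parent pointers to the representative, halving paths.
        while parent[o] != o:
            parent[o] = parent[parent[o]]
            o = parent[o]
        return o

    for src, dst in morphisms:
        parent[find(src)] = find(dst)  # direction of the arrow is ignored

    classes = {}
    for o in objects:
        classes.setdefault(find(o), set()).add(o)
    return {frozenset(c) for c in classes.values()}


# Hypothetical category: A -> B <- C form one component, D is isolated.
objs = ["A", "B", "C", "D"]
mors = [("A", "B"), ("C", "B")]
components = path_components(objs, mors)
```

When the objects are cPROP functors and the morphisms are the natural transformations induced by covered edge reversals, each returned class plays the role of one equivalence class of causal models, and the number of classes is the cardinality of $\pi_0$.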

Theorem 21. The GES procedure can be formally characterized topologically as moving from one equivalence class of connected topological spaces in to another, where an equivalence class of connected objects in is defined by the connectedness relation of natural transformations that correspond to reversals of covered edges within an equivalence class.

Proof. The proof of this theorem follows directly from Theorems 2 and 20 and its homotopic version stated as Theorem 19. □

To summarize the results of this section, it has been described how to construct the classifying spaces corresponding to Bayesian network causal models, which lets us construct a homotopic equivalence across causal models represented as functors on cPROP categories. Categorical generalizations of the definitions in [

3] have been introduced, and the categorical generalization of the Meek–Chickering theorem for Markov categories has been stated.