1. Introduction

Many quantum information protocols require two parties (Alice and Bob) to share a two-qubit singlet state:

$$|\Psi^-\rangle = \frac{1}{\sqrt{2}}\left(|01\rangle - |10\rangle\right),$$

where Alice holds the first qubit and Bob the second. Common examples of such two-party protocols include teleportation [1], summoning tasks [2,3,4,5] and other forms of distributed quantum computing (e.g., [6]), entanglement-based key distribution protocols (e.g., [7]), communication and information processing between collaborating agents in some protocols for position verification and position-based cryptography (e.g., [8,9,10]), and relativistic quantum bit commitment (e.g., [11]).

The two parties should be confident they share singlets, both to ensure the protocol will execute as intended and to preclude the possibility of an adversarial third party having interfered with the system for their own advantage. A natural method for distinguishing singlets from other quantum states is to measure a quantity for which the singlet attains a unique maximum. Common examples are the expressions in the CHSH [12] and Braunstein–Caves [13] inequalities. These have the additional advantage that they test Bell nonlocality. They can thus detect any adversarial attack that replaces the singlet qubits with classical physical systems programmed to produce deterministic or probabilistic results in response to measurements, since these can be modelled by local hidden variables. In this paper, we examine a quantity that has the same properties but has not previously been studied as a singlet test, the (anti-)correlation of outcomes of random measurements separated by a fixed angle $\theta$, and compare it to schemes derived from the CHSH and Braunstein–Caves inequalities (e.g., [14,15,16,17]).

The singlet testing schemes we examine in this paper only require both parties to accurately perform projective measurements. Unlike singlet purification schemes [18], they do not need quantum computers or quantum memory. This makes them potentially advantageous when users’ technology is limited, or more generally when single-qubit measurements are cheaper than multi-qubit operations.

We assume that Alice and Bob are separated, and the singlet is created either by Alice or by a source separate from both Alice and Bob, before each qubit is transmitted to the respective party. An adversarial third party may intercept the qubits during transmission and alter them, either to obtain information or to disrupt the protocol. We refer to her as ‘Eve’, but emphasize that we are interested in protocols beyond key distribution and that her potential interference need not necessarily involve eavesdropping.

We compare the power of our proposed singlet testing schemes against four commonly studied attacks. These do not represent the full range of possible adversarial action, but illustrate why random measurement testing schemes can be advantageous in a variety of scenarios:

1. Single-qubit intercept–resend attack: Eve intercepts Bob’s qubit, performs a local projective measurement, notes the outcome, and sends the post-measurement state on to Bob. This could occur in a setting where Alice creates the singlet and transmits a qubit to Bob.

2. Bipartite state transformation: Eve intercepts both qubits and performs a quantum operation on them, replacing the singlet with some other two-qubit state that is sent to Alice and Bob.

3. LHV replacement: Eve replaces the singlet with a non-quantum system chosen so that Alice’s and Bob’s measurement outcomes are determined by local hidden variables instead of quantum entanglement.

4. Noisy quantum channel: This is described by a physically natural noise model (and is hence a special case of scenario 2, if we consider the noise as being due to Eve). This alters the singlet state as it is transmitted to Alice and Bob.

The advantages of these various attacks for Eve, in disrupting or obtaining information from the protocol, will depend on the context. We assume each offers Eve some potential advantage and focus on the extent to which Alice and Bob can detect the attacks.

We consider two different types of scheme that Alice and Bob may use to test the purported singlet:

1. Braunstein–Caves test: Testing the Braunstein–Caves inequality [13] with a specific set of N measurement choices for which the singlet uniquely induces the maximum violation [19]. We often focus in particular on the $N = 2$ case, the CHSH inequality [12], for which self-testing schemes have been extensively studied (e.g., [14,15,16,17]).

2. Random measurement test: Alice and Bob choose random local projective measurements that are constrained to have a fixed separation angle on the Bloch sphere [20] and calculate the anti-correlation of their measurement outcomes. For a wide range of angles, this is uniquely maximized by the singlet.

The intuition, which we test and quantify, is that the random measurement test may generally be more efficient than the Braunstein–Caves test. It tests anti-correlations for the same set of axis separations $\theta$, but chooses axes randomly over the Bloch sphere. This provides Eve with less information about the test measurements, and hence offers her less scope to tailor her attack to minimize its detectability. In particular, the random measurement test is rotationally symmetric, and is hence sensitive to any attack by Eve that breaks rotational symmetry. It can also be applied to any $\theta$, not just the discrete set of the form $\theta = \pi/(2N)$.

We first describe these schemes and analyse their efficiency. We discuss their feasibility in the final section.

3. Results

We will now describe and compare hypothesis tests for the singlet using (i) the Braunstein–Caves samples with parameter N or (ii) random measurement samples with $\theta = \pi/(2N)$. We link our choice of $\theta$ to N in this way, as this ensures both tests induce equal expected correlations when measuring singlets, allowing for a clear comparison of the effect of deviations. We recall that the test samples both follow shifted Bernoulli distributions:

with parameters defined through the expectation values in (

10) and (

25) as

If we denote the sample mean of

n Braunstein–Caves samples as

and the sample mean of

n random measurement samples as

, then they both follow shifted binomial distributions:

3.1. Description of Hypothesis Tests

We aim to test the following hypotheses:

If we wish to conduct the test using Braunstein–Caves samples, we generate

n samples of

(as in

Section 2.1) and let the test statistic be

.

If we wish to conduct the test using random measurement samples, we generate

n samples of

(as in

Section 2.2) and let the test statistic be

.

Let

be the desired size of the test, defined as the probability the null hypothesis is erroneously rejected when Alice and Bob do in fact share a singlet. The critical region

R is a set of values for the test statistic for which the null hypothesis is rejected. For both tests, we wish to define

R as follows:

where

is defined as the upper

-quantile of a

distribution. However, binomial quantiles can only take discrete values, so we are often unable to select one exactly corresponding to

. To rectify this, we instead set

to be the smallest integer, such that

and extend

R to a critical decision region

, where if our test statistic exactly equals

, we decide to reject the null hypothesis with probability

q, where

q is chosen so that

.

Through (

28), it follows that

, as

and

are identically distributed under the null hypothesis.

The power functions

and

for each test describe the probability the null hypothesis is rejected given the density matrix of the state being tested, and are defined using (

27)–(

30) as follows:

It is clear that

so whether (i) or (ii) is better at detecting non-singlet states in a given scenario can be determined by comparing the values of

and

associated with the testing of typical states arising from that scenario.

For large

n, the asymptotic power functions are described by the central limit theorem. If

is the cumulative distribution function of a

distribution, then, as

, the following holds:

where the ‘+0.5’ terms are the appropriate correction for a continuous limit of a discrete distribution and

is the upper

-quantile of a

distribution, with

.
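The randomised test described above can be sketched numerically. The following Python fragment is a minimal illustration under stated assumptions, not the implementation used in this paper. It takes the test statistic to be the count K of samples equal to −1 among n samples, assumes K is binomial with probability p0 = (1 − cos θ)/2 under the null hypothesis, and rejects for large K. The function names, the choice of statistic and the example parameters are all hypothetical.

```python
from math import comb, cos, pi


def binom_pmf(k, n, p):
    """P(K = k) for K ~ Bin(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def upper_tail(r, n, p):
    """P(K > r) for K ~ Bin(n, p)."""
    return sum(binom_pmf(k, n, p) for k in range(r + 1, n + 1))


def randomized_threshold(n, p0, alpha):
    """Return (r, q): r is the smallest integer with P(K > r) <= alpha under
    the null, and q is the probability of rejecting when K == r, chosen so
    that the overall size of the test is exactly alpha."""
    r = 0
    while upper_tail(r, n, p0) > alpha:
        r += 1
    q = (alpha - upper_tail(r, n, p0)) / binom_pmf(r, n, p0)
    return r, q


def power(n, p0, p1, alpha):
    """Probability of rejecting the null when K ~ Bin(n, p1)."""
    r, q = randomized_threshold(n, p0, alpha)
    return upper_tail(r, n, p1) + q * binom_pmf(r, n, p1)


# Example with assumed values: theta = pi/4, n = 100 samples, size 0.05.
theta = pi / 4
p0 = (1 - cos(theta)) / 2      # singlet: expected sample value cos(theta)
p1 = (1 - cos(theta) / 3) / 2  # alternative: expected sample value cos(theta)/3
r, q = randomized_threshold(100, p0, 0.05)
size = power(100, p0, p0, 0.05)    # equals alpha by construction
detect = power(100, p0, p1, 0.05)  # rejection probability under the alternative
```

Randomising at the boundary value r is what allows the size to equal the target exactly despite the discreteness of the binomial distribution; without it the achieved size would generally fall below the target.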

3.2. Comparison for Simple Intercept-Resend Attack

Consider the scenario in which Eve manages to intercept Bob’s qubit and performs the following measurement:

before sending the post-measurement qubit on to Bob. As the singlet can be expressed as follows:

it is clear that the post-measurement state will be the following:

The expected test samples are independent of Eve’s measurement outcome and are calculated in Table 1 using (10) and (25).

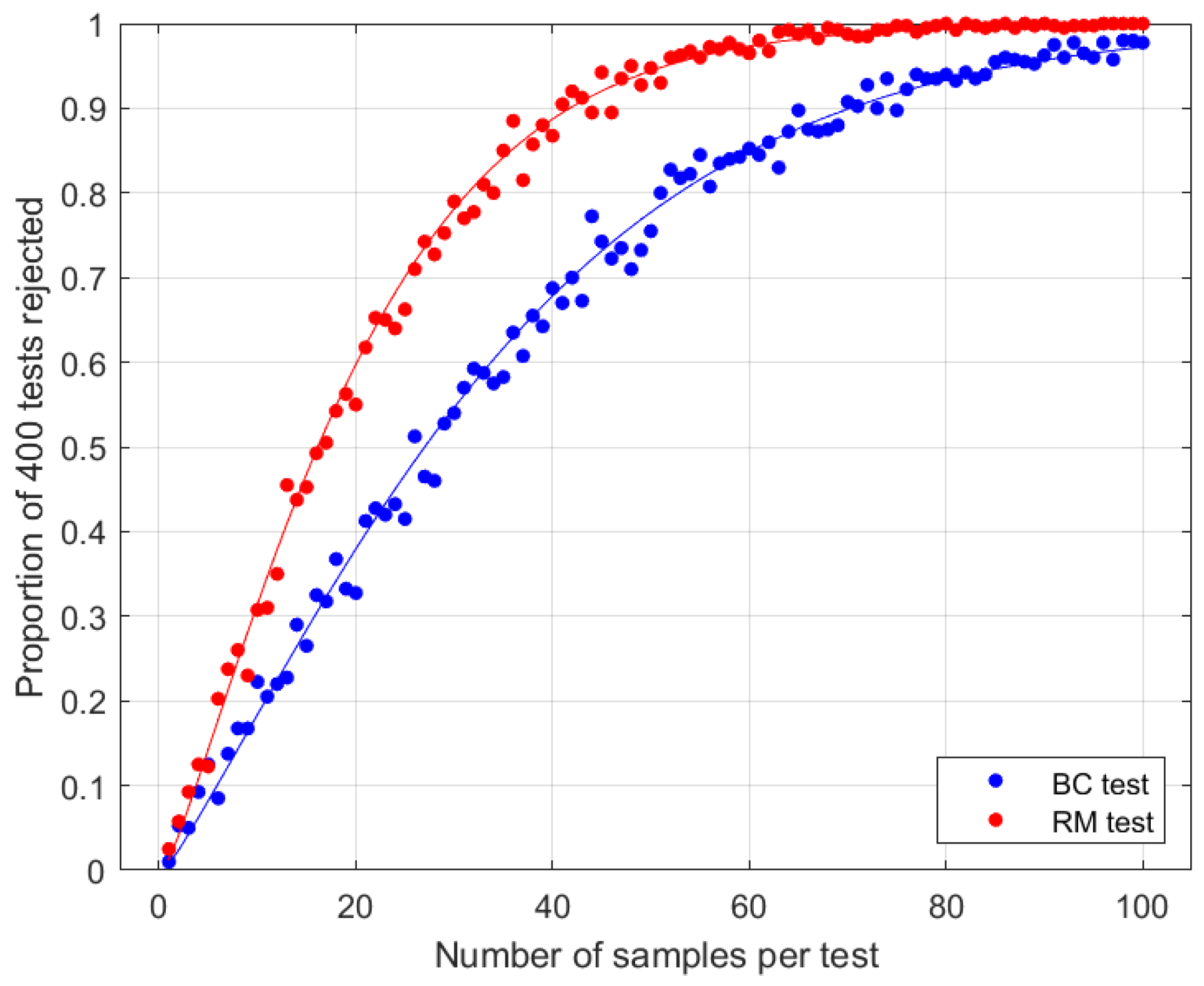

These results show that a single-qubit intercept–resend attack reduces the expected random measurement sample to $1/3$ of its singlet value, and reduces the expected Braunstein–Caves sample to between $0$ and $1/2$ of its singlet value, depending on the measurement made by Eve.
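The rotational symmetry of the random measurement test can be illustrated with a small Monte Carlo sketch. It assumes that the post-measurement state is a mixture of product states in which Bob’s qubit has Bloch vector ±n (Eve’s measurement axis) and Alice’s qubit has the opposite Bloch vector, so that the expected anti-correlation for axes a and b is (a·n)(b·n). Averaging over uniformly random axis pairs with fixed separation θ should then give a value close to cos(θ)/3, whatever n is. All function names and parameter values below are hypothetical.

```python
import math
import random


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def random_unit_vector(rng):
    """Uniform point on the unit sphere via normalised Gaussians."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(dot(v, v))
        if norm > 1e-12:
            return [x / norm for x in v]


def random_axis_pair(theta, rng):
    """Axes a, b uniformly random on the sphere subject to angle(a, b) = theta."""
    a = random_unit_vector(rng)
    while True:
        g = random_unit_vector(rng)
        proj = dot(g, a)
        t = [x - proj * y for x, y in zip(g, a)]  # part of g orthogonal to a
        norm = math.sqrt(dot(t, t))
        if norm > 1e-9:
            t = [x / norm for x in t]
            break
    b = [math.cos(theta) * x + math.sin(theta) * y for x, y in zip(a, t)]
    return a, b


def mean_anticorrelation(theta, eve_axis, samples, seed=0):
    """Monte Carlo average of (a.n)(b.n) over random axis pairs."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        a, b = random_axis_pair(theta, rng)
        total += dot(a, eve_axis) * dot(b, eve_axis)
    return total / samples


theta = math.pi / 4
estimate = mean_anticorrelation(theta, [0.0, 0.0, 1.0], samples=100_000)
reference = math.cos(theta) / 3  # one third of the singlet value cos(theta)
```

The analytic value follows because the average of the matrix of products of components of a and b over random rotations is proportional to the identity, with trace cos θ.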

Eve will choose

to achieve a desired balance of minimal disruption and maximal information gain; hence, her choice will depend on the parent protocol within which Alice and Bob intended to use the singlet. For example, if BB84 is the parent protocol, it is known [

21] that the Breidbart basis

is optimal for Eve; hence,

and the random measurement test has greater power. More generally, if Eve’s priority is to choose a basis which minimises disruption, the random measurement test will be more powerful (see Figure 1).

It is clear that choosing N to be as large as possible will maximise the difference between the expected correlations under the null and alternative hypotheses, leading to a more powerful test for both schemes. This implies that choosing $\theta = 0$ is optimal for the random measurement test in this scenario; hence, it is optimal for Alice and Bob to use the same randomly chosen measurements if they know they are distinguishing a singlet from a post-measurement state. However, it is known [22] (see Section 3.4) that for $\theta = 0$, the test does not distinguish the singlet from a class of simple LHV models.

3.3. Comparison for Bipartite State Transformation Attack

Consider the scenario in which Eve intercepts both qubits and manipulates them so that the singlet is transformed into some other state

, with the following singlet fidelity:

While we permit any

, we are particularly interested in small values. The expected test samples are calculated in

Table 2 using (

10) and (

25).

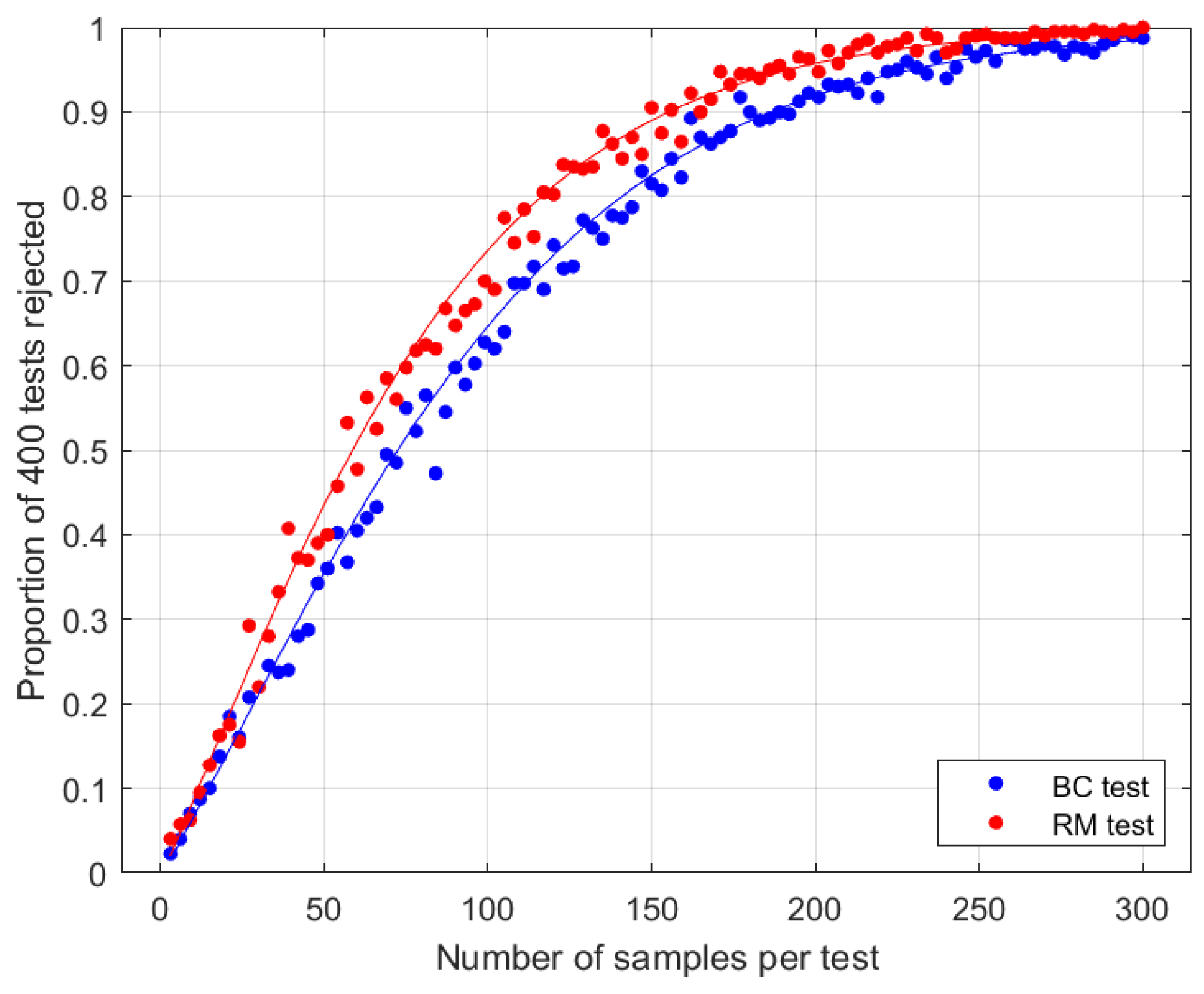

The results show that increases with at linear rate , while increases with at linear rate ; thus, the test of greater power can be identified by comparing the values of and . Note that the orthogonality of Bell states imposes the constraint .

When , we have , and when , we have , while for , both tests are equally strong.

As an example, in a scenario where Eve prioritises being as undetectable as possible for a given

, she would choose a transformation with

, so the random measurement test would be superior in this case (see

Figure 2).

One way to overcome the uncertainty in the value of

is to require Alice and Bob to apply the same randomly chosen unitary operation

U to both of their qubits before measurement, without remembering the identity of

U. This effectively transforms their shared system to a mixed state of the following form:

as the singlet component remains invariant under a $U \otimes U$ transformation, while the complement becomes maximally mixed. This ensures that both tests are equally strong when testing the resulting state, as

.
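The effect of this twirling step on both tests can be checked with a short worked calculation. The notation here is an assumption introduced for illustration: $F$ denotes the singlet fidelity of Eve’s state, $P_s = |\Psi^-\rangle\langle\Psi^-|$ the singlet projector, and $C_\rho(\theta)$ the expected anti-correlation of outcomes on state $\rho$ for any pair of measurement axes separated by $\theta$.

```latex
\begin{align}
\rho_F &= F\,P_s + \frac{1-F}{3}\,\bigl(\mathbb{1} - P_s\bigr), \\
C_{P_s}(\theta) &= \cos\theta, \qquad C_{\mathbb{1}/4}(\theta) = 0, \\
\tfrac{1}{4}\,\mathbb{1} = \tfrac{1}{4}\,P_s + \tfrac{3}{4}\cdot\tfrac{1}{3}\bigl(\mathbb{1} - P_s\bigr)
  &\;\Longrightarrow\; C_{(\mathbb{1}-P_s)/3}(\theta) = -\tfrac{1}{3}\cos\theta, \\
C_{\rho_F}(\theta) &= F\cos\theta - \frac{1-F}{3}\cos\theta = \frac{4F-1}{3}\,\cos\theta .
\end{align}
```

Because $\rho_F$ is invariant under $U \otimes U$, this value depends only on the separation angle and not on where the axes lie on the Bloch sphere, which is consistent with the two tests being equally strong on the twirled state.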

The largest possible choice of parameter N leads to the test of greater power for each type of scheme, much as it did in Section 3.2.

3.4. Comparison for LHV Replacement Attack

Consider the scenario in which Eve intercepts both qubits and replaces them with a not necessarily quantum system where the correlation between Alice and Bob is governed entirely by a local hidden variable (LHV) theory. Using the Braunstein–Caves inequality [

13], the expected value of the Braunstein–Caves sample is bounded for integers

as

for measurements of a two-sided LHV system. It is also known (Theorem 1 in [

20]) that the expected value of the random measurement sample for

is bounded for integers

as follows:

for measurements of a two-sided LHV system. The strictly positive difference between correlations resulting from LHV models and singlets implies that both tests can detect when the correlation between Alice and Bob’s measurement outcomes is caused by an LHV theory, with a power that is uniformly bounded for all possible LHV theories.

For

, the optimal parameters for both types of scheme are found by selecting the value of

N that maximises the difference between expected singlet correlations and the bound on LHV correlations. This difference is defined in (

39) and (

40) as follows:

As , and for , it follows that is maximised by over integer inputs greater than 1, providing an optimal minimum bound on test power for both schemes.
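The dependence of this gap on N can be tabulated directly. The sketch below assumes that each sample is normalised so that the singlet gives an expected value of cos(π/(2N)) and that the Braunstein–Caves inequality bounds the LHV value by 1 − 1/N; the function names and the range of N are hypothetical choices made for illustration.

```python
from math import cos, pi


def singlet_value(N):
    """Assumed expected sample value for the singlet at theta = pi/(2N)."""
    return cos(pi / (2 * N))


def lhv_bound(N):
    """Assumed per-sample LHV bound from the Braunstein-Caves inequality."""
    return 1 - 1 / N


def gap(N):
    """Difference between the singlet value and the LHV bound."""
    return singlet_value(N) - lhv_bound(N)


# Tabulate the gap for N = 2, ..., 10 and find the maximiser.
gaps = {N: gap(N) for N in range(2, 11)}
best_N = max(gaps, key=gaps.get)
```

Over this range the gap is largest at the smallest allowed N and shrinks as N grows, since the singlet value approaches 1 quadratically in 1/N while the LHV bound approaches 1 only linearly.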

This result does not identify which value of

leads to the random measurement test with the greatest power for detecting LHV models, as it is possible to use any

, not just the discrete selection considered above, and the gap is not generally given by (

41). This question was explored further in [

23] and resolved numerically in [

24]. The optimal value for detecting general LHV models that are optimized to simulate the singlet is

; the optimal value for detecting general LHV models that are optimized to simulate the singlet, with the constraint that they provide perfect anticorrelations for measurements about the same axis, is

.

It is also interesting to compare the optimal value of

for detecting the LHVs given by Bell’s original model [

22], which is defined such that Alice’s measurement on one hemisphere of the Bloch sphere leads to outcome

and the other leads to outcome

, with Bob’s measurement providing opposite values on the same hemispheres. For this model, it is easy to verify that

with the difference between this expected correlation and that for the singlet being

This quantity is maximised by

, leading to

. Hence, the random measurement test with this parameter has the greatest power for detecting this class of LHV models (see

Figure 3). For comparison,

.

Since for all , a test with any in this range would detect Bell’s LHV models with some efficiency.
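The optimisation for Bell’s model can be reproduced with a few lines of Python. This sketch assumes the standard result that the hemisphere model gives an expected anti-correlation of 1 − 2θ/π for axes separated by θ, compared with cos θ for the singlet. The variable names and the grid resolution are hypothetical.

```python
from math import asin, cos, pi


def bell_gap(theta):
    """Singlet anti-correlation cos(theta) minus the assumed Bell-model
    anti-correlation 1 - 2*theta/pi."""
    return cos(theta) - (1 - 2 * theta / pi)


# Stationary point: setting the derivative to zero gives sin(theta) = 2/pi.
theta_opt = asin(2 / pi)
gap_opt = bell_gap(theta_opt)

# Gap at theta = pi/4, the angle matched to N = 2 via theta = pi/(2N).
gap_quarter = bell_gap(pi / 4)

# Coarse grid check on the open interval (0, pi/2).
grid = [k * (pi / 2) / 1000 for k in range(1, 1000)]
grid_best = max(grid, key=bell_gap)
all_positive = all(bell_gap(t) > 0 for t in grid)
```

Under these assumptions the maximiser lies near 0.69 rad with a gap of about 0.21, only slightly larger than the gap at π/4, and the gap is positive throughout the interval because it is a concave function that vanishes at both endpoints.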

3.5. Comments on LHV Model Testing with Measurement Errors

When Alice and Bob program their measurement devices during a test, there is a possibility they incur small calibration errors. These could be realised as small deviations in their measurement angles on the Bloch sphere. We fix as a bound on the magnitude of a deviation in any single measurement for both Alice and Bob.

We examine the effect of such errors on the random measurement and Braunstein–Caves schemes.

3.5.1. Random Measurement Scheme

Theorem 1 of Ref. [

20] provides a bound on the expected value of a random measurement sample from an LHV model in this error regime. The theorem equivalently states that for any LHV model, any integer

and any

, we have

.

For

, it follows that the expected value of a random measurement sample from any LHV model with chosen angle

satisfies

In this setting, the greatest assured difference between the expected correlation for a singlet and that for an LHV model over all possible

-bounded errors is

is positive when , implying that the random measurement test can distinguish between singlet and LHV models in the presence of -bounded measurement errors when .

For , this required bound on converges monotonically to 0 as N increases. This implies that the scheme can only reliably tolerate a smaller range of absolute measurement errors when N is large, suggesting that schemes with reasonably large may be more robust.

3.5.2. Braunstein–Caves Scheme

The expected value of a Braunstein–Caves sample from an LHV model in this error regime is still bounded as

as the Braunstein–Caves inequality holds independently of Alice and Bob’s measurement choices.

In this setting, the expected correlation for a singlet over all

-bounded measurement errors can be calculated using (

6) and (

9) by shifting the usual Braunstein–Caves measurement angles for Alice by

and likewise for Bob by

, where both

and

represent

-bounded errors, leading to the following:

This implies that the greatest assured difference between the expected correlation for a singlet and that of an LHV model over all possible

-bounded errors is

is positive when , implying that, under this condition, the Braunstein–Caves test can distinguish between singlet and LHV models in the presence of -bounded measurement errors.

For , this required bound on converges monotonically to 0 as N increases. This implies that the scheme can only reliably tolerate a smaller range of absolute measurement errors when N is large, again suggesting that schemes with a small N may be more robust.

3.5.3. Conclusions

In summary, it is shown that both schemes are still able to distinguish between singlet and LHV models in the presence of small deviations in the intended measurement angle. As N becomes large, we become less sure of the robustness of each scheme, as the proven range of tolerable measurement errors decreases.

3.6. Comparison for Noisy Quantum Channel

Consider the scenario in which Eve takes no action, but the quantum channel used for state transmission to Alice and Bob is affected by noise. Different quantum channels are afflicted with different types of noise; however, as a simple example, we can consider a depolarising channel that replaces the singlet with the maximally mixed state with probability .

The effect of this noise on a singlet can be modelled using two-qubit Werner states, with these being the only set of states that is invariant under arbitrary unitary transformations acting equally on both qubits [25].

The two-qubit Werner state can be defined as follows:

where

parametrises the strength of the noise, with

corresponding to a pure singlet state in the absence of noise.

The expected test samples are calculated in Table 3 using (10) and (25).

Hence, both tests are equally powerful in testing for depolarising noise. As in

Section 3.2 and

Section 3.3, a larger value of

N leads to a test of greater power, so the choice of a large

N and

would be optimal.

As an additional example, we can consider the effect of a simple dephasing channel which acts on a qubit as Pauli gate

Z with probability

p. The effect of this noise on a singlet can be described as follows:

where we restrict

.

The expected test samples are calculated in Table 4 using (10) and (25).

It is clear that the random measurement test has greater power in testing for this type of dephasing for any

. Just as in

Section 3.2 and

Section 3.3, a larger value of

N leads to a test of greater power, so a choice of large

N and

would be optimal.

4. Discussion

While there is no universally superior choice of singlet test, we have seen that the random measurement test is theoretically superior or equal in many natural scenarios, including in the detection of intercept–resend or transformation attacks, where Eve prioritises minimising her chance of detection, distinguishing LHV models, and detecting rotationally invariant noise.

These results provide a rationale for considering the random measurement test for singlet verification over more conventional CHSH schemes (e.g., [14,15,16,17]). A complete analysis would consider the full range of attacks open to Eve and the full range of tests available to Alice and Bob. This would define a two-party game (with Alice and Bob collaborating as one party and Eve as the other), in which the optimal strategy for each party is likely probabilistic. However, Eve’s actions may be limited depending on how the singlets are generated and distributed and on the technologies available to her. Also, Alice and Bob may be able to exclude non-quantum LHV attacks if they can test qubits before measurement to ensure they are in the appropriate physical state.

Our discussion has mainly focussed on the ideal case, in which Alice and Bob can carry out perfectly precise measurements. Establishing that random measurement tests have an advantage in this case shows they are potentially valuable options, and motivates the development of technology that can implement them more easily and precisely. However, at present, imprecisions need to be taken into account when assessing the relative feasibility, advantages and costs of all the considered tests. For example, the Braunstein–Caves test only requires the calibration of measurement devices in a finite number of orientations around a great circle on the Bloch sphere, while the random measurement test requires the ability to measure in all possible orientations. The Braunstein–Caves test may thus be a more desirable choice if calibrating detectors or, equivalently, manipulating qubits precisely is difficult. An analysis of the feasibility of carrying out random measurement tests with current or foreseeable future technology, a task for future work, would illuminate these tradeoffs.

In principle, the random measurement protocol can be implemented in various ways, each of which requires some resources. One option is for Alice and Bob to pre-coordinate their measurements. This requires secure classical communication and/or secure classical memory, albeit not necessarily a large amount. For example, if Alice and Bob choose from a pre-agreed list of approximately uniformly distributed axes on the Bloch sphere, they can specify a measurement pair with about 40 bits, choosing pairs separated by the chosen to within error . Consuming secure classical communication and/or memory at this rate is not hugely demanding, and may be a reasonable option in many quantum cryptographic and communication scenarios. However, relatively precise pre-coordinated measurements effectively define (if pre-agreed) or consume (if securely communicated) large amounts of a shared secret key. Singlet verification may be required for only a small fraction of the shared singlets. Still, the advantage is, at best, context-dependent in protocols that aim to generate one-time pads.

An alternative, if Bob has short-term quantum memory, is for Alice to communicate her measurement choice after Bob receives and stores his qubit. Each can then define their measurement choice using locally generated or stored random bits, and Bob can delay his measurement choice until he receives Alice’s, with no additional security risk.

Another possible option is for Alice and Bob to choose measurements randomly and independently, and then sort their results into approximately

-separated pairs post-measurement for some discrete set of

in the range

. This effectively means carrying out random measurement tests for each

in the chosen set, up to some chosen finite precision. This protocol effectively uses a random variable

, and further analysis is needed to characterise its efficiency. The Braunstein–Caves protocol can be similarly adapted to avoid pre-coordination if Alice and Bob each independently choose measurements from set (2) and then sort their results into pairs that correspond to complete elements of (2). For a test with parameter N, they would, on average, retain a fraction $2/N$ of their samples. If the remainder are discarded, this requires them to multiply their initial sample size by $N/2$ to compensate. However, some of the discarded data could be used for further Braunstein–Caves tests if N is factorisable. Other anti-correlation tests could, in principle, be carried out on the remainder (although the finite precision loophole for measurements on the circle [23] needs to be allowed for). In the $N = 2$ (CHSH) case, there is no loss of efficiency, as all choices by Alice and Bob would correspond to an allowed pair.

Larger values of N provide more powerful tests for detecting bipartite state transformation attacks and rotationally invariant noise, while the smallest possible N is optimal for detecting LHV correlations. Alice and Bob should thus either choose N according to which type of attack is most likely or—if they are in the type of game-theoretic scenario discussed above—act against the potential use of any of the attacks by employing a probabilistic strategy that mixes different values of N.

In the case

, there is a natural sense in which the random measurement test is at least as good as, or better than, the Braunstein–Caves test in every scenario. In

Section 3.2, Eve’s goal could be to carry out an intercept-resend with minimum probability of detection (i.e.,

or

), in which case the random measurement test is more powerful. In

Section 3.3, Eve’s goal could be to carry out a state replacement that achieves fidelity

with minimum probability of detection (i.e.,

), in which case the random measurement test is again more powerful. In

Section 3.4 and

Section 3.6, both tests are equally good for all variations. In

Section 3.5, it is shown that both tests are still effective in the presence of small measurement calibration errors.

Our results thus make a clear case for the consideration of random measurement tests, and add motivation to continue work [23,24] focused on identifying their power for the full range of $\theta$.

Random measurement tests are, at present, technologically challenging. More work is also needed to characterise their robustness in real-world applications where finite precision is inevitable, with various plausible error models, and where there may be a wide range of plausible adversarial attacks. For example, Eve might employ a mixture of the attacks discussed above, choosing different attacks randomly for different singlets, and/or combinations of these attacks on each singlet. That said, our results suggest that random measurement tests should be considered, as and when the technology allows, in scenarios where efficient singlet testing is critical and the costs of classical and/or quantum memory resources are relatively negligible. The optimal testing strategies against general attacks likely also involve random mixtures of tests. It would thus also be very interesting to explore the advantages of random measurement tests in more sophisticated testing strategies, such as mixtures of random measurements with different angles [24] and routed singlet tests [26] using random measurements.