Abstract

In complex systems, it is often observed that as a control parameter is varied, there are certain intervals during which the system undergoes dramatic change. In biology especially, these signatures of criticality are thought to be connected with efficient computation and information processing. Guided by the classical theory of rate–distortion (RD) from information theory, we propose a measure for detecting and characterizing such phenomena from data. When applied to RD problems, the measure correctly identifies exact critical trade-off parameters emerging from the theory and allows for the discovery of new conjectures in the field. Other application domains include efficient sensory coding, machine learning generalization, and natural language. Our findings give support to the hypothesis that critical behavior is a signature of optimal processing.

1. Introduction

Criticality has been a hallmark of many fundamental processes in nature. For instance, in classical work, Landau [1] and others [2] investigated critical phase transitions between discrete states of matter. More generally, this concept has been used to describe a complex system that is in sharp transition between two different regimes of behavior as one varies a control parameter. Often, a critical point borders ordered and disordered behavior, such as what occurs in the classical 2D Lenz–Ising spin model of statistical physics at special temperatures [3]. Typically, if a system is too hot, disorder prevails, while if it is too cold, it freezes into a low-entropy configuration. The tipping points between such regimes have been the subject of much study in science.

In the recent literature, the notion of criticality [4,5,6] has grown to encompass a computational element [7,8], with an emphasis on how to apply it to understand information processing in natural dynamical systems such as organisms [9,10]. In these applications, it is frequently argued that optimal properties for computation emerge [11,12,13,14,15,16,17] when systems are critically poised at some special set of control parameters. Of particular excitement is how criticality can help neuroscientists understand the brain and where such signatures have been observed [18,19,20,21,22,23,24,25,26]. Another exciting area where criticality may be present is in large neural networks, which seem to generalize to certain problems at critical scales, training epochs, or dataset sizes [27,28].

Here, we leverage concepts from rate–distortion (RD) theory [29,30] to give insight into how normative principles governing a system [31], such as efficient coding [32,33], can lead to critical behavior. Consider a system A that evolves its internal steady-state dynamics through changes in a continuous control parameter t. The equilibrium behavior of A at t is modeled as a conditional distribution p_t(y|x), which defines the probability that any given input x results in system state y. We say that A exhibits a critical phase transition about a special value of the control variable if p_t(y|x) changes dramatically near this parameter setting.

To quantify the change at a given t, we average over inputs x the Kullback–Leibler divergence (D_KL) between the distributions p_t(·|x) and p_{t+Δt}(·|x), with Δt small. We call this a divergence rate for the evolution of the system’s behavior along control parameter t. Given a sample of parameters t, we determine the significant peaks in the divergence rate, which we identify as critical control settings for the system. As we shall see in several examples, local maxima in the divergence rate seem to coincide with locations of phase transitions in a system (Figures 1–3). Intriguingly, this measure can uncover higher-dimensional manifolds of criticality (Figure 2b). This is the equivalent of finding the local maxima in the rate of change in evolving information-theoretic measures [34].

In the language of RD theory, these stochastic Input/Output systems are called codebooks and they determine, via a rate parameter β, a minimal communication rate R for encoding the state x as y, given a desired average distortion D. More specifically, the control parameter β indexes a pair on the RD function, which separates possible from impossible encodings by a continuous curve in the positive orthant (see Figures 4 and 5).

For example, any lossy compression of a signal class has a particular rate and reconstruction error, which must necessarily lie on or above this curve. Intriguingly, the RD function contains a discrete set of special points that correspond to critical control parameters and codebooks , where some states y disappear or reappear in a coding. Intuitively, these are the behavioral phase transitions that must occur when traversing the RD curve from zero to maximal distortion.

In biology, the system A could be an organism that lossily encodes a signal through a channel bottleneck. For example, the retina communicates via the optic nerve to the cortex, which is argued to be a several-fold compression of information capture from raw visual input [31]. We may also zoom out in scale to consider collections of organisms. In this setting, the theory of punctuated equilibrium in evolution [35] proposes that species are stable (in equilibrium) until some outside force requires them to change, upon which, species adapt quickly (punctuation). Here, the control parameter might correspond to some environmental variable in the habitat of the species, and sharp phase transitions [36,37] could emerge from some underlying normative dynamics [38,39,40,41,42].

We first validated our approach on classical RD problems with a number of states n small enough for comparison with mathematically exact calculations. We demonstrate that the critical arising from theory match those that correspond to significant peaks in the divergence rate. We also discovered in a large-scale numerical experiment that there is an explicit relationship between counts of these critical control parameters and the number of Input/Output states. Namely, under mild assumptions, we conjectured that there are critical , corresponding to critical codebooks and critical pairs , for an RD function on n states (Conjecture 1). As another application, we show how experiments with our measure shed light on the weak universality conjecture [43], which has implications for efficient systems in engineering and nature.

We next explored several applications in more practical domains. In image processing, for instance, it is commonly desired to encode a picture with a small number of bits that nonetheless represent it faithfully enough for further computation down a channel. In human retina, this is thought to be accomplished by the firing patterns of ON and OFF ganglion neurons, which represent intensity values above or below a local mean, respectively. Using a standard database of natural images [44], we computed the RD function for ON/OFF encodings of small patches and studied the structure of its critical . We found that our measure uncovers several significant phase transitions for encoding these natural signals. Interestingly, the number of such critical points on the RD function seems to be significantly smaller than that predicted for a generic RD problem. This finding suggests that natural images define a special class of distributions [45] that might be exploited by visual sensory systems for efficient coding [46].

In machine learning, a common problem is to adapt model parameters to achieve optimal performance on a task. Clustering noisy data is one such challenge, and information theory provides tools for studying its solution [33,47]. We found that critical RD codebooks uncovered by the divergence rate can reveal original cluster centers and their count. Another challenge is to store a large collection of patterns in a denoising autoencoder. We examined a specific example of robustly storing an exponential number of memories [48,49] in a Hopfield network [50], given only a small fraction of patterns as training input. We show that as the number of training samples increases, the divergence rate detects critical changes in dynamics, which allow the network to increase performance until generalizing to the full set of desired memories (graph cliques). Thus, we believe that the method of finding criticality described in this paper can be applied to understanding phase transitions in machine learning algorithms. For example, of particular interest is when large language models begin to be able to give the correct answers to certain types of questions [51]. This represents a type of generalization criticality in the space of model parameters.

As a final application, we studied critical phenomena in writing. It has been pointed out that phase transitions arise in the geometry of language and knowledge [52,53,54,55,56,57]. We studied agriculture during the 1800s in the United States using journal articles and uncovered conceptual phase transitions across certain years. In particular, we found that an important shift in written expression occurred during the year 1840. Upon closer examination of the data, there were indeed significant language changes coinciding with the influences of war, religion, and commerce that were occurring at the time.

The outline of this paper is as follows. We give the requisite background for defining divergence rate in Section 2. Next, we explain in Section 3 the findings from applying our measure to various domains such as RD theory, sensory coding, machine learning, and language. We close with a discussion in Section 5 and a conclusion in Section 6.

2. Background

We first define a measure of conditional distribution change over an independent control variable, which we call a divergence rate. As the control parameter varies, peaks in this divergence rate can be used to predict critical phase transitions in a system’s behavior. Our methodology is inspired by the work of [54], who used the same measure to compute surprise in a sequence of successive debates of the French Revolution and, hence, which topics tended to gain traction. We also give a brief background on rate–distortion theory, which provides a powerful class of examples to validate our approach and its utility as a tool for discovering new results in the field (e.g., Conjecture 1).

2.1. Definition of Measure

Let X be a set of data points with underlying distribution ; that is, the probability of is given by . Also, suppose for real parameters t and each that there are conditional distributions specifying the probability of an output y given input x. The variable t could be a rate–distortion parameter in RD theory, the size of a training dataset, the number of epochs for estimating a model, or simply time. Where is written as M, the independent variable should be inferred as t. In this work, we restrict our attention to discrete distributions so that X is a finite set.

Definition 1 (Divergence rate).

The following non-negative quantity measures how much a system changes behavior from t to :

The quantity is the Kullback–Leibler divergence of two distributions u and v, although other such functions could be used. The divergence rate is a simple proxy for the rate of change of a system’s behavior. In particular, to detect critical t, we find local maxima in across a range of values of the parameter t.
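The computation in Definition 1 can be sketched in a few lines. This is a minimal sketch rather than the authors' implementation: it assumes the divergence rate has the form (1/Δt) Σ_x q(x) D_KL(p_{t+Δt}(·|x) ‖ p_t(·|x)), and both the direction of the divergence and the division by Δt are assumptions made for illustration. The function names `kl_divergence` and `divergence_rate` are hypothetical.

```python
import numpy as np

def kl_divergence(u, v):
    """Kullback-Leibler divergence D(u || v) in nats for discrete u, v."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    support = u > 0  # terms with u = 0 contribute zero
    if np.any(v[support] == 0):
        return np.inf  # u puts mass on a state that v excludes
    return float(np.sum(u[support] * np.log(u[support] / v[support])))

def divergence_rate(q, p_t, p_next, dt):
    """Assumed form: (1/dt) * sum_x q(x) * D(p_next(.|x) || p_t(.|x)).

    q: input distribution, length n_x
    p_t, p_next: conditionals at t and t + dt, shape (n_x, n_y), rows sum to one
    """
    total = sum(qx * kl_divergence(row_next, row_t)
                for qx, row_t, row_next in zip(q, p_t, p_next) if qx > 0)
    return total / dt
```

Under this reading, the rate is zero when the conditionals do not change and infinite when a state with zero probability at t acquires positive probability at t + Δt.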

The following lemma validates the intuition that the divergence rate measures phase transitions.

Lemma 1.

Suppose that for any and that is differentiable at t. Then, the divergence rate tends to zero as Δt goes to zero.

Proof.

Set . Consider the quantity with small for a fixed x, which looks like

Since , we have , so that the limit of as goes to zero is indeed zero. □

On the other hand, it is easy to verify by its definition that the divergence rate is infinite for any such for all , but for which there is some state y with .

2.2. Determining Critical Control Parameters

To locate the critical control parameters of an evolving system , we find all significant local maxima produced by the measure. In practice, numerical imprecision or the presence of noise results in a divergence rate that has several local maxima that likely do not represent critical changes in system behavior. To filter out such false positives, we first normalize the set of divergence rates over samples by subtracting the mean value and then dividing it by the standard deviation. This allows us to filter out peaks that are not significant (for example, peaks for some ). From here onwards, divergence rate will refer to this normalized divergence rate.

In practice, the divergence rate is potentially non-concave and, since t is sampled, we are left with finding peaks in a piecewise linear function. To this end, we utilize a discrete version of the Nesterov momentum algorithm [58] to find significant peaks. We find that the approach is fairly insensitive to hyperparameters when implemented. We provide explicit details of this method and its pseudocode in Section 4.
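The normalization and filtering steps can be illustrated with a short sketch. It is a deliberately simpler stand-in, not the Nesterov-momentum peak finder used in this work: it standardizes the sampled divergence rates and keeps strict local maxima above a cutoff. The `threshold` parameter and the function names are hypothetical, and the sketch assumes all sampled rates are finite.

```python
import numpy as np

def normalize(rates):
    """Subtract the mean and divide by the standard deviation."""
    rates = np.asarray(rates, dtype=float)
    std = rates.std()
    if std == 0:
        return np.zeros_like(rates)  # constant signal: nothing stands out
    return (rates - rates.mean()) / std

def significant_peaks(rates, threshold=1.0):
    """Indices of local maxima whose normalized height exceeds threshold."""
    z = normalize(rates)
    peaks = []
    for i in range(1, len(z) - 1):
        is_local_max = z[i] > z[i - 1] and z[i] >= z[i + 1]
        if is_local_max and z[i] > threshold:
            peaks.append(i)
    return peaks
```

For example, `significant_peaks([0, 0.1, 0, 0, 5, 0, 0, 0.1, 0])` keeps only index 4, since the two small bumps fall below the cutoff after normalization.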

2.3. Rate–Distortion Theory

Rate–distortion (RD) theory ([59], Chapter 10) has frequently been used to analyze and develop lossy compression methods [60] for images [61], videos [62], and even memory devices [63]. It also offers some perspective on how biological organisms organize themselves based on their perceptions of the world around them [64]. For example, rate–distortion theory has been used to understand fidelity–efficiency tradeoffs in sensory decision-making [42] and has also been useful in understanding relationships between perception and memory [65]. At a molecular level, it has been used to study the communication channels and coding properties of proteins [39,66].

Suppose that we have a system with n states and an input probability distribution defined on X. We seek a deterministic coding of x to y, with the overall error arising from this coding determined by a distortion function , which expresses the cost in setting x to y (typically, we assume for all x).

Remarkably, provided only with q and d, the Blahut–Arimoto algorithm [67,68] produces the convex curve of minimal rate over all deterministic codings with a fixed level of average distortion D. For example, when , so that error is not allowed in a coding, the optimal rate is the Shannon entropy of the input distribution q. In general, when , the minimal rate achievable for a coding with average distortion D is less than and is determined by a unique point on the RD curve. This is intuitive as a sacrifice of some error in coding should yield a dividend in a better possible rate.

Although the RD curve determines a theoretical boundary between possible and impossible deterministic coding schemes, the curve itself is determined via an optimization over stochastic codings, which do not directly lend themselves to practical deterministic implementations. Nonetheless, computing the RD curve is still useful for understanding the complexity of a given problem and benchmarking. See Figures 4 and 5 for examples of RD curves in various settings.

Mathematically, the RD curve is parameterized by a real number that indexes an optimal stochastic coding of x to y as a codebook or matrix of conditionals (so that ). The average distortion is given by . We also set to be the corresponding distribution for a given output y.

It is classical, using Lagrange multipliers, that points on the RD curve arise from solutions to a set of equations in the codebooks and output distributions. For expositional simplicity in the following, we drop in subscripts and set . We also identify and with and , respectively. The governing equations for the RD curve are then given by the following.

Definition 2.

The Blahut–Arimoto (BA) equations for the RD curve are

When the numbers are non-negative integers, solutions to the BA equations form a real algebraic variety, which allows them to be computed symbolically using methods of computational algebra [69].
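For readers who want to experiment numerically, the standard textbook form of the Blahut–Arimoto iteration can be sketched as follows. This is a minimal sketch under stated assumptions, not the code used for the figures: it assumes the weights are W[x, y] = exp(−β d(x, y)), measures rate in nats, and starts from a uniform output distribution. The function name and its `n_iter` and `tol` parameters are hypothetical.

```python
import numpy as np

def blahut_arimoto(q, d, beta, n_iter=1000, tol=1e-12):
    """Iterate the BA fixed-point map at a fixed trade-off parameter beta.

    q: source distribution, shape (n_x,)
    d: distortion matrix, shape (n_x, n_y)
    Returns (codebook p(y|x), output p(y), rate in nats, average distortion).
    """
    q = np.asarray(q, dtype=float)
    d = np.asarray(d, dtype=float)
    W = np.exp(-beta * d)                      # W[x, y] = exp(-beta * d(x, y))
    p = np.full(d.shape[1], 1.0 / d.shape[1])  # start from a uniform output
    for _ in range(n_iter):
        Z = W @ p                              # Z[x] = sum_y W[x, y] p(y)
        p_new = p * (W.T @ (q / Z))            # updated output distribution
        converged = np.max(np.abs(p_new - p)) < tol
        p = p_new
        if converged:
            break
    Z = W @ p
    codebook = W * p[None, :] / Z[:, None]     # p(y|x), rows sum to one
    joint = q[:, None] * codebook
    distortion = float(np.sum(joint * d))
    # log(p(y|x) / p(y)) = -beta * d(x, y) - log Z(x), so no division by p(y)
    rate = float(np.sum(joint * (-beta * d - np.log(Z)[:, None])))
    return codebook, p, rate, distortion
```

Sweeping `beta` over a grid and passing consecutive codebooks to a divergence-rate routine gives the kind of curve whose peaks are studied here. For very large `beta`, the exponentials can underflow, so a practical implementation would work in log space.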

Example 1 (two states).

When n = 2, the three equations in (1) translate to these for and :

More generally, set W to be the matrix defined by . (Note that W is invertible near ). Then, the BA equations (1) are easily seen to combine into a single compact equation for any n as follows, where :

Here, ⊙ is the point-wise (Hadamard) product of two vectors, is the element-wise ratio of the vectors u and v, and is the transpose of W. Note that for any p and q in the simplex, the right-hand side of (3) is also in the simplex.

Interestingly, when the BA equations are written in this form, we can identify the RD curve as a fixed-point using Browder’s fixed point theorem [70,71]. We summarize this finding with the following useful characterization.

Proposition 1.

The RD curve is given by the following equivalent objects.

1. The minimization of the RD objective function.
2. Browder’s fixed-point for the function on the right-hand side of (3).
3. Solutions to the BA equations.

Proof.

The equivalence of the first and third statement is classical theory. For the equivalence of the second and the third, consider the map from the product of an interval and the simplex to the simplex given by . By Browder’s fixed-point theorem, this has a fixed-point given by a curve , satisfying , which is Equation (3). □

When some of the indeterminates become zero at some , the BA equations still make sense; we simply end up with fewer variables. Such equations involving inverses of linear forms also appear in work on the entropic discriminant [72] in algebraic geometry and maximum entropy graph distributions [73].

When β is large (w near 0), optimal codebooks are close to the identity, corresponding to average distortion near zero. In this case, it can be shown directly using Equation (3) that there is an explicit solution [30]. Set to be the all-ones vector.

Lemma 2.

The exact solution for small w (large β) of the RD function is given by

Proof.

When β is very large, all entries of p are non-zero. In this case, we may cancel p on both sides of Equation (3) to give

The lemma now follows by multiplying both sides of this last equation by . □
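The cancellation argument in this proof can be checked numerically. The sketch below is one reading of the derivation, not a reproduction of Equation (4): it assumes W[x, y] = exp(−β d(x, y)) and the fixed-point form p = p ⊙ Wᵀ(q ⊘ (W p)), so that cancelling p gives 1 = Wᵀ(q ⊘ (W p)) and hence p = W⁻¹(q ⊘ (W⁻ᵀ 1)). The function name `large_beta_output` is hypothetical.

```python
import numpy as np

def large_beta_output(q, d, beta):
    """Closed-form output distribution, valid while every entry is positive.

    Assumes W[x, y] = exp(-beta * d(x, y)) with W invertible.
    """
    q = np.asarray(q, dtype=float)
    W = np.exp(-beta * np.asarray(d, dtype=float))
    ones = np.ones(W.shape[0])
    u = np.linalg.solve(W.T, ones)    # u = W^{-T} 1
    return np.linalg.solve(W, q / u)  # p = W^{-1} (q / u)
```

As a small worked case under these assumptions, take two states with Hamming distortion and q = (0.7, 0.3). Writing w = exp(−β), the formula gives p = ((0.7 − 0.3w)/(1 − w), (0.3 − 0.7w)/(1 − w)), whose second entry reaches zero at w = 3/7.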

It turns out that the rate–distortion curve has critical points on it where dramatic shifts in codebooks occur, an observation that was one of our inspirations. For the purposes of this work, these critical are defined when some goes from positive to zero (or vice versa) as varies (we remark that in the literature, another equivalent definition is usually used, which is when the derivative of the RD curve has a discontinuity). Note that the third equation in (1) implies that this is when also has such a change. Given this definition, Lemma 1 shows that the divergence rate characterizes these critical points.

The first critical can be determined using Lemma 2 as follows.

Corollary 1.

The first critical on the RD curve going from to is the first value of β that makes some entry of the vector in Equation (4) equal to zero.

Proof.

In the next section, we shall continue with our two-state Example 1 above and compare this theoretical calculation from Corollary 1 to the first critical found by our criticality measure (see Figure 1).

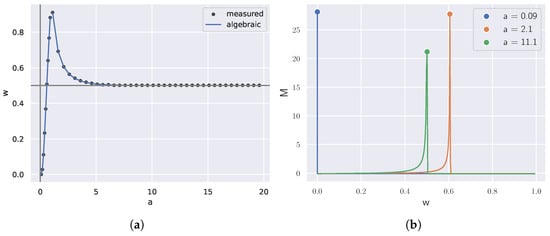

Figure 1.

(a) Critical RD parameters determined mathematically from the theory (algebraic) match up exactly with significant peaks in the divergence rate (measured); see Section 3.1.1. (b) Plotting M vs. w for three different values of a shows the single peak in the divergence rate M.

3. Applications

We present applications of finding peaks (Section 2.2) in the divergence rate (Definition 1) to the discovery of phase transitions in various domains such as rate–distortion theory, natural signal modeling, machine learning, and language.

3.1. Rate–Distortion Theory

Recall that given a distribution q and a distortion function d, the rate–distortion curve characterizes the minimal rate of a coding given a fixed average distortion. A point on the curve is specified by a parameter and is determined by an underlying codebook . In particular, the setup for RD theory can be seen as a direct application of our general framework with the control parameter .

In the following subsections, we shall validate our approach to finding critical phase transitions by comparing our peak finder estimates of critical β with those afforded by RD theory. In cases where the number of states is small, such as n = 2 and n = 3, we can compute explicit solutions to the RD equations (1) and compare them with those estimated by our divergence rate approach (see Figure 1 and Figure 2a,b).

After demonstrating that peaks in the divergence rate agree with theory in these cases, we next turn our attention to exploring what our criticality tool can uncover theory-wise for the discipline. In particular, our experiments suggest new results for RD theory, such as that for a random RD problem, the number of critical on n states goes as (Conjecture 1). We also use our framework to help shed light on a universality conjecture [43] inspired by tradeoffs in sensory coding for biology (Conjecture 2).

3.1.1. Exact RD on Two States

The case of states with varying distortion is already interesting. Consider source distribution and distortion matrix for fixed given by

In this case, using Lemma 2, one can explicitly solve the equations for the output distributions before the first critical point in terms of (recall, ):

From Corollary 1, the first critical is determined when one of these expressions becomes zero. One can check that for , first has a zero value for some critical . At this value, is automatically determined to be . For , on the other hand, it is that becomes zero.

We consider the case , as the other is similar. Our main tool is the generalized version of Descartes’ Rule of Signs [74,75]. This rule says that an expression in a positive indeterminate w, such as from above, has a number of positive real zeroes bounded above by the number s of sign changes of its coefficients. Moreover, the true number of positive zeroes can only be s, , …, or .
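The sign-change count in this rule is easy to mechanize. The following is a minimal sketch, assuming the expression is given as a list of (exponent, coefficient) pairs with distinct real exponents; the function names are hypothetical, and the list of candidate root counts simply restates the rule as described above.

```python
def sign_changes(terms):
    """Count sign changes of coefficients ordered by increasing exponent.

    terms: iterable of (exponent, coefficient) pairs with distinct exponents.
    """
    ordered = [c for _, c in sorted(terms) if c != 0]  # zero terms are skipped
    return sum(1 for a, b in zip(ordered, ordered[1:]) if a * b < 0)

def possible_positive_root_counts(terms):
    """Candidate numbers of positive zeroes: s, s - 2, ..., down to 0 or 1."""
    s = sign_changes(terms)
    return list(range(s, -1, -2))
```

For instance, w² − 3w + 2 has coefficient signs (+, −, +) and thus s = 2, so it can have two or no positive zeroes; here both w = 1 and w = 2 are zeroes.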

In our particular setting, we have so that there can only be two or no positive zeroes. As is a zero, it follows that there is some other positive number making this expression zero. It follows that our first critical point is . In particular, when , we have

Given these explicit calculations from the theory in hand, we would like to compare them with critical parameters determined from significant peaks in the divergence rate.

We summarize our findings in Figure 1. To produce the “measured” points in this plot, we varied the distortion measure using the parameter a, computed codebooks parameterized by with the BA algorithm, and then found critical values of at which the divergence rate peaked. At all values of , the critical value of w found by the noisy peak finder matched the corresponding exact value from the theory (the “algebraic” line). Note that near , the critical approached zero but was not defined there. Also, from the plot, one can guess that approaches − (resp. infinity) as a goes to infinity (resp. zero). This can also be verified directly from the theoretical calculations above.

3.1.2. Exact RD on Three States

We now consider a slightly more complicated example from Berger [76] with states. In this case, we assume a varying source for a parameter , but we fix a distortion matrix:

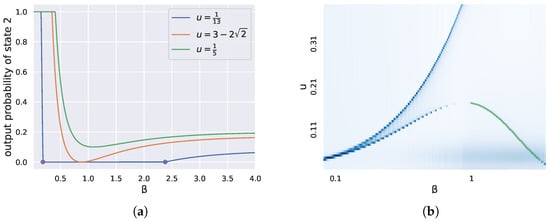

Figure 2.

(a) Consider for a parameter u and a fixed distortion d given by (5). For each , we plot the output probabilities of the second state as a function of , determined by the exact theory. The dots were determined from finding significant peaks in the divergence rate at . Note that they coincided with the vanishing and re-emergence of state 2 (). (b) We plotted a heatmap of the divergence rate as a function of u and . We also plotted in green the theoretical calculation (7) that matched the lower-right part of the curve in the heatmap.

Again using Lemma 2, we find that for large , the output distributions on the RD curve are given by

For example, when , this becomes

In this special case, one can check that the first critical occurs when becomes zero; that is, when

More generally, one can check that as long as , a critical is determined from the formula

In Figure 2a, we plotted for three different settings of the values of the output distribution for the second state as a function of , computed from the general solution (6). On the same plot, we placed two dots on the x-axis where we found representing peaks in the divergence rate in the case of . Notice they are located where the output probability of state two went to zero or emerged from zero.

Figure 2b was obtained by plotting the divergence rate as a heatmap against u and . The peak of the bottom convex shape corresponded to the value of , where a discontinuity appeared in the number of critical given a fixed u. Also shown in Figure 2b is a theoretically determined part of this curve, which matched precisely with the estimated lower-right piece determined using only the divergence rate.

3.1.3. Criticality for Generic RD Theory

With these examples as evidence that the empirical divergence rate is able to detect critical for RD theory, we explored its potential implications for the general case as we increased the number of states n.

We next considered random RD problems on n states in which the probabilities for q were chosen independently from a uniform distribution in the interval (and then normalized to sum to one) and the distortion matrices were chosen to have diagonal zero and other entries drawn independently and uniformly in the interval . (Our experiments appeared to be insensitive to the particular underlying distributions used to make q and d).
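A random RD problem of this kind can be generated as in the sketch below. The sampling interval [0, 1] for both q and d is an assumption made for illustration, as are the function name `random_rd_problem` and its `rng` argument.

```python
import numpy as np

def random_rd_problem(n, rng=None):
    """Draw a random source q and distortion matrix d on n states.

    Assumes entries are uniform on [0, 1]; rng may be a seed or a Generator.
    """
    rng = np.random.default_rng(rng)
    q = rng.uniform(0.0, 1.0, size=n)
    q /= q.sum()                       # normalize to a probability vector
    d = rng.uniform(0.0, 1.0, size=(n, n))
    np.fill_diagonal(d, 0.0)           # zero distortion for exact coding
    return q, d
```

Passing an integer seed makes a trial reproducible, which helps when averaging peak counts over repeated draws.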

As shown in Figure 3, we varied n and plotted the number of significant peaks in the divergence rate for these random RD problems, averaged over 10 trials. Peak detection with the divergence rate is imperfect, so the counts vary across trials, as shown by the error bars. The accuracy of the divergence rate depended highly on whether the range of β was sampled densely enough and whether the sampled range included all critical β. It should be noted that this method of finding critical points was an estimate and, therefore, may have indicated large changes in the codebooks where there was no theoretical critical point.

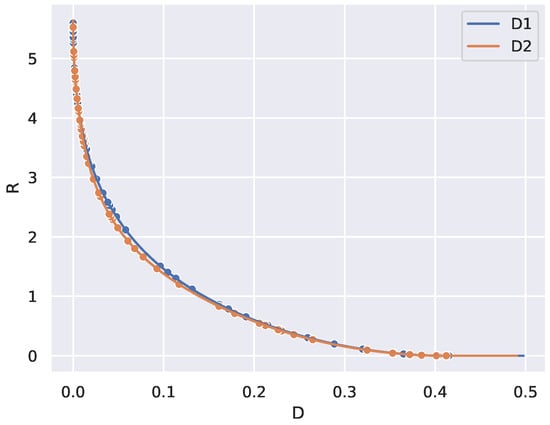

Figure 3.

The number of peaks in divergence rate estimates the number # of critical for a random RD problem with n states and is close to the line (coefficient of determination ).

The numerical results shown in Figure 3 suggest the following new conjecture in the field of RD theory.

Conjecture 1.

Generically, there are critical along the rate–distortion curve as a function of the number of states n.

Here, the word “generic” is used in the sense that an RD problem on n states with data distribution q and distortion matrix d “chosen at random” will have this number of critical points. More precisely, if and are non-negative indeterminates with on the simplex, then outside of a set of measure zero, the number of critical on the RD curve determined by d and q will be exactly .

3.1.4. Weak Universality

Consider the case where all n states have the same probability and two distortion matrices , have entries drawn from the same distribution, for example, uniform in . Then, a conjecture in RD theory, called weak universality, says that the RD curves for and lie on top of each other for large n.

Conjecture 2 (Weak universality [43]).

Distortion matrices with entries drawn i.i.d. from a fixed distribution define the same limiting RD curves as the number of states goes to infinity.

To illustrate weak universality in Figure 4, we took two randomly drawn distortion matrices and and plotted their RD curves, computed using the BA algorithm. We then plotted the critical points found from determining peaks in the divergence rate on each of the two RD curves. As predicted by Conjecture 2, the RD curves are close. However, notice that the two sets of critical points do not align with each other.

Figure 4.

Taking two random distortion matrices D1 and D2 with entries drawn independently and uniformly in gives two RD curves on states that look similar but whose critical points differ (points indicated along the curves), as estimated from divergence rate maxima.

This experiment suggests that we can rule out a line of attack for proving weak universality that attempts to show that the critical points on the two RD curves also coincide. In particular, this stronger form of the conjecture is likely not true.

3.2. Natural Signals

Discrete ON/OFF encodings of image patches have been shown to contain much perceptual information [77], and Hopfield Networks trained to store these binary vectors can be used for the high-quality lossy compression of natural images [78,79].

It was also observed in [80] that these ON/OFF distributions of natural patches exhibited critical at a few special points and codebooks along the RD curve. Applying our noisy peak finder to the divergence rate on the codebooks at rate parameters , we were able to discover all the critical codebooks that were previously found by hand, as well as four more that had deviations in microstructure. We remark that it is also possible that some of these extra critical codebooks found may have arisen from imperfections in the tuning of the peak finder.

To obtain Figure 5, two-by-two pixel patches x were ternarized by first normalizing the pixel values within each patch to have variance one, and then each normalized value v was mapped to a ternary value b via the following rule:

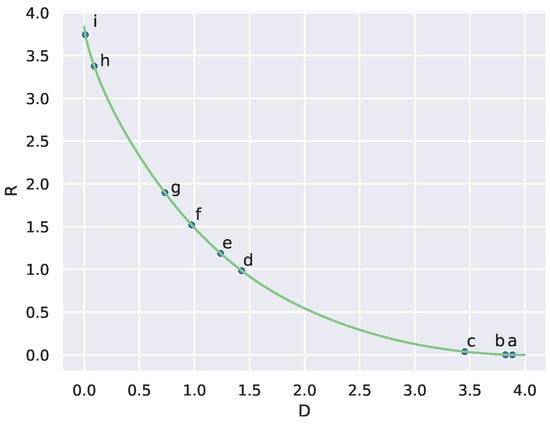

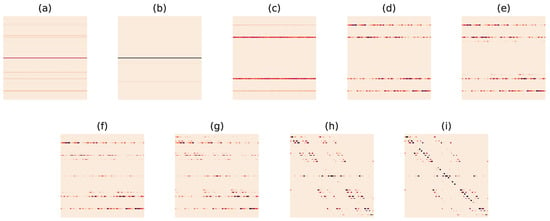

Figure 5.

RD curve (in green) and locations of critical codebooks (circles a–i) for ON/OFF natural image patches (compare with [80], Figure 9a).

We used as source q the probability distribution over this ternary representation of patches, and the Hamming distance between two such vectors specified the distortion matrix. Codebooks along the RD curve were then used as conditional distributions and peaks in the divergence rate were plotted as the nine blue dots.

In Figure 6, we display the codebooks that arose from the critical found using the divergence rate. These codebooks correspond to the lettered points in Figure 5.

It is interesting to note that there were an order of magnitude fewer critical codebooks estimated than would have been expected by Conjecture 1. This suggests that this set of natural signals does not represent a random or generic RD problem, indicating extra structure in the signal class.

3.3. Machine Learning

We considered a warm-up problem on 18 states to test the divergence rate on the problem of clustering. The data contained three disjoint groups, each with six states, that had low intra-cluster distortion but high inter-cluster distortion.

We applied the noisy peak finder to the divergence rate of codebooks parameterizing the RD curve for this setup and plotted a few of the interesting critical codebooks in Figure 7. We noticed that the critical codebooks marked where the cluster centers were found and where the code progressively broke away towards the identity codebook.

Figure 7.

Critical codebooks for clusters corresponding to critical points determined by peaks in the divergence rate. Each column is the conditional distribution given an input (increasing light to dark).

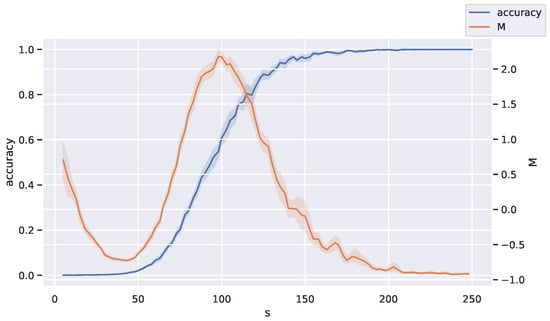

Our next machine learning example came from the theory of auto-encoding with Hopfield networks. First, we determined networks to store cliques as fixed-point attractors (memories) using Minimum Energy Flow (MEF) learning, as in [48,49]. Then, we looked for peaks in the divergence rate as networks were trained with different numbers of samples s. Our hypothesis was that as s was varied, there would be some critical s where the nature of the network dynamics changes drastically. For the clique example in Figure 8, the possible data consisted of binary vectors representing the absence or presence of an edge in a graph of size 16, with each sample being a clique of size 8.

Figure 8.

Peaks in the divergence rate M are signatures of phase transitions in performance accuracy for Hopfield recurrent neural networks as the number of training samples s increases.

By randomly sampling a different set of training data for each trial, we obtained a codebook for each number of training samples as the deterministic map on binary vectors given by the dynamics of the Hopfield network. Specifically, the codebook was built from the 0/1-matrix of the dynamics of the sth network: the conditional matrix entry for a pair (x, y) was nonzero if the network dynamics took state x to y and zero otherwise, with a normalizing denominator set by the total number of cliques. We used one additional output state to code for all non-cliques, which is reflected in that denominator.

We then plotted the accuracy (proportion of cliques correctly stored by the network) in blue and the normalized divergence rate in orange against s. We averaged this over 10 trials in the experiment. A significant peak in the divergence rate as a function of s can be observed in Figure 8. This suggests a critical sample count for auto-encoding in the Hopfield networks trained using MEF. Note that this peak appeared to occur when exactly half of the test data were accurately coded.

The fact that signatures of criticality in self-organizing recurrent neural networks arise during training is also corroborated by [81]. It would be interesting to further explore the changes that happen near the critical sample count shown in Figure 8.

3.4. Language

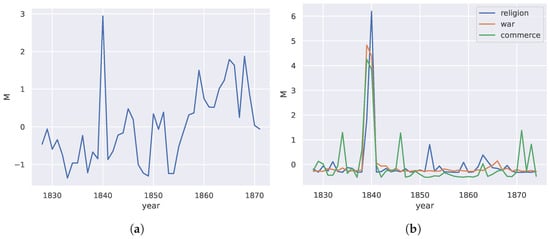

In [55], the possibility of punctuated equilibrium and criticality in language evolution was studied using a similar approach based on the divergence rate. Naively using the divergence rate on raw word frequency distributions over time, we can also obtain a measure of language change over time. In this case, the divergence rate is over a single condition (fixed topic). We show that peaks are also observed in this measure of a language dataset. Figure 9a indicates that critical changes are not only common but a natural part of the way humans solve problems in communication.

Figure 9.

(a) Normalized plot of divergence rate for a horticultural journal corpus against time. (b) Peaks along time in the corpus associated with the indicated words.

For the language example, the codebook was conditioned on a single state, which was the topic of the dataset, namely, agriculture in America. This meant that we only considered the distribution of words of one topic over time as the independent variable t. The divergence rate was thus applied over a single state in the language concept space.

To discover what the underlying cause of this large change could be, we clustered the words in the corpus using Word2Vec [82] and found several interesting clusters. We then chose semantically similar words from those clusters, which represented concepts in war, religion, and commerce. Again, by conditioning on words from these subtopics, we used the divergence rate to obtain the changes in the probability distributions of these clusters individually in Figure 9b. We noticed that peaks occurred exactly in the year of the most significant peak in Figure 9a.

Investigating possible reasons why this might be the case, we looked into the history of agriculture in the United States of America. In [83], Frolik mentioned that many innovations and technologies were brought to bear on the American agriculture industry (such as the cast iron plow, manufacturing of drills for sowing seeds, and horse-powered machines for harvesting grain) around the years leading up to and including the 1840s, which led to a booming agricultural industry. This possibly accounts for the peak in the usage of words related to commerce.

4. Methods

The measure is the sum, over inputs x, of the distance D between the probability distributions f conditioned on x at two different points of t (in practice, consecutive samples). It tracks the change in f over t. In our experiments, we used the Kullback–Leibler (KL) divergence for D.
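One way to write this definition explicitly is sketched below. The notation is an assumption made here for illustration: M is the name used for the divergence rate in the caption of Figure 8, Δt stands for the spacing between consecutive samples of t, and f_t(· | x) denotes the codebook column for input x at t. Whether the sum is additionally divided by Δt or weighted by the source distribution, and the order of the two arguments of D, are not fixed by the description above.

$$
M(t) \;=\; \sum_{x} D\big(f_t(\cdot \mid x) \,\big\|\, f_{t+\Delta t}(\cdot \mid x)\big),
\qquad
D(p \,\|\, q) \;=\; \sum_{y} p(y)\,\log\frac{p(y)}{q(y)} .
$$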

The value of t here could be replaced by some independent variable, such as the gain parameter (in the case of the RD curve), the size of the training dataset, the number of epochs (for training a model), or time. The codebook of a model f (for a discrete state space) is a distribution conditioned on each input state. For a set of models with random initializations, we normalized the distribution of the maps with respect to the inputs over all the maps. The measure over t was then obtained by taking the distance between pairs of consecutive codebooks. Critical phase transitions were then located where peaks occurred along this 1-dimensional signal.
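The computation described in this paragraph can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' code: the function names, the nested-list layout `codebooks[t][x]`, and the smoothing constant `eps` are all assumptions introduced here.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL divergence D(p || q) in nats between two discrete distributions.

    eps is an assumed floor that keeps the logarithm finite when q has
    zero entries where p does not; it is not taken from the paper.
    """
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


def divergence_rate(codebooks):
    """Distance between each pair of consecutive codebooks.

    codebooks[t][x] is the conditional distribution over outputs given
    input x at the t-th sampled value of the independent variable.
    Returns one value per consecutive pair, summed over the inputs x.
    """
    rates = []
    for current, following in zip(codebooks, codebooks[1:]):
        rates.append(sum(kl_divergence(p, q) for p, q in zip(current, following)))
    return rates


# Usage: an identity codebook that stays fixed, then collapses to uniform.
identity = [[1.0, 0.0], [0.0, 1.0]]
uniform = [[0.5, 0.5], [0.5, 0.5]]
print(divergence_rate([identity, identity, uniform]))
```

The first value is zero because the codebook does not change, and the second is positive because both conditional distributions move from deterministic to uniform.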

Because the measure is potentially non-concave and the variable t is usually sampled at discrete intervals, the resulting signal is a piecewise linear function. To detect the local maxima that represent critical points in t, we needed a noisy peak finder, since some local maxima may be spurious and not correspond to any critical change in behavior. To this end, we developed a discrete version of the Nesterov momentum algorithm [58], which we encapsulate in Algorithms 1–4.

Given a distortion measure (a non-negative matrix describing the costs of encoding an input state with an output state), the rate–distortion curve describes the minimum amount of information required to encode a given distribution of symbols at a level of distortion specified by the gain parameter. At each value of the gain parameter, there is a corresponding codebook that describes, for each symbol x, a distribution over all symbols X with which to optimally code for x. This allowed us to directly use the measure, with the gain parameter as the independent variable t.
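This section does not state which solver produced the codebooks along the RD curve. A common choice is the Blahut–Arimoto iteration [67,68], and the sketch below assumes it. The name `beta` for the gain parameter, the fixed iteration count, and the uniform starting marginal are illustrative assumptions.

```python
import math


def blahut_arimoto(source, distortion, beta, iterations=500):
    """Return a codebook Q[x][y] = P(y | x) for gain parameter beta.

    source[x] is the probability of input x and distortion[x][y] is the
    cost of encoding x as y. A fixed iteration count is an assumed
    stopping rule chosen for brevity.
    """
    n_in, n_out = len(distortion), len(distortion[0])
    marginal = [1.0 / n_out] * n_out  # start from a uniform output marginal
    codebook = []
    for _ in range(iterations):
        # Conditional step: P(y | x) is proportional to
        # marginal[y] * exp(-beta * d(x, y)).
        codebook = []
        for x in range(n_in):
            weights = [marginal[y] * math.exp(-beta * distortion[x][y])
                       for y in range(n_out)]
            total = sum(weights)
            codebook.append([w / total for w in weights])
        # Marginal step: average the conditionals under the source.
        marginal = [sum(source[x] * codebook[x][y] for x in range(n_in))
                    for y in range(n_out)]
    return codebook


# Usage: a uniform binary source with Hamming distortion.
source = [0.5, 0.5]
hamming = [[0, 1], [1, 0]]
print(blahut_arimoto(source, hamming, beta=0.0))   # uninformative codebook
print(blahut_arimoto(source, hamming, beta=20.0))  # close to the identity
```

Sweeping `beta` over a grid and collecting the returned codebooks gives a sequence that can be passed to a divergence computation with the gain parameter playing the role of t.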

| Algorithm 1 Noisy local maxima finder |
| Require: List of 2D points p; step size; momentum; convergence threshold |
| Ensure: p is sorted by increasing x values |
| Compute potential local maxima q with Find All Local Maxima with input p |
| u: points in p with … |
| Compute local minima with Find All Local Maxima with input u |
| o: new empty set of 2D points |
| while … do |
| compute next local maxima with Find Next Local Maxima with inputs p, q, current x-value, step size, momentum, and convergence threshold |
| Add … to o |
| for … do |
| if … then |
| … |
| break |
| end if |
| end for |
| end while |

| Algorithm 2 Find All Local Maxima |
| Require: List of 2D points p |
| o: new empty list of 2D points |
| for … do |
| if … then |
| Add … to o |
| end if |
| end for |
| return o |

| Algorithm 3 Find Next Local Maxima |
| Require: List of 2D points p; list of 2D local maxima points q; current x-value; step size; momentum; convergence threshold |
| while … do |
| compute gradient with Piecewise gradient with input p and … |
| end while |
| for … do |
| if … then |
| … |
| end if |
| end for |
| return … |

| Algorithm 4 Piecewise gradient |
| Require: List of 2D points p; point to compute gradient at t |
| for … do |
| if … then |
| … |
| end if |
| end for |
| return g |
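As a hedged illustration of noisy peak finding on a sampled 1-dimensional signal, the sketch below pairs one plausible reading of Find All Local Maxima (interior points strictly higher than both neighbours) with a prominence filter. The prominence filter is a simpler stand-in and not a reproduction of the momentum-based search of Algorithms 1–4. The function names and the `min_prominence` parameter are assumptions introduced here.

```python
def find_all_local_maxima(points):
    """Indices of interior points strictly higher than both neighbours."""
    return [i for i in range(1, len(points) - 1)
            if points[i - 1][1] < points[i][1] > points[i + 1][1]]


def prominence(points, i):
    """Height of peak i above the higher of its two flanking valleys."""
    peak = points[i][1]
    flank_minima = []
    for direction in (-1, 1):
        lowest, j = peak, i + direction
        # Walk outwards until a strictly higher point or the end of the data.
        while 0 <= j < len(points) and points[j][1] <= peak:
            lowest = min(lowest, points[j][1])
            j += direction
        flank_minima.append(lowest)
    return peak - max(flank_minima)


def noisy_peaks(points, min_prominence):
    """Local maxima whose prominence is at least min_prominence."""
    points = sorted(points)  # increasing x, as Algorithm 1 requires
    return [points[i] for i in find_all_local_maxima(points)
            if prominence(points, i) >= min_prominence]


# Usage: a small bump at x = 1 next to a large peak at x = 3.
signal = [(0, 0.0), (1, 2.0), (2, 1.5), (3, 5.0), (4, 0.0)]
print(noisy_peaks(signal, min_prominence=1.0))
```

With the threshold at 1.0 only the large peak survives, while a lower threshold also keeps the small bump, which is the kind of spurious maximum a noisy peak finder is meant to suppress.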

For the simple two-state rate–distortion example in Figure 1, we varied the distortion measure using a parameter a and found the critical value of the gain parameter at which the measure peaked. At all values of a, the critical value found by the noisy peak finder matched the analytic critical value. Note that for one value of a there was no analytic critical value and, thus, there was a gap in the plot.

For the three-state case proposed by Berger in Figure 2a,b, the distortion matrix was held constant and we varied the probability distribution q. As an example, Figure 2a shows the marginal probability of state 2 for one fixed choice of q, and the points show where the peak finder detected a critical change; visually, these coincided with where the marginal probability went to or emerged from 0. Figure 2b is a plot of the measure as a heatmap against u and the gain parameter.

To arrive at Figure 3, we generated a random distortion matrix for a uniform distribution on k states for each trial. We varied k and plotted the number of critical values of the gain parameter against k for the RD curve on this setup. We performed 10 trials to obtain this plot.

To illustrate weak universality in Figure 4, we took two distortion matrices D1 and D2 drawn from the same uniform distribution. We then plotted the critical points found by the measure on the RD curve.

To test this measure on the coding of clusters, we considered an 18-state distribution, with disjoint groups of six states that had low intra-cluster distortion but high inter-cluster distortion. We then applied the noisy peak finder to the measure on the RD curve for this setup and plotted some of the interesting critical codebooks in Figure 7.
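A distortion matrix with this block structure can be sketched as follows. The specific cost values `low` and `high`, and the choice of zero cost on the diagonal, are assumptions for illustration, since the text only specifies that intra-cluster distortion is low and inter-cluster distortion is high.

```python
def cluster_distortion(n_clusters=3, cluster_size=6, low=0.1, high=1.0):
    """Block-structured distortion matrix for disjoint clusters of states.

    States 0..cluster_size-1 form the first cluster, and so on. low and
    high are assumed cost values, not taken from the paper.
    """
    n = n_clusters * cluster_size
    matrix = [[0.0] * n for _ in range(n)]
    for x in range(n):
        for y in range(n):
            if x == y:
                continue  # encoding a state as itself costs nothing
            same_cluster = x // cluster_size == y // cluster_size
            matrix[x][y] = low if same_cluster else high
    return matrix


# Usage: the 18-state, three-cluster setup.
d = cluster_distortion()
print(len(d), d[0][5], d[0][6])
```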

For the clique example in Figure 8, the data were binary vectors representing the absence or presence of an edge in a graph, with each sample being a clique of size v/2, where v is the number of nodes in the graph, taken to be 16 in our experiment. The distortion matrix was computed using the Hamming distance. By randomly sampling a different set of training data for each trial, we obtained a codebook for each number of training samples. We then plotted the accuracy (proportion of cliques stored exactly by the network) in green and the normalized measure in purple against the number of training samples.
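The data representation described here can be sketched as below: each clique on 8 of the 16 nodes becomes a binary vector with one entry per possible edge. The function names, the edge ordering given by `itertools.combinations`, and the seeded sampler are assumptions for illustration. Training the Hopfield network itself is not shown.

```python
import random
from itertools import combinations


def clique_vector(n_nodes, members):
    """Binary edge-indicator vector of the clique on the given node set."""
    members = set(members)
    return tuple(int(a in members and b in members)
                 for a, b in combinations(range(n_nodes), 2))


def sample_cliques(n_nodes, clique_size, n_samples, seed=0):
    """Draw random cliques (repeats are possible) as edge vectors."""
    rng = random.Random(seed)
    return [clique_vector(n_nodes, rng.sample(range(n_nodes), clique_size))
            for _ in range(n_samples)]


def hamming(u, v):
    """Number of positions at which two equal-length vectors differ."""
    return sum(a != b for a, b in zip(u, v))


# Usage: 16 nodes give 120 possible edges; a clique of size 8 has 28 edges.
samples = sample_cliques(16, 8, n_samples=5)
print(len(samples[0]), sum(samples[0]))
```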

For the natural image patch example in Figure 5, the data were a ternarized form of two-by-two pixel patches. Each pixel was normalized and then represented by two bits. If the normalized value of the pixel was greater than a threshold value, it was represented by one two-bit code; if it was less than the corresponding lower threshold, it was represented by a second code; otherwise, it was represented by a third. Thus, in a two-by-two patch, there were 3^4 = 81 states. Again, the distortion matrix was the Hamming distance between two bit vectors. We obtained codebooks along the RD curve and plotted points where critical transitions were found.
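The ternarization and the resulting 81-state distortion matrix can be sketched as follows. The particular two-bit codes in `CODES` and the symmetric thresholds at plus and minus `threshold` are assumptions chosen for illustration; the text does not fix them.

```python
from itertools import product

# Assumed two-bit codes for the three pixel levels (placeholders).
CODES = {"high": (1, 1), "mid": (0, 1), "low": (0, 0)}


def ternarize_pixel(value, threshold):
    """Map a normalized pixel to a two-bit code using +/- threshold."""
    if value > threshold:
        return CODES["high"]
    if value < -threshold:
        return CODES["low"]
    return CODES["mid"]


def ternarize_patch(patch, threshold):
    """Flatten a 2x2 patch (a list of two rows) into an 8-bit tuple."""
    return tuple(bit for row in patch for value in row
                 for bit in ternarize_pixel(value, threshold))


def all_states():
    """Enumerate the 3**4 = 81 possible ternarized 2x2 patches."""
    return [tuple(bit for code in combo for bit in code)
            for combo in product(CODES.values(), repeat=4)]


def hamming(u, v):
    """Number of positions at which two bit vectors differ."""
    return sum(a != b for a, b in zip(u, v))


def distortion_matrix(states):
    """Pairwise Hamming distances between all states."""
    return [[hamming(u, v) for v in states] for u in states]


# Usage: encode one patch and build the 81 x 81 distortion matrix.
states = all_states()
d = distortion_matrix(states)
print(ternarize_patch([[0.9, -0.9], [0.0, 0.2]], threshold=0.5), len(states))
```

With these assumed codes, the middle level is at Hamming distance one from both extremes and the extremes are at distance two from each other, so the distortion respects the ordering of the pixel levels.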

For the language example, the codebook was conditioned on a single variable, which was the topic of the dataset: agriculture in America. This meant that we only considered the distribution of words of one topic over time as the independent variable t. The measure was thus over a single state in the language concept space.
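With a single conditioning state, the measure reduces to the divergence between word distributions in consecutive time periods. The sketch below illustrates that reduction under assumptions introduced here: tokens are already grouped by period, the vocabulary is shared across periods, and additive smoothing (not mentioned in the text) keeps the KL divergence finite for unseen words.

```python
import math
from collections import Counter


def word_distribution(tokens, vocabulary, smoothing=1.0):
    """Additively smoothed word frequencies over a fixed vocabulary."""
    counts = Counter(tokens)
    total = sum(counts[w] for w in vocabulary) + smoothing * len(vocabulary)
    return [(counts[w] + smoothing) / total for w in vocabulary]


def language_divergence_rate(tokens_by_period, smoothing=1.0):
    """KL divergence between word distributions of consecutive periods."""
    vocabulary = sorted({w for tokens in tokens_by_period for w in tokens})
    dists = [word_distribution(tokens, vocabulary, smoothing)
             for tokens in tokens_by_period]
    return [sum(p * math.log(p / q) for p, q in zip(a, b))
            for a, b in zip(dists, dists[1:])]


# Usage: two periods with identical vocabulary use, then a shift in topic.
periods = [
    ["plow", "seed", "seed"],
    ["plow", "seed", "seed"],
    ["market", "market", "price"],
]
print(language_divergence_rate(periods))
```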

5. Discussion

In this section, we discuss implications of our criticality measure. Our experiments show that criticality in system behavior appears to be relatively common, as predicted by RD theory. In this paper, we have shown that optimization procedures can also lead to critical transitions in function behavior and that in natural processes such as language evolution, such critical changes can also occur. It could be that these processes find encodings that are close to rate-optimal, as there may be a significant discontinuity in the rate of change of encoding cost with respect to encoding error at these points.

This analysis, however, only accounts for the critical points on the RD curve. The critical points of a process would depend on the details of the process itself, and we can only expect the critical points to line up with the ones on the RD curve if the process is efficient enough to follow the RD curve near its critical points. It is possible to detect these discontinuities in the rate of change of encoding cost with respect to encoding error, though measuring it would require far more samples.

Another point that can be made is that criticality does not necessarily manifest as points but as manifolds. In Figure 2b, a 1-dimensional critical manifold is observed in u. This is akin to how the boiling point of a substance is a function of pressure and temperature. Further work could involve studying the behavior of these manifolds.

Observing the codebooks at the critical points in order of increasing distortion, we found that the codebook broke away further from encoding the distribution as the identity function. Further work could be to observe the microstructure of codebooks at the critical points to try to understand which states are chosen to reduce the cost of coding perfectly at each critical point.

There are several direct implications for critical behavior, some of which were outlined by Sims in [84]. One open question is how to apply these ideas to deep learning [85] to minimize a typically squared error loss. One insight is to choose models and normative principles that have critical signatures [65] (working memory). Given that optimal continuous codings are discrete [80,86,87,88], it is not that surprising for criticality to arise from near-optimal solutions to normative principles applied to information processing. Furthermore, it has been observed that generalization beyond overfitting a training dataset, such as that seen in grokking by large language models, is related to the double descent phenomena [28], which has been shown to be dependent on multiple factors [89]. These relationships could be further explored using the method described in this paper by, for example, estimating the of more complex distributions using [90].

Further work would involve continuous distributions, which require different treatment to obtain the RD curves [80], and the use of different probability distribution distance metrics. Other algorithms for mapping discrete spaces could also be analyzed with the techniques developed in this work.

Finally, it would be interesting to investigate how other criticality measures relate to the information theory-inspired divergence rate that is presented here. For instance, in the field of reinforcement learning, it can be useful to identify critical choices of an agent’s actions [91] when performing a task. As another example, in graph analyses, it is often crucial to identify nodes that are critical for optimal flows on the graph. In this case, a maximum entropy approach using path trajectories can be found in [92]. Adapting these ideas to our setting will be explored in future work and is beyond the scope of this paper.

6. Conclusions

Our initial hypothesis was that an information processing system that compresses and reinterprets information into a useful form usually has critical transitions in the way the information is transformed as some control parameter of the system is tweaked. This was confirmed with the use of the divergence rate—the measure we developed to track the change in the encodings along the control parameter—and a noisy peak finder, which helped to identify critical points. We believe that this may provide fertile ground for further research into critical phenomena in other areas such as the behavior of learning algorithms.

Author Contributions

Conceptualization, T.C.; methodology, C.H. and T.C.; validation, T.C. and C.H.; formal analysis, C.H.; investigation, T.C. and C.H.; resources, C.H.; data curation, T.C.; writing—original draft preparation, C.H.; writing—review and editing, C.H. and T.C.; supervision, C.H. and D.W.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

No primary research software or code have been included. This study was carried out using publicly available data from “Van Hateren’s Natural Image Database” at https://github.com/hunse/vanhateren (accessed on 7 November 2024), and from “American Farmer; Devoted to Agriculture, Horticulture and Rural Life 1819–1897” at https://archive.org/details/pub_american-farmer-devoted-to-agriculture-horticulture (accessed on 7 November 2024).

Acknowledgments

We thank Sarah Marzen and Lav Varshney for their input into this work.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| RD | rate–distortion |

| KL | Kullback–Leibler divergence |

| MEF | minimum energy flow |

References

1. Landau, L. The Theory of Phase Transitions. Nature 1936, 138, 840–841.

2. Anisimov, M.A. Letter to the Editor: Fifty Years of Breakthrough Discoveries in Fluid Criticality. Int. J. Thermophys. 2011, 32, 2001–2009.

3. Peierls, R. On Ising’s model of ferromagnetism. Math. Proc. Camb. Philos. Soc. 1936, 32, 477–481.

4. Bak, P.; Chen, K. The physics of fractals. Phys. D Nonlinear Phenom. 1989, 38, 5–12.

5. Paczuski, M.; Maslov, S.; Bak, P. Avalanche dynamics in evolution, growth, and depinning models. Phys. Rev. E 1996, 53, 414–443.

6. Watkins, N.W.; Pruessner, G.; Chapman, S.C.; Crosby, N.B.; Jensen, H.J. 25 years of self-organized criticality: Concepts and controversies. Space Sci. Rev. 2016, 198, 3–44.

7. Langton, C.G. Computation at the edge of chaos: Phase transitions and emergent computation. Phys. D Nonlinear Phenom. 1990, 42, 12–37.

8. Prokopenko, M. Modelling complex systems and guided self-organisation. J. Proc. R. Soc. New South Wales 2017, 150, 104–109.

9. Mora, T.; Bialek, W. Are Biological Systems Poised at Criticality? J. Stat. Phys. 2011, 144, 268–302.

10. Muñoz, M.A. Colloquium: Criticality and dynamical scaling in living systems. Rev. Mod. Phys. 2018, 90, 031001.

11. Bertschinger, N.; Natschläger, T. Real-Time Computation at the Edge of Chaos in Recurrent Neural Networks. Neural Comput. 2004, 16, 1413–1436.

12. Kinouchi, O.; Copelli, M. Optimal dynamical range of excitable networks at criticality. Nat. Phys. 2006, 2, 348–351.

13. Legenstein, R.; Maass, W. Edge of chaos and prediction of computational performance for neural circuit models. Neural Netw. 2007, 20, 323–334.

14. Boedecker, J.; Obst, O.; Lizier, J.T.; Mayer, N.M.; Asada, M. Information processing in echo state networks at the edge of chaos. Theory Biosci. 2012, 131, 205–213.

15. Hoffmann, H.; Payton, D.W. Optimization by Self-Organized Criticality. Sci. Rep. 2018, 8, 2358.

16. Wilting, J.; Priesemann, V. Inferring collective dynamical states from widely unobserved systems. Nat. Commun. 2018, 9, 2325.

17. Avramiea, A.E.; Masood, A.; Mansvelder, H.D.; Linkenkaer-Hansen, K. Long-Range Amplitude Coupling Is Optimized for Brain Networks That Function at Criticality. J. Neurosci. 2022, 42, 2221–2233.

18. Kelso, J.A. Phase transitions and critical behavior in human bimanual coordination. Am. J. Physiol.-Regul. Integr. Comp. Physiol. 1984, 246, R1000–R1004.

19. Shew, W.L.; Plenz, D. The Functional Benefits of Criticality in the Cortex. Neuroscientist 2013, 19, 88–100.

20. Tkačik, G.; Bialek, W. Information Processing in Living Systems. Annu. Rev. Condens. Matter Phys. 2016, 7, 89–117.

21. Erten, E.; Lizier, J.; Piraveenan, M.; Prokopenko, M. Criticality and Information Dynamics in Epidemiological Models. Entropy 2017, 19, 194.

22. Cocchi, L.; Gollo, L.L.; Zalesky, A.; Breakspear, M. Criticality in the brain: A synthesis of neurobiology, models and cognition. Prog. Neurobiol. 2017, 158, 132–152.

23. Zimmern, V. Why Brain Criticality Is Clinically Relevant: A Scoping Review. Front. Neural Circuits 2020, 14, 54.

24. Cramer, B.; Stöckel, D.; Kreft, M.; Wibral, M.; Schemmel, J.; Meier, K.; Priesemann, V. Control of criticality and computation in spiking neuromorphic networks with plasticity. Nat. Commun. 2020, 11, 2853.

25. Heiney, K.; Huse Ramstad, O.; Fiskum, V.; Christiansen, N.; Sandvig, A.; Nichele, S.; Sandvig, I. Criticality, Connectivity, and Neural Disorder: A Multifaceted Approach to Neural Computation. Front. Comput. Neurosci. 2021, 15, 611183.

26. O’Byrne, J.; Jerbi, K. How critical is brain criticality? Trends Neurosci. 2022, 45, 820–837.

27. Power, A.; Burda, Y.; Edwards, H.; Babuschkin, I.; Misra, V. Grokking: Generalization beyond overfitting on small algorithmic datasets. arXiv 2022, arXiv:2201.02177.

28. Liu, Z.; Kitouni, O.; Nolte, N.S.; Michaud, E.; Tegmark, M.; Williams, M. Towards understanding grokking: An effective theory of representation learning. Adv. Neural Inf. Process. Syst. 2022, 35, 34651–34663.

29. Shannon, C.E. Probability of Error for Optimal Codes in a Gaussian Channel. Bell Syst. Tech. J. 1959, 38, 611–656.

30. Berger, T. Rate Distortion Theory and Data Compression. In Advances in Source Coding; Springer: Vienna, Austria, 1975; pp. 1–39.

31. Sterling, P.; Laughlin, S. Principles of Neural Design; The MIT Press: Cambridge, MA, USA, 2015.

32. Barlow, H.B. Possible Principles Underlying the Transformations of Sensory Messages. In Sensory Communication; Rosenblith, W.A., Ed.; The MIT Press: Cambridge, MA, USA, 1961; pp. 216–234.

33. Rose, K. Deterministic annealing for clustering, compression, classification, regression, and related optimization problems. Proc. IEEE 1998, 86, 2210–2239.

34. Wibisono, A.; Jog, V.; Loh, P.L. Information and estimation in Fokker-Planck channels. In Proceedings of the 2017 IEEE International Symposium on Information Theory (ISIT), Aachen, Germany, 25–30 June 2017; pp. 2673–2677.

35. Gould, S.J.; Eldredge, N. Punctuated equilibria: An alternative to phyletic gradualism. In Models in Paleobiology; Freeman, Cooper: San Francisco, CA, USA, 1972.

36. Wallace, R.; Wallace, D. Punctuated Equilibrium in Statistical Models of Generalized Coevolutionary Resilience: How Sudden Ecosystem Transitions Can Entrain Both Phenotype Expression and Darwinian Selection. In Transactions on Computational Systems Biology IX; Istrail, S., Pevzner, P., Waterman, M.S., Priami, C., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2008; Volume 5121, pp. 23–85.

37. King, D.M. Classification of Phase Transition Behavior in a Model of Evolutionary Dynamics. Ph.D. Thesis, University of Missouri, St. Louis, MO, USA, 2012.

38. Wallace, R. Adaptation, Punctuation and Information: A Rate-Distortion Approach to Non-Cognitive ‘Learning Plateaus’ in evolutionary processes. Acta Biotheor. 2002, 50, 101–116.

39. Tlusty, T. A model for the emergence of the genetic code as a transition in a noisy information channel. J. Theor. Biol. 2007, 249, 331–342.

40. Tlusty, T. A rate-distortion scenario for the emergence and evolution of noisy molecular codes. Phys. Rev. Lett. 2008, 100, 048101, arXiv:1007.4149.

41. Gong, L.; Bouaynaya, N.; Schonfeld, D. Information-Theoretic Model of Evolution over Protein Communication Channel. IEEE ACM Trans. Comput. Biol. Bioinform. 2011, 8, 143–151.

42. Marzen, S.E.; DeDeo, S. The evolution of lossy compression. J. R. Soc. Interface 2017, 14, 20170166.

43. Marzen, S.; DeDeo, S. Weak universality in sensory tradeoffs. Phys. Rev. E 2016, 94, 060101.

44. van der Schaaf, A.; van Hateren, J. Modelling the Power Spectra of Natural Images: Statistics and Information. Vis. Res. 1996, 36, 2759–2770.

45. Ruderman, D.L.; Bialek, W. Statistics of natural images: Scaling in the woods. Phys. Rev. Lett. 1994, 73, 814–817.

46. Li, Z. Understanding Vision: Theory, Models, and Data, 1st ed.; Oxford University Press: Oxford, UK, 2014.

47. Sugar, C.A.; James, G.M. Finding the Number of Clusters in a Dataset: An Information-Theoretic Approach. J. Am. Stat. Assoc. 2003, 98, 750–763.

48. Hillar, C.J.; Tran, N.M. Robust Exponential Memory in Hopfield Networks. J. Math. Neurosci. 2018, 8, 1.

49. Hillar, C.; Chan, T.; Taubman, R.; Rolnick, D. Hidden Hypergraphs, Error-Correcting Codes, and Critical Learning in Hopfield Networks. Entropy 2021, 23, 1494.

50. Hopfield, J.J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. USA 1982, 79, 2554–2558.

51. Humayun, A.I.; Balestriero, R.; Baraniuk, R. Deep networks always grok and here is why. arXiv 2024, arXiv:2402.15555.

52. Bowern, C. Punctuated Equilibrium and Language Change. In Encyclopedia of Language & Linguistics; Elsevier: Amsterdam, The Netherlands, 2006; pp. 286–289.

53. Tadic, B.; Dankulov, M.M.; Melnik, R. The mechanisms of self-organised criticality in social processes of knowledge creation. Phys. Rev. E 2017, 96, 032307.

54. Barron, A.T.J.; Huang, J.; Spang, R.L.; DeDeo, S. Individuals, institutions, and innovation in the debates of the French Revolution. Proc. Natl. Acad. Sci. USA 2018, 115, 4607–4612.

55. Nevalainen, T.; Säily, T.; Vartiainen, T.; Liimatta, A.; Lijffijt, J. History of English as punctuated equilibria? A meta-analysis of the rate of linguistic change in Middle English. J. Hist. Socioling. 2020, 6, 20190008.

56. Gupta, R.; Roy, S.; Meel, K.S. Phase Transition Behavior in Knowledge Compilation. In Principles and Practice of Constraint Programming; Simonis, H., Ed.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; Volume 12333, pp. 358–374.

57. Seoane, L.F.; Solé, R. Criticality in Pareto Optimal Grammars? Entropy 2020, 22, 165.

58. Nesterov, Y. A method for solving the convex programming problem with convergence rate O(1/k^2). Proc. USSR Acad. Sci. 1983, 269, 543–547.

59. Cover, T.M.; Thomas, J.A. Elements of Information Theory, 2nd ed.; Wiley-Interscience: Hoboken, NJ, USA, 2006.

60. Davisson, L. Rate Distortion Theory: A Mathematical Basis for Data Compression. IEEE Trans. Commun. 1972, 20, 1202.

61. Fang, H.C.; Huang, C.T.; Chang, Y.W.; Wang, T.C.; Tseng, P.C.; Lian, C.J.; Chen, L.G. 81MS/s JPEG2000 single-chip encoder with rate-distortion optimization. In Proceedings of the 2004 IEEE International Solid-State Circuits Conference (IEEE Cat. No.04CH37519), San Francisco, CA, USA, 15–19 February 2004; Volume 1, pp. 328–531.

62. Choi, I.; Lee, J.; Jeon, B. Fast Coding Mode Selection with Rate-Distortion Optimization for MPEG-4 Part-10 AVC/H.264. IEEE Trans. Circuits Syst. Video Technol. 2006, 16, 1557–1561.

63. Zarcone, R.V.; Engel, J.H.; Burc Eryilmaz, S.; Wan, W.; Kim, S.; BrightSky, M.; Lam, C.; Lung, H.L.; Olshausen, B.A.; Philip Wong, H.S. Analog Coding in Emerging Memory Systems. Sci. Rep. 2020, 10, 6831.

64. Sims, C.R. Rate–distortion theory and human perception. Cognition 2016, 152, 181–198.

65. Jakob, A.M.; Gershman, S.J. Rate-distortion theory of neural coding and its implications for working memory. Neuroscience 2022. preprint.

66. Wallace, R. A Rate Distortion approach to protein symmetry. BioSystems 2010, 101, 97–108.

67. Blahut, R. Computation of channel capacity and rate-distortion functions. IEEE Trans. Inf. Theory 1972, 18, 460–473.

68. Arimoto, S. An algorithm for computing the capacity of arbitrary discrete memoryless channels. IEEE Trans. Inf. Theory 1972, 18, 14–20.

69. Cox, D.A.; Little, J.; O’Shea, D. Using Algebraic Geometry; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2005; Volume 185.

70. Browder, F.E. On continuity of fixed points under deformations of continuous mappings. Summa Bras. Math. 1960, 4, 183–191.

71. Solan, E.; Solan, O.N. Browder’s theorem through Brouwer’s fixed point theorem. Am. Math. Mon. 2023, 130, 370–374.

72. Sanyal, R.; Sturmfels, B.; Vinzant, C. The entropic discriminant. Adv. Math. 2013, 244, 678–707.

73. Hillar, C.; Wibisono, A. Maximum entropy distributions on graphs. arXiv 2013, arXiv:1301.3321.

74. Wang, X. A Simple Proof of Descartes’s Rule of Signs. Am. Math. Mon. 2004, 111, 525.

75. Haukkanen, P.; Tossavainen, T. A generalization of Descartes’ rule of signs and fundamental theorem of algebra. Appl. Math. Comput. 2011, 218, 1203–1207.

76. Berger, T. Rate-Distortion Theory. In Wiley Encyclopedia of Telecommunications; Proakis, J.G., Ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2003; p. eot142.

77. Hillar, C.; Marzen, S. Revisiting Perceptual Distortion for Natural Images: Mean Discrete Structural Similarity Index. In Proceedings of the 2017 Data Compression Conference (DCC), Snowbird, UT, USA, 4–7 April 2017; pp. 241–249.

78. Hillar, C.; Mehta, R.; Koepsell, K. A Hopfield recurrent neural network trained on natural images performs state-of-the-art image compression. In Proceedings of the 2014 IEEE International Conference on Image Processing (ICIP), Paris, France, 27–30 October 2014; pp. 4092–4096.

79. Mehta, R.; Marzen, S.; Hillar, C. Exploring discrete approaches to lossy compression schemes for natural image patches. In Proceedings of the 2015 23rd European Signal Processing Conference (EUSIPCO), Nice, France, 31 August–4 September 2015; pp. 2236–2240.

80. Hillar, C.J.; Marzen, S.E. Neural network coding of natural images with applications to pure mathematics. In Algebraic and Geometric Methods in Discrete Mathematics; American Mathematical Society: Providence, RI, USA, 2017; pp. 189–221.

81. Del Papa, B.; Priesemann, V.; Triesch, J. Criticality meets learning: Criticality signatures in a self-organizing recurrent neural network. PLoS ONE 2017, 12, e0178683.

82. Mikolov, T. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781.

83. Frolik, E. The History of Agriculture in the United States Beginning with the Seventeenth Century. Trans. Neb. Acad. Sci. Affil. Soc. 1977, 4, 453.

84. Jung, J.; Kim, J.H.J.; Matějka, F.; Sims, C.A. Discrete Actions in Information-Constrained Decision Problems. Rev. Econ. Stud. 2019, 86, 2643–2667.

85. Grohs, P.; Klotz, A.; Voigtlaender, F. Phase Transitions in Rate Distortion Theory and Deep Learning. Found. Comput. Math. 2021, 23, 329–392.

86. Smith, J.G. The information capacity of amplitude- and variance-constrained scalar gaussian channels. Inf. Control 1971, 18, 203–219.

87. Fix, S.L. Rate distortion functions for squared error distortion measures. In Proceedings of the Annual Allerton Conference on Communication, Control and Computing, Urbana, IL, USA, 24 September 1978; pp. 704–711.

88. Rose, K. A mapping approach to rate-distortion computation and analysis. IEEE Trans. Inf. Theory 1994, 40, 1939–1952.

89. Schaeffer, R.; Khona, M.; Robertson, Z.; Boopathy, A.; Pistunova, K.; Rocks, J.W.; Fiete, I.R.; Koyejo, O. Double descent demystified: Identifying, interpreting & ablating the sources of a deep learning puzzle. arXiv 2023, arXiv:2303.14151.

90. Borade, S.; Zheng, L. Euclidean information theory. In Proceedings of the 2008 IEEE International Zurich Seminar on Communications, Zurich, Switzerland, 12–14 March 2008; pp. 14–17.

91. Spielberg, Y.; Azaria, A. The concept of criticality in reinforcement learning. In Proceedings of the 2019 IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI), Portland, OR, USA, 4–6 November 2019; pp. 251–258.

92. Lebichot, B.; Saerens, M. A bag-of-paths node criticality measure. Neurocomputing 2018, 275, 224–236.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).