Abstract

The principles of Lattice Field Theory (LFT), in particular Lattice Gauge Theory (LGT), are explained for a nonspecialist audience. We describe some of the successes of the program; we also discuss the relationship between LFT and Quantum Cellular Automata (QCA).

1. Introduction

Since Einstein and Minkowski, we are used to regarding space–time as a continuum. Nowadays, however, this view is increasingly being questioned [1,2,3]. Space–time is regarded either as “emergent” from something deeper, whatever that may be, or as having, due to quantum effects, a “grainy” structure at the smallest distances, comparable to the Planck length.

A much more mundane idea is to approximate the space–time continuum by a discrete structure, such as a lattice. This also has a long history: for example, there were the attempts by F. Bopp in the 1960s [4]; later there was the use of a lattice approximation in the enterprise of constructing Quantum Field Theories (QFTs) with mathematical rigor [5,6].

A pivotal event was the invention of Lattice Gauge Theory (LGT) by Ken Wilson [7], followed by the pioneering numerical study of a LGT by Michael Creutz [8]. Out of these beginnings a major industry developed: hundreds of physicists are working on extracting the physical implications of LGT with the aid of computing farms, and they share their results every year at a major conference. The arXiv, the main repository of preprints in physics and related sciences, has had a section called hep-lat (short for high-energy physics, lattice) since its inception in 1991. A number of good textbooks on LGT are also available, for example [9,10].

The main reason for the growth of LGT is that it made Quantum Chromodynamics (QCD), which describes the strong interaction of particles, also known as the nuclear force, amenable to numerical computation. Quantities like the masses of strongly interacting particles, called hadrons, which are not accessible by the usual perturbative treatment of QFT using Feynman graphs, could finally be computed. More about this in Section 7.

And then there is the approach to QFT featured in this volume, namely Quantum Walks and Quantum Cellular Automata (QCA) [11,12,13,14,15,16]. Of course, QCA are of great interest not only as discretizations of quantum systems but also as universal quantum computers or quantum Turing machines [17,18].

All these ideas and approaches share a central issue: they have to show the re-emergence of the continuum in the long wavelength limit, where the grainy or lattice nature at the microscopic scale should become invisible. We will discuss this for a simple case in Section 4 and briefly for QCD in Section 7.

2. From the Real to the Imaginary World

We are used to describing quantum systems by states (wave functions) evolving under unitary time evolutions; this is also the case for Quantum Walks (QW) and QCA. Typically, time evolution is generated by a self-adjoint, non-negative Hamiltonian $H$:

$$H = H^{\dagger} \ge 0.$$

H being nonnegative means that its spectrum is nonnegative. The ground state has the lowest eigenvalue, which is taken to be 0 (if necessary by subtracting a constant from H).

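The unitary time evolution is then given by

$$U(t) = e^{-itH}.$$

Applying $U(t)$ to a state $\psi$ gives the solution $\psi(t) = U(t)\psi$ of the Schrödinger equation (in units with ℏ = 1)

$$i\,\frac{d}{dt}\psi(t) = H\,\psi(t), \qquad \psi(0) = \psi.$$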

The first thing to notice is that $U(t)$ can be analytically continued to complex times $t$, in particular to negative imaginary times, a procedure also known as “Wick rotation” [19,20,21]. So, we replace $t$ by $-i\tau$ and define

$$K(\tau) = U(-i\tau) = e^{-\tau H}.$$

For $\tau \ge 0$, $K(\tau)$ is self-adjoint, bounded, and has spectrum between 0 and 1. It still contains all the information contained in $U(t)$. In particular, an eigenvalue $E$ of $H$ appears as $e^{-itE}$ in the spectrum of $U(t)$ and as $e^{-\tau E}$ in that of $K(\tau)$, so the two differ only by the substitution $t = -i\tau$. $K$ describes the evolution in the “imaginary world”. It can also be used to describe finite temperature states by identifying $\tau$ with the inverse absolute temperature $\beta$ and defining the thermal state by the density matrix

$$\rho_\beta = \frac{e^{-\beta H}}{\operatorname{Tr} e^{-\beta H}}.$$

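In particular, since the ground state $\Omega$ has energy zero, we have (assuming it is nondegenerate)

$$\lim_{\tau\to\infty} K(\tau) = |\Omega\rangle\langle\Omega|$$

and

$$\lim_{\beta\to\infty} \rho_\beta = |\Omega\rangle\langle\Omega|.$$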

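As is well known, the time evolution can be transferred from the wave functions to the operators (observables) via

$$A(t) = U(t)^{\dagger} A\, U(t) = e^{itH} A\, e^{-itH}$$

(known as the Heisenberg picture); likewise, we can consider an imaginary time evolution via

$$A(\tau) = e^{\tau H} A\, e^{-\tau H},$$

which implies

$$A(\tau)^{\dagger} = A^{\dagger}(-\tau),$$

where we use the notation

$$A^{\dagger}(\tau) \equiv (A^{\dagger})(\tau) = e^{\tau H} A^{\dagger} e^{-\tau H}.$$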

In quantum mechanics, the wave function is a function on the configuration space. For a nonrelativistic quantum particle, the configuration space is simply the 3D space $\mathbb{R}^3$ in which the particle moves, with elements $\mathbf{x}$, and the wave function is a function $\psi(\mathbf{x})$. For two particles, the configuration space consists of pairs of vectors $(\mathbf{x}_1, \mathbf{x}_2)$, a wave function is a function $\psi(\mathbf{x}_1, \mathbf{x}_2)$, and so on for several particles.

In a (classical or quantum) field theory, the role of the configuration space is played by field configurations, which give the value of the field for each space point; an example is the complex valued Higgs field of the Standard Model (SM) of elementary particles. Denoting a generic field by $\phi$, a field configuration is thus given by a function $\mathbf{x} \mapsto \phi(\mathbf{x})$, and a wave function is a function of a function, called a functional, $\Psi[\phi]$.

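Feynman taught us that the unitary time evolution

$$U(t_f - t_i) = e^{-i(t_f - t_i)H}$$

has an integral kernel given by the “sum over paths” or “sum over histories”, weighted by a certain phase. Symbolically, for a particle like an electron, it is

$$\langle x_f | e^{-i(t_f - t_i)H} | x_i \rangle = \int \mathcal{D}x\; e^{iS[x]}, \tag{13}$$

where S is the action functional

$$S[x] = \int_{t_i}^{t_f} L\big(x(t), \dot{x}(t)\big)\, dt, \tag{14}$$

and L is the Lagrangian corresponding to the Hamiltonian H. The integral is over “all paths” $t \mapsto x(t)$ moving from $x_i$ to $x_f$ in the time interval from $t_i$ to $t_f$.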

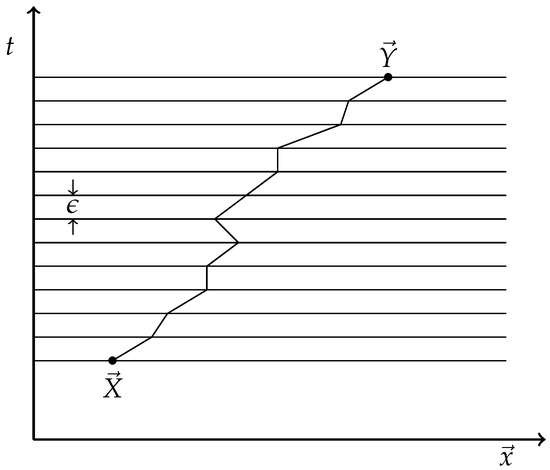

To give a meaning to the mysterious integration symbol $\int \mathcal{D}x$, Feynman and Hibbs [22] (see also [23]) approximate each path by a piecewise linear one, as illustrated in Figure 1.

Figure 1.

A piecewise linear path from $x_i$ to $x_f$.

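The path is cut up into $N$ small steps

$$x_0 = x_i \to x_1 \to x_2 \to \cdots \to x_N = x_f,$$

each happening in a small time interval $\epsilon = (t_f - t_i)/N$. In each step from $x_k$ to $x_{k+1}$, the path is approximated by a straight line and “weighted” with a phase $e^{iS_k}$, where $S_k$ is the action for the linear piece from $x_k$ to $x_{k+1}$. We assume $L$ to be a continuous function of $x$ and $\dot{x}$, let us say of the form

$$L(x, \dot{x}) = \frac{m}{2}\dot{x}^2 - V(x).$$

To approximate $S_k$, we take for $x$ the midpoint between $x_k$ and $x_{k+1}$; for $\dot{x}$, we take the constant velocity on that linear piece. So, we approximate $S_k$ by

$$S_k \approx \epsilon\, L\!\left(\frac{x_k + x_{k+1}}{2}, \frac{x_{k+1} - x_k}{\epsilon}\right).$$

The approximate expression for (13) using the piecewise linear path is then

$$\langle x_f | e^{-i(t_f - t_i)H} | x_i \rangle = \lim_{N\to\infty} \frac{1}{A^N} \int dx_1 \cdots dx_{N-1}\; \exp\Big(i \sum_{k=0}^{N-1} S_k\Big).$$

Here, $A$ is a normalization that has to be chosen in such a way that the limit exists. In the book [22], this limit is computed explicitly for various examples. Note also that $\sum_k S_k$ is an approximation to the total action S (14).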
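The Feynman–Hibbs discretization can be tried out numerically. The minimal Python sketch below evaluates $\sum_k S_k$ along a piecewise linear path, using the midpoint/constant-velocity rule described above. It takes the harmonic oscillator $V(x) = m\omega^2 x^2/2$ as an example potential. This choice and all function names are illustrative assumptions, not part of the original construction. Along the classical path, the sum should approach the known classical action as $N$ grows.

```python
import math


def discretized_action(path, t_i, t_f, lagrangian):
    """Sum of S_k over a piecewise linear path x_0 = x_i, ..., x_N = x_f.

    Each linear piece takes time eps = (t_f - t_i) / N and contributes
    S_k ~ eps * L(midpoint, constant velocity on the piece).
    """
    n_steps = len(path) - 1
    eps = (t_f - t_i) / n_steps
    total = 0.0
    for k in range(n_steps):
        x_mid = 0.5 * (path[k] + path[k + 1])      # midpoint of the k-th piece
        velocity = (path[k + 1] - path[k]) / eps   # constant velocity on it
        total += eps * lagrangian(x_mid, velocity)
    return total


def oscillator_lagrangian(x, v, m=1.0, omega=1.0):
    # L = m v^2 / 2 - V(x) with the example potential V(x) = m omega^2 x^2 / 2
    return 0.5 * m * v * v - 0.5 * m * omega ** 2 * x * x


def classical_oscillator_path(n_steps, x_i=0.0, x_f=1.0, t_f=1.0, omega=1.0):
    # Classical solution with x(0) = x_i and x(t_f) = x_f, sampled at t_k = k * t_f / N
    times = [k * t_f / n_steps for k in range(n_steps + 1)]
    return [(x_i * math.sin(omega * (t_f - t)) + x_f * math.sin(omega * t))
            / math.sin(omega * t_f) for t in times]


# Exact classical action for m = omega = T = 1, x_i = 0, x_f = 1:
# S_cl = m omega [(x_i^2 + x_f^2) cos(omega T) - 2 x_i x_f] / (2 sin(omega T))
path = classical_oscillator_path(1000)
approx = discretized_action(path, 0.0, 1.0, oscillator_lagrangian)
exact = math.cos(1.0) / (2.0 * math.sin(1.0))
print(approx, exact)
```

For a free particle ($V = 0$) moving along a straight line, the sum reproduces $m(x_f - x_i)^2/(2(t_f - t_i))$ for any $N$. For nonlinear potentials, the midpoint rule introduces an error that vanishes as $\epsilon \to 0$.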

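The path integral does not really exist in the mathematical sense. However, the mathematician Mark Kac [24] showed that, using the imaginary time introduced above, the formal expression

$$\langle x_f | e^{-(\tau_f - \tau_i)H} | x_i \rangle = \int \mathcal{D}x\; e^{-S[x]}, \tag{19}$$

where S is also analytically continued to imaginary time, can be given a precise sense, with the paths now in imaginary time. For the Lagrangian above, $S[x] = \int_{\tau_i}^{\tau_f} \big(\frac{m}{2}\dot{x}^2 + V(x)\big)\, d\tau$.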

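If we are dealing with a relativistic quantum field theory, with a generic field denoted $\phi$, the formula analogous to (19) is

$$\langle \phi_f | e^{-(\tau_f - \tau_i)H} | \phi_i \rangle = \int \mathcal{D}\phi\; \exp\Big(-\int_{\tau_i}^{\tau_f} d\tau \int d^3x\; \mathcal{L}\big(\phi, \partial\phi\big)\Big), \tag{20}$$

where now $\mathcal{L}$ is the Lagrangian density. Here, the integral over $\phi$ is the “sum of all histories” of the fields with $\phi(\tau_i, \cdot) = \phi_i$ and $\phi(\tau_f, \cdot) = \phi_f$. As before, the action functional is

$$S[\phi] = \int_{\tau_i}^{\tau_f} d\tau \int d^3x\; \mathcal{L}\big(\phi, \partial\phi\big),$$

so that we have

$$\langle \phi_f | e^{-(\tau_f - \tau_i)H} | \phi_i \rangle = \int \mathcal{D}\phi\; e^{-S[\phi]}.$$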

In the imaginary world, the path integral is (typically) real, whereas in the real world it is complex. Moreover, in many cases in the imaginary world, the path integral is not only real but actually positive, so expressions like

$$\langle F \rangle = \frac{\int \mathcal{D}\phi\; F[\phi]\, e^{-S[\phi]}}{\int \mathcal{D}\phi\; e^{-S[\phi]}}, \tag{23}$$

where $F$ is some functional of the field $\phi$, can be considered as expectation values in some probability distribution. This is the key to the numerical evaluation discussed in Section 6.

Making mathematical sense of (20) is not easy, and so far it has been achieved only in space dimension $d < 3$; see [5,6]. It is important to note that in those cases the theory has been shown to be invariant under the Euclidean group (rotations and translations) acting on the Euclidean space–time continuum. For this reason, one also speaks of the imaginary time version of QFT as Euclidean QFT.

3. Return to the Real World

As remarked, the imaginary world is also known as the Euclidean world, because in QFT it leads to a theory invariant under rotations and translations of the Euclidean space–time $\mathbb{R}^4$. The Euclidean formalism had been used by Schwinger [21] long before it was known how to return from the Euclidean to the real (Minkowskian) world. This was achieved by Osterwalder and Schrader in the 1970s [25,26]. They formulated certain conditions on the Euclidean correlation functions (also known as Schwinger functions) that allowed the recovery of the Minkowski correlation functions (also known as Wightman functions) with all the right properties. The crucial property they identified is Reflection Positivity (RP).

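RP is just an expression of the fact that Quantum Theory is based on a Hilbert space, i.e., a state space with a positive definite scalar product. Consider a state (wave function) $\psi = A\Omega$, obtained by applying an operator $A$ to the ground state $\Omega$. Its scalar product with itself is nonnegative:

$$\langle A\Omega, A\Omega \rangle = \langle \Omega, A^{\dagger} A\, \Omega \rangle \ge 0.$$

In imaginary time, $A^{\dagger}$ can be seen as the time reflected version of $A$ (recall that $A(\tau)^{\dagger} = A^{\dagger}(-\tau)$), hence the term “Reflection Positivity”.

In QFT, the fields become operators; we use the symbol $\hat\phi$ to denote them. So, we now take for A a product of field operators,

$$A = \hat\phi(x_1) \cdots \hat\phi(x_n),$$

and consider $\langle A\Omega, A\Omega \rangle$, where the ground state $\Omega$ is now usually known as the vacuum. Using $\big(\hat\phi(x_1) \cdots \hat\phi(x_n)\big)^{\dagger} = \hat\phi^{\dagger}(x_n) \cdots \hat\phi^{\dagger}(x_1)$, we find

$$\langle \Omega, \hat\phi^{\dagger}(x_n) \cdots \hat\phi^{\dagger}(x_1)\, \hat\phi(x_1) \cdots \hat\phi(x_n)\, \Omega \rangle \ge 0. \tag{28}$$

Again, this is the vacuum expectation value of a time reflected operator times the unreflected one.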

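All these so far are obvious properties of the real quantum world. But let us now express (28) by the Euclidean (imaginary time) functional integral:

$$\langle \Theta\bar{A}\; A \rangle = \frac{\int \mathcal{D}\phi\; (\Theta\bar{A})[\phi]\; A[\phi]\; e^{-S[\phi]}}{\int \mathcal{D}\phi\; e^{-S[\phi]}} \;\ge\; 0. \tag{29}$$

As noted in (23) in the previous section, the expression (29) can be read as a probabilistic expectation value $\langle \Theta\bar{A}\; A \rangle$. Here, A is some functional involving fields at positive imaginary times. $\Theta\bar{A}$ is the time reflected and complex conjugate version of A, with time reflection $(\Theta\phi)(\tau, \mathbf{x}) = \phi(-\tau, \mathbf{x})$.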

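An attentive reader may notice that in (28) the symbol $\hat\phi^{\dagger}$ appears, whereas in (29), $\bar\phi$ shows up. The reason is that in (28), $\hat\phi$ represents an operator, with $\hat\phi^{\dagger}$ denoting its adjoint; in (29), $\phi$ is just a (complex) random variable and $\bar\phi$ its complex conjugate. The two incarnations of the field are related as follows: the probabilistic correlation functions of the random variables are equal to the vacuum expectation values of the field operators evolved to imaginary time,

$$\langle \phi^{\#}(\tau_1, \mathbf{x}_1) \cdots \phi^{\#}(\tau_n, \mathbf{x}_n) \rangle = \langle \Omega, \hat\phi^{\#}(\tau_1, \mathbf{x}_1) \cdots \hat\phi^{\#}(\tau_n, \mathbf{x}_n)\, \Omega \rangle,$$

where we assumed that $\tau_1 > \tau_2 > \cdots > \tau_n$. Here $\phi^{\#}$ stands for either $\phi$ or $\bar\phi$, and correspondingly $\hat\phi^{\#}$ stands for either $\hat\phi$ or $\hat\phi^{\dagger}$.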

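We denote the set of functionals (actually a commutative algebra) living at positive imaginary times as $\mathcal{A}_+$. RP is then the statement

$$\langle \Theta\bar{A}\; A \rangle \ge 0 \quad \text{for all } A \in \mathcal{A}_+.$$

RP allows us to create a Hilbert space from $\mathcal{A}_+$. First, one takes the quotient space $\mathcal{A}_+/\mathcal{N}$, where $\mathcal{N}$ is the null space of elements $A$ which satisfy $\langle \Theta\bar{A}\; A \rangle = 0$. This gives a so-called pre-Hilbert space, with scalar product $\langle A, B \rangle = \langle \Theta\bar{A}\; B \rangle$, which can be completed to a Hilbert space:

$$\mathcal{H} = \overline{\mathcal{A}_+/\mathcal{N}}.$$

Quantum states (wave functions) are thus represented (non-uniquely) by elements of $\mathcal{A}_+$. In particular, the unit element $1 \in \mathcal{A}_+$ represents the vacuum, since it is invariant under imaginary time translations.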

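Translations $\alpha_\tau$ in the positive time direction by an amount $\tau \ge 0$ produce matrix elements of

$$K(\tau) = e^{-\tau H}$$

as follows. Let $A, B \in \mathcal{A}_+$, and let $\alpha_\tau B$ be the functional obtained by replacing each $\phi(\tau', \mathbf{x})$ by $\phi(\tau' + \tau, \mathbf{x})$; then,

$$\langle A, e^{-\tau H} B \rangle = \langle \Theta\bar{A}\; \alpha_\tau B \rangle.$$

By studying this type of expression for suitably chosen $A$ and $B$, one can extract information about the spectrum of H, in particular masses of particles (see Section 5 and Section 6).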

Everything so far is completely “formal”, in the sense of dealing with mathematically ill-defined quantities. In the next section, we introduce a space–time lattice to remedy this.

4. Lattice Field Theory

We now replace the space–time continuum by a simple hypercubic lattice $\mathbb{Z}^4$. Some readers might miss a lattice constant $a$. As will be explained, however, this is not a parameter but rather a dynamically determined quantity, and we work with the unit lattice until further notice.

The vertices of the lattice have coordinates

$$\mathbf{x} = (x_0, x_1, x_2, x_3) \in \mathbb{Z}^4,$$

where bold-face symbols refer to (Euclidean) space–time vectors.

We think of $x_0$ as the discretized imaginary time, but in principle there is complete symmetry between the different coordinates.

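A typical lattice action for a scalar field is

$$S[\phi] = \frac{1}{2}\sum_{\langle \mathbf{x}\mathbf{y}\rangle} \big(\phi(\mathbf{x}) - \phi(\mathbf{y})\big)^2 + \frac{m_0^2}{2}\sum_{\mathbf{x}} \phi(\mathbf{x})^2 + \lambda \sum_{\mathbf{x}} \phi(\mathbf{x})^4. \tag{36}$$

Here, the symbol $\langle \mathbf{x}\mathbf{y}\rangle$ denotes nearest neighbor pairs (links), oriented from $\mathbf{x}$ to $\mathbf{y}$. The first two terms describe a free lattice field, whereas the last term defines an interaction.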

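To make sense of the “sum over histories”, we first have to restrict ourselves to a finite piece $\Lambda$ of the lattice and specify boundary conditions. Then, we integrate over all $\phi(\mathbf{x})$, $\mathbf{x} \in \Lambda$, defining

$$\langle F \rangle_\Lambda = \frac{1}{Z_\Lambda} \int \prod_{\mathbf{x}\in\Lambda} d\phi(\mathbf{x})\; F[\phi]\, e^{-S[\phi]}, \qquad Z_\Lambda = \int \prod_{\mathbf{x}\in\Lambda} d\phi(\mathbf{x})\; e^{-S[\phi]},$$

where $F$ is some functional, typically a polynomial of the fields. In the end, the thermodynamic limit $\Lambda \to \mathbb{Z}^4$ has to be taken.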

What we have defined here can be considered as a system of classical statistical mechanics (CSM); so, all the techniques of CSM, like cluster expansions and concepts like phase transitions and critical phenomena of CSM can be applied.

Luckily, RP holds with the action (36) (see [27,28,29]), provided the lattice is symmetric under time reflections. Suppose the infinite volume limit in the time direction has been taken, and the spatial extent of $\Lambda$ does not depend on the time coordinate. Then we can also introduce the time shift discussed before (albeit only by integer amounts). Shifting by one lattice spacing in imaginary time, we obtain an operator $T$ to be interpreted as

$$T = e^{-H}.$$

$T$ is known in CSM as the transfer matrix.
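The transfer matrix can be made concrete in the simplest case: a single lattice field in one (time) dimension, with the free action assumed to be $\frac{1}{2}\sum_t(\phi_{t+1}-\phi_t)^2 + \frac{m_0^2}{2}\sum_t \phi_t^2$. The sketch below uses hypothetical helper names. It proceeds in three steps:

- discretize the symmetric kernel $T(x, y)$ on a grid;
- find its two largest eigenvalues $\lambda_0$ and $\lambda_1$ by power iteration in the even and odd parity sectors;
- read off the energy gap $E_1 - E_0 = \log(\lambda_0/\lambda_1)$, using $T = e^{-H}$.

For this Gaussian model, the gap should agree with $2\operatorname{arsinh}(m_0/2)$, the decay rate of the free lattice propagator, which tends to $m_0$ for small $m_0$.

```python
import math


def transfer_kernel(x, y, m0):
    # Symmetric kernel T(x, y) of the 1D lattice action
    # S = sum_t [(phi_{t+1} - phi_t)^2 / 2 + m0^2 phi_t^2 / 2]:
    # the product of T(phi_t, phi_{t+1}) around a periodic chain equals exp(-S).
    return math.exp(-0.5 * (x - y) ** 2 - 0.25 * m0 ** 2 * (x * x + y * y))


def dominant_eigenvalue(matrix, vec, parity, iterations=60):
    """Power iteration restricted to one parity sector (parity = +1 or -1)."""
    n = len(vec)
    lam = 0.0
    for _ in range(iterations):
        new = [sum(a * b for a, b in zip(row, vec)) for row in matrix]
        # Re-impose the parity exactly so rounding errors cannot leak into the other sector
        new = [0.5 * (new[i] + parity * new[n - 1 - i]) for i in range(n)]
        lam = math.sqrt(sum(v * v for v in new))
        vec = [v / lam for v in new]
    return lam


def transfer_matrix_gap(m0, n_half=60, h=0.1):
    """E_1 - E_0 = log(lambda_0 / lambda_1) for T = exp(-H), with T discretized on a grid."""
    grid = [h * (i - n_half) for i in range(2 * n_half + 1)]  # exactly symmetric grid
    # (T f)(x) = int T(x, y) f(y) dy is approximated by sum_j h T(x, y_j) f(y_j)
    matrix = [[h * transfer_kernel(x, y, m0) for y in grid] for x in grid]
    even_start = [math.exp(-x * x) for x in grid]      # overlaps with the ground state
    odd_start = [x * math.exp(-x * x) for x in grid]   # overlaps with the first excited state
    lam0 = dominant_eigenvalue(matrix, even_start, +1)
    lam1 = dominant_eigenvalue(matrix, odd_start, -1)
    return math.log(lam0 / lam1)


print(transfer_matrix_gap(1.0), 2 * math.asinh(0.5))
```

Splitting by parity is a design choice. The kernel commutes with $\phi \to -\phi$, so the ground state is even and the first excited state is odd, and plain power iteration in each sector suffices.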

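Let us return to the issue of the lattice constant. If we look at the case $\lambda = 0$ in (36), all the integrals are Gaussian and can be evaluated explicitly. We find, for instance (after taking the infinite volume limit),

$$G(\mathbf{x}) \equiv \langle \phi(\mathbf{x})\, \phi(\mathbf{0}) \rangle = \int_{[-\pi,\pi]^4} \frac{d^4 p}{(2\pi)^4}\; \frac{e^{i\mathbf{p}\cdot\mathbf{x}}}{m_0^2 + \sum_{\mu=0}^{3} 4\sin^2(p_\mu/2)}. \tag{40}$$

This expression decays exponentially for large distances $|\mathbf{x}|$:

$$G(\mathbf{x}) \sim e^{-|\mathbf{x}|/\xi_{\mathrm{lat}}},$$

with $\xi_{\mathrm{lat}} \approx 1/m_0$ for small $m_0$. $\xi_{\mathrm{lat}}$ is the so-called correlation length in lattice units. It should be used to “calibrate” the lattice by using it to define the standard of length. For instance, we may declare $\xi_{\mathrm{lat}}$ to represent the physical length of 1 fermi (fm). In this new incarnation, we denote it by $\xi$ without a subscript; it now has the dimension of length.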
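The exponential decay and the relation $\xi_{\mathrm{lat}} \approx 1/m_0$ can be checked numerically. The minimal Python sketch below assumes the standard free lattice propagator, with denominator $m_0^2 + \sum_\mu 4\sin^2(p_\mu/2)$. It looks at the simplest case: summing $G$ over all spatial sites sets the spatial momenta to zero and leaves a one-dimensional integral over $p_0$. Function names are illustrative only.

```python
import math


def timeslice_correlator(t, m0, n_modes=4096):
    """C(t) = int_{-pi}^{pi} dp/(2 pi) cos(p t) / (m0^2 + 4 sin^2(p / 2)).

    This is the free propagator summed over all spatial sites (zero spatial
    momentum). The equally spaced rule is very accurate for this smooth,
    periodic integrand.
    """
    total = 0.0
    for k in range(n_modes):
        p = -math.pi + 2.0 * math.pi * k / n_modes
        total += math.cos(p * t) / (m0 ** 2 + 4.0 * math.sin(0.5 * p) ** 2)
    return total / n_modes


def correlation_length(m0, t=20):
    # xi_lat = 1 / (decay rate), read off from two neighbouring time separations
    return 1.0 / math.log(timeslice_correlator(t, m0) / timeslice_correlator(t + 1, m0))


for m0 in (1.0, 0.5, 0.1):
    # The exact decay rate is 2 asinh(m0 / 2), so xi_lat * m0 -> 1 as m0 -> 0
    print(m0, correlation_length(m0), correlation_length(m0) * m0)
```

The printed product $\xi_{\mathrm{lat}}\, m_0$ moves toward 1 as $m_0$ decreases. This is the regime in which the lattice approaches a critical point.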

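Suppose $\xi_{\mathrm{lat}}$ lattice units are declared to equal $\xi = 1$ fm. Then the lattice constant in physical units is

$$a = \frac{\xi}{\xi_{\mathrm{lat}}} = \frac{1}{\xi_{\mathrm{lat}}}\ \mathrm{fm}.$$

A vector $\mathbf{x}$ in lattice units thus corresponds to a vector

$$\mathbf{X} = a\,\mathbf{x} \tag{43}$$

with components measured in fermi. Likewise, a wave vector $\mathbf{p}$ as appearing in Equation (40) corresponds to a momentum vector

$$\mathbf{P} = \mathbf{p}/a, \tag{44}$$

and the mass parameter $m_0$ corresponds to a mass

$$M = m_0/a \tag{45}$$

in physical units. Here, we used a convention that is standard in particle physics: we set the speed of light $c$ and the reduced Planck constant ℏ equal to 1. The mass $M$ then indeed has the dimension 1/length, as shown in (45). An inverse length of 1 fm$^{-1}$ then corresponds to a mass of $\hbar c/(1\ \mathrm{fm}) \approx 0.197$ GeV, which is of the order of the hadron masses.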
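The conversions (43)–(45) amount to simple arithmetic. A minimal sketch, assuming the declaration $\xi = 1$ fm and using $\hbar c \approx 0.1973$ GeV·fm to express inverse lengths in GeV. The value $\xi_{\mathrm{lat}} = 10$ in the usage line and the function names are purely hypothetical.

```python
HBAR_C_GEV_FM = 0.1973269804  # hbar * c in GeV * fm


def lattice_spacing_fm(xi_lat, xi_fm=1.0):
    # Declaring the correlation length xi_lat (lattice units) to be xi_fm fermi fixes a
    return xi_fm / xi_lat


def to_physical(x_lat, p_lat, m_lat, a_fm):
    """Convert a length, a momentum and a mass from lattice units, cf. (43)-(45)."""
    length_fm = a_fm * x_lat                      # X = a x, in fm
    momentum_gev = p_lat / a_fm * HBAR_C_GEV_FM   # P = p / a, converted from 1/fm to GeV
    mass_gev = m_lat / a_fm * HBAR_C_GEV_FM       # M = m / a, converted from 1/fm to GeV
    return length_fm, momentum_gev, mass_gev


# Hypothetical example: a measured correlation length of 10 lattice units
a = lattice_spacing_fm(10.0)          # 0.1 fm
print(to_physical(5, 0.5, 0.1, a))    # a lattice mass 0.1 = 1 / xi_lat, i.e. 1 fm^-1
```

A lattice mass equal to $1/\xi_{\mathrm{lat}}$ maps to $1\ \mathrm{fm}^{-1} \approx 0.197$ GeV, independently of the numerical value of $\xi_{\mathrm{lat}}$.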

We have obtained quantities carrying a dimension from the dimensionless lattice quantities by the simple declaration making $\xi$ a physical length. This is an instance of what is known as dimensional transmutation.

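The continuum limit is obtained by sending the lattice constant $a$ to 0, or equivalently the correlation length $\xi_{\mathrm{lat}}$ to ∞ and the mass parameter $m_0$ to zero. In the language of statistical mechanics, one is approaching a critical point. By rescaling and renormalizing in accordance with (43)–(45), we obtain the renormalized two-point function

$$G_{\mathrm{ren}}(\mathbf{X}) = Z^{-1}\, G(\mathbf{X}/a), \qquad \mathbf{X} \in a\mathbb{Z}^4,$$

with $Z = a^2$ for $d = 4$ (in $d$ space–time dimensions one has to take $Z = a^{d-2}$). It is easy to see that the limit of $G_{\mathrm{ren}}$ exists and is

$$\lim_{a\to 0} G_{\mathrm{ren}}(\mathbf{X}) = \int_{\mathbb{R}^4} \frac{d^4 P}{(2\pi)^4}\; \frac{e^{i\mathbf{P}\cdot\mathbf{X}}}{\mathbf{P}^2 + M^2}$$

(for the mathematically scrupulous, the integral is to be interpreted as the Fourier transform of a distribution).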

In the continuum limit, we find the simple relation

$$M = 1/\xi,$$

which is the well-known relation between mass and (reduced) Compton wavelength.

5. Lattice Gauge Theory

Lattice Gauge Theory, in its general form, was invented in 1974 by Ken Wilson [7]; a special case of it (the gauge invariant Ising model) had been introduced earlier by Franz Wegner [30].

The idea of gauge transformations and gauge invariance originates in the classical theory of electromagnetism, where the vector potential is determined by the electromagnetic field only “up to gauge transformations”. H. Weyl first introduced the term “gauge” in 1918, in an (unsuccessful) attempt to unify Einstein’s General Relativity with electromagnetism. In 1929, he successfully introduced the concept in quantum mechanics. There, a gauge transformation refers to a space–time dependent shift of the phase of the wave function, combined with a related transformation of the electromagnetic vector potential. This is based on the underlying group $U(1)$ of transformations (see below).

The next step was the introduction of a non-abelian (non-commutative) generalization by C. N. Yang and R. L. Mills in 1954 [31], formulating what is known today as Yang–Mills theory. This concept is an essential ingredient of the Standard Model (SM) of particle physics, which describes, so far perfectly, the strong, weak and electromagnetic interactions of so-called elementary particles, including electrons, muons and quarks.

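The gauge group of the SM is rather complicated: it is the direct product of the groups $U(1) \times SU(2) \times SU(3)$. Here,

$$U(1) = \{ e^{i\alpha} : \alpha \in \mathbb{R} \}$$

is the group of rotations in the plane, which is abelian (=commutative); $SU(n)$ is the non-abelian group of $n \times n$ unitary matrices $U$ with determinant

$$\det U = 1.$$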

Instead of explaining the original continuum version of gauge field theories, I will immediately go to Lattice Gauge Theory (LGT), which is easier to describe anyway. I will focus on the simplest non-abelian group, $SU(2)$.

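The elements of $SU(2)$ can be written as

$$U = a_0\, \mathbb{1} + i \sum_{k=1}^{3} a_k\, \sigma_k,$$

where $\sigma_k$ are the Pauli matrices and the $a_\mu$ are real numbers satisfying

$$a_0^2 + a_1^2 + a_2^2 + a_3^2 = 1, \tag{51}$$

so they describe the unit sphere $S^3$ in four dimensions.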
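A quick numerical check of this parametrization, as a sketch with illustrative helper names. Building $U$ from a unit 4-vector gives $\det U = a_0^2 + a_1^2 + a_2^2 + a_3^2 = 1$ and $UU^{\dagger} = \mathbb{1}$. Drawing the 4-vector uniformly on the three-sphere, here via a normalized Gaussian vector, yields elements distributed according to the invariant (Haar) measure. This is the measure needed later for the gauge field integration.

```python
import math
import random


def su2_from_unit_vector(a0, a1, a2, a3):
    """U = a0 * 1 + i (a1 sigma_1 + a2 sigma_2 + a3 sigma_3) as a 2x2 nested list."""
    return [[complex(a0, a3), complex(a2, a1)],
            [complex(-a2, a1), complex(a0, -a3)]]


def random_su2(rng):
    # A normalized 4D Gaussian vector is uniform on the three-sphere,
    # which corresponds to the Haar measure on SU(2)
    v = [rng.gauss(0.0, 1.0) for _ in range(4)]
    norm = math.sqrt(sum(c * c for c in v))
    return su2_from_unit_vector(*(c / norm for c in v))


def det2(U):
    return U[0][0] * U[1][1] - U[0][1] * U[1][0]


def times_dagger(U):
    # U U^dagger, which should be the identity for a unitary U
    return [[sum(U[i][k] * U[j][k].conjugate() for k in range(2)) for j in range(2)]
            for i in range(2)]


U = random_su2(random.Random(2024))
print(det2(U), times_dagger(U))
```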

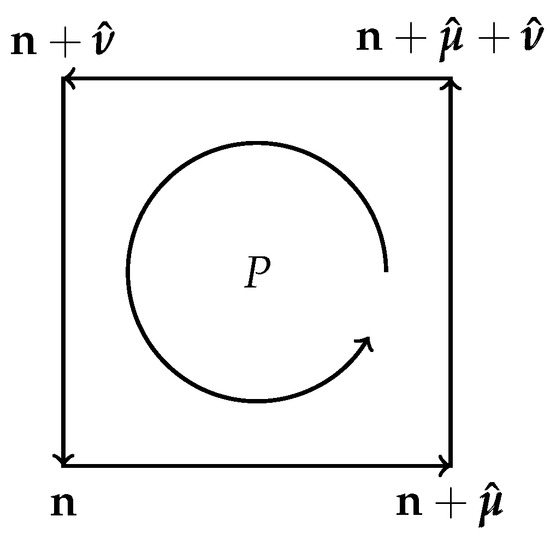

To define LGT, we need, in addition to the vertices and links (nearest neighbor pairs) encountered in the previous section, the so-called plaquettes of the lattice. A plaquette is an oriented elementary square of the lattice, i.e., the simplest closed loop made from four links (see Figure 2). Denoting by $\hat\mu$ the unit vector on the lattice in direction $\mu$, we define a lattice gauge field as a map from the links into the gauge group:

$$(\mathbf{x}, \mathbf{x} + \hat\mu) \mapsto U(\mathbf{x}, \mathbf{x} + \hat\mu) \in SU(2),$$

with the property

$$U(\mathbf{x} + \hat\mu, \mathbf{x}) = U(\mathbf{x}, \mathbf{x} + \hat\mu)^{-1},$$

i.e., reversing the direction of a link requires taking the inverse of the gauge field U.

Figure 2.

An oriented plaquette.

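Gauge field action: The simplest action is Wilson’s action (there are other choices):

$$S_G[U] = \frac{4}{g^2} \sum_P \Big(1 - \frac{1}{2}\operatorname{tr} U_P\Big), \tag{54}$$

where the sum is over all the plaquettes P. Here

$$U_P = U(\mathbf{x}, \mathbf{x} + \hat\mu)\, U(\mathbf{x} + \hat\mu, \mathbf{x} + \hat\mu + \hat\nu)\, U(\mathbf{x} + \hat\mu + \hat\nu, \mathbf{x} + \hat\nu)\, U(\mathbf{x} + \hat\nu, \mathbf{x})$$

is the ordered product of the gauge field around the plaquette $P$ with corner $\mathbf{x}$ spanned by $\hat\mu$ and $\hat\nu$, and $g$ is the (bare) coupling constant. In a naive continuum limit, this expression corresponds to the action density $\frac{1}{4}\sum_{a,\mu,\nu} F^a_{\mu\nu} F^a_{\mu\nu}$, in close analogy to the well-known action of the electromagnetic field.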

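Gauge Invariance: The action (54) is invariant under the transformation of the gauge field

$$U(\mathbf{x}, \mathbf{y}) \to h(\mathbf{x})\, U(\mathbf{x}, \mathbf{y})\, h(\mathbf{y})^{-1}, \tag{56}$$

where $\mathbf{x} \mapsto h(\mathbf{x}) \in SU(2)$ is any assignment of a unitary matrix to the vertices $\mathbf{x}$.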
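Gauge invariance of the Wilson action can be checked numerically. The sketch below is a deliberate simplification, not a production LGT code. It uses:

- a two-dimensional periodic $L \times L$ lattice, i.e., a single plane of plaquettes;
- random $SU(2)$ link variables;
- the plaquette product, with reversed links entering as $U^{-1} = U^{\dagger}$;
- the $SU(2)$ Wilson action in the form $\frac{4}{g^2}\sum_P (1 - \frac{1}{2}\operatorname{tr} U_P)$.

All helper names are hypothetical.

```python
import math
import random


def su2(a0, a1, a2, a3):
    # a0 * 1 + i (a . sigma) for a unit 4-vector (a0, a1, a2, a3)
    return [[complex(a0, a3), complex(a2, a1)], [complex(-a2, a1), complex(a0, -a3)]]


def random_su2(rng):
    v = [rng.gauss(0.0, 1.0) for _ in range(4)]
    n = math.sqrt(sum(c * c for c in v))
    return su2(*(c / n for c in v))


def mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def dag(A):
    return [[A[j][i].conjugate() for j in range(2)] for i in range(2)]


def shift(site, mu, L):
    # Neighbouring site in direction mu on a periodic L x L lattice
    x, y = site
    return ((x + 1) % L, y) if mu == 0 else (x, (y + 1) % L)


def wilson_action(links, L, g):
    """S_G = (4 / g^2) sum_P (1 - tr U_P / 2) on a periodic L x L lattice (one plane)."""
    S = 0.0
    for x in range(L):
        for y in range(L):
            n = (x, y)
            n0, n1 = shift(n, 0, L), shift(n, 1, L)
            # Ordered product around the plaquette; a reversed link contributes U^dagger
            up = mul(mul(links[n, 0], links[n0, 1]), mul(dag(links[n1, 0]), dag(links[n, 1])))
            S += 4.0 / g ** 2 * (1.0 - 0.5 * (up[0][0] + up[1][1]).real)
    return S


def gauge_transform(links, h, L):
    # U(n, n + mu) -> h(n) U(n, n + mu) h(n + mu)^{-1}
    return {(n, mu): mul(mul(h[n], U), dag(h[shift(n, mu, L)]))
            for (n, mu), U in links.items()}


L, rng = 4, random.Random(7)
sites = [(x, y) for x in range(L) for y in range(L)]
links = {(n, mu): random_su2(rng) for n in sites for mu in (0, 1)}
h = {n: random_su2(rng) for n in sites}
print(wilson_action(links, L, 1.0), wilson_action(gauge_transform(links, h, L), L, 1.0))
```

The two printed values agree up to rounding. Under (56), each $U_P$ changes only by conjugation, $U_P \to h(\mathbf{x})\, U_P\, h(\mathbf{x})^{-1}$, which leaves its trace unchanged.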

The gauge field can be coupled to matter fields living at the vertices of the lattice:

Scalar matter field: We need a scalar lattice field with several components, on which the gauge transformations (56) can act (mathematically speaking, the scalar field has to carry a representation of the gauge group). The simplest choice for the case of $SU(2)$ is a two-component complex field $\Phi(\mathbf{x}) \in \mathbb{C}^2$ (actually, the right choice for the Higgs field of the SM).

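A gauge-invariant action for a scalar field interacting with the gauge field is

$$S_\Phi[\Phi, U] = \sum_{\langle \mathbf{x}\mathbf{y}\rangle} \big\| \Phi(\mathbf{x}) - U(\mathbf{x}, \mathbf{y})\, \Phi(\mathbf{y}) \big\|^2 + \sum_{\mathbf{x}} \Big( m_0^2\, \|\Phi(\mathbf{x})\|^2 + \lambda\, \|\Phi(\mathbf{x})\|^4 \Big),$$

where a gauge transformation acts on the scalar field as

$$\Phi(\mathbf{x}) \to h(\mathbf{x})\, \Phi(\mathbf{x}).$$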

Fermionic matter field: We denote a fermionic field by $\psi_\alpha(\mathbf{x})$, indicating that there are several components $\alpha$ for each lattice site $\mathbf{x}$. The fields anticommute and are therefore nilpotent, forming a so-called Grassmann algebra.

For the lattice functional integral we need two more prescriptions:

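(a) The integration over the fermion field is the so-called Berezin integral [32], which is defined by the rule

$$\int d\psi_i = 0, \qquad \int d\psi_i\; \psi_i = 1 \tag{59}$$

(and likewise for the second fermion field $\bar\psi$ introduced below), together with the anticommutation relations

$$\{d\psi_i, d\psi_j\} = \{d\psi_i, \psi_j\} = 0 \quad \text{for all } i, j,$$

and likewise for all combinations involving $\bar\psi$ and $d\bar\psi$.

(b) The integration over the lattice gauge field has to be performed with an invariant measure (Haar measure); for the case of $SU(2)$, this is simply the rotation invariant measure on the three-sphere defined by (51).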

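A typical form of the action for a LGT with gauge and fermion fields, such as Quantum Chromodynamics (QCD), is

$$S = S_G[U] + S_F[\bar\psi, \psi, U],$$

where the fermionic action is, for instance,

$$S_F = \sum_{\mathbf{x}, \mathbf{y}} \bar\psi(\mathbf{x})\, D(\mathbf{x}, \mathbf{y})\, \psi(\mathbf{y}).$$

Here, $\bar\psi$ is another fermion field, independent of $\psi$, and $D$ is a lattice version of the Dirac operator, made gauge covariant by the coupling to the gauge field. The version proposed by Wilson [7] is:

$$D = \frac{1}{2}\sum_{\mu=0}^{3} \Big[ \gamma_\mu \big(\nabla_\mu + \nabla_\mu^{*}\big) - \nabla_\mu^{*}\nabla_\mu \Big] + m_0, \tag{63}$$

with bare fermion mass $m_0$. The $\gamma_\mu$ are the Dirac matrices and $\nabla_\mu$ is the covariant difference operator

$$(\nabla_\mu \psi)(\mathbf{x}) = U(\mathbf{x}, \mathbf{x} + \hat\mu)\, \psi(\mathbf{x} + \hat\mu) - \psi(\mathbf{x}),$$

with $\nabla_\mu^{*}$ the corresponding backward difference, $(\nabla_\mu^{*} \psi)(\mathbf{x}) = \psi(\mathbf{x}) - U(\mathbf{x}, \mathbf{x} - \hat\mu)\, \psi(\mathbf{x} - \hat\mu)$.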

Wilson proposed (63), which contains the naively unexpected second order difference operator in order to solve the so-called fermion doubling problem that would arise without that term [9,10,33]. A detailed discussion of this issue is beyond the scope of this introduction. Some idea of the complexity of this issue can be gained by consulting the Wikipedia article on fermion doubling [34].
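The doubling problem, and how Wilson's term cures it, can be seen in momentum space. The sketch below assumes the free theory ($U = \mathbb{1}$) and the standard momentum-space form of the Wilson operator with unit Wilson parameter, $D(p) = i\sum_\mu \gamma_\mu \sin p_\mu + m_0 + \sum_\mu (1 - \cos p_\mu)$. The massless naive operator is singular at all 16 corners of the Brillouin zone. The Wilson term gives the 15 spurious modes masses of 2, 4, 6 or 8 in lattice units, which become infinite in physical units as $a \to 0$. Helper names are illustrative.

```python
import itertools
import math


def naive_zero(p, tol=1e-12):
    # The free massless naive operator i sum_mu gamma_mu sin(p_mu) is singular
    # exactly where every sin(p_mu) vanishes, i.e. p_mu in {0, pi}
    return all(abs(math.sin(pm)) < tol for pm in p)


def wilson_mass(p, m0=0.0, r=1.0):
    # Momentum-dependent mass term of the free Wilson operator
    return m0 + r * sum(1.0 - math.cos(pm) for pm in p)


corners = list(itertools.product((0.0, math.pi), repeat=4))
naive_poles = [p for p in corners if naive_zero(p)]
wilson_poles = [p for p in naive_poles if abs(wilson_mass(p)) < 1e-12]
doubler_masses = sorted({wilson_mass(p) for p in naive_poles})
print(len(naive_poles), len(wilson_poles), doubler_masses)
```

A corner with $k$ components equal to $\pi$ acquires the mass $2k$. Only the physical mode at $p = 0$ stays massless when $m_0 = 0$.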

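In the SM, the fermion fields describe the leptons and quarks. They are multicomponent fields with the gauge group acting unitarily on some of the indices, e.g.,

$$\psi_{\alpha a}(\mathbf{x}) \to \sum_b h_{ab}(\mathbf{x})\, \psi_{\alpha b}(\mathbf{x}),$$

where $\alpha$ is a Dirac index and $a, b$ are gauge (“color”) indices.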

6. Numerical Evaluation of Lattice Gauge Theory

The Berezin integral is not well suited for direct numerical evaluation. However, the actions used in the Standard Model are always quadratic in the fermion fields, so these fields can be “integrated out” using general formulae like

$$\int \prod_k d\bar\psi_k\, d\psi_k\; \exp\Big(-\sum_{i,j} \bar\psi_i M_{ij} \psi_j\Big) = \det M, \tag{66}$$

where the indices $i, j, k$ now stand for both the lattice points and the components of the fermion field. A derivation of this formula is given in Appendix A.

A “lucky coincidence” is that the determinant appearing in (66) is generally positive. The exceptions are field theories with a nonzero chemical potential for the fermions and those with a so-called topological term; these pose a serious difficulty for numerics, known as the sign problem. This positivity makes it possible to evaluate the functional integrals via “importance sampling”, such as the Monte Carlo method. Nowadays, refined versions of this are in use, for instance the so-called “hybrid Monte Carlo” (HMC) algorithm.

Generically, the idea is to define an algorithm that stochastically produces a sequence of field configurations. On each configuration, a number of quantities (“observables”) are evaluated. The algorithm is set up so that, in the limit of infinitely long sequences, the averages of the observables converge to their expectation values in the functional integral. For a detailed description of how this works, I recommend the textbooks mentioned before [9,10].
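To illustrate importance sampling on the simplest possible example, here is a plain Metropolis sketch (not HMC) for a one-dimensional periodic lattice scalar field. It assumes the action $\sum_t[\frac{1}{2}(\phi_{t+1}-\phi_t)^2 + \frac{m_0^2}{2}\phi_t^2 + \lambda\phi_t^4]$. For $\lambda = 0$, the exact infinite-volume result is $\langle \phi^2 \rangle = 1/(m_0\sqrt{m_0^2 + 4})$, which the estimate should reproduce within statistical errors. Parameter values (lattice size, step size, number of sweeps, seed) are arbitrary choices.

```python
import math
import random


def metropolis_phi_squared(m0=1.0, lam=0.0, L=64, sweeps=3000, therm=300,
                           step=1.5, seed=12345):
    """Metropolis estimate of <phi^2> for the periodic 1D lattice action
    S = sum_t [(phi_{t+1} - phi_t)^2 / 2 + m0^2 phi_t^2 / 2 + lam phi_t^4]."""
    rng = random.Random(seed)
    phi = [0.0] * L

    def local_action(t, value):
        # The part of S that depends on phi_t when it takes the given value
        left, right = phi[(t - 1) % L], phi[(t + 1) % L]
        kinetic = 0.5 * ((value - left) ** 2 + (right - value) ** 2)
        return kinetic + 0.5 * m0 ** 2 * value ** 2 + lam * value ** 4

    total, count = 0.0, 0
    for sweep in range(sweeps):
        for t in range(L):
            proposal = phi[t] + rng.uniform(-step, step)
            delta = local_action(t, proposal) - local_action(t, phi[t])
            # Accept with probability min(1, exp(-delta)); this satisfies
            # detailed balance with respect to the weight exp(-S)
            if delta <= 0.0 or rng.random() < math.exp(-delta):
                phi[t] = proposal
        if sweep >= therm:  # discard the thermalization sweeps
            total += sum(p * p for p in phi) / L
            count += 1
    return total / count


estimate = metropolis_phi_squared()
exact = 1.0 / math.sqrt(5.0)  # 1 / (m0 sqrt(m0^2 + 4)) for m0 = 1, lam = 0
print(estimate, exact)
```

Setting `lam` to a positive value gives an interacting model with no closed-form answer. There the sampler is the only tool, which is exactly the situation in lattice QCD.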

Let me now give a rough sketch of how particle masses are extracted from LGT, at first in unphysical lattice units. First of all, since

$$\lim_{\tau\to\infty} e^{-\tau H} = |\Omega\rangle\langle\Omega|,$$

where the right-hand side is the projection operator onto the ground state $\Omega$ of H, the true ground state will emerge automatically in the infinite volume limit. Remember that it is represented by the function 1 in the functional integral.

Physical states representing particles are obtained by inserting functionals (operators) with the appropriate quantum numbers. In LGT, these functionals have to be chosen gauge invariant, unless we want to represent some unphysical infinitely heavy external charges. By looking at the exponential decay rate of the two-point correlator of such operators, we obtain the lowest energy (=mass) among the states with the quantum numbers chosen, as described in Section 4.

Let us, for instance, consider the $\eta$ meson in a simplified version of QCD in which only the two lightest quarks (“up” and “down”) are present. It is a pseudoscalar meson with isospin 0, so an operator with the right quantum numbers is

$$O(\mathbf{x}) = \bar{u}(\mathbf{x})\, \gamma_5\, u(\mathbf{x}) + \bar{d}(\mathbf{x})\, \gamma_5\, d(\mathbf{x}).$$

$O$ is obviously gauge invariant. We consider the correlation function

$$C(t) = \langle O(\mathbf{x} + t\,\hat{0})\; O(\mathbf{x}) \rangle,$$

where $\hat{0}$ is the unit vector in the time direction. This function will decay exponentially for large lattice distances $t$, as long as we are not at a critical point. The exponential decay rate will in general depend on the quantum numbers used. Since we chose those of the $\eta$ meson, we interpret the asymptotic behavior as $C(t) \sim e^{-m_\eta t}$, where $m_\eta$ is the mass of the $\eta$ meson, so far in unphysical lattice units. To obtain such masses in physical units, we have to calibrate the lattice spacing in physical units. How this is accomplished was described in Section 4 for a free field, and we will return to it in the next section.

Of course, there are more clever choices for the operator ; the first improvement is to take the sum over the spatial part of the lattice, i.e., fix the momentum to 0.
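In practice, the lattice has a finite, periodic time extent $T$. A correlator dominated by a single state then behaves like $\cosh(m(t - T/2))$ rather than like a pure exponential. A common way to read off the mass is a “cosh effective mass”, obtained by solving for $m$ from the ratio of neighbouring time slices. The sketch below (hypothetical helper names, tested on synthetic data rather than on real lattice output) shows this together with the zero-momentum projection.

```python
import math


def project_zero_momentum(correlator_tx):
    # correlator_tx[t][x]: correlator between a source at time 0 and a sink at (t, x);
    # summing over the spatial sites x projects onto zero spatial momentum
    return [sum(row) for row in correlator_tx]


def cosh_effective_mass(corr, t, T, m_max=10.0):
    """Solve corr[t] / corr[t+1] = cosh(m (t - T/2)) / cosh(m (t + 1 - T/2)) for m.

    Assumes a single state dominates, so corr[t] ~ A cosh(m (t - T/2)) on a
    periodic lattice of time extent T, and that t + 1 < T / 2.
    """
    target = corr[t] / corr[t + 1]

    def ratio(m):
        # Increases monotonically from 1 at m = 0 when t + 1 < T / 2
        return math.cosh(m * (t - T / 2)) / math.cosh(m * (t + 1 - T / 2))

    lo, hi = 0.0, m_max
    for _ in range(100):  # bisection
        mid = 0.5 * (lo + hi)
        if ratio(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Synthetic check: a pure cosh with mass 0.5 on a lattice of time extent T = 32
T = 32
corr = [3.0 * math.cosh(0.5 * (t - T / 2)) for t in range(T)]
print(cosh_effective_mass(corr, 5, T), math.log(corr[5] / corr[6]))
```

With real data, the effective mass is plotted against $t$. One looks for a plateau, where excited-state contributions have died out.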

7. Renormalization and Continuum Limit

We have described in some detail in Section 4 how the continuum limit is constructed for the trivial case of a free scalar field. We now sketch how this is accomplished in the context of a more complicated theory, such as lattice QCD.

In this case, there is more than one correlation length, depending on the quantum numbers of the observables whose correlations we consider. As in the trivial free case, these correlation lengths are the inverse masses (in lattice units) of the lightest particles appearing in the respective channels. One of these masses has to be picked to define the reference mass (which corresponds to the inverse of a standard of length, as described in Section 4) by equating it with the corresponding experimentally measured mass. Again, by this choice, we produce a dimensional transmutation that gives dimension to the lattice parameters.

The first step of renormalization is expressing all lengths and masses in physical units, using the reference mass (or the corresponding correlation length) as the standard.

After this trivial renormalization has been performed, one can try to take the continuum (=long wavelength) limit. Taking the continuum limit of a lattice field theory is necessary even if one does not believe in the reality of the continuum. This is so because in the absence of any detailed knowledge about the possible breakdown of the continuum at short distances, one still wants to extract those aspects of the theory that do not depend on the unknown features of that breakdown.

A QFT is completely determined by its Euclidean or Minkowskian correlation functions. The continuum limit for these correlation functions of LFT/LGT requires the following. We first renormalize all masses and lengths as stated above. Then we vary the parameters of the theory in such a way that the reference scale grows to infinity in lattice units. As explained in Section 4, this means that we have to approach a critical point of the model in question. In a theory like QCD, the critical points actually form a manifold, and a priori any point on that manifold determines a continuum limit.

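To describe renormalization and the continuum limit for the correlation functions, we also have to renormalize the field strength (cf. Section 4). Let us consider a scalar field theory to keep the notation simple. So, let

$$G_N(\mathbf{x}_1, \ldots, \mathbf{x}_N) = \langle \phi(\mathbf{x}_1) \cdots \phi(\mathbf{x}_N) \rangle, \qquad \mathbf{x}_i \in \mathbb{Z}^4,$$

be the correlation function of N fields on the unit lattice. The renormalized correlation function on the lattice with lattice constant $a = 1/\xi_{\mathrm{lat}}$ (where $\xi_{\mathrm{lat}}$ is the correlation length chosen to fix the length standard) is then

$$G_N^{(a)}(\mathbf{X}_1, \ldots, \mathbf{X}_N) = Z^{-N/2}\, G_N\big([\mathbf{X}_1/a], \ldots, [\mathbf{X}_N/a]\big), \qquad \mathbf{X}_i \in \mathbb{R}^4,$$

where $[\mathbf{y}]$ is the integer part of $\mathbf{y}$ (taken componentwise). Then, the continuum correlation functions should be defined as

$$G_N^{\mathrm{cont}}(\mathbf{X}_1, \ldots, \mathbf{X}_N) = \lim_{a\to 0} G_N^{(a)}(\mathbf{X}_1, \ldots, \mathbf{X}_N).$$

$Z$ (“wave function renormalization”) is a factor that has to go to zero as $a \to 0$ in such a way that the limit exists and is nontrivial (as discussed in detail in Section 4 for a free scalar field). If everything works out, $Z$ can be chosen independent of the number N of fields, and the limit satisfies a number of properties; in particular, it is invariant under rotations. As remarked earlier, this is not easy and has so far only been achieved in space–time dimensions less than four. The continuum limit will always inherit RP from its lattice approximation.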

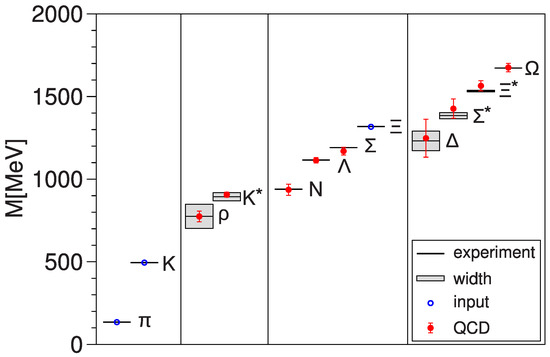

In the numerical evaluation of a LGT, such as Lattice QCD, one is not so much interested in correlation functions; rather, one wants to extract the masses of the hadrons (protons, neutrons, hyperons, pions, kaons etc.).

QCD has a number of free parameters, such as bare quark masses. The critical manifold is parameterized by some of the parameters of the theory. One has to use a number of experimental inputs to fix the point on the critical manifold one wants to approach, but the number of inputs is much smaller than the number of masses predicted by lattice QCD. So, comparison of theory and experiment is quite stringent. Its success gives us confidence that QCD is indeed the correct theory describing the strong nuclear interactions.

I include a rather ancient plot (Figure 3) to illustrate this success.

Figure 3.

Hadron masses: comparison of theory and experiment. From [35].

There are many other successful lattice computations of experimentally measurable quantities. They include decay rates, quark masses and mixing matrix (CKM) elements. Many of these results can be found on the FLAG web site [36], which collects and critically evaluates them.

To sum up, LGT provides a very effective, nonperturbative way of comparing theory (for instance QCD) with experiment; so far, no significant discrepancies have been found.

8. Lattice Field Theory vs. Quantum Cellular Automata

Quantum Cellular Automata (QCA) [11,12,14,16] and LFTs can both be viewed as lattice versions of QFT. The main difference is that QCA are using a real-time evolution, which is unitary, whereas LFTs are using imaginary time steps. If all goes well, the two approaches should lead to the “same” continuum limit, meaning that the continuum limit of a LFT is the analytic continuation of the continuum limit of the corresponding QCA.

A minor point is that the QCA corresponding to a LFT containing bosons will require an infinite dimensional Hilbert space at each lattice point; since in the definition of a QCA it is often (but not always) assumed that each lattice point carries a finite dimensional space, this will then require a further approximation argument.

There is an alternative approach to LGT, which is even closer to QCA: the so-called Hamiltonian LGT proposed by Kogut and Susskind (KS-LGT) [37]. It defines a Hamiltonian on a spatial lattice; time is continuous. The theory can be seen as the limit of a QCA in which the time step has been taken to zero. Conversely, the discrete-time path integral for bosonic lattice field theory is a QCA [38] (albeit with an infinite dimensional Hilbert space at each lattice point).

One could let the unitary time evolution obtained by exponentiating the Kogut–Susskind Hamiltonian run for a finite time $\Delta t$, and so obtain a unitary evolution resembling a QCA. But because this would involve infinitely many infinitesimal time steps, the evolution would be nonlocal, coupling infinitely many lattice points after the finite time step $\Delta t$. So, it would not be a QCA in the proper sense. Nevertheless, a proposal has been made to use the KS-LGT as a basis for a quantum simulation of the real-time evolution of a two-dimensional gauge theory [39].

Another difference concerns the treatment of time and space. In QCA and Hamiltonian lattice field theory, time and space are treated differently, whereas in LFT there is complete symmetry between them. The discretization in LGT breaks rotation invariance, whereas in QCA and KS-LGT it breaks Lorentz invariance. In both approaches, it is necessary to take the continuum limit to have a chance to recover rotation invariance or Lorentz invariance, respectively. Conceivably, QCA have a better chance of achieving this than KS-LGT because they already have a finite speed of propagation built into their formalism.

On the other hand, the Kogut–Susskind Hamiltonian (of course with a spatial cutoff in place) works directly in the appropriate Fock space for the gauge bosons and the fermionic matter fields; it does not require a somewhat elaborate construction like the one given in the QCA case for fermions [15,16], where at first a much larger Hilbert space of distinguishable particles is built, only to be reduced afterwards to the physical subspace.

QCA, as well as KS-LGT, require finding appropriate wave functions in the Fock space of the fields. A crucial requirement for a physical wave function in a gauge theory is the so-called Gauss constraint, which demands that the wave functions be invariant under time-independent gauge transformations. The physical meaning of the constraint is the absence of immovable external charges, which in LGT is automatically assured by considering only gauge invariant functionals. Finding correct physical wave functions is difficult. Even finding the correct vacuum wave function for an interacting QCA or a Hamiltonian model is a challenge, but it may be a rewarding task.

In LFT/LGT, on the other hand, one does not have to solve this problem: since

$$\lim_{\tau\to\infty} e^{-\tau H} = |\Omega\rangle\langle\Omega|,$$

as we have seen, the true ground state will emerge automatically in the infinite volume limit and is represented by the function 1 in the functional integral. On the other hand, finding the wave functions for the ground state, as well as other states in QCA or Hamiltonian LGT, might reveal physically interesting features. Some inspiration about how to approach this problem might be found in older work on two-dimensional models in which the Dirac sea was “first drained and then refilled” [40,41].

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study.

Acknowledgments

I am grateful to Todd Brun for the invitation to contribute to this volume. Leonard Mlodinow was a great help in improving this article through extensive discussions.

Conflicts of Interest

The author declares no conflicts of interest.

Appendix A. Derivation of Equation (66)

As mentioned, the Grassmann variables are nilpotent because they anticommute with themselves: each of them squares to zero. In a finite volume, the number of linearly independent elements among these variables is finite. Call this number N; then the power series

actually terminates at order N.
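A small Grassmann-algebra implementation in Python can check both the termination of the series and the value it leads to. The sketch uses our own conventions, which are assumptions and not necessarily those of Equation (59). The bilinear is written as $X = \sum_{ij} \bar\psi_i M_{ij} \psi_j$ with a plus sign in the exponent. The Berezin integral is taken to pick out the coefficient of the monomial $\bar\psi_1\psi_1\bar\psi_2\psi_2\cdots\bar\psi_N\psi_N$. The matrix `M` is an arbitrary example. With N = 3 pairs, $X^3$ contains only that top monomial, and $X^4$ vanishes identically.

```python
from math import factorial

def reorder_sign(seq):
    """Sign picked up when sorting a sequence of distinct anticommuting generators."""
    inversions = sum(1 for a in range(len(seq))
                     for b in range(a + 1, len(seq)) if seq[a] > seq[b])
    return -1 if inversions % 2 else 1

def gmul(x, y):
    """Product in the Grassmann algebra.

    An element is a dict {sorted tuple of generator indices: coefficient};
    generator 2*i is the barred and 2*i + 1 the unbarred variable of pair i.
    """
    out = {}
    for mono_a, ca in x.items():
        for mono_b, cb in y.items():
            if set(mono_a) & set(mono_b):
                continue                      # a repeated generator squares to zero
            seq = mono_a + mono_b
            key = tuple(sorted(seq))
            out[key] = out.get(key, 0) + reorder_sign(seq) * ca * cb
    return {k: v for k, v in out.items() if v != 0}

def bilinear(M):
    """The even element sum_{i,j} barred_i * M[i][j] * unbarred_j."""
    out = {}
    for i, row in enumerate(M):
        for j, m in enumerate(row):
            seq = (2 * i, 2 * j + 1)
            out[tuple(sorted(seq))] = reorder_sign(seq) * m
    return {k: v for k, v in out.items() if v != 0}

M = [[2, 1, 0],
     [1, 3, 1],
     [0, 1, 4]]          # arbitrary integer matrix, det M = 18
N = len(M)
X = bilinear(M)
powers = {0: {(): 1}}    # X**0 is the unit element
for n in range(1, N + 2):
    powers[n] = gmul(powers[n - 1], X)
    print(f"X^{n}: {len(powers[n])} nonzero monomials")

top = tuple(range(2 * N))  # barred_0 unbarred_0 barred_1 unbarred_1 ...
print("coefficient of the top monomial in X^N / N!:", powers[N][top] / factorial(N))
```

Divide by N!, the coefficient of $X^N$ in the exponential series. The top coefficient then equals det M = 18, in line with Equation (66) under these conventions. Other sign or ordering conventions change the result by at most an overall sign.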

The Berezin integral over an element of the Grassmann algebra according to Equation (59) picks out the coefficient of the highest order term, so

Note that

(A2) still contains a sum over $N^{2N}$ terms, but most of them vanish because of the nilpotency. The surviving terms are the ones in which every barred and every unbarred variable appears exactly once. There are $(N!)^2$ of them because each possible permutation of the barred variables, as well as of the unbarred ones, appears.

By moving (commuting) bilinears around, we can always order the terms under the integral in such a way that the barred variables appear in their natural order. That way, each term appears $N!$ times (once for each ordering of the barred variables), so that $N!$ different terms remain.
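This counting can be verified by brute force. The check assumes (our assumption) that (A2) is the N-th power of a bilinear written as a sum over index pairs (i, j), with i labelling the barred and j the unbarred variable. The sketch tracks only which variables occur, not signs or matrix elements. It enumerates all ordered products, keeps those in which every variable occurs exactly once, and groups them by the permutation i ↦ j that remains after the commuting bilinears are sorted.

```python
from collections import Counter
from itertools import product
from math import factorial

def count_terms(N):
    """Expand the N-th power of sum_{i,j} (barred_i unbarred_j) as ordered products."""
    pairs = [(i, j) for i in range(N) for j in range(N)]  # one factor: barred_i * unbarred_j
    total = surviving = 0
    classes = Counter()
    for choice in product(pairs, repeat=N):          # one ordered product of N bilinears
        total += 1
        barred = {i for i, _ in choice}
        unbarred = {j for _, j in choice}
        if len(barred) == N and len(unbarred) == N:  # otherwise nilpotency kills the term
            surviving += 1
            # bilinears commute, so sort them by their barred index;
            # what remains is the permutation p: i -> j
            p = tuple(j for _, j in sorted(choice))
            classes[p] += 1
    return total, surviving, classes

for N in range(1, 5):
    total, surviving, classes = count_terms(N)
    print(N, total, surviving, len(classes), set(classes.values()))
```

For each N, the columns are $N^{2N}$, $(N!)^2$, $N!$ and $\{N!\}$.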

So, the surviving terms can be written as

where p ranges over the group of permutations of the N elements, and the sign of the permutation p (+1 for even, −1 for odd permutations) arises from moving the unbarred elements into the standard order.

(A4) is just the (Leibniz) definition of the determinant of the matrix appearing in the bilinear form.
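The signed sum over permutations in (A4) is the Leibniz formula for the determinant. A direct Python sketch compares it with `numpy.linalg.det`, an LU-based routine, for a small integer matrix and a random 5×5 matrix. Both matrices are arbitrary examples.

```python
from itertools import permutations
import numpy as np

def perm_sign(p):
    """Sign of a permutation, from the parity of its inversion count."""
    inversions = sum(1 for a in range(len(p))
                     for b in range(a + 1, len(p)) if p[a] > p[b])
    return -1 if inversions % 2 else 1

def leibniz_det(M):
    """det M = sum over permutations p of sign(p) * prod_i M[i][p(i)]."""
    n = len(M)
    total = 0
    for p in permutations(range(n)):
        term = perm_sign(p)
        for i in range(n):
            term *= M[i][p[i]]
        total += term
    return total

M = [[2, 1, 0], [1, 3, 1], [0, 1, 4]]
print(leibniz_det(M))                      # 18
rng = np.random.default_rng(0)
B = rng.normal(size=(5, 5))
print(leibniz_det(B), np.linalg.det(B))    # agree up to rounding
```

The Leibniz sum has N! terms and is practical only for tiny N. Numerical libraries therefore compute determinants via LU factorization instead.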

References

1. Available online: www.latimes.com/archives/la-xpm-1999-nov-16-mn-34060-story.html (accessed on 6 March 2025).

2. Available online: https://www.youtube.com/watch?v=GL77oOnrPzY (accessed on 6 March 2025).

3. Available online: https://www.quantamagazine.org/tag/the-unraveling-of-space-time/ (accessed on 6 March 2025).

4. Bopp, F. Diracgleichung im Gitterraum als Beispiel einer aus Elementarprozessen folgenden Theorie. Z. Phys. 1967, 200, 117–132.

5. Guerra, F.; Rosen, L.; Simon, B. The P(ϕ)₂ Euclidean quantum field theory as classical statistical mechanics. Ann. Math. 1975, 101, 111–189.

6. Glimm, J.; Jaffe, A. Quantum Physics, a Functional Integral Point of View; Springer: New York, NY, USA, 1987.

7. Wilson, K.G. Confinement of Quarks. Phys. Rev. D 1974, 10, 2445–2459.

8. Creutz, M. Monte Carlo Study of Quantized SU(2) Gauge Theory. Phys. Rev. D 1980, 21, 2308–2315.

9. Montvay, I.; Münster, G. Quantum Fields on a Lattice; Cambridge University Press: Cambridge, UK, 1997.

10. Gattringer, C.; Lang, C.B. Quantum Chromodynamics on the Lattice; Springer: Berlin/Heidelberg, Germany, 2010.

11. Arrighi, P. An overview of quantum cellular automata. Nat. Comput. 2019, 18, 885–899.

12. Farrelly, T. A review of Quantum Cellular Automata. Quantum 2020, 4, 368.

13. Mlodinow, L.; Brun, T.A. Discrete spacetime, quantum walks and relativistic wave equations. Phys. Rev. A 2018, 97, 042131.

14. Mlodinow, L.; Brun, T.A. Quantum field theory from a quantum cellular automaton in one spatial dimension and a no-go theorem in higher dimensions. Phys. Rev. A 2020, 102, 042211.

15. Mlodinow, L.; Brun, T.A. Quantum cellular automata and quantum field theory in two spatial dimensions. Phys. Rev. A 2020, 102, 062222.

16. Mlodinow, L.; Brun, T.A. Fermionic and bosonic quantum field theories from quantum cellular automata in three spatial dimensions. Phys. Rev. A 2021, 103, 052203.

17. Watrous, J. On One-Dimensional Quantum Cellular Automata. In Proceedings of the IEEE 36th Annual Foundations of Computer Science, Milwaukee, WI, USA, 23–25 October 1995; IEEE: New York, NY, USA, 1995; pp. 528–537.

18. Raussendorf, R. Quantum cellular automaton for universal quantum computation. Phys. Rev. A 2005, 72, 022301.

19. Wick, G.C. Properties of Bethe-Salpeter Wave Functions. Phys. Rev. 1954, 96, 1124–1134.

20. Dyson, F.J. The S matrix in quantum electrodynamics. Phys. Rev. 1949, 75, 1736.

21. Schwinger, J. On the Euclidean structure of relativistic field theory. Proc. Natl. Acad. Sci. USA 1958, 44, 956.

22. Feynman, R.P.; Hibbs, A.R. Quantum Mechanics and Path Integrals; Dover: Mineola, NY, USA, 2005.

23. Nakahara, M. Geometry, Topology and Physics; IOP Publishing: Bristol, UK, 2003.

24. Kac, M. On Distributions of Certain Wiener Functionals. Trans. Am. Math. Soc. 1949, 65, 1. Available online: https://www.jstor.org/stable/1990512 (accessed on 6 March 2025).

25. Osterwalder, K.; Schrader, R. Axioms for Euclidean Green’s Functions. Commun. Math. Phys. 1973, 31, 83–112.

26. Osterwalder, K.; Schrader, R. Axioms for Euclidean Green’s Functions. 2. Commun. Math. Phys. 1975, 42, 281–305.

27. Osterwalder, K.; Seiler, E. Gauge Field Theories on the Lattice. Ann. Phys. 1978, 110, 440.

28. Lüscher, M. Construction of a Selfadjoint, Strictly Positive Transfer Matrix for Euclidean Lattice Gauge Theories. Commun. Math. Phys. 1977, 54, 283.

29. Seiler, E. Gauge Theories as a Problem of Constructive Quantum Field Theory and Statistical Mechanics. Lect. Notes Phys. 1982, 159, 1–192.

30. Wegner, F.J. Duality in Generalized Ising Models and Phase Transitions Without Local Order Parameters. J. Math. Phys. 1971, 12, 2259–2272.

31. Yang, C.N.; Mills, R.L. Conservation of Isotopic Spin and Isotopic Gauge Invariance. Phys. Rev. 1954, 96, 191–195.

32. Berezin, F.A. The method of second quantization. Pure Appl. Phys. 1966, 24, 1–228.

33. Nielsen, H.B.; Ninomiya, M.M. A no-go theorem for regularizing chiral fermions. Phys. Lett. B 1981, 105, 219.

34. Wikipedia: Fermion Doubling. Available online: https://en.wikipedia.org/wiki/Fermion_doubling (accessed on 6 March 2025).

35. Dürr, S.; Fodor, Z.; Frison, J.; Hoelbling, C.; Hoffmann, R.; Katz, S.D.; Krieg, S.; Kurth, T.; Lellouch, L.; Lippert, T.; et al. Ab-Initio Determination of Light Hadron Masses. Science 2008, 322, 1224–1227.

36. Available online: http://flag.unibe.ch (accessed on 6 March 2025).

37. Kogut, J.B.; Susskind, L. Hamiltonian Formulation of Wilson’s Lattice Gauge Theories. Phys. Rev. D 1975, 11, 395–408.

38. Farrelly, T.; Streich, J. Discretizing quantum field theories for quantum simulation. arXiv 2020, arXiv:2002.02643.

39. Martinez, E.A.; Muschik, C.A.; Schindler, P.; Nigg, D.; Erhard, A.; Heyl, M.; Hauke, P.; Dalmonte, M.; Monz, T.; Zoller, P.; et al. Real-time dynamics of lattice gauge theories with a few-qubit quantum computer. Nature 2016, 534, 516–519.

40. Bergknoff, H.; Thacker, H.B. Method for Solving the Massive Thirring Model. Phys. Rev. Lett. 1979, 42, 135.

41. Bergknoff, H.; Thacker, H.B. Structure and Solution of the Massive Thirring Model. Phys. Rev. D 1979, 19, 3666.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).