Abstract

Prompt tuning adapts visual-language models (VLMs) to specialized tasks, often by learning task-specific textual tokens that tailor the broad capabilities of a pre-trained VLM to narrower applications. This approach, exemplified by CoOp-based methods, combines learnable textual tokens with class tokens to capture task-specific textual knowledge. However, such specific textual knowledge often fails to generalize beyond the seen categories, because it tends to override the general textual knowledge that underlies the model’s broad applicability. To address this base-novel dilemma, we propose Sparse Knowledge-guided Context Optimization (Sparse-KgCoOp), which aims to strengthen the ability of learnable prompts to generalize to unseen categories. Sparse-KgCoOp is based on the premise that reducing the differences between learnable prompts and their hand-crafted counterparts through sparsification can mitigate the erosion of general knowledge. Specifically, Sparse-KgCoOp narrows the gap between the textual embeddings produced by the learnable prompts and those produced by the hand-crafted prompts, preserving general knowledge while maintaining adaptability. Extensive experiments on several benchmarks demonstrate that Sparse-KgCoOp is an efficient method for prompt tuning.

1. Introduction

Trained on large collections of image–text pairs, pre-trained visual-language models (VLMs) acquire a broad spectrum of general knowledge, which enables them to generalize across diverse tasks. Notable approaches include contrastive language-image pre-training (CLIP) [1], Flamingo [2], and ALIGN [3]. Although VLMs capture both visual cues and textual semantics well, adapting them to a new task normally requires a substantial, high-quality dataset, and collecting such data in real visual-language scenarios is often impractical. Prompt tuning has emerged to address this challenge: it adapts pre-trained VLMs to specific downstream applications and has proven effective in many few-shot and zero-shot visual recognition tasks [4,5,6,7,8,9,10,11].

The visual-language prompt tuning methods considered in this paper focus solely on textual prompts and do not use visual prompts. These methods typically use task-relevant textual tokens to inject task-specific textual knowledge that is useful for prediction. In CLIP, the prompt template “a photo of a [CLASS]” is used to construct text-based class embeddings for zero-shot prediction [1]. The knowledge encoded by such fixed, hand-crafted prompts, termed general textual knowledge, generalizes well to novel tasks. However, it may not capture the nuances of a downstream task, because it does not account for each task’s specific requirements. To capture more tailored, task-specific knowledge, Context Optimization (CoOp) [12] and Conditional Context Optimization (CoCoOp) [13] introduce sets of learnable prompts that are optimized on labeled samples in few-shot settings. The knowledge produced by these learnable-prompt methods is referred to as specific textual knowledge. However, CoOp-based methods can generalize poorly to unseen categories within the same task and may underperform CLIP on novel classes, as shown in Table 1.

Table 1.

Comparison with existing methods: the proposed Sparse-KgCoOp is efficient and achieves higher performance. HM denotes the harmonic mean of base- and new-class accuracy. All values are reported in percentage (%).
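HM rewards methods that are accurate on both base and new classes at once. A minimal Python sketch of the metric follows; the accuracies in the example are hypothetical and are not taken from Table 1.

```python
def harmonic_mean(base_acc: float, new_acc: float) -> float:
    """Harmonic mean (HM) of base- and new-class accuracy, both in percent."""
    if base_acc + new_acc == 0:
        return 0.0
    return 2.0 * base_acc * new_acc / (base_acc + new_acc)


# Hypothetical accuracies: both pairs have the same arithmetic mean (75%),
# but HM penalizes the method that trades new-class accuracy for base accuracy.
unbalanced = harmonic_mean(90.0, 60.0)
balanced = harmonic_mean(75.0, 75.0)
print(f"unbalanced HM = {unbalanced:.2f}%, balanced HM = {balanced:.2f}%")
```

Because HM is dominated by the smaller of the two accuracies, a method cannot raise it by fitting the base classes alone, which makes it a natural summary of the base-novel trade-off.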

Because specific textual knowledge is learned from labeled samples in a few-shot setting, it is inherently tuned to the seen classes and may be biased against classes not seen during training, which leads to poor results on novel classes. For instance, without any training, CLIP achieves higher accuracy on new, unseen classes than CoOp-based methods: 74.22% for CLIP versus 67.99% for CoOp and 71.69% for CoCoOp. CLIP’s higher accuracy reflects the robustness of its general textual knowledge on novel classes. In contrast, the specific textual knowledge learned by CoOp-based approaches tends to discard this general knowledge, a phenomenon known as catastrophic forgetting. The more severe the forgetting, the larger the drop in new-class accuracy.

To preserve general textual knowledge while improving generalization to unseen classes, we propose a new prompt tuning approach, Sparse Knowledge-guided Context Optimization (Sparse-KgCoOp). The key idea of Sparse-KgCoOp is to limit the deviation from general textual knowledge by reducing the discrepancy between the learnable and the hand-crafted prompts. The hand-crafted prompt “a photo of a [CLASS]” is fed into the text encoder of CLIP to obtain the general textual embedding, which represents the general textual knowledge. Sparse-KgCoOp, in turn, learns a set of learnable prompts that produce task-specific textual embeddings, and it reduces the Euclidean distance between the general and specific textual embeddings to retain the essential general textual knowledge. Following CoOp and CoCoOp, Sparse-KgCoOp optimizes the learnable prompts with a contrastive loss between the task-specific textual embeddings and the visual embeddings. Extensive experiments on 11 image classification datasets under three settings (base-to-new generalization, few-shot classification, and domain generalization) demonstrate the effectiveness of Sparse-KgCoOp. As summarized in Table 1, Sparse-KgCoOp is efficient and achieves higher performance. Our main contributions are summarized as follows:

- We investigate a problem called the base-novel dilemma: most prompt tuning methods for visual-language models (e.g., CoOp and CoCoOp) achieve good base-class performance at the expense of new-class accuracy, which limits their use on real-world data containing a mix of base and new classes.

- We propose Sparse-KgCoOp to address this problem. It outperforms existing methods in overall performance and, notably, substantially improves new-class accuracy over CoOp and CoCoOp, underscoring the importance of preserving general textual knowledge.

- Sparse-KgCoOp has a training cost comparable to CoOp’s and lower than CoCoOp’s. Experimental results demonstrate its effectiveness.

2. Related Work

2.1. Vision-Language Models

Recent studies indicate that models trained on image–text pairs outperform models trained on visual inputs alone, which has led to powerful visual-language models (VLMs). VLMs have been improved in several ways: first, by using more powerful textual or visual encoders, such as Transformers [14]; second, by applying contrastive representation learning [15]; and third, by training on larger collections of images [1,3]. Because VLM training depends on large labeled datasets, unsupervised or weakly supervised learning has been used to train VLMs on images without annotations [16]. Masked Language Modeling (MLM), which masks words in the text, improves the robustness of the visual and textual embeddings [17,18]. Similarly, Masked Autoencoders [19], which mask random patches of an input image, have proven to be efficient self-supervised learners. A representative VLM is CLIP, which trains a visual encoder and a text encoder with a contrastive loss on 400 million image–text pairs and transfers well to categories it has not seen before. Like CoOp and CoCoOp, our approach builds on the pre-trained CLIP model for knowledge transfer. Our method aims to balance generalization on base and novel classes and further improve overall performance.
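CLIP’s contrastive objective can be sketched as a symmetric cross-entropy over the cosine-similarity matrix of a batch of matched image–text pairs: image i should score highest against text i, and text i against image i. The Python sketch below is illustrative only; it uses toy lists instead of encoder outputs and a fixed temperature `tau` (an assumption), whereas CLIP learns its temperature during pre-training.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def log_softmax_at(row: List[float], index: int) -> float:
    """log softmax(row)[index], computed with the log-sum-exp trick."""
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return row[index] - log_z


def clip_contrastive_loss(images: List[Vector], texts: List[Vector], tau: float = 0.07) -> float:
    """Symmetric InfoNCE loss: image i should match text i and vice versa."""
    imgs = [normalize(v) for v in images]
    txts = [normalize(v) for v in texts]
    n = len(imgs)
    # logits[i][j] = cos(image_i, text_j) / tau
    logits = [[sum(a * b for a, b in zip(im, tx)) / tau for tx in txts] for im in imgs]
    image_to_text = -sum(log_softmax_at(logits[i], i) for i in range(n)) / n
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    text_to_image = -sum(log_softmax_at(columns[j], j) for j in range(n)) / n
    return 0.5 * (image_to_text + text_to_image)


images = [[1.0, 0.0], [0.0, 1.0]]
aligned = clip_contrastive_loss(images, [[0.9, 0.1], [0.1, 0.9]])
swapped = clip_contrastive_loss(images, [[0.1, 0.9], [0.9, 0.1]])
print(f"aligned loss = {aligned:.4f}, swapped loss = {swapped:.4f}")
```

Correctly paired texts give a much lower loss than swapped ones, which is the signal that pulls matching image and text embeddings together.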

2.2. Prompt Tuning

To adapt pre-trained VLMs to specific downstream applications, prompt tuning methods [1,20] commonly use task-specific textual tokens to infer task-specific textual knowledge. For example, CLIP [1] uses a hand-crafted template such as “a photo of a [CLASS]” to construct text embeddings for zero-shot prediction. However, such fixed prompts may not capture the intricacies of downstream tasks because of their generic nature. To overcome this limitation, Context Optimization (CoOp) [12] replaces the hand-crafted prompts with learnable soft prompts optimized on a small set of annotated samples. A notable shortcoming of CoOp is that the learned prompts are identical for all images of a task, ignoring the unique attributes of each image. To address this, Conditional Context Optimization (CoCoOp) [13] generates an image-conditional context for each image and combines it with the learnable textual context. Specifically, it uses a lightweight neural network to produce, for each image, a vector that is added to the learnable context vectors. ProDA [21] goes further by learning a distribution over prompts to obtain better task-oriented tokens. ProGrad [22] updates the prompts only with gradients that do not conflict with the “general knowledge” elicited by the hand-crafted prompts. DenseCLIP [9] adopts a context-aware prompting strategy for dense prediction tasks, while CLIP-Adapter [23] adds lightweight adapters to fine-tune the visual or textual representations and achieves strong performance.
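CoCoOp’s image-conditional context can be sketched as follows: a small two-layer network (the Meta-Net) maps the image feature to a vector pi, and the same pi is added to every learnable context vector, so each v_m becomes v_m + pi. The toy dimensions and random weights below are illustrative assumptions, not CoCoOp’s actual configuration.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(x: Vector, weight: Matrix, bias: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def meta_net(feature: Vector, w1: Matrix, b1: Vector, w2: Matrix, b2: Vector) -> Vector:
    """Linear -> ReLU -> Linear bottleneck producing the conditional token pi."""
    hidden = [max(0.0, h) for h in linear(feature, w1, b1)]
    return linear(hidden, w2, b2)


def conditional_context(context: List[Vector], feature: Vector, params) -> List[Vector]:
    """Shift every context vector v_m by the same image-dependent vector pi."""
    pi = meta_net(feature, *params)
    return [[v + p for v, p in zip(vec, pi)] for vec in context]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
feat_dim, hidden_dim, ctx_dim, n_ctx = 8, 2, 4, 4  # toy sizes (assumed)
params = (rand_matrix(hidden_dim, feat_dim, rng), [0.0] * hidden_dim,
          rand_matrix(ctx_dim, hidden_dim, rng), [0.0] * ctx_dim)
context = rand_matrix(n_ctx, ctx_dim, rng)  # shared learnable v_1..v_M
image_feature = [rng.gauss(0.0, 1.0) for _ in range(feat_dim)]
conditioned = conditional_context(context, image_feature, params)
```

Because pi depends on the image, every image gets its own prompt; this per-image forward pass through the Meta-Net is the extra cost that CoOp-style methods avoid.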

Among existing techniques, CoOp and ProGrad are the most closely related to our method. CoOp serves as the foundation of Sparse-KgCoOp. In contrast to CoOp, Sparse-KgCoOp adds a constraint that keeps the divergence between the learnable and the hand-crafted prompts small, which improves classification of novel categories. ProGrad shares with Sparse-KgCoOp the idea of aligning specific learnable knowledge with general knowledge. However, ProGrad updates the prompts only along gradient directions that agree with the general knowledge and removes conflicting components, effectively ignoring substantial conflicting information during prompt tuning. In contrast, Sparse-KgCoOp keeps all updates and only constrains the specific learnable knowledge to remain close to the general knowledge. Moreover, Sparse-KgCoOp is more efficient than CoCoOp because it does not require an additional image-conditioned network. Extensive evaluations show that Sparse-KgCoOp achieves superior performance with shorter training time.

3. Methodology

In this section, we first introduce contrastive language-image pre-training (CLIP) and Context Optimization (CoOp), and then present the proposed Sparse Knowledge-guided Context Optimization (Sparse-KgCoOp).

3.1. Contrastive Language-Image Pre-Training Method

The contrastive language-image pre-training (CLIP) method comprises two encoders: a visual encoder (typically ViT [24] or ResNet [25]) and a text encoder (typically a Transformer [14]). CLIP aligns the embedding spaces of the visual and language modalities, so it can be used for zero-shot classification in the aligned embedding space. The input text for each class is obtained from a predefined template such as “a photo of a [CLASS$_i$]”, where [CLASS$_i$] is the name of the $i$-th class. These texts are fed into the text encoder to produce a set of weight vectors $\{\mathbf{w}_i\}_{i=1}^{K}$, each representing one of the $K$ categories. Simultaneously, the image encoder produces the image feature $\mathbf{x}$. The cosine similarities between the image feature and the text vectors are then computed and passed through a softmax to obtain the prediction probabilities:

$$p(y=i \mid \mathbf{x}) = \frac{\exp\left(\cos(\mathbf{w}_i, \mathbf{x})/\tau\right)}{\sum_{j=1}^{K} \exp\left(\cos(\mathbf{w}_j, \mathbf{x})/\tau\right)},$$

where $\cos(\cdot,\cdot)$ denotes cosine similarity and $\tau$ is a learned temperature parameter.
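The zero-shot prediction rule is a softmax over temperature-scaled cosine similarities. A minimal Python sketch follows; the toy class weights stand in for text-encoder outputs, and the default temperature of 0.01 (a logit scale of 100) is an assumption for illustration.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def zero_shot_probs(image_feature: Sequence[float],
                    class_weights: List[Sequence[float]],
                    tau: float = 0.01) -> List[float]:
    """p(y = i | x) = softmax_i(cos(w_i, x) / tau) over the K class weights."""
    logits = [cosine(w, image_feature) / tau for w in class_weights]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy "text-encoder outputs" w_1..w_3 and an image feature x (all hypothetical).
class_weights = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
image_feature = [0.9, 0.3, 0.1]
probs = zero_shot_probs(image_feature, class_weights)
predicted = max(range(len(probs)), key=probs.__getitem__)
```

A small temperature sharpens the distribution, so even modest differences in cosine similarity yield a confident prediction.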

3.2. Prompt-Based Learning

To enhance the transferability of CLIP and reduce the need for prompt engineering, CoOp [12] was introduced. CoOp is a prompt tuning technique for vision-language models that improves performance on downstream tasks by optimizing the textual prompt. It introduces learnable prompt parameters into a pre-trained vision-language model and fine-tunes them on a small amount of labeled data to adapt to different visual recognition tasks. Instead of using “a photo of a” as the context, CoOp introduces $M$ learnable context vectors $\{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_M\}$, each with the same dimension as the word embeddings. The prompt for the $i$-th class, denoted by $\mathbf{t}_i$, is

$$\mathbf{t}_i = \{\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_M, \mathbf{c}_i\},$$

where $\mathbf{c}_i$ is the word embedding of the $i$-th class name. The context vectors are shared among all classes. Let $g(\cdot)$ denote the text encoder; the prediction probability is then

$$p(y=i \mid \mathbf{x}) = \frac{\exp\left(\cos(g(\mathbf{t}_i), \mathbf{x})/\tau\right)}{\sum_{j=1}^{K} \exp\left(\cos(g(\mathbf{t}_j), \mathbf{x})/\tau\right)},$$

where $\cos(\cdot,\cdot)$ denotes cosine similarity and $\tau$ is a learned temperature parameter. Notably, the CLIP backbone remains frozen throughout training.
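CoOp’s prompt construction can be sketched as prepending the same M context vectors to each class-name embedding. In the Python sketch below, each class name is simplified to a single embedding vector (real class names may span several tokens), and `toy_text_encoder` is a hypothetical mean-pooling stand-in for CLIP’s frozen Transformer text encoder.

```python
from typing import List

Vector = List[float]


def build_prompts(context: List[Vector], class_embeddings: List[Vector]) -> List[List[Vector]]:
    """t_i = [v_1, ..., v_M, c_i]; the same context vectors are reused for every class."""
    return [context + [c_i] for c_i in class_embeddings]


def toy_text_encoder(prompt: List[Vector]) -> Vector:
    """HYPOTHETICAL stand-in for the text encoder: mean-pools the token vectors.
    The real encoder is CLIP's frozen Transformer."""
    dim = len(prompt[0])
    return [sum(tok[d] for tok in prompt) / len(prompt) for d in range(dim)]


M = 4  # context length used in the experiments
context = [[0.1 * (m + 1), 0.0] for m in range(M)]       # learnable v_1..v_M (toy values)
class_embeddings = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # toy c_i, one per class
prompts = build_prompts(context, class_embeddings)
text_features = [toy_text_encoder(t) for t in prompts]
```

Since every prompt references the same context vectors, a gradient step on them changes the text features of all classes at once, which is why CoOp’s learned context is shared across classes.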

3.3. Sparse Knowledge-Guided Context Optimization

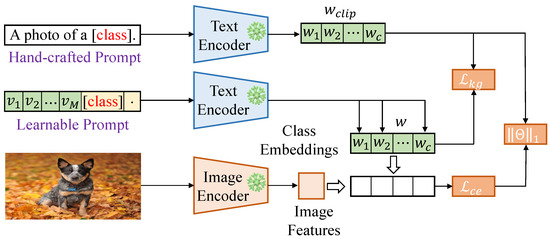

Building upon CoOp, Sparse Knowledge-guided Context Optimization (Sparse-KgCoOp) uses general textual knowledge, namely the textual embeddings produced by the hand-crafted prompt, to guide the learning of the textual prompts, as shown in Figure 1. By keeping the learnable prompts close to this general knowledge, Sparse-KgCoOp aims to improve the model’s understanding of the downstream task while retaining its ability to recognize unseen categories.

Figure 1.

The framework of Sparse Knowledge-guided Context Optimization for prompt tuning. $\mathcal{L}_{ce}$ is the standard cross-entropy loss, and $\mathcal{L}_{kg}$ is the proposed knowledge-guided constraint that minimizes the discrepancy between the specific knowledge (learnable textual embeddings) and the general knowledge (the textual embeddings generated by the hand-crafted prompt). $\mathcal{L}_{sparse}$ is the sparse regularization term, which encourages the learned parameters to be sparse.

Sparse-KgCoOp is based on the assumption that introducing sparsity during training, through regularization on the features and the prompts, reduces the model’s overfitting to seen classes while preserving its generalization ability for unseen classes. In the sparse variant of the VLM, we adjust the embeddings to prioritize key features while reducing the effective dimensionality of the knowledge representation. Let $g(\cdot)$ be the text encoder. We denote the textual embedding that CLIP generates from the hand-crafted prompt as $\mathbf{w}_i^{clip} = g(\mathbf{W}_i^{clip})$, and the refined embedding obtained from the learnable prompt after sparsification as $\mathbf{w}_i$, where $\mathbf{W}_i^{clip}$ contains the vectorized textual tokens of the hand-crafted prompt and $\mathbf{t}_i$ is the learnable prompt of the $i$-th class, enriched with sparse representations.

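The alignment between the specific knowledge embedded in the learnable-prompt embedding $\mathbf{w}_i$ and the general knowledge encapsulated by the hand-crafted-prompt embedding $\mathbf{w}_i^{clip}$ is quantified by the cosine distance, which serves as a surrogate for the Euclidean distance between normalized embeddings (for unit vectors, $\|\mathbf{a}-\mathbf{b}\|_2^2 = 2 - 2\cos(\mathbf{a},\mathbf{b})$). This distance, denoted as $\mathcal{L}_{kg}$, decreases as the cosine similarity between the embeddings increases:

$$\mathcal{L}_{kg} = \frac{1}{K}\sum_{i=1}^{K}\left(1 - \cos(\mathbf{w}_i, \mathbf{w}_i^{clip})\right),$$

where $\cos(\cdot,\cdot)$ denotes cosine similarity and $K$ is the number of classes. $\mathcal{L}_{kg}$ measures how far the specific knowledge diverges from the general knowledge; a lower value indicates closer alignment. Minimizing $\mathcal{L}_{kg}$ keeps the sparse representations close to the core semantics of the hand-crafted prompts, which is intended to improve generalization to unseen classes. Meanwhile, the standard contrastive (cross-entropy) loss is

$$\mathcal{L}_{ce} = -\sum_{\mathbf{x} \in \mathbf{X}} \log \frac{\exp\left(\cos(\mathbf{w}_y, \mathbf{x})/\tau\right)}{\sum_{i=1}^{K} \exp\left(\cos(\mathbf{w}_i, \mathbf{x})/\tau\right)},$$

where $\mathbf{X}$ is the set of training images, $y$ is the ground-truth class of image $\mathbf{x}$, and $\tau$ is the temperature.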
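The cosine-distance constraint between learnable and hand-crafted embeddings can be written in a few lines. The Python sketch below uses toy vectors in place of CLIP embeddings (an assumption for illustration) and also includes a helper that checks numerically that, after normalization, half the squared Euclidean distance equals the cosine distance.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def knowledge_gap_loss(learned: List[Sequence[float]], general: List[Sequence[float]]) -> float:
    """Mean over the K classes of 1 - cos(learned_i, general_i)."""
    if len(learned) != len(general):
        raise ValueError("need one learned and one general embedding per class")
    return sum(1.0 - cosine(w, w_clip) for w, w_clip in zip(learned, general)) / len(learned)


def half_squared_distance_normalized(a: Sequence[float], b: Sequence[float]) -> float:
    """||a/|a| - b/|b|||^2 / 2, which equals 1 - cos(a, b)."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return sum((x / na - y / nb) ** 2 for x, y in zip(a, b)) / 2.0


general = [[1.0, 0.0], [0.0, 2.0]]  # toy embeddings from the hand-crafted prompt
learned = [[1.0, 1.0], [0.0, 3.0]]  # toy embeddings from the learnable prompt
loss = knowledge_gap_loss(learned, general)
```

The second class contributes nothing because its learned embedding only differs in scale, which shows that the cosine form ignores magnitude and penalizes only changes in direction.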

To improve generalization and encourage the model to focus on the most salient features, we apply sparsification to the learned embeddings. Sparsification reduces model complexity by encouraging most weights to be zero or near zero, effectively reducing the number of active connections in the network. It is achieved by adding a regularization term that penalizes the $\ell_1$ norm of the weights, which promotes sparsity. The refined embedding is thus given by

$$\mathbf{w}_i = \mathcal{S}\left(g(\mathbf{t}_i)\right),$$

where $\mathcal{S}(\cdot)$ denotes the sparsification operation applied to the text-encoder output $g(\mathbf{t}_i)$ of the learnable prompt $\mathbf{t}_i$.
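The text does not specify the form of the sparsification operation. One common sparsifying operator, assumed here purely for illustration, is soft thresholding, the proximal operator of the l1 norm: it shrinks every entry toward zero by a threshold and sets entries whose magnitude falls below the threshold exactly to zero. The threshold value is a hypothetical parameter.

```python
import math
from typing import List


def soft_threshold(embedding: List[float], threshold: float) -> List[float]:
    """Elementwise sign(x) * max(|x| - threshold, 0).

    This is the proximal operator of threshold * ||x||_1 and is only one
    possible choice for the sparsification operation (an assumption here).
    """
    if threshold < 0:
        raise ValueError("threshold must be non-negative")
    return [math.copysign(max(abs(x) - threshold, 0.0), x) for x in embedding]


embedding = [0.5, -0.05, 0.02, -0.8, 0.3]  # toy text-encoder output
sparse = soft_threshold(embedding, threshold=0.1)
n_zero = sum(1 for x in sparse if x == 0.0)
```

Small entries are zeroed while large ones keep their sign and are shrunk, which is how an l1 penalty drives parameters toward a sparse solution.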

The overall loss function of our model combines the cross-entropy loss $\mathcal{L}_{ce}$ for classification and the sparsification-driven knowledge gap loss $\mathcal{L}_{kg}$, weighted by a hyperparameter $\lambda$. Additionally, we add an $\ell_1$ regularization term scaled by a factor $\beta$ to induce sparsity in the parameters. The total loss is thus

$$\mathcal{L} = \mathcal{L}_{ce} + \lambda \mathcal{L}_{kg} + \beta \|\boldsymbol{\theta}\|_1,$$

where $\mathcal{L}_{ce}$ is the cross-entropy loss, $\|\boldsymbol{\theta}\|_1$ is the $\ell_1$ norm of the model parameters $\boldsymbol{\theta}$, and $\lambda$ and $\beta$ are hyperparameters controlling the strength of the knowledge gap penalty and the sparsity regularization, respectively.

Minimizing $\mathcal{L}$ not only ensures correct classification but also drives the model toward a more interpretable and generalizable form, reducing overfitting and promoting the use of fewer, more critical features.
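The composite objective can be sketched as a weighted sum of the three terms. In the Python sketch below, the weight of 8.0 on the knowledge gap term assumes that the hyperparameter reported as 8.0 in the training details is this weight, and the sparsity factor `beta = 1e-3` is a hypothetical value; the logits and parameters are toy numbers.

```python
import math
from typing import List, Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """-log softmax(logits)[target] for a single image (one term of the CE loss)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[target]


def l1_norm(params: List[Sequence[float]]) -> float:
    return sum(abs(p) for vec in params for p in vec)


def total_loss(ce: float, kg: float, params: List[Sequence[float]],
               lam: float = 8.0, beta: float = 1e-3) -> float:
    """ce + lam * kg + beta * ||params||_1.

    lam = 8.0 is an assumed reading of the training details;
    beta = 1e-3 is a HYPOTHETICAL value not given in the text.
    """
    return ce + lam * kg + beta * l1_norm(params)


ce = cross_entropy([2.0, 0.5, -1.0], target=0)  # toy logits cos(w_i, x) / tau
params = [[0.2, -0.1], [0.0, 0.4]]              # toy learnable context vectors
loss = total_loss(ce, kg=0.05, params=params)
```

With a large weight on the knowledge gap term, even a small cosine distance from the hand-crafted embeddings contributes noticeably to the loss, which is how the method trades base-class fit for retained general knowledge.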

4. Experiments

We evaluated the proposed method in the following settings: (1) generalization from base to new classes within a dataset; (2) few-shot image classification; and (3) domain generalization. All experiments used the pre-trained CLIP [1] model.

4.1. Experimental Setting

Dataset. Following CLIP [1], CoOp [12], and CoCoOp [13], we conducted base-to-new generalization on 11 image classification datasets: ImageNet [26] and Caltech101 [27] for generic object classification; OxfordPets [28], StanfordCars [29], Flowers [30], Food101 [31], and FGVCAircraft [32] for fine-grained visual categorization; EuroSAT [33] for satellite image classification; UCF101 [34] for action recognition; DTD [35] for texture classification; and SUN397 [36] for scene recognition.

Training Details. Our implementation was based on CoOp’s code [12] (https://github.com/KaiyangZhou/CoOp (accessed on 10 March 2025)) with the CLIP model. We conducted experiments with ResNet-50 [25] and ViT-B/16 [24] vision backbones. Following CoOp, we fixed the context length to 4 and initialized the context vectors with the template “a photo of a [CLASS]”. The final performance was averaged over three random seeds for a fair comparison. We used the same training epochs, training schedule, and data augmentation settings as CoOp. The hyperparameter $\lambda$ was set to 8.0. All experiments were run on RTX 3090 GPUs.
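The settings stated above can be collected in a small configuration object. The Python sketch below is illustrative: its field names are hypothetical and do not mirror the keys in CoOp’s configuration files, and the per-seed accuracies in the example are made up.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Tuple


@dataclass(frozen=True)
class TrainConfig:
    """HYPOTHETICAL field names; they do not mirror CoOp's config keys."""
    backbones: Tuple[str, ...] = ("RN50", "ViT-B/16")
    n_ctx: int = 4                  # context length
    ctx_init: str = "a photo of a"  # initialization template (class name appended)
    kg_weight: float = 8.0          # the hyperparameter reported as 8.0
    n_seeds: int = 3                # results averaged over three random seeds


def average_over_seeds(accuracies: List[float], cfg: TrainConfig) -> float:
    """Average one metric over the per-seed runs."""
    if len(accuracies) != cfg.n_seeds:
        raise ValueError(f"expected {cfg.n_seeds} runs, got {len(accuracies)}")
    return mean(accuracies)


cfg = TrainConfig()
avg = average_over_seeds([70.0, 71.0, 72.0], cfg)  # hypothetical per-seed accuracies
```

Initializing with “a photo of a” gives exactly four words, which matches the context length of 4, so each context vector can start from one word embedding of the template.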

Baselines. We compared against the following baselines. CLIP [1] applies the hand-crafted template “a photo of a [CLASS]” to generate prompts for knowledge transfer. CoOp [12], which serves as our baseline, replaces the hand-crafted prompts with a set of learnable prompts learned from the downstream datasets. CoCoOp [13] generates image-conditional prompts by combining the context of each image with the learnable prompts of CoOp.

4.2. Generalization from Base to New Classes

Following earlier work (CoOp and CoCoOp), we partitioned the classes of each dataset into two groups: base classes and new classes. As shown in Table 2, Sparse-KgCoOp achieved a higher average harmonic mean (HM) than the existing methods, demonstrating its advantage in generalizing from base to new classes. Specifically, Sparse-KgCoOp obtains the highest HM on the average over all datasets, on Caltech101, and on OxfordPets, with HMs of 78.25%, 96.07%, and 96.90%, respectively. This highlights the robustness of Sparse-KgCoOp when adapting to unseen classes.
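The base/new partition can be sketched as follows. The Python sketch assumes a split into two halves of the sorted class labels, with the base half receiving the extra class when the count is odd, and relabels each half from 0; this convention is our assumption for illustration, not a detail stated in the text.

```python
import math
from typing import List, Sequence, Tuple


def split_base_new(labels: Sequence[int]) -> Tuple[List[int], List[int]]:
    """First half of the sorted class labels (rounded up) = base, rest = new."""
    classes = sorted(set(labels))
    m = math.ceil(len(classes) / 2)
    return classes[:m], classes[m:]


def filter_and_relabel(samples: Sequence[Tuple[str, int]], keep: List[int]) -> List[Tuple[str, int]]:
    """Keep samples of the selected classes and remap their labels to 0..len(keep)-1."""
    relabel = {c: i for i, c in enumerate(keep)}
    return [(path, relabel[y]) for path, y in samples if y in relabel]


samples = [("img0.jpg", 0), ("img1.jpg", 1), ("img2.jpg", 2), ("img3.jpg", 3), ("img4.jpg", 4)]
base, new = split_base_new([y for _, y in samples])
new_set = filter_and_relabel(samples, new)
```

Prompts are trained only on the base subset and evaluated separately on both subsets, so new-class accuracy measures transfer to categories never seen during prompt tuning.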

Table 2.

Base-to-new generalization performance of baselines and our method on 11 datasets. All values are reported in percentage (%).

Among the existing methods, CoOp obtains the best base-class performance in all settings but lower new-class performance than CoCoOp. This suggests that CoOp overfits the base classes: its learned prompts become biased toward them, which lowers accuracy on the new classes. Compared with CoCoOp, Sparse-KgCoOp slightly improves base-class accuracy.

4.3. Few-Shot Image Classification

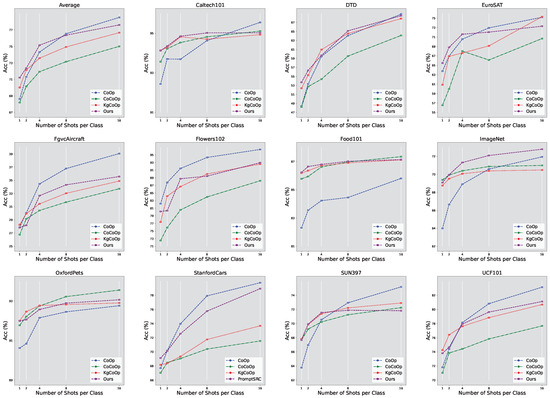

The base-to-new setting assumes that the new classes are different categories from the base classes, so it measures generalization across categories. To further evaluate the adaptability of our approach, we conducted few-shot classification: the model is trained with a few labeled images per class and evaluated on test data from the same categories. The results for the 4-shot setting are shown in Figure 2. Sparse-KgCoOp achieves higher average performance than existing methods such as CoOp.
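A K-shot training set is typically drawn by sampling K labeled images per class. The Python sketch below illustrates 4-shot sampling with a fixed seed; the image pool is hypothetical, and the sampling routine is an illustrative assumption rather than the exact procedure of the CoOp codebase.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def sample_few_shot(samples: Sequence[Tuple[str, int]], shots: int, seed: int) -> List[Tuple[str, int]]:
    """Draw `shots` labeled images per class (fewer if a class has fewer images)."""
    rng = random.Random(seed)
    by_class: Dict[int, List[Tuple[str, int]]] = defaultdict(list)
    for item in samples:
        by_class[item[1]].append(item)
    subset: List[Tuple[str, int]] = []
    for label in sorted(by_class):
        items = by_class[label]
        subset.extend(rng.sample(items, min(shots, len(items))))
    return subset


pool = [(f"class{c}_img{i}.jpg", c) for c in range(3) for i in range(10)]
train_4shot = sample_few_shot(pool, shots=4, seed=1)
```

Fixing the seed makes the sampled subset reproducible, which is what allows results to be averaged over a small number of seeds.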

Figure 2.

Few-shot learning in image classification on 11 datasets.

4.4. Domain Generalization

Domain generalization assesses generalization by testing the trained model on a target dataset that shares the same classes as the source domain but has a different data distribution. The corresponding results are reported in Table 3.

Table 3.

Domain generalization performance of baselines and our method on 11 datasets. All values are reported in percentage (%).

Table 3 shows that CoOp achieves the best results on the source dataset, ImageNet. This indicates that CoOp learns discriminative prompts for the base classes, consistent with the base-to-new results. As in the base-to-new setting, however, CoOp generalizes poorly, here to the unseen target domains. CoCoOp shows better domain generalization than CoOp. Sparse-KgCoOp outperforms both on the target datasets, for instance raising the average target accuracy from 62.91% to 66.10%. This comparison indicates that the learnable prompts of Sparse-KgCoOp generalize better across domains.

5. Conclusions

To address the limitation of current CoOp-based prompt tuning methods, whose generalization to unseen classes deteriorates, we have developed a prompt tuning strategy called Sparse Knowledge-guided Context Optimization (Sparse-KgCoOp). It improves generalization to unseen classes by reducing the gap between the general textual embeddings and the specific learnable textual embeddings. Evaluations on various benchmarks show that Sparse-KgCoOp is a competent prompt tuning approach. While Sparse-KgCoOp improves generalization to unseen classes, it may reduce discriminative ability on seen classes; for instance, its base-class performance is suboptimal. Future research will focus on a more balanced approach that performs well on both seen and unseen classes.

Author Contributions

Conceptualization, Q.T.; methodology, Q.T. and M.Z.; writing—original draft, Q.T.; writing—review and editing, M.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The numerical calculations in this paper were performed on the supercomputing system of the Supercomputing Center of Hangzhou City University.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning, ICML 2021, Virtual Event, 18–24 July 2021; Meila, M., Zhang, T., Eds.; PMLR Proceedings of Machine Learning Research. Volume 139, pp. 8748–8763. [Google Scholar]

- Alayrac, J.; Donahue, J.; Luc, P.; Miech, A.; Barr, I.; Hasson, Y.; Lenc, K.; Mensch, A.; Millican, K.; Reynolds, M.; et al. Flamingo: A Visual Language Model for Few-Shot Learning. arXiv 2022, arXiv:2204.14198. [Google Scholar]

- Jia, C.; Yang, Y.; Xia, Y.; Chen, Y.; Parekh, Z.; Pham, H.; Le, Q.V.; Sung, Y.; Li, Z.; Duerig, T. Scaling Up Visual and Vision-Language Representation Learning With Noisy Text Supervision. In Proceedings of the 38th International Conference on Machine Learning, ICML, Virtual, 18–24 July 2021; Volume 139, pp. 4904–4916. [Google Scholar]

- Cho, J.; Lei, J.; Tan, H.; Bansal, M. Unifying Vision-and-Language Tasks via Text Generation. In Proceedings of the 38th International Conference on Machine Learning, ICML 2021, Virtual Event, 18–24 July 2021; Meila, M., Zhang, T., Eds.; PMLR Proceedings of Machine Learning Research. Volume 139, pp. 1931–1942. [Google Scholar]

- Gan, Z.; Li, L.; Li, C.; Wang, L.; Liu, Z.; Gao, J. Vision-Language Pre-training: Basics, Recent Advances, and Future Trends. arXiv 2022, arXiv:2210.09263. [Google Scholar]

- Jia, M.; Tang, L.; Chen, B.; Cardie, C.; Belongie, S.J.; Hariharan, B.; Lim, S. Visual Prompt Tuning. In Proceedings of the Computer Vision-ECCV 2022-17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Proceedings, Part XXXIII. Avidan, S., Brostow, G.J., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2022; Volume 13693, pp. 709–727. [Google Scholar] [CrossRef]

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing. arXiv 2021, arXiv:2107.13586. [Google Scholar] [CrossRef]

- Petroni, F.; Rocktäschel, T.; Riedel, S.; Lewis, P.S.H.; Bakhtin, A.; Wu, Y.; Miller, A.H. Language Models as Knowledge Bases? In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, EMNLP-IJCNLP 2019, Hong Kong, China, 3–7 November 2019; Inui, K., Jiang, J., Ng, V., Wan, X., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 2463–2473. [Google Scholar] [CrossRef]

- Rao, Y.; Zhao, W.; Chen, G.; Tang, Y.; Zhu, Z.; Huang, G.; Zhou, J.; Lu, J. DenseCLIP: Language-Guided Dense Prediction with Context-Aware Prompting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, 18–24 June 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 18061–18070. [Google Scholar] [CrossRef]

- Tsimpoukelli, M.; Menick, J.; Cabi, S.; Eslami, S.M.A.; Vinyals, O.; Hill, F. Multimodal Few-Shot Learning with Frozen Language Models. In Proceedings of the Advances in Neural Information Processing Systems 34: Annual Conference on Neural Information Processing Systems 2021, NeurIPS 2021, Virtual, 6–14 December 2021; Ranzato, M., Beygelzimer, A., Dauphin, Y.N., Liang, P., Vaughan, J.W., Eds.; pp. 200–212. [Google Scholar]

- Yao, Y.; Zhang, A.; Zhang, Z.; Liu, Z.; Chua, T.; Sun, M. CPT: Colorful Prompt Tuning for Pre-trained Vision-Language Models. arXiv 2021, arXiv:2109.11797. [Google Scholar] [CrossRef]

- Zhou, K.; Yang, J.; Loy, C.C.; Liu, Z. Learning to Prompt for Vision-Language Models. Int. J. Comput. Vis. 2022, 130, 2337–2348. [Google Scholar] [CrossRef]

- Zhou, K.; Yang, J.; Loy, C.C.; Liu, Z. Conditional Prompt Learning for Vision-Language Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, 18–24 June 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 16795–16804. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., von Luxburg, U., Bengio, S., Wallach, H.M., Fergus, R., Vishwanathan, S.V.N., Garnett, R., Eds.; pp. 5998–6008. [Google Scholar]

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G.E. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the 37th International Conference on Machine Learning, ICML 2020, Virtual Event, 13–18 July 2020; PMLR Proceedings of Machine Learning Research. Volume 119, pp. 1597–1607. [Google Scholar]

- Wang, Z.; Yu, J.; Yu, A.W.; Dai, Z.; Tsvetkov, Y.; Cao, Y. SimVLM: Simple Visual Language Model Pretraining with Weak Supervision. In Proceedings of the Tenth International Conference on Learning Representations, ICLR 2022, Virtual Event, 25–29 April 2022. [Google Scholar]

- Kim, W.; Son, B.; Kim, I. ViLT: Vision-and-Language Transformer Without Convolution or Region Supervision. In Proceedings of the 38th International Conference on Machine Learning, ICML 2021, Virtual Event, 18–24 July 2021; Meila, M., Zhang, T., Eds.; PMLR Proceedings of Machine Learning Research. Volume 139, pp. 5583–5594. [Google Scholar]

- Lu, J.; Batra, D.; Parikh, D.; Lee, S. ViLBERT: Pretraining Task-Agnostic Visiolinguistic Representations for Vision-and-Language Tasks. In Proceedings of the Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019; Wallach, H.M., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E.B., Garnett, R., Eds.; pp. 13–23. [Google Scholar]

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R.B. Masked Autoencoders Are Scalable Vision Learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, 18–24 June 2022; IEEE: New York, NY, USA, 2022; pp. 15979–15988. [Google Scholar] [CrossRef]

- Zang, Y.; Li, W.; Zhou, K.; Huang, C.; Loy, C.C. Unified Vision and Language Prompt Learning. arXiv 2022, arXiv:2210.07225. [Google Scholar]

- Lu, Y.; Liu, J.; Zhang, Y.; Liu, Y.; Tian, X. Prompt Distribution Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2022, New Orleans, LA, USA, 18–24 June 2022; IEEE: New York, NY, USA, 2022; pp. 5196–5205. [Google Scholar] [CrossRef]

- Zhu, B.; Niu, Y.; Han, Y.; Wu, Y.; Zhang, H. Prompt-Aligned Gradient for Prompt Tuning. arXiv 2022, arXiv:2205.14865. [Google Scholar]

- Gao, P.; Geng, S.; Zhang, R.; Ma, T.; Fang, R.; Zhang, Y.; Li, H.; Qiao, Y. CLIP-Adapter: Better Vision-Language Models with Feature Adapters. arXiv 2021, arXiv:2110.04544. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the 9th International Conference on Learning Representations, ICLR 2021, Virtual Event, Austria, 3–7 May 2021. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; IEEE Computer Society: Washington, DC, USA, 2016; pp. 770–778.

- Deng, J.; Dong, W.; Socher, R.; Li, L.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2009), Miami, FL, USA, 20–25 June 2009; IEEE Computer Society: Washington, DC, USA, 2009; pp. 248–255.

- Li, F.-F.; Fergus, R.; Perona, P. Learning generative visual models from few training examples: An incremental Bayesian approach tested on 101 object categories. Comput. Vis. Image Underst. 2007, 106, 59–70.

- Parkhi, O.M.; Vedaldi, A.; Zisserman, A.; Jawahar, C.V. Cats and dogs. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE Computer Society: Washington, DC, USA, 2012; pp. 3498–3505.

- Krause, J.; Stark, M.; Deng, J.; Fei-Fei, L. 3D Object Representations for Fine-Grained Categorization. In Proceedings of the 2013 IEEE International Conference on Computer Vision Workshops, ICCV Workshops 2013, Sydney, Australia, 1–8 December 2013; IEEE Computer Society: Washington, DC, USA, 2013; pp. 554–561.

- Nilsback, M.; Zisserman, A. Automated Flower Classification over a Large Number of Classes. In Proceedings of the Sixth Indian Conference on Computer Vision, Graphics & Image Processing, ICVGIP 2008, Bhubaneswar, India, 16–19 December 2008; IEEE Computer Society: Washington, DC, USA, 2008; pp. 722–729.

- Bossard, L.; Guillaumin, M.; Van Gool, L. Food-101 – Mining Discriminative Components with Random Forests. In Proceedings of Computer Vision – ECCV 2014, 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part VI. Fleet, D.J., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2014; Volume 8694, pp. 446–461.

- Maji, S.; Rahtu, E.; Kannala, J.; Blaschko, M.B.; Vedaldi, A. Fine-Grained Visual Classification of Aircraft. arXiv 2013, arXiv:1306.5151.

- Helber, P.; Bischke, B.; Dengel, A.; Borth, D. EuroSAT: A Novel Dataset and Deep Learning Benchmark for Land Use and Land Cover Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2217–2226.

- Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A Dataset of 101 Human Actions Classes from Videos in The Wild. arXiv 2012, arXiv:1212.0402.

- Cimpoi, M.; Maji, S.; Kokkinos, I.; Mohamed, S.; Vedaldi, A. Describing Textures in the Wild. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2014, Columbus, OH, USA, 23–28 June 2014; IEEE Computer Society: Washington, DC, USA, 2014; pp. 3606–3613.

- Xiao, J.; Hays, J.; Ehinger, K.A.; Oliva, A.; Torralba, A. SUN database: Large-scale scene recognition from abbey to zoo. In Proceedings of the Twenty-Third IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2010, San Francisco, CA, USA, 13–18 June 2010; IEEE Computer Society: Washington, DC, USA, 2010; pp. 3485–3492.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).