The Statistical Thermodynamics of Generative Diffusion Models: Phase Transitions, Symmetry Breaking, and Critical Instability

Abstract

1. Introduction

2. Contributions and Related Work

3. Preliminaries on Generative Diffusion Models

Training Diffusion Models as Denoising Autoencoders

4. Preliminaries on the Curie–Weiss Model of Magnetism

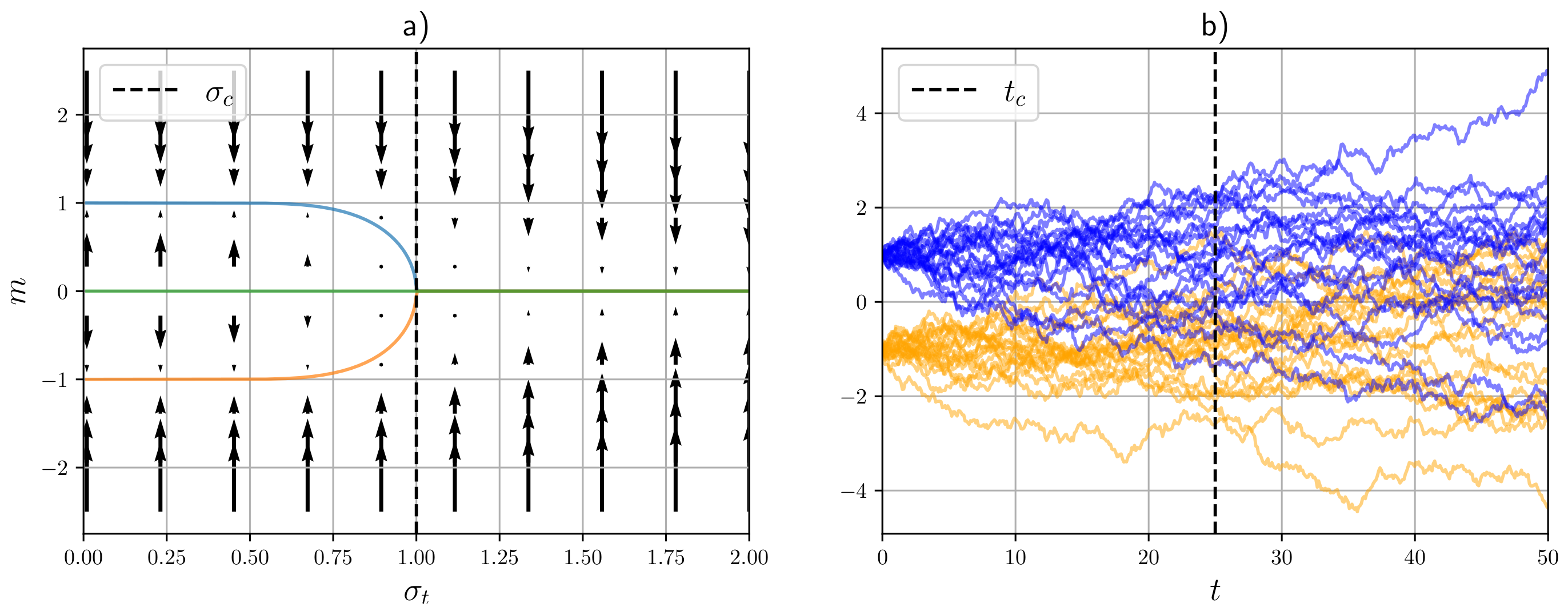
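As an illustrative sketch, not taken from this article, the mean-field magnetization of the Curie–Weiss model can be found by iterating the standard self-consistency equation m = tanh(β(J·m + h)). The function name `curie_weiss_magnetization`, the initial guess and the tolerance are hypothetical choices. We assume the usual normalization in which the zero-field critical point sits at βJ = 1.

```python
import math


def curie_weiss_magnetization(beta, J=1.0, h=0.0, m0=0.5, tol=1e-12, max_iter=100_000):
    """Hypothetical helper: solve m = tanh(beta * (J * m + h)) by fixed-point iteration.

    Assumes the standard mean-field Curie-Weiss normalization, with the critical point
    at beta * J = 1 when h = 0. Convergence slows down close to the critical point.
    """
    m = m0
    for _ in range(max_iter):
        m_next = math.tanh(beta * (J * m + h))
        if abs(m_next - m) < tol:
            return m_next
        m = m_next
    return m  # may not be fully converged if max_iter was reached


# Below the critical coupling (beta * J < 1) the iteration collapses to m = 0.
# Above it, a nonzero magnetization appears whose sign follows the initial guess m0
# (m0 = 0 with h = 0 stays on the unstable symmetric solution).
for beta in (0.5, 2.0):
    print(beta, curie_weiss_magnetization(beta), curie_weiss_magnetization(beta, m0=-0.5))
```

In zero field, the symmetric solution m = 0 is the only one for βJ < 1. For βJ > 1, two solutions of opposite sign appear, and these are the spontaneously broken phases.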

5. Diffusion Models as Systems in Equilibrium

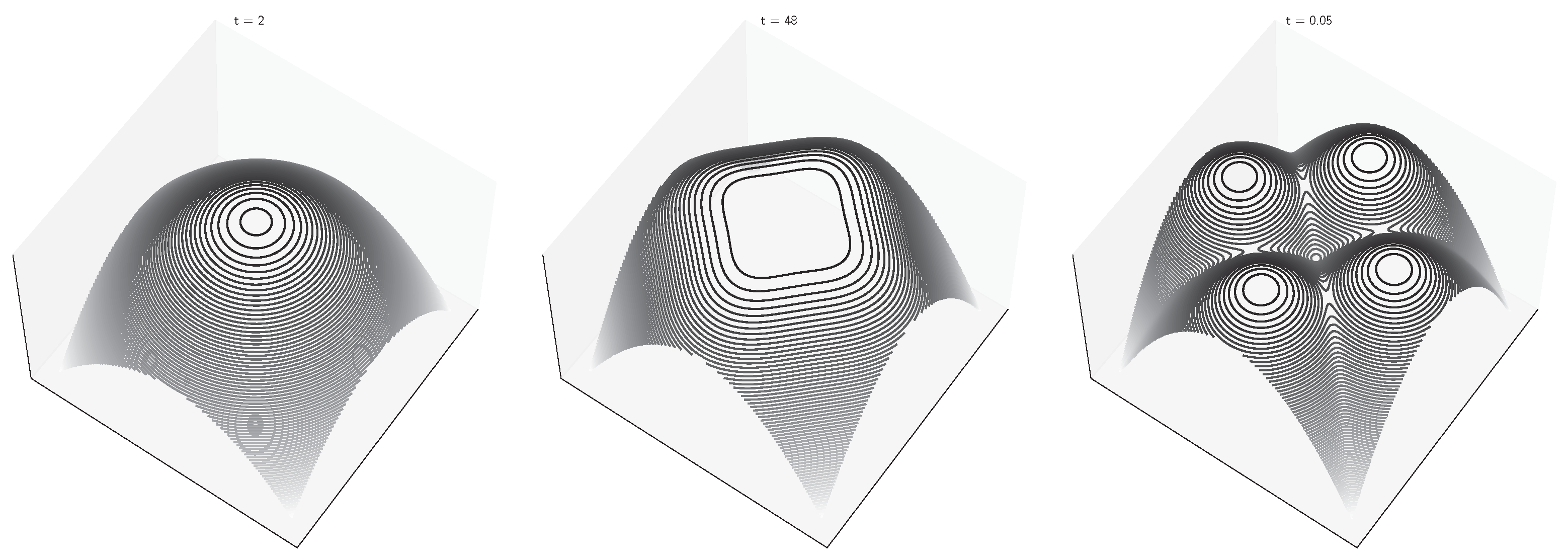

5.1. Example 1: Two Deltas
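The following is a minimal sketch of the two-delta case. It assumes a dataset of two equiprobable points at +1 and -1 and a noisy observation x = x0 + σ·z with standard Gaussian z. This noise parameterization is our assumption and may differ from the one used in this article. Under these assumptions, Bayes' rule gives the optimal denoiser E[x0 | x] = tanh(x / σ²).

The slope of this map at the origin is 1/σ², so the symmetric fixed point x = 0 loses stability when σ < 1. This mirrors the Curie–Weiss condition βJ > 1 with β = 1/σ² and J = 1. The helper names are hypothetical.

```python
import math


def two_delta_denoiser(x, sigma):
    """Posterior mean E[x0 | x] for x0 = +1 or -1 (equal prior) and x = x0 + sigma * z, z ~ N(0, 1)."""
    return math.tanh(x / sigma**2)


def denoiser_fixed_point(sigma, x_init=0.5, tol=1e-12, max_iter=100_000):
    """Hypothetical helper: iterate x <- E[x0 | x] until it stops moving."""
    x = x_init
    for _ in range(max_iter):
        x_next = two_delta_denoiser(x, sigma)
        if abs(x_next - x) < tol:
            return x_next
        x = x_next
    return x


# High noise (sigma > 1): a single symmetric fixed point at 0.
# Low noise (sigma < 1): two symmetric nonzero fixed points, which approach +1 and -1 as sigma -> 0.
for sigma in (2.0, 0.5):
    print(sigma, denoiser_fixed_point(sigma), denoiser_fixed_point(sigma, x_init=-0.5))
```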

5.2. Example 2: Discrete Dataset
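For a general finite dataset, the same Bayesian computation produces a softmax-weighted average of the data points. The sketch below assumes that x0 is drawn uniformly from the dataset and that x = x0 + σ·z with isotropic Gaussian z. The function name `discrete_denoiser` and the toy data are hypothetical.

As σ → 0, the weights concentrate on the nearest data point. As σ grows, the output tends to the dataset mean.

```python
import math


def discrete_denoiser(x, data, sigma):
    """Posterior mean E[x0 | x] for x0 uniform over `data` and x = x0 + sigma * z, z ~ N(0, I).

    `x` is a sequence of floats; `data` is a list of points of the same dimension.
    """
    # Log-weights -||x - x_i||^2 / (2 sigma^2), one per data point.
    logw = [-sum((a - b) ** 2 for a, b in zip(x, xi)) / (2 * sigma**2) for xi in data]
    # Subtract the maximum before exponentiating (log-sum-exp trick) to avoid underflow.
    top = max(logw)
    w = [math.exp(lw - top) for lw in logw]
    z = sum(w)
    return [sum(wi * xi[k] for wi, xi in zip(w, data)) / z for k in range(len(x))]


data = [(1.0, 0.0), (-1.0, 0.5), (0.2, -1.0)]
print(discrete_denoiser((0.9, 0.1), data, sigma=0.05))   # close to the nearest point (1.0, 0.0)
print(discrete_denoiser((0.9, 0.1), data, sigma=100.0))  # close to the dataset mean
```

With two one-dimensional points at +1 and -1, this expression reduces to tanh(x / σ²).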

5.3. Example 3: Hyper-Spherical Manifold

5.4. Example 4: Diffused Ising Model

6. Free Energy, Magnetization, and Order Parameters

The Susceptibility Matrix
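One natural reading of a susceptibility matrix for a diffusion model is the response of the denoiser to a small perturbation of its noisy input, i.e. the Jacobian ∂E[x0 | x]/∂x. Identifying this Jacobian with the susceptibility matrix of this article is our assumption.

Under additive isotropic Gaussian noise, x = x0 + σ·z, differentiating the posterior mean gives the identity ∂E[x0 | x]/∂x = Cov[x0 | x]/σ². This is a standard consequence of Tweedie's formula. The sketch below checks the identity numerically for a finite dataset with a uniform prior. All function names are hypothetical.

```python
import math


def posterior_weights(x, data, sigma):
    """Normalized posterior weights p(x_i | x) for x0 uniform over `data`, x = x0 + sigma * z."""
    logw = [-sum((a - b) ** 2 for a, b in zip(x, xi)) / (2 * sigma**2) for xi in data]
    top = max(logw)  # log-sum-exp trick for numerical stability
    w = [math.exp(lw - top) for lw in logw]
    z = sum(w)
    return [wi / z for wi in w]


def posterior_mean(x, data, sigma):
    w = posterior_weights(x, data, sigma)
    return [sum(wi * xi[k] for wi, xi in zip(w, data)) for k in range(len(x))]


def susceptibility(x, data, sigma):
    """Cov[x0 | x] / sigma^2, equal to the Jacobian of E[x0 | x] under the Gaussian-noise assumption."""
    w = posterior_weights(x, data, sigma)
    mu = posterior_mean(x, data, sigma)
    d = len(x)
    cov = [[sum(wi * (xi[j] - mu[j]) * (xi[k] - mu[k]) for wi, xi in zip(w, data))
            for k in range(d)] for j in range(d)]
    return [[c / sigma**2 for c in row] for row in cov]


def finite_difference_jacobian(x, data, sigma, eps=1e-6):
    """Central-difference estimate of d E[x0 | x] / dx, used only as a numerical check."""
    d = len(x)
    jac = [[0.0] * d for _ in range(d)]
    for k in range(d):
        xp = list(x)
        xp[k] += eps
        xm = list(x)
        xm[k] -= eps
        fp = posterior_mean(xp, data, sigma)
        fm = posterior_mean(xm, data, sigma)
        for j in range(d):
            jac[j][k] = (fp[j] - fm[j]) / (2 * eps)
    return jac


data = [(1.0, 0.0), (-1.0, 0.5), (0.2, -1.0)]
x, sigma = (0.1, 0.2), 0.8
print(susceptibility(x, data, sigma))
print(finite_difference_jacobian(x, data, sigma))  # should agree closely with the line above
```

Take two equiprobable points at ±1 as an example. At x = 0 the posterior variance is 1, so the susceptibility there is 1/σ². This exceeds 1 exactly when σ < 1, which is where the symmetric fixed point of the denoiser becomes unstable.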

7. Phase Transitions and Symmetry Breaking

Generation and Critical Instability

8. Generation as an Adiabatic Free Energy Descent Process

9. Beyond Mean-Field Theory: A Multi-Site ‘Generative Bath’ Model

9.1. Connection Between the Multi-Site Model and the Fixed-Point Structure of Diffusion Models

9.2. Brownian Dynamics in a ‘Generative Bath’

9.3. The Two Delta Model Revisited

10. Associative Memory and Hopfield Networks

11. The Random Energy Thermodynamics of Diffusion Models on Sampled Datasets

Memorization as ‘Condensation’

12. Experimental Evidence of Phase Transitions in Trained Diffusion Models

13. Conclusions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ambrogioni, L. The Statistical Thermodynamics of Generative Diffusion Models: Phase Transitions, Symmetry Breaking, and Critical Instability. Entropy 2025, 27, 291. https://doi.org/10.3390/e27030291
