Abstract

Deep reinforcement learning (DRL) has shown promising results as a solver for routing problems in recent studies. However, an inherent gap exists between computationally driven DRL and optimization-based heuristics. A DRL algorithm trained for a certain problem can solve many similar problem instances, whereas traditional optimization algorithms focus on optimizing the solution to one specific problem instance. In this paper, we propose an approach, AlphaRouter, which solves routing problems while bridging the gap between reinforcement learning and optimization. Tailored to routing problems, our approach first proposes attention-enabled policy and value networks: a policy network that produces a probability distribution over all possible nodes and a value network that produces the expected distance from any given state. We then modify a Monte Carlo tree search (MCTS) for routing problems and selectively combine it with these networks. Our experiments demonstrate that the combined approach is promising: it yields better solutions than the original reinforcement learning (RL) approaches without MCTS and performs comparably to classical heuristics.

1. Introduction

In NP-hard combinatorial optimization (CO) problems, finding globally optimal solutions is computationally infeasible. Instead of searching for global optima, numerous heuristics have shown promising results. Despite their high effectiveness, the application of heuristics in real-life industries is often hindered by a variety of practical problems and uncertain information. Heuristics, which have mathematical origins, depend on problem formulations for their proper application. However, formulating constraints exactly is quite challenging in reality, as some constraints change rapidly or are highly stochastic. For instance, some constraints may vanish over time, while others, e.g., a newly introduced unknown coefficient, must be estimated from an assumed distribution through a certain procedure. As real-life domains involve various participants and requirements, some constraints are too complex to formulate. In such situations, particularly when the problem can be simulated, as in a game, the RL approach has recently attracted attention in both the literature and industry.

Most heuristics are designed to solve one specific problem. For example, heuristics made for capacitated vehicle routing problems (CVRP) cannot be applied to bin-packing problems. To deal with versatile constraints and complex problems, the combination of deep neural network architectures with RL, called DRL, has recently been considered effective [,]. The DRL approach is flexible, because translating a problem into a reinforcement learning framework is straightforward: one defines the state and reward appropriately and runs computational simulations. In the long run, the ultimate goal of the DRL approach is to find a new, computational way to solve complex problems that surpasses the performance of mathematically exact algorithms and their heuristics [].

Nonetheless, DRL has not yet reached the performance of heuristic solvers, and research to improve it is ongoing. Our goal is to improve DRL performance by reducing the gap between heuristic solvers and DRL. Motivated by the AlphaGo series [,], we propose a deep-layered network for RL equipped with the selective application of Monte Carlo tree search (MCTS), a general framework applicable to various types of CO problems. Because routing domains differ from games, we modify some components of MCTS for them.

Unlike in the AlphaGo series, we observe that applying MCTS to every action choice is inefficient. To address this, we propose an entropy-based strategy for applying MCTS selectively, which is justified from an information-theoretic perspective and designed to enhance performance on routing problems. To the best of our knowledge, this is a novel attempt to refine network architectures in the context of a customized MCTS for routing tasks.

The introduced RL framework is quite beneficial if the resulting network is applicable to other similar problems, using the same network architecture as suggested in [,,].

The contributions of our paper are threefold: (1) We propose a deep-layered neural network architecture tailored to the routing problem, with an exact definition of states and rewards and a policy gradient that uses a value network. (2) We propose a new MCTS strategy and demonstrate that integrating our MCTS into the neural network architecture and applying it selectively based on entropy improves solution quality. (3) We demonstrate that the choice of activation function numerically improves solution quality. In short, the main focus of this paper is to propose an effective RL architecture with a modified MCTS strategy for routing problems that improves search performance. Notably, although we propose a neural network architecture specialized for routing problems, any neural network architecture that outputs a policy and a value, as described in later sections, can be integrated into MCTS [], and the domain, a class of routing problems in this paper, can be extended to other problems.

We organize the rest of the paper as follows: In Section 2, we provide an overview of previous works related to combinatorial optimization, routing, and MCTS. In Section 3, we briefly introduce a general formulation of capacitated vehicle routing problems and our problem’s objective. In Section 4, we expound on our proposed approach, AlphaRouter. In Section 5, we present our experimental results.

2. Related Works

Routing problems are among the best-known classes of problems in combinatorial optimization. The traveling salesman problem (TSP), one of the simplest routing problems, seeks the shortest tour that visits every node exactly once. The vehicle routing problem (VRP) is similar to TSP but adds the concepts of depots and demand nodes. Numerous variants of VRP exist in the literature, such as VRP with time windows and VRP with pickup and delivery []. In this paper, we focus on the capacitated VRP (CVRP), in which a vehicle has a limit on its load. Although some variants handle multiple vehicles, we consider only one vehicle for simplicity.

Traditionally, solution methods for these problems fall into two main types: mathematical approaches such as mixed integer programming, and carefully designed heuristics for a specific type of problem. An example of the latter is the Lin–Kernighan heuristic []. In the past decade, hybrid genetic search with advanced diversity control has been introduced and successfully applied to various CVRP variants, greatly improving both computation time and solution quality [,]. We acknowledge that DRL does not yet surpass these analytically driven heuristics, but we emphasize that efforts are needed to solve problems with less reliance on mathematics-entangled methods such as dynamic programming and stochastic optimization.

Recent research on neural networks for routing problems can be broadly divided into two approaches based on the type of input they use: graph modules and sequential modules. Graph modules take a graph's adjacency matrix as input and employ graph neural networks, which naturally suit the graph structure of routing problems [,]. In contrast, sequential modules take a list of node coordinates as input and are designed to be compatible with certain types of exact solution inputs. In this paper, we focus on the sequential approach.

The pointer network [] was an early model for solving routing problems. It proposed training a modified Seq2Seq (sequence-to-sequence) network [] in a supervised manner, using an early attention mechanism [] to produce outputs that are pointers to input tokens. However, a significant disadvantage of the pointer network is that enough true labels cannot be obtained to train on large problems, since routing problems are NP-hard. To overcome this limitation, the approach in [] introduced RL training of the neural network using the well-known and simple policy gradient theorem [].

Just as machine translation evolved from Seq2Seq to Transformers [], routing also adopted the Transformer architecture in [], using attention layers to encode the relationships between nodes and a decoder that attends to these encodings to produce a probability distribution over the most promising nodes. Replacing only the internal neural network, they kept the RL training the same as in []. Because they capture complex relationships between nodes effectively and generate high-quality solutions, attention-based DRL methods have become one of the most commonly used approaches in DRL for routing problems [,,].

In addition to designing neural architectures, some works focus on the search process itself. For example, the work [] introduced a parallel in-training search process, named POMO, based on attention network designs. The POMO algorithm assigns a different start node to each of several rollouts and executes these episodes concurrently. An episode, or rollout, can be understood as a process in which a vehicle travels to the next customer until all customers are visited. From these episodes, the single best solution is selected as the final solution. Although POMO introduces no additional parameters to the model, the input size usually becomes N times larger, where N is the total number of nodes in the problem, because one rollout is run per start node. Another approach, proposed in [], adjusts the weights of a pre-trained model during inference to fit the model to a single problem instance.
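A minimal Python sketch of the multi-start idea behind POMO for TSP follows. It is not POMO itself: the trained attention policy is replaced by a hypothetical nearest-neighbor rule (`greedy_rollout`), and training is omitted. It only shows one rollout being started from each of the N nodes and the shortest resulting tour being kept.

```python
import math


def tour_length(coords, tour):
    """Total Euclidean length of a closed tour that returns to its first node."""
    return sum(
        math.dist(coords[tour[k]], coords[tour[(k + 1) % len(tour)]])
        for k in range(len(tour))
    )


def greedy_rollout(coords, start):
    """Hypothetical stand-in for a trained policy: always move to the nearest unvisited node."""
    unvisited = set(range(len(coords))) - {start}
    tour = [start]
    while unvisited:
        here = coords[tour[-1]]
        nxt = min(unvisited, key=lambda j: math.dist(here, coords[j]))
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour


def multi_start_best(coords):
    """POMO-style inference: one rollout per start node, keep the shortest tour."""
    rollouts = [greedy_rollout(coords, s) for s in range(len(coords))]  # N rollouts
    return min(rollouts, key=lambda t: tour_length(coords, t))
```

For the four corners of a unit square, every start node yields a tour of length 4.0. The N rollouts per instance are the source of POMO's larger effective input.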

MCTS is a decision-making algorithm commonly used in games such as chess, Go, and poker. The algorithm selects the next action by simulating the game and updates its decision policy using the data from the simulations. The original algorithm consists of four phases: selection, expansion, rollout, and backpropagation. In the selection phase, the algorithm starts at the root node and recursively descends to the child node with the highest upper confidence bound (UCB) score. This score balances exploration and exploitation by considering the visit count and the average value (e.g., the average winning rate) of each node. A more detailed explanation of UCB is presented in [].
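For reference, the classic UCB1 score used in the selection phase of the original MCTS can be sketched as below. The exploration constant `c = sqrt(2)` is a common textbook default, not a value taken from this paper, and the dictionary-based child representation is purely illustrative.

```python
import math


def ucb1(total_value, visits, parent_visits, c=math.sqrt(2)):
    """UCB1 score of a child: average value (exploitation) plus an exploration bonus."""
    if visits == 0:
        return math.inf  # unvisited children are tried first
    return total_value / visits + c * math.sqrt(math.log(parent_visits) / visits)


def select_child(children, parent_visits):
    """Pick the child (a dict with 'value' and 'visits') with the highest UCB1 score."""
    return max(children, key=lambda ch: ucb1(ch["value"], ch["visits"], parent_visits))
```

A rarely visited child with a slightly lower average (1 win in 2 visits) can still beat a well-explored one (6 wins in 10 visits), because its exploration bonus is larger.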

When the selection phase ends at a leaf node, the expansion phase is executed, in which a new child node is appended to the leaf node following a specific policy named the “expansion policy”. Then, the rollout phase uses a “rollout policy” to simulate the game until it ends and gathers the result (i.e., win or loss). In the backpropagation phase, the result evaluated by the rollout policy is propagated back along the path (the sequence of selected nodes) to update each node: for example, the visit count is increased by one, and the average win rate is updated with the result from the rollout phase. Note that the original MCTS uses two different policies, whereas our MCTS implementation has only the expansion policy; the rollout policy is replaced by the value network, as further described in Section 4.
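The backpropagation phase amounts to a walk from the leaf back to the root. The sketch below assumes a single-agent setting, so the same value is added at every level (two-player game implementations usually flip its sign between levels); `TreeNode` and `backpropagate` are hypothetical names. The value passed in can come from a full rollout or, as in AlphaGo Zero, from a single value-network call.

```python
class TreeNode:
    """Minimal MCTS node statistics: parent link, visit count, accumulated value."""

    def __init__(self, parent=None):
        self.parent = parent
        self.visits = 0
        self.total_value = 0.0

    @property
    def mean_value(self):
        return self.total_value / self.visits if self.visits else 0.0


def backpropagate(leaf, value):
    """Add one visit and the leaf's evaluation to every node on the path to the root."""
    node = leaf
    while node is not None:
        node.visits += 1
        node.total_value += value
        node = node.parent
```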

Since MCTS, like routing, involves sequential decision-making, we adopt it as an additional search strategy for routing problems. Indeed, there have been some efforts to integrate MCTS into CVRP solvers, demonstrating its potential to enhance decision-making in combinatorial optimization tasks. A vehicle routing solver based on Upper Confidence bounds applied to Trees (UCT), an extension of MCTS, was proposed in [], and an extension to the HF-CVRP (Hybrid Fleet CVRP) was shown in []. However, these approaches applied MCTS non-selectively and did not use DRL methods.

In the game of Go, the next move is selected sequentially based on the board situation. In routing, the next node to visit is selected sequentially based on the current position and other external information, such as node locations and demands. Thus, Go and routing clearly share the same sequential decision structure.

Neural networks have been successfully integrated with MCTS in AlphaGo [], and AlphaGo Zero achieved even better results by introducing a self-play methodology for training the network []. The first AlphaGo used two different neural networks for “expansion” and “rollout”, incurring a computational burden because the rollout network had to be called repeatedly until the end of the game. AlphaGo Zero solved this problem with a value network that, in a single call, predicts the expected result from any given state (or node), a result originally gathered in the rollout phase. In short, one call to the value network replaced numerous calls to the rollout network, saving substantial computation. Our work adopts this idea for efficient MCTS simulation. Neural network architectures have previously been explored in reinforcement learning (RL) contexts [,,].

Beyond games, neural networks have been integrated with MCTS in prior work applying AlphaZero [,], in similar DRL-MCTS combinations for the pre-marshalling problem [] and coordinated route planning [], and in the qubit routing challenge [,]. As far as we know, however, there is no research on MCTS modified specifically for routing. Thus, we aim to bridge the gap between DRL and heuristic solvers by applying MCTS selectively to VRP tasks based on entropy, making it more effective for addressing various routing challenges.

3. Preliminaries

Before explaining our work, we introduce the formulation of CVRP with one vehicle, on which our routing problem is based. Note that TSP can easily be obtained from the CVRP formulation by modifying some conditions.

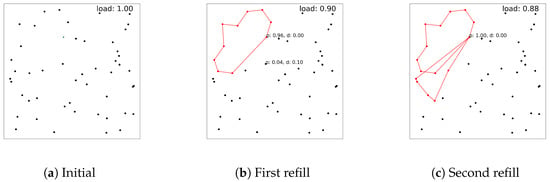

We start with a set of n customers, and each customer $i$, $i \in \{1, \dots, n\}$, has a predefined positive demand quantity $q_i$. To fulfill the customers' demands, the vehicle starts its route at the depot node, indexed as 0. The vehicle must visit each customer exactly once, and its load cannot exceed its capacity $Q$. In conventional settings, $Q$ is presumably set large enough to fulfill all customers' demands. In reality, however, a vehicle may start with a small $Q$ due to a lack of information, and its load must then be refilled. The formulation below cannot itself reflect vehicle refilling, but we aim to handle this situation as an example of the dynamic routing problem [] with our RL approach in the next section. For example, Figure 1 presents a scenario in which the vehicle refills with a smaller load after its first subroute is created and solved with DRL. In the figure, dots represent customer nodes, and red dots denote the visited nodes, which are connected by the red line. Figure 1a shows the initial setting, Figure 1b shows the situation after the vehicle returns to the depot and is refilled with a slightly different capacity, and Figure 1c shows the routing result with the new capacity. Usually, handling such environmental dynamics requires a complicated mathematical formulation or expert engineering techniques []. With DRL, however, one can simply adjust $Q$, which changes only one line in our implementation.

Figure 1.

Routing results in red in dynamic load settings.

As a graph representation of the problem is common in the literature, we also represent the problem as a graph $G = (V, E)$, where $V = \{0, 1, \dots, n, n+1\}$ contains all the nodes in the problem and nodes $0$ and $n+1$ are the same depot node. The last node, $n+1$, is an extra term introduced for ease of formulation as the final depot of a tour. We define $v_t$ to be the node visited at time $t$, with $v_0 = 0$, and a tour $\tau_{0:t}$ from time $0$ up to $t$ is defined as the sequence of visited nodes, for example, $\tau = \tau_{0:T} = (v_0, v_1, \dots, v_T)$, in which $T$ is the last time point in the tour. The terms route and tour are used interchangeably. Additionally, $E = \{(i, j) : i, j \in V,\ i \neq j\}$ refers to all the edges from all node combinations. Note that the demand of the depot node is 0, meaning $q_0 = q_{n+1} = 0$. We also introduce a binary decision variable $x_{ij}$, which is 1 if there is a direct route from customer $i$ to $j$ and 0 otherwise. The distance of edge $(i, j)$ is denoted by $d_{ij}$. The cost $L(\tau_{0:t})$ is the cumulative distance calculated so far at $t$, given the sequence of visited nodes: $L(\tau_{0:t}) = \sum_{k=1}^{t} d_{v_{k-1} v_k}$, and $L(\tau_{0:0}) = 0$. We formulate the one-vehicle CVRP as follows:

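$$\min \sum_{i=0}^{n} \sum_{j=1, j \neq i}^{n+1} d_{ij} x_{ij} \tag{1}$$

subject to

$$\sum_{j=1, j \neq i}^{n+1} x_{ij} = 1, \quad i = 1, \dots, n \tag{2}$$

$$\sum_{i=0, i \neq h}^{n} x_{ih} - \sum_{j=1, j \neq h}^{n+1} x_{hj} = 0, \quad h = 1, \dots, n \tag{3}$$

$$\sum_{j=1}^{n} x_{0j} = 1 \tag{4}$$

$$u_j \geq u_i + q_j x_{ij} - Q(1 - x_{ij}), \quad i, j = 0, \dots, n+1,\ i \neq j \tag{5}$$

$$q_i \leq u_i \leq Q, \quad i = 0, \dots, n+1 \tag{6}$$

$$x_{ij} \in \{0, 1\}, \quad i, j = 0, \dots, n+1$$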

We briefly describe the equations as follows: Equation (1) is the objective of the problem, minimizing the distance traveled by the vehicle; Equation (2) ensures that every customer is visited exactly once; Equation (3) maintains the correct flow of a tour, i.e., the visit sequence, by ensuring that the number of times the vehicle enters a node equals the number of times it leaves that node; Equation (4) imposes that only one vehicle leaves the depot; and Equations (5) and (6) jointly express the vehicle capacity condition. Note that variants of these constraints are possible; the main reference for the above formulation is Borcinova []. We also note that finding the solution $x = (x_{ij})$ is equivalent to constructing the tour $\tau$: for example, $x_{0i} = x_{ij} = 1$ means that the tour begins with $(0, i, j, \dots)$. In other words, in this formulation, finding $x$ leads to the construction of $\tau$. On the route up to a visit to node $j$, a continuous variable $u_j$ represents the accumulated demand and depends on the decision variable $x$: for instance, on a tour $(0, i, j, \dots)$, $u_j = q_i + q_j$. To convert this formulation into TSP, one only needs to remove the constraints regarding vehicle capacity and customer demands, so that only the decision variable $x$ remains.
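To make the formulation concrete, the following Python sketch evaluates a single-vehicle tour without refilling: it checks that every customer appears exactly once, accumulates the served demand step by step as the accumulated-demand variable does, rejects the tour if the capacity is exceeded, and sums edge distances as in objective (1). It assumes Euclidean distances and reuses index 0 for the closing depot; the function name `evaluate_tour` is hypothetical.

```python
import math


def evaluate_tour(coords, demands, capacity, tour):
    """Validate a single-vehicle CVRP tour and return (distance, accumulated demands).

    coords/demands are indexed 0..n with node 0 the depot; the closing depot of
    the formulation is represented by index 0 at the end of the tour.
    """
    n = len(coords) - 1
    if tour[0] != 0 or tour[-1] != 0:
        raise ValueError("tour must start and end at the depot")
    if sorted(tour[1:-1]) != list(range(1, n + 1)):
        raise ValueError("every customer must be visited exactly once")
    cost, load, accumulated = 0.0, 0, {}
    for prev, cur in zip(tour, tour[1:]):
        cost += math.dist(coords[prev], coords[cur])  # sum of d_ij over used edges
        if cur != 0:
            load += demands[cur]  # accumulated demand after serving `cur`
            if load > capacity:  # capacity condition violated
                raise ValueError(f"capacity exceeded at node {cur}")
            accumulated[cur] = load
    return cost, accumulated
```

On a three-node instance with legs of length 3, 4, and 5, the tour costs 12.0, and the accumulated demand grows from 2 to 5 as each customer is served.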

4. Proposed Network Model, AlphaRouter

In this section, we present our approach, named AlphaRouter, which solves the routing problem using both reinforcement learning and MCTS. We extend the above routing problem by allowing the vehicle to refill, reflecting realistic situations; the formulation above cannot include this refilling action. We begin by defining the components that cast the environment as an RL problem, followed by the neural network models of the policy and value. We then outline our idea and implementation for adapting MCTS to the routing problem. Our overall process consists of two stages: training the neural network with reinforcement learning, and combining the pre-trained network with the modified MCTS strategy to search for a better solution, meaning a tour $\tau$, or equivalently $x$ in the CVRP formulation. Because applying MCTS is computationally demanding, we apply our MCTS selectively, only when the choice of the next customer node is ambiguous under the output distribution of the policy network, i.e., the distribution over possible next nodes. This selective application improves computational efficiency while preserving the effectiveness of the MCTS strategy.

4.1. Reinforcement Learning Formulation

The input for node $i$ is denoted by $c_i \in \mathbb{R}^2$, which represents the coordinates of customer $i$. If the problem is a type of CVRP, the demand of the node can be included in the vector, i.e., the input for CVRP is then $[c_i; q_i] \in \mathbb{R}^3$, where the semicolon ; represents concatenation. With $n$ customers, the total number of nodes is $n$ for TSP and $n + 1$ for CVRP, as there is one depot node. Thus, the input matrix is denoted as $X \in \mathbb{R}^{n \times 2}$ for TSP problems and $X \in \mathbb{R}^{(n+1) \times 3}$ for CVRP.

To bring the problem into a reinforcement learning framework, we define the state, action, and cost (which can be converted into a reward, e.g., by negation). In our work, the observation state at time point $t$, denoted by $s_t$, is a collection of the node data $X$, containing coordinates and demands; the currently positioned node $v_t$; the set of available nodes to visit, denoted by $A_t$; and a masking vector for unavailable nodes, $m_t$, whose $i$-th element is 0 if $i \in A_t$ and $-\infty$ if $i \notin A_t$: $s_t = (X, v_t, A_t, m_t)$. Although the masking vector $m_t$ is derived from the available-node set $A_t$ in our formulation, we intentionally include both $A_t$ and $m_t$ in the state so that the masking vector can be adjusted and redefined to reflect domain requirements, just as several masking techniques are possible in the Transformer [,,].

We omit $t$ for $X$, as the node data are invariant over time in this problem: $X$ stays unchanged at all time points. However, the node data could vary in time depending on the domain requirements, and the proposed network model can handle a time-varying $X$. For CVRP, the current vehicle load, denoted by $l_t$, is also added to the state: $s_t = (X, v_t, A_t, m_t, l_t)$. The node set $A_t$ holds the nodes that have not yet been visited and whose demands can be fulfilled given $l_t$.
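The observation can be assembled as in the Python sketch below, following the description of the node data, available-node set, masking vector, and current load. The rule that makes the depot available whenever the vehicle is elsewhere (to allow refilling) is an assumption for illustration, and the dictionary layout and the name `build_state` are hypothetical.

```python
import math


def build_state(coords, demands, visited, current, load):
    """Assemble a CVRP observation as plain Python data.

    coords: (x, y) per node, node 0 being the depot; demands[0] == 0
    visited: set of customers already served; current: node index; load: remaining load
    """
    X = [[x, y, q] for (x, y), q in zip(coords, demands)]  # rows: coordinates; demand
    # Available: unvisited customers whose demand fits the remaining load.
    available = {
        i for i in range(1, len(coords))
        if i not in visited and demands[i] <= load
    }
    if current != 0:
        available.add(0)  # assumption: the depot is reachable for refilling
    # Mask: 0 for available nodes, -inf for unavailable ones (added before softmax).
    mask = [0.0 if i in available else -math.inf for i in range(len(coords))]
    return {"X": X, "current": current, "available": available, "mask": mask, "load": load}
```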

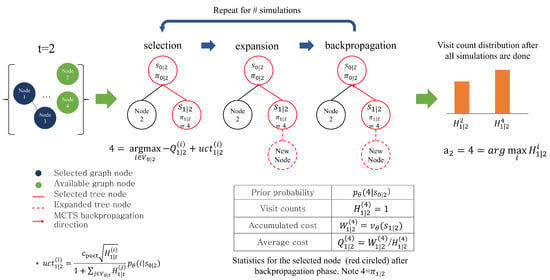

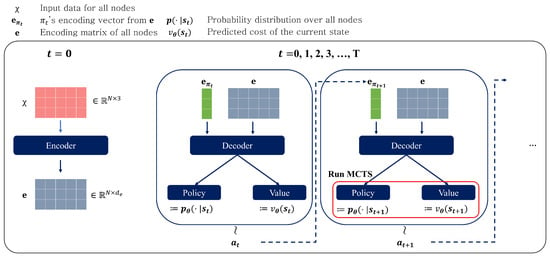

The action, denoted by $a_t$, is to choose the next customer node and move to it. The actions in an episode are chosen by our policy neural network, as shown in Figure 2, which outputs a probability distribution over all the nodes given the state at $t$, $\pi_\theta(\cdot \mid s_t)$. We use $p_t$ to denote the policy network output at time $t$ during the episode rollout. In the training phase, the action is sampled from the action distribution, $a_t \sim p_t$, as the next node to visit, meaning $v_{t+1} = a_t$, with $a_t \in A_t$. Sampling gives the vehicle (or agent) a chance to explore better regions of the solution space during training. In the inference phase, however, we choose the action with the maximum probability, meaning $a_t = \arg\max_i p_t(i)$ if unvisited nodes exist, and the vehicle returns to the depot otherwise.
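The two action-selection modes can be sketched in Python as follows; `select_action` is a hypothetical helper that receives the policy output as a list of probabilities in which masked nodes already have probability 0.

```python
import random


def select_action(probs, training, rng=random):
    """Choose the next node from the policy output.

    Training: sample a node in proportion to its probability, so the agent explores.
    Inference: take the most probable node (masked nodes have probability 0).
    """
    nodes = range(len(probs))
    if training:
        return rng.choices(nodes, weights=probs, k=1)[0]
    return max(nodes, key=lambda i: probs[i])
```

Passing a seeded `random.Random` instance as `rng` makes training-time sampling reproducible; a node with probability 0 is never sampled.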

Figure 2.

Overall process of routing using our proposed neural networks. It auto-regressively selects the next node. The encoder is executed once per episode, and the decoder is executed at every timestep t.

A value network, $V_\theta(s_t)$, is designed to predict the overall cost (or distance) of the episode at state $s_t$. It is later used to update the statistics of the MCTS tree. We describe how the other components work in detail in Section 4.2. Specifically, an episode, $\tau$, is a rollout process in which states and actions are interleaved over $t$ until the terminal state is reached: $(s_0, a_0, s_1, a_1, \dots, s_T)$. In this problem, the terminal state is the state in which all customers have been visited and, for CVRP, the vehicle has returned to the depot. Because the vehicle may be refilled multiple times, the last time point $T$ can vary across episodes of CVRP problems. For example, even when the problems have the same size, the optimal solution path can vary due to different customer locations and demands. Upon reaching the terminal state, no more transitions are made, and the overall distance, $L(\tau)$, is calculated.

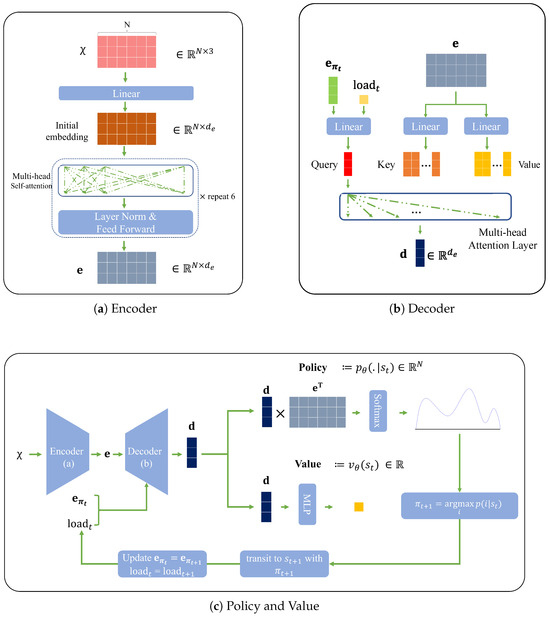

4.2. Architecture of the Proposed Network Model

The neural network architecture of our policy network for computing the probability distribution is similar to those used in previous studies [,]. However, to solve the routing problem, we modify the decoder part, building on the Transformer []. We aim to extract meaningful, possibly highly time-dependent and complex, features that are specific to the current state while preserving the structure of all nodes. The two networks share the same embedding vector, obtained by transforming the current input at time $t$. This shared input transformation is a deep-layered network consisting of an encoder and a decoder, which exploits both the structure of all nodes and the current node. The structure of the two networks with the shared feature transformation is reminiscent of the architecture of the AlphaGo series [,] and previous related works [,]. In essence, the input $s_t$ produces the estimated probabilities of the possible next actions via the policy network, $\pi_\theta(\cdot \mid s_t)$, and the predicted cost via the value network, $V_\theta(s_t)$. For simplicity, we denote all learnable parameters as $\theta$, which comprises the parameters of the shared transformation, those of the policy network, and those of the value network.

In detail, we explain the proposed network in three parts: the encoder of the feature transformation, the decoder of the feature transformation, and the policy and value heads. The objective of the encoder is to capture the inter-relationships between nodes. The encoder takes only the node data $X$ from $s_t$ and passes it through a linear layer that maps it to a new dimensionality, $d_h$, followed by multi-head attention layers, expressed as $\mathrm{MHA}(\cdot, \cdot, \cdot)$ with input tensors of query, key, and value. The output of the multi-head attention is an encoding matrix, denoted by $H$, in which each row vector $h_i$ represents node $i$. Thus, the encoding of the node at which the vehicle is positioned at time $t$ is $h_{v_t}$, an embedding vector reflecting the complex, interwoven relationships with the other nodes. In summary, the encoder process is self-attention over the input node data, expressed as $H^{(0)} = X W_{\mathrm{in}}$ and $H^{(r+1)} = \mathrm{MHA}(H^{(r)}, H^{(r)}, H^{(r)})$, repeated over several layers $r$ in the model. Relying on the idea of hidden states and current inputs in recurrent networks, we execute the encoder once per episode, thereby reducing the computational burden, and use the current-node embedding and the current load as inputs to the decoder in a sequential manner. We provide a detailed explanation later in this section.
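The encoder computation can be sketched with NumPy as below. This is a bare-bones illustration under stated assumptions: weights are plain arrays with hypothetical names, feed-forward sublayers and normalization are omitted because the text does not detail them, and, following the text, no residual connections are used.

```python
import numpy as np


def multi_head_attention(q_in, kv_in, w_q, w_k, w_v, w_o, n_heads):
    """Scaled dot-product multi-head attention: queries from q_in, keys/values from kv_in."""
    d_model = w_q.shape[1]
    d_k = d_model // n_heads

    def split(x):  # (rows, d_model) -> (heads, rows, d_k)
        return x.reshape(x.shape[0], n_heads, d_k).transpose(1, 0, 2)

    q, k, v = split(q_in @ w_q), split(kv_in @ w_k), split(kv_in @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_k)  # (heads, rows_q, rows_kv)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)  # softmax over keys
    out = (attn @ v).transpose(1, 0, 2).reshape(q_in.shape[0], d_model)
    return out @ w_o  # merge heads with an output projection


def encode(X, params, n_heads=4):
    """Linear input projection followed by stacked self-attention layers (no residuals)."""
    H = X @ params["w_in"]  # (num_nodes, d_model)
    for w_q, w_k, w_v, w_o in params["layers"]:
        H = multi_head_attention(H, H, w_q, w_k, w_v, w_o, n_heads)
    return H  # row i is the embedding of node i
```

Because there is no positional encoding, reversing the node order only reverses the rows of the output: the encoder treats the nodes as an unordered set.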

The decoder reveals the relationships that are diluted in the encoding matrix, together with additional information if it is given. Specifically, the decoder captures the relationships between the current node and the others. For example, assume that the vehicle is currently at node $i$, i.e., $v_t = i$, so the current node's embedding is $h_i$. Note that we drop time $t$ from the encoding matrix, since the encoder output does not change within an episode and is reused once it has been computed. By using this embedding as the query and the whole encoding matrix as the key and value, the decoder can reveal the relationships between the current node and the others. Linear transformations are applied to the query, key, and value before they enter the attention. Note that TSP and CVRP have different query inputs: in CVRP, the current load, $l_t$, is appended to the query input, whereas in TSP it is not. While the encoder has several layers, the decoder uses only one MHA layer. The decoder can be summarized as follows:

$$g_t = \mathrm{MHA}\big([h_{v_t}; l_t]\, W_q,\; H W_k,\; H W_v\big),$$

where $g_t$ is the decoder output and $[h_{v_t}; l_t]$ is replaced by $h_{v_t}$ for TSP.

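The policy and value layers compute the final policy $\pi_\theta(\cdot \mid s_t)$, a probability distribution over all nodes given $s_t$, and the predicted distance $V_\theta(s_t)$, respectively. With $g_t$ denoting the decoder output and $C$ a given hyper-parameter that regulates the clipping, we compute $\pi_\theta$ as follows:

$$\pi_\theta(\cdot \mid s_t) = \mathrm{softmax}\!\left(C \cdot \tanh\!\left(\frac{g_t H^\top}{\sqrt{d_h}}\right) + m_t\right)$$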

To compute $\pi_\theta$, we multiply the decoder output by the transposed encoding matrix and divide the result by $\sqrt{d_h}$. The output passes through the tanh function, scaled by $C$, and we add the mask $m_t$ for the unavailable nodes. Finally, we apply a softmax operator to this result.
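The policy computation described above can be written in a few lines of NumPy. The sketch assumes the decoder output is a vector `g` with the same width as the rows of the encoding matrix, and uses `C = 10.0` only as an illustrative default, not a value taken from this paper.

```python
import numpy as np


def policy_head(g, H, mask, C=10.0):
    """softmax(C * tanh(g H^T / sqrt(d)) + mask) over all nodes.

    g: decoder output, shape (d,); H: encoder output, shape (num_nodes, d)
    mask: 0.0 for available nodes, -inf for unavailable ones
    """
    d = H.shape[1]
    logits = C * np.tanh(H @ g / np.sqrt(d)) + mask  # clipped to [-C, C] before masking
    logits -= logits[np.isfinite(logits)].max()  # stabilize the softmax
    probs = np.exp(logits)  # exp(-inf) = 0 for masked nodes
    return probs / probs.sum()
```

The tanh clipping bounds the logits of available nodes to $[-C, C]$, so no available node can receive a probability more than $e^{2C}$ times smaller than another, which keeps some exploration alive.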

For $V_\theta$, we pass the same decoder output $g_t$ through two linear layers, shaped like the usual feed-forward block in the Transformer: $V_\theta(s_t) = \sigma(g_t W_1 + b_1) W_2 + b_2$, in which $\sigma$ is an activation function such as ReLU or SwiGLU [,]. A diagram of each neural network component is presented in Figure 3.
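A NumPy sketch of the value head with either activation follows. The SwiGLU branch uses the usual gated form (Swish of one projection multiplied elementwise by a second projection), which needs an extra weight matrix `Wg` and bias `bg`; all parameter names and shapes are assumptions for illustration.

```python
import numpy as np


def swish(x):
    """Swish / SiLU with beta = 1, i.e. x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))


def value_head(g, params, activation="relu"):
    """Two-layer feed-forward head mapping the decoder output to a predicted cost.

    relu:   relu(g W1 + b1) W2 + b2
    swiglu: (swish(g W1 + b1) * (g Wg + bg)) W2 + b2
    """
    h = g @ params["W1"] + params["b1"]
    if activation == "relu":
        h = np.maximum(h, 0.0)
    elif activation == "swiglu":
        h = swish(h) * (g @ params["Wg"] + params["bg"])  # gated linear unit
    else:
        raise ValueError(f"unknown activation: {activation}")
    return float(h @ params["W2"] + params["b2"])  # W2 has shape (d_ff,)
```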

Figure 3.

Components of the neural network.

When training the model for an episode, the encoding process is required only once, as the encoder input (the node coordinates) is fixed across the rollout steps. The decoder, on the other hand, takes inputs that change over time, i.e., the current node and current load. Thus, on the first execution of the model, we execute both the encoder and the decoder; afterwards, we execute only the decoder and the policy and value parts, saving considerable computation. The policy and value networks share the encoder and decoder parameters, whereas their output layers have separate parameters. Figure 2 illustrates the overall process.

Additionally, we intentionally exclude residual connections from the encoder layers because, unlike in the original Transformer and its variants, we observed that residual connections greatly harm the performance of our model. Another change we make to the previous model concerns the activation functions. Recent large language models (LLMs) have adopted different activation functions; taking this into account, we test the SwiGLU activation, as Google's PaLM did []. We report the results in Section 5.

4.3. Training the Neural Network

To train the policy network $\pi_\theta$, we use the well-known policy gradient algorithm REINFORCE with baseline []. This algorithm mitigates the high variance prevalent in policy gradient methods by subtracting a specially calculated value, called the baseline. It collects data during an episode and updates the parameters after each episode ends. With $L(\tau)$ the distance traveled by the vehicle following the sequence $\tau$, the policy network aims to learn a stochastic policy that outputs visit sequences with small distances over all problem instances. The gradient of the objective function for the policy network is formulated as follows:

$$\nabla_\theta J(\theta) = \mathbb{E}_{\tau \sim \pi_\theta}\Big[\big(L(\tau) - b(s)\big)\, \nabla_\theta \log \pi_\theta(\tau \mid s)\Big], \qquad \log \pi_\theta(\tau \mid s) = \sum_{t} \log \pi_\theta(a_t \mid s_t),$$

where $s$ denotes the problem instance,

in which $b(s)$ is the cost of a deterministic greedy rollout of the best policy trained so far, used as a baseline to reduce the variance of the original formulation []. After training the model parameters $\theta$ for an epoch, we evaluate the model on a validation problem set and set $b$ to the cost evaluated in this validation. One can think of this procedure as the training-validation mechanism of general machine learning.
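The REINFORCE-with-baseline objective reduces to weighting each episode's summed log-probabilities by its advantage, the cost minus the baseline. The plain-Python sketch below computes this surrogate loss for a batch; in practice the log-probabilities would be tensors in an autodiff framework, and the name `reinforce_loss` is hypothetical.

```python
def reinforce_loss(batch_log_probs, costs, baselines):
    """Batch-averaged surrogate loss for REINFORCE with baseline.

    batch_log_probs: per episode, the log-probabilities of the actions taken
    costs: total tour length of each episode; baselines: baseline for each episode
    Its gradient is the mean of (cost - baseline) * grad(sum of log-probabilities);
    the advantage must be treated as a constant (no gradient through it).
    """
    total = 0.0
    for log_probs, cost, baseline in zip(batch_log_probs, costs, baselines):
        advantage = cost - baseline  # positive when the tour is longer than the baseline
        total += advantage * sum(log_probs)
    return total / len(costs)
```

Minimizing this loss by gradient descent lowers the probability of tours that are longer than the baseline and raises it for shorter ones.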

The greedy-rollout baseline, however, incurs additional computational cost because it requires rolling out extra episodes, which is expensive. To alleviate this burden, we introduce a value network, $V_\theta$, as the baseline instead of the greedy rollout.

The value network's objective is to learn the expected cost at the end of the episode from any state during the episode rollout. We keep track of the value network's output throughout a rollout and train the network with a regression loss between these predictions and the final episode cost $L(\tau)$.

As in the POMO approach [], in addition to the baseline given by the value network $V_\theta$, we test a baseline given by the average cost over a batch of episodes. For instance, we calculate the baseline as the mean cost of all 64 episodes in a batch, where the batch size is the number of concurrent episode runs. The value network is also used in the MCTS process described in the next section. Since our model shares the encoder and decoder parameters between the policy network and the value network, an update of the value network also affects the parameters of the policy network through the gradient of the final loss, which combines the policy and value losses.
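Both the shared batch-mean baseline and a value-network regression target can be sketched as below. The squared-error form of `value_loss` is an assumption for illustration (a common choice for regressing the final tour length from every visited state), and both helper names are hypothetical.

```python
def shared_baseline(costs):
    """POMO-style baseline: the mean cost over a batch of concurrent episodes."""
    return sum(costs) / len(costs)


def value_loss(predictions, final_cost):
    """Mean squared error between the value predictions at each state and the final cost."""
    return sum((v - final_cost) ** 2 for v in predictions) / len(predictions)
```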

4.4. Proposed MCTS for the Routing

The main idea of MCTS here is to refine the generally good solutions of the trained policy and value networks into problem-specific ones by further investigating possible actions. Without MCTS, we transition from $s_t$ to $s_{t+1}$ by taking action $a_t$, which is determined by the policy network alone. In our proposed MCTS, as described in Figure 2, we instead select the next node by considering the costs output by the value network in addition to the prior probabilities from the policy network. Moreover, we apply MCTS at time $t$ only when the highest probability of the current policy network fails to dominate, meaning that actions other than the highest-probability action need to be considered. In practice, we apply MCTS, described below, when the difference between the highest and the fifth-highest probabilities is less than a given threshold.
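The ambiguity test that triggers MCTS can be sketched as follows. `threshold` is a placeholder for the threshold hyper-parameter, and falling back to the smallest probability when fewer than five nodes exist is our assumption; `needs_mcts` is a hypothetical name.

```python
def needs_mcts(probs, threshold, k=5):
    """True when the top probability does not dominate the k-th highest one.

    Masked nodes have probability 0, so with fewer than k available nodes the
    k-th highest value is 0 and the gap equals the top probability.
    """
    ranked = sorted(probs, reverse=True)
    kth = ranked[min(k, len(ranked)) - 1]  # assumption: use the smallest if fewer than k nodes
    return ranked[0] - kth < threshold
```

A flat distribution such as `[0.3, 0.25, 0.2, 0.15, 0.1]` with a threshold of 0.25 triggers MCTS, whereas a peaked one such as `[0.9, 0.04, 0.03, 0.02, 0.01]` does not.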

MCTS comprises three distinct phases: selection, expansion, and backpropagation. These phases iterate over a tree, which is initialized with the current node and updated as the iterations continue, for a given number of simulations $S$, the total number of MCTS iterations. At each iteration, the tree keeps expanding, and the statistics of some nodes in the tree are updated. As a result, a different set of tree node paths is explored throughout the MCTS iterations. Figure 4 describes an MCTS procedure in which a few MCTS iterations are run. Given time $t$, we use $s_{t,k}$ to represent a tree node positioned at level $k$. The definition of $s_{t,k}$ is the same as that of $s_t$, the only difference being that $k$ represents an inner time step used temporarily in MCTS selection. Thus, within an MCTS iteration with $t$ fixed, the level $k$ advances as deeper levels are selected in the selection phase.

Figure 4.

Overall transition process using MCTS: MCTS is run at time t, and several simulation iterations are performed.

At the start, we initialize the root tree node with the state at time t, so MCTS starts from that state and the vehicle position at the root is the same as the position at time t. To describe the MCTS phases, we track two statistics for each customer (or depot) node i at level k of the tree: an accumulated visit count and an accumulated total cost. Their ratio, the accumulated total cost divided by the visit count, is called the Q-value and represents the average cost of node i at level k. We normalize all Q-values in the simulation by min-max normalization.
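The per-node statistics and the normalized Q-value can be sketched as below. `NodeStats` and `normalize_q` are hypothetical names, and returning 0 for an unvisited node or for a degenerate min-max range is an assumption, since the text does not specify these edge cases.

```python
from dataclasses import dataclass


@dataclass
class NodeStats:
    visits: int = 0           # accumulated visit count of node i at level k
    total_cost: float = 0.0   # accumulated total cost of node i at level k

    @property
    def q(self) -> float:
        """Q-value: average cost through this node (0.0 if unvisited, an assumption)."""
        return self.total_cost / self.visits if self.visits else 0.0


def normalize_q(q: float, q_min: float, q_max: float) -> float:
    """Min-max normalization of a Q-value using the extremes seen during the search."""
    if q_max <= q_min:
        return 0.0  # degenerate range, e.g. only one distinct Q-value observed so far
    return (q - q_min) / (q_max - q_min)
```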

In the selection phase, given the current MCTS tree, we recursively choose child nodes until we reach a leaf node of the tree. For instance, at a tree node at level k, we select the next node among the available nodes according to Equation (13), thereby moving to a tree node at level k + 1:

in which a hyper-parameter adjusts the contribution of the policy-network evaluation relative to the negative of the averaged cost for node i. Let ℓ denote the leaf level reached in the selection phase. We thereby obtain an inner state path and an inner node path from the root down to level ℓ. The total node path from time 0 to level ℓ is then the concatenation of the outer-path nodes (visited up to time t) and the inner-path nodes. The selection phase continues until no more child nodes are available to traverse from the current position, meaning that the node is a leaf node of the tree. In Figure 2, for instance, node 4 (highlighted in red) is selected from the root node in the first selection phase. Note that in the next MCTS iteration, the selection phase starts again from the root node, not from the leaf node selected in the previous iteration.
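Because Equation (13) is not reproduced above, the following sketch assumes a PUCT-style rule in the spirit of AlphaZero: the score of child i is its negated, min-max-normalized Q-value plus c · P(i) · √(parent visits) / (1 + visits of i). Both this form and reading the 1.1 hyper-parameter listed in Algorithm 2 as `c` are assumptions, and `select_child` is a hypothetical name.

```python
import math
from typing import Dict


def select_child(priors: Dict[int, float], visits: Dict[int, int],
                 norm_q: Dict[int, float], c: float = 1.1) -> int:
    """Return the child node id with the highest PUCT-style score.

    priors: policy probability per available child; visits: visit counts;
    norm_q: min-max-normalized Q-values in [0, 1] (unvisited children default to 0).
    """
    # max(..., 1) lets the priors break ties before any child has been visited.
    sqrt_parent = math.sqrt(max(sum(visits.get(i, 0) for i in priors), 1))
    best_id, best_score = -1, -math.inf
    for i, p in priors.items():
        n_i = visits.get(i, 0)
        exploit = -norm_q.get(i, 0.0)                  # negated: low cost -> high score
        explore = c * p * sqrt_parent / (1 + n_i)       # policy-prior exploration bonus
        score = exploit + explore
        if score > best_score:
            best_id, best_score = i, score
    return best_id
```

Defaulting an unvisited child's normalized Q to 0 treats it optimistically as the cheapest option, which encourages the search to try it at least once.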

After the selection phase, the expansion phase updates the MCTS tree by adding new child nodes at the selected leaf node, one for each available node, and then moves on to the backpropagation phase. Note that in the early MCTS iterations, the tree may not yet have expanded enough for a terminal node to be selected. As the MCTS iterations advance, the tree expands enough that the final node selected in the selection phase becomes a terminal node, meaning that routing has ended with no available node to move to. Even then, the MCTS iterations continue until the total number of simulations is reached, in order to explore a variety of possible node paths.
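The expansion phase can be sketched as attaching one child per available node. `TreeNode` and `expand` are hypothetical names, and the boolean `mask` (True marking an unavailable node) is an assumed representation of the mask of unavailable nodes.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Sequence


@dataclass
class TreeNode:
    node_id: int                                 # customer/depot index reached by this move
    prior: float                                 # policy-network probability of this move
    parent: Optional["TreeNode"] = field(default=None, repr=False, compare=False)
    children: Dict[int, "TreeNode"] = field(default_factory=dict)
    visits: int = 0                              # accumulated visit count
    total_cost: float = 0.0                      # accumulated total cost

    def is_leaf(self) -> bool:
        return not self.children


def expand(leaf: TreeNode, probs: Sequence[float], mask: Sequence[bool]) -> List[int]:
    """Attach one child per available node and return the child ids.

    mask[i] is True when node i is unavailable (already visited or infeasible).
    An empty result means the leaf is terminal: routing has ended.
    """
    for i, (p, blocked) in enumerate(zip(probs, mask)):
        if not blocked:
            leaf.children[i] = TreeNode(node_id=i, prior=p, parent=leaf)
    return list(leaf.children)
```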

Finally, in the backpropagation phase, tracing back along the selected path, we update the visit count and the accumulated total cost of every selected tree node on the inner path and of every selected customer node. Specifically, the update follows the rule below:

As the MCTS iterations continue, the selected leaf node can be either a terminal node, meaning that the routing has ended, or a non-terminal node. In the former case, the cost is determined by evaluating the length of the selected path of customer nodes. In the latter case, we use the distance predicted by the value network. This is possible because we train the value network to predict the final distance from any state, following Equation (11). In updating the accumulated total cost as in Equation (15), we obtain the predicted cost at the final selected node and then greedily select the next customer node until routing finishes.
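The leaf evaluation and the backpropagation update can be sketched as below. All names are hypothetical; `predict_distance` is a stand-in callable for the value network (taking the node path as a proxy for the state), `full_path` is assumed to already include any return leg to the depot, and recording the Q-value range during backpropagation mirrors the min-max bookkeeping described for Algorithm 2.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Optional, Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class TreeNode:
    parent: Optional["TreeNode"] = field(default=None, repr=False, compare=False)
    visits: int = 0           # accumulated visit count
    total_cost: float = 0.0   # accumulated total cost


@dataclass
class QBounds:
    """Running min/max of Q-values, recorded for min-max normalization."""
    lo: float = math.inf
    hi: float = -math.inf

    def update(self, q: float) -> None:
        self.lo, self.hi = min(self.lo, q), max(self.hi, q)


def path_length(coords: Sequence[Point], path: Sequence[int]) -> float:
    """Euclidean length of the consecutive legs along `path`."""
    return sum(math.hypot(coords[a][0] - coords[b][0], coords[a][1] - coords[b][1])
               for a, b in zip(path, path[1:]))


def leaf_cost(terminal: bool, full_path: Sequence[int], coords: Sequence[Point],
              predict_distance: Callable[[Sequence[int]], float]) -> float:
    # Terminal leaf: exact length of the completed route (outer + inner path).
    # Non-terminal leaf: distance predicted by the value network (stand-in callable).
    return path_length(coords, full_path) if terminal else predict_distance(full_path)


def backpropagate(leaf: TreeNode, cost: float, bounds: QBounds) -> None:
    """Add one visit and `cost` to every node from the leaf up to the root."""
    node: Optional[TreeNode] = leaf
    while node is not None:
        node.visits += 1
        node.total_cost += cost
        bounds.update(node.total_cost / node.visits)  # track the Q-value range
        node = node.parent
```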

After all simulations are finished, we collect the visit-count distribution over the root node's child nodes and choose the most visited node as the next node to visit in the rollout:
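The final decision after the simulations can be sketched as follows. `visit_distribution` and `choose_next_node` are hypothetical names, and breaking ties in favor of the smallest node id is our own assumption.

```python
from typing import Dict


def visit_distribution(child_visits: Dict[int, int]) -> Dict[int, float]:
    """Normalize the root children's visit counts into a distribution."""
    total = sum(child_visits.values())
    return {i: n / total for i, n in child_visits.items()}


def choose_next_node(child_visits: Dict[int, int]) -> int:
    """Most-visited root child; ties go to the smallest node id (an assumption)."""
    return max(sorted(child_visits), key=lambda i: child_visits[i])
```

Picking the argmax of visit counts, rather than sampling from the distribution, keeps the rollout deterministic.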

Algorithm 1 summarizes the overall process of our MCTS. However, applying MCTS at every step is computationally expensive, which makes it impractical for real-world use. At each time step t, we compute the entropy of the probability distribution output by the policy network. We find that most outputs have low entropy, meaning that the highest probability dominates the others. Our idea is therefore to apply MCTS to the rollout selectively, only when the highest probability fails to dominate, i.e., when the difference between the highest and the fifth-highest probability is less than a threshold. We obtained this strategy empirically; it trades additional computation time for improved solution quality.
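The entropy check can be sketched as below. The natural logarithm is an assumption (the base is not stated), `policy_entropy` and `should_apply_mcts_by_entropy` are hypothetical names, and the second function corresponds to the entropy-based rule (ent_cut) compared in Section 5.3.

```python
import math
from typing import Sequence


def policy_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (natural log); zero-probability (masked) nodes contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def should_apply_mcts_by_entropy(probs: Sequence[float], ent_cut: float) -> bool:
    """Entropy-based trigger: search only when the policy output is uncertain."""
    return policy_entropy(probs) > ent_cut
```

A one-hot output has entropy 0, while a uniform output over m available nodes reaches the maximum, log m.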

Algorithm 1. Overall simulation flow in MCTS.

Require: root state initialized by the current state; trained policy network; trained value network; number of simulations to run.

We present the pseudo-code for each MCTS phase in Algorithm 2, highlighting the modifications made to adapt MCTS to routing problems. First, we apply min-max normalization to the Q-values calculated during the entire search phase. The raw Q-value has the same range as the cost (distance), whereas the policy-based term of the selection rule in Equation (13) typically falls within a much narrower range. Because of this difference in scale, using the raw Q-value would make the selection of child nodes rely heavily on the Q-value. To apply min-max normalization in the implementation, we record the maximum and minimum Q-values in the backpropagation phase. Second, because the objective is to minimize distance, we negate the Q-value so that the search, which favors high scores, prefers short routes. In the pseudo-code, the STEP procedure, which we do not include in the paper due to its complexity, accepts the chosen action as input and transitions the state to the next state. Internally, it sets the current position of the vehicle to the chosen node and, for CVRP, also updates the current load of the vehicle. In addition, the mask of unavailable nodes is updated to prevent the vehicle from returning to visited nodes.
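Since the STEP procedure is omitted from the paper, the following is only a hypothetical sketch of what such a transition could look like for CVRP. The names `CVRPState`, `step`, and `unavailable_mask` are ours, and several details are assumptions: demands are normalized so that full capacity is 1, node 0 is the depot, and the depot is masked while the vehicle is already there (to avoid depot-to-depot moves).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CVRPState:
    demands: List[float]                 # demands[0] is the depot (0.0); capacity is 1
    position: int = 0                    # current vehicle position; starts at the depot
    load: float = 1.0                    # remaining capacity (normalized)
    visited: List[bool] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not self.visited:
            self.visited = [False] * len(self.demands)


def step(state: CVRPState, action: int) -> CVRPState:
    """Hypothetical STEP: move to `action`, updating load and visited flags in place.

    During MCTS this would be called on a copy (e.g. copy.deepcopy) of the state
    so that the outer rollout is not modified by the simulation.
    """
    if action == 0:
        state.load = 1.0                       # refill at the depot
    else:
        state.load -= state.demands[action]    # serve the customer's demand
        state.visited[action] = True
    state.position = action
    return state


def unavailable_mask(state: CVRPState, eps: float = 1e-9) -> List[bool]:
    """True marks an unavailable node: a visited customer, a customer whose demand
    exceeds the remaining load, or the depot when the vehicle is already there."""
    mask = [state.visited[i] or state.demands[i] > state.load + eps
            for i in range(len(state.demands))]
    mask[0] = state.position == 0
    return mask
```

When every entry of the mask is True, no move is available and the route is complete, which is the terminal condition used in the expansion phase.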

Algorithm 2. List of functions in MCTS.

Require: hyper-parameter set to 1.1.

5. Experiments

We first generate problem instances by sampling random coordinates for all N nodes, with each coordinate drawn uniformly from a fixed range. For CVRP, the first node is the depot node. The demand of each customer is an integer between 1 and 10, scaled by 30, 40, and 50 for problem sizes n = 20, 50, and 100, respectively. We also apply POMO [] in training, setting the POMO size to the number of customer nodes. In the inference phase, however, we exclude POMO, because using it together with MCTS is infeasible. Our implementation, available on GitHub at https://github.com/glistering96/AlphaRouter (accessed on 19 February 2025), is built on PyTorch Lightning [] and PyTorch []. For MCTS, we set the hyper-parameter as in Algorithm 2 and vary the total number of simulations, n_s, among 100, 500, and 1000. We measure the performance on 100 problems randomly generated as described above. In all tables in this section, “dist” refers to the average total distance traveled across all instances, and “time” is the average inference time. For the selective MCTS approach, “time” includes both the DRL inference time and the additional computational cost of the selectively applied MCTS.
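A minimal instance generator consistent with this description is sketched below. It rests on loudly stated assumptions: the coordinate range is not reproduced in the text, so the unit square is used by default; "scaled by" is read as dividing the integer demand by 30, 40, or 50; and a CVRP instance is taken to have one depot plus n customers. `generate_cvrp` and `DEMAND_SCALE` are hypothetical names. For TSP, only the coordinates would be used.

```python
import random
from typing import Dict, List, Tuple

# Demand scaling per problem size n, as stated in the text (20 -> 30, 50 -> 40, 100 -> 50).
DEMAND_SCALE: Dict[int, int] = {20: 30, 50: 40, 100: 50}


def generate_cvrp(n: int, seed: int = 0, low: float = 0.0, high: float = 1.0
                  ) -> Tuple[List[Tuple[float, float]], List[float]]:
    """Sample one CVRP instance: node 0 is the depot, nodes 1..n are customers.

    ASSUMPTIONS: coordinates are uniform in [low, high] (unit square by default),
    and "scaled by" means dividing the integer demand by DEMAND_SCALE[n].
    """
    rng = random.Random(seed)
    coords = [(rng.uniform(low, high), rng.uniform(low, high)) for _ in range(n + 1)]
    demands = [0.0] + [rng.randint(1, 10) / DEMAND_SCALE[n] for _ in range(n)]
    return coords, demands
```

Seeding a local `random.Random` makes each test set reproducible without touching the global random state.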

For the encoder and decoder, we use 4 attention heads of dimension 32 each, giving an embedding dimension of 4 × 32 = 128, and 6 encoder layers. We train the model for 300 epochs with a batch size of 64. Note that this batch size is the number of parallel rollouts in training, meaning that 64 episodes are executed simultaneously. We fix the clipping parameter C to 10. We use Adam [] without any warm-up or learning-rate scheduling. For fast training, we use 16-bit mixed precision.
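The reported settings can be collected in a small configuration object. `ModelConfig` and `clip_logits` are hypothetical names, and only the values stated in the text are filled in (the Adam settings are not reproduced). Reading C as the tanh logit-clipping constant, as in the attention models of Bello et al. and Kool et al., is an assumption.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelConfig:
    head_dim: int = 32            # dimension of each attention head
    n_heads: int = 4
    n_encoder_layers: int = 6
    epochs: int = 300
    batch_size: int = 64          # number of parallel rollouts (episodes) per step
    logit_clip: float = 10.0      # clipping parameter C

    @property
    def embedding_dim(self) -> int:
        # Heads are concatenated, so the embedding dimension is n_heads * head_dim.
        return self.n_heads * self.head_dim


def clip_logits(logits: List[float], c: float) -> List[float]:
    """Tanh clipping: squashes each compatibility score into [-C, C] before softmax."""
    return [c * math.tanh(u) for u in logits]
```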

We conduct the experiments on a machine equipped with an Intel Core i5-13600KF CPU, an NVIDIA RTX 4080 16 GB GPU, and 32 GB of RAM running Windows 11. The heuristic solvers are run on the same machine under WSL with Ubuntu 22.04.

5.1. Performance Comparison

In this section, we compare the performance of the proposed MCTS-equipped model with that of several heuristics on two routing problems, TSP and CVRP. The baseline models for TSP are the heuristic solvers LKH3 [], Concorde [], Google’s OR Tools [], and the Nearest Insertion strategy []. These heuristic solvers, developed by optimization experts, serve as benchmarks for assessing optimization capability in solving routing problems. For Google’s OR Tools, we enable the guided local search option, which yields better results than those reported in previous research [,]. For a fair comparison, the time limit for OR Tools is set close to the longest MCTS runtime for each n.

For comparison, we refer to the proposed model without the MCTS strategy, which relies solely on the proposed neural network, as the attention model (AM). When integrating the MCTS strategy with the network model, we vary the number of simulations to investigate its impact on performance. Each case is evaluated on 100 instances randomly generated with the same strategy as in training. The comparative results for TSP and CVRP are summarized in Table 1 and Table 2, respectively. The column n_s gives the number of simulations in the MCTS strategy, and the AM results without MCTS are marked in the same column. Thus, the ‘DRL’ method includes both the proposed method and the AM method. The column ‘baseline’ indicates the baseline used in Equation (10): ‘mean’ denotes the mean-over-batches baseline, and ‘value’ denotes the value-network baseline. Note that this baseline term is unrelated to the heuristic baselines reported in the tables. The best results among the DRL methods for each case are shown in bold.

Table 1.

Results of TSP problems.

Table 2.

Results of CVRP problems.

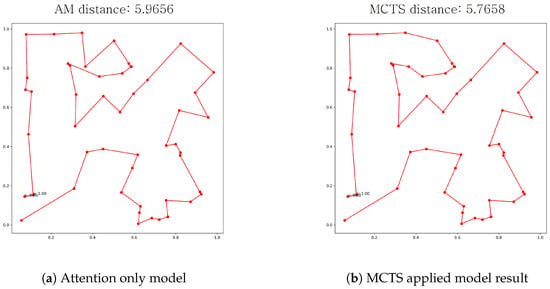

For TSP, none of the AM records are in bold, meaning that applying MCTS improves solution quality. For CVRP, some AM records are in bold, but the best records all come from cases with MCTS. Figure 5 visualizes the routes produced by the two methods to illustrate the effectiveness of MCTS. Both visually and quantitatively, the solution of the proposed model in Figure 5b is better than that of the AM in Figure 5a. Regarding scalability, the runtime increases with problem size: as shown in Tables 1 and 2, the computational cost increases substantially from smaller instances (e.g., 20 nodes) to larger instances (e.g., 100 nodes). This trend is expected, as larger problems introduce greater complexity in both the DRL-based inference process and the selective MCTS execution. Furthermore, the runtime also increases with the number of simulations (n_s). This is also expected, as more MCTS iterations mean a more extensive search and thus additional computational overhead.

Figure 5.

Routes (in red) produced by the two methods on the same test instance: (a) AM; (b) the proposed model.

The results reveal that although applying MCTS improves performance compared with the model without MCTS, it still falls short of the heuristic solvers, as other research also shows [,]. Contrary to our expectations, increasing the number of simulations does not consistently improve the solution, i.e., decrease the distance. Overall, the analysis shows no consistent relationship between the number of simulations and the resulting distance. The exceptions are problems of size 50, for which Pearson’s correlation coefficient between the two is −0.72, and CVRP of size 100, for which it is −0.47. In the other cases, the correlations are generally weak.
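For reference, Pearson's correlation coefficient used above can be computed as follows. `pearson` is a hypothetical helper; its inputs would be paired lists of simulation counts and resulting distances, and no data from the tables are reproduced here.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson's r between paired samples x and y (e.g. n_s values and distances)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

A negative r here means that more simulations tend to go with shorter distances; values near zero indicate no linear relationship.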

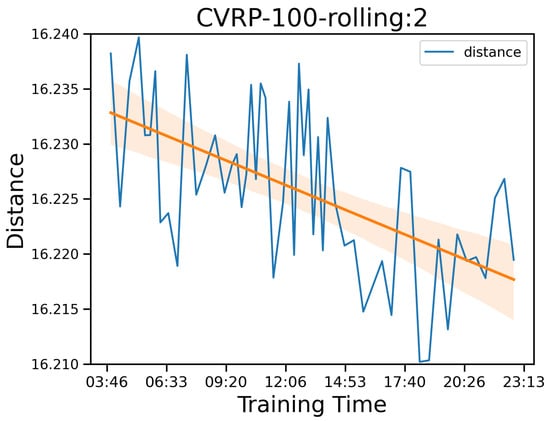

In addition, the MCTS strategy induces little runtime overhead compared with the method without MCTS, i.e., AM. For CVRP, the average runtimes of the proposed method are considerably shorter than those of LKH3. However, the runtime increases with the problem size n. Our explanation is that larger problems are harder to solve using only the probability outputs of the policy network, so MCTS is triggered more often. We argue that improving the solution quality of AM without MCTS would require sampling numerous solutions, which takes a large amount of time. This is consistent with the experimental results in []. We also point out that training the network for more epochs to reduce the distance slightly takes a long time. For example, reducing the distance by a small amount through training beyond 300 epochs takes approximately 24 h, as shown in Figure 6, in which the orange line is a regression line fitted to the observations. With MCTS, by contrast, we consistently obtain better results within a few seconds, and the method is deterministic, unlike sampling methods. Nonetheless, we believe there is still room to improve the runtime of MCTS. The heuristic solvers are written in C, whereas our MCTS is written in Python 3.10.12, which is considerably slower. Implementing MCTS in C++ with parallelism should reduce the runtime.

Figure 6.

Training score (distance) on CVRP-100 versus training time after 300 epochs: observed distances in blue, smoothed with a window size of 2, and the fitted regression line in orange.

To statistically confirm the effectiveness of the proposed MCTS, we report paired t-test results for two different cases. Table 3 reports the test results under the same conditions, i.e., the same activation function and baseline. The records in the AM column follow the format “activation-baseline”, and those in the MCTS column follow “activation-baseline-number of MCTS simulations”. In this setting, the test shows that applying MCTS improves the solution except for TSP-20 and CVRP-50. We suspect that for relatively small problems, relying only on the policy network (AM) can be good enough, whereas for larger problems, introducing MCTS can yield better solutions. Table 4 shows the test results regardless of the conditions, reporting the lowest p-value for each problem type and size. Applying MCTS appears worthwhile for each problem type even when the activation function and baseline are allowed to vary.
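The paired t statistic behind such a test can be sketched in plain Python. `paired_t` is a hypothetical helper that returns only the statistic and its degrees of freedom. If SciPy is available, `scipy.stats.ttest_rel(am, mcts)` computes the same statistic (on `am - mcts`) together with a p-value, which is two-sided by default.

```python
import math
from typing import Sequence, Tuple


def paired_t(am: Sequence[float], mcts: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for per-instance differences.

    d_i = am_i - mcts_i, so a large positive t means MCTS routes are
    systematically shorter than AM routes on the same instances.
    """
    d = [a - b for a, b in zip(am, mcts)]
    n = len(d)
    mean = sum(d) / n
    var = sum((x - mean) ** 2 for x in d) / (n - 1)   # sample variance of differences
    return mean / math.sqrt(var / n), n - 1
```

Pairing by instance removes the instance-to-instance variation in route length, which is why a paired test suits comparisons on the same 100 test problems.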

Table 3.

Paired t-test results under the same conditions.

Table 4.

Paired t-test results regardless of the conditions.

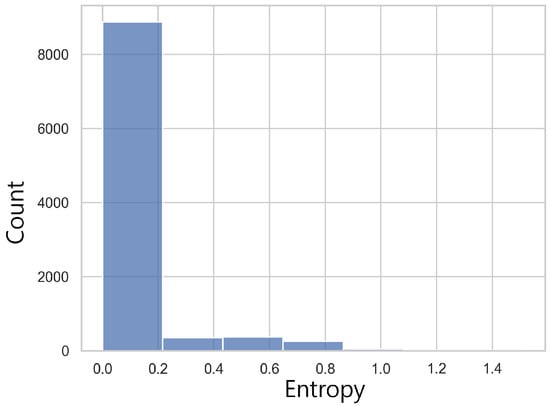

Figure 7 shows the entropy of the policy network's output for all test cases of TSP with size 100. We find that 86% of the entropies fall below a small value, meaning that the policy outputs are dominated by a few suggestions and that relying solely on the policy network might lead to local optima. Therefore, applying MCTS selectively is a good strategy for controlling the time overhead. We evaluate selective MCTS application in the ablation analysis in Section 5.2.

Figure 7.

Histogram of entropies of policy network for TSP with size 100.

5.2. Ablation Study

In this section, we present some ablation results for the activation function and baseline. We aggregate the results based on the activation function used and then calculate the mean and standard deviation of the score over all settings, i.e., AM, MCTS-100, MCTS-500, and MCTS-1000, and the results are described in Table 5.

Table 5.

Performance results according to activation functions.

As the problem size increases, SwiGLU produces shorter distances overall than ReLU, and the gap between the two becomes more apparent. For example, for CVRP, the difference in distance is about 0.01 at n = 20 but reaches around 0.07 at n = 100. This suggests that SwiGLU scales considerably better than ReLU with problem size.

For the baseline, we examine two approaches: the mean over batches and the value-network prediction. This baseline is the baseline term in Equation (10). As in the activation-function analysis, we calculate the mean and standard deviation of the distances over all settings for each type of baseline; the results are given in Table 6. Surprisingly, the mean baseline outperforms the value-network baseline except for CVRP with problem size 100, the hardest problem in our settings. We presume that the value-network baseline may perform better than the mean baseline on more complex problems; for instance, CVRP with time windows and pickups may be solved better with the value-network baseline.

Table 6.

Aggregated results of baselines.

We also report the non-selective MCTS results in Table 7 and Table 8. Note that the hardware environment here differs somewhat from that of the main experiments: for non-selective MCTS, we run the experiments on a shared Linux workstation with an NVIDIA RTX 4090 24 GB GPU and an Intel Xeon w7-2475X CPU. Therefore, the runtimes in Tables 7 and 8 cannot be compared directly with those in Tables 1 and 2, but they do indicate runtime trends. Even with the different hardware and computing settings, the runtime gap between selective and non-selective MCTS is substantial, which suggests that selective MCTS is worthwhile.

Table 7.

Non-selective MCTS applied on TSP.

Table 8.

Non-selective MCTS applied on CVRP.

Compared with the selective MCTS results in Table 1, non-selective MCTS requires a much larger runtime. Moreover, the best (bold) values in each group are almost the same, or slightly better, with the selective MCTS strategy.

Similar trends are observed for CVRP. The runtimes also vary greatly across records for both TSP and CVRP. Applying MCTS at every transition does not fully account for this variation; resource sharing on the workstation may also have contributed. Therefore, considering both runtime and performance, selective MCTS is the better approach.

5.3. MCTS Application Rule

In this section, we compare two methods for deciding when to apply MCTS. The first is the method introduced above, which uses the difference between the highest and the fifth-highest probability (diff_cut); the second uses the entropy of the policy network's output directly (ent_cut). For each method, we compare how different pivot values affect performance and runtime. Based on the activation-function results in Section 5.2, we use only the SwiGLU activation for this test, and to make the changes more visible, we focus on problems of size 100. The results of the difference method are reported in Table 9 and Table 10, and those of the entropy method in Table 11 and Table 12.

Table 9.

Results for TSP 100 with difference pivots.

Table 10.

Results for CVRP 100 with difference pivots.

Table 11.

Results for TSP 100 with entropy pivots.

Table 12.

Results for CVRP 100 with entropy pivots.

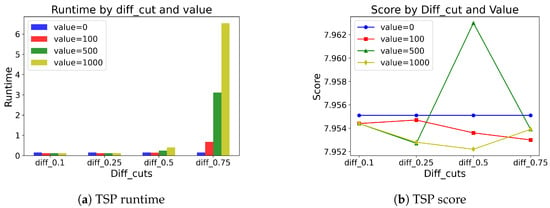

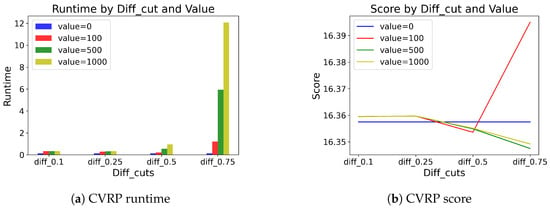

Table 9 and Table 10 show the results for different pivot values used to decide when to apply MCTS. For example, diff_cut = 0.25 means that we apply MCTS when the difference between the highest and the fifth-highest probability is less than 0.25. If the difference is large, the suggestion of the policy network is convincing; if it is small, relying only on the policy network is not enough. Therefore, the higher diff_cut is, the more often MCTS is applied, and vice versa. For both problem types, little difference is observed when diff_cut < 0.5, whereas for diff_cut ≥ 0.5, applying MCTS makes a greater difference. We also find that SwiGLU combined with the value baseline generally produces better solutions than SwiGLU combined with the mean baseline. For an intuitive visualization, Figure 8 and Figure 9 illustrate the results of SwiGLU with the value baseline for TSP and CVRP, respectively.

Figure 8.

Visualization of TSP results on runtime and score.

Figure 9.

Visualization of CVRP results on runtime and score.

Table 11 and Table 12 show the results for different pivot values when MCTS application is decided by the entropy of the policy network's output. Here, MCTS is applied only when the entropy exceeds the given pivot value; thus, the higher ent_cut is set, the less often MCTS is applied. Overall, ent_cut does not perform better than diff_cut. In addition, the value baseline generally works better with MCTS than the mean baseline for CVRP. We suspect that the diff_cut method leads to a narrower search boundary than the ent_cut method, similar to a trust region in gradient-based optimization.

In summary, applying MCTS heavily may yield unsatisfactory performance while increasing the runtime significantly, so finding the optimal pivot for applying MCTS is an important issue. We choose the diff_cut method with a pivot of 0.75 as our final setting in the proposed work.

5.4. Modified CVRP Problems

In this section, we report the performance on modified CVRPs to demonstrate the flexibility of our proposed method. For this purpose, we consider two cases, a changed vehicle refill amount and multiple depots, to which neither the classical formulation nor its heuristic solvers apply in their original form. Solving these cases with a classical formulation requires reconstructing the formulation from scratch, and heuristic solvers likewise require reformulation. In contrast, our proposed method only needs the AM to be pretrained once per modified CVRP problem. Therefore, we provide only the results of our proposed method here. Naturally, these results differ from those in previous sections. We select two diff_cut values, 0.25 and 0.75, use SwiGLU as the activation function, and set the total number of nodes n to 100.

In the first case, we change the amount refilled when the vehicle visits the depot node from the standard value of 1, used in all other experiments, to 0.8 and 1.2. We pretrain the AM for each refill amount. As the refill amount decreases, the optimal solution should have a higher score (longer distance) because the vehicle must visit the depot node more frequently, and we expect our model’s solutions to exhibit this tendency. As expected, Table 13 shows that our model finds better solutions when the refill amount is larger, indicating that our proposed method is robust to changes in the refill amount. Moreover, as the refill amount increases, applying MCTS improves performance.

Table 13.

Performance for vehicle refills.

In the second case, we examine the performance on a modified CVRP with three depot nodes. We pretrain the AM for each number of depot nodes. As the number of depot nodes increases, the score of the optimal solution should decrease, because the vehicle has more flexibility in choosing a depot node when refilling is required. As expected, Table 14 shows that our model finds better solutions with more depot nodes, demonstrating that it is robust to changes in the number of depot nodes. However, it is difficult to conclude that applying MCTS yields better results than using AM alone in multi-depot CVRPs, since only a few MCTS cases show significant improvement.
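To illustrate why only the environment changes in these modified problems, the CVRP transition can be parameterized by a set of depot indices and a refill amount. This is a hypothetical sketch (`ModifiedCVRPState`, `step`, and `unavailable_mask` are our names, not the authors'); in particular, starting the vehicle with one refill amount and masking every depot while the vehicle is at any depot are assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class ModifiedCVRPState:
    demands: List[float]            # 0.0 at every depot index
    depots: Set[int]                # {0} for standard CVRP; e.g. {0, 1, 2} for three depots
    refill: float = 1.0             # load restored at a depot (1.0 standard; 0.8 or 1.2 variants)
    position: int = 0               # the vehicle starts at depot 0
    load: float = field(init=False)
    visited: List[bool] = field(init=False)

    def __post_init__(self) -> None:
        self.load = self.refill     # assumption: start with one refill amount
        self.visited = [False] * len(self.demands)


def step(state: ModifiedCVRPState, action: int) -> None:
    """Move to `action`; any depot restores the (possibly modified) refill amount."""
    if action in state.depots:
        state.load = state.refill
    else:
        state.load -= state.demands[action]
        state.visited[action] = True
    state.position = action


def unavailable_mask(state: ModifiedCVRPState, eps: float = 1e-9) -> List[bool]:
    """Depots are masked while the vehicle is at any depot; customers are masked
    when visited or when their demand exceeds the remaining load."""
    at_depot = state.position in state.depots
    return [at_depot if i in state.depots
            else (state.visited[i] or state.demands[i] > state.load + eps)
            for i in range(len(state.demands))]
```

The policy and value networks and the MCTS routine are untouched by these changes; only the state transition and the mask differ, which is why one pretraining run per variant suffices.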

Table 14.

Performance for multiple depot nodes.

In summary, our proposed method is robust to changes in the CVRP formulation and flexible enough to apply to modified problem formulations. Applying MCTS improves the solution when the refill amount changes, whereas its benefit remains unclear in the multi-depot setting.

6. Conclusions and Future Works

We applied MCTS selectively to routing problems to determine whether it generates better solutions. Although the performance was still inferior to that of heuristic solvers, applying MCTS did generate better solutions than the model without MCTS. We also confirmed that using the SwiGLU activation rather than the typical ReLU can produce better solutions. The results of the baseline experiment remain inconclusive, but the mean-over-batches baseline generally helps generate better solutions. We believe that applying MCTS to VRP variants with more complex settings may reveal the efficacy of the value-network-based baseline.

This work can be extended in several directions. First, the runtime of MCTS can be reduced by implementing it in C++, which is much faster than Python; a C++ implementation would also allow a parallelized version of MCTS [] to reduce the simulation time further. Second, MCTS can be extended to other NP-hard problems in the field, e.g., bin packing and knapsack problems. Third, it is worth checking whether MCTS helps generalization across different problem sizes. Finally, besides the changes in refill amounts and depot numbers, it is worth considering other meaningful changes that real-world VRPs may face, such as changes in traffic conditions or vehicle availability.

Author Contributions

Conceptualization, W.-J.K.; software, W.-J.K.; writing—original draft preparation, W.-J.K. and K.L.; writing—review and editing, T.K. and J.J.; supervision, K.L.; project experiments, W.-J.K., J.J. and T.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Ministry of Education of the Republic of Korea and the National Research Foundation of Korea (NRF-2018R1A5A7059549).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data will be available upon request.

Conflicts of Interest

Author Won-Jun Kim was employed by the company Hyundai Glovis. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Kwon, Y.D.; Choo, J.; Yoon, I.; Park, M.; Park, D.; Gwon, Y. Matrix encoding networks for neural combinatorial optimization. Adv. Neural Inf. Process. Syst. 2021, 34, 5138–5149. [Google Scholar]

- Kwon, Y.D.; Choo, J.; Kim, B.; Yoon, I.; Gwon, Y.; Min, S. POMO: Policy Optimization with Multiple Optima for Reinforcement Learning. Adv. Neural Inf. Process. Syst. 2020, 33, 21188–21198. [Google Scholar]

- Fawzi, A.; Balog, M.; Huang, A.; Hubert, T.; Romera-Paredes, B.; Barekatain, M.; Novikov, A.; Ruiz, F.; Schrittwieser, J.; Swirszcz, G.; et al. Discovering faster matrix multiplication algorithms with reinforcement learning. Nature 2022, 610, 47–53. [Google Scholar] [CrossRef] [PubMed]

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the Game of Go with Deep Neural Networks and Tree Search. Nature 2016, 529, 484–489. [Google Scholar] [CrossRef] [PubMed]

- Silver, D.; Schrittwieser, J.; Simonyan, K.; Antonoglou, I.; Huang, A.; Guez, A.; Hubert, T.; Baker, L.; Lai, M.; Bolton, A.; et al. Mastering the Game of Go without Human Knowledge. Nature 2017, 550, 354–359. [Google Scholar] [CrossRef] [PubMed]

- Vinyals, O.; Fortunato, M.; Jaitly, N. Pointer Networks. arXiv 2017, arXiv:1506.03134. [Google Scholar]

- Schrittwieser, J.; Antonoglou, I.; Hubert, T.; Simonyan, K.; Sifre, L.; Schmitt, S.; Guez, A.; Lockhart, E.; Hassabis, D.; Graepel, T.; et al. Mastering atari, go, chess and shogi by planning with a learned model. Nature 2020, 588, 604–609. [Google Scholar] [CrossRef] [PubMed]

- Kumar, S.N.; Panneerselvam, R. A Survey on the Vehicle Routing Problem and Its Variants. Intell. Inf. Manag. 2012, 4, 66–74. [Google Scholar] [CrossRef]

- Lin, S.; Kernighan, B.W. An Effective Heuristic Algorithm for the Traveling-Salesman Problem. Oper. Res. 1973, 21, 498–516. [Google Scholar] [CrossRef]

- Vidal, T.; Crainic, T.G.; Gendreau, M.; Lahrichi, N.; Rei, W. A Hybrid Genetic Algorithm for Multidepot and Periodic Vehicle Routing Problems. Oper. Res. 2012, 60, 611–624. [Google Scholar] [CrossRef]

- Vidal, T. Hybrid genetic search for the CVRP: Open-source implementation and SWAP* neighborhood. Comput. Oper. Res. 2022, 140, 105643. [Google Scholar] [CrossRef]

- Dai, H.; Khalil, E.B.; Zhang, Y.; Dilkina, B.; Song, L. Learning Combinatorial Optimization Algorithms over Graphs. arXiv 2018, arXiv:1704.01665. [Google Scholar]

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to Sequence Learning with Neural Networks. arXiv 2014, arXiv:1409.3215. [Google Scholar]

- Luong, M.T.; Pham, H.; Manning, C.D. Effective Approaches to Attention-based Neural Machine Translation. arXiv 2015, arXiv:1508.04025. [Google Scholar]

- Bello, I.; Pham, H.; Le, Q.V.; Norouzi, M.; Bengio, S. Neural Combinatorial Optimization with Reinforcement Learning. arXiv 2017, arXiv:1611.09940. [Google Scholar]

- Sutton, R.S.; McAllester, D.; Singh, S.; Mansour, Y. Policy gradient methods for reinforcement learning with function approximation. Adv. Neural Inf. Process. Syst. 1999, 12, 1057–1063. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762. [Google Scholar]

- Kool, W.; van Hoof, H.; Welling, M. Attention, Learn to Solve Routing Problems! In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018. [Google Scholar]

- Kim, M.; Park, J. Learning collaborative policies to solve np-hard routing problems. Adv. Neural Inf. Process. Syst. 2021, 34, 10418–10430. [Google Scholar]

- Xin, L.; Song, W.; Cao, Z.; Zhang, J. Multi-decoder attention model with embedding glimpse for solving vehicle routing problems. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 19–21 May 2021; Volume 35, pp. 12042–12049. [Google Scholar]

- Hottung, A.; Kwon, Y.D.; Tierney, K. Efficient Active Search for Combinatorial Optimization Problems. arXiv 2022, arXiv:2106.05126. [Google Scholar]

- Bubeck, S.; Cesa-Bianchi, N. Regret Analysis of Stochastic and Nonstochastic Multi-armed Bandit Problems. arXiv 2012, arXiv:1204.5721. [Google Scholar]

- Mańdziuk, J.; Świechowski, M. Simulation-based approach to Vehicle Routing Problem with traffic jams. In Proceedings of the 2016 IEEE Symposium Series on Computational Intelligence (SSCI), Athens, Greece, 6–9 December 2016; pp. 1–8. [Google Scholar] [CrossRef]

- Barletta, C.; Garn, W.; Turner, C.; Fallah, S. Hybrid fleet capacitated vehicle routing problem with flexible Monte–Carlo Tree search. Int. J. Syst. Sci. Oper. Logist. 2023, 10, 2102265. [Google Scholar] [CrossRef]

- Hottung, A.; Tanaka, S.; Tierney, K. Deep learning assisted heuristic tree search for the container pre-marshalling problem. Comput. Oper. Res. 2020, 113, 104781. [Google Scholar] [CrossRef]

- Luo, G.; Wang, Y.; Zhang, H.; Yuan, Q.; Li, J. AlphaRoute: Large-Scale Coordinated Route Planning via Monte Carlo Tree Search. In Proceedings of the AAAI Conference on Artificial Intelligence 2023, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 12058–12067. [Google Scholar] [CrossRef]

- Cohen-Solal, Q.; Cazenave, T. Minimax strikes back. arXiv 2020, arXiv:2012.10700. [Google Scholar]

- Sinha, A.; Azad, U.; Singh, H. Qubit Routing Using Graph Neural Network Aided Monte Carlo Tree Search. In Proceedings of the AAAI Conference on Artificial Intelligence 2022, Online, 22 February–1 March 2022; Volume 36, pp. 9935–9943. [Google Scholar] [CrossRef]

- Kilby, P.; Prosser, P.; Shaw, P. Dynamic VRPs: A Study of Scenarios; University of Strathclyde Technical Report; University of Strathclyde: Glasgow, UK, 1998; Volume 1. [Google Scholar]

- Borcinova, Z. Two models of the capacitated vehicle routing problem. Croat. Oper. Res. Rev. 2017, 8, 463–469. [Google Scholar] [CrossRef]

- Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1290–1299. [Google Scholar]

- Du, Y.; Xie, P.; Wang, M.; Hu, X.; Zhao, Z.; Liu, J. Full transformer network with masking future for word-level sign language recognition. Neurocomputing 2022, 500, 115–123.

- Agarap, A.F. Deep learning using rectified linear units (ReLU). arXiv 2018, arXiv:1803.08375.

- Shazeer, N. GLU variants improve transformer. arXiv 2020, arXiv:2002.05202.

- Chowdhery, A.; Narang, S.; Devlin, J.; Bosma, M.; Mishra, G.; Roberts, A.; Barham, P.; Chung, H.W.; Sutton, C.; Gehrmann, S.; et al. PaLM: Scaling language modeling with pathways. arXiv 2022, arXiv:2204.02311.

- Williams, R.J. Simple Statistical Gradient-Following Algorithms for Connectionist Reinforcement Learning. Mach. Learn. 1992, 8, 229–256.

- Team, T.P.L. PyTorch Lightning, Open source, 2023. Available online: https://github.com/Lightning-AI/lightning (accessed on 19 February 2025).

- Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic Differentiation in PyTorch. 2017. Available online: https://openreview.net/forum?id=BJJsrmfCZ (accessed on 19 February 2025).

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Helsgaun, K. An Extension of the Lin-Kernighan-Helsgaun TSP Solver for Constrained Traveling Salesman and Vehicle Routing Problems: Technical Report; Roskilde Universitet: Roskilde, Denmark, 2017.

- Applegate, D.; Bixby, R.; Chvátal, V.; Cook, W. Concorde TSP Solver. 03.12.19. Available online: https://en.wikipedia.org/wiki/Concorde_TSP_Solver (accessed on 19 February 2025).

- Furnon, V.; Perron, L. OR-Tools Routing Library. 2023. Available online: https://developers.google.com/optimization (accessed on 19 February 2025).

- Rosenkrantz, D.J.; Stearns, R.E.; Lewis, P.M., II. An Analysis of Several Heuristics for the Traveling Salesman Problem. SIAM J. Comput. 1977, 6, 563–581.

- Chaslot, G.M.B.; Winands, M.H.; van den Herik, H.J. Parallel Monte-Carlo Tree Search. In Proceedings of the Computers and Games: 6th International Conference, CG 2008, Beijing, China, 29 September–1 October 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 60–71.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).